Abstract

Clustering ensembles can obtain more superior final results by combining multiple different clustering results. The qualities of the points, clusters, and partitions play crucial roles in the consistency of the clustering process. However, existing methods mostly focus on one or two aspects of them, without a comprehensive consideration of the three aspects. This paper proposes a three-level weighted clustering ensemble algorithm namely unified point-cluser-partition algorithm (PCPA). The first step of the PCPA is to generate the adjacency matrix by base clusterings. Then, the central step is to obtain the weighted adjacency matrix by successively weighting three layers, i.e., points, clusters, and partitions. Finally, the consensus clustering is obtained by the average link method. Three performance indexes, namely F, NMI, and ARI, are used to evaluate the accuracy of the proposed method. The experimental results show that: Firstly, as expected, the proposed three-layer weighted clustering ensemble can improve the accuracy of each evaluation index by an average value of 22.07% compared with the direct clustering ensemble without weighting; Secondly, compared with seven other methods, PCPA can achieve better clustering results and the proportion that PCPA ranks first is 28/33.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cluster analysis belongs to the field of multivariate statistical analysis and is an important branch of unsupervised pattern classification in statistical pattern recognition. Its task is to divide an unlabeled sample set into several subsets according to certain criteria with the target of grouping similar samples into one class and dissimilar samples in different classes. This analysis method can quantitatively determine the relationship between research objects, so as to achieve reasonable classification and analysis. Researchers have proposed many clustering algorithms from different aspects, such as partition-based methods, hierarchical-based methods, grid-based methods, etc [1,2,3]. As a powerful auxiliary tool, cluster analysis technology has played an important role in scientific research, social services, and other fields [4,5,6].

Given a dataset, different clustering algorithms, or even the same algorithm with different initializations and parameters, can lead to different clustering results. However, without prior knowledge, it is difficult to decide which algorithm is suitable for a given clustering task. Even if a clustering algorithm is given, it is still difficult to find suitable parameters for it [7, 8]. Different clusters produced by different algorithms may reflect different views of the data. Using complementary and rich information in multiple clusters, the clustering ensemble techniques have been widely used in data clustering and attracted increasing attentions in recent years [9,10,11,12,13,14,15,16,17,18,19]. For the first time, Strehl and Ghosh [9] proposed the clustering ensemble method whose purpose is to combine multiple clusters to obtain potentially better and more robust clustering results. This method can perform better in discovering singular clusters, dealing with noise and integrating clustering solutions from multiple distributed sources.

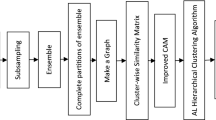

The process of clustering ensemble is shown in Fig. 1. First, M clustering results are obtained by running the M clustering algorithm. Each clustering result is regarded as a clustering member or a partition of clustering ensemble. The set of all clustering results is denoting \(P=\left\{ P^{(1)},\ldots , P^{(M)}\right\} \), this step is called clustering member generation. Then P is taken as input, they are combined, and the final clustering result is output, this step is called clustering composition/ensemble/fusion, also known as consensus function design. In terms of clustering member generation, there are usually three methods: (1) Use the same clustering algorithm. Since the randomly initialized K-means will get different clustering results each time it is run, clustering members can be generated through multiple runs. (2) Cluster different data subsets, such as random projection, projection to different subspaces, different sampling techniques, selection of different feature subsets, etc. (3) Adopt different number of clusters. For example, setting multiple different values of k or randomly selecting k in a specified interval. In the design of consensus function, since object labels are unknown in the process of cluster analysis, there is no explicit correspondence between cluster labels obtained by different cluster members. In addition, cluster members may contain different number of clusters, which makes the cluster label mapping problem very challenging. According to whether to explicitly solve the problem of cluster label correspondence, clustering ensemble methods can be divided into the following two categories: (1) Pair-wise approach, introducing the adjacency matrix H of hypergraph will represent the pair-wise relationship between objects, effectively avoiding the problem of cluster label correspondence. (2) Re-labeling approach, including cumulative voting, alternative voting, etc.

The quality of the basic clusterings plays a crucial role in the consistency of the clustering process. To improve consensus performance, researchers have attempted to evaluate base clusterings and assign them with different weights. These methods with weighting partitions do increase the accuracy of clustering ensemble to a certain extent [20,21,22,23, 28]. However, they are developed based on an implicit assumption that all of the clusters in the same base clustering have the same reliability, which cannot reflect the realistic situations of real-world datasets. They typically treat each base clustering as an individual and assign a global weight to each base clustering regardless of the diversity of the clusters inside it. To address this deficiency, references [29,30,31,32, 34] further consider the local diversity of ensembles and deal with the different reliability of clusters. Moreover, recent studies [36, 37] have shown that point can change its neighbors in different partitions and different points have different relationship stability. This difference shows that points may have different contributions to the detection of underlying data structure. Generally, a partition is made up of one or more clusters and a cluster is made up of one or more points. Therefore, the quality of the cluster directly affects the quality of the partition and the quality of the points directly affects the quality of the cluster. Therefore, there is a need to comprehensively consider the importance of points, clusters and partitions. However, very few of existing studies address the problem of how to uniformly weight these three layers together.

Aiming at the above problem, this paper proposes a three-layer weighted clustering ensemble method named PCPA based on three factors, i.e., point, cluster and partition. Firstly, PCPA adopts k-means algorithm to generate the base clusterings by iteratively running it for M times. Secondly, the base clusterings matrix is transformed into a hypergraph adjacency matrix H, and the CA matrix is fine-tuned by three-level weighted of point, cluster and partition. Finally, the average link (AL) method is used to obtain the consensus clustering.

For clarity, the contributions of this paper can be summarized as follows.

-

A clustering ensemble method, PCPA, based on point-cluster-partition three-layer weighted is proposed. To the best of our knowledge, PCPA is a three-layer weighted architecture by comprehensively consider the three factors for the first time.

-

The weighted effect of PCPA is compared with the following seven conditions: unweighted, point weighted, cluster weighted, partition weighted, point-cluster weighted, point-partition weighted and cluster-partition weighted. The results show that compared with the other 7 cases, the effect of three-layer weighting is always better than the effect of unweighted, and the ranking has little fluctuation, which proves that three-layer weighted has good stability.

-

The PCPA is compared with the more authoritative and popular weighting methods in recent years. The experimental results show that the accuracy of the proposed PCPA method is highter than seven other methods, which ranks the top two under the three commonly used evaluation indicators.

2 Related Work

Currently, different types of clustering ensemble methods have been proposed according to different applications. Representative methods include pairwise similarity methods, graph-based methods, relabeling-based methods, and feature-based methods [17]. However, a common limitation of most existing methods is that they generally treat all clusters and all base clusterings equally in clustering ensemble, and lower-quality clusters or lower-quality base clusterings may appear.

In order to avoid the influence of low-quality base clusterings, researchers have carried out some work. Yu et al. [19] focused on the selection of the base clusterings in clustering ensemble, and selected only some of the base clusterings from the base clustering set for integration according to the evaluation index. Yang et al. [20] combined some different clustering evaluation indicators to give a weight to the base clustering to obtain a better result. Huang et al. [21] used the normalized mutual information (NMI) [9] to measure the similarity between partitions firstly. Then they used them as the weights to weight the partitions and obtained the weighted CA matrix. Finally, three weighted evidence accumulation clustering (WEAC) methods based on hierarchical link were proposed: WEAC-AL, WEAC-CL, and WEAC-SL. Rouba et al. [22] used Rand index to measure the similarity between partitions and designed weights based on that. Then, three clustering algorithms (hierarchic clustering, k-means, k-medoids) was used to get the consensus results. Bai et al. [23] calculated the similarity between classes by using information entropy, and used it as a weight for the base clustering weighted. Song et al. [24] proposed a weighted ensemble Sparse Latent representation (subtype-WESLR) to detect cancer subtypes in heterogeneous omics data. This method used the weighted set strategy to fuse the base clusters obtained by different methods as prior knowledge, and the weights could be applied to different base clusters adaptively. Huang et al. [25] proposed a novel multidiversified ensemble clustering approach, which used an entropy-based cluster validity strategy to evaluate and weight each base clustering by considering the distribution of clusters in the entire ensemble. Wan et al. [26] proposed a short-text cluster integration method based on convolutional neural networks. The Gini coefficient was used to measure the reliability of base clusters, and then weighted them. Finally, hierarchical clustering was used for integration. Banerjee et al. [27] devised a polynomial heuristics that judiciously selected a subset of clusterings from the ensemble that contributed positively in forming the consensus to yield a quality consensus clustering.

However, most of these methods treat each base clustering as a whole and assign the weight to each base clustering without considering the diversity of its internal clusters. Iam-On et al. [28] presented a new link-based approach to improve the conventional matrix. It achieves that by using the similarity between clusters that are estimated from a link network model of the ensemble. And then three new link-based algorithms were proposed for the underlying similarity assessment. The final clustering result was generated from the refined matrix using two different consensus functions of feature-based and graph-based partitioning. Experimental results shows that weighted connected-triple (WCT) is more effective than the others. Huang et al. [29] introduced the concept of information entropy to calculate the uncertainty of each cluster. By calculating the uncertainty of each cluster under all base partitions, an ensemble-driven cluster index (ECI) was constructed. Then they used ECI as the weight value to weight the original CA matrix, and then integrated. Vo and Nguyen [30] first calculated the ratios of the distance from the points to the center of the cluster and the maximum distance within the cluster, and then used this as the weight to obtain a weighted object-cluster association-based (WOCA) matrix. The final clustering was finally derived by executing k-means on WOCA matrix. Rashidi et al. [31] evaluated a cluster undependability based on an information theoretic measure. Then they proposed two approaches: a cluster-wise weighted evidence accumulation and a cluster-wise weighted graph partitioning. Najafi et al. [32] obtained each cluster’s dependability by calculating the entropy and an exponential transform, which represented amount of the cluster’s distribution across various clusters of a partition in a reference set. Then two weight calculation methods were proposed: the first dependability measure (FDM) and the second dependability measure (SDM). Finally, they obtained the cluster weighed CA matrix based on FDM or SDM, and AL was used to get the consensus clustering. They call those methods ALFDM and ALSDM respectively, and experimental results show that ALSDM is superior to ALFDM. Shen et al. [33] proposed a text clustering ensemble method based on entropy criteria, which was used to evaluate the uncertainty of clusters. Two indexes were proposed according to the uncertainty of clusters, and then high-quality base clusterings were selected for integration.

In recent years, some scholars have tried to combine cluster weighting and partition weighting. Banerjee et al. [34] explored to derive a cluster-level weight from the principles of both agreement and disagreement of clusters to define reliable clusters in the ensemble. And then computed the weight of the partition by accumulating the proposed cluster-level weights of the constituent clusters. Zhang et al. [35] proposed a two-stage semi-supervised clustering ensemble framework which considerd both ensemble member selection and the weighting of clusters. Experimental results on various datasets showed that this framework outperforms most of clustering algorithms. However, the above methods still have a drawback, that is, all samples in the same cluster are given the same weight. It is to say that each sample in the same cluster is treated equally. Obviously, if on this way, the contribution of the sample cannot be accurately evaluated. Zhong et al. [36] first weights the points. The weights of points in the same cluster are related to their Euclidean distance and the distance between the two farthest points in the cluster. Then the normalized stability of each cluster is obtained by solving the average weights of all points in each cluster. Finally, the values of elements in CA matrix are defined as the product of the weights of points and the normalized stability of their clusters. Ren et al. [37] obtained a CA matrix by calculating the basic clusterings, and then the CA matrix was used to describe the difficulty of clustering around each sample and assigned corresponding weights. The idea of its weight setting is to use the idea of Boosting to assign greater weights to those samples that are difficult to divide. Then he presented three algorithms: weighted-object meta-clustering algorithm (WOMC), weighted-object similarity partitioning algorithm (WOSP), and weighted-object hybrid bipartite graph partitioning algorithm (WOHB). Li et al. [38] determined cluster centers by calculating the stability of samples, assigned sample points to clusters with the highest similarity, and finally used the single link algorithm for integration to verify its effectiveness on multiple datasets containing text datasets. Niu et al. [39] proposed a novel multi-view ensemble clustering approach using joint affinity matrix, which basic partitions were described with a sample-level weight, and the influence of incorrectly-partitioned data objects was decreased, whereby data objects could be effectively assigned to the correct partition.

Although people have proven the importance of weighting from various aspects, most people focus on weighting a certain aspect in the clustering ensemble process, such as point weighted or cluster weighted, there is still a lack of a three-layer combination of point, cluster, and partition. In this paper, we propose a weighted method based on three layers of point, cluster, and partition. Extensive experiments on various datasets show that our method (PCPA) has significant advantages in clustering accuracy and stability.

3 PCPA Weighted Method

The proposed approach first transforms the base clusterings into a hypergraph adjacency matrix \({{\varvec{H}}}\). The matrix \({{\varvec{H}}}\in {(0,1)}^{n\times k_l}\), summarizes the cluster-point relations occurring in the ensemble [40], in which n and \(k_l\) denote the number of points and clusters in the ensemble respectively, and \(k_l=k_1+k_2+\cdots +k_m+\cdots +k_M\), where \(k_m\) is the number of clusters in the m-th partition, M is the total number of partitions. Denoted by \(x_i (1\le i\le n)\) and \(C_l (1\le l\le k_l)\) i-th data point in the tested dataset and the l-th cluster in the ensemble. If \(x_i\) belongs to cluster \(C_l\), then \({{\varvec{H}}}(i,l)\)=1, otherwise \({{\varvec{H}}}(i,l)\)=0. The point-cluster-partition three-layer weighted and weights transfer are all carried out on the basis of \({{\varvec{H}}}\). Then, the weighted adjacency matrix of hypergraph is transformed into the CA matrix. Finally, the consensus clustering is obtained by AL. In this section, we will show how to set the values of three layers of weighting. The weight setting scheme, the weight value transmission scheme layer by layer, the method that converts the weighted \({{\varvec{H}}}\) into a weighted CA matrix, the process of the proposed PCPA algorithm, and complexity analysis are as follows.

3.1 Weights Setting Scheme

3.1.1 Point-Layer Weights Setting

The basic idea of Boosting technology [37] is to focus on the sample points that are difficult to be divided in the process of clustering ensemble. Motivated by the successful applications of the technology in classification and clustering, this paper assigns higher weights to these points so that they have a higher priority to be divided in subsequent clustering integration. The values of the weights are calculated by a CA matrix, which are constructed by base clustering results. The detailed implementation processes can be given by Eqs. (1)–(7).

First, construct the CA matrix A:

where \(a_{ij}\) can be calculated by

in which \(\delta _{ij}^m\) is shown in Eq. (3).

where \(P^{(m)}\left( x_i\right) \) denotes that, in the m-th \((1\le m\le M)\) partition, the cluster that \(x_i\) belongs to.

When \(a_{ij}=0\) or \(a_{ij}=1\), each basic clustering result has a high consensus on the division of \(x_{i}\) and \(x_{j}\). On the contrary, when \(a_{ij}\)=0.5, it is difficult to divide them. In this paper, a quadratic function \(y(x) = x(1-x)\), \(x \in [0, 1]\) is used to solve this problem. The uncertainty of \(x_{j} \)and \(x_{j}\) can be defined as:

When \(a_{ij}= 0.5\), the confusion index reaches the maximum value 0.25. When \(a_{ij}= 0\) or \(a_{ij}= 1\), confusion index reaches the minimum value 0, so \(confusion(x_i,x_j)\in [0, 0.25]\). The larger the \(confusion(x_i,x_j)\) is, the more difficult it is to divide samples \(x_i\) and \(x_j\). Then, the confusion is used to calculate the weight of each point:

where \(\frac{4}{n}\) is a normalization factor to ensure \(w_i^{\prime \prime }\in \)[0, 1].

We can see that the value range of \(w_i^{\prime \prime }\) contains 0. In the probability model, the weight often represents the probability value, which should not be completely zero, because even a very unlikely event should have a non-zero probability to ensure the comprehensiveness of the model and the integrity of the probability. Also, in this algorithm, a weight of 0 will completely ignore some samples or features, which is not the intent of this algorithm design. Moreover, in subsequent normalization calculations, a weight of 0 will result in a denominator of zero, resulting in a result that is either infinitely large (Inf) or not a number (NaN). In view of the above considerations, we added a smoothing term:

where e is a small positive number (according to [37], \(e=0.01\)).

After normalization, we have:

3.1.2 Cluster-Layer Weights Setting

According to [29], this paper uses ECI as the weight of the cluster.

Given the ensemble \(\Pi \), the uncertainty of the l-th cluster \(C_l\) w.r.t. the base clustering \(P^{(m)} \in \Pi \) can be computed as

with

where \(C_s^m\) is the s-th cluster in the m-th partition, \( 1\le m \le M\), \(1\le s \le k_m\), \(\cap \) computes the intersection of two sets (or clusters), and \(|C_l |\) outputs the number of objects in \(C_l\).

Thus, the uncertainty of cluster \(C_l\) w.r.t. the entire ensemble \(\pi \) can be given by

Given an ensemble \(\pi \) with M base clusterings, the ensemble-driven cluster index (ECI) for a cluster \(C_l\) can be defined as

After normalization, we get:

3.1.3 Partition-Layer Weights Setting

The NMI value can effectively measure the similarity degree of clustering members. Obviously, the higher the similarity degree of \(P^{(m)}\) is, the greater its weight is. Therefore, this paper sets the weight \({v_m}^\prime \) of partition \(P^{(m)}\) to be in proportion to the sum of the NMI of \(P^{(m)}\) and other partitions. Considering that a partition is composed of multiple clusters, this paper further sets the weight \({v_m}^\prime \) of \(P^{(m)}\) to be in proportion to the sum of weights of all clusters contained in \(P^{(m)}\). That is, the weight of m-th partition is:

After normalization, we get:

3.2 The Method of Transferring Weights Layer by Layer

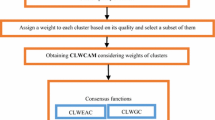

Point-cluster-partition three-layer weighted (PCPTLW) architecture and layer-by-layer weighting process are shown in Fig. 2.

3.2.1 Weight the Point-Layer

Multiply the i-th row \((1 \le i \le n)\) of \({{\varvec{H}}}\) by the weight \(w_i\) of the point \(x_i\) to get the point layer weighted matrix:

with

3.2.2 Weight the Cluster-Layer

Multiply the column corresponding to the l-th cluster \(C_l\) in \({{{\varvec{H}}}}_{{{\varvec{pt}}}}\) by its weight \(u_l(1\le l\le k_l)\) to get the cluster layer weighted matrix:

with

3.2.3 Weight the Partition-Layer

Multiply the submatrix corresponding to the m-th partition \(P^{(m)}\) in \({{{\varvec{H}}}}_{{{\varvec{cr}}}}\) by its weight \(v_m\) to obtain the partition layer weighted matrix:

with

where the number of \(v_1\), \(v_m\), and \(v_M\) are \(k_1\), \(k_m\), and \(k_M\) in Eq. (20).

3.3 Convert \({{{\varvec{H}}}}_{{{\varvec{pn}}}}\) to a CA Matrix

After obtaining the \({{{\varvec{H}}}}_{{{\varvec{pn}}}}\) matrix, we transform it into a weighted point-cluster-partition CA (PCPCA) matrix. The calculation of PCPCA is as follows

where \({H_{pn}}^T\) represents the transposed matrix of \(H_{pn}\).

3.4 The Algorithm PCPA Process

For clarity, the overall algorithm of PCPA is summarized as followed

The algorithm of PCPA:

Input: Dataset \(D=\left\{ x_1,x_2,\ldots ,x_n\right\} \), the true label of sample points and the number of true categories \(k^*\).

-

1.

Generate M base clusterings:

-

for i=1: M (in this paper, M =100)

-

run the k-means algorithm, the cluster number \(k=[{2,\ 2k}^*]\)

-

end

-

-

2.

Convert the base clusterings matrix into a hypergraph adjacency matrix \({{\varvec{H}}}\).

- 3.

- 4.

-

5.

Convert the weighted hypergraph adjacency matrix \({{{\varvec{H}}}}_{{{{\varvec{pn}}}}}\) into a CA matrix according to Eq. (21).

-

6.

Run AL to get a consistent partition \(\pi ^*\), and the cluster number is \(k^*\).

Output: The consensus clustering \(\pi ^*\).

3.5 Complexity Analysis

In the above algorithm flow, the first step is to run the k-means algorithm M times, and its time complexity is O(Mkdn), where K is the number of clusters, d is the dimension of samples, and n is the number of samples. In step 2, the time complexity of generating hypergraph adjacency matrix H is O(Mkn). From step 3 to step 4, the time complexity weighted by each layer is O(\(\textit{n}{} \textit{C}_{\textit{K}}\)), where \(\textit{C}_{\textit{K}}\) is the total number of clusters in M partitions, namely the number of edges of the hypergraph. In step 5, the time complexity of transforming \({{{\varvec{H}}}}_{{{{\varvec{pn}}}}}\) into CA matrix is O(\(\textit{C}_{\textit{K}}\times n^2\)). In step 6, the time complexity of AL algorithm is O(\(\log _2n\)). That is, steps 1–4 of the algorithm in this paper are linear, step 5 is square, and step 6 is logarithmic. In addition, step 5 is to construct the similarity matrix. This method can obtain better clustering results, but the complexity is high, that is, this method is suitable for small and medium-sized data sets. For massive datasets, MLRAA, DLRSE, K-means and other algorithms can be directly run on \({{{\varvec{H}}}}_{{{{\varvec{pn}}}}}\) to further improve operation efficiency.

4 Experiments

The experimental platform is an AMD Ryzen 7 5800H 8-core 16-thread processor, the frequency is 3.20 GHz, the memory is 16.00 GB, the graphics card is an NVIDIA GeForce RTX 3060 Laptop GPU, and the program runs under MATLAB2020a.

4.1 Datasets and Evaluation Methods

In this section, we conduct experiments on 11 datasets, namely, Ecoli, Libras Movement Data Set (LM), semeion, satimage, splice, optdigits (ODR), zoo, tr11, tr12, tr31, and tr45. The first seven ones are from the UCI machine learning repository (http://archive.ics.uci.edu/ml). The last four ones are from the Text REtrieval Conference (TREC, http://trec.nist.gov) collection. The details of the datasets are shown in Table 1.

In accordance with some popular methods [21, 29, 32], this paper adopts the following three performance metrics to evaluate the efficiency of the proposed PCPA algorithm, namely normalized mutual information (NMI), adjusted rand index (ARI) and F measure (F).

The NMI measure provides a sound indication of the shared information between two clusterings. Let \(P^\prime \) be the test clustering and \(P^G\) the ground-truth clustering. The NMI score of \(P^\prime \) w.r.t. \(P^G\) is defined as follows:

where \(n^\prime \) is the number of clusters in \(P^\prime \), \(n^G\) is the number of clusters in \(P^G\), \(n_i^\prime \) is the number of objects in the i-th cluster of \(P^\prime \), \(n_j^G\) is the number of objects in the j-th cluster of \(P^G\), and \(n_{ij}\) is the number of common objects shared by cluster i in \(P^\prime \) and cluster j in \(P^G\). It can be seen from the formula that the higher the NMI, the better the effect.

The ARI is a generalization of the rand index (RI), which is computed by considering the number of pairs of objects on which two clusterings agree or disagree. Specifically, the ARI score of \(P^\prime \) w.r.t. \(P^G\) is computed as follows:

where \(N_{11}\) is the number of object pairs that appear in the same cluster in both \(P^\prime \) and \(P^G\), \(N_{00}\) is the number of object pairs that appear in different clusters in \(P^\prime \) and \(P^G\), \(N_{10}\) is the number of object pairs that appear in the same cluster in \(P^\prime \) but in different clusters in \(P^G\), and \(N_{01}\) is the number of object pairs that appear in different clusters in \(P^\prime \) but in the same cluster in \(P^G\). The value range of ARI is [-1, 1], and the larger the value, the better the integration effect.

F measure is an indicator used in statistics to measure the accuracy of the binary classification model. It takes into account both the precision and recall of the classification model. Its calculation formula is as follows:

where the precision refers to the proportion of samples with a predicted value of 1 and a true value of 1 in all samples with a predicted value of 1. The recall refers to the proportion of samples with a predicted value of 1 and a true value of 1 among all samples with a true value of 1. The maximum value of F is 1, the minimum value is 0, and the larger the value, the better.

In the experiment, the number of base clusterings M is set as 100, in which each clusteringsis generated by randomly running of k-means. In order to make base clusterings more diverse, we set the number of cluster k in the range of \([2,\ 2k^*]\), and \(k^*\) is the true cluster number of the dataset.

4.2 Comparison of Before and After Three-Layer Weighted

In order to clearly understand the weighting effect, we divided the three-layer weighted process into the following 7 aspects: point weighted, cluster weighted, partition weighted, point-cluster weighted, point-partition weighted, cluster-partition weighted, and point-cluster-partition (PCP) three layers weighted (see Table 2). In order to ensure fairness and impartiality, all the experiments are conducted on the basis of the same base clustering, and all the presented results are the averaged values of 10 runs. The bold symbols indicate that the effect is improved after three layers weighted, and the numbers with underlines indicates the highest score among the 7 cases. The last column, percentage increase, indicates the percentage of the improvement of PCP compared with the Unweighted, which can be calculated by the difference between the values of PCP and Unweighted dividing by the value of Unweighted.

It can be seen from Table 2 that after three-layer weighted, the evaluation indicators of all datasets have increased. Among them, the ARI evaluation index of dataset tr41 has the most significant improvement effect, which is 140%. Dataset splice shows the worst improvement in F evaluation index, which is 0.58%. And by the last column, we can figure out the average improvement of each evaluation index is 22.07%. Table 2 also shows that if one or two aspects of point, cluster, and partition are weighted, the weighted effect has a great relationship with the dataset itself, and different datasets are suitable for different weighted methods. But if the three aspects are weighted at the same time, the aspect with good weighted effect will make up for the deficiencies of other weighted aspects to a certain extent, and the finally result will be more stable. Therefore, it can be judged that the three-layer weighted effect is better than the unweighted effect, and the stability is higher than that of single weighted or selecting two aspects weighted. The comparison before and after three-layer weighted is shown in Fig. 3. From Fig. 3, we can see that except for LM, splice and tr31, the clustering effect of other datasets after three-layer weighted is significantly improved. ARI, F and NMI have different enhancement effects on the same dataset, and the same evaluation index has different enhancement effects on different datasets, which is related to both the dataset itself and the evaluation index. This also reflects the scientific nature of using multiple indexes to comprehensively evaluate the experimental effect in this paper.

4.3 Compare with Other Weighted Methods

This method (PCPA) is compared with these seven weighted algorithms: ALSDM [32], WOMC [37], WOHB [37], WCT_KM [28], WEAC_AL [21], WEAC_CL [21], and LWEA [29]. The comparison results are shown in Table 3. The bold indicates the highest evaluation index value among the eight methods. Figure 4 more clearly shows the performance of each weighted method under the three evaluation indicators in each dataset. Tables 4, 5 and 6 are the ranking tables of each weighted method under ARI, F, and NMI respectively. For each evaluation index, we also use Friedman test and Nemenyi test to verify whether this method is significantly different from other methods.

From Table 3, we can see that except for the ARI index of LM, zoo, and tr45, F index of splice, and NMI index of tr11, PCPA always ranks first. The proportion of PCPA ranks first is 28/33. Among all datasets, PCPA algorithm has the most significant advantage on Ecoli, semeion, and tr12. For tr12, the ARI value of the PCPA is 0.3094, whereas for the other seven weighted algorithms, the highest value is 0.2248, which increased by 37.63%, the lowest value is 0.1017, which increased by 204.23%. And when the ranking of PCPA is not in the first place, the first-ranked method changes due to the dataset changes. In terms of the complexity of the algorithm, the time complexity of ALSDM, WOMC, WOHB and LWEA is \(O(n^2)\), the time complexity of WEAC_AL and WEAC_CL is \(O(M^{2}n^{2})\), and the time complexity of WCT_KM is \(O(n^3)\), where M is the number of base clusterings, n is the number of points. Except for WCT_KM algorithm, the time complexity of our algorithm and other algorithms is square order, so the proposed method does not sacrifice the algorithm speed while improving the clustering accuracy. Instead, as described in Sect. 3.5, in the face of large datasets, this three-layer weighted framework can directly run the K-means and other algorithms on the \(H_{pn}\) to further improve the algorithm speed.

Next, we provide the Friedman test that uses an algorithm for ARI score ranking. The Friedman test checks whether the measured average ranks in terms of ARI are significantly different from the mean rank \(R_j=4.5\) expected under the null-hypothesis: \(\chi _F^2=\frac{12\times 11}{8\times 9}({1.32}^2+{4.73}^2+{6.09}^2+{6.55}^2+{5.18}^2+{4.36}^2+{4.68}^2+{3.09}^2-\frac{8\times 9^2}{4})=35.5637,\) and \(F_F=\frac{10\times 35.5637}{11\times 7-35.5637}=8.5827\).

With 8 algorithms and 11 datasets, \(F_F\) is distributed according to the F distribution with \(8{-}1=7\) and \((8{-}1)\times (11{-}1)=70\) degrees of freedom. The critical value of F (7, 70) for \(\alpha =0.05\) is 2.143, so we reject the null-hypothesis.

Then, we use the Nemenyi test for pairwise comparisons. The critical value at \(q_{0.05}\) with 8 algorithms is 3.031 and the corresponding CD is \(3.031\times \sqrt{\frac{8\times 9}{6\times 11}}\)=3.1658. Therefore, we can identify that PCPA is significantly different from ALSDM, WOMC, WOHB, WCT_KM, and WEAC_CL. We cannot know which group WEAC_AL and LWEA belongs to. At \(p_{0.10}\), the critical value at \(q_{0.10}\) is 2.780 and the corresponding CD is \(2.780\times \sqrt{\frac{8\times 9}{6\times 11}}\)=2.9036. Therefore, we can identify that PCPA is significantly different from ALSDM, WOMC, WOHB, WCT_KM, WEAC_AL, and WEAC_CL. Now, we cannot tell which group LWEA belongs to because the experimental data is not enough to draw any conclusions.

We also use the ranks in terms of F index to compute the Friedman test of the algorithms, and reject the null-hypothesis because of the Friedman statistic \(\chi _F^2=\frac{12\times 11}{8\times 9}({1.09}^2+{4.50}^2+{5.91}^2+{7.00}^2+{5.27}^2+{3.82}^2+{4.91}^2+{3.50}^2-\frac{8\times 9^2}{4})=40.4976,\) and \(F_F=\frac{10\times 40.4936}{11\times 7-40.4936}=11.0933. \)

At p=0.05, the critical value at \(q_0.05\) is 3.031 and the corresponding CD is 3.1658. We can identify that PCPA is significantly different from ALSDM, WOMC, WOHB, WCT_KM, and WEAC_CL. We cannot tell which group WEAC_AL and LWEA belongs to. At \(p_{0.10}\), the critical value at \(q_{0.10}\) is 2.780 and the corresponding CD is 2.9036. However, we cannot tell which group WEAC_AL and LWEA belongs to because the experimental data is not enough to draw any conclusions.

Finally, we use the ranks in terms of NMI index to compute the Friedman test of the algorithms, and reject the null-hypothesis because the Friedman statistic \(\chi _F^2\)=\(\frac{12\times 11}{8\times 9}({1.09}^2+{5.18}^2+{6.27}^2+{7.36}^2+{4.55}^2+{3.55}^2+{4.64}^2+{3.36}^2-\frac{8\times {9}^2}{4})=46.9382\), and \(F_F=\frac{10\times 46.9382}{11\times 7-46.9382}=15.6289\).

At p=0.05, the critical value at \(q_{0.05}\) is 3.031 and the corresponding CD is 3.1658. We can identify that PCPA is significantly different from ALSDM, WOMC, WOHB, WCT_KM, and WEAC_CL. We cannot tell which group WEAC_AL and LWEA belongs to. At \(p_{0.10}\), the critical value at \(q_{0.10}\) is 2.780 and the corresponding CD is 2.9036. However, we cannot tell which group WEAC_AL and LWEA belongs to because the experimental data is not enough to draw any conclusions.

In general, PCPA is significantly different from ALSDM, WOMC, WOHB, WCT_KM, and WEAC_CL. From Tables 4, 5 and 6, we can see that PCPA is different from LWEA and WEAC_AL. We can also see that different algorithms have different effects on different data sets, but in general, PCPA ranks the highest, indicating that three-layer weighting can significantly improve the accuracy of clustering algorithms compared with other weighting methods, and the improvement of PCPA accuracy is not at the expense of time complexity. Therefore, we can judge that PCPA has obvious advantages over other weighted algorithms.

5 Conclusion

This paper proposes a three-layer weighted strategy called a Point-Cluster-Partition Architecture (PCPA) for weighted clustering ensemble. By weighting points, clusters and partitions in the integration process, the experiments show that:

-

The integration effect after using three-layer weighted is better than the unweighted integration effect. And the effect of three-layer weighted is more stable than that of single weighted or selecting two weighted;

-

Comparing with other weighted methods, PCPA is more prominent in terms of accuracy and stability.

The results of this paper also show that for different datasets, the effects of weighting different layers are also different. In recent years, many scholars have done a lot of research on the problem of sample unbalanced clustering [41,42,43,44]. Next, this paper will further improve the weight design scheme on these research, so that it can determine the weight adaptively according to the internal data structure characteristics of different datasets. And we hope the three-layer weighted clustering ensemble algorithm can play a good effect on more types of datasets as much as possible.

Data Availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

References

Xie J, Girshick R, Farhadi A (2016) Unsupervised deep embedding for clustering analysis. In: Proceedings of The 33rd international conference on machine learning. pp 478–487. arXiv:1511.06335

Jia C, Carson MB, Wang X, Yu J (2018) Concept decompositions for short text clustering by identifying word communities. Pattern Recogn 76(4):691–703. https://doi.org/10.1016/j.patcog.2017.09.045

Fern X Z, Brodley C E (2004) Solving cluster ensemble problems by bipartite graph partitioning. In: Proceeding of the 21st international conference on machine learning, pp 55–68. https://doi.org/10.1145/1015330.1015414

Wu J, Liu H, Xiong H, Cao J, Chen J (2015) K-means-based consensus clustering: a unified view. IEEE Trans Knowl Data Eng 27(1):155–169. https://doi.org/10.1109/TKDE.2014.2316512

Huang D, Lai JH, Wang CD (2016) Robust ensemble clustering using probability trajectories. IEEE Trans Knowl Data Eng 28(5):1312–1326. https://doi.org/10.1109/tkde.2015.2503753

Liu H, Wu J, Liu T, Tao D, Fu Y (2017) Spectral ensemble clustering via weighted k-means: theoretical and practical evidence. IEEE Trans Knowl Data Eng 29(5):1129–1143. https://doi.org/10.1109/tkde.2017.2650229

Nie X, Qin D, Zhou X, Duo H, Hao Y, Li B, Liang G (2023) Clustering ensemble in scRNA-seq data analysis: methods, applications and challenges. Comput Biol Med 159:106939. https://doi.org/10.1016/j.compbiomed.2023.106939

Huang Q, Gao R, Akhavan H (2023) An ensemble hierarchical clustering algorithm based on merits at cluster and partition levels. Pattern Recognit 136:109255. https://doi.org/10.1016/j.patcog.2022.109255

Strehl A, Ghosh J (2003) Cluster ensembles: a knowledge reuse framework for combining multiple partitions. J Mach Learn Res 3:583–617. https://doi.org/10.1162/153244303321897735

Antonello R, Rosa A, Massimo P (2021) A decentralized algorithm for distributed ensemble clustering. Inf Sci 578:417–434. https://doi.org/10.1016/j.ins.2021.07.028

Tao Z, Li J, Fu H, Kong Y, Fu Y (2021) From ensemble clustering to subspace clustering: cluster structure encoding. IEEE Trans Neural Netw Learn Syst 34:2670–2681. https://doi.org/10.1109/TNNLS.2021.3107354

Ji X, Liu S, Zhao P, Li X, Liu Q (2021) Clustering ensemble based on sample’s certainty. Cogn Comput 13(3):1034–1046. https://doi.org/10.1007/s12559-021-09876-z

Pho KH, Akbarzadeh H, Parvin H, Nejatian S, Alinejad-Rokny H (2021) A multi-level consensus function clustering ensemble. Soft Comput 25(21):13147–13165. https://doi.org/10.1007/s00500-021-06092-7

Chen Z, Bagherinia A, Minaei-Bidgoli B, Parvin H, Pro KH (2021) Fuzzy clustering ensemble considering cluster dependability. Int J Artif Intell Tools 30(2):2150007. https://doi.org/10.1142/S021821302150007X

Zhu X, Li J, Li HD, Xie M, Wang J (2020) Sc-gpe: a graph partitioning-based cluster ensemble method for single-cell. Front Genet 11:604790. https://doi.org/10.3389/fgene.2020.604790

Yu Z, Wang D, Meng XB, Philip Chen C L (2020) Clustering ensemble based on hybrid multiview clustering. IEEE Trans Cybernet 52:6518–6530. https://doi.org/10.1109/TCYB.2020.3034157

Iam-On N, Boongoen T (2015) Comparative study of matrix refinement approaches for ensemble clustering. Mach Learn 98(1):269–300. https://doi.org/10.1007/s10994-013-5342-y

Zhang M (2022) Weighted clustering ensemble: a review. Pattern Recognit 124:108428. https://doi.org/10.1016/j.patcog.2021.108428

Yu Z, Li L, Gao Y, You J, Liu J, Wong HS (2014) Hybrid clustering solution selection strategy. Pattern Recognit 47(10):3362–3375. https://doi.org/10.1016/j.patcog.2014.04.005

Yang Y, Chen K (2011) Temporal data clustering via weighted clustering ensemble with different representations. IEEE Trans Knowl Data Eng 23(2):307–320. https://doi.org/10.1109/TKDE.2010.112

Huang D, Lai JH, Wang CD (2015) Combining multiple clusterings via crowd agreement estimation and multi-granularity link analysis. Neurocomputing 170:240–250. https://doi.org/10.1016/j.neucom.2014.05.094

Rouba B, Bahloul SN (2017) Weighted clustering ensemble: towards learning the weights of the base clusterings. Multiagent Grid Syst 13(4):421–431. https://doi.org/10.3233/MGS-170278

Bai L, Liang J, Du H, Guo Y (2019) An information-theoretical framework for cluster ensemble. IEEE Trans Knowl Data Eng 31(8):1464–1477. https://doi.org/10.1109/TKDE.2018.2865954

Song W, Wang W, Dai DQ (2022) Subtype-WESLR: identifying cancer subtype with weighted ensemble sparse latent representation of multi-view data. Brief Bioinform 23(1):bbab398. https://doi.org/10.1093/bib/bbab398

Huang D, Wang CD, Lai JH, Kwoh CK (2021) Toward multi-diversified ensemble clustering of high-dimensional data: from subspaces to metrics and beyond. IEEE Trans Cybernet 52(11):12231–12244. https://doi.org/10.1109/TCYB.2021.3049633

Wan H, Ning B, Tao X, Long J (2020) Research on Chinese short text clustering ensemble via convolutional neural networks. In: Artificial intelligence in China: proceedings of the international conference on artificial intelligence in China 2020. pp 622–628. https://doi.org/10.1007/978-981-15-0187-6_74

Banerjee A, Pujari AK, Rani Panigrahi C, Pati B, Chandan Nayak S, Weng TH (2021) A new method for weighted ensemble clustering and coupled ensemble selection. Connect Sci 33(3):623–644. https://doi.org/10.1080/09540091.2020.1866496

Iam-On N, Boongoen T, Garrett S, Price C (2011) A link-based approach to the cluster ensemble problem. IEEE Trans Pattern Anal 13(12):2396–2409. https://doi.org/10.1109/TPAMI.2011.84

Huang D, Wang CD, Lai JH (2018) Locally weighted ensemble clustering. IEEE Trans Cybernet 48(5):1460–1473. https://doi.org/10.1109/TCYB.2017.2702343

Vo CTN, Nguyen PH (2018) A weighted object-cluster association-based ensemble method for clustering undergraduate students. In: Asian conference on intelligent information and database systems (ACCIIDS), pp 587–598. https://doi.org/10.1007/978-3-319-75417-8_55

Rashidi F, Nejatian S, Parvin H, Rezaie V (2019) Diversity based cluster weighting in cluster ensemble: an information theory approach. Artif Intell Rev 52:1341–1368. https://doi.org/10.1007/s10462-019-09701-y

Najafi F, Parvin H, Mirzaie K, Nejatian S, Rezaie V (2020) Dependability-based cluster weighting in clustering ensemble. Stat Anal Data Min ASA Data Sci J 13(2):151–164. https://doi.org/10.1002/sam.11451

Shen Q, Qiu Y (2021) A novel text ensemble clustering based on weighted entropy filtering model. In: Journal of physics: conference series, vol 2024, no 1. IOP Publishing, p 012045. https://doi.org/10.1088/1742-6596/2024/1/012045

Banerjee A, Pujari AK, Panigrahi CR, Pati B, Nayak SC, Weng TH (2021) A new method for weighted ensemble clustering and coupled ensemble selection. Connect Sci 33(3):623–644. https://doi.org/10.1080/09540091.2020.1866496

Zhang D, Yang Y, Qiu H (2023) Two-stage semi-supervised clustering ensemble framework based on constraint weight. Int J Mach Learn Cybern 14(2):567–586. https://doi.org/10.1007/s13042-022-01651-2

Zhong C, Yue X, Zhang Z, Lei J (2015) A clustering ensemble: two-level-refined co-association matrix with path-based transformation. Pattern Recogn 48(8):2699–2709. https://doi.org/10.1016/j.patcog.2015.02.014

Ren Y, Domeniconi C, Zhang G, Yu G (2017) Weighted-object ensemble clustering: methods and analysis. Knowl Inf Syst 51(2):661–689. https://doi.org/10.1007/s10115-016-0988-y

Li F, Qian Y, Wang J, Dang C, Jing L (2019) Clustering ensemble based on sample’s stability. Artif Intell 273:37–55. https://doi.org/10.1016/j.artint.2018.12.007

Niu X, Zhang C, Zhao X, Hu L, Zhang J (2023) A multi-view ensemble clustering approach using joint affinity matrix. Expert Syst Appl 216:119484. https://doi.org/10.1016/j.eswa.2022.119484

Zhou P, Wang X, Du L, Li X (2022) Clustering ensemble via structured hypergraph learning. Inf Fusion 78:171–179. https://doi.org/10.1016/j.inffus.2021.09.003

Thabtah F, Hammoud S, Kamalov F, Gonsalves A (2020) Data imbalance in classification: experimental evaluation. Inf Sci 513:429–441. https://doi.org/10.1016/j.ins.2019.11.004

Vuttipittayamongkol P, Elyan E, Petrovski A (2021) On the class overlap problem in imbalanced data classification. Knowl Based Syst 212:106631. https://doi.org/10.1016/j.knosys.2020.106631

Zhang J, Tao H, Hou C (2023) Imbalanced clustering with theoretical learning bounds. IEEE Trans Knowl Data Eng 35(9):9598–9612. https://doi.org/10.1109/TKDE.2023.3242306

Farshidvard A, Hooshmand F, MirHassani SA (2023) A novel two-phase clustering-based under-sampling method for imbalanced classification problems. Expert Syst Appl 213:119003. https://doi.org/10.1016/j.eswa.2022.119003

Acknowledgements

This paper was supported by the General Project of The National Natural Science Foundation of China (No.62076215, No.62301473), the Jiangsu Provincial Natural Science Foundation of Higher Education (21KJD520006), the Future Network Research Fund of 2021 (FNSRFP-2021-YB-46), the Graduate Research and Practice Innovation Program of Yancheng Institute of Technology (SJCX21_XZ018) and Jiangsu University Qing Lan Project.

Author information

Authors and Affiliations

Contributions

NL: Methodology, writing—original draft, data curation, visualization software. SX: Conceptualization of this study, supervision, funding acquisition, project administration. HX: Writing–review editing, project administration, funding acquisition. XX: Writing—review editing, validation. NG: Writing—review editing. NC: Writing—review editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical and Informed Consent for Data Used

The data sets used in this paper are commonly used in clustering ensemble research. They have been published on UCI (http://archive.ics.uci.edu/ml) and TREC (http://trec.nist.gov) for free download and use by researchers.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, N., Xu, S., Xu, H. et al. A Point-Cluster-Partition Architecture for Weighted Clustering Ensemble. Neural Process Lett 56, 183 (2024). https://doi.org/10.1007/s11063-024-11618-9

Accepted:

Published:

DOI: https://doi.org/10.1007/s11063-024-11618-9