Abstract

Community detection methods are among the most widely used tools for analyzing complex networks. One of the most common approaches to unsupervised and semi-supervised clustering is community detection based on non-negative matrix factorization (NMF). Nonetheless, this approach faces several challenges, including its lack of specificity to the data type and its reduced efficiency when the knowledge priority (that is, the prior knowledge about cluster membership) contains errors. As modularity is a fundamental criterion for evaluating and validating the performance of community detection methods, this paper proposes a new modularity-based community detection approach whose structure is similar to that of symmetric NMF. The proposed approach is a semi-supervised, adaptive, robust community detection model referred to as modularized robust semi-supervised adaptive symmetric NMF (MRASNMF). In this model, the modularity criterion is combined with the NMF model via a novel multi-view clustering method, and the tuning parameter is adjusted iteratively via an adaptive method. MRASNMF makes use of knowledge priority, the modularity criterion, and a robust formulation of non-negative matrix factorization, and it admits an iterative solution. The MRASNMF model was evaluated on five real-world networks in comparison with existing semi-supervised community detection approaches. According to the findings of this study, the proposed method was the most effective on all of the tested networks.

1 Introduction

Many complex systems can be modeled by graphs. Complex networks such as social networks, traffic networks, and biological networks are examples of such applications [1,2,3]. A graph represents a network using nodes and edges; the edges show the connections between the nodes. Graphs can take different forms, such as static graphs, dynamic graphs, hypergraphs, and multi-view graphs. Analyzing networks yields useful knowledge about their characteristics, such as the network structure, the type of communication among network members, and latent network communication. Community detection is one of the most common and effective tools for clustering, analyzing, and exploiting complex networks. According to the type of connection in the network, this tool shows which nodes are closer to each other and can be grouped into a cluster. It also indicates which nodes are intermediate nodes that connect two clusters. By clustering and shrinking networks, graph clustering makes analysis much easier [2]. In recent years, a number of methods have been proposed for clustering graphs, including modularity-based community detection [4, 5], non-negative matrix factorization (NMF) [6], label propagation [7, 8], random walk-based community detection [9, 10], evolutionary optimization-based community detection [11, 12] and multi-view semi-supervised community detection [13].

Using NMF, a graph similarity matrix (such as the adjacency matrix) is factorized into several lower-dimensional matrices that are then used to perform community detection and clustering. Different models and functions are utilized in this method to extract clusters, such as symmetric NMF [15], robust NMF [16, 17], three-factor NMF [18], deep NMF [19,20,21,22], graphic NMF [23, 24], multi-view clustering based on NMF [25], non-linear constrained NMF (NNMF) [26], graph regularized nonnegative matrix factorization (GNMF) [27] and NMF for dynamic graphs [28]. In the robust NMF model proposed in [16, 17], uncertainties such as incorrect knowledge priority and data type combination errors are reduced by using \({l}_{2}\)-norms. In three-factor NMF [18], the modularity criterion is combined with the three-factor model to improve performance in the final clustering step. In deep NMF [19,20,21], the error of the iterative solution method is greatly reduced, and the modularity criterion leads to a significant improvement in modeling. Multi-view clustering based on NMF [25] considers several types of data or feature data to better identify the factor matrices; however, it has flaws such as poor feature extraction, slow convergence speed, and low accuracy. In NNMF [26], the factor matrices form a non-linear model within the NMF method. Graph regularized nonnegative matrix factorization (GNMF) [27] uses graph or data features to improve NMF models. Using Kalman filters and matching network features, [28] addresses the challenge of identifying community changes in dynamic graphs. Concept factorization (CF) [29] is an extension of NMF. In this approach, each cluster is a linear combination of data points, and each data point is a linear combination of cluster centers. To reduce the effect of noise on the CF method, dual graph-regularized sparse concept factorization (DGSCF) is proposed in [30] by adopting an optimization framework based on \({l}_{1}\) and Frobenius norms.

To detect communities, the NMF method can make use of the knowledge priority of the members of each cluster, which makes it one of the most common methods of semi-supervised community detection. All NMF-based community detection models are optimization problems; therefore, knowledge priority can be integrated into the graph similarity matrices [31, 32] or added to the optimization problem as a separate term [33,34,35]. Incorporating the knowledge priority of each cluster can introduce errors, such as entering the wrong knowledge priority or making incorrect human decisions. Therefore, a robust model is proposed in [16] to reduce this error. Compared to other semi-supervised methods, that model reduces the errors caused by incorrect knowledge priority, and it still provides good community detection quality when such errors are absent.

Detecting communities using NMF also has the advantage of being applicable to a variety of data types, such as audio, video, text, and graphics. A number of clustering problems have been investigated using this technique, including audio source separation [36, 37], image processing [38], keyword and document extraction [39], network clustering for information graphs and data [17], and semi-supervised clustering using NMF models for all data [40]. Although NMF is very general and can be applied to a wide range of data types, it cannot study and identify the characteristics of a particular type of data in a specialized manner. In other words, the data type (audio, acoustic, text, network) does not affect NMF-based community detection. To address this limitation, modularity was added in a linear form to NMF-based community detection in [14, 18, 21] in order to improve the quality of clustering in complex networks.

The concept of modularity was introduced in [4] as a criterion for validating graph clustering. To determine the accuracy of community detection, the criterion considers the density of communication between nodes within a cluster as well as the level of communication between groups. In other words, modularity is a popular criterion that is specialized for clustering complex networks. It has been demonstrated in [4] that modularity-based community detection is an NP-hard problem. The pervasiveness of this criterion has led to the development of various modularity-based methods for community detection in complex networks in recent years, including the greedy method [41], the evolutionary optimization solution method [42], and the spectral method [4]. Although modularity-based community detection is commonly employed for clustering, it has limitations, including dependence on the sum of edges [43], the inability to use the knowledge priority of clustering, and the complexity of the problem.

Accordingly, this paper aims to present a new solution combining the advantages of community detection based on NMF and community detection based on the modularity criterion. For this purpose, it is first demonstrated that modularity-based community detection has a structure similar to that of NMF-based community detection. Building on this similarity, robust community detection based on NMF and the modularity criterion can be combined through multi-view clustering methods. In addition, a robust model of the multi-view clustering method is employed in order to reduce the error associated with the user’s input of knowledge priority. Consequently, in addition to providing a new way to reinforce semi-supervised community detection methods, this paper provides an adaptive method for clustering graphs that utilizes graph attribute information, the knowledge priority of cluster members, and network structure properties, together with an iterative solution and a convergence analysis for this method. To improve robust community detection based on NMF, the paper proposes a novel model for semi-supervised community detection called MRASNMF. The main contributions of this paper can be summarized as follows:

1. It is shown that the structure of modularity-based community detection is similar to that of NMF-based community detection. Using this similarity, the knowledge priority of clustering graphs is combined with a novel community detection algorithm.

2. To use the proposed structure, a modularized robust adaptive semi-supervised NMF model (MRASNMF) is developed, and a multi-view robust combination is subsequently employed for detecting communities based on NMF in a semi-supervised manner. An iterative solution method and its convergence analysis are also provided for the model.

3. The clustering method is applied to five real-world networks. The model’s efficiency is examined in relation to the quality of the solution, and it is demonstrated that the model is adaptively trained. Furthermore, this paper shows that the MRASNMF model can improve the algorithm’s efficiency when the knowledge priority contains errors.

The remainder of this paper is organized as follows. Section 2 provides a brief overview of community detection based on the modularity criterion and a review of previous semi-supervised community detection methods based on NMF. The proposed robust semi-supervised adaptive algorithm, its iterative solution method, and the convergence analysis of this method are discussed in Sect. 3. Section 4 introduces the evaluation criteria and the data sets used for testing; afterward, the required parameters are extracted, and the results on the real data sets are reported. Section 5 concludes and summarizes the discussion.

2 Related Works

The literature on graph clustering is briefly discussed in this section, and in the following section, the proposed model will be described. In order to provide clarity, at first, the frequently used notations are described in Table 1.

2.1 Community Detection Based on Modularity

Consider a graph \(G = \left(V, E\right)\), where \(V\) is a set of \(n\) nodes and \(E\) is a set of \(m\) edges between pairs of nodes. The modularity criterion measures the validity of community detection based on the density of edges within clusters and the communication between groups. This criterion is also used on its own to determine the clusters of complex networks [4, 18]. Therefore, in general, community detection based on the modularity criterion can be written as an optimization problem whose objective is the modularity criterion, subject to the following conditions [18]:

where \(B\) is the modularity matrix, \(A\) is the adjacency matrix of the graph, \({k}_{i}\) is the degree of node \(i\), \(X=\left[{X}_{ij}\right]\in {R}^{n\times k}\) is the community membership matrix, \({\left({B}_{1}\right)}_{ij}=\frac{{k}_{i}{k}_{j}}{2m}\), and \(k\) is the number of communities in the graph.
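The quantities defined above can be illustrated with a minimal sketch, assuming \(B=A-{B}_{1}\) (as used later in Sect. 3.3) and the standard normalized form of modularity, \(Q=\frac{1}{2m}\sum_{ij}{B}_{ij}\) over pairs of nodes in the same community. The function names and the toy graph (two triangles joined by one edge) are hypothetical and are not taken from the paper.

```python
def build_adjacency(n, edges):
    """Symmetric 0/1 adjacency matrix A of an undirected graph."""
    adj = [[0] * n for _ in range(n)]
    for u, v in edges:
        adj[u][v] = adj[v][u] = 1
    return adj


def modularity_matrix(adj):
    """B = A - B1 with (B1)_ij = k_i * k_j / (2m)."""
    n = len(adj)
    degrees = [sum(row) for row in adj]
    two_m = sum(degrees)  # every undirected edge is counted twice
    return [[adj[i][j] - degrees[i] * degrees[j] / two_m for j in range(n)]
            for i in range(n)]


def modularity(adj, labels):
    """Q = (1 / 2m) * sum of B_ij over node pairs in the same community."""
    B = modularity_matrix(adj)
    two_m = sum(sum(row) for row in adj)
    n = len(adj)
    total = sum(B[i][j] for i in range(n) for j in range(n)
                if labels[i] == labels[j])
    return total / two_m


# Toy graph (hypothetical): triangles {0,1,2} and {3,4,5} joined by edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
adj = build_adjacency(6, edges)
q_two_communities = modularity(adj, [0, 0, 0, 1, 1, 1])
q_single_community = modularity(adj, [0] * 6)
```

On this toy graph the two-triangle partition scores higher than placing all nodes in one community, which is the behavior the criterion is meant to capture.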

2.2 Community Detection Based on NMF

In NMF, the graph similarity matrix is factorized into two reduced-dimension matrices, and the final clustering is obtained from these factors. The factorization attempts to preserve the latent properties of the original matrix in the reduced matrices. In other words, given the number of clusters \(k\), the similarity matrix \(Y\in {R}^{n\times n}\) is decomposed into two new matrices, namely \(H\in {R}^{n\times k}\) and \(X\in {R}^{n\times k}\) (\(Y\simeq H{X}^{T}\)), where \(H\) represents the community relationship matrix and \(X\) represents the community membership matrix. To obtain these factor matrices, the optimization problem is introduced with the following cost function:

where \({\Vert \cdot \Vert }_{F}\) is the Frobenius norm. This cost function is non-convex; several studies, such as [14], propose iterative solution methods based on Lagrangian functions to obtain the two matrices \(H\) and \(X\).

Another model is symmetric NMF (SNMF), which reduces the modeling error by reducing the number of variables. As expressed in [15], the optimization function takes the form of Eq. (3).

where \(X\in {R}^{n\times k}\) represents the membership of each cluster. Comparing Eqs. (2) and (3), the advantage of the model in Eq. (2) is that \(H\) carries information about the relationships between communities.
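A minimal sketch of how a symmetric factorization \(Y\simeq X{X}^{T}\) can be computed is given below. It assumes the squared Frobenius objective \({\Vert Y-X{X}^{T}\Vert }_{F}^{2}\) and uses the damped multiplicative update commonly applied to symmetric NMF, \(X\leftarrow X\circ \left(1-\beta +\beta \frac{YX}{X{X}^{T}X}\right)\) with \(\beta =0.5\). This is a generic solver chosen for illustration, not the MRASNMF update rule, and all names and the toy matrix are hypothetical.

```python
import random


def matmul(P, Q):
    """Plain-Python matrix product of two list-of-lists matrices."""
    return [[sum(P[i][t] * Q[t][j] for t in range(len(Q)))
             for j in range(len(Q[0]))] for i in range(len(P))]


def transpose(P):
    return [list(col) for col in zip(*P)]


def snmf_cost(Y, X):
    """Squared Frobenius error || Y - X X^T ||_F^2."""
    R = matmul(X, transpose(X))
    n = len(Y)
    return sum((Y[i][j] - R[i][j]) ** 2 for i in range(n) for j in range(n))


def snmf(Y, k, iters=200, beta=0.5, seed=0, eps=1e-12):
    """Damped multiplicative updates for symmetric NMF (illustrative only)."""
    rng = random.Random(seed)
    n = len(Y)
    X = [[rng.random() + 0.1 for _ in range(k)] for _ in range(n)]
    history = [snmf_cost(Y, X)]
    for _ in range(iters):
        YX = matmul(Y, X)
        XXtX = matmul(X, matmul(transpose(X), X))
        # Each factor is at least 1 - beta > 0, so X stays non-negative.
        X = [[X[i][j] * (1 - beta + beta * YX[i][j] / (XXtX[i][j] + eps))
              for j in range(k)] for i in range(n)]
        history.append(snmf_cost(Y, X))
    return X, history


# Toy similarity matrix (hypothetical): two dense blocks joined by one link.
Y = [[1, 1, 1, 0, 0, 0],
     [1, 1, 1, 0, 0, 0],
     [1, 1, 1, 1, 0, 0],
     [0, 0, 1, 1, 1, 1],
     [0, 0, 0, 1, 1, 1],
     [0, 0, 0, 1, 1, 1]]
X, history = snmf(Y, k=2)
# One simple read-out: assign each node to its largest membership entry.
labels = [max(range(2), key=row.__getitem__) for row in X]
```

Because only \(X\) is estimated, the symmetric model has half as many unknowns as the two-factor model, which is the reduction in variables mentioned above.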

2.3 Semi-Supervised Community Detection Based on NMF

Semi-supervised community detection methods are methods in which the knowledge priority of cluster members is used to improve community detection. The knowledge priority of cluster members can be expressed in two ways: two nodes belong to the same cluster, or they belong to different clusters. Additionally, the knowledge priority of each cluster is quantified as a percentage of the knowledge about its members. NMF-based community detection is one of the most common and comprehensive frameworks for semi-supervised community detection, and it usually follows two general approaches.

In the first approach, the similarity matrix used for NMF-based community detection is modified. In [31], Zhang combined the knowledge priority of node labels with the basic structure of the graph, so that a generalized adjacency matrix (\(\overline{A }\)) replaces the adjacency matrix. The generalized matrix is defined as follows:

where \(\alpha \) is a positive value equal to 2 and \(I\) is the identity matrix.

In another study [32], the adjacency matrix of the graph was revised with the initial knowledge of which pairs of nodes share a cluster and which pairs belong to different groups, resulting in the Semi-Supervised Nonnegative Matrix-based semi-supervised clustering (SNMF-SS) algorithm. The input matrix in SNMF-SS is defined as follows:

where \({W}_{ML}\) denotes the matrix of the relationship between members of each cluster and \({W}_{CL}\) represents the matrix of the relationship between members of different clusters.

In [44], using the SNMF-SS algorithm model and substituting a random matrix for the Y matrix, the community partition algorithm based on the random walk and matrix factorization (SNMFRW) algorithm yielded a different community detection in the semi-supervised mode.

In the second approach, in order to use the knowledge priority, the ultimate goal is to add a new expression to the community detection optimization function based on the NMF; therefore, the cost function of community detection consists of the sum of the clustering section based on the NMF and the section related to the knowledge priority of clustering. Equivalently, Eq. (6) shows the total cost function for this approach, as:

where \({J}_{pairs}\) is the primary knowledge optimization function and \({J}_{clustering}\) is the community detection function. In the following, further literature is briefly discussed.

In [33], Yang proposed the Pairwise Constraints-guided Nonnegative Matrix Factorization (PCNMF) method in which knowledge priority is introduced into the optimization of NMF in the form of new relationships. In the new relation, the optimization function is rewritten as follows:

where \(Y\) is the adjacency matrix. Moreover, \(L=D-{A}_{1}\), where \(D\) is the diagonal matrix with \({D}_{ii}=\sum_{j=1}^{n}{\left({A}_{1}\right)}_{ij}\), and \({A}_{1}\) is rewritten with information from the knowledge priority as follows:

In the above equation, \(\alpha \) is a positive value equal to 2.

Similar to PCNMF, several models were proposed in [45] by considering different choices of the graph similarity matrix \(Y\) in \({\Vert Y-H{X}^{T}\Vert }_{F}^{2}\), and the quality and efficiency of the resulting community detection methods were investigated. For instance, the semi-supervised community detection based on the Laplacian matrix (\(S{C}_{LAP}\)) considers community detection based on the Laplacian similarity matrix as the clustering section and presents the final semi-supervised community detection model as follows:

In another study [35], the knowledge of the members of each cluster is linearly combined with the clustering section with the new model. This algorithm considers the knowledge priority part of the members of each cluster as follows:

where the semi-supervised community detection model is considered as:

Here, \({D}_{M}\) is a diagonal matrix whose diagonal entries are the row sums of matrix \(M\).

Also, in [46], the SVDCNMF and SVDCSNMF methods extend the PCNMF models using singular value decomposition algorithm and use generalized adjacent matrix (\(\overline{A }\)) in Eq. (4). SVDCNMF and SVDCSNMF algorithms are, on the other hand, the same as Eq. (7) with the assumption of NMF and SNMF models. Therefore, the only difference in determining the Laplacian (\(L={D}_{{W}_{1}}\)) matrix is that the \({W}_{1}\) matrix is determined as follows:

Additionally, in [34] a Pairwise Constrained Symmetric Nonnegative Matrix Factorization (PCSNMF) was proposed, where the knowledge priority of members and relationships within a group and different clusters were combined with NMF. However, the proposed optimization function was as follows:

where \({B}_{2}=\left[\begin{array}{cccc}0& 1& \cdots & 1\\ 1& 0& \ddots & \vdots \\ \vdots & \ddots & \ddots & 1\\ 1& \cdots & 1& 0\end{array}\right]\) and \(Y\) is the adjacency matrix as defined above. The knowledge priority is summarized in the following matrices:

and

Moreover, in [16], the Robust Semi-Supervised Nonnegative Matrix Factorization (RSSNMF) method is proposed as a robust model that remains efficient in the presence of human errors in the initial selection of node clusters, thereby improving on the PCSNMF method. Its optimization cost function is as follows:

where \({\Vert \cdot \Vert }_{2}\) is the second-order norm and \(Y\) is the adjacency matrix. Owing to the inherent ability of second-order norms to suppress noise and uncertainties, the optimization function in Eq. (16) is robust to errors in the knowledge priority of the cluster members and yields better community detection when such uncertainties are present.

3 The Proposed Community Detection Algorithm

Based on a review of various models and requirements, including customization of the NMF-based community detection method for complex graphs and the enhancement of the model’s robustness to human errors, this paper proposes an approach to improving community detection based on NMF by using a multi-view community detection method based on modularity criterion. In order to combine the robust semi-supervised method based on NMF with the modularity criterion, first, a similar structure is proposed corresponding to the two methods of community detection based on the modularity criterion and based on NMF. An iterative solution method is then presented in order to combine the robust semi-supervised method based on NMF with the modularity criterion. Finally, the convergence of the iterative solution method is demonstrated.

3.1 Relationship Between Modularity-Based Community Detection and NMF

Building on Sects. 2.1 and 2.2, Theorem 1 shows the equivalence between the two community detection methods based on the modularity criterion and on NMF.

Theorem 1

Modularity-based community detection in complex networks has a structure similar to community detection based on NMF, i.e.:

Proof of Theorem 1

The modularity criterion optimization function concerning Eq. (1) is rewritten as follows:

If \(B\) is a constant matrix and \({X}^{T}X=I\), we have,

Using properties of the matrix trace such as \(tr\left({X}^{T}BX\right) =tr\left(BX{X}^{T}\right)\) and \(tr\left({X}^{T}X{X}^{T}X\right)=tr\left(X{X}^{T}X{X}^{T}\right)\), Eq. (19) is rewritten as follows:

Along with Eqs. (1) and (3), and via considering Eq. (20), the optimization cost function of the modularity matrix holds a structure similar to the optimization cost function of NMF. In other words:

Therefore, community detection based on the modularity criterion has a structure similar to community detection based on NMF.

□
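The trace argument in the proof can be checked numerically with a small sketch. It assumes the expansion \({\Vert B-X{X}^{T}\Vert }_{F}^{2}={\Vert B\Vert }_{F}^{2}-2\,tr\left({X}^{T}BX\right)+tr\left({X}^{T}X{X}^{T}X\right)\), which reduces to \({\Vert B\Vert }_{F}^{2}-2\,tr\left({X}^{T}BX\right)+k\) when \({X}^{T}X=I\). A column-normalized indicator matrix is used as one convenient \(X\) satisfying \({X}^{T}X=I\); the helper names and the toy graph are hypothetical.

```python
import math


def modularity_matrix(adj):
    """B = A - B1 with (B1)_ij = k_i * k_j / (2m)."""
    n = len(adj)
    degrees = [sum(row) for row in adj]
    two_m = sum(degrees)
    return [[adj[i][j] - degrees[i] * degrees[j] / two_m for j in range(n)]
            for i in range(n)]


def normalized_indicator(labels, k):
    """Indicator matrix with columns scaled so that X^T X = I."""
    sizes = [labels.count(c) for c in range(k)]
    return [[1.0 / math.sqrt(sizes[c]) if lab == c else 0.0 for c in range(k)]
            for lab in labels]


def trace_xtbx(B, X):
    n, k = len(X), len(X[0])
    return sum(X[i][c] * B[i][j] * X[j][c]
               for c in range(k) for i in range(n) for j in range(n))


def frob_dist_sq(B, X):
    """|| B - X X^T ||_F^2 computed entry by entry."""
    n, k = len(X), len(X[0])
    return sum((B[i][j] - sum(X[i][c] * X[j][c] for c in range(k))) ** 2
               for i in range(n) for j in range(n))


# Toy graph (hypothetical): two triangles joined by the edge 2-3.
adj = [[0, 1, 1, 0, 0, 0],
       [1, 0, 1, 0, 0, 0],
       [1, 1, 0, 1, 0, 0],
       [0, 0, 1, 0, 1, 1],
       [0, 0, 0, 1, 0, 1],
       [0, 0, 0, 1, 1, 0]]
B = modularity_matrix(adj)
b_norm_sq = sum(v * v for row in B for v in row)
k = 2

results = {}
for name, labels in [("triangles", [0, 0, 0, 1, 1, 1]),
                     ("alternating", [0, 1, 0, 1, 0, 1])]:
    X = normalized_indicator(labels, k)
    tr = trace_xtbx(B, X)
    lhs = frob_dist_sq(B, X)
    rhs = b_norm_sq - 2.0 * tr + k  # valid because X^T X = I
    results[name] = (lhs, rhs, tr)
```

Since \({\Vert B\Vert }_{F}^{2}\) and \(k\) are constants, the partition with the larger trace term is also the one with the smaller Frobenius distance, which is the structural similarity stated in Theorem 1.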

3.2 MRASNMF Algorithm

As stated before, because NMF is applied to the analysis of all types of data, it requires specialization for complex graphs [14, 18, 21]. Considering the high efficiency of the modularity criterion in this context, it makes sense to use it to tailor NMF-based community detection to complex networks. Theorem 1 states that community detection based on the modularity criterion has a structure similar to community detection based on NMF when the similarity matrix is taken to be the modularity matrix. A distinctive feature of this formulation is that it allows the modularity criterion to be used for detecting communities with NMF. However, uncertainties in the knowledge priority cause errors in semi-supervised algorithms based on NMF. One way to reduce the error caused by this uncertainty is to use the robust model of the NMF-based community detection method in linear combination with the knowledge priority part of Eq. (15). Likewise, by Theorem 1, a robust model of the modularity-based method can be used to reduce uncertainty errors. Therefore, in order to present an adaptive robust method for efficient semi-supervised community detection, several components, including NMF-based robust community detection, modularity-based robust community detection, and knowledge priority, must be incorporated in the optimization cost function simultaneously. Combining all of these components is one of the primary challenges in this area. To resolve this difficulty, a multi-view robust clustering approach is proposed in accordance with the optimization structure of the robust community detection model based on NMF and modular robust clustering. To this end, the final model of adaptive robust community detection, which incorporates all sections pertaining to community detection based on the modularity criterion, NMF, and knowledge priority [47], is presented as follows:

where \({B}_{2}=\left[\begin{array}{cccc}0& 1& \cdots & 1\\ 1& 0& \ddots & \vdots \\ \vdots & \ddots & \ddots & 1\\ 1& \cdots & 1& 0\end{array}\right]\) and \(W\) is the community relationship matrix. Besides, \(M\) and \(C\) are selected based on [16] as follows:

and,

As a result, considering the variable parameters, the linear combination of two robust models of NMF with adjacent and modularity similarity matrices is presented for the semi-supervised community detection model of NMF where \(\gamma \) is an adaptive parameter that balances two community detection methods based on modularity and NMF.

3.3 The First Iterative Function of the MRASNMF Optimization Function

Since matrix \(X\) is a non-negative matrix in Eq. (22), the final community detection is obtained through a Lagrangian-based solution method. Accordingly, Eq. (22) is rewritten in its trace form, as in Eq. (25). Taking the matrices \(\varphi \) and \(\psi \) as the Lagrange multiplier matrices, the Lagrangian function is defined as follows:

Then, the corresponding Lagrangian function would be:

Therefore, the derivatives of the Lagrangian function concerning the variables are as follows:

From Eqs. (27) to (29), as \(B=A-{B}_{1}\) and considering the Karush–Kuhn–Tucker (KKT) conditions \({\varphi }_{ij}{X}_{ij}=0\), \({\psi }_{ij}{X}_{ij}=0\), and \(\phi \gamma =0\), the MRASNMF iterative rule is obtained as in Eqs. (30) to (32):

From [19], the condition \(\sum_{r=1}^{k}{X}_{ir}=1\) is applied as follows:
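As a minimal sketch, the condition \(\sum_{r=1}^{k}{X}_{ir}=1\) can be enforced by dividing each row of \(X\) by its sum. This is an assumption about the form of the normalization, and the read-out of a community label as the largest entry in each row is likewise only one common convention; the function names and the sample matrix are hypothetical.

```python
def normalize_rows(X, eps=1e-12):
    """Scale each row of X so that its entries sum to (approximately) one."""
    normalized = []
    for row in X:
        total = sum(row) + eps  # eps guards against an all-zero row
        normalized.append([value / total for value in row])
    return normalized


def hard_labels(X):
    """Assign each node to the community with the largest membership value."""
    return [max(range(len(row)), key=row.__getitem__) for row in X]


# Hypothetical membership matrix for three nodes and two communities.
X = [[0.9, 0.3],
     [0.2, 0.6],
     [2.0, 2.0]]
X_norm = normalize_rows(X)
labels = hard_labels(X_norm)
```

After normalization each row can be read as a soft membership distribution over the \(k\) communities; ties, as in the third row, are broken here in favor of the lower community index.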

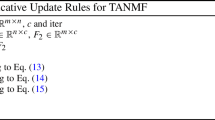

Eventually, according to Eqs. (30), (31), (32), and (33), the MRASNMF model is proposed as in Algorithm 1.

3.4 Proof of Convergence

For the proposed MRASNMF model, the convergence of the parameters \(X\), \(W\), and \(\gamma \) under the update rules in Eqs. (30) to (32) is established by the following theorems.

Theorem 2

Updating parameter \(X\), assuming that the other parameters in Eq. (30) are constant, monotonically reduces the cost function of Eq. (22).

Theorem 3

Updating the \(W\) parameter, assuming that the other parameters in Eq. (31) are constant, monotonically reduces the cost function of Eq. (22).

Theorem 4

Updating the \(\gamma \) parameter, assuming that the other parameters in Eq. (32) are constant, monotonically reduces the cost function of Eq. (22).

The update rules in Eq. (30) and Eq. (31) reduce the cost function of the MRASNMF model in Eq. (22) toward a minimum. To show this, the auxiliary functions of [48] are used. If \(G\left(X,{X}^{t}\right)\) satisfies \(F\left(X\right)\le G\left(X,{X}^{t}\right)\) and \(F\left(X\right)=G\left(X,X\right)\), then \(G\left(X,{X}^{t}\right)\) is an auxiliary function for \(F\left(X\right)\), hence:

Or, according to [47], Eq. (34) can be rewritten as follows:

Therefore, as the definition of auxiliary functions implies, if an auxiliary function satisfying the above conditions is found for the MRASNMF model, then the cost function decreases monotonically and converges to a constant value. An auxiliary function should therefore be constructed for the MRASNMF model. Because of the complexity of the proofs, Theorem 2 is proved in detail, and Theorems 3 and 4 can be proved analogously.
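The descent argument behind auxiliary functions can be written out as a short chain. This is the standard majorize-minimize reasoning, stated here under the assumption that the next iterate \({X}^{t+1}\) is chosen as a minimizer of \(G\left(X,{X}^{t}\right)\) over \(X\); the superscript \(t+1\) is notation introduced for this illustration.

```latex
X^{t+1} = \arg\min_{X} G\left(X, X^{t}\right)
\quad\Longrightarrow\quad
F\left(X^{t+1}\right)
\;\le\; G\left(X^{t+1}, X^{t}\right)
\;\le\; G\left(X^{t}, X^{t}\right)
\;=\; F\left(X^{t}\right)
```

The first inequality uses \(F\left(X\right)\le G\left(X,{X}^{t}\right)\), the second uses the minimizing choice of \({X}^{t+1}\), and the final equality uses \(F\left(X\right)=G\left(X,X\right)\). The cost is therefore non-increasing from one iteration to the next.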

Proof of Theorem 2

According to the community detection model in Eq. (25) with the assumption of updating the parameter \(X\) and the concepts of the auxiliary function mentioned in [48], the model function required for the auxiliary functions and its first and second derivatives are defined as follows.

where \({G}_{2}\) is:

Since the parameter \(\alpha \) is adjustable, Eq. (38) can be made positive by an appropriate selection of \(\alpha \) [49]. Therefore, the function \(\check{F} \left( X \right)\) has a local minimum. To prove that Eq. (25) is monotonically decreasing, it is enough to find an auxiliary function for \(\check{F} \left( X \right)\) and show that the function is decreasing according to Eqs. (35) and (36). Lemma 1 provides such an auxiliary function for \(\check{F} \left( X \right)\) and is used to prove Theorem 2.

Lemma 1

The function \({G}_{RSSNMF-Q}\) is an auxiliary function for \(\check{F} \left(X \right)\). It is defined as follows:

where \({G}_{1}\) is defined as:

Proof of Lemma 1

First, note that \(\check{F}_{{X_{ij} }} \left( {X_{ij} } \right) = G_{RSSNMF - Q} \left( {X_{ij} ,X_{ij} } \right)\) holds. To test the validity of \(\check{F}_{{X_{ij} }} \left( {X_{ij} } \right) \le G_{RSSNMF - Q} \left( {X_{ij} ,X_{ij}^{t} } \right)\) [45], the nonlinear function \(\check{F}_{{X_{ij} }} \left( {X_{ij} } \right)\) is linearized around the point \(X_{ij}^{t}\) by the Taylor series as in Eq. (42); hence:

From the linear Eqs. (42) and (40) and to test \(\check{F}_{{X_{ij} }} \left( {X_{ij} } \right) \le G_{RSSNMF - Q} \left( {X_{ij} ,X_{ij}^{t} } \right)\), the following relation is established:

Since \({X}_{ij}^{t}>0\), then:

Next, we rewrite the left side of Eq. (44) as:

where \({G}_{3}\) is defined as follows.

Considering Eqs. (44) and (45), then, we have:

Moreover, considering that all parameters are positive and \(0<\beta \le 1\), then Eq. (48) may be rewritten as:

Given Eqs. (44), (47), and (48), it is sufficient that \({\left({G}_{1}\right)}_{{\text{ij}}}>{\left({G}_{2}\right)}_{ii}{X}_{ij}^{t}\). Considering the definitions of \({G}_{1}\) and \({G}_{2}\) as in Eqs. (40), (38) and \({B}_{1}=A-B\), we have:

As a result, \({\left({G}_{1}\right)}_{{\text{ij}}}>{\left({G}_{2}\right)}_{ii}{X}_{ij}^{t}\).

Therefore, Lemma 1 follows by reversing the steps from Eq. (49) back to Eq. (43).

□

From Lemma 1, \({G}_{RSSNMF-Q}\) is an auxiliary function for \(\check{F}_{{X_{ij} }} \left( {X_{ij} } \right).\) Accordingly, given Eq. (35), the closed-form solution is as follows:

Rewriting \(\check{F}_{{X_{ij} }}^{\prime }\) respecting \(G_{1}\) and \(G_{3}\) as \(\check{F}_{{X_{ij} }}^{\prime } \left( {X_{ij}^{t} } \right) = 2\left( { - \left( {G_{3} } \right) + \left( {G_{1} } \right)} \right)\), Eq. (50) is developed as:

Therefore, assuming Eq. (35), the aforementioned closed-form equation is:

Ultimately, the iterative equation in Theorem 2 is proved by \(\beta =1\).

3.5 Complexity Analysis

In the MRASNMF model, the computational complexity of the update rules is \(O\left(k{n}^{2}\right)+O\left({n}^{2}\right)+O\left({k}^{2}n\right)+O\left({k}^{2}\right)+O\left(k\right)+O\left(kn\right)\), \(O\left(k{n}^{2}\right)+O\left({n}^{2}\right)+O\left({k}^{2}n\right)\), and \(O\left(nk\right)+O\left({n}^{2}\right)+O\left(n\right)+O\left(k\right)\) for \(W\), \(X\), and \(\gamma \), respectively. Further, the computational complexity of MMNMF models is \(O\left({n}^{2}k\right)+O\left({k}^{2}n\right)+O\left(n\right)\). Since \(k\ll n\), the total computational complexity of this part for \({I}_{t}\) iterations is \(O\left({I}_{t}k{n}^{2}\right)+O\left({I}_{t}{k}^{2}n\right)+O\left({I}_{t}{k}^{2}\right)+O\left({I}_{t}kn\right)+O\left({I}_{t}k\right)+O\left({I}_{t}n\right)+O\left({I}_{t}{n}^{2}\right)\approx O\left({I}_{t}k{n}^{2}\right)\).

4 Results and Comparative Analysis

The MRASNMF method was motivated by knowledge priority errors, by the fact that NMF methods are applied uniformly to all data types, and by the inability of modularity-based clustering to use knowledge priority. In this section, the assessment standards are introduced first. Next, the way in which the basic knowledge priority and the knowledge priority errors of the communities of some nodes are generated is explained, and the set of networks used to evaluate the methods is introduced. Then, the tuning procedure of the \(\alpha \) parameter is explained. Finally, the performance of the MRASNMF method is compared with a variety of semi-supervised and unsupervised methods.

4.1 Assessment Standards

The modularity criterion (\(Q\)) and normalized mutual information (\(NMI\)) are used to test the community detection models. Mutual information is a comprehensive measure for evaluating community detection models. It relies on the ground-truth partition label of each node and a finite number of clusters. The criterion of mutual information may be written as follows:

where \({n}_{{C}_{i}}\) is the number of members in \({C}_{i}\) and \(\left|C\right|\) is the total number of clusters. The criterion of mutual information equals one if the clustering \(C\) is identical to the clustering \({C}^{\mathrm{^{\prime}}}\), and equals zero if \(C\) and \({C}^{\mathrm{^{\prime}}}\) are entirely independent of each other.
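As a concrete reference for the two criteria, the sketch below computes NMI and modularity under their commonly used definitions. The exact normalization used in the paper is not recoverable from the text, so the sketch assumes \(NMI = 2I(C;C^{\prime})/(H(C)+H(C^{\prime}))\) and the Newman-Girvan modularity for an undirected graph; the function names are illustrative.

```python
import math
from collections import Counter

import numpy as np

def nmi(labels_a, labels_b):
    """Normalized mutual information, assuming NMI = 2*I / (H_a + H_b)."""
    n = len(labels_a)
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    joint = Counter(zip(labels_a, labels_b))
    # The three sums below are n times I, H_a and H_b; the factor n cancels.
    mutual = sum(n_ij * math.log(n_ij * n / (count_a[a] * count_b[b]))
                 for (a, b), n_ij in joint.items())
    h_a = -sum(c * math.log(c / n) for c in count_a.values())
    h_b = -sum(c * math.log(c / n) for c in count_b.values())
    if h_a + h_b == 0:
        return 1.0  # convention: both partitions consist of a single cluster
    return 2.0 * mutual / (h_a + h_b)

def modularity(A, labels):
    """Q = (1/2m) * sum_ij (A_ij - k_i k_j / 2m) * [c_i == c_j]."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    degrees = A.sum(axis=1)
    two_m = degrees.sum()
    same = labels[:, None] == labels[None, :]
    B = A - np.outer(degrees, degrees) / two_m
    return float((B * same).sum() / two_m)
```

NMI needs the ground-truth labels, whereas modularity only needs the graph and the detected partition, which is why the two criteria can move in different directions.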

4.2 Knowledge Priority and Knowledge Priority Errors

Two important features of the evaluation in this paper are the use of basic knowledge about the communities of some nodes and the introduction of errors into that basic knowledge for each algorithm. To use the initial knowledge, the \(\frac{n\left(n-1\right)}{2}\) pairs of nodes of each undirected graph must first be categorized. Random nodes are chosen according to the percentages set for the initial knowledge (1%, 5%, 10%, 20%, and 30%). Based on the labels of the real communities, the selected pairs of nodes are classified according to whether they belong to the same cluster or to separate clusters, and each algorithm builds its matrices from this initial knowledge of the graph. To introduce basic knowledge errors, pairs of nodes that do not belong to the same cluster are marked as members of one cluster, and pairs of nodes that belong to the same cluster are marked as members of different clusters. In this way, different error percentages (2%, 4%, 6%, 8%, and 10%) are injected into the initial knowledge.
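The procedure above can be sketched as follows. The sketch assumes that the knowledge percentage is a fraction of all node pairs and that the error percentage is a fraction of the selected pairs; the text does not state either convention explicitly, and the function and variable names are illustrative.

```python
import itertools
import random

def sample_constraints(labels, ratio, error_rate=0.0, seed=0):
    """Draw must-link / cannot-link pairs from ground-truth labels.

    ratio      -- fraction of the n(n-1)/2 pairs used as prior knowledge (assumed)
    error_rate -- fraction of the drawn pairs whose relation is flipped (assumed)
    """
    rng = random.Random(seed)
    n = len(labels)
    pairs = list(itertools.combinations(range(n), 2))  # all n(n-1)/2 pairs
    chosen = rng.sample(pairs, round(ratio * len(pairs)))
    flipped = set(rng.sample(range(len(chosen)), round(error_rate * len(chosen))))
    must_link, cannot_link = [], []
    for idx, (i, j) in enumerate(chosen):
        same = labels[i] == labels[j]
        if idx in flipped:
            same = not same  # inject a knowledge priority error
        (must_link if same else cannot_link).append((i, j))
    return must_link, cannot_link
```

Enumerating all pairs takes quadratic memory, which is acceptable for networks of the size considered here but would need sampling by index for much larger graphs.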

4.3 Network Sets

In this article, five real data sets are considered, covering both a simple network (the karate network) and complex networks (the other four), so that the efficiency of the proposed algorithm can be checked on both types of data. Additional information on these five data sets is given in Table 2, where \(m\) and \(n\) are the number of edges and nodes in each network, respectively, and are assumed to be known. As can be seen, the complex networks have more nodes and edges. Choosing these networks therefore makes it possible to check how the proposed algorithm behaves on networks of different sizes.

4.4 Internal Parameter Adjustment

In the proposed MRASNMF model, \(\alpha \) is the weighting parameter that controls the influence of the initial knowledge about whether nodes are in the same group or not. This parameter can have a significant effect on the optimization function and on reaching the best solution. If \(\alpha \) is very large, the part of the model that identifies communities through non-negative matrix factorization becomes ineffective, and the best clustering is not achieved. On the other hand, if \(\alpha \) is very small (close to zero), the initial knowledge about the clusters of some nodes does not enter the community identification algorithm. Evaluating and selecting the best value of \(\alpha \) can therefore lead to the best clustering of the complex network nodes.

Figure 1 shows an example of the values of the measurement criteria for the political books data set, assuming 10% basic knowledge. As seen in Fig. 1, different values of the \(\alpha \) parameter affect the quality of the result. The best values of \(\alpha \) for the data sets in Table 2, at the different initial knowledge percentages used in the final tests, are recorded in Table 3. To adjust the parameters of the proposed MRASNMF model, we employed the method proposed in [16] for all the data sets in Table 2. That is, several tests were first performed for different values of \(\alpha \) in the range 0.5–10, in steps of 0.5. At each step, the mutual information criterion and the modularity criterion were calculated. Then, taking the mutual information criterion as the main criterion, the best values of \(\alpha \) were selected as listed in Table 3.
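The tuning procedure amounts to a one-dimensional grid search. A minimal sketch follows, assuming a caller-supplied `score_fn` (a hypothetical name) that runs the model for a given \(\alpha \) and returns the NMI of the resulting partition.

```python
def alpha_grid(start=0.5, stop=10.0, step=0.5):
    """Candidate values 0.5, 1.0, ..., 10.0 (computed by index to avoid drift)."""
    count = int(round((stop - start) / step)) + 1
    return [start + i * step for i in range(count)]

def select_alpha(score_fn, alphas=None):
    """Return the alpha with the highest score and the full score table.

    score_fn is a hypothetical callable: alpha -> NMI of the detected communities.
    """
    alphas = alpha_grid() if alphas is None else alphas
    scores = {alpha: score_fn(alpha) for alpha in alphas}
    best = max(scores, key=scores.get)  # first maximum wins on ties
    return best, scores
```

Keeping the full score table makes it straightforward to record a secondary criterion, such as modularity, alongside the NMI used for selection.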

4.5 Comparative Performance Analysis of MRASNMF Method

In this section, several unsupervised and semi-supervised community detection algorithms are evaluated in comparison with the proposed MRASNMF method. Among the semi-supervised methods, PCNMF [33], PCSNMF [34], RSSNMF [16], SVDCSNMF [35], and MRASNMF use a linear combination of the knowledge priority, whereas SNMF-SS [31] and NMF_LSE [32] combine the knowledge priority in the similarity matrix. The recent unsupervised community detection algorithms considered for comparison are DPNMF [54], NMFGAAE [55], and NCNMF [56]. The DPNMF method uses an improved density peak clustering to determine the number of cores, and employs non-negative double singular value decomposition initialization to reduce the approximation error. The NMFGAAE method is composed of two major modules: NMF and a Graph Attention Auto-Encoder. The NCNMF method makes use of node centrality as well as a new similarity measure that takes into account the proximity of higher-order neighbors.

Table 4 presents the experimental results of the semi-supervised and unsupervised algorithms on five real-world networks. In the absence of knowledge priority, unsupervised clustering methods such as DPNMF and NCNMF achieve better clustering than the semi-supervised algorithms. Nevertheless, among the semi-supervised algorithms, MRASNMF detects better communities than the others. In the following, the performance of the semi-supervised methods is analyzed and compared for different knowledge priority ratios.

Tables 5, 6, 7, 8, and 9 present the experimental results of the semi-supervised algorithms in comparison with MRASNMF for various ratios of knowledge priority. The parameters of the MRASNMF algorithm are set for each data set as shown in Table 3. For the other algorithms, the configurable parameters have been set according to their underlying references. The following observations are derived by comparing the results in Tables 5, 6, 7, 8, and 9:

- According to Table 5, for a simple data set such as karate, the algorithm presented in this article, like the other methods, achieves the best performance without using any initial knowledge.

- Based on Tables 5, 6, 7, 8, and 9, adding different percentages of basic knowledge leads, in all the algorithms, to clustering that is closer to the real communities, that is, to an improved mutual information criterion. In some cases, however, such as Tables 7 and 9, the quality of the detected communities decreases in terms of the modularity criterion. The reason is that semi-supervised community detection based on non-negative matrix factorization tries to reach the real communities by introducing the initial knowledge of the cluster of each node, and does not consider the type and characteristics of the graph. Methods that aim to reach the real communities therefore improve the mutual information but do not guarantee improved modularity.

- The comparison of the results shows that for complex data, as in Tables 5, 6, 7, 8, and 9, the algorithm proposed in this article can perform better than the other three algorithms. In other words, the MRASNMF algorithm reaches the maximum value of the mutual information criterion while relying on less initial knowledge. On average, our algorithm provides better clustering than the other recent algorithms with less initial knowledge.

- According to the results presented in Tables 5, 7, 8, and 9, algorithms that combine the basic knowledge linearly, such as SVDCSNMF, respond similarly to algorithms that combine the basic knowledge in the similarity matrix, such as SNMF-SS. However, as the percentage of basic knowledge increases, the performance of the methods that combine the basic knowledge in the similarity matrix improves considerably faster.

4.6 Robustness of Algorithms

The robustness of a method refers to how well it maintains its clustering efficiency and quality in the presence of erroneous information in the initial knowledge, and how well it corrects that information. To evaluate the algorithms, we assume that 5% correct initial knowledge is available and randomly introduce wrong information about the members of each cluster at different percentages (1–10%). The result of each algorithm is then measured with the mutual information criterion. The results are plotted in Fig. 2, and Table 10 shows the execution time of each algorithm for each network. The following observations can be drawn from Fig. 2 and Table 10:

- In all algorithms, adding initial knowledge errors at different percentages decreases the quality and efficiency of community identification.

- Robust algorithms such as MRASNMF and RSSNMF lose efficiency with a lower slope than the other algorithms.

- The algorithm presented in this article has a lower slope of efficiency reduction and the best performance compared to the other methods. It is also more resistant to uncertainty at larger error percentages.

- Notably, as the initial knowledge percentage increases, the error of the linear combination algorithms based on the initial knowledge (such as SNMF-SS) grows faster than that of the algorithms that combine the initial knowledge in the similarity matrix (such as PCNMF and SVDCSNMF).

- Although the execution time of the proposed algorithm is comparable to that of the other algorithms, it achieves better grouping quality in the presence of uncertainty in the initial knowledge of the group members.
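The "slope" of efficiency reduction mentioned in these observations can be quantified with a least-squares line through the NMI values measured at each error percentage. The sketch below is one possible way to do this and is not taken from the paper; the function name and the choice of a linear fit are assumptions.

```python
import numpy as np

def degradation_slope(error_percentages, nmi_values):
    """Slope of the least-squares line NMI ~ slope * error + intercept.

    A slope closer to zero means that the NMI degrades more slowly as
    knowledge priority errors are added, i.e. the method is more robust.
    """
    slope, _intercept = np.polyfit(error_percentages, nmi_values, deg=1)
    return float(slope)
```

Comparing this value across algorithms on the same network gives a single number per method that summarizes a curve such as those in Fig. 2.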

5 Conclusion

Complex networks are an approach for modeling complex systems. Various methods, such as detecting communities and predicting hidden edges, are used to analyze and evaluate these networks. Community detection, or clustering, is among the most widely used tools for classification, for analyzing the hidden parts of a network, and for achieving predetermined goals. Community detection based on non-negative matrix factorization is one of the common methods for semi-supervised community detection in graphs, but it faces challenges such as a lack of specialization for the data type and reduced efficiency in the presence of errors in the initial knowledge of the members of each cluster. The modularity criterion is one of the common bases for detecting graph communities. To deal with these challenges, in this paper we have proved that community detection based on the modularity criterion has a structure similar to that of community detection based on non-negative matrix factorization. Using this new modularity-based model, a new semi-supervised robust community detection model has been formulated that takes advantage of basic knowledge, uses the modularity criterion, and improves the non-negative matrix factorization. The problem is then solved by an iterative method, yielding the MRASNMF algorithm, and the convergence of this algorithm has been checked. Finally, the algorithms were evaluated on five different data sets, and the efficiency and effectiveness of the proposed algorithm were investigated on the basis of these results. The effectiveness of the developed algorithm and its robustness to initial knowledge errors have been demonstrated.

Data Availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

References

Kumar S, Hanot R (2021) Community detection algorithms in complex networks: a survey. Adv Signal Process Intell Recognit Syst 1365:202–215

Girvan M, Newman MEJ (2002) Community structure in social and biological networks. Proc Natl Acad Sci USA 99(12):7821–7826

Newman MEJ (2018) Networks. Oxford University Press, New York

Newman MEJ, Girvan M (2004) Finding and evaluating community structure in networks. Phys Rev E 69(2):026113

Fariahhag N, Mordi M, Wang ZJ (2019) Community structure detection from networks with weighted modularity. Pattern Recogn Lett 122:14–22

Li T, Wang X, Zhu SH, Ding C (2011) Community discovery using nonnegative matrix factorization. Data Min Knowl Disc 22(3):493–521

Li C, Chen H, Li T (2022) A stable community detection approach for complex network based on density peak clustering and label propagation. Appl Intell 52:1188–1208

Wang T, Chen S, Wang X, Wang J (2020) Label propagation algorithm based on node importance. Phys A Stat Mech Appl 551:124137

Rosvall M, Bergstrom CT (2008) Maps of random walks on complex networks reveal community structure. Proc Natl Acad Sci 105(4):1118–1123

Zhou J, Li L, Zeng A, Fan Y, Di Z (2018) Random walk on signed networks. Phys A 508:558–556

Shang R, Zhao K, Zhang W, Feng J, Li Y, Jiao L (2022) Evolutionary multiobjective overlapping community detection based on similarity matrix and node correction. Appl Soft Comput 127:109397

Yin Z, Deng Y, Zhang F, Luo Z, Zhu P, Gao C (2021) A semi-supervised multi-objective evolutionary algorithm for multi-layer network community detection. In: International Conference on Knowledge Science, Engineering and Management (KSEM 2021), pp 179–190

Whang JJ, Du R, Jung S, Lee G, Drake B (2020) MEGA: Multi-view semi-supervised clustering of hypergraphs. Proc VLDB Endow 13(5):698–711

Ghadirian M, Bigdeli N (2023) Hybrid adaptive modularized tri-factor non-negative matrix factorization for community detection in complex networks. Scientia 30(3):1068–1084

Kuang D, Ding C, Park H (2012) Symmetric nonnegative matrix factorization for graph clustering. In: Proceedings of the 2012 SIAM International Conference on Data Mining, SIAM, pp 106–117

He C, Zheng Q, Tang Y, Liu S, Zheng J (2019) Community detection method based on robust semi-supervised nonnegative matrix factorization. Phys A 523(1):279–291

He C, Tang Y, Liu K, Li H, Liu S (2018) A robust multi-view clustering method for community detection combining link and content information. Phys A 514:396–411

Yan C, Chang Z (2019) Modularized tri-factor nonnegative matrix factorization for community detection enhancement. Phys A Stat Mech Appl 533:122050

Zheng PM, Zhou Z (2020) Structural deep nonnegative matrix factorization for community detection. Appl Soft Comput 97(B):106846

Chen Z, Lin P, Chen Z, Ye D (2022) Diversity embedding deep matrix factorization for multi-view clustering. Inform Sci 610:114–125

Huang J, Zhang T, Yu W, Zhu J, Cai E (2021) Community detection based on modularized deep nonnegative matrix factorization. Int J Pattern Recognit Artif Intell 35(2):2159006

Wang D, Li T, Huang W, Luo Z, Deng P, Zhang P, Ma M (2023) A multi-view clustering algorithm based on deep semi-NMF. Inf Fusion 99:101884

Jin H, Li S (2019) Graph regularized nonnegative matrix tri-factorization for overlapping community detection. Phys A 515:376–387

Chen C, Zho W, Peng B (2022) Differentiated graph regularized non-negative matrix factorization for semi-supervised community detection. Phys A Stat Mech Appl 604:127692

Wang D, Li T, Deng P, Liu J, Huang W, Zhang F (2023) A generalized deep learning algorithm based on NMF for multi-view clustering. IEEE Trans Big Data 9(1):328

Wang D, Li T, Deng P, Zhang F, Huang W, Zhang P, Liu J (2023) A generalized deep learning clustering algorithm based on non-negative matrix factorization. ACM Trans Knowl Discov Data 17(7):1–20

Deng P, Li T, Wang H, Wang D, Horng S, Liu R (2022) Graph regularized sparse non-negative matrix factorization for clustering. IEEE Trans Comput Soc Syst 99:1–12

Zhang XY, Yuan Y (2021) Dynamic community detection via Kalman filter-incorporated non-negative matrix factorization. In: IEEE International Conference on Networking, Sensing and Control (ICNSC)

Xu W, Gong Y (2004) Document clustering by concept factorization. In: Proceedings of the 27th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp 202–209

Wang D, Li T, Deng P, Wang H, Zhang P (2022) Dual graph-regularized sparse concept factorization for clustering. Inf Sci 607:1074–1088

Zhang ZY (2013) Community structure detection in complex networks with partial background information. Europhys Lett 101(4):48005

Ma X, Gao L, Yong X, Fu L (2010) Semi-supervised clustering algorithm for community structure detection in complex networks. Phys A 389(1):187–197

Yang Y, Hu B (2007) Pairwise constraints-guided non-negative matrix factorization for document clustering. In: IEEE/WIC/ACM International Conference on Web Intelligence, pp 250–256

Shi XH, Lu HT, He YC, He S (2015) Community detection in social network with pairwisely constrained symmetric non-negative matrix factorization. In: Proceedings of the 7th IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining, pp 541–546

Liu X, Wang WJ, He DX, Jiao PF, Jin D, Cannistracic CV (2017) Semi-supervised community detection based on non-negative matrix factorization with node popularity. Inf Sci 381(12):304–321

Essid S, Févotte C (2013) Smooth nonnegative matrix factorization for unsupervised audiovisual document structuring. IEEE Trans Multimed 15(2):415–425

Févotte C, Vincent E, Ozerov A (2018) Single-channel audio source separation with NMF divergences, constraints and algorithms. In: Makino S (ed) Audio source separation. Springer, Berlin, pp 1–24

Peng S, Ser W, Chen B, Lin Z (2021) Robust semi-supervised nonnegative matrix factorization for image clustering. Pattern Recognit 111:107683

Huang K, Fu X, Sidiropoulos ND (2016) Anchor-free correlated topic modeling: identifiability and algorithm. Advances in Neural Information Processing Systems, pp 1794–1802

Zhang Y, Wang H, Yang Y, Zhou W, Li T, Ouyang X (2021) Deep matrix factorization with knowledge transfer for lifelong clustering and semi-supervised clustering. Inf Sci 570:795

Sanchez J, Duarte A (2018) Iterated Greedy algorithm for performing community detection in social networks. Future Gener Comput Syst 88:785–791

Guerrero M, Montoya FG, Baños R, Alcayde A, Gil C (2017) Adaptive community detection in complex networks using genetic algorithms. Neurocomputing 266:101–113

Fortunato S, Barthélemy M (2007) Resolution limit in community detection. Proc Natl Acad Sci 104(1):36–41

Li WM, Xie J, Xin MG, Jun M (2018) An overlapping network community partition algorithm based on semi-supervised matrix factorization and random walk. Expert Syst Appl 91:277–285

Yang L, Cao XC, Jin D, Wang X, Meng D (2015) A unified semi-supervised community detection framework using latent space graph regularization. IEEE Trans Cybern 45(11):2585–2598

Lu PH, Sang X, Zhao Q, Lu J (2020) Community detection algorithm based on nonnegative matrix factorization and pairwise constraints. Phys A Stat Mech Appl 545:123491

Pu J, Zhang Q, Zhang L, Du B, You J (2016) Multiview clustering based on robust and regularized matrix approximation. In: 23rd International Conference on Pattern Recognition, pp 2550–2555

Lee DD, Seung HS (2001) Algorithms for non-negative matrix factorization. Adv Neural Inf Process Syst 13:556–562

Luo X, Liu Z, Jin L, Zhou Y, Zhou M (2021) Symmetric nonnegative matrix factorization-based community detection models and their convergence analysis. IEEE Trans Neural Netw Learn Syst 33(3):1203–1215

Zachary WW (1977) An information flow model for conflict and fission in small groups. J Anthropol Res 33(4):452–473

Lancichinetti A, Fortunato S, Radicchi F (2008) Benchmark graphs for testing community detection algorithms. Phys Rev E 78(4):046110

Lusseau D, Schneider K, Boisseau OJ, Haase P, Slooten E, Dawson SM (2003) The bottlenose dolphin community of doubtful sound features a large proportion of long-lasting associations. Behav Ecol Sociobiol 54(4):396–405

Kunegis J (2013) KONECT: The Koblenz Network Collection. In: Proceedings of the 22nd International Conference on World Wide Web Companion, pp 1343–1350

Adamic LA, Glance N (2005) The political blogosphere and the 2004 US election: divided they blog. In: Proceedings of the 3rd Workshop on Link Discovery, ACM 2005, pp 36–43

Lu H, Zhao Q, Sang X, Lu J (2020) Community detection in complex networks using nonnegative matrix factorization and density-based clustering algorithm. Neural Process Lett 51:1731–1748

He C, Zheng Y, Fei X, Li H, Hu Z, Tang Y (2022) Boosting nonnegative matrix factorization based community detection with graph attention auto-encoder. IEEE Trans Big Data 8:968–981

Su S, Guan J, Chen B, Huang X (2023) Nonnegative matrix factorization based on node centrality for community detection. ACM Trans Knowl Discov Data 17(6):1–21

Funding

The authors confirm that no funding was received for this research.

Author information

Contributions

M. Ghadirian developed the theory and performed the simulations as part of his PhD dissertation and wrote the main manuscript text. N. Bigdeli contributed to the final version of the manuscript and supervised the project.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ghadirian, M., Bigdeli, N. A New Adaptive Robust Modularized Semi-Supervised Community Detection Method Based on Non-negative Matrix Factorization. Neural Process Lett 56, 134 (2024). https://doi.org/10.1007/s11063-024-11588-y
