Abstract

Based on the coding mechanism and interactive features of visual information in the visual pathway, a new method of image contour detection is proposed. Firstly, simulating the visual adaptation characteristics of retinal ganglion cells, an adaptation & sensitization regulation model (ASR) based on the adaptation-sensitization characteristics is proposed, which introduces a sinusoidal function curve modulated by amplitude, frequency and initial phase to dynamically adjusted color channel response information and enhance the response of color edges. Secondly, the color antagonism characteristic is introduced to process the color edge responses, and the obtained primary contour responses is fed forward to the dorsal pathway across regions. Then, the coding characteristics of the “angle” information in the V2 region are simulated, and a double receptive fields model (DRFM) is constructed to compensate for the missing detailed contours in the generation of primary contour responses. Finally, a new double stream information fusion model (DSIF) is proposed, which simulates the dorsal overall contour information flow by the across-region response weighted fusion mechanism, and introduces the multi-directional fretting to simulate the fine-tuning characteristics of ventral detail features simultaneously, extracting the significant contours by weighted fusion of dorsal and ventral information streams. In this paper, the natural images in BSDS500 and NYUD datasets are used as experimental data, and the average optimal F-score of the proposed method is 0.72 and 0.69, respectively. The results show that the proposed method has better results in texture suppression and significant contour extraction than the comparison method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The contour, as the boundary between the target and the background, is a curve outlined and combined by numerous target edge points. In human vision, once the visual cortex area responsible for contour perception is damaged, it will cause humans to be unable to recognize objects and thus unable to perceive the scene. Therefore, in the image understanding and analysis of computer vision, the accuracy of image contour features is of great significance.

Most of the traditional contour detection methods represented by the Sobel operator are based on the jump of image pixel grayscale, extracting contours based on the change information of local neighborhood gradients, which has high detection efficiency and mathematical interpretability. However, such methods overlook the important role of visual mechanisms such as adaptability, directionality, and multi-channel collaborative coding in contour perception, and are not suitable for detailed images with complex backgrounds.

1.1 Methods Based on Deep Learning

With the widespread use of artificial intelligence algorithms, the contour detection methods based on deep learning have emerged, which can be roughly divided into two stages: the contour detection algorithm based on local area and based on global end-to-end model. In the contour detection algorithm based on local area, Bertasius et al. [1] first proposed the Deep Edge algorithm, which extracted multi-scale information from the local area of the image and used two subnetworks (classification and regression) to calculate the classification and regression results to obtain the final contour output; Subsequently, BERTASIUS et al. [2] improved it and proposed a deep network model (HFL) that utilized object level information to predict boundaries. It used feature vectors to predict the edge probability of candidate points, separating objects from image backgrounds and recognizing object contour features more accurately. Among the global end-to-end contour detection algorithm, Xie et al. [3] proposed the first end-to-end contour detection algorithm HED, which utilized the multi-output (side output and fused output) structure to extract global features of the image while restoring the original resolution image; Liu et al. [4] proposed a new CNN architecture (RCF) based on HED, which performed pixel level prediction in the form of image-to-image, fully utilizing multi-scale and multi-level information of objects to predict image edges, and compressing features of convolutional layers with the same resolution, greatly improving the performance of contour detection. However, the deep learning-based methods have obvious limitations, such as the need for massive training data and long training time, as well as problems such as model inexplicability, excessive parameter variables, and complex calculations.

1.2 Methods Based on Brain-Like Vision

With the progress of visual neurophysiology and psychological experiments, brain-like vision has received more and more attention. For example, some studies [5] have used the color antagonistic mechanism to simulate the characteristics of the cell receptive field in the optic pathway, and combined with the spatial sparsity features to suppress image texture, so as to effectively extracted the color image contours while reducing the texture background. Inspired by this model, subsequent scholars have improved it from different perspectives. Lin et al. [6] used light and dark channels to simulate the light and dark adaptation mechanism of rods and cones, effectively detecting the brightness contrast boundary, thus strengthening the contour information; Zhao et al. [7] proposed a new center-surround interaction model, which flexibly used the receptive field direction and its nearby subdomains to perceive local weak contours, and it had good robustness to suppress non contour textures. However, the above models usually utilized the functional characteristics of low-level visual areas on the visual pathway, ignoring the connection between high-level and low-level visual areas, as well as the final integration effect of high-level visual areas on the image; Therefore, some studies [8] inspired by the receptive field of neurons, believed that there was a receptive field surrounding modulation mechanism similar to neurons in V1 area in V2 area, thus introducing the connection between primary and advanced visual areas to improve the integrity of contour extraction; There was also research [9] that proposed a visual information interaction model based on bilateral attention pathways to address the characteristics of visual information diversion, transmission, and interaction response in visual pathways. The target contour was obtained through the difference in information between the dorsal and ventral sides in advanced visual regions; In addition, there were studies [10] that used HSV to encode the hue, saturation, and brightness of photoreceptor adjusted images, and introduced visual degradation mechanisms in the primary and advanced visual regions to simulate a visual perception model that was more in line with the human visual system. It is worth pointing out that the contour detection models based on initial and advanced visual regions mentioned above have good detection results, but most models ignored the impact of color edge response on the contour information of the image subjects. Some models that considered color edge response have problems with complex algorithm processing and low computational efficiency; Moreover, the above model only considered the hierarchical feedforward transmission process of visual information flow in the visual pathway, which weakened the cross-view modulation effect of the pre-nodes on the higher cortex region, which was prone to texture edge error detection, subject contour omission, etc.

To solve the problems mentioned above, this paper simulates the encoding processing and interaction characteristics of visual information in each response region of the primary and advanced information transmission pathways. It introduces a simple algorithm to enhance color edge response while adopting cross-region transmission to strengthen the information interaction between the primary and advanced visual regions. The main points of this paper are summarized as following steps:

-

(1)

This paper utilizes the visual adaptation features of ganglion cells to adaptively adjust the response of three-color channels, and an Adaptation & Sensitization Regulation model (ASR) is constructed to enhance the color edge response.

-

(2)

Considering the influence of the “angle” information coding characteristics of V2 area on the detail contour, a double receptive fields model (DRFM) is constructed to supplement the detail contour missed in the primary contour response generation. At the same time, in order to modify the image contour information more precisely, cross-view response weighted fusion model is used to simulate the output of the dorsal flow, and the multi-directional fretting is used to simulate the characteristics of the ventral flow.

-

(3)

Finally, based on the velocity of the dorsal and ventral information flow, the output response results of the double flow processing are weighted and combined to build a double stream information fusion model (DSIF), which achieves a closer initial-advanced visual information interactive response fusion, and can extract the contour information of the image subject quickly.

The rest of this paper is organized as follows. Section 2 describes in detail the specific method and implementation steps of our method. The experimental results and analysis are shown in Sect. 3. A conclusion is drawn in Sect. 4.

2 Methods

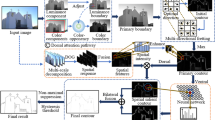

Contour is an important clue for visual perception, providing key information for object recognition, visual search, and scene analysis. In the human visual system, visual contour information is transmitted from the retina to the cerebral cortex, and neurons in each brain region process the information to achieve contour extraction. Therefore, based on the transmission mechanism of visual information transmission, a new image contour detection model (ASR+ DRFM+ DSIF, ADD) is constructed by simulating the encoding processing and interaction characteristics of visual information in each response area in the primary information transmission pathway (retina - lateral geniculate - primary visual cortex) and the higher information transmission pathway (V2 - dorsal and ventral pathways). The overall structure of the model is shown in Fig. 1.

2.1 Primary Information Transmission Pathway

In the primary information transmission pathway, the retina receives visual information and converts it into electrical signals. The lateral geniculate body (LGN) serves as an information transfer station to receive visual information transmitted by the retina, and feeds forward the processed visual information to the primary visual cortex (V1 region) for processing. In this paper, we consider that the response of ganglion cells in the red, green and blue color channels of the retina to photoreceptor input has adaptive regulation characteristics. At the same time, the color antagonism characteristics of cells are introduced to simulate different levels of neurons to process color information, and the primary contour response is calculated by using the color antagonistic receptor field.

2.1.1 Adaptive Regulation of Ganglion Cells

Neurophysiology indicates that there are three hierarchical cell layer structures in the retina, including photoreceptor cell layer, junction cell layer, and ganglion cell layer. Among them, the connecting cell layer serves as a bridge for visual information transmission between the photoreceptor cell layer and the ganglion cell layer. Studies have found that ganglion cells exhibit adaptability and sensitization, which are complementary phenomena that help expand the dynamic coding range of ganglion cells for visual signals. This characteristic is influenced by the color of the stimulus. Blue light stimulation leads to more sensitization reactions than green light stimulation, resulting in neurons being sensitive to weak input responses and relatively insensitive to strong inputs. Correspondingly, ganglion cells under green light stimulation exhibit more adaptive characteristics, which are exactly opposite to sensitization reactions [11,12,13,14]. Therefore, this paper proposes ASR model based on the adaptability and sensitization of ganglion cells, which detects the strength of the light sensing layer input signals received by ganglion cells in different color channels and adjusts the input signals of each color channel adaptively, as shown in Fig. 2.

Firstly, this paper uses Gabor filters to simulate the collection of light signals from cone cells within a certain range, as shown in Eqs. (1) to (2), and \( C_{v}(x, y; \sigma )\) represents the output response of cone cells.

Among them, \(\sigma \) represents the size of the filter, * represents convolution calculation, \(G(x,y;\sigma )\) is a Gabor filter, and \(I_{\nu }(x,y)\) represents the input images of each color channel.

Secondly, the modulated sinusoidal function is used to simulate the adaptability and sensitization of ganglion cells in the blue and green channels, the modulated sinusoidal function and its parameter form are shown in Eq. (3). At the same time, for the convenience of calculation, the output response of the cone cells is normalized.

In Eq. (3), A, f and \(\phi \) are the key information for modulating the sinusoidal function. Among them, A represents the regulatory characteristics and intensity of the visual adaptation mechanism of ganglion cells. n represents the horizontal coordinate of the sinusoidal function prior to rotation, and its range is determined by the rotation angle \(\vartheta \). It is essential to ensure that the values of the horizontal and vertical coordinated after rotation are confined within the interval [0,1]. This constraint is crucial for maintaining the desired range of values and preserving the integrity of the data during the rotation process.

When ganglion cells exhibit adaptability, set A to a positive value; When ganglion cells exhibit sensitization, set A to a negative value. f and \(\phi \) adjust the frequency and initial phase of the sinusoidal function respectively. \(C_{\textrm{r}^{\prime }}\) and \(C_{\ell ^{\prime }}\) represent the modulated color channel response, and \(\vartheta \) represents the rotation angle of the sinusoidal function in the \(C_{\ell }-C_{\ell ^{\prime }}\) response adjustment plane, which affects the adaptive change results of the response of each color channel.

2.1.2 Color Antagonism Characteristics of LGN and V1 Cells

Many studies show that there is color antagonistic space in the primary information transmission pathway, including single antagonistic receptive field and double antagonistic receptive fields, which are mainly divided into red-green and blue-yellow color antagonistic receptive field [15]. In the RGB color model, yellow light can be obtained by superposition of red light and green light, namely \( I_\textrm{y} =0.5\cdot (I_\textrm{r}+I_\textrm{g} )\). This paper utilizes the color antagonistic properties of cells to process image color information and extract primary contour features of color images.

In the LGN layer and the ganglion layer, two types of cells have similar characteristics of single-antagonistic receptive field, so in this paper, LGN cells and ganglion cells are combined as a layer to process the input information of the cone after adaptive regulation by ganglion cells. The single antagonistic receptive field has the characteristic of separating the brightness and color information in the image. The single-center-antagonistic receptive field model is used to construct the layer of neurons, the responses of red-green, blue-yellow neurons are obtained, which can be shown in Eq. (4):

In the formula, \(\omega _{\ell _1}\) and \(\omega _{\ell _2}\) represent the connection weights between cone cells and other retinal cells in each color channel. When \(\omega _{\ell _1}>0\) and \(\omega _{\ell _2}<0\), the response of type \(\ell _1+/\ell _2-\) cells is obtained, and the sign of “\(+\)” and “−” denote the role of excitation and inhibition, respectively; vice versa. When the connection weights are equal, i.e. \(\omega _{\ell _1}=\omega _{\ell _2}\), only color information responds, and the brightness information does not respond. When the connection weights are unequal, i.e. \(\omega _{\ell _1}\ne \omega _{\ell _2}\), color and brightness information are responded immediately and simultaneously.

In the V1 layer, cells have the characteristics of double antagonistic receptive fields, that is, they have both color antagonism and spatial antagonism, and their orientation selectivity can detect color boundaries with the same direction but different polarity. The stimulation response of double antagonistic receptive fields with different orientations is simulated by two-dimensional Gaussian derivative function, which is shown as Eqs. (5) to (7):

In the above formulas, \(\tilde{x} =x\cos (\theta _{i \textrm{k}} )+y\sin (\theta _{i \textrm{k}} )\) and \(\tilde{y} =-x\sin (\theta _{i \textrm{k}} )+y\cos (\theta _{i \textrm{k}} )\). In this paper, \(\lambda \) is used to control the aspect ratio of the receptive field, which simulates different shapes of the receptive field. k in Eq. (7) indicates the size of the receptive field of neurons in V1 area relative to the receptive field of LGN neurons. \(\theta _{i\textrm{k}} =\frac{2\pi \cdot (i-1)}{N_\textrm{k} }, i=1,2,\dots ,{N_\textrm{k} }\), the larger \({N_\textrm{k} }\) is, the finer the orientation of the receptive field obtained will be, and the closer the angle to the true contour will be, but the amount of calculation will also increase accordingly.

When \(\theta _{i\textrm{k}} = \theta _{i\textrm{kmax}}\), \(\overline{D_{\ell _{1} \ell _{2}}}\left( x, y; \theta _{i \textrm{kmax}}, N_{\textrm{k}}\right) =\textrm{max}\left\{ {D_{\ell _{1} \ell _{2}}}\left( x, y; \theta _{i \textrm{k}}, N_{\textrm{k}}\right) \right\} \), \(\theta _{i\textrm{kmax}}\) is the preferred orientation corresponding to the response of the largest neuron receptive field of the \(\ell _{1}\ell _{2}\) antagonistic channel in each direction. Based on this, combined with sparse encoding [5] to suppress texture, the four channel responses of red-green, green-red, blue-yellow, and yellow-blue are normalized to obtain the primary contour response \(SD_{\ell _{1}\ell _{2}}\) of the V1 region.

2.2 Advanced Information Transmission Pathway

In addition to the primary information transmission pathway, the human visual information transmission pathway also includes an advanced information transmission pathway composed of the extrastriatal layer (i.e. V2, V3, V4, V5, etc.), which performs more refined processing of visual information. David Milner and other scholars first proposed the “double flow hypothesis” model [16], that is, there are two visual transmission pathways in the visual information transmission pathway - the dorsal information flow and the ventral information flow: The dorsal information flow starts from V1 area, after being processed by V2 deep stripe area, it enters the dorsomedial area and V5 area, and then reaches the parietal cortex area. The ventral information flow starts from V1 area, passes through V2 narrowband area and V4 area in sequence, reaches the temporal lobe cortex area, and achieves information fusion with the dorsal information flow in the left lower parietal lobe [17]. Therefore, this paper takes the V2 region and the dorsal-ventral double flow information pathway as examples to demonstrate the processing of visual features by advanced information transmission pathways.

2.2.1 Encoding Characteristics of V2’s “angle” Information

Based on physiological experiments, the V2 receptive fields (V2RFs) can effectively respond and encode the scarce “angle” information in the real world, which is composed of two receptive fields in V1. The preferred orientations of these two receptive fields may be the same or different [18]. There were studies modeling V2RFs based on this characteristic, focusing on the degradation effect of visual information during transmission, and combining the pulse timing dependent plasticity mechanism of neurons to obtain the contour response of the V2 region after degradation [19]. However, the study focused on the degradation of visual information in V2 area, neglected to integrate the visual information of receptive fields from different angles in V2 area, only focused on receptive field from one fixed angle in V1 area, lacking certain generalization.

Therefore, DRFM model based on the optimal receptive field in the primary visual cortex is proposed in this paper, which can be shown in Fig. 3. The weighted combination of two receptive fields in different preferred orientations in V1 area is used to form the receptive field in V2 area, which simulates the “angle” response of V2, integrating the responses obtained from different receptive fields, and supplementing the detailed outline. The specific definition is shown in Eqs. (8)\(\sim \)(10):

In the formula, \(N_\textrm{k}\) and \(N_\textrm{r}\) represent the number of receptive field orientations, which are used to divide the circumferential angels equally and find the preferred orientation of optimal response under different circumstances. Two receptive fields in V1 area with the preferred orientation \(\theta _{i\textrm{kmax}} \) and \(\theta _{i\textrm{smax}}\) synthesize the receptive field of V2 area, which makes up for the scarcity of the visual information mapped from V1 according to the complementary characteristic of the two receptive fields. With the help of the characteristic, the effective “angle” response is obtained and the response of the weak contour is strengthened. \(\theta _{i\textrm{smax}}\) represents the preferred orientation of the receptive field in V2 area.

In Eq. (9), \(\kappa _1\) and \(\kappa _2\) are the fusion weights corresponding to the two receptive fields in V1 area respectively, and their value determine the size of the receptive field area. Utilizing DRFM to compensate for the missing detail contours in the generation of primary contour responses, advanced contour responses \(SCD_{\ell _1 \ell _2}\) in the V2 region can be obtained.

2.2.2 Double Stream Information Fusion

After being encoded by the V2 region information, visual information flows into the dorsal and ventral pathways for further processing. Studies have shown that the dorsal pathway focused on recognizing the overall contour information of objects and had a high information transmission rate. The ventral pathway focused more on regulating the local feature information perceived by visual perception, and had a lower information transmission rate compared to the dorsal pathway [20,21,22]. The brain roughly extracts visual information through the dorsal pathway, generates a rapid assessment of the surrounding environment, and then uses the ventral pathway to extract more refined features of the environmental information. Finally, the double stream information is fused in the left lower parietal lobe to obtain more accurate contour edge responses [23,24,25]. Therefore, this paper utilizes the characteristics of double stream pathways and their information fusion characteristics to construct DSIF model, which simulates the information regulation and integration of double stream pathways.

Firstly, the dorsal pathway receives a rough reading of the overall contour of the primary visual cortex within 100ms, i.e. the response of the primary contour \(SD_{\ell _1 \ell _2}\), as shown in Eq. (11):

Since the dorsal pathway quickly receives the contour responses of the primary visual cortex and also receives the feed-forward input responses after local feature adjustment of V2RFs, the weighting is used to obtain the rough contour response DP of dorsal pathway, where \(\mu \) represents the input rate of the V2 response.

Secondly, the multi-directional fretting is introduced to simulate the ventral pathway to regulate local features, as shown in Eqs. (12)\(\sim \)(13):

In Eq. (13), v determines the amplitude of micro adjustment. The larger the v is, the smaller the amplitude of micro adjustment will be, and the detected contour will be closer to the real contour. However, the calculation will be relatively more complicated. In general, using the equation can improve the effectiveness of contour detection more accurately. Finally, the Eq. (14) is used to simulate double stream information fusion, where \(\xi _1\) and \(\xi _2\) refer to the corresponding weights of two pathways respectively, achieving the final contour detection.

3 Experimental Results and Analysis

In this paper, BSDS500 dataset and NYUD dataset are selected for experiments, each image in the dataset has its corresponding baseline contour maps. The experimental results are compared with the baseline maps to effectively evaluate the contour detection effect of the proposed method on natural scene images. To facilitate this comparison, pixel sets are employed for the computation of evaluation metrics across the two datasets. The cardinality of set E, indicated as \(\textrm{card}\left( E \right) \), reflects the total number of elements within the set. This approach categorizes pixels into various sets: false pixels(\(E_\textrm{FP}\)), missed pixels (\(E_\textrm{FN}\)) and positive pixels (E), as detailed in Eqs. (15)\(\sim \)(17). The set \(E_\textrm{D}\) encompasses the contour pixels identified by the algorithm, while \(E_\textrm{GT}\) contains the baseline contour pixels. Further, T represents the set \(5\times 5\) structural unit, and the symbol \(\oplus \) is used to denote the expansion operation within the analysis.

The definitions of the relevant variables used in this paper and their setting values are shown in Table 1.

3.1 BSDS Dataset

The Berkeley Segmentation Date Set BSDS500 is used to evaluate the performance of the algorithm proposed in this paper. BSDS500 dataset includes 500 color images, each color image in the dataset had its corresponding baseline contour map.

3.1.1 Comparative Experiment

To verify the effectiveness of the method proposed in this paper, SCO, BAR, DAP, and RCF models were selected for comparison. The contour detection model (SCO) based on double antagonism and spatial sparsity constraints [5] is selected to demonstrate the superiority of the adaptive sensitization and adaptive regulation mechanism of retinal ganglion cells in our method. The contour detection model (BAR) based on bilateral asymmetric receptive field proposed in the literature [26] is selected to reflect the advantages of this cross visual area hierarchical structure model. Then, the contour detection (DAP) based on bilateral attention pathway interaction response and fusion [9] is selected to verify the superiority of the “corner” information encoding algorithm in this paper. Finally, the deep learning-based contour detection method (RCF) [4] is selected for comparison with the method proposed in this paper. In order to quantitatively analyze the results detected by the model method with the baseline contour map, it is necessary to perform non-maximum suppression and hysteresis threshold processing on the extracted contour grayscale map.

Figure 4 shows the contour detection results of some images in the BSDS500 dataset for each model. The GT column represents the corresponding baseline contour maps. The SCO algorithm introduces the color antagonism mechanism and spatial sparseness constraint to distinguish contours and textures by judging the sparsity of the response in local regions, effectively extracting more complete main body contours and better suppressing edge textures. However, in the process of suppressing textures, it is easy to overlook contour information with small color differences, resulting in the loss of some main body contours; The BAR algorithm uses the bilateral asymmetric receptive field mechanism to enhance the contrast difference of local areas, and can effectively extract the contour with significant contrast difference. However, because the algorithm does not process the image color, it is prone to more textures, and cannot balance the contour and texture well; The DAP algorithm introduces a bilateral attention pathway mechanism on this basis, utilizing the information differences between bilateral attention pathways to distinguish contours and textures, effectively suppressing texture edges, but there is also the phenomenon of discontinuous contour lines; The RCF algorithm can almost extract complete contours, but this deep learning model requires massive training data and long training time, and the model has problems such as inexplicability, excessive parameter variables, and complex calculations.

The proposed method considers visual mechanisms such as adaptability, directionality, and multi-channel collaborative coding, and simulates visual adaptation features to adaptively adjust the input response of cones, enhancing color edge response and highlighting color boundary contours; Using the complementary property of double receptive fields, different optimal directional responses are fused on the basis of the primary contour response to make up for the missing detail contour; The method of cross region fusion of visual information is also adopted to effectively integrate the initial and advanced contour responses and refine them, ultimately achieving the goal of extracting the continuous contours of the subject and suppressing regional textures efficiently and effectively. As shown in Fig. 5, the proposed method has better detection performance compared to the above comparative methods.

To further validate the feasibility of the proposed method, P-R curve (Precision Recall) is introduced to evaluate the experimental results of the above methods; At the same time, the evaluation index \(F_{\textrm{score}}\) is introduced, the larger the \(F_{\textrm{score}}\), the better its performance. The definition of \(F_{\textrm{score}} \) is shown in Eqs. (18) to (20). \(P_\textrm{r} \) (P in the P-R curve) and \(R_\textrm{c} \) (R in the P-R curve) represent accuracy and recall, respectively. The curve is drawn on the \(R_\textrm{c} \) horizontal axis and on the \(P_\textrm{r} \) vertical axis. Generally, the larger the area below the P-R curve, the better the contour detection effect; \(\beta \) in the Eq. (20) is used to balance the weight \(P_\textrm{r} \) and \(R_\textrm{c}\) in the calculation. When \(\beta \) equals one, \(P_\textrm{r} \) and \(R_\textrm{c}\) are considered equally important in this paper.

Figure 5 shows the P-R curves and \(F_{\textrm{score}} \) of BSDS500 dataset. In addition, the detection performance of each method is objectively analyzed using ODS (global best), OIS (single image best), and AP (average accuracy). The comparison results are shown in Table 2.

It can be seen from Fig. 5 that the area occupied below the P-R curve plotted by the model (ADD) in this paper and the evaluation index \(F_{\textrm{score}} \) are greater than other methods based on biological vision (SCO, BAR, and DAP); Also, it can be seen from Table 2 that the ODS, OIS, and AP of the model in this paper are 0.72, 0.76, and 0.77, respectively. All indicators are in an advantageous position, indicating that the comprehensive performance of the model in contour detection is better. However, compared to the contour detection model (RCF) based on deep learning, the performance of this model is slightly insufficient. It is generally believed that although the performance of models based on deep learning is good, there are shortcomings such as massive data, long training time, and weak interpretability. In contrast, the model proposed in this paper can handle relatively small datasets well and quickly under the premise of interpretability.

3.1.2 Ablation Experiment

To further verify the contribution of various modules in the model to contour detection, an ablation experiment is performed. This paper presents the results of ablation experiments on 112056 and 384022 in Fig. 6, and draws the differences in the results using circles of different colors. It may have many textures in the contour when there is no ASR modules, such as the purple circle of the belly of a rhinoceros in the image 112056 and those of the peaks in the image 384022; The contour lines may break when there is no DRFM module, such as the yellow circles of the back contour of the rhinoceros in the image 112056 and those of the hull contour of the small boat in the image 384022; The local main contours may loss when there is no DSIF module, such as the green circles of horn of the rhinoceros in the image 112056 and those of the oar in the image 384022.

It can be seen from the ablation experimental results in Fig. 6, the curves in Fig. 7, and the data in Table 3, that the ASR, DRFM, and DSIF modules all contribute to the model constructed in this paper. The three modules complement each other: The ASR module enhances color edge response, effectively extracts color boundaries, and reduces interference from texture edges; The DRFM module makes use of the complementary response characteristics of double receptive fields, which can effectively make up the missing details and contours, and make the main body contour more continuous; The DSIF module utilizes cross region information fusion, which can effectively integrate the previous level response and refine the processing of the response. When the ASR, DRFM, and DSIF modules removed, the F-values of the model in this paper decrease to 0.69, 0.70, and 0.70, respectively. Indicators such as ODS, OIS, and AP also show varying degrees of decline. It can be seen that each module in this paper is indispensable.

3.2 NYUD Dataset

In order to further verify the generalization of our model algorithm, the paper introduces the NYUD dataset and compared the proposed model with other biological vision based algorithm models (DAP, BAR, SCO) and deep learning based models (RCF). The image contours of NYUD dataset obtained by each algorithm are shown in Fig. 8.

From the results in Fig. 8, it can be seen that SCO demonstrates the advantages of color antagonism mechanism and spatial sparsity constraint, which can effectively suppress textures. However, some main body contours are also suppressed, resulting in discontinuous main body contours; BAR and DAP have similar effects, both of which can effectively extract significant contours. However, due to the rich details in the NYUD dataset, these two algorithms cannot effectively remove unnecessary textures from the image. The ADD algorithm proposed in this paper strengthens the color edge response of the image based on the SCO algorithm, highlights the contrast between color intervals, and more effectively extracts color boundary contours; The characteristics of double receptive fields are used to obtain complementary responses in different optimal directions, which increases the detail contour; Utilizing multi-channel encoding to fuse visual information across different regions, the main body contour is finely processed. Compared to the above three algorithms, the proposed algorithm fully considers the impact of color, direction, and multi-channel encoding on contour extraction, and can effectively extract the main body contour while suppressing texture noise.

From the results in Fig. 8, it can be seen that SCO demonstrates the advantages of color antagonism mechanism and spatial sparsity constraint, which can effectively suppress textures. However, some main body contours are also suppressed, resulting in discontinuous main body contours; BAR and DAP have similar effects, both of which can effectively extract significant contours. However, due to the rich details in the NYUD dataset, these two algorithms cannot effectively remove unnecessary textures from the image. The ADD algorithm proposed in this paper strengthens the color edge response of the image based on the SCO algorithm, highlights the contrast between color intervals, and more effectively extracts color boundary contours; The characteristics of double receptive fields are used to obtain complementary responses in different optimal directions, which increases the detail contour; Utilizing multi-channel encoding to fuse visual information across different regions, the main body contour is finely processed. Compared to the above three algorithms, the proposed algorithm fully considers the impact of color, direction, and multi-channel encoding on contour extraction, and can effectively extract the main body contour while suppressing texture noise.

In order to represent the effect of model processing more intuitively, this paper introduces quantitative evaluation indicators such as false detection \(e_\textrm{FP}\) rate, missed detection rate \(e_\textrm{FN}\) and P value to calculate the processing results of each algorithm. The smaller the \(e_\textrm{FP}\), the less texture the image has; The smaller the \(e_\textrm{FN}\), the retained main contour of the image has; The larger the P value, the better the contour detection effect of the algorithm is, as shown in Eqs. (15) to (23). The specific calculation results of each algorithm are shown in Table 4.

From the experimental results, it can be seen that the RCF algorithm performs well in all aspects. However, in the processing of the 5074 image, the algorithm proposed in this paper has a slightly better comprehensive score; The SCO algorithm generally has a high rate of missed detections \(e_\textrm{FN}\), making it easy to ignore the main contour and difficult to balance the contour and edge texture. For example, in the 5205 image, the contour of the table and cabinet cannot be detected well; The BAR algorithm has a generally high false detection rate \(e_\textrm{FP}\), exhibiting more edge textures overall, and is not friendly to dataset images with rich details such as NYUD; The DAP algorithm has fewer textures compared to the BAR algorithm, but it also exhibits discontinuous contour lines in the NYUD dataset. Considering the comparison images in Fig. 8 and the indicator data in Table 4, in the NYUD dataset with rich details, the algorithm proposed in this paper performs better than other models based on visual physiology in terms of overall performance. It can effectively balance contour extraction and texture suppression, achieving relatively accurate detection indicators.

4 Conclusion

Based on biological vision systems, this paper explores the encoding, processing, and interaction characteristics of visual information in each response region of visual pathways. The paper mainly divides visual pathways into initial and advanced information transmission pathways, and builds models based on the response characteristics of these two pathways.

In the primary information transmission pathway, the visual adaptation characteristics of retinal ganglion cell are simulated, an Adaptation & Sensitization Regulation model is constructed to enhance the color edge response and highlight the color boundary contour; The two-dimensional Gaussian function and its derivation function are used to simulate the neuronal receptive field in the LGN and V1 regions, and the primary contour response is obtained and feed forward across the viewport to the dorsal pathway in the higher-level information transfer pathway. In the advanced information transmission path, a double receptive fields model is constructed to simulate the “angle” information coding characteristics of V2 area, and the response complementarity characteristics are used to make up for the missing detail contours in the generation of the primary contour response, so as to obtain as more complete high-level contour response. Finally, the primary contour response obtained across the visual area and the advanced contour response obtained by hierarchical feed-forward are weighted to obtain the dorsal information flow response; Multi-directional fretting is used to simulate the local feature adjustment of the ventral pathway, and the maximum response in the output direction range is taken as the correction result to obtain the ventral information flow response; Simulate the diversion and collaborative processing of information flow, a double stream information fusion model based on the velocity of the dorsal and ventral information flow is constructed, and the output results of the dorsal are weighted and combined to achieve the significant contour extraction. The algorithm proposed in this paper is quantitatively compared and analyzed with other algorithms in the BSDS and NYUD datasets, verifying the effectiveness of the algorithm proposed in this paper. The image contour detection method based on the information transmission mechanism of the visual pathway proposed in this paper provides a new idea for image contour detection and analysis. Subsequently, visual mechanisms such as binocular parallax characteristics and multi morphological cell receptive field can be introduced on the basis of this method to further realize the contour extraction of complex images.

Data Availability

Database will be made available upon reasonable request.

References

Bertasius G, Shi J, Torresani L (2015) Deepedge: a multi-scale bifurcated deep network for top-down contour detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4380–4389

Bertasius G, Shi J, Torresani L (2015) High-for-low and low-for-high: efficient boundary detection from deep object features and its applications to high-level vision. In: Proceedings of the IEEE international conference on computer vision, pp 504–512

Xie S, Tu Z (2015) Holistically-nested edge detection. In: Proceedings of the IEEE international conference on computer vision, pp 1395–1403

Liu Y, Cheng M-M, Hu X, Wang K, Bai X (2017) Richer convolutional features for edge detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3000–3009

Yang K-F, Gao S-B, Guo C-F, Li C-Y, Li Y-J (2015) Boundary detection using double-opponency and spatial sparseness constraint. IEEE Trans Image Process 24(8):2565–2578

Lin C, Zhao H-J, Cao Y-J (2019) Improved color opponent contour detection model based on dark and light adaptation. Autom Control Comput Sci 53:560–571

Zhao R, Wu M, Liu X, Zou B, Li F (2017) Orientation histogram-based center-surround interaction: an integration approach for contour detection. Neural Comput 29(1):171–193

Akbarinia A, Parraga CA (2018) Feedback and surround modulated boundary detection. Int J Comput Vis 126(12):1367–1380

Xu Y, Fan Y (2022) Contour detection based on the interactive response and fusion model of bilateral attention pathways. Signal Image Video Process 16:1–9

Zhong H, Wang R (2022) A visual-degradation-inspired model with hsv color-encoding for contour detection. J Neurosci Methods 369:109423

Recugnat M, Undurraga JA, McAlpine D (2021) Spike-rate adaptation in a computational model of human-shaped spiral ganglion neurons. IEEE Trans Biomed Eng 69(2):602–612

Deepak C, Krishnan A, Narayan K (2022) Temporal characteristics of neonatal chick retinal ganglion cell responses: effects of luminance, contrast, and color

Hilgen G, Pirmoradian S, Pamplona D, Kornprobst P, Cessac B, Hennig MH, Sernagor E (2017) Pan-retinal characterisation of light responses from ganglion cells in the developing mouse retina. Sci Rep 7(1):1–14

Neumann T, Ziegler C, Blau A (2008) Multielectrode array recordings reveal physiological diversity of intrinsically photosensitive retinal ganglion cells in the chick embryo. Brain Res 1207:120–127

Yang K, Gao S, Li C, Li Y (2013) Efficient color boundary detection with color-opponent mechanisms. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2810–2817

Trevarthen CB (1968) Two mechanisms of vision in primates. Psychol Forsch 31(4):299–337

Milner AD (2017) How do the two visual streams interact with each other? Exp Brain Res 235(5):1297–1308

Hosoya H, Hyvärinen A (2015) A hierarchical statistical model of natural images explains tuning properties in v2. J Neurosci 35(29):10412–10428

Zhong H, Wang R (2021) A new discovery on visual information dynamic changes from v1 to v2: corner encoding. Nonlinear Dyn 105:3551–3570

Ayzenberg V, Behrmann M (2022) The dorsal visual pathway represents object-centered spatial relations for object recognition. J Neurosci 42(23):4693–4710

Han Z, Sereno A (2022) Modeling the ventral and dorsal cortical visual pathways using artificial neural networks. Neural Comput 34(1):138–171

Liu L, Wang F, Zhou K, Ding N, Luo H (2017) Perceptual integration rapidly activates dorsal visual pathway to guide local processing in early visual areas. PLoS Biol 15(11):2003646

Choi S-H, Jeong G, Kim Y-B, Cho Z-H (2020) Proposal for human visual pathway in the extrastriate cortex by fiber tracking method using diffusion-weighted MRI. Neuroimage 220:117145

Kaas JH, Qi H-X, Stepniewska I (2022) Escaping the nocturnal bottleneck, and the evolution of the dorsal and ventral streams of visual processing in primates. Philos Trans R Soc B 377(1844):20210293

Park B-Y, Tark K-J, Shim WM, Park H (2018) Functional connectivity based parcellation of early visual cortices. Hum Brain Mapp 39(3):1380–1390

Fang T, Fan Y, Wu W (2020) Salient contour detection on the basis of the mechanism of bilateral asymmetric receptive fields. Signal Image Video Process 14:1461–1469

Acknowledgements

This study was supported by National Natural Science Foundation of China (No. 61501154).

Funding

National Natural Science Foundation of China (No. 61501154).

Author information

Authors and Affiliations

Contributions

PC wrote the main manuscript text, while ZC prepared the figures. YF took on the role of revising and finalizing the paper, and WW conducted data analysis and reviewed the experimental section. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cai, P., Cai, Z., Fan, Y. et al. Image Contour Detection Based on Visual Pathway Information Transfer Mechanism. Neural Process Lett 56, 6 (2024). https://doi.org/10.1007/s11063-024-11486-3

Accepted:

Published:

DOI: https://doi.org/10.1007/s11063-024-11486-3