Abstract

This paper studies the finite-time stability (FTS) of the inertial neural networks (INNs) with delayed impulses. Unlike previous related works, we consider the delayed impulses and propose a new impulsive control strategy. We extend the existing FTS results to the cases with delayed impulses. We also establish some global and local FTS criteria of INNs. Moreover, we estimate the settling-time in different cases and investigate the optimization strategy. We present three numerical examples to verify the validity of our theoretical results.


1 Introduction

In 1986, Babcock and Westervelt introduced the inertial term into their neural network model, which they named the Inertial Neural Networks (INNs) [1]. INNs have the information analysis and processing capabilities of traditional models, as well as various excellent dynamic characteristics such as strong robustness and fault tolerance [2]. Stability is an important dynamical property of INNs that has attracted much attention in recent years. Many effective stability criteria of INNs have been established [3,4,5,6,7].

Most studies on the stability of INNs are asymptotic, meaning the system can only reach the desired state as time approaches infinity [7, 8]. However, in many real-world engineering and biological systems, stability must be achieved in finite time [9, 10]. Recently, some interesting results about finite-time stability (FTS) of dynamical systems have been obtained [11,12,13,14]. In [11], the FTS problem under information transmission failure of partial nodes is studied, and in [12], the FTS of Markovian coupled neural networks is considered. However, there are still some relatively strict constraints in the practical use of FTS criteria. For example, in [13], FTS can only be achieved for a local range of initial values. In [14], the realization of FTS requires the parameters of the nonlinear terms to be sufficiently small, which may make the obtained criterion conservative. These restrictions motivate us to further study FTS theory and its applications.

In some real INNs, signal transmission may change abruptly at some discrete instants due to sudden noises or fluctuations, known as the impulsive effect of INNs. Generally, impulses can be divided into three categories: inactive impulses, destabilizing impulses [15, 16], and stabilizing impulses [17, 18]. Destabilizing impulses often destroy stability and can be seen as impulsive perturbation, while stabilizing impulses may promote stability and can be called impulsive control. Some relevant works about the asymptotic stability problem of INNs under impulse effects have been published [19,20,21,22]. A natural question arises: how do impulses affect the FTS of INNs? To the best of our knowledge, the problem of FTS of INNs under impulsive effects has not been studied. Considering the control effect of stabilizing impulses, we wonder whether the local finite-time stability (LFTS) of INNs can be extended to global finite-time stability (GFTS) under the effect of stabilizing impulses. Additionally, it is also important to estimate the settling-time for the FTS of INNs. In this paper, we also aim to estimate the settling-time and explore its relationship with the initial value and impulses.

Moreover, time delays are unavoidable in many real control systems, such as the vehicle active suspension system [23], communication security networks [24], etc [25, 26]. When impulsive signals are delayed in the transmission process, they are generally called delayed impulses [27]. In recent years, the potential effects of delayed impulses on enhancing or destabilizing network stability have been explored [26, 28]. However, few works have focused on the FTS of dynamical systems involving INNs with delayed impulses. In particular, although the GFTS and LFTS criteria under impulsive effects have been established, the impulsive delays were not taken into account in [29]. It is meaningful to study the FTS of INNs with delayed impulsive effects.

Motivated by the above discussions, this paper investigates the FTS of INNs with delayed impulses. The main contributions are as follows:

(1) In contrast to the previous related work [29], delayed impulses are considered, and a new lemma on the FTS of general nonlinear systems with delayed impulses is proposed.

(2) A new hybrid control strategy is proposed and GFTS criteria for INNs with delayed impulses are established, which improves upon [13].

(3) The settling-time estimate is more accurate than that in [29], since more parameters are taken into account. The optimization problem of obtaining a shorter settling-time for the same initial value is also considered.

The rest of the paper is organized as follows. Section 2 introduces some definitions, assumptions and lemmas for the next section. Section 3 studies the GFTS and LFTS of the INNs with delayed impulses. Section 4 provides three examples to demonstrate our results. Section 5 summarizes the paper.

Notations: See Table 1.

2 Preliminaries and Model Description

2.1 Model Description

The inertial neural networks (INNs) can be described as follows:

where \(x_{i}(t)\in \mathbb {R}\) is the state of the i-th neuron, n is the number of neurons, \(c_{i}\) and \(d_{i}\) are positive constants, \(H_{i}\) can be considered as the external input for i-th neuron, \(a_{ij}\) are the connection strengths, and \(g_{j}(\cdot )\) is the continuous activation function.

Let \(p_{i}(t)=\frac{dx_{i}(t)}{dt}+r_{i}x_{i}(t)\), \(i=1, \ldots , n\), \(p(t)=(p_{1}(t), \ldots , p_{n}(t))^{\textsf{T}}\) and \(x(t)=(x_{1}(t), \ldots , x_{n}(t))^{\textsf{T}}\). Then, we can transform (1) into

where \(R=\text {diag}\{r_{1},\ldots ,r_{n}\}\), \(C=\text {diag}\{c_{1}-r_{1},\ldots ,c_{n}-r_{n}\}\), \(D=\text {diag}\{d_{1}+r_{1}(r_{1}-c_{1}),\ldots ,d_{n}+r_{n}(r_{n}-c_{n})\}\), \(A=(a_{ij})_{n\times n}\) and \(H=(H_{1},\ldots ,H_{n})^{\textsf{T}}\). I(t) and J(t) denote the control inputs, which will be designed later.
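The change of variables can be checked numerically. The sketch below assumes that (1) has the standard second-order form \(\ddot{x}_i=-c_i\dot{x}_i-d_ix_i+s_i\), where \(s_i\) collects the coupling and input terms; this form, the helper names and the sample numbers are assumptions made for illustration, and the control inputs are omitted. It builds the diagonal entries of C and D as defined above and compares \(\dot{p}_i\) computed directly with \(\dot{p}_i\) computed from the first-order form.

```python
def transformed_diagonals(c, d, r):
    """Diagonal entries of C and D for the substitution p_i = x_i' + r_i * x_i."""
    C = [ci - ri for ci, ri in zip(c, r)]
    D = [di + ri * (ri - ci) for ci, di, ri in zip(c, d, r)]
    return C, D


def identity_gap(c, d, r, x, dx, s):
    """Largest difference between p' from the second-order form and from the first-order form."""
    C, D = transformed_diagonals(c, d, r)
    gap = 0.0
    for i in range(len(x)):
        ddx = -c[i] * dx[i] - d[i] * x[i] + s[i]         # assumed form of (1)
        p = dx[i] + r[i] * x[i]                          # transformed state
        dp_direct = ddx + r[i] * dx[i]                   # derivative of p by definition
        dp_first_order = -C[i] * p - D[i] * x[i] + s[i]  # first-order form, controls omitted
        gap = max(gap, abs(dp_direct - dp_first_order))
    return gap


# Hypothetical sample values, not taken from the paper's examples.
c, d, r = [2.0, 3.0], [1.5, 0.7], [1.0, 0.5]
x, dx, s = [0.3, -1.2], [0.8, 0.1], [0.4, -0.6]
print(identity_gap(c, d, r, x, dx, s))
```

The gap is at rounding level for any choice of \(r_i\), which is why \(r_i\) can be treated as a free design parameter.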

Suppose \((\omega _0, \varsigma _0)^{\textsf{T}}\) is the equilibrium point of the INNs (2), i.e.,

where \(\omega _0=(\omega _{01},\ldots ,\omega _{0n})^{\textsf{T}}\) and \(\varsigma _0=(\varsigma _{01}, \ldots , \varsigma _{0n})^{\textsf{T}}\). Define the error variables as \(w(t)=x(t)-\omega _0\) and \(e(t)=p(t)-\varsigma _0\). By calculation, the following error system will be obtained:

where \(f(w(t))=g(x(t))-g(\omega _0)\).

2.2 Preliminaries

Before presenting the main theorems, we first provide some definitions, assumptions and lemmas as follows.

Definition 1

(Average Impulsive Delay). [30] For an impulsive delay sequence \(\left\{ \tau _k\right\} \), assume that there exist positive numbers \(\tau ^*\) and \(\tilde{\tau }\) such that

where \(N(t,t_0)\) denotes the number of impulses on the interval \([t_0, t)\). Then, we call \(\tilde{\tau }\) the average impulsive delay of impulsive delay sequence \(\left\{ \tau _k\right\} \).

Definition 2

[13] Given the initial values \((w(t_0), \, e(t_0))^{\textsf{T}}\), if there exist nonempty open sets \(\mathscr {X}_1, \, \mathscr {X}_2 \subset \mathbb {R}^n\) such that \((w(t), \, e(t))^{\textsf{T}}\) converges to \(\varvec{0}\) within a finite time T for any \((w(t_0), \, e(t_0))^{\textsf{T}} \in \mathscr {X}_1 \times \mathscr {X}_2\), i.e.,

where \(w(t)=(w_1(t),\ldots ,w_n(t))^{\textsf{T}}\) and \(e(t)=(e_1(t),\ldots ,e_n(t))^{\textsf{T}}\) are the error variables of INNs (2) related to the equilibrium point \((\omega _0, \varsigma _0)^{\textsf{T}}\) as defined in (4), then the INNs (2) are said to realize local finite-time stability (LFTS). In particular, if \(\mathscr {X}_1=\mathscr {X}_2=\mathbb {R}^n\), the INNs (2) are said to realize global finite-time stability (GFTS).

We make the following assumptions in this paper.

Assumption 1

There exist constants \(\ell _{i}>0\) such that for any \(y_{1}, y_{2}\in \mathbb {R}\), the activation function \(g(\cdot )=(g_1(\cdot ),\ldots ,g_n(\cdot ))^{\textsf{T}}\) satisfies

and \(g_i(0)=0\). Denote \(L={\text {diag}}\{\ell _{1}, \ldots , \ell _{n}\} \in \mathbb {R}^{n \times n}\).

Assumption 2

The impulsive instants \(\{t_k\}_{k=1,\ldots ,n}\) satisfy

where \(t_0 \ge 0\) is the initial time and \({\tau _k}\) is the impulsive delay. \(\underline{\tau }\) and \(\overline{\tau }\) are the lower and upper bounds of the impulsive-delay interval (IDI).

Remark 1

Assumption 2 outlines the relationship between \({t_k}\) and \({\tau _k}\). This assumption is logical, as impulsive control is a crucial component of our controllers, and dense impulses are essential for achieving finite-time stability in the systems. In fact, this assumption implies that \(t_k-\tau _k \ge t_{k-1}\). It’s important to note that \(\underline{\tau }\) can be equal to 0.

Lemma 1

[22] If \(\upsilon _1,\upsilon _2, \ldots , \upsilon _n \ge 0\), then the following inequality holds:

where \(0 <\sigma \le 1\).

Lemma 2

[31] Suppose a nonnegative continuous function V(t) satisfies

where \(\alpha >0\), \(\gamma >0\), \(\beta \in (0,1)\). If \(V^{1-\beta }(t_0) \le \frac{\gamma }{\alpha }\), one has

In addition, it holds that \(V(t)=0, t\ge t_0+T_0\), where

Lemma 2 suggests that, provided condition (8) is met, V(t) can decrease towards 0 if \(V^{1-\beta }(t_0) \le \frac{\gamma }{\alpha }\). However, it is uncertain whether V(t) can reach 0 if \(V^{1-\beta }(t_0) >\frac{\gamma }{\alpha }\). This will be examined in the subsequent lemma.
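As a sanity check on the threshold in Lemma 2, the sketch below treats the equality case \(\dot{V}=\alpha V-\gamma V^{\beta }\), that is, the differential inequality quoted in Remark 6 with the inequality replaced by equality. This reading of condition (8), the function names and the sample numbers are assumptions. The substitution \(z=V^{1-\beta }\) turns the equation into the linear one \(\dot{z}=(1-\beta )(\alpha z-\gamma )\), so z has a closed form and the time at which it reaches 0 follows directly.

```python
import math


def z_closed_form(alpha, beta, gamma, V0, t):
    """z(t) = V(t)^(1-beta) for V' = alpha*V - gamma*V^beta, with t measured from t0.

    Valid as long as the returned value is nonnegative.
    """
    z0 = V0 ** (1.0 - beta)
    return gamma / alpha + (z0 - gamma / alpha) * math.exp(alpha * (1.0 - beta) * t)


def time_to_zero(alpha, beta, gamma, V0):
    """Time at which the closed-form solution reaches 0 (equality case only)."""
    z0 = V0 ** (1.0 - beta)
    if z0 >= gamma / alpha:
        # At or above the threshold the equality-case solution does not decrease to 0.
        raise ValueError("V0^(1-beta) must be below gamma/alpha in the equality case")
    return math.log(1.0 - alpha * z0 / gamma) / (alpha * (beta - 1.0))


# Hypothetical sample values.
T0 = time_to_zero(alpha=1.0, beta=0.5, gamma=2.0, V0=1.0)
print(T0, z_closed_form(1.0, 0.5, 2.0, 1.0, T0))
```

In this equality case, an initial value with \(V^{1-\beta }(t_0)>\frac{\gamma }{\alpha }\) makes the closed form grow rather than decay, which is the situation the impulses in the next lemma are meant to handle.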

Lemma 3

Suppose the nonnegative piecewise continuous function V(t) satisfies

where \(\alpha \ge 0\), \(\gamma \ge 0\), \(\beta \in (0,1)\) and \(\eta \in (0,1)\) are constants. \(\{t_k\}_{k=1,\ldots ,n}\) and \(\{\tau _k\}_{k=1,\ldots ,n}\) are the sequences of impulses and impulsive delays, respectively, which satisfy Assumption 2. Then, for any \(\epsilon \in [0,1)\) and \(0 \le V(t_0) \le \rho ^{\frac{1}{1-\beta }}\), there exists \(k_0 \in \mathbb {N}^+\) such that

where

\(\delta =\eta e^{\alpha (1-\beta )\underline{\tau }}\) and \(\delta ^*=\eta e^{\alpha (1-\beta )\overline{\tau }}\).

Proof

By applying Lemma 2, we can get

When \(t=t_k\), one has

that is

By recurrence method, we can get

Let

It follows from Assumption 2 that

Combined with (11), we have

where \(\delta =\eta e^{\alpha (1-\beta )\underline{\tau }}\).

Notice that

and

where \(\delta =\eta e^{\alpha (1-\beta )\underline{\tau }}\) and \(\delta ^*=\eta e^{\alpha (1-\beta )\overline{\tau }}\). Based on (16), we examine the following cases:

\(Case \, I\): \(\delta =1\). That is, \(\eta e^{\alpha (1-\beta )\underline{\tau }}=1\), i.e., \(\underline{\tau }=\frac{1}{\alpha (\beta -1)}\ln \eta \). From (15), we have

According to (17), if \(V^{1-\beta }(t_0) <\frac{\gamma }{\alpha }\), one has

Hence, \(V^{1-\beta }(t_k) \le \epsilon \frac{\gamma }{\alpha }\) will hold if

is satisfied. Since \(\eta \in (0,1)\), there must exist a \(k_0\) such that \(V(t_{k_0}) \leqslant \left( \epsilon \frac{\gamma }{\alpha }\right) ^ \frac{1}{1-\beta }\) holds, and \(k_0\) can be chosen in the form

In fact, according to (17), if \(V^{1-\beta }(t_0) \ge \frac{\gamma }{\alpha }\), one has

We calculate

to obtain the sufficient conditions for \(V^{1-\beta }(t_k) \le \epsilon \frac{\gamma }{\alpha }\). Then, we can get

Since \(\delta ^*=\eta e^{\alpha (1-\beta )\overline{\tau }}>1\), the left term \(\delta ^{*k} [V^{1-\beta }(t_0)-\frac{\gamma }{\alpha }]\) grows exponentially. Let

and get the derivative

Let \(\dot{d}(k)=0\), then we can get

By simple calculation, we can know that \(k^0\) is the minimum point of d(k) and

This means \(d(k^0) \le 0\) for any \(V^{1-\beta }(t_0) \le \frac{1}{\frac{\ln \delta ^*}{1-\eta }{\delta ^*}^{\left( \frac{1}{\ln \delta ^*}+\frac{\epsilon -1}{\eta -1}\right) }}\frac{\gamma }{\alpha }+\frac{\gamma }{\alpha }\). Meanwhile,

can realize \(V(t_{k_0}) \leqslant (\epsilon \frac{\gamma }{\alpha }) ^ \frac{1}{1-\beta }\).

\(Case \, II\): \(\delta \ne 1\). If \(V^{1-\beta }(t_0) <\frac{\gamma }{\alpha }\), one has

Let

to get the sufficient conditions, which indicate

\(\delta <1\) implies \(0<\eta<\delta <1 \). Hence, it holds that \(\frac{\delta -\eta }{\delta -1} <0\) and \(-\frac{1-\eta }{1-\delta }\frac{\gamma }{\alpha }+\frac{\gamma }{\alpha } <0\). This implies there must exist a \(k_0\) such that \(V(t_{k_0}) \leqslant (\epsilon \frac{\gamma }{\alpha }) ^ \frac{1}{1-\beta }\). In addition, \(k_0\) can be determined as the form

Furthermore, \(\delta >1 \) means that \(\delta ^k[V^{1-\beta }(t_0)-\frac{\delta -\eta }{\delta -1}\frac{\gamma }{\alpha }]\) decreases exponentially. Hence, \(k_0\) can be determined in the same form as (24).

If \(V^{1-\beta }(t_0) \ge \frac{\gamma }{\alpha }\), one has

To get the \(k_0\) exactly, we need to consider the following situations.

Firstly, if \(\delta ^*=1\), according to (25), we have

Let

Notice that \(\delta <\delta ^* =1 \) and \(\frac{1-\eta }{1-\delta }\delta ^k\frac{\gamma }{\alpha }\) is monotonically decreasing about k. Hence, there exists a \(k_0\) as the form

such that \(V(t_{k_0}) \leqslant \left( \epsilon \frac{\gamma }{\alpha } \right) ^ \frac{1}{1-\beta }\) holds if \(V^{1-\beta }(t_0) \le (\epsilon +\frac{1-\eta }{1-\delta })\frac{\gamma }{\alpha }\).

Secondly, if \(\delta ^* <1\), according to (25), let

It follows from \(\delta<\delta ^* <1\) that \(\frac{1-\eta }{1-\delta } >0 \) and \(\frac{\eta -\delta }{1-\delta } <0 \). Hence, when k is large enough, the inequality (27) is true. That means there will exist a \(k_0\) of the form

such that \(V(t_{k_0}) \leqslant (\epsilon \frac{\gamma }{\alpha }) ^ \frac{1}{1-\beta }\) holds.

Thirdly, if \(\delta ^* >1\), according to (25), let

Similar to the discussion in (21), we have d(k) as the form

and get its derivative \(\dot{d}(k)\). By calculation, we have

as its minimum point. This implies that \(d(k^0) \le 0\) for any

Meanwhile,

can satisfy \(V(t_{k_0}) \leqslant \left( \epsilon \frac{\gamma }{\alpha }\right) ^ \frac{1}{1-\beta }\). This concludes the proof. \(\square \)

Remark 2

Lemma 3 demonstrates the impact of delayed impulses on the system’s evolution. It is evident that \(\delta \) and \(\delta ^*\) are key parameters that influence the radius \(\rho \) of the initial value set. It should be noted that \(\delta \) and \(\delta ^*\) contain the lower and upper bounds of IDI (7), respectively. In fact, they can also be seen as an extension of the “impulsive degree” defined in [29]. Furthermore, it can be easily deduced that smaller values of \(\delta \) and \(\delta ^*\) indicate stronger control strength. Meanwhile, it needs to be emphasized that a large difference between \(\delta \) and \(\delta ^*\) may result in a smaller allowable set for the initial value.

Remark 3

The framework proposed in Lemma 2 consists of linear and nonlinear terms. Under certain conditions, the variable V can converge to 0 in a finite time T. Such a framework can generate many models, among which the logistic model is widely used for predicting population size [32]. In the logistic model, V denotes the population number, and the parameters capture the population’s features. The impulsive effect in Lemma 3 represents a sudden shock that accounts for some “accidents” in the population’s growth, such as epidemics, natural disasters, etc. By Lemma 3, we can explore how the population number changes after such shocks.

Remark 4

Lemma 3 studies an impulsive differential system with finitely many discrete impulses at times \(\{t_k\}\). The control strategy of Lemma 3 uses finitely many impulses to bring V(t) into a small interval, and then applies Lemma 2 to make the system converge to 0. The parameters \(\alpha \), \(\beta \), \(\gamma \) determine the system’s properties. A large \(\alpha \) and small \(\beta \), \(\gamma \) hinder the system’s convergence to 0. The parameter \(\eta \) shows the impulsive strength, and a smaller \(\eta \) means a stronger impulse. Our control strategy in Lemma 3 can overcome the initial value restriction, meaning that the differential system can converge to 0 for any initial value under certain conditions. Since the system itself can be used to describe quantitative changes in biological populations, Lemma 3 implies, for real population numbers, that any population can die out quickly if the external destructive force is large enough.

3 Main Result

In this section, we will examine the global and local finite-time stability of system (4) under the designed controller, which takes the following form:

where \(k_{i1},k_{i2},\xi _{i1},\xi _{i2} \in \mathbb {R}^+\), \(i \in \mathbb {N}^+\), \(k \in \mathbb {K}^0\), \(k_0 \ge 1\) is the number of impulses that occur, \(\mathbb {K}^0=\{1,2,\dots ,k_0\}\). u, v are the constants satisfying \(0<u <v\). \(w_i(t), \, e_i(t)\) are the error variables of the system. \(t_0\) is the initial time and \(\delta (\cdot )\) is the Dirac function. The continuous feedback controller terms are crucial to achieve FTS of INNs as shown by many previous studies [14, 22, 29]. The impulsive controller terms depend on the impulsive instants \(t_k, \, k \in \mathbb {K}^0\). The value of \(k_0\) is influenced by the impulsive strength, controller gain, initial state and other factors. We will discuss these factors in more detail later.

3.1 Finite-Time Stability and Settling-Time

Theorem 1

Under Assumptions 1 and 2, suppose the average impulsive delay of the impulsive delay sequence \(\{\tau _k\}_{k=1,\ldots ,n}\) is \(\tilde{\tau }\) and there exists a constant \(\eta \in (0,1)\) such that

Then, the following conclusions can be drawn:

(1) the INNs (2) under the controller (30) can achieve global finite-time stability (GFTS) when \(\zeta ^* <1\);

(2) the INNs (2) under the controller (30) can achieve local finite-time stability (LFTS) when \(\zeta ^* \ge 1\).

Furthermore, the settling-time T can be estimated as

and \(\rho ^*\) can be determined as

where u, v are constants satisfying \(0<u <v\), \(\phi _i=(d_i+r_i(r_i+c_i))\), for \(i=1,\ldots ,n\), \(\varphi =\max _{1 \le i \le n}(\sum _{j=1}^{n}|a_{ij}|\ell _j)\), \(\kappa =\max _{1 \le i \le n}(|1-2r_i-2k_{i1}+\phi _i+\varphi |,|1-2(c_i-r_i)-2\xi _{i1}+\phi _i+\varphi |)\), \(\lambda =(\frac{1}{2})^{\frac{2v}{u+v}}\min _{1 \le i \le n}(k_{i2},\xi _{i2})\), \( V(t_0)=\frac{1}{2}\sum _{i=1}^{n}{{w_i}^2(t_0)}+\frac{1}{2}\sum _{i=1}^{n}{{e_i}^2(t_0)}\), \(\zeta = \eta e^{\kappa \frac{v-u}{2v}\underline{\tau }}\), \(\zeta ^*= \eta e^{\kappa \frac{v-u}{2v}\overline{\tau }}\), \(\lceil \cdot \rceil \) is the ceiling function, \(\hat{a}=\frac{\zeta -\eta }{\zeta -1}\), \(\check{a}=\frac{1-\zeta }{1-\eta }\), \( \nabla = \frac{\lambda }{\kappa }\), \(\check{V}=V^{\frac{v-u}{2v}}(t_0)\), \(\hat{b}=\frac{\epsilon -1}{\eta -1}\), \(\check{b}=\ln {\zeta ^*}\), \(\tilde{b}=\frac{\ln {\zeta ^*}}{\ln \zeta }\), \(\Theta =\frac{v}{\kappa (u-v)}\) and \(\Upsilon =\tilde{\tau }+\overline{\tau }\).
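The quantities that decide between the GFTS and LFTS cases can be computed directly from the definitions above. The following is a minimal sketch under stated assumptions: the function name, the dictionary keys and all numerical inputs are hypothetical, and the formulas are transcribed from the parameter list of Theorem 1 as printed.

```python
import math


def theorem1_parameters(c, d, r, a, ell, k1, k2, xi1, xi2, u, v, eta, tau_low, tau_high):
    """Evaluate phi_i, varphi, kappa, lambda, nabla, zeta and zeta* as defined in Theorem 1."""
    n = len(c)
    phi = [d[i] + r[i] * (r[i] + c[i]) for i in range(n)]
    # Largest weighted absolute row sum of the connection matrix.
    varphi = max(sum(abs(a[i][j]) * ell[j] for j in range(n)) for i in range(n))
    kappa = max(
        max(
            abs(1 - 2 * r[i] - 2 * k1[i] + phi[i] + varphi),
            abs(1 - 2 * (c[i] - r[i]) - 2 * xi1[i] + phi[i] + varphi),
        )
        for i in range(n)
    )
    lam = 0.5 ** (2 * v / (u + v)) * min(min(k2), min(xi2))
    rate = kappa * (v - u) / (2 * v)
    zeta = eta * math.exp(rate * tau_low)
    zeta_star = eta * math.exp(rate * tau_high)
    return {
        "kappa": kappa,
        "lambda": lam,
        "nabla": lam / kappa,
        "zeta": zeta,
        "zeta_star": zeta_star,
        "global": zeta_star < 1,  # conclusion (1) applies when zeta* < 1
    }


# Hypothetical one-neuron inputs, chosen only to make the arithmetic easy to follow.
params = theorem1_parameters(
    c=[2.0], d=[1.0], r=[1.0], a=[[0.5]], ell=[1.0],
    k1=[3.0], k2=[4.0], xi1=[4.0], xi2=[3.0],
    u=1, v=5, eta=0.6, tau_low=0.04, tau_high=0.05,
)
print(params)
```

The row-sum loop is the only part that scales quadratically with the number of neurons; everything else is linear in n.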

Proof

Construct the Lyapunov function:

For \(t \in [t_{k-1},t_k)\), it holds that

When \(t=t_k\), we can get

According to Lemma 3, we can conclude that, for any \(\epsilon \in [0,1)\) and initial value \(0<V(t_0) <\rho \), there exists a \(k_0\) such that \(V(t_{k_0}) \le (\epsilon \frac{\lambda }{\kappa })^{\frac{2v}{v-u}}\), i.e., \(V(t_{k_0}) \le (\epsilon \nabla )^{\frac{2v}{v-u}}\).

Next, we shall calculate the settling-time. Firstly, we will compute how long it takes to achieve \(V(t) \le (\epsilon \nabla )^{\frac{2v}{v-u}}\). Note that \(k_0\) is the number of impulses that have occurred. Considering the average impulsive delay (5), we can get

Secondly, since \(\epsilon \in [0,1)\), we have \(V(t_{k_0}) \le \nabla ^{\frac{2v}{v-u}}\). Then, Lemma 2 can be applied to determine that the time taken from \(t_{k_0}\) to reach the equilibrium point is

Therefore, we can deduce that the settling-time T is equal to

We can now examine the settling-time for each specific case:

(a) For any \(\check{V} >\nabla \) and \(\zeta ^* <1\), we have \(k_0=\lceil \log _{\zeta ^*}{\frac{\epsilon \nabla -\hat{a}\nabla }{\check{V}-\hat{a}\nabla }} \rceil \), which indicates

$$\begin{aligned} T&= (\tilde{\tau }+\overline{\tau })k_0+\tau ^*+\Theta \ln (1-\epsilon )\\&= \Upsilon \left( \bigg \lceil \log _{\zeta ^*}{\frac{\epsilon \nabla -\hat{a}\nabla }{\check{V}-\hat{a}\nabla }} \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ). \end{aligned}\tag{38}$$

(b) For any \(\nabla<\check{V} <(\epsilon +\frac{1}{\check{a}})\nabla \) and \(\zeta ^*=1\), we have \(k_0=\lceil \log _\zeta (\epsilon \check{a}-\frac{\check{a}\check{V}}{\nabla }+1) \rceil \), which indicates

$$\begin{aligned} T&= (\tilde{\tau }+\overline{\tau })k_0+\tau ^*+\Theta \ln (1-\epsilon )\\&= \Upsilon \left( \bigg \lceil \log _\zeta (\epsilon \check{a}-\frac{\check{a}\check{V}}{\nabla }+1) \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ). \end{aligned}\tag{39}$$

(c) For any \(\nabla<\check{V} <\sqrt[-\frac{1}{\tilde{b}}]{[\frac{\epsilon -\hat{a}}{1-\tilde{b}}\nabla ]^{1-\frac{1}{\tilde{b}}}(-\frac{\check{a}\tilde{b}}{\nabla })}+\nabla \) and \(\zeta ^* >1 \), \(k_0\) can be calculated as \(\lceil \frac{1}{1-\frac{1}{\tilde{b}}}\log _{\zeta ^*}{(-\frac{1}{\check{a}\tilde{b}}\frac{\nabla }{\check{V}-\nabla })} \rceil \), which indicates

$$\begin{aligned} T&= (\tilde{\tau }+\overline{\tau })k_0+\tau ^*+\Theta \ln (1-\epsilon )\\&= \Upsilon \left( \bigg \lceil \frac{1}{1-\frac{1}{\tilde{b}}}\log _{\zeta ^*}{(-\frac{1}{\check{a}\tilde{b}}\frac{\nabla }{\check{V}-\nabla })} \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ). \end{aligned}\tag{40}$$

(d) For any \(\epsilon \nabla<\check{V} <\nabla \) and \(\zeta =1\), we have \(k_0=\lceil \frac{1}{\eta -1}(\epsilon -\frac{1}{\nabla }\check{V}) \rceil \), which indicates

$$\begin{aligned} T&= (\tilde{\tau }+\overline{\tau })k_0+\tau ^*+\Theta \ln (1-\epsilon )\\&= \Upsilon \left( \bigg \lceil \frac{1}{\eta -1}(\epsilon -\frac{1}{\nabla }\check{V}) \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ). \end{aligned}\tag{41}$$

(e) For any \(\epsilon \nabla<\check{V} <\nabla \) and \(\zeta \ne 1\), we have \(k_0=\lceil \log _\zeta {\frac{\epsilon \nabla -\hat{a}\nabla }{\check{V}-\hat{a}\nabla }} \rceil \), which indicates

$$\begin{aligned} T&= (\tilde{\tau }+\overline{\tau })k_0+\tau ^*+\Theta \ln (1-\epsilon )\\&= \Upsilon \left( \bigg \lceil \log _\zeta {\frac{\epsilon \nabla -\hat{a}\nabla }{\check{V}-\hat{a}\nabla }} \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ). \end{aligned}\tag{42}$$

(f) For any \(\check{V} \le \epsilon \nabla \), we have \(k_0=0\), which indicates

$$\begin{aligned} T=T_o = \Theta \ln (1-\frac{1}{\nabla }\check{V}). \end{aligned}\tag{43}$$

Based on the discussion above, we have completed the proof and calculated the settling-time T. \(\square \)
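To make the case analysis concrete, the sketch below evaluates case (a), i.e., the impulse count \(k_0\) and the estimate (38), exactly as the formulas are printed. The function names and every number used are hypothetical and are not taken from the numerical examples; the other cases differ only in the expression for \(k_0\).

```python
import math


def impulse_count_case_a(eps, nabla, V_check, eta, zeta, zeta_star):
    """k0 for case (a): V_check > nabla and zeta* < 1."""
    a_hat = (zeta - eta) / (zeta - 1.0)
    ratio = (eps * nabla - a_hat * nabla) / (V_check - a_hat * nabla)
    # log base zeta* written with natural logarithms, then rounded up.
    return math.ceil(math.log(ratio) / math.log(zeta_star))


def settling_time(k0, tau_avg, tau_high, tau_star, Theta, eps):
    """T = (tau_avg + tau_high) * k0 + tau* + Theta * ln(1 - eps)."""
    return (tau_avg + tau_high) * k0 + tau_star + Theta * math.log(1.0 - eps)


# Hypothetical inputs: a_hat = -0.5 and ratio = 0.1, so log_{0.5}(0.1) is about 3.32.
k0 = impulse_count_case_a(eps=0.5, nabla=1.0, V_check=9.5, eta=0.25, zeta=0.5, zeta_star=0.5)
T = settling_time(k0, tau_avg=0.1, tau_high=0.05, tau_star=0.001, Theta=-0.3125, eps=0.5)
print(k0, T)
```

Since \(\Theta <0\) for \(u<v\), the last term is nonnegative, so a smaller \(\epsilon \) shortens the final phase but may require more impulses.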

Remark 5

In Theorem 1, \(\zeta \) and \(\zeta ^*\) are key parameters in determining whether the INNs (2) can achieve GFTS. Unlike [22], the system is not required to be stable. In other words, even if the system itself is unstable, controllers can still be designed to achieve FTS. This will be demonstrated in Example 2.

Remark 6

This paper studies more general cases of FTS of INNs with impulses than the existing literature, although many results have been published [11,12,13,14]. Firstly, many FTS studies of INNs neglect the impulsive effect, which occurs frequently in nature. For instance, [13] uses a new integral method, but the control is continuous and expensive. Our result achieves GFTS with lower cost. Secondly, unlike [29], this paper considers impulsive delays in the impulsive controller, which is more realistic due to communication constraints. Moreover, we add a linear term to the differential equation model, i.e., \(\dot{V}(t) \le \alpha V(t)-\gamma V^{\beta }(t)\), compared with the model in [33]. The form in this paper has more applications in nature, as [32, 34,35,36] show, so our results are more applicable.

Remark 7

The stability criteria in this paper mainly involve matrix-vector multiplications and the calculation of some parameters, such as Cp(t), Dx(t), \(\varphi =\max _{1 \le i \le n}(\sum _{j=1}^{n}|a_{ij}|\ell _j)\) and \(\kappa =\max _{1 \le i \le n}(|1-2r_i-2k_{i1}+\phi _i+\varphi |,|1-2(c_i-r_i)-2\xi _{i1}+\phi _i+\varphi |)\). The computational complexity is generally \(O(n^2)\), where n is the order of the matrix, so the computations can be completed in polynomial time. Therefore, as long as the matrix dimension is not very large, the computational cost is relatively small.

When the impulsive delays \(\{\tau _{k}\}\) are 0, we get the following corollary.

Corollary 1

Under Assumption 1, suppose the impulsive instants \(\{t_k\}\) satisfy

and there exists a constant \(\eta \in (0,1)\) such that (31) holds. Then, the conclusions of Theorem 1 can also be drawn. Moreover, the settling-time T can be estimated as

where the range of initial value \(\rho ^*\), u, v, \(\phi _i\), \(\varphi \), \(\kappa \), \(\lambda \), \( V(t_0)\), \(\zeta \), \(\zeta ^*\), \(\hat{a}\), \(\check{a}\), \( \nabla \), \(\check{V}\), \(\hat{b}\), \(\check{b}\), \(\tilde{b}\) and \(\Theta \) are the same as in Theorem 1.

3.2 Optimization Problems

In Theorem 1, both \(T_I\) and \(T_o\) are dependent on the uncertain parameter \(\epsilon \). As a result, we can calculate \(\epsilon \) to determine the optimal settling-time.

Denote \(T_I\) and \(T_o\) as functions of the parameter \(\epsilon \), in the following form:

Then, we have \(T = T_I+T_o \le \theta _1(\epsilon )+\theta _2(\epsilon )\). We denote \(\theta (\epsilon )=\theta _1(\epsilon )+\theta _2(\epsilon )\). Now let’s consider the following optimization problem:

The above optimization problems can be summarized into the following Algorithm 1:

Then, we have the following cases:

(a) If \(\check{V} >\nabla \) and \(\zeta ^* <1\), from (38), we can get

$$\begin{aligned} \theta (\epsilon )=\Upsilon \left( \bigg \lceil \log _{\zeta ^*}(\frac{1 }{\frac{\check{V}}{\nabla }-\hat{a}}\epsilon -\frac{\hat{a}\nabla }{\check{V}-\hat{a}\nabla }) \bigg \rceil \right) +\tau ^*+\Theta \ln (1-\epsilon ), \end{aligned}$$

which implies that

$$\begin{aligned} \dot{\theta }(\epsilon ) =\frac{\Upsilon }{\check{b}(\epsilon -\hat{a})}+\frac{\Theta }{\epsilon -1}; \end{aligned}$$

and

$$\begin{aligned} \ddot{\theta }(\epsilon ) =-\frac{\Upsilon }{\check{b}(\epsilon -\hat{a})^2}-\frac{\Theta }{(\epsilon -1)^2}. \end{aligned}$$

Then, we solve the equation \(\dot{\theta }(\epsilon )=0\) to get the stationary point

$$\begin{aligned} \epsilon _0=1-\frac{\Theta \check{b}(1-\hat{a})}{\Upsilon +\Theta \check{b}}. \end{aligned}\tag{45}$$

Notice that \(\epsilon _0 \in [0,1)\) implies that \(\ddot{\theta }(\epsilon _0) >0\), based on the relationship among the parameters. This indicates that the value of \(\epsilon _0\) given in (45) is the minimum point, and \(T=\theta (\epsilon _0)\) is the optimal settling-time for this situation.

(b) If \(\nabla<\check{V} <(\epsilon +\frac{1}{\check{a}})\nabla \) and \(\zeta ^* =1\), similar to (a) above, we can construct \(\theta (\epsilon )\) and get \(\dot{\theta }(\epsilon )\), \(\ddot{\theta }(\epsilon )\). Through simple calculations, the extreme point can be determined as follows:

$$\begin{aligned} \epsilon _0=1-\frac{(\nabla +\check{a}{\nabla }-\check{a} \check{V})\Theta \ln {\zeta }}{\check{a}\nabla \Upsilon +\Theta \check{a} \nabla \ln {\zeta } }. \end{aligned}\tag{46}$$

Similarly, a direct computation shows that \(\epsilon _0 \in [0,1)\) implies \(\ddot{\theta }(\epsilon _0) >0\). In this situation, \(T=\theta (\epsilon _0)\) is the solution to the optimization problem.

(c) If \(\nabla<\check{V} <\sqrt[-\frac{1}{\tilde{b}}]{[\frac{\epsilon -\hat{a}}{1-\tilde{b}}\nabla ]^{1-\frac{1}{\tilde{b}}}(-\frac{\check{a}\tilde{b}}{\nabla })}+\nabla \) and \(\zeta ^* >1\), \(k_0=\bigg \lceil \frac{1}{1-\frac{1}{\tilde{b}}}\log _{\zeta ^*}{(-\frac{1}{\check{a}\tilde{b}}\frac{\nabla }{\check{V}-\nabla })}\bigg \rceil \) can be obtained, which implies that the function \(\theta _1(\epsilon )\) is independent of the parameter \(\epsilon \) in this situation. Hence, the settling-time \(T=\theta (\epsilon )\) is only influenced by \(\theta _2(\epsilon )\). The reason for this is that the initial value \(\check{V}\) is related to the parameter \(\epsilon \). By calculation, we have \(V^{\frac{v-u}{2v}}(t_{k_0-1}) \ge \nabla \) and \(V^{\frac{v-u}{2v}}(t_{k_0}) \le \epsilon \nabla \). Thus, the value of \(\theta _1(\epsilon )\) only depends on the initial value \(\check{V}\). Since \(\theta _2(\epsilon )\) is monotonically increasing, we choose

$$\begin{aligned} \epsilon _0=0 \end{aligned}\tag{47}$$

to get the optimal settling-time for this situation.

(d) If \(\check{V} <\nabla \) and \(\zeta =1\), we can get

$$\begin{aligned} \epsilon _0=1-\Theta \frac{\eta -1}{\Upsilon }. \end{aligned}\tag{48}$$

Substituting \(\epsilon _0\) into \(\theta (\epsilon )\), we can get the optimal settling-time T for this situation.

(e) If \(\check{V} <\nabla \) and \(\zeta \ne 1\), we can get

$$\begin{aligned} \epsilon _0=1-\frac{(1-\hat{a})\Theta \ln {\zeta }}{\Upsilon +\Theta \ln {\zeta }}. \end{aligned}\tag{49}$$

Then, \(T=\theta (\epsilon _0)\) is the solution of the optimization problem for this situation.
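The stationary point in case (a) can be checked numerically. The sketch below evaluates (45) and the two derivatives of \(\theta (\epsilon )\) as printed in case (a), with the ceiling ignored as in the derivation. The values of \(\eta \), u, v and the delays are the ones stated in Example 1; \(\kappa =4\) is an assumed value, since the parameter matrices of Example 1 are not reproduced in this text, and the function names are hypothetical.

```python
import math


def eps0_case_a(Upsilon, Theta, eta, zeta, zeta_star):
    """Stationary point (45) for case (a)."""
    a_hat = (zeta - eta) / (zeta - 1.0)
    b_check = math.log(zeta_star)
    return 1.0 - Theta * b_check * (1.0 - a_hat) / (Upsilon + Theta * b_check)


def dtheta(eps, Upsilon, Theta, eta, zeta, zeta_star):
    """First derivative of theta(eps) in case (a)."""
    a_hat = (zeta - eta) / (zeta - 1.0)
    b_check = math.log(zeta_star)
    return Upsilon / (b_check * (eps - a_hat)) + Theta / (eps - 1.0)


def ddtheta(eps, Upsilon, Theta, eta, zeta, zeta_star):
    """Second derivative of theta(eps) in case (a)."""
    a_hat = (zeta - eta) / (zeta - 1.0)
    b_check = math.log(zeta_star)
    return -Upsilon / (b_check * (eps - a_hat) ** 2) - Theta / (eps - 1.0) ** 2


eta, u, v = 0.6, 1, 5
tau_avg, tau_low, tau_high = 0.1, 0.04, 0.05
kappa = 4.0                                  # assumption, not stated in the text
rate = kappa * (v - u) / (2 * v)
zeta = eta * math.exp(rate * tau_low)
zeta_star = eta * math.exp(rate * tau_high)
Theta = v / (kappa * (u - v))
Upsilon = tau_avg + tau_high

eps0 = eps0_case_a(Upsilon, Theta, eta, zeta, zeta_star)
print(round(eps0, 4))
```

Under this assumed \(\kappa \), the computed value is close to the \(\epsilon _0\) reported in Example 1, the first derivative vanishes there, and the second derivative is positive.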

4 Numerical Examples

In this section, three numerical examples are given to demonstrate the theoretical results.

Example 1

Suppose the parameters in INNs (2) are chosen as

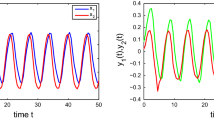

\(H=(10,5,-11)^{\textsf{T}}\) and \(f(x)=\sin x\). The equilibrium point is \((\omega _0, \varsigma _0)^{\textsf{T}}=(5.04523, 2.42879, -5.52197, 10.09046, 4.85758, -11.04394)^{\textsf{T}}\) by simple computation. Figure 1a shows the evolution of the error states of the system (2) without the controller.

We can observe that the system without the controller takes more than 8 s to achieve FTS. Now take the controller (30) into consideration. Suppose the delay sequence and the impulsive sequence satisfy Definition 1 and Assumption 2, respectively, where \(\tilde{\tau }=0.1\), \(\tau ^*=0.001\), \(\underline{\tau }=0.04\), \(\overline{\tau }=0.05\). Set the parameters of the controllers as \(k_{11}=k_{21}=k_{31}=3\), \(k_{12}=k_{22}=k_{32}=4\), \(\xi _{11}=\xi _{21}=\xi _{31}=4\), \(\xi _{12}=\xi _{22}=\xi _{32}=3\), \(\mu _1=\mu _2=\mu _3=-0.5\), \(u=1\), \(v=5\), \(\eta =0.6\), which indicate that \(\zeta =0.6397\) and \(\zeta ^*=0.65\). We set the initial time \(t_0=0\), the initial value \(w(t)=(w_1(t), \, w_2(t), \, w_3(t))^{\textsf{T}}=(3,7,10)^{\textsf{T}}\), \(e(t)=(e_1(t), \, e_2(t), \, e_3(t))^{\textsf{T}}=(6,14,20)^{\textsf{T}}\) and \(\epsilon =0.8\).

By simple calculation, we get \(V(t_0)=786.8139\) in this simulation, which implies that \(V(t_0) >(\frac{\lambda }{\kappa })^{\frac{2v}{v-u}}=0.0271\). Notice that \(\zeta<\zeta ^* <1\). According to Theorem 1, the INNs (2) can realize GFTS and the settling-time is \(T=1.5529\) s, based on (38), where the number of impulses \(k_0\) is 7. Figure 2a, b display the state and error trajectories of system (2) with controller (30), respectively. By comparing Figs. 1a and 2b, it can be seen that the time to achieve stability is significantly reduced after adding the controller (30). This demonstrates that the controller (30) can promote stability. Additionally, it can be observed that the system reaches the equilibrium point at around 1.2 s, indicating that our estimation of the settling-time is relatively accurate.
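The reported values of \(\zeta \), \(\zeta ^*\) and the threshold \((\frac{\lambda }{\kappa })^{\frac{2v}{v-u}}\) can be cross-checked from the controller settings of this example. The sketch below is a consistency check only: \(\kappa \) depends on the parameter matrices, which are not reproduced in this text, so \(\kappa =4\) is a hypothetical value chosen because the formulas of Theorem 1 then return the reported numbers to four decimals. The variable names are also assumptions.

```python
import math

# Values stated in Example 1.
eta, u, v = 0.6, 1, 5
tau_low, tau_high = 0.04, 0.05
k2_min, xi2_min = 4.0, 3.0        # k_{i2} = 4 and xi_{i2} = 3 for all i
V0 = 786.8139                     # V(t_0) as reported

kappa = 4.0                       # assumption, not stated in the text

rate = kappa * (v - u) / (2 * v)
zeta = eta * math.exp(rate * tau_low)
zeta_star = eta * math.exp(rate * tau_high)
lam = 0.5 ** (2 * v / (u + v)) * min(k2_min, xi2_min)
threshold = (lam / kappa) ** (2 * v / (v - u))

print(round(zeta, 4), round(zeta_star, 4), round(threshold, 4))
print(V0 > threshold and zeta < zeta_star < 1)
```

With these inputs the initial value lies far above the threshold while \(\zeta ^*<1\), which is the configuration covered by conclusion (1) of Theorem 1.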

By considering the optimization problem, we can determine that \(\epsilon _0\) is equal to 0.4749 according to equation (45). The optimal settling-time T is 1.4013 s and the number of impulses \(k_0\) is 8. As shown in Fig. 5a, a smaller value of \(\epsilon \) implies a higher frequency of impulses. In Fig. 2b, the last impulse occurs at \(t=0.8\) s, while the previous one occurs at \(t=0.7\) s. This indicates that the FTS of system (2) can be achieved faster by using the optimal \(\epsilon _0\).

Example 2

In this example, consider an unstable INNs (2) with the parameters

\(H=(0,0,0)^{\textsf{T}}\) and \(f(x)=\sin x\). Figure 1b shows the evolution of the states of system (2) without the controller.

Suppose the delay sequence and the impulsive sequence satisfy Definition 1 and Assumption 2, respectively, where \(\tilde{\tau }=0.1\), \(\tau ^*=0.001\), \(\underline{\tau }=0.03\) and \(\overline{\tau }=0.05\). The parameters of the controllers are chosen as \(k_{11}=k_{21}=k_{31}=5\), \(k_{12}=k_{22}=k_{32}=5\), \(\xi _{11}=\xi _{21}=\xi _{31}=2\), \(\xi _{12}=\xi _{22}=\xi _{32}=3\), \(\mu _1=\mu _2=\mu _3=-0.8\), \(u=2\), \(v=5\) and \(\eta =0.45\), which give \(\zeta =0.4849\) and \(\zeta ^*=0.5097\). Set \(t_0=0\), \(w(t)=(w_1(t), \, w_2(t), \, w_3(t))^{\textsf{T}}=(3,7,10)^{\textsf{T}}\), \(e(t)=(e_1(t), \, e_2(t), \, e_3(t))^{\textsf{T}}=(1.503,3.507,5.01)^{\textsf{T}}\) and \(\epsilon =0.2\) in this simulation.

In this example, our goal is to stabilize the system to the origin. We find that \(V(t_0) > (\frac{\lambda }{\kappa })^{\frac{2v}{v-u}}=0.0012\) and \(\zeta<\zeta ^* <1\). According to Theorem 1, the INNs (2) can achieve GFTS with a settling-time of \(T=1.5448s\), as determined by equation (38), where the number of impulses that occur, \(k_0\), is 10. Figure 3a, b show the state and error trajectories of system (2) with controller (30), respectively. By comparing Figs. 1b and 3a, it can be seen that the addition of controller (30) stabilizes system (2). This demonstrates the effectiveness of controller (30) in promoting stability. Additionally, the system reaches the equilibrium point at around 1s, indicating that our estimation of the settling-time is relatively accurate. We also notice that the values of \(\zeta \) and \(\zeta ^*\) in this example are smaller than those in Example 1, indicating stronger control strength. As a result, even though the initial value is the same and the system is unstable, after adding controller (30), the INNs (2) can achieve finite-time stability in a shorter settling-time and with fewer impulses compared to Example 1.

According to Eq. (45), the optimal value of \(\epsilon \) is \(\epsilon _0=0.4936\) when considering the optimization problem. The optimal settling-time is \(T=1.3366s\) and the number of impulses that occur, \(k_0\), is 8. Figure 4b shows that a larger value of \(\epsilon \) results in a lower frequency of impulses. The last impulse occurs at \(t=1s\), while in Fig. 3b it occurs at \(t=0.8s\). Using the optimal value \(\epsilon _0\) results in a shorter estimated settling-time.
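
To make the effect of the optimized \(\epsilon _0\) easier to compare across Examples 1 and 2, the short Python sketch below computes the relative reduction of the estimated settling-time. It uses only the settling-times reported in the text. The dictionary `reported` and the helper `relative_reduction` are illustrative names, not notation from the paper.

```python
# (estimated T with the initial epsilon, estimated T with the optimal epsilon_0),
# both in seconds, as reported in Examples 1 and 2.
reported = {
    "Example 1": (1.5529, 1.4013),
    "Example 2": (1.5448, 1.3366),
}


def relative_reduction(t_before, t_after):
    """Fraction by which the estimated settling-time shrinks."""
    return (t_before - t_after) / t_before


reductions = {name: relative_reduction(*ts) for name, ts in reported.items()}
for name, r in reductions.items():
    print(f"{name}: {100 * r:.1f}% shorter estimated settling-time")
```

Under these reported values, the estimate shortens by roughly 10% in Example 1 and roughly 13% in Example 2.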

Example 3

In this example, we assume that the control strength is relatively weak, meaning \(\zeta ^* > 1\). We also assume that the parameters of INNs (2) are the same as in Example 2, which results in an unstable system as shown in Fig. 1b. The delay sequence and impulsive sequence satisfy Definition 1 and Assumption 2, respectively, where \(\tilde{\tau }=0.25\), \(\tau ^*=0.001\), \(\underline{\tau }=0.2\), \(\overline{\tau }=0.25\). The parameters of the controller are chosen as \(k_{11}=k_{21}=k_{31}=6\), \(k_{12}=k_{22}=k_{32}=30\), \(\xi _{11}=\xi _{21}=\xi _{31}=6\), \(\xi _{12}=\xi _{22}=\xi _{32}=30\), \(\mu _1=\mu _2=\mu _3=-0.65\), \(u=1\), \(v=25\), \(\eta =0.45\), which give \(\zeta =1.0753\) and \(\zeta ^*=1.3769\). We set \(t_0=0\), \(w(t)=(w_1(t), \, w_2(t), \, w_3(t))^{\textsf{T}}=(0.6,0.8,1.15)^{\textsf{T}}\), \(e(t)=(e_1(t), \, e_2(t), \, e_3(t))^{\textsf{T}}=(0.3006,0.4008,0.5752)^{\textsf{T}}\) and \(\epsilon =0.06\) in the simulations.

In this example, the control strength is weaker than in Example 2, as indicated by \(1<\zeta <\zeta ^*\). By calculation, we find that \(0.7680=\frac{\lambda }{\kappa }<V^{\frac{v-u}{2v}}(t_0) <\rho ^*=1.1544\). According to Theorem 1, the INNs (2) can achieve LFTS with a settling-time of \(T=3.0063s\). Figure 5a, b show the state and error trajectories of system (2) with controller (30), respectively. The system reaches the equilibrium point at around 2s, indicating that our estimated settling-time is relatively accurate. A comparison of Figs. 5b and 3b shows that a weaker control strength leads to a smaller range of admissible initial values and a longer settling-time.
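
The local condition quoted in this example bounds \(V^{\frac{v-u}{2v}}(t_0)\) rather than \(V(t_0)\) itself. Since \(v>u\) and all quantities are positive, raising both bounds to the power \(\frac{2v}{v-u}\) preserves the inequalities and gives an equivalent range for \(V(t_0)\). The Python sketch below performs this inversion with the reported values \(\frac{\lambda }{\kappa }=0.7680\) and \(\rho ^*=1.1544\). The function names are hypothetical helpers, and the resulting bounds inherit the rounding of the reported numbers.

```python
def lfts_initial_range(ratio, rho_star, u, v):
    """Invert ratio < V0**((v-u)/(2v)) < rho_star into bounds on V0.

    Assumes v > u > 0 and positive ratio, rho_star, so x -> x**p is increasing.
    """
    p = 2.0 * v / (v - u)
    return ratio ** p, rho_star ** p


def in_local_range(V0, ratio, rho_star, u, v):
    """Check whether V0 lies in the admissible range of the local condition."""
    lo, hi = lfts_initial_range(ratio, rho_star, u, v)
    return lo < V0 < hi


# Values reported in Example 3 (u = 1, v = 25).
lo, hi = lfts_initial_range(0.7680, 1.1544, u=1, v=25)
print(f"{lo:.3f} < V(t0) < {hi:.3f}")
```

For these inputs the admissible range is approximately \(0.58< V(t_0) < 1.35\), which illustrates how narrow the set of initial values becomes under the weaker control of this example.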

5 Conclusion

In this paper, we investigated the FTS of INNs with delayed impulses. Through designing new hybrid controllers, we proposed a useful FTS criterion for impulsive nonlinear dynamical systems. Unlike previous works, our criterion takes into account delayed impulses and can achieve GFTS. We then applied these results to INNs to obtain GFTS and LFTS criteria, respectively. Additionally, we estimated the settling-time by choosing optimized parameters. Numerical examples were provided to verify the correctness of our theoretical results.

Some possible directions for future work are as follows. One is to consider INNs with delay, that is, inertial delayed neural networks (IDNNs), which may have more complex dynamics and stability properties. Another is to relax the restrictions on the impulsive and delay intervals, and allow them to vary randomly or dependently. A third is to add more complex and even destabilizing impulses, and study how they affect the synchronization of the INNs.

References

Babcock K, Westervelt R (1986) Stability and dynamics of simple electronic neural networks with added inertia. Physica D 23(1–3):464–469

Zhang M, Wang D (2019) Robust dissipativity analysis for delayed memristor-based inertial neural network. Neurocomputing 366:340–351

Xiao Q, Huang T, Zeng Z (2019) Global exponential stability and synchronization for discrete-time inertial neural networks with time delays: a timescale approach. IEEE Trans Neural Netw Learn Syst 30(6):1854–1866

Xiao Q, Huang T, Zeng Z (2022) On exponential stability of delayed discrete-time complex-valued inertial neural networks. IEEE Trans Cybern 52(5):3483–3494

Zhao Y, Zhang L, Shen S, Gao H (2011) Robust stability criterion for discrete-time uncertain Markovian jumping neural networks with defective statistics of modes transitions. IEEE Trans Neural Netw 22(1):164–170

Hui J, Hu C, Yu J, Jiang H (2021) Intermittent control based exponential synchronization of inertial neural networks with mixed delays. Neural Process Lett 53(6):3965–3979

Singh S, Kumar U, Das S, Cao J (2021) Global exponential stability of inertial Cohen–Grossberg neural networks with time-varying delays via feedback and adaptive control schemes: non-reduction order approach. Neural Process Lett 53(6):3965–3979

Zhong X, Gao Y (2021) Synchronization of inertial neural networks with time-varying delays via quantized sampled-data control. IEEE Trans Neural Netw Learn Syst 32(11):4916–4930

Tang R, Yang X, Shi P, Xiang Z, Qing L (2023) Finite-time L2 stabilization of uncertain delayed T-S fuzzy systems via intermittent control. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2023.3292233

Wang Q, Wu Z, Xie M, Wu F, Huang H (2023) Finite-time prescribed performance trajectory tracking control for the autonomous underwater helicopter. Ocean Eng 280:114628

Li Y, Zhang J, Lu J, Lou J (2023) Finite-time synchronization of complex networks with partial communication channels failure. Inf Sci 634:539–549

Tang R, Su H, Zou Y, Yang X (2022) Finite-time synchronization of Markovian coupled neural networks with delays via intermittent quantized control: linear programming approach. IEEE Trans Neural Netw Learn Syst 30(5):5268–5278

Zhang Z, Cao J (2019) Novel finite-time synchronization criteria for inertial neural networks with time delays via integral inequality method. IEEE Trans Neural Netw Learn Syst 30(5):1476–1485

Ramajayam S, Rajavel S, Samidurai R, Cao Y (2023) Finite-time synchronization for T-S fuzzy complex-valued inertial delayed neural networks via decomposition approach. Neural Process Lett. https://doi.org/10.1007/s11063-022-11117-9

Lu J, Ho DWC, Cao J (2010) A unified synchronization criterion for impulsive dynamical networks. Automatica 46(7):1215–1221

Zhang W, Tang Y, Miao Q, Du W (2013) Exponential synchronization of coupled switched neural networks with mode-dependent impulsive effects. IEEE Trans Neural Netw Learn Syst 24(8):1316–1326

Li X, Peng D, Cao J (2020) Lyapunov stability for impulsive systems via event-triggered impulsive control. IEEE Trans Autom Control 65(11):4908–4913

Yang X, Li X, Lu J, Cheng Z (2020) Synchronization of time-delayed complex networks with switching topology via hybrid actuator fault and impulsive effects control. IEEE Trans Cybern 50(9):4043–4052

Li H, Zhang W, Li C, Zhang W (2019) Global asymptotical stability for a class of non-autonomous impulsive inertial neural networks with unbounded time-varying delay. Neural Comput Appl 31(10):6757–6766

Ouyang D, Shao J, Hu C (2020) Stability property of impulsive inertial neural networks with unbounded time delay and saturating actuators. Neural Comput Appl 32(11):6571–6580

Li H, Li C, Ouyang D, Nguang SK (2021) Impulsive synchronization of unbounded delayed inertial neural networks with actuator saturation and sampled-data control and its application to image encryption. IEEE Trans Neural Netw Learn Syst 32(4):1460–1473

Zhu S, Zhou J, Lu J, Lu J (2021) Finite-time synchronization of impulsive dynamical networks with strong nonlinearity. IEEE Trans Autom Control 66(8):3550–3561

Wang G, Chadli M, Chen H, Zhou Z (2019) Event-triggered control for active vehicle suspension systems with network-induced delays. J Frankl Inst-Eng Appl Math 356(1):147–172

Khadra A, Liu XZ, Shen X (2009) Analyzing the robustness of impulsive synchronization coupled by linear delayed impulses. IEEE Trans Autom Control 54(4):923–928

Li X, Song S, Wu J (2019) Exponential stability of nonlinear systems with delayed impulses and applications. IEEE Trans Autom Control 64:4024–4034

Li X, Li P (2021) Stability of time-delay systems with impulsive control involving stabilizing delays. Automatica 124:109336

Yang H, Wang X, Zhong S, Shu L (2018) Synchronization of nonlinear complex dynamical systems via delayed impulsive distributed control. Appl Math Comput 320:75–85

Lu J, Jiang B, Zheng WX (2022) Potential impacts of delay on stability of impulsive control systems. IEEE Trans Autom Control 67(10):5179–5190

Xi Q, Liu X, Li X (2022) Finite-time synchronization of complex dynamical networks via a novel hybrid controller. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2022.3185490

Jiang B, Lu J, Liu Y (2020) Exponential stability of delayed systems with average-delay impulses. SIAM J Control Optim 58(6):3763–3784

Hu C, Yu J, Jiang H (2014) Finite-time synchronization of delayed neural networks with Cohen–Grossberg type based on delayed feedback control. Neurocomputing 143:90–96

Nicolis (1991) Dynamics of error growth in unstable systems. Phys Rev A 43(10):5720–5723

Li X, Ho D, Cao J (2019) Finite-time stability and settling-time estimation of nonlinear impulsive systems. Automatica 99:361–368

Qu C, Liu C (1995) Heterotic Liouville systems from the Bernoulli equation. Phys Lett A 199(6):349–352

Wiegmann P, Abanov G (2014) Anomalous hydrodynamics of two-dimensional vortex fluids. Phys Rev Lett 113(3):034501

Finley J (2022) A fluid description based on the Bernoulli equation of the one-body stationary states of quantum mechanics with real valued wave-functions. J Phys Commun 6(4):45–62

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61503115 and the University Natural Sciences Research Project of Anhui Province (Project Number: KJ2021A0996).

Author information

Contributions

XW wrote the main manuscript text. LL did the numerical simulations. LW revised the draft paper. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wan, X., Li, L. & Wang, L. Finite-Time Stability of Inertial Neural Networks with Delayed Impulses. Neural Process Lett 56, 48 (2024). https://doi.org/10.1007/s11063-024-11476-5
