Abstract

Surrogate-based optimization, nature-inspired metaheuristics, and hybrid combinations have become state of the art in algorithm design for solving real-world optimization problems. Still, it is difficult for practitioners to get an overview that explains their advantages in comparison to a large number of available methods in the scope of optimization. Available taxonomies lack the embedding of current approaches in the larger context of this broad field. This article presents a taxonomy of the field, which explores and matches algorithm strategies by extracting similarities and differences in their search strategies. A particular focus lies on algorithms using surrogates, nature-inspired designs, and those created by automatic algorithm generation. The extracted features of algorithms, their main concepts, and search operators, allow us to create a set of classification indicators to distinguish between a small number of classes. The features allow a deeper understanding of components of the search strategies and further indicate the close connections between the different algorithm designs. We present intuitive analogies to explain the basic principles of the search algorithms, particularly useful for novices in this research field. Furthermore, this taxonomy allows recommendations for the applicability of the corresponding algorithms.


1 Introduction

Modern applications in industry, business, and information systems require a tremendous amount of optimization. Global optimization (GO) tackles various severe problems emerging from the context of complex physical systems, business processes, and in particular from applications of artificial intelligence. Challenging problems arise from industry on the application level, e.g., machines regarding manufacturing speed, part quality or energy efficiency, or on the business level, such as optimization of production plans, purchase, sales, and after-sales. Further, they emerge from areas of artificial intelligence and information engineering, such as machine learning, e.g., optimization of standard data models such as neural networks for different applications. Their complex nature connects all these problems: they tend to be expensive to solve and to have unknown objective function properties, as the underlying mechanisms are often not well described or unknown.

Solving optimization problems of this kind necessarily relies on performing costly computations, such as simulations, or even real-world experiments, which are frequently considered to be black-box. A fundamental challenge in such systems is the different costs of function evaluations. Whether we are probing a real physical system, querying the simulator, or creating a new complex data model, a significant amount of resources is needed to fulfill these tasks. GO methods for such problems thus need to fulfill a particular set of requirements. They need to work with black-box style probes only, i.e., without any further information on the structure of the problem. Further, they must find the best possible improvement within a limited number of function evaluations.

The improvement of computational power in the last decades has been influencing the development of algorithms. A massive amount of computational power became available for researchers worldwide through multi-core desktop machines, parallel computing, and high-performance computing clusters. This has contributed to three fields of research. Firstly, it enabled the development of more complex, nature-inspired, and generally applicable heuristics, so-called metaheuristics. Secondly, it facilitated significant progress in the field of accurate, data-driven approximation models, so-called surrogate models, and their embedding in an optimization process. Thirdly, it led to the emergence of approaches that combine several optimization algorithms and aim at the automatic combination and configuration of the optimal optimization algorithm, known as hyperheuristics. The hyperheuristic approach shows the close connections between differently named algorithms, in particular in the area of bio-inspired metaheuristics. Automatic algorithm composition has been shown to be able to outperform available (fixed) optimization algorithms. All these GO algorithms differ broadly from standard approaches, define new classes of algorithms, and are not well integrated into available taxonomies.

Hence, we propose a new taxonomy, which:

1. describes a comprehensive overview of GO algorithms, including surrogate-based, model-based and hybrid algorithms,
2. can generalize well and connects GO algorithm classes to show their similarities and differences,
3. focusses on simplicity, which enables an easy understanding of GO algorithms,
4. can be used to provide underlying knowledge and best practices to select a suitable algorithm for a new optimization problem.

Our new taxonomy is created based on algorithm key features and divides the algorithms into a small number of intuitive classes: Hill-Climbing, Trajectory, Population, Surrogate, and Hybrid. Further, Exact algorithms are briefly reviewed, but they are not an active part of our taxonomy, which focusses on heuristic algorithms. We further utilize extended class names as descriptions founded on the abstracted human behavior in pathfinding. The analogies Mountaineer, Sightseer, Team, and Surveyor create further understanding by using the image of a single person or several persons hiking a landscape in search of the desired location (optimum) utilizing the shortest path (e.g., several iterations).

This utilized abstraction allows us to present comprehensible ideas on how the individual classes differ and moreover, how the respective algorithms perform their search. Although abstraction is necessary for developing our results, we will present results that are useful for practitioners.

This article mainly addresses different kinds of readers: Beginners will find an intuitive and comprehensive overview of GO algorithms, especially concerning common metaheuristics and developments in the field of surrogate-based and hybrid and hyperheuristic optimization. For advanced readers, we also discuss the applicability of the algorithms to tackle specific problem properties and provide essential knowledge for reasonable algorithm selection. We provide an extensive list of references for experienced users. The taxonomy can be used to create realistic comparisons and benchmarks for the different classes of algorithms. It further provides insights for users, who aim to develop new search strategies, operators, and algorithms.

In general, most GO algorithms were developed for a specific search domain, e.g., discrete or continuous. However, many algorithms and their fundamental search principles can be successful for different problem spaces with reasonably small changes. For example, evolution strategies (ES), which are popular in continuous optimization (see Hansen et al. 2003), have their origin in the discrete problem domain. Beyer and Schwefel (2002) describe how the ES moved from discrete to continuous decision variables. Based on this consideration, the article is focused on, but not limited to, illustrating the algorithm variants for the continuous domain, even if they have their origins or are most successful in the discrete domain.

Moreover, we focused this taxonomy on algorithms for objective functions without particular characteristics, such as multi-objective, constrained, noisy, or dynamic. These function characteristics pose additional challenges to any algorithm, which are often faced by enhancing available search schemes with specialized operators or even completely dedicated algorithms. We included the former as part of the objective function evaluation in our general algorithm scheme and provide references to selected overviews. Dedicated algorithms, e.g., for multi-objective search, are not discussed in detail. However, their accommodation in the presented taxonomy is possible if their search schemes are related to the algorithms described in our taxonomy. If further required, we outlined the exclusive applicability of algorithms and search operators to specific domains or problem characteristics.

We organized the remainder of this article as follows: Sect. 2 presents the development of optimization algorithms and their core concepts. Section 3 motivates a new taxonomy by reviewing the history of available GO taxonomies, illustrates algorithm design aspects, and presents extracted classification features. Section 4 introduces the new intuitive classification with examples. Section 5 introduces best practices suggestions regarding the applicability of algorithms. Section 6 summarizes and concludes the article with the recent trends and challenges in GO and currently essential research fields.

2 Modern optimization algorithms

This section describes the fundamental principles of modern search algorithms, in particular the elements and backgrounds of surrogate-based and hybrid optimization.

The goal of global optimization is to find the overall best solution, i.e., for the common task of minimization, to discover decision variable values that minimize the objective function value.

We denote the global search space as compact set \({\mathscr {S}} =\{\mathbf {x} \mid \, \mathbf {x}_l \le \mathbf {x} \le \mathbf {x}_u\}\) with \(\mathbf {x}_l, \mathbf {x}_u \in \mathbb {R^n}\) being the explicit, finite lower and upper bounds on \(\mathbf {x}\). Given a single-objective function f: \(\mathbb {R}^n \rightarrow \mathbb {R}\) with real-valued input vectors \(\mathbf {x}\) we attempt to find the location \(\mathbf {x^*} \in \mathbb {R}^n\) which minimizes the function: \({{\,\mathrm{arg\,min}\,}}{f(\mathbf {x})}, \mathbf {x} \in {\mathscr {S}}\).
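
As a minimal sketch of this problem statement, the box-constrained search space and a black-box objective can be written down in a few lines of Python. The `sphere` test function, the bounds, and the pure random search used as a baseline are illustrative assumptions and do not come from the article.

```python
import random

def sphere(x):
    """Assumed example objective f: R^n -> R (not taken from the article)."""
    return sum(xi * xi for xi in x)

def random_search(f, lower, upper, budget, seed=0):
    """Sample `budget` points uniformly from S = {x | lower <= x <= upper}
    and return the best point found together with its objective value."""
    rng = random.Random(seed)
    best_x, best_y = None, float("inf")
    for _ in range(budget):
        x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        y = f(x)                      # one black-box function evaluation
        if y < best_y:                # minimization: keep the smaller value
            best_x, best_y = x, y
    return best_x, best_y

x_best, y_best = random_search(sphere, [-5.0, -5.0], [5.0, 5.0], budget=200)
```

The returned point is only the best of the sampled candidates; nothing in this sketch certifies that it is a global optimum.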

Finding a global optimum is always the ultimate goal and as such desirable, but for many practical problems, a solution improving the current best solution in a given budget of evaluations or time will still be a success. Particularly, in continuous GO domains an optimum commonly cannot be identified exactly; thus, modern heuristics are designed to spend their resources as efficiently as possible to find the best possible improvement in the objective function value while finding a global optimum is never guaranteed.

Törn and Zilinskas (1989) mention three principles for the construction of an optimization algorithm:

1. An algorithm utilizing all available a priori information will outperform a method using less information.
2. If no a priori information is available, the information is completely based on evaluated candidate points and their objective values.
3. Given a fixed number of evaluated points, optimization algorithms will only differ from each other in the distribution of candidate points.

If a priori information about the function is accessible, it can significantly support the search and should be considered during the algorithm design. Current research on algorithm designs that include structural operators, such as function decomposition, is known as grey-box optimization (Whitley et al. 2016; Santana 2017). However, many modern algorithms focus on handling black-box problems where the problem includes little or no a priori information. The principles displayed above lead to the conclusion that the most crucial design aspect of any black-box algorithm is to find a strategy to distribute the initial candidates in the search space and to generate new candidates based on a variation of solutions. These procedures define the search strategy, which needs to follow the two competing goals of exploration and exploitation. The balance between these two competing goals is usually part of the algorithm configuration. Consequently, any algorithm needs to be adapted to the structure of the problem at hand to achieve optimal performance. This can be considered during the construction of an algorithm, before the optimization by parameter tuning, or during the run by parameter control (Bartz-Beielstein et al. 2005; Eiben et al. 1999). In general, the main goal of any method is to reach its target with high efficiency, i.e., to discover optima quickly and accurately with as few resources as possible. Moreover, the goal is not necessarily to find a global optimum, which is a demanding and expensive task for many problems, but to identify a valuable local optimum or to improve the currently available solution. We will explicitly discuss the design of modern optimization algorithms in Sect. 3.2.

2.1 Exact algorithms

Exact algorithms, also referred to as non-heuristic or complete algorithms (Neumaier 2004), are a special class of deterministic, systematic or exhaustive optimization techniques. They can be applied in discrete or combinatorial domains, where the search space has a finite number of possible solutions, or in continuous domains, if an optimum is searched within some given tolerances. Exact algorithms are guaranteed to find a global optimum using a predictable amount of resources, such as function evaluations or computation time (Neumaier 2004; Fomin and Kaski 2013; Woeginger 2003). This guarantee often requires sufficient a priori information about the objective function, e.g., the best possible objective function value. Without available a priori information, the stopping criterion needs to be defined by a heuristic approach, which softens the guarantee of solving to optimality. Well-known exact algorithms are based on the branching principle, i.e., splitting a known problem into smaller sub-problems, each of which can be solved to optimality. The branch-and-bound algorithm is an example of an exact algorithm (Lawler and Wood 1966).
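
The branching principle can be illustrated with a small sketch for the 0/1 knapsack problem. The problem choice, the function name `knapsack_branch_and_bound`, and the fractional-relaxation bound are assumptions made for illustration; note that knapsack is a maximization problem, whereas the rest of this article speaks of minimization. Item weights are assumed to be positive.

```python
def knapsack_branch_and_bound(values, weights, capacity):
    """Return the maximum total value of a 0/1 knapsack (depth-first branch-and-bound)."""
    # Sort by value density so the fractional relaxation can be filled greedily.
    items = sorted(zip(values, weights), key=lambda vw: vw[0] / vw[1], reverse=True)
    n = len(items)
    best = 0  # incumbent: best feasible value found so far

    def upper_bound(i, value, room):
        # Optimistic bound: take remaining items greedily, the last one fractionally.
        for v, w in items[i:]:
            if w <= room:
                value, room = value + v, room - w
            else:
                return value + v * room / w
        return value

    def branch(i, value, room):
        nonlocal best
        best = max(best, value)  # every partial selection is itself feasible
        if i == n or upper_bound(i, value, room) <= best:
            return  # bound: this sub-problem cannot beat the incumbent
        v, w = items[i]
        if w <= room:
            branch(i + 1, value + v, room - w)  # sub-problem: take item i
        branch(i + 1, value, room)              # sub-problem: skip item i

    branch(0, 0, capacity)
    return best
```

Because sub-problems are only discarded when their optimistic bound cannot improve the incumbent, the result is optimal for the given instance, which is what distinguishes this scheme from the heuristics discussed next.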

2.2 Heuristics and metaheuristics

In modern computer-aided optimization, heuristics and metaheuristics are established solution techniques. Although the solutions they present are not guaranteed to be optimal, their general applicability and their ability to deliver sufficiently good solutions quickly make them very attractive for applied optimization. Their inventors built them upon the principle of systematic search, where solution candidates are evaluated and rewarded with a fitness. The term fitness has its origins in evolutionary computation, where the fitness describes the competitive ability of an individual in the reproduction process. The fitness is in its purest form the objective function value \(y= f(\mathbf {x})\) concerning the optimization goal, e.g., in a minimization problem, smaller values have higher fitness than larger values. Moreover, it can be part of the search strategy, e.g., scaled or adjusted by additional functions, in particular for multi-objective or constrained optimization.

Heuristics can be defined as problem-dependent algorithms, which are developed or adapted to the particularities of a specific optimization problem or problem instance (Pearl 1985). Typically, heuristics systematically perform evaluations, although utilizing stochastic elements. Heuristics use this principle to provide fast, not necessarily exact (i.e., not optimal) numerical solutions to optimization problems. Moreover, heuristics are often greedy to provide fast solutions but get trapped in local optima and fail to find a global optimum.

Metaheuristics can be defined as problem-independent, general-purpose optimization algorithms. They apply to a wide range of problems and problem instances. The term meta describes the higher-level general methodology, which is utilized to guide the underlying heuristic strategy (Talbi 2009).

They share the following characteristics (Boussaïd et al. 2013):

- The algorithms are nature-inspired; they follow certain principles from natural phenomena or behaviors (e.g., biological evolution, physics, social behavior).
- The search process involves stochastic parts; it utilizes probability distributions and random processes.
- As they are meant to be generally applicable solvers, they include a set of control parameters to adjust the search strategy.
- They do not rely on the information of the process which is available before the start of the optimization run, so-called a priori information. Still, they can benefit from such information (e.g., to set up control parameters).

During the remainder of this article, we will focus on heuristic, respectively, metaheuristic algorithms.

2.3 Surrogate-based optimization algorithms

Surrogate-based optimization algorithms are designed to process expensive and complex problems, which arise from real-world applications and sophisticated computational models. These problems are commonly black-box, which means that they only provide very sparse domain knowledge. Consequently, problem information needs to be exploited by experiments or function evaluations. Surrogate-based optimization is intended to model available, i.e., evaluated, information about candidate solutions to utilize it to the full extent. A surrogate model is an approximation which substitutes the original expensive objective function, real-world process, physical simulation, or computational process during the optimization. In general, surrogates are either simplified physical or numerical models based on knowledge about the physical system, or empirical functional models based on knowledge acquired from evaluations and sparse sampling of the parameter space (Søndergaard et al. 2003). In this work, we focus on the latter, so-called data-driven models. The terms surrogate model, meta-model, response surface model and also posterior distribution are used synonymously in the common literature (Mockus 1974; Jones 2001; Bartz-Beielstein and Zaefferer 2017). We will briefly refer to a surrogate model as a surrogate. Furthermore, we assume that it is crucial to distinguish between the use of an explicit surrogate of the objective function and general model-based optimization (Zlochin et al. 2004), which additionally refers to methods, where a statistical model is used to generate new candidate solutions (cf. Sect. 3.2). We thus distinguish between the two different terms surrogate-based and model-based to avoid confusion. Another term present in the literature is surrogate-assisted optimization, which mostly refers to the application of surrogates in combination with population-based evolutionary computation (Jin 2011).

Fig. 1 A surrogate-based optimization process with the different objective function layers: real-world process, computational model, and surrogate. The arrows mark different possible data/application streams. Dotted arrows are in the background, i.e., they pass through elements; each connection always terminates with an arrow. Surrogates are typically either models for the simulation or real-world function. Direct optimization of the problem layers is also possible. Two examples of processes are given to outline the use of the architecture in a business data and robot control task.

Important publications featuring overviews or surveys on surrogates and surrogate-based optimization were presented by Sacks et al. (1989), Jones (2001), Queipo et al. (2005), Forrester and Keane (2009). Surrogate-based optimization is commonly defined for but not limited to the case of complex real-world optimization applications. We define a typical surrogate-based optimization process by three layers, where the first two are considered as problem layers, while the latter one is the surrogate, i.e., an approximation of the problem layers. We could transfer the defined layers to different computational problems with expensive function evaluations, such as complex algorithms or machine learning tasks.

Each layer can be the target of optimization or used to retrieve information to guide the optimization process. Figure 1 illustrates the different layers of objective functions and the surrogate-based optimization process for real-world problems. In this case, the objective function layers, from the bottom up, are:

- L1: The real-world application \(f_1(\mathbf {x})\), given by the physical process itself or a physical model. Direct optimization is often expensive or even impossible, due to evaluations involving resource-demanding prototype building or even dangerous experiments.
- L2: The computational model \(f_2(\mathbf {x})\), given by a simulation of the physical process or a complex computational model, e.g., a computational fluid dynamics model or structural dynamics model. A single computation may take minutes, hours, or even weeks to compute.
- L3: The surrogate \(s(\mathbf {x})\), given by a data-driven regression model. The accuracy heavily depends on the underlying surrogate type and amount of available information (i.e., function evaluations). The optimization is, compared to the other layers, typically cheap. Surrogates are constructed either for the real-world application \(f_1(\mathbf {x})\) or the computational model \(f_2(\mathbf {x})\).

Furthermore, the surrogate-based optimization cycle includes the optimization process itself, which is given by an adequate optimization algorithm for the selected objective function layer. No surrogate-based optimization is performed, if the optimization is directly applied to \(f_1(\mathbf {x})\) or \(f_2(\mathbf {x})\). The surrogate-based optimization uses \(f_1(\mathbf {x})\) or \(f_2(\mathbf {x})\) for verification of promising solution candidates. Moreover, the control parameters of the optimization algorithm or even the complete optimization cycle, including the surrogate modeling process, can be tuned.

Each layer imposes different evaluation costs and fidelities: the real-world problem is the most expensive to evaluate, but has the highest fidelity, while the surrogate is the cheapest to evaluate, but has a lower fidelity. The main benefit of using surrogates is thus the reduction of needed expensive function evaluations on the objective function \(f_1(\mathbf {x})\) or \(f_2(\mathbf {x})\) during the optimization. The studies by Loshchilov et al. (2012), Marsden et al. (2004), Ong et al. (2005) and Won and Ray (2004) feature benchmark comparisons of surrogate-based optimization. Nevertheless, the model construction and updating of the surrogates also require computational resources, as well as evaluations for verification on the more expensive function layers. An advantage of surrogate-based optimization is the availability of the surrogate model, which can be utilized to gain further global insight into the problem, which is particularly valuable for black-box problems. The surrogate can be utilized to identify important decision variables or visualize the nature of the problem, i.e., the fitness landscape.
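
The cycle of modeling, cheap search on the surrogate, and verification on the expensive layer can be sketched as follows. This is an illustrative toy under stated assumptions, not the process of any cited study: `expensive_function` merely stands in for \(f_1\) or \(f_2\), a k-nearest-neighbor average stands in for the regression surrogate \(s(\mathbf{x})\), and the search on the surrogate is a plain random candidate screening. All names and default values are hypothetical.

```python
import math
import random

def expensive_function(x):
    """Stand-in for f_1 or f_2; in practice a costly simulation or experiment."""
    return sum((xi - 1.0) ** 2 for xi in x)

def knn_surrogate(archive, x, k=3):
    """Predict s(x) as the mean objective value of the k nearest evaluated points."""
    nearest = sorted(archive, key=lambda entry: math.dist(entry[0], x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def surrogate_based_search(f, lower, upper, n_init=10, n_iter=20, n_cand=200, seed=1):
    rng = random.Random(seed)

    def sample():
        return [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]

    # Initial design: a few evaluations on the expensive layer.
    archive = [(x, f(x)) for x in (sample() for _ in range(n_init))]
    for _ in range(n_iter):
        # Cheap search on the surrogate: score many candidates without calling f.
        candidates = [sample() for _ in range(n_cand)]
        x_new = min(candidates, key=lambda x: knn_surrogate(archive, x))
        # Verification: one expensive evaluation; the archive update refits the surrogate.
        archive.append((x_new, f(x_new)))
    best = min(archive, key=lambda entry: entry[1])
    return best, len(archive)
```

With the default settings, 4000 candidates are scored on the surrogate while the expensive function is called only 30 times, which is the trade-off the layers above describe. A practical implementation would typically replace the nearest-neighbor average with a more accurate regression model.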

2.4 Meta-optimization and hyperheuristics

Meta-optimization or parameter tuning (Mercer and Sampson 1978) describes the process of finding the optimal parameter set for an optimization algorithm. It is also an optimization process itself, which can become very costly in terms of objective function evaluations, as they are required to evaluate each parameter set of a specific algorithm. Hence, surrogate-model-based algorithms in particular have become very successful meta-optimizers (Bartz-Beielstein et al. 2005). Figure 1 shows where the meta-optimization is situated in an optimization process. If the algorithm adapts parameters during the active run of optimization, it is called parameter control (Eiben et al. 1999). Algorithm parameter tuning and control are further discussed in Sect. 3.2.
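
A minimal sketch of parameter tuning, assuming a toy stochastic hill climber whose only control parameter is its step size and a plain grid search as the meta-optimizer. Both are hypothetical stand-ins chosen for brevity; the function names and default values are not from the article.

```python
import random

def sphere(x):
    """Assumed example objective (not from the article)."""
    return sum(xi * xi for xi in x)

def hill_climber(f, x0, step, budget, rng):
    """Toy stochastic hill climber; returns the best objective value found."""
    x, y = list(x0), f(x0)
    for _ in range(budget - 1):
        cand = [xi + rng.gauss(0.0, step) for xi in x]
        y_cand = f(cand)
        if y_cand < y:
            x, y = cand, y_cand
    return y

def tune_step_size(f, x0, step_grid, budget=100, repeats=5, seed=0):
    """Grid-search meta-optimization: choose the step size with the best mean result.
    Each parameter setting costs repeats * budget objective function evaluations."""
    scores = {}
    for step in step_grid:
        runs = [hill_climber(f, x0, step, budget, random.Random(seed + r))
                for r in range(repeats)]
        scores[step] = sum(runs) / repeats
    return min(scores, key=scores.get), scores

best_step, scores = tune_step_size(sphere, [3.0, 3.0], [0.001, 0.5, 50.0])
```

Even this tiny grid of three settings consumes 1500 objective function evaluations, which illustrates why tuning is costly and why cheaper, model-based meta-optimizers are attractive.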

A hyperheuristic (Cowling et al. 2000, 2002) is a high-level approach that selects and combines low-level approaches (i.e., heuristics, elements from metaheuristics), to solve a specific problem or problem class. It is an optimization algorithm that automates the algorithm design process by searching an ample space of pre-defined algorithm components. A hyperheuristic can also be utilized in an online fashion, e.g., trying to find the most suitable algorithm at each state of a search process (Vermetten et al. 2019). We regard hyperheuristics as hybrid algorithms (cf. Sect. 4.5).

3 A new taxonomy

The term taxonomy is defined as a consistent procedure or classification scheme for separating objects into classes or categories based on specific features. The term taxonomy is mainly present in natural science for establishing hierarchical classifications. A taxonomy fulfills the task of distinction and order; it provides explanations and a greater understanding of the research area through the identification of coherence and the differences between the classes.

Several reasons drive our motivation for a new taxonomy. The first reason (I) stems from the available GO taxonomies (Sect. 3.1, cf. Fig. 2): during the last decades, several authors developed new taxonomies for optimization algorithms. However, new classes of algorithms have become state-of-the-art in modern algorithm design; in particular, model-based, surrogate-based, and hybrid algorithms dominate the field. Existing taxonomies of GO algorithms do not reflect this situation. Although there are surveys and books which handle the broad field of optimization and give general taxonomies, they are outdated and lack the integration of the new designs. Available up-to-date taxonomies often address a particular subfield of algorithms and discuss them in detail. However, a generalized taxonomy, which includes the above-described approaches and allows these optimization strategies to be connected, is missing.

This gap motivates our second reason (II): the development of a generalization scheme for algorithms. We argue that the search concepts of many algorithms are built upon each other and are related. While the algorithms have apparent differences in their strategies, they are not entirely different. Many examples of similar algorithms can be found among the differently named nature-inspired metaheuristics that follow the same search concepts. However, certain elements are characteristic of algorithms, which allows us to define classes based on their search elements. Even different classes share a large number of these search elements. Thus, our new taxonomy is based on a generalized scheme of four crucial algorithm design elements (Sect. 3.2, cf. Fig. 3), which allows us to take a bottom-up approach to differentiate, but also connect, the different algorithm classes. The recent developments in hybrid algorithms drive the urge to generalize search strategies, where we no longer use specific, individual algorithms, but combinations of search elements and operators of different classes to find and establish new strategies, which cannot simply be categorized.

Our third reason (III) is the importance of simplicity. Our new taxonomy is not only intended to divide the algorithms into classes, but also to provide an intuitive understanding of the working mechanisms of each algorithm to a broad audience. To support these ideas, we will draw analogies between the algorithm classes and the behavior of a human-like individual in each of the descriptive class sections.

Our last reason (IV) is that we intend our taxonomy to be helpful in practice. A common issue is the selection of an adequate optimization algorithm when faced with a new problem. Our algorithm classes are connected by individual properties, which allows us to utilize the new taxonomy to propose suitable algorithm classes based on a small set of problem features. These suggestions, discussed in detail in Sect. 5 and illustrated in Fig. 4, should help users to find best practices for new problems.

3.1 History of taxonomies

In the literature, one can find several taxonomies trying to shed light on the vast field of optimization algorithms. The identified classes are often named by a significant feature of the algorithms in the class, with the names either being informative or descriptive. For example, Leon (1966) presented one of the first overviews on global optimization. It classified algorithms into three categories: (1) blind search, (2) local search, and (3) non-local search. In this context, blind search refers to simple search strategies that select the candidates at random over the entire search space, but following a built-in sequential selection strategy. During the local search, new candidates are selected only in the immediate neighborhood of the previous candidates, which leads to a trajectory of small steps. Finally, non-local search allows the algorithm to escape from local optima and thus enables a global search. Archetti and Schoen (1984) extend the above scheme by also adding the class of deterministic algorithms, i.e., those that are guaranteed to find the global optimum with a defined budget. Furthermore, the paper stands out in establishing a taxonomy which, for the first time, includes the concepts to construct surrogates, as they describe probabilistic methods based on statistical models, which are iteratively utilized to perform the search. Törn and Zilinskas (1989) reviewed existing classification schemes and presented their own classification. They drew the most crucial distinction between two non-overlapping main classes, namely those methods with and those without guaranteed accuracy. The main new feature of their taxonomy is the clear separation of the heuristic methods into those with direct and those with indirect objective function evaluation. Mockus (1974) also discussed the use of Bayesian optimization. At that time, today's high availability of computational power did not exist; therefore, Törn and Zilinskas (1989) concluded the following regarding Bayesian models and their applicability for (surrogate-based) optimization:

Even if it is very attractive, theoretically it is too complicated for algorithmic realization. Because of the fairly cumbersome computations involving operations with the inverse of the covariance matrix and complicated auxiliary optimization problems the resort has been to use simplified models.

Still, we frequently find the scheme of dividing algorithms into non-heuristic (or exact), random (or stochastic), and surrogate-based methods. Several subsequent taxonomies added different algorithm features, such as metaheuristic approaches (Arora et al. 1995), surrogate-based optimization (Jones 2001), non-heuristic methods (Neumaier 2004), hybrid methods (Talbi 2002), direct search methods (Audet 2014; Kolda et al. 2003), model-based optimization (Zlochin et al. 2004), hyperheuristics (Burke et al. 2010), surrogate-assisted algorithms (Jin 2011), nature-inspired methods (Rozenberg et al. 2011), or population-based approaches (Boussaïd et al. 2013). We created an overview of selected taxonomies and compare them in Fig. 2.

Fig. 2 Global optimization taxonomy history. Information from Leon (1966), Archetti and Schoen (1984), Törn and Zilinskas (1989), Arora et al. (1995), Jones (2001), Talbi (2002), Neumaier (2004), Zlochin et al. (2004), Burke et al. (2010), Jin (2011) and Boussaïd et al. (2013) are illustrated and compared. Different distinctions between the large set of algorithms were drawn. A comprehensive taxonomy is missing and introduced by our new taxonomy, which concludes the diagram and is further presented in Sect. 3.

3.2 The four elements of algorithm design

Any modern optimization algorithm, as defined in Sect. 2, can be reduced to the three key search strategy elements Initialization, Generation, and Selection. A fourth element controls all these key elements: the Control of the different functions and operators in each element. The underlying terminology is generic and based on typical concepts from the field of evolutionary algorithms. We could easily exchange it with wording from other standard algorithm classes (e.g., evaluate = test/trial, generate = produce/variate). Algorithm 3.1 displays the principal elements and the abstracted fundamental structure of optimization algorithms (Bartz-Beielstein and Zaefferer 2017). We could map this structure and these elements to any modern optimization algorithm, even if the search strategy is inherently different or the elements do not follow the illustrated order or appear multiple times per iteration.
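
The interplay of the elements can be sketched in a few lines of Python. This is an illustrative reading of the generic structure and not a reproduction of Algorithm 3.1; the concrete operators (uniform initialization, Gaussian variation, replace-worst selection, and a simple success-based step-size rule as control) as well as all names and default values are assumptions.

```python
import random

def sphere(x):
    """Assumed example objective (not from the article)."""
    return sum(xi * xi for xi in x)

def optimize(f, lower, upper, pop_size=5, budget=200, sigma=0.5, seed=0):
    rng = random.Random(seed)
    # Initialization: distribute the starting candidates in the search space.
    population = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                  for _ in range(pop_size)]
    fitness = [f(x) for x in population]
    evals = pop_size
    while evals < budget:  # termination: evaluation budget exhausted
        # Generation: vary an existing candidate and keep it inside the bounds.
        parent = rng.choice(population)
        child = [min(max(xi + rng.gauss(0.0, sigma), lo), hi)
                 for xi, lo, hi in zip(parent, lower, upper)]
        y = f(child)
        evals += 1
        # Selection: the child replaces the worst candidate if it is better.
        worst = max(range(pop_size), key=fitness.__getitem__)
        improved = y < fitness[worst]
        if improved:
            population[worst], fitness[worst] = child, y
        # Control: adapt the step size during the run (parameter control).
        sigma *= 1.1 if improved else 0.95
    best = min(range(pop_size), key=fitness.__getitem__)
    return population[best], fitness[best]

x_best, y_best = optimize(sphere, [-5.0, -5.0], [5.0, 5.0])
```

Setting `pop_size=1` turns the same loop into a single-candidate search, which hints at how different algorithm classes can share these elements.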

The initialization of the search defines starting locations or a schema for the initial candidate solutions. Two common strategies exist:

1. If there is no available a priori knowledge about the problem and its search space, the best option is to use strategically randomized starting points. The initial distribution target is often exploration, i.e., a broad distribution of the starting points if possible. Particularly interesting for surrogate-based optimization are systematic initialization schemes based on methods from the field of design of experiments (Crombecq et al. 2011; Bossek et al. 2020).

2. If domain knowledge or other a priori information is available, such as data or process information from previous optimization runs, it is beneficial to utilize this information, e.g., by using a selection of these solutions, such as those with the best fitness. However, known solutions can also bias the search towards them. Thus, e.g., restart strategies intentionally discard them. In surrogate-based optimization, the initial modeling can use available data.

The initial candidates have a large impact on the balance between exploration and exploitation. Space-filling designs with large amounts of random candidates or sophisticated design of experiments methods will lead to an initial exploration of the search space. Starting with a single candidate will presumably lead to an exploitation of the neighborhood of the selected candidate location. Hence, algorithms using several candidates are in general more robust, while single-candidate algorithms are sensitive to the selection of the starting candidate, particularly in multi-modal landscapes. Multi-start strategies can further increase the robustness and are particularly common for single-candidate algorithms, and also frequently recommended for population-based algorithms (Hansen et al. 2010b).

The generation during the search process defines the methods for finding new candidates, with particular regard to how they use available or obtained information about the objective function. A standard approach is the variation of existing observations, as it utilizes, and to a certain extent preserves, the information of previous iterations. Even the simplest hill-climber class algorithms, which do not require any global information or stored knowledge of former iterations (Sect. 4.1), use the last obtained solution to generate new candidate(s). Sophisticated algorithms generate new candidates based on exploited and stored global knowledge about the objective function and fitness landscape. This knowledge is stored either explicitly, by keeping an archive of all available or selected observations, or implicitly, by using distribution or data models of available observations. Another option to generate new candidates is combining information of multiple candidates by dedicated functions or operators, particularly present in the population class (Sect. 4.3). The exact operators for generation and variation of candidate solutions are manifold and an essential aspect of keeping the balance between exploration and exploitation in a search strategy.

The selection defines the principle of choosing the solutions for the next iteration. We use the term selection, which has its origins in evolutionary computation. Besides the most straightforward strategy of choosing the solution(s) with the best fitness, advanced selection strategies have emerged, which are mainly present in metaheuristics (Boussaïd et al. 2013). These selection strategies are particularly common in algorithms with several candidates per generation step; thus, evolutionary computation introduced the most sophisticated selection methods (Eiben and Smith 2015). The use of absolute differences in fitness or their relative difference is the most common strategy and called ranked selection, i.e., based on methods such as truncation, tournament or proportional selection.

The Control parameters determine how the search can be adapted and improved by controlling the above-mentioned key elements. We distinguish between internal and external parameters: External parameters, also known as offline parameters, can be adjusted by the user and need to be set a priori to the optimization run. Typical external parameters include the number of candidates and settings influencing the above-mentioned key elements. Besides standard theory-based defaults (Schwefel 1993), they are usually set by either utilizing available domain knowledge, extensive a priori benchmark experiments (Gämperle et al. 2002), or educated guessing. Sophisticated meta-optimization methods were developed to find suitable parameter settings in an automated fashion. Well-known examples are sequential parameter tuning (Bartz-Beielstein et al. 2005), iterated racing for automatic algorithm tuning (López-Ibáñez et al. 2016), bonesa (Smit and Eiben 2011) or SMAC (Hutter et al. 2011). In comparison to external parameters, internal ones are not meant to be changed by the user. They are either fixed to an absolute value, which is usually based on physical constants or extensive testing by the authors of the algorithm, or are adaptive, or even self-adaptive. Adaptive parameters are changed during the search process based on fixed strategies and exploited problem information (Eiben et al. 1999) without user influence. Self-adaptive parameters are optimized during the run, e.g., by including them into the candidate vector x as an additional decision value. Algorithms using adaptive schemes tend to have better generalization abilities than those with fixed parameters. Thus, they are especially successful for black-box problems, where no prior information about the objective function properties is available to set up parameters in advance (Hansen et al. 2003).
In general, the settings of algorithm control parameters directly affect the balance between exploration and exploitation during the search and are crucial for the search strategies and their performance.

Further, the evaluation step computes the fitness of the candidates. The evaluation is a crucial aspect, as it defines how and which information about any candidate solution is gathered by querying the objective function, which can significantly influence the search strategy and also the utilized search operators. However, important aspects of the evaluation, such as noise, constraints, and multiple objectives, are mostly problem-dependent. The handling of these aspects sometimes requires unique strategies, operators, or even specialized algorithm designs. These unique algorithms will not be covered in our taxonomy. However, strategies for handling these particular characteristics are often enhanced versions of algorithms presented in this taxonomy, e.g., for handling multiple objectives. Multi-objective problems include several competing goals, i.e., an improvement in one objective leads to a deterioration in another objective. Thus, no single optimal solution is available, but a set of solutions of equivalent quality, the non-dominated solutions or so-called Pareto set, from which reasonable solutions need to be selected (Fonseca and Fleming 1993; Naujoks et al. 2005). A so-called decision-maker, who is often the user, is needed to select the final solutions. Further, multi-objective algorithms can include special search operators, such as hyper-volume-based selection or non-dominated sorting for rank-based selection (Deb et al. 2002; Beume et al. 2007). While most computer experiments are deterministic, i.e., iterations using the same value set for the associated decision variables should deliver the same results, real-world problems are often non-deterministic. They include non-observable disturbance variables and stochastic noise. Typical noise handling techniques include multiple evaluations of solutions to reduce the standard deviation and special sampling techniques. The interested reader can find a survey on noise handling by Arnold and Beyer (2003).
Moreover, optimization problems frequently include different constraints, which we need to consider during the optimization process. Constraint handling techniques can be directly part of the optimization algorithm, but most algorithms are designed to minimize the objective function and add constraint handling on top. Thus, algorithms integrate it by adjusting the fitness, e.g., by penalty terms. Different techniques for constraint handling are discussed by Coello (2002) and Arnold and Hansen (2012).
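The penalty idea mentioned above can be sketched in a few lines of Python. This is only one of many possible penalty schemes; the helper name `penalized`, the quadratic form of the penalty, the convention that a constraint is satisfied when g(x) <= 0, and the weight of 1000 are assumptions made for illustration.

```python
def penalized(f, constraints, weight=1000.0):
    """Wrap objective f so that violated constraints g(x) <= 0 add a penalty."""
    def fitness(x):
        # Sum of squared violations; feasible points contribute zero.
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return f(x) + weight * violation
    return fitness


def objective(x):
    return x ** 2  # unconstrained minimum at x = 0


def constraint(x):
    return 1.0 - x  # feasible if and only if x >= 1


fitness = penalized(objective, [constraint])
```

An unconstrained optimizer applied to `fitness` is then pushed away from the infeasible region x < 1, since the penalty dominates the objective there.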

4 The definition of intuitive algorithm classes

In his work about evolution strategies, Rechenberg (1994) illustrated a visual approach to an optimization process: a mountaineer in an alpine landscape, attempting to find and climb the highest mountain. The usage of analogies to the natural world is a valuable method to explain the behavior of search algorithms. In the area of metaheuristics, the behavior of nature and animals inspired the search procedure of the algorithms: Evolutionary algorithms follow the evolution theory (Rechenberg 1994; Eiben and Smith 2015); particle swarm optimization (Kennedy and Eberhart 1995; Shi and Eberhart 1998) utilizes a strategy similar to the movement of bird flocks; ant colony optimization (Dorigo et al. 2006) mimics, as the name suggests, the ingenious pathfinding and food search principles of ant populations.

We take up the idea of optimization processes being human-like individuals and use it in the definition of our extended class names: the mountaineer, sightseer, team, surveyor and chimera. This additional naming shall accomplish the goal of presenting an evident and straightforward idea of the search strategies of the algorithms in the associated class.

4.1 Hill-climbing class: “The Mountaineer”

Intuitive Description 1

(The Mountaineer) The mountaineer is a single individual who hikes through a landscape, concentrating on achieving his ultimate goal: finding and climbing the highest mountain. He is utterly focussed on his goal to climb up that mountain. So while he checks different paths, he will always choose the ascending way and not explore the whole landscape.

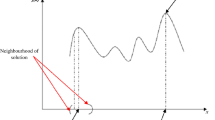

Hill-climbing algorithms focus their search strategy on greedy exploitation with minimal exploration. Hence, this class encompasses fundamental optimization algorithms with direct search strategies, which include gradient-based algorithms as well as deterministic or stochastic hill-climbing algorithms. Gradient-based algorithms, also known as first-order methods, are primarily applicable to differentiable functions, where the gradient information is available. If the gradient is not directly available, it can be approximated or estimated, for example, by stochastic gradient descent (SGD) algorithms (Ruder 2016).

These algorithms have, by design, fast convergence to a local optimum situated in a region of attraction and commonly no explicit strategy for exploration. Overviews of associated algorithms were presented by Lewis et al. (2000) and Kolda et al. (2003). Common algorithms include the quasi-Newton Broyden-Fletcher-Goldfarb-Shanno algorithm (Shanno 1970), conjugate gradients (CG) (Fletcher 1976), the direct search algorithm Nelder-Mead (Nelder and Mead 1965), and stochastic hill climbers such as the (1+1)-Evolution Strategy (Rechenberg 1973; Schwefel 1977).

Famous SGD algorithms are adaptive moment estimation (ADAM) (Kingma and Ba 2014) and the adaptive gradient algorithm (AdaGrad) (Duchi et al. 2011). They are frequently applied in machine learning, particularly for optimizing neural network weights with up to millions of parameters.

As this class defines fundamental search strategies, hill-climbers are often part of sophisticated algorithms as a fast-converging local optimizer. Hill-climbers do not utilize individual operators for the initialization of the single starting point. Thus, it is typically selected at random in the valid search space or based on prior knowledge.

The variation of the last observed selected candidate generates new candidates, commonly in the current solution’s vicinity. For example, the stochastic hill climber utilizes random variation with a small step size compared to the range of the complete search interval. Gradient-based methods directly compute or approximate the gradients of the objective function to find the best direction and strength for the variation. Algorithms such as Nelder-Mead create new candidates by computing a search direction using simplexes.

The most common selection methods are elitist strategies, which evaluate the new candidate, compare it to the old solution, and keep the one with the best fitness as a new solution. Always selecting the best is known as a greedy search strategy, as it tries to improve as fast as possible. This greedy strategy leads to the outlined hill-climbing search which performs a trajectory of small, fitness-improving steps, which forms in the ideal case a direct line to the nearest optimum. In general, these algorithms search locally for an optimum and do not exploit or use global function information.

The most critical control parameter is the variation step size, which directly influences the speed of convergence. As a result of this, the state of the art is to use an adaptive variation step size that changes online during the search, often based on previous successful steps, for example as defined in the famous 1/5 success rule (Rechenberg 1973).
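The following sketch illustrates a stochastic hill climber in the style of a (1+1)-Evolution Strategy with a success-rate-based step-size adaptation. It is a simplified illustration rather than a faithful reproduction of the cited algorithm: the function name, the adaptation window of 20 iterations, and the adaptation factor of 0.85 are assumptions chosen for the example.

```python
import random


def one_plus_one_es(f, x0, sigma=1.0, n_iter=500, window=20, seed=1):
    """Minimize f from x0; sigma is adapted with a 1/5 success rule."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    for t in range(1, n_iter + 1):
        # Generation: Gaussian variation of the single current solution.
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        # Selection: elitist and greedy, keep the better of parent and child.
        if fy < fx:
            x, fx = y, fy
            successes += 1
        # Control: every `window` iterations compare success rate with 1/5.
        if t % window == 0:
            rate = successes / window
            if rate > 0.2:
                sigma /= 0.85  # many successes: take larger steps
            elif rate < 0.2:
                sigma *= 0.85  # few successes: take smaller steps
            successes = 0
    return x, fx, sigma


def sphere(x):
    return sum(xi ** 2 for xi in x)


x_best, f_best, sigma_end = one_plus_one_es(sphere, [3.0, 3.0, 3.0])
```

On a unimodal function such as the sphere, the step size shrinks as the search approaches the optimum; on a multi-modal function the same greedy selection would typically stall in the nearest local optimum.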

4.2 Trajectory class: “The Sightseer”

Intuitive Description 2

(The Sightseer) The intuitive idea of this class is a single hiker looking for interesting places. During the search, the sightseer takes into account that multiple places of interest exist. It thus explores the search space or systematically visits areas to gather information about multiple locations and utilizes this to find the most desired ones.

Trajectory class algorithms still focus on exploitation but are supported by defined exploration methods. This class encompasses algorithms that utilize information from consecutive function evaluations.

They are the connecting link between the hill-climbing and population class. While trajectory algorithms are a step towards population algorithms and also allow the sampling of several solutions in one iteration, they use the principle of initializing and maintaining a single solution. This solution is the basis for variation in each iteration. Again, this variation forms a trajectory in the search space over consecutive iterations, similar to the hill-climbing class. Thus these methods are known as trajectory methods (Boussaïd et al. 2013). While the initialization and generation of the trajectory class are similar to those of the hill-climbing class, the main differences can be found during the selection, as they utilize operators to guide the search process in a global landscape in specific directions. Two different strategies can be differentiated, which define two subclasses:

(i) The exploring trajectory class utilizes functions to calculate a probability of accepting a candidate as the (current) solution.

(ii) The systematic trajectory class utilizes a separation of the search space into smaller sub-spaces to guide the search into specific directions.

These different strategies are sensitive to their parametrization, which needs to be selected adequately for the underlying objective function.

4.2.1 Exploring trajectory algorithms

The exploring trajectory subclass encompasses algorithms that implement selection operators to balance exploration and exploitation to enable global optimization. Exploration is achieved by introducing selection functions that allow the search to expand and to escape the region of attraction of a local optimum. Simulated annealing (SANN) (Kirkpatrick et al. 1983), which is known to be a fundamental contribution to the field of metaheuristic search algorithms, exemplifies this class. The continuous version (Goffe et al. 1994; Siarry et al. 1997; Van Groenigen and Stein 1998) of the SANN algorithm extends the iterated stochastic hill-climber. It includes a new element for the selection, the so-called acceptance function. It determines the probability of accepting an inferior candidate as a solution by utilizing a parameter called temperature T, in analogy to metal annealing procedures. This dynamic selection allows escaping local optima by accepting movement in the opposite direction of improvement, which is the fundamental difference to a hill-climber and ultimately allows the global search. At the end of each iteration, a so-called cooling operator adapts the temperature. This operator can be used to further balance the amount of exploration and exploitation during the search (Henderson et al. 2003). A common approach is to start with a high temperature and steadily reduce T according to the number of iterations or to utilize an exponential decay of T. This steady reduction of T leads to a phase of active movement and thus exploration in the early iterations, while with decreasing T, the probability of accepting inferior candidates reduces. As T approaches zero, the behavior becomes similar to an iterative hill-climber. Modern SANN implementations integrate self-adaptive cooling schemes, which use alternating phases of cooling and reheating (Locatelli 2002).
These allow alternating phases of exploration and exploitation but require sophisticated control.
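A minimal sketch of the acceptance function and an exponential cooling schedule is given below. The Metropolis-style acceptance probability exp(-delta/T), the decay factor `alpha`, the Gaussian variation, and the one-dimensional Rastrigin-type test function are assumptions chosen for illustration and are not taken from the cited implementations.

```python
import math
import random


def accept(delta, temperature, rng):
    """Accept improvements always, inferior candidates with prob. exp(-delta/T)."""
    if delta <= 0.0:
        return True
    if temperature <= 0.0:
        return False  # at T = 0 the search behaves like a hill-climber
    return rng.random() < math.exp(-delta / temperature)


def simulated_annealing(f, x0, lower, upper, t0=10.0, alpha=0.99,
                        step=0.5, n_iter=2000, seed=2):
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_fx = x, fx
    temperature = t0
    for _ in range(n_iter):
        # Generation: local Gaussian variation, clipped to the bounds.
        y = min(max(x + rng.gauss(0.0, step), lower), upper)
        fy = f(y)
        # Selection: probabilistic acceptance controlled by the temperature.
        if accept(fy - fx, temperature, rng):
            x, fx = y, fy
            if fx < best_fx:
                best_x, best_fx = x, fx
        # Cooling operator: exponential decay of the temperature.
        temperature *= alpha
    return best_x, best_fx


def rastrigin(x):
    # Multi-modal 1-D test function with its global minimum at x = 0.
    return x ** 2 + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))


x_best, f_best = simulated_annealing(rastrigin, 4.0, -5.0, 5.0)
```

With a high initial temperature, inferior candidates are accepted often and the search can cross the barriers between local optima; as the temperature decays, the acceptance test converges to the greedy comparison of a hill-climber.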

4.2.2 Systematic trajectory algorithms

This subclass encompasses algorithms that base their search on a space partitioning utilizing the exposed knowledge of former iterations. They create sub-spaces that are excluded from generation and selection, or attractive sub-spaces, on which the search is focused. These search space partitions guide the search by pushing candidate generation to new promising or previously unexplored parts of the search space. An outstanding paradigm for this class is Tabu Search (Glover 1989). A so-called tabu list contains the last successful candidates and defines a sub-space of all evaluated solutions. In the continuous versions (Siarry and Berthiau 1997; Hu 1992; Chelouah and Siarry 2000), small (hypersphere or hyperrectangle) regions around the candidates are utilized. The algorithm will consider these solutions or areas as forbidden for future searches, i.e., it selects no candidates situated in these regions as solutions. This process ensures that the search moves away from known solutions and prevents cycling between identical candidates and getting stuck in local optima. The definition of the tabu list parameters can control exploration and exploitation, e.g., by the number of elements or the size of the areas.
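The tabu mechanism for continuous spaces can be sketched as follows. This is a simplified illustration under several assumptions: the tabu list stores the most recently accepted solutions, tabu regions are hyperspheres of a fixed `radius`, neighbours are drawn uniformly from a box around the current solution, and all names and default values are hypothetical.

```python
import math
import random
from collections import deque


def is_tabu(candidate, tabu_list, radius):
    """A candidate is forbidden if it lies inside any tabu hypersphere."""
    return any(math.dist(candidate, centre) < radius for centre in tabu_list)


def tabu_search(f, x0, step=0.5, radius=0.2, tabu_size=10,
                n_neighbours=20, n_iter=200, seed=3):
    rng = random.Random(seed)
    current = tuple(x0)
    best, best_f = current, f(current)
    # Bounded list: the oldest tabu regions are released automatically.
    tabu_list = deque([current], maxlen=tabu_size)
    for _ in range(n_iter):
        neighbours = [tuple(c + rng.uniform(-step, step) for c in current)
                      for _ in range(n_neighbours)]
        allowed = [n for n in neighbours if not is_tabu(n, tabu_list, radius)]
        if not allowed:
            continue  # every neighbour is forbidden, sample again
        # Move to the best allowed neighbour, even if it is worse than before.
        current = min(allowed, key=f)
        tabu_list.append(current)
        current_f = f(current)
        if current_f < best_f:
            best, best_f = current, current_f
    return best, best_f


def sphere(x):
    return sum(xi ** 2 for xi in x)


x_best, f_best = tabu_search(sphere, (2.0, 2.0))
```

Increasing `tabu_size` or `radius` forbids larger parts of the visited space and therefore shifts the balance towards exploration.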

The areas of search can also be pre-defined, such as in variable neighborhood search (VNS) (Hansen and Mladenovic 2003; Hansen et al. 2010c; Mladenović et al. 2008). The search strategy of VNS is to perform sequential local searches in these sub-spaces to exploit their local optima. The idea behind this search strategy is that by using an adequate set of sub-spaces, the chance of exploiting a local optimum, which is near the global optimum, increases.

4.3 Population class: “The Team”

Intuitive Description 3

(The Team) The intuitive idea of this class is a group of individuals, which team up to achieve their mutual goal together. They split up to explore different locations and share their knowledge with other members of the team.

Population class algorithms utilize distributed exploration and exploitation. The idea of initializing, varying, and selecting several candidate solutions at the same time defines this class. The algorithms are commonly metaheuristics, whose search concepts follow processes found in nature. Moreover, the class includes algorithms building upon the population-based concept by utilizing models of the underlying candidate distributions. Due to utilizing a population, the generation and selection strategies of these algorithms differ significantly from the hill-climber and trajectory class. We subdivide this class into the regular population and model-based population algorithms, which particularly differ in how they generate new candidates during the search:

(i) Regular population algorithms (Sect. 4.3.1) generate and maintain several candidates with specific population-based operators.

(ii) Model-based population algorithms (Sect. 4.3.2) generate and adapt models to store and process information.

4.3.1 Regular population algorithms

Well-known examples of this class are particle swarm optimization (PSO) (Kennedy and Eberhart 1995; Shi and Eberhart 1998) and different evolutionary algorithms (EA). We regard EAs as state of the art in population-based optimization, as their search concepts dominate this field. Nearly all other population-based algorithms use similar concepts and are frequently associated with EAs. Fleming and Purshouse (2002) go as far as to state:

In general, any iterative, population-based approach that uses selection and random variation to generate new solutions can be regarded as an EA.

Evolutionary algorithms follow the idea of evolution, reproduction, and the natural selection concept of survival of the fittest. In general, the field of EAs goes back to four distinct developments, evolution strategies (ES) (Rechenberg 1973; Schwefel 1977), evolutionary programming (Fogel et al. 1966), genetic algorithms (Holland 1992), and genetic programming (Koza 1992). The naming of the methods and operators matches with their counterparts from biology: candidates are individuals who can be selected to take the role of parents, mate and recombine to give birth to offspring. The population of individuals is evolved (varied, evaluated, and selected) over several iterations, so-called generations, to improve the solutions.

Different overview articles shed light on the vast field of evolutionary algorithms (Back et al. 1997; Eiben and Smith 2003, 2015).

EAs typically generate new solutions by variation of a selected subset of the entire population. The subsets are usually selected by competition-based strategies, which often also include probabilistic elements. New candidates are generated either by random variation of this subpopulation or by recombination via crossover, which is the outstanding concept of EAs. Recombination partly swaps, aggregates, or combines the variables of two or more candidates to create new candidate solutions.

The population-based selection strategies allow picking solutions with inferior fitness for the variation process, which allows exploration of the search space. Several selection strategies exist. For instance, in roulette wheel selection, the chance of being selected is proportional to the ranking while all chances sum up to one. A spin of the roulette wheel chooses each candidate, where the individual with the highest fitness also has the highest chance of being selected. Alternatively, in tournament selection, different small subsets of the population are randomly drawn for several tournaments. Within these tournaments, the candidates with the best fitness are selected based on comparisons to their competitors. This competition-based selection also allows inferior candidates to win their small tournament and participate in the variation.
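Both selection schemes can be sketched in a few lines of Python. The sketch assumes maximization with strictly positive fitness values and uses the fitness values directly as wheel weights; substituting rank-based weights would give a ranking-proportional wheel. The function names and the tournament size are illustrative assumptions.

```python
import random


def roulette_wheel(fitness, rng):
    """Return an index with probability proportional to its fitness value."""
    total = sum(fitness)
    pick = rng.uniform(0.0, total)  # one spin of the wheel
    cumulative = 0.0
    for index, value in enumerate(fitness):
        cumulative += value
        if pick <= cumulative:
            return index
    return len(fitness) - 1  # guard against floating-point round-off


def tournament(fitness, rng, size=2):
    """Draw `size` distinct candidates at random and return the fittest."""
    contestants = rng.sample(range(len(fitness)), size)
    return max(contestants, key=fitness.__getitem__)


rng = random.Random(0)
population_fitness = [1.0, 3.0, 2.0, 0.5]
parent_a = roulette_wheel(population_fitness, rng)
parent_b = tournament(population_fitness, rng, size=2)
```

In both schemes an inferior candidate can still be chosen: on the wheel with a small probability, and in a tournament whenever no better candidate happens to be drawn as a competitor.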

EAs usually have several parameters, such as the selection intensity (i.e., the percentage of truncation), variation step size, or recombination probability. Parameter settings, in particular adaptive and self-adaptive control for evolutionary algorithms, are discussed in Angelin (1995), Eiben et al. (1999), Lobo et al. (2007), Doerr et al. (2020) and Papa and Doerr (2020).

4.3.2 Model-based population algorithms

The model-based population class encompasses algorithms that explicitly use mathematical or statistical models of the underlying candidates. These algorithms generally belong to the broad field of EAs (Sect. 4.3.1), and use similar terminology and also operators.

Estimation of distribution algorithms (EDAs) are a well-known example of this class (Larrañaga and Lozano 2001; Hauschild and Pelikan 2011). In contrast to a regular population-based approach, a distribution model of selected promising candidates is learned in each iteration, which is then utilized to sample new candidates. Over the iterations, the sampling distribution will improve and likely converge to generate only optimal or near-optimal solutions. EDAs utilize models ranging from univariate over bivariate to multivariate distributions, e.g., modeled by Bayesian networks or Gaussian distributions with typical parameters, such as mean, variance, and covariance of the modeled population. The search principle of EDAs was first defined for discrete domains and later successfully extended to continuous domains (Hauschild and Pelikan 2011). Popular examples of EDAs are population-based incremental learning (PBIL) (Baluja 1994; Gallagher et al. 1999), the estimation of Gaussian networks algorithm (EGNA) (Larrañaga et al. 1999), the extended compact genetic algorithm (eCGA) (Harik 1999), and the iterated density estimation evolutionary algorithm (IDEA) (Bosman and Thierens 2000). The distinction from the surrogate class is that the underlying learned distribution models are directly utilized to sample new candidates, instead of substituting the objective function.
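The basic EDA cycle of sampling, selecting, and re-estimating the model can be sketched with the simplest continuous model, an independent (univariate) Gaussian per variable. This sketch does not reproduce any of the named algorithms; the function name, population size, truncation selection, and the lower bound on the standard deviation are assumptions for illustration.

```python
import random
import statistics


def gaussian_eda(f, mean, sd, pop_size=50, n_select=15, n_iter=40, seed=4):
    """Minimize f with a univariate Gaussian estimation of distribution."""
    rng = random.Random(seed)
    mean, sd = list(mean), list(sd)
    best, best_f = None, float("inf")
    for _ in range(n_iter):
        # Generation: sample the whole population from the current model.
        population = [[rng.gauss(m, s) for m, s in zip(mean, sd)]
                      for _ in range(pop_size)]
        # Selection: truncation, keep the n_select best candidates.
        population.sort(key=f)
        if f(population[0]) < best_f:
            best, best_f = population[0], f(population[0])
        selected = population[:n_select]
        # Model update: re-estimate mean and spread of each variable.
        for j in range(len(mean)):
            column = [candidate[j] for candidate in selected]
            mean[j] = statistics.fmean(column)
            sd[j] = max(statistics.pstdev(column), 1e-12)
    return best, best_f


def shifted_sphere(x):
    return sum((xi - 1.0) ** 2 for xi in x)


x_best, f_best = gaussian_eda(shifted_sphere, [0.0, 0.0], [2.0, 2.0])
```

Note that the model is rebuilt from the selected candidates in every iteration and the objective function is still evaluated for every sampled candidate; the model only steers where new candidates are drawn.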

A well-known and successful model-based algorithm is the covariance matrix adaptation evolution strategy (CMA-ES) (Hansen et al. 2003). While it also utilizes a distribution model, its central idea extends the EDA approach by learning a multivariate Gaussian distribution model of candidate steps, i.e., their changes over iterations, instead of current locations (Hansen 2006). Moreover, instead of creating a new distribution model of selected candidates in each iteration, the model is kept and updated. This principle of updating the model is similar to applying evolutionary variation operators, such as recombination or mutation, to the candidates in a regular population-based algorithm. However, in the CMA-ES, the variation operators’ target is the distribution model and not individual candidates.

Again, this class has several control parameters, which are often designed to be adaptive or self-adaptive. For example, the CMA-ES utilizes a sophisticated step-size control and adapts the mutation parameters during each iteration following the history of prior successful iterations, the so-called evolution paths. These evolution paths are exponentially smoothed sums for each distribution parameter over the consecutive prior iterative steps.

4.4 Surrogate class: “The Surveyor”

Intuitive Description 4

(The Surveyor) The intuitive idea of the surveyor is a specialist who systematically measures a landscape by taking samples of the height to create a topological map. This map resembles the real landscape with a given approximation accuracy and is typically exact at the sampled locations and models the remaining landscape by regression. It can then be examined and utilized to approximate the quality of an unknown point and further be updated if new information is acquired. Ultimately it can be used to guide an individual to the desired location.

Surrogate class algorithms utilize distributed exploration and exploitation by explicitly relying on landscape information and a landscape model. These algorithms differ from all other defined classes in their focus on acquiring, gathering, and utilizing information about the fitness landscape. They utilize evaluated, acquired information to approximate the landscape and also predict the fitness of new candidates.

As illustrated in Sect. 2.3, the surrogates depict the maps of the fitness landscape of an objective function in an algorithmic framework. A surrogate algorithm utilizes them for an efficient indirect search, instead of performing multiple, direct, or localized search steps. We divide this class into two subclasses:

- Surrogate-based algorithms utilize a global surrogate model for variation and selection.

- Surrogate-assisted algorithms utilize surrogates to support the search.

The distinction between the two subclasses is motivated by the different use of the surrogate model. While a surrogate-based algorithm generates new candidates solely by optimizing on, or predicting with, the surrogate, surrogate-assisted algorithms use it to support their search by individual operators (e.g., for the selection of candidates).

For both classes, the surrogate model is a core element of the variation and selection process during optimization and essential for their performance. A perfect surrogate provides an excellent fit to observations, while ideally possessing superior interpolation and extrapolation abilities. However, the large number of available surrogate models differ significantly in their characteristics, advantages, and disadvantages. Model selection is thus a complicated and challenging task. If no domain knowledge is available, such as in real black-box optimization, it is often inevitable to test different surrogates for their applicability.

Common models are: linear, quadratic or polynomial regression, Gaussian processes (also known as Kriging) (Sacks et al. 1989; Forrester et al. 2008), regression trees (Breiman et al. 1984), artificial neural networks and radial basis function networks (Haykin 2004; Hornik et al. 1989) including deep learning networks (Collobert and Weston 2008; Hinton et al. 2006, 2012) and symbolic regression models (Augusto and Barbosa 2000; Flasch et al. 2010; McKay et al. 1995), which are usually optimized by genetic programming (Koza 1992).

Further, much effort in current studies is devoted to researching the benefits of model ensembles, which combine several distinct models (Goel et al. 2007; Müller and Shoemaker 2014; Friese et al. 2016). The goal is to create a sophisticated predictor that surpasses the performance of a single model. A well-known example is random forest regression (Freund and Schapire 1997), which uses bagging to fit a large number of decision trees (Breiman 2001). We regard ensemble modeling as the state of the art of current research, as it can combine the advantages of different models to generate outstanding results in both classification and regression. The drawback of these ensemble methodologies is that they are computationally expensive and pose a severe problem concerning efficient model selection, evaluation, and combination.

4.4.1 Surrogate-based algorithms

Surrogate-based algorithms explicitly utilize a global approximation surrogate in their optimization cycle by following the concept of efficient global optimization (EGO) (Jones et al. 1998) and Bayesian Optimization (BO) (Mockus 1974, 1994, 2012). They are either fixed algorithms designed around a specific model, such as Kriging, or algorithmic frameworks with a choice of possible surrogates and optimization methods, such as sequential parameter optimization (Bartz-Beielstein et al. 2005; Bartz-Beielstein 2010). Further well-known examples of continuous frameworks are the surrogate management framework (SMF) (Booker et al. 1999) and the surrogate modeling toolbox (SMT) (Bouhlel et al. 2019). Versions for discrete search spaces are mixed integer surrogate optimization (MISO) (Müller 2016) and efficient global optimization for combinatorial problems (CEGO) (Zaefferer et al. 2014). The basis for our descriptions of surrogate-based algorithms is mainly EGO, and it should be noted that the terminology of BO partly differs from the terminology used here.

A general surrogate-based algorithm can be described as follows (Cf. Sect. 2.3):

1. The initialization is done by sampling the objective function at k positions with \(\mathbf {y}_{i}=f(\mathbf {x}_i), 1 \le i \le k\) to generate a set of observations \({\mathscr {D}}_{t}=\{(\mathbf {x}_i,\mathbf {y}_i), 1 \le i \le k \}\). The sampling design plan is commonly selected according to the surrogate.

2. Selecting a suitable surrogate. The selection of the correct surrogate type can be a computationally demanding step in the optimization process, as often no prior information indicating the best type is available.

3. Constructing the surrogate \(s(\mathbf {x})\) using the observations.

4. Utilizing the surrogate \(s(\mathbf {x})\) to predict n new promising candidates \(\{\mathbf {x}^*_{1:n}\}\), e.g., by optimization of the infill function with a suitable algorithm. For example, it is reasonable to use algorithms that require a large number of evaluations, as the surrogate itself is (comparatively) very cheap to evaluate.

5. Evaluating the new candidates with the objective function \(y^*_i=f(\mathbf {x}^*_{i}), 1 \le i \le n\).

6. If the stopping criterion is not met: Updating the surrogate with the new observations \({\mathscr {D}}_{t+1} ={\mathscr {D}}_{t} \cup \{(\mathbf {x}^*_i, y^*_i),1 \le i \le n\}\), and repeating the optimization cycle (steps 4 to 6).
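The cycle above can be sketched in a few lines of Python. This is only an illustrative sketch under strong simplifications, not the EGO algorithm: the surrogate is an inverse-distance-weighted interpolator instead of Kriging, the infill function is a prediction minus a distance bonus (a crude stand-in for a confidence bound), and the inner optimizer is plain random search. All function names, the `sphere` test objective, and the parameters `k`, `budget`, `n_inner`, and `kappa` are hypothetical choices made for this example.

```python
import math
import random


def sphere(x):
    """Hypothetical stand-in for an expensive objective function f."""
    return sum(v * v for v in x)


def build_surrogate(data, power=2.0):
    """Step 3: fit s(x), here by inverse-distance-weighted interpolation."""
    def s(x):
        num = den = 0.0
        for xi, yi in data:
            d = math.dist(x, xi)
            if d < 1e-12:
                return yi  # reproduce observed values exactly
            w = d ** -power
            num += w * yi
            den += w
        return num / den
    return s


def infill(x, s, data, kappa):
    """Prediction minus a distance bonus that rewards unexplored regions."""
    nearest = min(math.dist(x, xi) for xi, _ in data)
    return s(x) - kappa * nearest


def surrogate_based_search(f, bounds, k=8, budget=30, n_inner=500,
                           kappa=1.0, seed=0):
    rng = random.Random(seed)

    def sample():
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    # Step 1: initial design (plain random sampling in this sketch).
    data = []
    for _ in range(k):
        x = sample()
        data.append((x, f(x)))
    # Step 2 is fixed here: the surrogate type is chosen in advance.
    while len(data) < budget:  # Step 6: stop when the budget is spent
        s = build_surrogate(data)  # Step 3, repeated as the model update
        # Step 4: cheap random search on the infill function (n = 1).
        candidates = [sample() for _ in range(n_inner)]
        x_new = min(candidates, key=lambda x: infill(x, s, data, kappa))
        data.append((x_new, f(x_new)))  # Step 5: one true evaluation
    best = min(data, key=lambda d: d[1])
    return best, data
```

A call such as `surrogate_based_search(sphere, [(-5.0, 5.0)] * 2)` spends 30 true evaluations in total, while each cycle queries the surrogate 500 times, which mirrors the cost asymmetry described in step 4.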

For the initialization, the model building requires a suitable sampling of the search space. The initial sampling has a significant impact on the performance and should be carefully selected. Thus, the initialization commonly uses candidates following different information criteria and suitable experimental designs. For example, it is common to build linear regression models with factorial designs and preferably couple Gaussian process models with space-filling designs, such as Latin hypercube sampling (Montgomery et al. 1984; Sacks et al. 1989).
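To illustrate what a space-filling design means in practice, the following is a minimal sketch of basic Latin hypercube sampling using only the Python standard library. The function name `latin_hypercube` and its parameters are assumptions made for this example; optimized variants (e.g., maximin designs) are not covered.

```python
import random


def latin_hypercube(k, bounds, seed=None):
    """Return k points; each dimension has exactly one point per stratum."""
    rng = random.Random(seed)
    columns = []
    for lo, hi in bounds:
        # One uniform draw inside each of the k equal-width strata of [0, 1).
        strata = [(i + rng.random()) / k for i in range(k)]
        rng.shuffle(strata)  # decouple the strata across dimensions
        columns.append([lo + u * (hi - lo) for u in strata])
    return [list(point) for point in zip(*columns)]
```

Compared with plain random sampling, every one-dimensional projection of the design covers the full range of that variable, which is the property that makes such designs attractive for fitting Gaussian process models with few observations.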

The generation has two aspects: the first is the choice of surrogate itself, as it is used to find a new candidate. The accuracy of a surrogate strongly relies on the selection of the correct model type to approximate the objective function. By selecting a particular surrogate, the user makes certain assumptions regarding the characteristics of the objective function, i.e., modality, continuity, and smoothness (Forrester and Keane 2009). Most surrogates are selected to provide continuous, low-modal, and smooth landscapes, which renders the optimization process computationally inexpensive and straightforward. The second aspect is the optimizer, which varies the candidates for the search on the surrogate and the approximated fitness landscape. As the surrogates are often fast to evaluate, exhaustive exact search strategies, such as branch and bound in EGO (Jones et al. 1998) or multi-start hill-climbers, are often utilized, but it is also common to use sophisticated population-based algorithms.

The surrogate prediction for the expected best solution is the basis of the selection of the next candidate solution. Instead of a simple mean fitness prediction, it is common to define an infill criterion or acquisition function. Typical choices include the probability of improvement (Kushner 1964), expected improvement (Jones et al. 1998) and confidence bounds (Cox and John 1997). Expected improvement is a common infill criterion because it balances exploration and exploitation by utilizing both the predicted mean value of the model and the model uncertainty. The optimization of this infill criterion then selects the candidate. Typically, the algorithm selects only a single candidate per iteration for evaluation and the model update. Multi-infill selection strategies are also possible.
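The three infill criteria named above can be written compactly when the surrogate returns a Gaussian prediction. The sketch below assumes minimization, a predicted mean `mean`, a predicted standard deviation `std`, and the best observed value `y_best`; these names and the default `kappa` are illustrative assumptions, not notation from the cited works.

```python
import math


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def probability_of_improvement(mean, std, y_best):
    """Probability that the prediction falls below the best observed value."""
    if std <= 0.0:
        return 1.0 if mean < y_best else 0.0
    return normal_cdf((y_best - mean) / std)


def expected_improvement(mean, std, y_best):
    """Expected amount by which the prediction improves on y_best."""
    if std <= 0.0:
        return max(y_best - mean, 0.0)
    z = (y_best - mean) / std
    # First term rewards a low predicted mean (exploitation),
    # second term rewards high model uncertainty (exploration).
    return (y_best - mean) * normal_cdf(z) + std * normal_pdf(z)


def lower_confidence_bound(mean, std, kappa=2.0):
    """Optimistic estimate for minimization; smaller values are preferred."""
    return mean - kappa * std
```

The two terms of `expected_improvement` make the balance explicit: a candidate can score well either because its predicted mean is low or because the model is uncertain about it.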

Surrogate-based algorithms include a large number of control elements, starting with the necessary components of such an algorithm, including the initialization strategy and the choice of surrogate and optimizer. In particular, the infill criterion, as part of the selection strategy, has an enormous impact on the performance. Even for a fixed algorithm, the amount of (required) control is extensive. The most important control elements are the model parameters of the surrogate.

4.4.2 Surrogate-assisted algorithms

Surrogate-assisted algorithms utilize a search strategy similar to the population class, but employ a surrogate, particularly in the selection step, to preselect candidate solutions based on their approximated fitness and assist the evolutionary search strategy (Ong et al. 2005; Jin 2005; Emmerich et al. 2006; Lim et al. 2010; Loshchilov et al. 2012). Commonly, only parts of the new candidates are preselected utilizing the surrogate, while another part follows a direct selection and evaluation process. The generation and selection of a new candidate are thus not based on an optimization of the surrogate landscape, which is the main difference to the surrogate-based algorithms. The surrogate can be built on the archive of all solutions, or locally on the current solution candidates. An overview of surrogate-assisted optimization is given by Jin (2011), including several examples for real-world applications, or by Haftka et al. (2016) and Rehbach et al. (2018), with focus on parallelization.
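The preselection idea can be sketched as a simple evolution strategy in which a cheap model ranks the offspring and only the most promising ones are evaluated on the true objective. The sketch is an assumption-laden illustration and not one of the cited algorithms: the surrogate is a k-nearest-neighbor average over the archive, all offspring pass through preselection (no directly evaluated part), and every name and parameter is hypothetical.

```python
import math
import random


def knn_predict(x, archive, k=3):
    """Approximate fitness: mean of the k nearest evaluated solutions."""
    nearest = sorted(archive, key=lambda e: math.dist(x, e[0]))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def surrogate_assisted_es(f, dim, mu=5, lam=30, n_eval=6, generations=20,
                          sigma=0.3, seed=1):
    rng = random.Random(seed)
    pop = []
    for _ in range(mu):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        pop.append((x, f(x)))
    archive = list(pop)  # all truly evaluated solutions
    for _ in range(generations):
        # Generation: Gaussian mutation of randomly chosen parents.
        offspring = []
        for _ in range(lam):
            parent, _y = rng.choice(pop)
            offspring.append([v + rng.gauss(0.0, sigma) for v in parent])
        # Preselection: rank all offspring on the cheap surrogate only.
        offspring.sort(key=lambda x: knn_predict(x, archive))
        # Only the most promising offspring see the true objective.
        evaluated = [(x, f(x)) for x in offspring[:n_eval]]
        archive.extend(evaluated)
        # Elitist (mu + n_eval) selection on true fitness values.
        pop = sorted(pop + evaluated, key=lambda e: e[1])[:mu]
    return pop[0], len(archive)
```

Note that the surrogate is never optimized itself; it only filters candidates produced by the evolutionary variation. With the defaults, 600 offspring are generated but only 125 true evaluations are spent.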

4.5 Hybrid class: “The Chimera”

Intuitive Description 5

(The Chimera) A chimera is an individual, which is a mixture, composition, or crossover of other individuals. It is an entirely new being formed out of original parts from existing species and utilizes their strengths to be versatile.

We describe the explicit combination of algorithms or their components as the hybrid class. Hybrid algorithms utilize existing components and concepts, which have their origin in an algorithm from one of the other classes, in new combinations. They are particularly present in current research regarding the automatic composition or optimization of algorithms to solve a certain problem or a problem class. Hyperheuristic algorithms also belong to this class. Overviews of hybrid algorithms were presented by Talbi (2002), Blum et al. (2011) and Burke et al. (2013). There are two kinds of hybrid algorithms:

1. Predetermined Hybrids (Sect. 4.5.1) have a fixed algorithm design, which is composed of certain algorithms or their components.

2. Automated Hybrids (Sect. 4.5.2) use optimization or machine learning to search for an optimal algorithm design or composition.

The hybrid class contains a large number of algorithms, and it can be difficult to draw a distinction to a certain class. However, the predetermined hybrids in particular can additionally be described by their main components, so that their origin remains clear, e.g., an evolutionary algorithm coupled with simulated annealing could be defined as a population-trajectory hybrid. For automated hybrids, this definition is no longer possible, as they couple a large number of different components and the algorithm's structure is also part of their search, so the final algorithm structure can differ for each problem.

4.5.1 Predetermined hybrid algorithms

The search strategies of this class tackle the weaknesses of algorithms or amplify their strengths. The algorithms are often given distinctive roles of exploration and exploitation, as they are combinations of an explorative global search method paired with a local search algorithm. For example, population-based algorithms with remarkable exploration abilities are paired with local algorithms with fast convergence. This approach gives some benefits, as the combined algorithms can be adapted or tuned to fulfill their distinct tasks. Also well known are memetic algorithms, as defined by Moscato et al. (1989), which are a class of search methods that combine population-based algorithms with local hill-climbers. An extensive overview of memetic algorithms is given by Molina et al. (2010). They describe how different hybrid algorithms can be constructed by looking at suitable local search algorithms with particular regard to their convergence abilities.
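The division of roles in a memetic algorithm can be illustrated with a short sketch: a population-based step explores through crossover and large mutations, and a hill-climber refines each child locally. This is a generic illustration under assumed operators and parameters (uniform crossover, Gaussian mutation, elitist survivor selection), not the specific scheme of the cited authors.

```python
import random


def hill_climb(f, x, y, rng, steps=20, step_size=0.1):
    """Local refinement: accept a perturbed point only if it improves."""
    for _ in range(steps):
        cand = [v + rng.gauss(0.0, step_size) for v in x]
        y_cand = f(cand)
        if y_cand < y:
            x, y = cand, y_cand
    return x, y


def memetic_search(f, dim, pop_size=10, generations=15, seed=2):
    rng = random.Random(seed)
    pop = []
    for _ in range(pop_size):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        pop.append((x, f(x)))
    for _ in range(generations):
        children = []
        for _ in range(pop_size):
            # Global exploration: uniform crossover plus a large mutation.
            (a, _ya), (b, _yb) = rng.sample(pop, 2)
            child = [rng.choice(pair) + rng.gauss(0.0, 1.0)
                     for pair in zip(a, b)]
            # Local exploitation: refine each child with the hill-climber.
            children.append(hill_climb(f, child, f(child), rng))
        # Elitist survivor selection over parents and refined children.
        pop = sorted(pop + children, key=lambda e: e[1])[:pop_size]
    return pop[0]
```

The mutation width of the global step and the step size of the hill-climber can be tuned separately, which reflects the benefit of distinct roles mentioned above.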

4.5.2 Automated hybrid algorithms

Automated hybrids are a special kind of algorithm that does not use predetermined search strategies, but a pool of different algorithms or algorithm components from which the optimal strategy can be composed (Lindauer et al. 2015; Bezerra et al. 2014). Hyperheuristics belong to this class, particularly those that generate new heuristics (Burke et al. 2010; Martin and Tauritz 2013). Automated algorithm selection tries to find the most suitable algorithm for a specific problem based on machine learning and problem information, such as exploratory landscape analysis (Kerschke and Trautmann 2019). Instead of selecting individual algorithms, other approaches select different types of operators for, e.g., generation or selection, to automatically compose a new, best-performing algorithm for a particular problem (Richter and Tauritz 2018). Similar to our defined elements, search operators or components of algorithms are identified, extracted, and then combined again into a new search strategy.
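A toy sketch can make the idea of composing an algorithm from a component pool concrete. The pool below holds two generation operators and two selection operators, and the upper level simply enumerates all pairings and keeps the one with the best average result on the given problem. Exhaustive enumeration is a simplification chosen for this example; it stands in for the optimization or learning methods used by real automated hybrids, and all operator names and parameters are hypothetical.

```python
import itertools
import random


def gaussian_step(x, rng):
    return [v + rng.gauss(0.0, 0.5) for v in x]


def uniform_step(x, rng):
    return [v + rng.uniform(-0.5, 0.5) for v in x]


def greedy_accept(y_new, y_old, rng):
    return y_new < y_old


def noisy_accept(y_new, y_old, rng):
    # Occasionally accepts a worse candidate to escape local optima.
    return y_new < y_old or rng.random() < 0.05


GENERATORS = {"gaussian": gaussian_step, "uniform": uniform_step}
SELECTORS = {"greedy": greedy_accept, "noisy": noisy_accept}


def run_composition(f, generate, select, dim=2, budget=200, seed=0):
    """Run one composed search strategy and return its best found value."""
    rng = random.Random(seed)
    x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
    y = best = f(x)
    for _ in range(budget - 1):
        cand = generate(x, rng)
        y_cand = f(cand)
        best = min(best, y_cand)
        if select(y_cand, y, rng):
            x, y = cand, y_cand
    return best


def compose_best(f, seeds=(0, 1, 2, 3, 4)):
    """Upper-level search: score every operator pairing on problem f."""
    scores = {}
    for (g_name, g), (s_name, s) in itertools.product(
            GENERATORS.items(), SELECTORS.items()):
        runs = [run_composition(f, g, s, seed=sd) for sd in seeds]
        scores[(g_name, s_name)] = sum(runs) / len(runs)
    return min(scores, key=scores.get), scores
```

The returned pairing depends on the problem `f`, which reflects why the final structure of an automated hybrid can differ from problem to problem.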

Generation, variation, and selection focus in this class on algorithm components, instead of candidate solutions. The search strategies on this upper level are similar to the presented algorithms; hence, for example, evolutionary or Bayesian techniques are common (Guo 2003; van Rijn et al. 2017).

4.6 Taxonomy: summary and examples

Figure 3 illustrates an overview of all classes and connected features. It outlines initialization, generation, selection, and control of the individual components for each class. Some algorithm features are distinct and define their class, while others are shared. The figure shows the strong relationship between the algorithm classes; for example, the hill-climbing and trajectory class share similar characteristics. The algorithms of these classes are similar in their search strategy and build upon each other. The presented scheme is intended to cover most concepts. However, available algorithms can also have characteristics of different classes and may not fit the presented scheme. For example, some components of the more complex classes can also be utilized in the less complex classes, e.g., self-adaptive control schemes also apply to hill-climbers, but are typically found in the population class. Table 1 describes examples for each of the defined algorithm classes and outlines their specific features. Again, the table is not intended to present a complete overview; instead, for each class and subclass, at least one example is given to present the working scheme. Other algorithms can be easily classified utilizing the scheme presented in Fig. 3.

5 Algorithm selection guideline

The selection of the best-suited algorithm poses a common problem for practitioners, even experienced ones. In general, it is nearly impossible to predict the performance of any algorithm for a new, unknown problem. When confronted with a new optimization problem, we thus recommend first gathering all available information about the problem. The features of a problem can be an excellent guideline to select at least an adequate algorithm class, within which the users’ choice and experience can determine a concrete implementation.

Our guideline is strongly connected to the idea of exploratory landscape analysis (ELA) (Mersmann et al. 2011), which aims to derive problem features with the final goal of relating those features to suitable algorithm classes. For example, these features include information about convexity, multi-modality, the global structure of the problem, problem separability, and variable scaling. ELA’s final goal is to provide the best-suited algorithms for previously unknown optimization problems based on the derived landscape features. This goal requires rigorous experimentation and benchmarking to match algorithms or algorithm classes to the landscape features (Kerschke and Trautmann 2019). As this information is not yet available, we extracted a small, high-level set of these features for our guideline, considering mainly the multi-modality and special function properties, such as being expensive to evaluate.

Moreover, our selection is based on the available resources, both in terms of available evaluations and computational resources. To help with the selection, we developed a small decision graph which builds upon these significant features. The provided guideline is experience-based and utilizes basic concepts; it is not intended to serve as an absolute policy, but as a first recommendation when a new problem is considered. The graph is outlined in Fig. 4.

A hill-climbing algorithm is primarily suitable for unimodal functions, for exploiting local optima, or for cases where gradient information can be derived from the objective function. Gradient-based algorithms are incredibly successful in optimizing large-scale optimization problems, such as those frequently found in AI. However, they always have a high risk of getting stuck in local optima. Hill-climbers can also be applied for global optimization of multimodal landscapes if an adequate multi-start strategy is employed. These multi-start strategies typically demand a high number of function evaluations and are most reasonable if objective functions are not expensive.
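The cost of a multi-start strategy becomes visible in a small sketch. Below, a stochastic hill-climber is restarted from random points on the Rastrigin function, a common multimodal test function chosen here only for illustration. The step size, number of steps, and number of restarts are assumed values, not recommendations.

```python
import math
import random


def rastrigin(x):
    """Common multimodal test function; global minimum 0 at the origin."""
    return 10.0 * len(x) + sum(
        v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


def hill_climb(f, x, rng, steps=200, step_size=0.1):
    """Accept a perturbed point only if it improves the objective."""
    y = f(x)
    for _ in range(steps):
        cand = [v + rng.gauss(0.0, step_size) for v in x]
        y_cand = f(cand)
        if y_cand < y:
            x, y = cand, y_cand
    return x, y


def multi_start(f, bounds, restarts=20, seed=4):
    """Run independent hill-climbers from random starts; keep the best."""
    rng = random.Random(seed)
    results = []
    for _ in range(restarts):
        start = [rng.uniform(lo, hi) for lo, hi in bounds]
        results.append(hill_climb(f, start, rng))
    return min(results, key=lambda r: r[1]), results
```

With these defaults, a single call uses 20 × 201 = 4020 function evaluations, and individual restarts will often end in different local optima. This is acceptable for a cheap function, but it quickly becomes prohibitive when each evaluation is expensive.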

Exploring trajectory algorithms are suitable for searches in unimodal and multimodal problems. As they do not rely on stored information of former iterations during their search, they are also an excellent choice to handle dynamic objective functions (Carson and Maria 1997; Corana et al. 1987; Faber et al. 2005). However, their rather simplistic utilization of global information renders them inefficient for challenging and expensive optimization problems. Moreover, the control parameters have a significant effect on the performance of these algorithms. We thus advise tuning them in an offline or online fashion.

The central concept of systematic trajectory algorithms is to use the information of evaluated solutions and to direct the search to formerly unknown regions to avoid early convergence to a non-global optimum. The strategic use of sub-spaces allows precise control of exploration and exploitation and mainly ensures a high level of exploration. They include a large number of parameters, such as the number or size of sub-spaces, which makes them very vulnerable to incorrect setups and less suitable as universal solvers. If correctly tuned, algorithms from this class are suitable and efficient for multimodal problems. Algorithms using a pre-defined separation of the search space, such as VNS, can utilize domain knowledge for the initial definition of the sub-spaces. This pre-defined separation renders them useful for problems where the region of the global optimum is roughly known, but not particularly suitable for black-box problems.