Abstract

Mirror and glass are ubiquitous materials in the 3D indoor living environment. However, the existing vision system always tends to neglect or misdiagnose them since they always perform the special visual feature of reflectivity or transparency, which causes severe consequences, i.e., a robot or drone may crash into a glass wall or be wrongly positioned by the reflections in mirrors, or wireless signals with high frequency may be influenced by these high-reflective materials. The exploration of segmenting mirrors and glass in static images has garnered notable research interest in recent years. However, accurately segmenting mirrors and glass within dynamic scenes remains a formidable challenge, primarily due to the lack of a high-quality dataset and effective methodologies. To accurately segment the mirror and glass regions in videos, this paper proposes key points trajectory and multi-level depth distinction to improve the segmentation quality of mirror and glass regions that are generated by any existing segmentation model. Firstly, key points trajectory is used to extract the special motion feature of reflection in the mirror and glass region. And the distinction in trajectory is used to remove wrong segmentation. Secondly, a multi-level depth map is generated for region and edge segmentation which contributes to the accuracy improvement. Further, an original dataset for video mirror and glass segmentation (MAGD) is constructed, which contains 9,960 images from 36 videos with corresponding manually annotated masks. Extensive experiments demonstrate that the proposed method consistently reduces the segmentation errors generated from various state-of-the-art models and reach the highest successful rate at 0.969, mIoU (mean Intersection over Union) at 0.852, and mPA (mean Pixel Accuracy) at 0.950, which is around 40% - 50% higher on average on an original video mirror and glass dataset.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Mirrors are primarily known for their reflective properties, with a smooth reflective surface on one side of the glass, while glass, in general, is known for its transparency and versatile uses in various forms, e.g., big dressing mirror, glass window/door, glass table and so on. Such mirrors and glasses always have a critical impact on the existing vision systems and would heavily affect intelligent decisions such as robot navigation, drone tracking, 3D reconstruction and signal transmission. For example, unmanned aerial vehicles (UAVs) often face challenges in avoiding collisions with glass surfaces due to their inability to recognize glass [1]. Similarly, indoor robots may encounter navigation errors and failures in position estimation when they misinterpret objects within mirrors [2, 3] or glass [4]. Besides, reflections in mirrors and glass will cause wrong reconstruction results of the 3D world [5, 6]. In pose estimation [7, 8], the reflection of the mirror provides an additional view of the human body and solves the depth ambiguity problem of the monocular camera [9]. Additionally, high-reflectivity materials such as glass and mirrors can also pose obstacles to the transmission of 5G and 6G signals [10]. Mirror and glass segmentation from single images [11, 12] are unable to directly apply for complex tasks such as robot navigation and 3D reconstruction which needs multiple information from videos. Existing video segmentation for action recognition works [13, 14] deal with general motions well, but they failed in the case with reflective motions in mirror and glass. In order to meet these needs, it is vital to segment mirror and glass first to avoid errors from reflections. Hence, it is essential for vision systems to be able to detect and segment the mirror and glass from input video sequences.

Using some special equipment, e.g., laser sensor [15, 16], ultrasonic sensor [17], polarization camera [18], thermal camera [19], light-field camera [20], depth camera [21, 22] to segment mirror and glass materials is considered in recent years. Although the laser and ultrasonic data are very intuitive for mirror and glass segmentation, these special types of equipment are always expensive and sensitive, sometimes inconvenient to set up in an indoor environment. If the environment is too complex, the laser or ultrasonic wave reflects many times or is absorbed by some special materials so that the equipment is unable to receive the information of the wave, the accuracy becomes dramatically down [23]. Previous special camera based methods always require additional input data such as polarization image, thermal image, light-field image and depth image. These extra inputs increase both the cost and the complex processes. Within the existing body of research for vision-based systems, there is a notable absence of methodologies dedicated to the video-based segmentation of glass and mirrors. While image-based segmentation techniques for glass and mirrors exist [12, 24, 25], their direct application to video sequences yields unsatisfactory results, making them impractical for real-world applications. Furthermore, conventional video object segmentation (VOS) methods without suitable video mirror and glass dataset fail to consider the unique motion characteristics associated with high-reflectivity materials like glass and mirrors [26,27,28,29,30,31]. Due to lack of video mirror and glass dataset and no consideration on reflective features, general deep-learning based VOS methods always tend to segment the reflections in mirrors or glass as real objects, resulting in inaccurate segmentation [32,33,34,35,36]. Recently, Wang et al. [37] uses ORB-SLAM3 [38] to extract the key points trajectories to segment the reflective regions, however, it fails at the regions that are lack of enough stable feature points.

In response to these limitations, this work proposes extra motion features of reflective textures and depth information as a post-processing mechanism to reduce the segmentation errors generated from existing models. To refine segmentation errors generated from existing models, special motion features which represent the converse motions of reflective textures are extra applied to remove the wrong segmentation on other objects. Different from the existing image-based deep-learning methods, the proposed method uses videos as input and it is suitable for any indoor environment without complex training. To decrease the wrong results from the wrong key points, the distinction between the predicted frame and real frame is extracted. It uses all pixels and calculated camera pose to find the mirror and glass region with reflections and refine the wrong results on general objects. Besides adding the motion features to refine the wrong results, a multi-level depth distinction based method is proposed to improve the segmentation quality on the boundary of the mirror and glass region for further accurate results. With the depth information as the prior information, the glass which segment the indoor and outdoor scenes without clear reflective textures is also predicted to improve the segmentation result quality. Moreover, addressing the scarcity of dedicated video datasets for glass and mirror segmentation, this work presents an entirely new video dataset to support future research in this domain. In particular, the following contributions are made:

-

This work proposes the first video-based refinement framework for mirror and glass segmentation, which fill the empty of mirror and glass segmentation in video processing field.

-

This work proposes a key points trajectory distinction based module to refine the wrong segmentation results from any other image-based mirror and glass segmentation model or video-based general object segmentation model. A multi-level depth map based module is proposed to refine the wrong region segmentation result.

-

A new mirror and glass segmentation dataset (MAGD) with mirror and glass videos in diverse indoor scenes and corresponding manually labeled masks is constructed. By comparing with state-of-the-art models, superior mirror and glass segmentation results are achieved on this original dataset. It reaches the highest successful rate at 0.969, mIoU at 0.852, and mPA at 0.950, which is around 40% - 50% higher on average.

2 Related works

2.1 Image-based mirror and glass segmentation

Directly applying image-based mirror and glass segmentation methods in video sequences is very challenging because of the unfixed textures in mirror and glass regions which make it difficult to keep accuracy in the whole video. Mei et al. [39] extracts the contextual contrasted feature to detect the mirror region in images. Lin et al. [40] uses the reflection information as prior information to help glass segmentation. However, they all fail in complex scenes. MirrorNet [11] is a mirror segmentation network based on a deep learning method that extracts both high-level and low-level features in a single image to detect the discontinuity of mirror boundary. Lin et al. [41] proposed a progressive method which contains relational contextual contrasted local module for extracting and comparing mirror and contextual features for correspondence, and edge detection and fusion module for extracting multi-scale mirror edge features. GDNet [12] is a glass segmentation network, in which multiple well-designed large-field contextual feature integration modules are embedded to harvest abundant low-level and high-level contexts from a large receptive field, for accurately detecting glass of different sizes in various scenes. Lin et al. [40] proposed a rich context aggregation module to extract multi-scale boundary features and designed a refinement module for reflection detection. But it always fails in non-reflection regions. He et al. [25] proposed an enhanced boundary learning module that focuses on the boundary segmentation of glass-like objects to refine the results with edge errors. Recently, a progressive glass segmentation network [42] has been proposed to aggregate features from high-level to low-level to pay more attention to edge segmentation of glass regions, resulting in higher accuracy. Lin et al. [43] exploited the semantic relation to infer the correlation between glass objects and other objects for glass surface detection. However, these methods are all based on images, which perform unsatisfactory results in video sequences.

2.2 Video object segmentation

Yuan et al. [29] aims to automatically segment the salient objects from videos without any manual prompt. It proposes a context-sharing transformer and semantic gathering-scattering transformer to model the contextual dependencies from different levels. However, not all glass or mirror objects are salient. Cho et al. [28] designs a novel motion-as-option network that treats motion cues in videos as an optional part for accurate salient object segmentation to decrease the influence of unreliable motion. Pei et al. [26] proposes a concise and practical architecture to align appearance and motion features in order to solve the nonaligned problems of optical flows among consecutive frames. Schmidt et al. [27] develops a novel operator that is used as drop-in replacements for standard convolutions in 3D CNNs to improve the performance on video segmentation tasks. Song et al. [44] propose a fast video salient object detection model, based on a novel recurrent network architecture to extract multi-scale spatio-temporal information for more accurate video object segmentation. Siam et al. [45] propose a novel teacher-student learning paradigm to segment the objects in both static or dynamic environment. Tan et al. [30] propose a stepwise attention emphasis transformer for polyp segmentation, which combines convolutional layers with a transformer encoder to enhance both global and local feature extraction. Miao et al. [31] utilizes a fast object motion tracker to predict regions of interest (ROIs) for the next frame, and proposes motion path memory to filter out redundant context by memorizing features within the motion path of objects between two frames. GFA [46] significantly improves the generalization capability of video object segmentation models by addressing both scene and semantic shifts, by leveraging frequency domain transformations and online feature updates. DeVos [47] introduces an architecture that combines memory-based matching with motion-guided propagation, resulting in robust matching under challenging conditions and strong temporal consistency. Wang et al. [34] propose dynamic visual attention prediction in spatio-temporal domain, and attention-guided object segmentation in spatial domain to focus on the consistency of visual attention behavior for low-cost segmentation. COSNet [48] emphasize the importance of inherent correlation among video frames and incorporate a global co-attention mechanism to improve segmentation results. ADNet [36] proposes a long-term segmentation model based on temporal dependencies to solve the accumulating inaccuracies in short-term segmentation. AGNN [35] builds a fully connected graph to efficiently pass parametric message between frames for more accurate foreground estimation. MATNet [49] presents a Motion-Attentive Transition Network for zero-shot video object segmentation, which provides a new way of leveraging motion information to reinforce spatio-temporal object representation. WCSNet [50] employs a Weighted Correlation Block (WCB) for encoding the pixel-wise correspondence between a template frame and the search frame for detecting and tracking salient objects. DFNet [51] captures the inherent correlation among video frames to learn discriminative features for unsupervised video object segmentation task. 3DC-Seg [52] applies 3D CNNs to dense video prediction tasks. F2Net [53] delves into the intra-inter frame details for the foreground objects segmentation. RTNet [54] proposes a reciprocal transformation network to discover primary objects by correlating the intra-frame contrast, the motion cues, and temporal coherence of recurring objects. Although Perazzi [55] proposed a benchmark dataset for VOS task, there are no clear categories for mirror and glass.

Directly using the above VOS approaches for mirror and glass segmentation (i.e., regarding mirror and glass as one of the object categories) is inappropriate when some real objects are reflected in the mirror or glass regions. On the one hand, due to the special motion features of the mirror and glass which have the converse movement from other general objects in the background, straightly using these models causes the wrong segmentation results in mirror and glass regions. On the other hand, due to lack of the video mirror and glass dataset, it is unable to relevantly train the network for specific mirror and glass segmentation by applying above VOS works. Wang et al. [37] uses key points trajectories and motion features of reflections to segment the mirror and glass in videos, however, it always fails when the reflection is not clear or no reflection in some glass such as windows connect the dark indoor and bright outdoor scene. This paper aims to address the video mirror and glass segmentation problem by constructing a benchmark dataset and proposing an effective refinement method for the problem.

3 Methodology

3.1 Framework formulation

Figure 1 shows the framework of the whole mirror and glass segmentation algorithm and the key points trajectory distinction (KPTD), and multi-level depth distinction (MLDD) module. Given a video \(\textbf{V}\), the goal of this work is to improve the accuracy of mirror and glass segmentation results \(\textbf{M}\) from related works, i.e.

where \(\mathbf {M_{ r }}\), \(\mathbf {M_{ t }}\), and \(\mathbf {M_{ d }}\) represents the mask result from other related works, KPTD and MLDD, respectively. In details, \(\mathbf {M_{ r }}\), \(\mathbf {M_{ t }}\), and \(\mathbf {M_{ d }}\) involve \(\textrm{F}\) frames with the resolution of width \(\textrm{w}\) and height \(\textrm{h}\). \(\rho \) and \(\omega \) are the functions simply representing for the processing on video and masks generated by different procedures to be introduced below.

Overall framework. First, the key points distinction module uses the trajectory information of every key point to predict the candidate mirror or glass region. Second, region distinction module calculated from camera pose is used to remove the wrong candidate regions wrong from the previous module. Meanwhile, multi-level depth map is used to extract the depth distinction region to provide candidate mirror or glass regions. Finally, all candidate regions are combined to refine the error segmentation results from related works to improve the accuracy

The input of the framework is the video sequence. We first use a state-of-the-art Simultaneously Location And Mapping (SLAM) method [38] to extract the trajectories of key points. Then based on the distinctive features of the key points, distinction of trajectories can be used to coarsely segment the possible mirror and glass region. Some wrong key points can be refined by reflective region distinction predicted by camera poses. Meanwhile, multi-level depths extracted from video sequences are used to detect the sudden depth change, which can be the candidate of the mirror an glass regions since they have the depth discontinuity distinction from the surroundings.

3.2 Key points trajectory distinction

In this module, our method highly relies on feature points. Consequently, if the texture of the reflection is too weak, (i.e., a white wall or a texture-less object appearing in the mirror and glass region, or if the drift problem occurs during extremely long-term tracking [56]), the accuracy significantly decreases. Nevertheless, considering typical living environments where textures are abundant in most scenarios, and since our focus does not encompass very long-term tracking and segmentation, ORB-SLAM3 [38] which performs the state-of-the-art efficiency and accuracy is utilized for key point trajectory extraction.

Based on the extracted rough key points results, a wrong points correction step corrects the wrong points to increase the reliability. Combining the detected key points which have different motions from others and boundaries extracted from input videos by using a simple sobel edge extraction algorithm [57], the possible reflective mirror and glass regions are extracted. The concept of key points trajectory distinction is shown in Fig. 2.

In detail, define \(x_{n1}\) and \(x_{n2}\) as the normalized coordinates of the two key points pixels in their respective camera coordinates at different times. The relation between \(x_{n1}\) and \(x_{n2}\) is defined as follows:

where \(\textbf{R}\) is the rotation matrix and \(\textbf{t}\) is the translation matrix. Meanwhile, \(\textbf{K}\) is defined as the camera’s internal parameters from the camera calibration. According to the pinhole model, the pixel coordinates of the two pixel points \(p_1\) and \(p_2\) are:

By knowing the camera’s internal parameters, the trajectory of every key point can be calculated from the rotation matrix and translation matrix, and the camera motion direction is defined as \(\textbf{V}\). Knowing the trajectory of every key point and the motion direction of camera. We classify key points into positive and negative by observing if the key point has the same motion direction as the camera or not. Combining (2) and (3), which uses the trajectory and boundary information, the candidate mirror and glass region is extracted by the KPTD module as:

where \(\mathbf {M_{ k } }\) means the mask generated from the KPTD module. \(\left( \left( \textbf{R};\textbf{t} \right) \cdot \textbf{V} > 0? \quad 1:0\right) \) denotes that if \(\textbf{R}\) and \(\textbf{t}\) have the same motion direction with the camera motion direction \(\textbf{V}\), the key point is marked as reflective point and it will be segmented as 1. Since it highly relies on the accuracy of extracted key points, it is necessary to check the reliability of the key points. Figure 3 shows the workflow of the wrong key points correction. The feature points always appear grouping spatial features, so using the local correlation algorithm [58] to correct the wrong outer points and temporal features to correct the wrong grouping points proves reasonable.

After the wrong points correction, the target region is extracted by graph cut [59] method. For example, by using the spatial feature of the key points, some key points are separated from the key points which are classified as the same category. It is plain to see that glass or mirrors will never be separated into several pieces, which means that they never perform separation in the spatial space. Hence, by calculating the constraints between different key points, if some key points are surrounded by key points that are classified to another category, it means that the surrounded key points are wrong key points that need correction. An improved K-NN method [60] is used to find the nearest 20 neighbor points for correction. Sometimes, the key points perform wrong in groups, which means that many key points are wrong and they are unable to be corrected by the spatial feature. The trajectory of the key points which is also called the temporal feature of these key points is utilized to correct the key points. Key points of one object never suddenly change to other categories in several adjacent frames when the camera moves slowly. So if some key points are different from the previous frames and the next frames in the same trajectory, it is obvious that these key points are wrong key points. By using both temporal features and spatial features to correct the wrong key points that are extracted by the SLAM system, the accuracy of the mirror and glass region segmentation results is improved.

But some errors occur when the key points are extracted inaccurately. To overcome this wrong case, camera poses calculated by key points trajectories are utilized to increase the accuracy of mirror and glass segmentation results.

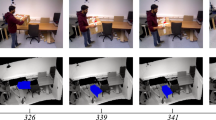

By comparing the predicted frame with the real frame, the target region with reflection is detected as Fig. 4 shows.

The predicted frame \(\mathrm {I_{t} ^{p} } \) is calculated as follows:

where \(\mathrm {I_{t-1} ^{r} } \) represents real previous frame, \(\textrm{C}\) denotes the camera pose and \(\varrho \) denotes the frame moving prediction function. Then by analyzing the distinction between the real next frame \(\mathrm {I_{t} ^{r} }\) captured by the camera with the predicted frame \(\mathrm {I_{t} ^{p} } \) calculated from (5), the different region is the reflective mirror or glass region as follows:

where \( \left( \left| \mathrm {I_{t}^{p}} - \mathrm {I_{t}^{r}} \right| > 0 ? \quad 1:0 \right) \) represents the distinction between the predicted frame from the real frame. If the result is not 0, it means that the reflection region exists, which will be marked as 1.

In detail, for example, assuming there is an object which is the other material and it is on the same plain beside the reflective mirror or glass, which means they are supposed to have the same motion features. When the camera moves to the right, the reflection in the mirror region and the other non-reflective material is supposed to move to the left relatively in the frames taken by the camera. By using the information calculated above, the next frame is able to be predicted. However, there is always the special motion of the reflection. As the example shows, when the camera moves to the right, the reflection and the other material are supposed to move to the same direction. But in fact, the reflection has an adverse motion to the other material, which contributes to the wrong prediction result of the next frame. Since the key points of the reflection are not enough for KPTD to segment the reflective regions, it is also impossible for SLAM to detect the abnormal motion in the reflective regions. So the final predicted frame has a different region compared to the real frame taken by the camera. By using the distinction between the predicted frame and the real frame, the reflective region is extracted from the comparison as Fig. 5 shows.

3.3 Multi-level depth distinction

Key points trajectory distinction highly relies on the reflection in the mirror and glass region. But in the general living environment, not all reflections are clear and stable in videos. However, we notice that depth inside of the mirror and glass and outside of the mirror and glass region always perform the depth distinction feature with multiple levels, which can be used to segment the sudden depth changing regions and boundaries. Figure 6 shows the workflow of the multi-level depth distinction.

We denote there are \(\textit{n}\) levels depth regions \(D_\textit{n}\) in current frame. Knowing the relative depth of every pixel and the camera location, the candidate mirror and glass region with sudden depth change is detected as \(\mathbf {M_{ d }}\).

In detail, first, the video sequence is inputted into the SLAM. After the depth calculation of different key points from SLAM, both 3D point cloud and camera poses are generated. With the camera poses from SLAM, locations of camera views are known as prior information. Based on the distance between point clouds and locations of camera views, a K-means cluster method [61] is used to cluster the inside feature points and outside feature points. Second, a multi-level depth map is generated by a depth map prediction method SC-Depth [62]. SC-Depth enables the scale-consistent prediction at inference time, which focuses on the depth consistency between adjacent views and can automatically deal with the moving object in videos. Unless no surroundings around the mirror or glass, nor no key points inside the mirror or glass region, the depth map will show the depth distinction around the boundary of mirror and glass. After obtaining the multi-level depth map, we project the clustered point cloud to the depth map for refinement. Since point cloud with depth information can guide the depth change around boundary and the multi-level depth map shows the pixel-level depth distinction. By fusing the results of the point cloud map and the pixel-level depth map, the final depth map is generated to guide the refinement step. The mirror or glass region has a sudden change in depth compared with the adjacent regions, and the most sudden depth difference is denoted as:

where, \(\textit{i}\) and \(\textit{j}\) denote the adjacent frames in the input video and \(D_i\), \(D_j\) denote the depth of the adjacent pixels. We define the deeper region as the higher value. Combining the (7), the candidate mirror and glass region \(\mathbf {M_{ d }}\) is:

After getting all outputted mask \(\mathbf {M_{ r }}\), \(\mathbf {M_{ t }}\), \(\mathbf {M_{ d }}\) from (4), (6), (8) and (1), we fuse them as follows:

At least two of them regard the region as a candidate mirror and glass region, the region will be segmented successfully to remove the errors in some outputs and improve the final accuracy.

4 Experiment

4.1 Dataset

The performance of the proposed method is evaluated by an original mirror and glass segmentation dataset (MAGD) including 36 video sequences which contain 9,960 frames in the most common indoor environment with 3840 \(\times \) 2160 resolution, then they are resized to 1280 \(\times \) 720 resolution for implementation, and the pixel-level mirror and glass masks are labeled manually. Considering that most videos in real life are at 30 fps, 60 fps, etc., and that 60 fps can capture more frames and information at same time, we use 30 fps as the dataset standard and consider the experiment more challenging. The length of these video sequences varies from 130 frames to 600 frames. To the best of our knowledge, MAGD is the first indoor benchmark specifically for video mirror and glass segmentation.

Dataset comparison Table 1 shows the comparison of different datasets from the relevant areas, including image-based mirror segmentation [11] (top group), image-based glass detection [12] (sencond group), video object segmentation [55, 63, 64] (third group), and ours. Our dataset embodies superior video quality (i.e., high resolution videos), substantial quantity (i.e., a large number of annotated frames), and it is the first step for long-term (i.e., long and constant frames in most videos) video mirror and glass segmentation.

Dataset construction The size, category, description and camera motion of the dataset are shown in Table 2. The mirror and glass video sequences are shown in Figs. 7 and 8 respectively. They are classified into simple and complex categories based on different numbers of obstacles and the complexity of camera motion. Sequences in the mirror dataset are classified into the simple mirror category since there are less than 3 surroundings around the target mirror. In contrast, sequences in the mirror dataset are classified into the complex mirror category since the surroundings of the target mirror region are complex. As same as the mirror dataset, sequences in the glass dataset are classified into the simple glass category and sequences in the glass dataset are classified into the complex glass category.

Dataset analysis To validate the diversity of MAGD and how challenging it is, its statistics are shown as follows:

-

Dataset category. Inspired by GDD dataset [12] and considering glass windows and glass walls are prominently used in both residential and commercial buildings with the distribution of 50% - 60% and 30% - 40% under real-world usage patterns, various mirror and glass targets are classified into such categories and proportions in this dataset. Besides, since our main target is to help robots and drones to avoid misleading position by the reflections or crashing into both mirror and glass from indoor videos, we increase the proportion of large glass and mirror in our dataset. As shown in Fig. 9(a), there are various categories of common glass in MAGD (e.g., glass table, glass window, glass cabinet, glass bookcase and glass wall). And Fig. 9(b) also shows the common mirrors (e.g., makup mirror, dressing mirror and wall mirror with different shapes and sizes) in different environments to add diversity.

-

Dataset location. This MAGD has mirror and glass located at different positions of the image, as illustrated in Fig. 9(c). The probability maps that indicate how likely each pixel belonging a mirror or glass region are computed to show the location distribution in MAGD. The overall spatial distribution tends to be centered, as mirror and glass are typically large and covers the center.

-

Dataset area. The size of the mirror and glass region is defined as a proportion of pixels in the image. In Fig. 9(d), it shows that the mirror and glass in MAGD varies in a wide range in terms of size to add diversity of the dataset.

4.2 Experimental environment and evaluation metrics

The basic experimental environment for the proposed algorithms is C++ and Python 3.6 on a machine with Intel i7 4700 CPU, Nvidia K620 graphic card, and 8 GB RAM. And the testing environment is different depending on different requirements of state-of-the-art works. Basically, a Nvidia RTX 4090 graphic card and 64 GB RAM machine is mainly used for implementation of state-of-the-art works.

The IoU, mIoU and mPA which are widely used in the semantic segmentation field [12, 65,66,67,68,69,70], are adopted for quantitatively evaluating the mirror and glass segmentation performance in every frame. Besides mIoU and mPA, an evaluation metrics named “successful rate” is utilized to further evaluate the results, since it is considered to be more relevant to practical applications. If the IoU is higher than the threshold, the current frame is regarded as a “successful frame” which is defined as:

Based (10), it is able to figure out how many percent frames are accurately detected in a video, and human can set different accuracy threshold for different applications for further evaluations.

4.3 Experimental results and analysis

As a first attempt to detect mirror and glass regions from video sequences, the effectiveness of the proposed methods is validated by comparing them with state-of-the-art methods from other related fields in Tables 3 and 4. Specifically, we choose MirrorNet [11], GDNet [12] from the image-based mirror and glass segmentation field, MOTAdapt [45], COSNet [48], AD Net [36], AGNN [35], MATNet [49], F2Net [53], HFAN [26], TMO [28] from video-based object segmentation field. For a relatively fair comparison, both the publicly available codes and the implementations with recommended parameter settings are used. All image-based methods are evaluated on the all MAGD dataset and all video-based methods are retarined on the MAGD training set. We set the simple mirror 1, 5, complex mirror 3, 6 ,9, 11, simple glass 1, 4, and complex glass 1, 2, 9, 10 as the test dataset, and the rest videos are used for retraining. On average, 1 frame needs 1.72 seconds for processing on KPTD module and 1.43 seconds on MLDD module in our experimental environment.

From Tables 3 and 4, since image-based mirror and glass segmentation methods have no concern about the temporal information, they always fail in the video sequences. Video-based object segmentation methods have no concern about the special motion information of reflection, they always fail in the mirror and glass detection. And our work can help to refine the errors in the state-of-the-art works to increase the accuracy for mirror and glass segmentation in video sequences.

Figure 10 shows the visual comparison of proposed methods to the state-of-the-art method on the MAGD dataset. From left to right are original frames, ground truth, results from SOTA works, and results refined by our work. After analysis of the results, there are two main reasons for failure cases. Firstly, for the reflective region, the main failed case is that if the target region is near the border of the image, the boundary segmentation results may have a low accuracy on the edge of mirror or glass. The proposed methods are sensitive to the motion of the contents in the mirror and glass regions, so when the contents in the mirror and glass regions have no clear edge compared with the contents close to the edge of mirror and glass, the segmentation error happens. Secondly, when there is the target region with unclear reflection and no clear depth difference with surroundings, the segmentation results may be wrong.

Table 5 reports the results in different frame rate conditions. The 15 fps, 30 fps and 60 fps are settled for experiment. Initially, a 60 fps video is taken. Next, from every 2 frames, 1 frame is selected for down-sampling to a 30 fps video. Finally, a 15 fps video is created by using the same strategy. GDNet [12] is used for evaluation as a typical example. From the table, it shows that 60 fps has the best performance. This is because of the abundant temporal information and contents in more frames which contribute to higher accuracy. However, in this paper, 30 fps is mainly selected as the experimental target, which is more common and relatively challenging in the daily life.

4.4 Ablation study and component analysis

Ablation experiments are conducted to verify the effectiveness of our design. First, we set the “base” as the GDNet [12] as an example, which shows advanced accuracy in overall dataset. Next, we add the temporal feature for key points correction, whose experiment is settled as “base (+temporal)”. Then, we add the predicted-real frames correction step for further improvement, which is represented as “base (+predict)”. Table 6 shows the performances of different ablated modules. As shown in the last row, our final proposed method with the all modules show clear improvement on baseline method. Table 7 evaluates the effectiveness of the proposed modules. It shows that both key points trajectory distinction and multi-level depth distinction are able to improve the segmentation performance.

4.5 Failure cases and limitations

Although the mirror and glass segmentation quality in video sequences is improved by implementing KPTD and MLDD methods, some problems and limitations still remain. Figure 11 shows typical failure cases that our method tends to segment wrong boundary when the motion nearby the edge of mirror or glass is not clear in reflections. Besides, if the edges overlapped in a complex situation and the reflection is not clear, our method may ignore such regions.

5 Conclusions

In this paper, we have explored the mirror and glass segmentation problem in video sequences. To the best of our knowledge, we have constructed the first video mirror and glass segmentation dataset (MAGD). It contains 9,960 images from 36 videos with manually annotated masks. Besides, a refinement framework has been proposed for mirror and glass segmentation in the indoor environment. It fully utilizes multiple information, including the key points trajectory, and multi-level depth map by analyzing the special motion information to refine the inaccurate results from other existing segmentation models which has limited performance in a video sequence. The experimental results show that the segmentation accuracy reaches the highest successful rate at 0.969, mIoU at 0.852, and mPA at 0.950, which is around 40% - 50% higher on average.

As a future work, we are working on improving our method by focusing on complex boundary information and paying more attention to the unclear reflections and tiny motions to help segment mirrors and glass boundaries more precisely in videos.

Data Availability

The data that supports the findings of this work can be available upon reasonable request. The original dataset is planned to open in a public dataset website V7 labs (https://www.v7labs.com) in the near future.

References

Gandhi D, Pinto L, Gupta A (2017) Learning to fly by crashing. In: 2017 IEEE/RSJ International conference on intelligent robots and systems (IROS). IEEE, pp 3948–3955

Dao T-K, Tran T-H, Le T-L, Vu H, Nguyen V-T, Mac D-K, Do N-D, Pham T-T (2016) Indoor navigation assistance system for visually impaired people using multimodal technologies. 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV) 1–6

Dong E, Xu J, Wu C, Liu Y, Yang Z (2019) Pair-navi: peer-to-peer indoor navigation with mobile visual slam. In: IEEE INFOCOM 2019-IEEE conference on computer communications. IEEE, pp 1189–1197

Badrloo S, Varshosaz M, Pirasteh S, Li J (2022) Image-based obstacle detection methods for the safe navigation of unmanned vehicles: a review. Remote Sens 14(15):3824

Zhang Y, Ye M, Manocha D, Yang R (2017) 3d reconstruction in the presence of glass and mirrors by acoustic and visual fusion. IEEE Trans Pattern Anal Mach Intell 40(8):1785–1798

Whelan T, Goesele M, Lovegrove SJ, Straub J, Green S, Szeliski R, Butterfield S, Verma S, Newcombe RA, Goesele M et al (2018) Reconstructing scenes with mirror and glass surfaces. ACM Trans Graph 37(4):102–1

Liu Y, Cheng X, Ikenaga T (2024) Motion-aware and data-independent model based multi-view 3d pose refinement for volleyball spike analysis. Multimedia Tools Appl 83(8):22995–23018

Liu H, Iwamoto N, Zhu Z, Li Z, Zhou Y, Bozkurt E, Zheng B (2022) Disco: Disentangled implicit content and rhythm learning for diverse co-speech gestures synthesis. In: Proceedings of the 30th ACM international conference on multimedia. pp 3764–3773

Fang Q, Shuai Q, Dong J, Bao H, Zhou X (2021) Reconstructing 3d human pose by watching humans in the mirror. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 12814–12823

Zhang Y, Chen C, Yang S, Zhang J, Chu X, Zhang J (2020) How friendly are building materials as reflectors to indoor los mimo communications? IEEE Internet Things J 7(9):9116–9127

Yang X, Mei H, Xu K, Wei X, Yin B, Lau RWH (2019) Where is my mirror? In: Proc IEEE Int Conf Comput Vis (ICCV). pp 8808–8817

Mei H, Yang X, Wang Y, Liu Y-A, He S, Zhang Q, Wei X, Lau RWH (2020) Don’t hit me! glass detection in real-world scenes. In: Proc IEEE Conf Comput Vis Pattern Recognit. pp 3684–3693

Arnab Dey D-NL Samit Biswas (2024) Workout action recognition in video streams using an attention driven residual dc-gru network. Comput, Mater Continua 79(2):3067–3087

Wang J, Wang Z, Zhuang S, Hao Y, Wang H (2024) Cross-enhancement transformer for action segmentation. Multimedia Tools Appl 83(9):25643–25656

Li Z, Huang M, Yang Y, Li Z, Wang L (2022) A mirror detection method in the indoor environment using a laser sensor. Math Probl Eng 2022

Wang X, Wang J (2017) Detecting glass in simultaneous localisation and mapping. Rob Auton Syst 88:97–103

Wu S, Wang S (2021) Method for detecting glass wall with lidar and ultrasonic sensor. In: Proc. IEEE 3rd Eurasia Conf. IOT, Commun. Eng. (ECICE). pp 163–168

Mei H, Dong B, Dong W, Yang J, Baek S-H, Heide F, Peers P, Wei X, Yang X (2022) Glass segmentation using intensity and spectral polarization cues. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 12622–12631

Huo D, Wang J, Qian Y, Yang Y-H (2023) Glass segmentation with rgb-thermal image pairs. IEEE Trans Image Process 32:1911–1926

Xu Y, Nagahara H, Shimada A, Taniguchi R-i (2015) Transcut: transparent object segmentation from a light-field image. In: Proceedings of the IEEE international conference on computer vision. pp 3442–3450

Zhu Y, Qiu J, Ren B (2021) Transfusion: a novel slam method focused on transparent objects. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 6019–6028

Mei H, Dong B, Dong W, Peers P, Yang X, Zhang Q, Wei X (2021) Depth-aware mirror segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 3044–3053

Tondin Ferreira Dias E, Vieira Neto H, Schneider FK (2020) A compressed sensing approach for multiple obstacle localisation using sonar sensors in air. Sens 20(19):5511

Tan X, Lin J, Xu K, Chen P, Ma L, Lau RW (2022) Mirror detection with the visual chirality cue. IEEE Trans Pattern Anal Mach Intell 45(3):3492–3504

He H, Li X, Cheng G, Shi J, Tong Y, Meng G, Prinet V, Weng L (2021) Enhanced boundary learning for glass-like object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 15859–15868

Pei G, Shen F, Yao Y, Xie G-S, Tang Z, Tang J (2022) Hierarchical feature alignment network for unsupervised video object segmentation. In: European conference on computer vision. Springer, pp 596–613

Schmidt C, Athar A, Mahadevan S, Leibe B (2022) D2conv3d: dynamic dilated convolutions for object segmentation in videos. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision. pp 1200–1209

Cho S, Lee M, Lee S, Park C, Kim D, Lee S (2023) Treating motion as option to reduce motion dependency in unsupervised video object segmentation. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision. pp 5140–5149

Yuan Y, Wang Y, Wang L, Zhao X, Lu H, Wang Y, Su W, Zhang L (2023) Isomer: isomerous transformer for zero-shot video object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 966–976

Tan Y, Chen L, Zheng C, Ling H, Lai X (2024) Saeformer: stepwise attention emphasis transformer for polyp segmentation. Multimedia Tools Appl 1–21

Miao B, Bennamoun M, Gao Y, Mian A (2024) Region aware video object segmentation with deep motion modeling. IEEE Trans Image Process

Tokmakov P, Alahari K, Schmid C (2017) Learning video object segmentation with visual memory. In: Proceedings of the IEEE international conference on computer vision. pp 4481–4490

Zhang K, Zhao Z, Liu D, Liu Q, Liu B (2021) Deep transport network for unsupervised video object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 8781–8790

Wang W, Song H, Zhao S, Shen J, Zhao S, Hoi SC, Ling H (2019) Learning unsupervised video object segmentation through visual attention. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition pp 3064–3074

Wang W, Lu X, Shen J, Crandall DJ, Shao L (2019) Zero-shot video object segmentation via attentive graph neural networks. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 9236–9245

Yang Z, Wang Q, Bertinetto L, Hu W, Bai S, Torr PH (2019) Anchor diffusion for unsupervised video object segmentation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp 931–940

Wang Z, Liu Y, Cheng X, Ikenaga T (2023) Key points trajectory and predicted-real frames distinction based mirror and glass detection for indoor 5g signal analysis. In: Journal of physics: conference series, vol 2522. IOP Publishing, p 012033

Campos C, Elvira R, Rodr’iguez JJG, Montiel JMM, Tardós JD (2020) Orb-slam3: an accurate open-source library for visual, visual-inertial, and multimap slam. IEEE Trans Robot 37:1874–1890

Mei H, Yu L, Xu K, Wang Y, Yang X, Wei X, Lau RW (2023) Mirror segmentation via semantic-aware contextual contrasted feature learning. ACM Trans Multimedia Comput Commun Appl 19(2s):1–22

Lin J, He Z, Lau RW (2021) Rich context aggregation with reflection prior for glass surface detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 13415–13424

Lin J, Wang G, Lau RW (2020) Progressive mirror detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 3697–3705

Yu L, Mei H, Dong W, Wei Z, Zhu L, Wang Y, Yang X (2022) Progressive glass segmentation. IEEE Trans Image Process 31:2920–2933

Lin J, Yeung Y-H, Lau R (2022) Exploiting semantic relations for glass surface detection. Advances in Neural Information Processing Systems 35:22490–22504

Song H, Wang W, Zhao S, Shen J, Lam K-M (2018) Pyramid dilated deeper convlstm for video salient object detection. In: Proceedings of the European conference on computer vision (ECCV). pp 715–731

Siam M, Jiang C, Lu S, Petrich L, Gamal M, Elhoseiny M, Jagersand M (2019) Video object segmentation using teacher-student adaptation in a human robot interaction (hri) setting. In: 2019 International conference on robotics and automation (ICRA). IEEE, pp 50–56

Song H, Su T, Zheng Y, Zhang K, Liu B, Liu D (2024) Generalizable fourier augmentation for unsupervised video object segmentation. In: Proceedings of the AAAI conference on artificial intelligence, vol 38. pp 4918–4924

Fedynyak V, Romanus Y, Hlovatskyi B, Sydor B, Dobosevych O, Babin I, Riazantsev R (2024) Devos: flow-guided deformable transformer for video object segmentation. In: Proceedings of the IEEE/CVF winter conference on applications of computer vision. pp 240–249

Lu X, Wang W, Ma C, Shen J, Shao L, Porikli F (2019) See more, know more: unsupervised video object segmentation with co-attention siamese networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 3623–3632

Zhou T, Wang S, Zhou Y, Yao Y, Li J, Shao L (2020) Motion-attentive transition for zero-shot video object segmentation. In: Proceedings of the AAAI conference on artificial intelligence, vol 34. pp 13066–13073

Zhang L, Zhang J, Lin Z, Měch R, Lu H, He Y (2020) Unsupervised video object segmentation with joint hotspot tracking. In: Computer vision–ECCV 2020: 16th European conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XIV 16. Springer, pp 490–506 (2020)

Zhen M, Li S, Zhou L, Shang J, Feng H, Fang T, Quan L (2020) Learning discriminative feature with crf for unsupervised video object segmentation. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXVII 16. Springer, pp 445–462

Mahadevan S, Athar A, Ošep A, Hennen S, Leal-Taixé L, Leibe B (2020) Making a case for 3d convolutions for object segmentation in videos. arXiv:2008.11516

Liu D, Yu D, Wang C, Zhou P (2021) F2net: learning to focus on the foreground for unsupervised video object segmentation. In: Proceedings of the AAAI conference on artificial intelligence, vol. 35. pp 2109–2117

Ren S, Liu W, Liu Y, Chen H, Han G, He S (2021) Reciprocal transformations for unsupervised video object segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 15455–15464

Perazzi F, Pont-Tuset J, McWilliams B, Van Gool L, Gross M, Sorkine-Hornung A (2016) A benchmark dataset and evaluation methodology for video object segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp 724–732

Strasdat H, Montiel J, Davison AJ (2010) Scale drift-aware large scale monocular slam. Robot: Sci Syst VI 2(3):7

Kanopoulos N, Vasanthavada N, Baker RL (1988) Design of an image edge detection filter using the sobel operator. IEEE J Solid-State Circ 23(2):358–367

Suzuki T, IKENAGA T (2014) Spatio-temporal feature and mrf based keypoint of interest for cloud video recognition. IIEEJ Trans Image Electron Visual Comput 2(2):150–158

Barath D, Matas J (2018) Graph-cut ransac. In: Proc. IEEE Conf. Comput. Vis. Pattern Recognit. pp 6733–6741

Mahdaoui A, Sbai EH (2020) 3d point cloud simplification based on k-nearest neighbor and clustering. Adv Multimedia 2020:1–10

Lloyd S (1982) Least squares quantization in pcm. IEEE Trans Inf Theor 28(2):129–137

Bian J-W, Zhan H, Wang N, Li Z, Zhang L, Shen C, Cheng M-M, Reid I (2021) Unsupervised scale-consistent depth learning from video. Int J Comput Vision 129(9):2548–2564

Ochs P, Malik J, Brox T (2013) Segmentation of moving objects by long term video analysis. IEEE Trans Pattern Anal Mach Intell 36(6):1187–1200

Li F, Kim T, Humayun A, Tsai D, Rehg JM (2013) Video segmentation by tracking many figure-ground segments. In: Proceedings of the IEEE international conference on computer vision. pp 2192–2199

Zheng Z, Huang G, Yuan X, Pun C-M, Liu H, Ling W-K (2022) Quaternion-valued correlation learning for few-shot semantic segmentation. IEEE Trans Circ Syst Video Technol

Cheng B, Schwing A, Kirillov A (2021) Per-pixel classification is not all you need for semantic segmentation. Adv Neural Inf Process Syst 34:17864–17875

Xie E, Wang W, Yu Z, Anandkumar A, Alvarez JM, Luo P (2021) Segformer: simple and efficient design for semantic segmentation with transformers. Adv Neural Inf Process Syst 34:12077–12090

Wang W, Xie E, Li X, Fan D-P, Song K, Liang D, Lu T, Luo P, Shao L (2022) Pvt v2: improved baselines with pyramid vision transformer. Comput Visual Media 8(3):415–424

Yu W, Luo M, Zhou P, Si C, Zhou Y, Wang X, Feng J, Yan S (2022) Metaformer is actually what you need for vision. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp 10819–10829

Liu Z, Mao H, Wu C-Y, Feichtenhofer C, Darrell T, Xie S (2022) A convnet for the 2020s. In: Proc. IEEE Conf. Comput. Vis. Pattern Recognit. pp 11976–11986

Acknowledgements

This work was supported by KAKENHI (21K11816).

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, Z., Liu, Y., Cheng, X. et al. Key points trajectory and multi-level depth distinction based refinement for video mirror and glass segmentation. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-19627-5

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11042-024-19627-5