Abstract

The lack of symptoms in the early stages of liver disease may cause wrong diagnosis of the disease by many doctors and endanger the health of patients. Therefore, earlier and more accurate diagnosis of liver problems is necessary for proper treatment and prevention of serious damage to this vital organ. We attempted to develop an intelligent system to detect liver failure using data mining and artificial neural networks (ANN), this approach considers all factors impacting patient identification and enhances the probability of success in diagnosing liver failure. We employ multilayer perceptron neural networks for diagnosing liver failure via a liver patient dataset (ILDP). The proposed approach using the backpropagation algorithm, improves the diagnosis rate, and predicts liver failure intelligently. The simulation and data analysis outputs revealed that the proposed method has 99.5% accuracy, 99.65% sensitivity, and 99.57% specificity, making it more accurate than Previous related methods.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

The tremendous development of data has been one of the main challenges of medical science during the last two decades [1]. Data mining and machine learning (ML) approaches are expanding in tandem with the fast growth of biotechnology procedures and biodata analysis [2, 3]. Data analysis and the construction of numerous models are being employed in the medical industry to predict and diagnose illnesses. The primary benefit of extracting the disease’s diagnostic model is that clinicians may make decisions with greater certainty and avoid medical errors [4, 5]. Furthermore, because medical data contains a significant number of vital elements for disease diagnosis and researchers only consider a fraction of these features, the study of such significant characteristics should be done with caution [6,7,8].

The acquisition of intelligent approaches in bioinformatics has grown in tandem with the advent of contemporary computers. Obtaining comprehensible information has become a key challenge for physicians due to unclear medical data. This obstacle can lead to disease misdiagnosis and, as a result, inadequate disease treatment [9,10,11]. Therefore, if specialists can use intelligent techniques with the ability to make decisions and carefully examine complex and ambiguous data in the process of patient diagnosis and treatment, they will be successful. Medical errors, costs, and time can be minimized through intelligent systems [12,13,14].

Applying data mining knowledge in liver disease diagnosis is one of the intelligent approaches that can identify liver issues more quickly and accurately by analyzing the effective factors [15, 16]. The application of data mining in medical contexts differs greatly from others. One of which is the low-quality medical data in comparison to other sectors. ML is a branch of computer science that employs computer algorithms to detect patterns in big data and to forecast various events based on that data [17,18,19]. A machine can analyze a set of data and make or predict decisions based on what it has learned in ML. Because clinical information is readily available, ML plays a critical role in medical decisions and the timely identification of patients [20]. The purpose of the feature selection procedure for model creation is to select an optimal set of features for classification. Choosing the proper feature can improve the model’s performance and minimize data size. The feature selection problem may be formulated as a hybrid optimization problem to select a feature subset that is optimized by the evaluation criterion. Data mining approaches are utilized by researchers to select features because ML algorithms have been widely employed in recent years to address the big data dimension problem and extract optimal features [21,22,23].

The liver, which is placed below the ribs and above and to the right of the stomach, constitutes one of the most essential organs in the body. The liver has a smooth, squishy, rubbery surface and a conical shape. It is a dark reddish-brown hue. This organ, which few people consider, is so vital that failure to complete its functions would result in death within a day. Liver disease is one of the most frequent and dangerous illnesses in the world today, and it is one of the major causes of mortality. Early diagnosis of this condition can greatly improve the management, treatment, and prevention of its consequences [24, 25]. The liver diagnosis and treatment, like any other disease, generally begins with a history and physical examination of the patient. Checking the cardiac rhythm, the function of the lungs when breathing, and the appearance of atypical lumps in the abdomen are some of the initial investigations. However, in many cases, these symptoms cannot be cited on their own because the clinical symptoms seen in liver disease frequently appear in others. As a result, diagnosing liver disease necessitates the laboratory procedures such as ultrasonography or magnetic resonance imaging (MRI) [24, 26].

On the other hand, the human liver plays an important role in many vital functions, therefore, with the appearance of a liver disorder, many symptoms can be expected, but due to the progressive course of liver problems, most liver diseases do not show any specific symptoms until advanced stages. The lack of symptoms in the early stages may cause the wrong diagnosis of the disease by many doctors, and this wrong diagnosis can lead to the wrong treatment and prescription of inappropriate medicine, and as a result, the creation of acute and long-term symptoms of this disease or other problems. Therefore, earlier and more accurate diagnosis of liver problems with the help of a detailed analysis of the effective features of an automatic medical diagnosis system, for correct treatment and prevention of serious damage to this vital organ, seems necessary. Since the diagnosis of co-insufficiency diseases has a large amount of patient data, the MLP network can provide good results for problems with a large amount of data.

In the past, various methods have been used to diagnose liver disease, including intelligent systems based on data mining techniques. Most of the previously proposed methods have not been able to detect liver failure in people well and also do not have a low error rate and are sensitive to converting and detecting values. And in this article, we tried to improve the problems of previous works and reduce the error rate by using the backward neural network. We designed a fully automatic system that can be used as a smart and reliable assistant alongside the treatment staff in diagnosing liver patients in medical centers and specialized clinics.

We attempted to develop an intelligent system to detect liver failure. Using data mining and artificial neural networks (ANN), this approach considers all factors impacting patient identification and enhances the probability of success in diagnosing liver failure. We employ multilayer perceptron neural networks for diagnosing liver failure via a liver patient dataset. When compared to previous methods, the proposed solution utilizes the backpropagation algorithm, improves the diagnosis rate, and predicts liver failure more accurately and intelligently. Figure 1 depicts the proposed approach architecture. As the limitations of the proposed approach, we can mention the desired learning model. The neural network learning model is highly dependent on the type of input classified data that evolutionary algorithms can be used in the training phase of this type of network.

The main contribution of this paper are as follows:

-

Intelligent diagnosis and prediction of liver diseases.

-

Introducing a reliable assistant and smart tool to help the treatment staff.

-

Reducing doctors’ misdiagnoses and consequently helping patients’ health.

-

Using multilayer neural network and backpropagation algorithm in introducing the proposed model.

-

Increasing the three criteria of accuracy, sensitivity and specificity compared to other previous methods.

The rest of the paper is structured as follows. Section 2 represents the related works. Then, the methods are described in Sect. 3, including dataset, ANN, and backpropagation algorithm. In Sect. 4, We introduce the proposed method, and in Sect. 5, we discuss about the advantages of the proposed approach and illustrate the simulation details and compare our proposed solution with others. Finally, Sect. 6 concludes the paper.

2 Related work

Intelligent mechanisms have been employed for years in all stages of liver failure diagnosis, from the medical image pre-processing and features analysis (including features extraction and selection), to train, classify, and produce optimal desired outputs from inputs. These techniques are generally categorized as ML methods, evolutionary algorithms, and fuzzy systems. Researchers incorporate various solutions such as Naïve Bayes (NB), Decision Tree Algorithms, k-nearest neighbor (KNN), support vector machine (SVM), Bayesian Networks, Artificial Neural Networks (ANN), evolutionary and optimization algorithms such as Genetic Algorithms, and Particle Swarm Optimization (PSO), deep learning, and hybrid mechanisms to predict and diagnose liver disease [13, 27, 28]. In the following, we review some of the latest studies between 2019 and 2023.

A chaotic multi-order optimization algorithm-based new method was proposed by Sreejith et al. [29] for feature subset selection. Indian Liver Patient Database (ILPD) [30], thoracic surgery, and Pima datasets were tested by a new model and random forest classifier. The missing values and noise affect the classification accuracy. If the number of missing items for a feature is greater than 10, the feature is rejected. Otherwise, these missing values can be calculated using the KNN method and by finding k-nearest neighbors to the missing data. They split the data into two training and testing sets equal to 70% and 30%, and used the Minkowski distance to measure the distance between two observations. Kumar and Thakur [31] proposed the NWFKNN approach to predict liver disease with the aim of unbalanced data classification performance improvement. The improved NWFKNN is a combination of NWKNN and Fuzzy-NWKNN algorithms. The authors evaluated their method on BUPA, ILPD, and MPRLPD datasets and found that the improved NWFKNN performs better than the NWFKNN and Fuzzy-NWKNN mechanisms in ILPD.

A hybrid model based on the Antlion Optimization algorithm and k-nearest neighbor algorithm to diagnose liver disease was provided by Javadzadeh et al. [32]. Their solution based on machine learning algorithms was designed to classify people into two categories: healthy and those with liver disease The University of California, Irvine (UCI)’s ILPD ML Dataset contains 583 independent records with 10 features for liver disease. Singh et al. [33] employed several classification techniques such as SMO, J48 tree, KNN, logistic regression, basic Bayesian, and random forest algorithm with the liver patient dataset. They processed ILPD on the WEKA simulator using a feature selection technique with 10 stages of validation testing. The results showed that the accuracy of logistic regression and random forest was higher compared to other algorithms. Ardam and Gharehchopogh [34] diagnosed liver disease by enhancing AdaBoost and Firefly algorithms. Their dataset consisted of 583 samples with 10 individual features taken from the UCI ML dataset. The purpose of integrating the AdaBoost algorithm and Firefly was to strengthen and increase the accuracy of liver disease diagnosis. The training data included 80% and the test data included 20% of the total data, and outcomes demonstrated that the hybrid model’s accuracy% with feature selection is better compared to the model without feature selection. The accuracy percentage with five features was equal to 98.6% in the best case and 94.1% in the general case with all features.

The liver patients were classified by Ahmadi et al. [35], based on laboratory indicators using a data mining approach. They used PSO and adaptive neural-fuzzy inference systems to diagnose liver disorders in healthy and sick people and received the dataset from the valid database of the UCI reference. The authors considered the accuracy, sensitivity, and accuracy criteria for evaluation. The combination of ANFIS and PSO algorithms has an average accuracy of 99.14 for ILPD. Joloudari et al. [36] represented decision tree algorithms called Boosted C5.0 and CHAID to diagnose liver disease. In total, 92 rules were generated with Boosted C5.0, and there was a gender factor in 32 generated rules. The results showed that women are about 1.13 times more susceptible to liver disease than men, and DB, ALB, SGPT, TB, and A/G factors have a significant effect on the prediction of liver disease.

An ANN was employed by Musunuri et al. [37] to predict liver disease. Their model was based on a Multi-Layer Perceptron and has been made of input, hidden layer, and output layers. Each neuron in a layer was connected to all neurons in the adjacent layers through acyclic arcs and received data in the form of external input and produced an output using its learning algorithm. Okanoue et al. [38] demonstrated an AI/NN method for screening nonalcoholic fatty liver disorders. They enlisted 324 and 74 histologically diagnosed patients, respectively, for training and validation experiments, and assessed their approach using sensitivity, specificity, positive predictive, and negative predictive value factors, achieving satisfactory results. Constantinescu et al. [39] suggested several convolutional neural networks (CNN) architectures for classifying fatty liver damage in photos using just pixels and diagnostic labels as input. The authors trained and verified suggested models via a dataset of 629 pictures that included two types of liver imaging: normal and steatosis.

Considering the inferior quality of ultrasound images taken from different stages of fatty liver disease, Gaber et al. [40] provided an ML and a vote-based classifier-based CAD mechanism for liver tissue classification. The authors’ 4-step solution, after image classification without segmentation, uses a matrix and statistical mechanism to extract different features, and finally, the vote-based classifier will identify the fatty liver tissue. Mutlu et al. [41] developed a CNN-based technique for extracting valuable data from massive medical data and automated diagnosis of liver failure, which compares to conventional ML-based approaches such as SVM and NB using ILPD and BUPA datasets achieved higher accuracies for liver diseases images classification. Ghazal et al. [42] represented a neural networks-based mechanism for optimal prediction and diagnosis of liver failure. The authors’ proposed solution, using the UCI ML dataset, achieved high accuracy and low error in the real-time diagnosis of the disease.

In [43], the authors proposed a novel architecture based on ensemble learning and enhanced preprocessing to predict liver disease using the Indian Liver Patient Dataset (ILPD. They used several data preprocessing methods, such as data balancing, feature scaling, and feature selection, to improve the accuracy with appropriate imputations. Multivariate imputation was applied to fill in missing values. On skewed columns, log1p transformation was applied, along with standardization, min–max scaling, maximum absolute scaling, and robust scaling techniques. The selection of features was carried out based on several methods including univariate selection, feature importance, and correlation matrix. Their proposed model using extra tree classifier and random forest, outperformed the other methods with the highest testing accuracy of 91.82% and 86.06%, respectively.

In [44], the authors proposed an integrated feature extraction approach to categorize liver patients. In the pipeline, the proposed method first imputes the missing values and outliers for pre-treatment. Then, integrated feature extraction applies the pre-processed data to extract the significant features for classification. Their proposed approach incorporates several ML algorithms, including logistic regression (LR), random forest (RF), K-nearest neighbor (KNN), support vector machine (SVM), multilayer perceptron (MLP), and the ensemble voting classifier andhas an accuracy of 88.10%, a precision of 85.33%, a recall of 92.30%, an F1 score of 88.68%, and an AUC score of 88.20% in predicting liver diseases.The findings suggest that their system could be used to supplement a physician’s diagnosis of liver disease.

Ramana et al. studied selected classification algorithms on medical datasets The classification algorithms considered in their study were Bagging, IBK, J48, JRip, Multilayer perceptron (MP) and Naive Bayes (NB) classifiers. And from the obtained results, the bagging method had an accuracy of 69.3% and had a better performance than the rest of the algorithms [45].

Kabir et al. have been using different ML techniques to obtain better performance of their models; ensemble techniques were also used to combine multiple base learner models [46]. The ML technique called super learning or stacked-ensemble was an ensemble method that found the optimal weighted average of diverse learning models. They have used super learning or stacked-ensemble achieving better performance on four benchmark data sets that are related to healthcare. Experimental results showed that super learning had a better performance compared to the individual base learners and the baseline ensemble and the simulation results also showed that their proposed method had an accuracy of 71.80% compared to other methods.

Kumar et al. showed classification mode of dataset of liver disorder patients in to forecast/predict correct liver diagnosis using various algorithms of data minin [47]. They have analyzed with real patient dataset for constructing classification models to forecast liver diagnosis. They applied five classification algorithms to given dataset and parameters such as precision, recall, and accuracy were analyzed to determine performance of these classifiers. Investigations were carried for performance improvement of classification models. Ultimately, the results showed promising results in diagnosis of liver disease during the nascent stages.

Le TL et al. presented a fuzzy c-means clustering interval type-2 cerebellar model articulation neural network (FCM-IT2CMANN) method to help physicians improve diagnostic accuracy [48]. They combined two classifiers, in which the IT2CMANN was the primary classifier and the fuzzy c-means algorithm was the pre-classifier. First, they divided data into n c groups using the pre-classifier, and then applied the main classifier to determine whether the sample was in a healthy or diseased state and they also Implemented the gradient descent method for updating the FCM-IT2CMANN parameter. They used classification of breast cancer and liver disease datasets from the University of California at Irvine to illustrate the effectiveness of their proposed classifier and they showed that the accuracy of this classification model was 74.83%.

Anand et al. proposed a new hybrid model for liver syndrome classification for analysis of the patient’s medical data via hybrid artificial neural network [49]. The medical records were classified based on the possibility of existence of disease. They used M-PSO for feature selection of input variables and M-ANN algorithm for disease classification. The hybrid approach significantly improved the accuracy compared to existing classification algorithms. They examined and evaluated the results of the algorithm using Spark tool [38].

3 Methods

In this section, we will give a brief explanation about the data set that we use in the training phase and evaluation of the proposed method, as well as the generalities of the Artifical neural network and the backpropagation algorithm.

3.1 Dataset

The ILPD dataset of liver patients obtained at the Department of Gastroenterology of Andhra Pradesh, India, to evaluate the classification algorithms, is utilized in this study. There are 416 liver and 167 non-liver patient records in this dataset. The selector is a class label that is used to categorize groups (hepatic or non-hepatic). There are 441 male and 142 female patients in the dataset. Table 1 contains information on the features of this dataset. The numbers in the normal value column are for a healthy person who does not have liver disease.

In the following, we show an image from the used dataset of the affected and healthy livers in Fig. 2.

3.2 Artificial Neural Network (ANN)

Some constructed features may be unrelated to the type of network traffic. These features will reduce classification accuracy as well as sample processing speed. As a result, in the second phase, we employ SVD to extract features and lessen the destructive effect of irrelevant features on traffic types.

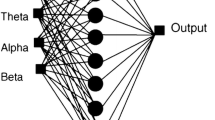

An ANN is a computing machine inspired by the structure of a biological neural system. The most basic part of building a neural network is the neuron. A neuron is a processing unit that receives input from a source and reaches the final output by defining nonlinear behavior to reach the network [50]. You can see the neural network structure in Fig. 3.

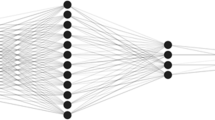

The main purpose of a neural network is to build a model using inputs so that this trained network can predict outputs using new inputs that the network has not encountered before. In the diagnosis of diseases and the medical field, the multilayer perceptron neural network (MLP) is mostly used, which consists of an input layer, hidden inner layers, and an output layer. We considered the number of hidden layers to be 20. On the one hand, this number of layers, accelerates calculations and provides acceptable accuracy of the result and on the other hand, increasing the number of layers causes the complexity of the network and, as a result, creates a wrong answer. The training of a neural network is done by matching weights to reduce network error. It refers to the discrepancy between the network result and the real values. At the end of the training phase, the network provides a model that can predict the output values by the inputs. In this research, the backpropagation algorithm, which is one of the most widely used training algorithms of multilayer perceptron neural networks, has been used. In the next section, we will explain how this tutorial algorithm works.

3.3 Backpropagation algorithm

The backpropagation algorithm serves as an extension of the Widrow-Hoff learning rule for multilayer networks and sigmoid functions. This method uses the delta rule or gradient descent algorithm to reduce the error and the weights start to correct in the opposite direction of the gradient in the error function. The term post-diffusion refers to a behavior in which the gradient is calculated for nonlinear multilayer networks [50]. The system for backpropagation is split into two phases: moving forward and backward. Each sample joins the neural network during the forward phase, and the parameters of the neurons are successively updfated across the ingress to the egress layer (Algorithm 2 in Fig. 4). It should be noted that we used TANSIG and Logsig activation functions for intermediate layers and Liner function for the output layer of neural network. These two functions are widely used in the back propagation algorithm because they are continuous and differentiable.

All the input values to a neuron are calculated as follows:

where ai is the number of ith neurons and wij is the weight of the connection of ith neuron to jth neuron. After calculation using the above formula, by applying the activation function f, the value of xj is converted to the output value of that neuron (input of the next layer neuron).

In these systems, the tangent-sigmoid activation process is frequently utilized. Multilayer perceptron structures are another name for them. The backpropagation technique is employed to train these structures. The weights are not altered and stay fixed in the forward process. The weights are compared to the true amounts at the exact same time the egress is generated, and an error number is computed. This error then propagates in the opposite direction of the forward mode within the network. At this point, the weights are adjusted to approach the actual values. In the backward stage, the weights of the neurons begin to adjust starting from the outer layer to the input layer, which moves to the left from the last layer to the first layer [51]. The purpose of adjusting the weights is to reduce the network error, which is usually the least mean square error and is defined as follows:

Here w denotes the weight, aj, k represents the egress amount of the jth neuron in the final layer taken from the training sample k, and oj, k is the real amount of the jth neuron in the training sample k. There are several approaches to reducing the amount of E (w). The steepest descent technique is one of the most employed, with weights modified following the subsequent calculation:

So, \({w}_{ji}^{n}\) appears the weight of the i to the jth neuron during the period n, as well as ŋ will be the training rate, which is a constant amount between 0 and 1, and \(\frac{\partial E}{{\partial W}_{ji}^{n}}\) gradient (derivative) at point \({ w}_{\text{j}\text{i}}^{\text{n}}\), which can be considered as a change in the value of error E due to a change in weight \({ w}_{\text{j}\text{i}}^{\text{n}}\). The initial values of the weights are usually small and random. The slope value in the above equation is expanded as follows:

Where f ′(xj) is the first derivative of the transfer function, which is usually a sigmoid function, ai is the input value of neurons and δj is the error value of jth neuron. X is the error value for the last layer neurons. The above equation is simplified as follows:

So oj is the actual value and oj is the predicted value which is adjusted from the combination of equations and weights for the output layer. For other layers, the value δj is obtained using the following equation:

Where k represents the neurons that receive the output value from the jth layer. This rule with the adjustment of weights and network connections is also known as the generalized delta rule. Sometimes another parameter called momentum is added to the equation:

The momentum value is between 0 and 1 and is used to prevent oscillation in finding the minimum value at the error level and to increase the level of convergence. Backpropagation networks are controlled by several factors. Selecting model parameters, network topology, and training examples to build an optimal network is often a difficult task [51]. The ingress layer neurons amount in the proposed NN is equivalent to the ingress elements, and the amount of hidden layer neurons is chosen for each ingress set. The number of neurons used in the hidden layer is 3 neurons. On the one hand, this number of neurons in the hidden layer speeds up the calculations and provides acceptable accuracy of the result and on the other hand, increasing the number of hidden neurons causes the network to over-fit, resulting in the wrong answer. Finally, two classes in the output layer are used to display the results for the classification of individuals (with liver failure and non-liver failure). In the proposed method, Tansig and Logsig activation functions are used for the middle layers, and the Linear function is used for the output layer. These two functions are widely used in the backpropagation algorithms due to their continuity and derivability.

Considering that in the proposed approach we used the back propagation learning algorithm and the gradient method is one of the common methods in this training algorithm for updating the weights, we used it in the network training section. The logic of the gradient method is to find the weights that the network has the least error, exactly the goal that we are looking for in our proposed approach, so that we can diagnose the disease with the least error by using the weight change. The output of each layer in each step is recorded on the same neuron that has a high error, and this process is repeated until the error propagation algorithm can obtain the minimum possible error for that neuron.

4 Proposed method

We describe a system based on multilayer perceptron neural networks to diagnose liver failure. Initially, the backpropagation approach is applied to train our neural network using the ILPD dataset, and the neural network can then predict liver disease with high accuracy. The proposed solution’s pseudo-code and its flowchart are illustrated in Figs. 5 and 6, respectively.

The reasons for using MLP and Backpropagation algorithm in the proposed method are as follows:

-

1.

The diagnosis of diseases such as liver failure has a large amount of patient data and the multi-layered perceptron model can provide good results for problems with a large amount of data.

-

2.

The treatment of liver disease requires quick diagnosis, that is why the time parameter is very important and necessary in the data training phase, and the MLP model does not need much time to predict the test data after the training phase.

One of the basic problems of training MLP networks is deciding how to learn (i.e. choosing the weights and biases of hidden layer nodes). Since the nodes of the hidden layer do not have a target output in MLP networks, it is not possible to define an error function that is specific to that node. Instead, each error function for that node depends on the parameter values in the previous layers (because the previous layers determine the input to that node) and the subsequent layers (because the output of that node affects the calculation of the error function E(X,θ)). This coupling of parameters between layers can make the math of the problem very difficult (primarily as a result of using the multiplication rule), and slow down the final gradient descent calculations if not implemented intelligently. Backpropagation solves both problems by simplifying the mathematics of gradient descent, while also facilitating its efficient computation.

5 Discussion

All the previous researches presented are all able to diagnose and predict liver patients using supervised solutions, but in reality, supervised solutions cannot discover suitable prediction patterns, for this purpose, in this article, we tried to use an intelligent and unsupervised approach based on artificial neural network techniques to predict liver patients. The neural network in the proposed method discovers hidden patterns in sick people compared to healthy people by examining the target data set. We implemented and evaluated the performance of the proposed system in MATLAB simulator. The results of the implementation of the proposed system have shown that the performance and accuracy of the algorithm in the target data set is acceptable and it is also improved compared to previous similar methods. Among the limitations of this article, we can mention the desired learning model because the neural network has a learning model that is highly dependent on the type of input categorized data.

In this paper, evaluation metrics (accuracy, specificity, and sensitivity) are employed to access the proposed method, and the ILPD data set was employed to compare our solution with other methods. The results of similution will be presented in Fig. 7.

Since the liver plays an essential role in the body’s metabolism, the diagnosis of liver disease is of great importance. Recognizing a diseased liver as a healthy liver or a diseased liver will have irreversible consequences. Hence, the above evaluation criteria are considered to access the proposed method.

Accuracy

The most well-known and general criterion for determining the performance of a classification algorithm is the percentage of items that the model has categorized correctly.

Sensitivity

is the percentage of positive items that the model correctly categorizes as positive. It is sometimes called the real positive rate (RPR) or recall.

Specificity

Thet rue negative characteristic or rate (TNR) is the percentage of positives that the model correctly classifies as negative.

ROC is a measure that is used to check the quality of classifiers. For each classifier, threshold values between zero and one are used for the outputs. We calculated 2 values of true positive rate and false positive rate for each threshold value. As you can see in the Fig. 8, the true positive rate is always higher than the false positive rate.

5.1 Data analysis

Since entering data into the multilayer perceptron neural network in its original form reduces the speed and accuracy of the network, to prevent this and to equalize the value of the data, a normalization operation has been performed in the network, thus preventing the weights from becoming too small and the neurons from saturating too early. For this purpose, to introduce the input data to the software, the normalized data between + 1 and − 1 has been used as follows.

Where Xnormal is the normalized value, X0 indicates the observed value, and Xmax and Xmin are the maximum and minimum values of the data, respectively.

5.2 Data evaluation

The percentage of internal error between the observed vector and the predicted vector is used to evaluate the performance results of the designed neural networks as follows.

Where x0 is equal to the actual values and x is equal to the calculated values of the software. Data analysis was performed using different input patterns, several layers, multiple iterations, and different analysis functions, as a result of which different errors were predicted. It should be noted that due to the non-normalization of output data, the output layer functions are all selected as purlin or linear functions. In the experiment, 75% of the data was put to work for training, while 25% was employed for testing.

5.3 Findings

Table 2 provides the details of previous and proposed approaches and wee compare the performances of the proposed method and some related works in Fig. 9.

As you can see the proposed method correctly diagnosed liver disease (Sensitivity factor) in 99.65% of cases and diagnosed normal people (without disease) in 99.57% of cases (Specificity factor) and has better performance than other methods.

6 Conclusions

To diagnose liver failure, doctors face challenges with conventional medical methods that may lead to incorrect diagnosis or make them unable to diagnose the disease in its early stages. This problem hinders the quick treatment of patients and regaining their health. As a result, researchers are currently looking for a way to diagnose this disease accurately and early. Intelligent technologies for diagnosing liver failure can assist medical staff in making decisions and carrying out operations to diagnose and predict liver failure patients. This approach evaluates all parameters affecting patient identification and enhances the probability of success in liver failure diagnosis Using data mining and ANN. The presence of a software system to incorporate programming techniques and ANN might be advantageous to increase the efficiency of the medical diagnostic and professional knowledge to have great success in detecting and forecasting liver disorders in collaboration with medical professionals. Multilayer perceptron neural networks are employed in the proposed strategy to diagnose liver failure leveraging a liver patients’ dataset. The proposed scheme through the backpropagation algorithm can improve the diagnosis rate and predict liver failure more accurately and intelligently. We simulated our proposed system in the MATLAB environment and obtained an accuracy criterion of 99.5%, a sensitivity criterion of 99.65%, and a specificity criterion of 99.57%. when compared to previous works. These values show the efficient performance and high accuracy of the proposed method compared to previous methods. We may point to future efforts like the design and development of software for liver failure diagnosis, which, like other applications used in medical centers, can considerably aid in disease identification and, consequently, accelerate treatment.

Data availability

The data that support the findings of this study are openly available in UCI ML Repository’s ILPD (Indian Liver Patient Dataset) Data Set at https://archive.ics.uci.edu/ml/datasets/ILPD+(Indian+Liver+Patient+Dataset).

References

Chen P-T, Lin C-L, Wu W-N (2020) Big data management in healthcare: adoption challenges and implications. Int J Inf Manag 53:102078

Tenali N, Babu GRM (2023) A systematic literature review and future perspectives for handling big data analytics in COVID-19 diagnosis. New Gener Comput 41(2):243-280

He B et al (2020) A neural network framework for predicting the tissue-of-origin of 15 common cancer types based on RNA-Seq data. Front Bioeng Biotechnol 8:737

Välimäki MA et al (2021) Measured and perceived impacts of evidence-based leadership in nursing: a mixed-methods systematic review protocol. BMJ Open 11(10):e055356

Xu H et al (2020) SUMO1 modification of methyltransferase-like 3 promotes tumor progression via regulating Snail mRNA homeostasis in hepatocellular carcinoma. Theranostics 10(13):5671

Boddu RSK, Karmakar P, Bhaumik A, Nassa VK, Bhattacharya S (2022) Analyzing the impact of machine learning and artificial intelligence and its effect on management of lung cancer detection in covid-19 pandemic. Mater Today: Proc 56:2213–2216

Al-Tashi Q, Rais H, Abdulkadir SJ (2018) Hybrid swarm intelligence algorithms with ensemble machine learning for medical diagnosis. In: 2018 4th International Conference on Computer and Information Sciences (ICCOINS), IEEE, pp 1–6

Lin X, Lu L, Pan J (2021) Hospital market competition and health technology diffusion: an empirical study of laparoscopic appendectomy in China. Soc Sci Med 286:114316

Katsuki M et al (2023) Developing an artificial intelligence-based headache diagnostic model and its utility for non-specialists’ diagnostic accuracy. Cephalalgia 43(5):03331024231156925

Haick H, Tang N (2021) Artificial intelligence in medical sensors for clinical decisions. ACS Nano 15(3):3557–3567

Zhang J et al (2022) Calcium homeostasis in Parkinson’s Disease: from Pathology to Treatment. Neurosci Bull 38(10):1267–1270

Wu B, Moeckel G (2023) Application of digital pathology and machine learning in the liver, kidney and lung diseases. J Pathol Inf 14:100184

Singh A, Pandey B (2014) Intelligent techniques and applications in liver disorders: a survey. Int J BioMed Eng Technol 16(1):27–70

Gao T-H et al (2022) Curcumae rhizoma and its major constituents against hepatobiliary disease: Pharmacotherapeutic properties and potential clinical applications. Phytomedicine 102:154090

Xia Y et al (2019) Meta-analysis of the association between MBOAT7 rs641738, TM6SF2 rs58542926 and nonalcoholic fatty liver disease susceptibility. Clin Res Hepatol Gastroenterol 43(5):533–541

Xiong T et al (2019) TO901317 inhibits the development of hepatocellular carcinoma by LXRα/Glut1 decreasing glycometabolism. Am J Physiol-Gastrointestinal Liver Physiol 316(5):G598–G607

Azmi J, Arif M, Nafis MT, Alam MA, Tanweer S, Wang G (2022) A systematic review on machine learning approaches for cardiovascular disease prediction using medical big data. Med Eng Phys 105:103825

Gupta A, Jain V, Singh A (2022) Stacking ensemble-based intelligent machine learning model for predicting post-COVID-19 complications. New Gener Comput 40(4):987–1007

Fan Z, He Y, Sun W, Li Z, Ye C, Wang C (2023) Clinical characteristics, diagnosis and management of Sweet syndrome induced by azathioprine. Clin Exp Med 23(7):3581–3587

Wu C-C et al (2019) Prediction of fatty liver disease using machine learning algorithms. Comput Methods Programs Biomed 170:23–29

Dhaenens C, Jourdan L (2022) Metaheuristics for data mining: survey and opportunities for big data. Ann Oper Res 314(1):117–140

Lu S et al (2023) Iterative reconstruction of low-dose CT based on differential sparse. Biomed Signal Process Control 79:104204

Huang H, Wu N, Liang Y, Peng X, Shu J (2022) SLNL: a novel method for gene selection and phenotype classification. Int J Intell Syst 37(9):6283–6304

Arias IM et al (2020) The liver: biology and pathobiology. Wiley

Zhou L, Zhang Q, Deng H, Ou S, Liang T, Zhou J (2022) The SNHG1-Centered ceRNA Network regulates cell cycle and is a potential prognostic biomarker for Hepatocellular Carcinoma. Tohoku J Exp Med 258(4):265–276

Qi M et al (2022) Multi-region nonuniform brightness correction algorithm based on L-channel gamma transform. Secur Commun Netw 2022

Khan RA, Luo Y, Wu F-X (2022) Machine learning based liver disease diagnosis: a systematic review. Neurocomputing 468:492–509

Spann A et al (2020) Applying machine learning in liver disease and transplantation: a comprehensive review. Hepatology 71(3):1093–1105

Sreejith S, Nehemiah HK, Kannan A (2020) Clinical data classification using an enhanced SMOTE and chaotic evolutionary feature selection. Comput Biol Med 126:103991

Asuncion A, Newman D (2007) UCI machine learning repository. Available: https://archive.ics.uci.edu/ml/datasets/ILPD+(Indian+Liver+Patient+Dataset)

Kumar P, Thakur RS (2021) Liver disorder detection using variable-neighbor weighted fuzzy K nearest neighbor approach. Multimed Tools Appl 80:16515–16535

Javadzadeh S, Shayanfar H, Soleimanian Gharehchopogh F (2020) A hybrid model based on ant lion optimization algorithm and K-Nearest neighbors Algorithm to Diagnose Liver Disease. J Ilam Univ Med Sci 28(5):76–89

Singh J, Bagga S, Kaur R (2020) Software-based prediction of liver disease with feature selection and classification techniques. Procedia Comput Sci 167:1970–1980

Ardam S, Soleimanian Gharehchopogh F (2019) Diagnosing liver disease using firefly algorithm based on Adaboost, (in eng). J Health Adm Res vol 22(1):61–77

Ahmadi M, Ramezanpour M, Khorsand R (2019) Diagnosis of Liver disorders using a combination of adaptive neuron-fuzzy inference system and particle swarm optimization algorithm. Health Inform Manage 16(3):115–121

Liang C, Peng L (2013) An automated diagnosis system of liver disease using artificial immune and genetic algorithms. J Med Syst 37(2):1–10

Musunuri B et al (2021) Acute-on-chronic liver failure mortality prediction using an artificial neural network. Eng Sci 15:187–196

Okanoue T et al (2021) Artificial intelligence/neural network system for the screening of nonalcoholic fatty liver disease and nonalcoholic steatohepatitis. Hepatol Res 51(5):554–569

Constantinescu EC et al (2021) Transfer learning with pre-trained deep convolutional neural networks for the automatic assessment of liver steatosis in ultrasound images. Med Ultrasonography 23(2):135–139

Gaber A, Youness HA, Hamdy A, Abdelaal HM, Hassan AM (2022) Automatic classification of fatty liver disease based on supervised learning and genetic algorithm. Appl Sci 12(1):521

Mutlu EN, Devim A, Hameed AA, Jamil A (2022) Deep learning for liver disease prediction. In: Pattern Recognition and Artificial Intelligence: 5th Mediterranean Conference, MedPRAI 2021, Istanbul, Turkey, December 17–18, 2021, Proceedings, Springer, pp 95–107

Ghazal TM, Rehman AU, Saleem M, Ahmad M, Ahmad S, Mehmood F (2022) Intelligent Model to Predict Early Liver Disease using Machine Learning Technique. In: 2022 International Conference on Business Analytics for Technology and Security (ICBATS), IEEE, pp 1–5

Md AQ, Kulkarni S, Joshua CJ, Vaichole T, Mohan S, Iwendi C (2023) Enhanced preprocessing approach using ensemble machine learning algorithms for detecting liver disease. Biomedicines 11(2):581

Amin R, Yasmin R, Ruhi S, Rahman MH, Reza MS (2023) Prediction of chronic liver disease patients using integrated projection based statistical feature extraction with machine learning algorithms. Inf Med Unlocked 36:101155

Ramana BV, Boddu RSK (2019) Performance comparison of classification algorithms on medical datasets. In: 2019 IEEE 9th Annual Computing and Communication Workshop and Conference (CCWC), IEEE, pp 0140–0145

Kabir MF, Ludwig SA (2019) Enhancing the performance of classification using super learning. Data-Enabled Discovery Appl 3:1–13

Kumar S, Katyal S (2018) Effective analysis and diagnosis of liver disorder by data mining. In: 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), IEEE, pp 1047–1051

Le T-L (2019) Fuzzy C-means clustering interval type-2 cerebellar model articulation neural network for medical data classification. IEEE Access 7:20967–20973

Anand L, Syed Ibrahim S (2018) HANN: a hybrid model for liver syndrome classification by feature assortment optimization. J Med Syst 42:1–11

Beale MH, Hagan MT, Demuth HB (2010) Neural Netw Toolbox 7. User’s Guide MathWorks 2

Mather P, Tso B (2016) Classification methods for remotely sensed data. CRC Press

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dashti, F., Ghaffari, A., Seyfollahi, A. et al. A self-predictive diagnosis system of liver failure based on multilayer neural networks. Multimed Tools Appl (2024). https://doi.org/10.1007/s11042-024-18945-y

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11042-024-18945-y