Abstract

Despite being reliable, palmprints have not received as much attention as other biometrics such as fingerprints, face or iris. The amount of information provided by high resolution palmprints and their huge forensic value make them a preferred biometric choice for large scale identification systems. In palmprints, extraction of reliable features for identification is still a challenging task, especially because most palmprints found in the real world, e.g., at crime scenes, are of poor quality. This makes palmprint enhancement a crucial precursor to identification. Errors during enhancement result in the extraction of unreliable features, which deteriorates identification accuracy. Recent works on palmprints have focused more on matching algorithms, and limited novelty has been introduced in enhancement. Enhancement techniques recently used on high resolution palmprints are either borrowed from fingerprint techniques or are built on the high-risk assumption that the palm ridge pattern is stationary or smooth in a local area. The large size and abruptly changing ridge pattern of palmprints dictate the need for a more robust enhancement scheme. This paper proposes a novel deep learning based high resolution palmprint enhancement approach that is able to process large areas of a palmprint without assuming that the underlying ridge pattern is stationary. We have tested the proposed enhancement approach on a well-known high resolution palmprint dataset, and the results show that the proposed technique performs favourably in comparison to the state of the art.

1 Introduction

Biometric systems have seen a sharp rise in utility due to increased security concerns and the migration of human interaction and businesses to digital platforms. The global biometric industry is expected to reach $100 billion by 2030, growing at a compound annual growth rate (CAGR) of 14.6% [26]. Palmprint-based systems, along with fingerprints, were leaders in the biometric industry in the last decade, and palmprint-based systems are expected to show a CAGR of 18.28% and reach around $2.6 billion by 2030 [40]. As a result of the growing popularity of palmprints, the research community's interest in them has also increased sharply [3, 15, 27, 32, 33]. Palmprints are an attractive biometric as they provide identification features at multiple image resolutions, which are listed in Table 1. Level 1 features provide a certain level of convenience due to easy and inexpensive image acquisition methods such as a smartphone or a CCD camera. However, level 1 features provide only low to middle level security, and feature templates stored in a database are vulnerable to spoofing attacks. As a result, feature templates have to be protected using encryption techniques [32], or palmprint features are fused with other biometrics such as finger, iris, etc. to form a multi-modal identification system [3]. Level 2 features (ridges/valleys and minutiae) extracted at high resolution are considered the most reliable and have the advantage of enabling latent-to-full palmprint matching. Figure 1 illustrates different types of intrinsic palmprint features at low and high resolution. Since high resolution palmprints are similar to fingerprints, the same acquisition methods or sensors can be employed, with the advantage that palmprints are much more robust than fingerprints. Firstly, palmprints provide roughly 8 times more information than fingerprints [24]. Secondly, palmprints cannot be faked as easily as fingerprints, which may leave traces on flat surfaces. Palmprints also have more forensic value than fingerprints.
A study by the Federal Bureau of Investigation (FBI) reveals that 30% of the evidence found at crime scenes is in the form of palmprints [1]. In 2013, the FBI introduced the National Palmprint System (NPPS), which stores 29 million individual palmprints and 15 million unique palmprint identities.

Palmprint features: Level 1 features (Distal, Proximal and Radial Transverse Creases), level 2 features (Ridges, Minutiae), level 3 features (Pores) and the different palm regions: Interdigital, Hypothenar and Thenar [24]

Palmprints cannot be used directly for identification; they need to be enhanced to extract reliable and unique features. High resolution palmprint enhancement techniques try to recover the palm ridge structure, which may be degraded in various ways. This is a challenging task because most palmprints found in practice suffer from multiple degradations such as incompleteness, poor ridge/valley contrast, broken ridges and external noise such as stains or background texture. The performance achieved in enhancement dictates the performance achieved during identification. Hence, it is imperative for an enhancement technique to be adaptive to variable image quality. Most palmprint enhancement techniques are borrowed from fingerprint enhancement techniques, but palmprints differ from fingerprints in a few aspects, namely:

- A large number of creases, resulting in abrupt changes in ridge orientation.

- Flexibility of skin, resulting in abrupt changes in inter-ridge distance, i.e., ridge frequency.

- Palmprints are much larger and contain much more information, which increases computational complexity.

The most important step in palmprint enhancement is the estimation of ridge orientation. High resolution palmprint enhancement methods found in the literature can be divided into two categories based on the techniques used for estimating ridge orientation:

1.1 Gradient based techniques

This is by far the most popular approach to enhancement. Local ridge orientation is estimated using pixel-wise gradients. Enhancement filters, usually Gabor filters or other contextual filters, need to adapt their orientation and width to the local ridge orientation and frequency, respectively. Filtering can be applied in the spatial domain [8, 16, 19, 21, 35, 44] or the frequency domain [9, 17]. In the spatial domain, filtering is applied pixel-wise, where filter orientation and width are adapted to the ridge pattern in a small area centred at a pixel (x, y). In the frequency domain, filtering is applied patch-wise by performing Fourier analysis of a palmprint patch and multiplying it with a suitable filter, such as a Gabor or raised cosine filter. These techniques work well on fingerprints but cannot handle the abruptly changing ridge orientation and non-uniform ridge frequency resulting from the flexibility of skin [17]. This results in inexact configuration of contextual filters and error-prone enhancement.
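As an illustration of what a contextual filter is, the NumPy sketch below builds an even-symmetric Gabor kernel tuned to one local ridge orientation θ and one ridge frequency f, in the form commonly used for fingerprint enhancement. The function name `gabor_kernel`, the default sigmas and the orientation convention (θ measured from the x-axis along the ridges, with image rows pointing down) are assumptions made for this example, not details of the cited methods.

```python
import numpy as np

def gabor_kernel(theta, freq, sigma_x=4.0, sigma_y=4.0, size=None):
    """Even-symmetric Gabor kernel whose cosine wave runs across the ridges.

    theta: ridge orientation in radians (direction along the ridges).
    freq: ridge frequency in cycles per pixel (about 1/10 at 500 ppi).
    """
    if size is None:
        size = int(round(6 * max(sigma_x, sigma_y))) | 1  # odd size, ~3 sigma each side
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates: u runs across the ridges, v runs along them.
    u = -x * np.sin(theta) + y * np.cos(theta)
    v = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-0.5 * (u**2 / sigma_x**2 + v**2 / sigma_y**2))
    kernel = envelope * np.cos(2 * np.pi * freq * u)
    return kernel - kernel.mean()  # zero DC response, so flat regions map to ~0
```

A spatial-domain method has to build (or look up) such a kernel for the θ and f estimated at every pixel, which is where the computational cost of pixel-wise filtering on large palmprints comes from.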

1.2 Region growing techniques

The local ridge pattern in small non-overlapping patches (usually 8 × 8) is reconstructed by modelling it as a 2D sine wave [11, 12, 22, 24, 42]. Fourier analysis of a high-quality patch shows a pair of clear peaks corresponding to the ridge pattern, and the orientation of these peaks is taken as a good estimate of local ridge orientation. Subsequently, any directional filter can be used to enhance the ridge structure. However, in poor quality regions containing many creases or background texture, the peak corresponding to ridge lines is not very clear and multiple peaks are detected in the DFT.
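The orientation step shared by these DFT-based methods can be sketched in a few lines of NumPy: remove the mean, take the 2D DFT of the patch, locate the strongest peak and report the ridge orientation as perpendicular to the direction of that peak. Using a single argmax peak, the name `dft_peak_orientation` and the convention of degrees in [0, 180) with image rows pointing down are simplifications for illustration; the cited methods use more careful peak selection.

```python
import numpy as np

def dft_peak_orientation(patch):
    """Estimate ridge orientation (degrees in [0, 180)) from the strongest DFT peak."""
    patch = patch - patch.mean()                  # remove DC so it cannot win the peak search
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(patch)))
    n_rows, n_cols = patch.shape
    cy, cx = n_rows // 2, n_cols // 2            # zero frequency after fftshift
    py, px = np.unravel_index(np.argmax(spectrum), spectrum.shape)
    # The peak vector (fx, fy) points across the ridges; ridges run perpendicular to it.
    wave_angle = np.degrees(np.arctan2(py - cy, px - cx))
    return (wave_angle + 90.0) % 180.0
```

In a patch dominated by creases or background texture, the argmax can land on a crease peak instead of the ridge peak, which is exactly the failure mode described above.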

Pixel-wise contextual filtering is more robust to abrupt changes in ridge orientation and frequency but proves computationally costly for palmprints. Patch-wise frequency domain operations, on the other hand, work on the assumption that the underlying ridge orientation and ridge frequency in a local area are stationary or uniform. This assumption may be convenient for fingerprints, but for palmprints it can lead to misleading results. Furthermore, in local areas with many creases, conventional techniques pick up contextual information pertaining to creases and end up enhancing creases rather than ridges. There is a need for an enhancement technique that is able to process sufficiently large patches without assuming that the underlying ridge orientation and ridge frequency are stationary or uniform.

We propose a palmprint enhancement technique inspired by recent works in image restoration and segmentation that employ pixel-to-pixel learning in an end-to-end fashion using Convolutional Neural Networks (CNNs) [4, 36,37,38, 41]. These models have achieved great success but have not been employed specifically for the problem of palmprint enhancement. In the proposed Palmprint Enhancement Network (PEN), we have trained an image-to-image regression CNN (Rnet) which is able to enhance sufficiently large patches of palmprint with adequate robustness. Sufficient depth and an adequate number of kernels enable it to learn complex ridge patterns containing abrupt changes in ridge orientation and frequency. Unlike conventional methods, Rnet does not have to configure its kernels for every patch or perform Fourier analysis, as it converts a patch directly into its enhanced version. Even in high crease areas, Rnet is able to enhance ridge patterns and subdue creases. The major contributions of this paper are the following:

- A frequency domain palmprint segmentation technique, used as a pre-processing step to remove background noise from the palmprint. This restricts the region of interest (ROI) to the foreground pixels only, thereby reducing computation.

- A two-step palmprint enhancement network (PEN) that is able to work on sufficiently large patches of palmprint, i.e., 96 × 96 pixels, without assuming the underlying ridge pattern to be stationary or uniform.

- In the first step, palm patches are passed through a classification CNN (Cnet), which predicts the dominant ridge orientation in each patch and classifies it accordingly. Cnet is a fine-tuned version of AlexNet [29], which was originally trained on the ImageNet dataset [13]. Since each patch is 96 × 96 pixels, the predicted dominant orientation is closer to a global average over the patch than to a strict local estimate.

- In the second step, guided by the orientation prediction of Cnet, the patch is passed through Rnet, which converts it to its corresponding enhanced version without adapting filters for each patch or performing Fourier analysis. Rnet is a 4-layer deep image enhancement CNN which we have designed and trained from scratch; further architectural details are given in Section 5. Rnet enhancement is independent of ridge frequency, thereby completely eliminating the need for frequency normalization [17] or other pre-processing techniques.

- Skilful creation of training datasets for both Cnet and Rnet, extracted from the THUPALMLAB palmprint database [45].

The rest of the paper is organized as follows: Section 2 reviews the available literature on high resolution palmprints, Section 3 explains the overall architecture of PEN, Section 4 presents the proposed ROI segmentation technique, Sections 5 and 6 explain the offline and online stages of PEN, respectively, Section 7 shows the results of the proposed approach, and Section 8 presents a discussion of the experimentation and findings.

2 Related work

As a pre-processing step, most enhancement approaches employ region of interest (ROI) segmentation methods, which separate foreground pixels (palmar regions of the image) from background pixels. ROI segmentation is required for palmprints because a large area of interest can slow down enhancement and subsequent feature extraction. Secondly, palmprints taken in the real world suffer from multiple degradations or noise. Noisy areas need to be identified and discarded to prevent the extraction of false features that may affect matching performance.

ROI segmentation techniques are usually based on identifying textural differences [19] in the image. Block range filtering has also been used for ROI segmentation of fingerprints [14]. Images acquired in the real world suffer from rotation, motion blur, stains and even occlusion. A careful study of the distribution of image gray levels can help distinguish noise from actual information. For example, in [5], Barra et al., working on iris segmentation, described four discriminative properties of the gray-level distribution for separating noise from actual information in an image portion, namely skewness, kurtosis, entropy and the Gini index (a measure of homogeneity or heterogeneity). Since palmprints are large and computationally intensive, we have restricted our goal of de-noising to ROI segmentation only (extracting image portions containing ridge lines) to save processing time. The palm ridge structure can be thought of as a 2D sine wave with a specific frequency throughout the image, i.e., a period of about 10 pixels in a 500 ppi image [24]. We have designed a frequency domain segmentation technique that uses a bandpass filter to isolate the ridge frequency and discard all other frequencies from the image, as they correspond to background pixels.

At the core of the palmprint enhancement problem is the estimation of local ridge orientation and frequency. For palmprint enhancement, gradient-based contextual filtering techniques, which use ridge orientation estimated from image gradients, are by far the most popular choice. Contextual filtering techniques were initially designed for fingerprints [9, 16, 17, 21] and were later adapted for palmprints [8, 19, 44]. These techniques are applied pixel-wise and are computationally inefficient when applied to palmprints.

Jain et al. [24] proposed a DFT-based palmprint enhancement method that estimates local ridge orientation and frequency simultaneously in a patch of 8 × 8 pixels. The ridge/valley structure in a patch is assumed to be stationary and is modelled as a 2D sine wave; the frequency peaks with the highest amplitude are used to reconstruct the ridge structure in that patch. Multiple patches with good connectivity are joined together to form regions of reconstructed ridge structure. This technique was later adopted and improved by Dai and Zhou [11], who incorporated smoothing of peak orientations across consecutive patches to remove the blocking effect and subsequently applied Gabor filters in the frequency domain to produce an enhanced image. Dai et al. [12] used the same technique on larger patches (64 × 64) to reduce computational requirements. However, all DFT-based techniques assume the underlying ridge pattern to be stationary.

Recently, in the closely related field of fingerprints, CNNs have been successfully used for enhancement and classification problems. In [7], Cao and Jain used CNNs to predict local ridge orientation in fingerprints. Similarly, Ghafoor et al. [18] classified fingerprints based on the natural ridge patterns found in them, i.e., whorl, left loop, right loop, arch and tented arch. This CNN-based classification can be considered a coarse-level comparison between fingerprints, which restricts subsequent feature-based matching to the relevant class of fingerprints only, thereby reducing computational overhead. Li et al. [31] proposed a multi-task learning approach using an encoder-decoder architecture that is closely related to the palmprint enhancement method proposed in this paper. Their architecture has one convolutional branch to extract intrinsic features and two deconvolutional branches: an enhancement branch and an orientation branch. The enhancement branch is used to enhance fingerprints, while the orientation branch guides the enhancement branch through a multi-task learning strategy. However, these two deconvolution branches work independently, whereas enhancement is highly dependent on ridge orientation information, especially in the case of palmprints owing to their abruptly changing ridge patterns. Wong and Lai [49] also proposed a multi-task learning approach, with the improvement that the predicted ridge orientation estimates are fed into the enhancement branch to directly guide enhancement. As a more recent example of the use of CNNs, Wyzykowski et al. [50] employed Generative Adversarial Networks (GANs) to synthesize realistic fingerprints.

All of the aforementioned CNN-based fingerprint classification, enhancement or reconstruction methods rely heavily on the naturally existing ridge patterns in fingerprints, namely whorl, left loop, right loop, arch and tented arch. In palmprints, these patterns do not exist. In addition, the large size, abruptly changing ridge patterns, and large number of scars and creases make orientation learning and subsequent enhancement very challenging. Hence, like classical fingerprint approaches, deep learning based fingerprint approaches cannot be directly applied to palmprints either. The challenges posed by palmprints can also make the training of deep or multi-task networks very difficult.

There is a lack of literature on the use of Convolutional Neural Networks (CNNs) for enhancement of high resolution palmprints. In [15], Fanchang et al. used CNNs to classify multiscale high resolution palmprint patches according to the quality of the ridge structure they contain. Classifying palm patches by quality with reasonable accuracy helps in creating an accurate image quality map, with which only palm patches of acceptable quality are selected for subsequent enhancement and matching, and the rest are discarded. Similarly, Ahmadi and Soleimani [2] used CNNs to align two palmprint images before matching, which reduces computational overhead. A more recent work related to high resolution palmprint enhancement is presented by Bing and Feng [33], who employed attention-based GANs to estimate ridge orientation only; their method does not incorporate enhancement of palmprints. Apart from that, recent research on palmprints [9, 11, 12, 17, 19, 22, 27, 33, 42] has focused more on palmprint matching than on enhancement, and consequently limited novelty has been introduced in the palmprint enhancement process. To the best of our knowledge, no approach has been proposed for the specific problem of converting a palmprint directly into its corresponding enhanced version using CNNs.

Our work focuses on exploiting the success of deep learning in image-to-image learning to find an out-of-the-box solution for directly converting a sufficiently large patch (96 × 96) of palmprint to its enhanced version, without estimating ridge orientation, ridge frequency or a region quality map. Deep learning solutions are computationally intensive, but by harnessing the power of GPUs, deep learning is quickly replacing classical approaches.

3 PEN architecture

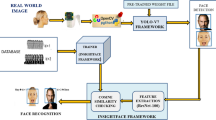

Figure 2 illustrates the overall architecture of PEN. The palmprint is first pre-processed to extract only the foreground pixels (palm area) in the image. We propose a DFT-based ROI segmentation procedure (Section 4) that effectively removes background noise. Removal of background noise is essential during both the offline and online stages in order to limit computation to the valid region of the image.

PEN Architecture: Offline phase illustrates preparation of separate datasets for training of Cnet and Rnet. Online phase illustrates that after ROI extraction, 96 × 96 patches are extracted from palmprint. Each patch is then passed through Cnet to predict dominant ridge orientation. Based on this information, patches are rotated (if required) and passed through Rnet to yield enhanced palmprint. All patches are later joined to form a complete enhanced palmprint

After ROI segmentation, the palmprint is broken down into patches for subsequent processing. We use two separately trained CNNs to carry out enhancement. Cnet, a classification CNN, classifies each palm patch according to the dominant local ridge orientation, which it predicts directly from the patch. Guided by the orientation prediction of Cnet, palm patches are rotated (if required) to align with the kernels of Rnet. Rnet, an image-to-image regression CNN, then outputs an enhanced version of the patch, and the enhanced palm patches are rotated back to their original orientation. The patch size of 96 × 96 was chosen after sufficient experimentation: prediction scores of Cnet on patches bigger than 96 × 96 were found to be a poor estimate of dominant ridge orientation, and smaller patches were found to require greater overall computational time. At the end, all enhanced patches are joined to produce the complete enhanced palmprint. Both CNNs undergo separate training cycles in the offline stage, using separate datasets containing palm patches carefully extracted from the THUPALMLAB [45] database. Details about PEN components are given in subsequent sections.
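The rotate, enhance and rotate-back step can be sketched in NumPy as below. This is a minimal illustration under stated assumptions: the rotation angle is the difference between the class label Rnet was trained on and the label Cnet predicts, resampling is nearest neighbour, pixels rotated in from outside the patch are set to zero, and `enhance_with_alignment` and its `rnet` argument (any callable mapping a patch to a patch of the same shape) are hypothetical names. Angles follow an image convention with x to the right and rows pointing down, so a positive angle increases a ridge orientation by that amount.

```python
import numpy as np

def rotate_nearest(patch, angle_deg):
    """Rotate a square patch about its centre (nearest neighbour, zeros outside)."""
    n = patch.shape[0]
    c = (n - 1) / 2.0
    t = np.radians(angle_deg)
    yy, xx = np.mgrid[0:n, 0:n]
    # Inverse mapping: for each output pixel, find the source pixel it comes from.
    src_x = np.cos(t) * (xx - c) + np.sin(t) * (yy - c) + c
    src_y = -np.sin(t) * (xx - c) + np.cos(t) * (yy - c) + c
    sx = np.rint(src_x).astype(int)
    sy = np.rint(src_y).astype(int)
    inside = (sx >= 0) & (sx < n) & (sy >= 0) & (sy < n)
    out = np.zeros_like(patch)
    out[inside] = patch[sy[inside], sx[inside]]
    return out

def enhance_with_alignment(patch, predicted_label, rnet_label, rnet):
    """Align the patch's orientation class with Rnet's, enhance, then rotate back."""
    angle = rnet_label - predicted_label          # labels are multiples of 15 degrees
    if angle == 0:
        return rnet(patch)                        # already aligned: no rotation needed
    aligned = rotate_nearest(patch, angle)
    return rotate_nearest(rnet(aligned), -angle)
```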

4 ROI segmentation

We carry out ROI segmentation of the palmprint in the frequency domain (Fig. 3). The palmprint is converted to its DFT equivalent and multiplied by a bandpass filter, whose parameters are estimated as follows. The average distance between consecutive ridges in a 500 ppi palmprint is around 10 pixels [8], so the ridge frequency $f_{ridge}$ can be easily isolated in the DFT of the image. Most applications of the 2D DFT shift the spectrum so that the zero frequency $f_0$ appears at the centre of the DFT image. The maximum frequency $f_{max}$ in an image is the one with a wavelength of just 2 pixels, i.e., it goes from high to low and back to high intensity in just 2 pixels. The DFT depicts frequency components in such a way that the higher the spatial frequency, the farther it is from the centre ($f_0$). This implies that a spatial frequency with the minimum wavelength of 2 pixels ($\lambda_{min}$) along the x-axis is represented by a sample ($f_{max}$) at the far right of the x-axis in the DFT.

ROI Segmentation: Palmprint is multiplied by a bandpass filter in frequency domain to remove noise. In spatial domain, Otsu’s thresholding is used to binarize the image and heavy blurring is used subsequently to yield a binary mask which is multiplied with original palmprint to yield only the foreground pixels

Since an image and its DFT have the same dimensions, the highest frequency $f_{max}$ along the x-axis in an $N \times N$ image lies at a distance $d_{max} = N/2$ from the centre ($f_0$). Based on this, we can find the approximate distance $d_{ridge}$ of the ridge frequency $f_{ridge} = 1/\lambda_{ridge}$ (where $\lambda_{ridge}$ = 10 pixels [8]) from the centre ($f_0$).

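Now, since $f_{max} = 1/2$ cycles per pixel lies at a distance $d_{max} = N/2$ from the centre, and the distance of a DFT sample from the centre grows linearly with its frequency, $d_{ridge}$ can be calculated as:

$$d_{ridge} = d_{max}\,\frac{f_{ridge}}{f_{max}} = \frac{N}{2}\cdot\frac{1/\lambda_{ridge}}{1/2} = \frac{N}{\lambda_{ridge}}$$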

In our implementation, we have used a Gaussian bandpass filter to extract the ridge frequency, which is defined as:

$$H(u, v) = \exp\left(-\left[\frac{D^2(u, v) - D_0^2}{D(u, v)\, W}\right]^2\right)$$

where $D(u, v)$ is the distance of each frequency component from the centre, $D_0$ is the radial distance of the band centre from the DFT image centre, and $W$ is the width of the filter, which defines the Gaussian spread in both directions. For 2040 × 2040 images, a bandpass filter with $D_0$ at 180 pixels from the centre and a bandwidth $W$ of 50 was found to be effective in catering for variations in ridge frequencies and ridge orientations. DFT analysis of a palmprint is presented in Fig. 4.
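A NumPy sketch of the frequency-domain half of the segmentation follows. It computes the expected ridge distance from the DFT centre as N/λ_ridge (with f_max = 1/2 cycles per pixel sitting N/2 samples from the centre), builds a Gaussian bandpass mask on the shifted spectrum and returns the filtered image in the spatial domain. The filter uses the textbook Gaussian band form exp(-[(D² - D0²)/(D·W)]²); treat that exact expression, and the function names, as assumptions of this example.

```python
import numpy as np

def ridge_distance(n, ridge_wavelength=10.0):
    """Distance (in DFT samples) of the ridge frequency from the centre of an n x n DFT.

    f_max = 1/2 cycles/pixel lies n/2 samples from the centre, so
    d_ridge = (n / 2) * (1 / ridge_wavelength) / (1 / 2) = n / ridge_wavelength.
    """
    return n / ridge_wavelength

def gaussian_bandpass(shape, d0, width):
    """Assumed form H(u, v) = exp(-((D^2 - D0^2) / (D * W))^2) on a centred spectrum."""
    rows, cols = shape
    v, u = np.mgrid[0:rows, 0:cols]
    dist = np.hypot(u - cols // 2, v - rows // 2)
    dist[dist == 0] = 1e-8                      # avoid division by zero at the DC sample
    return np.exp(-((dist**2 - d0**2) / (dist * width)) ** 2)

def bandpass_palmprint(img, d0, width):
    """Keep frequencies near the ridge band and return the real spatial-domain image."""
    spectrum = np.fft.fftshift(np.fft.fft2(img))   # zero frequency moved to the centre
    filtered = spectrum * gaussian_bandpass(img.shape, d0, width)
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))
```

For N = 2040 and λ_ridge = 10 the formula gives 204 samples, while the reported D0 = 180 corresponds to a ridge period of about 2040/180 ≈ 11.3 pixels. Under the assumed filter form, W = 50 still leaves a gain of about 0.44 at 204 samples, so a band of ridge periods around the nominal value is passed.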

The filtered palmprint is brought back into the spatial domain using the inverse DFT, and the image is binarized using a threshold obtained by Otsu's method. The binarized image is then smoothed through heavy blurring with a Gaussian filter whose standard deviation is set to 20. This gives us a binary mask, which is subsequently multiplied with the input palmprint to reveal only the foreground pixels (ROI) of the palmprint. Results of our ROI extraction method are illustrated in Fig. 5.
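The spatial-domain half (binarization, heavy blur and masking) can be sketched with NumPy alone. Two details are assumptions not stated in the text: Otsu's threshold is computed on the magnitude of the bandpassed image, so that both positive and negative ridge lobes count as foreground, and the blurred image is re-thresholded at 0.5 to obtain a binary mask. The separable blur uses zero padding and `np.convolve` in 'same' mode, which assumes the image is larger than the kernel (6σ + 1 = 121 pixels for σ = 20).

```python
import numpy as np

def otsu_threshold(img, bins=256):
    """Otsu's method: choose the histogram split that maximises between-class variance."""
    hist, edges = np.histogram(img, bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    counts0 = np.cumsum(hist)                    # pixels in bins 0..k
    counts1 = counts0[-1] - counts0              # pixels in bins k+1..end
    sum0 = np.cumsum(hist * centers)
    valid = (counts0 > 0) & (counts1 > 0)        # both classes must be non-empty
    mu0 = sum0[valid] / counts0[valid]
    mu1 = (sum0[-1] - sum0[valid]) / counts1[valid]
    between = counts0[valid] * counts1[valid] * (mu0 - mu1) ** 2
    k = np.flatnonzero(valid)[np.argmax(between)]
    return edges[k + 1]                          # values >= this edge form the upper class

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with zero padding at the borders."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    kernel /= kernel.sum()
    rows_done = np.apply_along_axis(np.convolve, 1, img, kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows_done, kernel, mode="same")

def roi_mask(bandpassed, sigma=20.0):
    """Binary ROI mask: Otsu on |bandpassed|, heavy Gaussian blur, re-threshold at 0.5."""
    magnitude = np.abs(bandpassed)
    binary = (magnitude >= otsu_threshold(magnitude)).astype(float)
    return (gaussian_blur(binary, sigma) >= 0.5).astype(float)
```

Usage: `foreground = palmprint * roi_mask(bandpassed)`, where `bandpassed` is the spatial-domain image after bandpass filtering.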

5 Offline stage

5.1 Dataset preparation for Cnet and Rnet

We have prepared our training and validation data using the THUPALMLAB high resolution palmprint dataset [45]. The THUPALMLAB dataset has been used in all state-of-the-art studies on high resolution palmprints [8, 11, 12, 19, 22, 24, 42, 44]. This dataset contains a total of 1280 palmprints from 80 subjects. There are 16 palmprints for each subject, 8 of the left palm and 8 of the right palm. Palmprints in the dataset are of size 2040 × 2040 and were taken at 500 ppi. Both Cnet and Rnet are trained on patches of 96 × 96 pixels. On average, one palmprint yields around 100 to 150 valid patches (of 96 × 96 pixels) that may qualify for inclusion in the training datasets. Since different palm regions provide different levels of ridge structure quality, special care was taken while creating the training data to include patches from all palm regions, so that the trained CNNs are robust to variable ridge structure. The process of associating ground truths and labels with training patches during the offline stage is inspired by [31] and is explained below.

Using the widely used gradient-based method [39], an orientation image $O_{xy}$ is created that contains the pixel-wise ridge orientations in a training patch. $O_{xy}$ is constructed by taking the inverse tangent of the gradients of the palmprint image in the vertical ($G_y$) and horizontal ($G_x$) directions, as given by Eq. (5).

$G_x$ and $G_y$ are calculated using Eq. (6) and Eq. (7), where $\nabla_x$ and $\nabla_y$ are the x and y gradient components of the palmprint image computed using a Gaussian filter. $G_{xy}$ is the product of gradients given by Eq. (8).
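Since Eqs. (5) to (8) are not reproduced here, the sketch below uses a common squared-gradient formulation of gradient-based orientation estimation as a stand-in: the gradient products are averaged over a window, the dominant gradient direction is half the angle of (Gxx - Gyy, 2Gxy), and ridges run perpendicular to it. Assumptions: gradients come from `np.gradient` rather than Gaussian-derivative filters, averaging uses a box window of hypothetical radius 4, and orientations are in degrees in [0, 180), measured from the x-axis with rows pointing down.

```python
import numpy as np

def smooth(a, radius):
    """Box average over a (2*radius+1)^2 window; same-size output with zero padding."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    a = np.apply_along_axis(np.convolve, 1, a, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, a, k, mode="same")

def orientation_field(img, radius=4):
    """Pixel-wise ridge orientation in degrees, [0, 180), from squared gradients."""
    gy, gx = np.gradient(img.astype(float))     # derivatives along rows (y) and columns (x)
    gxx = smooth(gx * gx, radius)
    gyy = smooth(gy * gy, radius)
    gxy = smooth(gx * gy, radius)
    # Dominant gradient direction via the doubled-angle average; ridges are perpendicular.
    grad_angle = 0.5 * np.degrees(np.arctan2(2 * gxy, gxx - gyy))
    return (grad_angle + 90.0) % 180.0
```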

Variance in ridge orientation within a patch was chosen as the quality measure that determines whether a patch is included in the training set. The variance of the orientation distribution of a patch with a well-defined ridge/valley structure is much lower than that of a patch with broken ridges. The variance threshold was set at 8, which was found to be a good trade-off between achieving good training accuracy and imparting the necessary robustness against bad patches that might be encountered in the online stage. Figure 6 shows the distribution of pixel-wise orientations in good and relatively bad patches, with a Gaussian curve fitted to each.

The mode of the orientation distribution in the patch was taken as the indicator of dominant orientation, as the mode gives the most frequently occurring value in the data. We quantized the 0 to 180 degree range of orientation in steps of 15 degrees, leaving only 12 classes for patch classification. As a result, a patch with dominant orientation between 0 and 15 degrees is placed in a bin labelled 15, a patch with dominant orientation between 16 and 30 degrees is placed in a bin labelled 30, and so on. Bin labels are used as ground truths, or class labels, for Cnet. Once the selected patches have been placed in separate bins, the gradient-based 2D contextual filtering method [19] is used to produce the corresponding enhanced versions of these patches, which are used as ground truth for Rnet. The whole process is explained in Fig. 7.
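The patch-selection and labelling rules can be sketched in NumPy, given a pixel-wise orientation field in degrees. Assumptions for this example: the mode is taken over 1-degree histogram bins, and the variance is the plain (non-circular) variance in degrees², with the threshold of 8 interpreted in those units; plain variance overstates the spread of near-horizontal patches whose orientations straddle 0°/180°. Generating the Rnet ground truth with contextual filtering [19] is not reproduced, and the function names are hypothetical.

```python
import numpy as np

def dominant_orientation(orient_deg):
    """Mode of pixel-wise orientations, using 1-degree histogram bins over [0, 180)."""
    hist, edges = np.histogram(orient_deg, bins=180, range=(0.0, 180.0))
    k = np.argmax(hist)
    return 0.5 * (edges[k] + edges[k + 1])       # centre of the most populated bin

def orientation_label(theta_deg, step=15):
    """Class label: (0, 15] -> 15, (15, 30] -> 30, ..., with 0 also mapped to 15."""
    return int(step * max(1, np.ceil(theta_deg / step)))

def select_patch(orient_deg, var_threshold=8.0):
    """Return (keep, label); keep is True when the orientation variance is low enough."""
    keep = np.var(orient_deg) < var_threshold
    return keep, orientation_label(dominant_orientation(orient_deg))
```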

5.2 Training Cnet

We have used transfer learning to fine-tune a pre-trained AlexNet for classification of palm patches based on dominant ridge orientation. AlexNet [29] is a deep CNN trained on a subset of the ImageNet dataset [13] with over 1 million images, and is originally capable of classifying 1000 classes. AlexNet consists of 5 convolutional layers, 3 max pooling layers, 2 normalization layers, 3 fully connected layers and 1 softmax layer. We replaced the last three layers of the pre-trained AlexNet with a fully connected layer, a softmax layer and a classification output layer to classify between 12 distinct ridge orientations. During fine-tuning, the weight learning rate for the new layers was increased to focus learning on the new layers and bring minimal changes to the weights of the transferred layers. ReLUs have become the default activation function (Eq. (9)) in recent years because they help train models faster. Pooling layers reduce the spatial dimensions of the feature maps to improve processing speed and also impart robustness to the location of features of interest in the image. Values obtained from the output of the softmax layer (Eq. (10)) indicate class predictions.

In fingerprints, 5 easily identifiable ridge patterns exist, namely whorl, left loop, right loop, arch and tented arch [48], which make their identification through CNNs easy. In palmprints, owing to the large ROI, it is not easy to predefine local patterns of ridge structure. Hence, orientation prediction in palms using CNNs is a potentially difficult task. The carefully selected images in the dataset strike a good compromise between good and bad quality images, which helps improve training performance while achieving robustness at the same time. With extensive trials, we were able to achieve an accuracy of 90% in identifying the dominant local ridge orientation. Owing to the large number of creases in palmprints, coupled with the variable quality of images and the lack of predefined patterns, 90% is an encouraging accuracy. We use 20% of the data for validation and 80% for training. During online testing, classification errors made by Cnet (if any) are removed by smoothing class prediction values within neighbouring patches.
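The text does not specify how class predictions are smoothed across neighbouring patches. One plausible scheme, sketched below as an assumption, converts each patch's softmax output into a doubled-angle vector (so that 0° and 180° coincide), averages these vectors over a 3 × 3 neighbourhood of patches, and maps the result back to a 15° bin label. The class centres (7.5°, 22.5° and so on up to 172.5°), the neighbourhood radius and the function names are hypothetical.

```python
import numpy as np

# Assumed centres of the 12 orientation bins labelled 15, 30, ..., 180.
CLASS_CENTRES = 15.0 * np.arange(12) + 7.5

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def smooth_predictions(logits, radius=1):
    """Relabel a (rows, cols, 12) grid of Cnet logits using each patch's neighbourhood."""
    p = softmax(logits)
    doubled = np.radians(2.0 * CLASS_CENTRES)          # axial data: 0 deg == 180 deg
    vx = (p * np.cos(doubled)).sum(axis=-1)
    vy = (p * np.sin(doubled)).sum(axis=-1)
    rows, cols = vx.shape
    labels = np.zeros((rows, cols), dtype=int)
    for r in range(rows):
        for c in range(cols):
            win = (slice(max(r - radius, 0), r + radius + 1),
                   slice(max(c - radius, 0), c + radius + 1))
            theta = (0.5 * np.degrees(np.arctan2(vy[win].sum(), vx[win].sum()))) % 180.0
            labels[r, c] = int(15 * max(1, np.ceil(theta / 15)))
    return labels
```

For example, a single patch predicted as class 135 surrounded by eight patches predicted as class 45 is relabelled 45, because the neighbours' doubled-angle vectors dominate the average.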

5.3 Rnet - architecture and training

Apart from image classification, CNNs have proved remarkable in image-to-image regression tasks as well; modifying the output layer of a traditional CNN allows it to be used as a regression CNN. Image regression CNNs have attained state-of-the-art performance in computer vision problems such as head pose estimation [34], human pose estimation [47], facial landmark detection [6] and image registration [10]. Rnet is inspired by recent developments in pixel-to-pixel learning, which include image restoration and image segmentation [4, 36,37,38, 41]. Rnet is a 4-layer deep CNN, illustrated in Fig. 8. Its convolutional layers extract features and encode primary components while eliminating unwanted abstractions (creases or background noise) in the image. We have not used pooling layers, as low-level image enhancement is focused more on reducing corruptions in low-level features than on learning complex image abstractions. Secondly, pooling layers remove vital image details while reducing the feature space, which we cannot afford.

Rnet architecture: 4 Convolutional layers Conv1, Conv2, Conv3 and Conv4 with ReLU activation function. Conv1 has 20 kernels of size 15 × 15, Conv2 has 16 kernels of size 11 × 11, Conv3 has 8 kernels of size 7 × 7 and Conv4 has 1 kernel of size 5 × 5. Euclidean loss (MSE) between output of Conv4 and ground truth patch is used for training

Since padded convolutional layers have been used, the size of the output image equals that of the input image, i.e., 96 × 96. Training data for Rnet is in the form (X, Y), where X is a 96 × 96 patch and Y is the corresponding enhanced version, or ground truth, for X. A convolutional layer can be expressed as:

$$F(X_i) = W_k * X_i + B_k$$

$X_i$ is the $i$th training sample, $*$ denotes convolution, and $W_k$ and $B_k$ represent kernels and biases, respectively. The output $A_l$ of a layer $l$ (where $l = 1, 2, 3, 4$) is given by:

$$A_l = G_l\left(W_l * A_{l-1} + B_l\right), \qquad A_0 = X_i$$

$G_l$ is the ReLU activation function of the $l$th layer, given by:

$$G_l(z) = \max(0, z)$$

We use the output of Conv4 ($A_4$) as the final activation. Pixel-wise prediction of enhanced patches given the real patches involves estimating the convolutional kernel weights and biases

$$\Theta = \{W_l, B_l\}_{l=1}^{4}$$

for all pairs $(X_i, Y_i)$ in a training dataset, where $i = 1, 2, \dots, N$ and $X_i$ and $Y_i$ represent the real and enhanced patch (the ground truth), respectively. The objective is to minimize the mean squared error (MSE) between the output of Conv4 and the ground truth image, expressed in Eq. (14):

$$L(\Theta) = \frac{1}{N}\sum_{i=1}^{N}\left\lVert A_4(X_i; \Theta) - Y_i \right\rVert^2 \qquad (14)$$

We trained Rnet on Caffe [25] with an initial learning rate of 0.00001, momentum of 0.85 and batch size of 64, using the SGD solver. Figure 9 illustrates the training curve of Rnet.
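To make the layer description concrete, below is a framework-free NumPy sketch of Rnet's forward pass and MSE objective, using the kernel counts and sizes given for Conv1 to Conv4, 'same' zero padding and a ReLU after every layer. It uses random weights, not the trained model; like most CNN libraries it computes cross-correlation; and it does not reproduce Caffe's SGD training (Caffe's EuclideanLoss, for instance, divides the summed squared error by 2N instead of taking a plain mean). Names such as `RNET_LAYERS` and `rnet_forward` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# (out_channels, kernel_size) for Conv1..Conv4 as described for Rnet.
RNET_LAYERS = [(20, 15), (16, 11), (8, 7), (1, 5)]

def init_rnet(seed=0, scale=0.01):
    """Random weights and zero biases with Rnet's layer shapes (init scheme is assumed)."""
    rng = np.random.default_rng(seed)
    params, in_ch = [], 1
    for out_ch, k in RNET_LAYERS:
        W = scale * rng.standard_normal((out_ch, in_ch, k, k)).astype(np.float32)
        B = np.zeros(out_ch, dtype=np.float32)
        params.append((W, B))
        in_ch = out_ch
    return params

def conv_same(x, W, B):
    """'Same'-padded 2D cross-correlation.

    x: (in_ch, H, W_img), W: (out_ch, in_ch, k, k), B: (out_ch,) -> (out_ch, H, W_img)
    """
    k = W.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))   # (in_ch, H, W_img, k, k)
    return np.einsum("chwij,ocij->ohw", windows, W) + B[:, None, None]

def rnet_forward(patch, params):
    """A_l = ReLU(W_l * A_{l-1} + B_l) for l = 1..4, with A_0 the input patch."""
    a = patch[None].astype(np.float32)
    for W, B in params:
        a = np.maximum(conv_same(a, W, B), 0.0)
    return a[0]                                   # Conv4 has a single output channel

def mse(pred, target):
    return float(np.mean((pred - target) ** 2))
```

Because there is no pooling and every convolution is padded, a 96 × 96 input yields a 96 × 96 output, as stated in the text. The `einsum`-based convolution is written for clarity rather than speed.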

6 Online stage

The online stage of PEN is illustrated in Fig. 10. The palmprint first goes through ROI extraction to obtain only the foreground pixels. It is then broken down into overlapping 96 × 96 patches, which are passed through Cnet and Rnet. The dominant orientation of ridges in each patch is predicted by Cnet and, guided by this information, the patch is passed through Rnet. We trained Rnet on patches of only one orientation class. If Cnet predicts the orientation class of a patch to be other than the one Rnet is trained on, the patch undergoes two rotations: one before and one after passing through Rnet. The first rotation aligns the patch orientation with Rnet, and the second brings the patch back to its original orientation. At the end, all patches are joined together to output a complete enhanced palmprint. Since overlapping patches are used, discontinuities between patches can be avoided during joining through careful implementation. Results of this procedure after joining all enhanced patches are illustrated in Figs. 11 and 12.
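Joining overlapping patches can be sketched as below. The stride of 48 pixels (50% overlap) and the rule of averaging overlapping outputs are assumptions, since the text only says that discontinuities are avoided through careful implementation; `enhance_patch` stands for the per-patch Cnet, rotation and Rnet pipeline and is a hypothetical callable mapping a 96 × 96 array to one of the same shape.

```python
import numpy as np

def patch_origins(length, size, stride):
    """Start indices so that patches of `size` cover [0, length) with the given stride."""
    starts = list(range(0, length - size + 1, stride))
    if starts[-1] != length - size:
        starts.append(length - size)             # last patch flush with the border
    return starts

def enhance_by_patches(img, enhance_patch, size=96, stride=48):
    """Enhance overlapping patches and average the outputs where they overlap."""
    rows, cols = img.shape
    if rows < size or cols < size:
        raise ValueError("image must be at least one patch wide and high")
    out = np.zeros((rows, cols))
    weight = np.zeros((rows, cols))              # how many patches cover each pixel
    for y in patch_origins(rows, size, stride):
        for x in patch_origins(cols, size, stride):
            out[y:y + size, x:x + size] += enhance_patch(img[y:y + size, x:x + size])
            weight[y:y + size, x:x + size] += 1.0
    return out / weight
```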

7 Results

The proposed Palmprint Enhancement Network (PEN) is designed to eliminate two fundamental assumptions of previous enhancement techniques, namely, that the ridge pattern in a local area is stationary and that ridge frequency is constant. To validate the performance of PEN, we needed to establish that: 1) PEN is robust to abruptly changing ridge orientation and frequency without assuming the ridge pattern to be stationary in a local area, 2) PEN is able to extract the underlying ridge pattern even in heavily creased areas, and 3) palmprints enhanced by PEN produce promising matching results.

7.1 Dataset and experimental setup

Results are acquired on the well-known and challenging THUPALMLAB dataset [45]. To the best of our knowledge, this high resolution palmprint dataset has been used in all state of the art studies on high resolution palmprints. The dataset consists of 1280 palmprint images taken from the left and right hands of 80 subjects, with eight impressions per hand (80 × 2 × 8 = 1280). The images have a resolution of 2040 × 2040 pixels at 500 ppi with 256 grey levels. Performance of PEN is assessed on both the enhancement of palmprints and the accuracy of the subsequent matching or identification process. Equal Error Rate (EER) is chosen as the benchmark for palmprint matching accuracy; EER is the error rate at the decision threshold where the false match rate (FMR) and the false non-match rate (FNMR) are equal. Apart from EER, Rank-1 accuracy and Detection Error Trade-off (DET) results are also calculated to verify the quality of enhancement.
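The EER definition above can be made concrete with a short sketch. It assumes that higher matching scores mean more similar palmprints and approximates the EER by the mean of FMR and FNMR at the observed threshold where they are closest; evaluation tools often interpolate between thresholds instead, so this is illustrative rather than the exact procedure behind Table 2.

```python
def error_rates(genuine, impostor, t):
    """FMR and FNMR at threshold t (a comparison is accepted when score >= t)."""
    fmr = sum(s >= t for s in impostor) / len(impostor)   # impostors wrongly accepted
    fnmr = sum(s < t for s in genuine) / len(genuine)     # genuine pairs wrongly rejected
    return fmr, fnmr


def equal_error_rate(genuine, impostor):
    """Approximate EER by sweeping every observed score as a threshold."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        fmr, fnmr = error_rates(genuine, impostor, t)
        gap = abs(fmr - fnmr)
        if best is None or gap < best[0]:
            best = (gap, (fmr + fnmr) / 2)
    return best[1]
```

With perfectly separated genuine and impostor scores the sketch returns 0; as the two score distributions overlap, the crossing point and hence the EER rise.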

All experiments pertaining to this article are performed on a system equipped with an Intel Core i7-6700HQ CPU, 8 GB of RAM and an NVIDIA GTX 960M GPU with 4 GB of memory.

7.2 Enhancement results

We compared the enhancement results of PEN with contextual filtering methods [8, 9, 16, 17, 19, 21, 35, 44], since these have been the most popular methods for recovering palmprint ridge structure. Figure 11 shows a variety of regions extracted from different palmprints; the performance of PEN is robust to the quality of the input patches. Figure 12 shows the conversion of a complete palmprint to its enhanced version. Figures 11 and 12 highlight the adaptability of PEN in high-curvature areas of palmprints, which are challenging for any enhancement algorithm. Figure 12 also shows that the hypothenar region gives the best results for enhancement and subsequent minutiae extraction, owing to its smooth ridge orientation and fewer creases. A comparison between PEN and state of the art contextual filtering methods on selected poor quality patches is drawn in Fig. 13, where PEN is better at maintaining ridge consistency than the contextual filtering methods.

Enhancement comparison between PEN and contextual filtering: (a) original palm patch, (b) enhancement by contextual filtering, (c) enhancement by PEN. Contextual filters applied in small local areas containing creases (indicated by red circles) pick up contextual information from the creases and end up enhancing creases instead of ridges, whereas PEN enhances the ridge structure and suppresses creases.

7.3 Matching results

To further verify the enhancement performance of PEN, we also evaluated palmprint matching results. A minutiae-based palmprint matching scheme was used, where minutiae are ridge endings or ridge bifurcations (Fig. 1). We used a recently published two-stage minutiae-based matching technique [19], with the difference that palmprints are enhanced by PEN instead of by contextual filtering. Without optimizing the post-enhancement steps or the matching algorithm, we achieved strong matching results, which strengthens confidence in PEN. Minutiae are extracted from the enhanced palmprint produced by PEN after binarization and thinning [35], and spurious minutiae are removed in post-processing.
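The text does not specify how minutiae are read off the thinned image. A widely used option, described in fingerprint handbooks such as [35], is the crossing number: half the number of 0/1 transitions around a skeleton pixel's eight neighbours, where 1 marks a ridge ending and 3 a bifurcation. The sketch below assumes that method and a one-pixel-wide binary skeleton stored as a 2D list (1 = ridge); spurious-minutiae removal is not included.

```python
def crossing_number(skel, r, c):
    """Crossing number of skeleton pixel (r, c): half the 0/1 transitions around its 8 neighbours."""
    # Neighbours in circular order: E, NE, N, NW, W, SW, S, SE
    offsets = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]
    p = [skel[r + dr][c + dc] for dr, dc in offsets]
    return sum(abs(p[k] - p[(k + 1) % 8]) for k in range(8)) // 2


def find_minutiae(skel):
    """Return (row, col, kind) for ridge endings (CN == 1) and bifurcations (CN == 3)."""
    found = []
    for r in range(1, len(skel) - 1):
        for c in range(1, len(skel[0]) - 1):
            if skel[r][c] != 1:
                continue
            cn = crossing_number(skel, r, c)
            if cn == 1:
                found.append((r, c, "ending"))
            elif cn == 3:
                found.append((r, c, "bifurcation"))
    return found
```

On a T-shaped skeleton this reports three ridge endings and one bifurcation at the junction; ordinary ridge pixels have a crossing number of 2 and are skipped.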

Valid minutiae extracted from each palmprint are represented as Minlist = [m1, m2, …, mN]. Each minutia mj is a triplet, mj = [xj, yj, θj], where j = 1, 2, …, N, (xj, yj) are the coordinates of the minutia and θj is the ridge orientation at the minutia point with respect to the x-axis. Each minutia is then encoded based on its n = 10 nearest neighbouring minutiae, and the encoding of all minutiae in a palmprint is stored as its PalmCode. During matching, the PalmCode of the input (query) palmprint is matched with every other PalmCode in the database. Matching occurs in two stages: local and global. At the local stage, each of the M minutiae in the query palmprint is matched with each of the N minutiae in the candidate palmprint. For a minutia pair to match, at least thn of their neighbouring minutiae must match; thn is set to 5, i.e., at least 5 of the 10 neighbours of both minutiae must match. A similarity score is calculated for every minutia pair in this way. Minutiae matched at the local stage are further processed at the global stage, while unmatched minutiae are discarded. At the global stage, each minutia is re-encoded with respect to its 20 nearest neighbours, and the similarity score of these minutiae is calculated as in the local stage to give the final matching score of the palmprints.
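The local stage can be sketched as follows. This is not the PalmCode of [19]: it is a hypothetical neighbour encoding (distance to each neighbour, direction to it relative to the minutia's orientation, and orientation difference) chosen because it is invariant to rotation and translation. The tolerances `d_tol = 8` pixels and `a_tol = 15°` are illustrative assumptions; n = 10 and thn = 5 follow the text, and angles are taken in radians over a full 2π range.

```python
import math


def encode(minutiae, j, n=10):
    """Encode minutia j by its n nearest neighbours as (distance, direction, orientation difference).
    Angles are relative to minutia j's own orientation, so the code is rotation invariant."""
    xj, yj, tj = minutiae[j]
    others = [m for k, m in enumerate(minutiae) if k != j]
    others.sort(key=lambda m: math.hypot(m[0] - xj, m[1] - yj))
    code = []
    for x, y, t in others[:n]:
        d = math.hypot(x - xj, y - yj)
        phi = (math.atan2(y - yj, x - xj) - tj) % (2 * math.pi)
        dtheta = (t - tj) % (2 * math.pi)
        code.append((d, phi, dtheta))
    return code


def ang_diff(a, b):
    """Smallest absolute difference between two angles in radians."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def neighbours_matched(code_a, code_b, d_tol=8.0, a_tol=math.radians(15)):
    """Greedily count neighbours of code_a that find an unused partner in code_b within tolerance."""
    used, count = set(), 0
    for da, pa, ta in code_a:
        for k, (db, pb, tb) in enumerate(code_b):
            if k in used:
                continue
            if abs(da - db) <= d_tol and ang_diff(pa, pb) <= a_tol and ang_diff(ta, tb) <= a_tol:
                used.add(k)
                count += 1
                break
    return count


def minutiae_match(code_a, code_b, thn=5):
    """A minutia pair matches when at least thn neighbours agree."""
    return neighbours_matched(code_a, code_b) >= thn
```

Because every component of the code is measured relative to the central minutia, rotating and translating a whole palmprint leaves the codes unchanged, so corresponding minutiae still match.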

Equal Error Rate (EER) is used to assess the accuracy of the matching results. EER is obtained by computing the False Match Rate (FMR) and the False Non-Match Rate (FNMR); it is the error rate at the threshold where FMR and FNMR are equal. FNMR is determined from genuine matches, obtained by comparing each palmprint sample of a subject's hand with the other samples of the same hand. The total number of genuine matches is ((8 × 7)/2) × 80 × 2 = 4480. FMR is determined from impostor matches, obtained by comparing the first palmprint sample of each hand of a subject with the first sample of the same hand of the remaining subjects. The total number of impostor matches is ((80 × 79)/2) × 2 = 6320.
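The comparison counts follow from counting unordered pairs, as this short check shows. The function names are illustrative; the defaults are the THUPALMLAB figures given above (80 subjects, 2 hands, 8 impressions per hand).

```python
from math import comb


def genuine_pairs(subjects=80, hands=2, impressions=8):
    """Every unordered pair of impressions of the same hand: C(8, 2) = 28 per hand."""
    return comb(impressions, 2) * subjects * hands


def impostor_pairs(subjects=80, hands=2):
    """First impression of a hand against the first impression of the same hand of every other subject."""
    return comb(subjects, 2) * hands
```

`genuine_pairs()` gives 28 × 160 = 4480 and `impostor_pairs()` gives 3160 × 2 = 6320, matching the totals in the text.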

Results of the matching algorithm on palmprints enhanced by PEN are shown in Table 2. Without any optimization of the minutiae encoding and matching algorithm for deep learning based enhancement, we achieved an EER of 0.15 (see PEN in Table 2), which is very low. Inspection of the matching scores revealed that palmprints performing poorly in the matching stage shared some common properties. Three properties were chosen to identify poorly performing images: the number of valid minutiae in a palmprint (MinValid), the mean ridge curvature of the complete palmprint (CurvMean), and the mean of the local curvatures in a 15 × 15 window around each minutia (LocCurvMean). The average of these three attributes was calculated for each palmprint to depict its quality, i.e.,

$$\mathrm{PalmQuality} = \frac{\mathrm{MinValid} + \mathrm{CurvMean} + \mathrm{LocCurvMean}}{3}$$

PalmQuality was calculated for each palmprint and the results were analysed. In our implementation, PalmQuality varied from 0.40 to 1.32, and the palmprints that most severely affected the EER had the lowest PalmQuality values. During the matching stage, thn was therefore lowered from 5 to 4 for palmprints with PalmQuality below 0.45. Incorporating this simple adaptation lowered the overall EER to 0.13 (see PENadapt in Table 2), which is lower than most state-of-the-art methods, without any improvement to the minutiae encoding and matching algorithm. Furthermore, dropping the 10 palmprints with the lowest PalmQuality from the matching stage as a pre-processing step reduced the EER to 0.06 (see PENpreproc in Table 2).
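The adaptive rule can be sketched as below. The cutoff of 0.45, the relaxed threshold thn = 4 and the removal of the 10 lowest-quality palmprints come from the text. How the three attributes are normalised is not stated (a raw minutiae count could not yield values between 0.40 and 1.32), so this sketch assumes they are already normalised to comparable scales; `palm_quality`, `local_threshold` and `drop_lowest` are hypothetical names.

```python
def palm_quality(min_valid, curv_mean, loc_curv_mean):
    """Average of the three quality attributes (assumed already normalised to comparable scales)."""
    return (min_valid + curv_mean + loc_curv_mean) / 3.0


def local_threshold(quality, cutoff=0.45, default_thn=5, relaxed_thn=4):
    """Relax the local-stage neighbour threshold thn for low-quality palmprints."""
    return relaxed_thn if quality < cutoff else default_thn


def drop_lowest(qualities, k=10):
    """Ids of palmprints kept after discarding the k with the lowest PalmQuality.
    qualities maps palmprint id -> PalmQuality."""
    ranked = sorted(qualities, key=qualities.get)
    return set(ranked[k:])
```

A palmprint at the bottom of the observed range (0.40) is matched with thn = 4, while one at the top (1.32) keeps thn = 5.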

These EER results suggest that, combined with superior minutiae encoding and matching techniques [22] and with pre-processing such as [15] to limit the feature search space to good quality areas, the proposed enhancement scheme can provide a foundation for strong minutiae matching results. To further verify the results, FNMR values at different FMR thresholds were calculated and compared with other state of the art methods. The results, presented in Table 3, show that the proposed method performs favourably in comparison to the state of the art.

The Detection Error Trade-off (DET) graph is an alternative to EER: it plots FNMR against FMR on logarithmic axes over all decision thresholds. A DET graph comparing PEN with other enhancement schemes that use similar minutiae encoding and matching algorithms is presented in Fig. 14. PEN gives lower FNMR values than the other enhancement schemes, which attests to the quality and reliability of the features extracted after the proposed enhancement.
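Both the FNMR values at fixed FMR operating points (Table 3) and the DET curve (Fig. 14) come from the same threshold sweep. The sketch below assumes higher scores mean more similar palmprints; `det_points` and `fnmr_at_fmr` are illustrative names, and the points would still need a logarithmic transform (with zero rates excluded) before plotting.

```python
def det_points(genuine, impostor):
    """(FMR, FNMR) pairs for every observed score used as the acceptance threshold."""
    pts = []
    for t in sorted(set(genuine) | set(impostor)):
        fmr = sum(s >= t for s in impostor) / len(impostor)
        fnmr = sum(s < t for s in genuine) / len(genuine)
        pts.append((fmr, fnmr))
    return pts


def fnmr_at_fmr(genuine, impostor, target_fmr):
    """Lowest FNMR among thresholds whose FMR does not exceed target_fmr (1.0 if none qualifies)."""
    feasible = [fnmr for fmr, fnmr in det_points(genuine, impostor) if fmr <= target_fmr]
    return min(feasible) if feasible else 1.0
```

Tightening the FMR target can only keep or raise the reported FNMR, which is the trade-off a DET curve visualises.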

As another test of matching accuracy, the rank-1 identification rate was calculated and compared with the state of the art. To calculate it, each palmprint is compared with at least two palmprints of each hand of all 80 subjects in the THUPALMLAB database. Table 4 shows that our enhancement method extracts good quality features that attain a high rank-1 identification rate.
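Rank-1 identification counts a probe as correct when its single best-scoring gallery entry belongs to the same hand. The sketch assumes a probe-by-gallery similarity matrix where higher means more similar; the matrix layout and the name `rank1_rate` are hypothetical, while the gallery composition (at least two impressions per hand) is described in the text.

```python
def rank1_rate(scores, probe_ids, gallery_ids):
    """Fraction of probes whose highest-scoring gallery entry has the same identity.
    scores[p][g] is the similarity of probe p to gallery entry g."""
    hits = 0
    for p, row in enumerate(scores):
        best = max(range(len(row)), key=row.__getitem__)
        hits += gallery_ids[best] == probe_ids[p]
    return hits / len(scores)
```

For example, with three probes of which two rank their own hand first, the rate is 2/3.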

8 Discussion

8.1 Analysis of Rnet architecture

Traditionally in CNNs, the number of kernels increases and kernel size decreases in deeper layers. Initial layers act like Gabor filters and extract low-level features such as edges and blobs, which can be represented with a small number of kernels. Deeper layers, on the other hand, are trained to extract various high-level features that need a large number of kernels for correct representation. For our low-level image enhancement problem, however, this proves counter-productive. We observed that a large number of kernels in deeper layers makes Rnet more receptive to complex structures in the palmprint, including creases and other kinds of noise. This amounts to enhancing noise rather than the original ridge pattern, which is exactly what we want to avoid. Hence, the number of kernels in Rnet is gradually decreased in every subsequent layer to keep the focus on low-level features only, i.e., ridge lines. Further details of the Rnet architecture are listed in Table 5.

Extensive tests were performed to optimize the performance of Rnet. The following hyper-parameters were varied to arrive at the best results:

- Number of convolutional layers: varied from 3 to 6. Architectures with 4 to 5 convolutional layers showed promising results.
- Size of kernels: different kernel sizes were employed in different layers to improve enhancement results.
- Training optimization algorithms: training was conducted using the SGD [30], RMSProp [46] and Adam [28] optimization algorithms.
- Activation layers: the performance of ReLU and Leaky-ReLU was compared.
- Pooling layers: pooling layers were not used, as they reduce the spatial resolution of the feature maps, which is undesirable in pixel-to-pixel image learning problems.
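The effect of pooling on spatial resolution follows from the standard output-size formula for convolution and pooling layers, floor((n + 2p − k) / s) + 1. The layer settings used below are hypothetical examples, not Rnet's actual configuration from Table 5.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_sizes(n, layers):
    """Sizes after each layer; layers is a list of (kernel, stride, padding) tuples."""
    sizes = [n]
    for k, s, p in layers:
        n = conv_output_size(n, k, s, p)
        sizes.append(n)
    return sizes
```

A 96 × 96 patch keeps its size through "same"-padded stride-1 convolutions (for example a 9 × 9 kernel with padding 4), but a single 2 × 2 pooling layer with stride 2 halves it to 48 × 48, so a pixel-to-pixel network would then need upsampling to recover the input grid.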

During experiments, different variations of the Rnet architecture were tested. The architecture illustrated in Fig. 8 was empirically chosen as the best performing one after extensive testing. Details of the tested Rnet architectures and the corresponding matching results are given in Table 6. The EER results in Table 6 show that increasing the number of convolutional layers and kernels deteriorates EER. This is because palmprint enhancement is a low-level image enhancement problem: deeper and more complex architectures end up extracting high-level structures in the image, such as creases and noise, which undermine the ridge pattern of the palmprint. Keeping up to 4 convolutional layers and gradually reducing the number and size of kernels in the 2nd and 3rd layers gives the best results.

Figure 15 shows the training curves of Rnet and its variations. Although the variations show training curves similar to that of Rnet, their performance in the matching stage was below par.

When comparing training optimization algorithms, SGD was found to be the best suited to our problem. A comparison of SGD, Adam and RMSProp during training is illustrated in Fig. 16.

8.2 Comparison of proposed Rnet with recent deep learning paradigms

In the recent past, CNNs have seen remarkable success in image-to-image regression, which has been applied extensively in image processing fields such as image restoration, image denoising and image segmentation [4, 36,37,38, 41]. Deep encoder-decoder architectures consisting of symmetric convolutional-deconvolutional layers have been a popular choice for learning end-to-end mappings between corrupted and clean images. U-net [41] is an advanced encoder-decoder architecture with additional connections between the encoder and decoder parts. It was first proposed for biomedical image segmentation but has been highly successful in other image processing problems too. The residual network (ResNet) [20] is another remarkably successful deep learning framework, which mitigates the problem of training very deep networks by using residual blocks. The main concept of a residual block is that the input to a small group of layers is not only passed through those layers but also added directly to their output. This shortcut is called a “skip connection”. Skip connections also let gradients flow without interruption during training, mitigating the vanishing gradient problem in deep networks.
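The gradient argument for skip connections can be written out in two lines. Following the residual formulation of [20], a block with input x, residual branch F and weights {W_i} computes the first line below; ignoring the activation applied after the addition, the chain rule then gives the second line, where I is the identity:

$$\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}$$

$$\frac{\partial \mathcal{L}}{\partial \mathbf{x}} = \frac{\partial \mathcal{L}}{\partial \mathbf{y}}\left(\frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I\right)$$

Even when the residual branch's Jacobian becomes very small, the identity term still passes the gradient from y back to x unchanged, which is why very deep residual networks remain trainable.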

Although these architectures are immensely popular, to the best of our knowledge they have not been used for the specific problem of palmprint enhancement. In this section we compare the palmprint enhancement performance of the proposed Rnet architecture with U-net and ResNet. U-net was originally designed for image segmentation and has been adapted here for image-to-image regression. For ResNet, we used ResNet-18 with the modifications necessary for image-to-image regression, since it is originally a deep image classification network.

Figure 17 illustrates thinned versions of a palm patch enhanced by Rnet, U-net and ResNet. Rnet maintains ridge continuity, while the very deep U-net and ResNet models create artefacts during enhancement that appear as anomalies in the thinned images (red circles in Fig. 17). These anomalies act as false minutiae and deteriorate matching accuracy. This is because the deeper layers and large number of convolutional kernels in very deep CNN architectures tend to learn complex structures in the image and end up creating false structures in the enhanced image.

Table 7 presents two further comparisons between the proposed Rnet, U-net and ResNet. First, it compares the number of trainable parameters in the three networks; training time and complexity are directly linked to this number. Second, it compares the matching accuracy achieved with features extracted after enhancement by each network. Rnet performs favourably in comparison to the other two: features extracted after enhancement by Rnet give superior matching accuracy. Also, owing to its very simple architecture, Rnet has far fewer trainable parameters, requiring much less training time and offering lower complexity than U-net and ResNet.
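The dependence of model size on depth and width can be illustrated by counting convolution parameters: a layer with a k × k kernel, c_in input channels and c_out output channels has k·k·c_in·c_out weights plus c_out biases. The four-layer configuration used in the example below is hypothetical and only mimics the decreasing-width idea; Rnet's actual layer sizes are those in Table 5.

```python
def conv_params(kernel, c_in, c_out, bias=True):
    """Trainable parameters of a 2D convolution: kernel*kernel*c_in*c_out weights plus c_out biases."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def total_params(layers, c_in=1):
    """Sum over a stack of convolutions; layers is a list of (kernel_size, out_channels)."""
    total = 0
    for k, c_out in layers:
        total += conv_params(k, c_in, c_out)
        c_in = c_out  # the next layer consumes this layer's output channels
    return total
```

For a hypothetical greyscale stack `[(9, 64), (5, 32), (3, 16), (3, 1)]` the count is 61,249. Because each layer's weights scale with the product of its input and output widths, doubling both widths roughly quadruples that layer's weights, which is why wide, deep encoder-decoder models grow so quickly.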

Both Fig. 17 and Table 7 reiterate that deeper convolutional networks, although very successful for complex image mappings, are not suited to the low-level palmprint enhancement problem. As further evidence, the proposed Rnet architecture with 4 convolutional layers is compared with Rnet-var4 (Section 8.1), which has 5 convolutional layers; results are illustrated in Fig. 18. Increasing the number of convolutional layers deteriorates ridge continuity, as shown by the red circles in Fig. 18b.

8.3 Impact of Cnet on performance of Rnet

To simplify design and training, Rnet is trained on palm patches of a specific ridge orientation. Patches with other orientations are rotated to align with Rnet before being passed through it. This requires estimating the dominant ridge orientation in a patch. During experiments, a gradient-based method was used at each point (x, y) to provide the ridge orientation in a 96 × 96 patch. Since ridge orientation is not constant within the patch, the dominant orientation was estimated using two statistical measures separately, namely, mean and mode. However, extensive trials showed that estimating the dominant ridge orientation with these statistics did not give optimal results. Trials were therefore conducted to predict the dominant orientation of a patch using a classification CNN (Cnet). The matching results of palmprints enhanced by Rnet were better when Rnet was aided by Cnet. EER results with and without Cnet are given in Table 8.

The process of training dataset creation and training is explained in Section 5.2. Various architectures were trained using transfer learning to find the best results, which are presented in Table 9. Owing to its superior accuracy and relatively low prediction time, AlexNet was chosen as the best candidate for Cnet in PEN.
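For comparison with Cnet, the gradient-based baseline can be sketched with a common estimator (a least-squares, doubled-angle average of pixel gradients, as used in fingerprint enhancement such as [21]); the paper does not give its exact formulation, so this is an assumption. The sketch also shows one pitfall of a plain arithmetic mean: ridge orientations wrap around at 180°, so averaging 5° and 175° gives 90° although both are nearly horizontal, whereas averaging doubled angles gives about 0°. Quantisation into 12 bins of 15° follows the 12 orientation classes of Cnet; the bin layout is hypothetical, and image rows are assumed to increase downwards.

```python
import math


def dominant_orientation(img):
    """Dominant ridge orientation in degrees [0, 180) of a 2D list, from central-difference gradients.
    Gradients are combined by doubled-angle (structure tensor) averaging to avoid 0/180 wrap-around."""
    gxx = gyy = gxy = 0.0
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            gx = (img[r][c + 1] - img[r][c - 1]) / 2.0
            gy = (img[r + 1][c] - img[r - 1][c]) / 2.0
            gxx += gx * gx
            gyy += gy * gy
            gxy += gx * gy
    grad_dir = 0.5 * math.atan2(2.0 * gxy, gxx - gyy)   # dominant gradient direction
    return math.degrees(grad_dir + math.pi / 2) % 180.0  # ridges run perpendicular to it


def mean_orientation(angles_deg):
    """Circular mean of orientations with period 180 degrees, computed on doubled angles."""
    s = sum(math.sin(math.radians(2 * a)) for a in angles_deg)
    c = sum(math.cos(math.radians(2 * a)) for a in angles_deg)
    return math.degrees(math.atan2(s, c) / 2) % 180.0


def orientation_class(deg, n_classes=12):
    """Quantise an orientation into n_classes bins centred on multiples of 180 / n_classes."""
    width = 180.0 / n_classes
    return int(((deg + width / 2) % 180.0) // width)
```

On synthetic vertical stripes the estimator returns 90° (class 6) and on horizontal stripes 0° (class 0); real palm patches with creases and curved ridges are much less clear-cut, which is consistent with the text's observation that such statistics were not optimal.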

9 Conclusion and future work

In this paper, a novel, efficient and robust high resolution palmprint enhancement method was proposed that bypasses traditional enhancement methods completely using deep learning. Both Cnet and Rnet are trained on intuitively created datasets. Previously published palmprint enhancement methods are borrowed from fingerprint enhancement schemes, which do not cater for the large number of major and minor creases found in palmprints. These methods rely on estimating local ridge orientation and frequency to apply the relevant enhancement filters. In this paper we present a deep learning based palmprint enhancement method that converts a palm patch directly from the spatial domain to its corresponding enhanced version. Furthermore, the proposed method works on sufficiently large palmprint patches, which reduces overall processing time.

The palmprint is split into 96 × 96 patches, which Cnet classifies by dominant ridge orientation. The base architecture of Cnet is AlexNet; its last three layers are replaced by a fully connected layer, a softmax layer and a classification output layer to classify between 12 discrete ridge orientations. After prediction by Cnet, Rnet produces the corresponding enhanced versions of the patches, which are then joined to produce the complete enhanced palmprint. Rnet is an image-to-image regression CNN that we designed and trained from scratch. It directly converts a patch to its enhanced version without needing any ridge orientation or frequency estimate, and it has sufficient depth and kernels to accommodate the difficult ridge patterns found in palmprints due to abruptly changing ridge orientation and various types of noise.

Our work was focused primarily on the enhancement of high resolution palmprints. In future, we intend to couple the proposed enhancement method with superior minutiae encoding schemes such as the Minutia Cylinder-Code and to develop a robust minutiae matching algorithm that is both fast and efficient. We also intend to use GANs to reconstruct corrupted images prior to feature enhancement for better matching accuracy. Lastly, we intend to use Graphics Processing Units (GPUs) to speed up the compute-intensive and time-consuming palmprint matching process.

Data availability

The datasets generated during the current study are available from the corresponding author on reasonable request.

References

Ahmad MI, Woo WL, Dlay S (2016) Nonstationary feature fusion of face and palmprint multimodal biometrics. Neurocomputing 177:49–61

Ahmadi M, Soleimani H (2019) Palmprint image registration using convolutional neural networks and Hough transform. arXiv preprint arXiv:1904.00579

Attia A, Mazaa S, Akhtar Z et al (2022) Deep learning-driven palmprint and finger knuckle pattern-based multimodal person recognition system. Multimed Tools Appl 81:10961–10980

Badrinarayanan V, Kendall A, Cipolla R (2015) SegNet: A deep convolutional encoder-decoder architecture for image segmentation. arXiv preprint arXiv:1511.00561

Barra S et al (2019) F-FID: fast fuzzy-based iris de-noising for mobile security applications. Multimed Tools Appl 78(10):14045–14065

Burgos-Artizzu XP, Perona P, Dollár P (2013) Robust face landmark estimation under occlusion. In: Proceedings of the IEEE international conference on computer vision, pp 1513–1520

Cao K, Jain AK (2015) Latent orientation field estimation via convolutional neural network. In: International conference on biometrics. IEEE, pp 349–356

Cappelli R, Ferrara M, Maio D (2012) A fast and accurate palmprint recognition system based on minutiae. IEEE Trans Syst Man Cybern Part B (Cybern) 42(3):956–962

Chikkerur S, Cartwright AN, Govindaraju V (2007) Fingerprint enhancement using STFT analysis. Pattern Recogn 40(1):198–211

Chou CR, Frederick B, Mageras G, Chang S, Pizer S (2013) 2D/3D image registration using regression learning. Comput Vis Image Underst 117(9):1095–1106

Dai J, Zhou J (2010) Multifeature-based high-resolution palmprint recognition. IEEE Trans Pattern Anal Mach Intell 33(5):945–957

Dai J, Feng J, Zhou J (2012) Robust and efficient ridge-based palmprint matching. IEEE Trans Pattern Anal Mach Intell 34(8):1618–1632

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition, pp 248–255

Fahmy MF, Thabet MA (2013) A fingerprint segmentation technique based on morphological processing. In: IEEE International Symposium on Signal Processing and Information Technology. IEEE, pp 000215–000220

Fanchang H et al (2020) Local image quality measurement for multi-scale forensic palmprints. Multimed Tools Appl 79(19):12915–12938

Ghafoor M, Taj IA, Ahmad W, Jafri NM (2014) Efficient 2-fold contextual filtering approach for fingerprint enhancement. IET Image Process 8(7):417–425

Ghafoor M, Taj IA, Jafri NM (2016) Fingerprint frequency normalisation and enhancement using two-dimensional short-time Fourier transform analysis. IET Comput Vis 10(8):806–816

Ghafoor M et al (2019) Fingerprint identification with shallow multifeature view classifier. IEEE Trans Cybern 51(9):4515–4527

Ghafoor M, Tariq SA, Taj IA, Jafri NM, Zia T (2020) Robust palmprint identification using efficient enhancement and two-stage matching technique. IET Image Process 14(11):2333–2342

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Hong L, Wan Y, Jain A (1998) Fingerprint image enhancement: algorithm and performance evaluation. IEEE Trans Pattern Anal Mach Intell 20(8):777–789

Hussein IS, Sahibuddin SB, Nordin MJ, Sjarif NNBA (2020) Multimodal recognition system based on high-resolution palmprints. IEEE Access 8:56113–56123

Iandola FN, Han S, Moskewicz MW, Ashraf K, Dally WJ, Keutzer K (2016) SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and < 0.5 MB model size. arXiv preprint arXiv:1602.07360

Jain AK, Feng J (2008) Latent palmprint matching. IEEE Trans Pattern Anal Mach Intell 31(6):1032–1047

Jia Y, Shelhamer E, Donahue J, Karayev S, Long J, Girshick R, ... Darrell T (2014) Caffe: Convolutional architecture for fast feature embedding. In: Proceedings of the 22nd ACM international conference on Multimedia, pp 675–678

Justina A (2022) Biometric authentication and identification market revenue worldwide in 2019 and 2027. https://www.statista.com/statistics/1012215/worldwide-biometric-authentication-and-identification-market-value/. Accessed 1 March 2022

Khodadoust J, Medina-Pérez MA, Loyola-González O, Monroy R, Khodadoust AM (2022) A secure and robust indexing algorithm for distorted fingerprints and latent palmprints. Expert Syst Appl 206:117806

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Li J, Feng J, Kuo CCJ (2018) Deep convolutional neural network for latent fingerprint enhancement. Signal Process Image Commun 60:52–63

Li H, Qiu J, Teoh ABJ (2020) Palmprint template protection scheme based on randomized cuckoo hashing and MinHash. Multimed Tools Appl 79:11947–11971

Liu B, Feng J (2021) Palmprint orientation field recovery via attention-based generative adversarial network. Neurocomputing 438:1–13

Liu X, Liang W, Wang Y, Li S, Pei M (2016) 3D head pose estimation with convolutional neural network trained on synthetic images. In: 2016 IEEE international conference on image processing (ICIP). IEEE, pp 1289–1293

Maltoni D, Maio D, Jain AK, Prabhakar S (2009) Handbook of fingerprint recognition (Vol. 2). Springer, London

Mao XJ, Shen C, Yang YB (2016) Image restoration using convolutional auto-encoders with symmetric skip connections. arXiv preprint arXiv:1606.08921

Mao X, Shen C, Yang YB (2016) Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In: Advances in neural information processing systems, pp 2802–2810

Noh H, Hong S, Han B (2015) Learning deconvolution network for semantic segmentation. In: Proceedings of the IEEE international conference on computer vision

Ratha NK, Chen S, Jain AK (1995) Adaptive flow orientation-based feature extraction in fingerprint images. Pattern Recogn 28(11):1657–1672

Researchnester (2022) Palm recognition biometric market segmentation by type. https://www.researchnester.com/reports/palm-recognition-biometrics-market/2865. Accessed 1 Mar 2022

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III. Springer International Publishing, pp 234–241

Soleimani H, Ahmadi M (2018) Fast and efficient minutia-based palmprint matching. IET Biom 7(6):573–580

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, ... Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Tariq SA, Iqbal S, Ghafoor M, Taj IA, Jafri NM, Razzaq S, Zia T (2019) Massively parallel palmprint identification system using GPU. Clust Comput:1–16

THUPALMLAB (2012) http://ivg.au.tsinghua.edu.cn/dataset/THUPALMLAB.php. Accessed 7 Feb 2022

Tieleman T, Hinton G (2012) Lecture 6.5-rmsprop: divide the gradient by a running average of its recent magnitude. COURSERA Neural Netw Mach Learn 4(2):26–31

Toshev A, Szegedy C (2014) DeepPose: Human pose estimation via deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1653–1660

Wilson CL, Candela GT, Watson CI (1994) Neural network fingerprint classification. Journal of Artificial Neural Networks 1(2):203–228

Wong WJ, Lai S-H (2020) Multi-task CNN for restoring corrupted fingerprint images. Pattern Recogn 101:107203

Wyzykowski ABV, Segundo MP, de Paula Lemes R (2021) Level three synthetic fingerprint generation. In: 2020 25th International Conference on Pattern Recognition (ICPR). IEEE, pp 9250–9257

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mehmood, A.B., Taj, I.A. & Ghafoor, M. Palmprint enhancement network (PEN) for robust identification. Multimed Tools Appl 83, 14449–14476 (2024). https://doi.org/10.1007/s11042-023-16043-z

DOI: https://doi.org/10.1007/s11042-023-16043-z