Abstract

Histogram Equalization (HE) is one of the most popular techniques for this purpose. Most histogram equalization techniques, including Contrast Limited Adaptive Histogram Equalization (CLAHE) and Local Contrast Modification CLAHE (LCM CLAHE), use a fixed block size for feature enhancement. As a result, these state of the art techniques tend to give poor denoising performance after feature enhancement. In this paper, a new deep learning based approach, the Dynamic Block Size Technique (DBST), is used to improve image denoising. We evaluate it on the Categorical Subjective Image Quality (CSIQ) image set, an image database commonly used for image preprocessing. The work is novel in image preprocessing because we classify each image by its features in order to select an appropriate block size dynamically during preprocessing. The experimental results show better performance on the important parameters used to evaluate state of the art techniques. The proposed work outperforms CLAHE and LCM CLAHE in terms of PSNR, MSE, NRMSE, SSIM and entropy, with average values of 18.92, 863.86, 0.25, 0.81 and 19.35, respectively.

1 Introduction

Images help create thoughts, senses, and responses in our society. Whether a video or an image, both convey large amounts of information and are perceived and understood faster than text. They therefore act as essential tools for communicating with society. Images are also used in areas such as medical diagnosis and digital image forensics, to name a few. However, because advanced editing tools and technologies, including those supported by the cloud [2], are available to everyone, the credibility and genuineness of images used in different applications are highly problematic. Nowadays, numerous techniques deal with the originality and analysis of an image or a video, such as forgery detection, feature extraction, clustering and classification, digital forensics, medical image processing, and so on. All these techniques require some preprocessing of images before feature enhancement is applied in order to achieve better performance. Preprocessing commonly refers to operations on images at the lowest level of abstraction. It aims to improve the image data for further processing by suppressing unwanted distortions or enhancing certain image features [39].

Given the above, we propose a new preprocessing technique for reconstructing a quality image from the available original image. In a real world scenario, this preprocessing technique may be helpful whenever it is necessary to critically analyze an image, as in medical image processing, image forensics, and satellite image data analysis.

Histogram equalization (HE) is a preferred technique for preprocessing and enhancing image features. It is a global preprocessing technique that enhances the contrast of the image under analysis, but it sometimes suffers from over enhancement of contrast, which in turn degrades the quality of the image.

Histogram equalization is an image processing method that adjusts contrast using the image's histogram.

It enhances the contrast of an image by redistributing its intensity values, transforming the image's histogram so that the intensities are spread out over a wider range. In many images, the usable data is represented by closely spaced contrast values, which results in low overall contrast. Histogram equalization addresses this issue by stretching the intensity values across the histogram, making the distribution more uniform. This process increases the global contrast, making the darker regions darker and the brighter regions brighter. By spreading out the intensity values, areas of the image that initially had lower local contrast can gain a higher contrast. This can improve visual quality, making details more distinguishable and enhancing overall image appearance. Histogram equalization is widely used in image processing and computer vision applications. However, it may not always produce the desired results, especially when applied to images with specific characteristics or when preserving the original image content is crucial. Various modifications and advanced algorithms have been developed to address these limitations and provide more sophisticated contrast enhancement methods [8].
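The redistribution of intensities described above can be illustrated with a minimal sketch. It assumes an 8-bit grayscale image flattened into a list of integers; the function name `equalize_histogram` is hypothetical and the code is only an illustration of global HE, not the implementation used in this work.

```python
def equalize_histogram(pixels, levels=256):
    """Globally equalize a flat list of integer gray levels in [0, levels - 1]."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution function (CDF) of the gray levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(pixels)
    cdf_min = next(c for c in cdf if c > 0)  # CDF at the darkest level present
    if total == cdf_min:
        return list(pixels)  # constant image: nothing to stretch

    # Look-up table that stretches the occupied range over all gray levels.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
           for c in cdf]
    return [lut[p] for p in pixels]


# Closely spaced gray levels (low contrast) are spread over the full range.
low_contrast = [100, 100, 101, 101, 102, 102, 103, 103]
equalized = equalize_histogram(low_contrast)
print(equalized)  # [0, 0, 85, 85, 170, 170, 255, 255]
```

The four input levels differ by only one gray level each, yet after equalization they occupy the whole range from 0 to 255, which is the global contrast stretch described in the text.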

Adaptive Histogram Equalization (AHE) is an alternative contrast enhancement technique that overcomes the problems of global preprocessing methods such as HE. It is effective for a wide range of images, including natural and medical images, and is particularly well suited to initially nonvisual images such as those obtained through medical imaging techniques. AHE operates automatically and uses all the available contrast in the image data, making it a competitor to the standard contrast enhancement method known as interactive intensity windowing.

In medical imaging, AHE's automatic operation and ability to present all available contrast in the image data make it a compelling alternative to interactive intensity windowing. Observer studies have indicated that for certain image classes, AHE provides local contrast presentation without significant disadvantages in any contrast range compared to intensity windowing. AHE offers advantages such as automatic and reproducible operation, as well as the convenience of requiring the observer to examine only a single image [29].

Block based histogram equalization is a variant of histogram equalization that operates on image blocks or patches instead of the entire image. It aims to improve local contrast enhancement by dividing the image into smaller regions and applying histogram equalization independently to each block. In block-based histogram equalization, the image is divided into non-overlapping blocks of equal size. For each block, a local histogram is computed, representing the distribution of pixel intensities within that block. The histogram equalization algorithm is then applied to each block individually, stretching the intensity values within the local histogram to enhance local contrast. By processing image blocks independently, block based histogram equalization can adapt to local variations in contrast and better preserve details within each block. This approach is particularly useful when an image contains regions with significantly different contrast levels or when global histogram equalization may lead to undesirable effects such as over-enhancement or loss of important details. Block-based histogram equalization can be implemented using various strategies, such as non overlapping square blocks or more sophisticated methods that consider image content and adaptive block sizes. The choice of block size and other parameters depends on the specific image characteristics and the desired enhancement outcome. It's worth noting that while block-based histogram equalization can improve local contrast, it may introduce block artifacts along the boundaries of the blocks, especially when the blocks' sizes are noticeable. Researchers have developed advanced techniques, such as contrast limited adaptive histogram equalization (CLAHE), to mitigate these artifacts and achieve more visually pleasing results. Overall, block-based histogram equalization provides a localized approach to contrast enhancement, enhancing details and preserving local contrast variations within an image [36, 45].
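A minimal sketch of the non-overlapping block scheme described above follows. It assumes an 8-bit grayscale image stored as a list of rows; the helper names `equalize` and `block_equalize` are hypothetical, and no interpolation or contrast limiting is applied, so this is plain block based HE rather than CLAHE.

```python
def equalize(values, levels=256):
    """Histogram-equalize a flat list of gray levels in [0, levels - 1]."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    span = len(values) - cdf_min
    if span == 0:
        return list(values)  # flat block: leave unchanged
    return [round((cdf[v] - cdf_min) * (levels - 1) / span) for v in values]


def block_equalize(image, block):
    """Equalize each non-overlapping block x block tile independently."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            coords = [(r, c)
                      for r in range(r0, min(r0 + block, rows))
                      for c in range(c0, min(c0 + block, cols))]
            tile = equalize([image[r][c] for r, c in coords])
            for (r, c), v in zip(coords, tile):
                out[r][c] = v
    return out


# Left tile is dark, right tile is bright; each 2x2 tile is stretched on its own.
image = [
    [10, 11, 200, 201],
    [12, 13, 202, 203],
]
result = block_equalize(image, block=2)
print(result)  # [[0, 85, 0, 85], [170, 255, 170, 255]]
```

Both tiles end up with the same output values even though one was dark and the other bright. This shows the local contrast gain and, at the same time, why independent blocks can produce visible discontinuities at block boundaries.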

Deep learning has revolutionized various aspects of image processing due to its ability to automatically learn and extract complex features from large amounts of data. Common applications of deep learning in image processing include image classification, object detection, image segmentation, image super-resolution, image denoising, image style transfer, and image captioning.

These are only a few examples of how deep learning is used in image processing, and the techniques continue to advance the field. Deep learning has also gained significant attention in image denoising, although the methods employed for this purpose differ notably from one another [12, 31, 42].

1.1 Motivation

These findings highlight the potential benefits of AHE in medical imaging and other domains where contrast enhancement is crucial. However, the choice of contrast enhancement technique should consider the specific characteristics of the images, the desired outcome, and any domain specific requirements. Different methods may be more appropriate in different scenarios, so it is recommended to evaluate and compare techniques to determine the most suitable approach for a particular application. The basic operation in histogram equalization is a mapping of each pixel of a given image. This mapping is based on the pixels surrounding that pixel (known as its contextual region). Generally, interpolative mapping is used. The value is generated by applying four mappings (HE, AHE, CLAHE, and LCM CLAHE). In these mappings, the image is treated as a mosaic and divided into a grid of equivalent contextual regions (ECR) [29], even though the primary form of AHE is non interpolated. Still, primary AHE suffers from over enhancement of noise and slow speed. To overcome these problems, the authors later suggested interpolated AHE with contextual regions [29].

Furthermore, all four of these HE types use fixed block adaptive processing. CLAHE is a method shown to help assign displayed intensity levels in medical images (S.M. Pizer et al. n.d.). The noise issues of AHE can be reduced by restricting contrast enhancement in nearly uniform areas, which are identified by histogram peaks associated with contextual regions (that is, many pixels falling into the same gray level range). In CLAHE, the slope of the gray level assignment scheme is restricted by limiting the number of pixels allowed in each bin of the local histogram. The clipped pixels are then redistributed equally over the entire histogram so that the total histogram count stays the same [58]. LCM CLAHE, on the other hand, depends on a user defined fixed block size and on a selection feature that checks the quality of the output image iteratively [38]. Some reviews of deep learning for image denoising exist [14], but actual implementations are few. Deep learning for denoising is also available for seismic data [53]. A CNN model has been used to denoise images [11], but the histogram equalization technique is equally important in this process [48]. Overviews of CNNs for image denoising are also available in many research papers [42], but the fusion of HE and deep learning is rarely reported.
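The clipping and redistribution step of CLAHE mentioned above can be sketched as follows. The function name `clip_histogram` and the sample histogram are hypothetical, and the single redistribution pass is a simplifying assumption; practical implementations may repeat the pass or handle the remainder differently.

```python
def clip_histogram(hist, clip_limit):
    """Clip each bin at clip_limit and spread the excess evenly over all bins."""
    excess = sum(max(0, h - clip_limit) for h in hist)
    clipped = [min(h, clip_limit) for h in hist]

    share, remainder = divmod(excess, len(hist))
    # Every bin receives an equal share; the first `remainder` bins get one
    # extra pixel so that the total count is preserved exactly.
    return [h + share + (1 if i < remainder else 0)
            for i, h in enumerate(clipped)]


local_hist = [0, 2, 30, 4, 0, 0, 2, 2]   # one dominant peak in bin 2
limited = clip_histogram(local_hist, clip_limit=10)
print(limited)                            # [3, 5, 13, 7, 2, 2, 4, 4]
print(sum(local_hist), sum(limited))      # 40 40
```

The dominant peak drops from 30 to 13 while the total count is unchanged, so the slope of the resulting mapping in that gray level range is limited. Note that after a single pass a bin can again exceed the clip limit (13 > 10 here).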

In the presented work, we observe that most HE techniques perform poorly because they use a fixed block size during contrast enhancement. To overcome this problem, we propose a new technique that obtains a better denoised image by optimizing the block size in the HE technique. For this purpose, we have modified LCM CLAHE in particular. In this method, each block size is evaluated by using PSNR to assess the reconstructed image.

1.2 Contribution

In this paper, we developed a modified LCM CLAHE [35, 36] that optimizes the block size dynamically before performing subsequent operations. Color images carry different categories of noise, and include very poor quality images, ultrasonic images, and realistic images obtained from unreliable and insecure environments [10]. Many filtering methods exist for removing noise from an image signal. In the proposed work, we developed a new method that enhances image quality by reducing the noise in images before any filtering technique is applied. Absolute Mean Brightness Error (AMBE), Entropy, and Peak Signal to Noise Ratio (PSNR) are the most favoured measures for estimating HE quality [4]. We use PSNR, MSE, NRMSE, SSIM, and Entropy to evaluate our proposed method. These parameters are discussed in detail in Section 4.1. For our experiments, we use the CSIQ Image Database [3], which contains 30 original images with non monotonous features together with different variants of noise, distortion, and compression. The database thus provides hundreds of images for experiments on preprocessing and noise reduction.

The rest of the paper is organized as follows. Section 2 reviews existing state of the art techniques. Section 3 discusses the strategy proposed for improving LCM CLAHE with the help of deep learning based DBST. Section 4 describes the attributes of the strategy proposed in Section 3 and its associated algorithm. Section 5 discusses the implementation, experiments, and results of the proposed strategy. Finally, Section 6 concludes with our future work and plans regarding this subject and methodology.

2 Related work

In one significant study in this area, [35, 36] proposed a method to enhance the features of fingerprint images with a dynamic block size. However, they did not discuss the types of images for which it gives better performance, nor did they highlight any relationship between block size and the features of the image. Furthermore, they did not evaluate the effect of image type on block size selection; as a result, the method does not provide satisfactory feature enhancement for images other than fingerprints. [8, 10] discussed the significance of image enhancement and the proper use of the histogram. [25] proposed fuzzy logic based histogram equalization (FHE), where they compare the proposed method with the noisy images. Hence, it is not clear whether this method is helpful for noisy images.

In the same line of research, other researchers [27, 41] introduced LCM CLAHE, which depends on a user defined block window size and a selection feature that examines the quality of the output image iteratively [18], but does not use PSNR to guide further improvement. [34] distinguished global and local contrast enhancement techniques in their work. [43] presented an approach for constructing a 2-D histogram by dynamic window size selection. In that work, after a user defined block is converted, the active window size and position are used to select block groups for further operations. [15] proposed a contrast enhancement technique using weighted transformation functions. The research showed promising results, performing better than certain non block techniques, but it was not compared against block based techniques. In several research papers [17, 55], key point based techniques that preserve brightness were introduced for enhancing contrast with the help of histogram equalization. Histogram equalization was also used by [16], who changed the feature enhancement concept to depend entirely on the Just Noticeable Difference (JND) technique and overcame shortcomings of HE. The importance of image preprocessing, with histogram techniques among the most important, is discussed by [2] for better investigation of copy move forgery. [54] designed a histogram based method for denoising images that balances over enhancement against over smoothing of textures while controlling quality. Researchers such as [40, 44, 50] effectively used block based HE in various proposals for feature enhancement and denoising of images.

Continuing the study of related work, [26] surveyed the state of the art (SOTA) in brain MRI denoising techniques and reported that, among the hybrid image denoising techniques, the combination of Gaussian based undecimated NLM and Principal Component Analysis (UNLM-PCA) is the most promising. For a better understanding of ML techniques in classification, text sequencing, and denoising, [21, 37] described the use of recurrent networks and neural networks (NN).

As discussed above, existing block based HE methods do not consider image features when choosing block sizes, even when the block size is user defined or dynamic, and therefore do not achieve maximum feature enhancement, as reflected in low PSNR. The present paper introduces a new LCM CLAHE that relies on DBST and denoises color images with the help of a deep learning approach.

2.1 Assessment of related work

| S.No. | Reference | Methods | Cons and Pros |
|---|---|---|---|
| 1 | [38] | Modified Contrast Limited Adaptive Histogram Equalization based on Local Contrast Enhancement | Cons: Image variants remain unchecked. Pros: Better contrast enhancement and information preservation. |
| 2 | [4] | Image quality measuring method using HE based contrast enhancement techniques | Cons: Not suitable for real time processing due to high time complexity. Pros: Good correlation with human visual perception (HVP). |
| 3 | [25] | Fuzzy Logic Based Histogram Equalization for Image Contrast Enhancement | Cons: Suitable only for consumer electronic products. Pros: The visual enhancement results of the proposed FHE algorithm were better. |
| 4 | [17] | Contrast Enhancement for Cephalometric Images using Wavelet based Modified Adaptive Histogram Equalization | Cons: The time complexity of wavelet-based methods is remarkably high. Pros: Best contrast enhancement between skeletal structure and the background and also between the soft tissue and background. |
| 5 | [48] | Histogram equalization combined with dark detail enhancement algorithm | Cons: Image evaluation and selection procedures are possible only after using image information entropy metrics. Pros: Makes it easier to observe the metal corrosion area and reduces the difficulty of research on metal products affected by corrosion. |
| 6 | [7] | Multilevel edge features guided network (MLEFGN) | Cons: Not effective for high noise images. Pros: MLEFGN achieves competitive results on AWGN and real noisy image denoising tasks. |
| 7 | [21] | Unpaired deep learning methods | Cons: Only an investigation of denoising methods. Pros: Uses a lightweight network and achieves the best denoising performance. |
| 8 | [5] | Wavelet transform | Cons: Investigated only on UAV videos. Pros: The results showed that the extracted trajectories were reasonably close to the ground truth data from manual identifications. |
| 9 | [47] | 3-D quasi recurrent neural network for hyperspectral image (HSI) denoising | Cons: Investigated only on hyperspectral images. Pros: Achieves better performance and faster speed. |
| 10 | [51] | Regularization deep cascade broad learning system (DCBLS) architecture | Cons: Does not deal with blind images. Pros: Also works with natural images. |
| 11 | [57] | Generative adversarial network (GAN) | Cons: Performed on a self-created data set. Pros: |
| 12 | [13] | Flexible Hysteresis Smoothing (FHS) | Cons: Relatively independent of noise distribution but does not cover a wide range of noise types and needs some parameters modified for adaptability. Pros: The method can directly learn the physical parameters from data and recover clean images from hazy ones in an end-to-end manner. |
| 13 | [33] | A hybrid model of learning based and reconstruction based methods | Cons: The method is slow in computation. Pros: Can exploit both deep CNN and the knowledge of the observation model. |
| 14 | [6] | ML techniques to predict the threshold of the VisuShrink denoising technique | Cons: Self-estimation of the VisuShrink threshold is not done. Pros: Efficient framework in terms of different noises. |
| 15 | [46] | Unsupervised random denoising method | Cons: Limited to seismic data. Pros: Can provide some performance guarantees and an insight into the denoising system. |
| 16 | [58] | Convolutional Sparse Coding from a Multi-Scale Perspective | Cons: The effect of scale number on the denoising performance still needs investigation. Pros: The proposed MCSC Net significantly advances the denoising performance, with an average PSNR improvement of 0.32 dB over the state-of-the-art (SOTA) CSC based method. |
| 17 | [24] | Similarity-Informed Self-Learning | Cons: Not applied to other types of images, limited to seismic images. Pros: Effective and robust for seismic image denoising. |
| 18 | [23] | Noise-sample to noise-sample (NS2NS) | Cons: NS2NS cannot accurately recover the original ground truth unless the mean value of the noise can be evaluated in advance. Pros: Uses original noisy images to construct the training dataset. |
| 19 | [20] | Gradient Domain Guided Filtering and NSST | Cons: The effect of NSST denoising alone is not obvious. Pros: Restores the natural appearance of the image. |

3 Proposed method

This section briefly discusses the theory behind the proposed HE based strategy for denoising images. Figures 1, 2 and 3 show the categories of images used and the flow charts of CLAHE and LCM CLAHE. Figure 4 presents the proposed strategy graphically; its details follow in the subsections below.

3.1 Selection of enhancement parameters

Image parameters are systematically enhanced at this stage on the basis of contrast and brightness. For example, contrast is regarded as an enhancement parameter: it is the difference in brightness or color that makes the image of an object, or the physical object itself, distinguishable. In other words, contrast in the real world is perceived whenever the color or brightness of one object is compared with that of another object. Contrast has many definitions, each used in a different situation. Luminance contrast is used as the example here, but the formula also applies to other physical quantities. Contrast is generally expressed as a ratio in which the luminance difference is compared to the average luminance. Mathematically, contrast can be defined as follows:

\[c=\frac{{l}_{d}}{{l}_{a}} \qquad (1)\]

Equation 1 represents the contrast, where c is the contrast and \({l}_{d}\) and \({l}_{a}\) are the luminance difference and average luminance, respectively.

Image processing methods often require contrast enhancement for processing valuable scientific images, such as satellite or X-ray images, and for bringing out details. Histogram equalization stands out as one of the best and most mainstream methods for enhancing image contrast.

Figure 1 shows the 30 sample images of the CSIQ image set used in the experiments. These images vary in features such as color distribution, contrast, brightness, and noise. Images are numbered from the top left corner, left to right and then row by row, as image0, image1, image2, … to image29.

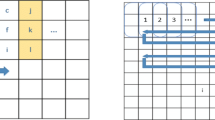

Figure 2 shows the process of CLAHE. This histogram equalization procedure has six key steps, starting with the selection of the CLAHE parameters for the input image and ending with the result. The image is processed in the second step, and contrast is locally controlled and modified in the third step. Gray level mapping is then performed, after which the gray level mapping is interpolated to assemble the image before the output is produced. Figure 3 adds a quality check step that controls the modification of local contrast, and the CLAHE parameters are adjusted accordingly.

Figure 4 shows the process of the proposed method, in which the block size, one of the parameters of HE, is selected dynamically (i.e., at run time) based on the features of the image.

3.2 Local contrast modification

Local contrast modification is the second important step and is applied to the input image. The function [38] used in this step is designed to extract information both locally and globally and then produce the enhanced image. For the transformation function, we provide expressions for both the local mean and the standard deviation over a user defined local window of size n ∗ n.

After the local mean 'm' and the standard deviation σ are calculated from Eqs. 2 and 3, these values are averaged over all the user defined windows to recover the finer details of the image with the help of Eqs. 4 and 5. Finally, the image with finer details serves as the input to the modified CLAHE, which uses DBST to optimize the image PSNR during HE.

Here, f is the input image and LCM denotes the enhanced image. E is the enhancement parameter, and M and m are the global and local mean, respectively.
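The local statistics used in this step can be sketched as below. The local mean and standard deviation follow their standard definitions over an n ∗ n window. The transformation g = E (M / σ)(f − m) + m in `local_contrast_modify` is an assumption: it is one classic local-statistics enhancement built from the same symbols (f, E, M, m, σ) and may differ from Eqs. 4 and 5. All function names and the small `eps` guard are hypothetical.

```python
import math


def local_stats(image, r, c, n):
    """Mean and standard deviation of the n x n window centred on (r, c).

    Pixels outside the image are skipped, so border windows are smaller.
    """
    half = n // 2
    window = [image[i][j]
              for i in range(max(0, r - half), min(len(image), r + half + 1))
              for j in range(max(0, c - half), min(len(image[0]), c + half + 1))]
    mean = sum(window) / len(window)
    var = sum((v - mean) ** 2 for v in window) / len(window)
    return mean, math.sqrt(var)


def local_contrast_modify(image, n=3, E=1.0, eps=1e-6):
    """Assumed form: g = E * (M / sigma) * (f - m) + m, clamped to [0, 255]."""
    flat = [v for row in image for v in row]
    M = sum(flat) / len(flat)  # global mean of the input image
    out = []
    for r, row in enumerate(image):
        new_row = []
        for c, f in enumerate(row):
            m, sigma = local_stats(image, r, c, n)
            g = E * (M / (sigma + eps)) * (f - m) + m  # eps avoids division by zero
            new_row.append(min(255, max(0, round(g))))
        out.append(new_row)
    return out


image = [
    [10, 10, 10],
    [10, 20, 10],
    [10, 10, 10],
]
mean, sigma = local_stats(image, 1, 1, 3)
enhanced = local_contrast_modify(image, n=3, E=1.0)
```

In this toy image the centre pixel stands out from its neighbourhood, so the assumed transformation pushes it further above the local mean, while a perfectly flat region would be left unchanged because f − m is zero there.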

3.3 Selection of block size based on the feature of an image

Our research found an interesting relationship between the block size used in the HE technique and the features of an input image, which can be used to improve PSNR after preprocessing. The relationship is shown in Fig. 6, which is divided into two parts, Fig. 6a and b. Figure 6a depicts the categories of images taken for the experiment, and Fig. 6b shows that a single block size produces the maximum PSNR for each type of image. The graph in this figure has block size on the x-axis and PSNR on the y-axis. PSNR is an effective measure of the quality of reconstructed images: the signal is the original data, and the noise is the error introduced during reconstruction of the image. Because PSNR corresponds to human perception of reconstruction quality, we use it as the parameter for optimizing the block size in LCM CLAHE.

Fig. 5 A general CNN model to classify the images [32]

4 Extracting the feature using CNN

The number of objects and colors present in an image are the key factors in selecting the block size for DBST LCM CLAHE.

Figure 5 shows the CNN model used to classify the image based on its features. This model classifies the image into a category of \({2}^{k}\), where k is an integer, and 'i' is then selected by Eq. 6. The model is trained on the values of i and k, and the block size of a new image is predicted as k for input i.

Peak Signal-to-Noise Ratio (PSNR) is a widely used metric in image processing for evaluating the quality of reconstructed or compressed images. PSNR plays an important role in quantitative image quality measurement, quality control in image compression, standardized evaluation, sensitivity to perceptually important distortions, and more.

In Eq. 7, R is the maximum fluctuation in the input image data type, whereas MSE stands for the Mean Square Error between the original and reconstructed images. Equation 6 provides the value of 'i' for which PSNR is maximum, and 'i' gives the block size of i∗i pixels for histogram equalization. The value of 'i' is therefore passed to CLAHE as the block size, and Eq. 7 gives the resulting PSNR value.

Figure 6 shows the effect of block size on the PSNR value during histogram equalization. Figure 6a shows 3 sample images used to calculate the HE for different block sizes, and Fig. 6b shows the graph relating PSNR and block size. The x-axis of Fig. 6b shows block size, whereas the y-axis shows the PSNR. It is clear from Fig. 6b that PSNR is maximum at a single block size for a given image, but this block size is not the same for different images.
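The search for the block size that maximizes PSNR can be sketched as a simple loop over candidate sizes. This is an illustrative sketch only: `select_block_size` is a hypothetical name, and `tile_stretch` is a hypothetical stand-in enhancer (a per-block min-max stretch) used so that the example runs without external packages. It is not CLAHE or LCM CLAHE.

```python
import math


def mse(a, b):
    """Mean square error between two equally sized 2-D lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def psnr(a, b, peak=255):
    """PSNR in dB; infinite when the two images are identical."""
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)


def tile_stretch(image, block):
    """Hypothetical stand-in enhancer: min-max stretch of each block x block tile."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            coords = [(r, c)
                      for r in range(r0, min(r0 + block, rows))
                      for c in range(c0, min(c0 + block, cols))]
            lo = min(image[r][c] for r, c in coords)
            hi = max(image[r][c] for r, c in coords)
            for r, c in coords:
                if hi > lo:
                    out[r][c] = round((image[r][c] - lo) * 255 / (hi - lo))
    return out


def select_block_size(image, enhance, candidates):
    """Return (best_block, scores), where best_block has the highest PSNR."""
    scores = {i: psnr(image, enhance(image, i)) for i in candidates}
    best = max(scores, key=scores.get)
    return best, scores


image = [
    [50, 60, 150, 160],
    [70, 80, 170, 180],
    [52, 62, 152, 162],
    [72, 82, 172, 182],
]
best, scores = select_block_size(image, tile_stretch, candidates=[2, 4])
print(best, scores)
```

In practice the `enhance` argument would wrap a real adaptive equalization routine, for example `skimage.exposure.equalize_adapthist` with its `kernel_size` parameter or OpenCV's `cv2.createCLAHE` with `tileGridSize`; whether either matches the configuration used in this work is an assumption.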

4.1 Applying proposed CLAHE

Once the value of 'i' is calculated, CLAHE is applied to the image with block size 'i'. This method is designed specifically for non monotonous noisy images. Figure 2 shows all the steps involved in CLAHE.

4.2 Performance measurement

The performance of the proposed strategy is measured using the image quality parameters Peak Signal to Noise Ratio (PSNR), Mean Square Error (MSE), Normalized Root Mean Square Error (NRMSE), Structural Similarity Index Map (SSIM), and Entropy.

Peak Signal-to-Noise Ratio (PSNR) is a measurement used to assess the quality of a reconstructed or compressed signal by comparing it to the original signal. It quantifies the ratio between the maximum possible power of a signal and the power of the noise that affects it. Higher PSNR values indicate better quality and less distortion.

Mean Square Error (MSE) is a common metric used to quantify the average squared difference between the values of the original signal and the reconstructed or compressed signal. It provides a measure of the overall distortion or error between the two signals, with lower MSE values indicating better fidelity.

Normalized Root Mean Square Error (NRMSE) is an extension of MSE that scales the error metric by the range of the original signal. It provides a normalized measure of the error, making it easier to compare across different signals or datasets.

Structural Similarity Index Map (SSIM) is an image quality assessment method that measures the similarity between two images based on luminance, contrast, and structural information. It evaluates the perceptual quality of the reconstructed or compressed image by considering both local and global image characteristics. Higher SSIM values indicate better image similarity and quality.

Entropy is a measure of the amount of information or randomness in a signal or data. It quantifies the average amount of uncertainty or surprise in the values of the signal. In image processing, entropy can be used to assess the complexity or diversity of pixel values, with higher entropy values indicating greater complexity or randomness in the image.

Higher values of PSNR, SSIM, and Entropy and lower values of MSE and NRMSE indicate better image quality. PSNR is calculated with Eq. 7, and Eqs. 8-11 give the formulas for the remaining parameters.

In Eqs. 7 and 8, I1 and I2 are the original and reconstructed images of size M ∗ N.
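For reference, the standard textbook forms of the first three measures are sketched below. The numbering of PSNR and MSE as Eqs. 7 and 8 follows the text; numbering NRMSE as Eq. 9 and normalizing it by the range of the original image are assumptions based on the description in this section, and the typeset equations of this work may differ in notation.

```latex
\begin{align}
\mathrm{PSNR} &= 10\,\log_{10}\!\left(\frac{R^{2}}{\mathrm{MSE}}\right) \tag{7}\\
\mathrm{MSE} &= \frac{1}{M N}\sum_{m=1}^{M}\sum_{n=1}^{N}
                \bigl[I_{1}(m,n)-I_{2}(m,n)\bigr]^{2} \tag{8}\\
\mathrm{NRMSE} &= \frac{\sqrt{\mathrm{MSE}}}{\max(I_{1})-\min(I_{1})} \tag{9}
\end{align}
```

As a worked example, for 8-bit images (R = 255) an MSE of 100 gives a PSNR of about 28.13 dB.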

In Eq. 10, \({\mu}_{x}\) is the average of x, \({\mu}_{y}\) is the average of y, \({\sigma}_{x}^{2}\) is the variance of x, \({\sigma}_{y}^{2}\) is the variance of y, and \({\sigma}_{xy}\) is the covariance of x and y. \({c}_{1}={({k}_{1}L)}^{2}\) and \({c}_{2}={({k}_{2}L)}^{2}\) are two variables that stabilize the division when the denominator is weak. Here, L is the dynamic range of pixel values (\({2}^{\text{bits per pixel}}-1\)), with \({k}_{1}=0.01\) and \({k}_{2}=0.03\) by default. SSIM satisfies the symmetry condition, i.e., SSIM(x,y)=SSIM(y,x), where x and y are corresponding windows of the same size from the original and reconstructed images, respectively. Image entropy describes the busyness of an image, i.e., the amount of information that must be encoded by a reconstruction algorithm. Entropy should remain consistent for an image under reconstruction. Shannon entropy is well suited to estimating the amount of information in a given image.
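The SSIM of a single pair of windows can be sketched from the quantities defined above, using the widely used form ((2μxμy + c1)(2σxy + c2)) / ((μx² + μy² + c1)(σx² + σy² + c2)). The function name `ssim_window` is hypothetical, and treating the whole input as one window is a simplification; full implementations, such as `skimage.metrics.structural_similarity`, average the index over sliding local windows.

```python
def ssim_window(x, y, bits_per_pixel=8, k1=0.01, k2=0.03):
    """SSIM of two equally sized windows given as flat lists of pixel values."""
    L = 2 ** bits_per_pixel - 1                 # dynamic range of pixel values
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2       # stabilizing constants
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


original = [10, 20, 30, 40]
reconstructed = [12, 18, 33, 39]

same = ssim_window(original, original)          # identical windows
forward = ssim_window(original, reconstructed)
backward = ssim_window(reconstructed, original)
```

Identical windows give an index of 1, any distortion lowers it, and swapping the arguments leaves the value unchanged, which is the symmetry condition stated in the text.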

In Eq. 11, \({p}_{i}\) is the probability that the difference between two adjacent pixels equals i.
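A minimal sketch of Shannon entropy over adjacent pixel differences is given below, using the standard form H = −Σ p_i log2 p_i. Taking differences only between horizontally adjacent pixels and the function name `difference_entropy` are assumptions made for illustration.

```python
import math
from collections import Counter


def difference_entropy(image):
    """Shannon entropy (bits) of differences between horizontally adjacent pixels."""
    diffs = [b - a for row in image for a, b in zip(row, row[1:])]
    counts = Counter(diffs)
    total = len(diffs)
    # p * log2(1 / p) equals -p * log2(p) and avoids returning -0.0.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


ramp = [[0, 1, 2, 3], [5, 6, 7, 8]]       # every difference equals 1
zigzag = [[0, 1, 0, 1, 0]]                # differences alternate between +1 and -1

print(difference_entropy(ramp))    # 0.0
print(difference_entropy(zigzag))  # 1.0
```

A smooth ramp has a single difference value and therefore zero entropy, whereas the alternating pattern has two equally likely differences and an entropy of one bit.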

4.3 The algorithm

DBST LCM CLAHE is the proposed algorithm, so named because it uses dynamic block sizes that are not fixed across images but depend on image characteristics such as feature distribution and noise. The proposed strategy follows these steps:

5 Experiment result and discussion

Our initiated strategy is implemented in Python 3.6 and in Python image packages such as skimage, OpenCV, and other science packages. Ubuntu 16 is operated on a corei3 system as a hardware platform, 8 GB RAM and 500 GB HDD.

Figure 7 shows the performance of CLAHE for different categories of images. Here, number-of-pixels vs. contrast graphs are presented for only 2 of the 8 images used in the simulation. Images are shown in grayscale, and each graph plots on the y-axis the number of pixels that have the contrast value shown on the x-axis.
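
The data behind a graph of this kind can be sketched as a simple pixel-count histogram. The sketch assumes that the x-axis value is the 8-bit grayscale intensity of each pixel; the function name is hypothetical.

```python
def gray_histogram(image, levels=256):
    """Count how many pixels take each gray level from 0 to levels - 1."""
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1
    return hist

# Tiny 2 x 3 grayscale image used only for illustration.
sample = [[0, 0, 255], [128, 0, 255]]
hist = gray_histogram(sample)
print(hist[0], hist[128], hist[255])
```

Plotting the list index on the x-axis against the count on the y-axis gives the number-of-pixels curve for one image.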

Figure 8 shows the performance of LCM CLAHE for different categories of images. Here, number-of-pixels vs. contrast graphs are presented for only 2 of the 8 images used in the simulation. Images are shown in grayscale, and each graph plots on the y-axis the number of pixels that have the contrast value shown on the x-axis.

Figure 9 shows the performance of the proposed method for different categories of images. Here, number-of-pixels vs. contrast graphs are presented for only 2 of the 8 images used in the simulation. Images are shown in grayscale, and each graph plots on the y-axis the number of pixels that have the contrast value shown on the x-axis. With the proposed method, the contrast distribution across pixels is smooth, and the resulting images are more promising than those of the state-of-the-art techniques. Figure 10 shows five graphs, a to e. Graph a shows the PSNR performance of all three methods: the two state-of-the-art techniques and our proposed method. In this graph, the y-axis represents the PSNR value, and the x-axis represents the image used in the experiment. The graph contains three lines: the red line shows the proposed method, the green line shows LCM CLAHE, and the blue line shows CLAHE. The graph shows that our proposed method is more promising than the state-of-the-art techniques in terms of PSNR. Graphs b, c, d, and e show the performance for MSE, NRMSE, Entropy, and SSIM, respectively. All graphs show the promising performance of the proposed method.

5.1 Comparing with existing techniques

In this section, we compare the state-of-the-art methods with our proposed method in terms of PSNR, MSE, NRMSE, SSIM, and Entropy. The comparison results are shown in Tables 1, 2, 3, 4, and 5, which show promising improvements. We have used three generalized categories of images, as shown in Figure 1. Figures 7, 8, 9, and 10 show the performance of CLAHE, LCM CLAHE, and the proposed DBST LCM CLAHE. Figure 10 also compares CLAHE, LCM CLAHE, DNN, GradNet, and SMNET with the proposed DBST LCM CLAHE.

5.2 Comparing with existing techniques

Throughout this section, the state-of-the-art methods are compared with our proposed method in terms of PSNR, MSE, NRMSE, SSIM, and Entropy. The comparative results are shown in Tables 1, 2, 3, 4, 5, and 6, which show promising improvements.

The proposed approach yields distinct improvements in PSNR, as the performance graphs in Fig. 10 show, and a comparison of Tables 1, 2, 3, 4, 5, and 6 suggests that the proposed method offers satisfactory improvements on the remaining parameters as well.

6 Conclusion and future work

This work has introduced a new HE method, the deep learning based DBST LCM CLAHE, which denoises an image by selecting CLAHE’s block size dynamically. We used an image database containing three categories of images with different types of noise. The proposed method was evaluated using image quality parameters such as MSE, NRMSE, and SSIM, and it is particularly effective at enhancing feature-rich noisy images. Future research may include an automatic block size selection procedure, driven by input image attributes, in the preprocessing stage of image forgery detection methods, so that the performance of state-of-the-art techniques can be improved further. The proposed method can also be applied to medical images to check its performance in different scenarios, since it has so far been tested only on natural images.

Data availability

Data is available on request.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. No funding was received to perform this work.

Ethics declarations

Conflict of Interests

The authors have no conflict of interest. All authors contributed equally to this work.

Competing Interests

The authors have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chakraverti, S., Agarwal, P., Pattanayak, H.S. et al. De-noising the image using DBST-LCM-CLAHE: A deep learning approach. Multimed Tools Appl 83, 11017–11042 (2024). https://doi.org/10.1007/s11042-023-16016-2

DOI: https://doi.org/10.1007/s11042-023-16016-2