Abstract

The interpretation of medical images into a natural language is a developing field of artificial intelligence (AI) called image captioning. This field integrates two branches of artificial intelligence which are computer vision and natural language processing. This is a challenging topic that goes beyond object recognition, segmentation, and classification since it demands an understanding of the relationships between various components in an image and how these objects function as visual representations. The content-based image retrieval (CBIR) uses an image captioning model to generate captions for the user query image. The common architecture of medical image captioning systems consists mainly of an image feature extractor subsystem followed by a caption generation lingual subsystem. We aim in this paper to build an optimized model for histopathological captions of stomach adenocarcinoma endoscopic biopsy specimens. For the image feature extraction subsystem, we did two evaluations; first, we tested 5 different vision models (VGG, ResNet, PVT, SWIN-Large, and ConvNEXT-Large) using (LSTM, RNN, and bidirectional-RNN) and then compare the vision models with (LSTM-without augmentation, LSTM-with augmentation and BioLinkBERT-Large as an embedding layer-with augmentation) to find the accurate one. Second, we tested 3 different concatenations of pairs of vision models (SWIN-Large, PVT_v2_b5, and ConvNEXT-Large) to get among them the most expressive extracted feature vector of the image. For the caption generation lingual subsystem, we tested a pre-trained language embedding model which is BioLinkBERT-Large compared to LSTM in both evaluations, to select from them the most accurate model. Our experiments showed that building a captioning system that uses a concatenation of the two models ConvNEXT-Large and PVT_v2_b5 as an image feature extractor, combined with the BioLinkBERT-Large language embedding model produces the best results among the other combinations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Over the last few years, deep neural networks have made notable advancements in image processing tasks, such as image classification [2, 5, 24] and object detection [3, 20, 26, 37]. These advancements have led to improved accuracy and efficiency in processing visual data. One important application of image processing is image retrieval, which involves retrieving images from a large database that are relevant to a given query. Consequently, image retrieval systems are crucial for obtaining relevant images. This is a difficult topic since the system must comprehend both the individual components of an image as well as its entire context. Images that are more relevant to the query should be displayed by an ideal image retrieval system. Image retrieval that uses annotated images and CBIR are two areas of current research in this field. The development of neural networks and processing ability in recent years has led to an increase in research into image retrieval through image captioning. In the medical sector; everything in medicine, from diagnosis to therapy, includes medical pictures. However, the problems with their description are unique, making it a difficult and time-consuming chore for medical professionals. And because medical professionals have a limited amount of time to write textual diagnosis reports to describe the findings on the growing number of medical images, several issues arise, such as missed findings, inconsistent quantification of findings, and a patient's hospital stay prolonged, which raises the price of hospital treatment. Many computer-aided report generation systems based on image captioning have been proposed to simplify the reporting process for medical images. These systems automatically extract the findings from medical images and produce textual reports with fine-grained information, just like a skilled physician. This reduces the amount of time required for doctors to manually extract features from photos before creating a textual report. Additionally, it lessens the need for additional professionals to prepare reports because the creation of medical reports is a fully automated and effective process. Images can be used by humans to obtain visual information. The key goal is to create automatic medical image captioning using this human ability to extract meaningful words from digital images. Various machine and deep learning models are used in medical image captioning to describe the content of an input image in natural language. Convolutional neural networks (CNN) are commonly used in deep learning to find features in images and recurrent neural networks (RNN) to produce captions for the found features.

In this paper, we proposed a method for selecting the best model that yields efficient image feature extraction models. To create the relevant caption, this feature vector is fed into the language embedding model. The choice of the language embeddings model was made after comparing BioLinkBERT-Large to an LSTM and testing it with various image feature extractors to determine which combinations worked best. Our tests revealed that, when combined with the BioLinkBERT-Large language embedding model, the two vision models ConvNEXT-Large and PVT_v2_b5, which were utilized as image feature extractors, generated the best results of all the combinations.

This paper is organized as follows; Section 2 for background about the models used for four parts in this paper; augmentation, features extraction, the concatenation models and the pre-trained word embedding. Section 3 for the related work for image captioning in general and medical image captioning and the word embedding used in image captioning. Section 4 discusses the proposed framework. Section 5 presents the evaluation stage in two sub-sections one for the five vision models with (LSTM, RNN, and bidirectional-RNN) and then the evaluation for the vision models with using (augmentation, without augmentation, and BioLinkBERT-Large as an embedding layer); the other sub-section for the concatenation vision models with others and applied also the pre-trained word embeddings and make comparison with LSTM for them to find the best one. Finally, Section 6 presents the conclusion and future work.

2 Background

Writing a simple caption while seeing the image is a relatively easy task because humans can detect the objects in an image, and their interactions, and connect them to form relevant statements. Similar to how people learn from different sights and accumulate experience, a computer may be taught to do the same. We aim in this paper to optimize the medical image captioning systems for generating histopathological captions of stomach adenocarcinoma endoscopic biopsy specimens [28]. For the vision part, we use two different vision models: the first one is an individual vision model, and the second is a concatenation of two vision models that enhance the feature extraction part of the image captioning process. Then, to optimize the language part, we evaluate the use of LSTM with pre-trained language embedding models, namely BioLinkBERT-Large [35] to get the best model among them to generate the most accurate generated captions.

2.1 Augmentation

Increasing the accuracy and efficiency of deep learning models usually requires a large amount of training data. However, annotating a sizable amount of training data would be a difficult and time-consuming task. Therefore, appropriate automated data augmentation can be helpful to improve the performance of deep learning models. Hence, to increase the effectiveness of our proposed model, we resorted to using argumentation as the dataset that we use in our work contains only one sentence as a caption for each image. For augmenting the dataset, we used the nlpaug library [13]; especially the type contextualized word embedding which is better than using word embedding. The contextualized word embedding that we used is with BioLinkBERT-Large [35]. We generated 5 sentences for each image and used them in our captioning model.

2.2 Feature extraction models

When a large dataset is available and it is required to conserve resources without compromising any crucial or relevant information, the feature extraction technique makes life easier. A data set's extraneous data can be reduced with the help of feature extraction. We extract features from the final layer of the vision models, which comes before the fully connected layer. The entire model now learns about the items in the image and their relationships rather than just concentrating on the image class. Here are the models that we compared, for instance, VGG [22], ResNet [4], PVT [31], SWIN-Large [11], and ConvNeXT-Large [12], which are now applied for resolving visual recognition issues because their main challenge is the classification of objects in images into classes to demonstrate their performance and efficiency in image captioning. In comparison to other models, we discovered that the ConvNeXT-Large model is better at learning in deep networks.

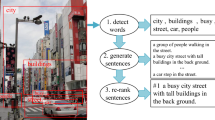

2.3 Feature concatenation

A set of concatenated features in the form of a feature map can be derived from individual models and the input of dataset photos, as shown in Fig. 1. Concatenating one feature vector with a separate model to produce merged features [12] is how we further combine them to create a concatenated feature map.

Example of one the feature concatenation we used

2.4 Word embedding’s

One of the deep learning's greatest contributions to NLP problems is the process of encoding words and documents known as word embedding. Word embedding refers to the word representation technique that enables the numeric representation of words with comparable meanings. This section discusses BioLinkBERT-Large [35] method for learning a word embedding from text data, which is an efficient language model pre-training technique that takes into account document link knowledge. To construct language model inputs from linked documents placed in the same context as a single document or a random selection of documents as in BERT, they first obtain linkages between documents, such as hyperlinks, from a text corpus. It has different versions and we used the largest one which is called BioLinkBERT-Large.

3 Related work

There are kinds of research in image captioning, whether in image captioning in general or medical image captioning in particular. We presented some of this work in the following subsections. On the other hand, there is also research in decoder “language subsystem” or the use of embedding layer; we presented it in Section 3.3.

3.1 Image captioning overall

Three categories can be made out of the techniques used to create image captions:

-

Methods based on templates: The fundamental steps in template-based approaches are object detection and attribute recognition from the input image, followed by the use of caption templates and the generation of captions using this template. For instance, Li et al. [19] concentrated on identifying the interconnections between the various items in a picture in addition to segmenting a sentence into different phrases and ultimately identifying the connections between the image's objects and the sentence's constituent phrases. Kulkarni et al. [9] employed a conditional random field (CRF) to learn the prepositions and best labels for each object in the image.

For template-based approaches, stiff, hand-drawn templates are needed. Because there are fewer potential sentences as a result, performance suffers. The generation of phrases with different lengths is also challenging using this method.

-

Methods based on retrieval: The methods that depend on retrieval fall within this group.

These methods turn to creating captions for photos into a retrieval effort by getting pictures with captions that are similar and then editing and combining them to form new captions. Kuznetsova et al. [7] utilize a distance metric to locate photos with comparable captions. Furthermore, by applying an intermediate "generalization" step that fine-tunes the description, these systems eliminate information from the recovered images that aren’t pertinent to the image for which the caption is being generated.

-

Deep neural network techniques: Kiros et al. [8] employed the encoder-decoder framework for image captioning and the Structure Content Neural Language Model (SC-NLM) as a decoder. To translate phrases into a vector and retrieve images, Socher et al. [6] introduced the Dependency Tree-Recursive Neural Network (DT-RNN). Mao et al. [23] created the Multimodal Recurrent Neural Network (m-RNN), which combined word embeddings and representations of visual features before mapping them to the same vector space and providing it as input to the RNN. Additionally, Vinyals et al. [14] suggested a technique for employing word embeddings in the same space as the hidden representations of the LSTM. Xu et al. [29] proposed an attention mechanism to focus attention on key areas of the image. Additionally, You et al. [34] suggested semantic attention model is integrated with recurrent neural networks to generate captions. In the bottom-up method, the authors employ semantic notions as attributes; in the top-down method, they use features from images added with an attention mechanism as an encoder that the RNN will decode.

3.2 Image captioning with medical images

Chest X-ray images were used by Shin et al. [36] to create image annotations in addition to classifying and detecting disease from images. They first trained Network-In-Network [21] and GoogleNet [10] CNN. They then extracted 17 disease labels corresponding to MESH terms that were frequently used in the reports but did not frequently co-occur with other MESH terms, and they performed better on GoogleNet. To generate the context of diagnosed diseases, an LSTM or GRU was trained to decode the CNN embedding of the input image. The already trained CNN-RNN was retrained in the second training phase to produce combined image/text context vectors on the image/text.

Wu et al. [25] utilized a framework based on Vinyals et al. [32]. For encoding the 37,000 retinopathy photos, they employed a pre-trained CNN using dropout and ensemble approaches. The encoded features were then passed into an LSTM, which translated the image features into a caption. Only the abnormalities discovered were revealed by the created description. They then trained the model to produce a caption with four anomalies (microaneyryms, softexudates, hardexudates, and hemorrhages). They obtained generally positive results, however because softexudates only emerged in a tiny portion of the dataset, results for average sensitivity of each abnormalities were poor. When they combined softexudates and hardexudates as one aberration in their subsequent experiment, they obtained better results than in the first one, but over-fitting remained since the dataset was too small.

A deep hierarchical encoder-decoder network (DHN) was put into use by Xiao et al. for captioning medical images. Encoder and decoder functions are separated by DHN. To obtain medical captions, it can evaluate the possible information by fusing high-level linguistic and visual semantics [33]. Natural language processing was used by Zakraoui et al. to assess the textual content of stories. Then, a pre-trained deep-learning technique for medical image captioning is taken into account [39].

To examine the potential qualities to encode the content of medical images using an attention approach without taking the bells and whistles into account, Wang et al. suggested a cascade semantic fusion (CSF) architecture [30]. An efficient framework for captioning the remote sensing image was created by Yuan et al. Convolution of a multi-label attribute graph and multilevel attention are the foundations of their framework [38].

3.3 Word embedding related work

For Image Captioning, word embeddings have been used as a unique embedding layer that takes a sequence of words as input, converts them to integers according to their position in the vocabulary, and then produces a vector of length n, where n is the embedding length. The embedding layer's weights are either derived from weights used in previous language modeling techniques or learned through backpropagation. As in You et al. [18], who use Pennington et al. [15]'s pre-trained Glove word vectors to encode word information into the LSTM, pre-trained word embeddings have also been used. The skip-gram model by Mikolov et al. [1] is improved by Embeddings of Glove, but both models depend on the text's linear sequential features. To put it another way, both generate representations of the text using word co-occurrence features. Pre-trained embeddings do not enhance model performance, according to other studies like Vinyals et al. [32]. According to Viktar et al. [31], who used a pre-trained embedding called GloVe with fine-tuning, they discovered that it can enhance the model's performance and is best suited for improving image captioning model training.

4 The proposed framework

Because automated text captioning is a challenging process, it is typically developed using a complicated architectural paradigm. In our proposed model for medical image captioning, there are two stages for the task of captioning; a vision stage that acts as a vision encoder that extracts features from input images using a computer vision model, and the other stage is a language stage that acts as a decoder which converts the features into natural sentences. Our purpose in this paper is to enhance the proposed image captioning architectural model. This purpose can be done by doing the two stages mentioned above: the first one is to optimize the vision encoder by extracting features from images using the best models. The second is to get the most accurate generated captions by optimizing the language decoder. To achieve the first sub-goal, we conducted a set of existing vision models to find the best vision encoder model among them. In these experiments, we first used the chosen vision models which are VGG [22], ResNet [4], PVT [31], SWIN-Large [11], and ConvNexT-Large [12] individually with three different phases; LSTM, RNN and bidirectional RNN to find the most accurate one as the first improvement then compare the vision models with LSTM (without augmentation) and (with augmentation) and (with augmentation with using BioLinkBERT-Large as an embedding layer). Then, we built our new vision models as concatenations of some vision models (SWIN-Large, ConvNexT-Large, and PVT_v2_b5 [31]) with augmentation and LSTM once and with augmentation and BioLinkBERT-Large as an embedding layer once again.

Figure 2 illustrates our suggested "vision model," which refers to the stage of extracting the feature vectors from the input images. To find the most effective vision model for image captioning, we presented a new feature concatenation for the vision model by combining two separate feature vectors from two different vision models or more. To extract the features for the image using the vision models, we first select several images for training and testing. We then process the images by converting them to grayscale and shrinking the original image before extracting the features. To achieve the second stage, we compared the performance of a set of image captioning systems built using the developed vision encoder models combined with a pre-trained embedding which is BioLinkBERT–Large to get the best combination, to find the language decoder with the highest performance.

Figure 3 shows our proposed image captioning model where our contribution is the concatenation of the vision models which are SWIN-Large, ConvNEXT-Large, and PVT_v2_b5 in two versions. In the first version, we used the concatenated model using augmentation and LSTM. In the second version, we used the concatenated model using augmentation and with using BioLinkBERT-Large as an embedding layer.

5 Evaluation

The main goal of the evaluation process in this paper is to find the best model of a vision encoder and a language decoder models to build a high performance captioning model for histopathological captions of stomach adenocarcinoma endoscopic biopsy specimen. The vision encoder models tested in the evaluation include 5 individual models and 3 concatenated models. Each of these models is tested a as an encoder that extracts features from images. Also, we used a pre-trained embeddings models in the language phase and compared it to LSTM to get the most accurate model to be use in our image captioning model.

So, we conducted the following individual experiments:

-

Experiments for the evaluation of the individual vision encoders with three phases (without augmentation, with augmentation and with pre-trained Language embedding model)

-

Experiments for the evaluation of the encoders using feature concatenation models with the pre-trained Language embedding model compared to LSTM.

To be consistent with previous work [28], we used 34.000 images for training and 5700 images for testing. Dataset is available at https://zenodo.org/record/6021442 and https://github.com/masatsuneki/histopathology-image-caption. See the example of the patches in Fig. 4. We trained our models in an end-to-end using Keras model using a laptop with one GPU (2060 RTX). The hyper parameter settings for the experimental models in all of these experiments are as follows: Maximum Epochs is 30, LSTM dropout settings is [0.5], learning rate is [0.00001], Optimizer: Adam optimizer and finally the batch size is 16. Vocabulary size is different with using (without augmentation or with using augmentation) to be (158 or 2360). The details of each of these experiments are presented next. Figure 5 shows the Plot of the Caption Generation Deep Learning Model for using ConvNEXT-Large with PVT_v2_b5 model, where input1 is the input of image features, input2 is the text sequences or captions and dense is a vector of 2560 elements that are processed by a dense layer to produce a 128 element representation of the image as all the settings are the same in the other models used in this paper with their different methods except the shape of the image will be change upon the concatenation model shape. The parameters are the same in the phase of without using augmentation except the dense layer is 64 instead of 128. We used netron site [16] for plot the model by uploading the file of the model.

Example of patches [28]

5.1 Experiments for the evaluation of the individual vision encoders with pre-trained language embedding models

In these experiments, five different models have been tested as an image encoder model. These image encoder models are VGG [22], ResNet [4], PVT [31], SWIN-Large version of SWIN-transformer presented in [11] and CovnNEXT model [12]. Each of the above image encoders has been tested in a separate experiment three times (LSTM, RNN and bidirectional RNN) to find the most accurate vision model and also the most accurate language model then experimented the vision models with (without augmentation (LSTM), with augmentation (LSTM) and augmentation (LSTM) with using a pre-trained embedding layer). The pre-trained embedding model is BioLinkBERT-Large [35]. We applied these decoder models in the language stage by using fixed language decoder which is LSTM-based model [27] which uses the feature vectors obtained from the proceeding tested vision models to generate the captions. We tested pre-trained embeddings model after extracting features from different vision models for comparing the accuracy. The results of these experiments are presented and discussed next with visual evaluation available on this link (https://github.com/Elbedwehy/histopathological-patches).

5.1.1 Efficiency of captioning model

-

A)

To show how the captioning model is effective for creating captions for the input images for the first improvement to find the most accurate vision model with the LSTM, RNN and bidirectional RNN, we utilized a popular metric for this criterion which is bilingual evaluation understudy (BLEU-1, 2, 3, and 4). In-text translation, BLEU scores [17] are used to compare translated content to one or more reference translations. We evaluated each generated caption to all of the image's reference captions and determined that it was quite popular for captioning tasks. For cumulative n-grams, BLEU scores for 1, 2, 3, and 4 are determined. Table 1 shows the final results of comparing all models on all metrics on the validation dataset. We find the ConvNexT as the vision encoder is the most accurate one with LSTM as the language model which used with all vision models due to the ability to handle the vanishing gradient problem, which is a common issue in traditional RNNs as LSTMs use a gating mechanism to regulate the flow of information through the network, allowing them to avoid this problem and learn from longer sequences of data. Also, it designed to handle both short-term and long-term dependencies. While bidirectional RNNs can be effective in some cases, they may not be suitable for handling long-term dependencies, which is where LSTMs excel. Figure 6 show a visual comparison of the BLEU scores results scored in these experiments. While VGG, ResNet and PVT have similar scores due to these feature extractors are typically pre-trained on large-scale datasets like ImageNet, and are designed to extract generalizable image features due to the data from the hospital being similar in terms of visual appearance and content, it is possible that the same set of image features can be informative for generating captions across different images.

-

B)

We did the experiments again between the vision models but with LSTM (without augmentation) from the previous comparison as it is the best one we got and (with augmentation) and (with augmentation with using BioLinkBERT-Large as an embedding layer) as in Table 2. It demonstrates that using pre-trained BioLinkBERT–Large with most of the image encoder models is the best option which is ConvNexT–Large with using also augmentation. Also the similar scores between VGG, ResNet and PVT due to the same reason we mentioned in the previous paragraph except ResNet with augmentation LinkBERT is very little bit different with score 0.557980 which the other is 0.557986. Figure 7 show a visual comparison of the BLEU scores results scored in these experiments

5.1.2 Time evaluation

For each of the tested model, we compared the time taken for training for each model to get the best epoch for captioning. As shown in Fig. 8, PVT with RNN was the fastest in training as it took the least training time (2.5 h), and VGG with bidirectional-RNN was the slowest, as it finished training in 10.5 h while ConvNexT with LSTM, which is the most efficient in producing caption, has taken 8.5 h.

For the second improvement, we compared the time taken for training for each model to get the best epoch for captioning. As shown in Fig. 9, ResNet with (augmentation LinkBERT) was the fastest in training as it took the least training time (5 h), and PVT (Without augmentation LSTM) was the slowest and also SWIN with (augmentation LinkBERT) as they finished training in 9 h while ConvNexT with (augmentation LinkBERT), which is the most efficient in producing caption, has taken 7 h.

5.1.3 Performance evaluation

The performance evaluation is an important criterion as it reflects how fast the images captioning model in generating captions of an input image is. We used the FLOPs metric to evaluate the performance for the tested captioning models with (LSTM, RNN and bidirectional-RNN), as FLOPs are used to describe how many operations are required to run a single instance of a given model. The more the FLOPs the more time model will take for inference, i.e., the better models have a smaller number of FLOPS. Table 3 shows number of FLOPS of each of the tested captioning models. The worst model VGG with LSTM, while PVT with (bidirectional-RNN) was the fastest models in generating the captions.

Also the same evaluation did with the vision models using the LSTM (without augmentation) and (with augmentation) and (with augmentation with using BioLinkBERT)]. Table 4 shows number of FLOPS of each of the tested captioning models. The worst model VGG with BioLinkBERT-Large was the worst, while PVT without using augmentation were the fastest models in generating the captions.

5.2 Experiments for the evaluation of the encoders using feature concatenation models with pre-trained language embedding models

We evaluate in these experiments the different vision encoder models using feature concatenations from the set of individual vision models that are tested in our proposed model. These new concatenated models are PVT_v2_b5 with SWIN-Large, PVT_v2_b5 with ConvNexT-Large and SWIN-Large with ConvNexT-Large. We have chosen to build these concatenated versions from the most efficient vision encoder models as seen from the results of the experiments made using individual image encoder models. In each experiment, done in the previous experiments, the concatenated model is used to extract features from images and these extracted features are fed into one of the tested pre-trained embeddings decoder (BioLinkBERT-Large) compared to LSTM. We used the previous criteria defined earlier for evaluating the use of different feature concatenation for image captioning model. The evaluation details are given next with visual evaluation available on this link [32].

5.2.1 Efficiency of image captioning

Table 3 show the final BLEU score values of the experiments of using each of these tested concatenated image encoder models with one of the three pre-trained embedding decoder as presented earlier. It demonstrates that using the feature concatenation encoder model (ConvNexT-Large + PVT_v2_b5) with the BioLinkBERT-Large pre-trained embeddings language decoder is the best combination as it improves the BLEU score when compared to other concatenated models as shown in Table 5. Figure 10 shows the comparison of BLEU scores for using the tested models. Also, we note that using the BioLinkBERT-Large decoder always produces BLEU scores for all models very close to the highest scored scores. This significantly indicates the high efficiency of this decoder model.

5.2.2 Time evaluation

For each of the conducted experiments, we compared the time taken for training each model to get the best epoch for captioning. As shown in Fig. 11 SWIN-Large + ConvNexT-Large model with BioLinkBERT-Large pre-trained embedding’s was the fastest in training best conducted model took the least training time (6.4 h) while SWIN-Large + PVT_v2_b5 with BioLinkBERT-Large was the slowest, as it finished training in 11.7 h while ConvNexT-Large + PVT_v2_b5, which is the most efficient in producing caption, has taken 11.6 h.

5.2.3 Performance evaluation

We measure the performance to evaluate how fast the image captioning model is in generating captions for an input image is. We use for this evaluation the Flops metric, presented earlier to evaluate the performance for the 3 concatenated tested captioning models with the BioLinkBERT-Large pre-trained embedding and LSTM, the better models should get a smaller number of FLOPs. Table 6 shows number of FLOPs of each of the 3 concatenated tested captioning models with the tested decoder. All over the experiments the worst model was SWIN-Large + ConvNexT-Large both BioLinkBERT-Large, while SWIN-Large + PVT_v2_b5 with LSTM were the fastest model in generating the captions.

6 Conclusion and future work

In this paper, we focused on obtaining the best (image encoder-language decoder) integration to build a highly efficient Captioning model for histopathological captions of stomach adenocarcinoma endoscopic biopsy specimens. We evaluated 5 different image captioning models individually (VGG, ResNet, PVT, SWIN-Large and ConvNexT-Large) with (LSTM, RNN, biderctional-RNN) to find the better one then we compared the vision models with using (LSTM-without augmentation, LSTM with augmentation and augmentation with BioLinkBERT-Large). We found the BioLinkBERT language decoder is very effective in producing accurate captions with ConvNexT-Large as it always gives the best accuracy results with most tested image encoders. We also tested concatenated models (SWIN-Large, PVT_v2_b5 and ConvNexT-Large) and found that ConvNexT-Large with PVT_v2_b5 is very efficient image encoder when used alone and its efficiency is enhanced more when concatenated with the BioLinkBERT-Large pre-trained embedding layer. Hence, as concluded from the results, building a patches captioning model that uses ConvNexT-Large + PVT_v2_b5 as an image encoder and BioLinkBERT-Large as a language decoder can be considered an optimized architectural model for image captioning with relatively high accuracy. We aim in the future to use this architecture for producing Arabic captions of images as research on generating Arabic descriptions of an image is extremely limited. Also, we aim to use it in applications such as image retrieval systems and Web mining.

Data availability

The data used to support the findings of this study are included within the article.

References

Atliha V, Šešok D (2021) Pretrained word embeddings for image captioning. In: 2021 IEEE Open Conference of Electrical, Electronic and Information Sciences (eStream). IEEE, pp 1–4

Chen T, Kornblith S, Norouzi M, Hinton G (2020) A simple framework for contrastive learning of visual representations. In: International conference on machine learning. PMLR, pp 1597–1607

Chen B, Li P, Chen X, Wang B, Zhang L, Hua X-S (2022) Dense learning based semi-supervised object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4815–4824

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

He K, Fan H, Wu Y, Xie S, Girshick R (2020) Momentum contrast for unsupervised visual representation learning. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9729–9738

Kiros R, Salakhutdinov R, Zemel R (2014b) Unifying visual-semantic embeddings with multi-modal neural language models. ArXiv: 1411.2539

Kulkarni G, Premraj V, Ordonez V, Dhar S, Li S, Choi Y, Berg AC, Berg T (2013) Babytalk: understanding and generating simple image descriptions. IEEE Trans Pattern Anal Mach Intell 35(12):2891–2903

Kuznetsova P, Ordonez V, Berg AC, Berg T, Choi Y (2012) Collective generation of natural image descriptions. In: ACL, vol 1. ACL, pp 359–368

Li S, Kulkarni G, Berg TL, Berg AC, Choi Y (2011) Composing simple image descriptions using web-scale n-grams. In: CoNLL. ACL, pp 220–228

Lin M, Chen Q, Yan S (2014) Network in network. In: 2nd Int. Conf. Learn. Represent.ICLR 2014 - Conf. Track Proc., pp 1–10

Liu Z, Lin Y, Cao Y, Hu H et al (2021) Swin transformer: hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 10012–10022

Liu Z, Mao H, Wu C-Y, Feichtenhofer C, Darrell T, Xie S (2022) A convnet for the 2020s. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 11976–11986

Ma E (2019) NLP augmentation. https://github.com/makcedward/nlpaug

Mao J, Xu W, Yang Y, Wang J, Huang Z, Yuille A (2014) Deep captioning with multimodal recurrent neural networks (m-rnn). arXiv preprint arXiv:1412.6632

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781

Netron: a visualizer for neural network, deep learning and machine learning models. Retrieved from https://netron.app/

Papineni K, Roukos S, Ward T, Zhu W-J (2002) Bleu: a method for automatic evaluation of machine translation. In: Proceedings of the 40th annual meeting of the Association for Computational Linguistics, pp 311–318

Pennington J, Socher R, Manning CD (2014) Glove: global vectors for word representation. In: Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pp 1532–1543

Saad W, Shalaby WA, Shokair M, Abd El-Samie F, Dessouky M, Abdellatef E (2021) COVID-19 classification using deep feature concatenation technique. J Ambient Intell Humaniz Comput:1–19

Shah A., Chavan P, Jadhav D (2022) Convolutional neural network-based image segmentation techniques. In: Soft Computing and Signal Processing: Proceedings of 3rd ICSCSP 2020, Volume 2. Springer Singapore, pp 553–561

Shin X, Su H, Xing F, Liang Y, Qu G (2016) Interleaved text/image deep mining on a large-scale radiology database for automated image interpretation. J Mach Learn Res 17:1–31. http://www.jmlr.org/papers/volume17/15-176/15-176.pdf

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Socher R, Karpathy A, Le QV, Manning CD, Ng AY (2014) Grounded compositional semantics for fnding and describing images with sentences. Trans Assoc Comput Linguist 2(2014):207–218

Song J, Zheng Y, Wang J, Ullah MZ, Jiao W (2021) Multicolor image classification using the multimodal information bottleneck network (MMIB-Net) for detecting diabetic retinopathy. Opt Express 29(14):22732–22748

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., pp 1–9. https://doi.org/10.1109/CVPR.2015.7298594

Tan M, Pang R, Le QV (2020) Efficientdet: scalable and efficient object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10781–10790

Tarján B, Szaszák G, Fegyó T, Mihajlik P (2019) Investigation on N-gram approximated RNNLMs for recognition of morphologically rich speech. In: International conference on statistical language and speech processing. Springer, Cham, pp 223–234

Tsuneki M, Kanavati F (2022) Inference of captions from histopathological patches. arXiv preprint arXiv: 2202.03432

Vinyals O, Toshev A, Bengio S, Erhan D (2016) Show and tell: lessons learned from the 2015 mscoco image captioning challenge. IEEE Trans Pattern Anal Mach Intell 39(4):652–663

Wang S, Lan L, Zhang X, Dong G, Luo Z (2019) Cascade semantic fusion for image captioning. IEEE Access 7:66680–66688

Wang W, Xie E, Li X, Fan D-P, Song K, Liang D, Tong Lu, Luo P, Shao L (2022) Pvt v2: improved baselines with pyramid vision transformer. Comput Vis Media 8(3):415–424

Wu L, Wan C, Wu Y, Liu J (2018) Generative caption for diabetic retinopathy images, in: 2017 Int. Conf. Secur. Pattern Anal. Cybern. SPAC 2017, pp 515–519. https://doi.org/10.1109/SPAC.2017.8304332

Xiao X, Wang L, Ding K, Xiang S, Pan C (2019) Deep hierarchical encoder-decoder network for image captioning. IEEE Trans Multimedia 21(11):2942–2956

Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhudinov R, Zemel R, Bengio Y (2015) Show, attend and tell: neural image caption generation with visual attention. In International conference on machine learning, pp 2048–2057

Yasunaga M, Leskovec J, Liang P (2022) LinkBERT: pretraining language models with document links. arXiv preprint arXiv: 2203.15827

You Q, Jin H, Wang Z, Fang C, Luo J (2016) Image captioning with semantic attention. In: CVPR, pp 4651–4659

Yu F, Wang D, Chen Y, Karianakis N, Shen T, Yu P, Lymberopoulos D, Lu S, Shi W, Chen X (2019) Unsupervised domain adaptation for object detection via cross-domain semi-supervised learning. arXiv preprint arXiv:1911.07158

Yuan Z, Li X, Wang Q (2020) Exploring multi-level attention and semantic relationship for remote sensing image captioning. IEEE Access 8:2608–2620

Zakraoui J, Elloumi S, Alja’am JM, Ben Yahia S (2019) Improving Arabic text to image mapping using a robust machine learning technique. IEEE Access 7:18772–18782

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest to report regarding the present study.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Elbedwehy, S., Medhat, T., Hamza, T. et al. Enhanced descriptive captioning model for histopathological patches. Multimed Tools Appl 83, 36645–36664 (2024). https://doi.org/10.1007/s11042-023-15884-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15884-y