Abstract

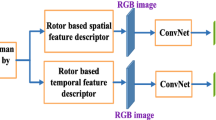

Skeleton-based human action recognition has attracted considerable attention and achieved notable success in computer vision. However, one of its main challenges is complex viewpoint variation. Moreover, existing methods tend to rely on complicated networks with large model sizes. To this end, we introduce a novel viewpoint-guided feature that adaptively selects the optimal observation point to deal with the viewpoint variation problem. Furthermore, we present a novel multi-stream neural network for skeleton action recognition, namely the Viewpoint Guided Multi-stream Neural Network (VGMNet). In particular, by incorporating four streams of spatial and temporal information, the proposed VGMNet can effectively learn discriminative features of the skeleton sequence. We validate our method on three well-known datasets, i.e., SHREC, NTU RGB+D, and Florence 3D. On SHREC, our method achieves better accuracy and efficiency than state-of-the-art approaches. Furthermore, the highest scores on Florence 3D and NTU RGB+D show that our method is suitable for real application scenarios with edge computing and compatible with multi-person action recognition.

Data availability

The datasets analysed during the current study are available at the following links.

• SHREC: http://www-rech.telecom-lille.fr/shrec2017-hand

• NTU RGB+D: https://rose1.ntu.edu.sg/dataset/actionRecognition

• Florence 3D: https://www.micc.unifi.it/resources/datasets/florence-3d-actions-dataset

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China Grant 61876042, and the Guangdong Basic and Applied Basic Research Foundation (No. 2020A1515011493).

Ethics declarations

Conflicts of interests

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

About this article

Cite this article

He, Y., Liang, Z., He, S. et al. Viewpoint guided multi-stream neural network for skeleton action recognition. Multimed Tools Appl 83, 6783–6802 (2024). https://doi.org/10.1007/s11042-023-15676-4

DOI: https://doi.org/10.1007/s11042-023-15676-4