Abstract

The most important elements in the realm of commercial food standards are effective pest management and control. Crop pests can make a huge impact on crop quality and productivity. It is critical to seek and develop new tools to diagnose the pest disease before it caused major crop loss. Crop abnormalities, pests, or dietetic deficiencies have usually been diagnosed by human experts. Anyhow, this was both costly and time-consuming. To resolve these issues, some approaches for crop pest detection have to be focused on. A clear overview of recent research in the area of crop pests and pathogens identification using techniques in Machine Learning Techniques like Random Forest (RF), Support Vector Machine (SVM), and Decision Tree (DT), Naive Bayes (NB), and also some Deep Learning methods like Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), Deep convolutional neural network (DCNN), Deep Belief Network (DBN) was presented. The outlined strategy increases crop productivity while providing the highest level of crop protection. By offering the greatest amount of crop protection, the described strategy improves crop efficiency. This survey provides knowledge of some modern approaches for keeping an eye on agricultural fields for pest detection and contains a definition of plant pest detection to identify and categorise citrus plant pests, rice, and cotton as well as numerous ways of detecting them. These methods enable automatic monitoring of vast domains, therefore lowering human error and effort.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Pests are one of the most serious issues in agricultural production. As per, Food and Agriculture Organization (FAO), each year, bugs cause 20% upto 40% loss in global meal yield [28]. Recognizing and detecting crop pests was one of the most complicated tasks in crop output. Pests harm crops and have a major impact on crop yield productivity. Due to their complicated form and a great degree of similarity in appearance between different categories, classifying insects was a challenging undertaking [60]. Agricultural pests have long faced a serious threat to crop production and agriculture product preservation [12]. By the time farmers realize pest spoil, it has repeatedly tripled also spread unmanageable. To get rid of bugs and reduce agricultural damage, wide number of fungicides must be sprinkled in farm at the time [10]. Infections in plants are discovered by checking for visible symptoms in various areas of the plants, including leaves and stems. To accurately diagnose disease classes, however, specialist knowledge was essential. It is difficult to reach rural areas, especially in developing countries where farmers produce the majority of the crop [1]. Visual inspection or laboratory testing are used to confirm the diagnosis of crop diseases. The visual inspection was performed. It can only be done by a professional and takes a long time. Laboratory experimentation necessitates the use of different chemicals as well as a prolonged procedure [53].

Identifying diseases on crop leaves have mainly focused on several techniques. i.e., Hyperspectral imaging, polymerase chain reaction (PCR), Thermography, fluorescence in situ hybridization (FISH), enzyme-linked immunosorbent test (ELISA) [23]. These are carried out by directly collecting features and using a related filtration to identify different pest types, that take a duration when the collection was vast [38]. Insect identification and classification are typical applications, computer-aided image classification systems related to traditional machine learning approaches [6], and CNN has continuously been being used [41]. Different types of classifications are classified using a detection and classification system. The two steps are outlined as the Training model and the Testing model [48] also deep learning is used for classification [56]. Deep learning seems to be more successful in diagnosing crop problems in agricultural production. Crop growth may be monitored, diagnosed, and prevented using a deep learning model [2] and fine-tuned to increase identification accuracy [32]. In the end, that research creates information for optimizing and improving the structure and training parameters of the CNN model for pest image characteristics for typical agricultural and forestry pests and offers a deep network model for agricultural pest detection [67].

The following is the suggested methodology’s main contribution:

-

To maintain farming by detecting leaf diseases that aid in the development of healthy plants.

-

Utilizing photographs of leaves, plant diseases may be automatically detected using computer vision and soft computing approaches.

-

For the purpose of defining plant pest diagnosis, identifying citrus plant pests, rice pests, and cotton insects, as well as other methods of tracking, in agricultural settings.

-

The identification of agricultural pests and illnesses uses both machine learning techniques and some Deep Learning approaches.

2 Crop pest classification

This section mainly focus on, Citrus pest classification, Rice Pest Detection and Cotton Pest Detection is discussed.

2.1 Citrus pest classification

In 2021, Morteza et al., [31] presented Citrus was a significant source of ascorbic acid and ascorbate for human health in a variety of industries. Citrus crops have low quality and quantity due to pests and sickness. Sooty Mold, General citrus pests, Leafminer, and Pulvinaria, are identified using a deep learning-based intelligent method. When creating ensemble, three degrees of variety are considered: classifier, feature, and a broad range of data.

In 2020, Utpal et al., [8] proposed real-time citrus leaf disease classification by CNN using smartphone images. Self-Structured (SSCNN) classifiers and MobileNet are two types of CNN used to categorize diseases of citrus leaves during the productive stage. Suggested research created a citrus disease dataset based on smartphone images. The citrus dataset was employed to test and train both models. According to the approach, the SSCNN was more precise for smartphone image-based citrus leaf classifier. Furthermore, Janarthan, et al., [25] discovered Citrus Disease Classification Using Deep Metric Learning and Sparse Data. Consistent deep metric learning-based architecture was offered for pest identification in citrus. To accurately diagnose citrus diseases, offer a categorization network based on spots that includes cluster prototype module, an embedding module, and basic neural network classifier. The efficiency of suggested approach in reliably diagnosing various diseases from leaf photos has been demonstrated using a collection of citrus fruit that is widely accessible.

In 2019, Pan, et al., [49] presented that CCN connected smart mobile identification for citrus-related diseases. Densely Connected convolutional networks (DenseNet) is a citrus disease intelligent sensor that detects six different image kinds to diagnose the disease. Implementation was operated on a smartphone and makes use of the WeChat applet, which allows users to post photographs and receive comments. The recognition accuracy of citrus diseases has increased to almost 88%, and the predicted time consumption has been decreased.

In 2018, Muhammed et al., [54] presented some combinations for pest detection and classification in citrus trees. There are two key phases to the suggested technique. They are the identification of infection marks on fresh fruit and the classification of citrus disorders on leaves. An optimal weighted segmentation algorithm was used to recover the citrus lesion spots from an upgraded input image. Figure 1 explains citrus diseases including citrus scab, melanose, black spot, citrus canker and anthracnose were detected and classified using these datasets.

In 2017, Ali et al., [3]. presented about identifying and classifying illnesses in citrus plants used by ΔE segmentation algorithm, HSV, RGB, and LBP as descriptors. Classification at both the image and illness levels was applied. KNN, Boosted tree, Cubic SVM, and Bagged tree methods of ensemble classification are also used. When compared to other classifiers, Bagged tree performs better when any color features are used. Table 1 shows the review about Citrus pest classification.

2.2 Rice pest detection

In 2021, Tian et al., [61] researched the most dangerous disease was rice burst, which threatens global production and causes significant financial loss across the universe. The findings showed that rice plants had a significant pattern of variability continued during plant-pathogen interactions. The high accuracy of over 80% during the beginning phases of infection suggested that consistent hyperspectral signals could be used for non-destructive RLB infection detection.

In 2020, Qing et al., [50] focused on machine vision to develop an autonomous system for rice light-trap pests. That system consists desktop and a cloud server. The light trap kills, and disperses insects then collects and sends photos of the captured insects to a cloud server. The automatic light trap monitoring system’s efficacy and accuracy were demonstrated in field tests. Moreover, Jiang et al., [26] discovered deep learning with SVM used to recognize four rice leaf diseases in images. CNN to extract the features of the rice leaf disease photos was utilized. The SVM method was used to classify and predict the disease. The average rate of recognition of rice illness based on deep learning and SVM was 96.8% when the kernel parameter g = 50 and penalty parameter C = 1, according to the experimental data. The accuracy was higher than that of typical backpropagation neural networks. Using deep learning and SVM to predict rice problems and provide technical plans for various crop fields can be extremely valuable.

In 2019, Zhou et al., [79] detected Rice Disease Using Faster R- CNN Fusion and additionally FCM-KM. FCM- KM addresses a variety of issues with rice disease photos, including noise, blurry image edges, excessive background interference, poor detection rate, also Faster R-CNN fusion is suggested. FCM-KM analysis was used in conjunction with the R-CNN algorithm for identifying rice diseases to select different widths of the Faster R-CNN target frame. A rice pest picture database was built for the identification of rice burst, bacterial blight, and sheath blight diseases.

In 2018, Xiao et al., [72] detected rice blast recognition using a neural network and its principal component analysis to address the issues of rice blast artificial recognition. For every illness, 10 morphological features, six color features, and five texture traits were retrieved from a picture of the harvested abscess. Next, the correlation between the distinctive parameters was investigated using stepwise regression analysis. When compared to the BP neural network recognition approach, the average recognition rate increased. Moreover, Shiba et al., [57] detected Rice stripe disease in paddies. The small brown planthopper spreads the rice stripe virus (RSV). Because of dramatic rise in disease-related rice crop loss in Japan, it was critical to establish management strategies as soon as possible. Research found that sick Plants appear from mid-June to July for the first time and that the disease then spreads from damaged plants to neighboring ones. For effective, pest restrain should target either first cultivated rice paddies can lead to sudden evolution as in quantity of infected plants inside of the meadow. Three Rice diseases are shown in the Fig. 2. Table 2 shows the review on rice pest detection.

2.3 Cotton pest classification

In 2022, Wang et al., [69] proposed UAV remote sensing and Cotton habitat estimate by helpers and detection at the farm level based on GIS. GIS network tool used to determine cotton transit routes and developed to locate and display prospective volunteer cotton-growing sites. According to the findings, remote sensing UAVs in combination with used image processing approaches may be able to know the exact location of volunteer cotton and hence aid in its removal along transit routes.

In 2020, Wei et al., [70] detected Cotton was resistant to Verticillium wilt when the acetolactate synthases VdILV2 and VdILV6 are silenced by the host. Verticillium dahliae causes cotton Verticillium wilt, which was a damaging fungal disease and one of the most difficult to treat. HIGS technology suppresses the expression of VdILV2 in order to describe their role in V. dahliae. of HIGS or VdILV6 prevents a fungicide and effective control for V. dahliae-caused Verticillium wilt. Moreover, Chen et al., [11] predicted Bidirectional long short-term memory networks related to the temperature and wind patterns. Use of bi-directional RNN with LSTM units for identifying the development of cotton pests and illnesses using weather parameters was discussed. The problem of pest and disease occurrence prediction was defined as a time series prediction in the starting stage. The challenge was then solved using a bi-directional LSTM network (Bi-LSTM), which can represent long-term dependence on sequential input’s past and future contexts. Table 3 shows the reviews about cotton pest classification.

In 2019, Barbedo et al., [7] detected plant diseases from individual lesions and patches. The natural variation within each image was indirectly considered by the segmentation into small bites, and the proposed solution can not only greatly expand the number of image datasets, but also massively enhance variety.

In 2018, ZHANG et al., [75] detected segmentation of photos automatically for diseased cotton in a natural setting. The Active Contour model was presented, which was based on worldwide slope and local information. As a bit of global system, the pictorial gradient was integrated in the sectional tone diminishing the border compound function. Cotton leaves photos with a single backdrop can be segmented using the proposed approach. The suggested approach perform better in the primary lesion area over the target leaf edges for cotton leaves images with fuzzy edges and staggered condition. Moreover, Wang et al., [66] In China, miridae insect family of Apolygus lucorum was a major pest related to cotton. The data are summarized in which Primary data of beetle populations and PCR-based sensing of components in order to identify plants DNA in insect bound between A. lucorum population and preference for eating for host plants, Humulus scandens and Alfalfa is to recognize the residual plant and food kinds particularly A. lucorum was created. Clear evidence. of A. lucorum’s feeding choice on H. scandens and M. sativa at various growth stages, which indicated a positive association among populations and A. lucorum’s eating preference on field circumstances, on several plants was presented.

2.3.1 Pest detection by Random Forest (RF)

In this section, random forest model is discussed based on spectral, image, and fusion features of both spectral and image features of crops to study the ideal features dataset necessary to detect pest.

In 2020, Xu et al., [73] identified damage to Dendrolimus punctatus Walker was discovered using BP algorithms and RF. Relevance filtering by RF and the connection linkages from the input layer to the hidden layer, in real numbers of BP methods, reveal that the seven distinctive indicators were responsive to D. In BP neural networks, a “over-fitting” issue occurs, but the RF technique was more resistant, and BP neural networks have a better identification effect. As a result, It was possible to use the RF technique for pest impact and higher variables which Indicates that ratio of collecting information from a variety of sources should be observed when collecting information. Moreover, Zhang et al., [77] developed hyperspectral system was utilized to capture Fusarium-infected wheat head spectral and data, that was used to build models they might detect the FHB disease in wheat. Models that combine spectral and image properties may be more useful in detecting FHB illness than models based solely on spectral and image features. DCNN may utilised as a new tool for detecting and forecasting FHB in wheat was detected. Also, Zhang et al., [78] developed digital imaging, and tested the effects of fungi for the control of wheat Fusarium Head Blight. An unique way to estimate FHB symptoms and assessing the success of fungicide spraying programmes promptly was established. The results reveal that the segmentation method was effective in discriminating wheat ears from a crowded field. A normalized green-blue difference vegetation index (NGRBDI) was produced to enhance the effect of fine identification by choosing 18 elements in the trial. According to the findings, the offered method can accurately measure the performance of fungicides in the environment for mitigating wheat FHB. The impact of various fungicide treatment rates on control effectiveness has to be investigated further.

In 2019, Dhingra et al., [17] created new neutrosophic technique for leaf disease vision-based classification and identification. Three membership elements identify the segmented neutrosophic image: true, false, and average. Recent subsets based on segmented regions, such as texture, colour, histogram, and disease sequence region, are analyzed to determine if a leaf was diseased or healthy. The suggested system tested using a total of 400 instances and it was a useful tool for identifying diseases in leaves. A new feature set appears promising, with a classification accuracy of 98.4%. It is also possible to investigate a previously unrecognised combination feature extraction, feature selection, and learning techniques to improve the accuracy of illness detection and classification models. Table 4 shows the review about Random Forest.

In 2018, Gao et al., [21] identified RF algorithm with hyperspectral close picture collage images, weeds in maize crop. Image processing, feature engineering, and machine learning approaches are often used to build an effective classifier for the three categories of weeds and maize. Overall 185 spectral data were created, including reflectance and vegetation aspects of the index. When determining the best number of features to use in the classification model, precision-oriented feature minimization was applied. At 0.05 significance level, the McNemar test revealed that the best RF algorithm outperformed the KNN model. The results could encourage more field uses of this camera for SSWM implementation. In addition, Su et al., [59] investigated a moderate spectral bands camera with five bands and a low-altitude flying route for sustainable farming are used to assess yellow rust virus causes gene distress in various crops. The main purpose to see whether it’s easy to tell the difference between grains that are both strong and sick with yellow rust and choosing a region of spectral and Spectral Vegetation Index can discriminate well also creating a detection tool for yellow rust at a cheap price for agriculture size applications. With monitoring results, the learned method was also deployed to the entire area of interest.

2.4 Pest detection by SVM

This section comprises about SVM and is used for detecting insects from crop surface and it produces superior results.

In 2020, Mustafa et al., [43] detected an automated hybrid intelligence system for the identification of herbal species and the detection of herb plant illness. The purpose was to develop a computerized approach that was used to discriminate among plants and discover diseases in plants at an early stage. That study used computer vision and electronic nose to develop a system for recognising species and finding illness in herbs at a beginning period, concentrating on the removal of herb leaves smell, structure, hue, and thickness, as well as a hybrid intelligent system that included SVM, NB, fuzzy inference, and probabilistic neural network (PNN). Moreover, Ebrahimi et al., [19] detected pest using vision and the SVM classification method. In greenhouses, automatic pest sensing was a vital way to monitor insect attacks. To detect parasites on strawberry plants, experts used a novel image processing technique. For parasite classification and thrips identification, the SVM approach with difference kernel function was applied. The results demonstrate that the SVM with area index and deepen as color index creates the most accurate categorization, with the average percent error of under 2.25%. Reviews of Pest Detection by SVM is shown in below Table 5.

In 2019, Hu et al., [24] detected Tea leaf disease using a low-shot learning method. A low recognition technique for illness assessment in tea leaves to stop and heal tea leaf illnesses in a timely basis. The SVM method split disease spots in tea leaf infection pictures, which extracts color and texture information. The improved conditional deep convolutional generative adversarial networks (C-DCGAN) for data augmentation generate fresh training samples from segmented disease spot images, are trained VGG16 deep learning model to detect ailments in tea leaves.

In 2018, Khaled et al., [30] investigated the use of electrical characteristics was a potential such as dielectric constant, obstruction and conductivity in the identification of BSR illness in oil palm trees. The differences between the features selection models, electrical properties, and classes were shown to be significant in investigation. The study findings will help to expand understanding of how electrical characteristics might be used to detect BSR illness in oil palm plants early. Beside, Chen et al., [9] investigated high spatial resolution satellite data to map wheat rust. Wheat rust photos from Henan Province, China, indicate a serious imbalance in sampling distribution. The categorization results can attain an accuracy of more than 90%, changing between 90.80% and 95.10%. The filter methods has a better overall correctness of 93.60% than the filters characteristic method of selecting, it has a 92.65% accuracy level. Additionally, Deng et al., [15] detected image Insect pest surveillance and identification using bio-inspired approaches. Recognizing and detecting insect pests was critical for food security, a healthy life, and the agricultural economy. In that research, approaches based on the human visual system were proposed for quick Insect pest detection and diagnosis. The SIFT-HMAX model for insect pests was suggested. Finally, the recognition was carried out using the SVM model.

Kaur et al., [29] had provided N-gram and KNN classifier-based sentiment analysis system. Various strategies have been developed for sentiment analysis during the past few years. The approach used to categorize tweets into good, negative, and neutral ones using an SVM classifier is what motivated the design of the suggested system. N-gram and KNN classifiers are the foundation of the suggested system.

Vishnoi et al., [65] had used to automate the plant disease classification and identification system utilising computer vision and machine learning approaches is summarised in this publication. To reduce agricultural losses, India was in desperate need of an automated system that is efficient at detecting plant diseases. A number of widely accepted methods for image capture, pre-processing, lesion segmentation, feature extraction, and finally classifiers are presented in the survey. Additional difficulties during the feature extraction module have been listed. In order to increase efficiency without jeopardising the current tools, the limitations of existing systems have also been considered.

2.4.1 Pest detection by decision tree

This section comprises a detailed facts about Decision trees, and is used in Sunn Pest migration from winter quarters to wheat fields and to detect pest in leaves.

In 2020, Van et al., [64] detected Hyperspectral imaging was used to spot Alternaria solani in agricultural potato crops. The automatic finding of Alternaria solani early blight could result in a significant reduction in the usage of plant care chemicals and the resulting output losses. A practice area and validated proximal sensing apparatus was used to take high-resolution hyperspectral images, which were subsequently used to accurately map Alternaria lesions.The employment of sophisticated object classification algorithms, such as CNN, to boost model performance by integrating the geographic environment in the classifier might boost detection capability even further. That may lead to Alternaria mapping using UAVs or tractors. Furthermore, Amirruddin et al., [5] presented about chemical analysis, which was time-consuming, is a typical method of determining chlorophyll (chl). The chl-sensitive wavelengths were chosen using feature selection, and the chl sufficiency levels were classified using Decision Tree (DT) utilizing the wavelengths chosen. Also, the data revealed that chl-sensitive wavelengths and machine learning classification were reliant, as evidenced by distinct chl-sensitive wavelengths and classification accuracy assessments. Findings confirm the theory that older fronds are less capable of sending out a powerful signal of chl.

In 2019, Deng et al., [16] developed Hyperspectral reflectance to acknowledge and label citrus Huanglongbing in fields. To citrus HLB detection, a strategy for choosing distinctive bands of hyperspectral information was investigated. To model the disease, the use of various forms of data and machine learning was contrated. It was discovered that there were 20 useful bands based on high entropy distance, which were primarily situated in the regions like green, red edge, and near-infrared. There was some redundancy among the 20 pre-selected bands, especially in the green and Zones of near-infrared light. Furthermore, Johnson et al., [27] focused on harvesting decisions by farmers and vegetable losses in primary production. Since the revelation that 40% of food in the United States was never more eaten, issues of food loss and waste have gained traction. However, losses in the field are poorly understood, and the economic and growing variables that influence fruit and vegetable growers’ decisions can influence how much product was left unharvested. In North Carolina, the authors conducted seventeen semi structured interviews with commercial vegetable growers ranging in size from mid-sized to big. The researches focus on the key factors that influence food waste on the farm, such as whether growers can find a consumer for their crops, the quality of the produce, the available price, the cost of disapproval of a product, and the priority of another crop maturing and are harvestable. Reviews about Pest Detection by Decision Tree is shown in Table 6.

In 2018, Shuaibu et al., [58] investigated apple hyperspectral band selection without supervision Detection of Marssonina blotches. Indoor hyperspectral pictures depicting the evolution of AMB disease were gathered and analyzed. The spectral domain of the images was subjected to unsupervised feature extraction was an effective approach termed orthogonal subspace projection (OSP), which selected ten ideal bands from reflectance data. The OSP algorithm simultaneously chose features and reduced redundancy, with the number of optimal features established by simple end criteria due to the difference among sequential OSP norm values. With 84% accuracy, the ensemble bagged classifier achieved the greatest overall performance.

In 2017, Rady et al., [51] detected Codling moth infestation in GoldRush apples via hyperspectral imaging. It was critical to detect pest infestations quickly in order to keep the freshness of fruits including apples. The goal was to see how effective hyperspectral imaging in the visible and near-infrared in the diffuse reflectance mode was at spotting also codling moth (CM) categorizing infestation in fruits. For the photos, mean reflectance spectra (MRS), Knn, DT, feed-forward artificial neural networks (FFNN) were generated. By sequential forwards selection (SFS) method, the most influential wavelengths were identified. This research reveals that VIS/NIR spectral portrait can be used as an undestroyed method of identifying CM infection in apples.

3 Crop pest detection by Naive Bayes

This section comprises about Naive Bayes classifier, and is simple and quick to forecast the test data set’s class. It’s good at multi-class prediction in crop pest detection.

In 2021, Rajendran et al., [52] discovered an ontology design, that present a NB Classifier. Details for the dataset were gathered from a variety of government agriculture documents and websites. The Matthews correlation coefficient (MCC), ROC area, PRC region, accuracy, sensitivity were chosen. The estimated performance was verified using the soil, insect, and weather classifications, as well as the overall class findings. Sensitivity performance of phrase extraction method and the found instance correlations are verified using random samples.

In 2020, da et al., [14] discovered that Coffee berry necrosis was a fungal infection that has a substantial impact on coffee productivity when it occurs at a top standard. Landsat 8 OLI images were obtained three times throughout the grain filling period, nearest to anthracnose data collected, and were subjected to atmospheric adjustments using DOS, ATCOR, and 6SV techniques. Random sampling was used to test the photos categorized by machine learning techniques such as RF, Multilayer Perceptron, and Naive Bayes 30 times. Further, Mustafa et al., [43] recognized about species and plant early sickness are both demanding tasks that are difficult to automate. Manual identification was a time-consuming method that needs a prior awareness of the plant’s structure, flavour, and texture. As a result, the objective was to develop a computerized system for recognizing species and monitoring severe sickness in herbs depending on these traits. The function of species identification and early disease detection was also delivered by equipment that was transportable and simple, such device that was integrated, at any time. Reviews about Crop pest Detection by Naïve Bayse is shown in Table 7.

In 2017, Mondal et al., [42] identified about leaves, entropy-based binning and the NB were used to grade the momordica and yellow mosaic virus disease of okra also a structural operation-based simple leaf diagnosis technique based on NB to determine YVMV disease of lady’s finger and bitter gourd leaf pictures. Following an analysis of the comparable works in the literary and taking into consideration the information of agriculture experts in that area, forty-three features were chosen. The leading feature set for lady’s finger and bitter gourd leaf photos was then determined using the Pearson Correlation Coefficient approach.

3.1 Pest detection by CNN

This section comprises by CNN, in agricultural fields like as plant pest detection, CNN has gotten a lot of interest. The systematic identification of relevant attributes from the data set is the reason for the CNN-based model’s attractiveness. It is created to detect and diagnose plant diseases using simple healthy leaf and damaged plants.

In 2022, Hassan et al., [22] discovered a deep learning method based on feedback connections and the initial layer. To reduce the parameter numbers, depthwise separable convolution was employed. Three independent plant disease datasets are trained and tested in executed model. The performance accuracy on the plant record was 99.39%, 99.66% on the rice illness dataset, and 76.59% on the cassava dataset. Inception design uses depth-wise separable convolution, it lowers processing costs by a factor of 70 by reducing the set of variables. Moreover, Wu et al., [71] presented about CNN for Fine-Grained Categorization of Plant Leaf Diseases. The complex network takes up a lot of memory storage and wastes a lot of processing power, making it hard to fulfill the demand for low-cost devices. To overcome the challenge, that research provides a fine-grained classification of disease rules based on a network. During training, “Reconstruction-Generation Model” was included. A fine-grained disease identification strategy built by visual dnns for peach and tomato disease leaflet detection was provided to solve the shortcomings of deep neural networks in crop disease identification.

In 2020, Li et al., [39] discovered about SCNN-RF and SCNN-KSVM are the ways for resolving this ambiguity. On three separate datasets, comparison experiments with various deep learning models were performed. The SCNN-RF and SCNN-KSVM perform other pre-trained deep models on fidelity, recall, and F1-score with less variable, according to the results. The CNN with a traditional machine learning algorithm it was a significant make attempt to detect plant diseases. Furthermore, Li et al., [37] developed data enhancement technique for CNN-based methods that were effective. Test Time Augmentation (TTA) technique, was used in the trained multi-scale model to infer input images with different resolutions separately. For the final result, apply Non-Maximum Suppression (NMS) to blend the detection findings from multiple image scales. Experiments on the wheat sawfly, mite, aphid, and rice planthopper in property source illustrate data preprocessing technology gives a highest accuracy of 81.4% for Mean Average Precision (MAP), it performs methods by 11.63%, 7.93%, and 4.73%.

In 2019, Ai et al., [2] proposed that CNN was used to detect crop bugs automatically. For development, the Inception-ResNet-v2 model was employed. An inter convolution with a merge immediate edge was included in the leftover component of design. The connection into the ReLu function activates it when the combined convolution procedure was completed. The experimental findings demonstrate that model’s overall recognition accuracy was 86.1%, proving its efficiency. Following the model’s training, built and implement a Wechat applet for recognizing crop diseases and insect pests.

In 2018, Ferentinos et al., [20] discovered deep learning approaches, by healthy leaf images and diseased plants. CNN models were created to conduct plant disorder identification and diagnosis. A total of 87,848 photoes had been used to classify. There were 25 distinct tree and 58 different classes of illness pairs, including healthy plants. Several designs were developed, with the best-obtained success rate was 99.53% for find the related illness pair (or healthy plant). That approach has shown a lot of potentials and getting more and better information to develop the system and make it more reliable in real-life situations. Reviews on Pest Detection by CNN are shown in Table 8.

Negi et al., [68] had described a technique for identifying different plant species in color photos by a deep CNN model. According to experimental results, the CNN-based approach achieves an accuracy of around 96.02% with a logarithm loss of 0.01 in only 32 epochs on 10 plant classes. Even if the outcomes of our suggested study are better, even better results might be achieved by using more epochs.

3.2 Pest detection by LSTM

This section is composed of LSTM and is used to locate pests such as Tessaratoma papillosa and analyse environmental data from weather stations in order to predict pest outbreaks.

In 2021, Liu et al., [40] investigated the difficulty of plant ailment that can be seen in a systematic way. A comprehensive crop illness record with 271 crop disease classes and 220,592 photos was prepared to aid plant disease identification research. Plant disease recognition using information and adjusting the weights all visual regions loss the disease sections were handled. To determine the discriminative level of each patch, and the cluster arrangement of these portions, determine the strengths of all the separated pieces from each picture. The proposed methods efficiency was demonstrated by quantitatively assessments on the PDD271 and PlantVillage datasets. Table 9 describes about Pest Detection by LSTM.

In 2020, Chen et al., [10] proposed about pest identification, that image recognition technologies with artificial intelligence are integrated into the landscape and the Internet of Things (IoT). To find the location of Tessaratoma papillosa, apply deep learning YOLOv3 for picture segmentation and use LSTM to analyze environmental data from weather sensors in order to predict pest presence. The suggested method alerts farmers to the presence of certain pests before they become a major problem. It increases the overall economic worth of agriculture by offering appropriate pest management strategies that limit agricultural failures.

In 2019, Turkoglu et al., [62] suggested Multi-model LSTM-based Pre-trained Convolutional Neural Networks (MLP-CNNs). The hybrid model is made on the basis of LSTM networks and CNN models that have already been pre-trained. In transfer learning, obtain deep features from many completely linked regions of these pre-trained deep models. The feature extraction models utilized in research are AlexNet, DenseNet201, and GoogleNet.

3.3 Pest detection by DCNN

This section comprises by DCNN and recognises the relevant features without the need for human intervention. Using a deep learning approach, the DCNN procedure can detect plant illness in great detail.

In 2020, Shi et al., [56] detected eight common stored grain insects, to detect neural network architecture based on R-FCN. The network has improved its capability to detect insects in tough surroundings and has increased its response time. A modified DenseNet-121 was suggested to improve the detection exactness of deep convolutional neural networks in identifying grain storage insects. Furthermore, Vaishnnave et al., [63] suggested an efficient method for detecting and classifying groundnut illnesses using DCNN. The DCNN classification was employed to categorise the diseases, and the deep learning technique was mainly used to detect leaf disease. DCNN achieved a comparison accuracy of 94.96%, which is the best in the industry. When comparing to other DCNN layers, the 6th layer has a 95.28% accuracy rate. DCNN’s overall accuracy performance in each class was 99.88%. Table 10 shows about Pest Detection by DCNN.

In 2019, Thenmozhi et al., [60] introduced a challenging problem faced by farmers in correctly classifying and identifying all sorts of agricultural insects due to their identical appearance during the early stages of crop growth. In image classification applications, CNN with deep architectures was used to solve that problem because it extracts elements instantly and learns complex high-level features. On available insect datasets, effective deep CNN model for classifying insect species was developed. To prevent the network from generalization, data preprocessing processors such as Projection, rotation, spinning, and translation are used. The findings revealed that the suggested CNN model was more effective than pre-trained models at classifying various species of insects in field crops.

In 2018, Zhang et al., [76] improved Cifar10 and GoogLeNet based on deep learning for maize leaf disease recognition. Adjusting parameters, with different combinations and reducing the number of classifiers resulted in two better models that were used to train and test nine types of leaf pictures related to maize. The improved models have much lower number of parameters than the VGG and AlexNet structures. It may have enhanced illness of the corn leaf accuracy and reduced parallel iterations, resulting in improved model training and identification efficiency.

4 Pest identification by DBN

This section is composed of DBN and it detects plant diseases and predicts their occurrence.

In 2020, Cristin et al., [13] identified Plant disease using the RiderCuckoo Search Algorithm, which was positioned on deep neural networks. The subjected image was in pre-processing phase to remove distortions. Following the capture of the pre-processed image, it comes through a classification stage, which uses piecewise fuzzy C-means clustering (piFCM). The classification phase, employs the DBN for effective plant disease identification, was applied to the texture feature. The suggested Rider-CSA was used to train the DBN in this application. Table 11 shows the Reviews about DBN.

5 Advanced prediction methods

Dong et al., [18] had suggested a brand-new event data gathering strategy called RMER (Reliability and Multi-path Encounter Routing). As a result, it was possible to significantly cut energy usage, which will enable an even longer network lifetime. The use of RMER to event detection outperforms alternatives, according to theoretical and experimental simulation results.

Yadav et al., [74] had proposed machine learning methods like PCA, K-means, random forest, Multilayer Perceptron (MLP), and naive Bayes to properly forecast the diabetic illness. The diabetes prediction model goes through many processes, including pre-processing of the data, feature extraction using PCA, and classification using voting classifier. This research’s primary goal is to increase forecast accuracy. Voting classifier was implemented for the diabetes prediction in order to increase accuracy.

Negi et al., [46] had suggested about detect the common human actions automatically using the ResNet-50 architecture, which is built on residual learning and transfer learning. In the proposed study, a wealth of empirical data is used to show that residual networks are easier to optimise and benefit from much higher depth. The UTKinect Action-3D public dataset of human daily actions is used in the research.

Kumari et al., [35] had presented classification algorithms in machine learning and their application to therapeutic datasets was being debated everywhere. Dataset has been trained on different methods, and the highest voted class is anticipated as the outcome. This was done in recognition of the fact that integrating many predictions yields results that are more accurate than relying just on one prediction. Since liver disease was the only main cause of mortality that was still a perpetual problem, it was imperative to diagnose and treat it early on in order to greatly lower the risk of death. The dataset of Indian Liver Patients was used in the suggested study, and it clearly shows that grouping classification algorithms effectively increase the probability of sickness prediction.

Alok et al., [4] had considered whether AI may be used to forecast deadly malaria in open-source, affordable, and accurate deep learning technologies and discussed about deep neural convolution (CNN) networks-based categorization of malaria-infected cells with reasonable precision also go over how to put together a pathologist-cleared visual data set for deep neural network training, as well as some approaches to data augmentation that are used to greatly increase the dimensionality of the data given the overfitting issue that is particularly prevalent in deep CNN training.

Negi et al., [47] had suggested deep learning models based on CNN and VGG16 to implement and enforce AI-based safety precautions to identify the face mask on Simulated Masked Face Dataset (SMFD). The method can distinguish between faces that are disguised and those that aren’t, making it easier to wear face masks and maintaining a safe working environment while keeping an eye out for safety violations.

Sharma et al., [55] had presented about government, security, and law enforcement in India are challenged by the country’s enormous population. Public sentiment toward upcoming and previous events, such as popular meetings and governmental policies, reveals public attitudes and may be used to assess how much support, disorder, or disruption there was in such circumstances. Therefore, analysing the emotions expressed in online material may be useful for a variety of businesses, especially those involved in security, law enforcement, and the public sector. The state-of-the-art model for lexicon-based sentiment analysis algorithm was extended in this research to analyse human emotions.

Negi et al., [44] had presented AI group. The outcome-based strategy was used to scan, evaluate, forecast, and keep tabs on both existing patients and perhaps new patients. In particular, advancements for measuring social distances or identifying face coverings have drawn attention. The majority of the most cutting-edge face mask identification techniques now in use are based on deep learning, which requires a huge number of face samples. In order to successfully stop the transmission of the COVID-19 virus during an outbreak of the corona virus, almost everyone dons a mask. Our objectives are to examine the idea of model pruning with Keras-Surgeon and train a tailored deep learning model that aids in detecting whether or not a person is wearing a mask.

Kumar et al., [34] had proposed on browse, retrieve, and analyse the vast amount of video footage produced continuously by the different cameras used in multi-view video systems. Due to inter-view relationships, lighting changes, and the bearing of many dormant frames, accessing and processing such massive amounts of data in real time becomes a very difficult operation. The paper demonstrates a method for detecting and summarising the event in multi-view surveillance movies using boosting, a machine learning algorithm, as a solution to the initial issues.

Kumar et al., [33] had suggested a local-alignment-based FASTA technique to summarise the action in multi view films. The issue of lighting changes was fixed, fine texture details are eliminated, and things in a frame are detected via an object detection framework that uses deep learning. Then, using local alignment and the FASTA method, interview relationships between various video views are recorded. The frames with little activity are extracted when object tracking is used. Evaluations, both subjective and objective, unequivocally show that the suggested strategy was effective. Research demonstrates that the suggested summarising technique successfully cuts the video material while retaining important information in the form of events. Review about Advanced Prediction System is shown in below Table 12.

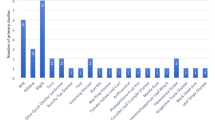

In the Below section, an overview graph of year wise and its journals plot are discussed.

Figure 3, shows that in the year wise plot in the year 2022 three journals are taken, in the year 2021 Fifteen journals are taken, in the year 2020 Thirty-one journals are taken, in the year 2019 Twelve journals are taken, and in the year 2018, Fifteen journals are taken, in the year 2017, Seven journals are taken, and in the year 2016 one journal is taken.

6 Conclusion

In this work, several existing techniques are analyzed based on machine learning and deep learning to detect plant disease. The techniques such as RF, SVM, DT, and NB are machine learning-based approaches that provide a huge impact on the prediction of plant leaf disease identification. Similarly, the deep learning-based model provides more effective performance than the machine learning technique. In order to improve the performance of disease prediction from various plants, such as citrus pest detection, rice pest detection, and cotton pest detection, certain deep learning algorithms, such as CNN, LSTM, DCNN, and DBN, are already used. The current approaches, nevertheless, are still not as good at making predictions, thus a more improved model has to be created. So, in order to improve plant disease identification with the addition of a metaheuristic method, we want to create a unique hybrid machine learning/deep learning-based strategy. With this model, the prediction performance can be enriched, and obtained better classification results. In the future, it will also be necessary to combine Internet facilities, such as agricultural Internet of Things and mobile terminal processors, to achieve real-time monitoring and pest identification of grain storage warehouses, which is conducive to promoting the modernization and intelligence of the agricultural industry.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Ahmad M, Abdullah M, Moon H, Han D (2021) Plant disease detection in imbalanced datasets using efficient convolutional neural networks with stepwise transfer learning. IEEE Access 9:140565–140580

Ai Y, Sun C, Tie J, Cai X (2020) Research on recognition model of crop diseases and insect pests based on deep learning in harsh environments. IEEE Access 8:171686–171693

Ali H, Lali MI, Nawaz MZ, Sharif M, Saleem BA (2017) Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput Electron Agric 138:92–104

Alok N, Krishan K, Chauhan P (2021) Deep learning-based image classifier for malaria cell detection. Machine learning for healthcare applications, pp 187–197

Amirruddin AD, Muharam FM, Ismail MH, Ismail MF, Tan NP, Karam DS (2020) Hyperspectral remote sensing for assessment of chlorophyll sufficiency levels in mature oil palm (Elaeis guineensis) based on frond numbers: analysis of decision tree and random forest. Comput Electron Agric 169:105221

Ayan E, Erbay H, Varçın F (2020) Crop pest classification with a genetic algorithm-based weighted ensemble of deep convolutional neural networks. Comput Electron Agric 179:105809

Barbedo JGA (2019) Plant disease identification from individual lesions and spots using deep learning. Biosyst Eng 180:96–107

Barman U, Choudhury RD, Sahu D, Barman GG (2020) Comparison of convolution neural networks for smartphone image based real time classification of citrus leaf disease. Comput Electron Agric 177:105661

Chen D, Shi Y, Huang W, Zhang J, Wu K (2018) Mapping wheat rust based on high spatial resolution satellite imagery. Comput Electron Agric 152:109–116

Chen CJ, Huang YY, Li YS, Chang CY, Huang YM (2020) An AIoT based smart agricultural system for pests detection. IEEE Access 8:180750–180761

Chen P, Xiao Q, Zhang J, Xie C, Wang B (2020) Occurrence prediction of cotton pests and diseases by bidirectional long short-term memory networks with climate and atmosphere circulation. Comput Electron Agric 176:105612

Cheng X, Zhang Y, Chen Y, Wu Y, Yue Y (2017) Pest identification via deep residual learning in complex background. Comput Electron Agric 141:351–356

Cristin R, Kumar BS, Priya C, Karthick K (2020) Deep neural network-based Rider-Cuckoo search algorithm for plant disease detection. Artif Intell Rev 53(7):4993–5018

da Rocha Miranda J, de Carvalho Alves M, Pozza EA, Neto HS (2020) Detection of coffee berry necrosis by digital image processing of landsat 8 oli satellite imagery. Int J Appl Earth Obs Geoinf 85:101983

Deng L, Wang Y, Han Z, Yu R (2018) Research on insect pest image detection and recognition based on bio-inspired methods. Biosyst Eng 169:139–148

Deng X, Huang Z, Zheng Z, Lan Y, Dai F (2019) Field detection and classification of citrus huanglongbing based on hyperspectral reflectance. Comput Electron Agric 167:105006

Dhingra G, Kumar V, Joshi HD (2019) A novel computer vision based neutrosophic approach for leaf disease identification and classification. Measurement 135:782–794

Dong M, Ota K, Liu A (2016) Reliable and energy-efficient data collection for large-scale wireless sensor networks. IEEE Internet Things J 3(4):511–519

Ebrahimi MA, Khoshtaghaza MH, Minaei S, Jamshidi B (2017) Vision-based pest detection based on SVM classification method. Comput Electron Agric 137:52–58

Ferentinos KP (2018) Deep learning models for plant disease detection and diagnosis. Comput Electron Agric 145:311–318

Gao J, Nuyttens D, Lootens P, He Y, Pieters JG (2018) Recognising weeds in a maize crop using a random forest machine-learning algorithm and near-infrared snapshot mosaic hyperspectral imagery. Biosyst Eng 170:39–50

Hassan SM, Maji AK (2022) Plant disease identification using a novel convolutional neural network. IEEE Access 10:5390–5401

Hou C, Zhuang J, Tang Y, He Y, Miao A, Huang H, Luo S (2021) Recognition of early blight and late blight diseases on potato leaves based on graph cut segmentation. J Agric Food Res 5:100154

Hu G, Wu H, Zhang Y, Wan M (2019) A low shot learning method for tea leaf’s disease identification. Comput Electron Agric 163:104852

Janarthan S, Thuseethan S, Rajasegarar S, Lyu Q, Zheng Y, Yearwood J (2020) Deep metric learning based citrus disease classification with sparse data. IEEE Access 8:162588–162600

Jiang F, Lu Y, Chen Y, Cai D, Li G (2020) Image recognition of four rice leaf diseases based on deep learning and support vector machine. Comput Electron Agric 179:105824

Johnson LK, Bloom JD, Dunning RD, Gunter CC, Boyette MD, Creamer NG (2019) Farmer harvest decisions and vegetable loss in primary production. Agric Syst 176:102–672

Karar ME, Alsunaydi F, Albusaymi S, Alotaibi S (2021) A new mobile application of agricultural pests recognition using deep learning in cloud computing system. Alexandria Eng J 60(5):4423–4432

Kaur S, Sikka G, Awasthi LK (2018) Sentiment analysis approach based on N-gram and KNN classifier. In: 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC). IEEE, pp 1–4

Khaled AY, Abd Aziz S, Bejo SK, Nawi NM, Seman IA (2018) Spectral features selection and classification of oil palm leaves infected by basal stem rot (BSR) disease using dielectric spectroscopy. Comput Electron Agric 144:297–309

Khanramaki M, Asli-Ardeh EA, Kozegar E (2021) Citrus pests classification using an ensemble of deep learning models. Comput Electron Agric 186:106192

Krishnamoorthy N, Prasad LN, Kumar CP, Subedi B, Abraha HB, Sathishkumar VE (2021) Rice leaf diseases prediction using deep neural networks with transfer learning. Environ Res 198:111275

Kumar K, Shrimankar DD (2017) F-DES: fast and deep event summarization. IEEE Trans Multimedia 20(2):323–334

Kumar K, Shrimankar DD (2018) Deep event learning boost-up approach: Delta. Multimedia Tools and Applications 77(20):26635–26655

Kumari S, Singh M, Kumar K (2019) Prediction of liver disease using grouping of machine learning classifiers. In: International conference on deep learning, artificial intelligence and robotics. Springer, Cham, pp 339–349

Li Y, Yang J (2020) Few-shot cotton pest recognition and terminal realization. Comput Electron Agric 169:105240

Li R, Wang R, Zhang J, Xie C, Liu L, Wang F, Chen H, Chen T, Hu H, Jia X, Hu M (2019) An effective data augmentation strategy for CNN-based pest localization and recognition in the field. IEEE Access 7:160274–160283

Li Y, Wang H, Dang LM, Sadeghi-Niaraki A, Moon H (2020) Crop pest recognition in natural scenes using convolutional neural networks. Comput Electron Agric 169:105174

Li Y, Nie J, Chao X (2020) Do we really need deep CNN for plant diseases identification? Comput Electron Agric 178:105803

Liu X, Min W, Mei S, Wang L, Jiang S (2021) Plant disease recognition: a large-scale benchmark dataset and a visual region and loss reweighting approach. IEEE Trans Image Process 30:2003–2015

Lv M, Zhou G, He M, Chen A, Zhang W, Hu Y (2020) Maize leaf disease identification based on feature enhancement and dms-robust alexnet. IEEE Access 8:57952–57966

Mondal D, Kole DK, Roy K (2017) Gradation of yellow mosaic virus disease of okra and bitter gourd based on entropy based binning and Naive Bayes classifier after identification of leaves. Comput Electron Agric 142:485–493

Mustafa MS, Husin Z, Tan WK, Mavi MF, Farook RSM (2020) Development of automated hybrid intelligent system for herbs plant classification and early herbs plant disease detection. Neural Comput Appl 32(15):11419–11441

Negi A, Chauhan P, Kumar K, Rajput RS (2020) Face mask detection classifier and model pruning with keras-surgeon. In: 2020 5th IEEE international conference on recent advances and innovations in engineering (ICRAIE). IEEE, pp 1–6

Negi A, Kumar K, Chauhan P (2021) Deep neural network-based multi‐class image classification for plant diseases. Automation using the IoT and machine learning, agricultural informatics, pp 117–129

Negi A, Kumar K, Chaudhari NS, Singh N, Chauhan P (2021) Predictive analytics for recognizing human activities using residual network and fine-tuning. In: International conference on big data analytics. Springer, Cham, pp 296–310

Negi A, Kumar K, Chauhan P, Rajput RS (2021) Deep neural architecture for face mask detection on simulated masked face dataset against covid-19 pandemic. In: 2021 international conference on computing, communication, and intelligent systems (ICCCIS). IEEE, pp 595–600

Nigam A, Tiwari AK, Pandey A (2020) Paddy leaf diseases recognition and classification using PCA and BFO-DNN algorithm by image processing. Mater Today: Proc 33:4856–4862

Pan W, Qin J, Xiang X, Wu Y, Tan Y, Xiang L (2019) A smart mobile diagnosis system for citrus diseases based on densely connected convolutional networks. IEEE Access 7:87534–87542

Qing YAO, Jin FENG, Jian TANG, Xu WG, Zhu XH, Yang BJ, Jun L, Xie YZ, Bo YAO, Wu SZ, Kuai NY (2020) Development of an automatic monitoring system for rice light-trap pests based on machine vision. J Integr Agric 19(10):2500–2513

Rady A, Ekramirad N, Adedeji AA, Li M, Alimardani R (2017) Hyperspectral imaging for detection of codling moth infestation in GoldRush apples. Postharvest Biol Technol 129:37–44

Rajendran D, Vigneshwari S (2021) Design of agricultural ontology based on levy flight distributed optimization and Naïve BaSyes classifier. Sādhanā 46(3):1–12

Sethy PK, Barpanda NK, Rath AK, Behera SK (2020) Deep featurebased rice leaf disease identification using support vector machine. Comput Electron Agric 175:105527

Sharif M, Khan MA, Iqbal Z, Azam MF, Lali MIU, Javed MY (2018) Detection and classification of citrus diseases in agriculture based on optimized weighted segmentation and feature selection. Comput Electron Agric 150:220–234

Sharma S, Kumar P, Kumar K (2017) LEXER: Lexicon based emotion analyzer. In: International conference on pattern recognition and machine intelligence. Springer, Cham, pp 373–379

Shi Z, Dang H, Liu Z, Zhou X (2020) Detection and identification of stored-grain insects using deep learning: a more effective neural network. IEEE Access 8:163703–163714

Shiba T, Hirae M, Hayano-Saito Y, Ohto Y, Uematsu H, Sugiyama A, Okuda M (2018) Spread and yield loss mechanisms of rice stripe disease in rice paddies. Field Crops Research 217:211–217

Shuaibu M, Lee WS, Schueller J, Gader P, Hong YK, Kim S (2018) Unsupervised hyperspectral band selection for apple Marssonina blotch detection. Comput Electron Agric 148:45–53

Su J, Liu C, Coombes M, Hu X, Wang C, Xu X, Li Q, Guo L, Chen WH (2018) Wheat yellow rust monitoring by learning from multispectral UAV aerial imagery. Comput Electron Agric 155:157–166

Thenmozhi K, Reddy US (2019) Crop pest classification based on deep convolutional neural network and transfer learning. Comput Electron Agric 164:104906

Tian L, Xue B, Wang Z, Li D, Yao X, Cao Q, Zhu Y, Cao W, Cheng T (2021) Spectroscopic detection of rice leaf blast infection from asymptomatic to mild stages with integrated machine learning and feature selection. Remote Sens Environ 257:112350

Turkoglu M, Hanbay D, Sengur A (2019) Multi-model LSTM-based convolutional neural networks for detection of apple diseases and pests. J Ambient Intell Humaniz Comput:1–11

Vaishnnave MP, Suganya Devi K, Ganeshkumar P (2020) Automatic method for classification of groundnut diseases using deep convolutional neural network. Soft Comput 24(21):16347–16360

Van De Vijver R, Mertens K, Heungens K, Somers B, Nuyttens D, Borra-Serrano I, Lootens P, Roldán-Ruiz I, Vangeyte J, Saeys W (2020) In-field detection of Alternaria solani in potato crops using hyperspectral imaging. Comput Electron Agric 168:105106

Vishnoi VK, Kumar K, Kumar B (2021) Plant disease detection using computational intelligence and image processing. J Plant Dis Prot 128(1):19–53

Wang Q, Bao W, Yang F, Yang Y, Lu Y (2018) A PCR-based analysis of plant DNA reveals the feeding preferences of Apolygus lucorum (Heteroptera: Miridae). Arthropod-Plant Interact 12(4):567–574

Wang J, Li Y, Feng H, Ren L, Du X, Wu J (2020) Common pests image recognition based on deep convolutional neural network. Comput Electron Agric 179:105834

Wang F, Wang R, Xie C, Zhang J, Li R, Liu L (2021) Convolutional neural network based automatic pest monitoring system using hand-held mobile image analysis towards non-site-specific wild environment. Comput Electron Agric 187:106268

Wang T, Mei X, Thomasson JA, Yang C, Han X, Yadav PK, Shi Y (2022) GIS-based volunteer cotton habitat prediction and plant-level detection with UAV remote sensing. Comput Electron Agric 193:106629

Wei C, Qin T, Li Y, Wang W, Dong T, Wang Q (2020) Host-induced gene silencing of the acetolactate synthases VdILV2 and VdILV6 confers resistance to Verticillium wilt in cotton (Gossypium hirsutum L). Biochem Biophys Res Commun 524(2):392–397

Wu Y, Feng X, Chen G (2022) Plant Leaf Diseases Fine-Grained categorization using Convolutional neural networks. IEEE Access 10:41087–41096

Xiao M, Ma Y, Feng Z, Deng Z, Hou S, Shu L, Lu Z (2018) Rice blast recognition based on principal component analysis and neural network. Comput Electron Agric 154:482–490

Xu Z, Huang X, Lin L, Wang Q, Liu J, Yu K, Chen C (2020) BP neural networks and random forest models to detect damage by Dendrolimus punctatus Walker. J Forestry Res 31(1):107–121

Yadav A, Verma HK, Awasthi LK (2021) Voting classification method with PCA and K-means for diabetic prediction. In: Innovations in computer science and engineering. Springer, Singapore, pp 651–656

Zhang JH, Kong FT, Wu JZ, Han SQ, Zhai ZF (2018) Automatic image segmentation method for cotton leaves with disease under natural environment. J Integr Agric 17(8):1800–1814

Zhang X, Qiao Y, Meng F, Fan C, Zhang M (2018) Identification of maize leaf diseases using improved deep convolutional neural networks. Ieee Access 6:30370–30377

Zhang DY, Chen G, Yin X, Hu RJ, Gu CY, Pan ZG, Zhou XG, Chen Y (2020) Integrating spectral and image data to detect Fusarium head blight of wheat. Comput Electron Agric 175:105588

Zhang D, Wang Z, Jin N, Gu C, Chen Y, Huang Y (2020) Evaluation of efficacy of fungicides for control of wheat fusarium head blight based on digital imaging. IEEE Access 8:109876–109890

Zhou G, Zhang W, Chen A, He M, Ma X (2019) Rapid detection of rice disease based on FCM-KM and faster R-CNN fusion. IEEE Access 7:143190–143206

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chithambarathanu, M., Jeyakumar, M.K. Survey on crop pest detection using deep learning and machine learning approaches. Multimed Tools Appl 82, 42277–42310 (2023). https://doi.org/10.1007/s11042-023-15221-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15221-3