Abstract

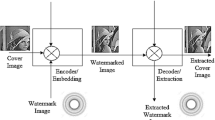

With the growing use of mobile devices and online social networks (OSNs), the sharing of digital content, especially digital images, has become extremely widespread. This has made it easier to cope with the ongoing COVID-19 crisis, which has brought about years of change in how digital content is shared online. On the other hand, powerful digital image processing tools capable of producing perfect image duplicates compromise the privacy of transmitted digital content. Content authentication, proof of ownership, and integrity of digital images are therefore crucial problems in the digital world, and digital watermarking can address them. However, watermarking must balance the trade-offs among imperceptibility, robustness, and payload, and most existing systems cannot handle tamper detection and recovery under intentional and unintentional attacks while respecting these trade-offs. Existing systems also fail to withstand geometric attacks. To resolve these shortcomings, this work proposes a new multi-biometric semi-fragile watermarking system using the Dual-Tree Complex Wavelet Transform (DTCWT) and pseudo-Zernike moments (PZM) for content authentication of social media data. The DTCWT coefficients are used to achieve maximum embedding capacity, and the rotation and noise invariance of pseudo-Zernike moments gives the system a higher level of robustness than conventional watermarking systems. To achieve authentication and proof of identity, four watermarks are embedded instead of the single watermark image used in traditional systems. Three of them are the logo or unique image of the user, the owner's fingerprint biometric, and the metadata of the original media to be transmitted.
In addition, to achieve tamper localization, the pseudo-Zernike moments of the original cover image are computed as a feature vector and embedded as the fourth watermark. For better security, the watermarks are converted into Zernike moments, an Arnold-scrambled image, and SHA outputs, respectively. Then, to maintain the trade-off among the watermarking parameters, the optimal embedding location is determined. Moreover, the watermarked image is signed with the owner's other biometric, namely the digital signature, which is converted into a public key matrix Pkm and embedded into the HL high-frequency subband of a 1-level DWT. The proposed system also provides multi-level authentication: the first level decrypts the multiple extracted watermark images with the appropriate decryption mechanism, and this is followed by comparing the extracted authentication key with the key regenerated at the receiver's end. Simulation results show that, compared with existing watermarking systems, the proposed system achieves superior content authentication under most intentional and unintentional attacks.
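The abstract mentions converting a watermark into an Arnold-scrambled image. As a hedged illustration, the sketch below applies the classical Arnold cat map \((x, y) \mapsto (x + y,\, x + 2y) \bmod n\) to a square image and then inverts it. The function names, the choice of this particular map matrix, and the iteration count are assumptions; the exact variant used in this work is not specified here.

```python
import numpy as np


def arnold_scramble(img: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Scramble a square image with the Arnold cat map (x, y) -> (x + y, x + 2y) mod n."""
    n = img.shape[0]
    if img.shape[0] != img.shape[1]:
        raise ValueError("Arnold scrambling requires a square image")
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = img.copy()
    for _ in range(iterations):
        scrambled = np.empty_like(out)
        # move the pixel at (x, y) to its mapped position
        scrambled[(x + y) % n, (x + 2 * y) % n] = out[x, y]
        out = scrambled
    return out


def arnold_unscramble(img: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Invert arnold_scramble by reading each pixel back from its mapped position."""
    n = img.shape[0]
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = img.copy()
    for _ in range(iterations):
        restored = np.empty_like(out)
        restored[x, y] = out[(x + y) % n, (x + 2 * y) % n]
        out = restored
    return out
```

Because the map matrix has determinant 1, it is a bijection on the pixel grid: scrambling only permutes pixels, and the iteration count can serve as part of a secret key.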

1 Introduction

Online social media play an important role in connecting people across the world: individuals can connect with friends and family and share private information using applications such as Microsoft Teams, Zoom, Google Meet, and Duo. Zoom grew from 10 M users in Dec’19 to 200 M in Mar’20 and 300 M in Apr’20. Since COVID-19 lockdowns were imposed worldwide, people have used online social networks such as WhatsApp, Twitter, Facebook, Instagram, LinkedIn, MySpace, Google+, Sina Weibo, VKontakte, and Mixi for communication, not only for entertainment but also for basic needs. Multimedia data such as text, images, audio, and video are easily distributed over online social networks using the latest technology. However, data distributed over online social media are often targeted by malicious users, causing copyright violations and problems of authenticity, ownership identity, and confidentiality. The discrepancy between the principles of online social media and copyright leads not only to misperception but also to user vulnerability [18]. The authors of [18] recommend that Instagram better inform its users, through its copyright implementation strategy and user agreement terms, about the consequences of sharing content with third parties.

A recent report describes a Design Thinking Challenge in which about sixty-eight young people helped researchers gain a deeper understanding of their experiences with electronic image sharing, the harmful and helpful repercussions of image sharing on adolescents' mental health and safety, and promising interventions that help young people make positive decisions to minimize the risks they face when exchanging photographs via digital devices [19]. A malicious Android app, COVID Tracker, carries ransomware that forces a change of the screen-unlock password (a screen-lock attack). COVID-19 tax-payout scams lead victims to fake government web pages and harvest all their financial and tax information. Two leading medical professionals, Dr. Naresh Trehan of Medanta Medicity and Dr. Devi Shetty of Narayana Health, were targeted by fake news, which led to a nationwide emergency. There is thus an urgent need for data privacy on social media. A comprehensive study of diverse privacy and security threats on social networking sites is presented in [46]; it also emphasizes the numerous threats that arise from sharing multimedia content on social networking sites and discusses state-of-the-art defenses that protect social network users from such threats. Although some social networks, such as Twitter, MySpace, and Facebook, do not always require users to disclose significant private information, attackers may still expose it. In 2005, the Samy worm attacked MySpace, exploiting its vulnerabilities and spreading very quickly. Similarly, in 2009, the Mikeyy worm attacked Twitter by replacing user data with unusable data. In the same year, the Koobface worm attacked Facebook with the aim of stealing users' important information, such as passwords [39].

According to the Internet Security Threat Report (ISTR) [54], the rising use of social media by hackers cannot be ignored. In 2015, such services were turned into a source of illegal online money-making through malware and spam. Facebook CEO Mark Zuckerberg recently had his Pinterest and Twitter accounts hijacked, with the intruder also attempting to obtain his LinkedIn password. The authors of [25] proposed a collaborative analysis approach that employs computational intelligence techniques to determine whether an image transmitted online has been edited or altered, and which material is accurate. They investigated trending photographs with a collaborative search technique that exploits descriptive tags and user interaction histories. The authors of [64] provided a comprehensive overview of the current state of security and reliability in social media networks and addressed several open concerns and cutting-edge challenges.

The authors of [43] propose an adaptive LSB-substitution image steganography approach that employs an uncorrelated color space to protect the privacy of graphical material in online social networks (OSNs). To ensure the privacy of protected multimedia content on online social networks, [36] uses a selective encryption strategy that lowers the computational cost for large multimedia content: only the most important parts of the cover media, or any one of its color channels, are encrypted.

After reviewing the attack statistics above, we conclude that social media is the main route by which attackers commit cybercrime. To diminish these threats, many researchers and industry practitioners have proposed solutions including watermarking [13, 35, 50, 65], steganalysis [34, 44, 58], and digital oblivion [22, 52] to protect online social network users from hazards posed by multimedia data. To ensure the secure transmission of multimedia data over online social media, techniques such as cryptography [29,30,31, 45, 48], steganography [2, 23], and watermarking are used. Among these, watermarking is suitable for securely transmitting all types of multimedia data over insecure social media. Numerous image watermarking systems have been proposed for secure data transmission over insecure networks. They fall into two categories, spatial-domain and frequency-domain approaches; the latter are more robust but more complex than the former [4,5,6, 8,9,10, 37, 63].

Some online social networks [41] use visible watermarking, which embeds the owner's logo or text into the cover content to identify ownership of the multimedia content. Visible watermarks tend to protect the data and are difficult to remove. Fragile watermarks, by contrast, cannot be retrieved or validated after standard signal processing, and semi-fragile watermarking combines robustness and fragility. The approach in [44] shares data on online social networks in a way that minimizes the disclosure of users' private information; to establish unified privacy regulations across numerous online social networks, it applies public watermarking to multimedia material. The identity ownership issue has been addressed by embedding multiple watermarks instead of a single one, which provides an additional level of security and reduces bandwidth and storage requirements. This matters most for secure multimedia applications on online social networks such as education, e-health, driver's licenses and passports, secure e-voting systems, digital cinema, and insurance. Thongkor et al. [56] introduced a modified digital watermarking approach based on Discrete Wavelet Transform (DWT) coefficients for online social networks. To protect cyberspace against information theft, particularly identity and credit-card theft, the authors of [3] introduced cyber-watermarking techniques.

However, visible watermarks fail to provide authentication once attackers damage them [57].

The authors of [1] suggest a complete, hybrid, and reliable digital image watermarking scheme. By exploiting the most advantageous characteristics of a suitable DWT subband, the optimal coefficients of the DCT domain, the color component, and an appropriate embedding capacity, the method can establish a trade-off among imperceptibility, robustness, and embedding capacity. To obtain suitable parameters and reliable results, the scheme was examined extensively in a variety of scenarios.

A digital watermarking technique based on a rough Hadamard transform is presented in [21]. The algorithm is comparable to the Hadamard transform, but its transform-domain coefficients are multiplied, which makes it easier to adjust the quantization step size precisely and improves robustness. The technique also uses different quantization step sizes in the RGB layers of color images, following variable-step-size theory, to enhance invisibility.

By exploiting the properties of the Slant transform, the method in [53] can directly extract the highest-energy coefficient of an image block in the spatial domain. After the best quantization step is chosen with an adaptive quantization-step technique, this coefficient is quantized to carry the color digital watermark image. The method also supports color digital images of various sizes for copyright protection and can adaptively select the watermark encoding type. Extensive experiments show strong robustness and high real-time performance against conventional image processing and geometric attacks, and confirm that the method satisfies the requirements of watermark invisibility and blind extraction.

A watermarking approach for patient identification and watermark integrity checking was suggested in [42]. In this method, the patient's fingerprint information and an encrypted photograph are merged into the mid-frequency subbands of the discrete wavelet transform of the medical image. Experimental results on imperceptibility and robustness show that the solution preserves high watermarked-image quality and offers decent robustness against numerous common attacks.

The work in [60] introduces a robust digital image watermarking scheme using pseudo-Zernike moments, which uses image normalization to obtain a geometrically invariant space. The pseudo-Zernike moments of the normalized image are then computed, and only low-order moments are chosen for embedding. The authors of [11] presented ANiTW, a novel intelligent text watermarking technique that hides an invisible watermark in Latin-text-based content using instance-based learning. The system works flawlessly even if malicious individuals change or corrupt a section of the watermarked data, but it fails to withstand all image forgery attacks. Most of the above systems detect the tampered region and classify it as malicious or non-malicious; recovering the tampered region and determining the severity of a malicious (intentional) attack remain challenging. To overcome these drawbacks, fragile watermarking systems [24] as well as semi-fragile watermarking systems, which are both robust and fragile, have been developed. Most semi-fragile watermarking systems use Zernike-moment-based feature vectors for tamper localization and recovery [40], and some use edge features together with Zernike features for the same purpose [49]. The authors of [33] localize image tampering using semi-fragile watermarking and error-locating codes in the DWT domain. By introducing several families of codes, they demonstrate the advantages and the complexity of using error localization as an authentication function, and they show experimentally that error-localization block codes find image tampering as accurately as traditional error-correcting codes (Reed–Solomon and BCH codes). However, the decoding algorithms of these traditional codes have at least quadratic complexity, which makes them unsuitable for some real-time applications.
To address this issue, [33] develops error-control codes known as error-locating codes, in which error localization reduces to a single syndrome computation using a minimal number of binary operations.
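To make the idea of localizing an error with a single syndrome computation concrete, the sketch below uses the classical (7,4) Hamming code as a stand-in. This is an assumption for illustration only: the Hamming code is a single-error-correcting code, not one of the error-locating code families of [33], but it shows how one matrix-vector product over GF(2) pinpoints a corrupted position, which in a tamper-localization setting would correspond to a corrupted image block. The names `H` and `locate_error` are hypothetical.

```python
import numpy as np

# Parity-check matrix of the (7,4) Hamming code: column k holds the binary form of k + 1,
# least significant bit in row 0.
H = np.array([[(k >> b) & 1 for k in range(1, 8)] for b in range(3)])


def locate_error(received: np.ndarray):
    """Return the 0-based index of a single flipped bit, or None if the syndrome is zero."""
    syndrome = (H @ received) % 2          # the single syndrome computation
    position = int(syndrome[0] + 2 * syndrome[1] + 4 * syndrome[2])
    return position - 1 if position else None
```

For example, flipping bit 5 of the valid codeword `[1, 1, 1, 0, 0, 0, 0]` yields a syndrome equal to column 5 of `H`, so `locate_error` returns 5 without any iterative decoding.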

Besides the above, adaptivity has been introduced into embedding and extraction to balance the basic requirements of semi-fragile watermarking systems: without adaptivity, the embedded information cannot be recovered accurately even under unintentional attacks. Nature-inspired metaheuristic algorithms now lead the digital watermarking techniques that address this issue by finding either an optimal embedding location or an optimal embedding strength. In [27], a genetic algorithm is used to find the optimal embedding location for watermark insertion. In [7], the adaptive robustness (scaling) factor is calculated with a firefly-based watermarking system. More recently, [8, 12] determined the optimal embedding location and the optimal scaling factor using a newer technique, cuckoo search optimization. As reported in [61], cuckoo search performs well compared with other metaheuristic algorithms.
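For readers unfamiliar with cuckoo search, the following is a minimal continuous-domain sketch of the algorithm: Lévy flights generated with Mantegna's method, followed by rebuilding a fraction pa of nest components with a biased random walk, with greedy replacement after each move. It is not the authors' implementation. The parameter values (`n_nests`, `pa`, `alpha`, `beta`) are common defaults assumed here, and in the proposed scheme the search space would be candidate embedding blocks rather than a continuous box.

```python
import math

import numpy as np


def levy_steps(beta, shape, rng):
    """Draw Levy-distributed steps with Mantegna's algorithm."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, shape)
    v = rng.normal(0.0, 1.0, shape)
    return u / np.abs(v) ** (1 / beta)


def cuckoo_search(objective, lower, upper, n_nests=15, pa=0.25, alpha=0.01,
                  beta=1.5, iters=200, seed=0):
    """Minimize `objective` over the box [lower, upper]; returns (best_x, best_value)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    nests = rng.uniform(lower, upper, (n_nests, lower.size))
    fitness = np.array([objective(x) for x in nests])

    def greedy_update(candidates):
        # keep a candidate only where it improves on the nest it would replace
        cand_fit = np.array([objective(x) for x in candidates])
        better = cand_fit < fitness
        nests[better] = candidates[better]
        fitness[better] = cand_fit[better]

    for _ in range(iters):
        best = nests[fitness.argmin()].copy()
        # 1) Levy flights, scaled by each nest's distance to the current best nest
        step = alpha * levy_steps(beta, nests.shape, rng) * (nests - best)
        greedy_update(np.clip(nests + step, lower, upper))
        # 2) a fraction pa of components is rebuilt by a biased random walk
        mask = rng.random(nests.shape) < pa
        walk = rng.random() * (nests[rng.permutation(n_nests)] - nests[rng.permutation(n_nests)])
        greedy_update(np.clip(nests + walk * mask, lower, upper))

    i = fitness.argmin()
    return nests[i].copy(), float(fitness[i])
```

As a usage example, `cuckoo_search(lambda x: float(np.sum((x - 1.0) ** 2)), [-5, -5], [5, 5], iters=300)` should approach the minimizer (1, 1); a watermarking application would replace this toy objective with an imperceptibility/robustness fitness.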

1.1 Motivations

(i) The survey shows that confidential multimedia data distributed over insecure social networks require a higher level of authenticity and proof of ownership. Attacks on unauthenticated multimedia information must further be classified as intentional or unintentional.

(ii) Computing the feature vectors of an image with Zernike moments improves the visual quality of semi-fragile watermarking systems, and the rotation invariance of these moments improves robustness.

(iii) Adaptive embedding and extraction mechanisms have been introduced to balance the most important watermarking criteria.

(iv) However, the Zernike-moment-based schemes presented so far for tamper detection and recovery are vulnerable to high-density noise and have limited embedding capacity. This motivates us to develop a pseudo-Zernike-moment-based watermarking system for both tamper detection and recovery.

The literature review above motivates us to create a nature-inspired image watermarking system that is both robust and secure, using cuckoo search optimization and the DTCWT, for proof of ownership and authenticity of multimedia information transmitted over insecure online social networks.

In the proposed work, pseudo-Zernike moments and the dual-tree complex wavelet transform are used for a completely different purpose: embedding and extracting confidential images on online social networks. In addition, our technique presents a new optimized system that identifies, among the available DTCWT coefficients, the optimal scale coefficients for embedding the watermarks. The cover image first undergoes the DTCWT to obtain the transform coefficients. Invariant feature points are detected on the resulting DTCWT coefficients using the Harris corner detector, with the number of corners limited to 30. Cuckoo search optimization is then used to find the most suitable block of pseudo-Zernike moments computed at these feature points for watermark insertion. The principal components of the multiple watermark images, namely the unique logo image, the owner's fingerprint, the metadata, and the pseudo-Zernike moments of the cover image, are embedded into the singular values of the feature-point blocks selected by the optimization. Pseudo-Zernike moments computed at the feature points are generally invariant to rotation, the Harris corner detector helps obtain rotation- and scale-invariant feature points, and SVD provides translation (shift) invariance; together, these transformations give the scheme invariance to rotation, scaling, and translation (RST). The watermarked image is obtained by applying the inverse of each preceding operation.
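The step in which watermark information is embedded into the singular values of a selected block can be illustrated with the classical additive singular-value rule \(S' = S + \alpha S_w\). The sketch below is a simplification under stated assumptions: the square block stands in for the pseudo-Zernike-moment block chosen by cuckoo search, the watermark's singular values stand in for its principal components, the strength `alpha = 0.05` is hypothetical, and extraction is non-blind (it needs the original singular values).

```python
import numpy as np


def embed_singular_values(block, watermark, alpha=0.05):
    """Additively embed the watermark's singular values into those of a square block."""
    U, S, Vt = np.linalg.svd(block)
    Sw = np.linalg.svd(watermark, compute_uv=False)
    marked = U @ np.diag(S + alpha * Sw) @ Vt
    return marked, S  # S is kept as side information for extraction


def extract_singular_values(marked_block, S_original, alpha=0.05):
    """Recover the watermark's singular values from a (possibly attacked) marked block."""
    S_marked = np.linalg.svd(marked_block, compute_uv=False)
    return (S_marked - S_original) / alpha
```

Because both singular-value vectors are sorted in descending order, their weighted sum is still sorted, so the SVD of the marked block returns exactly \(S + \alpha S_w\) in the absence of attacks; the block and the watermark must have the same square size in this sketch.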

To make the proposed system more suitable for content authentication, several security levels are added through authentication key generation: the key is obtained by combining the unattacked watermarked image with the owner's digital signature. The resulting authentication key is then transmitted over a channel that may be noisy. At the receiver, it is compared with a key regenerated from the owner's digital signature and the received (possibly attacked) watermarked image. If this authentication succeeds, the receiver accepts the received watermarked image as authentic and the watermarks are extracted; otherwise, the received image is considered unauthenticated, that is, possibly tampered with intentionally or unintentionally. The system is semi-fragile because tamper detection and recovery are performed for unintentional attacks such as noise addition, geometric attacks, and JPEG compression, whereas for intentional attacks the severity is determined from the localized tampered regions. If the severity exceeds a threshold, the received watermarked image is rejected, as it yields no usable information. The proposed technique achieves good visual quality, with average SSIM values between 0.8959 and 0.9998 and PSNR values between 59 dB and 68 dB. The proposed multi-biometric semi-fragile system resists all types of geometric attacks and some image forgery attacks, yielding highly correlated coefficients and low bit error rates.
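The key generation and comparison described above can be sketched as follows, under explicit assumptions: the key is taken to be a SHA-256 digest of the unattacked watermarked image's bytes followed by the owner's signature bytes, and the receiver compares keys with a constant-time equality check. The helper names are hypothetical, and this is not the paper's public-key-matrix (Pkm) construction. Note that a cryptographic hash is strictly fragile: any single changed pixel alters the key, so a semi-fragile scheme would need to hash features that survive benign processing.

```python
import hashlib
import hmac

import numpy as np


def authentication_key(watermarked: np.ndarray, signature: bytes) -> bytes:
    """Hypothetical key: SHA-256 over the 8-bit image bytes followed by the signature."""
    digest = hashlib.sha256()
    digest.update(np.ascontiguousarray(watermarked, dtype=np.uint8).tobytes())
    digest.update(signature)
    return digest.digest()


def is_authentic(received_key: bytes, received_image: np.ndarray, signature: bytes) -> bool:
    """Regenerate the key at the receiver and compare it in constant time."""
    return hmac.compare_digest(received_key, authentication_key(received_image, signature))
```

With this construction, flipping even the least significant bit of one pixel makes `is_authentic` return `False`, which is why the sketch only illustrates the key comparison step and not the semi-fragile behavior.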

The rest of the paper is organized as follows. Section 2 reviews existing systems and states the contributions of our work. Section 3 introduces the preliminary concepts. Section 4 details the proposed semi-fragile multi-biometric watermarking scheme. Sections 5 and 6 cover the experimental study and the comparison of the proposed and conventional systems. Section 7 concludes the article and discusses future directions.

2 Existing systems review and work contributions

This section analyzes the challenges of existing systems in detail and presents the contributions of our work.

2.1 Existing systems review and challenges

The investigation above reveals the following flaws in existing systems:

(i) None of the existing pseudo-Zernike-moment-based watermarking schemes has been applied to tamper detection and recovery.

(ii) Most existing techniques are general-purpose semi-fragile watermarking systems that achieve tamper detection, recovery, and image authentication under a limited set of attacks and are not targeted at online social media data. As confidential information is increasingly transferred through social media, a higher level of authenticity is required, and more kinds of image forgery need to be classified.

(iii) Most contemporary digital image watermarking systems perform well under a variety of image processing operations and geometric distortions but are extremely vulnerable to image forgery attacks. Forgery attacks such as copy-move, image splicing, and image flipping decrease the detector's ability to identify the watermark.

(iv) None of the existing tamper detection and recovery systems uses the dual-tree complex wavelet transform or an optimal embedding location.

2.2 Work contributions

Considering all the aforementioned factors, this research devises a novel embedding and extraction approach with the following contributions:

(i) An efficient system is introduced that protects multimedia data distributed over online social networks by providing authenticity and proof of ownership through multi-biometrics and digital watermarking.

(ii) A semi-fragile watermarking system is developed that is both robust and secure against unauthorized access to, or modification of, multimedia data transmitted or uploaded over insecure online social networks.

(iii) Basic semi-fragile watermarking is extended not only to localize the tampered regions of various intentional attacks but also to recover them according to their severity.

(iv) A metaheuristic optimization technique determines the optimal embedding location using two objective functions: the first maximizes imperceptibility, correlation coefficient, and capacity, and the second minimizes the average of the false-positive and false-negative probabilities and the squared Euclidean distance between the original cover image and the watermarked image. As a result, good visual quality, robustness, privacy, and embedding capacity are all achieved.

(v) The multi-biometric watermark images, together with the metadata and the pseudo-Zernike moments of the cover image, are embedded into the singular values of the pseudo-Zernike moments computed at the Harris feature points of the DTCWT coefficients. This yields robustness against noise, geometric attacks, and other intentional attacks, and thus better tamper detection and recovery.
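As a rough illustration of how such objectives can be scored, the sketch below computes PSNR (imperceptibility), normalized correlation between the embedded and extracted watermarks (robustness), and the squared Euclidean distance between cover and watermarked images, and combines the first two into a single fitness value. The weighting `lam` and the way the terms are combined are hypothetical; the exact objective functions of this work are not reproduced here.

```python
import numpy as np


def psnr(cover, marked, peak=255.0):
    """Peak signal-to-noise ratio in dB (infinite for identical images)."""
    mse = np.mean((cover.astype(float) - marked.astype(float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)


def normalized_correlation(w, w_extracted):
    """Cosine similarity between the embedded and the extracted watermark."""
    a, b = w.astype(float).ravel(), w_extracted.astype(float).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def squared_distance(cover, marked):
    """Squared Euclidean distance between the cover and watermarked images."""
    return float(np.sum((cover.astype(float) - marked.astype(float)) ** 2))


def fitness(cover, marked, w, extracted_under_attacks, lam=10.0):
    """Hypothetical objective to maximize: PSNR plus weighted mean NC over attacks."""
    nc = np.mean([normalized_correlation(w, we) for we in extracted_under_attacks])
    return psnr(cover, marked) + lam * nc
```

For reference, for 8-bit images \(\mathrm{MSE} = 255^2 / 10^{\mathrm{PSNR}/10}\), so the PSNR range of 59 dB to 68 dB reported earlier corresponds to a mean squared error of roughly 0.082 down to 0.010.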

3 Preliminary concepts

3.1 Pseudo-Zernike moments

Pseudo-Zernike moments (PZM) are derived from pseudo-Zernike polynomials, a set of complex-valued orthogonal polynomials defined on the interior of the unit circle, \(x^2 + y^2 \le 1\) [33, 49]. The polynomial set is denoted by \(\{P_{nm}(x,y)\}\) and is given as,

where \(\rho = \sqrt{x^2 + y^2}\) and \(\theta = \tan^{-1}(y/x)\); \(n\) is a non-negative integer; \(m\) is an integer satisfying \(|m| \le n\); and \(PZR_{nm}(\rho)\) is the radial pseudo-Zernike polynomial, expressed as,

A continuous (analog) image f(x,y) can be expanded in terms of these pseudo-Zernike polynomials as,

where \(AP_{nm}\) is the pseudo-Zernike moment of order n with repetition m, given by,

In practice, we deal with digital images, to which \(AP_{nm}\) cannot be applied directly; instead, its approximation \({\widehat{AP}}_{nm}\) is computed as

In Eq. (5), i and j range over all pixels satisfying \(x_i^2 + y_j^2 \le 1\), and \(h_{nm}(x_i,y_j)\) is given as

where ∆x = 2/M, ∆y = 2/N.
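For reference, the standard pseudo-Zernike definitions from the literature, written with this section's symbols, are collected below. They follow the usual textbook forms and may differ in notation or normalization from the authors' numbered equations.

```latex
P_{nm}(x,y) = PZR_{nm}(\rho)\, e^{\,jm\theta}, \qquad x^2 + y^2 \le 1

PZR_{nm}(\rho) = \sum_{s=0}^{n-|m|} (-1)^s
  \frac{(2n+1-s)!}{s!\,(n+|m|+1-s)!\,(n-|m|-s)!}\, \rho^{\,n-s}

f(x,y) = \sum_{n=0}^{\infty} \sum_{|m| \le n} AP_{nm}\, P_{nm}(x,y), \qquad
AP_{nm} = \frac{n+1}{\pi} \iint_{x^2+y^2 \le 1} f(x,y)\, P_{nm}^{*}(x,y)\, dx\, dy

\widehat{AP}_{nm} = \frac{n+1}{\pi} \sum_{i} \sum_{j} h_{nm}(x_i,y_j)\, f(x_i,y_j), \qquad
h_{nm}(x_i,y_j) = \int_{x_i-\Delta x/2}^{x_i+\Delta x/2} \int_{y_j-\Delta y/2}^{y_j+\Delta y/2}
  P_{nm}^{*}(x,y)\, dy\, dx \approx P_{nm}^{*}(x_i,y_j)\, \Delta x\, \Delta y

f'(x,y) \approx \sum_{n=0}^{n_{\max}} \sum_{|m| \le n} \widehat{AP}_{nm}\, P_{nm}(x,y)
```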

Thus, most of the literature simply expresses the PZM of a discrete image as,

Owing to the orthogonality and completeness of the polynomials, an image \(f'(x,y)\) can also be reconstructed from the computed PZM using the following expression,

According to [60], pseudo-Zernike moments are superior to Zernike moments and other existing moments, particularly for image tamper detection and recovery, mainly because of the following properties:

(i) Greater feature-representation capability: PZM has a higher feature-representation capability than other moments, such as Zernike moments, because it provides more feature vectors, as given in Table 1.

(ii) Robustness against noise: PZM is orthogonal, and orthogonal moments are generally robust against noise; moreover, image flipping or rotation does not affect their magnitudes.

(iii) Reconstruction ability: PZM can be used efficiently for image reconstruction.

(iv) Multilayer expression: the outline of an image is represented by low-order moments, whereas image details are represented by high-order moments.

(v) Ease of implementation: PZM, including its higher-order moments, is easy to implement, unlike Hu moments.
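A direct NumPy evaluation of the discrete moment, using the common approximation \(h_{nm}(x_i,y_j) \approx P^{*}_{nm}(x_i,y_j)\,\Delta x\,\Delta y\), is sketched below. Mapping pixel centres into [-1, 1] × [-1, 1] (so that \(\Delta x = 2/M\) for M columns and \(\Delta y = 2/N\) for N rows) and keeping only centres inside the unit disk are assumptions about the discretization; the function names are hypothetical. The checks after the block illustrate property (ii): rotating a square image by 90° changes only the phase of a moment, not its magnitude.

```python
from math import factorial

import numpy as np


def radial_poly(n, m, rho):
    """Radial pseudo-Zernike polynomial PZR_nm(rho) for |m| <= n."""
    m = abs(m)
    out = np.zeros_like(rho, dtype=float)
    for s in range(n - m + 1):
        coeff = (-1) ** s * factorial(2 * n + 1 - s) / (
            factorial(s) * factorial(n + m + 1 - s) * factorial(n - m - s))
        out += coeff * rho ** (n - s)
    return out


def pzm(img, n, m):
    """Approximate pseudo-Zernike moment AP_nm of a 2-D grayscale image."""
    rows, cols = img.shape
    x = (2 * np.arange(cols) + 1 - cols) / cols   # pixel centres, spacing 2/cols
    y = (2 * np.arange(rows) + 1 - rows) / rows   # pixel centres, spacing 2/rows
    X, Y = np.meshgrid(x, y)
    rho, theta = np.hypot(X, Y), np.arctan2(Y, X)
    inside = rho <= 1.0                            # keep pixels with x^2 + y^2 <= 1
    kernel = radial_poly(n, m, rho) * np.exp(-1j * m * theta)  # conjugate polynomial
    dx, dy = 2.0 / cols, 2.0 / rows
    return (n + 1) / np.pi * np.sum(img[inside] * kernel[inside]) * dx * dy
```

For instance, `abs(pzm(img, 4, 2))` and `abs(pzm(np.rot90(img), 4, 2))` agree to floating-point precision for any square image, while the complex values differ by a phase factor.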

Overall, PZM is an excellent image descriptor. However, it is not directly invariant to scaling and translation; these invariances can be obtained by preprocessing the image with normalization [51, 60]. Translating the image to its centroid and then scaling it to a standard size makes the moments invariant to translation and scaling (Fig. 1).
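The two normalization parameters can be sketched with geometric moments: the centroid is \((m_{10}/m_{00},\, m_{01}/m_{00})\), and a scale factor a is chosen so that the rescaled image has mass \(a^2 m_{00} = \beta\). Fixing the mass to a constant beta is a common convention in moment-based normalization and is an assumption here, as are the helper name and the default value of beta.

```python
import numpy as np


def normalization_params(img, beta=1000.0):
    """Return the intensity centroid (row, col) and a scale factor a with a**2 * m00 == beta."""
    img = img.astype(float)
    rows, cols = np.indices(img.shape)
    m00 = img.sum()                                      # zeroth-order moment (total mass)
    centroid = (float((rows * img).sum() / m00),         # m10 / m00 along rows
                float((cols * img).sum() / m00))         # m01 / m00 along columns
    scale = float(np.sqrt(beta / m00))                   # resampling by a multiplies m00 by a**2
    return centroid, scale
```

Translating the image so that this centroid lies at the centre of the unit disk, and resampling it by the factor a, would then give the translation- and scale-normalized input on which the moments are computed.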

Table 1 lists the numbers of PZM and ZM moments for orders n and repetitions m. Both pseudo-Zernike and Zernike moments can be divided into lower-order and higher-order moments. Table 1 also shows that, for the same order n, PZM admits more repetitions m than Zernike moments and therefore provides more feature vectors, which in turn increases the embedding capacity. For applications such as information hiding, not all orders of moments are suitable [16, 55, 59]; hence, the suitable orders given in [60] are used: (i) the maximum usable order is \(n_{max} = 20\), and the corresponding numbers of feature vectors are given in Table 1; (ii) moments whose repetition m is a multiple of 4, i.e., \(m = 4i\) for i = 0, 1, 2, 3, 4, …, p, are not suitable for reconstruction. Based on these conditions, the set of eligible PZMs (PZME) of an image that are suitable for information hiding is expressed as,

Figure 2 depicts the reconstruction process for the 512 × 512 ‘Lena’ test image, with the reconstructed images generated using Eq. (8). The figure shows that the lower-order moments capture the gross shape information, whereas the higher-order moments capture high-frequency details. In our experiment, order 15 (136 moments) gives a better trade-off between detection accuracy and computation time, as demonstrated in Section 4.
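The moment counts behind these conditions can be checked by enumeration. The sketch assumes that only non-negative repetitions m are counted (for a real image, \(AP_{n,-m}\) is the complex conjugate of \(AP_{nm}\)) and that Zernike moments additionally require n − |m| to be even. Under these assumptions, orders up to 15 give the 136 pseudo-Zernike moments mentioned above, against 72 Zernike moments; the helper names are hypothetical.

```python
def moment_indices(n_max, zernike=False):
    """All (n, m) pairs with 0 <= m <= n <= n_max; Zernike moments also need n - m even."""
    return [(n, m) for n in range(n_max + 1) for m in range(n + 1)
            if not zernike or (n - m) % 2 == 0]


def eligible_pzm(n_max=20):
    """Pseudo-Zernike indices kept for information hiding: drop repetitions m = 4i."""
    return [(n, m) for n, m in moment_indices(n_max) if m % 4 != 0]


print(len(moment_indices(15)), len(moment_indices(15, zernike=True)))  # 136 72
print(len(eligible_pzm(20)))                                           # 165
```

The pseudo-Zernike count for orders up to n is \((n+1)(n+2)/2\), which gives 136 for n = 15; excluding \(m = 4i\) (including m = 0) from the 231 moments with \(n_{max} = 20\) leaves 165.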

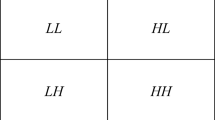

3.2 Dual-tree complex wavelet transform (DTCWT)

Transform-domain techniques have a significant impact on many image processing applications. Various transforms convert a spatial-domain image into frequency coefficients, and among them the discrete wavelet transform (DWT) performs well. However, the DWT is not shift-invariant: small shifts in the input data cause large fluctuations in the coefficients and shift the energy distribution among DWT coefficients, and the DWT also has poor directional selectivity for diagonal features [28]. Kingsbury addressed these shortcomings by developing the dual-tree complex wavelet transform, whose properties include approximate shift invariance, strong directional selectivity, perfect reconstruction, limited redundancy, and low computational complexity. It is derived from the DWT and the complex wavelet transform (CWT) [28]. The DTCWT obtains complex coefficients by applying two real DWTs, each using a distinct filter set, to generate the real and imaginary parts of the transform. For reconstruction, the real and imaginary parts are inverted by the inverses of the two real DWTs, respectively, giving two real signals that are averaged to reconstruct the final signal [47].
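The shift-variance problem can be seen with a one-level Haar DWT, used here only as the simplest real wavelet: for a step edge, the detail-band energy depends on whether the edge falls on an even or an odd sample. The Haar choice, the signal length, and the helper name are assumptions for illustration; a DTCWT, by contrast, keeps subband energy approximately constant under such shifts.

```python
import numpy as np


def haar_detail(signal):
    """One-level Haar DWT detail coefficients (signal length must be even)."""
    return (signal[0::2] - signal[1::2]) / np.sqrt(2.0)


edge = np.zeros(16)
edge[8:] = 1.0          # step edge starting at an even index
shifted = np.zeros(16)
shifted[9:] = 1.0       # the same edge moved by one sample

# detail energy: exactly 0.0 for the aligned edge, approximately 0.5 after the shift
print(np.sum(haar_detail(edge) ** 2), np.sum(haar_detail(shifted) ** 2))
```

A one-sample shift moves the edge energy from the approximation band entirely into a detail coefficient, which is the kind of fluctuation that complicates watermark embedding in DWT coefficients.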

Let the input image f(x,y) be decomposed by the DT-CWT into a sequence of translations and dilations of a complex scaling function Φ(x,y) and six complex wavelet functions \(\psi^{\theta}_{j,l}(x,y)\), expressed as

In Eq. (10), \(\theta \in \{\pm 15^\circ, \pm 45^\circ, \pm 75^\circ\}\) gives the directionality of the complex wavelet function, \(\mathbb{Z}\) denotes the set of integers, j and l index the dilations and shifts, respectively, and \(C_{j,l}\) is the complex wavelet coefficient, with \(\varphi_{j_0,l}(x) = \varphi^{r}_{j_0,l}(x) + \sqrt{-1}\,\varphi^{i}_{j_0,l}(x)\) and \(\psi_{j,l}(x) = \psi^{r}_{j,l}(x) + \sqrt{-1}\,\psi^{i}_{j,l}(x)\), where r and i denote the real and imaginary parts, respectively.
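For reference, a standard form of the DT-CWT expansion written with the symbols defined above is given below. It follows the usual definition of the transform and may differ in notation from the authors' Eq. (10); in two dimensions the shift index l ranges over integer pairs.

```latex
f(x,y) = \sum_{l \in \mathbb{Z}^2} c_{j_0,l}\, \Phi_{j_0,l}(x,y)
       + \sum_{\theta \in \Theta} \sum_{j \ge j_0} \sum_{l \in \mathbb{Z}^2}
         C^{\theta}_{j,l}\, \psi^{\theta}_{j,l}(x,y),
\qquad \Theta = \{\pm 15^\circ, \pm 45^\circ, \pm 75^\circ\}
```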

Applying the DTCWT to an image f(x,y) produces two complex-valued low-frequency subbands and six complex-valued high-frequency subbands at each decomposition level. Each high-frequency subband has a unique direction θ at ±15°, ±45°, or ±75° [63].

Specifically, the two complex-valued low-frequency coefficients are given as,

and the six complex-valued high-frequency coefficients are expressed as,

thus,

where Re(·) and Im(·) denote the real and imaginary parts, respectively; L1 and L2 denote the low-frequency subbands; ∧ denotes the decomposition level, which is 2 here; θ denotes the subband direction, with values in {−75°, −45°, −15°, +15°, +45°, +75°}; and u, v give the location of a coefficient within each subband, varying as

\(0\le u\le \frac{N}{2^{\wedge }}-1\) and \(0\le v\le \frac{N}{2^{\wedge }}-1.\)

The DTCWT overcomes the main weaknesses of the DWT on 2D images, namely poor directionality, oscillations, shift variance, and aliasing. Figure 3 shows the DTCWT applied to the test image ‘lena’. Its approximate shift-invariance, strong directional selectivity, perfect reconstruction, limited redundancy, and low computational complexity make it attractive for building efficient watermarking systems with a larger embedding domain.

Furthermore, the good directional sensitivity of the DTCWT in the high-frequency subbands gives it favorable perceptual properties, which increases the imperceptibility of the embedded watermark. Loo and Kingsbury [38] proposed the first work in this area, and over the past decade the approach has been used to embed watermarks in images [17, 32] and videos [15].

3.3 Harris corner points

The Harris detector is a popular interest point detector because its corner points are invariant to rotation and robust to changes in illumination and to image noise. It is based on the local auto-correlation function of the signal, which measures the local change in intensity when a patch is shifted by a small amount in various directions. A geometric attack changes the positions of pixels in the watermarked image and thus breaks synchronization during watermark extraction, so designing a scheme that is robust against all types of geometric distortion is difficult. One solution to such synchronization attacks is to embed the watermark at invariant points that are robust against geometric attacks [20]. Hence, to make the proposed approach robust against rotation and translation, the Harris corner feature points of [8] are used. On our standard test images, an average of 4100 optimized Harris points was detected. The three 32 × 32 watermark images, 3072 bits in total, are embedded at the identified optimized points. Finally, the 136 pseudo-Zernike moments up to order 15 computed from the original cover image are embedded as the fourth watermark. Because many optimized blocks are identified, these moments can be embedded repeatedly, which improves the recovery of regions tampered by unintentional attacks.
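To make the moment count and the invariance claim concrete, the following is a minimal NumPy sketch of pseudo-Zernike moments on a square block. It assumes the standard pseudo-Zernike radial polynomial \(R_{n,m}(r)=\sum_{s=0}^{n-|m|}(-1)^{s}\frac{(2n+1-s)!}{s!\,(n+|m|+1-s)!\,(n-|m|-s)!}\,r^{n-s}\) and a pixel-area approximation of \(A_{n,m}=\frac{n+1}{\pi}\iint f\,V^{*}_{n,m}\) over the unit disk; it is assumed to match Eq. (8) only up to such discretization details, and the function names are hypothetical. Keeping the repetitions 0 ≤ m ≤ n for orders 0 to 15 gives (15 + 1)(15 + 2)/2 = 136 moments, and rotating the block changes only the phase of each moment, not its magnitude.

```python
import math
import numpy as np

def pz_radial(n, m, r):
    """Pseudo-Zernike radial polynomial R_{n,|m|}(r), valid for |m| <= n."""
    m = abs(m)
    out = np.zeros_like(r, dtype=float)
    for s in range(n - m + 1):
        coeff = ((-1) ** s * math.factorial(2 * n + 1 - s)
                 / (math.factorial(s) * math.factorial(n + m + 1 - s)
                    * math.factorial(n - m - s)))
        out += coeff * r ** (n - s)
    return out

def pseudo_zernike_moments(block, max_order):
    """Moments A_{n,m} for 0 <= m <= n <= max_order of a square block.

    Pixel centres are mapped onto [-1, 1] x [-1, 1]; only pixels inside the
    unit disk contribute, each weighted by its area (2/N)^2.
    """
    size = block.shape[0]
    coords = (2.0 * np.arange(size) - size + 1) / size
    x, y = np.meshgrid(coords, coords)          # x along columns, y along rows
    r = np.hypot(x, y)
    theta = np.arctan2(y, x)
    f = np.where(r <= 1.0, block.astype(float), 0.0)
    area = (2.0 / size) ** 2
    moments = {}
    for n in range(max_order + 1):
        for m in range(n + 1):
            kernel = pz_radial(n, m, r) * np.exp(-1j * m * theta)  # conj(V_nm)
            moments[(n, m)] = (n + 1) / math.pi * np.sum(f * kernel) * area
    return moments

rng = np.random.default_rng(0)
block = rng.random((15, 15))                    # stand-in for a 15*15 block
moments = pseudo_zernike_moments(block, 15)
print(len(moments))                             # (15 + 1) * (15 + 2) / 2 = 136
```

The rotation check uses a 90° rotation because it maps the pixel grid exactly onto itself; arbitrary angles additionally introduce interpolation error.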

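Let I be an image. Sweeping a window w(x,y) over I with a displacement (u,v), the intensity variation is

\[ E(u,v)=\sum_{x,y} w(x,y)\,\bigl[I(x+u,y+v)-I(x,y)\bigr]^{2} \]

where,

- w(x, y): the window at position (x, y)
- I(x, y): the intensity at (x, y)
- I(x + u, y + v): the intensity at the moved window position (x + u, y + v)

Since windows containing corners are sought, we look for windows with a large intensity variation. Hence, the following term has to be maximized:

\[ \sum_{x,y} \bigl[I(x+u,y+v)-I(x,y)\bigr]^{2} \]

Using the first-order Taylor expansion \(I(x+u,y+v)\approx I(x,y)+uI_x+vI_y\), where \(I_x\) and \(I_y\) are the image derivatives in x and y:

\[ E(u,v)\approx\sum_{x,y} w(x,y)\,\bigl[uI_x+vI_y\bigr]^{2} \]

Expanding the square:

\[ E(u,v)\approx\sum_{x,y} w(x,y)\,\bigl(u^{2}I_x^{2}+2uv\,I_xI_y+v^{2}I_y^{2}\bigr) \]

In matrix form:

\[ E(u,v)\approx\begin{bmatrix}u & v\end{bmatrix}\left(\sum_{x,y} w(x,y)\begin{bmatrix}I_x^{2} & I_xI_y\\ I_xI_y & I_y^{2}\end{bmatrix}\right)\begin{bmatrix}u\\ v\end{bmatrix} \]

Denoting

\[ M=\sum_{x,y} w(x,y)\begin{bmatrix}I_x^{2} & I_xI_y\\ I_xI_y & I_y^{2}\end{bmatrix} \]

the equation becomes

\[ E(u,v)\approx\begin{bmatrix}u & v\end{bmatrix} M \begin{bmatrix}u\\ v\end{bmatrix} \]

For each window, a score R is calculated to determine whether it contains a corner:

\[ R=\det(M)-k\,\bigl(\operatorname{trace}(M)\bigr)^{2} \]

where,

- det(M) = λ1λ2
- trace(M) = λ1 + λ2

Here, λ1 and λ2 are the eigenvalues of M, and k is an empirical constant (typically 0.04 to 0.06). A window whose score R is greater than a threshold is considered a “corner”.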
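The score R can be sketched in a few lines of NumPy. This is a minimal illustration, not the optimized Harris variant of [8]: it assumes central-difference gradients (`np.gradient`), a uniform 3 × 3 box window in place of a Gaussian w(x,y), and k = 0.04; the helper names are hypothetical. On a bright square, R is positive at a corner, negative on an edge, and zero in a flat region.

```python
import numpy as np

def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)^2 window around each pixel (zero padding)."""
    padded = np.pad(a, radius)
    out = np.zeros(a.shape, dtype=float)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(image, k=0.04, radius=1):
    """Harris score R = det(M) - k * trace(M)^2 at every pixel."""
    img = np.asarray(image, dtype=float)
    iy, ix = np.gradient(img)          # derivatives along rows (y) and columns (x)
    sxx = box_sum(ix * ix, radius)     # entries of M, summed over the window w
    syy = box_sum(iy * iy, radius)
    sxy = box_sum(ix * iy, radius)
    det_m = sxx * syy - sxy ** 2       # lambda1 * lambda2
    trace_m = sxx + syy                # lambda1 + lambda2
    return det_m - k * trace_m ** 2

# A bright square on a dark background has corners, edges and flat regions.
image = np.zeros((20, 20))
image[5:15, 5:15] = 1.0
R = harris_response(image)
print(R[5, 5] > 0, R[5, 10] < 0, R[10, 10] == 0)   # corner, edge, flat
```

Thresholding R (and usually applying non-maximum suppression) then yields the corner points.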

3.4 Cuckoo search optimization

Cuckoo search optimization is a recent metaheuristic based on Nature-Inspired Algorithms (NIA). It is inspired by the breeding behavior of cuckoos and also incorporates Lévy flights, the flight behavior observed in some birds and fruit flies. Each egg in a nest represents a solution, and a cuckoo egg represents a new solution. The aim is to replace worse solutions in the nests with new, potentially better cuckoo solutions. For simplicity, each nest is assumed to hold one egg [61, 62].

The general rules of Cuckoo Search are:

(i) Each cuckoo lays one egg at a time and dumps it into a randomly chosen nest.

(ii) The best nests with high-quality eggs (solutions) are carried over to the next generations.

(iii) The number of available host nests is fixed, and a host discovers an alien egg with probability pa ∈ [0, 1]. In that case, the host either throws the egg away or abandons the nest and builds a new one at a different site.

For simplicity, this last rule can be approximated by replacing a fraction pa of the n nests with new nests holding new random solutions.

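When a new solution \(cx_i(t+1)\) is generated for the ith cuckoo, the following Lévy flight is performed:

\[ cx_i(t+1)=cx_i(t)+\alpha\oplus\text{Lévy}(\lambda) \]

where α > 0 is the step size, which should be related to the scale of the problem of interest, ⨁ denotes entry-wise multiplication, and Lévy(λ) is a random step drawn from a Lévy distribution with exponent λ.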
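The update rule and the three rules above can be combined into a compact sketch. This is a minimal, generic cuckoo search that minimizes a cost (to maximize a fitness such as the one in Algorithm 6, minimize its negative). Lévy steps are drawn with Mantegna's algorithm with β = 1.5, a common choice assumed here; the defaults n = 50, α = 0.25, and 1200 generations follow Algorithm 6, while pa = 0.25, the box bounds, and the function names are assumptions for illustration.

```python
import math
import numpy as np

def levy_step(dim, beta, rng):
    """Levy-distributed step via Mantegna's algorithm (an assumed, common choice)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.normal(0.0, sigma, dim)
    v = rng.normal(0.0, 1.0, dim)
    return u / np.abs(v) ** (1 / beta)

def cuckoo_search(cost, dim, bounds, n=50, pa=0.25, alpha=0.25,
                  beta=1.5, generations=1200, seed=0):
    """Minimise `cost` over the box [lo, hi]^dim; returns (best_x, best_cost)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    nests = rng.uniform(lo, hi, (n, dim))
    fitness = np.array([cost(x) for x in nests])
    for _ in range(generations):
        for i in range(n):
            # Levy flight: cx_i(t+1) = cx_i(t) + alpha (entry-wise) Levy(beta)
            candidate = np.clip(nests[i] + alpha * levy_step(dim, beta, rng), lo, hi)
            f_candidate = cost(candidate)
            j = rng.integers(n)               # rule (i): a randomly chosen nest
            if f_candidate < fitness[j]:      # a better egg replaces the host's egg
                nests[j], fitness[j] = candidate, f_candidate
        # Rule (iii): a fraction pa of the worst nests is abandoned and rebuilt.
        # Rule (ii): the best nests are never among the abandoned ones.
        k = max(1, int(pa * n))
        worst = np.argsort(fitness)[-k:]
        nests[worst] = rng.uniform(lo, hi, (k, dim))
        fitness[worst] = [cost(x) for x in nests[worst]]
    best = int(np.argmin(fitness))
    return nests[best], float(fitness[best])

def sphere(x):
    return float(np.sum(x ** 2))

best_x, best_cost = cuckoo_search(sphere, dim=2, bounds=(-5.0, 5.0), generations=100)
print(best_x, best_cost)
```

In a watermarking setting, each nest would encode a candidate set of embedding blocks and `cost` would evaluate the (negated) fitness on the watermarked result; that encoding is not specified here and is left open.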

4 Proposed system

This section describes the key aspects of the proposed image watermarking technique, which uses meta-heuristic-based watermarking in the DTCWT domain. The novelty of the work can be summarized as follows. DTCWT coefficients are used to maximize embedding capacity. The rotation and noise invariance of pseudo-Zernike moments makes the system more robust than conventional watermarking systems. For authentication and proof of identity, four watermarks are embedded instead of the single watermark image used in traditional systems. Three of them are the logo or unique image of the user, the fingerprint biometric of the owner, and the metadata of the original media to be transmitted. For tamper localization, the fourth watermark is the pseudo-Zernike moment feature vector of the original cover image. For better security, the first three watermarks are converted into Zernike moments, an Arnold-scrambled image, and a SHA output, respectively. In addition, the watermarked image is signed with another biometric of the owner, the digital signature, which is converted into a public key matrix Pkm and embedded in the high-frequency HL subband of the 1-level DWT. To preserve visual quality, the input cover image is converted into YCbCr form and its Y component is transformed into DTCWT coefficients. Cuckoo search optimization is then applied to these coefficients to find suitable embedding positions, using a fitness function based on SSIM (for imperceptibility), capacity, and pseudo-Zernike moments centered on high-energy Harris corner points.
Next, SVD is computed at the optimal locations among the DTCWT coefficients, and the resulting singular values are modified with the principal components of the transformed watermarks described above. The inverse DTCWT then yields the watermarked image WI. The system also performs multi-level authentication. At the first level, the extracted watermark images are decrypted with the appropriate decryption mechanism, and the extracted authentication key is compared with the key regenerated at the receiver’s end. Authentication succeeds when the similarity is above a threshold T; otherwise, the received watermarked image is considered unauthenticated. On success, the watermark images are extracted; on failure, the severity of tampering is determined by classifying the attack as unintentional or intentional. The proposed scheme consists of the following stages: embedding, authentication, extraction, and tamper detection and recovery.
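The color conversion at the start of this pipeline can be sketched as follows. The section does not state which YCbCr convention is used, so the sketch assumes the full-range ITU-R BT.601 (JPEG/JFIF) coefficients; MATLAB's `rgb2ycbcr`, for instance, uses the studio-range variant with different offsets and scaling. The function names are hypothetical.

```python
import numpy as np

# Assumption: full-range ITU-R BT.601 (JPEG/JFIF) coefficients for 8-bit RGB.
RGB_TO_YCBCR = np.array([[ 0.299,     0.587,     0.114   ],
                         [-0.168736, -0.331264,  0.5     ],
                         [ 0.5,      -0.418688, -0.081312]])
OFFSET = np.array([0.0, 128.0, 128.0])

def rgb_to_ycbcr(rgb):
    """Convert an (..., 3) RGB array to Y, Cb, Cr channels."""
    return np.asarray(rgb, dtype=float) @ RGB_TO_YCBCR.T + OFFSET

def ycbcr_to_rgb(ycbcr):
    """Invert rgb_to_ycbcr with the exact matrix inverse."""
    return (np.asarray(ycbcr, dtype=float) - OFFSET) @ np.linalg.inv(RGB_TO_YCBCR).T

rng = np.random.default_rng(0)
cover = rng.integers(0, 256, (4, 4, 3)).astype(float)   # toy RGB cover image
ycc = rgb_to_ycbcr(cover)
y_channel = ycc[..., 0]          # the component that would be passed to the DTCWT
restored = ycbcr_to_rgb(ycc)     # conversion back after the watermarked Y is reinserted
print(y_channel.shape, np.abs(restored - cover).max())
```

Only the luminance channel is transformed and watermarked; Cb and Cr are carried through unchanged and used for the final conversion back to RGB.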

4.1 Algorithm 2: Embedding process

The embedding scheme embeds multiple randomized watermark images at optimized pseudo-Zernike feature locations computed over the DTCWT coefficients. The embedding process consists of (i) pre-processing of the cover image, (ii) selection of the optimal embedding location, (iii) pre-processing of the multiple watermark images, (iv) embedding, and (v) generation of the authentication key. Pre-processing of Cover Image: first, the RGB color image is converted into YCbCr components and a two-level DTCWT is applied to the Y component; invariant Harris corner points are then obtained from the resulting 16 DTCWT subbands. Optimization: next, pseudo-Zernike moments are computed for each 15*15 block centered on an invariant Harris corner feature point. Cuckoo search then optimizes a fitness function with two sets of parameters, one based on SSIM, NCC, and payload and the other on PZM, after which SVD is computed on the selected coefficients. Watermark Images Pre-processing: multiple watermarks are used for the authentication and security of the transferred content, and they are randomized as follows: Zernike moments are extracted from watermark 1, Arnold scrambling with key K (K = 32 in this experiment) is applied to watermark 2, SHA-128 is applied to watermark 3, and watermark 4 is the PZM of the cover image (order 14 in this experiment). SVD is then computed on these watermarks. Process of Embedding the Watermarks: the randomized watermark images are embedded into the singular values of the cover image, and the inverse SVD followed by the inverse DTCWT yields the watermarked image. The optimal embedding location improves visual quality, robustness, payload, and security, and embedding in the DTCWT coefficients provides more embedding capacity while preserving visual perception.
Optimized embedding also improves the robustness and security of the proposed system. Authentication Key Generation: the authentication key is formed from the watermarked image and the owner’s digital signature; this biometric image is used to generate the public key matrix that tests the authenticity of the watermarked image. Fig. 4 shows the flowchart of the meta-heuristic embedding process using the DTCWT coefficients, and the steps are elaborated in the following algorithm.

Input: Cover image ‘CI’, Watermark images ‘wi’, and owner’s biometric image ‘Bi’

Output: watermarked image ‘WI’ and authentication key ‘Ka’

Step 1. Pre-processing of Cover Image:

(i) Convert the RGB cover image ‘CI’ into YCbCr components.

(ii) Perform a two-level DTCWT on the luminance component Y of the converted image, where:

L1, L2: the 2 real and 2 imaginary low-frequency subbands of the first and second levels.

H1, H2, H3, H4, H5, H6: the 6 real and imaginary high-frequency subbands of the first and second levels.

(iii) On each DTCWT subband, obtain the invariant feature points using the Harris corner detector, where r = 1, 2, 3, …, 12 indexes the directions of the subbands.

(iv) Calculate the energy of the DTCWT coefficient blocks centered on each point of the low-frequency and high-frequency subbands, where X denotes the points on the low-frequency subbands (LpR, LpI) and the high-frequency subbands (HqR, HqI)r, with p = 1, 2 and q = 1, 2, …, 12 directions.

Step 2. Determination of a suitable embedding location using optimization:

(i) The pseudo-Zernike moments are calculated for the maximum-energy invariant blocks and used as input to the meta-heuristic optimization algorithm.

(ii) Cuckoo search optimization (Algorithm 6) finds the blocks that are both invariant and suitable for embedding the multi-biometric watermarks.

(iii) The objective function of the optimization is based mainly on high imperceptibility (SSIM), embedding capacity, and the PZME computed in Step 2(i).

Step 3. Pre-processing of Multiple Watermark Images:

(i) Watermark 1: the RGB watermark image, either a logo or a unique image ‘WUimg’, is converted into YCbCr components and its Zernike moments are computed.

(ii) Watermark 2: to verify the authenticity of the content, the fingerprints ‘WFb’ (of size M*N) of the expected receivers are randomized using the Arnold transformation with key K. From these encrypted fingerprints, a matrix of size P*Q is generated using Bi-directional Associative Memory networks as given in [48], where P is the number of receivers and Q = M*N, and the matrix is encrypted with a pseudorandom number Kp, where b = 1, 2, 3, …, Q (number of receivers).

(iii) Watermark 3: the metadata of the cover image, Mc, is the third watermark image; it is hashed using the Secure Hash Algorithm (SHA) to provide a level of security.

(iv) Watermark 4: the PZM of the input cover image is calculated and used for tamper detection and recovery.

Step 4. Embedding of Multiple Watermarks:

(i) Calculate the SVD at each PZM invariant point (OPZME) of the optimized, embeddable cover image generated by Algorithm 6, where:

- Bcpt: the singular values of t number of matrix points p.
- Acpt and Ccpt: the orthogonal matrices of t number of matrix points p.

(ii) Similarly, compute the SVD of each multi-biometric watermark image i obtained in Step 3, where i = 1, 2, 3, 4 indexes the watermarks.

(iii) Calculate the modified singular values Bcpt’ of points t by combining the cover image singular values Bcpt with the principal component (Awi × Bwi) of the watermark image, whose significance is set by the scaling factor α, i.e., \(B'_{cpt}=B_{cpt}+\alpha\,(A_{wi}\times B_{wi})\), where α is the robustness factor.

(iv) Perform inverse SVD to obtain the modified coefficients of the optimized blocks.

(v) Obtain the watermarked component qcf1’ using the inverse DTCWT.

(vi) Convert the resulting Yc’ from (v) back to an RGB image, giving the watermarked image ‘WI’.

Step 5. Generation and Embedding of the Authentication Key:

To provide additional authentication at the receiver side, an authentication key Keya is generated and used to check the legitimacy of the received watermarked image before extraction. To do so, the owner’s digital signature biometric and the uncorrupted (original) watermarked image WI are used to generate the public key Pkm. The key generation process is as follows:

(i) Apply adaptive thresholding to the watermarked image WI to obtain a binary image.

(ii) Compute the authentication key Keya.

(iii) Embed the generated authentication key in the HL subband of the two-level DWT of the watermarked image WI, as described below:

a. Perform the DWT to acquire the multiresolution subbands.

b. Compute PCA on the HL subband to obtain its uncorrelated coefficients HLp.

c. Modify the uncorrelated PCA coefficients with the key, where the robustness factor α is set to 0.6 in this experiment.

d. Perform the inverse PCA.

(iv) The watermarked image in signed form, SWI, is thus obtained.
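The singular-value modification of Step 4(iii) can be illustrated on a single square block. The sketch below is a minimal, non-blind version under stated assumptions: it adds α·(U_w S_w), the principal component of the watermark, to the singular-value matrix of the block, and it recovers the watermark using side information (U_c, S_c, V_c of the block and V_w of the watermark) that is assumed to be stored. The actual extraction in Algorithm 3, Step 2(v)–(vii), recomputes an SVD on the received block and may differ; the block, α = 0.05, and the function names are illustrative.

```python
import numpy as np

def embed_pc(block, watermark, alpha):
    """Add alpha * (U_w S_w) to the singular-value matrix of `block`.

    Returns the marked block and side information assumed to be kept for
    extraction (U_c, S_c, V_c^T of the block and V_w^T of the watermark).
    """
    u_c, s_c, vt_c = np.linalg.svd(block)
    u_w, s_w, vt_w = np.linalg.svd(watermark)
    principal = u_w @ np.diag(s_w)               # principal component A_w x B_w
    s_marked = np.diag(s_c) + alpha * principal  # B' = B + alpha * (A_w x B_w)
    marked = u_c @ s_marked @ vt_c               # inverse SVD
    return marked, (u_c, s_c, vt_c, vt_w)

def extract_pc(marked, side_info, alpha):
    """Invert embed_pc for an unattacked block."""
    u_c, s_c, vt_c, vt_w = side_info
    s_marked = u_c.T @ marked @ vt_c.T           # undo the block's singular vectors
    principal = (s_marked - np.diag(s_c)) / alpha
    return principal @ vt_w                      # U_w S_w V_w^T = watermark

rng = np.random.default_rng(0)
block = rng.uniform(0, 255, (8, 8))              # stand-in for a selected coefficient block
watermark = rng.integers(0, 2, (8, 8)).astype(float)
marked, side = embed_pc(block, watermark, alpha=0.05)
recovered = extract_pc(marked, side, alpha=0.05)
print(np.abs(marked - block).max(), np.abs(recovered - watermark).max())
```

A smaller α keeps the marked block closer to the original (better imperceptibility) at the cost of robustness, which is the trade-off the robustness factor controls.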

4.2 Algorithm 3: Watermark Detector and the process of Extraction

Watermark detection and extraction reverse the embedding process. They comprise the following stages: (i) first-level authentication of the watermarked image, (ii) detection of the multi-biometric watermarks, (iii) extraction of the multi-biometric watermarks, and (iv) next-level authentication, namely tamper detection and recovery or tamper detection and fragile. First-level authentication: to check whether the received watermarked image is authentic, the key Ka” is computed from the received image with the same process as in embedding and compared with the key Ka’ extracted from the HL band of the 2-level DWT. If the keys match, the received content is unchanged; otherwise, it has been tampered with. This establishes the first level of authentication. Detection: the presence of the watermark is verified by computing the normalized correlation coefficient between the received (possibly corrupted) image and the cover image and comparing it with a predefined threshold; if the correlation is higher than the threshold, a watermark is present, otherwise there is no watermark. Extraction: the second level of authentication is performed during extraction and leads to two cases: (i) when authentication succeeds, the tampering is attributed to unintentional attacks, so the tampered region is detected and recovered; and (ii) when authentication fails, the tampering is attributed to intentional attacks, so the tampered region is detected and treated as fragile. In both cases, the watermark images are extracted, and after watermark post-processing the pseudo-Zernike moments of the received watermarked image are compared with the extracted watermark 4.
Watermark post-processing: the extracted multi-biometric watermarks are processed as follows: (i) for watermark 1, the extracted Zernike moments are used to reconstruct the original unique image or logo; (ii) for watermark 2, an authorized user extracts the scrambled fingerprint image of the receiver with the appropriate (Arnold) key and compares it with the receiver’s fingerprint to validate the second level of authentication; (iii) for watermark 3, the metadata of the cover image is used to validate the authenticity of the content, which gives the third level of authentication; and (iv) for watermark 4, the extracted pseudo-Zernike moments of the original cover image serve as a feature vector both for detecting the tampered region and for recovering it. Fig. 5 gives the detailed flowchart of the extraction process, which is elaborated below:

Input: Unique owner’s biometric image ‘Bi’, Signed watermarked image ‘SWI’ (might be corrupted)

Output: multi-biometric watermark images wi’, the decision on tamper detection

Step 1. Watermarked Image Authentication (first level):

(i) Apply adaptive thresholding to the received signed watermarked image ‘SWI’, as in embedding Step 5(i), to extract a binary image.

(ii) Calculate an authentication key Keyai’.

(iii) On the received watermarked image:

a. Apply the DWT to obtain the multiresolution subbands.

b. Compute PCA on the HL subband to find its uncorrelated coefficients HLp”.

c. Extract the authentication key from these PCA coefficients, where α is the robustness factor.

(iv) Compare the keys and decide:

C = Equate
If C == true
    Received content is authenticated
    Do the Process of Extraction (Step 2)
Else
    Received content is unauthenticated
    If EDs(PZM’, PZM”) < TA then
        “Unauthentication due to unintentional attacks”
        Do Tamper Detection and Recovery (Algorithm 4)
    Else
        “Unauthentication due to intentional attacks”
        Do Tamper Detection and Fragile (Algorithm 5)
    End If
End If

Step 2. Extraction and post-processing of the Multi-biometric Watermarks:

(i) Apply the DTCWT to the received watermarked image, as in embedding Step 1(ii).

(ii) Extract the invariant feature points with the Harris corner detector, as in embedding Step 1(iii).

(iii) Calculate the energy and the pseudo-Zernike moments of the DTCWT coefficients centered on every Harris point, as in embedding Step 1(iv), to obtain the PZM of the maximum-energy block.

(iv) To obtain the suitable embedding location OEf, provide the PZM and energy calculated in (iii) as input to Algorithm 6.

(v) Apply SVD to each optimized PZM invariant point OEf’, where:

- Bcpt”: the singular values of t number of matrix points p.
- Acpt’ and Ccpt’: the orthogonal matrices of t number of matrix points p.

(vi) Extract the principal component of the multi-biometric watermarks.

(vii) Apply inverse SVD to the extracted principal component Bw’ * Aw to obtain each watermark image i.

(viii) Apply the inverse DTCWT to obtain the randomized form of each watermark image.

(ix) Apply Zernike moment reconstruction to Extw1, the Arnold key K′ to Extw2, and SHA-128 to Extw3 to obtain the original form of the watermarks.

(x) Repeat the above steps for i = 1 to 4 to obtain the multiple watermarks wi’; the watermark image length is normally taken as half the length m of the cover image.
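The Arnold scrambling of watermark 2 (embedding Step 3(ii)) and its reversal with the key (extraction Step 2(ix)) can be sketched as follows. The sketch assumes the classic cat map (x, y) → (x + y, x + 2y) mod N on a square image and treats the key K as the number of iterations; both are assumptions, since other Arnold variants and key schemes exist, and the function names are hypothetical. The inverse applies the inverse matrix K times. Because the map is periodic (period 24 for a 32 × 32 image), K = 32 is equivalent to 8 iterations.

```python
import numpy as np

def arnold(image, iterations):
    """Arnold cat map (x, y) -> (x + y, x + 2y) mod N on an N x N image."""
    n = image.shape[0]
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = np.array(image, copy=True)
    for _ in range(iterations):
        scrambled = np.empty_like(out)
        scrambled[(x + y) % n, (x + 2 * y) % n] = out[x, y]
        out = scrambled
    return out

def inverse_arnold(image, iterations):
    """Inverse map (x, y) -> (2x - y, y - x) mod N, applied `iterations` times."""
    n = image.shape[0]
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = np.array(image, copy=True)
    for _ in range(iterations):
        restored = np.empty_like(out)
        restored[(2 * x - y) % n, (y - x) % n] = out[x, y]
        out = restored
    return out

rng = np.random.default_rng(0)
fingerprint = rng.integers(0, 256, (32, 32))   # stand-in for a 32 x 32 watermark
K = 32                                         # key = number of iterations (assumption)
scrambled = arnold(fingerprint, K)
print(np.array_equal(inverse_arnold(scrambled, K), fingerprint))
```

Because the map only permutes pixels, scrambling spreads a localized attack over the whole watermark after descrambling, but it adds little cryptographic strength on its own.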

4.3 Algorithm 4: Tamper detection and recovery

To authenticate data shared over insecure online social networks, the proposed approach introduces a robust, semi-fragile watermark detector. It is semi-fragile because attacks during communication may be intentional or unintentional: the invariant optimized feature points are resilient to unintentional attacks but vulnerable to intentional ones. Attacks such as noise addition, geometric variation, and JPEG compression are normally treated as unintentional because they do not change the image as a whole or its meaning. Such alterations therefore need to be detected and recovered (leading to tamper detection and recovery). On the other hand, attacks such as cropping, replacement, copy-move, and image splicing are considered intentional and have severe implications. Such attacks need to be detected but not recovered; instead, the image is collapsed to avoid conveying wrong information (leading to tamper detection and fragile).

This stage is reached when the first level of authentication fails and the received watermarked image WI’ is considered unauthentic. A threshold TA (3120 in our experiment) is then used to classify the received image as intentionally or unintentionally attacked, based on the squared Euclidean distance EDs between the extracted pseudo-Zernike moments PZM’ and the moments PZM” computed from the corrupted image. The absolute squared Euclidean distance is used because it amplifies larger variations without compromising between the recognition rate and robustness to alterations.

The pseudo-Zernike moments are generated as per Eq. (8), centered on the Harris corner points over the DTCWT coefficients, and the watermarks are extracted from the optimized locations identified by Algorithm 6. The extracted pseudo-Zernike moments PZM’ (watermark 4) are then compared with the computed moments PZM”, and the distance between the two feature vectors is calculated as the squared Euclidean distance. The threshold TA used to decide on these distances is obtained from the meta-heuristic optimization algorithm (Algorithm 6), whose objective function averages the minimum false-positive and false-negative probabilities on the receiver operating characteristic with the minimum squared Euclidean distance obtained by applying various manipulations to the watermarked image. The false-negative and false-positive probabilities for thresholds from 2500 to 5000 are given in Table 2. The distance is computed as

\[ ED_{s}=\sum_{k=1}^{N}\left|PZM'_{i,k}-PZM''_{i,k}\right|^{2} \]

where PZM’ and PZM” are the pseudo-Zernike feature vectors of the images f1(x,y) = CI (the original cover image) and f2(x,y) = WI, respectively. For order 15, \(PZM'_{i}=(PZM_{i,1},PZM_{i,2},\ldots,PZM_{i,N})=(PZ_{15,0},PZ_{15,1},PZ_{15,2},\ldots,PZ_{15,15})\) (see Table 2). When attacks are applied to the watermarked image, the feature vectors of f1(x,y) and of the attacked image f2(x,y) are computed and their distance is calculated.

Thus, tamper detection and recovery is performed when the attack is identified as unintentional (EDs < TA), whereas tamper detection and fragile is applied when it is identified as intentional (EDs ≥ TA).

4.3.1 Tamper detection and recovery

To locate the tampering and proceed with recovery, the following steps are performed. First, the rotation- and translation-invariant properties of the Harris corner detector are exploited by computing its feature points on the DTCWT coefficients of the original image. Pseudo-Zernike moments are then computed over 15*15 blocks (in our experiment) centered on the maximum-energy Harris feature points, which exploits the noise and rotation invariance of pseudo-Zernike moments. Second, the 136 pseudo-Zernike moments up to order 15 of the original image are embedded at these pseudo-Zernike feature points. The tampered regions of the watermarked image are then located by comparing, for each block i, the extracted moments PZM’ with the moments PZM” regenerated from the received watermarked image against a threshold TB (512 in our experiment). The blocks whose moments do not match indicate the tampered region.

4.3.2 Recovery

If the tampering is due to unintentional attacks, the region detected as tampered is reconstructed using the reconstruction ability of pseudo-Zernike moments.

4.4 Algorithm 5: Tamper detection and fragile

On the other hand, once the received image fails the first level of authentication and is classified as intentionally attacked using Eq. 48, it must be decided whether or not to restore the tampered area. If the tampered area is greater than a threshold TC, it is considered sensitive and the image is made fragile instead of being recovered; otherwise, tamper recovery proceeds.
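The block-wise localization of Section 4.3.1 and the recover-or-fragile decision above can be sketched together. The sketch assumes one row of moments per block, uses TB = 512 from the experiments, and measures the tampered area as the fraction of flagged blocks; that area measure and the value passed for TC are hypothetical, since the section does not give them.

```python
import numpy as np

def tampered_blocks(pzm_extracted, pzm_received, tb=512.0):
    """Flag blocks whose moment vectors differ by more than TB (rows = blocks)."""
    diff = np.abs(np.asarray(pzm_extracted) - np.asarray(pzm_received)) ** 2
    return diff.sum(axis=1) > tb

def recover_or_fragile(mask, tc):
    """Recover small tampered areas; treat large ones as fragile (TC is hypothetical)."""
    tampered_fraction = float(np.mean(mask))
    return "fragile" if tampered_fraction > tc else "recover"

extracted = np.zeros((10, 136))      # PZM' for 10 blocks (toy values)
received = extracted.copy()
received[3] += 5.0                   # block 3 altered: distance 136 * 25 = 3400 > 512
mask = tampered_blocks(extracted, received)
print(np.flatnonzero(mask), recover_or_fragile(mask, tc=0.2))
```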

4.5 Algorithm 6: Metaheuristic-based embedding location (OEf) selection using Cuckoo Search (CS)

Step 1. Initialization

(i) Initialize the CS parameters: number of nests n = 50, nest size s = 5 × watermark size, initial generation t = 0, step size α = 0.25, and maximum number of generations Gmax = 1200.

(ii) The fitness (objective) function FOn is chosen to maximize visual quality (SSIM), robustness (NCC), and capacity, where:

- CI: cover image
- WIA: tampered watermarked image
- N: total number of attacks
- Adjustment factors: λ and β, which adjust SSIM, capacity, and robustness
- SSIM: assigned a value between 0.9 and 1.0
- Capacity: the multiple watermarks wi
- TA: authentication threshold
- FPP: false-positive probability
- FNP: false-negative probability
- EDs: the squared Euclidean distance between the pseudo-Zernike moments of order O of the original cover image and of the attacked image, over N attacks

Step 2. Output: the location suitable for embedding the watermark images is determined using the fitness Fn of Eq. (50), as shown in Fig. 6.

Step 3. Selection Process:

(i) WHILE t < Gmax:

a. Generate a cuckoo i at random via Lévy flights.

b. Calculate FOi using Eq. (50).

c. Select a nest j at random from the n nests and calculate its fitness FOj.

d. IF FOi < FOj:

e. Replace j with the new solution.

f. ELSE:

i. Let the solution be i.

ii. Abandon the poorest nests and build new ones using Lévy flights.

iii. Keep the current best solution.

iv. Rank the n nests by their fitness Fn to obtain R(Fn).

v. Identify the best Fn and use it as the optimal embedding location OEf.

5 Experimental analysis

The proposed semi-fragile, multi-biometric content authentication system is evaluated in terms of the watermarking characteristics of visual perception, security, payload, and robustness. This section describes the datasets, quality measures, experimental setup, and results and discussion.

5.1 Datasets Description

Data on online social networks are vulnerable to both intentional and unintentional attacks, so specific datasets are used to verify robustness in each case. Unintentional attacks, namely image processing operations, geometric distortions, JPEG compression, and multiple JPEG compression, are tested on the standard test image dataset. Intentional attacks, namely copy-move forgery, image splicing, and collage and cropping, are tested on the MICC, CISDE, and standard test image datasets, respectively. Adobe Photoshop is used to create the forged images.

5.1.1 Standard test images

The standard test images often used in the literature include lena, peppers, cameraman, and lake. They are uncompressed ‘tif’ images of size 512 × 512 that contain bright and dark colors, textures, smooth areas, lines, and edges, as shown in Fig. 7a.

5.1.2 MICC – F600

This dataset contains 440 original images, 160 tampered images, and 160 ground-truth images for testing copy-move forgery detection [14] (see Fig. 7b).

5.1.3 CISDE

Image splicing, i.e., copying and pasting image regions, is the most common form of tampering today. The Columbia Image Splicing Detection Evaluation Dataset (CISDE) provides a benchmark of high-resolution, uncompressed images involving only the splicing operation. Because the ground truth of the exposure settings is available, the dataset is suitable not only for splicing detection but also for other computer vision algorithms [26] (see Fig. 7c).

5.1.4 Fingerprint dataset

The fingerprints of several sets of receivers were captured at various angles and recorded for the experiments using an MFS100 scanner, which relies on optical sensing and can identify even poor-quality fingerprints. The MFS100 is designed mainly for identification and verification, including authenticating an owner whose fingerprint acts as a digital password. The dataset comprises about 100 uncompressed images of size 32*32 (see Fig. 8c).

5.1.5 Digital signature

The digital signatures of the owners were created using a WACOM One graphics tablet. The dataset comprises about 100 uncompressed images of size 128*32 (see Fig. 8d).

The cover images from the standard test image, MICC – F600, and CISDE datasets are converted to the same dimensions, and the watermark images (logos or unique images, receivers’ fingerprints, and owners’ digital signatures), which are smaller than the cover images, are used for evaluation. Sample cover images from each dataset are shown in Fig. 7, and the watermark images (logo, unique image, receiver’s fingerprint, and digital signature) are presented in Fig. 8. The test images from each dataset and their descriptions are given in Table 3 below.

5.2 Experimental setup

The experiments were carried out on a Lenovo ThinkPad P15v workstation running Windows 10 Professional (64-bit), with an Intel Core i7 processor @ 2.60 GHz, a single NVIDIA Quadro P620 4 GB GPU, an FHD IPS display, and 32 GB of RAM. A 1 TB Seagate hard disk drive was used for storage, and the simulations were performed in MATLAB R2019a.

Quality Metrics

The proposed multi-biometric semi-fragile watermarking algorithm is designed to protect multimedia data on online social networks in terms of authentication, visual quality, capacity, and robustness. Visual perception is measured with two objective metrics, the Structural Similarity Index Measure (SSIM) and the Peak Signal-to-Noise Ratio (PSNR). Robustness is mostly measured with the Bit Error Rate (BER) and the Normalized Correlation Coefficient (NCC). A capacity metric is also introduced, defined as the maximum number of multi-biometric watermark images that can be embedded in the cover image. Finally, authentication is evaluated at several levels, and the corresponding decision depends on whether the attacks are intentional or unintentional.

5.2.1 Visual perception or imperceptibility

SSIM and PSNR are the most important metrics for measuring the degradation caused by attacks during communication over an insecure network. A PSNR above 38 dB is generally considered acceptable [37], while SSIM ranges from 0 (no similarity) to 1 (perfect match). For 8-bit images, PSNR is computed as

$$\mathrm{PSNR} = 10\log_{10}\frac{255^2}{\mathrm{MSE}}$$

where the Mean Square Error (MSE) between the cover image CI and the attacked watermarked image WIA is defined as

$$\mathrm{MSE} = \frac{1}{T}\sum_{t=1}^{T}\bigl(CI(t) - WIA(t)\bigr)^2$$

where T is the total number of pixels in each image, and CI(t) and WIA(t) are the intensities of the tth pixel.
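MSE and PSNR as described above can be checked with a short NumPy sketch. It assumes 8-bit greyscale images (peak value 255) stored as arrays of equal shape; the function names `mse` and `psnr` are illustrative and are not taken from the authors' MATLAB code.

```python
import numpy as np


def mse(ci: np.ndarray, wia: np.ndarray) -> float:
    """Mean squared error over all T pixels of two equally sized images."""
    ci = np.asarray(ci, dtype=np.float64)
    wia = np.asarray(wia, dtype=np.float64)
    if ci.shape != wia.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((ci - wia) ** 2))


def psnr(ci: np.ndarray, wia: np.ndarray, peak: float = 255.0) -> float:
    """PSNR in dB; assumes 8-bit data, so the peak value defaults to 255."""
    err = mse(ci, wia)
    if err == 0.0:
        return float("inf")  # identical images: no distortion at all
    return float(10.0 * np.log10(peak ** 2 / err))


cover = np.zeros((4, 4))
attacked = np.ones((4, 4))  # every pixel off by one grey level
print(round(psnr(cover, attacked), 2))  # prints 48.13
```

A uniform error of one grey level at every pixel therefore still clears the 38 dB threshold by a wide margin.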

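The SSIM between the cover image CI and the attacked watermarked image WIA is given as

$$\mathrm{SSIM}(CI, WIA) = \frac{2L_{CI}L_{WIA} + C_1}{L_{CI}^2 + L_{WIA}^2 + C_1}\cdot\frac{2V_{CI}V_{WIA} + C_2}{V_{CI}^2 + V_{WIA}^2 + C_2}\cdot\frac{V_{CI,WIA} + C_3}{V_{CI}V_{WIA} + C_3}$$

where:

- $L_{CI}$ and $L_{WIA}$ are the luminance factors of the two images, i.e., the means of CI and WIA;
- $V_{CI}$ and $V_{WIA}$ are the contrast factors, i.e., the standard deviations of CI and WIA;
- $V_{CI,WIA}$ is the covariance of CI and WIA; divided by $V_{CI}V_{WIA}$ in the third factor, it gives the normalized correlation coefficient between CI and WIA;
- $C_1$, $C_2$, and $C_3$ are small positive constants that avoid division by zero.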
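The luminance, contrast, and correlation factors described above can be combined in a minimal sketch. It assumes 8-bit images and evaluates the statistics over the whole image as a single window; common library implementations (for example MATLAB's `ssim`) instead average SSIM over local Gaussian-weighted windows, so their values will differ. The constants follow the usual choice from the original SSIM formulation (K1 = 0.01, K2 = 0.03, C3 = C2/2) and are assumptions, since the text does not state them.

```python
import numpy as np


def ssim_global(ci, wia, peak=255.0):
    """Single-window SSIM as a product of luminance, contrast and structure.

    Assumed constants: C1 = (0.01*peak)^2, C2 = (0.03*peak)^2, C3 = C2/2.
    """
    x = np.asarray(ci, dtype=np.float64).ravel()
    y = np.asarray(wia, dtype=np.float64).ravel()
    c1 = (0.01 * peak) ** 2
    c2 = (0.03 * peak) ** 2
    c3 = c2 / 2.0
    l_ci, l_wia = x.mean(), y.mean()               # luminance: means
    v_ci, v_wia = x.std(), y.std()                 # contrast: standard deviations
    v_cross = np.mean((x - l_ci) * (y - l_wia))    # covariance of CI and WIA
    luminance = (2 * l_ci * l_wia + c1) / (l_ci ** 2 + l_wia ** 2 + c1)
    contrast = (2 * v_ci * v_wia + c2) / (v_ci ** 2 + v_wia ** 2 + c2)
    structure = (v_cross + c3) / (v_ci * v_wia + c3)
    return float(luminance * contrast * structure)


rng = np.random.default_rng(0)
img = rng.integers(0, 256, (64, 64)).astype(np.float64)
noisy = np.clip(img + rng.normal(0.0, 10.0, img.shape), 0, 255)
print(ssim_global(img, img), ssim_global(img, noisy))
```

Identical images give a value of 1 (up to rounding), and any added noise lowers it.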

5.2.2 Robustness

The robustness of the embedded watermarks may be affected by various unintentional and intentional attacks; it is measured with NCC and BER, both of which are reported on a scale from 0 to 1.

(i) NCC: This metric measures the correlation between the original and extracted watermarks. A value close to 1 indicates that the two images are strongly correlated; otherwise, they are considered uncorrelated. For the ith watermark it is calculated as

$$\mathrm{NCC}_i = \frac{\sum_{k}\left(OW_{k,i} - OW_{i,m}\right)\left(EW_{k,i} - EW_{i,m}\right)}{\sqrt{\sum_{k}\left(OW_{k,i} - OW_{i,m}\right)^2\,\sum_{k}\left(EW_{k,i} - EW_{i,m}\right)^2}}$$

where $OW_{k,i}$ and $EW_{k,i}$ are the intensities of the kth pixel of the ith original and extracted watermarks, respectively, and $OW_{i,m}$ and $EW_{i,m}$ are the mean intensities of the ith original and extracted watermarks.
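The NCC defined above, built from each watermark's pixel intensities and mean intensity, can be sketched as follows. The function name `ncc` and the choice to raise an error for a constant watermark (where the denominator is zero) are assumptions for illustration.

```python
import numpy as np


def ncc(ow, ew):
    """Mean-centred normalized correlation between one original watermark
    OW_i and its extracted version EW_i."""
    a = np.asarray(ow, dtype=np.float64).ravel()
    b = np.asarray(ew, dtype=np.float64).ravel()
    da = a - a.mean()   # OW_{k,i} - OW_{i,m}
    db = b - b.mean()   # EW_{k,i} - EW_{i,m}
    denom = np.sqrt(np.sum(da ** 2) * np.sum(db ** 2))
    if denom == 0.0:
        raise ValueError("NCC is undefined for a constant watermark")
    return float(np.sum(da * db) / denom)


rng = np.random.default_rng(2)
w = rng.integers(0, 2, (32, 32))
damaged = w.copy()
damaged[:4] = 1 - damaged[:4]  # flip the first four rows of bits
print(ncc(w, w), ncc(w, damaged))
```

Note that this mean-centred form returns -1 for an inverted watermark, so the 0 to 1 range holds only when the extracted watermark is not anti-correlated with the original.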

(ii) BER: the ratio of incorrectly extracted watermark bits to the total number of embedded watermark bits. BER is 0 when the watermarks are extracted without error and increases toward 1 as the number of erroneous bits grows. It is given by

$$\mathrm{BER} = \frac{1}{m}\sum_{i=1}^{4}\sum_{k=1}^{m_i} OW_{k,i} \oplus EW_{k,i}$$

where $OW_{k,i}$ and $EW_{k,i}$ are the kth bits (binary pixel values) of the ith original and extracted watermarks, $\oplus$ denotes exclusive OR, $m_i$ is the number of bits in the ith watermark, and m is the total number of embedded watermark bits, $m = m_1 + m_2 + m_3 + m_4$.
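BER pooled over all watermarks, with m = m1 + m2 + m3 + m4, can be sketched as below. It assumes every watermark has already been converted to a binary bit array before embedding; the function name `ber` is illustrative.

```python
import numpy as np


def ber(original_wms, extracted_wms):
    """Bit error rate pooled over several binary watermarks.

    Each list element holds one watermark's bits; the denominator is
    m = m1 + m2 + ... (four watermarks in the proposed scheme).
    """
    if len(original_wms) != len(extracted_wms):
        raise ValueError("need one extracted watermark per original")
    errors, total = 0, 0
    for ow, ew in zip(original_wms, extracted_wms):
        ow = np.asarray(ow, dtype=bool).ravel()
        ew = np.asarray(ew, dtype=bool).ravel()
        if ow.size != ew.size:
            raise ValueError("watermark sizes differ")
        errors += int(np.count_nonzero(ow ^ ew))  # XOR marks wrong bits
        total += ow.size
    return errors / total


rng = np.random.default_rng(3)
originals = [rng.integers(0, 2, (8, 8)) for _ in range(4)]  # m = 4 * 64 = 256 bits
extracted = [w.copy() for w in originals]
extracted[2][0, :4] ^= 1  # corrupt 4 bits of the third watermark
print(ber(originals, extracted))  # prints 0.015625
```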

5.2.3 Payload

In general, the watermark payload is determined by the maximum number of transform coefficients suitable for watermark embedding; embedding at this capacity should degrade neither the visual quality nor the robustness of the watermarking system. DTCWT provides more transform coefficients and is therefore well suited for embedding the watermark. To maximize capacity, as in [3], pseudo-Zernike moments (PZMs) are also used: up to order 15 there are 136 moments, of which 130 can be used. Hence, for an image of size 512 × 512, approximately 230,000 bits can be embedded without degrading either the visual quality or the robustness. The watermark images should therefore be of size r × s, smaller than the p × q size of the cover image. In addition, a metaheuristic technique, cuckoo search, is introduced to determine suitable locations for embedding the multi-biometric watermarks, which improves the visual quality and robustness of the watermarking system.
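The figure of 136 moments up to order 15 can be reproduced by counting the admissible indices. The sketch below assumes that only moments with non-negative repetition q are counted (for a real image, the negative-q moments are complex conjugates and carry no extra information); it does not reproduce the selection of the 130 usable moments, which the text does not detail. Counting Zernike moments under the same convention shows why the pseudo-Zernike set offers more coefficients for the same order.

```python
def count_pzm(max_order: int) -> int:
    """Pseudo-Zernike moments A_{p,q} with 0 <= q <= p <= max_order.

    Pseudo-Zernike moments only require |q| <= p; for a real image,
    A_{p,-q} is the complex conjugate of A_{p,q}, so q >= 0 suffices.
    """
    return sum(p + 1 for p in range(max_order + 1))


def count_zm(max_order: int) -> int:
    """Zernike moments additionally require p - |q| to be even."""
    return sum(1 for p in range(max_order + 1)
               for q in range(p + 1) if (p - q) % 2 == 0)


print(count_pzm(15), count_zm(15))  # prints 136 72
```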

5.2.4 Processing speed

The processing speed of the proposed watermarking algorithm is measured as the computation time of the embedding and extraction algorithms, whose stages are as follows:

6 Embedding algorithm

- DTCWT: to obtain complex coefficients with shift invariance, strong directional selectivity, perfect reconstruction, limited redundancy, and low computational complexity;
- Pseudo-Zernike moments and Harris corner points: to determine invariant feature points;
- Cuckoo search optimization: to determine the optimal embedding locations;
- Zernike moments, Arnold scrambling, and SHA-128: to secure the watermarks;
- Embedding process: embedding in the DTCWT coefficients provides greater embedding capacity while preserving visual quality, and the optimized embedding improves the robustness and security of the proposed system; and
- Generation of the authentication key: to test the authenticity of the watermarked image.
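Arnold scrambling, one of the security steps listed above, permutes pixel positions with the cat map (x, y) → (x + y, x + 2y) mod N. The sketch below is a generic NumPy implementation of that map and its inverse, not the authors' code; treating the iteration count as the secret key is an assumption. Because the map is periodic for any N, a key equal to a multiple of that period would leave the image unchanged.

```python
import numpy as np


def arnold_scramble(img: np.ndarray, iterations: int) -> np.ndarray:
    """Arnold cat map (x, y) -> (x + y, x + 2y) mod N on an N x N image."""
    n = img.shape[0]
    if img.shape[:2] != (n, n):
        raise ValueError("Arnold scrambling needs a square image")
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = img.copy()
    for _ in range(iterations):
        scrambled = np.empty_like(out)
        scrambled[(x + y) % n, (x + 2 * y) % n] = out[x, y]
        out = scrambled
    return out


def arnold_unscramble(img: np.ndarray, iterations: int) -> np.ndarray:
    """Inverse map: read each pixel back from the position it was moved to."""
    n = img.shape[0]
    x, y = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    out = img.copy()
    for _ in range(iterations):
        restored = np.empty_like(out)
        restored[x, y] = out[(x + y) % n, (x + 2 * y) % n]
        out = restored
    return out


rng = np.random.default_rng(4)
logo = rng.random((64, 64))  # stand-in for a watermark image
key = 10                     # hypothetical secret iteration count
scrambled = arnold_scramble(logo, key)
print(np.array_equal(arnold_unscramble(scrambled, key), logo))  # prints True
```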

7 Extraction algorithm

- First level of authentication of the watermarked image;
- Multi-biometric watermark detection;
- Extraction of the multi-biometric watermarks; and
- Next level of authentication:
  - Tamper detection and recovery, and
  - Tamper detection and fragile response.
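The first authentication level compares the authentication key extracted from the received image with a key regenerated at the receiver's end. How the key is derived is not specified in this section, so the sketch below assumes a keyed hash (HMAC-SHA-256 from Python's standard library, since `hashlib` offers no SHA-128) over quantized feature values, such as the cover image's PZM features. The names `authentication_key` and `first_level_authentication`, the secret, and the rounding precision are all hypothetical.

```python
import hashlib
import hmac

import numpy as np


def authentication_key(features, secret: bytes, decimals: int = 3) -> bytes:
    """Hypothetical key: HMAC-SHA-256 over features rounded to `decimals`.

    Rounding lets small, unintentional changes map to the same key
    (semi-fragile behaviour); the precision is an assumed parameter.
    """
    q = np.round(np.asarray(features, dtype=np.float64), decimals) + 0.0  # +0.0 turns -0.0 into 0.0
    return hmac.new(secret, q.tobytes(), hashlib.sha256).digest()


def first_level_authentication(extracted_key: bytes, features, secret: bytes) -> bool:
    """Compare the extracted key with one regenerated at the receiver."""
    regenerated = authentication_key(features, secret)
    return hmac.compare_digest(extracted_key, regenerated)


key = authentication_key([0.4127, 1.2051, 0.0033], b"owner-secret")
# Slightly perturbed features (mild, unintentional change) still authenticate.
print(first_level_authentication(key, [0.41271, 1.20509, 0.0033], b"owner-secret"))  # prints True
```

Values that straddle a rounding boundary can still flip the key, so quantize-then-hash is only an approximation of semi-fragility.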

The time taken by each stage of embedding and extraction for a sample 512 × 512 cover image is given in Table 4.
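Per-stage timings like those in Table 4 can be collected with a small harness. The stage functions below are hypothetical placeholders for the embedding and extraction steps listed above; the reported figures come from the authors' MATLAB implementation, so absolute numbers from a Python sketch like this are not comparable.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def time_stages(stages: List[Stage], data: Any) -> Tuple[Any, Dict[str, float]]:
    """Run stages in order, feeding each output into the next, and time each."""
    timings: Dict[str, float] = {}
    for name, fn in stages:
        start = time.perf_counter()
        data = fn(data)
        timings[name] = time.perf_counter() - start
    timings["total"] = sum(timings.values())  # summed before "total" is added
    return data, timings


stages = [
    ("transform", lambda x: x * 2),  # hypothetical stand-ins for the
    ("embed", lambda x: x + 1),      # real embedding steps
]
result, timings = time_stages(stages, 3)
print(result, sorted(timings))  # prints 7 ['embed', 'total', 'transform']
```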

The proposed system processes images faster than the existing approaches owing to the low computational complexity of DTCWT. Unlike Hu moments, PZMs allow the image to be reconstructed from the moments themselves, using higher-order moments. Beyond processing speed, the proposed system also maintains the trade-off among visual quality, robustness, security, and payload.

7.1 Attacks

The watermarked image (WI) may be corrupted by unintentional distortions or by intentional attacks from unauthorized users on the insecure network. The visual quality and robustness of the proposed system under such degraded conditions are evaluated on the sample images (lena, cameraman, house, jetplane, lake, Barbara, baboon, pepper) under the following attack categories: (i) no attack; (ii) unintentional attacks, namely image processing operations, geometric attacks, JPEG compression, and multiple JPEG compression; and (iii) intentional attacks, namely copy-move forgery, image splicing, cropping, and collage.

7.1.1 No or zero attack

Watermark embedding itself affects the visual quality of the cover image; this effect can be estimated by evaluating the watermarked image with no attack, using the PSNR and SSIM metrics. Figure 9 shows the watermarked images under zero attack, and the corresponding extracted watermarks are presented in Fig. 10.

Figs. 9 and 10 show that the minimum PSNR obtained is 61.12 dB (pills) and the maximum is 68.69 dB (elaine), while NCC values of 0.9998 and 0.9983 are obtained for suzie and DS1, respectively.

7.1.2 Unintentional attacks

a) Image processing operations:

The image processing operations used to evaluate the proposed multi-biometric semi-fragile system are: (i) image noising: Gaussian noise with variances of 0.05, 0.1, and 0.5, and salt-and-pepper noise with densities of 0.01, 0.02, and 0.06; (ii) image filtering: median, average, and Gaussian filters of sizes 3 × 3, 5 × 5, and 7 × 7; and (iii) compression: JPEG with quality factors of 90%, 70%, and 50%, and double JPEG compression (90%-70%, 70%-50%, and 90%-50%). Figure 11 shows the multiple watermarks extracted from the watermarked images subjected to these attacks.
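Part of this attack suite can be reproduced with plain NumPy. The sketch below is modelled loosely on MATLAB `imnoise` conventions (intensities in [0, 1], Gaussian variance on that scale, salt-and-pepper density as the approximate fraction of affected pixels); the replicate padding in the average filter is an assumption. Median and Gaussian filtering and JPEG compression are omitted because they need an image-processing library.

```python
import numpy as np


def gaussian_noise(img, variance, rng):
    """Zero-mean Gaussian noise on an image scaled to [0, 1], then clipped."""
    noisy = img + rng.normal(0.0, np.sqrt(variance), size=img.shape)
    return np.clip(noisy, 0.0, 1.0)


def salt_and_pepper(img, density, rng):
    """Set about `density` of the pixels to 0 or 1 with equal probability."""
    out = img.copy()
    u = rng.random(img.shape)
    out[u < density / 2] = 0.0                     # pepper
    out[(u >= density / 2) & (u < density)] = 1.0  # salt
    return out


def average_filter(img, size):
    """size x size mean filter with edge replication at the borders."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    rows, cols = img.shape
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + rows, dx:dx + cols]
    return out / size ** 2


rng = np.random.default_rng(0)
wi = rng.random((64, 64))  # stand-in for a watermarked image in [0, 1]
attacked = {f"gauss {v}": gaussian_noise(wi, v, rng) for v in (0.05, 0.1, 0.5)}
attacked.update({f"s&p {d}": salt_and_pepper(wi, d, rng) for d in (0.01, 0.02, 0.06)})
attacked.update({f"avg {k}x{k}": average_filter(wi, k) for k in (3, 5, 7)})
```

Each attacked image would then be passed to watermark extraction and scored with NCC and BER.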

Figure 11 shows that the proposed multi-biometric semi-fragile system achieves an NCC of 0.9989 and a BER of 0.03 even under double JPEG compression with quality factors of 90 and 70, a case in which existing systems fail.

b) Geometric attacks:

This subsection validates the proposed multi-biometric semi-fragile system against geometric attacks, namely rotation, scaling, and translation (RST), under which conventional systems fail. Robustness to RST is evaluated with (i) rotation by 10°, 25°, and 45°; (ii) scaling by 50%, 75%, and 125%; and (iii) translation along x and y by (Tx, Ty) = (−25, 10), (−50, 50), and (45, −25). Figure 12 shows the RST-degraded watermarked images and the corresponding extracted watermarks. As the figure shows, the proposed system tolerates rotation best on the standard image lena, and translation and scaling best on baboon, compared with the other standard test images. RST attacks normally desynchronize the watermarking system, which is why most conventional systems fail. To address this, the proposed system computes the SVD of the pseudo-Zernike moments at Harris corner feature points in the high-energy blocks of the DTCWT coefficients. These features, together with the SSIM, NCC, and capacity values of the attacked images, are fed into an optimization process that determines the optimal embedding locations. Embedding at these locations overcomes desynchronization and allows the watermarks to be retrieved, especially under unintentional attacks.
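The rotation invariance relied on here can be checked numerically: rotating an image only multiplies each pseudo-Zernike moment by a phase factor, so its magnitude is unchanged. The sketch below computes moments directly from the standard pseudo-Zernike radial polynomial on a pixel grid mapped into the unit disk, and uses an exact 90° rotation (`np.rot90`) to avoid interpolation error. It is not the authors' implementation; arbitrary-angle rotations would add small interpolation differences.

```python
from math import factorial

import numpy as np


def pz_radial(n: int, m: int, rho: np.ndarray) -> np.ndarray:
    """Pseudo-Zernike radial polynomial R_{n,|m|}(rho)."""
    m = abs(m)
    r = np.zeros_like(rho)
    for s in range(n - m + 1):
        coef = (-1) ** s * factorial(2 * n + 1 - s) / (
            factorial(s) * factorial(n + m + 1 - s) * factorial(n - m - s)
        )
        r += coef * rho ** (n - s)
    return r


def pz_moment(img: np.ndarray, n: int, m: int) -> complex:
    """Pseudo-Zernike moment A_{n,m} of a square image mapped into the unit disk."""
    size = img.shape[0]
    c = (2 * np.arange(size) - size + 1) / size  # pixel centres in (-1, 1)
    x, y = np.meshgrid(c, c)
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    inside = rho <= 1.0
    kernel = pz_radial(n, m, rho) * np.exp(-1j * m * theta)  # conjugate basis
    pixel_area = (2.0 / size) ** 2
    return complex((n + 1) / np.pi * np.sum(img[inside] * kernel[inside]) * pixel_area)


rng = np.random.default_rng(1)
img = rng.random((32, 32))
for n, m in [(3, 1), (5, 2), (8, 4)]:
    a = abs(pz_moment(img, n, m))
    b = abs(pz_moment(np.rot90(img), n, m))
    print(n, m, f"{a:.6f}", f"{b:.6f}")  # the two magnitudes agree
```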

7.1.3 Intentional attacks

The performance of the proposed semi-fragile multi-biometric watermarking system is evaluated under intentional attacks: adding, cropping, copy-move forgery, and image splicing. Adding inserts new information at random positions in the image; cropping removes part of the image; copy-move forgery copies part of an image and pastes it elsewhere in the same image; and image splicing inserts another image, or part of one, at a random position. Because these tampers are intentional, the proposed semi-fragile system detects the tampered regions and responds in fragile mode, as shown in Fig. 13.
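Scoring tamper localization against these attacks requires test images with a known tampered region. The sketch below generates copy-move, splicing, and cropping forgeries with NumPy slicing and returns the ground-truth change mask. Block positions and sizes are arbitrary illustrative values, and cropping is modelled as overwriting a region (an assumption) so that the image size, and hence the comparison with the original, is preserved.

```python
import numpy as np


def copy_move(img, src, dst, size):
    """Copy an h x w block from src (row, col) and paste it at dst."""
    (r0, c0), (r1, c1), (h, w) = src, dst, size
    out = img.copy()
    out[r1:r1 + h, c1:c1 + w] = img[r0:r0 + h, c0:c0 + w]
    return out


def splice(img, donor, dst):
    """Paste a block taken from another (donor) image at dst."""
    r, c = dst
    h, w = donor.shape[:2]
    out = img.copy()
    out[r:r + h, c:c + w] = donor
    return out


def crop(img, top, left, h, w, fill=0):
    """Remove a region by overwriting it, keeping the image size fixed."""
    out = img.copy()
    out[top:top + h, left:left + w] = fill
    return out


def tamper_mask(original, attacked):
    """Ground-truth map of changed pixels, for scoring tamper localization."""
    return original != attacked


wi = np.arange(64 * 64, dtype=np.float64).reshape(64, 64)  # distinct pixel values
forged = copy_move(wi, src=(0, 0), dst=(32, 32), size=(8, 8))
print(int(tamper_mask(wi, forged).sum()))  # prints 64
```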

These results show that SSIM ranges from 0.8855 to 0.9985 and PSNR from 45.9234 dB to 68.6967 dB, while robustness in terms of NCC ranges from 0.8953 to 0.9994. All of these values are within acceptable limits.

8 Comparative study

To validate the proposed system's performance, we implemented the existing tamper detection-based watermarking algorithms [24, 33], Zernike moments-based watermarking systems [40, 49], a pseudo-Zernike moments-based watermarking system [60], a DTCWT-based watermarking algorithm [63], and a color image watermarking scheme [1] on the dataset used for the proposed work, and compared them under the same attacks. Tables 5, 6, 7, 8, and 9 present the results for no attack, unintentional image processing attacks, unintentional geometric attacks, intentional attacks, and processing speed, respectively.

Table 5 shows that the proposed multi-biometric semi-fragile watermarking system attains higher PSNR than the conventional systems on the standard test images lena, cameraman, baboon, elaine, and brandyrose. Similarly, its robustness in terms of NCC is 0.999, higher than that of the other state-of-the-art approaches; Zebbiche et al. [63] achieve the next best NCC, about 0.9892.

The imperceptibility and robustness of the proposed multi-biometric semi-fragile watermarking system under various unintentional and intentional attacks are compared with those of the relevant watermarking systems in Tables 6, 7, and 8.