Abstract

This review examines how Deep Machine Learning (DML) has been applied to the Covid-19 epidemic and provides recommendations for future Covid-19 research. Although vaccines for the disease have been developed, DL methods have proven to be a valuable asset in radiologists’ arsenals for the automated assessment of Covid-19. The review discusses the techniques and applications developed for Covid-19 detection using DL systems. It also provides insights into notable datasets used to train neural networks, data partitioning, and performance metrics. Following PRISMA, a taxonomy has been formed based on pretrained (45 systems) and hybrid/custom (17 systems) models with radiography modalities. A total of 62 systems are selected from the studied articles, covering X-ray (32), CT (19), ultrasound (7), ECG (2), and genome sequence (2) modalities. We begin by assessing the present state of DL and conclude with significant limitations. The limitations include limited interpretability, simplification measures, learning from incompletely labeled data, and data privacy. Moreover, DML can be utilized to detect Covid-19 and distinguish it from COPD illnesses. This literature review identified many DL-based systems for fighting Covid-19. We expect this article to help speed up the adoption of DL among Covid-19 researchers, including medical professionals, radiology technicians, and data engineers.

1 Introduction

Covid-19 originated in Wuhan, China [29] and has affected people in countries worldwide since December 2019 [3, 6]. As of January 25, 2023, a total of 664,873,023 confirmed cases of Covid-19 had been reported to the WHO worldwide, including 6,724,248 deaths. As of January 23, 2023, a total of 13,156,047,747 vaccine doses had been administered [134, 162]. According to the Ministry HFW Covid Report [105], as of January 27, 2023, India alone had 1896 active Covid-19 cases, 530,739 total deaths, and 2,203,602,459 vaccine doses administered. According to a recent BBC article [153], Covid-19 caused 59,938 fatalities in China between December 8, 2022 and January 12, 2023, and the number of confirmed cases has climbed. The effectiveness of Sinovac and Sinopharm, the two primary vaccines produced in China, against Omicron, the most common strain, is in question (China has refused to use vaccines from other nations) [153]. However, the situation is different in other nations, where the fatality rate and the number of positive cases have sharply decreased.

The Covid-19 pandemic affects health worldwide and causes economic disasters [73, 99]. Detecting the novel coronavirus [147] in the human body during the early phases is vital. Currently, the global healthcare community uses RT-PCR [18, 68, 133] tests and is looking for alternative ways to detect Covid-19 from radiology images. The WHO recommends that all coronavirus infection diagnoses be validated using RT-PCR [69]. Unfortunately, in the early days of screening, RT-PCR required hours to days [32], which is a threat to Covid-19 patients. As a result, radiological imaging is performed first for early identification of Covid-19, followed by the RT-PCR examination to assist clinicians in final confirmation [22]. At present, the time and monetary costs of RT-PCR are lower than those of CT, but the sensitivity of CT is higher in recognizing not only Covid-19 but also COPD [19]. Covid-19 is diagnosed using multiple imaging tools: X-ray [103], CT scan [10], ultrasound [139], and ECG trace images [20]. The period between infection [18] and the appearance of the first Covid-19 symptoms [22] can extend up to 15 days [46]. In the absence of regular signs such as fever, a radiograph of the lung is comparatively well suited to detecting the illness [131]. The percentage of the lungs affected by Covid-19 can be estimated efficiently from radiological images such as X-ray, CT, and ultrasound using appropriate deep transfer learning (DTL) CNN variants [45]. Although most patients have mild symptoms of Covid-19, physicians must apply the same care in isolating, treating, and monitoring them [163].

Apart from RT-PCR, the primary methods that can be adopted to identify Covid-19 are X-ray, ECG, and ultrasound techniques, which have the benefit of being cheaper and low-risk in terms of radiation harm to patients [140, 146]. Finding Covid-19 via X-ray and ultrasound is a reasonably complex job. A radiographer must carefully examine the radiographs to identify the white patches containing fluid and pus [18, 22], which is time-consuming and difficult. Various disorders, including pulmonary TB, might be misdiagnosed as Covid-19 by radiologists or expert physicians [119].

X-ray and ultrasound readings by radiologists have a significant error rate [18]. Hence, CT scans can be employed for more accurate identification [8, 17]. However, CT imaging costs considerably more than X-ray and ultrasound [94]. Multiple slices are acquired from each patient suspected of carrying Covid-19 at the time of the CT scan. Large numbers of CT slices demand strenuous effort from practitioners and doctors to confirm Covid-19 [14, 76, 78, 79, 80, 92, 100, 113, 128, 135, 137, 145, 166].

The infection is characterized by lung GGO (Ground Glass Opacity) in the initial phases, followed by crazy-paving patterns and growing consolidation. These findings led to increased demand for CT machines in China, mainly in the Hubei region, ultimately making CT an effective prognostic instrument [57]. The study [60] reported abnormalities on the chest CT scans of Covid-19 patients, all of which showed multifocal patchy GGOs, particularly close to the peripheral segments of the lungs. The study [16] states that characteristic lung CT findings are bilateral lung parenchymal GGO and consolidative pulmonary opacities.

In recent decades, applications of DML in the healthcare domain have resulted in many studies aimed at diagnosing various illnesses, such as brain cancer from MRI [51, 156], brain diseases from electroencephalography [4, 50], breast disease from digital mammograms [106], and respiratory illnesses like Covid-19 from X-ray [131], CT scan [115], ultrasound [27], ECG [20], and DNA [61]. DL, a subfield of Machine Learning (ML), has raised expectations in several fields of artificial intelligence (AI) and data analytics over the previous decade by achieving the highest accuracy [47] in a variety of tasks, particularly in the health domain [102]. This motivates the present review of Covid-19 recognition from different imaging modalities and genome sequences.

Countries with large populations, like China and India, struggled with Covid-19 vaccination because of mismanagement. To address such issues, blockchain technology can be used in developing and distributing Covid-19 vaccines [163], for example, delivering a patient’s regular medicine to the patient’s doorstep or a nearby local pharmacy. Along with DL, IoT, Big Data, AI, and blockchain technologies play a crucial role in Covid-19 healthcare, including monitoring, investigating, detecting, and preventing Covid-19. IoT examples include virtual clinics such as PingAn [127], real-time tracing, and live information in numerous virtual databases worldwide [70]. Big data supports business modeling of pharmaceutical supplies for various medications and modeling of infection movement, possible evolution, and spread zones. Examples of AI include the detection of Covid-19 through chest radiography [96] and the use of a medical chatbot, Arogya-Setu, to answer public inquiries about Covid-19 [65]. Blockchain examples include life insurance claims for Covid-associated disease and death. The following research questions (RQ) motivated our study:

- RQ 1: How can DL be utilized to detect and classify Covid-19?
- RQ 2: What are the possible diagnosis methods using radiography images?
- RQ 3: Which radiography modality (X-ray, CT, ultrasound, ECG) and genome sequence is primarily used to detect Covid-19 using DL-based pretrained and custom/hybrid models?
- RQ 4: What are the technical challenges and limitations associated with Covid-19 detection using DL?

This review article emphasizes DL-based technical results for Covid-19 detection, with the following key contributions:

- This is the principal survey reviewing Covid-19 applications exclusively in the domain of Deep Learning.
- A systematic review with advanced development of Covid-19 diagnosis methods built on DL using radiography modalities (CT, ultrasound, X-ray, paper-based ECG trace imaging) and COVID19 genomic sequencing.
- Presents the analysis work and related information in a rich, brief, and manageable form, considering key elements, such as the dataset used for experimentation, partitioning methods, and recommended diagnosis and performance analysis frameworks.
- Establish the taxonomy of the examined research to have a better insight into the developments.
- To emphasize and debate the complex aspects of existing DL-based Covid-19 assessment systems.
- Presents scrutiny of the critical results in DL techniques, including analytical and technical information of experimental setups and performance benchmark metrics explained in theoretical and practical aspects.
- Recommendation for upcoming research to advance accurate and scalable Covid-19 identification systems.

The remaining sections are organized as follows. Section 2 describes the taxonomy of important study directions, categorizing the researched systems by neural network, imaging modality-based classification task, and dataset, with practical insights and quantitative analytical diagrams. Sections 3 to 7 review the recent related work on Covid-19 diagnosis using radiography images and genome sequencing with pre-trained, hybrid, and custom-trained experimental architectures with DTL. Benchmark performance evaluation metrics used in this study are listed in Section 8.2.1. Section 8 focuses on the discussion (strengths), challenges (weaknesses), and future plans. The survey boundaries are given in Section 9. Finally, Section 10 concludes with closing remarks. The list of abbreviations is as follows:

2 Literature taxonomy of Covid-19 detection, diagnosis, and classification systems

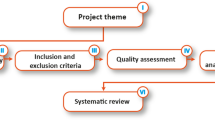

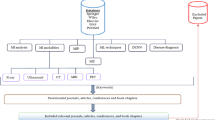

WoS, Scopus, MEDLINE, PubMed Central (PMC), DOAJ, Google Scholar, etc., are the indexing databases used for searching Covid-19 research articles in this review, which assesses the usage of DL-based Covid-19 recognition and classification systems. These systems were applied to diagnostic imaging of serious illnesses, and the performance of each method is analyzed. The primary goal of this study is to conduct the literature review, which entailed searching for significant published articles using PRISMA. A query covering articles published until Sept. 2021 was conducted using “Deep Learning,” “Covid-19,” “Diagnosis,” “Radiological Imaging” and “genome sequencing,” the most common keywords, which yielded 348 articles (Fig. 1). Ninety-five articles were ultimately chosen (the remaining articles were excluded by the exclusion criteria or because their reported performance metrics were not in the acceptable range). Finally, 42 studies were included for qualitative synthesis, and the remaining 20 studies were selected for quantitative analysis. This comprehensive analysis aimed to provide a general overview of the published studies that relied on DL with radiography images. Figure 2 shows the number of selected papers on DML techniques for Covid-19 per database.

2.1 Deep neural network architecture

In the taxonomy, DL networks are divided into pre-trained and custom models. Automatic Covid-19 detection systems have been developed using pre-trained DL architectures, such as CNN [72], RNN [7], and GAN [98]; hybrid networks like CNN-RNN and CNN-LSTM [7]; and custom-built frameworks such as DeCovNet [164], AnamNet [124], AD3D-MIL [59], PQIS-Net [93], Puldi-COVID [19], etc.

Apart from heavy, large-scale networks like ResNet [75], GoogleNet [165], NasNetLarge [19], Inception [142], VBNet [171], DenseNet [24], Xception [101], etc., lightweight networks that are simple to deploy on machines with limited resources also play a vital role in the detection of Covid-19, such as AnamNet [124], MNas3DNet41 [62], LEDNet, UNet++ [26], Reg-STN [139], LDC-NET [21], and DeepLabV3+ [26]. Custom lightweight models include SPPCOVID-Net [2] and shallow-CNN [109]. DenseNet, AlexNet [112], GoogleNet [10], Inception [111], ResNet [46], SqueezeNet, VGG [98], and Xception were identified as the DL architectures employed for the assessment of Covid-19 from radiological images [132].

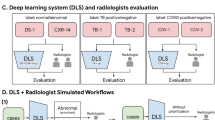

Figure 3 shows the general flowchart used for Covid-19 diagnosis, comprising data preprocessing, DML models, operations, and final results. Preprocessing steps include removing noisy/blurry samples, rescaling, labeling, augmentation, normalization, and data partitioning (train, val, and test sets). The training set is employed to produce a specific model (pretrained, custom/hybrid) for feature extraction [138, 141], segmentation, and classification, which is then validated using the val set. The effectiveness of the resulting model is assessed on the test set. A typical deep CNN architecture consists of convolutional layers (convo-layers), subsampling/downsampling/pooling layers (PL), and fully connected (FC) layers. The convo-layer applies the convolution operation: a set of learnable filters called kernels, whose weights are the layer's parameters, slides over the input, and at each position the filter and the underlying region are multiplied element-wise and summed. The prime objective of the convo-layer is to extract the features present in local regions of the input image. Features that recur throughout the dataset are represented by a feature map. The output of every convo-layer is passed to an activation function to introduce non-linearity. The most popular activation function in DL is ReLU. The PL is optional and is responsible for reducing the spatial dimensions of its input, which reduces the number of parameters in the network [109]. A PL takes every feature map output by the convo-layer and downsamples it, i.e., each pooling region summarizes an area of neurons in the convo-layer. The most common pooling is max-pooling, which yields the highest value in the input region. Each neuron of the FC layer is connected to all neurons of the previous layer. Each weight and bias indicates how strongly a value corresponds to a specific class label [24]. The output of the last FC layer is passed to an activation function that converts the class scores into the final result, with the highest-scoring class taken as the prediction. Finally, the technique is assessed using performance metrics (Section 8.2.1) such as accuracy, F1-score, specificity, precision, and recall.
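The layer sequence described above (convolution, ReLU, max-pooling, fully connected layer, final activation) can be sketched in a few lines of plain Python. This is only an illustrative toy under stated assumptions: the 6 × 6 input, the edge-detecting kernel, the FC weights, and the use of softmax as the final activation are all hypothetical choices made here, not values or code from any reviewed system.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2D convolution as used in DL libraries (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Element-wise ReLU: negative responses become zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; incomplete border windows are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def fully_connected(features, weights, biases):
    """Every output neuron sees every input feature."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn class scores into probabilities (assumed final activation)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 6x6 input with a vertical edge, and a vertical-edge kernel.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

fmap = relu(conv2d(image, kernel))           # 4x4 feature map
pooled = max_pool(fmap, size=2)              # 2x2 after pooling
features = [v for row in pooled for v in row]

# Hypothetical FC layer with two output classes.
weights = [[0.5, 0.5, 0.5, 0.5], [-0.5, -0.5, -0.5, -0.5]]
biases = [0.0, 0.0]
probs = softmax(fully_connected(features, weights, biases))
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

Real systems stack many such layers with learned weights and multi-channel inputs; the sketch only shows how data flows through one convolution, one pooling step, and one FC layer.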

Figure 4 shows an analysis of the studied papers by the CNN architectures adopted to detect Covid-19. The statistics indicate that, among all pre-trained DL models, ResNet is the most used (34 reviewed systems), followed by VGG (24 systems). One reason is the many versions of ResNet, including ResNet18, ResNet34, ResNet50, ResNet101, ResNet110, ResNet152/V2, ResNet164, ResNet1202, etc. ResNet uses residual blocks to improve classifier performance. The cornerstone of ResNet is the idea of “skip connections,” which is the basis of residual blocks. Figure 5 shows the various DML tools and libraries used in the experiments for the automated recognition of Covid-19. The most popular tools for automated Covid-19 detection are Keras and TensorFlow because of their easy implementation and the availability of pre-trained models in these libraries.
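The skip connection mentioned above adds a block's input back to its output, so the block computes relu(F(x) + x) and only has to learn the residual F(x). A minimal sketch follows, assuming two small dense layers in place of the convolution and batch-normalization layers that real ResNet blocks use; all function names and weights here are hypothetical.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(v, weights, biases):
    """Dense layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, biases)]

def residual_block(x, w1, b1, w2, b2):
    """Compute relu(F(x) + x), where F is two dense layers with a ReLU between."""
    f = linear(relu(linear(x, w1, b1)), w2, b2)       # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])    # skip connection adds x

# With all-zero weights the residual branch contributes nothing, so the
# input passes through the skip connection (up to the final ReLU).
zeros = [[0.0] * 3 for _ in range(3)]
out = residual_block([1.0, -2.0, 3.0], zeros, [0.0] * 3, zeros, [0.0] * 3)
```

The zero-weight case illustrates why very deep residual networks remain trainable: a block that has learned nothing still passes its input forward instead of destroying it.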

2.2 Taxonomy of deep machine learning-based class wise task formulation and image modalities

Using radiography (X-ray, CT, ultrasound, ECG) images and genome sequences, DML approaches for classification have been used to construct several computer-aided diagnosis (CAD) systems. The major goal of classification systems is to recognize Covid-19 cases, which entails feature extraction, prioritizing the features, and classifying with deep layers. DML frameworks are employed to generate network structures that help in 4-class diagnosis (Covid19, healthy, bacterial pneumonia, viral pneumonia) [75, 107, 131] or (Covid19, healthy, SARS1, SARS2) [46], 3-class diagnosis (Covid19, healthy, pneumonia) [110, 122], and 2-class/binary diagnosis (nonCovid, Covid19) [66, 70, 167]. Every disease label represents one or more classification categories.

Figure 6a shows the taxonomy distribution of the five categories of radiography images and genome sequencing from recently developed systems, and Fig. 6b shows the distribution of pre-trained and custom models. A taxonomy for categorizing Covid-19 detection methods is offered in this work to aid navigation of the field. The taxonomy is built on three radiography modalities (X-ray, CT, and ultrasound) and two other data sources (ECG, genome sequence); each modality is linked to two DL methodologies (pre-trained and custom systems). Two categories of DL approaches are compared: pre-trained models with DTL and custom DL techniques. Furthermore, every DL-based diagnostic process is classified into X-ray, CT, ultrasound, ECG, and genome sequencing modalities. Throughout this study, we looked at 62 Covid-19 detection systems. Overall, 32 of the examined systems (52% of the total) used the X-ray modality as a data source, 19 systems (31%) used CT scans, 7 systems (11%) used ultrasound, 2 systems (3%) used ECG, and 2 systems (3%) used genome sequencing, as shown in Fig. 6a. For Covid-19 detection, 45 systems (73% of the total) employed pre-trained models, and 17 systems (27%) used custom DL models, as shown in Fig. 6b.
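The percentages quoted above follow directly from the system counts. The short check below recomputes them; the counts are taken from the text, while the dictionary and function names are illustrative only.

```python
# System counts as reported in the taxonomy (62 systems in total).
modality_counts = {"X-ray": 32, "CT": 19, "Ultrasound": 7,
                   "ECG": 2, "Genome sequence": 2}
model_counts = {"Pre-trained": 45, "Custom/hybrid": 17}

def shares(counts):
    """Return each category's share of the total, rounded to a whole percent."""
    total = sum(counts.values())
    return {name: round(100 * n / total) for name, n in counts.items()}

modality_shares = shares(modality_counts)
model_shares = shares(model_counts)
```

Both groupings sum to the same 62 systems, which is a quick consistency check on the taxonomy.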

2.3 COVID-19 dataset collection

From the reviewed papers, a total of 35 different datasets were retrieved. A summary of X-ray, CT, LUS, ECG, and genome sequence datasets is presented in Table 1. Several authors worked extremely hard and succeeded in creating new, clean Covid-19 datasets [26, 62, 132, 142] from various medical institutes and research facilities and distributed them on public online platforms to make them openly available to the community (Kaggle, Github, SIRM, Mendeley, Biorxiv, Medrxiv, NBAI Cancer imaging archive, Radiopedia, figure1, Medicalsegmentation, Zenodo, IEEE-Dataport, NIH, Wiki-Cancerimagingarchive). On the other hand, to comply with patient, health centre, and scientific laboratory privacy laws, other researchers chose not to publish their datasets and used them for experimental purposes only [10, 82, 115, 136, 165, 167]. The summary covers the dataset, resolution, mode, type, samples/label, URLs, dataset location, and the researchers who used each dataset. These results indicate that the most popular dataset was the Covid-19 X-ray collection from Cohen [54]. The Covid-19 multimodal data samples are shown in Fig. 7.

3 COVID-19 classification using X-ray images

3.1 Real-world pre-trained deep learning methodologies, evaluations with recent schemes

Islam et al. [72], in 2020, developed a Covid-19 diagnosis system using a combined CNN and LSTM network. The CNN was used for feature extraction, while the LSTM classified Covid-19 from the extracted features. The system considered 4575 X-ray samples from multisource public datasets [33, 36, 37, 83, 116, 157] in 3 classes: 1525 images each of Covid-19, normal, and pneumonia individuals. Samples were rescaled to 224 × 224 pixels. The dataset was divided into train and test sets of 80% (3660) and 20% (915), respectively. Accuracy and loss were obtained using 5-fold cross-validation. The experiments achieved accuracy, specificity, sensitivity, and F1-score of 99.2%, 99.2%, 99.3%, and 98.9%, respectively. Bassi et al. [13], in 2021, suggested an extension of the twofold transfer learning method, in which the network is first trained on ImageNet, with five DNNs named Network A, B, C, D, and E. Networks A, B, and C are 201-layer DenseNets, and Networks D and E are 121-layer DenseNets with added arbitrary weights and biases. The dataset was randomly partitioned, with 80% of the samples for training and 20% for validation. The dataset [116] was divided into three classes (normal, pneumonia, Covid19). For the test set, 50 random images were chosen from each class. After setting aside these 150 test samples, 90% of the remaining samples were used for training and 10% for validation. Network C achieved the highest accuracy, 100%, whereas Network A achieved 99.3% and Network B 98.7%. Jain et al. [75], in Oct. 2020, proposed a DTL scheme with a two-stage strategy, i.e., data augmentation and preprocessing in stage 1, and design of the deep network model in stage 2. They assembled 1215 X-ray images from the Github [83] and Kaggle [54] datasets. Data augmentation then raised the sample size to 1832, and the scheme trained ResNet50 and ResNet-101 networks pre-trained on ImageNet. The scheme used a 5-fold cross-validation process. The first-stage model distinguishes between viral pneumonia, bacterial pneumonia, and healthy cases, and the second-stage network detects the presence of Covid-19. The experiments achieved accuracy, recall, and precision of 97.77%, 97.14%, and 97.14%, respectively. Ozcan [121], in May 2020, proposed a DL structure for coronavirus disease classification from X-ray using grid search (GS) and a pretrained DNN model. Classical DL variants (GoogleNet, ResNet18, ResNet50) were used for classification, feature extraction, and transfer learning, and the best hyperparameters were selected with the GS technique. Ozcan assembled 721 images from 3 public multisource datasets [37, 54, 83]: 242 bacterial, 200 normal, 148 viral, and 131 Covid-19 images. The assembled dataset was partitioned into train, test, and validation sets in a 50:30:20 ratio, respectively. On the four-class X-ray dataset, the method achieved its best results with GS ResNet50: 97.69% accuracy, 95.95% precision, 97.26% sensitivity, 97.90% specificity, and 96.60% F1-measure.
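The hold-out split and 5-fold cross-validation used by several of the systems above can be sketched as follows, using the 4575-image, three-class layout reported for Islam et al. [72]. Note the assumptions: the source does not state whether the split was stratified or how folds were formed, so the per-class (stratified) split, the fixed seed, and both helper functions are hypothetical.

```python
import random

def stratified_split(labels, train_frac=0.8, seed=0):
    """Split sample indices into train/test while keeping class proportions."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        cut = int(round(train_frac * len(idxs)))
        train += idxs[:cut]
        test += idxs[cut:]
    return train, test

def k_fold(indices, k=5):
    """Yield (train, validation) index lists; each fold validates once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        trn = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield trn, val

# 1525 images per class, as reported for the three-class dataset.
labels = ["covid"] * 1525 + ["normal"] * 1525 + ["pneumonia"] * 1525
train_idx, test_idx = stratified_split(labels, train_frac=0.8)
fold_sizes = [(len(trn), len(val)) for trn, val in k_fold(train_idx, k=5)]
```

With these inputs the split reproduces the 3660/915 sizes quoted above. Keeping the test set outside the cross-validation loop avoids tuning on the data that is later used to report accuracy.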

Additionally, Joshi et al. [82], in Jan. 2021, proposed a robust DTL-based Covid-19 classification structure for X-ray. Covid-19 positive X-ray images from various nations, including three hospitals in India, Australia, and 12 other countries, were chosen for training and model performance assessment. The pre-trained DarkNet53 (ImageNet) was used for feature extraction and to build a feature pyramid network. The assembled multisource CXR dataset contains 659 instances of Covid19, 1660 images of healthy, and 4265 images of nonCovid. The dataset was divided into training, 5-fold cross-validation, and test sets. They attained a test accuracy of 97.11% for ternary (Covid19, normal, pneumonia) and 99.81% for binary (Covid19, nonCovid) classification, with a detection time of 0.137 s per image. Furthermore, Fontanellaz et al. [49] proposed a fully automated study in June 2021 that employs depth-wise convolutions with inverted bottleneck blocks for feature extraction in a prognostic structure to find X-ray-based Covid-19 pneumonia. The inverted bottleneck blocks were used to construct downsampled feature maps. The combined and downsampled feature maps were fed to an MLP, which outputs a probability for each of the healthy, other-pneumonia, and Covid19-pneumonia classes. They employed multisource publicly available online datasets [33, 38, 54, 129] containing normal, confirmed Covid19-pneumonia, and other-pneumonia X-rays. After consolidation of the datasets, the training set comprised 7966 (normal), 5451 (other-pneumonia), and 258 (Covid19-pneumonia) images, while 100 samples were assigned to each class in the test set. The system attained an overall diagnostic accuracy of 94.3%; sensitivity for normal CXR, other-pneumonia, and Covid19-pneumonia of 98.0%, 88.0%, and 97.0%, respectively; PPV of 94.5%; and F1-score of 94.3%. After merging the other-pneumonia and Covid19-pneumonia cases, the network reached an accuracy of 95.7%. Moreover, Irmak [70], in Nov. 2020, designed a robust CNN model to detect Covid-19 using publicly available multisource datasets. Datasets assembled from two online public platforms [38, 54] were categorized into 625 Covid19 and 625 nonCovid19 images. The model determines whether a given CXR image shows Covid-19, with 99.20% accuracy, 98.41% specificity, 100% sensitivity, 98.41% precision, and an AUC of 99.98%.
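Accuracy, specificity, sensitivity, precision, and F1-score all derive from the four cells of a binary confusion matrix. The sketch below computes them; the example counts (TP = 124, FP = 2, TN = 124, FN = 0) are an assumption chosen here because they reproduce the accuracy, specificity, sensitivity, and precision quoted for the binary system above. The source does not report the underlying confusion matrix.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard metrics from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)        # positive predictive value (PPV)
    sensitivity = tp / (tp + fn)      # recall, true positive rate (TPR)
    specificity = tn / (tn + fp)      # true negative rate (TNR)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }

# Hypothetical counts for a 250-image test set (not taken from the source).
metrics = binary_metrics(tp=124, fp=2, tn=124, fn=0)
percentages = {name: round(100 * value, 2) for name, value in metrics.items()}
```

Because sensitivity and specificity are computed per class, they are less affected by class imbalance than accuracy, which is why the reviewed studies usually report them together.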

Rafi [130], in Oct. 2020, used two pretrained models (ResNet152, DenseNet121) to identify Covid-19. The dataset was split 70%:30% (training:testing) for binary classification (Covid19 and normal). The ensemble (ResNet152 + DenseNet121) model achieved the best results, with 98.43% accuracy, 99.23% specificity, and 98.71% sensitivity. Panwar et al. [126], in Sept. 2020, developed a DNN-based algorithm called “nCOVnet” to identify Covid-19 from X-rays. They employed datasets from three public platforms [36, 48, 54] containing 142 Covid-19 positive instances, 142 healthy patients, and 5863 pneumonia images. The X-ray samples were labeled as healthy, bacterial pneumonia, and viral pneumonia with different class values. The assembled dataset was separated into 70% for training (127 patients) and 30% for testing (31 cases). Because of the small sample size and the lack of consistent image dimensions, they resized all images to 224 × 224 pixels and used data augmentation with a rotation range of 20 degrees. The system detected Covid-19 positive individuals with 97% accuracy, with an overall accuracy of 88%, 97.62% sensitivity, and 78.57% specificity. Nayak et al. [112], in Feb. 2021, used eight CNN variants (including VGG16, ResNet34, MobileNetV2, AlexNet, SqueezeNet, and InceptionV3) for the prediction of Covid-19 from CXR. Nayak composed multisource data from 3 public platforms [54, 55, 116]. The data collection was divided 70%:30% for training and testing. A total of 286 frontal-view CXR samples were considered, comprising 143 (Covid19) and 143 (healthy) images in the training set and 120 (Covid19) and 60 (healthy) images in the test set. Because the selected dataset was small, data augmentation was later used to increase the number of instances. ResNet outperformed the competing models. Compared with other networks, the best results were attained by ResNet34, with accuracy, AUC, precision, specificity, and F1-score of 98.33%, 98.36%, 96.77%, 96.67%, and 98.36%, respectively.
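One common way to combine two pretrained networks, such as the ResNet152 + DenseNet121 ensemble mentioned above, is soft voting: averaging the class probabilities that each model outputs. The source does not state which combination rule was used, so the following is a sketch of soft voting as an assumed stand-in, with made-up probability values.

```python
def soft_vote(prob_sets):
    """Average per-class probabilities across models and pick the best class.

    prob_sets: one probability list per model, all over the same classes.
    Returns (averaged probabilities, index of the winning class).
    """
    n_models = len(prob_sets)
    n_classes = len(prob_sets[0])
    avg = [sum(p[c] for p in prob_sets) / n_models for c in range(n_classes)]
    winner = max(range(n_classes), key=avg.__getitem__)
    return avg, winner

# Hypothetical outputs of two models for one image; classes: [normal, covid].
model_a = [0.6, 0.4]
model_b = [0.2, 0.8]
avg_probs, predicted = soft_vote([model_a, model_b])
```

Here the two models disagree, and the more confident model decides the ensemble's prediction, which is the usual motivation for averaging probabilities rather than counting votes.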

Furthermore, Das et al. [110], in Jul. 2020, created an automated Covid-19 detection technique with DTL based on Inception (Xception) from CXR. They collected a single-source dataset [54] and divided it into 70% (training), 20% (testing), and 10% (validation). Covid19(+), pneumonia(+), and Covid19(−) were used as classes in this investigation. In training, the system achieved 99.52% accuracy, 98.62% F-measure, 99.12% sensitivity, 99.46% specificity, and 98.08% kappa statistic; in testing, it achieved 97.40% accuracy, 96.96% F-measure, 97.09% sensitivity, 97.29% specificity, and 97.19% kappa statistic. Ozturk et al. [122], in June 2020, proposed a novel model (DarkNet) for automatic recognition of Covid-19 using raw CXRIs. The DarkNet model was trained on 127 CXRIs from the dataset repository [54, 55]. The assembled multisource dataset contains 1125 images for training the proposed model, including 125 images for the Covid19(+) class, 500 for the pneumonia class, and 500 for the no-findings class. The experiment achieved 98.08% accuracy for binary classification (Covid, no-findings) and 87.02% for 3-class classification (Covid, no-findings, pneumonia), with 95.13% average sensitivity, 95.30% specificity, and 96.51% F1-score. Narin et al. [111], in May 2021, suggested automated detection of Covid-19 using CXR radiographs. They employed 5 pre-trained CNN variants (InceptionV3, InceptionResNetV2, ResNet50, ResNet101, and ResNet152). The study performed three different binary classifications over 4 labels, i.e., Covid19, normal, viralPneumonia, and bacterialPneumonia, via 5-fold cross-validation. On the 3 datasets [54, 83, 116], the pre-trained ResNet50 attained accuracies of 96.1% (Dataset1), 99.5% (Dataset2), and 99.7% (Dataset3).
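The kappa statistic reported above measures agreement between predicted and true labels after correcting for the agreement expected by chance. A minimal sketch of Cohen's kappa computed from a confusion matrix follows; the example matrix is hypothetical and is not data from any reviewed study.

```python
def cohens_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows: true, cols: predicted)."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n)) / total
    # Chance agreement: product of row and column marginals for each class.
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion)
        for i in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)

# Hypothetical 2-class confusion matrix with 100 samples.
example = [[45, 5],
           [10, 40]]
kappa = cohens_kappa(example)
```

In this example the raw accuracy is 85%, while kappa is lower because half of that agreement would be expected by chance on a balanced two-class problem.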

In another study, Pandit et al. [125], in May 2021, suggested automatic detection of Covid-19 from CXR using the pretrained VGG16 model. The model uses a non-contact procedure to determine whether the radiography image shows disease. In this work, 2 public datasets [39, 54] were used, containing 1428 images: 224 for the confirmed Covid-19 positive class, 700 for the common bacterial pneumonia class, and 504 for the healthy class with no infection. The dataset was randomly divided into training and test sets, with 70% for training and 30% for testing. The experiment achieved accuracy of 96% and 92.5%, sensitivity (TPR) of 92.64% and 86.7%, and specificity (TNR) of 97.27% and 95.1% in the binary (Covid and nonCovid) and 3-class (Covid, nonCovidPneumonia, and healthy) cases, respectively. Medhi et al. [103], in May 2020, developed automatic recognition of Covid-19 infection from CXR using a DL multilayer CNN. The publicly available Kaggle dataset [84] used in this study contains 150 confirmed Covid-19 patients. A patchy white shadow over the lung region led to the diagnosis of Covid-19 infection. The system comprises data acquisition, pre-processing (noise removal using a 2D Gaussian filter), segmentation (gray-level threshold), feature extraction using a multilayer CNN structure, and classification (softmax activation function) steps. The system recognized Covid-19 cases with 93% accuracy. Abbas et al. [46], in May 2020, proposed the classification of Covid-19 from CXR using DeTraC (Decompose-Transfer-Compose) DCNN. To demonstrate the strength of DeTraC, it internally uses 5 CNN variants (ResNet, GoogleNet, AlexNet, VGG19, and SqueezeNet) in the transfer learning stage. A pretrained AlexNet was used in a shallow-tuning mode to extract discriminative features of the 3 classes (normal, Covid19, SARS) for the class decomposition layer. Class decomposition aims to improve low-variance classifiers by giving their decision boundaries additional flexibility. The authors collected data from multisource public platforms [54], and 2 datasets from JSRT contain high-resolution images, i.e., 4020 × 4892 and 4248 × 3480 pixels. To deal with high-resolution images, PCA was used to project the high-dimensional feature space onto a lower dimension. The dataset was split 70:30 into training and validation sets, with the norm1 class having 441 images, norm2 279 images, Covid19–1 666, Covid19–2 283, SARS1 63, and SARS2 36 images. The performance of DeTraC was compared with pre-trained models in both shallow and deep tuning modes. DeTraC with VGG19 achieved the highest results: 97.35% accuracy, 98.23% sensitivity, and 96.34% specificity.

3.2 Real-world hybrid & custom deep learning methodologies, evaluations with recent schemes

Abdani et al. [2], in Jul. 2020, suggested a lightweight DL model that embeds a modified spatial pyramid pooling (SPP) module into the CNN to screen for possible Covid19. Four open-source databases [37, 38, 54, 83] were used, containing 219 (Covid19 positive), 1341 (normal), and 1345 (viral pneumonia) images. The suggested method is called SPP-COVID-Net and has a total of 862,331 parameters. The base of the SPP-COVID-Net network is derived from DarkCovid-Net. In DarkCovid-Net, a multi-scale approach was embedded by replacing the last few layers of the structure with parallel PLs of SPP. The system classifies CXRIs into 3 classes (Covid19, healthy, and other types of viral pneumonia). In the experimental setup, SPP-COVID-Net achieves 94.6% accuracy. Rahimzadeh et al. [131], in 2020, introduced a new DL model based on a hybrid network of Xception and ResNet50V2. Using the open-source dataset [129], the system trained the hybrid structure to classify CXRIs into 3 classes (healthy, pneumonia, Covid19). The merged network is created by concatenating the features extracted by Xception and ResNet50V2 and then feeding the combined features to the convolutional layer of the classifier. According to the experimental findings, the suggested network's average accuracy for identifying Covid19 instances was 99.56%, while the overall accuracy for all classes was 91.4%.
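To illustrate the idea behind spatial pyramid pooling mentioned above, the sketch below max-pools a single-channel feature map over 1 × 1, 2 × 2, and 4 × 4 grids and concatenates the results, so inputs of different sizes yield a fixed-length vector. This is a generic illustration under simplifying assumptions (one channel, plain Python lists, hypothetical function name and pyramid levels); it is not the modified SPP module of SPP-COVID-Net.

```python
def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool a 2-D feature map (list of rows) over n x n grids.

    Assumes the map has at least max(levels) rows and columns so that
    every grid cell is non-empty. Returns sum(n * n for n in levels) values.
    """
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for n in levels:
        for i in range(n):
            # Integer bin edges cover the whole map even if height % n != 0.
            r0, r1 = i * height // n, (i + 1) * height // n
            for j in range(n):
                c0, c1 = j * width // n, (j + 1) * width // n
                pooled.append(max(feature_map[r][c]
                                  for r in range(r0, r1)
                                  for c in range(c0, c1)))
    return pooled


# Two maps of different sizes give vectors of the same length (1 + 4 + 16 = 21).
small = [[r * 7 + c for c in range(7)] for r in range(5)]
large = [[r * 8 + c for c in range(8)] for r in range(8)]
vec_small = spatial_pyramid_pool(small)
vec_large = spatial_pyramid_pool(large)
```

The fixed output length is what lets a pyramid pooling stage feed a fully connected classifier regardless of the spatial size of the preceding feature map.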

Nour et al. [118], in Dec. 2020, proposed an intelligent Covid19 diagnostic model based on CNNs. The suggested model supports end-to-end learning and is trained from scratch (in contrast to transfer learning), with weights of 9 × 9 × 3 × 128 and bias of 1 × 1 × 128, so that it can learn features directly from the input X-ray samples. This CNN model was used for feature extraction. The deep features were then fed to ML classifiers (decision tree, SVM, and KNN). Bayesian optimization was used to tune the hyperparameters of the ML models. The experiments were carried out on images from an open Covid19 database [38, 54, 83], with original images of 219 (Covid19), 134 (healthy), and 1345 (viral pneumonia) classes. Data augmentation was then applied to increase the sample size of the dataset. The SVM classifier gave the best results: 98.97% accuracy, 89.39% sensitivity, 99.75% specificity, and 96.72% F1-measure.
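As a small worked example, the weight shape 9 × 9 × 3 × 128 and bias shape 1 × 1 × 128 quoted above can be turned into a learnable-parameter count for that layer. The helper below is a hypothetical illustration of the standard counting rule for a convolutional layer, assuming the four numbers denote kernel height, kernel width, input channels, and number of filters.

```python
def conv_layer_parameters(kernel_h, kernel_w, in_channels, filters):
    """Count learnable parameters of one convolutional layer.

    Assumes one bias term per filter, as implied by a 1 x 1 x filters bias.
    Returns (weights, biases, total).
    """
    weights = kernel_h * kernel_w * in_channels * filters
    biases = filters
    return weights, biases, weights + biases


# Shape quoted in the text: 9 x 9 x 3 x 128 weights, 1 x 1 x 128 bias.
weights, biases, total = conv_layer_parameters(9, 9, 3, 128)
# weights = 31104, biases = 128, total = 31232
```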

A study by Aslan et al. [12], in Jan. 2021, proposed a hybrid architecture (mAlexNet+BiLSTM). Two architectures were suggested for accurately identifying positive Covid19 patients using CT and CXRIs. As part of preprocessing, lung segmentation was performed automatically using an ANN, and the segmented images were given as input to the suggested designs to obtain robust features. Both structures use AlexNet for transfer learning. The second suggested structure was a hybrid arrangement containing a BiLSTM layer. The system considered 2905 samples, including Covid19 (219), viral pneumonia (1345), and normal (1341) cases. ANN-based lung segmentation results in image conversion to 1024 × 1024 pixels. With data augmentation of the Covid19 class, its samples increased to 1095. The image input layer of mAlexNet requires 227 × 227 × 3 images, and each convolutional layer is followed by ReLU. The final FC8 layer weights are fed to the BiLSTM, a recurrent network that processes sequential data. Training took 139 seconds for the CNN (mAlexNet) and 85 seconds for the BiLSTM, so training the hybrid structure took 224 (139 + 85) seconds. In the simulation results, the first architecture, mAlexNet, recognized Covid19 with 98.14% accuracy, 98.26% recall, 99.06% specificity, 98.16% precision, and 98.20% F1-score. The second, the mAlexNet+BiLSTM hybrid architecture, achieved 98.70% accuracy, 98.76% recall, 99.33% specificity, 98.77% precision, and 98.76% F1-measure.

4 COVID-19 classification using computed tomography (CT) images

4.1 Real-world pre-trained deep learning methodologies, evaluations with recent schemes

Wang et al. [165], in 2021, reported a method to detect Covid19 in CT scans using pretrained models. The suggested method took advantage of two prominent pre-trained models (GoogleNet, InceptionV3). The combined dataset consisted of CT scans from 3 different universities and hospitals, comprising 1065 Covid19 images. The proposed neural network system was divided into 2 parts: in the first part, the pretrained Inception model was used to transform samples into 1-D vectors; the second part is an FC network used primarily for classification. Parameters were trained for 1500 epochs, with an initial learning rate of 0.01 for the pretrained model. The experiments show that the network attained accuracy, AUC, specificity, sensitivity, and F1-measure of 89.5%, 95%, 88%, 87%, and 77%, respectively. Ni et al. [115], in Dec. 2020, proposed a DL algorithm for illness detection, classification, and positive pathogen determination. They compiled private datasets from three hospitals in China containing 14,435 approved chest CT images. MVP-Net and 3DUnet were used in the proposed network. The procedure was assessed on a non-overlapping dataset of 96 confirmed Covid19 individuals. The results were compared with those of three radiology residents and two experienced radiologists. The experiments achieved precision, AUC, sensitivity, specificity, and F1-score of 93%, 86%, 97%, 97%, and 94%, respectively.

Using DL, Xu et al. [167], in Oct. 2020, created a Covid19 diagnostic system. For feature extraction, ResNet-18 with a location-attention network structure was employed. A pooling method was also used to reduce dimensionality and prevent overfitting. They compiled private datasets from four Chinese hospitals and universities. The compiled dataset contains a total of 618 CT scans, with 219 samples for Covid19, 224 for IAVP, and 175 for the healthy class. The model uses image classification to distinguish the appearance and structure of the different illnesses. The distance from the edge was used as an additional weight for the algorithm, giving the relative position of each patch on the pulmonary scan, to help identify Covid19. The experiments yielded 86.7% accuracy, 81.3% precision, and 83.9% F1-score. Loey et al. [98], in Oct. 2020, used data augmentation with a conditional generative adversarial network (CGAN) together with DTL to detect the coronavirus. The work employs 5 distinct pre-trained DCNN models (AlexNet, VGG16, VGG19, GoogleNet, ResNet50). CGAN was adopted because the number of Covid19 samples was limited; data augmentation with CGAN expanded the sample size and improved accuracy. The dataset was compiled from three online public platforms [25, 40, 104], with 742 samples divided into four label classes for experimentation (Covid19, Augmented-Covid19, CGANCovid19, and AugCGANCovid19). According to the experimental results across the different class levels, ResNet gave the best-balanced performance, with 82.64% testing accuracy, 88.30% sensitivity, and 93.33% specificity. In comparison, VGGNet19 has a testing accuracy of 76.54%.

Saeedi et al. [142], in Oct. 2020, introduced a CAD web service to detect Covid19. The suggested web-based DNN hybrid architecture uses ResNet, Inception, and MobileNet. A single public dataset [40] was used for the experiment. A total of 746 samples were selected, including the Covid19 (349) and healthy (397) classes. The suggested approach uses nuSVM, which acts as an anti-overfitting bottleneck. The operation includes reducing image dimensions (to reduce parameters) and increasing system performance by using the previous layer's outputs as inputs. Lastly, a combination with DenseNet was used to classify the Covid19 and healthy classes. The proposed experiment produced 90.61% accuracy, 90.80% recall, 89.76% precision, and 95.05% AUC. Cruz [63], in 2021, proposed a stacked ensemble method with binary-phase TL to detect Covid19. The system used 6 CNN variants (VGG16, ResNet50, ResNet50–2, DenseNet161, DenseNet169, and Inceptionv3). The last layers of VGG16 were replaced with classifier layers containing 128 neurons and ReLU activation. The dataset was assembled from multisource online public platforms [25, 104]. A total of 746 CT scans were considered for the experiment, 349 for Covid19 and 397 for non-Covid. The results were obtained from binary classification. The experiments achieved 86.70% accuracy, 85.86% F1-measure, and 90.82% AUC.

Using High-Resolution Computed Tomography (HRCT) scans, Ardakani et al. [10], in Jun. 2020, suggested a method to identify Covid19 with 10 CNN architectures (ResNet18, ResNet50, ResNet101, VGG16, VGG19, AlexNet, SqueezeNet, GoogleNet, MobileNetV2, and Xception). They collected private datasets from 16 hospitals. For the simulation, a total of 1020 CT images were considered, from Covid19 (510 images) and non-Covid (510 images) individuals. The data were partitioned 80:20 into training and validation sets. ResNet101 and Xception gave better results than the other networks. The Xception network achieved 99.02% accuracy, 98.04% sensitivity, 87.3% AUC, and 100% specificity on the experimental data. ResNet101, on the other hand, attained 99.51% accuracy, 100% sensitivity, 99.4% AUC, 99.02% specificity, 99.27% PPV, and 100% NPV.

4.2 Real-world hybrid & custom deep learning methodologies, evaluations with recent schemes

In 2020, Rahimzadeh et al. [132] suggested a fully automated DL-based feature pyramid network (FPN) for Covid19 prediction from large CT samples. They introduced a novel dataset, COVID-CT Set [114]. The COVID-CT dataset comprises 48,260 healthy CT images and 15,589 images of Covid19 infection. A custom-developed selection step automatically removes CT samples in which the lungs are not adequately visible. This reduces the number of samples to be processed and the number of false detections. The FPN helps the system learn better and detect multiscale elements that may be present in an image. The experimental setup used a modified DCNN variant of ResNet50V2, enhanced by the FPN, to categorize the selected samples into Covid19 or normal classes. ResNet50V2-FPN reached 98.49% accuracy on 7996 validation samples during the image classification phase. To identify Covid19, Wang et al. [164], in Aug. 2020, recommended a 3D-DCNN (DeCoVNet). A weakly supervised neural network architecture was developed for Covid19 identification using 3D CT scans. A pre-trained Unet was used to segment the chest region. Privately assembled CT datasets from various radiology departments (Union Hospital, Tongji Medical College, HUST) were considered for the experiment. To acquire CT lung masks, regions were segmented using unsupervised learning, and failed cases were discarded manually. The experiments obtained an accuracy of 90.10%.

Han et al. [59], in Aug. 2020, proposed a custom learning algorithm, AD3D-MIL. Attention-based deep 3D multiple instance learning (AD3D-MIL) treats each 3D CT lung scan as a bag of instances, with the patient-level label assigned to the bag. The primary goal of AD3D-MIL was to predict a single bag-level label for each CT scan. Its main tasks were classifying scans as Covid19, common pneumonia, or no pneumonia and locating the infected lung area. AD3D-MIL generates deep 3D instances, such as the possible infection areas of Covid19, common pneumonia, or no pneumonia. Further, AD3D-MIL applies an attention-based pooling method to the 3D instances to provide insight into the contribution of each instance to the bag label. Finally, for easier learning, AD3D-MIL learns Bernoulli distributions of the bag-level labels. A total of 460 privately collected chest CT scans were considered for the experiment, including 230 CT images for Covid19, 100 CT images for common pneumonia, and 130 CT images without pneumonia. The experimental results show that the AD3D-MIL algorithm achieves 97.9% overall accuracy, 99.0% AUC, and a Cohen's kappa score of 95.7%.
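The attention-based pooling step described above can be sketched in a few lines: each instance receives a score, the scores are normalized with a softmax, and the bag representation is the weighted sum of the instance embeddings. The sketch below is a generic illustration and not the AD3D-MIL implementation; in a trained model the scores come from a learned attention network, whereas here `instance_scores` is a hypothetical input supplied by the caller.

```python
import math


def attention_pool(instance_embeddings, instance_scores):
    """Pool instance embeddings into one bag embedding with softmax attention.

    instance_embeddings: list of equal-length feature vectors, one per instance.
    instance_scores: one unnormalized attention score per instance (assumed
    to be produced elsewhere, e.g. by a small learned network).
    Returns (bag_embedding, attention_weights).
    """
    shift = max(instance_scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in instance_scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(instance_embeddings[0])
    bag = [sum(w * emb[d] for w, emb in zip(weights, instance_embeddings))
           for d in range(dim)]
    return bag, weights


# Equal scores reduce to mean pooling over the instances.
bag, weights = attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
```

Because the weights sum to one, they can be read as the relative contribution of each instance to the bag-level prediction, which is the interpretability benefit the paragraph refers to.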

Moreover, Paluru et al. [124], in Mar. 2021, proposed an anamorphic depth (AD) embedding-based lightweight CNN, AnamNet, to segment abnormalities in Covid19 CT images. AnamNet uses 7.8 times fewer parameters than Unet. This makes AnamNet lighter and capable of providing inference on resource-constrained devices such as mobile and IoT (Raspberry Pi) [21] platforms. AnamNet first extracts the lung region, called a lung mask, using U-net. The extracted lung region is then used for predicting Covid19. In the experiments, AnamNet offers 98.80% accuracy, 99.70% specificity, 91.10% sensitivity, and an overall 94.10% dice-score. However, it achieves 95% accuracy in the normal lung region and 68.00% in the abnormal region. Konar et al. [93], in 2021, proposed a semi-supervised shallow learning network for automatic Covid19 diagnosis using pulmonary CT imaging. The suggested architecture incorporates a Parallel Quantum-Inspired Self-supervised network (PQIS-Net), without pretrained CNN models, for automated segmentation of lung CT slices, followed by FC layers. The PQIS-Net model uses parallel three-layer configurations of interconnected quantum bits in a second-order n-connected neighborhood-based topology to segment lung CT images from differences in local intensities. The authors collected three publicly available datasets [34, 55, 56] for the experiment. The dataset [54] was used for segmentation, and the other two datasets were used to classify Covid19. The examined system achieved 98.4% accuracy, 98.6% precision, 98.5% recall, 97.8% AUC, and 98.3% F1-score.

5 COVID-19 classification using ultrasound images

Ultrasound has historically been used for diagnosis in cardiovascular [88, 161] and maternity care, and more recently for a variety of other conditions, including kidney disease detection [117], spondyloarthropathies [41], atherosclerosis [154], etc. One reason is that technology improvements, particularly in computer vision, have made it possible to extract important information from the low-quality, noisy images of the ultrasound modality [64]. Several recent studies have employed ultrasound as a useful diagnostic tool for hepatocellular carcinoma [95], endometriosis [169], and pseudotumor cerebri [44]. Combining pulmonary ultrasound with DNNs has proven beneficial for making lung ultrasonography quicker and easier to evaluate at the bedside of patients with respiratory failure. Covid19 detection using DL-based lung ultrasound (LUS) needs to perform essential tasks such as frame-based classification, video-based score prediction, and pathological artifact segmentation.

LUS imaging is a valuable technique when patient inflow exceeds the capacity of a clinic's radiology facilities, owing to its wide range of applicability and relatively low cost. Because of the low cost, it is also accessible to lower-income countries. However, in contrast to the X-ray and CT data used in DL-based image processing, LUS datasets are available for training in the form of both images/frames and videos. If a dataset is of the video type, converting it to a frame dataset requires additional computation. In addition, time-series processing adds further preprocessing to the LUS dataset.

5.1 Real-world pre-trained deep learning methodologies, evaluations with recent schemes

In August 2020, Roy et al. [139] developed a novel deep network (derived from STN) that predicts the infection severity score associated with an input frame and provides weakly supervised localization of pathological artifacts. Roy et al. also developed a new technique based on uni-norms for effective aggregation of frame scores at the video level. Finally, standard deep models were evaluated for pixel-level segmentation of Covid19 ultrasound images. The ultrasound dataset was collected from Italian hospitals (ICLUS-DB) [74] for the experiments. The dataset contains annotations on a 4-level scale at both the frame and video levels. It also comprises a subset of LUS frames labeled at the pixel level. The proposed model, named Reg-STN, uses CNN, STN, Unet, Unet++, and Deeplabv3+ as backbones. A total of 58,924 frames corresponding to 277 videos were acquired, comprising 45,560 frames from convex probes and 13,364 frames from linear probes, which were labeled according to the scoring criteria (score range of 0 to 3). The labeled frame dataset consists of 19,973 frames for score-0 (34%), 14,295 frames for score-1 (24%), 18,972 frames for score-2 (32%), and 5684 frames for score-3 (10%). In addition, 60 video samples were selected for video-level annotation. A total of 1005 convex-probe and 426 linear-probe frames were annotated semantically at the pixel level. The test split consists of 80 videos from eleven patients, comprising 10,709 frames. The training set includes all frames from the remaining videos. The video-based predictor is then trained and evaluated using 5-fold cross-validation, with all videos from the same patient kept in the same fold. The proposed frame-based scoring method achieves an average accuracy of 71.4%, whereas video-based score prediction (uni-norms) achieves a 60% F1-score and 60% precision and recall. 
Pixel-based semantic segmentation with the ensemble of UNet, UNet++, and DeepLabV3 using online augmentation achieves the highest accuracy of 96%, a dice coefficient of 75% for the union of Covid19-related scores, and a mean dice score of 65%.
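Keeping all videos of a patient in the same fold, as described for the video-based predictor above, avoids leaking patient-specific appearance between training and evaluation. The following is a minimal sketch of such a patient-level fold assignment; the function name, the round-robin assignment rule, and the example identifiers are illustrative assumptions, not the procedure used in [139].

```python
from collections import defaultdict


def patient_level_folds(video_to_patient, k=5):
    """Assign videos to k folds so that no patient is split across folds.

    video_to_patient: dict mapping a video id to its patient id.
    Patients are assigned to folds round-robin (an assumed, simple rule;
    it does not balance the number of frames per fold).
    """
    patients = sorted(set(video_to_patient.values()))
    fold_of_patient = {p: i % k for i, p in enumerate(patients)}
    folds = defaultdict(list)
    for video, patient in sorted(video_to_patient.items()):
        folds[fold_of_patient[patient]].append(video)
    return [folds[i] for i in range(k)]


# Hypothetical identifiers for illustration only.
mapping = {"v1": "p1", "v2": "p1", "v3": "p2", "v4": "p3", "v5": "p3", "v6": "p4"}
folds = patient_level_folds(mapping, k=2)
```

A frame-level random split of the same data would place near-identical consecutive frames of one video on both sides of the split and typically inflates the reported scores.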

Born et al. [26], in Jan. 2021, created an ultrasound frame-based approach that distinguishes Covid19 LUS recordings from those of healthy individuals and pneumonia patients. The system used five CNN variants (VGG16, VGGCAM, UNet, UNet++, DeepLabV3+). The collected dataset [53] contains more than 200 convex and linear LUS videos, including 70 videos and 22 images for the Covid19 class, 51 videos and 22 images for the bacterial pneumonia class, 6 videos for the viral pneumonia class, and 73 videos and 15 images for the healthy class. The authors also released the dataset on a public platform. The system used only three classes; the viral pneumonia class was excluded from the analysis because of the small number of instances (3 convex videos). All convex LUS data, comprising 179 videos and 53 images, were then processed manually, and the videos were split into frames at a frame rate of 3 Hz (maximum 30 frames per video). The final analysis dataset comprises 1204 frames of Covid19, 704 frames of bacterial pneumonia, and 1326 frames of the healthy class. All frames were rescaled to 224 × 224 pixels. Results were obtained with 5-fold cross-validation stratified by the number of samples per class. The suggested frame-based model with VGG provides the best performance for the Covid19 class, with 88% recall, 90% accuracy, 89% F1 score, and 94% specificity. VGG-CAM obtains 90% recall, 92% precision, 91% F1-score, and 96% specificity, whereas in the video-based comparison the Genesis model achieves 74% recall, 77% precision, 75% F1-score, and 87% specificity.
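The frame extraction described above (3 Hz sampling, at most 30 frames per video) amounts to picking evenly spaced frame indices from each recording. A minimal sketch is shown below; the native frame rate of each video is an assumption that must be read from the file, and the function name and index rule are hypothetical rather than taken from [26].

```python
def sampled_frame_indices(n_frames, native_fps, target_hz=3.0, max_frames=30):
    """Return frame indices sampled at target_hz, capped at max_frames.

    n_frames: number of frames in the video.
    native_fps: native frame rate of the video (assumed known).
    """
    step = native_fps / target_hz  # native frames between two sampled frames
    indices = []
    k = 0
    while len(indices) < max_frames:
        index = int(k * step)
        if index >= n_frames:
            break  # ran past the end of the video
        indices.append(index)
        k += 1
    return indices


# A 10-second clip at 30 fps yields exactly the 30-frame cap.
ten_second_clip = sampled_frame_indices(n_frames=300, native_fps=30.0)
# A 1.5-second clip at 30 fps yields 5 frames.
short_clip = sampled_frame_indices(n_frames=45, native_fps=30.0)
```

The cap keeps long recordings from dominating the frame dataset, which matters when the number of videos per class is small.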

Furthermore, Muhammad and Hossain [108], in Aug. 2020, proposed classifying Covid19 against healthy individuals from LUS using multi-layer fusion. The proposed model is a light CNN containing fewer than 0.4 million learnable parameters. Features extracted from different layers were merged into a feature vector that was fed to an MLP, using an FC layer, for classification. The dataset collected from [28] contains 121 videos, including 45 (Covid19), 23 (bacterial pneumonia), and 53 (normal), and a further 40 frames comprising 18 (Covid19), 7 (bacterial pneumonia), and 15 (normal) classes. Frames with a resolution of 512 × 512 pixels were used in the experiment. The proposed fusion method with ResNet, SqueezeNet, achieves 92.5% precision and 91.8% accuracy. Rojas-Azabache et al. [136] proposed DL-based recognition of Covid19 illness from LUS images. The dataset was obtained from a private health clinic in Lima and consisted of 50 LUS videos, from which 750 frames were extracted for Covid19 cases and 750 frames for the healthy class. Grayscale frames of 512 × 512 pixels were available. The dataset was categorized into Covid19 and healthy classes. The reported accuracy was 100%. In another investigation, Arntfield et al. [11] produced a deep learning classifier for recognizing Covid19 from LUS frames. The model was trained on LUS frames showing B-lines of various etiologies. The dataset was collected from 2 LHS-center hospitals and consists of 600 LUS videos (121,381 frames) classified as Covid19, non-Covid acute respiratory distress syndrome (nonCovid), and hydrostatic pulmonary edema (HPE). For training, 99,471 frames (500 videos) were considered; for test-1, 9540 frames (49 videos); and for test-2, 12,370 frames (63 videos). The proposed system achieves 100% AUC for the Covid19 and HPE classes and 93.40% for the nonCovid class.

5.2 Real-world hybrid & custom deep learning methodologies, evaluations with recent schemes

Born et al. [27], in Jan. 2021, proposed automatic recognition of Covid19 from ultrasound images. The proposed LUS model (POCOVID-Net) used VGG16 as its backbone. A large dataset called POCUS was collected and made publicly available [28]. The method analyzed 1103 images from 64 videos, including 654 frames of Covid19, 277 frames of bacterial pneumonia, and 172 frames of healthy controls. The system was evaluated using 5-fold cross-validation. POCOVID-Net contains a total of 2,392,963 trainable and 12,355,008 non-trainable parameters. The POCOVID-Net model achieves an average accuracy of 89%. For the Covid19 class, the POCOVID-Net model with five-fold cross-validation achieves 96% sensitivity, 79% specificity, 88% precision, and 92% F1-score. A recent study [17], in Nov. 2022, implements LUS-based COVID19 classification using a combination of custom graph and capsule NNs. The experiments were trained on the single POCUS [28] data source, containing 1560 samples with COVID19, pneumonia, and healthy labels. Comparing the capsule and graph networks, the graph module performed better in terms of classification accuracy. The capsule network attained 95.21% accuracy, whereas the graph network attained 97.26% accuracy, 96.93% AUC, 95.92% F1-score, and (93.11, 98.68) CI.

In addition to the systems discussed above, the remaining Covid19 detection methods are tabulated in Table 2. Table 2 shows the key technical and numerical characteristics of the examined Covid19 diagnosis systems, such as datasets, network, samples per label, data splitting criteria, and quantitative performance. It also classifies the different imaging modalities by pretrained and hybrid/custom-made DML models.

6 COVID-19 classification using paper-based ECG-trace images and genome sequencing

6.1 Genome sequence for COVID-19 classification

To classify coronavirus genomic information, a novel concept called Neurochaos Learning (NL) was introduced by Harikrishnan et al. in 2022 [61]. NL is inspired by the empirical findings of chaos and heterogeneity at the level of neurons in biological brain circuits. The authors assembled a multisource dataset [1, 123] of RNA sequences. The NL framework is a multilayer structure made up of chaotic neurons. With leave-one-out cross-validation, NL achieved a mean sensitivity of 0.998, specificity of 0.998, and accuracy of 0.998 for the multiclass classification problem (SARSCoV2, Coronaviridae, Metapneumovirus, Rhinovirus, and Influenza). The evaluation demonstrates a mean macro F1-score greater than 99 for distinguishing SARSCoV2 from SARSCoV1 genome sequences using just a single training sample per class over 1000 independent random training trials. The effectiveness of NL is compared with that of KNN, LR, RF, SVM, and NBC classifiers. In another paper, Singh et al. [151] presented a method to classify SARSCoV2 using complementary DNA synthesized from the single-stranded RNA virus. A total of 1582 samples, with genome sequences of varying lengths from different regions, were gathered from multiple data sources and split into SARSCoV2 and nonSARSCoV2 groups. Applying DSP approaches, the authors extracted eight biomarkers based on three-base periodicity and ranked them using filter-based feature selection. The ranked biomarkers were used as input to the KNN, SVM, DT, and RF algorithms to distinguish SARSCoV2 from other coronaviruses. The performance of the classifiers was evaluated in terms of accuracy and F-measure through 10-fold cross-validation. When evaluated on unseen data, RF was the most accurate classifier, discriminating the SARSCoV2 virus from other coronaviruses and control subjects with 97.4% accuracy, 96.2% sensitivity, and 98.2% specificity. 
Additionally, the suggested approach outperformed earlier research in terms of computational efficiency, computing the genomic biomarkers in just 0.31 s.

6.2 Paper-based ECG-trace images for COVID-19 classification

In response to the problems that COVID19 infection causes in pulmonary circulation, Irmak [71], in 2021, presented a novel DCNN method for assessing COVID19 illness using only ECG trace images produced from the ECG signals of COVID19-affected individuals. The single-source ECG-trace dataset [91] contains 650 COVID19, 300 MI (Myocardial Infarction), 720 healthy, and 600 irregular heartbeat samples. The ECG-trace dataset details are tabulated in Table 1. The whole 2170-sample ECG dataset is divided using the classical hold-out method in a ratio of 60:20:20. For the 2-class tasks (COVID19/Healthy), (COVID19/Irregular heart rate), and (COVID19/MI), overall classification accuracies of 98.57%, 93.20%, and 96.74% and AUC scores of 99.6, 97.7, and 99.05 were obtained, respectively. Additionally, for the 3-class (COVID19, Irregular heart rate, MI) and 4-class (Healthy, COVID19, Irregular heartbeat, MI) classification problems, average classification accuracies of 86.55% and 83.05%, respectively, were attained. In addition to the above study, ECG-based COVID19 detection using a multi-DCNN ensemble approach was suggested by [20] in Nov. 2022. The simulation uses 4 base models (VGG16, DenseNet201, MobileNetV2, ResNet152V2) and 11 meta/ensemble models. The combinations [M2, M3] and [M1, M2, M3] give very similar results, with 93.5% accuracy, 87% recall, 87.03% F1-score, and 95.67% specificity.
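A classical hold-out split of the kind described above for the ECG-trace dataset can be sketched as a shuffled partition of sample indices. The function below is a generic illustration with a hypothetical name and an assumed fixed random seed; the source does not state whether the original split was stratified by class, and this sketch is not stratified.

```python
import random


def hold_out_split(n_samples, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle sample indices and split them into train/validation/test parts.

    ratios: fractions for (train, validation, test); the test part receives
    whatever remains after the first two are taken.
    seed: assumed fixed seed so the split is reproducible.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * ratios[0])
    n_val = round(n_samples * ratios[1])
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


# For the 2170 samples quoted in the text, 60:20:20 gives 1302 / 434 / 434.
train, val, test = hold_out_split(2170)
```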

7 Segmentation approaches used for COVID-19

We also reviewed some segmentation-based articles to understand the segmentation of Covid19-infected lung regions. After adjusting the overall intensity, the edges of the diseased lung may be enhanced. Image segmentation can be accomplished in two ways. The first is to extract features over an image patch. The second is to train on a labeled dataset. DL can employ either a traditional CNN over image patches or FC networks across the entire image. Jiang et al. [81], in Feb. 2021, suggested a dual-generator (global-local) and dual-discriminator (multi-resolution) structure for Covid19 CT image synthesis using a conditional GAN. The dual generator is used to capture and reproduce information from CT at different levels. The dual discriminator learns from full-FR (512 × 512) and half-HR (256 × 256) resolution CT data. The GAN was trained to distinguish real inputs from synthetic ones. A DESUM at the generators helps balance the lung-area and small-lesion information by dynamically weighting the two terms through element-wise summation and keeps the generator from overemphasizing either one. A DFM at the discriminator weighs the loss from the 2 inputs with different resolutions. It allows the HR discriminator to capture additional information on lung structure and lesion regions, and it passes additional features of small lesion areas to the FR discriminator. These dual multi-resolution discriminators helped ease the training process and improved the visual quality of the synthetic data. Such techniques could be used efficiently for data augmentation, to help trainee radiologists who need high-quality Covid19 samples, and for semantic segmentation of Covid19 chest radiography images. The global information generator uses 4 convolutional layers with ReLU for upsampling and downsampling and 9 res-blocks. The system used 829 lung CT samples from the Radiopaedia [36] database. 
In the experiments, segmentation of Covid19 infection on the synthesized data achieved a 90.19% dice score, 90.34% sensitivity, and 99.84% specificity.

For automatic segmentation of Covid19-infected areas and the entire lung in chest CT scans, Yan et al. [168], in Mar. 2021, proposed a 3D-convolution-based DL structure called Covid-SegNet. The dataset contains 165,667 annotated images from 861 scans of Covid19 patients. The CT scans of 731 individuals were randomly selected for training, and those of the remaining 130 patients formed the testing set. The proposed feature variation (FV) block effectively improves the feature representation capability for the Covid19-infected segment. Features are fused at different scales through progressive atrous spatial pyramid pooling (PA-SPP) to handle fine infection regions of varied appearance and shape. The Covid-SegNet model contains res-blocks, FV-blocks, PA-SPP-blocks, and downsampling in the encoding layers, and res-blocks and de-convolution layers with a kernel stride of half (1/2) in the decoding layers. The last layer generates the segmentation results. For lung and Covid19 segmentation, the proposed technique attained dice similarity scores of 98.7% and 72.6%, respectively.
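The dice similarity score used to evaluate the segmentation systems in this section measures the overlap between a predicted mask and a reference mask as twice the intersection divided by the sum of the two mask sizes. A minimal sketch for flattened binary masks follows; the function name and the convention of returning 1.0 for two empty masks are assumptions made for the illustration.

```python
def dice_score(pred_mask, true_mask):
    """Dice similarity between two flattened binary masks (lists of 0/1).

    Returns 2 * |A ∩ B| / (|A| + |B|); two empty masks are treated as a
    perfect match (an assumed convention).
    """
    intersection = sum(p * t for p, t in zip(pred_mask, true_mask))
    total = sum(pred_mask) + sum(true_mask)
    return 1.0 if total == 0 else 2 * intersection / total


# One of two predicted pixels overlaps one of two reference pixels.
score = dice_score([1, 1, 0, 0], [1, 0, 1, 0])  # 0.5
```

Because the score is normalized by mask size, small structures such as infection regions are penalized heavily for a few misclassified pixels, which is consistent with infection dice scores being lower than whole-lung dice scores.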

The summary report with technical details of the experimentation/simulation setup from the reviewed articles, with detailed values, is provided in Table 3.

The complexity of a network can be gauged by its number of layers. The capacity of the model rises as the depth increases. Training DL networks with multiple hidden layers can be more computationally expensive than training a single-layer network. The statistical analysis of Table 3 shows that 16 systems use standard layers, 20 systems employ customized layers, and the remaining 30 systems do not disclose the number of layers they use (Fig. 8a). Almost 92% of the studied systems reported accuracy as a performance metric. The difficulty in training a DNN is that the learning rate must be chosen appropriately: a too-high learning rate may cause the network to converge too quickly to a suboptimal solution or to diverge, while a too-low learning rate can cause training to stall. Twenty-one methods applied a learning rate of 0.0001, making it the most frequently used value (shown in Fig. 8c). Kernel size plays a vital role in automatically extracting valuable features for image classification or object detection. Of the 62 studied systems, 28 use a 3 × 3 kernel size (shown in Fig. 8b); across all systems, batch sizes range from 1 to 512 samples per iteration and epochs from 5 to 1500.
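The effect of the learning rate can be seen on a toy problem. The sketch below runs plain gradient descent on the one-dimensional loss f(w) = w², whose minimum is at w = 0; the loss function, step count, and learning-rate values are illustrative assumptions and do not correspond to any reviewed system.

```python
def gradient_descent(learning_rate, steps=50, w0=1.0):
    """Minimize f(w) = w**2 with plain gradient descent and return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w            # derivative of w**2
        w -= learning_rate * grad
    return w


too_high = gradient_descent(1.1)     # each step overshoots; |w| grows
reasonable = gradient_descent(0.1)   # shrinks quickly toward the minimum at 0
too_low = gradient_descent(0.0001)   # barely moves from the starting point
```

On this toy loss each update multiplies w by (1 - 2 × learning rate), so any rate above 1.0 makes the iterate grow in magnitude, while a very small rate leaves it almost unchanged after 50 steps.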

The activation function is added to the network so that it can learn complex patterns from the data. Thirty-six systems use ReLU, 18 systems use softmax, two systems each use sigmoid and Leaky ReLU, and Bernoulli, PQIS, and Tanh are each used in one system (shown in Fig. 8d). Generally, ReLU is employed in the hidden layers to mitigate the vanishing gradient problem and improve computing speed, and softmax is employed in the final layer. Optimizers are strategies for minimizing an error (loss function). They are mathematical functions that update the learnable parameters of a model, such as weights and biases. Thirty-six systems used the Adam optimizer in the studied networks (Fig. 8e).
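The two most common activation functions in the surveyed systems have simple definitions: ReLU replaces negative values with zero, and softmax converts a vector of class scores into probabilities that sum to one. The sketch below gives plain-Python versions for illustration; the function names are hypothetical, and deep learning frameworks provide their own optimized implementations.

```python
import math


def relu(values):
    """Rectified linear unit applied element-wise: max(0, v)."""
    return [max(0.0, v) for v in values]


def softmax(logits):
    """Convert class scores to probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


hidden = relu([-1.0, 0.0, 2.5])          # [0.0, 0.0, 2.5]
class_probs = softmax([1.0, 2.0, 3.0])   # largest score gets the largest probability
```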

8 Open discussion, challenges, and future trends

8.1 Open discussion

This research aims to identify the best DML architectures for detecting, segmenting, and classifying Covid19. Table 3 summarizes the detailed technical analysis of the individual systems and the simulation settings stated in the peer-reviewed papers. Throughout this study, 62 diagnosis methods were surveyed, including 32 based on X-ray, 19 on CT, 7 on ultrasound, and 4 on other CAD inputs (ECG, genome sequence). Thirty-three systems used multi-source data, only 16 systems employed a single dataset, and the remaining systems did not disclose the dataset used. DL approaches for Covid19 imaging depend on large collections of records paired with an accurate reference diagnosis such as RT-PCR and with outcomes such as mortality and time to discharge [43]. The benchmark and real-world datasets produced by the medical ecosystem and used as inputs to DL models are shown in Table 1. Although a few frameworks included a large dataset [62, 139], the quantity of Covid19 instances is quite small [86, 110, 122, 126]. Both binary [7, 57, 77, 85, 86, 144, 160] and multi-class [90, 101, 107, 170] methods were found across the reviewed systems. Incorporating clinical data, possibly as time series including repeated images, vital signs, and blood tests, may substantially increase the number of training samples [150-162]. Several investigators and radiological sources have publicly released Covid19 image datasets. We summarized the identified systems by characteristics such as datasets, class-wise number of samples, data splitting strategies, the DL approach utilized for detection, and the assessment methods used for performance measurement. We also tabulated the technical and numerical analysis of the remaining systems, highlighting the critical aspects, in Table 2.
In terms of data splitting, various models used 4-fold to 10-fold cross-validation methods [72, 75, 85, 86, 90, 111], whereas others utilized a specific ratio approach [11, 77, 143, 144] for both the pretrained and custom DML frameworks.
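The two splitting strategies can be sketched as follows. This is a minimal illustration using only the Python standard library; the function names, the 80/20 ratio, the choice of k = 5, and the fixed seed are assumptions made for the example and are not taken from any specific reviewed system.

```python
import random


def ratio_split(n_samples, train_fraction=0.8, seed=0):
    """Hold-out split: shuffle the indices once and cut at a fixed ratio."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    cut = int(n_samples * train_fraction)
    return indices[:cut], indices[cut:]


def k_fold_splits(n_samples, k=5, seed=0):
    """k-fold cross-validation: every sample is used for testing exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]   # k roughly equal folds
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


train_idx, test_idx = ratio_split(100, 0.8)
folds = k_fold_splits(100, k=5)

print(len(train_idx), len(test_idx), len(folds))
```

A hold-out split is cheaper because the model is trained once, whereas k-fold cross-validation trains k models but gives a performance estimate that depends less on one particular partition, which matters when the Covid19 class is small.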

The confusion matrix [159] is a performance-assessment tool for ML-based classification problems with two or more output classes. Across the study, typical assessment metrics such as accuracy, sensitivity, specificity, precision, recall, F1-score, AUC, PPV, NPV, and Cohen's kappa score are used. In terms of the highest individual performance, the reviewed systems reported 100% accuracy [136], 100% AUC [11], 99.44% F1-score [158], 100% sensitivity [70, 158], 99.75% specificity [118], and a kappa statistic of 98.08% [110]. By a factor of 30, the X-ray [54] collection is the most utilised resource. The model created in [82] achieved 99.81% accuracy for 2-class classification; for 3-class classification, the highest accuracy of 99.34% was achieved by [158], and for 4-class classification, the highest accuracy was 99% by [90]. The system created in [110] reached 99.52% accuracy in training and 97.50% in testing; most of the techniques scored highest on precision, followed by sensitivity. The number of samples used in the reviewed experiments ranged from 80 [160] to 152,791 [62]. Most of the methods did not disclose the computation time because they employed various data sources for the study; however, some solutions estimated the computing time. The highest time, 224 s, was required by the hybrid mAlexNet+BiLSTM model [12], whereas the lowest execution times per image were 1.06 s and 0.136 s, reported by [21] and [85], respectively. However, the computation time for a custom model depends on the number of layers and the hardware specifications. About 90% of the studies did not report the model computation time required for training, validation, or testing on their hardware configuration. The system developed in [82] using pre-trained networks took 0.137 s per image, which is among the lowest execution times reported for Covid19 detection.
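Since the kappa statistic is reported less often than accuracy, a short worked example may help. The sketch below computes Cohen's kappa, defined as (observed agreement - expected agreement) / (1 - expected agreement), from a confusion matrix; the function name and the example counts are hypothetical and do not correspond to any reviewed system.

```python
def cohen_kappa(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(n_classes)) / total
    # Expected agreement by chance, from the row and column marginals.
    expected = 0.0
    for i in range(n_classes):
        row_total = sum(confusion[i])
        col_total = sum(confusion[r][i] for r in range(n_classes))
        expected += (row_total / total) * (col_total / total)
    return (observed - expected) / (1.0 - expected)


# Hypothetical 2-class confusion matrix (rows: true class, columns: predicted class).
example = [[45, 5],
           [10, 40]]
kappa = cohen_kappa(example)

print(round(kappa, 2))  # 0.7
```

Here the observed agreement is 0.85 and the chance agreement is 0.5, so kappa is 0.7, which is lower than the raw accuracy because agreement expected by chance is discounted.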

In many of the assessed systems, pre-trained networks outperformed custom ones. The effectiveness of the created DML networks differs (depending on the parameters, layers, sizes, etc.) and is therefore not directly comparable, because different data sizes were used in practically every study. When comparing imaging modalities, X-ray fared better than CT, ultrasound, and ECG. A primary challenge in medical imaging [97, 152] is the ad hoc manner in which image data are gathered. The reviewed papers show that finding anomalies in chest radiological images such as CT, X-ray, and ultrasound scans plays an essential part in diagnosing Covid19, and that image processing with DL algorithms can make this feasible. The systems in the studied papers are resource-hungry in both computation and data. Moreover, dedicated pipeline designs are required for lung segmentation, feature extraction, and the detection of specific lesion regions in the lung. These limitations motivated us to point toward a novel technique for detecting Covid19 with minimal resources in real-time collaboration with radiologists [125].

The CT scan-based Covid19 identification method is time-consuming and laborious, and it requires the participation of professionals. CT scanning is challenging for Covid19 patients since they must frequently be moved to the CT laboratory. The equipment must be thoroughly cleaned, and there is an increased risk of radiation exposure. Although CT is not indicated as a primary diagnostic technique, it has been used successfully as a supplementary tool for Covid19 mapping [64]. Like traditional X-ray imaging, CT scans use X-rays, a form of ionizing radiation, but they provide far higher contrast because a much more tightly controlled X-ray beam is used to produce cross-sectional images of the subject. CT is commonly recognized as the primary imaging modality for lung tissue and is regarded by doctors as the gold standard. The data engineer [120] has to study and observe doctors' internal findings and medical observations [167]. The radiologist has to gain structural and functional knowledge of the radiological images of Covid19, pneumonia, and normal patients. Imaging methods vary in cost, accessibility, and the level of medical skill needed to interpret the resulting images accurately [64]. X-ray and ultrasound are inexpensive, portable, and usable at the bedside. In contrast, CT scanners are essentially immobile, very costly, and available only within hospitals and medical clinics. Globally, ~30,000 CT machines exist [27]. Medical image analysis approaches are extremely sensitive to variations in image intensity. According to the study [27], an automatic modeling tool’s effectiveness demands that “the luminance of the same tissue must always be equal inside one picture but not differ with tissue position.” Almost all images in a dataset should therefore show the same tissue at the same intensity, both across images and within a single image.
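One simple way to reduce intensity differences between images from different scanners is min-max normalization. The sketch below is an illustrative preprocessing step chosen for this example, not the method described in [27]; the function name and pixel values are hypothetical, and min-max scaling alone does not guarantee that the same tissue maps to the same intensity.

```python
def min_max_normalize(image):
    """Rescale the pixel values of a 2-D image (list of rows) to the range [0, 1]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:                      # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]


# Two hypothetical scans of the same scene recorded on different intensity scales.
scan_a = [[0, 50], [100, 200]]
scan_b = [[1000, 1500], [2000, 3000]]

norm_a = min_max_normalize(scan_a)
norm_b = min_max_normalize(scan_b)

print(norm_a)
print(norm_b)
```

After normalization both scans share the same value range, so a network no longer has to learn the scanner-specific offset and scale.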

According to Hassan et al. [66], the information collected from clinicians to understand the differences among normal, Covid19, viral pneumonia, and bacterial pneumonia X-ray radiography images is as follows:

A) In Covid19-affected X-ray lung samples, lung hyperinflation is characterized by greater transparency of the lung region and fewer broncho-vascular markings than in a healthy lung. It is caused by trapped inhaled air, which can result from the small-airway obstruction that occurs in bronchitis and bronchiolitis produced by Covid19 infection. Overinflated lungs are larger than normal lungs, and the distance between the ribs is considerable.

B) A non-Covid X-ray image reveals normal opacity, regular broncho-vascular patterns, and a normal cardiac ratio with clear cardio-phrenic and costo-phrenic angles. The trachea is central, and gastric gas may be seen underneath the left ribcage. This X-ray image therefore illustrates a typical chest radiograph.

C) In viral pneumonia, X-ray images show larger, diffusely distributed opacities that give a ground-glass opacity (GGO) appearance, a feature usually seen in viral pneumonia.

D) In bacterial pneumonia, the X-ray image shows a circular cavity in the lower section of the right upper lobe. This suggests consolidation and enhanced broncho-vascular markings on the same side.

Another study [64] of medical observations reports that patchy infiltrates or opacities resembling viral pneumonia are common features of X-ray images of Covid19 patients. No anomalies are found in X-ray images in the initial phases of Covid19. Nevertheless, as the illness progresses, Covid19 eventually emerges as a distinctive unilateral patchy infiltration involving the middle, upper, and lower regions of the lung, sometimes with signs of consolidation. Given the advantages of ECG [20], including availability, dependability, affordability, real-time observation, and safety, a potentially efficient way to close the diagnostic gap for COVID19 has been developed. Coronavirus variant classification, in turn, can be implemented effectively using single-stranded genome sequencing. New tools developed in [21] provide portable, IoT-deployable, lightweight lung disease classification, including COVID19. Similarly, the cloud-based remote-diagnosis application implemented in [19] will help radiographers detect COVID19 and COPD illnesses in a single shot.

8.2 Challenges and future plan

This section discusses the challenges (gap analysis) for detecting and classifying Covid19 using DL models and offers necessary future plans to address those challenges. A gap analysis from the reviewed articles is depicted in Fig. 9.

8.2.1 Challenges

A lack of gold-standard data is one of the significant challenges [98, 167]:

Already annotated (bio-marked) images need to be removed from publicly downloaded datasets [9]; in addition, some images contain embedded text, which may hamper the network's predictions [150]. Ultrasound images tend to be noisy because sound waves penetrate organic tissue comparatively poorly compared to X-rays [64]. Complex data imbalances are found in the studied papers [13, 63, 98, 167]. Data imbalance often increases bias during the training stage of DL techniques. The scarcity of Covid19-class data leads to class imbalance problems.
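A common heuristic for mitigating class imbalance during training is to weight each class inversely to its frequency. The sketch below is a minimal illustration of that idea; the function name, the class labels, and the sample counts are hypothetical and are not drawn from any reviewed dataset.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical label distribution with a scarce Covid19 class.
labels = ["covid"] * 50 + ["pneumonia"] * 450 + ["normal"] * 500
weights = inverse_frequency_weights(labels)

print(weights)
```

The scarce class receives the largest weight, so errors on Covid19 samples contribute more to a weighted loss than errors on the majority classes. Weighting does not add information, however, and it is not a substitute for collecting more Covid19 samples.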

Based on the different types of pneumonia and other lung diseases:

Several authors have merged different types of pneumonia (almost 15) into a single class for classification against Covid19. This leads to confusion between multiple chronic lung diseases and to false predictions [37]. The manifestations (biomarkers) of Covid19 may also overlap with those of other pneumonia cases, such as IAVP, organizing pneumonia, and eosinophilic pneumonia [167].

Medical and radiography domain knowledge:

Problems in separating soft tissue, caused by the low contrast of X-ray images, have led some investigators to enhance contrast [3]. This is a pre-processing phase applied before the radiography-based findings are examined. Furthermore, lung segmentation of X-ray images is critical in diagnosing pulmonary lesions. Several segmentation algorithms relying on linear filtering/thresholding, rolling-ball filters, and, most recently, CNNs [64] have been presented in the literature.
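Histogram equalization is one widely used way to enhance contrast before further analysis. The sketch below is an illustrative implementation for 8-bit grayscale images stored as lists of rows; it is an assumed example of contrast enhancement, not the specific method used in [3], and the function name and pixel values are hypothetical.

```python
def equalize_histogram(image, levels=256):
    """Spread the grey levels of an integer-valued image over the full range."""
    flat = [p for row in image for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    # Cumulative distribution of grey levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)   # CDF value at the darkest grey level present
    n = len(flat)
    if n == cdf_min:                          # constant image: nothing to equalize
        return [row[:] for row in image]
    scale = (levels - 1) / (n - cdf_min)
    return [[round((cdf[p] - cdf_min) * scale) for p in row] for row in image]


# Hypothetical low-contrast patch whose grey levels differ by only a few units.
low_contrast = [[100, 101], [102, 103]]
enhanced = equalize_histogram(low_contrast)

print(enhanced)  # [[0, 85], [170, 255]]
```

The four nearly identical grey levels are spread over the full 0 to 255 range, which makes small differences between tissues easier to separate in later steps such as thresholding or segmentation.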

Accuracy:

To check the robustness of a DL model, it is recommended to train the architecture on more than ~5500 samples [87]. However, the systems developed in [7, 13, 121, 144, 170] achieved above 90% accuracy even though their Covid19 class was too small (fewer than 51 samples), which leads to an overfitted DL model. Apart from accuracy, other performance metrics (sensitivity, specificity, F1-score, recall, precision, etc.) need to be considered when testing the DL model. Performance evaluation metrics were used in the various studied papers. A confusion matrix is a method of measuring an algorithm’s effectiveness. It allows us to see whether the classifier is confused when distinguishing between the classes, and it is particularly useful for calculating accuracy, recall, precision, specificity, and AUC-ROC curves. Mathematical details of the benchmark performance metrics are as follows:

- True Positive (TP): The model predicted a positive outcome, and the prediction was correct.

- True Negative (TN): The model predicted a negative outcome, and the prediction was correct.

- False Positive (FP), also known as Type 1 Error: The model predicted a positive outcome, but the prediction was incorrect.

- False Negative (FN), also known as Type 2 Error: The model predicted a negative outcome, but the prediction was incorrect.

a) Accuracy: What proportion of all instances (both positive and negative) were predicted correctly?

Accuracy = (TP + TN)/(TP + FP + TN + FN)

b) Precision: Of the instances predicted as positive, how many were actually positive?

Precision = TP/(TP + FP)

c) Recall (Sensitivity): Of the actual positive instances, how many did the model predict correctly?

Recall = TP/(TP + FN)

d) F-score (F1-score/F-Measure): Represents the harmonic mean of precision and recall.

F1-score = 2*(Recall*Precision)/(Recall + Precision)

e) Specificity: The proportion of actual negatives that were predicted as negative (TN).

Specificity = TN/(TN + FP)
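The formulas above can be computed directly from the four confusion-matrix counts. The following minimal sketch does so for binary classification; the function name and the example counts are hypothetical, and the zero-denominator fallback of 0.0 is a convention assumed for this example.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute the metrics defined above from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    if precision + recall:
        f1 = 2 * (recall * precision) / (recall + precision)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical balanced test set.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)

# Hypothetical imbalanced test set where the model predicts "negative" for every sample.
always_negative = classification_metrics(tp=0, tn=950, fp=0, fn=50)

print(metrics)
print(always_negative)
```

In the second case the accuracy is 0.95 although not a single positive case is detected (recall of 0), which illustrates why accuracy alone is insufficient when the Covid19 class is small.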

Custom DML models:

DarkCovidNet identifies Covid19 quite well for binary classification. However, there is room for improvement in 3-class classification. This approach requires additional work to distinguish Covid19 from several other lung infections [2]. Also, for 3-class classification, the low accuracy for Covid19 cases is due to the limited number of training images. In [2], the hybrid model manually concatenates the convolutional layers of Xception and ResNet, increasing the model’s complexity. Future studies are expected to design significantly smaller models without sacrificing accuracy [2]. Because EfficientNet has a low computational cost in terms of latency and memory, it is feasible to use higher-resolution source images and examine the effect of increasing the input resolution on model quality [150]. The custom QIS-Net model is slower than the FC layers [93]. Consequently, there is an imbalance between segmentation using QIS-Net and classification at the FC layers rather than extracting a (32 × 1).

Hardware resources & computation cost: