Abstract

Salient object detection (SOD) techniques detect and segment the objects in an image that attract human visual attention. SOD is a fundamental computer vision problem, and research interest in it has grown over the years. Many SOD models and applications have been proposed, but a deep understanding of the open issues and achievements is still lacking. This paper provides a comprehensive study of recent SOD techniques and reviews them from several perspectives. The first perspective categorizes image segmentation techniques as machine learning-based or deep learning-based. The second classifies them into supervised and unsupervised learning techniques. The third distinguishes manual, semi-automatic, and fully automatic approaches. The paper then summarizes the datasets used for SOD. Finally, SOD models are analyzed and their results compared.



1 Introduction

The first stage in image classification models is the detection and segmentation of salient objects [1]. Humans can identify salient objects (the center of attention) visually, and they do so more accurately and quickly than any machine [2]. Salient object detection (SOD) aims to give machines this ability. SOD is important in computer vision applications because it can reduce computational complexity, since subsequent processing can focus on the salient regions. In computer vision, SOD is interpreted as a two-stage process: the salient object is first detected in the image, and then the object's region is segmented [3]. SOD has been widely used as a preprocessing stage in computer vision applications such as image understanding, object detection, and semantic segmentation [4]. In recent years, image-sharing communities such as Instagram have spread very rapidly. Video content has grown along with them, and a video is essentially a sequence of image frames. Image recognition, and SOD in particular, has therefore become a key research area. The artificial intelligence methods used in medical image segmentation fall into two kinds: traditional machine learning techniques and deep learning techniques [5]. From the machine learning perspective, medical image segmentation algorithms are classified into two types: supervised and unsupervised learning [6]. Segmentation techniques are also divided into three categories: manual, semi-automatic, and fully automatic approaches [7]. For MRI and CT medical data, however, segmentation methods are grouped into four classes: region growing, active contour, edge detection, and hybrid techniques [8, 9].

Researchers have studied automatic target identification in many fields, such as medical diagnosis, remote sensing, agriculture, and transportation, to reduce human error and save effort and time [10]. Object detection is of great importance in diagnosing many diseases, such as prostate cancer [11], breast cancer [12], bone diseases [13], and dental diseases [14]. SOD has also attracted researchers' attention in remote sensing. In that field, object detection is challenging because of variations in target size and type, wide coverage, vertical (overhead) views, and complex backgrounds. In agriculture, plant diseases cause heavy damage and lead to significant crop losses.

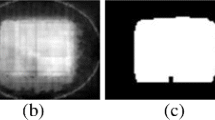

Deep learning-based SOD models offer a robust tool and accurate results for plant disease detection. Intelligent transportation systems can play a significant role in developing the transportation field, since various artificial intelligence techniques can extract the desired information from camera images. Samples of salient object detection from several references are presented in Fig. 1. Popular datasets used for SOD include DUTS [15], SBU [16], ISTD [17], UCF [18], SOC [19], DUT-OMRON [20], HKU-IS [21], ECSSD [22], PASCAL-S [23], and SOD [24].

The models used for salient object detection are divided into two types, feature-based models and deep learning models, as illustrated in Fig. 2. Feature-based models are the traditional SOD methods, which rely on hand-crafted saliency cues. Contrast is one such cue: it measures the uniqueness of each patch or pixel in the image with respect to its local or global context. However, these methods fail to retain image details and cannot locate very large salient objects [30]. Traditional methods can generally be categorized in two ways, based on the types of features they exploit [3].

Region-based vs. block-based analysis

Block-based analysis (i.e., of patches and pixels) was an early approach to salient object detection. Region-based analysis became widespread later, with the development of superpixel algorithms [31].

Extrinsic cues vs. intrinsic cues

Intrinsic and extrinsic cues differ in where their attributes come from. Cues that use attributes from a single image are called intrinsic cues. Cues that draw on information beyond the input image to make salient object detection easier are called extrinsic cues. Examples of such information are a collection of similar images, depth maps, statistical information, or user annotations.

Deep learning-based models have contributed significantly to the improvement of salient object detection. Many researchers have recently turned to deep learning-based techniques to solve SOD problems, which has greatly improved performance. Convolutional neural networks (CNNs) are among the most widely used deep learning models and are discussed in detail in Section 5 [32, 33].

The salient objects in an image are first detected and their features extracted. A classification model then assigns a class label to each object. Suitable models include random forest, SVM, logistic regression, KNN, and deep learning models. Random forest (RF) is a tree-based method that makes decisions by combining the outputs of multiple decision trees. The techniques in [34,35,36,37,38] use RF for classification. The pseudo-code of RF is shown below, and Fig. 3 illustrates the idea of the algorithm [39].

Random Forest pseudo-code

1- Randomly select "m" features from the total "k" features, where m << k.

2- Among the "m" features, calculate the node "n" using the best split point.

3- Using the best split, divide the node into daughter nodes.

4- Repeat steps 1 to 3 until the number of nodes reaches "X".

5- Repeat steps 1 to 4 "t" times to build a forest of "t" trees.

6- Predict the outcome with each decision tree.

7- Vote over the predicted targets and select the prediction with the most votes as the final result.
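The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used in the cited works. Each tree is grown on a bootstrap sample of the training rows. At each node, "m" randomly chosen features are considered, and the split with the lowest Gini impurity is kept. A depth limit (`max_depth`) stands in for the node budget "X". The function names are hypothetical, and class labels are assumed not to be tuples, because tuples mark internal nodes here.

```python
import random
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y, features):
    """Step 2: return (feature, threshold) with the lowest weighted Gini, or None."""
    best, best_score = None, float("inf")
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(X, y, m, max_depth, rng):
    """Steps 1-4: choose m random features per node, split, and recurse."""
    if max_depth == 0 or len(set(y)) == 1:
        return Counter(y).most_common(1)[0][0]            # leaf: majority label
    features = rng.sample(range(len(X[0])), m)             # step 1: random feature subset
    split = best_split(X, y, features)                     # step 2: best split point
    if split is None:
        return Counter(y).most_common(1)[0][0]
    f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]   # step 3: daughter nodes
    right = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t,
            build_tree([X[i] for i in left], [y[i] for i in left], m, max_depth - 1, rng),
            build_tree([X[i] for i in right], [y[i] for i in right], m, max_depth - 1, rng))

def predict_tree(node, x):
    """Walk from the root to a leaf; internal nodes are (feature, threshold, left, right)."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node

def random_forest(X, y, t=25, m=1, max_depth=3, seed=0):
    """Step 5: grow t trees, each on a bootstrap sample of the training rows."""
    rng = random.Random(seed)
    forest = []
    for _ in range(t):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], m, max_depth, rng))
    return forest

def predict_forest(forest, x):
    """Steps 6-7: every tree predicts, and the most voted label wins."""
    votes = [predict_tree(tree, x) for tree in forest]
    return Counter(votes).most_common(1)[0][0]
```

For real workloads, a library implementation such as scikit-learn's `RandomForestClassifier` is the usual choice.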

Support vector machine (SVM) is a non-parametric supervised machine learning model for classification and regression. There are two types of SVM. Linear SVM is used for linearly separable data, i.e., data that a single straight line (a hyperplane in higher dimensions) can split into two classes. Non-linear SVM is used for data that cannot be separated by a straight line; it typically relies on a kernel function that maps the data into a space where a separating hyperplane exists. The techniques in [34, 40, 38, 41] use SVM for classification. The SVM algorithm is illustrated in Fig. 4 [42].

The objective is to select the maximum-margin hyperplane, which is done in two steps:

1- Generate hyperplanes that separate the classes well.

2- Select the hyperplane with the maximum distance (margin) to the nearest data points of either class.
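A linear SVM can be sketched by minimizing the L2-regularized hinge loss with stochastic subgradient descent. This is one of several ways to approach the maximum-margin hyperplane. It is not the solver used in the cited works. Labels are assumed to be -1/+1, and `lam`, `lr`, and `epochs` are illustrative values.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.01, epochs=100):
    """Stochastic subgradient descent on  lam/2 * ||w||^2 + hinge loss.

    X: list of feature vectors; y: labels in {-1, +1}.
    Returns (w, b) describing the hyperplane w . x + b = 0.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Inside the margin or misclassified: hinge term contributes -yi * xi.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Correct side with enough margin: only the regularizer shrinks w.
                w = [wj - lr * lam * wj for wj in w]
    return w, b

def predict_svm(w, b, x):
    """Classify x by the side of the hyperplane it falls on."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```

Non-linear SVMs replace the dot product with a kernel and are normally trained with dedicated solvers (for example, libsvm via scikit-learn's `SVC`).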

Logistic regression is a supervised machine learning algorithm used to estimate the probability of a target variable. It uses the sigmoid (logistic) function. There are three types of logistic regression. In binomial (binary) logistic regression, the dependent variable has two possible values, 0 or 1, such as yes or no, or success or failure. Multinomial logistic regression handles more than two unordered values, such as type A, B, or C. Ordinal logistic regression handles more than two ordered values, such as good, very good, and excellent, scored as 1, 2, and 3. The logistic function is the core of the logistic regression technique and is defined in Eq. 1:

\[ \sigma(y) = \frac{1}{1 + e^{-y}} \tag{1} \]

where y = w0 + w1·x1 + w2·x2 + … + wn·xn. The regression coefficients w0, w1, w2, ..., wn are estimated by maximum likelihood estimation, and x1, x2, x3, ..., xn are the features [43]. The techniques in [38, 44,45,46] use logistic regression for classification.
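Maximum likelihood estimation of w0, ..., wn can be sketched with plain gradient ascent on the log-likelihood of a binary logistic model. The learning rate and iteration count are arbitrary illustrative values. Practical implementations use faster solvers such as Newton-type methods or L-BFGS.

```python
import math

def sigmoid(y):
    """Logistic function of Eq. 1."""
    return 1.0 / (1.0 + math.exp(-y))

def fit_logistic(X, t, lr=0.1, iters=2000):
    """Estimate [w0, w1, ..., wn] by gradient ascent on the mean log-likelihood.

    X: list of feature vectors [x1, ..., xn]; t: binary targets in {0, 1}.
    """
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(iters):
        grad = [0.0] * len(w)
        for xi, ti in zip(X, t):
            p = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))
            err = ti - p                       # derivative of the log-likelihood w.r.t. y
            grad[0] += err                     # intercept w0
            for j, xj in enumerate(xi, start=1):
                grad[j] += err * xj
        w = [wj + lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w

def predict_proba(w, x):
    """Probability that x belongs to class 1."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))
```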

K-nearest neighbors (KNN) is a supervised machine learning technique for solving regression and classification problems. The techniques in [34, 47, 48, 40] use KNN for classification. The pseudo-code of KNN is shown below [49].

KNN pseudo-code

1. Load the data.

2. Initialize the value of K.

3. For each sample in the test data:

3.1. Calculate the distance between the test sample and each row of the training data.

3.2. Sort the distances in ascending order.

3.3. Pick the top K rows.

3.4. Pick the most frequent class among these rows.

3.5. Return this class label.
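The KNN pseudo-code maps almost line by line onto a short Python function. It assumes Euclidean distance, which is a common choice, although any metric can be substituted. The name `knn_predict` is hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, test_X, k=3):
    """Label each test sample by majority vote of its k nearest training rows."""
    labels = []
    for x in test_X:                                          # step 3
        dists = [(math.dist(x, row), lab)                     # 3.1 Euclidean distance
                 for row, lab in zip(train_X, train_y)]
        dists.sort(key=lambda d: d[0])                        # 3.2 ascending order
        top_k = [lab for _, lab in dists[:k]]                 # 3.3 top K rows
        labels.append(Counter(top_k).most_common(1)[0][0])    # 3.4 most frequent class
    return labels                                             # 3.5 class labels
```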

Traditional classification methods have been used for many problems, but their classification accuracy is often unsatisfactory. Deep learning models, by contrast, have a powerful learning ability. They integrate feature extraction and classification into a single model, which improves classification accuracy. The most popular deep neural network model for image classification is the convolutional neural network (CNN) [50]. The techniques in [51,52,53,54] use CNNs for classification.

1.1 The list of contributions

This paper presents the following contributions:

- A comprehensive review of salient object detection models is presented. It includes many methods not covered in previous work, such as weakly supervised, omni-supervised, and transformer-based methods.
- In addition to semantic and instance segmentation, panoptic segmentation and the recent panoramic panoptic segmentation are presented.
- Visual saliency detection in adverse conditions, such as at nighttime, is discussed.
- Popular datasets for salient object detection are discussed in detail.
- The F-measure, accuracy, and S-measure of 57 approaches are compared, together with a computational complexity comparison in terms of the elapsed time of each method.
- A comparative study in terms of model, dataset, execution time, and results is introduced.

The rest of the paper is organized as follows. Section 2 presents the popular datasets for SOD. Section 3 describes the evaluation metrics. Section 4 discusses feature-based approaches. Section 5 reviews deep learning-based models. Section 6 presents the performance evaluation of 41 models. Finally, Section 7 concludes the paper.

2 Datasets

Many image datasets are used for SOD. DUTS is a large-scale dataset with challenging scenarios for salient object detection. It was collected from the ImageNet DET dataset, which contains images of mammals and vehicles. DUTS includes 15,572 images: 10,553 for training and 5,019 for testing. SOC contains images of salient objects from everyday object categories in real-world scenes. It provides 6,000 images: 3,600 for training, 1,200 for validation, and 1,200 for testing. HKU-IS consists of 4,447 challenging images with pixel-level annotations of salient objects. Most of these images contain multiple salient objects or have low contrast. PASCAL-S consists of 850 images. It is used to evaluate model performance on images with cluttered backgrounds and multiple objects in the scene. The Complex Scene Saliency Dataset (CSSD) [55] contains only 200 images. Its extension, ECSSD, includes 1,000 images with complex scenes collected from the internet. Finally, DUT-OMRON consists of 5,168 high-quality images, each with one or more salient objects and complex backgrounds.

Many video datasets are also used for SOD. FBMS-59 includes 59 videos for motion segmentation. DAVIS-2016 includes 50 videos for video segmentation, with pixel-wise annotations for every frame. VOS includes 200 indoor and outdoor videos in two subsets. VOS-E contains 97 easy videos with obvious foreground objects, and VOS-N contains 103 normal videos with complex foreground objects. Table 1 gives the details of the SOD datasets.

3 Evaluation metrics

SOD models are evaluated using the mean absolute error (MAE), the F-measure (F1 score), and the structure measure (S-measure). The mathematical background of each metric is described below.

Mean absolute error (MAE)

MAE is a loss function often used to evaluate vector-to-vector (also known as multivariate) regression models. It is defined in Eq. 2:

\[ \mathrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \lvert x_i - y_i \rvert \tag{2} \]

where N is the length of the vectors A = {x1, x2, x3, ..., xN} and A* = {y1, y2, y3, ..., yN} [64].
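In SOD, MAE is usually computed pixel by pixel between a saliency map and its ground-truth mask. The sketch below applies Eq. 2 to flattened maps. It assumes both maps are lists of lists with values already scaled to [0, 1]. The helper names are hypothetical.

```python
def mae(pred, gt):
    """Mean absolute error (Eq. 2) between two equal-length sequences."""
    if len(pred) != len(gt):
        raise ValueError("vectors must have the same length N")
    return sum(abs(x - y) for x, y in zip(pred, gt)) / len(pred)

def mae_map(saliency, mask):
    """Flatten a 2-D saliency map and ground-truth mask, then apply mae()."""
    return mae([v for row in saliency for v in row], [v for row in mask for v in row])
```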

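F-measure

The F-measure, also called the F1 score, is used to assess algorithm performance, especially when the dataset is imbalanced. It is the harmonic mean of precision and recall. The confusion matrix for an imbalanced dataset is shown in Table 2 [65].

Precision and recall are required to calculate the F-measure and are given in Eq. 3 and Eq. 4:

\[ \mathrm{Precision} = \frac{TP}{TP + FP} \tag{3} \]

\[ \mathrm{Recall} = \frac{TP}{TP + FN} \tag{4} \]

where TP, FP, and FN are the numbers of true positives, false positives, and false negatives. The F-measure is then calculated as in Eq. 5:

\[ F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{5} \]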
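The sketch below computes Eq. 3 to Eq. 5 for a binarized saliency map against a binary ground truth, both flattened into 0/1 lists. The fixed binarization threshold of 0.5 is an assumption. Benchmarks often sweep thresholds or use an adaptive one. Many SOD benchmarks also report F_β with β² = 0.3 instead of F1, which weights precision more heavily.

```python
def binarize(saliency, threshold=0.5):
    """Turn a flattened saliency map into a 0/1 mask (threshold is an assumption)."""
    return [1 if v >= threshold else 0 for v in saliency]

def precision_recall_f1(pred, gt):
    """Precision (Eq. 3), recall (Eq. 4), and F1 (Eq. 5) for flattened 0/1 masks."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```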

S-measure

The structure measure (S-measure) simultaneously assesses the object-aware and region-aware structural similarity between the ground-truth (GT) map and the saliency map (SM). Its formulation is given in Eq. 6 [66].

Here, a = {a1, a2, ..., aN} and b = {b1, b2, ..., bN} are the pixel values of SM and GT, respectively. \( \overline{a} \), \( \overline{b} \), \( \sigma_a \), and \( \sigma_b \) are the means and standard deviations of a and b, and \( \sigma_{ab} \) is the covariance between a and b.

4 Feature-based approaches for SOD

Feature-based models are grouped into three subgroups: block-based models with intrinsic cues, region-based models with intrinsic cues, and models with extrinsic cues (both region- and block-based) [3]. Feature descriptors are also presented.

4.1 Block-based methods with intrinsic cues

This subsection reviews salient object detection methods that use intrinsic cues from blocks. Salient object detection has been defined as detecting rarity, uniqueness, or distinctiveness in a scene [67], with rarity calculated as the pixel-based center-surround contrast. Approaches based on patches or pixels have two shortcomings. (i) High-contrast edges usually stand out, which hinders detection of the salient object itself. (ii) When large blocks are used, the boundary of the salient object is not well preserved [3]. Region-based methods were developed to overcome these problems and are discussed in the next subsection.

4.2 Region-based models with intrinsic cues

Region-based segmentation techniques find regions directly. There are two main types of region-based extraction techniques. The first is region growing, which starts from a seed pixel and gradually merges neighboring pixels with similar properties to form the required segmentation region. The second is split and merge (the quadtree method). It starts from the whole image, recursively splits it into quadrants until each quadrant is homogeneous, and then merges adjacent similar regions [68, 69].
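Region growing can be sketched as a breadth-first search from a seed pixel. The homogeneity test used here is an assumption chosen for simplicity: a 4-connected neighbor joins the region if its intensity is within `tol` of the seed intensity. Other criteria, such as comparison with the running region mean, are also common. The function name is hypothetical.

```python
from collections import deque

def region_grow(img, seed, tol):
    """Grow a region from `seed` (row, col) over 4-connected pixels whose intensity
    differs from the seed intensity by at most `tol`. Returns a set of (row, col)."""
    rows, cols = len(img), len(img[0])
    seed_val = img[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(img[nr][nc] - seed_val) <= tol):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region
```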

Region-based models for salient object detection use cues from image regions. The regions are created by techniques such as mean shift [70, 71], TurboPixels [72], graph-based segmentation [73,74,75], or simple linear iterative clustering (SLIC) [76, 77].

In [78], the saliency score of a region is the average score of its segmented patches and the pixels it contains, computed from multi-scale contrast. Gargi Srivastava and Rajeev Srivastava [79] compute a background score for each region from the difference between the region's feature vector and the feature vector of the image boundary.

4.2.1 Mean Shift algorithm

Mean shift is a non-parametric clustering algorithm used in unsupervised learning. It makes no assumptions about the number or shape of the clusters. Each data point is iteratively shifted toward the region of highest data density. Points that converge to the same density peak (mode) form a cluster. The algorithm needs only one parameter, the bandwidth, and the number of clusters is then determined automatically. The multivariate kernel K(a) is calculated as in Eq. 7 [80].

In Eq. 7, fd and sd denote the feature and spatial domains, respectively. hf and hs are the corresponding kernel bandwidths, set according to the feature vector. n is a normalization constant, and KX is the Epanechnikov kernel shown in Eq. 8.

The mean shift algorithm is used in [81,82,83,84]. Fatemi et al. [81] use the color space in the feature extraction step and create clusters from the extracted features. The mean shift algorithm then detects the object's pixels and separates them from the background. Xia et al. [82] use a region-based algorithm for salient object detection. First, the image is segmented with a superpixel algorithm and a feature vector is extracted for each region. Mean shift with ten different bandwidths then produces ten clustered maps, and ten saliency maps are computed from them. Finally, an XGBoost model merges the ten saliency maps into a single saliency map. Fatemi et al. [83] also use mean shift for object detection. The image is segmented, and five features are obtained for each segment from its border distance, segment distance, and color. The segments are then clustered by mean shift according to the similarity of their features. The output image for object detection is created by assigning a saliency score to each cluster. Shen and Wu [84] used mean shift for image segmentation. They compute the average saliency of each segment and the mean saliency of the entire image. A segment is considered foreground if its average saliency is greater than twice the image's mean saliency.
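A minimal mean shift sketch follows. It assumes points are given as equal-length numeric lists. Each point moves to the mean of the data inside a window of radius equal to the bandwidth. With an Epanechnikov kernel, each mean shift step reduces to exactly this move. The tolerance, the iteration cap, and the rule that merges modes closer than half a bandwidth are illustrative choices. The joint spatial/feature kernel of Eq. 7 is not reproduced.

```python
import math

def mean_shift(points, bandwidth, tol=1e-6, max_iter=300):
    """Shift each point to the mean of its neighbors within `bandwidth` until it
    stops moving; points that reach the same mode receive the same label."""
    modes, labels = [], []
    for p in points:
        x = list(p)
        for _ in range(max_iter):
            window = [q for q in points if math.dist(x, q) <= bandwidth]
            new_x = [sum(c) / len(window) for c in zip(*window)]
            shifted = math.dist(new_x, x)
            x = new_x
            if shifted < tol:
                break
        # Merge with an existing mode if it is close enough, otherwise start a new one.
        for i, m in enumerate(modes):
            if math.dist(m, x) < bandwidth / 2:
                labels.append(i)
                break
        else:
            modes.append(x)
            labels.append(len(modes) - 1)
    return modes, labels
```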

4.2.2 Simple linear iterative clustering (SLIC)

The SLIC algorithm clusters pixels into superpixels based on their color similarity and their proximity in the image plane. Clustering is performed in a five-dimensional space formed by the three CIELAB color components and the two pixel coordinates. Color distances and spatial distances have different ranges. The spatial distances must therefore be normalized before the Euclidean distance can be used in this five-dimensional space [85].
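The normalization mentioned above can be made concrete with the distance measure of the SLIC paper by Achanta et al.: D = sqrt(d_c² + (d_s / S)² · m²). Here d_c is the CIELAB color distance and d_s the spatial distance. S = sqrt(N / K) is the grid interval for N pixels and K superpixels, and m is a compactness weight. The sketch covers only this distance. The full iterative assignment and update loop is omitted, and `m = 10` is an illustrative default.

```python
import math

def slic_distance(center, pixel, S, m=10.0):
    """SLIC distance between a cluster center and a pixel.

    Both are (l, a, b, x, y) tuples: CIELAB color plus image coordinates.
    S is the grid interval sqrt(N / K); m weights spatial compactness.
    """
    l1, a1, b1, x1, y1 = center
    l2, a2, b2, x2, y2 = pixel
    d_color = math.sqrt((l1 - l2) ** 2 + (a1 - a2) ** 2 + (b1 - b2) ** 2)
    d_space = math.sqrt((x1 - x2) ** 2 + (y1 - y2) ** 2)
    # Dividing d_space by S puts it on a scale comparable to the color term.
    return math.sqrt(d_color ** 2 + (d_space / S) ** 2 * m ** 2)
```

A larger m produces more compact, grid-like superpixels. A smaller m lets superpixels follow color boundaries more closely.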

In 2015, Tong et al. [86] used the SLIC algorithm to generate superpixels and construct a weak saliency map. An SVM classifier was then used to detect salient pixels.

4.2.3 Multi-scale contrast

Contrast is the most widely used local feature for salient object detection because it mimics the receptive fields of the human visual system. The size of the salient object is not known in advance, so contrast is usually calculated at multiple scales. The multi-scale contrast feature is defined as in Eq. 9 [87]:

\[ f(y, I) = \sum_{m=1}^{M} \sum_{y' \in W(y)} \left\lVert I^m(y) - I^m(y') \right\rVert^2 \tag{9} \]

where \( I^m \) is the m-th level image in the Gaussian image pyramid, M is the number of pyramid levels, and W(y) is the window around pixel y.
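The sketch below follows the structure of the multi-scale contrast feature under simplifying assumptions. The input is a grayscale image stored as a list of lists. Each pyramid level is made by 2×2 box averaging rather than true Gaussian smoothing. W(y) is a square window of radius 1. Each level's contrast map is mapped back to full resolution by nearest-neighbor lookup and accumulated. All function names are hypothetical.

```python
def downsample(img):
    """Halve resolution by averaging 2x2 blocks (a crude stand-in for Gaussian smoothing)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2*r][2*c] + img[2*r+1][2*c] + img[2*r][2*c+1] + img[2*r+1][2*c+1]) / 4
             for c in range(w)] for r in range(h)]

def local_contrast(img, radius=1):
    """Sum of squared differences between each pixel and the pixels in its window."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for rr in range(max(0, r - radius), min(h, r + radius + 1)):
                for cc in range(max(0, c - radius), min(w, c + radius + 1)):
                    out[r][c] += (img[r][c] - img[rr][cc]) ** 2
    return out

def multiscale_contrast(img, levels=3, radius=1):
    """Accumulate local contrast over `levels` pyramid levels at full resolution."""
    h, w = len(img), len(img[0])
    total = [[0.0] * w for _ in range(h)]
    level_img = img
    for m in range(levels):
        contrast = local_contrast(level_img, radius)
        for r in range(h):
            for c in range(w):
                # Pixel (r, c) corresponds to (r >> m, c >> m) at pyramid level m.
                rr = min(r >> m, len(contrast) - 1)
                cc = min(c >> m, len(contrast[0]) - 1)
                total[r][c] += contrast[rr][cc]
        if len(level_img) < 2 or len(level_img[0]) < 2:
            break
        level_img = downsample(level_img)
    return total
```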

4.2.4 Frequency and spatial domain analysis

Frequency-domain analysis makes it possible to manipulate the global information in the input image and is based on the Fourier transform. Spatial-domain analysis, in contrast, deals with salient pixels and local information in the image. Li et al. [88] proposed a saliency detection model that combines global information from the frequency domain with local information from the spatial domain. In the frequency domain, the model describes the non-salient regions rather than the salient ones. In the spatial domain, the informative regions are enhanced using a center-surround mechanism. Finally, the saliency map is produced by combining the outputs of the two analysis channels.

4.2.5 Window composition

Window composition is another salient object detection technique. The image is segmented, and the composition of each window is measured. A window's saliency score indicates how likely it is to contain a salient object. The score function is applied to windows of different sizes across the whole image, and the windows with the maximum score are detected as salient objects. This detector works on the whole original image and searches the window space directly. It is therefore more likely to achieve good results than approaches that rely on an intermediate saliency map [89].

4.3 Models with extrinsic cues

This subsection discusses the third subgroup: models that adopt extrinsic cues. In addition to the visual cues observed in a single input image, extrinsic cues may be derived from several sources. These include the ground-truth annotations of training images, video sequences, similar images, or a group of input images containing common salient objects [3].

4.3.1 The detection of salient objects with similar images

The amount of visual content available on the web keeps growing. In recent years, salient object detection has therefore been studied using images that are visually similar to the input image. In general, given an input image O, a set of S similar images \( C_O = \{O_s\}_{s=1}^{S} \) is first retrieved from a large image collection C. Salient object detection on O can then be aided by examining these similar images.

4.3.2 Co-saliency object detection

Co-salient object detection (CoSOD) is an emerging and growing branch of SOD. Instead of computing saliency in a single image, CoSOD algorithms detect the salient objects shared by a group of input images \( \{X_m\}_{m=1}^{Y} \). These objects may belong to the same category and share a similar visual appearance, or they may be the same object seen from different viewpoints. The main distinguishing feature of co-saliency detection algorithms is that their input is a collection of images. Traditional salient object detection models, by contrast, need only a single input image [89, 90]. In 2020, Fan et al. [91] built CoSOD3k, a high-quality dataset of co-salient objects whose similarities are at the conceptual or semantic level. CoSOD3k is the most challenging co-salient object detection dataset so far. It consists of 160 groups and 3,316 images, annotated with categories, bounding boxes, and object-level and instance-level annotations. The authors also provide a comprehensive study that summarizes 34 cutting-edge algorithms. Nineteen of these algorithms are benchmarked on CoSOD3k and on four other existing datasets.

4.4 Feature descriptor

The development of feature descriptors has attracted considerable research attention. A feature descriptor algorithm takes an image as input and outputs feature vectors (feature descriptors) [92]. Commonly used feature descriptors include the histogram of oriented gradients (HOG) [93], the scale-invariant feature transform (SIFT) [94, 95], speeded-up robust features (SURF) [96], the gray-level co-occurrence matrix (GLCM) [97, 98], and local binary patterns (LBP) [99, 100]. HOG, SIFT, SURF, and LBP are reviewed below, together with the binary descriptors BRIEF and ORB.

4.4.1 Histogram of oriented gradients (HOG)

The HOG descriptor is a well-known appearance and shape descriptor. Dalal and Triggs introduced it in 2005 to detect pedestrians in images. The idea of HOG is to compute the gradient orientation locally at each pixel and extract normalized histogram features over a dense grid of overlapping blocks [101]. The steps of the HOG algorithm are illustrated in Fig. 5. The image is divided into blocks, and each block is divided into cells. For each cell, the gradient is computed from horizontal and vertical derivatives taken over neighboring pixels, as in Eq. 10 and Eq. 11:

\[ G_x(x, y) = L(x + 1, y) - L(x - 1, y) \tag{10} \]

\[ G_y(x, y) = L(x, y + 1) - L(x, y - 1) \tag{11} \]

where L(x, y) is the image pixel at location (x, y), Gx is the horizontal gradient, and Gy is the vertical gradient. The second step computes the gradient magnitude and orientation of each pixel, as in Eq. 12 and Eq. 13:

\[ G(x, y) = \sqrt{G_x(x, y)^2 + G_y(x, y)^2} \tag{12} \]

\[ \theta(x, y) = \arctan\left(\frac{G_y(x, y)}{G_x(x, y)}\right) \tag{13} \]

The third step builds a histogram of the pixel orientations in each cell. Depending on the implementation, orientations range from 0° to 180° or from 0° to 360°. A histogram shows the frequency distribution of continuous data: the x-axis holds the orientation bins and the y-axis the frequency. In Dalal and Triggs' formulation, each pixel's vote is weighted by its gradient magnitude.

In the fourth step, the cell histograms within each block are normalized. Finally, all histogram values in the detection window are concatenated to obtain the HOG features [102].
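A simplified HOG sketch is shown below for a grayscale image stored as a list of lists. It computes gradients with the centered differences of Eq. 10 and Eq. 11, then magnitude and unsigned orientation (0° to 180°). It builds 9-bin cell histograms with magnitude-weighted votes and applies L2 normalization over overlapping blocks of 2×2 cells. Hard bin assignment and the 4×4-pixel cells are simplifications. Dalal and Triggs interpolate votes between bins and use 8×8-pixel cells. The function names are hypothetical.

```python
import math

def gradients(img):
    """Centered differences (Eq. 10, 11); border pixels keep a zero gradient."""
    h, w = len(img), len(img[0])
    gx = [[0.0] * w for _ in range(h)]
    gy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx[y][x] = img[y][x + 1] - img[y][x - 1]
            gy[y][x] = img[y + 1][x] - img[y - 1][x]
    return gx, gy

def hog(img, cell=4, bins=9, block=2):
    """Cell histograms of unsigned orientation, L2-normalized per overlapping block."""
    gx, gy = gradients(img)
    n_cy, n_cx = len(img) // cell, len(img[0]) // cell
    hist = [[[0.0] * bins for _ in range(n_cx)] for _ in range(n_cy)]
    for y in range(n_cy * cell):
        for x in range(n_cx * cell):
            mag = math.hypot(gx[y][x], gy[y][x])                       # Eq. 12
            ang = math.degrees(math.atan2(gy[y][x], gx[y][x])) % 180   # Eq. 13, unsigned
            b = min(int(ang / (180 / bins)), bins - 1)
            hist[y // cell][x // cell][b] += mag                       # magnitude-weighted vote
    features = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = [val for cy in range(by, by + block) for cx in range(bx, bx + block)
                 for val in hist[cy][cx]]
            norm = math.sqrt(sum(val * val for val in v) + 1e-12)
            features.extend(val / norm for val in v)
    return features
```

scikit-image provides a full implementation as `skimage.feature.hog`.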

4.4.2 Scale-invariant feature transform (SIFT)

SIFT is an algorithm for detecting and describing local features in images. David Lowe introduced it in 1999. SIFT transforms an image into feature vectors that can be used to recognize objects. The SIFT descriptor is computed in five stages [103]:

Detection of scale-space extrema

In the first step, several octaves of the input image are generated. Progressively blurred images are produced from the original image. The image is then resized to half its size, and blurred images are generated again. This process is repeated for each octave. Each blurred image is computed as in Eq. 14:

\[ B(a, b, \sigma) = G(a, b, \sigma) * I(a, b) \tag{14} \]

where B is the blurred image, G is the Gaussian blur operator, I is the input image, and a and b are the location coordinates. σ is the scale parameter, i.e., the amount of blur: the greater σ, the stronger the blur. The operator * denotes convolution, which applies the Gaussian blur G to the image I at location (a, b) [104].

LoG approximations

In the second step of SIFT, the difference of Gaussians (DoG) is used to locate keypoint candidates in scale space. The DoG is an approximation of the Laplacian of Gaussian (LoG). The DoG image D(a, b, σ) is the difference between two blurred images whose scales differ by a constant multiplicative factor h, as in Eq. 15 [105]:

\[ D(a, b, \sigma) = \left(G(a, b, h\sigma) - G(a, b, \sigma)\right) * I(a, b) = B(a, b, h\sigma) - B(a, b, \sigma) \tag{15} \]

Keypoint localization & filtering

In the third step, the local minima and maxima of the DoG images are detected. Each pixel is compared with its eight neighbors in the current scale and its nine neighbors in each of the scales below and above. If the pixel's value is greater or smaller than all 26 of these neighbors, the point is an extremum. The number of keypoints is then reduced by removing low-contrast keypoints and keypoints located on edges, using the computation illustrated in Eq. 16 [105].
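The DoG subtraction of Eq. 15 and the 26-neighbor extremum test can be sketched as below. This sketch assumes the blurred images of one octave are already computed and stored as equal-sized lists of lists. It leaves out sub-pixel refinement and the contrast and edge filtering. The function names are hypothetical.

```python
def dog_stack(blurred):
    """Eq. 15: subtract consecutive blurred images of one octave, B(h*sigma) - B(sigma)."""
    return [[[b1 - b0 for b0, b1 in zip(r0, r1)] for r0, r1 in zip(im0, im1)]
            for im0, im1 in zip(blurred, blurred[1:])]

def is_extremum(dog, s, y, x):
    """True if dog[s][y][x] is strictly larger or strictly smaller than all 26
    neighbors (8 in its own scale, 9 in each adjacent scale). The caller must keep
    (s, y, x) away from the first/last scale and from the image border."""
    v = dog[s][y][x]
    neighbors = [dog[s + ds][y + dy][x + dx]
                 for ds in (-1, 0, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                 if (ds, dy, dx) != (0, 0, 0)]
    return all(v > n for n in neighbors) or all(v < n for n in neighbors)
```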

Keypoint orientations

Orientation assignment is the fourth step. Its goal is to give each keypoint an orientation based on local image characteristics. An orientation histogram is built from the gradient orientations in a small region around the keypoint, and its most prominent gradient orientation(s) are detected. If there is just one peak, its orientation is assigned to the keypoint. Any other peak above 80% of the highest peak is used to create a new keypoint with that orientation [106].

Generating a feature vector

This is the final step of SIFT. At this point, each keypoint has a location, scale, and orientation, which make it scale- and rotation-invariant. What remains is to distinguish keypoints from one another by computing a fingerprint for each one. A 16×16 window of pixels around the keypoint is split into sixteen 4×4 windows. An 8-bin orientation histogram is built for each 4×4 window. The first bin covers gradient orientations from 0 to 44 degrees, the second from 45 to 89 degrees, and so on. Repeating this for all sixteen 4×4 regions gives 4×4×8 = 128 values. These values are normalized to form the final 128-dimensional feature vector [106].
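The 4×4×8 layout described above can be sketched as follows. Its input is 16×16 arrays of gradient magnitudes and orientations in degrees, assumed to be already rotated relative to the keypoint orientation. Lowe's full method also applies Gaussian weighting, trilinear interpolation between bins, and clamping before renormalization. This simplified version omits all three. The function name is hypothetical.

```python
import math

def sift_descriptor(mag, ori):
    """Build a 128-D vector from 16x16 gradient magnitudes and orientations (degrees)."""
    desc = []
    for by in range(4):                      # sixteen 4x4 subregions
        for bx in range(4):
            hist = [0.0] * 8                 # 8 bins of 45 degrees each
            for y in range(by * 4, by * 4 + 4):
                for x in range(bx * 4, bx * 4 + 4):
                    b = int((ori[y][x] % 360) // 45) % 8
                    hist[b] += mag[y][x]
            desc.extend(hist)
    norm = math.sqrt(sum(v * v for v in desc)) or 1.0
    return [v / norm for v in desc]          # 4 x 4 x 8 = 128 normalized values
```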

4.4.3 Speeded-up robust features (SURF)

Speeded-up robust features (SURF) is a computer vision technique used for classification and object recognition. SURF follows the idea of SIFT but is faster while remaining robust. SIFT approximates the LoG with a DoG to build the scale space, whereas SURF approximates it with box filters. SURF uses the determinant of the Hessian matrix to select both location and scale, and keypoints are taken at its maxima. For a point A = (a, b) in image I, the Hessian matrix H(A, σ) at A and scale σ is defined by the formula in Fig. 6,

where Laa(A, σ) is the convolution of the second-order Gaussian derivative in the a direction with image I at point A; Lab(A, σ) and Lbb(A, σ) are defined similarly. For feature description, SURF uses Haar wavelet responses dx and dy in the horizontal and vertical directions. These responses are summed over each subregion around the keypoint, as in Eq. 17:

\[ v = \left( \sum d_x, \sum d_y, \sum \lvert d_x \rvert, \sum \lvert d_y \rvert \right) \tag{17} \]

Concatenating these vectors over the 4×4 subregions around the keypoint gives the 64-dimensional SURF feature descriptor [107].
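The assembly of the SURF descriptor from Eq. 17 is sketched below. It assumes 20×20 grids of Haar wavelet responses dx and dy already sampled around the keypoint and rotated to its orientation. Each of the 4×4 subregions covers 5×5 samples. The Gaussian weighting used in the original method is omitted, and the function name is hypothetical.

```python
def surf_descriptor(dx, dy):
    """64-D descriptor: (sum dx, sum dy, sum |dx|, sum |dy|) for each of 4x4 subregions."""
    desc = []
    for by in range(4):
        for bx in range(4):
            cells = [(dx[y][x], dy[y][x])
                     for y in range(by * 5, by * 5 + 5)
                     for x in range(bx * 5, bx * 5 + 5)]
            desc += [sum(a for a, _ in cells), sum(b for _, b in cells),
                     sum(abs(a) for a, _ in cells), sum(abs(b) for _, b in cells)]
    norm = sum(v * v for v in desc) ** 0.5 or 1.0   # unit length, for contrast invariance
    return [v / norm for v in desc]
```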

The performance and elapsed time of different feature-based SOD approaches on different SOD datasets are shown in Table 3.

4.4.4 Local Binary Pattern (LBP)

The local binary pattern (LBP) is a simple texture operator that describes the relation between a pixel and its neighbors. In the most common LBP approach, each 3×3 window is processed to extract an LBP code. The eight pixels surrounding the center are thresholded, using the center pixel itself, the window mean, or the window median as the threshold. The LBP code is then given by Eq. 18 [108]:

\[ \mathrm{LBP} = \sum_{n=0}^{7} s\left(I_n - I_{\mathrm{thresh}}\right) 2^n, \qquad s(z) = \begin{cases} 1, & z \ge 0 \\ 0, & z < 0 \end{cases} \tag{18} \]

where I_thresh is the threshold value and I_n (n = 0, 1, 2, ..., 7) are the intensities of the surrounding window pixels. The steps of LBP are as follows:

Local Binary Pattern steps

1- Convert the image to grayscale.

2- For each pixel in the image, select a neighborhood of radius r around the center pixel.

3- Compare the center value with each neighbor: if the neighbor value is less than the center, record 0; otherwise record 1.

4- Convert the resulting binary number to decimal to obtain the LBP code of the center pixel.
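A sketch of these steps for the basic 3×3 case (radius 1, eight neighbors) is shown below. The image is assumed to be grayscale already. The clockwise neighbor order starting at the top-left corner is one common convention, but any fixed order works. The function names are hypothetical. scikit-image offers a full implementation as `skimage.feature.local_binary_pattern`.

```python
def lbp_code(window, threshold=None):
    """Eq. 18 on a 3x3 window: each neighbor >= threshold contributes 2**n.
    The threshold defaults to the center pixel; a window mean or median can be passed."""
    if threshold is None:
        threshold = window[1][1]
    order = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]  # clockwise
    return sum(1 << n for n, (r, c) in enumerate(order) if window[r][c] >= threshold)

def lbp_image(gray):
    """LBP codes for all interior pixels of a grayscale image (list of lists)."""
    h, w = len(gray), len(gray[0])
    return [[lbp_code([row[c - 1:c + 2] for row in gray[r - 1:r + 2]])
             for c in range(1, w - 1)] for r in range(1, h - 1)]
```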

4.4.5 Binary robust independent elementary features (BRIEF)

Binary robust independent elementary features (BRIEF) is a fast feature descriptor algorithm. It cannot identify keypoints by itself, so it is combined with a keypoint detector. BRIEF works on a smoothed image: pairs of pixels are selected and their gray values are compared. The test τ on a patch p of size M×M is defined as in Eq. 19:

\[ \tau(p; a, b) = \begin{cases} 1, & I(p, a) < I(p, b) \\ 0, & \text{otherwise} \end{cases} \tag{19} \]

where I(p, a) is the pixel intensity at location a in a smoothed version of p. A set of binary tests is defined by choosing a set of n_d location pairs (a, b). The BRIEF descriptor is the n_d-dimensional bit string whose decimal value is given by Eq. 20 [109]:

\[ f_{n_d}(p) = \sum_{i=1}^{n_d} 2^{i-1} \, \tau(p; a_i, b_i) \tag{20} \]
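Eq. 19 and Eq. 20 translate directly into bit operations. In the sketch below, the patch is assumed to be already smoothed. The location pairs are drawn uniformly at random as an illustrative assumption; the BRIEF authors compared several sampling strategies. Descriptors are compared with the Hamming distance. The function names are hypothetical.

```python
import random

def make_pairs(n_d, patch_size, seed=0):
    """Draw n_d random location pairs (a, b) inside an M x M patch (uniform sampling)."""
    rng = random.Random(seed)

    def point():
        return (rng.randrange(patch_size), rng.randrange(patch_size))

    return [(point(), point()) for _ in range(n_d)]

def brief(patch, pairs):
    """Eq. 19-20: test i sets bit i-1 when I(p, a_i) < I(p, b_i)."""
    value = 0
    for i, ((ar, ac), (br, bc)) in enumerate(pairs):
        if patch[ar][ac] < patch[br][bc]:
            value |= 1 << i
    return value

def hamming(d1, d2):
    """Number of differing bits between two BRIEF descriptors."""
    return bin(d1 ^ d2).count("1")
```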

4.4.6 Oriented FAST and rotated BRIEF (ORB)

ORB is a robust binary descriptor that combines the FAST keypoint detector with the BRIEF binary descriptor. The algorithm steps are as follows [110]:

ORB algorithm

1. Reduce the input image to several scale levels.

2. Extract FAST features at every level.

3. Apply grid filtering.

4. Extract the feature orientations.

5. Extract the descriptors.

4.5 Saliency detection in adverse conditions

Saliency detection methods achieve high accuracy on many complex problems. However, research shows that they can face unexpected situations or adverse conditions, such as saliency detection at nighttime. In [119], a novel Bayesian saliency detection method was proposed for nighttime RGB traffic images. The approach applies prior estimation, weight estimation, feature extraction, and Bayes' rule to compute saliency maps. The authors also proposed a simple and effective object proposal generator based on the Bayesian saliency map. In [120], the gap between daytime and nighttime images for semantic segmentation was analyzed, and two methods based on generative adversarial networks (GANs) were proposed to close this gap. In one of them, a night-to-day conversion is performed during nighttime inference. This converts the input into the domain of the daylight images on which the model was trained.

5 Deep learning models for SOD

Convolutional neural networks (CNNs) are the most popular deep learning models for image recognition and classification. The components of a CNN and several CNN architectures are presented below [32, 33].

5.1 Convolutional neural networks (CNNs)

CNNs are among the most widely used tools in machine learning. Many vision problems are solved with CNNs, such as semantic segmentation [121, 122], edge detection [123], and object recognition [124]. Recent research has also shown that CNNs are effective for SOD [125].

5.1.1 CNN components

The CNN consists of several types of layers:

Convolutional layer

The convolutional layer consists of a set of neurons, each of which represents a kernel. The kernel slides over the image in small blocks, which helps in feature extraction: its set of weights is convolved with the image by computing the dot product between each small region and the weights. The operation is shown in Eq. 21.

\[ f_l^m(a, b) = \sum_{h} \sum_{x, y} i_h(x, y)\, e_l^m(u, v) \tag{21} \]

Where \( f_l^m(a, b) \) is the (a, b) element of the feature map of the lth layer and mth neuron, \( i_h(x, y) \) is the (x, y) element of the hth channel of image i, and \( e_l^m(u, v) \) is the (u, v) element of the mth kernel of the lth layer.
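A direct, loop-based reading of Eq. 21 for a single kernel is sketched below, assuming stride 1 and no padding. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped); the function name is illustrative.

```python
import numpy as np


def conv_feature_map(image, kernel):
    """Eq. 21 for one kernel: slide over the image and sum dot products over channels.

    image: (H, W, C) array; kernel: (k, k, C) array; stride 1, no padding.
    """
    H, W, C = image.shape
    assert kernel.shape[2] == C, "kernel depth must match the number of image channels"
    k = kernel.shape[0]
    out = np.zeros((H - k + 1, W - k + 1))
    for a in range(out.shape[0]):
        for b in range(out.shape[1]):
            region = image[a:a + k, b:b + k, :]   # small region under the kernel
            out[a, b] = np.sum(region * kernel)   # dot product summed over all channels h
    return out
```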

Pooling layer (downsampling)

This layer reduces the size of the feature maps generated by the convolutional layer while retaining the most important information.
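Max pooling is the most common form of this downsampling. The sketch below assumes a 2×2 window with stride 2, a typical choice that halves both spatial dimensions; the function name is illustrative.

```python
import numpy as np


def max_pool2d(fmap, size=2, stride=2):
    """Max pooling over a 2-D feature map (no padding)."""
    H, W = fmap.shape
    out_h = (H - size) // stride + 1
    out_w = (W - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=fmap.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = fmap[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max()   # keep the strongest response in each window
    return out
```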

Activation function

The activation function is a decision function that helps the network learn complex patterns. Choosing an appropriate activation function can accelerate learning. Common activation functions include tanh, sigmoid, Swish, maxout, ReLU, and ReLU variants such as leaky ReLU and ELU.
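The activation functions listed above have short closed forms. The NumPy definitions below use common default slopes (alpha = 0.01 for leaky ReLU, alpha = 1 for ELU), which are assumptions rather than values from the text; tanh is available directly as `np.tanh`.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def relu(x):
    return np.maximum(0.0, x)


def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)              # small slope for negative inputs


def elu(x, alpha=1.0):
    return np.where(x > 0, x, alpha * (np.exp(x) - 1.0))


def swish(x):
    return x * sigmoid(x)


def maxout(x, pieces):
    """Maximum over groups of `pieces` consecutive linear responses."""
    return x.reshape(-1, pieces).max(axis=1)
```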

Batch normalization

Batch normalization addresses the change in the distribution of hidden-unit values during training. It normalizes the feature map values to zero mean and unit variance, which unifies their distribution.
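A minimal training-mode sketch of batch normalization for a (batch, features) array follows. The learnable scale `gamma` and shift `beta` and the small constant `eps` come from the standard formulation; at inference time, running averages of the mean and variance are used instead of the batch statistics, which this sketch omits.

```python
import numpy as np


def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for a (batch, features) array."""
    mean = x.mean(axis=0)                    # per-feature mean over the mini-batch
    var = x.var(axis=0)                      # per-feature (biased) variance
    x_hat = (x - mean) / np.sqrt(var + eps)  # zero mean, unit variance
    return gamma * x_hat + beta              # learnable scale and shift
```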

Fully connected layer (FC)

The FC layer is usually placed at the end of the network, where it classifies the data and determines the class label [126].
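The FC layer's role can be sketched as an affine map of the flattened features followed by a softmax that turns class scores into probabilities. The weights below are hypothetical placeholders for learned parameters.

```python
import numpy as np


def fully_connected(x, W, b):
    """Affine map: every input feature connects to every output unit."""
    return W @ x + b


def softmax(z):
    z = z - np.max(z)          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()
```

The predicted class label is the index of the largest probability, for example `int(np.argmax(softmax(fully_connected(x, W, b))))`.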

In 2020, Üreten, et al. [127] developed a CNN method to help doctors diagnose rheumatoid arthritis (RA). The study used a dataset of 180 radiograph images from the Faculty of Medicine at Kırıkkale University: 81 from normal subjects and 99 from patients with RA. The dataset was divided into 135 radiographs for training (74 RA and 61 normal) and 45 for testing (25 RA and 20 normal). The network achieved 73.33% accuracy, a 0.0167 error rate, 0.6818 sensitivity, 0.7826 specificity, and 0.7500 precision.

5.1.2 CNN architecture

CNNs have evolved greatly over the years; the most important CNN models are presented below [128].

LeNet-5

LeNet-5 was created in 1998 by LeCun [129]. It consists of two convolutional layers and three fully connected layers, together with subsampling layers, which are now known as pooling layers. It contains about 60,000 parameters and is widely used for handwritten digit recognition.

The input layer takes 32×32 images. In [129], the pixel values of the grayscale images were normalized from the range 0 to 255 to the range -0.1 to 1.175, so that the mean input is roughly 0 and the variance roughly 1, which reduces training time. The first convolutional layer, C1, uses 5×5 kernels and outputs 6 feature maps of size 28×28. C1 is followed by the subsampling (downsampling) layer S2, which halves the dimensions of the feature maps received from C1 and outputs 6 feature maps of size 14×14. The third layer, C3, is a convolutional layer with 16 kernels of size 5×5 that outputs 16 feature maps of size 10×10. The first six C3 feature maps each take a contiguous subset of three S2 feature maps as input, the next six each take a contiguous subset of four, the next three each take a discontinuous subset of four, and the last one takes all the S2 feature maps. Layer S4 is similar to S2: it uses a 2×2 window and outputs 16 feature maps of size 5×5. The convolutional layer C5 has 120 kernels of size 5×5. The fully connected layer F6 is connected to C5 and has 84 outputs.
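The feature-map sizes of LeNet-5 follow from the usual output-size rule for a k×k window with stride s and no padding, floor((n - k)/s) + 1. This plain-Python trace reproduces them. The linear pixel mapping assumes 8-bit input in which 255 is the white background (mapped to -0.1) and 0 is the black foreground (mapped to 1.175); the helper names are illustrative.

```python
def out_size(n, k, stride=1, pad=0):
    """Spatial size after a k x k convolution or pooling window."""
    return (n + 2 * pad - k) // stride + 1


def normalize_pixels(p):
    """Map 8-bit gray levels (255 = white background, 0 = black ink) to [-0.1, 1.175]."""
    return 1.175 - (p / 255.0) * 1.275


def lenet5_shapes(n=32):
    """Trace (layer, spatial size, number of maps) through LeNet-5."""
    shapes = [("input", n, 1)]
    n = out_size(n, 5)            # C1: 5x5 convolution, 6 maps
    shapes.append(("C1", n, 6))
    n = out_size(n, 2, stride=2)  # S2: 2x2 subsampling
    shapes.append(("S2", n, 6))
    n = out_size(n, 5)            # C3: 5x5 convolution, 16 maps
    shapes.append(("C3", n, 16))
    n = out_size(n, 2, stride=2)  # S4: 2x2 subsampling
    shapes.append(("S4", n, 16))
    n = out_size(n, 5)            # C5: 5x5 convolution, 120 maps of size 1x1
    shapes.append(("C5", n, 120))
    return shapes


for name, size, maps in lenet5_shapes():
    print(f"{name}: {maps} maps of {size}x{size}")
```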

AlexNet

AlexNet was developed in 2012 by Krizhevsky, Sutskever, and Hinton [130]. It won the 2012 ImageNet competition and consists of five convolutional layers and three fully connected layers. Its architecture is similar to that of LeNet-5 but much deeper and larger, and rather than placing a downsampling layer after every convolutional layer, it stacks convolutional layers directly on top of each other. AlexNet contains about 60 million parameters and uses Rectified Linear Units (ReLUs) as activation functions.

The first layer is a convolutional layer: the input image is 224×224×3, and the layer applies 96 filters of size 11×11×3, producing 55×55×96 feature maps. The second convolutional layer takes the pooled output of the first layer and applies 256 filters of size 5×5×48. Max pooling layers with a 3×3 window and a stride of 2 follow the first, second, and fifth convolutional layers, and layers 3, 4, and 5 are convolutional layers built along the same lines. Layer 6 is a fully connected layer that receives the pooled output of the fifth convolutional layer (13×13×128 feature maps on each of the two GPUs before pooling) and has 2048 outputs per GPU, 4096 in total; layer 7 follows the same pattern. Layer 8 is the fully connected output layer with 1000 outputs.

VGG-16

In 2014, the Visual Geometry Group (VGG) [131] introduced VGG-16, which consists of 13 convolutional layers and 3 fully connected layers. Like AlexNet, VGG-16 uses ReLU as its activation function. It contains about 138 million parameters. The VGG authors also developed a deeper version called VGG-19.

The input to VGG is a 224×224 RGB image. The first two layers use 64 filters of size 3×3 with stride 1. After a max pooling layer with stride 2 comes a stack of convolutional layers whose depth differs between VGG configurations. Layers 3 and 4 are convolutional layers with 128 filters. After max pooling, there are three convolutional layers with 256 filters. After another max pooling, there are six convolutional layers with 512 filters, with max pooling after every three layers. These are followed by three fully connected layers: the first two have 4096 channels each, and the third has 1000 channels. The final layer is a softmax layer.
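The "138 million parameters" figure can be checked with a short arithmetic sketch. It encodes the VGG-16 configuration as 3×3 convolution widths with "M" for 2×2 max pooling, assumes a 224×224 RGB input and 1000 classes, and counts weights plus biases; it also shows that the 16 weight layers split into 13 convolutional and 3 fully connected layers.

```python
# VGG-16 configuration: numbers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_count(num_classes=1000, in_ch=3, input_size=224):
    """Return (number of conv layers, conv params, FC params, total params)."""
    conv_params, n_conv = 0, 0
    ch, size = in_ch, input_size
    for v in VGG16_CFG:
        if v == "M":
            size //= 2                            # pooling halves height and width
        else:
            conv_params += 3 * 3 * ch * v + v     # weights + biases
            ch = v
            n_conv += 1
    fc_dims = [size * size * ch, 4096, 4096, num_classes]
    fc_params = sum(i * o + o for i, o in zip(fc_dims[:-1], fc_dims[1:]))
    return n_conv, conv_params, fc_params, conv_params + fc_params


print(vgg16_param_count())
```

Almost 90% of the parameters sit in the fully connected layers, mainly the first one, which maps the 7×7×512 feature volume to 4096 units.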

ResNet-50

The residual network ResNet, created by He, et al. [132], won the 2015 ILSVRC challenge. ResNet-50 contains about 26 million parameters and 50 layers, and each residual block has two or three convolutional layers (three in the bottleneck blocks of ResNet-50).

The height and width of the input image are multiples of 32. The first layer applies a convolution with 64 kernels of size 7×7 (stride 2), followed by max pooling with a 3×3 window and stride 2. Next come three residual blocks with three layers each; these layers use 64, 64, and 256 kernels of sizes 1×1, 3×3, and 1×1, respectively. Further stages of 4, 6, and 3 such blocks follow, with the first block of each later stage downsampling by stride 2. Finally, a global average pooling layer is followed by a fully connected layer with 1000 nodes and a softmax function.
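The key idea of a residual block is the identity shortcut, y = F(x) + x, so the stacked layers only need to learn a residual. The sketch below uses dense matrices as stand-ins for the 1×1, 3×3, and 1×1 convolutions of a bottleneck block and omits batch normalization; the weights are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(0.0, x)


def bottleneck_block(x, w_reduce, w_mid, w_expand):
    """Residual bottleneck: reduce -> transform -> expand, then add the identity shortcut.

    Dense matrices stand in for the 1x1, 3x3 and 1x1 convolutions; batch norm is omitted.
    """
    h = relu(w_reduce @ x)   # like the 1x1 conv that reduces channels (e.g. 256 -> 64)
    h = relu(w_mid @ h)      # like the 3x3 conv at the reduced width
    h = w_expand @ h         # like the 1x1 conv that restores channels (64 -> 256)
    return relu(h + x)       # identity shortcut, then ReLU
```

If the residual branch outputs zeros, the block passes its input through (after the final ReLU), which is one intuition for why very deep residual networks remain trainable.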

Deep-learning-based SOD models can be divided into two categories: Classic Convolutional Network (CCN)-based models and Fully Convolutional Network (FCN)-based models. In CCN-based models, a multi-layer perceptron (MLP) is used to detect saliency: the input image is over-segmented into small regions, high-level features are extracted from each region with a CNN, and these features are fed to an MLP that determines the saliency value of each region. Because of the MLP, CCN-based models cannot preserve the spatial information in the CNN features, whereas FCN-based models preserve it.

5.2 Classic convolutional network (CCN)-based models

CCN-based models include two-stage object detectors such as Region-based Convolutional Networks (R-CNN) [133], Fast R-CNN [134], Faster R-CNN [135], and Mask R-CNN [136].

The R-CNN family contains several region-based object detection models that have improved steadily over time, increasing in both efficiency and accuracy [137].

5.2.1 Region-based convolutional networks (R-CNN)

The R-CNN model uses selective search to detect objects in the image. Selective search generates a large number of candidate regions, and each candidate is checked for whether it contains an object. The main factor in the success of this method is the accuracy of object classification.

In 2020, Ucar, et al. [138] proposed a Region-based Convolutional Neural Network (R-CNN) model for detecting aircraft in satellite images of airports in Turkey. The dataset contains 32,000 images: 8,000 of the class plane and 24,000 of the class not-aircraft (NPlane). To balance the classes, only 16,000 images were used, with equal numbers of plane and NPlane images. The model achieved 98.40% accuracy, 98.62% sensitivity, 98.13% specificity, a 0.0187 FP rate, and a 0.0138 FN rate.
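The metrics reported in these studies all derive from a binary confusion matrix. The helper below is a sketch with illustrative names, and the counts in the usage line are made up. It shows, for instance, that the FP rate equals 1 - specificity and the FN rate equals 1 - sensitivity, consistent with the figures in [138] (98.13% specificity with a 0.0187 FP rate, 98.62% sensitivity with a 0.0138 FN rate).

```python
def binary_metrics(tp, fp, tn, fn):
    """Common detection/classification metrics from a binary confusion matrix."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn)   # recall, true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": tp / (tp + fp),
        "fp_rate": 1 - specificity,   # FP / (FP + TN)
        "fn_rate": 1 - sensitivity,   # FN / (FN + TP)
    }


print(binary_metrics(tp=90, fp=5, tn=95, fn=10))   # hypothetical counts
```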

5.2.2 Fast R-CNN

Fast R-CNN speeds up R-CNN by borrowing the idea of Spatial Pyramid Pooling (SPP-net): the CNN representation is computed only once for the whole image, and the representation of each region generated by selective search is then pooled from this shared representation. Fast R-CNN also trains bounding box regression together with classification, so that classification and localization are learned in a single process, which makes the model faster.
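The pooling of each region from the shared feature map can be illustrated with RoI max pooling, the single-level form of SPP used by Fast R-CNN: every proposal, whatever its size, is pooled into a fixed grid so that the fully connected layers receive inputs of the same size. The sketch assumes the region is already expressed in feature-map coordinates (real implementations rescale image coordinates by the network stride); names are illustrative.

```python
import numpy as np


def roi_max_pool(feature_map, roi, out_size=2):
    """Max-pool one region of interest into a fixed out_size x out_size grid.

    roi = (y0, x0, y1, x1) in feature-map coordinates, end-exclusive (assumption).
    """
    y0, x0, y1, x1 = roi
    region = feature_map[y0:y1, x0:x1]
    h, w = region.shape
    # bin edges that split the region into out_size x out_size roughly equal cells
    ys = np.linspace(0, h, out_size + 1).astype(int)
    xs = np.linspace(0, w, out_size + 1).astype(int)
    out = np.empty((out_size, out_size), dtype=float)
    for i in range(out_size):
        for j in range(out_size):
            cell = region[ys[i]:max(ys[i + 1], ys[i] + 1),
                          xs[j]:max(xs[j + 1], xs[j] + 1)]   # never empty
            out[i, j] = cell.max()
    return out
```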

5.2.3 Faster R-CNN

Faster R-CNN is faster than Fast R-CNN because it replaces the selective search process with a Region Proposal Network (RPN). The RPN is a small convolutional network that generates regions of interest and makes the proposal step faster. Anchor boxes are used with the RPN to handle objects of multiple scales and aspect ratios. Faster R-CNN is considered one of the most efficient and accurate object detection models.
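Anchor boxes can be generated as below, assuming the three scales (128, 256, 512 pixels) and three aspect ratios (1:2, 1:1, 2:1) reported as defaults for Faster R-CNN, and defining the ratio as height divided by width. The function name is illustrative.

```python
import numpy as np


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Anchor boxes (x1, y1, x2, y2) centred at (cx, cy), one per scale/ratio pair.

    Each anchor has area scale**2 and height / width equal to the ratio.
    """
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return np.array(anchors)
```

In an RPN, this set of nine anchors is placed at every feature-map location (shifted by the network stride), and the network predicts an objectness score and box offsets for each anchor.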

In 2020, Wei, et al. [139] proposed a breast cancer detection and classification model that uses VGG-16 and Faster Region-based CNN (Faster R-CNN) on the OASBUD dataset of ultrasound images. This dataset contains 200 ultrasound images, split into 112 for training, 48 for validation, and 40 for testing with no duplicates; each image has only one region of interest (ROI). Faster R-CNN improved performance and achieved an accuracy above 95%.

In 2020, Alalharith, et al. [140] developed a deep learning model for detecting periodontal disease in orthodontic patients. Two Faster R-CNN models with a ResNet-50 backbone were developed: one detects the teeth to determine the region of interest (ROI), and the other detects gingival inflammation. The dataset, obtained on 7 October 2019 from the College of Dentistry at Imam Abdulrahman Bin Faisal University, contains 134 intraoral images divided into 107 for training and 27 for testing. The teeth detection model achieved 100% accuracy, 100% precision, 51.85% recall, and 100% mAP, while the inflammation detection model achieved 77.12% accuracy, 88.02% precision, 41.75% recall, and 68.19% mAP.

In 2020, Li, et al. [141] proposed a deep learning architecture for detecting rice diseases in videos. Each video is first split into still frames, which are then sent to a custom deep CNN (DCNN) detection model based on Faster R-CNN. The dataset was collected between June and August 2018 in the provinces of Anhui, Hunan, and Jiangxi, China. It consists of videos and images of rice diseases: 1,760 images of stem borer symptoms, 1,760 images of brown spot, and 1,800 images of sheath blight. The dataset was randomly divided into training and test sets with a ratio of 9:1. The custom DCNN achieved blight, borer, and spot precision of 90.5, 100.0, and 0, respectively. Compared with YOLOv3, the custom DCNN had higher sensitivity (recall), with blight, borer, and spot recall of 74.1, 45.5, and 75.5, respectively, whereas YOLOv3 had higher precision: 100.0 for blight, borer, and spot.

In 2020, Zhang, et al. [142] proposed a model for detecting four tomato diseases: ToMV, powdery mildew, leaf mold fungus, and blight. The model is based on an improved Faster R-CNN in which the VGG16 network is replaced by ResNet to obtain the feature map, and the K-means technique is used for clustering. The dataset is laboratory data from AIChallenger; it includes 61 categories, from which 4,178 images of the four tomato diseases were selected. The dataset was randomly divided into 60% training, 10% validation, and 30% test. In terms of mAP, Faster R-CNN, Faster R-CNN-mobile, and Faster R-CNN-res101 achieved 95.83, 94.67, and 97.18, respectively; with K-means, they achieved 97.01, 97.37, and 98.54, respectively.

In 2019, Tourani, et al. [143] proposed a Faster R-CNN-based model for detecting vehicles in videos. Two datasets were used. The first is the Stanford University cars dataset, which includes 16,185 images of 196 vehicle classes, divided into 8,144 images for training and 8,041 for testing. The second is a test dataset of 928 images provided by the authors. The system achieved a sensitivity of 0.985 and needed 74 milliseconds to detect vehicles in real-world data.

5.2.4 Mask R-CNN

Mask R-CNN extends Faster R-CNN to solve instance segmentation in computer vision applications, i.e., to separate the individual objects in an image or video. It adds a mask branch that predicts an object mask on every region of interest (ROI), in parallel with the existing branches for the bounding box (BB) and class label. The three outputs are therefore the bounding box coordinates, the object mask, and the class label. Mask R-CNN detects objects in the image efficiently while generating a high-quality segmentation mask for each object.

In 2020, Dai, et al. [144] proposed a deep neural network model for detecting intraprostatic lesions (ILs). The prostate gland and the ILs were segmented on T2-weighted images (T2WIs). Mask R-CNN segmented the prostate with Dice similarity coefficients (DSC) of 0.88±0.04, 0.86±0.04, and 0.82±0.05; sensitivity (true positive rate) of 0.93, 0.95, and 0.95; and specificity (true negative rate) of 0.98, 0.85, and 0.90. Intraprostatic lesions were segmented with lower DSC and sensitivity: DSC of 0.64±0.11, 0.56±0.15, and 0.46±0.15; sensitivity of 0.57±0.23, 0.50±0.28, and 0.33±0.17; and specificity of 0.980±0.009, 0.969±0.016, and 0.977±0.013.
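The Dice similarity coefficient used to score these segmentations is 2|A ∩ B| / (|A| + |B|) for a predicted mask A and a ground-truth mask B. A minimal NumPy sketch for binary masks follows; the small `eps` term, an assumption, only guards against division by zero when both masks are empty.

```python
import numpy as np


def dice(pred, target, eps=1e-8):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    inter = np.logical_and(pred, target).sum()
    return 2.0 * inter / (pred.sum() + target.sum() + eps)
```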

5.3 Fully convolutional networks (FCN)-based models

FCN-based models include one-stage object detectors such as OverFeat [145], You Only Look Once (YOLO) [146], YOLO v2 [147], YOLO v3 [148, 149], and the edge guidance network (EGNet) [150].

5.3.1 OverFeat

OverFeat is a pioneering method that integrates object detection, localization, and classification into one CNN. Its idea is to apply a fast multi-scale sliding window on the final pooling layer to extract patches, which are then merged according to the classification score of each patch. In this way, the problems of multi-size objects and complex shapes are addressed. Compared to R-CNN, OverFeat is faster but less accurate.

5.3.2 You only look once (YOLO)

The R-CNN family discussed above splits an image into parts and then concentrates on the parts that may contain an object, whereas the YOLO family looks at the whole image at once, predicting bounding boxes and then determining class labels. The YOLO family is a fast and powerful object detector. The first version, YOLO v1 (also called unified YOLO), is so named because it unifies object detection and object classification in a single detection network. YOLO predicts a restricted number of bounding boxes to achieve real-time detection. Its main idea is to divide the input image into an M×M grid and have each cell directly regress the bounding box location and a confidence score if the center of an object falls into that cell. Because objects differ in size, each cell uses several bounding box regressors. During training, the regressor whose prediction has the highest Intersection over Union (IoU) with the ground-truth box is compared with that label, so the regressors at the same position gradually specialize in different scales. Meanwhile, each cell predicts C class probabilities, conditioned on the cell containing an object with a high confidence score.
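Two pieces of this mechanism can be sketched in plain Python: finding the grid cell responsible for an object (the one containing its center) and computing IoU, which decides which regressor is matched to the ground truth. Boxes are assumed to be given as (x1, y1, x2, y2); the function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def responsible_cell(cx, cy, img_w, img_h, M):
    """(row, col) of the M x M grid cell that contains the object centre (cx, cy)."""
    col = min(int(cx / img_w * M), M - 1)   # clamp centres lying on the right edge
    row = min(int(cy / img_h * M), M - 1)   # clamp centres lying on the bottom edge
    return row, col
```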

In 2020, Mandal, et al. [151] proposed a You Only Look Once (YOLO) model for monitoring traffic footage. The dataset consists of 18,509 images collected from traffic cameras of RITIS, New York State DOT, Iowa 511, the Louisiana Department of Transportation and Development, and Iowa DOT Open Data. For traffic queue prediction, Mask R-CNN achieved 90.5%, while YOLO achieved the highest accuracy of 93.7%.

5.3.3 YOLO v2

The first YOLO version has several shortcomings: its localization accuracy is low because predictions are based on a coarse grid, and small objects may be hard to recognize because each cell contains only two scale-agnostic regressors. YOLO v2, introduced together with YOLO9000, addresses these shortcomings of YOLO v1 by focusing on localization and recall. YOLO v2 uses batch normalization, a high-resolution classifier, anchor boxes, fine-grained features, and multi-level classifiers. A new network, Darknet-19, is used for feature extraction; it includes nineteen convolutional layers, five max pooling layers, and a final softmax layer for object classification. To improve small object detection, YOLO v2 adds a pass-through layer that integrates features from an earlier layer. Input resolution was found to be a key factor for detecting small objects, so the backbone's input was doubled from 224×224 to 448×448, and a multi-scale training scheme was introduced in which the input resolution changes at different stages of training. YOLO v2 was also tested in a version trained on a hierarchy of 9,000 classes (YOLO9000), an early attempt at an object detector with multi-label classification. Together, these features make YOLO v2 better than YOLO v1.
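With anchor boxes, YOLO v2 does not regress box coordinates directly. It predicts offsets (tx, ty, tw, th) that are decoded as bx = σ(tx) + cx, by = σ(ty) + cy, bw = pw·e^tw, bh = ph·e^th, where (cx, cy) is the top-left corner of the grid cell and (pw, ph) is the anchor (prior) size. The sketch below implements this decoding in grid units; the function names are illustrative.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_yolov2_box(t, cell, prior):
    """Turn raw offsets t = (tx, ty, tw, th) into a box (bx, by, bw, bh) in grid units.

    cell = (cx, cy): top-left corner of the grid cell; prior = (pw, ph): anchor size.
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = prior
    bx = sigmoid(tx) + cx       # sigmoid keeps the centre inside its cell
    by = sigmoid(ty) + cy
    bw = pw * math.exp(tw)      # anchor size rescaled in log space
    bh = ph * math.exp(th)
    return bx, by, bw, bh
```

Constraining the center offset with a sigmoid keeps each prediction anchored to its own cell, which the YOLO v2 authors report makes training more stable.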

5.3.4 YOLO v3

YOLO v3 is the last YOLO version released by the original YOLO authors, and it balances accuracy, complexity, and speed. Instead of the softmax used by YOLO v2, YOLO v3 uses independent logistic classifiers, which classify objects accurately and make multi-label classification possible. YOLO v3 uses Darknet-53 as its feature extractor instead of the Darknet-19 used by YOLO v2; Darknet-53 contains 53 convolutional layers, which increases accuracy. YOLO v3 also detects small objects, which YOLO v1 handled poorly. These advantages made YOLO v3 popular.
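The difference between softmax and independent logistic classifiers shows up with overlapping labels. In the sketch below, the logits are hypothetical scores for three labels such as "person", "woman", and "car": softmax makes the classes compete, so at most one can exceed 0.5, while one sigmoid per class lets both overlapping labels be accepted.

```python
import numpy as np


def softmax(z):
    """Mutually exclusive classes: probabilities compete and sum to 1."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


def independent_logistic(z):
    """One sigmoid per class, as in YOLO v3: each label is decided on its own."""
    return 1.0 / (1.0 + np.exp(-z))


logits = np.array([2.0, 1.5, -3.0])   # hypothetical scores: "person", "woman", "car"
print(softmax(logits), independent_logistic(logits))
```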

In 2020, Cao, et al. [152] proposed an improved algorithm based on You Only Look Once v3 (YOLO-V3) for target detection in remote sensing images. Images of ships and airplanes with complex backgrounds were selected from the DOTA dataset to form the MyShip and MyPlane datasets, which contain 3,017 and 2,368 images, respectively, and were used to evaluate the network. Four network models were compared, and the improved algorithm achieved the best average precision (AP) on both datasets: 94.29 on MyPlane and 93.13 on MyShip. Performance on both datasets improved by 1 to 3%, which verifies the effectiveness of the improved algorithm.

In 2019, Zhao and Ren [153] used the one-stage detector YOLOv3 to detect small aircraft in remote sensing images. The dataset consists of 350 remote sensing images collected from the DOTA dataset and Google Earth, divided into 224 images for training, 56 for validation, and 70 for testing. YOLOv3 was compared with SSD and Faster R-CNN. YOLOv3 achieved the best AP, 0.925, and a detection time of 0.023 s, showing that it combines low processing time with excellent detection accuracy.

5.3.5 Edge guidance network (EGNet)

EGNet detects salient objects in three steps. First, salient object features are extracted in a progressive fusion manner. Next, salient edge features are obtained by integrating local edge information with global location information. Finally, to make use of both, the salient edge features and salient object features are coupled at various resolutions [150].

5.4 RGB-D methods

The aim of RGB-D salient object detection (SOD) is to identify the most visually distinctive regions or objects in a scene from RGB and depth data. Depth-based saliency is a helpful complement to color-based saliency models [154]. The Attention Complementary Network (ACNet) is an RGB-D model that selectively gathers features from the depth and RGB branches. ACNet consists of three ResNet-based branches: two extract depth and RGB features separately, with several Attention Complementary Modules (ACMs) used to obtain these features, and the third processes the merged features [155]. Another RGB-D method is the Complementary Depth Network (CDNet), which has four stages. In the first stage, two independent VGG networks serve as RGB and depth encoders that extract features from the RGB and depth images. In the second stage, a decoder estimates a new depth map directly from the RGB image to improve RGB-D SOD performance. In the third stage, a depth feature fusion module dynamically selects and merges depth features from both the original and the estimated depth maps. Finally, a two-stage cross-modal feature fusion scheme makes full use of the depth and RGB features at all levels [156].

5.5 Instance segmentation

Instance segmentation is the process of detecting and delineating each distinct object in an image. This problem was first raised in [157] and has been studied extensively in recent years. Inspired by instance segmentation, salient instance segmentation was proposed in [158]; it detects salient regions and recognizes object instances within them in four cascaded steps: salient region detection, salient object contour detection, salient instance generation, and salient instance refinement. In [159], a saliency detection framework based on Multiple-Instance Learning (MIL) is presented. In MIL, segmented regions are referred to as bags and the sampled points as instances. Low-, mid-, and high-level features, namely color, scale, texture, center prior, boundary, and position, are incorporated into learning and testing. The outputs of all these features are concatenated into a vector that is used to train and test the classifier with MIL.
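The bag/instance relationship can be sketched under the standard MIL assumption that a bag is positive if at least one of its instances is positive, implemented here as the maximum instance score. The max rule, the 0.5 threshold, and the function names are illustrative; the classifier in [159] may combine instances differently.

```python
import numpy as np


def bag_score(instance_scores):
    """Standard MIL assumption: a bag is as positive as its most positive instance."""
    return float(np.max(instance_scores))


def bag_label(instance_scores, threshold=0.5):
    return int(bag_score(instance_scores) >= threshold)


def concat_features(*feature_groups):
    """Concatenate per-region feature groups (e.g. color, texture, position) into one vector."""
    return np.concatenate([np.ravel(f) for f in feature_groups])
```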

5.6 Panoptic segmentation

Semantic segmentation aims to classify each pixel into the appropriate class, while instance segmentation focuses on segmenting object instances separately. Panoptic segmentation (PS) combines the two: it assigns a class label to every pixel and segments every object instance uniquely. In panoptic segmentation, each pixel is encoded with two labels: a semantic label and an instance id. Pixels with the same semantic label belong to the same class, and instance ids are ignored for stuff classes (amorphous regions such as sky or road) [160].
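The two-label encoding can be packed into a single id map. The sketch assumes one common convention, panoptic_id = semantic × 1000 + instance, as used for example in Cityscapes-style label maps, and zeroes the instance id of stuff pixels; the constant and function names are illustrative.

```python
import numpy as np

LABEL_DIVISOR = 1000   # assumed convention: panoptic_id = semantic * 1000 + instance


def encode_panoptic(semantic, instance, stuff_classes):
    """Pack per-pixel semantic labels and instance ids into a single id map."""
    is_stuff = np.isin(semantic, list(stuff_classes))
    instance = np.where(is_stuff, 0, instance)    # stuff pixels carry no instance id
    return semantic * LABEL_DIVISOR + instance


def decode_panoptic(panoptic):
    """Recover (semantic labels, instance ids) from a packed id map."""
    return panoptic // LABEL_DIVISOR, panoptic % LABEL_DIVISOR
```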

5.7 Panoramic panoptic segmentation

Panoramic panoptic segmentation provides the most holistic scene understanding, in terms of both field of view and image-level understanding. A full understanding of the surroundings gives the agent maximal information, which is crucial for any intelligent vehicle that must make informed decisions in a dynamic environment where safety matters, such as real-world traffic. The Panoramic Robust Feature (PRF) framework [161] includes two stages: a short pretraining stage that provides a robust feature representation to the network's backbone, followed by standard supervised training on labeled datasets.

5.8 Transformer-based methods

The Transformer is an encoder-decoder architecture that uses self-attention layers instead of CNNs or RNNs. The encoder maps a sequence of inputs to a continuous representation that holds all the learned information of the input, and the decoder then takes this representation and produces the output one step at a time [162]. One transformer-based method was proposed in [163]: the DEtection TRansformer (DETR), a new object detection framework whose key components are a set-based loss built on bipartite matching and a transformer. Direct set prediction in detection requires two ingredients: (1) a set prediction loss that enforces a unique matching between ground-truth and predicted boxes, and (2) an architecture that predicts a set of objects in a single pass and models their relationships. Another vision transformer, the Swin Transformer, was proposed in [164]. It produces a hierarchical representation computed with shifted windows. Shifted-window self-attention improves efficiency on computer vision problems, and the hierarchical architecture can model at different scales with computational complexity that is linear in image size.
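The self-attention layer at the core of these models can be sketched as single-head scaled dot-product attention over a sequence of n tokens with dimension d. The projection matrices are hypothetical stand-ins for learned weights. The sketch attends over all tokens; Swin restricts this computation to local (shifted) windows, which is what keeps its cost linear in image size.

```python
import numpy as np


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over tokens X of shape (n, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])          # similarity of every token pair
    scores -= scores.max(axis=-1, keepdims=True)       # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)     # softmax over keys
    return weights @ V, weights
```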

5.9 Context modeling methods

Context is a valuable source of information about an object's identity, scale, and location, which makes it important for visual saliency detection. In [165], a method for detecting salient object boundaries that takes advantage of depth information was proposed. Because context is vital in saliency recognition, this multimodal fusion network (MCMFNet) aggregates feature maps of multiscale, multilevel context to detect salient objects accurately. Finally, a coarse-to-fine approach applies an attention module to the multilevel, multimodal feature maps to obtain the final saliency map. In [166], omnidirectional image segmentation was approached from the perspective of context awareness. Efficient Concurrent Attention Networks (ECANets) were proposed to capture the inherent omni-range dependencies, which can stretch across 360°. Because the horizontal dimension of wide-FoV panoramas contains rich contextual dependencies, ECANet includes a Horizontal Segment Attention (HSA) module and a Pyramidal Space Attention (PSA) module. Model training was further improved by leveraging multi-source omni-supervised learning.

5.10 Selective contrast

Selective contrast exploits the most distinctive component information in texture, color, and location. In the human visual system, early color processing operates on opponent colors rather than on RGB bands. A transformation can therefore be applied to obtain the intrinsic color space components, and various linear and nonlinear techniques are available for this task. The transformed color vector is called selective color because this representation is more distinctive. For texture, different arrangements of pixels form different textures that provide descriptive information; each texture is represented by its closest texture prototype, which is called selective texture. In [167], it was pointed out that important objects are more likely to be in the center of an image. This principle is generally implemented by giving more weight to the central area and less weight to the area near the edges. In [168], the reweighting is applied to regions instead of pixels, because region-based calculations can resist noise to a certain level: the input image is over-segmented, the segmented regions become the basic units for calculating saliency, and the center prior is then added. However, salient objects are also frequently found near the image edges, so a principle that places more emphasis on nearby regions and less on distant ones was adopted [168].
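Two ingredients of selective contrast can be sketched concretely: a linear opponent color transform and a center-prior weight map. The transform below, O1 = (R - G)/√2, O2 = (R + G - 2B)/√6, O3 = (R + G + B)/√3, is one commonly used opponent color space and is not necessarily the transformation used in [167] or [168]; the Gaussian width `sigma` is a hypothetical value.

```python
import numpy as np


def opponent_colors(rgb):
    """Map an (H, W, 3) RGB image to a common opponent color space (O1, O2, O3)."""
    R, G, B = (rgb[..., c].astype(float) for c in range(3))
    o1 = (R - G) / np.sqrt(2)            # red-green channel
    o2 = (R + G - 2 * B) / np.sqrt(6)    # yellow-blue channel
    o3 = (R + G + B) / np.sqrt(3)        # intensity channel
    return np.stack([o1, o2, o3], axis=-1)


def center_prior(h, w, sigma=0.3):
    """Gaussian weight map: 1 at the image centre, decreasing towards the borders."""
    ys, xs = np.mgrid[0:h, 0:w]
    dy = (ys - (h - 1) / 2) / h          # distance from the centre, normalized by size
    dx = (xs - (w - 1) / 2) / w
    return np.exp(-(dx ** 2 + dy ** 2) / (2 * sigma ** 2))
```

For region-based reweighting as in [168], the same weight would be evaluated at each region's centroid rather than at every pixel.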

5.11 Weakly supervised object detection

Weakly supervised object detection plays a vital role in developing new computer vision systems and has received much attention in the past decade. Its goal is to learn precise object detectors using only image-level category labels [169]. In [170], a spatial-temporal network was proposed to predict saliency in 360° video automatically. The network is trained with weak supervision and is designed specifically for the 360° viewing sphere. An effective Cube Padding (CP) technique was proposed as follows: first, a perspective projection renders the 360° sphere onto the six faces of a cube; then, all six faces are concatenated and processed together in the convolution, pooling, and LSTM layers, exploiting the connectivity between the cube's faces.

5.12 Semi-supervised object detection

Semi-supervised learning (SSL) has attracted attention in recent years because it can improve model performance using unlabeled data. In [171], a simple SSL framework called STAC was proposed for object detection. Training has two stages. In the first stage, the model is trained on all the labeled data. In the second stage, the trained model generates pseudo labels for the unlabeled data, data augmentations are applied to the unlabeled images together with their pseudo labels, and a detector is trained on both labeled and unlabeled data by computing a supervised loss and an unsupervised loss.
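The second stage can be sketched as two small pieces: keeping only confident teacher detections as pseudo labels, and combining the supervised and unsupervised losses with a weight. The confidence threshold and the weight `lam` are hypothetical values, and the detection format (box, label, score) is an assumption for illustration.

```python
def filter_pseudo_labels(detections, threshold=0.9):
    """Keep teacher detections (box, label, score) whose confidence passes the threshold."""
    return [(box, label) for box, label, score in detections if score >= threshold]


def total_loss(supervised_loss, unsupervised_loss, lam=1.0):
    """Supervised loss on labeled images plus weighted loss on pseudo-labeled images."""
    return supervised_loss + lam * unsupervised_loss


detections = [((0, 0, 10, 10), "car", 0.95), ((5, 5, 8, 8), "person", 0.40)]
print(filter_pseudo_labels(detections))   # only the confident "car" box is kept
```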

5.13 Omni supervised object detection

Most semantic segmentation frameworks work with pinhole cameras and images with a narrow Field of View (FoV); when applied to omnidirectional imagery, their accuracy drops significantly. In [172], an omni-supervised learning framework was proposed for efficient CNNs. In the preparation stage, annotations were generated for unlabeled panoramas by a teacher architecture that ensembles the predictions of teacher models. In the training stage, multi-source pinhole images with manually annotated labels were blended with panoramic images with automatically generated labels. The proposed ERF-PSPNET is efficient and suitable for omnidirectional semantic segmentation.

Table 4 shows the performance and elapsed time of different deep learning models for SOD on different SOD datasets.

6 Experimental results and discussion

This section compares common design choices and suggests the best ones to guide future work.

6.1 Block-based vs. region-based

Block-based methods have some shortcomings. When the block size is large, the boundary of the salient object is not well preserved. In addition, high-contrast edges usually stand out, which interferes with salient object detection. Region-based methods were therefore introduced to overcome these shortcomings. They have led to a significant evolution in salient object detection for several reasons: the number of regions is much smaller than the number of pixels or blocks, which reduces the computational complexity, and intrinsic cues such as shape, which may be missed by block-based methods, are preserved by region-based methods. For example, among block-based methods, the AC-coefficient-based method [182] achieves 90.19% segmentation accuracy and the histogram-based method [182] achieves 91.67%, while the region-based mean-shift method [183] achieves 96.31% in terms of its best performance index. Although these figures are reported with different measures, they favor region-based methods, which are therefore the better choice.
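To make the complexity argument concrete, the sketch below computes a simplified global region-contrast saliency in the spirit of RC [185]: the saliency of each region is the sum of its color distances to all other regions, weighted by their pixel counts. It assumes a precomputed segmentation label map (for example from mean-shift), uses mean-color distance instead of RC's color-histogram distance, and omits RC's spatial weighting; `region_contrast_saliency` is a hypothetical name. The pairwise step costs O(R²) for R regions rather than O(N²) for N pixels.

```python
import numpy as np


def region_contrast_saliency(image, labels):
    """Simplified global region contrast (no spatial weighting).

    image:  (H, W, C) array of colors.
    labels: (H, W) int array with region ids 0..R-1.
    Returns an (H, W) saliency map normalized to [0, 1].
    """
    n_regions = int(labels.max()) + 1
    pixels = image.reshape(-1, image.shape[-1]).astype(float)
    ids = labels.ravel()
    counts = np.bincount(ids, minlength=n_regions).astype(float)
    # Mean color of every region, computed channel by channel.
    means = np.stack([np.bincount(ids, weights=pixels[:, c], minlength=n_regions)
                      for c in range(pixels.shape[1])], axis=1)
    means /= np.maximum(counts, 1.0)[:, None]
    # Pairwise color distances between regions: an (R, R) matrix.
    dist = np.linalg.norm(means[:, None, :] - means[None, :, :], axis=-1)
    # Saliency of region k = sum_i counts[i] * dist[k, i]; dist[k, k] is 0.
    region_saliency = dist @ counts
    peak = region_saliency.max()
    if peak > 0:
        region_saliency = region_saliency / peak
    return region_saliency[labels]  # broadcast region scores back to pixels


# Three dark pixels and one bright pixel, already grouped into two regions.
image = np.array([[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]])
labels = np.array([[0, 0, 0, 1]])
sal = region_contrast_saliency(image, labels)
print(sal.round(3))
```

In this toy input the small bright region receives the highest saliency, because it contrasts with a large region, while the large dark region only contrasts with one pixel.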

6.2 Intrinsic vs. extrinsic

Intrinsic cues are used more widely than extrinsic cues: the effectiveness of intrinsic cues has been validated in earlier work, whereas extrinsic cues are still less explored. In [184], saliency detection is performed both with a single-image method and with a co-saliency method. On single images, the cluster-based method [146] achieves an F-measure of 0.854, while RC [185] achieves 0.805. On the co-saliency dataset, however, the co-saliency method achieves an F-measure of 0.813. When an image pair in the co-saliency dataset already has a clear foreground, the second image adds little useful information. Extrinsic cues may therefore be ineffective in some cases, and their usefulness for all salient object detection problems has not been confirmed.

6.3 Deep learning vs. feature-based

In recent years, deep learning methods have attracted attention in the field of SOD because of their unprecedented results. Among feature-based methods, HOG achieves an accuracy of 46.0%, a recall of 81.48%, and an F-measure of 58.80%, while the deep-learning-based Fast YOLO achieves an accuracy of 76.13%, a recall of 43.28%, and an F-measure of 55.23% [115]. In addition, CNN-based models built on backbones such as ResNet, VGGNet, and Res2Net achieve high performance. In terms of MAE, the VGG-16 [178], ResNet-50 [186], Res2Net-50 [27], and ResNet-101 [33] based models achieve 0.048, 0.043, 0.05, and 0.065, respectively, and in terms of F-measure they achieve 0.932, 0.921, 0.905, and 0.813, respectively.
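The F-measure used throughout this section combines precision P and recall R as F_β = (1 + β²)·P·R / (β²·P + R). Many SOD papers set β² = 0.3 to emphasize precision, whereas β² = 1 gives the usual F1 score. The sketch below is a hedged illustration; the fixed binarization threshold of 0.5 is an assumption, since papers also report adaptive or maximum F-measure over all thresholds. As a consistency check, with β² = 1 the HOG and Fast YOLO figures quoted above give about 0.588 and 0.552, close to the reported F-measures, which suggests that the "accuracy" values there play the role of precision.

```python
import numpy as np


def f_beta(precision, recall, beta2=0.3):
    """F-measure with weight beta^2 (0.3 is common in SOD, 1.0 gives F1)."""
    denom = beta2 * precision + recall
    return 0.0 if denom == 0 else (1 + beta2) * precision * recall / denom


def precision_recall(saliency, ground_truth, threshold=0.5):
    """Binarize a [0, 1] saliency map and compare it with a binary mask."""
    pred = saliency >= threshold
    gt = ground_truth.astype(bool)
    tp = np.logical_and(pred, gt).sum()
    precision = tp / pred.sum() if pred.sum() > 0 else 0.0
    recall = tp / gt.sum() if gt.sum() > 0 else 0.0
    return float(precision), float(recall)


print(round(f_beta(0.46, 0.8148, beta2=1.0), 3))    # HOG: 0.588
print(round(f_beta(0.7613, 0.4328, beta2=1.0), 3))  # Fast YOLO: 0.552
```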

In [57], the proposed CVAE-based and ABP-based models achieve state-of-the-art performance. The CVAE-based model achieves an MAE of 0.026, an F-measure of 0.919, and an S-measure of 0.921, while the ABP-based model achieves an MAE of 0.027, an F-measure of 0.913, and an S-measure of 0.917.
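MAE, reported alongside the F-measure throughout this section, is the mean absolute difference between the saliency map and the binary ground truth, both scaled to [0, 1]; lower is better. A minimal sketch follows, assuming 8-bit maps are divided by 255 first. The S-measure, which scores structural similarity between the map and the ground truth, is more involved and is not sketched here.

```python
import numpy as np


def mae(saliency, ground_truth):
    """Mean absolute error between a [0, 1] saliency map and a binary mask."""
    s = np.asarray(saliency, dtype=float)
    g = np.asarray(ground_truth, dtype=float)
    if s.shape != g.shape:
        raise ValueError("saliency map and ground truth must have the same shape")
    return float(np.abs(s - g).mean())


# 8-bit inputs are rescaled to [0, 1] before comparison.
saliency_u8 = np.array([[255, 0], [128, 0]], dtype=np.uint8)
mask_u8 = np.array([[255, 0], [0, 0]], dtype=np.uint8)
print(mae(saliency_u8 / 255.0, mask_u8 / 255.0))
```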

In [26], the proposed PoolNet model outperforms all previous state-of-the-art approaches on six widely used SOD benchmarks. On the ECSSD dataset, the model achieves an F-measure of 0.945 and an MAE of 0.038, while on the HKU-IS dataset it achieves an F-measure of 0.935 and an MAE of 0.030.

In [56], experimental results show that the BASNet model outperforms 15 state-of-the-art methods on six datasets. On the ECSSD dataset, BASNet achieves an F-measure of 0.942 and an MAE of 0.037.

In [173], the HED-based architecture takes 0.022 s, which is much faster than other methods. It achieves an F-measure of 0.913 and an MAE of 0.045 on the HKU-IS dataset.

In [174], both high-level and low-level features are used for saliency detection, which improves performance. On the PASCAL-S dataset, the proposed model achieves an F-measure of 0.771 and an MAE of 0.121. On the ECSSD dataset, it achieves an F-measure of 0.867 and an MAE of 0.080.

In [175], the C2S-Net model achieves state-of-the-art results on five popular SOD datasets. On ECSSD, PASCAL-S, HKU-IS, DUTS-TE, and DUT-OMRON, C2S-Net decreases the MAE by 8.5%, 11.9%, 5.9%, 3.1%, and 4.1%, respectively, and improves the F-measure by 1.2%, 4.4%, 0.1%, 0.2%, and 2.7%, respectively.

In [176], the proposed bi-directional message passing method for salient object detection surpasses 13 state-of-the-art methods. On the ECSSD dataset, it achieves an F-measure of 0.928 and an MAE of 0.044; on the HKU-IS dataset, it achieves an F-measure of 0.920 and an MAE of 0.038.

The previous evaluation shows that deep learning models achieve competitive results. Deep learning models have a promising future in many computer vision problems, especially SOD, and further models are likely to be developed.

7 Conclusion

This paper presents the techniques used in the salient object detection field. These techniques are divided into two categories: feature-based models and deep-learning-based models. With powerful object detectors, object detection applications have spread to many fields, such as medicine, plants, remote sensing, and transportation. Various recent studies in this field were reviewed and analyzed. These studies show a significant superiority of deep learning techniques in terms of accuracy and performance. CNNs, deep neural networks, and Faster R-CNN have been used repeatedly in many robust object detection systems and have obtained state-of-the-art performance on various datasets in many of these studies. Nevertheless, several issues need to be addressed in the future. Panoramic panoptic segmentation could improve the performance of salient object detection, but there is very little research in this area, so it is a direction for future research. Also, most current research deals with images with simple backgrounds, whereas images with complex and cluttered backgrounds still need further study. RGB-D methods are a promising field, but they still face challenges such as low-quality or incomplete depth maps, and the RGB-D datasets for SOD are too small, so new large-scale RGB-D datasets are required for future research.

References

Goswami A, Dixit M (2020) An Analysis of Image Segmentation Methods for Brain Tumour Detection on MRI Images. In: 9th IEEE International Conference on Communication Systems and Network Technologies

Abdusalomov A, Mukhiddinov M, Djuraev O, Djuraev O, Whangbo TK (2020) Automatic Salient Object Extraction Based on Locally Adaptive Thresholding to Generate Tactile Graphics. Appl Sci

Borji A, Cheng M-M, Hou Q, Jiang H, Li J (2019) Salient Object Detection: A Survey. Computational Visual Media

Chen K, Wang Y, Hu C, Sh H (2020) Salient Object Detection with Boundary Information. In: 2020 IEEE International Conference on Multimedia and Expo (ICME)

Zhou LQ, Wang JY, Yu SY, Wu GG, Wei Q, Deng YB, Wu XL, Cui XW (2019) Artificial intelligence in medical imaging of the liver. World J Gastroenterol

Aganj I, Harisinghani MG, Weissleder R, Fischl B (2018) Unsupervised Medical Image Segmentation Based on the Local Center of Mass. Scientific Reports

Sakinis T, Milletari F, Roth H, Korfiatis P, Kostandy P, Philbrick K, Akkus Z, Xu Z, Xu D, Erickson BJ (2019) Interactive segmentation of medical images through fully convolutional neural networks. arXiv e-prints

Voronin V, Semenishchev E, Pismenskova M, Balabaeva O, Agaian S (2019) Medical image segmentation by combing the local, global enhancement, and active contour model. In: Anomaly Detection and Imaging with X-Rays (ADIX) IV. Baltimore, Maryland, United States

Borji A, Cheng M-M, Jiang H, Li J (2015) Salient Object Detection: A Benchmark. IEEE Transactions on Image Processing

Tsai C-C, Yang Y-H, Lin H-W, Wu B-X, Chang EC, Liu HY, Lai J-S, Chen PY, Lin J-J, Chang JS, Wang L-J, Kuo TT, Hwang J-N, Guo J-I (2020) The 2020 Embedded Deep Learning Object Detection Model Compression Competition for Traffic in Asian Countries. In: 2020 IEEE International Conference on Multimedia & Expo Workshops (ICMEW). London, United Kingdom

Castillo T JM, Arif M, Niessen WJ, Schoots IG, Veenland JF (2020) Automated Classification of Significant Prostate Cancer on MRI: A Systematic Review on the Performance of Machine Learning Applications. Cancers (Basel), pp 1–13

Shen Y, Wu N, Phang J, Park J, Liu K, Tyagi S, Heacock L, Kim SG, Moy L, Cho K, Geras KJ (2020) An interpretable classifier for high-resolution breast cancer screening images utilizing weakly supervised localization. arXiv

Papandrianos N, Papageorgiou E, Anagnostis A, Papageorgiou K (2020) Efficient Bone Metastasis Diagnosis in Bone Scintigraphy Using a Fast Convolutional Neural Network Architecture, Diagnostics (Basel), pp 1–17

Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, Lee C-H (2019) A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Scientific Reports, pp 1–19

Wang L, Lu H, Wang Y, Feng M, Wang D, Yin B, Ruan X (2017) Learning to Detect Salient Objects with Image-level Supervision. In: CVPR 2017

Vicente TFY, Hou L, Yu C-P, Hoai M, Samaras D (2016) Large-scale training of shadow detectors with noisily-annotated shadow examples. In: ECCV

Wang J, Li X, Hui L, Yang J (2018) Stacked Conditional Generative Adversarial Networks for Jointly Learning Shadow Detection and Shadow Removal. In: CVPR

Zhu J, Samuel KGG, Masood SZ, Tappen MF (2010) Learning to recognize shadows in monochromatic natural images. In: CVPR

Fan D-P, Cheng M-M, Liu J-J, Gao S-H, Hou Q, Borji A (2018) Salient objects in clutter: Bringing salient object detection to the foreground. In: European Conference on Computer Vision (ECCV)

Yang C, Zhang L, Lu H, Ruan X, Yang M-H (2013) Saliency Detection via Graph-Based Manifold Ranking. In: IEEE Conference on Computer Vision and Pattern Recognition

Li G, Yu Y (2015) Visual Saliency Based on Multiscale Deep Features. In: IEEE Conference on Computer Vision and Pattern Recognition

Shi J, Yan Q, Xu L, Jia J (2015) Hierarchical image saliency detection on extended CSSD. IEEE Trans Pattern Anal Mach Intell

Li Y, Hou X, Koch C, Rehg JM, Yuille AL (2014) The secrets of salient object segmentation. In: IEEE Conference on Computer Vision and Pattern Recognition

Movahedi V, Elder JH (2010) Design and Perceptual Validation of Performance Measures for Salient Object Segmentation. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition

Wu Z, Su L, Huang Q (2019) Cascaded Partial Decoder for Fast and Accurate Salient Object Detection. In: CVPR, pp 3907–3916

Liu J-J, Hou Q, Cheng M-M, Feng J, Jiang J (2019) A Simple Pooling-Based Design for Real-Time Salient Object Detection. arXiv:1904.09569v1

Gao S-H, Cheng M-M, Zhao K, Zhang X-Y, Yang M-H, Torr P (2019) Res2Net: A New Multi-scale Backbone Architecture. arXiv:1904.01169v2, pp 1–10

Ahmed A, Jalal A, Rafique AA (2019) Salient Segmentation based Object Detection and Recognition using Hybrid Genetic Transform. In: International Conference on Applied and Engineering Mathematics

Wang W, Shen J, Dong X, Borji A (2018) Salient Object Detection Driven by Fixation Prediction. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition

Alzahrani AJ, Afridi H (2019) Salient Object Detection: A Distinctive Feature Integration Model. arXiv

Mansourian L, Abdullah MT, Abdullah LN, Azman A, Mustaffa MR (2016) A Salient Based Bag of Visual Word Model (SBBoVW): Improvements toward Difficult Object Recognition and Object Location in Image Retrieval. KSII Transactions on Internet and Information Systems (TIIS) 10:769–786

Wang Q, Zhang L, Li Y, Kpalma K (2020) Overview of deep-learning based methods for salient object detection in videos. Pattern Recognition 104:1–16

Hou Q, Cheng M-M, Hu X, Borji A, Tu Z, Torr P (2018) Deeply Supervised Salient Object Detection with Short Connections. IEEE Transactions on Pattern Analysis and Machine Intelligence

Pancha P, Raman VC, Mantri S (2019) Plant Diseases Detection and Classification using Machine Learning Models. In: 2019 4th International Conference on Computational Systems and Information Technology for Sustainable Solution (CSITSS)

Prihandoko P, Bertalya B, Setyowati L (2020) City Health Prediction Model Using Random Forest Classification Method. In: 2020 Fifth International Conference on Informatics and Computing (ICIC)

Jackins V, Vimal S, Kaliappan M, Lee MY (2020) AI-based smart prediction of clinical disease using random forest classifier and Naive Bayes. The Journal of Supercomputing

Mourad A, Afifi A, Keshk AE (2020) Automated Brain Tumor Segmentation in MRI using Superpixel Over-segmentation and Classification. In: 2020 21st International Arab Conference on Information Technology (ACIT)

Das D, Singh M, Mohanty SS, Chakravarty S (2020) Leaf Disease Detection using Support Vector Machine. In: 2020 International Conference on Communication and Signal Processing (ICCSP)

Bingzhen Z, Xiaoming Q, Hemeng Y, Zhubo Z (2020) A Random Forest Classification Model for Transmission Line Image Processing. In: The 15th International Conference on Computer Science & Education (ICCSE 2020)

Aruraj A, Alex A, Subathra M, Sairamya N, George ST, Ewards SV (2019) Detection and Classification of Diseases of Banana Plant Using Local Binary Pattern and Support Vector Machine. In: 2019 2nd International Conference on Signal Processing and Communication (ICSPC)