Abstract

Augmented Reality (AR) based technology cannot yet be properly implemented in oral and maxillofacial surgery to visualise narrow areas, such as blood vessels and root canals, during jaw surgery. Image registration is considered the major limitation of using AR in these types of surgeries, and it reduces the accuracy of visualising the narrow areas. In this research, we propose a Correntropy-based scale ICP algorithm as a solution to improve image registration during jaw surgery. In contrast to the state-of-the-art solution, which relies on Euclidean distance measurement, Correntropy is used here to minimise the error metric of the ICP algorithm. This decreases the registration error, increases the video accuracy and reduces the processing time simultaneously. The proposed system includes Enhanced Tracking Learning Detection (TLD), which is used as an occlusion removal algorithm in the intra-operative stage of the AR-based jaw surgery system. A Modified Correntropy-based enhanced ICP (MCbeICP) algorithm is proposed for the system’s pose-refinement phase. The MCbeICP algorithm has a new function to process point set registration in the presence of heavy noise and outliers, which overcomes the poor performance of the ICP algorithm on noisy point sets. Furthermore, the algorithm considers a scale factor to register point sets with different scales between the real-time video and the sample models. As a result, the pose refinement stage gives an improved result in terms of registration accuracy and processing time.
The samples, which were taken from the upper (maxillary) and the lower (mandible) jaw bone, show that the proposed algorithm improves the alignment accuracy (overlay error) from 0.23-0.35 mm to 0.21-0.29 mm and increases the processing speed from 8-12 frames per second (fps) to 10-14 fps compared to the state of the art. The proposed augmented reality (AR) system is focused on overlay accuracy and processing time. Finally, this study addresses the limitation of image registration in AR by using the modified Correntropy-based enhanced ICP algorithm, so that AR can be implemented successfully in oral and maxillofacial surgery.

1 Introduction

Jaw surgery is performed to correct defects or misalignment, or to treat diseases of the jaw or teeth. These procedures may include implantation, resection, cutting and drilling [1]. The main difficulty in jaw surgery is the narrow space available for visualising the surgical area inside the patient’s mouth. It is challenging for surgeons to properly view the area of interest, which creates a risk of cutting nerve channels and tooth roots while performing dental surgery. Traditionally, jaw surgery follows a plan that surgeons prepare manually from a CT scan. This process is insufficient and inaccurate because it depends on the surgeons’ imagination. To reduce the surgeons’ workload, 2D monitors were introduced into the operating room to visualise the surgical area. This improved the surgical procedure, but surgeons were still required to look at a screen placed away from them for surgical navigation. To overcome these limitations, augmented reality (AR) technology was introduced for surgical navigation [16].

Augmented Reality is a recent technology applied to surgery in the medical field. Its key point is to align a model image with the video of the real-time environment. This process generates a 3-dimensional (3D) view of the surgical scene, which gives the surgeon a clear view of the surgical area. The surgeons can then perform different tasks, such as cutting, identifying the position for drilling, and locating the teeth roots and nerve channels, with other useful information about the surgical area at hand [16].

Despite its many advantages, Augmented Reality based surgical navigation is still under research because of several limitations of this technology. The system is not highly accurate and takes a long processing time. The major issues are image registration, depth perception, accuracy, processing time and occlusion. AR can only be adequately implemented in surgical navigation by achieving high accuracy and a very short processing time.

Currently, three different categories of AR-based surgical navigation are being used in the medical sector: see-through, video-based, and projection-based navigation. In terms of registration technique, these categories can be classified as marker-based or marker-free. Marker-free AR-based navigation is gaining attention in the field of surgery because it eliminates the need for bulky trackers and has many advantages over marker-based navigation [24]. The best marker-free navigation system has achieved a video accuracy of 0.23~0.35 mm in terms of overlay error and a processing speed of 8-12 frames per second [1].

This paper aims to improve video accuracy and processing time. Image registration is the key point of the augmented reality system. The model image obtained from the pre-operative stage is overlaid on the image of the intra-operative environment. By doing this, the visualisation of narrow areas and root canals is enhanced, which helps surgeons to have a clear view of the surgical area. We propose a solution for image registration that introduces a Correntropy-based ICP algorithm to deal with the statistical outliers that affect the ICP algorithm, thereby enhancing the pose refinement stage (Fig. 1).

The paper is organised as follows: Section 2 reviews the literature, covering the improvements and limitations of current solutions in dental surgery and related fields that use AR technology. Section 2.1 contains a detailed overview of the current best solution. Section 3 presents the proposed solution, including the proposed model together with the flowchart and pseudocode of the proposed formula. Section 4 discusses the techniques used for testing the jaw samples of the maxilla and mandible areas. Section 5 presents a comparison between the state of the art and the proposed system. Finally, Section 6 concludes this work.

2 Literature review

Dental surgery and related fields using augmented reality technology have drawn a large amount of research attention. In [16], the pose refinement stage of the AR-based jaw surgery system is improved by proposing an enhanced ICP with a rotational matrix and translation vector (RMaTV). RMaTV is presented to reduce the geometrical error of the state-of-the-art solution [24]. A two-stereo camera setup was used to improve depth perception, giving an accuracy of 0.30 to 0.40 mm and a processing speed of 7 to 10 frames per second. However, the system does not consider the cutting error [17]. The Tracking Learning Detection (TLD) phase of the intra-operative stage is enhanced in [1] by a noise removal technique that reduces the noise in live video frames. The authors also used an occlusion removal method, achieving an image overlay error of 0.23~0.35 mm and a processing speed of 8~12 frames per second. On the other hand, it does not consider noise and outliers during image registration, which decreases the robustness of the registration [7]. In [17], the system is improved by a volume subtraction technique that reduces the cutting error of the state-of-the-art solution [16]. The authors used an optical camera and recorded the history of the cut; the remaining area is calculated with the volume subtraction technique, and a Markov random field surface reconstruction algorithm is used to calculate the remaining surface to be cut. With this method, video accuracy reaches 0.40 to 0.55 mm, compared with 0.55 to 0.64 mm in the previous method, and the processing speed drops from 12~13 to 9~10 frames per second. However, it does not provide the direction, the depth, or the remaining part of the bone that needs to be cut as guiding information. F. Meng [15] improved the state-of-the-art solution of [20] by proposing multi-view stereo vision to cover and reconstruct the maximum part of the surgical area, using an optical camera with coarse and fine registration to achieve image registration. This brought the concept of a marker-less AR system into the operating room. Still, this system does not provide a complete guideline for surgical navigation.

Jiang et al. [11] improved on the state-of-the-art solutions [26, 28]. They suggested point cloud-based image registration with ICP for real-time registration using a 3D image overlay. This reduced the time taken for surgery (P<.0.5), and the pre-operative 3D model was overlaid accurately on the surgical site. Even though the accuracy and processing time of the surgery improved, the study does not provide complete information on the feasibility of 3D augmented reality-guided navigation in implant dentistry. J. Hettig et al. [10] improved the state-of-the-art solution of [2]. They described an augmented visualisation box (AVB), a parameterisable framework for the objective evaluation of AR visualisation techniques that avoids registration error in a surgical environment. On the other hand, the use of the AVB was not considered in this system, so new problems arose from the depth cues of the stereoscope and the effect of deformation. Wang et al. [24] presented an improvement on the state-of-the-art solution described in [26]. They proposed a marker-less video see-through AR system with a simple clinical setting, which incorporated an aspect graph with the TLD tracking framework to make the system faster. This achieved a target overlay error of less than 1 mm and a processing speed of 3-5 frames per second using the ICP algorithm in the pose refinement stage. However, this system uses optical cameras that cannot provide depth perception, and it does not consider geometric error or cutting error. Subsequently, Wang et al. [19] improved the pre-operative stage of the state-of-the-art solution in [24]. They used an intraoral 3D scanner to overcome the shape inconsistency problem, which directly affects image registration. This method is independent of the patient’s movement, and the proposed system is practical and non-invasive in oral and maxillofacial surgery. The solution provides an average error of less than 0.50 mm with a time cost of less than 0.5 s. However, this method is applicable only to cases with few missing teeth and metals.

Zhu et al. [30] improved the state-of-the-art solutions of [18, 29] by proposing an occlusal splint compounded with a fiducial marker (OSM) to decrease positional error. Their solution uses a dental reference splint fixed to a real rapid prototyping model, with 3D dental casts used for the registration between the imaging data and the patient. They improved the positional error of this navigation system to 0.96 ± 0.51 mm. However, this system is marker-based. Q. H. Gao et al. [9] enhanced the state-of-the-art solutions of [8, 12] by introducing a new algorithm for recognition and matching in the Iterative Closest Point (ICP) algorithm. The model-to-scene transformation was applied through point cloud fusion to improve the registration accuracy. They used a Gaussian weight function with bilateral filtering and outlier removal to enable repeatable detection of 3D descriptors. This method reduced the registration error. However, this system does not provide a guideline for complete surgical navigation. Ma et al. [13] improved the state-of-the-art solution introduced in [21]. They proposed an accurate cone-beam computed tomography (CBCT) patient registration method to achieve the required dental implant accuracy. The registration between pre-operative and intra-operative data is done using a registration device, which increased the implant accuracy and registration accuracy of the system. The results show a mean target error of 1.25 mm compared to 1.63 mm in the previous system; similarly, the mean angle error is 4.03 compared to 6.10 in the previous system. Although the registration and implant accuracy are improved, this method is marker-based. Suenaga et al. [22] improved the visualisation of the state-of-the-art solution in [23] by proposing stereo vision for tracking and marker-less registration. They established a new marker-less AR registration system that does not need any additional display device for surgeons to see the superimposed 3D image. Unlike other research, this system was tested on a human volunteer for the first time. They used an incisal margin-based contour matching technique for registration and achieved a target registration error of 0.63 mm and a processing speed of 5 frames per second. However, this method depends on a stereo camera, which requires maintenance and re-calibration.

Mahmoud et al. [14] improved the visualisation of the state-of-the-art solution illustrated in [3]. They proposed visual simultaneous localisation and mapping (SLAM) to visualise a 3D pre-operative model in the intra-operative environment. They used a commercial tablet PC with an attached camera as an additional hardware device, eliminating the use of external tracking devices. The proposed system provides robustness, accuracy and a short processing time, with a mean target registration error (TRE) of approximately 5 mm. However, this system requires an additional external device, the tablet PC, which can make the work tedious. Wang et al. [26] improved the AR registration of the state-of-the-art solution [27]. They proposed a marker-free image registration technique that uses a stereo camera and minimises the error caused by patient movement. The solution provides a tracking accuracy of 0.70 mm (overlay error) by using the ICP (Iterative Closest Point) algorithm. However, the geometric error and the geometric fidelity (i.e. the generation of a 3D image of the organs as they are in reality) are still not taken into account. The authors of [4, 5] improved the system’s navigation by improving the resection accuracy of the state-of-the-art solution in [5]. They proposed enhanced navigation during bone tumor surgery with AR technology. They provided a cheaper AR system for the visualisation of the resection plane and a safety margin of about 9.85 ± 1.02 mm by using a portable tablet PC device, increasing the system’s accuracy. However, this system fails to provide acceptable accuracy when the patient moves.

2.1 State of the art

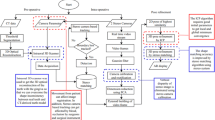

The main features of the existing system are shown inside a broken blue line, and its limitations are shown inside a broken red line, in Fig. 2. The authors of [1] proposed an enhanced TLD algorithm with occlusion removal and noise-free real-time video frames with the help of the Modified Kernel Non-Local Means (MK-NLM) filter algorithm, which reduces the geometrical error. An optical camera is used to reduce the processing time because the 3D view does not need to be converted to 2D [1]. That work improved on earlier research by using an optical camera to take the real-time video. Correspondingly, the TLD algorithm is enhanced by adding the MK-NLM filter, and ICP with the RMaTV algorithm is used for real-time registration [1]. This system consists of three stages: the pre-operative stage, the intra-operative stage and the pose refinement stage, as shown in Fig. 2. The results achieved by this system are a video accuracy of 0.23 ~ 0.35 mm in terms of overlay error and a processing speed of 8 ~ 12 frames per second.

Block Diagram of the State-of-the-Art System [1]. (The best features are shown in blue borders and the limitations of state of the art are shown in the red border)

Pre-operative environment

In this work, CT scan data of the patient is taken and segmented. The segmented data are used to reconstruct the 3D model for the area of concern, and an aspect graph is built as an output of this stage. The camera parameter is considered to get the image from a different viewpoint to overlay images from different angles in the intraoperative stage.

Intra-operative environment

Real-time 2D video is taken with an optical camera, which eliminates the need to convert 3D video to 2D and reduces the processing time. In addition, the noise that occurs due to external factors during the operation is filtered using the Modified Kernel Non-Local Means Filter (MK-NLM), which maintains the quality of the real-time video. A hierarchical model of each filtered 2D video frame is created so that the lowest-resolution image is at the top of the model. Starting from the lowest-resolution image decreases processing time because it requires little time to analyse. The patient’s body is tracked and detected using the TLD (Tracking Learning Detection) algorithm with the help of a bounding box. Finally, after the pre-operative and intraoperative stages, the best pose is obtained.
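The hierarchical model described above can be pictured as an image pyramid. The following is a minimal sketch, assuming that each coarser level is produced by averaging 2x2 pixel blocks; the downsampling filter is not stated in the text, and the function names `downsample_2x` and `build_pyramid` are hypothetical.

```python
def downsample_2x(image):
    """Halve width and height by averaging each 2x2 block.

    `image` is a list of rows of grey values. A trailing odd row or
    column is dropped. The 2x2 averaging is an assumption, not the
    filter used in the paper.
    """
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [
        [
            (image[2 * r][2 * c] + image[2 * r][2 * c + 1]
             + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def build_pyramid(image, levels):
    """Return the levels ordered from coarsest (index 0, the top) to finest."""
    pyramid = [image]
    for _ in range(levels - 1):
        finest_so_far = pyramid[-1]
        if len(finest_so_far) < 2 or len(finest_so_far[0]) < 2:
            break  # cannot halve any further
        pyramid.append(downsample_2x(finest_so_far))
    pyramid.reverse()  # lowest resolution first, as in the text
    return pyramid


# Hypothetical 4x4 frame used only to exercise the functions.
frame = [[r * 4 + c for c in range(4)] for r in range(4)]
pyramid = build_pyramid(frame, levels=3)
for level in pyramid:
    print(len(level), "x", len(level[0]))
```

Searching the small top level first and refining on the finer levels is what keeps the per-frame cost low: most candidate poses are rejected before the full-resolution frame is ever examined.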

Pose-refinement

The best-match pose obtained intraoperatively is converted to a 3D image. Then, the 3D image, which is overlaid on the surgical area, is refined using the ICP algorithm. The ICP algorithm has been enhanced by applying the RMaTV algorithm, which removes the geometric error introduced during the 2D-to-3D conversion [16]. With this model, the enhanced ICP algorithm gives poor image registration results when the point sets contain many outliers and much noise. To obtain accurate point set (image) registration, it is important to eliminate the interference of outliers and noise and to suppress unimportant points, so that only the original information of the point pairs is retained [7]. The model proposed in [1] achieved a video accuracy of 0.23 ~ 0.35 mm and a processing speed of 8 ~ 12 frames per second [1]. The RMaTV algorithm is implemented in level 6 of ICP to refine the geometric error, as shown in Eq. 1 [16]. However, the accuracy can still be increased with better alignment techniques, and the processing time can be reduced.

C(k) is a correspondence of the corresponding points of the offline mode sample and online video frames

where

- \({\overrightarrow{\text{x}}}_{\text{i}}\) denotes the point set of the model shape
- \({\mathrm{y}}_{\mathrm{c}\left(\mathrm{t}\right)}\) denotes the point set of the data shape
- R is a rotation matrix.
- t is a translation vector.
- \({\text{y}}_{\text{c}\left(\text{t}\right)}\) is the corresponding point of \({\overrightarrow{\text{x}}}_{\text{i}}\)

Error metric defines the objective function minimised in every iteration of the ICP algorithm in level 5 by the technique of point to point, as shown in Eq. 2 [16]. This procedure slows down the system and is prone to error.

Here, the model point set is \(Q = (q_1, q_2, q_3, \ldots, q_n)\), the data point set is \(P = (p_1, p_2, p_3, \ldots, p_n)\), R is a rotation matrix and \(\vec{T}\) is a translation vector.
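For reference, the point-to-point error metric commonly minimised by ICP can be written in the notation just introduced. This is the textbook least-squares form, offered as an assumption about the general shape of such a metric rather than a reproduction of Eq. 2; it also assumes that each \(q_i\) has already been matched to \(p_i\).

\[
E(R, \vec{T}) = \frac{1}{n} \sum_{i=1}^{n} \left\lVert q_i - \left( R\,p_i + \vec{T} \right) \right\rVert^{2}
\]

Because every residual enters squared, a single badly matched pair can dominate the sum, which is the sensitivity to outliers that the proposed system sets out to address.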

2.2 Flowchart of enhanced ICP (Fig. 3)

3 Proposed system

We reviewed different methods used in the field of AR-based surgery and analysed the advantages and limitations of each. We also reviewed the well-known issues that exist in AR-guided surgery: video accuracy, processing time, depth perception, image registration and noise. We selected the best of the papers reviewed, [1], as the foundation of our proposed solution. The Correntropy-based scale ICP algorithm is proposed in this research to eliminate noise and outliers and to obtain high registration accuracy by suppressing unimportant information. By comparison, the RMaTV algorithm does not consider the noise and outliers that may exist in the point set, which affects the registration accuracy. The ICP algorithm needs noise-free point sets to achieve an accurate rigid registration. Correntropy is used instead of the Euclidean distance in level 6 of the ICP algorithm because Correntropy has the property of outlier rejection [7].

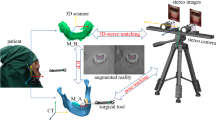

Additionally, a real-time 2D video is taken using an optical camera in our proposed solution. Thus, the need to convert 3D video to 2D is eliminated and the processing time is decreased. This is considered another advantage of our proposed solution compared to the state-of-the-art solution. The one-to-one correspondence for all the point sets further increases the speed of the algorithm, and the accuracy of the ICP algorithm is increased as well. The proposed system (shown in Fig. 4) contains pre-operative, intra-operative and pose-refinement stages, as described in the next sections.

Pre-operative environment

In this stage, the CT scan data of the patient is taken and segmented. The 3D model for the area of concern is reconstructed from the segmented data, and an aspect graph is built as the output of this stage. The camera parameters are considered in order to obtain images from different viewpoints, so that images can be overlaid from different angles in the intraoperative stage.

Intra-operative environment

As mentioned before, real-time 2D video is taken using an optical camera. Thus, the necessity of converting 3D video to 2D is eliminated, and the processing time decreases. The noise that occurs due to external factors during the operation, such as the vibration of surgical machinery and the sensors used to monitor the patient’s health condition, is filtered using the Modified Kernel Non-Local Means Filter (MK-NLM), which maintains the quality of the real-time video. A hierarchical model of each filtered 2D video frame is created so that the lowest-resolution image is at the top of the model. Starting from the lowest-resolution image decreases processing time because it requires little time to analyse. The patient’s body is tracked and detected using the TLD (Tracking Learning Detection) algorithm with the help of a bounding box. Subsequently, the images attained from the pre-operative and intraoperative stages are matched online, and the best pose is obtained.

Pose-refinement

The 2D image obtained from the previous stage is converted here into a 3D image. The initial alignment is completed using the Ulrich method. In contrast to the state-of-the-art solution, Correntropy is used in our proposed system instead of the Euclidean distance to improve the accuracy and speed of the augmented video. The RMaTV algorithm implements the minimisation of the error metric. The refined pose is overlaid on the video taken in real time, which creates the augmented reality-based video that guides surgeons in visualising the root canals, nerves and other hidden structures in the surgical area. The error metric defines the objective function, which is minimised in every iteration of the enhanced ICP algorithm. The maximum correntropy criterion is used as the new objective function. It removes unnecessary noise and outliers from the point set, which improves the accuracy and processing time of the system [7].

The Correntropy between any two points, say X and Y, is given by

X undergoes the rigid transformation and aligns with data set Y, so a scale transformation function is needed. The variable ‘s’ in Eq. 5 is the scale factor that allows the registration of point sets with different scales [6].

To handle the registration of point sets with different scales, a scale factor is introduced in the algorithm. Scale-based registration makes the system more robust and reduces its processing time.

Applying this transformation to data set X and merging Eqs. (3), (4) and (5), the modified ICP equation can be written as Eq. 6, so the new objective function is based on the Correntropy of the correspondences. The Correntropy-based ICP algorithm therefore removes the noise and outliers from the point set, which increases the robustness and decreases the processing time of the proposed system.

Where

- Mc(k) denotes modified correspondence.
- k is the number of iterations.
- s is a scale factor.
- \({\overrightarrow{\text{x}}}_{\text{i}}\) denotes the point set of model shape
- \({\text{y}}_{\text{c}\left(\text{t}\right)}\) denotes the point set of data shape
- R is a rotation matrix.
- t is a translation vector.
- \({\text{y}}_{\text{c}\left(\text{t}\right)}\) is the corresponding point of \({\overrightarrow{\text{x}}}_{\text{i}}\)
- σ is a kernel bandwidth.
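As a point of reference, Correntropy-based registration is usually formulated with a Gaussian kernel. The following is a sketch in the notation of the list above, assuming the standard maximum correntropy form found in the correntropy literature; it is not a reproduction of Eq. 6. The normalising constant of the kernel is dropped because it does not change the maximiser, and the correspondence is written with an explicit index as \(y_{c(i)}\), which is an assumption about the intended indexing.

\[
V_{\sigma}(X, Y) = \mathrm{E}\left[\kappa_{\sigma}(X - Y)\right], \qquad \kappa_{\sigma}(e) = \exp\left(-\frac{\lVert e \rVert^{2}}{2\sigma^{2}}\right)
\]

\[
\max_{s,\,R,\,t} \; \sum_{i=1}^{N} \exp\left(-\frac{\lVert s R \vec{x}_i + t - y_{c(i)} \rVert^{2}}{2\sigma^{2}}\right)
\]

A pair whose residual is large relative to σ contributes almost nothing to the sum, which is the outlier-rejection property referred to above. As σ grows, maximising this sum behaves increasingly like ordinary least squares.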

3.1 Area of improvement

The proposed equation is used in the matching phase of the ICP algorithm. In this work, we aim to suppress the unnecessary points and keep those points that carry the original information. This is done by using Correntropy, instead of the Euclidean distance, to measure shape similarity in the error metric stage of the ICP algorithm, which eliminates the noisy points that affect the accuracy and processing time of the proposed system [7].

The enhanced ICP algorithm has six major steps that are followed for the registration of an image: selection, matching, weighting, rejecting, assigning an error metric and minimising the error metric. We worked on the matching stage of the ICP algorithm to improve the system’s accuracy and processing time.

3.2 Why modified scale based Correntropy based ICP algorithm?

The ICP algorithm is highly accurate and fast for rigid point set registration. On the other hand, it requires a noise-free point set for robust performance. To solve this problem, the maximum correntropy criterion (MCC) is introduced as the similarity measure. Unlike the traditional Euclidean distance, Correntropy suppresses noise and outliers. The algorithm converges for any given initial parameters [7]. To handle the registration of point sets with different scales, a scale factor is introduced in the algorithm, which increases the accuracy and decreases the processing time of the system [7].
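The effect of replacing the Euclidean error with a Correntropy-style measure can be illustrated with a small 2D sketch. It assumes known one-to-one correspondences (the closest-point matching step of ICP is omitted) and uses iteratively reweighted least squares with Gaussian weights, a common way of maximising correntropy-type objectives that is not necessarily the update used in this paper. Points are stored as complex numbers so that scale and rotation combine into one complex factor; the names `weighted_similarity_fit` and `correntropy_fit` and all data values are hypothetical.

```python
import cmath
import math


def weighted_similarity_fit(xs, ys, ws):
    """Weighted least-squares fit of ys ~ c * xs + t.

    Points are complex numbers (x + 1j*y). The complex factor c encodes
    the scale, abs(c), and the rotation, cmath.phase(c); t is the
    translation.
    """
    total = sum(ws)
    x_bar = sum(w * x for w, x in zip(ws, xs)) / total
    y_bar = sum(w * y for w, y in zip(ws, ys)) / total
    num = sum(w * (x - x_bar).conjugate() * (y - y_bar)
              for w, x, y in zip(ws, xs, ys))
    den = sum(w * abs(x - x_bar) ** 2 for w, x in zip(ws, xs))
    c = num / den
    return c, y_bar - c * x_bar


def correntropy_fit(xs, ys, sigma, iterations=10):
    """Refit repeatedly, weighting each pair by a Gaussian of its residual."""
    c, t = weighted_similarity_fit(xs, ys, [1.0] * len(xs))  # plain least squares
    for _ in range(iterations):
        ws = [math.exp(-abs(c * x + t - y) ** 2 / (2.0 * sigma ** 2))
              for x, y in zip(xs, ys)]
        c, t = weighted_similarity_fit(xs, ys, ws)
    return c, t


# Hypothetical data: a 3x3 grid scaled by 1.5, rotated 30 degrees, shifted.
true_c = cmath.rect(1.5, math.radians(30.0))
true_t = complex(2.0, 1.0)
model = [complex(i, j) for i in range(3) for j in range(3)]
data = [true_c * x + true_t for x in model]
data[-1] += complex(10.0, 10.0)  # one gross outlier

ls_c, ls_t = weighted_similarity_fit(model, data, [1.0] * len(model))
mcc_c, mcc_t = correntropy_fit(model, data, sigma=1.0)
print("least-squares scale:", abs(ls_c))
print("correntropy scale:  ", abs(mcc_c))
```

In this toy setting the single outlier pulls the least-squares estimate well away from the true scale, while the reweighted fit assigns it a negligible weight and recovers the transformation of the remaining points. The bandwidth σ controls how far a point may lie from the current fit before it is effectively ignored.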

3.3 Flowchart of the proposed algorithm (Fig. 5)

4 Results

Our proposed system is implemented in Matlab-R2019a. Twenty sample videos and 20 CT scans of jaw samples were collected for males and females of different age groups, as shown in Table 1 below. These video samples and CT data were available online. The performance of the system was measured in terms of accuracy and processing time. The lower part of the oral and maxillofacial region is called the mandible, and the upper part is called the maxilla. The mandible has three sub-parts, namely the lower left mandible, lower right mandible and lower front mandible. Similarly, the maxilla has three sub-parts, namely the upper left maxilla, upper right maxilla and frontal maxilla. Our proposed system works in three main steps (Table 2).

These are the pre-operative steps, the intra-operative steps, and refinement with the RMaTV and Correntropy-based scale ICP algorithms. In the pre-operative stage, a CT scan is taken to create the aspect graph and 3D model, which are used in the intraoperative stage for online matching. The aspect graph is composed in 42 s. In the intraoperative stage, the real-time video is captured using the optical camera, and video frames are extracted from it. A hierarchical model is built from each frame to assist with the online matching process. Then, the frame selected on the basis of resolution is passed through the TLD algorithm with the bounding box technique to reduce the processing time. This process continues until the highest-resolution image is reached. The enhanced TLD algorithm handles the occlusion caused by, for example, blood, the surgeon’s hand and the surgical instrument. Ulrich’s method is used for the initial alignment [25]. With Ulrich’s method the image registration is automatic, and it can handle the interruption caused by the patient’s movement.

In the pose refinement stage, the two images provided intraoperatively are taken for refinement. The Correntropy-based ICP algorithm is used to remove the noise and outliers in the point sets obtained from online matching, which makes the system more robust. If the video is interrupted, the algorithm can still track back and continue the operation, and the accuracy of the initial image registration remains unchanged. This increases the accuracy of the image registration process. Because Correntropy is used instead of the Euclidean error metric, the processing time is decreased (as seen in Fig. 6). Accuracy and speed are the two main factors, and they are determined by the image overlay error and the system’s processing time. The overlay error is the difference in the superposition of the projected offline 2D image on the real-time video. The processing time is defined as the total number of image frames processed by the system in a given timeframe.
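The two measurements can be made concrete with a short sketch. It assumes that the overlay error is taken as the mean Euclidean distance between corresponding landmark points of the projected model and the video frame, which is one plausible reading of the definition above rather than the paper's stated procedure; the function names and sample values are hypothetical.

```python
import math


def mean_overlay_error(projected, observed):
    """Mean Euclidean distance between corresponding points.

    Units follow the input coordinates (for example millimetres).
    """
    distances = [math.dist(p, q) for p, q in zip(projected, observed)]
    return sum(distances) / len(distances)


def frames_per_second(frame_count, elapsed_seconds):
    """Processing speed as frames handled per second of wall-clock time."""
    return frame_count / elapsed_seconds


# Hypothetical landmark positions in millimetres (not from the paper).
projected = [(0.0, 0.0), (3.0, 4.0)]
observed = [(0.0, 0.0), (0.0, 0.0)]
print(mean_overlay_error(projected, observed))
print(frames_per_second(120, 10.0))
```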

The experiments are conducted in MATLAB 2019a and run on a mac PC with AMD Intel Iris Plus Graphics 640 1536 MB APU 2.3 GHz Intel Core i5 CPU and 8.0 GB RAM. The confidence interval, along with the mean and standard deviation of the correlation coefficient and processing time results, is observed for the image samples of both the state-of-the-art and the proposed solutions. The confidence interval provides a range of reasonable values for our measurement metrics, i.e., correlation coefficient and processing time. The confidence interval is calculated in Microsoft Excel using the Confidence statistical function. Those parameters are measured by the MATLAB confidence score function (Eq. 7 shows how to calculate it).
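The confidence interval calculation can be sketched as follows, assuming the normal-distribution form that Excel's CONFIDENCE function computes (half-width = z × standard deviation / √n). This is an illustration of that formula rather than a reproduction of Eq. 7, and the sample values are hypothetical, not results from the experiments.

```python
import math
import statistics


def confidence_half_width(alpha, std_dev, size):
    """Half-width of a normal-based confidence interval for a mean.

    Mirrors the arguments of Excel's CONFIDENCE(alpha, standard_dev, size).
    """
    z = statistics.NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return z * std_dev / math.sqrt(size)


def mean_confidence_interval(samples, alpha=0.05):
    """Return (lower, upper) bounds around the sample mean."""
    mean = statistics.fmean(samples)
    std_dev = statistics.stdev(samples)  # sample standard deviation
    half_width = confidence_half_width(alpha, std_dev, len(samples))
    return mean - half_width, mean + half_width


# Hypothetical processing speeds in frames per second (not from the paper).
fps_samples = [10.0, 11.0, 12.0, 13.0, 14.0]
low, high = mean_confidence_interval(fps_samples)
print(low, high)
```

With only a handful of samples, a Student's t quantile would give a wider and more cautious interval than the normal quantile used here.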

The simulation tests the proposed algorithm in MATLAB using the video samples collected from different online sources for different gender and age groups. The experiment was carried out for both the proposed and the state-of-the-art solutions, and the resulting reports and graphs were compared. These reports and graphs are explained in more detail below. Figure 7 gives a detailed description of the accuracy of the proposed system compared to the state-of-the-art solution for the maxillary jaw. Figure 8 shows the difference between the processing times of the state-of-the-art and proposed solutions. Similarly, Fig. 9 gives a detailed description of the accuracy of the proposed system compared to the state-of-the-art solution for the mandibular jaw bone. Figure 10 shows the difference between the processing times of the state-of-the-art and proposed solutions.

The result of accuracy in the maxillary jaw bone part. (a) The first twosome bar represents the image registration accuracy in terms of overlay average error (b) The second twosome bar represents the average accuracy in terms of image overlay error. (c) The third twosome bar represents the accuracy in terms of overlay error by the movement of the patient (d) The last twosome bar represents the overlay accuracy in terms of overlay error by the movement of the instrument

Fig. 8 Processing time results for the maxillary jaw bone. (a) The first pair of bars represents the average processing time of image registration. (b) The second pair represents the processing time for image overlay. (c) The third pair represents the processing time after movement of the patient. (d) The last pair represents the processing time after movement of the instrument.

Fig. 9 Accuracy results for the mandibular jaw bone. (a) The first pair of bars represents the image registration accuracy in terms of average overlay error. (b) The second pair represents the average accuracy in terms of image overlay error. (c) The third pair represents the accuracy in terms of overlay error caused by movement of the patient. (d) The last pair represents the overlay accuracy in terms of overlay error caused by movement of the instrument.

Fig. 10 Processing time results for the mandibular jaw bone. (a) The first pair of bars represents the average processing time of image registration. (b) The second pair represents the processing time for image overlay. (c) The third pair represents the processing time after movement of the patient. (d) The last pair represents the processing time after movement of the instrument.

Tables 3, 4, 5, 6, 7, and 8 show the maxillary and mandibular jaw results for different age groups and genders. The green border in these tables marks the narrow area of the respective jaw sample. The narrow area, nerve channels and blood vessels are highlighted in green, which makes structures that are hidden from the surgeon's view easier to see. This helps the surgeon visualise the targeted areas and navigate the path of the surgery, so that the jaw surgery can be completed successfully in less time.

5 Discussion

The accuracy and processing time of the state-of-the-art and the proposed solutions are shown above in Tables 3, 4, 5, 6, 7, and 8. Both metrics are determined by the image registration, the image overlay, the movement of the patient and the movement of the surgical instrument. The proposed system achieved a registration accuracy of 0.21–0.29 mm, compared with 0.23–0.35 mm for the state-of-the-art solution. Similarly, the processing speed of the proposed solution improved to 10–14 frames per second (fps), compared with 8–12 fps for the state-of-the-art solution.
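To put the reported ranges side by side, the short Python sketch below compares their midpoints. Using the midpoint of each range is our own simplifying assumption for illustration; the paper reports only the ranges, so the resulting percentages are a rough reading of those endpoints rather than figures stated by the study. The helper names are hypothetical.

```python
def midpoint(low, high):
    """Centre of a reported range."""
    return (low + high) / 2.0


def relative_change(before, after):
    """Signed fractional change from `before` to `after`."""
    return (after - before) / before


# Range endpoints as reported in the text.
error_before = midpoint(0.23, 0.35)  # state of the art, mm
error_after = midpoint(0.21, 0.29)   # proposed, mm
fps_before = midpoint(8, 12)         # state of the art, fps
fps_after = midpoint(10, 14)         # proposed, fps

print(f"overlay error:  {relative_change(error_before, error_after):+.1%}")
print(f"frame rate:     {relative_change(fps_before, fps_after):+.1%}")
print(f"time per frame: {1000 / fps_before:.1f} ms -> {1000 / fps_after:.1f} ms")
```

A negative change in overlay error and a positive change in frame rate both indicate an improvement; the last line converts frame rate into time per frame, which is the quantity that actually decreases.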

The green border in Tables 3, 4, 5, 6, 7, and 8 marks the narrow area of the respective jaw sample. The narrow area, nerve channels and blood vessels are highlighted in green, which makes structures that are hidden from the surgeon's view easier to see. This leads to better visualisation of the surgeon's targeted areas and also helps in navigating the path of the surgery. The improved visualisation of the narrow area helps the surgeon complete the jaw surgery successfully in less time, as shown in Tables 3, 4, 5, 6, 7, and 8.

Our proposed system improves augmented reality-based jaw surgery. The Correntropy-based ICP algorithm is given by Eq. 5. It replaces the Euclidean error metric in the pose refinement stage of the ICP algorithm and suppresses the noise and outliers in the point sets, which helps to achieve high registration accuracy. Similarly, adding a scale factor allows the algorithm to register point sets of different scales, which makes it more robust. In addition, the one-to-one correspondence between the point sets increases the speed of the system. Together, the scale factor in the enhanced ICP algorithm and the introduction of Correntropy improve the accuracy and processing time of the system.
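The interplay of the Correntropy weighting and the scale factor can be sketched in a few lines of Python. This is a deliberately simplified 2D illustration, not the authors' Eq. 5 or their MATLAB implementation: points are represented as complex numbers so that scale and rotation collapse into a single complex factor, correspondences are plain nearest neighbours (one-to-one matching is not enforced), and the maximum-Correntropy objective is handled by iterative reweighting with a Gaussian kernel. All function names, the kernel width `sigma` and the test points are assumptions made for this example.

```python
import cmath
from math import exp


def weighted_similarity_fit(src, dst, weights):
    """Weighted least-squares fit of dst ~ a * src + b in 2D.

    Points are complex numbers; abs(a) is the scale, phase(a) the rotation.
    """
    total = sum(weights)  # must be > 0, i.e. at least one usable match
    src_mean = sum(w * p for w, p in zip(weights, src)) / total
    dst_mean = sum(w * q for w, q in zip(weights, dst)) / total
    num = sum(w * (q - dst_mean) * (p - src_mean).conjugate()
              for w, p, q in zip(weights, src, dst))
    den = sum(w * abs(p - src_mean) ** 2 for w, p in zip(weights, src))
    a = num / den
    return a, dst_mean - a * src_mean


def correntropy_scaled_icp(src, dst, sigma=1.0, iterations=10):
    """Scaled ICP whose error metric is a Gaussian (Correntropy) kernel."""
    a, b = 1 + 0j, 0j  # start from the identity transform
    for _ in range(iterations):
        moved = [a * p + b for p in src]
        # Matching step: nearest neighbour in dst for each moved point.
        matches = [min(dst, key=lambda q: abs(q - m)) for m in moved]
        # Kernel weights: large residuals (outliers) get weights near zero.
        weights = [exp(-abs(q - m) ** 2 / (2 * sigma ** 2))
                   for m, q in zip(moved, matches)]
        a, b = weighted_similarity_fit(src, matches, weights)
    return a, b


# Hypothetical test data: a known scale, rotation and translation.
true_a = cmath.rect(1.05, 0.05)  # scale 1.05, rotation 0.05 rad
true_b = 0.1 - 0.05j
model = [0j, 2 + 0j, 4 + 0j, 6 + 0j, 2j, 4j]
scene = [true_a * p + true_b for p in model]
noisy_model = model + [30 + 30j]  # one outlier with no counterpart in scene

a, b = correntropy_scaled_icp(noisy_model, scene)
print(f"scale = {abs(a):.3f}, rotation = {cmath.phase(a):.3f} rad")
```

Because the outlier's residual is large relative to `sigma`, its kernel weight is effectively zero and it has almost no influence on the fitted transform, whereas an unweighted Euclidean fit would give it the same weight as every inlier. A 3D version would replace the complex-number fit with an SVD-based rotation estimate.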

We obtained the system’s accuracy by calculating the difference between the targeted overlay pixel and the actual overlay pixel on the image. A built-in MATLAB function identifies the coordinates of the targeted pixel. The difference between the actual and targeted overlay pixel coordinates is then calculated (as presented in Tables 3, 4, 5, 6, 7, and 8). The processing time is the total time taken to perform a given task; here, it was recorded after every execution of a sample.
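The two measurements described above can be sketched in Python as follows. This is an illustrative outline under stated assumptions, not the authors' MATLAB procedure: we assume the "difference" between the targeted and actual pixel is taken as a Euclidean distance, and that a known pixel spacing (`mm_per_pixel`, a hypothetical parameter) converts it to millimetres. The function names and the coordinates are invented for the example.

```python
import time
from math import hypot


def overlay_error(target_px, actual_px, mm_per_pixel=1.0):
    """Distance between targeted and actual overlay pixels, scaled to mm."""
    dx = actual_px[0] - target_px[0]
    dy = actual_px[1] - target_px[1]
    return hypot(dx, dy) * mm_per_pixel


def timed(task, *args):
    """Run `task` once and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = task(*args)
    return result, time.perf_counter() - start


# Hypothetical pixel coordinates and pixel spacing, for illustration only.
error, seconds = timed(overlay_error, (120, 80), (123, 84), 0.1)
print(f"overlay error = {error:.2f} mm, measured in {seconds * 1e3:.3f} ms")
```

In a full pipeline, `timed` would wrap the processing of one video frame, and the reciprocal of the elapsed time would give the frame rate in frames per second.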

AR is still under research because surgeons find it difficult to visualise the patient’s narrow areas. If a surgeon operates poorly because of bad visualisation of the narrow area, the patient is put at risk, and the outcome can be fatal. The main contribution of this paper is to improve the visualisation of the narrow area of the jaw by increasing the accuracy of the image registration, which gives a 3D view of the patient’s hidden structures. The proposed work was simulated in MATLAB, and it produces an acceptable range of accuracy while also decreasing the processing time of the system (see Table 9).

6 Conclusions

The work in [16] used RMaTV algorithm-based pose refinement, which was inherited by the state-of-the-art solution presented in [1]. The state-of-the-art solution also proposed 3D reconstruction-based occlusion removal. However, it does not consider the noise and outliers that may be present in the point sets, which affect the accuracy of the image registration. The enhanced ICP algorithm performs very poorly when the point sets contain a lot of noise and many outliers. Unlike the Euclidean distance measurement, Correntropy suppresses the interference of noise and outliers and therefore gives high registration accuracy. Correntropy is used to measure the shape similarity in the matching phase of the ICP algorithm, which further improves the enhanced algorithm in pose refinement and gives accurate and robust results. In addition, the one-to-one correspondence between the points increases the speed of the matching phase of the enhanced ICP algorithm. Therefore, Correntropy is used instead of the Euclidean distance to improve the accuracy and processing time of the enhanced ICP algorithm [7]. This research focused on the error metric stage of the ICP algorithm. Future work can focus on the other stages of the ICP algorithm. In particular, further research is needed on the initial parameters of the ICP algorithm, because avoiding a wrong initialisation of these parameters is very important for obtaining high registration accuracy.

References

Basnet BR, Alsadoon A, Withana C, Deva A, Paul M (2018) A novel noise filtered and occlusion removal: navigational accuracy in augmented reality-based constructive jaw surgery. Oral Maxillofac Surg 22(4):385–401

Bernhardt S, Nicolau SA, Soler L, Doignon C (2017) The status of augmented reality in laparoscopic surgery as of 2016. Med Image Anal 37:66–90

Chen X et al (2015) Development of a surgical navigation system based on augmented reality using an optical see-through head-mounted display. J Biomed Inform 55:124–131

Choi H et al (2017) A portable surgical navigation device to display resection planes for bone tumor surgery. Minim Invasive Ther Allied Technol 26(3):144–150

Choi H, Cho B, Masamune K, Hashizume M, Hong J (2016) An effective visualization technique for depth perception in augmented reality-based surgical navigation. Int J Med Robot 12(1):62–72

Du S et al (2017) Precise iterative closest point algorithm with corner point constraint for isotropic scaling registration. Multimed Syst 25(2):119–126

Du S, Xu G, Zhang S, Zhang X, Gao Y, Chen B (2018) Robust rigid registration algorithm based on pointwise correspondence and Correntropy. Pattern Recognit Lett

Gao QH, Wan TR, Tang W, Chen L (2017) A stable and accurate marker-less augmented reality registration method. In: International Conference on Cyberworlds (CW). IEEE, pp 41–47

Gao QH, Wan TR, Tang W, Chen L (2018) Object registration in semi-cluttered and partial-occluded scenes for augmented reality. Multimed Tools Appl: 1–21

Hettig J, Engelhardt S, Hansen C, Mistelbauer G (2018) AR in VR: assessing surgical augmented reality visualizations in a steerable virtual reality environment. Int J Comput Assist Radiol Surg 13(11):1717–1725

Jiang W et al (2018) Evaluation of the 3D Augmented Reality-Guided Intraoperative Positioning of Dental Implants in Edentulous Mandibular Models. Int J Oral Maxillofac Implants 33(6):1219–1228

Li L et al (2016) A novel augmented reality navigation system for endoscopic sinus and skull base surgery: a feasibility study. PLoS ONE 11(1):e0146996

Ma L et al (2019) Augmented reality surgical navigation with accurate CBCT-patient registration for dental implant placement. Med Biol Eng Comput 57(1):47–57

Mahmoud N et al (2017) On-patient see-through augmented reality based on visual SLAM. Int J Comput Assist Radiol Surg 12(1):1–11

Meng F, Zhai F, Zeng B, Ding H, Wang G (2018) An automatic markerless registration method for neurosurgical robotics based on an optical camera. Int J Comput Assist Radiol Surg 13(2):253–265

Murugesan YP, Alsadoon A, Manoranjan P, Prasad PWC (2018) A novel rotational matrix and translation vector algorithm: geometric accuracy for augmented reality in oral and maxillofacial surgeries. Int J Med Robot Comput Assist Surg 14(3):e1889

Pokhrel S, Alsadoon A, Prasad PWC, Paul M (2018) A novel augmented reality (AR) scheme for knee replacement surgery by considering cutting error accuracy. Int J Med Robot Comput Assist Surg 15(1):1958

Scuderi GR, Fallaha M, Masse V, Lavigne P, Amiot L-P, Berthiaume M-J (2014) Total knee arthroplasty with a novel navigation system within the surgical field. Orthop Clin 45(2):167–173

Wang J, Shen Y, Yang S (2019) A practical marker-less image registration method for augmented reality oral and maxillofacial surgery. Int J Comput Assist Radiol Surg: 1–11

Shin S et al (2015) Markerless surgical robotic system for intracerebral hemorrhage surgery. In: 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, pp 5272–5275

Somogyi-Ganss E, Holmes HI, Jokstad A (2015) Accuracy of a novel prototype dynamic computer‐assisted surgery system. Clin Oral Implants Res 26(8):882–890

Suenaga H et al (2015) Vision-based markerless registration using stereo vision and an augmented reality surgical navigation system: a pilot study. BMC Med Imaging 15:51

Suenaga H et al (2013) Real-time in situ three-dimensional integral videography and surgical navigation using augmented reality: a pilot study. Int J Oral Sci 5(2):98

Suenaga H, Wang J, Sakuma I (2017) Video see-through augmented reality for oral and maxillofacial surgery. Int J Med Robot Comput Assist Surg 13(2):e1754

Ulrich M, Wiedemann C, Steger C (2012) Combining scale-space and similarity-based aspect graphs for fast 3D object recognition. IEEE Trans Pattern Anal Mach Intell 34(10):1902–1914

Wang J et al (2014) Augmented reality navigation with automatic marker-free image registration using 3-D image overlay for dental surgery. IEEE Trans Biomed Eng 61(4):1295–1304

Wang J et al (2013) Real-time marker-free patient registration and image-based navigation using stereovision for dental surgery. Augmented reality environments for medical imaging and computer-assisted interventions. Springer, Berlin, pp 9–18

Yamaguchi S, Ohtani T, Yatani H, Sohmura T (2009) Augmented reality system for dental implant surgery. In: International Conference on Virtual and Mixed Reality. Springer, Berlin, pp 633–638

Zhang W, Wang X, Zhang J, Shen G (2016) Application of preoperative registration and automatic tracking technique for image-guided maxillofacial surgery. Comput Assist Surg (Abingdon) 21(1):137–142

Zhu M et al (2017) A novel augmented reality system for displaying inferior alveolar nerve bundles in maxillofacial surgery. Sci Rep 7:42365

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Puri, A., Alsadoon, A., Prasad, P.W.C. et al. Augmented reality for visualization the narrow areas in jaw surgery: modified Correntropy based enhanced ICP algorithm. Multimed Tools Appl 81, 24319–24345 (2022). https://doi.org/10.1007/s11042-022-11963-8

DOI: https://doi.org/10.1007/s11042-022-11963-8