Abstract

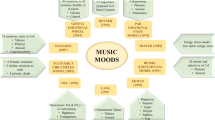

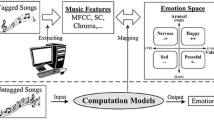

Emotion is considered a physiological state that arises whenever an individual observes a change in their environment or body. A review of the literature shows that combining the electrical activity of the brain with other physiological signals for the accurate analysis of human emotions has yet to be explored in depth. Building on physiological signals, this work proposes a model that uses machine learning approaches to calibrate music mood against human emotion. The proposed model consists of three phases: (a) prediction of the mood of a song from its audio signal, (b) prediction of a person’s emotion from physiological signals recorded with EEG, GSR, ECG and a pulse detector, and (c) mapping between the music mood and the human emotion, with classification in real time. Extensive experiments have been conducted on different music mood and human emotion datasets for the extraction of influential features, training, testing and performance evaluation. The work attempts to observe and measure human emotions to a certain degree of accuracy and efficiency by recording a person’s bio-signals in response to music. Further, to test the applicability of the proposed work, playlists are generated from the user’s real-time emotion, determined using features derived from the different physiological sensors, and from the mood conveyed by musical excerpts. This work could help improve mental and physical health through the scientific analysis of physiological signals.
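
The abstract names three phases but not the classifiers, features or mapping rules. The sketch below shows one way the phases could fit together, under stated assumptions: a nearest-centroid classifier stands in for the paper's machine learning models, each modality is reduced to two hypothetical normalised features, the emotion-to-mood table `TARGET_MOODS` is hypothetical, and the training values are toy numbers, not the study's data.

```python
from math import dist

def fit_centroids(samples, labels):
    """Nearest-centroid 'training': average the feature vectors of each class."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}

def predict(centroids, x):
    """Assign x to the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda y: dist(centroids[y], x))

# Hypothetical mapping (assumption): detected emotion -> song moods to play.
TARGET_MOODS = {
    "happy": {"happy"},
    "calm": {"calm"},
    "sad": {"calm", "happy"},
    "angry": {"calm"},
}

def build_playlist(songs, mood_model, emotion_model, physio_features):
    """Phase (c): detect the listener's emotion, then keep songs whose mood matches."""
    emotion = predict(emotion_model, physio_features)   # phase (b)
    wanted = TARGET_MOODS[emotion]
    playlist = [title for title, audio in songs
                if predict(mood_model, audio) in wanted]  # phase (a) per song
    return emotion, playlist

# Phase (a) toy training data; audio features: (normalised tempo, normalised energy).
mood_model = fit_centroids(
    [(0.9, 0.8), (0.8, 0.9), (0.2, 0.2), (0.3, 0.1), (0.1, 0.4), (0.2, 0.5)],
    ["happy", "happy", "calm", "calm", "sad", "sad"],
)
# Phase (b) toy training data; physiological features: (normalised GSR, normalised heart rate).
emotion_model = fit_centroids(
    [(0.3, 0.8), (0.2, 0.3), (0.1, 0.2), (0.9, 0.9)],
    ["happy", "calm", "sad", "angry"],
)

songs = [("Song A", (0.85, 0.9)), ("Song B", (0.25, 0.15)), ("Song C", (0.15, 0.5))]
emotion, playlist = build_playlist(songs, mood_model, emotion_model, (0.12, 0.22))
print(emotion, playlist)  # → sad ['Song A', 'Song B']
```

The nearest-centroid rule is used here only because it fits in a few lines of standard-library Python; a real system would more likely plug in a trained classifier such as an SVM or random forest, and the mapping table would presumably come from the study's own calibration rather than the values assumed above.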

About this article

Cite this article

Garg, A., Chaturvedi, V., Kaur, A.B. et al. Machine learning model for mapping of music mood and human emotion based on physiological signals. Multimed Tools Appl 81, 5137–5177 (2022). https://doi.org/10.1007/s11042-021-11650-0

DOI: https://doi.org/10.1007/s11042-021-11650-0