Abstract

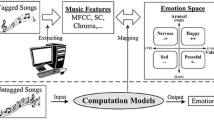

Scientists and researchers have tried to establish a link between the emotions conveyed and the mood subsequently perceived in a person. Emotions play a major role in our choices, preferences, and decision-making, and they arise whenever a person perceives a change in their surroundings or within their body. For a long time, considerable effort has gone into the fields of emotion detection and mood estimation. Listening to music forms a major part of daily life. The music we listen to, the emotions it induces, and the resulting mood are interrelated in ways we are often unaware of. This survey is based entirely on two areas of research: human emotion recognition and music mood estimation. Differing viewpoints on the issue have led to different proposals for emotion annotation, model training, and result visualization. This paper provides a detailed review of the methods proposed for music mood recognition. It also discusses the sensors that have been used to acquire various physiological signals. The paper focuses on the datasets that have been created and reused, the classifiers employed to obtain higher accuracy, and the features extracted from both the acquired signals and the music. It attempts to determine which features and parameters help improve the classification process. It also investigates several techniques for detecting emotions and the music models used to assess music mood. The review aims to answer open questions and research issues in identifying human emotions and music mood. In doing so, it provides greater insight into this field and a better understanding of the problems it still poses.
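The general pipeline described above has two stages. First, features are extracted from an acquired physiological signal. Second, a classifier maps those features to an emotion label. The minimal sketch below illustrates this pipeline under explicit assumptions and is not the method of any particular study covered by this review.

The sketch makes the following assumptions:

* It uses a single-channel, EEG-like signal sampled at an assumed 128 Hz.
* Its features are the relative power in conventional theta, alpha, and beta bands. Band edges vary between studies.
* It uses a k-nearest-neighbour classifier, chosen only because it fits in a few lines.

The helper names `band_powers`, `knn_predict`, and `sine_window` are hypothetical. The labels `calm` and `excited` are toy labels attached to synthetic sine waves, so the association between band and label is invented for the demonstration. A naive DFT keeps the example dependency-free. In practice an FFT or Welch's method (for example `scipy.signal.welch`) would be used instead.

```python
import math
from collections import Counter

# Assumed frequency bands in Hz; exact band edges differ between studies.
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_powers(signal, fs):
    """Return relative theta/alpha/beta power of one window via a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    power = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fs / n
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                power[name] += re * re + im * im
    total = sum(power.values()) or 1.0  # avoid division by zero for flat input
    return [power[name] / total for name in BANDS]


def knn_predict(train, query, k=3):
    """Majority label among the k training vectors nearest to `query` (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def sine_window(freq, fs, n=128, phase=0.0):
    """Hypothetical helper: a synthetic stand-in for one recorded signal window."""
    return [math.sin(2 * math.pi * freq * t / fs + phase) for t in range(n)]


FS = 128  # assumed sampling rate in Hz
# Toy training set: alpha-dominant windows labelled "calm", beta-dominant "excited".
# The band-to-label association is invented for this demonstration only.
train = [(band_powers(sine_window(10, FS, phase=p), FS), "calm") for p in (0.0, 0.5, 1.0)]
train += [(band_powers(sine_window(20, FS, phase=p), FS), "excited") for p in (0.0, 0.5, 1.0)]

print(knn_predict(train, band_powers(sine_window(11, FS), FS)))  # -> calm
```

With 128 samples at 128 Hz, the frequency resolution is 1 Hz. An 11 Hz test tone therefore falls entirely in the alpha band. Its three nearest neighbours are the alpha-dominant training windows, so the sketch prints `calm`. Real studies would add artifact removal, multiple channels, and a validated annotation scheme before this step.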

Additional information

Communicated by I. Bartolini.

About this article

Cite this article

Chaturvedi, V., Kaur, A.B., Varshney, V. et al. Music mood and human emotion recognition based on physiological signals: a systematic review. Multimedia Systems 28, 21–44 (2022). https://doi.org/10.1007/s00530-021-00786-6

DOI: https://doi.org/10.1007/s00530-021-00786-6