Abstract

Attention towards robot-assisted therapies (RAT) has grown steadily in recent years, particularly for patients with dementia. However, rehabilitation practice using humanoid robots for individuals with Mild Cognitive Impairment (MCI) is still a novel method whose adherence mechanisms, indications and outcomes remain unclear. Affective computing offers a wide range of technological opportunities for employing emotions to improve human-computer interaction. The present study therefore addresses the effectiveness of a system that automatically decodes facial expressions from video-recorded sessions of a two-month robot-assisted memory training involving twenty-one participants. We explored the robot’s potential to engage participants in the intervention and its effects on their emotional state. Our analysis revealed that the system is able to recognize facial expressions from robot-assisted group therapy sessions, handling partially occluded faces. Results indicated reliable facial expressiveness recognition for the proposed software, adding new evidence on the factors involved in Human-Robot Interaction (HRI). The use of a humanoid robot as a mediating tool appeared to promote the engagement of participants in the training program. Our findings showed positive emotional responses for females. Emotional involvement differed across tasks. Further studies should investigate the training components and robot responsiveness.

1 Introduction

Emotions are relevant in all aspects of human life. Few visual stimuli are as socially salient as human faces. Faces convey critically important social cues, such as age, sex, and identity, but they are also the primary vehicle for nonverbal communication, and the precise interpretation of facial emotions is an important predictor of success in social interactions. The theory of basic emotions posits that each of a core set of emotions (e.g., Anger, Sadness, or Happiness) derives from a distinct neural system that manifests in discrete patterns of autonomic response, motor behavior, and facial expression [16]. Basic and secondary emotions can also be classified as regions in a space defined by two dimensions: activation and evaluation. Activation refers to the individual’s predisposition to perform an action according to her/his emotional state. Evaluation is the general appraisal of the positive or negative feeling related to an emotional state. Alternatively, the circumplex model of affect holds that all emotions derive from two underlying, orthogonal dimensions of emotional experience, valence and arousal [44]. Although many other communicative channels have been explored, several studies on human emotion focus on facial expressiveness, as coded by the Facial Action Coding System (FACS) [16].

Researchers have shown that facial expression recognition improves from childhood to early adulthood and degenerates in the elderly [33]. Deficits in emotion recognition can have a big impact on the social competence of the elderly, causing stress, communication problems, and depression. Some researchers explain the emotion recognition deficit as linked to other impairments, such as visual impairment [1], but emotion recognition from faces is a complex task that involves different cerebral structures and cognitive abilities that can be compromised by neuropsychological disease [48]. Emotion recognition impairment in dementia seems to progress from a deficit in recognizing low-intensity facial expressions in the MCI phase and subsequently extends to mild Alzheimer’s disease, possibly as an effect of the well-known progressive degeneration of emotion processing in the brain [48]. MCI is a stage between normal cognitive function and early dementia [42] in which: (i) the individual shows a decline in cognitive capacity confirmed by neuropsychological tools; (ii) independence in activities of daily living is preserved, although some compromise can be observed in complex activities; and (iii) the criteria for clinical dementia are not fulfilled [39]. The prevalence of MCI in adults over 65 years old ranges from 10% to 20%.

It was estimated [43] that, compared with healthy older adults, people with MCI have three times the risk of being diagnosed with Alzheimer’s dementia within 2–5 years. Delaying the onset of dementia by one year could decrease the number of Alzheimer’s patients by 9 million in 2050 [40]. The primary aim for MCI patients is to preserve their cognitive abilities while functional capabilities and independence are not yet compromised [20].

The affective computing [41] and social computing fields have started to be broadly investigated, focusing on new technologies that advance our understanding of emotions and their role in human experience [9]. In recent years, new tools and technologies based on machine learning and robotics have been successfully applied to the field of psychology, and robotics has become more widely applied in elderly care [7] and social assistance for people with MCI [23, 24]. There is a paucity of research investigating RAT for adults with MCI or mild AD [29]. A real companion must be able to fill in for the elder’s loss of family or friends, relieving the feeling of isolation, and to send and receive emotional expressions [45] in mutual adaptation with others, making behavior understandable and social interaction complete and synchronized [27]. In [23, 31, 47] robots were successfully used to improve social communication and interaction among patients with dementia. They serve many functions [24], providing life assistance, and the robot also has the potential to improve the daily life of persons with a mild level of dementia [47]. Telepresence social robots were used to let patients communicate with their families [34], because the capabilities of social robots are more complete [12, 13] thanks to their ability to simulate emotions [12, 15] and empathy [11]. In human interaction, emotional and social signals conveying additional information are essential. For this reason, particular attention was devoted to quantifying the effect of a social robot on the well-being of training recipients. This was accomplished by measuring spontaneous facial expressions with software for the automatic assessment of human-robot interaction that decodes facial expressions [10]. Our purpose was to explore the responses of participants (e.g. positive or negative) in different tasks in order to see whether or not they were positively engaged during the interaction.
Our research addresses affective expression in human-robot interaction, exploiting video clips recorded during the two months of the previous study [43] and analyzing the participants’ facial expressions.

Several research questions motivated the present paper. First, is our system a viable tool for automatically measuring the quality of human-robot interaction? Second, is our tool able to detect humanly perceivable facial expressions during HRI? Third, does the interaction with the humanoid robot induce gender-dependent changes [17] during RAT for individuals with MCI? The main contribution of the present paper is a new computer vision-based system able to analyze facial expressions in an unconstrained, real memory-training scenario, decoding facial expressions in partially occluded faces. The system recognizes facial expressions in a group through a multi-face detector, providing clinical perspectives on a memory training program for the elderly assisted by a social robot. Many studies have employed the robot NAO. If appropriately programmed, it is able to decode human emotions, simulate emotions through the color of its eyes or the position of its body, recognize faces and model physical exercise for seniors [2, 46], and it can be equipped to measure health and environmental parameters [50].

2 Related work

A recent trend has moved towards generic platforms such as the NAO humanoid robot from SoftBank, previously used in different fields such as autism spectrum disorders, with the aim of expanding social and communication skills [29, 37]. During human-robot interaction, the mirror neuron system (MNS), responsible for social interaction, has been found to be active [21], suggesting that humans can consider robots as real companions with their own intentions [18]. The embodied cognition paradigm [28] is widely explored in robotics to design and implement effective HRI. According to this theoretical framework, cognitive and affective processes are mediated by perception and bodily postures. One approach to enhancing NAO’s HRI capacity associated its verbal communication with gestures through hand and head movements, as well as gaze orienting. Some studies also identified a fixed set of non-verbal gestures used by NAO to enhance its presentations and its turn-management capability in social interactions. A set of emotional expressions for NAO spanning the dimensions of arousal and valence was employed in some applications [5]. If appropriately programmed, NAO is able to decode human emotions, simulate emotions through the color of its eyes or the position of its body, recognize faces and model physical exercise for a group of seniors [2], and it can be equipped to measure health and environmental parameters [50]. NAO was used in individual and group therapy sessions [32] to assist the therapist with speech, music and movement. Good acceptance by older adults in assisted living facilities was also observed [49].

Robotics is a promising means to partially fill some of the identified gaps in current health care and home care provision [8], also through the development of coding systems aimed at measuring engagement-related behavior across activities in people with dementia [6, 38]. With the growing incidence of pathologies and cognitive impairment associated with aging, there will be an increasing demand for care systems and services for the elderly, under the imperative of cost-effective care provision. This is important for the clinician, as MCI is typically a progressive disorder and intervention is not so much about altering the underlying neuropsychological impairment as about reducing the resulting disability, i.e. increasing quality of life and sustaining independent living [26]. Findings on stimulation therapy for optimizing the cognitive function of older adults with mild to moderate dementia are robust and promising, but the majority of studies have focused on cognitive abilities (e.g., memory performance, problem solving, and communication techniques), and the effects of cognitive rehabilitation on MCI and on social aspects remain inconclusive [10]. Very little is known about the relationship between clinical features (e.g. cognitive impairments and behavioral symptoms) and the characteristics of the assistive technologies most suitable for persons with specific impairments. Reduced empathy [4] has been associated with greater risk of loneliness and depression and with poorer personal life satisfaction, all major concerns that have been tied to increased morbidity in older adults [3]. Emotion perception has been shown to rely on a ventral affective system, including the amygdala, insula, ventral striatum, and ventral regions of the anterior cingulate gyrus and prefrontal cortex. Regions in this ventral system, including the amygdala, are susceptible to atrophy already at the MCI stage [25].
We are only beginning to recognize the complexity of matching individuals and technology for persons with MCI and mild dementia [47]. In [43], NAO was involved in robot-assisted memory training for people with MCI at a center for cognitive disorders and dementia. Emotional cues conveyed through facial expression have been shown to be connected with a number of cognitive tasks, and the robot should be able to operate autonomously, adapting its operations to users’ evolving needs and emotional states by learning in the context of interactions. Several methods and approaches have been studied and developed to recognize emotions from facial expressions. Noldus FaceReader (footnote 1) and Affectiva (footnote 2) are well-known, validated facial expression recognition software packages that could have been used in our study. Unfortunately, the requirements and constraints of our ecological experimental setting made it necessary to use a customized system that is also able to handle occlusions. Validation of the system on an established dataset, with a high recognition rate, provides reasonable assurance about the results of the video analysis.

3 The proposed approach

In this section, the architecture and pipeline of the system are described. The proposed system includes the following main hardware and software components: the MCI training program, the NAO social robot, three RGB cameras, and an automatic group facial expression recognition module, as depicted in Fig. 1.

3.1 MCI memory training program

The MCI training program was developed using Choregraphe (a rapid programming tool offered by the robot’s manufacturer) and Python. The developed software was installed on the robot and used robot behaviors (speech, music, and body movements) through the NAOqi APIs to perform the five tasks of the training. The program aimed to train focused, divided and alternating attention, with categorization and association as learning strategies, embedded in the following five tasks:

- Reading stories (T1);
- Comprehension questions about stories (T2);
- Word learning (T3);
- Word recall (T4);
- Song-singer matching (T5).

3.2 Automatic group facial expression recognition module

To automatically recognize facial expressions, three RGB cameras captured the participants’ faces. The cameras acquired HD video at 30 frames per second. The automatic group facial expression recognition module identifies faces in the videos recorded by the cameras and then recognizes 7 facial expressions: anger, disgust, fear, happiness, sadness, surprise, and neutral. The module is based on a previous study that aimed to recognize the six basic emotions through facial expressions [14, 36]. The extracted features essentially cover the complete eye and mouth regions, which are merged into a single new image. In this work the system has been enhanced to detect multiple faces in each video frame and to recognize facial expressions also in partially occluded faces. The pipeline of the proposed system is shown in Fig. 2. Occlusion handling is crucial to guarantee high performance: during the sessions of the memory training program, the participants’ faces are occasionally occluded by fingers, hair, or glasses, as shown in Fig. 3.
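
The multi-face step can be illustrated with a minimal Python sketch of how per-frame results might be tallied per participant. It assumes a hypothetical `detect_faces` callable that returns a participant ID and a bounding box for every visible face, and a hypothetical `classify_face` callable that returns one of the seven labels, or `None` when no usable features survive. The text does not specify the detector, the classifier interface, or how faces are matched to participants, so these are stand-ins, not the authors' implementation.

```python
from collections import Counter, defaultdict
from typing import Callable, Dict, Iterable, List, Optional, Tuple

# The seven classes recognized by the module.
EXPRESSIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral")

Box = Tuple[int, int, int, int]   # x, y, width, height
Face = Tuple[str, Box]            # (participant ID, bounding box), hypothetical format


def tally_expressions(
    frames: Iterable[object],
    detect_faces: Callable[[object], List[Face]],
    classify_face: Callable[[object, Box], Optional[str]],
) -> Dict[str, Counter]:
    """Detect every face in every frame and count the expression
    recognized for each participant."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for frame in frames:
        for participant_id, box in detect_faces(frame):  # several faces per frame
            label = classify_face(frame, box)
            if label is None:                            # face found but not classifiable
                continue
            if label not in EXPRESSIONS:
                raise ValueError(f"unexpected label: {label!r}")
            counts[participant_id][label] += 1
    return dict(counts)


# Toy usage with hypothetical stand-ins for the detector and the classifier.
def fake_detect(frame: object) -> List[Face]:
    faces = [("P1", (0, 0, 10, 10))]
    if frame != 2:                                       # P2 leaves the view in frame 2
        faces.append(("P2", (20, 0, 10, 10)))
    return faces


def fake_classify(frame: object, box: Box) -> Optional[str]:
    return "neutral" if box[0] == 0 else "happiness"


result = tally_expressions([0, 1, 2], fake_detect, fake_classify)
print(result)  # one Counter of expression labels per participant
```

Injecting the detector and classifier as callables keeps the tallying logic independent of any particular face detection or classification library.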

When a face is detected, the automatic facial expression recognition system locates 77 fiducial facial landmarks on it. The system then extracts 15 distance features, 3 polygonal area features, and 7 elliptical area features, which are explained below. A distance feature is the distance between two facial landmarks: three distance features define the left eyebrow, two describe the left eye, and one describes the cheeks; moreover, one distance feature is employed for the nose and eight for the mouth. Next, the feature extraction step computes polygonal features, i.e. the areas delimited by irregular polygons built on three or more facial landmarks. One polygonal area feature describes the left eye, one describes the area between the corners of the left eye and the left corner of the mouth, and another describes the area of the mouth. Subsequently, the system extracts the elliptical area features, computed as the ratio between the major axis and the minor axis of the ellipse built on four facial landmarks. Seven elliptical features are extracted: one defining the left eyebrow, three describing the left eye and eyelids, and three defining the mouth, the lower lip and the upper lip [36]. Overall, 25 facial features are gathered. These features are the input of the occlusion handling step, which uses all or part of the feature set in order to obtain better performance in the classification step. The last step is the classification step, which recognizes the seven facial expressions by means of a Random Forest classifier that analyzes the extracted set of features.
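
The three kinds of geometric feature can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the polygon area uses the shoelace formula, and the elliptical feature assumes that the four landmarks are the endpoints of the two ellipse axes (for example, eye corners and eyelid midpoints), which the text does not state. The per-region inventory mirrors the counts listed above (15 + 3 + 7 = 25), while `usable_features` is a hypothetical occlusion policy that discards every feature touching an occluded region; the actual occlusion handling step is only described as using all or part of the feature set.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

Point = Tuple[float, float]


def distance_feature(p: Point, q: Point) -> float:
    """Euclidean distance between two landmarks."""
    return math.dist(p, q)


def polygon_area_feature(points: Sequence[Point]) -> float:
    """Area of the polygon whose vertices are the given landmarks, taken in
    order, computed with the shoelace formula."""
    pts = list(points)
    if len(pts) < 3:
        raise ValueError("a polygon needs at least three landmarks")
    twice_area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def elliptical_feature(a1: Point, a2: Point, b1: Point, b2: Point) -> float:
    """Major/minor axis ratio of an ellipse from four landmarks.
    Assumption: (a1, a2) are the endpoints of one axis, (b1, b2) of the other."""
    axis_a, axis_b = math.dist(a1, a2), math.dist(b1, b2)
    major, minor = max(axis_a, axis_b), min(axis_a, axis_b)
    if minor == 0:
        raise ValueError("degenerate ellipse: zero-length axis")
    return major / minor


# Feature counts per region, as listed in the text.
FEATURE_INVENTORY: Dict[str, Dict[str, int]] = {
    "distance": {"left_eyebrow": 3, "left_eye": 2, "cheeks": 1, "nose": 1, "mouth": 8},
    "polygonal": {"left_eye": 1, "eye_to_mouth": 1, "mouth": 1},
    "elliptical": {"left_eyebrow": 1, "left_eye": 3, "mouth": 3},
}
# Hypothetical: the eye-to-mouth polygon depends on both regions it spans.
DEPENDS_ON: Dict[str, Set[str]] = {"eye_to_mouth": {"left_eye", "mouth"}}


def usable_features(occluded: Set[str]) -> List[Tuple[str, str]]:
    """Hypothetical occlusion policy: keep only features whose regions are
    all visible. Returns one (kind, region) entry per feature."""
    kept: List[Tuple[str, str]] = []
    for kind, regions in FEATURE_INVENTORY.items():
        for region, n in regions.items():
            if not (DEPENDS_ON.get(region, {region}) & occluded):
                kept.extend([(kind, region)] * n)
    return kept


print(len(usable_features(set())))      # → 25
print(len(usable_features({"mouth"})))  # → 12 (mouth covered, e.g. by fingers)
```

Under this policy a covered mouth removes 13 of the 25 features, which suggests why a classifier able to work on feature subsets matters in this setting.
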
The accuracy of the system was evaluated on the Extended Cohn-Kanade (CK+) dataset, a well-known facial expression image database of 123 individuals of different gender, ethnicity and age [30]. The system reached an average facial expression recognition accuracy of 94.24% over the 7 facial expressions (anger, disgust, fear, happiness, sadness, surprise, and neutral).

4 Experimental evaluation

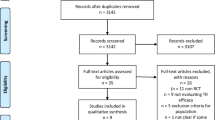

4.1 Participants

Participants were recruited from the Center for Cognitive Disorders and Dementia of AUSL Parma (Italy). Inclusion criteria were:

1. diagnosis of MCI obtained through the Petersen criteria, and full marks in the two tests measuring activities of daily living (ADL and IADL);
2. both genders;
3. age range 45–85 years;
4. no specific drug treatment.

Exclusion criteria were:

1. Major Neurocognitive Disorder;
2. previous stroke;
3. other central nervous system diseases or psychiatric illness.

Participants underwent a complete test battery. At the center they were involved in programs lasting 8 weeks, with weekly meetings of one and a half hours, conducted in a small-group format by an expert neuropsychologist.

The study was carried out in accordance with the ethical standards of the Italian Board of Psychologists and of the Italian Psychological Society. Given that the experiment did not involve clinical tests or the use of pharmaceuticals or medical equipment, did not cause participant discomfort in any other way, and was scheduled within the typical activities held in the Center, approval by the Ethics Committee for Clinical Research was deemed unnecessary, in agreement with the regulation of the Institutional Review Board (IRB) of the University of Parma. Informed consent, with a detailed description of the research purpose and procedure and explicit emphasis on audio-video (AV) recordings and their use for scientific aims, was obtained from all participants included in the study. The research was carried out in accordance with the 1964 World Medical Association Declaration of Helsinki Ethical Principles for Medical Research Involving Human Subjects and its later amendments or comparable ethical standards. Twenty-one participants (10 females and 11 males) with a mean age of 73.45 years (SD = 7.71) were recruited for this study. The mean education level was 9.90 years (SD = 4.58), ranging from a minimum of 5 years, corresponding to elementary school, to a maximum of 18 years, corresponding to a bachelor’s degree. All participants were native Italian speakers and had normal or corrected vision. Data collection took place during 2015–2017 and was scheduled during the center’s regular activity time. The institution provided an activity area, an additional room to ensure each informant’s privacy during data collection, and the neuropsychologist who helped screen participants, evaluated their fit for the experiment and conducted the training sessions.

4.2 Social scenario and procedure

The social scenario consisted of memory training group sessions in which patients worked with a therapist assisted by the SoftBank robot NAO.

NAO’s rich HRI capabilities motivated us to use this robot as a research platform in the MCI training program. Based on their diagnosis, participants were assigned to groups of six to eight individuals and one expert. The robot-assisted sessions were held during a standard two-month cognitive stimulation protocol. During a memory training session, the social robot was placed on a table with the participants seated around it, so that NAO was approximately at the height of the participants’ eyes, as depicted in Fig. 4.

NAO’s position on the table, at a distance of about one meter from each group participant, is compatible both with the personal and social distance zones and with the suggestion from robotics [35] that the most successful way for a robot to attract attention is to deliberately orient itself toward a person. Orienting the robot toward each participant is intended to make participants perceive that they are the focus of NAO’s attention and that the robot is attending intently to their communication. Experimental evidence showed that humans interacting with a robot apply the same social conventions for eye contact and personal distance as they would in human relations [19].

NAO assisted the intervention program by administering specific memory tasks based on cognitive stimulation exercises established in the literature [22] and on the characteristics of the social robot. The experimenter who operated the robot sat at the back of the room, with the computer visible to the subjects. Participants responded to the social robot’s requests according to the task; requests were proposed in randomized order to avoid stereotypical responses. During the sessions, participants were seated on chairs around the table, and their facial reactions were recorded by three cameras placed at the corners of the table, at a height of about 150 cm from the floor, in the robot-assisted therapy room.

The training program, comprising five tasks, was implemented on the SoftBank NAO social robot and administered during 24 group sessions. Facial emotional reactions were analyzed offline with our module. For the analysis, we used a corpus of 48 one-hour video clips from the 24 therapeutic sessions, also used for automatically estimating whether participants were focusing their visual attention on the robot. Each video frame captured three or four participants. Overall, by the end of the training, a total of eight hours of video had been collected for each participant.

5 Experimental results

Overall, twenty-one participants were involved in the study: six in the first group, eight in the second and seven in the third. In this section, two different kinds of analysis were carried out to assess each subject’s facial expression production during the robot-assisted memory training. The first analysis aimed to evaluate the automatic group facial expression recognition system proposed in this paper. The second aimed to determine, by means of the facial expression recognition system, the facial expressions produced by the participants.

The collected video clips were additionally explored to examine the affective communication in more depth. Starting from the output of the facial expression recognition step performed on the whole video corpus collected during the experiment, a further analysis was carried out, considering both the participants’ gender and how they produced facial expressions during the memory training tasks. This analysis made it possible to distinguish between the facial expressions produced by male and female participants during the training sessions. It also covered the recognition of facial expressions during the 5-task program, providing relevant information across the combination of functions trained: for each participant, we compared his/her facial expressions across the different tasks that he/she performed.

5.1 Analysis of the system

To quantitatively evaluate the automatic facial expression recognition system, the recorded session videos were trimmed and down-sampled to one frame per second. After pre-processing, 28,800 frames were analyzed for each participant (1 frame/s × 8 h of recording). Two metrics were computed on the entire video dataset, pre-processed as described above:

1. the number of detected faces (nFD);
2. the number of each facial expression recognized (nFE) in every frame for each participant.
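
The frame count follows from the recording length: 8 h × 3600 s/h × 1 frame/s = 28,800 frames per participant. A minimal sketch of the down-sampling step, assuming simple decimation (keeping every 30th frame of the 30 fps recordings), which the text does not specify:

```python
SOURCE_FPS = 30  # cameras recorded HD video at 30 frames per second
TARGET_FPS = 1   # analysis rate after down-sampling


def subsample_indices(n_frames: int, source_fps: int = SOURCE_FPS,
                      target_fps: int = TARGET_FPS) -> range:
    """Indices of the frames kept when down-sampling by simple decimation
    (an assumption: the actual down-sampling method is not described)."""
    if target_fps <= 0 or source_fps % target_fps != 0:
        raise ValueError("this sketch only handles integer decimation factors")
    step = source_fps // target_fps
    return range(0, n_frames, step)


hours_per_participant = 8
raw_frames = hours_per_participant * 3600 * SOURCE_FPS  # frames as recorded
kept = len(subsample_indices(raw_frames))
print(kept)  # → 28800
```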

Overall, the system was able to analyze the whole video dataset, and the faces of all 21 participants were correctly detected. With respect to nFD, a face was detected in 56% of the frames of the whole corpus. With respect to nFE, in each frame where a face had been detected (even if partially occluded) the system was able to recognize a facial expression. Over the whole memory training program, the facial expressions recognized across all sessions and all participants (with some cases of overlap) were distributed as follows: anger was found in 7.24% of the frames, disgust in 2.51%, fear in 11.83%, happiness in 16.95%, sadness in 14.31%, surprise in 3.64%, and neutral in 43.52%. Neutral was the most common facial expression recognized during the whole program, but not for every participant: neutral is not the most common facial expression for participant IDs 17, 18, 19, and 20.
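
The two metrics can be computed from a per-frame log for each participant, as in the minimal sketch below. It assumes a hypothetical log format with one entry per analysed frame, holding the recognized label or `None` when no face was detected, and exactly one label per frame, so it does not model the overlap mentioned above. Because the reported percentages add up to 100% (7.24 + 2.51 + 11.83 + 16.95 + 14.31 + 3.64 + 43.52), the sketch computes them over frames with a detected face rather than over all frames.

```python
from collections import Counter
from typing import Dict, List, Optional

EXPRESSIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral")


def detection_rate(per_frame: List[Optional[str]]) -> float:
    """nFD as a share: fraction of analysed frames with a detected face."""
    if not per_frame:
        raise ValueError("no frames to analyse")
    return sum(label is not None for label in per_frame) / len(per_frame)


def expression_percentages(per_frame: List[Optional[str]]) -> Dict[str, float]:
    """nFE as percentages of the frames in which a face was detected."""
    labels = [label for label in per_frame if label is not None]
    if not labels:
        return {e: 0.0 for e in EXPRESSIONS}
    counts = Counter(labels)
    return {e: 100.0 * counts[e] / len(labels) for e in EXPRESSIONS}


# Hypothetical five-frame log for one participant (None = no face detected).
log = ["neutral", None, "happiness", "neutral", None]
rate = detection_rate(log)
pct = expression_percentages(log)
print(rate)                      # → 0.6
print(round(pct["neutral"], 2))  # → 66.67
```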

5.2 Statistical analysis

Quantitative data are presented as means ± SD. The Shapiro-Wilk test was used to assess the data distribution. The data were subjected to several statistical tests, including the t-test, n-way ANOVA, and the Bonferroni correction for multiple comparisons. The significance level was set at α = 0.05. Figure 5 shows the percentage of each facial expression during the memory training, grouped by gender. The two groups (M and F) were then compared statistically to better evaluate gender differences: a Student t-test was employed to assess the facial expression production of male and female participants during the memory training program. Normalized results of the t-test for male and female participants are given in Table 1.
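
For reference, the Student t statistic with pooled variance can be written with the Python standard library alone. The sketch below uses made-up numbers, not the study's data, and returns only the statistic and its degrees of freedom. In practice `scipy.stats.ttest_ind` (which assumes equal variances by default) returns both the statistic and the p-value, and `scipy.stats.shapiro` implements the Shapiro-Wilk normality test mentioned above.

```python
import math
import statistics
from typing import Sequence, Tuple


def student_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Two-sample Student t statistic assuming equal variances.
    Returns (t, degrees of freedom)."""
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    # statistics.variance uses the n - 1 denominator (sample variance).
    pooled = ((n1 - 1) * statistics.variance(a)
              + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("zero variance in both groups")
    return (statistics.mean(a) - statistics.mean(b)) / se, n1 + n2 - 2


# Made-up per-participant percentages of one expression, for illustration only.
females = [1.0, 2.0, 3.0]
males = [4.0, 5.0, 6.0]
t, df = student_t(females, males)
print(round(t, 3), df)  # → -3.674 4
```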

5.3 Results for emotions exhibited by the participants

An initial 5 (Tasks) × 2 (Gender) × 7 (Emotion expressiveness) ANOVA was performed on the frequency of occurrence of facial expressions, with task, gender and emotion as between-subject factors. The prominent main effect of gender [F(1, 733) = 11.2905, p < 0.001], indicating that emotion expressiveness differed between females and males (M = 322.63 for females and M = 290.48 for males, respectively), was further qualified by an interaction between gender and emotion production [F(13, 721) = 49.7924, p < .0001]. In line with our hypothesis, females showed a higher level of emotion expressiveness than males.
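
The F ratios reported here come from a factorial (5 × 2 × 7) design. As a simplified illustration of how an F statistic is formed, the sketch below computes a one-way ANOVA for a single factor (between-group mean square divided by within-group mean square) using only the standard library. The numbers are made up, and a full factorial analysis would normally be run with a statistics package; `scipy.stats.f_oneway` provides the same one-way computation together with a p-value.

```python
import statistics
from typing import Sequence, Tuple


def one_way_anova(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """One-way ANOVA F statistic. Returns (F, df_between, df_within)."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    means = [statistics.mean(g) for g in groups]
    grand = sum(sum(g) for g in groups) / n
    # Variability of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    # Variability of observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    if ss_within == 0:
        raise ValueError("no within-group variability")
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


# Made-up counts for three hypothetical expression categories.
f, df_b, df_w = one_way_anova([1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0])
print(f, df_b, df_w)  # → 27.0 2 6
```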

Importantly, the main effect of emotion production was significant [F(6, 728) = 517.4305, p < .0001], reflecting a contrasting pattern of aftereffects for the different emotions, and was further qualified by a two-way interaction with task [F(24, 700) = 4.1714, p < 0.0001], suggesting that the expressed emotions varied across tasks.

Post hoc comparisons using the t-test with Bonferroni correction (AN = anger, DI = disgust, FE = fear, HA = happiness, NE = neutral, SA = sadness, SU = surprise) indicated significant differences in mean scores (p < .001) for the following pairs of expressions: AN-DI, AN-FE, AN-HA, AN-NE, AN-SA, AN-SU, DI-FE, DI-HA, DI-NE, DI-SA, DI-SU, FE-HA, FE-NE, FE-SA, HA-NE, HA-SU, NE-SA, NE-SU, SA-SU. In contrast, the comparisons FE-SU (p = .179) and HA-SA (p = .572) were not statistically significant.
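
With seven expression categories there are 7 × 6 / 2 = 21 pairwise comparisons, which matches the 19 significant and 2 non-significant pairs listed above. A minimal sketch of the Bonferroni adjustment, which multiplies each raw p-value by the number of comparisons and caps the result at 1 (equivalently, each pair is tested at α/21 ≈ 0.0024); the raw p-values in the example are made up:

```python
from itertools import combinations
from typing import Dict, Tuple

CODES = ("AN", "DI", "FE", "HA", "NE", "SA", "SU")
PAIRS = list(combinations(CODES, 2))  # every unordered pair of categories


def bonferroni(raw_p: Dict[Tuple[str, str], float]) -> Dict[Tuple[str, str], float]:
    """Bonferroni-adjusted p-values: p * m, capped at 1, with m = number of tests."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}


ALPHA = 0.05
print(len(PAIRS), ALPHA / len(PAIRS))  # number of comparisons, per-test threshold

# Made-up raw p-values: one very small, all others 0.5.
raw = {pair: 0.5 for pair in PAIRS}
raw[("AN", "DI")] = 0.00001
adjusted = bonferroni(raw)
print(adjusted[("AN", "DI")] < ALPHA, adjusted[("HA", "SA")])  # → True 1.0
```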

Because the first analysis revealed a higher level of neutral expressiveness, we calculated the magnitude of the emotion expression effect for gender and each experimental condition by subtracting the proportion of neutral responses in all tasks. We then computed a 5 (Tasks) × 2 (Gender) × 6 (Emotion expressiveness) ANOVA on the frequency of occurrence of facial expressions, with task, gender and emotion as between-subject factors. There was a prominent main effect of gender [F(1, 628) = 10.43, p < .0012] and a strong effect of expressiveness [F(5, 624) = 348.3533, p < .001]. Pairwise comparisons showed significant differences (p < .001) for the following pairs: AN-DI, AN-FE, AN-HA, AN-SA, AN-SU, DI-FE, DI-HA, DI-SA, DI-SU, FE-HA, FE-SA, FE-SU, HA-SU, SA-SU.

The HA-SA comparison (p = .0939) did not reach statistical significance. The main effect of gender was qualified by an interaction with expressiveness [F(5, 618) = 12.7038, p < .001], suggesting a different pattern of emotion expressiveness across genders. Importantly, there was a significant interaction between tasks and emotion expression [F(20, 600) = 5.6704, p < .001], indicating that the tasks differed in the emotions expressed by the participants. To disentangle the role of valence from expressiveness, we calculated the magnitude of emotion expression for each experimental task and gender by adding the occurrences of happiness and surprise for positive emotions, and of anger, disgust, fear and sadness for negative ones. We then computed a 5 (Tasks) × 2 (Gender) × 2 (Positive vs. Negative) ANOVA with all factors between subjects. The ANOVA revealed a significant difference between genders [F(1, 628) = 4.0451, p < .05]. This main effect of gender was qualified by a strong interaction with the valence of expressiveness [F(5, 618) = 44.0777, p < .001]. There was also a significant difference between experimental tasks [F(1, 628) = 6.0644, p < .001]. The main effect of tasks was qualified by an interaction with valence [F(20, 600) = 7.4948, p < .001]. A strong difference was revealed for the valence of emotion expression [F(1, 628) = 231.9942, p < .001]. With respect to valence, the largest difference was for negative relative to positive expressions; this difference can be attributed to the number of negative emotions, which outnumber the positive ones. Taken together, the analyses demonstrated substantial aftereffects of expressiveness on tasks and gender. The pattern of observed effects comprised fewer interactions but was generally similar across the analyses.
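
The valence aggregation can be expressed as a small Python function. It sums per-expression counts into a positive total (happiness, surprise) and a negative total (anger, disgust, fear, sadness), leaving neutral out; the counts in the example are made up. Because four categories feed the negative total and only two feed the positive one, raw sums of this kind tend to favour the negative side, in line with the explanation given above.

```python
from typing import Dict

POSITIVE = ("happiness", "surprise")
NEGATIVE = ("anger", "disgust", "fear", "sadness")


def valence_totals(counts: Dict[str, int]) -> Dict[str, int]:
    """Collapse per-expression frame counts into positive and negative totals.
    Neutral (and any other label) is ignored."""
    return {
        "positive": sum(counts.get(e, 0) for e in POSITIVE),
        "negative": sum(counts.get(e, 0) for e in NEGATIVE),
    }


# Made-up counts for one participant in one task.
example = {"happiness": 5, "surprise": 1, "anger": 2, "sadness": 3, "neutral": 10}
print(valence_totals(example))  # → {'positive': 6, 'negative': 5}
```
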
A visual inspection of the results also suggests that positive facial expressions were frequently expressed more than negative ones; in particular, apart from neutral, happiness was the most frequently displayed expression (X = 550.53), followed by sadness (X = 464.86). Disgust had the lowest expression (X = 81.72). One potential reason is that disgust is interpreted in relation to specific contexts, such as experiences involving food; the absence of such situations in the training leaves its interpretation largely undetermined.

Negative facial expressions were activated in the first task, when participants were asked to read stories, and in the third and fourth tasks (X = 65.33 and X = 64.80, respectively), in which subjects were given a list of word pairs that they had to learn by association and then recall by writing down the associated word presented before. Conversely, users tended to show positive emotions in the second (X = 42.48) and fifth (X = 40.86) tasks, in which they answered comprehension questions about the stories and had to recall song titles in response to NAO singing the song in the original singer’s voice. Women showed a propensity to be more expressive than men, who were generally less expressive and remained more neutral throughout the experimental sessions. In all the other cases (AN, DI, FE, HA, SA, and SU) females were more expressive. Data showed that males produced significantly more neutral facial expressions than females. Compared to males, females exhibited higher expressiveness along all the training tasks for anger, disgust, fear, sadness, and surprise; for happiness, on the contrary, no significant effect was found. Neutral was the most common facial expression displayed by the patients. Results for each facial expression are reported in Table 2.

6 Discussion and conclusions

Automated systems could have a considerable impact on basic research by making facial expression measurement more accessible as a behavioral measure and by providing data on the dynamics of facial behavior at a resolution that was previously unavailable. The present paper addressed emotion recognition from facial expressions, using a method that automatically detects basic facial emotional expressions and handles occlusions, in the context of a memory rehabilitation program for individuals with MCI. A specific setting such as robot-assisted memory training for adults can benefit from computer vision and machine learning technologies to better understand how patients react to the robot and engage in the interaction and the tasks. Moreover, automatic facial expression recognition allows psychologists to keep track of the mood of the individuals involved during the training sessions. We introduced a technique for automatically evaluating the quality of human-robot interaction based on the analysis of facial expressions. This test involved the recognition of spontaneous facial expressions in a continuous video stream. Satisfactory performance was obtained when directly processing the output of an automatic face detector and the registration of facial features. Our findings suggest that user-independent, fully automatic, real-time coding of basic expressions is an achievable goal, at least for applications in which frontal views or multiple cameras can be assumed. Our study provides evidence that affective states are particularly relevant in HRI. Participants found the interaction with NAO stimulating, and many of them appreciated the reminders and prompts, as well as the fact that the humanoid robot called them by name and started each training session with an orientation to time and place.
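The discussion notes that automatic recognition lets psychologists follow participants' mood across training sessions, even when faces are partially occluded. One simple way to turn a recognizer's per-frame labels into a per-session summary is sketched below. It is an assumption, not the paper's procedure: frames without a usable face are marked None and skipped rather than counted as neutral, and both the grouping of expressions into positive and negative valence (with neutral and surprise excluded) and the net valence index are hypothetical choices.

```python
from collections import Counter

# Hypothetical valence grouping (not from the paper): happiness is positive;
# anger, disgust, fear and sadness are negative; NE and SU are left out.
POSITIVE = {"HA"}
NEGATIVE = {"AN", "DI", "FE", "SA"}


def session_profile(frame_labels):
    """Summarise per-frame expression labels for one participant and session.

    frame_labels: sequence of expression codes, or None for frames in which
    no usable face was found (e.g. heavy occlusion); such frames are skipped.
    Returns (share of each code among usable frames, net valence index), where
    the index is (positive frames - negative frames) / usable frames.
    """
    usable = [label for label in frame_labels if label is not None]
    if not usable:
        return {}, 0.0
    counts = Counter(usable)
    n = len(usable)
    proportions = {code: c / n for code, c in counts.items()}
    pos = sum(counts[c] for c in POSITIVE)  # Counter returns 0 for absent codes
    neg = sum(counts[c] for c in NEGATIVE)
    return proportions, (pos - neg) / n


# Toy sequence of per-frame labels (not study data).
frames = ["NE", "NE", None, "HA", "HA", "SA", None, "NE"]
props, valence = session_profile(frames)
print(props, valence)
```

Skipping occluded frames keeps an unreadable face from inflating the neutral share, which matters here because neutral was already the most frequent expression.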
The most remarkable results were the gender and task differences, with women showing mostly positive emotions, probably because the different tasks engaged diverse capabilities; this is understandable given the varied nature of human emotions and of the ways of expressing them. The positive valence expressed by female participants can be seen as a form of self-regulation between actions and emotions: positive emotions may support the generation of resources and thoughts that help select the correct action. A participant's reaction to a particular task could trigger a cycle of positive or negative emotions linked to the corresponding action. Through positive reinforcement, humans can improve skills and capacities in interaction; for example, when facing challenging situations in a positive emotional state, people are more likely to make assertive decisions than when they carry a negative emotional charge. During human-robot interaction, the mirror circuit, which is involved in social interaction, has been shown to be active [18], suggesting that humans can regard robots as real companions with their own intentions. The gender differences observed here are consistent with studies of facial emotion recognition that reported gender-dependent differences in sensitivity to expressions. Evidence on gender effects in emotional processing in MCI is limited, and further studies are needed to elucidate this aspect.

Although facial expressions have been shown to be quite effective in communicating affective responses, some researchers argue that body movement and posture may reveal underlying emotions that would otherwise remain hidden, because some emotion categories have no corresponding facial expression and some categories share the same facial expression. Additionally, emotional episodes could be classified from bio-physiological signals or from the brain structures and neurotransmitters involved. This reinforces the need for complementary, non-facial channels of affective expression. Nevertheless, our experimental results show that the proposed system can detect emotions with satisfactory accuracy and capture changes in the emotional behavior of participants interacting with the robot. In future work, further analyses of the video corpus will investigate engagement, how participants were involved during the memory training program, and how to better include individuals with MCI in new rehabilitation programs mediated by a social robot, developing emotional companion systems with richer emotional capabilities that connect with humans and offer more natural interaction.

References

Albert MS, Cohen C, Koff E (1991) Perception of affect in patients with dementia of the Alzheimer type. Arch Neurol 48(8):791–795

Bäck I, Makela K, Kallio J (2013) Robot-guided exercise program for the rehabilitation of older nursing home residents. Annals of Long Term Care 21(6):38–41

Bailey PE, Brady B, Ebner NC, Ruffman T (2020) Effects of age on emotion regulation, emotional empathy, and prosocial behavior. The Journals of Gerontology B: Psychological Sciences and Social Sciences 75(4):802–810. https://doi.org/10.1093/geronb/gby084

Beadle JN, Christine E (2019) Impact of aging on empathy: Review of psychological and neural mechanisms. Frontiers in Psychiatry 10:331. https://doi.org/10.3389/fpsyt.2019.00331

Beck A, Cañamero L, Hiolle A, Damiano L, Cosi P, Tesser F, Sommavilla G (2013) Interpretation of emotional body language displayed by a humanoid robot: A case study with children. International Journal of Social Robotics 5(3):325–334

Boissy P, Corriveau H, Michaud F, Labonté D, Royer MP (2007) A qualitative study of in-home robotic telepresence for home care of community-living elderly subjects. J Telemed Telecare 13(2):79–84

Corcella L, Manca M, Nordvik JE, Paternò F, Sanders AM, Santoro C (2019) Enabling personalisation of remote elderly assistance. Multimedia Tools and Applications 78(15):21557–21583

Dahl TS, Boulos MNK (2014) Robots in health and social care: a complementary technology to home care and telehealthcare? Robotics 3(1):1–21

Damasio AR (1995) Descartes' error: emotion, reason, and the human brain. Penguin Book, New York (NY)

Dautenhahn K, Ogden B, Quick T (2002) From embodied to socially embedded agents–implications for interaction-aware robots. Cogn Syst Res 3(3):397–428. https://doi.org/10.1016/S1389-0417(02)00050-5

De Carolis B, Ferilli S, Palestra G (2015) Improving speech-based human robot interaction with emotion recognition. In: International Symposium on Methodologies for Intelligent Systems, Springer, pp. 273–279

De Carolis B, Ferilli S, Palestra G (2017) Simulating empathic behavior in a social assistive robot. Multimedia Tools and Applications 76(4):5073–5094. https://doi.org/10.1007/s11042-016-3797-0

De Carolis B, Macchiarulo N, Palestra G (2019) Soft biometrics for social adaptive robots. In: International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Springer, pp. 687–699

De Carolis B, Palestra G, Pino O (2019) Facial expression recognition from NAO robot within a memory training program for individuals with mild cognitive impairment. In: Proceedings of the Workshop Socio-Affective Technologies: An interdisciplinary approach co-located with IEEE SMC 2019 (Systems, Man and Cybernetics), pp 42–46

De Carolis BN, Ferilli S, Palestra G, Carofiglio V (2015) Towards an empathic social robot for ambient assisted living. In: ESSEM@ AAMAS, pp. 19–34. https://dl.acm.org/citation.cfm?id=3054107

Ekman P (2006) Darwin and facial expression: A century of research in review. Malor Books, Cambridge, MA Los Altos, CA

Elferink MWO, van Tilborg I, Kessels RP (2015) Perception of emotions in mild cognitive impairment and Alzheimer's dementia: does intensity matter? Translational Neuroscience 6(1):139–149. https://doi.org/10.1515/tnsci-2015-0013

Farina E, Baglio F, Pomati S, d’Amico A, Campini IC, Di Tella S, Belloni G, Pozzo T (2017) The mirror neurons network in aging, mild cognitive impairment, and Alzheimer disease: A functional MRI study. Frontiers in Aging Neuroscience 9:371. https://doi.org/10.3389/fnagi.2017.00371

Fincannon T, Barnes LE, Murphy RR, Riddle DL (2004) Evidence of the need for social intelligence in rescue robots. In: 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)(IEEE Cat. No. 04CH37566), vol. 2, pp. 1089–1095. IEEE

Gauthier S, Reisberg B, Zaudig M, Petersen RC, Ritchie K, Broich K, Belleville S, Brodaty H, Bennett D, Chertkow H, Cummings JL, de Leon M, Feldman H, Ganguli M, Hampel H, Scheltens P, Tierney MC, Whitehouse P, Winblad B (2006) Mild cognitive impairment. Lancet 367(9518):1262–1270. https://doi.org/10.1016/S0140-6736(06)68542-5

Gazzola V, Rizzolatti G, Wicker B, Keysers C (2007) The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage 35(4):1674–1684

Gollin D, Ferrari A, Peruzzi A (2007) Una palestra per la mente. Stimolazione cognitiva per l’invecchiamento cerebrale e le demenze. Edizioni Erickson, Trento (IT)

Gross HM, Schroeter C, Mueller S, Volkhardt M, Einhorn E, Bley A, Langner T, Martin C, Merten M (2011) I’ll keep an eye on you: Home robot companion for elderly people with cognitive impairment. In: Systems, Man, and Cybernetics (SMC), 2011 IEEE International Conference on, pp. 2481–2488. IEEE

Gross HM, Schroeter C, Mueller S, Volkhardt M, Einhorn E, Bley A, Langner T, Merten M, Huijnen C, van den Heuvel H, et al. (2012) Further progress towards a home robot companion for people with mild cognitive impairment. In: Systems, Man, and Cybernetics (SMC), 2012 IEEE International Conference on, pp. 637–644. IEEE

Karas G, Scheltens P, Rombouts S, Visser P, Van Schijndel R, Fox N, Barkhof F (2004) Global and local gray matter loss in mild cognitive impairment and Alzheimer's disease. Neuroimage 23(2):708–716

Kasper S, Bancher C, Eckert A, Förstl H, Frölich L, Hort J, Korczyn AD, Kressig RW, Levin O, Paloma MSM (2020) Management of Mild Cognitive Impairment (MCI): The need for national and international guidelines. World Journal of Biological Psychiatry 21(8):579–594. https://doi.org/10.1080/15622975.2019.1696473

Knapp ML, Hall JA, Horgan TG (2013) Nonverbal communication in human interaction. Cengage Learning Inc. Boston (MA)

Kory J, Breazeal C (2014) Storytelling with robots: Learning companions for preschool children’s language development. In: Robot and Human Interactive Communication, 2014 RO-MAN: The 23rd IEEE International Symposium on, IEEE, pp. 643–648

Law M, Sutherland C, Ahn HS, MacDonald BA, Peri K, Johanson DL, Vajsakovic DS, Kerse N, Broadbent E (2019) Developing assistive robots for people with mild cognitive impairment and mild dementia: a qualitative study with older adults and experts in aged care. BMJ Open 9(9):e031937. https://doi.org/10.1136/bmjopen-2019-031937

Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I (2010) The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, IEEE, pp. 94–101

Martín F, Agüero C, Cañas JM, Abella G, Benítez R, Rivero S, Valenti M, Martínez-Martín P (2013) Robots in therapy for dementia patients. Journal of Physical Agents 7(1):48–55

Martín F, Agüero CE, Cañas JM, Valenti M, Martínez-Martín P (2013) Robotherapy with dementia patients. International Journal of Advanced Robotic Systems 10(1):10

McCade D, Savage G, Naismith SL (2011) Review of emotion recognition in Mild Cognitive Impairment. Dementia and Geriatric Cognitive Disorders 32(4):257–266. https://doi.org/10.1159/000335009

Moyle W, Jones C, Cooke M, O’Dwyer S, Sung B, Drummond S (2014) Connecting the person with dementia and family: a feasibility study of a telepresence robot. BMC Geriatr 14(1):7. https://doi.org/10.1186/1471-2318-14-7

Nourbakhsh IR, Kunz C, Willeke T (2003) The mobot museum robot installations: A five year experiment. In: Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), vol. 4, IEEE, pp. 3636–3641

Palestra G, Pettinicchio A, Del Coco M, Carcagni P, Leo M, Distante C (2015) Improved performance in facial expression recognition using 32 geometric features. In: International Conference on Image Analysis and Processing, Springer, pp. 518–528

Palestra G, De Carolis B, Esposito F (2017) Artificial intelligence for robot-assisted treatment of autism. In: WAIAH@ AI* IA, pp. 17–24

Perugia G, van Berkel R, Díaz-Boladeras M, Català-Mallofré A, Rauterberg M, Barakova E (2018) Understanding engagement in dementia through behavior. The ethographic and Laban-inspired coding system of engagement (ELICSE) and the evidence-based model of engagement-related behavior (EMODEB). Front Psychol 9:690. https://doi.org/10.3389/fpsyg.2018.00690

Petersen RC, Caracciolo B, Brayne C, Gauthier S, Jelic V, Fratiglioni L (2014) Mild cognitive impairment: A concept in evolution. J Intern Med 275(3): 214–228. https://doi.org/10.1111/joim.12190

Petersen RC, Lopez O, Armstrong MJ, Getchius TS, Ganguli M, Gloss D, Gronseth GS, Marson D, Pringsheim T, Day GS, et al. (2018) Practice guideline update summary: Mild cognitive impairment: Report of the Guideline Development, Dissemination, and Implementation Subcommittee of the American Academy of Neurology. Neurology 90(3):126–135

Picard RW (1999) Affective computing for HCI. In: HCI (1), Citeseer, pp 829–833

Pino O (2015) Memory impairments and rehabilitation: Evidence-based effects of approaches and training programs. The Open Rehabilitation Journal 8(1):25–33. https://doi.org/10.2174/1874943720150601E001

Pino O, Palestra G, Trevino R, De Carolis B (2020) The humanoid robot NAO as trainer in a memory program for elderly people with mild cognitive impairment. International Journal of Social Robotics 12(1):21–33. https://doi.org/10.1007/s12369-019-00533-y

Posner J, Russell JA, Peterson BS (2005) The circumplex model of affect: an integrative approach to affective neuroscience, cognitive development, and psychopathology. Development and Psychopathology 17(3):715–734

Reyes ME, Meza IV, Pineda LA (2019) Robotics facial expression of anger in collaborative human–robot interaction. Int J Adv Robot Syst 16(1). https://doi.org/10.1177/1729881418817972

Rouaix N, Retru-Chavastel L, Rigaud AS, Monnet C, Lenoir H, Pino M (2017) Affective and engagement issues in the conception and assessment of a robot-assisted psychomotor therapy for persons with dementia. Front Psychol 8:950. https://doi.org/10.3389/fpsyg.2017.00950

Sabanovic S, Bennett CC, Chang WL, Huber L (2013) Paro robot affects diverse interaction modalities in group sensory therapy for older adults with dementia. In: Rehabilitation Robotics (ICORR), 2013 IEEE International Conference on, IEEE, pp. 1–6

Spoletini I, Marra C, Di Iulio F, Gianni W, Sancesario G, Giubilei F, Trequattrini A, Bria P, Caltagirone C, Spalletta G (2008) Facial emotion recognition deficit in amnestic mild cognitive impairment and Alzheimer disease. Am J Geriatr Psychiatr 16(5):389–398

Valentí Soler M, Agüera-Ortiz L, Olazarán Rodríguez J, Mendoza Rebolledo C, Pérez Muñoz A, Rodríguez Pérez I, Osa Ruiz E, Barrios Sánchez A, Herrero Cano V, Carrasco Chillón L et al (2015) Social robots in advanced dementia. Front Aging Neurosci 7:133. https://doi.org/10.3389/fnagi.2015.00133

Vital JP, Couceiro MS, Rodrigues NM, Figueiredo CM, Ferreira NM (2013) Fostering the NAO platform as an elderly care robot. In: 2013 IEEE 2nd International Conference on Serious Games and Applications for Health (SeGAH), IEEE, pp. 1–5

Funding

Open access funding provided by Università degli Studi di Parma within the CRUI-CARE Agreement.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Palestra, G., Pino, O. Detecting emotions during a memory training assisted by a social robot for individuals with Mild Cognitive Impairment (MCI). Multimed Tools Appl 79, 35829–35844 (2020). https://doi.org/10.1007/s11042-020-10092-4


DOI: https://doi.org/10.1007/s11042-020-10092-4