Abstract

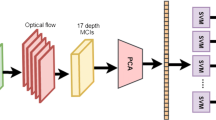

This work proposes a novel framework for first-person human activity recognition. The methodology is inspired by the central role that moving objects play in egocentric activity videos. Using a deep convolutional neural network, we detect objects and build discriminative object flow histograms that represent fine-grained micro-actions over short temporal windows. The framework rests on the assumption that large-scale activities are composed of fine-grained micro-actions. We gather all the micro-actions and cluster them with a Gaussian Mixture Model to build a micro-action vocabulary, which is later used in a Fisher encoding scheme. Results show that our method can reach a 60% recognition rate on the benchmark ADL dataset. The capabilities of the proposed framework are further demonstrated through an extensive evaluation over a wide range of hyper-parameters and a comparison with other state-of-the-art works.
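The abstract does not spell out how an object flow histogram is computed. As a rough illustration only, the sketch below bins the optical flow vectors that fall inside one detected object box by orientation. The function name `object_flow_histogram`, the 8-bin layout, the magnitude weighting and the L1 normalisation are all assumptions made for this example, not details taken from the paper.

```python
import math

def object_flow_histogram(flow, box, n_bins=8):
    """Magnitude-weighted histogram of flow orientations inside one box.

    Hypothetical helper for illustration; not the authors' descriptor.
    flow: 2-D grid (list of rows) of (dx, dy) displacement tuples.
    box:  (x0, y0, x1, y1) pixel bounds, with x1 and y1 exclusive.
    Angles are measured in image coordinates (y grows downwards).
    """
    x0, y0, x1, y1 = box
    hist = [0.0] * n_bins
    bin_width = 2.0 * math.pi / n_bins
    for y in range(y0, y1):
        for x in range(x0, x1):
            dx, dy = flow[y][x]
            magnitude = math.hypot(dx, dy)
            if magnitude == 0.0:
                continue  # static pixels carry no orientation
            # Map the angle from (-pi, pi] to [0, 2*pi) before binning.
            angle = math.atan2(dy, dx) % (2.0 * math.pi)
            hist[int(angle / bin_width) % n_bins] += magnitude
    total = sum(hist)
    # L1-normalise so boxes of different sizes are comparable (assumption).
    return [h / total for h in hist] if total > 0 else hist

# Toy 2x2 flow field: two moving pixels, two static ones.
toy_flow = [
    [(2.0, 1.0), (0.0, 0.0)],
    [(-1.0, -2.0), (0.0, 0.0)],
]
toy_hist = object_flow_histogram(toy_flow, (0, 0, 2, 2))
```

Under these assumptions, descriptors of this kind, collected per object over a short temporal window, would be the inputs that are later clustered with the Gaussian Mixture Model and Fisher-encoded.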

Acknowledgements

This work is supported by the project SUITCEYES that has received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 780814.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Giannakeris, P., Petrantonakis, P.C., Avgerinakis, K. et al. First-person activity recognition from micro-action representations using convolutional neural networks and object flow histograms. Multimed Tools Appl 80, 22487–22507 (2021). https://doi.org/10.1007/s11042-020-09902-6

DOI: https://doi.org/10.1007/s11042-020-09902-6