Abstract

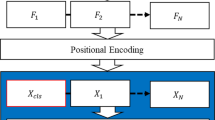

In the recent past, deep convolutional neural networks (DCNNs) have been used in the majority of state-of-the-art methods owing to their remarkable performance in a number of computer vision applications. However, DCNNs are computationally expensive and require more resources and computation time. In addition, deeper architectures are prone to overfitting when a small dataset is used. To address these limitations, we propose a simple and computationally efficient DCNN architecture for human activity recognition, based on the concept of multiscale processing. We increase the width and depth of the network through careful design, which results in improved utilization of computational resources. First, we design a small micro-network with convolutional kernels of varying receptive field sizes (1\(\times\)1, 3\(\times\)3, and 5\(\times\)5) to extract discriminative information about human objects that vary in size, pose, orientation, and view. Then, the proposed DCNN architecture is built by stacking repeated building blocks of small micro-networks with the same topology. Here, we factorize larger convolutional operations into stacks of smaller convolutional operations to make the network computationally efficient. A softmax classifier is used for activity classification. The advantages of the proposed architecture over standard deep architectures are its computational efficiency and its flexibility for use with both small and large datasets. To evaluate the effectiveness of the proposed architecture, extensive experiments are conducted on publicly available datasets, namely UCF sports, IXMAS, YouTube, TV-HI, HMDB51, and UCF101. The activity recognition results show that the proposed method outperforms other existing state-of-the-art methods.
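The efficiency argument behind factorizing a larger convolution can be illustrated with simple arithmetic. The sketch below is not taken from the paper: it assumes the common scheme in which one 5×5 convolution is replaced by two stacked 3×3 convolutions, and it uses a hypothetical channel count of 64 with equal input and output channels. The helper names `conv_weights` and `stacked_receptive_field` are illustrative only.

```python
def conv_weights(kernel, c_in, c_out):
    """Weight count of a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * c_in * c_out


def stacked_receptive_field(kernels):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernels:
        field += k - 1
    return field


channels = 64  # hypothetical channel count, not a value from the paper

# One 5x5 convolution versus two stacked 3x3 convolutions (assumed scheme).
single_5x5 = conv_weights(5, channels, channels)
two_3x3 = 2 * conv_weights(3, channels, channels)
saving = 1 - two_3x3 / single_5x5

print(f"5x5 weights:      {single_5x5}")
print(f"two 3x3 weights:  {two_3x3}")
print(f"receptive field:  {stacked_receptive_field([3, 3])}")
print(f"weight reduction: {saving:.0%}")
```

Under these assumptions, the stacked pair covers the same 5×5 receptive field with 18/25 of the weights, a reduction of 28%. The actual savings in the proposed architecture depend on its channel widths and on the factorization it uses, neither of which the abstract specifies.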

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the Science and Engineering Research Board (SERB), Department of Science and Technology (DST), New Delhi, India, under Grant No. CRG/2020/001982.

Ethics declarations

Conflict of interest

There is no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kushwaha, A., Khare, A. & Prakash, O. Micro-network-based deep convolutional neural network for human activity recognition from realistic and multi-view visual data. Neural Comput & Applic 35, 13321–13341 (2023). https://doi.org/10.1007/s00521-023-08440-0

DOI: https://doi.org/10.1007/s00521-023-08440-0