Abstract

Brain tumour tissue segmentation is essential for clinical decision making. While manual segmentation is time consuming, tedious, and subjective, it is very challenging to develop automatic segmentation methods. Deep learning with convolutional neural network (CNN) architecture has consistently outperformed previous methods on such challenging tasks. However, the local dependencies of pixel classes cannot be fully reflected in the CNN models. In contrast, hand-crafted features such as histogram-based texture features provide robust feature descriptors of local pixel dependencies. In this paper, a classification-based method for automatic brain tumour tissue segmentation is proposed using combined CNN-based and hand-crafted features. The CIFAR network is modified to extract CNN-based features, and histogram-based texture features are fused to compensate the limitation in the CIFAR network. These features together with the pixel intensities of the original MRI images are sent to a decision tree for classifying the MRI image voxels into different types of tumour tissues. The method is evaluated on the BraTS 2017 dataset. Experiments show that the proposed method produces promising segmentation results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Brain gliomas are tumours associated with glial cells. They are primary tumour among adults. Investigations on improving diagnosis of gliomas are thus highly desirable [23, 26]. In the medical imaging field, the decision tree (DT) classifier has been chosen as the best classifier among other conventional classifiers for brain tumour segmentation [22, 31]. Some hand-designed features were exploited as input to the DT classifier [6, 28, 32]. In order to provide good description of different tissue classes, a large number of features are needed. However, a high dimensional feature vector will cause the problem of high complexity with high computational cost. This limitation was solved using another variant of discriminating approaches.

Recently, deep convolutional neural network (CNN) methods have attracted great attention in the image classification field. Some of the CNN-based approaches have been adopted for segmentation of brain tumour in medical images, especially in MRI data [2, 12, 27, 39]. The classification in the CNN-based approaches is performed on pixels. Therefore, the main limitation in CNN-based methods is that the local pixel dependencies are not fully considered for pixel classification [24, 37, 38]. On the other hand, some hand-crafted features such as histogram-based texture features have been proved to be robust feature descriptors of pixel local dependencies. Hence, the main hypothesis of this paper is that more local information would be achieved by fusing machine-learned with hand-crafted features to improve the classification method for brain tumour segmentation.

In this paper, CIFAR architecture [17, 18] is selected for model implementation since CIFAR network provided good performance in classification task. However, the size of the input images to the CIFAR network is 32 × 32 pixels, which restricts the size of the input image patches in our work. Because the pooling layers in the CIFAR architecture cause down-sampling in the data, some of the image information which is useful for learning is discarded. Moreover, the center pixel of the image patch with size 32 × 32 pixels does not have enough dependencies to give a label from this patch which includes a lot of overlap tissue information. In other words, only limited space of context features is explored because it is a patch-wise method. Accordingly, Histogram-based texture features are used to compensate the loss information by providing features with the high correlation among neighbouring voxels for more accurate classification.

The motivation of this paper is to generate such a model that would be capable of extracting useful features from the MRI images such as local dependency features and combine them with machine-learned features which would be more useful for accurate segmentation of complex tumour structures.

The contributions of this paper include:

-

1.

A novel histogram-based texture descriptor is developed for each pixel of interest within each MRI modality, which is based on Otsu’s image thresholding method. The distribution of the similar intensities of different brain tissues is utilized to encode the local correlation among the neighbouring pixels into the feature representation.

-

2.

A new method is proposed to address the limitation in CIFAR network and increase the performance of brain tumour segmentation by combining the hand-designed features with the machine-learned features that are extracted from the last fully connected layer of the CIFAR architecture.

-

3.

A decision tree-based method is introduced for accurate brain tumour segmentation based on the combined features together with the image intensities.

The rest of the paper is organized as follows. Section 2 describes the related work. Section 3 explains the proposed approach. Section 4 presents the experimental results, and Section 5 draws the conclusions of this work.

2 Related work

Automatic brain tumour segmentation is a challenging task, and various methods have been proposed to tackle this problem. This section will review some of the relevant works for brain tumour segmentation.

For early works of machine learning based brain tumour segmentation methods, including random forest, conditional random fields, and SVM-based methods, the readers are referred to the papers [3, 11, 13, 19, 22, 29]. These methods often require a large number of features, which makes them slow to compute and expensive to occupy memory [12]. Menze et al. [22] mentioned deep learning methods for image segmentation, but no results were shown about them in the paper.

A practical deep learning method using CNN for tumour segmentation was proposed by Havaei et al. [12]. In their method, a multi-scale architecture was employed by combining features from two pathways with different filter sizes. They furthermore improved their results by cascading their models. An increasing number of convolutional layers with 3 × 3 filter sizes was presented by Pereira et al. [27]. They developed different networks to segment low grade and high grade glioblastomas (LGG and HGG), separately. Their LGG network contains 4 convolutional layers, followed by two fully convolutional layers and a classification network. The HGG network consists of 7 convolutional layers. Thaha et al. [34] employed 3 × 3 kernels to build a deep architecture of an enhanced CNN model. To improve the performance, they performed intensity normalization and data augmentation in pre-processing the images. The above-mentioned used 2D convolutions, and they did not considered the local pixel dependencies.

A novel method was presented in [14], which designed multi-cascade CNNs to take care of several local pixel dependencies and multi-scale features of 3D MRI images. The output results of the CNNs were refined using conditional random fields. Razzak et al. [30] suggested a two-pathway-group CNN (2PG-CNN) model which incorporates the global and local information in parallel. They further employed the cascaded 2PG-CNN architecture to enhance the overall performance. Kamnitasas et al. [16] developed an effective dense training approach by processing the adjacent patches into one pass. They have employed dual pathway architecture to incorporate both local and larger contextual features. At the final stage, a 3D fully connected conditional random field was applied to refine the segmentation results.

It is clear from the literature review that all CNN-based methods are patch-wise ones with many redundant convolutional calculations, and they can only explore spatially limited contextual features because the local pixel dependencies are not fully considered. To compensate this limitation, researchers developed different pipeline models, such as multi-cascade CNNs or a large number of convolutional layers, but these pipelines take too much computational time for training and need expensive memory and/or lead to overfitting. This limitation is addressed in our proposed method by utilising only one simple CNN architecture (CIFAR) which is small enough to train quickly with less number of parameters. We combine the CNN extracted features and the hand-crafted ones together with pixel intensity values of the original MRI modals, feed them to a DT classifier to determine the classes of the pixels. We develop a novel histogram-based texture descriptor as the hand-crafted features to encode the pixel local dependencies.

3 Proposed approach

The proposed technique is comprised of three main stages, which are pre-processing, features extraction and classification. The image intensities of the MRI modalities (FLAIR, T1ce, T2) are normalized in the pre-processing stage. Then, the MRI modalities are partitioned into regular patches with the same size. The image patches are then fed into the CIFAR network and the highest output scores of which are taken as the machine-learned features. From the same MRI modalities, histogram-based texture features are extracted as hand-crafted features. In the last stage, both types of features together with pixel intensities of the same images are fed into a DT classifier to classify the pixel of each MRI image into healthy tissue and sub-tumour tissue regions. The overall workflow of the proposed method is shown in Fig. 1

3.1 Data pre-processing

MRI data sets are usually collected from different MRI sources, such as different manufacturers and scanner-models. Therefore, some artifacts, such as inhomogeneity in the dynamic intensity range of brain tissues, exist in the data sets. These artifacts can affect the quality of data and are considered as problems which limit the performance accuracy of different medical image segmentation techniques. They cause false intensity levels, which leads to false positives in automated segmentation. To address this problem, we adopt the following steps:

-

The bias field correction algorithm in N4ITK [35] is applied to the MRI data.

-

To compensate for the MRI inhomogeneity, intensity normalization is performed on different MRI modalities by transforming the mean intensity value and variance to 0 and 1, respectively.

-

The top and bottom 1% intensity values for each MRI images are removed, as the 1% highest values correspond to the hyper intensities of the remaining voxels related to the skull, while the 1% lowest values correspond to the background noise.

3.1.1 Image patches extraction

Most of the classification-based brain tumour segmentation approaches [8, 12] divide the MR images into a specific size of 2D image patches as input data. We also need to divide the MR images into patches in this work. A couple of steps for patch selection are used which had a great impact on the results.

The first step is patch size selection. After combining the 3 MRI modalities (FLAIR, T1ce, T2), patches of sizes 64 × 64 are extracted from the FLAIR modality, and patches of size 32 × 32 are extracted from the other two MRI modalities, T1ce and T2. All corresponding patches of different sizes have centered at the same pixel. The reason that the patch size 64 × 64 instead of 32 × 32 is selected for the FLAIR modality is that the FLAIR is considered as a highly effective MRI sequence image which provides information that helps to separate the complete tumour boundary region from the healthy tissue [21], and a large patch size is necessary to provide global special feature information for machine learning [12]. The patch size 32 × 32 is selected for the T1ce and T2 modalities because smaller size is required to provide local details for machine learning. The T1ce modality provides information that is related to the necrosis and the active tumour region (enhancing tumour) which can be distinguished easily, while T2 modality represent more information related to the tumour structure and healthy tissues [5]. See Fig. 2.

The second step is patch selection control. Normally, patches are randomly selected from the MRI data according to the types of classes. However, the trained model based on this selection usually classifies most patches within the area of the brain tissues as tumour, and only the patches within the black areas as background. This happens due to the fact that about half of the background patches are in the zero-intensity area with no brain tissues. See Fig. 3. Nevertheless, when the selection process is controlled to exclude patches in which more than 20% of the pixels are of zero-intensity, the results can be significantly improved. See Fig. 4.

3.2 CIFAR model architecture and features extraction

CIFAR network is designed as a series of convolutional layers for image classification task. The details of the CIFAR net are shown in Fig. 5. CIFAR model takes images with size 32 ×32 pixel as input to the first layer. Next to the input layer are the middle layers of the network which are made up of repeated 3 blocks. Each block includes 5 ×5 kernel size Convolutional layer with a pad size 2 and stride 1, followed by the ReLU (rectified linear units) and a Max pooling layer with pool size 3, stride 2 and padding size 0. The convolutional layers describe sets of filter weights, which are updated during network training. The ReLU layer improves non-linearity to the network, which permit the network to approximate non-linear functions that map image pixels to the semantic content of the image. The final layers of the CIFAR model are typically composed of 2 fully connected layers which lead into a Softmax loss and Classification output layer. The model follows the architecture described by Alex Krizhevsky [17, 18] with a few differences in the top few layers. The other reason for selecting CIFAR net in this work is that the model is small enough to train fast, which is ideal for trying out new ideas and experimenting with a new data.

The transfer learning is commonly used in deep learning applications. Therefore, a pretrained CIFAR network can be trained again to classify a new set of images. Fine-tuning a network with transfer learning is usually much quicker and easier than training a network with randomly initialized weights from scratch. Additionally, abstract features which are common among different types of images are learned in the first few layers of any CNN. Therefore, learned features can be rapidly transferred to a new task using a smaller number of training images. However, some modifications in pretrained CIFAR should be considered. Starting with the input data, the CIFAR model is designed for RGB natural images of size 32 × 32 pixels with 3 channels. Accordingly in this work, three MRI modalities (FLAIR, T1-contrast and T2-weighted) are combined to form the input data. To fine tune and retrain the CIFAR network for MRI image classification, the last layers are redesigned and the fully connected layer (FC2) of the network, which has ten classes in the original model, is changed to have the desired number of classes, i.e., 4 categories (Background, oedema, necrosis and enhanced tumour) for the brain tumour segmentation task. Additionally, the learning rate factors of the fully connected layer is increased so that the new layers can learn faster than the transferred layers. Figure 6 demonstrates all the transfer learning in pretrained CIFAR network.

After training CIFAR network, each layer produces filter responses to the input MRI modalities. However, there are only a few layers within a trained CIFAR that are suitable for image feature extraction. The basic image features, such as edges, corners and blobs, are captured at the beginning of the network. Then in the deeper layers, these primitive features are processed to form higher level image features. These higher-level features are better suited for brain tumour classification task because they have combined all the primitive features into a better image representation [9]. Typically, the layer right before the classification layer is a good place for feature extraction, where four scores corresponding to background, oedema, necrosis and enhancing tumour are extracted. A four-dimensional feature vector is then constructed for each pixel in the MRI images with the values equivalent to the probabilities of the feature extraction layer. See Fig. 7

3.3 Histogram-based texture feature extraction

Brain tissues have complex structures which contain overlapping normal tissues and tumourous regions. Consequently, the shape and intensity features cannot provide enough information for segmenting brain tumours in MRI images with high accuracy. This task also requests the features related to local or neighbourhood information, such as texture features. Therefore, histogram-based texture features are considered in this work as hand-crafted features for brain tumour and sub-tumour segmentation. The whole pipeline of the histogram-based texture feature descriptor in this work consists of 3 steps: Otsu’s thresholding, image quantization and intensity histogram calculation.

Otsu’s method is one of the most popular threshold selection methods, which gives an acceptable result when the numbers of pixels in each class are close to each other. It is an unsupervised algorithm that utilizes the information from the concerning neighbouring pixels in the image and directly works on the gray level histograms by selecting the thresholds that maximize the difference [25]. In this method, the multi-level threshold version of Otsu [20] on gray scale images is used to produce a reasonably segmented image with the pixels being clustered appropriately. Each MRI modality (FLAIR, T1ce, T2) is decomposed into clusters. The number of thresholds and thus that of clusters is a tunable parameter, which is defined or selected by the user empirically based on the number of healthy and pathology tissues. In the MR images of brain tumour, the major tissues include tumour are oedema, necrosis, enhanced tumour, white matters (WM), gray matters (GM) and cerebrospinal fluid (CSF), and thus the number of clusters is determined based on the number of tissue types. Image quantization is then based on the number of clusters (N Cluster), and each pixel in a quantized image is assigned a gray level value by the label of its cluster (ID). See Fig. 8, where different labels are also visualized with different colors. From the figure, it can show the various clusters in the quantized images in color by converting a label into a color RGB image to visualize the labeled regions.

A histogram can represent the texture feature of a center pixel with its neighbourhood of size m × m pixels. In this work, the texture feature-based histogram is computed by summing up the intensity values of the original MRI modalities (FLAIR, T1ce and T2) corresponding to each cluster inside the window. Different fixed window sizes (5 × 5), (7 × 7), (9 × 9) and (11 × 11) were experimented. The results are not effected when using window sizes (5 × 5) and (7 × 7). However, the best result was obtained at the window size 9 × 9 as this window size is enough for providing local information to label the center pixel. We do not use window size 11 × 11 as the results are similar as using window size 9 × 9 and is costs more time than 9 × 9 window. Normalized intensity representing the center pixel will also be used in pixel classification. Therefore, the feature representation of each pixel can achieve local dependencies by encoding information of the center pixel conditionally depending on its neighbourhood. An example of the histogram intensity is demonstrated in Fig. 9.

(a) top to down demonstrates FLAIR, T1ce and T2 MRI modalities, respectively with 9 × 9 pixel intensities neighbourhood of center pixel. (b) top to down illustrates quantized images of corresponding FLAIR, T1ce and T2 MRI modalities with 9 × 9 ID neighbourhood of center pixel. (C) Mapping the 9 × 9 pixel intensities neighbourhood of the center pixel of the three MRI modalities into intensity histogram

3.4 Hybrid features

As discussed in Section 2.3, the histogram-based texture feature is considered as a hand-crafted feature which provides a robust descriptor that indicates the local dependences. Therefore, more local information will be incorporated by combining histogram-based texture features with the CIFAR based feature to improve the final brain tumour segmentation. In total, 13 features were calculated from multi-MRI protocols which are described in Table 1.

The CIFAR features which are considered as machine-learned features are extracted from the second fully connected layer of the CIFAR network for the corresponding pixel, while the texture features which are represented as hand-crafted features are extracted based on the histogram of quantized image in a fixed window of 9 × 9, centered at that pixel. The voxels inside the brain region which represent the target area are considered for generating a feature vector (i.e., 13 features for each voxel). Then, all these feature vectors are fed into the classifier.

3.5 DT classifier

DT is a flowchart-like tree structure which is used to categories each pixel into healthy or tumour brain tissues. Each node of the tree involves a set of training examples and predictor. Splitting starts from the root node and continues at every node of the tree. The procedure is performed based on the feature representation. The trees grow into a specified tree depth (D tree) which is the main parameter for designing the DT classifier. Different tree depth numbers were tested on BRATS2017 training dataset. Each pixel in the image is classified into a tumour or sub-tumour region candidate. The final predicted mask is generated by mapping back the pixel class which is assigned for each pixel in the testing dataset to the volume of the segmentation mask.

4 Experimental results

In this study, the BRATS2017 dataset [4, 22] was considered which includes 285 MRI scans of patients with high grade glioma (HGG) (210 in total) and low grade glioma (LGG) (75 in total). Each MRI scan has the size 240 × 240 × 150, where 150 is the number of slices in the scan, and 240 × 240 is the size of each slice. Basically, 75% of the patients (158 HGG and 57 LGG) were selected to train the model and 25% (52 HGG and 18 LGG) were assigned as testing set. Three MRI protocols (FLAIR, T1ce, T2) were used as input to the CIFAR network because CIFAR was designed for three input channels. The major tumour information exists in these three MRI modalities which are valuable to produce a good performance in tumour segmentation. Therefore, this configuration was selected in this work.

The performance accuracy of the proposed model was evaluated on the testing data set. As a result of practical clinical application, the standard segmentation of the brain tumour structures are grouped into three different tumour areas which are defined by:

-

Complete tumour (oedema, necrosis , enhanced tumour).

-

tumour core (necrosis, enhanced tumour).

-

Enhanced tumour.

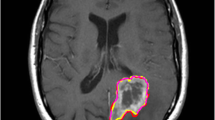

Figure 10 shows the modalities of MRI and the ground truth.

Complete tumour visible in FLAIR (a), the tumour core appears in T2 (b), the enhancing and necrotic tumour component structures are visible in T1ce (c). The final labels of the tumour structures noticeable in (d): Oedema (yellow), necrotic/cystic core (light blue), enhancing core(red) [1]

In each tumour structure, the segmentation results have been evaluated quantitatively using the F-measure which provides an intersection measurement between the manually defined brain tumour areas and the predicted results of the proposed method, as follows:

The proposed algorithm was implemented using Matlab 2018a and ran on a PC with CPU Intel Core i7 and RAM 16 GB in the operating system Windows 7. Our implementation is based on the specialized Matlab deep learning tool box and classification learner toolbox which also based on Matlab for training DT classifier. Regarding deep learning, the whole training process for the CIFAR model took approximately 2.5 days on a single NVIDIA GPU Titan XP.

The performance of the proposed model(CIFAR_PI_HIS_DT) was evaluated based on the investigation of the four qualified experiments. In the first experiment, the final segmentation masks of brain tumour were classified directly using the CIFAR network (CIFAR). In the second experiment, the MR images of brain tumour were segmented with the features that were extracted from the trained CIFAR network and then classified by the DT (CIFAR_DT). In the third experiment, the normalized pixel intensity in MRI images was considered. The extracted features of CIFAR were combined with a specific normalized pixel intensity of each MRI modalities (FLAIR, T1ce and T2) and then the pixel was classified using the DT classifier (CIFAR_PI_DT). In the final experiment setting, the histogram based texture features were considered in the proposed method for accurate segmentation of brain labels. Table 2 demonstrates our final results and Fig. 11 shows some examples of visual result masks from our proposed models.

It can be seen that the F-measure is not so good for segmentation using only CIFAR network. This indicates that the CIFAR can segment the location of the tumour or the area that contains the tumour regions, but it cannot accurately detect or determined the exact tumour boundaries. There is a slight drop in the F-measure for complete tumour and tumour core if the CIFAR machine learned features are sent into the DT classifier. It means that the CIFAR features have higher matching precision with deep learning classifier in the last layer than with the machine learning classifier (DT). The classifier layer in the CIFAR network perfectly interprets the deep learning features to predict the label of the specific pixel. Adding the pixel intensity to the pipeline slightly improves the F-measure for all areas (CT, TC and ET). It means that the prediction segment masks of tumour region boundaries are much closer to the ground truth. However, the CIFAR_PI_DT method does not consider the local dependencies. It only considers the normalized pixel intensity which provides more global information in the method. Adding the histogram based texture features to the pipeline significantly improves the F-measure for complete tumour, tumour core and enhanced tumour. The reason behind is that the local dependencies are achieved by adding histogram-based texture features to the whole pipeline.Due to the small number of training dataset of MRI examples, CIFAR_PI_HIS_DT model was trained on a pretrained CIFAR rather than from scratch. The original CIFAR is trained on the natural image dataset and this effects on how much the accuracy of the segmentation is increased. One future idea is to initialize the network and achieve a perfect case by using a pretrained CIFAR which is trained on the actual brain MRI dataset.

We also compared our methods with some state-of-the-art methods. Table 3 shows the comparison results. From the table we can see that our method has medium performance among the state-of-the-art methods. However, it is worth mentioning that our model is based on an improved CIFAR network, which is the simplest among the networks in comparison. Our model has less parameters and thus is faster than the other models. Moreover, we can take the pretrained CIFAR model that has already learned to extract powerful and informative features from brain tumour task and use it as a starting point to train with more data set or even to learn a new task, e.g., stroke lesion detection. Consequently, it signals that this model is quite robust to change.

In comparison of the time complexities of different methods, we will instead illustrate their network architecture complexities because the more complex the network architectures are, the more parameters they have, and the more time they need for computation.

The CIFAR network we use consists of only 3 convolution-ReLU-MaxPooling blocks and 2 fully connected layers. Casamitjana’s method [7] used two V-Nets, where each V-Net consists of 21 convolution-PReLU blocks and 4 “Down” convolution layers and 4 “Up” convolution layers. Kamnitsas’s method [15] used ensembles of multiple models and architectures, which consists of two deepMedic models, three 3D FCNs, and two 3D versions of the U-Net architecture [10, 33], where U-Net has the similar complexity as V-Net. And Wang’s method [36] consists of 3 networks, each of which consists of 24 convolution+batch normalization+PReLU and 4 convolution output channels. Based on the architecture comparison, we can find that the complexity of the proposed scheme is significantly lower than other existing schemes.

Bharath’s method [6] involves a superpixel wise two-stage tumour tissue segmentation algorithm. Each stage includes superpixel computation, feature extraction based on multi-linear singular value decomposition on a tensor constructed from multimodal MRI data, and random forest classifier. We cannot compare the time complexities directly based on the architectures of their method with ours. However, as we understand, computations in each step of Bharath’s method is not small.

5 Conclusion

As mention previously, using the CIFAR network as CNN classifier for brain tumour segmentation has drawbacks (incorrectly labelling some of the tumour classes and smooth boundaries of tumour regions in segment results) and this is because that correlation with the near pixels are not considered for classification of the pixels. To overcome these drawbacks, we have combined the hand-crafted features (the histogram-based texture features) and the machine-learned features to compensate the limitation in the local dependencies. The learned features are then applied to DT to classify each pixel in the MRI images into normal brain tissue and different parts of tumour regions. The tumour segmentation labels are oedema, necrosis and enhanced tumour. The proposed model was evaluated on the BRATS2017 dataset. The experiment results demonstrate the high segmentation performance in the F-measure of 0.84% for the whole tumour and the average results of 0.64% and 0.66% for core and enhanced tumour regions, respectively. Overall, the combination of the histogram-based texture features as hand-crafted features technique with the machine-learned features can produce the best possible result masks that close to the expert’s delineation across all the glioma grades, leading to faster brain tumour diagnosis and treatment plan for patients.

References

Alqazzaz S, Sun X, Yang X, Nokes L (2019) Automated brain tumour segmentation on multi-modal MR image using SegNet. Comput Visual Media 5(2):209–219

Badrinarayanan V, Kendall A, Cipolla R (2017) SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell 39(12):2481–2495

Bahadure NB, Ray AK, Thethi HP (2017) Image analysis for MRI based brain tumour detection and feature extraction using biologically inspired BWT and SVM. International Journal of Biomedical Imaging

Bakas S, Akbari H, Sotiras A, Bilello M, Rozycki M, Kirby JS, Freymann JB, Farahani K, Davatzikos C (2017) Advancing the cancer genome atlas glioma MRI collectios with expert segmentation labels and radiomic features. Scientif Data 4:170117

Bauer S, Wiest R, Nolte LP, Reyes M (2013) A survey of MRI-based medical image analysis for brain tumour studies. Phys Med Biol 58(13):R97

Bharath HN, Colleman S, Sima DM, Van Huffel S (2017) tumour segmentation from multimodal MRI using random forest with superpixel and tensor based feature extraction. In: International MICCAI brainlesion workshop. Springer, Cham, pp 463–473

Casamitjana A, Catà M, Sánchez I, Combalia M, Vilaplana V (2017) Cascaded V-Net using ROI masks for brain tumour segmentation. In: International MICCAI brainlesion workshop. Springer, Cham, pp 381–391

Castillo LS, Daza LA, Rivera LC, Arbeláez P (2017) Volumetric multimodality neural network for brain tumour segmentation. In: 13th international conference on medical information processing and analysis, vol 10572. International Society for Optics and Photonics, Bellingham, p 105720E

Donahue J, Jia Y, Vinyals O, Hoffman J, Zhang N, Tzeng E, Darrell T (2014) Decaf: a deep convolutional activation feature for generic visual recognition. In: International conference on machine learning, pp 647–655

Dong H, Yang G, Liu F, Mo Y, Guo Y (2017) Automatic brain tumour detection and segmentation using U-Net based fully convolutional networks. In: Annual conference on medical image understanding and analysis. Springer, Cham, pp 506–517

Gordillo N, Montseny E, Sobrevilla P (2013) State of the art survey on MRI brain tumour segmentation. Magn Reson Imaging 31(8):1426–1438

Havaei M, Davy A, Warde-Farley D, Biard A, Courville A, Bengio Y, Pal C, Jodoin PM, Larochelle H (2017) Brain tumour segmentation with deep neural networks. Med Image Anal 35:18–31

Holli KK, Harrison L, Dastidar P, Wäljas M, Liimatainen S, Luukkaala T, Öhman J, Soimakallio S, Eskola H (2010) Texture analysis of MR images of patients with mild traumatic brain injury. BMC Medical Mmaging 10 (1):8

Hu K, Gan Q, Zhang Y, Deng S, Xiao F, Huang W, Cao C, Gao X (2019) Brain tumour segmentation using multi-cascaded convolutional neural networks and conditional random field. IEEE Access 7:92615–92629

Kamnitsas K, Bai W, Ferrante E, McDonagh S, Sinclair M, Pawlowski N, Rajchl M, Lee M, Kainz B, Rueckert D, Glocker B (2017) Ensembles of multiple models and architectures for robust brain tumour segmentation. In: International MICCAI brainlesion workshop. Springer, Cham, pp 450–462

Kamnitsas K, Ledig C, Newcombe VF, Simpson JP, Kane AD, Menon DK, Rueckert D, Glocker B (2017) Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal 36:61–78

Krizhevsky A (2014) CIFAR Network:cuda-convnet, https://code.google.com/p/cuda-convnet/

Krizhevsky A, Hinton G (2009) Learning multiple layers of features from tiny images (Vol. 1, No. 4, p. 7). University of Toronto, Technical report

Lai Y, Viswanath S, Baccon J, Ellison D, Judkins AR, Madabhushi A (2011) A texture-based classifier to discriminate anaplastic from non-anaplastic medulloblastoma. In: 2011 IEEE 37th annual northeast bioengineering conference (NEBEC), IEEE, pp 1–2

Liao PS, Chen TS, Chung PC (2001) A fast algorithm for multilevel thresholding. J Inf Sci Eng 17(5):713–727

Liu J, Li M, Wang J, Wu F, Liu T, Pan Y (2014) A survey of MRI-based brain tumour segmentation methods. Tsinghua Sci Technol 19 (6):578–595

Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, Burren Y, Porz N, Slotboom J, Wiest R, Lanczi L (2014) The multimodal brain tumour image segmentation benchmark (BRATS). IEEE Trans Med Imaging 34(10):1993–2024

Niyazi M, Brada M, Chalmers AJ, Combs SE, Erridge SC, Fiorentino A, Grosu AL, Lagerwaard FJ, Minniti G, Mirimanoff RO, Ricardi U (2016) ESTRO-ACROP Guideline target delineation of glioblastomas. Radiother Oncol 118(1):35–42

Noh H, Hong S, Han B (2015) Learning deconvolution network for semantic segmentation. In: Proceedings of the IEEE international conference on computer vision, pp 1520–1528

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Transa Syst Man Cybern 9(1):62–66

Patel MR, Tse V (2004) Diagnosis and staging of brain tumours. In Seminars Roentgenol 39(3):347

Pereira S, Pinto A, Alves V, Silva CA (2016) Brain tumour segmentation using convolutional neural networks in MRI images. IEEE Trans Med Imaging 35(5):1240–1251

Phophalia A, Maji P (2017) Multimodal brain tumour segmentation using ensemble of forest method. In: International MICCAI brainlesion workshop. Springer, Cham, pp 159–168

Pinto A, Pereira S, Correia H, Oliveira J, Rasteiro DM, Silva CA (2015) Brain tumour segmentation based on extremely randomized forest with high-level features. In: 2015 37th annual international conference of the IEEE engineering in medicine and biology society (EMBC), pp 3037–3040

Razzak MI, Imran M, Xu G (2018) Efficient brain tumour segmentation with multiscale two-pathway-group conventional neural networks. IEEE J Biomed Health Inform 23(5):1911–1919

Rehman ZU, Naqvi SS, Khan TM, Khan MA, Bashir T (2019) Fully automated multi-parametric brain tumour segmentation using superpixel based classification. Expert Syst Appl 118:598–613

Revanuru K, Shah N (2017) Fully automatic brain tumour segmentation using random forests and patient survival prediction using XGBoost. In: Proceedings of The 6th MICCAI-BRATS challenge, pp 239–243

Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention. Springer, Cham, pp 234–241

Thaha MM, Kumar KPM, Murugan BS, Dhanasekeran S, Vijayakarthick P, Selvi AS (2019) Brain tumour segmentation using convolutional neural networks in mri images. J Med Syst 43(9):294

Tustison NJ, Avants BB, Cook PA, Zheng Y, Egan A, Yushkevich PA, Gee JC (2010) N4ITK: improved N3 bias correction. IEEE Trans Med Imaging 29(6):1310

Wang G, Li W, Ourselin S, Vercauteren T (2017) utomatic brain tumour segmentation using cascaded anisotropic convolutional neural networks. In: International MICCAI Brainlesion Workshop. Springer, Cham, pp 178–190

Zheng S, Jayasumana S, Romera-Paredes B, Vineet V, Su Z, Du D, Huang C, Torr PH (2015) Conditional random fields as recurrent neural networks. In: Proceedings of The IEEE international conference on computer vision, pp 1529–1537

Zhao X, Wu Y, Song G, Li Z, Zhang Y, Fan Y (2018) A deep learning model integrating FCNNs and CRFs for brain tumour segmentation. Med Image Anal 43:98–111

Zikic D, Ioannou Y, Brown M, Criminisi A (2014) Segmentation of brain tumour tissues with convolutional neural networks. In: Proceedings MICCAI-BRATS, pp 36–39

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Al-qazzaz, S., Sun, X., Yang, H. et al. Image classification-based brain tumour tissue segmentation. Multimed Tools Appl 80, 993–1008 (2021). https://doi.org/10.1007/s11042-020-09661-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-020-09661-4