Abstract

Convolutional neural networks (CNN) have become a popular choice for image segmentation and classification. Internal body images are obscure in nature with involvement of noise, luminance variation, rotation and blur. Thus optimal choice of features for machine learning model to classify bleeding is still an open problem. CNN is efficient for attribute selection and ensemble learning makes a generalized robust system. Capsule endoscopy is a new technology which enables a gastroenterologist to visualize the entire digestive tract including small bowel to diagnose bleeding, ulcer and polyp. This paper presents a supervised learning ensemble to detect the bleeding in the images of Wireless Capsule Endoscopy. It accurately finds out the best possible combination of attributes required to classify bleeding symptoms in endoscopy images. A careful setting for CNN layer options and optimizer for back propagation after reducing the color palette using minimum variance quantization has shown promising results. Results of testing on public and real dataset has been analyzed. Proposed ensemble is able to achieve 0.95 on the public endoscopy dataset and 0.93 accuracy on the real video dataset. A detailed data analysis has also been incorporated in the study including RGB pixel intensities, distributions of binary classes and various class ratios for training.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Convolutional Neural Networks (CNN) has become popular for wide range of image processing applications including Wireless Capsule Endoscopy (WCE) due to its powerful feature extraction and classification faculties. CNN is one of the most popular and robust class of deep neural networks [20]. It is particularly useful for finding patterns in the images to recognize objects, faces, and scenes. They have the ability to learn directly from the dataset of the images and thus detecting patterns to classify images and thereby eliminating the need for manual feature extraction. It is a synthetic neural network to perform image analysis through pattern recognition with the help of non linear activation functions and neuron weights, calculated during training process. This property of pattern detection is incredibly helpful to make CNN of the utmost importance. It has hidden layers known as convolutional layers as its basis which makes it completely different from normal Multi-Layer Perceptron (MLP) [8]. These convolutional layers perform scanning operations on the image to look for various features. They receive the input, rework in a way to concise it and forward it to selected subsequent layers. Nowadays CNN has become a really powerful tool for solving image classification tasks as they are able to learn highly discriminating features from raw pixel intensities. However, their applicability to medical image analysis is restricted by the non-availability of enormous sets of annotated knowledge needed for training the CNN model. Although image analysis has been the foremost widespread use of CNN, they can even be used for different data type analyses including classification and regression [21].

Wireless Capsule Endoscopy (WCE) is a new technique to visualize entire digestive trace including small bowel using non-invasive means. It is primarily used to examine areas in small intestine where other endoscopy methods fail. The paper proposes a new CNN augmented architecture for detecting the obscure bleeding cases from wireless capsule endoscopy images. Nevertheless, the proposed method is general and can be utilised for other image classification applications as well. Below are the challenges and contributions of the underlying study.

Challenges

-

1.

In-vivo internal body organ images obtained from capsule endoscopy suffer from various issues such as (a) movement of capsule camera for site capturing (b) organ movements (c) non-ideal luminance and focal conditions to capture (d) no control over the movement of both camera and the organs [4].

-

2.

Images suffer from compression resulting in information loss. Moreover noise and blur are involved during capture phase [15].

-

3.

Data collected for anomaly detection is often imbalanced with greater instances of normal case images relatively. Sometimes the ratio is 1000:1 for healthy:sick while making the classification problem difficult to formulate [1].

-

4.

Optimal CNN architecture also depends upon the underlying data distribution along with layer+parameter tuning. Therefore it is a meta-heuristic problem without a clear cut well defined solution [38].

Contribution

-

1.

This paper proposes a CNN augmented ensemble architecture for training, validation and testing the obscure bleeding symptoms from Capsule Endoscopy (CE) images.

-

2.

For ensemble, copies of the image data have been generated using synthetic augmentation by introducing rotation, blur, illuminance and noise.

-

3.

Color palette reduction has been used using minimum variance quantization, studied in [32] to get 24 colors in the image for optimal balance of accuracy and time-space complexity.

-

4.

Data distribution analysis of the RGB pixel intensities has been studied in detail to demonstrate the complexity of the classification problem.

-

5.

The experimental analysis of the proposed technique has been performed on the public and real dataset and compared with other state-of-art methods.

This paper is structured as follow: Section 2 is about the background of CNN and WCE, Section 3 is about literature survey to trigger the research motivation, Section 4 is about proposed method, Section 5 is about results and experiments and finally Section 6 is conclusion and future possibilities.

2 Background - CNN and WCE

A brief discussion about CNN and WCE has been presened in this section with relevant illustrations.

2.1 Convolutional neural networks

According Professor Geoffrey Hinton (father of convolutional networks) in [22], factors responsible for increased use of CNN are as follows:

-

Eliminate the need of manual feature extraction thereby, saving time and labour. CNN mimics human intelligence to learn from input image examples automatically.

-

CNN produce state-of-the-art recognition results with improved quality and performance.

-

It can be retrained for new recognition tasks, enabling you to build on pre-existing networks.

CNN is composed of an input layer, output layer, and many hidden convolutional layers as intermediate layers (Fig. 1). These layers perform operations that alter the data with the intent of learning features specific to the data. Fundamental inherent components of CNN are: convolution, activation function such as Rectified Linear Unit (ReLU), pooling and fully connected layer. CNN has varied filters to facilitate the pattern recognition ranging from simple to obscure for automatic image feature extraction.

Images features may be colors, texture, boundaries or shapes which are detected by using various geometric filters. Classical conception was that deeper the network, more refined are the filters to detect mature features at the cost of computation time. But latest architectures are able to perform better with fewer layers studied in [11]. In convolutional layer, the block of pixel set (convolution matrix) moves over the whole image while filtering across every block of pixels of the input image.

Output of last convolutional block will yield the feature volume. As an example, Fig. 12 shows the features from three convolution layers and fully connected layers for example gastral images case study. The features are present in the output of the convolutional channels. The fully connected layer produces representation vectors that already digested the features.

It explains about the automatic feature learning and classification phases of CNN framework which is similar to identify bleeding against normal images in endoscopy images for the underlying case.

CNN terminology

Convolution means to blend two functions simultaneously and can be described as follows in case of two variables for digital images:

where f,g are two functions with x,y variables, i,j are indices that run for a N × N pixel image for example. The dot ‘.’ on the right hand side is the element by element product. Thus the convolution is a sum of pointwise products of function values, which is conditioned to traversal. CNN is mostly used to learn from three channel coloured image with input size of 256 × 256 × 3 i.e. the intensity matrices (0-255 values) for R,G and B channels. Input images are known as input volume. Kernels are small squared matrices which are convolved with input volume. This process generates activation maps which indicate the highlighted regions detected in the image. During the training process, the kernel matrix values change to extract required features and highlight the important regions for learning. Details can be found in [31]. In CNN, the receptive field (a two dimensional region say 5 × 5) specify the required neuron connections instead of all possible neuron connections. So, if the input volume is 28 × 28 × 3 and receptive field is 5 × 5, then in convolutional layer, every neuron is connected to 5 × 5 × 3 in the input volume for all 3 color channels. Therefore, number of neuron weights will be 75 which gets updated during successive iterations of Back-propagation similar to a feed-forward network.

CNN architecture

Architecture of Convolutional Neural Network consists of following layers (1) Convolutional layer (2) Pooling layer (3) Rectified Liner Unit layer (4) Fully connected layer. The CNN structure anatomy for the proposed network is shown in Table 1

-

1.

Convolutional layer performs the operations of convolution on the input volume and responsible for neutron firing. It has three dimensional structure due to RGB channels. Neuron connections are defined by the receptive field. This layer calculates the low level image features such as lines, edges and corners. By combining multiple maps, each output map feature is calculated as follows:

$$ {y_{k}^{t}} = f\left( \underset{i\in M_{k}}{\sum} x_{i}^{t-1} * K_{ik}^{t}+{b_{k}^{t}}\right) $$(2)where t is tth layer, M is a set of input maps , Kik is convolutional kernel, bk is bias. Please refer [31] for details.

-

2.

Pooling layer reduces the dimensions (down-sampling) of input volume for successive convolutional operations by keeping the depth same. Down-sampling avoids over-fitting and reduces the overhead. Similar to convolutional layer, it uses sliding window using max or average operations.

-

3.

ReLu layer uses the function max(0,x) but it is not differentiable at (0,0). Therefore a smoother function is used. For example Softplus function which is integral of the Sigmoid function as studied in [47].

$$ f(x) = ln(1+e^{x}) $$(3) -

4.

Fully connected layer is completely connected with its preceding layer and follows the output layer. It is the last stage of CNN.

After convolution, the size of the output image can be calculated using the following formula [31]:

where W is input volume, F is the receptive field, P is the padding value, S is the stride. For example, let the image has size 256 × 256 × 3,(W1 = 256),S = 2,F = 4,P = 0. Then \(W_{2} = \frac {256-4+2.0}{2}+1=128\).

The convolution, pooling, ReLu layer operations are repeated over tens or hundreds of layers, with each layer learning to identify different features. After CNN layers are trained, the CNN architecture shifts to classification phase which is the final phase of its life cycle. The last but one layer is a fully connected layer that outputs a vector equivalent to length of number of classes (binary in our case) that the network will be able to predict. This vector contains the probabilities for each class of any image being classified. The final layer of the CNN architecture uses a classification layer such as softmax to provide the output of the classification of the model.

2.2 Wireless capsule endoscopy

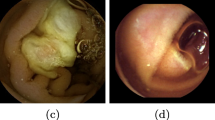

It involves a pill camera similar to the the size of a large vitamin tablet. Two frames per second are recorded by the camera. The patient swallows the capsule and the camera will capture the pictures of entire gastrointestinal tract. Capsule records for about 8 hours and delivers about 55,000 images to the receiver which is put on the waist of the person being examined. When this data is transferred into the computer, the gastroenterologist can see the entire GI tract. The example images of small bowel bleeding symptoms against normal images have been shown in the Fig. 2. The internal body images are blurry red brown in color but bleeding images are more saturated in redness. Digestive tract may involve bleeding, ulcers, tumors and polyp to damage on the intestinal wall or mucous membrane. Capsule endoscopy is mainly used to find the cause of unexplained bleeding in the digestive tract or in case of inflammation or tumors in the small intestine.

Row 1 shows bleeding samples and row 2 shows normal images of GI tract from public data in [5]

Capsule endoscopy is a safe procedure involving little risks only such as the capsule might remain lodged in the body rather than leaving the body through bowel movement. The purpose is not only to identify bleeding but also exact position of bleeding instances [5, 28]. Therefore, automation of this manual procedure would save a lot of valuable time of the doctors and patients waiting for the results of the diagnosis of the endoscopy recording. The current technologies are efficient to traverse WCE images swiftly but still lack automatic diagnosis capability with 100% accuracy. The capsule involved in WCE can be placed in a child with weight as low as 10kg making it a better option for people of all ages. In spite of its benefits, CE is neither a substitute for upper endoscopy nor for colonoscopy. The reason is that CE is only a diagnostic technique and also, it is non-invasive so it cannot help in biopsy.

The developing predominance of intestinal bleeding overall expands the quantity of cases that must be surveyed by doctors. Furthermore, the cost involving the regular check ups and examination are very high and absence of expert specialists keep many people away from accepting a sufficient and effective medication. Algorithmic image classification of WCE is an attempt to assist the analysis of huge image datasets with promising quality and lesser expenses. Below is the literature survey which motivated this research.

3 Literature survey

Authors of this current research paper have also recently worked on explainable machine learning model (based upon CNN) to classify in-vivo gastral images in [33]. It has the capability to justify the test results in contrast to the features involved while highlighting the segmented region of interest in the medical images. Explanable Artificial Intelligence (XAI) provides the insight to a black box machine learning model to reason for the test results derivation [2].

Review on the Applications of Deep Learning for Gastral images has been studied in [6]. Automatic bleeding zone detection using low complexity CNN Structure has been studied in [12] and using multi-stage attention-Unet in [25]. Xing et al.[44] have generated a bleeding detection algorithm of three stages. Pre-processing along with key frame extraction and edge removal is computed in the first stage. The second stage is to differentiate the bleeding frames using a novel superpixel-color histogram (SPCH) feature build on the principle color spectrum and later the decision is made by a subspace KNN classifier. Lastly, segmentation of the bleeding regions is done by extracting a 9-D color feature vector from the multiple color spaces at the superpixel level. Jia et al.[16] present a technique for automatic GI bleeding detection based on a deep CNN. Then performance is calculated by constructing a big WCE dataset that contains nearly 10,000 annotated images for bleeding detection. An eight-layer convolutional neural network that contain three convolutional layers, two fully-connected layers, and three pooling layers are made. After that model is trained using stochastic gradient descent having a batch size of 100 examples, learning rate, momentum, and weight decay of 0.001, 0.9 and 0.004 respectively. The proposed method is lastly modified by replacing the second fully-connected layer with an SVM classifier and complete the bleeding frame detection.

In [34], Probabilistic Neural Network (PNN) is used to extract features of the bleeding region in WCE images distinguishing from the non-bleeding region. Bleeding detection algorithm along with programming are implemented, and the results correctly recognize the bleeding regions in WCE images and clearly mark them out with sensitivity and specificity as 93.1% and85.6% respectively. Sekuboyina et al. [42] propose a technique in which splitting the image into several patches and extracting features pertaining to each block using CNN to automate the abnormality detection in WCE images. It increases their generality while overcoming the drawbacks of manually crafted features. This paper results in sensitivity, specificity as 71 ± 19% and 72 ± 3% respectively. In [13] Hajabdollahi et al. propose a simple and efficient method for segmentation of the bleeding regions in WCE captured images. Suitable colour channels are used and classified by MLP structure. They proposed that quantized neural network without any multiplication could be considered as automatic diagnostic approach inside the capsule. Ghosh et al. [9] trained CNN using SegNet layers with three classes. Then endoscopy image is segmented using the training network and the detected bleeding zones are marked. The best performance is achieved using the hue saturation and value (HSV) color space among different color planes. Performance is evaluated on a publicly available clinical dataset, and framework achieves 94.42% global accuracy.

A deep learning U-Net architecture was used to detect and segment red lesions in the small bowel by Coelho et al. in [5]. This U-Net was evaluated in an annotated sequence by using the Suspected Blood Indicator (SBI) tool and state of art techniques. U-net outperformed its peers in both detection and lesion segmentation. Its lesion detection accuracy outperformed the state-of-art methods by 1.78% in accuracy. Li et al [23] proposed a SVM based approach that combines chrominance moments and uniform LBP as color texture feature for bleeding detection on data collected from 10 patients. Further, Giritharan et al [10] also proposed a SVM based approach for rebalancing the samples by oversampling the minority class and undersampling the majority class and obtaied the accuracy of 90%. In [26], transfer learning has been studied for GI bleeding diagnosis using pre-trained V3 model on ImageNet dataset. Data re-sampling have been used to increase the positive sample rate of the training sets for CNN.

Table 2 summarizes the comparison of recent techniques while listing their year, basic approach, dataset, highlights and results obtained. The state-of-the-art techniques only focus on the classification algorithms on static data without introducing any variations.

4 Proposed method

This sections explains the basic architecture of CNN model used followed by the proposed CNN ensemble based on data augmentation and finally details the proposed model training.

4.1 CNN architecture layers

There are a variety to deep learning models available for CNN architecture. The architecture of each CNN in the proposed ensemble has been inspired from [17, 41, 46] and modified to tune the learning accuracy of the model on the underlying dataset. Similar to the CNN model proposed by Jia et al. [16], each of the proposed CNN involved in the ensemble has been comprised of eight-layers that involve three convolutional layers (C1-C3), two fully-connected layers (FC1, FC2) and three pooling layers (MP1-MP3). Flow diagram for CNN starting from input image, role of various layers and the final binary outcome has been shown in Fig. 3. Table 3 contains the variable values of the layers used in CNN architecture.

4.2 Data augmentation

The proposed method uses dynamic data for CNN training using augmentation during input phase. This augmentation involves generating copies of one image into multiple possibilities of blur, luminance, noise and rotation variances of the image, for efficient and generalized training. The reason of data augmentation is to increase the size of dataset for real time application and avoid overfitting in case of deep learning according to [35, 40]. By utilising blur, luminance, noise and rotation variances of the images, the labelled dataset size can be increased. Labelled data is difficult and expensive to obtain from expert gastroenterologists and also time consuming. It also involves patient’s private information and thus difficult to acquire from hospitals.

For the proposed CNN ensemble, the data is augmented with four transformations and five copies of each data image (4 × 5 = 20 copies per image). Afterwards, each of these five copies are fed into each of the CNN and the aggregate result calculates the binary classification of bleeding and normal image. Figure 4 shows original image, rotation, blur, illuminance alteration, and noise into the original image. Details of each step is sequentially represented in the following subsections. In order to reduce the variance error (in the bias variance graph) for the CNN model, it is useful to consider various deformations in the training data using data augmentations and use ensemble of CNN learning. Various data versions would be infused into CNN ensemble for the aggregate decision. It will make the model capable of dealing with such spectrum of degradation. The CNN ensemble would help finding the generalized decision function thereafter.

Various image augmentations of the original image for ensemble setup shown in Figure 5

While the wireless endoscopy camera captures the images in the small intestine, it might take pictures with random arbitrary angles and distances. The images captured might be blurry due to poor focusing of the camera or it might contain bubbles as shown in Fig. 2. Due to capsule movement, variations in the angles of image rotation (R), illuminance (L) conditions, blurring (B), and noise (N) [24] are simulated. Again, the results might be affected by illuminance conditions. The proposed algorithm should be able to deal with these types of degraded images and the following transformations are considered for studying these degradations which are also shown in Fig. 4. Figure 5 presents the block diagram of our proposed ensemble for image classification. Each of the augmentation is randomly input into CNN models. The ensemble learning helps to reduces the bias caused by individual classifiers for the given set of parameters. Thus, ensemble is more generalised as compared to individual classifiers. In [48], the review of various ensemble learning models and motivation has been studied in detail. A similar ensemble setup has been studied in [30] for traffic surveillance to classify vehicle types.

-

1.

Rotation: Images have been rotated with {60∘,120∘,180∘,240∘,300∘} as 5 different values of degrees by using nearest-neighbor interpolation.

-

2.

Luminance change: To tune the luminance, the image is first converted from RGB to YCbCr. A scalar L is chosen in a random and uniform manner in the interval of [0, 1] and multiplying L with the Y channel. Thus, the modified image is converted back to RGB. Five different variations of YCbCr versions of the image were obtained using random variations in luminance (Y values). The random factors help to spread out the luminance across whole value space possible.

-

3.

Blurring: A circular-symmetric 2-D Gaussian kernel of wσw pixels and Standard deviation σ is used for RGB components for image filtering. RGB components were blurred using this kernel generating 5 versions of each frame with random w and σ values as follows: (w,σ) ∈{(11,9),(9,7),(7,5),(5,3),(3,1)}. The original and blurred version is shown in Fig. 4 which illustrates that the details in the image are weakened by using blurred operation.

-

4.

Poisson Noise: The photon counter endoscopic camera introduces noise following Poisson distribution [37]. The WCE images are being added a Poisson noise. Thus, the output is generated for each pixel using Poisson distribution by computing mean of pixel value. Five versions of noise variants have been generated using MATLAB function imnoise as illustrated in Fig. 4.

Thus in total, 4 × 5 = 20 images have been generated for each image input and introduced into 5 different CNN models in the ensemble framework. There are almost 60,000 images for a real capsule endoscopy video, but our public dataset [5] contains only 3,895 images. Thus 20 fold augmentation helps to achieve the real data simulation (3,895 × 20 = 77,900. Since only a small proportion of intestinal images contain , so augmentation of the images is beneficial for robust model training and data enhancement [35]. In Fig. 5, \(\{R_{i},L_{i},B_{i},N_{i}\}_{i=1}^{5}\) signify various versions of rotation, luminance, blur and noise as explain in above bullet points.

4.3 Training of proposed method

Fine tuning of the model is vital for achieving a premium quality performance in especially in case of medical image analysis. It is because health related decision are critical and internal body images obtained are not often the best quality for automation. Parameter tuning helps to mitigate the problems of over-fitting and speeding up the training process as well [36]. CNN model have been trained and Table 3 highlights the CNN network layer configuration used in training of proposed model. He’s method has been used for weights initialization [14]. For back propagation in CNN, there are various optimizing algorithms options available such as Stochastic Gradient Descent with Momentum (SGDM) solver [3], Root Mean Square Propagation (RMSPROP) and, Adam Stochastic Gradient Descent (ASGD). SGDM has been found to perform relatively better in the experiments. SGDM optimizer specifications have been summarized in Table 4. The epochs were initially set to 20 but the similar accuracy was achieved after 4 epochs as depicted in Figs. 6 and 7. Hence, the number of epochs were reduced to 4 gradually to increase the computation time.

5 Imagenet vs training from scratch

This section discusses about pre-training using ImageNet versus training from scratch for the underlying medical dataset specifically related to endoscopy. Medical dataset has two primitive attributes while working with deep learning: (a) data content is different from pre-trained (Imagenet) model (b) smaller sample dataset. Capsule endoscopy dataset is quite different and obscure as compared to the available natural images in Imagenet. Therefore primitive features contained in the first layer of pre-trained Imagenet model may not be useful. Hence model training from scratch has found to work better in the underlying case study. Moreover Fig. 8 shows the size of ImageNet files in MB which needs to be downloaded for the pre-trained model (obtained from https://keras.io/applications/). Even by using the initial parameters obtained through Imagenet model followed by updating the network parameter results in same classification accuracy. To summarize below are the major aspects to be considered before using transfer learning (such as Imagenet):

-

If the trained dataset has enough labelled examples and nature of the dataset is quite different from ImageNet then it is better to train CNN from scratch. ImageNet does not have endoscopy medical dataset [29].

-

The data distribution of underlying data should be similar to the pre-trained ImageNet dataset. Otherwise training and testing will not be consistent.

-

If the labelled data is less than pre-trainined model (such as ResNet) which comprises million parameters, it could result in overfitting [29]. Number of layers is one of the hyper-parameter which is not easy to reduce in order to deal with overfitting. Moreover, it is computationally expensive to figure out the layers and neurons which needs to be eliminated for dealing with overfitting problem.

Thus, pre-trained networks can be used but one has to fine-tune the parameters, number of layer/neurons making the results not better as compared to training from scratch. The coverage time could be better for transfer learning but performance may not, since the data distribution is different. In [19], a special transfer learning based upon modality-bridge has been proposed which uses a special bridge database for the underlying medical imaging to reduce the domain difference between nature images and medical images.

6 Experiments

This section contains discussion about public dataset, system configuration, minimum variance quantization for preprocessing, performance metrics, and results analysis on public and real dataset.

6.1 Public dataset

Public dataset for small bowel lesion has been obtained from [5] containing two sets of 3,295 + 600 = 3,895 as shown in Table 5. The dataset also contains target labels of bleeding/normal along with bleeding regions segmented manually which played a crucial role in the performance analysis. These image samples are good representative of small bowel situation including normal and bleeding cases. All the images have been re-sized to 256 × 256.

6.2 System configuration

The HP Pavilion X360 on the 64-bit Windows 10 operating system, having the Intel i5 processor has been used to run the experiments. HP Pavilion X360 graphics were produced courtesy of the integrated Intel UHD 620 Graphics along with the NVDIA MX130. Latest version of MATLAB R2018b has been used for programming the proposed method.

6.3 Minimum variance quantization

Quantization helps to reduce the color pallete in the underlying images to help focus on the region of interest and faster computation. This algorithm begins with under sampling the lattice of image pixels, use low-pass filter to blur the image to get the average colors present in uniform sections and finally find the color clusters by using fast quantization methods studied in [32]. The input layer of the CNN architecture fetches the images in RGB color space after reduction in the color palette using minimum variance quantization. Number of colors chosen to be 24 has resulted in good balance between the accuracy and computational complexity, also studied in [39]. Figure 9 illustrates the increased contrast of the transformed crisp image. Color reduction helps in better image segmentation by simplifying the obscure nature of internal body image which is originally red in color. It also enhances the computational efficiency of the proposed model through this pre-processing.

6.4 Performance metrics

The prominent metrics for comparative analysis have been used which include, accuracy, sensitivity, specificity, precision, recall and F1-score. Their formulae have been given in the Table 6.

6.5 Data analysis

Machine learning model selection along with its performance depends on the underlying data distribution [18]. Therefore the data analysis is imperative before model design and selection. Two experiments have been performed to study the data distribution of normal and sick (bleeding) images (1) average red color (R) intensity comparison in sick versus healthy images (2) RGB values analysis in healthy and sick images to see the dispersion and separability of two classes in 2-d plots. For sake of clarity in the plots, less than 10% images have been chosen randomly out of 3,895 images in the underlying public dataset obtained from [5]).

In Fig. 10, the average red color in the sick and healthy classes has been sorted and plotted for 120 images from each category. Although all gastral images are reddish brown in color, but the sick images are more saturated with redness. In Fig. 11, various intensity plots of 300 images have been illustrated. These four figures show the following: (a) RGB intensity distributions of 120 bleeding (sick) and 180 normal (healthy) images for comparison of dispersion in red color. Red is more dispersed on the left half (sick class) which means higher range of red values. Other three plots (b-d) show for all images, the R versus G; R versus B and B versus G plots for these 120 sick + 180 healthy images. In (b-d) images show that there is tremendous amount of overlap and a simple linear regression may not be sufficient for the bleeding classification. Thus, there is a need for powerful non-linear learning system (such as CNN) to discern among sick and healthy classes.

Only 300 images out of 3,895 (from public dataset [5]) has been chosen for clarity of illustration (a) RGB intensity of 300 images (180:120 for healthy:sick cases) (b) Red vs Green average intensities (c) Red vs Blue avg. intensities (d) Blue vs. Green intensities plotted of all 300 images. It is clear from (b-d) that none of the colour intensities are easily separable for healthy and sick cases

6.6 CNN parameters and optimizers

The output layer of the proposed model is a binary output, which is either bleeding or normal. Hidden layers act as intermediate layers with the freedom to tune the parameters to achieve best possible decision function for classification. The training cycle included 4 epochs to yield the required accuracy. Epochs were initially set to 20 but decremented to 4 gradually for the similar accuracy but faster computation during empirical analysis (Figs. 6 and 7). The validation frequency was set to 3 iterations with Infinite Patience and hardware included single CPU with constant learning rate schedule and 0.01 learning rate. There are three 2-D convolutional layers in the proposed network. The initial layers are relatively basic to learn low level features while maturing up towards the end of the network. Layers enlarge in the receptive field size capability to learn subtle features from left to right in CNN. Figure 12 shows the outputs for convolution layers (1-3) and fully connected layer. Figure 12 has been drawn using MATLAB function deepDreamImage to visualise network features. It shows semantic learning prowess in subsequent CNN layers as the data goes through. Refer Table (3) for variables for layers corresponding to entries {2, 6, 10, 13}. Thus the network learns progressively about colors and edges at different angles for complex features. It is clear from the Fig. (12) that by the end, the model is able to classify bleeding versus normal frames due to their striking differences in the contrast after the transformation through CNN layers.

Three optimizer options were tried for CNN ensemble (sgdm, adam and rmsprop) to compare the performance of proposed model as shown in Table 7. It can be observed that for better overall performance, sgdm (Stochastic Gradient Descent with Momentum) solver should be used for CNN, which is related to the works [3]. Thus sgdm optimizer is good choice over rmsprop (Root Mean Square Propagation) and adam (Adam Stochastic Gradient Descent) for this dataset to classify bleeding. Now, keeping the solver fixed to sgdm, the dataset ratio in training phase has been varied by decreasing the normal images randomly to make the data relatively balanced for binary classes (60:35, 60:40 and 50:50).

6.7 Results analysis for class ratios

Various data ratios of healthy and sick must be explored to analyse the performance due to data distribution disparity (in healthy and sick classes) as studied in Section 6.5. All 3,895 images have been considered for training and testing, with random selection of 85:15 for training:testing ratio. Afterwards, 10-trails average has been used to report the performance results. In the training phase, normal:bleeding class ratios has been altered (65:35, 60:40 and then 50:50) for each optimizer and performance has been recorded for analysis. The sick examples were kept intact and normal image examples were decreased gradually. It has been observed that by reducing the data for normal examples, the decision boundary gets biased towards sick images resulting in higher sensitivity. On the other hand, specificity and overall accuracy decreases as the data balances in class ratio by successive reductions. Thus in the underlying case study, more instances with normal examples are beneficial for global predictions for both sick and healthy.

Visual representation of the Table 7 has been plotted as Fig. 13. It clearly demonstrates the victory of sgdm optimizer in CNN over various data ratios of normal and bleeding image sets. Although the best sgdm results were obtained by using 65:35 ratio (for normal and bleeding images). The results from proposed method have been compared with other state-of-art algorithms as shown in Table 8. For consistent comparison, same dataset (of 3,895 images from [5]) has been used with 85:15 for train:test ratio and average over 10 trials. It can be observed that proposed CNN model can achieve accuracy as high as 0.95 outperforming other modern methods. Also, an optimal split ratio for training the model depends on underlying data distribution i.e. ratio of healthy to sick images. With consideration of the system used for experimentation, data analysis results might be useful in the future for researchers to improve their model. Thus CNN ensemble strives to find out the best possible combination of attributes required to classify bleeding symptoms in CE images after setting up the layer options, SGDM optimizer and color palette reduction using minimum variance quantization technique.

6.8 Real dataset

Real endoscopy dataset have been obtained from a known gastroenterologist with manual annotation of the binary labels (normal or bleeding). We considered a subset of this endoscopic video for testing, stretching up to 42 minutes which included significant bleeding frames. The video to image conversion has been done using MATLAB using function readFrame with the rate of 2 frames per second. It yielded 5,000 images (normal:bleeding ratio is 4000:1000). Sample frames from the real dataset video has been shown in the Fig. 14. The previous trained model on the public dataset [5] with augmentations has been used to test this real world video dataset. Training rules were defined in Tables 3, 4, 5. Prior to the testing real dataset on the proposed algorithm, basic pre-processing has been performed such as (a) histogram equalization for contrast enhancement (b) image resizing to match the training images (c) color palette reduction using minimum variance quantization. Proposed ensemble was able to achieve 0.93 and 0.91 accuracy with and without preprocessing on the real video dataset while outperforming state-of-art algorithms.

Thus convolutional neural networks ensemble made from data augmentation with minimum variance quantization with a specific setting of layers and optimizer makes it well suitable for building highly dependable image classification system for internal organ images.

7 Conclusion

This paper has defined a CNN augmented ensemble architecture to diagnose bleeding symptoms from Capsule Endoscopy (CE) images. Data distribution and RGB pixel intensities has been studied in detail to demonstrate the complexity of the classification problem. Copies of the image data have been generated using synthetic augmentation by introducing rotation, blur, illuminance and noise. Afterwards, Color palette reduction has been used using minimum variance quantization, studied in [32] to get 24 colors in the image for optimal balance of accuracy and time-space complexity. The experimental analysis of the proposed technique has been performed on both the public and real dataset and compared with other state-of-art methods.

The limitation of the proposed technique is that if images quality is poor, then the detection accuracy might degrade. So the assumption is a good quality image dataset for training and testing. Image size should also be optimized to balance the trade off with meta-heuristic performance accuracy and computational time on the underlying machine. For high volume video data processing, GPU workstation is also required which might be expensive. Nevertheless, the proposed CNN ensemble is well suitable for building highly dependable image classification system for any similar type of image classification application. Future plan is to incorporate longer and obscure videos for model training and design automatic video annotation and segmentation system by utilizing cross-modal features of image and collateral text obtained from expert gastroenterologists.

References

Abouelenien M, Yuan X, Giritharan B, Liu J, Tang S (2013) Cluster-based sampling and ensemble for bleeding detection in capsule endoscopy videos. Am J Sci Eng 2(1):24–32

Anjomshoae S, Främling K, Najjar A (2019) Explanations of black-box model predictions by contextual importance and utility. In: International Workshop on Explainable, Transparent Autonomous Agents and Multi-Agent Systems. Springer, New York, pp 95–109

Bottou L (2010) Large-scale machine learning with stochastic gradient descent. In: proceedings of COMPSTAT’2010. Springer, New York, pp 177–186

Carpi F, Shaheed H (2013) Grand challenges in magnetic capsule endoscopy. Expert Rev Med Devices 10(4):433–436

Coelho P, Pereira A, Salgado M, Cunha A (2018) A deep learning approach for red lesions detection in video capsule endoscopies. In: International Conference Image Analysis and Recognition. Springer, New York, pp 553–561

Du W, Rao N, Liu D, Jiang H, Luo C, Li Z, Gan T, Zeng B (2019) Review on the applications of deep learning in the analysis of gastrointestinal endoscopy images. IEEE Access 7:142053–142069

Figueiredo IN, Kumar S, Leal C, Figueiredo PN (2013) Computer-assisted bleeding detection in wireless capsule endoscopy images. Comput Methods Biomec Biomed Eng Imaging Visualization 1(4):198–210

Fuentes Álvarez JR Deep learning in hierarchical neural networks applied as pattern classifiers for massive information systems

Ghosh T, Li L, Chakareski J (2018) Effective deep learning for semantic segmentation based bleeding zone detection in capsule endoscopy images. In: 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE, Los Alamitos, pp 3034–3038

Giritharan B, Yuan X, Liu J, Buckles B, Oh J, Tang SJ (2008) Bleeding detection from capsule endoscopy videos. In: Engineering in Medicine and Biology Society, 2008. EMBS 2008, 30th Annual International Conference of the IEEE. IEEE, Los Alamitos, pp 4780–4783

Gu J, Wang Z, Kuen J, Ma L, Shahroudy A, Shuai B, Liu T, Wang X, Wang G, Cai J et al (2018) Recent advances in convolutional neural networks. Pattern Recogn 77:354–377

Hajabdollahi M, Esfandiarpoor R, Najarian K, Karimi N, Samavi S, Soroushmehr SR (2019) Low complexity cnn structure for automatic bleeding zone detection in wireless capsule endoscopy imaging. In: 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, Los Alamitos, pp 7227–7230

Hajabdollahi M, Esfandiarpoor R, Soroushmehr S, Karimi N, Samavi S, Najarian K (2018) Segmentation of bleeding regions in wireless capsule endoscopy images an approach for inside capsule video summarization,arXiv:1802.07788

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In: proceedings of the IEEE international conference on computer vision, pp 1026–1034

Iakovidis DK, Koulaouzidis A (2015) Software for enhanced video capsule endoscopy: challenges for essential progress. Nat Rev Gastr Hepat 12(3):172

Jia X, Meng MQH (2016) A deep convolutional neural network for bleeding detection in wireless capsule endoscopy images. In: Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th Annual International Conference of the. IEEE, Los Alamitos, pp 639–642

Jia X, Meng MQH (2017) Gastrointestinal bleeding detection in wireless capsule endoscopy images using handcrafted and cnn features. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, Los Alamitos, pp 3154–3157

Kaur H, Pannu HS, Malhi AK (2019) A systematic review on imbalanced data challenges in machine learning: Applications and solutions. ACM Comput Surveys (CSUR) 52(4):79

Kim HG, Choi Y, Ro YM (2017) Modality-bridge transfer learning for medical image classification. In: 2017 10th International Congress on Image and Signal Processing BioMedical Engineering and Informatics (CISP-BMEI). IEEE, Los Alamitos, pp 1–5

Lawrence S, Giles CL, Tsoi AC, Back AD (1997) Face recognition: a convolutional neural-network approach. IEEE trans Neural Netw 8(1):98–113

LeCun Y, Bengio Y, et al. (1995) Convolutional networks for images, speech, and time series. Handbook Brain Theory Neural Netw 3361(10):1995

LeCun Y, bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Li B, Meng MQH (2009) Computer-aided detection of bleeding regions for capsule endoscopy images. IEEE Trans Biomed Eng 56(4):1032–1039

Li P, Li Z, Gao F, Wan L, Yu J (2017) Convolutional neural networks for intestinal hemorrhage detection in wireless capsule endoscopy images. In: 2017 IEEE International Conference on Multimedia and Expo (ICME). IEEE, Los Alamitos, pp 1518–1523

Li S, Zhang J, Ruan C, Zhang Y (2019) Multi-stage attention-unet for wireless capsule endoscopy image bleeding area segmentation. In: 2019 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). IEEE, Los Alamitos, pp 818–825

Li X, Zhang H, Zhang X, Liu H, Xie G (2017) Exploring transfer learning for gastrointestinal bleeding detection on small-size imbalanced endoscopy images. In: Engineering in Medicine and Biology Society (EMBC), 2017 39th Annual International Conference of the IEEE. IEEE, Los Alamitos, pp 1994–1997

Liangpunsakul S, Mays L, Rex DK (2003) Performance of given suspected blood indicator. Amer J Gastro 98(12):2676–2678

Liu J, Yuan X (2009) Obscure bleeding detection in endoscopy images using support vector machines. Optim Eng 10(2):289–299

Liu S, Wang Y, Yang X, Lei B, Liu L, Li SX, Ni D, Wang T (2019) Deep learning in medical ultrasound analysis: a review. Engineering

Liu W, Zhang M, Luo Z, Cai Y (2017) An ensemble deep learning method for vehicle type classification on visual traffic surveillance sensors. IEEE Access 5:24417–24425

Lu Y, Yi S, Zeng N, Liu Y, Zhang Y (2017) Identification of rice diseases using deep convolutional neural networks. Neurocomputing 267:378–384

Lucchese L, Mitra S (1999) An algorithm for fast segmentation of color images. In: Multimedia Communications. Springer, New York, pp 110–119

Malhi A, Kampik T, Pannu HS, Madhikermi M, Framling K (2019) Explaining machine learning based classifications of in-vivo gastral images. In: 2019 IEEE International Conference on Digital Image Computing: Techniques and Applications (DICTA 2019). IEEE, Los Alamitos, pp 1–7

Pan G, Yan G, Qiu X, Cui J (2011) Bleeding detection in wireless capsule endoscopy based on probabilistic neural network. J Med Syst 35(6):1477–1484

Perez L, Wang J (2017) The effectiveness of data augmentation in image classification using deep learning, arXiv:1712.04621

Radenović F, Tolias G, Chum O (2018) Fine-tuning cnn image retrieval with no human annotation. IEEE transactions on pattern analysis and machine intelligence

Raginsky M, Jafarpour S, Harmany ZT, Marcia RF, Willett RM, Calderbank R (2011) Performance bounds for expander-based compressed sensing in poisson noise. IEEE Trans Signal Process 59(9):4139–4153

Razzak MI, Naz S, Zaib A (2018) Deep learning for medical image processing: Overview, challenges and the future. In: Classification in BioApps. Springer, New York, pp 323–350

Sainju S, Bui FM, Wahid KA (2014) Automated bleeding detection in capsule endoscopy videos using statistical features and region growing. J Med Syst 38(4):25

Salamon J, Bello JP (2017) Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process Letters 24 (3):279–283

Seguí S, Drozdzal M, Pascual G, Radeva P, Malagelada C, Azpiroz F, Vitrià J (2016) Generic feature learning for wireless capsule endoscopy analysis. Comput Bio Med 79:163–172

Sekuboyina AK, Devarakonda ST, Seelamantula CS (2017) A convolutional neural network approach for abnormality detection in wireless capsule endoscopy. In: 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017). IEEE, Los Alamitos, pp 1057–1060

Usman MA, Satrya GB, Usman MR, Shin SY (2016) Detection of small colon bleeding in wireless capsule endoscopy videos. Comput Med Imaging Graph 54:16–26

Xing X, Jia X , Meng MH (2018) Bleeding detection in wireless capsule endoscopy image video using superpixel-color histogram and a subspace knn classifier. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, Los Alamitos, pp 1–4

Xiong Y, Zhu Y, Pang Z, Ma Y, Chen D, Wang X (2015) Bleeding detection in wireless capsule endoscopy based on mst clustering and svm. In: 2015 IEEE Workshop on Signal Processing Systems (SiPS). IEEE, Los Alamitos, pp 1–4

Yuan Y, Meng MQH (2017) Deep learning for polyp recognition in wireless capsule endoscopy images. Med physics 44(4):1379–1389

Zheng H, Yang Z, Liu W, Liang J, Li Y (2015) Improving deep neural networks using softplus units. In: 2015 International Joint Conference on Neural Networks (IJCNN). IEEE, Los Alamitos, pp 1–4

Zhou Z-H (2015) Ensemble learning. Encyclopedia of biometrics, 411–416

Acknowledgments

This research work has been sponsored by the Seed Money project grant at Thapar Institute of Engineering and Technology Patiala India under Grant TU/DORSP. Authors are thankful to Dr. Sunil Arya Gastroenterologist, Patiala India for providing the dataset and technical recommendations.

Funding

Open access funding provided by Aalto University.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pannu, H.S., Ahuja, S., Dang, N. et al. Deep learning based image classification for intestinal hemorrhage. Multimed Tools Appl 79, 21941–21966 (2020). https://doi.org/10.1007/s11042-020-08905-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-020-08905-7