Abstract

In this paper, a robust additive image watermarking system operating in the Dual Tree Complex Wavelet Transform (DTCWT) domain is proposed. The system takes advantage of a new perceptual masking model that exploits the Human Visual System (HVS) characteristics at the embedding stage. It also uses an efficient watermark detection structure, called the Rao-test, to verify the presence of the candidate watermark. This structure relies on the statistical modeling of high frequency DTCWT coefficients by the Generalized Gaussian distribution. Experimental results show that the proposed system outperforms related state-of-the-art watermarking systems in terms of imperceptibility and robustness.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Recent advances in information technologies have enabled users to access, manipulate and distribute digital multimedia easily, allowing massive production and sharing of digital data. However, issues have arisen regarding the protection of intellectual property because the current technology also facilitates unauthorized copying and illegal distribution of multimedia. To overcome these issues, security approaches such as encryption, watermarking, and perceptual hashing have been reported in the literature.

Digital watermarking has evolved very quickly and is gaining more and more interest in practical applications. Besides copyright protection, digital watermarking has been introduced in digital copy tracking, broadcast monitoring, steganography and data authentication. There are two classes of digital watermarking; multi-bit and one-bit watermarking. In multi-bit watermarking, the watermark consists of a sequence of bits representing a meaningful information such as an ID or a binary logo. In this case, the role of the decoding scheme is to extract, bit by bit, the full version of the watermark in order to recover the hidden information [1, 7, 15, 16, 24,25,26,27, 35, 37, 39, 40, 43]. In one-bit watermarking, however, the watermark serves as a verification code where the role of the detector is to check the presence/absence of the watermark [2, 13, 21, 22, 30]. In practice, one-bit watermarking can be used in copy detection, copyright protection, and broadcast monitoring. The key idea of watermark embedding is to introduce controlled modifications to all or some selected samples of the host data. These modifications can be performed in the spatial domain or in the transform domain. Although spatial domain methods are simple and easy to apply and implement, embedding in the transform domain provides higher performance in terms of imperceptibility and robustness. Commonly used transforms include the Discrete Wavelet Transform (DWT), the Discrete Cosine Transform (DCT), the Singular Value Decomposition (SVD) and the Discrete Fourier Transform (DFT). Due to its desirable features, especially the ability to exploit the Human Visual System (HVS) characteristics in a better way, the DWT is viewed as one of the most broadly used and studied domain in the field of digital watermarking. However, this transform has two major drawbacks: (i) lack of shift invariance, which means that small shifts in the input data leads to major variations in the distribution of the energy between DWT coefficients at different scales; and (ii) poor directional selectivity for diagonal features [20].

To overcome these limitations, Kingsbury has derived a new kind of wavelet transform called the Dual-Tree Complex Wavelet Transform (DTCWT) [19] which combines desirable properties from the DWT and the Complex Wavelet Transform (CWT), namely: (i) nearly shift invariance; (ii) good directional selectivity; (iii) Perfect reconstruction; (iv) Limited redundancy; and (v) low computational complexity [20]. Due to these advantageous properties, the DTCWT has become an attractive embedding domain for designing efficient watermarking systems. The first work in this context has been proposed by Loo and Kingsbury [29] and then many works have built upon the idea of DTCWT domain for watermarking images [3, 7, 12, 21, 27, 31, 43] and videos [4, 5, 9, 34]. In image watermarking, most of the work published in the literature is concerned with the multibit approach [3, 7, 12, 27, 31, 43] and to the best of our knowledge, very little effort has been put on one-bit watermarking [21]. The only work that is worth mentioning here was reported in [21] where the authors have proposed two blind additive watermark detection structures in the DTCWT domain. The authors have first demonstrated that the concatenated real and imaginary components of the DTCWT detail sub-bands can be statistically modeled by the Generalized Gaussian Distribution (GGD). Then, they adjusted a Likelihood-ratio based detector, initially proposed in [13] and the Rao detector as reported in [33] to operate in the DTCWT domain. The authors have found that the Rao-based detector is more practical and provides better results than the Likelihood-ratio based detector. In video watermarking, a number of one-bit watermarking techniques have been published [4, 5, 9, 34]. Recently, Asikuzzaman et al. [5] have presented three versions of a blind additive watermarking algorithm to combat illegal video distribution. The watermark is additively embedded in all the 3rd level DTCWT sub-bands of the video chrominance channel and the detection was carried out using a normalized cross correlation rule. In their first version, the authors built upon a previous work published in [4], to detect the watermark only from the sub-bands where it was originally embedded (i.e. the sub-bands of level 3 of the DTCWT decomposition). The second version was designed to resist the downscaling in resolution attack, by extracting the watermark from any level of DTCWT decomposition depending on the downscaling resolution rate, rather than extracting it from the sub-bands of the 3rd level. Unlike these two versions that use a symmetric key approach, the third version is based on a keyless detection approach where the watermark can be detected by only using information extracted from the frames. This version can resist temporal de-synchronization attacks, such as frame dropping, frame insertion or frame rate conversion.

In this paper, a blind additive watermarking system for still images operating in the DTCWT domain is proposed. In order to overcome the problem of controlling the watermark imperceptibility in additive watermarking, a new perceptual masking model is proposed. This model builds upon the work of [44], but adjusted here to operate in one-bit additive watermarking in the DTCWT domain. Note that the system developed in [44] is a multi-bit watermarking scheme using a multiplicative rule and operating in the DWT domain. The proposed model exploits HVS characteristics, namely: the frequency band sensitivity, the brightness masking and the texture masking to quantify the amount of unnoticeable changes in the DTCWT domain. It is worth mentioning that there has not been any masking model reported in the literature exploiting the aforementioned characteristics and operating in the DTCWT domain. At the watermark detection stage, we have introduced and adapted a well known watermark detector, which is based on the Rao-Test. As known, the performance of this detector relies heavily on the statistical modeling of the host data. Therefore, the DTCWT coefficients are modeled by a GGD as suggested in previous works. Extensive experiments have been carried out to assess the performance of the proposed system and results show its efficiency in terms of imperceptibility and robustness with a clear superiority over related schemes. Also, through experiments, we have demonstrated that it is possible to achieve a good detection performance with fixed GGD parameters rather than estimating them for each image. This reduces the computational complexity at the detection stage.

The rest of the paper is structured as follows. Section 2 provides a brief introduction to the DTCWT. Section 3 describes the proposed watermarking system. Experimental results are reported and discussed in Section 4. Conclusions are drawn in Section 5.

2 Introduction to the dual tree complex wavelet transform

The Dual Tree Complex Wavelet Transform was first introduced by Kingsbury [19]. This transform has gained a special attention because it exhibits the desirable properties of the DWT and CWT. That is, perfect reconstruction, computational efficiency, approximate shift invariance and directionally selective filters [20]. Instead of using one filter tree in the original DWT, the DTCWT uses two filter trees to produce two sets of coefficients which can be combined to obtain complex coefficients. In practice, the DTCWT is implemented by using two real DWTs that use different sets of filters. The first DWT generates the real part of the transform while the second DWT gives the imaginary part. This makes this transform redundant with a factor of 2d for d-dimension signals. To obtain the inverse of the DTCWT, the real part and the imaginary part are each inverted using the inverse of each of the two real DWTs to get two real signals. These two signals are then averaged to reconstruct the final signal [36].

In the case of digital images (i.e. 2-D signals), the DTCWT generates two complex low frequency sub-bands and six high frequency complex sub-bands at each level of decomposition, representing the outputs of six directional filters oriented at angles of ± 15∘, ± 45∘ and ± 75∘ [9] (Fig. 1). Mathematically, the low frequency coefficients can be expressed by

and the high frequency coefficients can be written as

for

where Re(.) and Im(.) are the real and the imaginary parts, respectively. L1 and L2 represents the low-frequency sub-bands obtained from the first and the second tree of decomposition, respectively. Λ is the decomposition level. 𝜃 is the direction of the sub-band. N and M represent the size of the input image. The variables u and v indicate the location of the coefficient in each sub-band.

The DTCWT has been introduced in many image processing applications such as image denoising, classification, segmentation and sharpening, digital watermarking, textures analysis and synthesis, etc. In the field of watermarking, the nearly shift invariance property of the DTCWT is particularly important since the watermark can resist geometric distortions. Also, the DTCWT offers powerful perceptual characteristics as it exhibits better directional sensitivity in high frequency sub-bands when compared to the DWT, hence, offering higher imperceptibility of embedded watermarks [28].

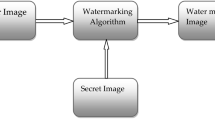

3 Proposed watermarking system

As depicted in Fig. 2, the proposed watermarking system comprises two parts: watermark embedding and watermark detection. At the embedding stage, the 2-D binary watermark is first decomposed using a 1-level DTCWT and the obtained high frequency coefficients are embedded in the high frequency coefficients of the DTCWT transformed image by using an additive rule. To overcome the drawback of the additive rule in controlling the amplitude of the inserted watermark, a new visual masking model is used. In the detection phase, the high frequency coefficients of the 1-level DTCWT transformed candidate watermark along with the high frequency DTCWT coefficients of the watermarked image are presented to the watermark detector in order to verify the presence of the candidate watermark.

3.1 Proposed masking model

In the literature, little research has been devoted to the use visual masking models in DTCWT watermarking. These models have been applied on still images [27, 29] and and video [5, 9]. Liu et al. [27] have adopted the perceptual masking model proposed which was initially proposed for DWT coefficients in [23]. However, the technique was not blind as it requires the original image at detection. In this work, we propose a new perceptual masking model in the DTCWT domain for blind additive image watermarking. This model builds upon the idea of the Just Perceptual Weighting (JPW) presented in [44] which exploits three HVS characteristics, namely: band sensitivity, local brightness and texture masking. More precisely, the proposed model combines a spatial frequency sensitivity function, a brightness masking function and a texture masking function to compute a weight for each DTCWT coefficient of the image. This weight describes the amount of changes that can be introduced in the DTCWT coefficients triggering the sensitivity of the HVS. The weight value for a coefficient x(Λ, 𝜃, u, v), is formulated as follows

where SF(Λ, 𝜃) represents the spatial frequency for a sub-band (Λ, 𝜃); LB(Λ, 𝜃, u, v) is the local brightness for a coefficient x(Λ, 𝜃, u, v); and TM(Λ, 𝜃, u, v) is the texture masking adjustment of a coefficient located in position (u, v) in the sub-band (Λ, 𝜃). The the parameters a, b and c are obtained through extensive experiments and the optimal values obtained are a = b = 0.25 and c = 0.02.

3.1.1 Spatial frequency sensitivity

It is known that the HVS is sensitive to patterns and textures which can be perceived as spatial frequencies. Furthermore, this sensitivity has been shown to be dependent on the orientation of texture. Particularly, the HVS is more sensitive to vertical and horizontal lines and edges in an image than those with a 45-degree orientation [10]. Normally, the spatial frequency response is described by the sensitivity to luminance contrast as a function of spatial frequency, and this is referred to as the Contrast Sensitivity Function (CSF). In the case of DWT, a CSF is usually implemented by assigning a single value to each sub-band. This represents a frequency weighting factor that describes the average sensitivity of the HVS for the covered frequency range [32].

In this work, we propose to use the CSF model proposed by Hill et al.[14] for the DTCWT, so that a single value for the frequency weighting factor is assigned to each DTCWT sub-band. Note that the real and imaginary parts will receive the same value since the frequency factor depends on the decomposition level and the sub-band orientation only. The values of each DTCWT sub-band are reported in Table 1.

3.1.2 Local brightness masking

According to Barni et al. [6], the human eye is less sensitive to modifications that occur in very dark and very bright areas. This characteristic has been introduced to design perceptual models, especially in the DWT domain [6, 42], where the local brightness is exploited by using the approximation sub-band. In this work, we propose to evaluate local brightness of DTCWT sub-bands at a given level based on the magnitude of the low frequency sub-bands of that level. A mathematical formulation is given by

with

where ∣.∣ represents the magnitude value of a complex number. x(λ, L1, u, v) and x(λ, L2, u, v) are the value of the DTCWT coefficient, in the low frequency sub-bands L1 and L2 at level λ, respectively. Note that the values of the magnitudes of the low frequency sub-bands are all normalized into the range of [0,1] before computing the local brightness masking.

3.1.3 Texture masking

It is well known that human eye is less sensitive to alterations in highly textured regions than in smooth and homogeneous areas. This fact can allow to hide or mask other patterns such as watermarks into the textured areas in an imperceptible manner and this is referred to as texture masking. In this work, a Noise Visibility Function (NVF) [38] that characterizes the local image properties is used to model the textured regions. The NVF used is based on stationary Generalized Gaussian model because according to Kwitt et al. [21] the DTCWT coefficients can be well modeled using a GGD. The perceptual weight describing sensitivity to changes in textured areas is given for each DTCWT coefficient x(λ, 𝜃, u, v) as [38]

where σ2(λ, 𝜃) is the variance of the sub-band 𝜃 at level λ. and,

where

where \(\overline {x}(\lambda ,\theta ,i,j)\) represents a local mean, computed using a local window of size L, centered at (u, v). It is given by: \(\overline {x}(\lambda ,\theta ,u,v) = 1/(2L + 1)^{2} {\Sigma }_{m = -L}^{L}{\Sigma }_{n = -L}^{L} a(i+m,j+n)\). ∥.∥ denotes the matrix norm. and, \(\eta (\gamma ) = \sqrt {\frac {\Gamma (3/\gamma )}{\Gamma (1/\gamma )}}\), with Γ(.) represents the gamma function. The parameter γ represents the shape parameter that characterizes the GGD of each sub-band. This parameter can be estimated as described in [11]. However, in this work, we propose to use fixed values for the parameter γ because as demonstrated in Section 4, this helps to reduce the computation complexity while enhancing the detection performance of the system.

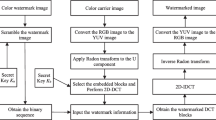

3.2 Watermark embedding and detection

The watermark to be embedded W is a 2-D array with values in {− 1,+ 1} generated by using a pseudo-random sequence generator (PRSG) where the seed represents the secret key k. However, as pointed out in [5, 21, 29], this bipolar watermark cannot be inserted directly into the DTCWT coefficients because, due to the redundancy of the DTCWT, some components of the watermark that lie in the null space of the inverse DTCWT may be lost during the reconstruction process. To overcome this issue, it has been proposed to embed the DTCWT coefficients of the watermark into the host data. In this work, a one-level DTCWT is applied to the watermark W to obtain a low frequency sub-band w(1) and six detail sub-bands w(1, 𝜃), as depicted in Fig. 3. The coefficients of the six high frequency sub-bands constitute the watermark to be embedded in the coefficients at the second level DTCWT coefficients of the host image. In our implementation, the watermark is inserted into the real and imaginary parts of the high frequency DTCWT coefficients of the sub-bands with 𝜃 = ± 45∘ via an additive rule as follows

with

where y represents the set of the watermarked coefficients and δ is a scalar used to control the watermark strength. abs represents the absolute value and σ(.) is the standard deviation.

1-level DTCWT decomoposition applied to the Watermark W[9]

The role of a watermark detector is to verify whether the input image contains the candidate watermark. Watermark detection can be viewed as a problem of detecting a known signal in a noisy environment, where the host coefficients represent the noisy channel and the watermark is the signal to be detected. In this paper, an efficient watermark detector is adopted. The structure of this detector relies on the Rao-test which is based on a binary hypothesis test. Two hypotheses are formulated to describe the presence or absence of the candidate watermark W∗ in the data under test. The two hypotheses are: the null hypothesis H0 (the claimed watermark is not present) and the alternative one H1 (the host data carries the claimed watermark). Furthermore, the performance of the detector depends on the statistical modeling of the host data. As pointed out by Kwitt et al. [21], a good statistical approximation of DTCWT coefficients can be obtained by adaptively varying two parameters of the GGD, which is given by

where Γ(.) is the Gamma function, \({\Gamma }(z)={\int }_{0}^{\infty }e^{-t}t^{z-1}dt\), z > 0. The parameter α is referred to as the scale parameter and it models the width of the pdf peak (standard deviation) and β is called the shape parameter and it is inversely proportional to the decreasing rate of the peak.

In detection theory, Kay [17] has proven that the Rao-test has an asymptotically optimal performance similar to that of the generalized likelihood ratio test (GLRT). In other words, under the assumption that the noise probability density function (pdf) is symmetric, the performance of the Rao-based detector is equivalent to that of GLRT-based one that is designed with a priori knowledge of the noise parameters.

It is worth mentioning that the optimum detector proposed by Nikolaidis and Pitas in [33] for additive watermarking in the DWT and DCT domain cannot be used here because the watermark is not bipolar since it is transformed in the DTCWT domain. We adopt in this work a Rao-based watermarking detector that considers GGD for modeling DTCWT coefficients and takes into account the normal distribution of the DTCWT transformed watermark. The detector response is given by the following equation [21]

In our case, y represents the high frequency DTCWT coefficients and w′∗ is the set of the high frequency DTCWT coefficients of the candidate watermark W∗. Without loss of generality, y and w′∗ are assumed to be vectors of length N.

In the literature [18, 21, 22, 33], it is well established that the detection response ρ follows a Chi-square distribution with one degree of freedom (\({\chi _{1}^{2}}\)) under hypothesis H0, whereas under hypothesis H1, it follows a Non-Central Chi-square distribution with one degree of freedom and non-centrality parameter Λ (χ1,Λ2), as shown in Fig. 4. Based on these characteristics, the detection threshold TRao can be defined based on a desired \(P_{FA}^{*}\) as follows

where erfc(.) is the complementary error function, given by \(erfc(x) = 2\pi .{\int }_{x}^{\infty }e^{t^{2}}dt\).

The probability of detection of a watermark (PDet = Prob(ρ > TRao|H1)) is defined for a given \(P_{FA}^{*}\) by [21]

where Q(.) is the q-function.

After inspecting the Rao detector structure, there is only one parameter (i.e., the shape parameter β) to be estimated directly from the watermarked coefficients. However, as mentioned in [22, 33], the detector presented by (11) is asymptotically optimal, which means that the host data needs to be adequately large.

4 Experimental results

In this section, intensive experiments have been conducted to evaluate the performance of the proposed watermarking system on a set of test images. In all experiments, standard grayscale and color images of size 512 × 512 have been used. In particular, results on six grayscale and two color images, as shown in Figs. 5 and 6, respectively, are reported. Note that these images are of different contents and cover a good range of the frequency content that natural images normally carry (i.e., textured, edged, and smooth images). For color images, the luminance plane has been selected to hold the watermark to ensure robustness against color manipulations. In our analysis, the following blind additive watermarking systems have been considered for reference

-

Cheng et al. [8]: In their work, a perceptual model constrained approach to information hiding in the DWT and the DCT domains is proposed. In this paper, the DWT-based and the DCT-based models are referred to as Cheng (DWT) and Cheng (DCT), respectively.

-

Kwitt et al. [21]: In their work, a watermark detection structure has been proposed in the DTCWT based on the Rao-test, where no perceptual model has been used. It is referred to as Kwitt (DTCWT) in this paper.

-

Asikuzzaman et al. [5]: Their work builds upon the idea published in [9] where a perceptual mask is used in the embedding phase and the detection relies on an inverse mask to decode the watermark. The correlation is then used to verify the presence of the candidate watermark. The main difference from [9] is that in [5] the chrominance plane is used to enhance watermark imperceptibility and the watermark is embedded in high frequency DTCWT coefficients. This system is referred to as Asikuzzaman (DTCWT).

Three aspects are considered in our experiments: (i) the imperceptibility of the hidden watermark, (ii) the detection performance in absence of attacks, (iii) the robustness of the watermark against common signal processing attacks, and (iv) the computational complexity of the embedding and the detection processes.

4.1 Imperceptibility analysis

First, watermark invisibility is assessed. In Fig. 7, the original images are displayed along with their watermarked versions with a PSNR close to 45 dB. As can be seen, the images are visually indistinguishable, thus demonstrating the effectiveness of DTCWT watermarking and the perceptual masking scheme. In particular, this can be be appreciated from Fig. 7, where the absolute difference between the original images and the watermarked ones, magnified by a factor of 5, is shown. Obviously, the watermarking takes place mainly in high activity regions and around edges. This suggests that edged and textured images are more suitable for watermarking than smooth and low activity images. Next, an objective evaluation of watermark imperceptibility is performed using two well known measures: the Peak Signal-to-Noise Ratio (PSNR) and the Structural SIMilarity (SSIM) index [41]. Each test image has been watermarked using 2000 randomly generated watermarks and the average values of PSNR and SSIM are reported. To make the comparison as fair as possible, the watermark strength has been set to obtain approximately the same value of the Document-to-Watermark Ratio (DWR) for the competing techniques. Figures 8 and 9 show the obtained PSNR and SSIM of the test images with different values of DWR, respectively. As can be seen, the proposed system clearly outperforms the other systems in terms of imperceptibility on both grayscale and color images.

4.2 Detection performance

In order to evaluate the performance of the watermark detection, the Receiver Operationg Characteristics (ROC) curves were used. These curves represent the variation of the PDet against the theoretical \(P_{FA}^{*}\). To obtain the ROC curves, the test images have been watermarked by 10000 randomly generated watermarks. For each tested system, the strength of the watermark is set to obtain a PSNR value of ≈ 60 dB for Baboon and ≈ 65dB for the other images.

First, experiments have been performed to evaluate the impact of the shape parameter β on the detection performance. To do so, the performance of the proposed system have been assessed with different values of β. These values are either fixed in the range {0.5,0.8,1,1.2} or estimated from the watermarked coefficients according to the Maximum-Likelihood Estimation (MLE) method described in [11]. It is worth mentioning that the idea of using fixed values for the shape parameter β has first been proposed by Hernandez et al. [13] in the DCT and DWT domains. Figure 10 shows the obtained ROC curves. Interestingly, the best detection performance is obtained with fixed parameter settings (i.e, β = 1.2 for Pepper and Baboon and β = 1 for the other images). In fact, the performance obtained when MLE was used to estimate β has been lower for all test images. As a result, it would be sensible to use a fixed value of β since this yields better detection performance than that obtained with MLE. Furthermore, this significantly saves the computational cost involved at the detection stage for estimating β with MLE.

The second set of experiments have been conducted to evaluate the detection performance of the proposed system in the absence of attacks with a comparison to the aforementioned systems. As can be seen from Fig. 11, the proposed system outperforms the competing techniques for almost all test images. The worst results were obtained for the system of Asikuzzaman et al. [5]. Such a poor performance was expected since this system is a correlation-based detector and hence is optimal only when the host data follows a Gaussian distribution.

To validate the superiority of the proposed system over its competitors, we have calculated the Equal Error Rate (EER) and the obtained results are depicted in Table 2. As can be noted, the proposed system appears more powerful than competing techniques.

4.3 Robustness analysis

The robustness of the proposed scheme against some image processing techniques and geometric attacks is assessed. To this end, a set of 10000 randomly generated watermarks are embedded into each test image, then each attack with a fixed strength value is applied to the watermarked images. In all tests, the value of the strength is set to obtain a PSNR around 55 dB. In this section, only the results obtained on Lena (grayscale image) and Barbara (color image) are reported because similar findings have been reached on the remaining test images.

4.3.1 Robustness against image processing

We have evaluated the robustness of the proposed system against JPEG and JPEG-2000 compression schemes, mean filtering and additive white Gaussian noise (AWGN). The watermarked images have been altered by applying attacks with a fixed strength as follows: JPEG compression with quality factor of 30, JPEG-2000 compression with a ratio of 16, average filtering with a filter size of 5 × 5, and AWGN with SNR= 15 dB. The obtained results are depicted in Figs. 12 and 13. These results clearly show that the proposed system provides superior performance especially in the presence of JPEG compression scheme and mean filtering.

4.3.2 Robustness against geometric attacks

In this paper, two geometric attacks have been considered, namely: image cropping and translation. In our experiments, the cropping is implemented as shown in Fig. 14 while a horizontal shifting is applied to watermarked images, as shown in Fig. 15. The obtained results are given in Figs. 16 and 17, respectively. As can be seen, the proposed system provides more robustness against image cropping and translation.

4.4 Computational complexity

In this subsection, a set of experiments have been conducted in order to analyze the computational complexity of the proposed system as well as its competitors. For each system, the run time has been recorded during the embedding and detection stages on 6 gray level test images of size 512 × 512, in which a watermark has been embedded and detected 1000 times. All the source codes were implemented in MATLAB and run on a platform of an Intel Core(TM) i5-3230M CPU at 2.60 GHz with 4 GB of memory. The average CPU time is listed in Table 3 for each technique. It can be seen that the proposed system takes more time to watermark an image than other competing techniques do. This is mainly attributed to the significant computations required for estimating the perceptual mask in addition to the use of complex numbers in the DTCWT structure. It is, however, worth mentioning that the main computational component in our proposed system is the perceptual masking process which involves the estimation of the local brightness mask as well as the texture mask, separately. Therefore, one can explore some parallelism to conduct these two processes simultaneously since they do not depend on each other. Moreover, because the texture masking process is repeated for real and imaginary subbands independently (see (3)), this can also be executed in parallel to speed up the process. On the other hand, it can be seen that the watermark detection process with our system is significantly faster and constitutes the most efficient one along with the system proposed in [21]. In this context, it is worth noting that the embedding process is not as important as the detection one since it can be performed offline. The watermark detection stage, however, is crucial as it requires a decision on the presence of the watermark.

5 Conclusions

This paper proposes a blind additive image watermarking scheme in the DTCWT domain. In order to enhance imperceptibility, a new visual masking model exploiting the HVS characteristics has been used. The structure of the watermark detector is an adapted version of the Rao-test based detector. The host data in which the watermark is embedded (i.e. the high frequency DTCWT coefficients) is modeled by the generalized Gaussian distribution. Experimental results have shown that the proposed visual masking enhances significantly the performance of the system in terms of imperceptibility, detection accuracy and robustness to common attacks when compared with recent state-of-the-art techniques. Furthermore, we have found that the MLE of the GGD shape parameter does not provide good detection performance in most cases and a fixed shape parameter can offer better results. In future, it would be sensible to extend this work and use HVS-based masking models for multibit watermarking in the DTCWT domain. This would serve other practical applications of watermarking such as covert communication and source tracking. The optimization of the watermark embedding process would also be of interest to the authors.

References

Agarwal H, Atrey PK, Raman B (2015) Image watermarking in real oriented wavelet transform domain. Multimedia Tools and Applications 74:10883–10921

Albalawi U, Mohanty SP, Kougianos E (2016) A new region aware invisible robust blind watermarking approach. Multimedia Tools and Applications 1–35

Alkhathami M, Han F, van Schyndel R (2013) Fingerprint image watermarking approach using DTCWT without corrupting minutiae. In: 6th international congress on image and signal processing (CISP), pp 1717–1723

Asikuzzaman M, Alam MJ, Lambert AJ, Pickering MR (2012) A blind digital video watermarking scheme with enhanced robustness to geometric distortion. In: Proceedings of the international conference on digital image computing technology and applications, pp 1–8

Asikuzzaman M, Alam MJ, Lambert AJ, Pickering MR (2014) Imperceptible and robust blind video watermarking using chrominance embedding: a set of approaches in the DT CWT domain. IEEE Trans Inf Forensics Secur 9(9):1502–1517

Barni M, Bartolini F, Piva A (2001) Improved wavelet-based watermarking through pixel-wise masking. IEEE Trans Image Process 10(5):783–791

Benyoussef M, Mabtoul S, El Marraki M, Aboutadjine D (2014) Robust image watermarking scheme using visual cryptography in dual-tree complex wavelet domain. Journal of Theoretical and Applied Information Technology 60(2):372–379

Cheng Q, Huang TS (2001) An additive approach to transform-domain information hiding and optimum detection structure. IEEE Trans Multimedia 3(3):273–284

Coria LE, Pickering MR, Nasiopoulos P, Ward RK (2008) A video watermarking scheme based on the dual-tree complex wavelet transform. IEEE Trans Inf Forensics Secur 3(3):466–474

Cox IJ, Miller ML, Bloom JA, Fridrich J, Kalker T (2008) Digital watermarking and steganography, 2nd edn. Morgan Kaufmann Publishers, San Mateo

Do MN, Vetterli M (2002) Wavelet-based texture retrieval using generalized gaussian density and kullback-leibler distance. IEEE Trans Image Process 11(2):146–158

Guo B, Li L, Pan J-S, Yang L, Wu X (2008) Robust image watermarking using mean quantization in DTCWT domain. In: 8th international conference on intelligent systems design and applications (ISDA), pp 19–22

Hernandez JR, Amado M, Pérez-Gonzalez F (2000) Dct-domain watermarking techniques for still images: detector performance analysis and a new structure. IEEE Trans Image Process 9(1):55–68

Hill P, Achim A, Al-Mulla ME, Bull D (2016) Contrast sensitivity of the wavelet, dual tree complex wavelet, curvelet and steerable pyramid transforms. IEEE Trans Image Process 25(6):2739–2751

Horng SJ, Rosiyadi D, Li T, Takao T, Guo M, Khan MK (2013) A blind image copyright protection scheme for e-government. J Vis Commun Image Represent 24(7):1099–1105

Horng SJ, Rosiyadi D, Fan P, Wang X, Khan MK (2014) An adaptive watermarking scheme for e-government document images. Multimedia Tools and Applications 72(3):3085–3103

Kay SM (1989) Asymptotically optimal detection in incompletely characterized non-gaussian noise. IEEE Trans Acoust Speech Signal Process 37(5):627–633

Kay SM (1998) Fundamentals of statistical signal processing: detection theory, vol 2. Prentice Hall, Englewood Cliffs

Kingsbury NG (1998) The dual-tree complex wavelet transform: a new technique for shift invariance and directional filters. In: IEEE digital signal processing workshop, Bryce Canyon

Kingsbury NG (2001) Complex wavelets for shift invariant analysis and filtering of signals. Journal of Applied and Computational Harmonic Analysis 10(3):234–253

Kwitt R, Meerwald P, Uhl A (2009) Blind DT-CWT domain additive spread-spectrum watermark detection. In: Proceedings of the 16th international conference on digital signal processing, pp 1–8

Kwitt R, Meerwald P, Uhl A (2011) Lightweight detection of additive watermarking in the DWT-domain. IEEE Trans Image Process 20(2):474–484

Lewis AS, Knowles G (1992) Image compression using the 2-d wavelet transform. IEEE Trans Image Process 2(1):244–250

Lin WH, Horng SJ, Kao T-W, Fan P, Lee CL, Pan Y (2008) An efficient watermarking method based on significant difference of wavelet coefficient quantization. IEEE Trans Multimedia 10(5):746–757

Lin WH, Horng SJ, Kao T-W, Chen RJ, Chen YH, Lee CL, Terano T (2009) Image copyright protection with forward error correction. Expert Syst Appl 36(9):11888–11894

Lin WH, Wang YR, Horng SJ, Kao T -W, Pan Y (2009) A blind watermarking method using maximum wavelet coefficient quantization. Expert Syst Appl 36(9):11509–11516

Liu J, She K (2010) Robust image watermarking using dual tree complex wavelet transform based on human visual system. In: Proceedings of the international conference on image analysis and signal processing (IASP), pp 675–679

Loo P (2002) Digital watermarking with complex wavelets. PhD Thesis, University of Cambridge, United Kingdom

Loo P, Kingsbury N (2000) Digital watermarking with complex wavelets. In: Proceedings of the IEEE international conference on image processing, ICIP 2000, pp 29–32

Lu W, Sun W, Lu H (2009) Robust watermarking based on DWT and nonnegative matrix factorization. Comput Electr Eng 35(1):183–188

Mabtoul S, Ibn-Elhadj E, Aboutadjine D (2008) A blind image watermaking algorithm based on dual tree complex wavelet transform. In: IEEE symposium on computers and communications (ISCC 2008), pp 1000–1004

Nadenau MJ, Reichel J, Kunt M (2003) Wavelet-based color image compression: exploiting the contrast sensitivity function. IEEE Trans Image Process 12(1):58–70

Nikolaidis A, Pitas I (2003) Asymptotically optimal detection for additive watermarking in the DCT and the DWT domains. IEEE Trans Image Process 12(5):563–571

Pickering M, Coria L, Nasiopoulos P (2007) A novel blind video watermarking scheme for access control using complex wavelets. In: International conf. consum. electron. dig. tech. papers, pp 1–2

Rosiyadi D, Horng SJ, Fan P, Wang X, Khan MK, Pan Y (2012) Copyright protection for e-government document images. IEEE MultiMedia 19(3):62–73

Selesnick IW, Baraniuk RG, Kingsbury NG (2005) The dual tree complex wavelet transform: a coherent framework for multiscale signal and image processing. IEEE Signal Proc Mag 22(6):123–151

Tang L-L, Huang CT, Pan J-S, Liu CY (2015) Dual watermarking algorithm based on the fractional fourier transform. Multimedia Tools and Applications 74:4397–4413

Voloshynovskiy S, Herrigel A, Baumgaertner N, Pun T (1999) A stochastic approach to content adaptive digital image watermarking. In: International workshop on information hiding, vol 1768, pp 211–236

Wang C, Wang X, Zhang C, Xi Z (2017) Geometric correction based color image watermarking using fuzzy least squares support vector machine and bessel K form distribution. Signal Process 134:197–208

Wang XY, Yang HY, Wang AL, Zhang Y, Wang CP (2014) A new robust digital watermarking based on exponent moments invariants in nonsubsampled contourlet transform domain. Comput Electr Eng 40(3):942–955

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 14(4):600–612

Xie G, Shen H (2005) Toward improved wavelet-based watermarking using the pixel-wise masking model. In: IEEE international conference on image processing (ICIP 2005), pp 689–692

Yang H, Jiang X, Kot AC (2011) Embedding binary watermarks in dual tree complex wavelets domain for access control of digital images. Transactions on Data Hiding and Multimedia Security 6730:18–36

Zebbiche K, Khelifi F (2014) Efficient wavelet-based perceptual watermark masking for robust fingerprint image watermarking. IET Image Process 8(1):23–32

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zebbiche, K., Khelifi, F. & Loukhaoukha, K. Robust additive watermarking in the DTCWT domain based on perceptual masking. Multimed Tools Appl 77, 21281–21304 (2018). https://doi.org/10.1007/s11042-017-5451-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-017-5451-x