Abstract

Industry 4.0 is a stage of industrial revolution driven by new solutions, advanced technology, and artificial intelligence. Micro- and macroeconomic factors generate the need to modify logistics and production processes in enterprises. One of these changes concerns the organization of warehouse management. The aim of the article is to indicate the possibility of using RFID technology in conjunction with laser telemetry to locate objects in space and to visualize this location. In addition, the study aims to merge passive RFID technology with laser scanning to produce a system that can identify and locate objects in a virtual, Computer-Aided Design (CAD)-based three-dimensional (3D) environment. The described case study is the result of research conducted as part of an implementation project. The paper describes the steps taken to create a system that uses RFID technology to identify objects within computer systems. As a result, a system was created that identifies and locates objects in 3D space using RFID and laser telemetry.

1 Introduction

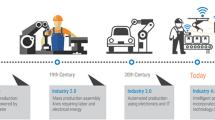

Nowadays, computerization and digitization are the basis for the further development of the economy as well as of individual enterprises. Industry 4.0 is a key challenge facing a growing economy, and also for companies that want to keep up with trends and face the problems that are currently emerging. Automation is one of the directions that modern companies are striving for. Its development includes autonomous vehicles, maintenance-free forklifts, full automation of the production line or warehouse space, robots used in warehouse management, and modern automatic identification systems. One of the challenges is the identification of products and objects in space. The article proposes one solution for this identification and visualization and presents the idea of combining RFID technology with laser telemetry. The aim of the article is to indicate the possibility of using RFID technology in conjunction with laser telemetry to locate objects in space and to visualize this location. In addition, the study aims to merge passive RFID technology with laser scanning to produce a system that can identify and locate objects in a virtual, Computer-Aided Design (CAD)-based three-dimensional (3D) environment. Fu, et al. [1] assert that RFID combined with laser telemetry can measure to within just a few centimeters. The project's design meets the real-world needs of the business. It also meets the criteria of the European Union (EU) Framework Program for Research and Innovation “2014–2020”. This project produced an intelligent, real-time logistical ecosystem technology for asset detection, forecasting, modeling, and surveillance in collaboration with Proximus Software Systems, Inc and Proxigroup SP.Z.O.O. The introduction presents the reason for creating the artifact. Fu, et al. [2] describe the issue at hand, notably the lack of knowledge of the precise location of a particular object, which cannot be obtained using passive RFID technology alone. There are other references to research on installing RFID systems in commercial buildings. The presented research is the result of a project financed under the number SMEInst-10–2016-2017. Project title: Small business innovation research for Transport and Smart Cities Mobility by Proxigroup (Proximus Software Systems, Inc) for their RFID technology called ProxiTrak [3]. The article also uses research by Ur Rehman et al. [4] and Fu et al. [5], who indicate the possibilities of using the described system and discovered that after each movement, the program can perform an identification procedure that scans the area.

2 RFID technology in the automatic identification of objects

The history of RFID (radio frequency identification) technology begins in the 1960s. Hunt, et al. [6] note that the first fully operational systems appeared in the 1970s, one of them being Tiris. The technology was then largely abandoned by industry, mainly for financial reasons, because readers, antennas, and especially tags were expensive. As the industry developed, RFID equipment became much cheaper, and the technology has been experiencing a well-deserved revival [3]. RFID, also known as electronic label technology, is a non-contact automated identification technology that recognizes the target object and extracts relevant data and critical characteristics using radio frequency signals [7]. Another advantage of RFID technology is that readers read RFID tags repeatedly over several cycles, which makes it possible to achieve the highest recognition rate [8].

RFID technology is divided into two main types: passive and active. Chowdhury, et al. [9] explain that active RFID tags have batteries, which allow them to transmit signals to antennas over a much greater range, but they are much more expensive. In our artifact, we use passive RFID tags, which are powered by the energy supplied by the antenna: each tag is charged and then sends back a signal that is weak but sufficient to be read. ProxiTrak is a passive RFID technology; the use of this type of RFID is a restriction imposed by the industry [3]. RFID has several standards that use radio waves of different frequencies. In this research, we use the 865–868 MHz band defined by the European ETSI (European Telecommunications Standards Institute) standard. It is a standard solution for UHF (Ultra-High Frequency) passive RFID [10].

Recent market research indicates that the manufacturing and logistics sectors have seen a radical transition over the past several years, and this shift will continue to accelerate. Tan and Sidhu contend that companies aim to integrate and automate all stages of production to build a comprehensive supply chain [10]. Increasingly, manufacturers want to manage their resources within buildings. For instance, they want to know the precise location of any section of a particular infrastructure in the supply chain [10]. Technologies that can be used for this purpose include, for example, RFID, EAN, RuBee, QR, and deep learning (used in autonomous shops).

Numerous systems utilizing various technologies can presently solve this issue. RFID is one of these technologies, and this paper employs it. Smith asserts that the primary benefit of RFID is the ease of identifying objects due to the unique identification embedded in each RFID tag [11]. Another benefit of RFID technology is that no direct line of sight is required to interrogate an object, even if it is concealed [12]. RFID is gaining popularity in the industrial sector due to these characteristics.

Zhu and Chen argue that passive RFID systems also have many limitations [13]. The main limitation is the lack of precise location of the object [14]. The location can be estimated from signal strength, but this method is not very accurate because each type of tag and each rotation of the tag relative to the antenna changes the signal strength. Lee, et al. found that another technology should be used to determine the exact location [15]. In our case, it is a laser range-finder, with which we create a 3D model of the examined area.

This research aims to combine these two technologies to identify the investigated objects with 100% certainty and, at the same time, obtain their exact current position in space. Zheng, et al. contend that this system fits perfectly into an Industry 4.0 model because it helps to emulate the real world and transfer it to the Internet [16]. There is nothing to prevent the results from being automatically available on the web so that warehouse managers can access them in real time despite the scan time. The system aims to be the basis for building a virtual environment that gives access to everything that exists in reality. The motivation for creating this type of artifact is the immediate need for automatic systems to track objects. Research conducted by Weber shows that the number of intelligent plants was expected to double from 2017 to 2020, and that 48% of enterprises plan to implement RFID technology [17].

Currently, RFID technology is used more and more widely. Originally dedicated to production and logistics, today it is used in other areas of the economy. One of the new solutions is the use of this technology for unattended sales, in medicine and the functioning of hospitals, anti-theft systems, university libraries, mobile robots, etc. [18,19,20,21,22,23,24]. It is a technology that allows minimizing the participation of additional people in individual processes and can be used for greater automation and creation of places and processes that will not require service by employees.

An example of using RFID with other technologies is a prototype method for manual inventorying and real-time 3D localization of RFID-tagged products using a low-cost handheld device equipped with a UHF RFID reader and an optical camera. Experimental results show that increased accuracy can be achieved by increasing the spatial density of the optical and RFID markers. The proposed method is expected to enhance low-cost manual inventorying, delivering 3D localization of the tagged products [25]. Thus, the literature contains ideas for visualizing objects using RFID and other technologies. One such proposal is presented in this article. The combined use of RFID and laser telemetry, however, is not described in the literature and constitutes a research gap.

3 Methodology: tools and technology used for the research project

This study introduces and combines many elements and technologies. Together they form a coherent technology stack in which each element has a specific task and technological value. RFID is mainly responsible for identifying the object, while the laser range-finder is responsible for its exact location in space; a motion sensor is used to recognize movement.

The study uses a high-level programming platform, with several third-party libraries used for specific human-machine interaction (HMI) functions. The programming language used was C#, although any high-level language such as Java, C, C++, or Python could serve as a backup language. To integrate the system with the hardware, we used libraries supplied by the hardware manufacturers.

Even though we considered numerous platforms, the .NET Framework is an optimal environment because each hardware manufacturer provides the necessary libraries and functions for it, so that we can easily integrate with the hardware without resorting to low-level programming languages. Jain, et al. [26] assert that, in other words, the .NET Framework provided a single focal point for the heterogeneous nature of engineering multiple technologies. Maher Abdulzahra [27] found that another reason for using the environment provided by Microsoft is that the system is ready to integrate with the existing RFID system, ProxiTrak, which is also written and engineered on the .NET stack. It is a software solution based on CAD modeling, integrating and providing information from the RFID environment, ultimately synchronizing "virtual world" CAD representations with "real-world" hardware. The artifact developed for this research is the prototype and the basis for the system used in industry [2].

The hardware used was one static UHF Class 1 Generation 2 RFID reader and 25 wet-inlay UHF RFID tags. We used four circularly polarized antennas and one DELL Precision T7600 desktop server that hosted SQL Server 2016, Visual Studio 2017, and the ProxiTrak Windows application.

3.1 Reader hardware

The reader employed in this research is an Alien RFID ALR-9900+ EMA. The reader boasts one of the best reception sensitivities in its class, has improved resistance to interference, and is easy to configure and integrate with a system [28]. The manufacturer provides a ready-made library with all the functions needed to manage the reader [11] (Fig. 1).

3.2 Antenna hardware

The antenna used in this research is the Alien ALR-8696-C. It is the primary antenna dedicated to this type of solution. Its great advantage is the circular field, which allows us to read tags in different orientations, not only those set at a right angle to the antenna. This is a considerable advantage and an industry requirement for this research [29] (Fig. 2).

3.3 Tags

The RFID tags used in this research are Alien ALN-9662 "Short" RFID inlays. These are basic passive tags supplied by the same manufacturer as the antenna and the reader. They have 800 bits of memory, of which as many as 512 bits are available to the user. Thanks to the reader used, these tags are programmable, which allows us to control their identification numbers and integrate them with existing systems [30].

3.4 Scanning section

To scan the area in this research, we use a laser range-finder placed on a robotic arm. This solution allows us to scan an extensive area at a lower cost than a complete lidar kit.

3.5 Range-finding laser hardware

The Garmin Lidar-Lite v3 is the range-finding laser device used in this research. It is a compact laser distance meter at an affordable price. One of the advantages of this laser range-finder is its range of 40 m. Its accuracy given by the manufacturer is 3 cm, but such accuracy is obtained only in ideal conditions. Thanks to its properties, this sensor is often used in various applications such as autonomous vehicles. Communication with the device takes place via I2C or PWM bus [31] (Fig. 3).

A microcontroller is used to connect the sensor to the software. In our research, we use the Atmel ATSAM3S4C, specifically on the brick supplied by TinkerForge (Master Brick 2.1), thanks to which we can easily control the laser detector and connect it to our system [32] (Fig. 4).

3.6 Robotic arm

The study employs a uArm Swift Pro robotic arm, a 4-axis, high-performance, precision robot arm controlled by an ATmega-based controller [33]. It has high-quality stepper motors that achieve an accuracy of 0.2 mm. Its maximum load is 500 g, so with a laser range-finder weight of 30 g plus the materials used for assembly, there are no problems with movement.

The robotic arm and the software connect by transmitting specific commands in gCode. We wrote a customized Application Programming Interface (API) library as part of the research to enable easy and practical control of the robot from the application level without the knowledge of gCode [34] (Fig. 5).

3.7 Additional devices

The experiment employs the AS612 PIR motion sensor. With a detection radius of over 11 m, it is a typical motion detector. The detector tells the program when to begin detecting. TinkerForge has provided us with a Fresnel lens, model S8002-2W, that we can use to attach the detector to the setup [35] (Fig. 6).

In order to be able to communicate with the RFID reader, we also need a router that will create a local network for us. The research employed the Pentagram Cerberus router (Figs. 7 and 8).

The prepared tools were used to create the project, which will be presented in the further part of the study.

4 Results of project design and implementation

4.1 Application description

The research creates an artifact that combines various meaningful data to provide the user with maximum information. Maher Abdulzahra [27] claims that the data it collects can determine the position of each tracked object in appropriate conditions with an accuracy of a few centimeters.

Warehouse work can be significantly accelerated and facilitated by knowing the exact location of each item together with the information provided by the application [36]. Thanks to the application, the warehouse employee will not have to search the entire warehouse to find an item, and the logistics department will find it easier to analyze and optimize the process.

The application integrates different types of sensors to achieve the desired result. Liang, et al. [37] argue that it must collect information from four sources simultaneously to work optimally. In continuous mode, the application uses the motion sensor to watch the area for motion, monitors the area in real time, and checks whether an RFID tag has appeared or been removed. This need for concurrency forces a multithreaded approach [38]. Upon start, the application scans the previously set area to obtain a baseline, which serves as the basis for change detection in further processing. Upon scan completion, the application goes into the "rest" state, in which it waits for movement to appear. When motion is detected, the application waits for it to finish and then scans the area again in search of objects, updating its picture of the area. The status of the RFID tag list is supervised at the same time. The system marks a newly found object with a different color and, if a new RFID tag has appeared in the area at the same time, assigns that tag to the object.
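The scan cycle described above can be read as a small state machine. The following Python sketch is only an illustration under stated assumptions: the state names and event labels (`scan_done`, `motion_detected`, `motion_ended`) are hypothetical and are not taken from the original C# application.

```python
from enum import Enum, auto


class State(Enum):
    BASELINE_SCAN = auto()  # initial scan of the preset area
    REST = auto()           # waiting for movement to appear
    MOTION = auto()         # movement in progress, waiting for it to end
    RESCAN = auto()         # scanning the area again after movement ended


# Hypothetical event labels; (state, event) -> next state.
TRANSITIONS = {
    (State.BASELINE_SCAN, "scan_done"): State.REST,
    (State.REST, "motion_detected"): State.MOTION,
    (State.MOTION, "motion_detected"): State.MOTION,  # still moving
    (State.MOTION, "motion_ended"): State.RESCAN,
    (State.RESCAN, "scan_done"): State.REST,
}


def next_state(state: State, event: str) -> State:
    """Return the follow-up state; unknown pairs leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)


def run(events):
    """Replay a sequence of events and return every state visited."""
    state = State.BASELINE_SCAN
    history = [state]
    for event in events:
        state = next_state(state, event)
        history.append(state)
    return history
```

In a multithreaded implementation, the RFID tag list would be monitored in parallel with this cycle; the sketch covers only the scan logic.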

The entire software portion is written in C# and runs in the .NET environment, which allows it to be used on any computer with Windows [26]. The computer must also have USB ports and a network card with an RJ-45 connector. Connecting all devices to a local network with PoE (Power over Ethernet) technology is also possible. As a result, the devices can easily be arranged in a way that is convenient for the customer (Fig. 9).

4.2 Input data

Input data in our system comes from many sources. These include RFID readers, laser range-finders, motion sensors, and feedback from the robotic arm. The input data arrive in various forms, and each data set is interpreted as required by the research.

We can configure the data provided by the RFID reader and the connected antennas. The reader provided by Alien allows us to receive a list of tags in different formats. We can choose an XML format with basic information or a modifiable sequence of characters and variables for each tag. From the reader, we can receive information such as the identification number, the time the tag was discovered, the moment the tag was last seen, the number of readings since the last transmission of data, the antenna that discovered the tag, and the power with which the tag was read, among other details [10] (Fig. 10).

The Alien ALR-9900+ reader also has special functions that are activated in specific situations associated with the list of tags. Namely, the reader can notify us each time the list changes, that is, when a tag appears in the zone or leaves it; this mechanism was considered for use in this research [28].

We can also configure the data provided by the laser range-finder, within certain constraints. With the API provided by TinkerForge, we can easily change the options for providing information. In each case, the sensor sends us a single value, for example, the distance to the nearest obstacle or the average of the last readings. Wu, et al. [39] found that this information can be obtained by calling a function directly, by receiving it at a given time interval, or by receiving it whenever the measured distance changes by a specific value. The values sent by the sensor are in centimeters and are always integers, which is sufficient because the laser has a minimum error of about 2 cm [4].

The motion sensor provides simple information: it reports when motion is detected and is then put to sleep for a specified period. This period can be adjusted according to our needs. It is also possible to set the range within which the sensor reacts [17].

Communication with the robot arm follows the request-response principle. Each command is submitted to the device, directly or through the library, with the starting character '#' and the command's identification number. When the device starts to execute the command, it informs us with the character '$', the identification number of the command, and the response message 'ok'. In case of an error, the software informs us in the same way with the appropriate code, but with a different special character starting the message. In the research, we also use the robot's ability to report its current position and the end of a movement [16] (Fig. 11).
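As an illustration of this request-response framing, the Python sketch below builds a '#'-prefixed command and checks a '$'-prefixed acknowledgement. The exact wire layout (a single space as separator, a newline as terminator) and the sample G-code string are assumptions made for the example, not details confirmed by the text; the function names are hypothetical.

```python
import re


def format_command(command_id: int, gcode: str) -> str:
    """Frame a command as '#<id> <gcode>' (separator and newline are assumed)."""
    return f"#{command_id} {gcode}\n"


# A reply is assumed to look like '$<id> <message>'.
_REPLY = re.compile(r"^\$(\d+)\s+(.*)$")


def parse_reply(line: str):
    """Return (command_id, message) for a '$'-prefixed reply, otherwise None."""
    match = _REPLY.match(line.strip())
    if match is None:
        return None
    return int(match.group(1)), match.group(2)


def is_acknowledged(line: str, command_id: int) -> bool:
    """True if the reply carries the same id as the request and the text 'ok'."""
    reply = parse_reply(line)
    return reply is not None and reply[0] == command_id and reply[1] == "ok"
```

Matching the identification number in the reply against the one in the request is what lets the application pair each answer with the command that caused it.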

The visualization of all point-cloud data in this work is done in the CloudCompare software. This software displays three-dimensional point clouds. The platform is used only for visualization, and all point-cloud operations are self-implemented.

4.3 Output data

The outcome is depicted on an Azure cloud-hosted SQL database with colored entities resulting from the artifact's events. Each of the several shades of blue indicates a different entity. Text files are used to keep track of all elements and their related shades. The object is readily apparent in the figures below as a cluster of points (Figs. 12 and 13).

The diagram below illustrates the precisely located objects in the examined area. Thanks to such information, potential customers will easily find their items in the warehouse or be able to supervise them remotely. The results compensate for the main imperfection of passive RFID systems, namely the lack of accurate object location. Thanks to this solution, the system is a strong competitor in its own and associated markets.

4.4 Algorithm description

As the block diagram shows (Fig. 14), the system consists of several separate parts combined to obtain the desired effect. Zhou, et al. [40] state that the most crucial step is to scan the studied area and find the objects of interest. The zone is scanned at the beginning of the program to determine what the environment looks like, and then again after each detected motion has finished. Li, et al. [41] argue that it is essential that the movement has finished, because the person or device that placed the object in the zone or took it away would otherwise cover the area and introduce errors.

Diagram of the robotic arm with dimensions and marked laser position [16]

With the available device, we can generate a point cloud, the equivalent of a 3D model of our environment. Further object recognition is based on this scan, and the success of the subsequent algorithms in recognizing objects depends on the quality of this data.

Motroni, et al. [42] conclude that the principle of this process is to combine the distance read by the laser with the arm's current position. The first step in this scan was to set the zero point of the whole system. The most straightforward choice was the zero point of the robotic arm, the point at its base. The next step is to calculate the current distance between the laser distance meter and the zero point of our system. Tripicchio, et al. [43] maintain that the geometry of the robotic arm and all angles in its hinges must be known to perform this calculation. Using these data, we obtain the formula shown in the figure below.

where:

- α: angle reading from Left Motor
- β: angle reading from Right Motor
- \(l_{zero}\): distance of laser from zero point

Knowing the angles on the hinges, we know in which direction the laser is pointing. By adding the distance of the laser sensor from the zero point to the measurement made by the laser range-finder, we obtain the absolute distance of the point of interest from the center of the system (l):

\[l = l_{zero} + l_{laser}\]

where:

- \(l\): distance of the point from the centre of the system
- \(l_{zero}\): distance of the laser sensor from the zero point
- \(l_{laser}\): measurement made by the laser rangefinder

Knowing the angles on the individual motors, we can evaluate the trigonometric functions needed to calculate the coordinates of the point we seek (Fig. 15).

where:

- x, y, z: coordinates of the point
- \(l\): distance of the point from the centre of the system
- β: Right Motor's angle
- γ: Base Motor's angle
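Because the coordinate formula itself is given only in the figure, the following Python sketch shows one possible form of the calculation under an explicitly assumed convention: β is treated as the elevation of the laser beam above the horizontal plane, γ as the rotation of the base about the vertical axis, and l as the sum of the laser's offset from the zero point and the laser reading. The axis orientation and signs are assumptions and may differ from the authors' setup.

```python
import math


def point_coordinates(l_zero: float, l_laser: float,
                      beta_deg: float, gamma_deg: float):
    """Convert one laser reading to (x, y, z) relative to the zero point.

    Assumed convention (not confirmed by the paper):
      beta  = elevation of the beam above the horizontal plane,
      gamma = rotation of the base about the vertical axis.
    All lengths share one unit (the sensor reports centimeters).
    """
    l = l_zero + l_laser                 # distance from the system's center
    beta = math.radians(beta_deg)
    gamma = math.radians(gamma_deg)
    horizontal = l * math.cos(beta)      # projection onto the horizontal plane
    x = horizontal * math.cos(gamma)
    y = horizontal * math.sin(gamma)
    z = l * math.sin(beta)
    return x, y, z
```

For example, with a 10 cm offset and a 190 cm reading at β = 0° and γ = 0°, the point lies 200 cm along the x axis under this convention.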

Orientation relation to the position of the laser [16]

Scanning operates on a step-by-step principle. This method is slow, but it gives the most accurate results. Wu, et al. [44] state that, unfortunately, the rate at which the distance meter transmits data is too low for continuous movement of the laser, and the results obtained in that way are insufficient for further processing.

For continuous-motion scanning to be possible, we would have to slow down the robot or increase the frequency of the data received. Unfortunately, we cannot do this using the API supplied by the manufacturers. Motroni, et al. [45] describe that the solution to this problem could be to change the software controlling the robotic arm or the way the results of the laser distance meter are read.

4.5 Scanning time and type

The scan was made with a stepping motion which we see in Fig. 16 and lasted 2 min and 15 s.

The area covered 10 degrees of height and 40 degrees of width, scanned one step at a time in each direction, giving us a total of 450 points. Motroni, et al. [46] clarify that selecting the appropriate scanning properties is crucial and depends on the size of the area we want to control and the size of the objects we need to recognize. We can control the width and height of the scan and the size of a single step.

Scanning time depends on the size of the area and the accuracy with which we want to scan it [47]. The properties must be selected according to the tracked objects' dimensions and the distance from which they are observed (Fig. 17).

As the chart above shows, the relationship between the size of the area and the time needed for scanning is linear. The difference between increasing the width and increasing the height is slight and may be neglected. In the chart, blue marks represent an increase in area width and orange marks an increase in height. The axis of the graph gives the tested field, i.e., the product of these two values (Figs. 18 and 19).

As Fig. 20 shows, the relationship between scanning accuracy and time is a power function, because accuracy increases in two dimensions: scanning width and scanning height. Excessive accuracy should therefore be avoided, as it significantly prolongs the scanning process. Thomas, et al. [48] stipulate that the accuracy of the scan determines the number of measurements per degree of motor rotation. Figure 20 shows that the accuracy is directly proportional to the number of measurements (Fig. 20).
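The scaling behaviour described in this section can be illustrated with a short Python sketch. It takes the reported scan (450 points in 2 min 15 s) as the reference and assumes that time grows linearly with width and height and quadratically with accuracy; the exponent of 2 is an assumption inferred from accuracy acting in two dimensions, and the function name and its parameters are hypothetical.

```python
REFERENCE_TIME_S = 2 * 60 + 15   # reported scan duration: 135 s
REFERENCE_POINTS = 450           # reported number of scanned points

# Average time spent on one measured point in the reported scan.
seconds_per_point = REFERENCE_TIME_S / REFERENCE_POINTS


def scaled_scan_time(ref_time_s: float,
                     width_factor: float = 1.0,
                     height_factor: float = 1.0,
                     accuracy_factor: float = 1.0) -> float:
    """Estimate scan time relative to a reference scan.

    Assumes linear growth with area (width x height) and quadratic
    growth with accuracy (measurements per degree on both axes).
    """
    return ref_time_s * width_factor * height_factor * accuracy_factor ** 2
```

Under these assumptions, doubling the width of the reference area would take about 270 s, while doubling the accuracy would take about 540 s, which is why accuracy should be raised only as far as the object size requires.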

Khazetdinov, et al. [49] found that if the objects to be tracked are small, the scanning accuracy must be increased to recognize them. In this study, we therefore increased the accuracy parameters. Because higher accuracy is very time-consuming, we had to choose scanning properties that meet the requirements of the process [50]. Below are examples of scans made from different distances for three different sizes of objects (Figs. 21, 22, 23, and 24).

Figures 25 and 26 illustrate that longer distances require higher scanning accuracy settings. Recognition of small objects over long distances is impossible due to inaccuracies in tracked assets [5].

Noise in the laser readings also affects the results obtained. The following graphs show the distance readings for a stationary object over time, revealing the actual errors. The accuracy given by the manufacturer is verified only at short distances, up to about 10 m, in dark rooms [4] (Figs. 27 and 28).

The presented graphs show that the laser range-finder does not achieve an accuracy of 3 cm over its whole range in actual conditions. Shamsfakhr, et al. propose that the cause is various types of interference, which result from the fact that this is not a completely dark environment without other light sources, and the measured surfaces also disperse the laser beam [51]. These results should be taken into account in further calculations.

A new scan is started after each completed movement, so that people or vehicles moving in our facilities are not captured during the scan. Movement must be detected in the specified area to start a new scan [39]. We use a motion sensor for this purpose. When the sensor sends a motion detection message, a function is activated to check whether the motion has ended. While objects are moving, the sensor keeps sending notifications. The function waits for a specified interval (number of seconds) without new notifications and then informs the system that the movement has ended [52]. The waiting period is renewed each time movement is detected. Yu et al. [52] stress that the number of seconds should be determined based on the process of transporting our objects.
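The renewed waiting period can be sketched as a simple timer that is reset by every motion notification. The Python example below is a minimal illustration; the class name, method names, and the use of plain numeric timestamps are assumptions made for the sketch and do not reflect the original implementation.

```python
class MotionEndDetector:
    """Report the end of movement after a quiet period without detections."""

    def __init__(self, quiet_seconds: float):
        self.quiet_seconds = quiet_seconds
        self._deadline = None  # None means no movement is pending

    def on_motion(self, now: float) -> None:
        # Every detection renews the waiting period.
        self._deadline = now + self.quiet_seconds

    def motion_ended(self, now: float) -> bool:
        """Return True exactly once, when the quiet period has elapsed."""
        if self._deadline is not None and now >= self._deadline:
            self._deadline = None
            return True
        return False
```

With a quiet period of 5 s, detections at t = 0 s and t = 4 s would postpone the end-of-movement signal until t = 9 s, at which point a new scan could start.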

4.6 Scan comparison

Comparing scans involves comparing individual points from each cloud. As the output, we keep only those points that are not in the first (base) scan. Shamsfakhr, et al. [53] point out that an exact comparison of each point is not possible when the system has many measurement errors, which is the case here. The first error of concern is that of the laser itself. According to the documentation, the laser has a 3 cm measuring error, but as we showed, under realistic conditions it is larger [35]. Therefore, for each environment, the actual error of the system must be checked before it is accepted for further calculations.

Another error we must consider is the inaccuracy in the laser position due to the inaccuracy of the robotic arm. The deviation is ± 0.05º in each direction, and two axes must be taken into account to calculate the resulting error for a single point. Liang, et al. [37] declare that it also depends on the measured distance of the point: the error grows the further the object is from the sensor. It is determined by the formula:

where:

- \(e_r\): robotic arm error
- a: the inaccuracy of the position of the robot arm, equal to 0.05º
- x: distance of the point from the sensor
- \(\frac{\sqrt2}2\): coefficient of error in two axes

Combining these two measurement errors gives us a general error that can occur during a scan and must be taken into account when comparing two scans. The total error resulting from this combination is given by a formula based on the diagram below [2] (Fig. 29).

where:

- \(e_{+}\): total measurement error for further points
- \(e_{-}\): total measurement error for closer points
- \(e_l\): measurement error of the laser rangefinder
- \(\frac{\sqrt2}2\): coefficient of error in two axes
- α: the inaccuracy of the position of the robot arm, equal to 0.05º
- \(l\): test point distance from the center of the system
- x: base point distance from the center of the system

Depending on the points being compared, we select the appropriate measurement error. Zhuang, et al. [54] hold that for base points closer to the system's center than the tested point, i.e., if the base point's absolute distance to point 0 of the system is shorter than the absolute distance of the tested point, the larger error \(e_{+}\) should be selected. When the tested point is closer to the system's center, we choose the smaller error \(e_{-}\). In this way, we account for our measurement uncertainty as well as possible.

Wang, et al. [55] argue that a point differs from the base scan if its absolute distance from each base point is greater than the accepted measurement error. This method yields the cloud of points that have changed since the last scan. This cloud is the basis for finding our object.
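A brute-force version of this comparison might look like the sketch below. The names `changed_points` and `tolerance` are hypothetical, points are assumed to be Cartesian tuples in metres, and the tolerance is supplied as a callable so that a per-pair error can be plugged in; a constant is used in the example for brevity.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]


def changed_points(
    base: Sequence[Point],
    new: Sequence[Point],
    tolerance: Callable[[Point, Point], float],
) -> List[Point]:
    """Return the points of `new` that are farther than the tolerance from EVERY base point."""
    return [
        p for p in new
        if all(math.dist(p, b) > tolerance(b, p) for b in base)
    ]


if __name__ == "__main__":
    base_scan = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    new_scan = [(1.01, 0.0, 0.0), (2.0, 0.0, 0.02), (1.5, 0.5, 0.0)]
    # Constant 5 cm tolerance, chosen only for this example.
    print(changed_points(base_scan, new_scan, lambda b, p: 0.05))
```

The comparison is quadratic in the number of points; for large clouds a spatial index would be a natural replacement, but that is outside what the text describes.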

4.7 Object separation

Object separation operates on the cloud of differing points obtained in the previous step. This cloud contains noise resulting from erroneous measurements, so not all points classified as different belong to the object of interest. Finding an object means keeping only those points that most likely belong to it. Motroni, et al. [42] claim that this classification amounts to finding the largest concentration of differing points. A concentration is a set of points that are adjacent to each other, and two points are adjacent when the distance between them does not exceed a particular limit value. The limit value depends on the measurement error, the scanning accuracy, and the distance of the test point from the system's center [2]. To calculate it, we use the formula:

where:

- \(t\): limit value
- \(a\): scanning accuracy
- \(l\): distance of the point from the center of the system
- \(e\): measurement error occurring in the previous step

The concentration of adjacent points found in this way belongs to the object we are looking for. If more than one concentration is found, we select those with the most points and treat each of them as a new object.
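The grouping of adjacent points can be sketched as a breadth-first search over the changed points. This is an illustration under simplifying assumptions: the function name `cluster_points` is hypothetical, and a single constant `limit` is used instead of the per-point limit value described above.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def cluster_points(points: Sequence[Point], limit: float) -> List[List[Point]]:
    """Group points so that each point is within `limit` of another point in its cluster.

    Clusters are returned largest first.
    """
    unvisited = set(range(len(points)))
    clusters: List[List[Point]] = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Adjacent points: distance does not exceed the limit value.
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= limit]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        clusters.append([points[k] for k in sorted(members)])
    return sorted(clusters, key=len, reverse=True)


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0), (5.0, 5.0, 5.0)]
    largest = cluster_points(cloud, limit=0.15)[0]
    print(len(largest))
```

In this example the three points along the x axis chain into one cluster even though the first and third are farther apart than the limit, while the isolated point forms a cluster of its own and would be treated as noise.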

4.8 Object identification

Identification consists of assigning an identification number, the RFID tag ID, to an object. Each object appearing in the examined zone carries an RFID tag with a unique ID number. When a new object appears in the zone, the configured reader sends information about this event to the system. At the same time, the list of all tags in the zone is updated. After scanning and finding the concentration of points, that is, the object searched for, the ID of the RFID tag most recently discovered at the moment of scanning is assigned to it.

When no new object arrives in the zone, we read no new RFID tag but locate two concentrations of points. This situation corresponds to an object being moved within the zone. The new position of the object is where the points are closer to the zero point of the zone than the base points on the same scanning line [50]. The scanning “line” is the straight line that passes through the system's center and the scanned point. DiGiampaolo and Martinelli [56] found that in this way the position of an already discovered object can be updated. When we receive information that an RFID tag has been removed from the examined zone and we do not find any cluster of points after the scan, we remove all the points that belonged to that object from our cloud [54].
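The three cases described in this section (new object, moved object, removed object) can be summarized in a small decision sketch. The function names, the event labels, and the representation of a changed measurement as a pair of ranges along one scanning line are assumptions made for illustration; the majority vote used to pick the new position is likewise an assumption, not a rule stated in the text.

```python
from typing import List, Optional, Tuple

# One changed measurement: (range in the new scan, range in the base scan)
# along the same scanning line, both measured from the system's center.
RangePair = Tuple[float, float]


def classify_event(new_tag: Optional[str], removed_tag: Optional[str], cluster_count: int) -> str:
    """Map the RFID event and the number of clusters found to an action."""
    if new_tag is not None and cluster_count >= 1:
        return "assign"   # a new tag was read: attach its ID to the found cluster
    if removed_tag is not None and cluster_count == 0:
        return "remove"   # tag left the zone and nothing was found: drop its points
    if new_tag is None and removed_tag is None and cluster_count == 2:
        return "move"     # no tag change but two clusters: an object was moved
    return "unknown"


def is_new_position(cluster: List[RangePair]) -> bool:
    """True if most points are closer to the center than the base points on the same line."""
    closer = sum(1 for new_range, base_range in cluster if new_range < base_range)
    return closer > len(cluster) / 2
```

For a moved object, the cluster for which `is_new_position` returns `True` would be taken as the updated location, and the other cluster as the place the object left.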

5 Detection problem of several objects

The problem with several objects arises when more than one object has to be detected during a single identification process, that is, when more than one object is placed in the zone during one movement. The idea for solving this problem was to use the read power of the individual RFID tags. Rohman, et al. [57] discovered that, based on the power read, knowledge of the location of the RFID antennas, and the location of the individual objects, it should be possible to determine which object is closer to or farther from the antenna and thus identify its exact location.

The results of the study are presented in Fig. 30, which depicts the dependence of signal strength on tag rotation. The values for signal strength reported by the reader are unitless quantities, and different reader models may report different values under the same conditions [58].

The analysis of the results shows that the rotation of the tag relative to the antenna significantly influences the power of the received signal. This was not expected, because the radio signal emitted by the antenna is circularly polarized [59]. Unfortunately, these results rule out identifying objects by the power of their signal: when tags are oriented differently relative to the antenna, we cannot determine which of them is closer to the antenna or predict their location [53].
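The ambiguity can be illustrated with a toy model. The sketch below is not the measured relationship from Fig. 30: it assumes an idealized free-space monostatic backscatter link in which received power falls with the fourth power of distance, and an ideal short-dipole tag whose one-way gain varies as the squared sine of the angle between the dipole axis and the line of sight. All names and numbers are illustrative assumptions.

```python
import math


def relative_backscatter_db(distance_m: float, tilt_deg: float) -> float:
    """Relative received power in dB for a toy backscatter link.

    tilt_deg is the angle between the tag's dipole axis and the line of sight
    to the antenna (90 degrees means the tag faces the antenna broadside).
    """
    one_way_gain = math.sin(math.radians(tilt_deg)) ** 2  # ideal short-dipole pattern
    # The tag gain acts on both the forward and the return path, and the
    # round-trip free-space loss scales with distance to the fourth power.
    return 10 * math.log10(one_way_gain ** 2 / distance_m ** 4)


near_tilted = relative_backscatter_db(1.0, 30.0)  # closer tag, unfavourably rotated
far_facing = relative_backscatter_db(1.5, 90.0)   # farther tag, facing the antenna

if __name__ == "__main__":
    print(f"near, tilted: {near_tilted:.1f} dB; far, facing: {far_facing:.1f} dB")
```

Under these assumptions the farther tag returns the stronger signal, so ranking tags by read power alone would order them incorrectly.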

6 Conclusion

This research aimed to combine appropriate sensors into a system that can accurately determine an object's location in a virtual 3D environment and identify it. The system builds on passive RFID technology and eliminates one of its drawbacks, namely that location is limited to specific zones. A further aim of the research was to examine the created artifact in order to facilitate its implementation in various industry verticals. The study achieved all of the prescribed objectives.

The system is a combination of two technologies that complement each other. RFID lets us identify the object with 100% certainty, and the laser range-finder allows us to determine the object's location accurately. The system also uses a robotic arm to direct the laser range-finder and a motion sensor that detects human interaction, so that the moment when movement ends can be determined and a new scan started. Jain, et al. [26] contend that such integration of components is achieved by using high-level programming languages in a heterogeneous software environment. The result is a cohesive system whose output is a point cloud in which successive objects are marked in specific colors, together with a list of all objects containing the color, the object identification number, and the ID number of the tag. The research shows the ability of the system to recognize objects at different distances. Khazetdinov, et al. [49] find that, on that basis, appropriate scanning parameters can be selected for the area under investigation so that the whole identification process is carried out optimally. The artifact is ready to be integrated with ProxiTrak, the existing product for inventory and real-time object tracking. ProxiTrak is the flagship product of the company ProxiGroup, which supervised the research in close cooperation with the specialists. The system responds to the real needs of current industry and is another element of Industry 4.0, in which we move the real world into the virtual world.

One idea for handling the appearance of many objects is to compare their sizes with a previously created database. By scanning the area and separating an object from it, we can determine the object's dimensions. If two objects appear, they can be told apart by their dimensions, although this approach would not work for objects of the same size. The use of a robotic arm is not strictly necessary: a two-axis mechanism consisting of two rotating joints perpendicular to each other would be sufficient for scanning. Using a robotic arm let us skip the mechanical construction and focus on creating the system and its algorithms.
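The size-matching idea could be prototyped roughly as follows. This is a sketch under stated assumptions: the names `bounding_dims` and `match_by_size`, the catalog of reference dimensions keyed by tag ID, and the tolerance are all hypothetical, and an axis-aligned bounding box is used even though a scan from a single viewpoint sees only the visible faces of an object.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Dims = Tuple[float, ...]


def bounding_dims(cluster: Sequence[Point]) -> Dims:
    """Edge lengths of the axis-aligned bounding box, sorted ascending."""
    xs, ys, zs = zip(*cluster)
    return tuple(sorted((max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))))


def match_by_size(dims: Dims, catalog: Dict[str, Dims], tol: float) -> List[str]:
    """Return every tag ID whose reference dimensions agree with dims within tol."""
    return [
        tag for tag, ref in catalog.items()
        if all(abs(a - b) <= tol for a, b in zip(dims, sorted(ref)))
    ]


if __name__ == "__main__":
    cluster = [(0.0, 0.0, 0.0), (0.4, 0.0, 0.0), (0.4, 0.3, 0.0), (0.0, 0.3, 0.2)]
    catalog = {"TAG-A": (0.4, 0.3, 0.2), "TAG-B": (1.0, 1.0, 1.0), "TAG-C": (0.2, 0.3, 0.4)}
    print(match_by_size(bounding_dims(cluster), catalog, tol=0.02))
```

In the example two catalog entries have the same dimensions, so both are returned, which is exactly the limitation noted above for objects of the same size.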

The artifact supports the building and visualization of an RFID-based, location-centric digital environment in the virtual world. The point cloud produced by the program provides a basis for overlaying 3D models on recognized objects. In this way, after creating a full 3D model of a warehouse and publishing it on the Internet, one could put on augmented reality glasses anywhere and virtually enter the warehouse, and so track, monitor, and manage the entire contents of an asset pallet or warehouse more efficiently and effectively. The proposed solution was tested in a laboratory unit as part of the project. At this stage, it has not been implemented in the company, but this is a goal for the future.

Data Availability

Data provided by Proxigroup sp. z o.o.

References

Fu W et al (2020) A method of multiple dynamic objects identification and localization based on laser and RFID. Sensors 20(14):3948

Fu Y, Wang C, Liu R, Liang G, Zhang H, Ur Rehman S (2018) Moving object localization based on UHF RFID phase and laser clustering. Sensors 18(3):825

Cordis (2017) PROXITRAK – next generation IoT tracking solution for a connected logistics – collect, analyse and visualise big data in a true real time. European Commission. https://cordis.europa.eu/project/id/761931. Accessed 2017

Ur Rehman S, Liu R, Zhang H, Liang G, Fu Y, Qayoom A (2019) Localization of moving objects based on RFID tag array and laser ranging information. Electronics 8(8):887

Fu Y, Liu R, Zhang H, Liang G, Rehman SU, Liu L (2019) Continuously tracking of moving object by a combination of ultra-high frequency radio-frequency identification and laser range finder. Int J Distrib Sens Netw 15(7):1550147719860990

Hunt VD, Albert P, Mike P (2007) History and evolution of RFID technology. In: RFID: A guide to radio frequency identification. Wiley, pp 25–31

Raptopoulos A, Sergiadis G, Dimitriou A (2023) Tag localization by Handheld UHF RFID reader with optical and RFID landmarks. IEEE J Radio Freq Identif 99:1–1. https://doi.org/10.1109/JRFID.2023.3238822

Xie D, Wang X, Tang A, Zhu H (2022) A portable RFID localization approach for mobile robots. IEEE Internet Things J. https://doi.org/10.1109/JIOT.2022.3202136

Chowdhury BDB, Masoud S, Son Y-J, Kubota C, Tronstad R (2021) A dynamic HMM-based real-time location tracking system utilizing UHF passive RFID. IEEE J Radio Freq Identif 6:41–53

Tan WC, Sidhu MS (2022) Review of RFID and IoT integration in supply chain management. Oper Res Perspect 100229

Smith AD (2021) Green supply chains and enabling RFID technology. In: Encyclopedia of organizational knowledge, administration, and technology. IGI Global, pp 2403–2420

De A, Datta T (2022) Backscatter interrogation performance in UHF RFID with energy harvesting. In: Proceedings of international conference on advanced computing applications. Springer, pp. 313–329

Zhu Z, Chen C (2021) Design of UHF-RFID-based automatic directionfinding, detection, location and rescue device. In: 2021 IEEE 5th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), vol. 5, pp. 1426-1429. https://doi.org/10.1109/ITNEC52019.2021.9586885

Zhong RY, Xu X, Wang L (2017) IoT-enabled smart factory visibility and traceability using laser-scanners. Proc Manuf 10:1–14

Lee YK, Goh YH, Tew Y (2018) Cyber Physical Autonomous Mobile Robot (CPAMR) framework in the context of industry 4.0. In: MATEC Web of Conferences, vol. 167: EDP Sciences, p. 02005

Zheng P et al (2018) Smart manufacturing systems for Industry 4.0: Conceptual framework, scenarios, and future perspectives. Front Mech Eng 13(2):137–150

Weber MM (2022) Equity research on Zebra technologies corp.-accelerated digitization along supply chains promises further growth

Abugabah A et al (2021) RFID adaption in healthcare organizations: An integrative framework. Comput Mater Contin. https://doi.org/10.32604/cmc.2022.019097

Xiao F, Wang Z, Ye N, Wang R, Li X-Y (2018) One more tag enables fine-grained RFID localization and tracking. IEEE/ACM Trans Netw 26(1):161–174

Yang L, Chen Y, Li X-Y, Xiao C, Li M, Liu Y (2014) Tagoram: Real-time tracking of mobile RFID tags to high precision using COTS devices. In: Proc. ACM MobiCom, pp. 237–248

Bernardini F et al (2020) Robot-based indoor positioning of UHF-RFID tags: The SAR method with multiple trajectories. IEEE Trans Instrum Meas 70:1–15

Tripicchio P et al (2022) A synthetic aperture UHF RFID localization method by phase unwrapping and hyperbolic intersection. IEEE Trans Autom Sci Eng 19(2):933–945

Huk K, Robaszkiewicz-Ostręga J (2018) Logistyka zwrotów na przykładzie hurtowni farmaceutycznej Neuca-Logistyca sp. z o.o., Prace Naukowe Uniwersytetu Ekonomicznego we Wrocławiu. Globalne i lokalne strategie logistyczne, Wydaw. Uniwersytetu Ekonomicznego we Wrocławiu, Wrocław, no. 505

Tzitzis A, Raptopoulos AC, Yioultsis TV, Dimitriou AG (2021) A real-time multi-antenna SAR-based method for 3D localization of RFID tags by a moving robot. IEEE J Radio Freq Identif 5(2):207–221

Vogt H (2002) Efficient object identification with passive RFID tags. In: Pervasive computing. Springer, pp. 98–113

Jain SB, Sonar SG, Jain SS, Daga P, Jain RS (2020) Review on comparison of different programming language by observing it’s advantages and disadvantages. Res J Eng Technol 11(3):133–137

Abdulzahra MM (2019) Novel anti-collision algorithm in RFID tag identification process. In: Computer science on-line conference. Springer, pp. 152–169

Alien. ALR-9900+ Files | Alien Technology. https://www.alientechnology.com/products/files-2/alr-9900/. Accessed 2017

Alien Technology. ALR-8696-C Low VSWR/Axial Ratio Antenna. Available: http://www.alientechnology.com/wp-content/uploads/Alien-Technology-ALR-8696-C-Antenna.pdf. Accessed 2017

Alien Technology. ALN-9662 Short Inlay. Available: http://www.alientechnology.com/wp-content/uploads/Alien-Technology-Higgs-3-ALN-9662-Short.pdf. Accessed 2017

Garmin. Garmin Lidar Lite v3 Operation manual. Garmin International. https://static.garmin.com/pumac/LIDAR_Lite_v3_Operation_Manual_and_Technical_Specifications.pdf. Accessed 2017

TinkerForge. Garmin Lidar-Lite v3 Laser Range-Finder. TinkerForge. https://www.tinkerforge.com/en/doc/Hardware/Bricks/Master_Brick.html. Accessed 2017

uFactory. uArm Swift Pro Developer Guide (2013) Shenzhen factory LTD. Available: http://download.ufactory.cc/docs/en/uArm%20pro%20User%20Manual%20v1.1.0.pdf. Accessed 2017

Demir T, Mutlu U, Yıldırım T (2018) Motion-controlled virtual reality robotic arm with kinect. In: 2018 Innovations in Intelligent Systems and Applications (INISTA). IEEE, pp. 1–5

TinkerForge. Motion detector bricklet 2.0. TinkerForge. https://www.tinkerforge.com/en/doc/Hardware/Bricklets/Motion_Detector_V2.html. Accessed 2017

Hayward S, Earps J, Sharpe R, van Lopik K, Tribe J, West A (2021) A novel inertial positioning update method, using passive RFID tags, for indoor asset localisation. CIRP J Manuf Sci Technol 35:968–982

Liang G, Liu R, Fu Y, Zhang H, Wang H, Guo M (2020) An innovative approach to track moving object based on RFID and laser ranging information. KSII Trans Internet Inf Syst (TIIS) 14(1):131–147

Sandén BI (2009) Multithreading. Colorado Technical University, Colorado Springs

Wu H, Wu X, Tian G (2018) Indoor robot localization based on single RFID tag. Artificial Life and Robotics 23(3):373–379

Zhou G-j, Yu X-l, Liu M-j, Lu D-s RFID tag group performance detection based on optoelectronic sensing technology

Li L, et al. (2022) Optimization of RFID reading performance based on YOLOv3 and Elman neural network. J Intell Fuzzy Syst, no. Preprint, pp. 1–14

Motroni A, Nepa P, Buffi A, Tripicchio P, Unetti M (2018) RFID tag localization with UGV in retail applications. In: 2018 3rd International Conference on Smart and Sustainable Technologies (SpliTech). IEEE, pp. 1–5

Tripicchio P et al (2021) A synthetic aperture UHF RFID localization method by phase unwrapping and hyperbolic intersection. IEEE Trans Autom Sci Eng 19(2):933–945

Wu C, Tao B, Wu H, Gong Z, Yin Z (2021) A UHF RFID-based dynamic object following method for a mobile robot using phase difference information. IEEE Trans Instrum Meas 70:1–11

Motroni A, Buffi A, Nepa P (2021) A survey on indoor vehicle localization through RFID technology. IEEE Access 9:17921–17942

Motroni A, Buffi A, Nepa P, Tellini B (2020) Sensor-fusion and tracking method for indoor vehicles with low-density UHF-RFID tags. IEEE Trans Instrum Meas 70:1–14

Bernardini F, Motroni A, Nepa P, Buffi A, Tellini B (2020) SAR-based localization of UHF-RFID tags in smart warehouses. In: 2020 5th International Conference on Smart and Sustainable Technologies (SpliTech). IEEE, pp. 1–6

Thomas C, Panagiotopoulos T, Kotipalli P, Haynes M, Starner T (2018) RF-pick: comparing order picking using a HUD with wearable RFID verification to traditional pick methods. In: Proceedings of the 2018 ACM international symposium on wearable computers, pp. 168–175

Khazetdinov A, Aleksandrov A, Zakiev A, Magid E, Hsia K (2020) RFID-based warehouse management system prototyping using a heterogeneous team of robots. Robots in Human Life, p. 263

Longhi M, Millane A, Taylor Z, Nieto J, Siegwart R, Marrocco G (2018) An integrated MAV-RFID system for geo-referenced monitoring of harsh environments. In: 2018 IEEE Conference on Antenna Measurements & Applications (CAMA), IEEE, pp. 1–4

Shamsfakhr F et al. (2022) RFID-based robot localisation: an unconstrained optimisation problem by exploiting RSSI. In: 2022 IEEE International Instrumentation and Measurement Technology Conference (I2MTC). IEEE, pp. 1–6

Yu X, Zhuang X, Liu Z, Zhao Z, Li L, Zhang W (2022) A novel 3D measurement of RFID multi-tag network based on MWCNN and ELM. IET Sci Meas Technol 16(1):15–27

Shamsfakhr F, Motroni A, Palopoli L, Buffi A, Nepa P, Fontanelli D (2021) Robot localisation using UHF-RFID tags: A Kalman smoother approach. Sensors 21(3):717

Zhuang X et al (2018) A novel method for 3D measurement of RFID multi-tag network based on matching vision and wavelet. Meas Sci Technol 29(7):075001

Wang G et al (2022) A Generalized method to combat multipaths for RFID sensing. IEEE/ACM Trans Network 01:1–16

DiGiampaolo E, Martinelli F (2019) A restarting paradigm for a Range-Only SLAM algorithm using the phase of passive UHF-RFID signals. In: 2019 IEEE International Conference on RFID Technology and Applications (RFID-TA). IEEE, pp. 279–284

Rohman BP, Andra MB, Putra HF, Fandiantoro DH, Nishimoto M (2019) Multisensory surveillance drone for survivor detection and geolocalization in complex post-disaster environment. In: IGARSS 2019–2019 IEEE International Geoscience and Remote Sensing Symposium. IEEE, pp. 9368–9371

Wu D-L, Ng WW, Yeung DS, Ding H-L (2009) A brief survey on current RFID applications. In: 2009 International conference on machine learning and cybernetics, vol. 4, pp. 2330–2335. IEEE

Patel SJ, Zawodniok M (2022) 3D localization of RFID antenna tags using convolutional neural networks. IEEE Transactions on Instrumentation and Measurement, pp. 1–1. https://doi.org/10.1109/TIM.2022.3146604

Funding

Financed by the National Center for Research and Development (NCBiR) "Smart Development Operational Program 2014–2020", application no. POIR.01.01.01-00-0452/17.

Contributions

Not Applicable.

Ethics declarations

Ethics Approval

Not Applicable.

Competing Interests

Not Applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shull, C., Marecki, K., Huk, K. et al. The Study of RFID Technology and Laser Telemetry to Locate Products in Space. Mobile Netw Appl (2023). https://doi.org/10.1007/s11036-023-02242-3

DOI: https://doi.org/10.1007/s11036-023-02242-3