Abstract

Existing systems for the virtual reconstruction of architectural spatial structures suffer from slow running speed, long processing time, and poor rendering. To address these problems, a virtual reconstruction system for building space structure is designed using laser 3D scanning technology under the fusion of multiple big data sources. In the hardware design, a 3D laser scanner is connected to a computer, and an image preprocessing module reduces noise interference. In the software design, the user interface and maintenance module are improved. 3D model reconstruction is then realized through data acquisition, data registration, coordinate transformation and 3D rendering. The results show that the designed system runs fast and that the color of the reconstruction results is consistent, which indicates that the reconstructions of building space structure obtained by the system are conducive to in-depth study of building space.


1 Introduction

As a product of the information age, the three-dimensional city has gradually become a research hotspot in the field of digital cities [1]. As people's understanding continues to develop, solving the problem of building reconstruction has come to mark the realization of urban digitization [2]. Because physically reconstructing building space is costly, it is necessary to realize virtual reconstruction of building space structure with the help of computer technology [3]. Many elements make up a building space, and the relevant structural data inside the building must be collected during reconstruction [4]. Traditional data acquisition generally relies on GPS positioning. However, the accuracy of the internal data collected by this method is low [5]. Therefore, virtual reconstruction of building spatial structure is studied further [6].

Xia et al. [7] proposed a virtual space reconstruction method based on the position of the central eye. Their results show that the method realizes reconstruction of building space structure and provides a theoretical basis for improving the authenticity and interactivity of virtual reality technology. However, the reconstruction results deviate from the actual structure and the accuracy is low. Overbeck et al. [8] designed a system for acquiring, processing and rendering panoramic light field still photography in virtual reality. They proposed a novel real-time light field reconstruction algorithm that uses a light field pre-filtering operation to project a high-quality offline reconstruction model into a real-time model and so suppress artifacts. They also proposed a practical light field compression method that provides high-quality compression by modifying the VP9 video decoder. These components are combined into a complete light field system that achieves high-quality rendering while generating a 90 Hz stereo view on commercial virtual reality hardware. The results show that the system can suppress double artifacts, but its running time is long. Wang et al. [9] proposed a virtual reconstruction system for building spatial structure based on laser 3D scanning. The overall design of the reconstruction system is based on single chip mapping and 3D laser scanning technology. The acquisition process, preprocessing and registration method for building spatial structure data obtained by laser 3D scanning are analyzed in detail. The building spatial structure model is reconstructed in 3D using a triangular mesh, and an outline collection tool and an interactive editing environment are provided for operators. The experimental results show that the 3D modeling results fit the actual structure well, but the rendering effect is poor.

In addition to the above methods, scholars have applied big data technology to the Internet of Things and to smart and connected communities [10]. Others have studied privacy-aware data fusion and prediction based on spatial-temporal context in the industrial environment of smart cities [11]. Meanwhile, a cloud computing framework for the analysis of agricultural big data based on Dempster-Shafer theory has been designed, and big data technology is now applied in various fields [12].

To address the slow running speed, long processing time, and poor spatial structure rendering of existing systems, a virtual reconstruction system for building spatial structure based on laser 3D scanning is designed. The innovations of the system are as follows:

(1) In the hardware design, a 3D laser scanner based on optical principles is connected to a computer. An image preprocessing module is also designed to reduce noise interference and facilitate subsequent image processing, which helps solve the poor rendering of traditional systems.

(2) From the laser 3D scanning data, an information analysis window of the distances between the scanning center and obstacles is constructed, and the difference between adjacent data is calculated. This effectively filters out pulse interference noise and mixed pixel interference and improves noise filtering.

(3) The 3D model reconstruction function is realized with the help of the hardware, and the user interface and maintenance module of the system are designed to improve the space reconstruction effect.

(4) Practical results show that applying the system to the virtual reconstruction of building space structure effectively addresses the slow reconstruction of existing systems and their inconsistency with the actual structure.

2 Hardware Design for Virtual Reconstruction System of Building Space Structure

The hardware of the system studied in this paper is divided into two parts: a three-dimensional laser scanning instrument, and the running equipment that supports system operation.

2.1 3D Laser Scanner

A laser scanner is a distance sensor based on optical principles. It works by extending point laser measurement to the whole three-dimensional space through additional degrees of freedom, so as to obtain a three-dimensional laser point cloud. The technology scans the shape, structure and color of the object space to obtain the spatial coordinates of the object surface. The laser scanner is shown in Fig. 1.

Because the influence of the laser on the measured object is negligible, laser scanning is widely used in building measurement, particularly in dangerous areas and for buildings that are difficult to measure. Scanning methods include single line scanning, raster scanning and all-angle scanning. The laser source, scanner and light detector are the core components of a laser scanner. The related performance parameter settings are shown in Table 1.

First, the parameters of the laser scanner are calibrated before measurement. A stepping motor is selected as the power driving device. The core processor is high-speed processing chip 2.0. Based on non-contact computer vision technology, an automatic 3D modeling method is used to model the three-dimensional objects of the building space structure. Generally, a 3D laser scanner uses a low-energy red laser to scan the building space structure and obtain local geometry information. Capturing the complete geometry of an object requires 5 to 12 scans, which are stitched together geometrically using the scanner's software.

Three-dimensional laser scanning offers high efficiency and precision, but the equipment is expensive and the materials that can be scanned are limited. Some low-end scanners cannot capture rich texture information about surface color, which must be compensated for afterwards. This requires taking a series of multi-angle photos around the object to obtain the texture characteristics of the building space structure. This process is intuitive, places low quality requirements on the original photos, and allows the texture image to be edited during generation.

2.2 Image Preprocessing Module

Before image analysis, the image should be preprocessed. This is because there are some interference factors, such as thermal noise and undercurrent noise, during acquisition of images by laser 3D scanning. The difference of light intensity and shooting angle will also disturb the image. In addition, the scanned image is a color image composed of a large number of pixels, which is disadvantageous to the later image processing. Fig. 2 shows a circuit diagram of the image preprocessing module.

According to the needs of the image preprocessing module, a power circuit, a configuration circuit and a storage circuit are added as peripherals, and pipelined non-uniformity correction is started. A two-point correction is performed first, followed by a one-point correction. The image is then placed in a buffer, and the original image is sent to the processing module afterwards.
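The paper does not give the correction formulas used by the module. As a minimal sketch, assuming the common linear form of non-uniformity correction (a per-pixel gain and offset derived from two uniform reference frames, then an offset-only refresh from a single reference frame), the two stages could look like the following. All function names are hypothetical, and frames are represented as flat lists of pixel values for brevity.

```python
def two_point_coefficients(low_frame, high_frame):
    """Per-pixel gain and offset from two uniform reference frames (assumed linear model)."""
    mean_low = sum(low_frame) / len(low_frame)
    mean_high = sum(high_frame) / len(high_frame)
    gains, offsets = [], []
    for low, high in zip(low_frame, high_frame):
        span = high - low
        # A pixel with no response span falls back to unit gain.
        gain = (mean_high - mean_low) / span if span != 0 else 1.0
        gains.append(gain)
        offsets.append(mean_low - gain * low)
    return gains, offsets


def apply_correction(frame, gains, offsets):
    """Apply the per-pixel linear correction: corrected = gain * raw + offset."""
    return [g * v + o for v, g, o in zip(frame, gains, offsets)]


def one_point_update(reference_frame, gains, offsets):
    """Refresh only the offsets so that a single uniform reference frame comes out flat."""
    corrected = apply_correction(reference_frame, gains, offsets)
    target = sum(corrected) / len(corrected)
    return [o + (target - c) for o, c in zip(offsets, corrected)]


if __name__ == "__main__":
    low = [10.0, 12.0, 8.0]
    high = [110.0, 132.0, 88.0]
    gains, offsets = two_point_coefficients(low, high)
    print(apply_correction(high, gains, offsets))
```

In this sketch the two-point stage equalizes pixel responses at both reference levels, while the one-point stage only compensates offset drift that appears later.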

2.3 System Running Equipment

The computer must meet the following operating conditions: a 64-bit operating system, a processor of at least 4.20 GHz, more than 8 GB of memory, and more than 32 GB of external storage.

3 Design of Software in Virtual Reconstruction System for Building Space Structure

Based on the hardware design, the software of the virtual reconstruction system is divided into a function module and a performance module. The function module realizes single-sheet mapping and 3D model reconstruction. The performance module includes the user interface and system maintenance. The module distribution of the system is shown in Fig. 3.

According to characteristics of data acquisition of laser 3D scanner, virtual reconstruction of building spatial structure is divided into four levels: building contour acquisition, internal structure reconstruction, model editing and model export.

3.1 Improvement of User Interactivity under User Interface Design

According to the functions that must be realized, the user interface is divided into a login interface, a user interaction interface and a UI design interface. The login interface controls whether a user can log in to the system. Ordinary users log in with a registered user name and password, and the interface provides a remember-password function. The user login interface is shown in Fig. 4.

A navigation bar is set on the UI interface. It includes the home page, single-sheet mapping, 3D reconstruction of buildings and an interactive window. User-to-system and user-to-user interaction can be realized in the interactive window. A variety of login methods are provided for users to choose from. Users can register in the traditional way by filling in their own information; the client records the user data and generates a personal ID. Login is not limited to the personal ID: the client can also cooperate with third-party software so that users can authorize login through other software, which helps unify user accounts.

3.2 Spatial Structure Combination Based on Single-Sheet Mapping

Single-sheet mapping is a function option in the virtual reconstruction system and a key step in the final reconstruction of the spatial structure. To reconstruct the whole building space, each plane element is constructed separately, and then all the elements are combined according to the spatial structure. Single-sheet mapping constructs all the plane elements: a laser scanning instrument or ordinary image recording equipment records the construction data of each plane, which is then input directly into the system. The single-sheet mapping module provides editing tools for adjusting parts of the structure, giving users an interactive editing environment. In essence, the single-sheet mapping environment is constructed by using the spatial encryption effect to set initial values and combining them with the exterior orientation elements solved by matching homonymous features, so as to build a perspective projection model and a single-sheet mapping environment combined with the point cloud.

3.3 Realization of Virtual Reconstruction of Building Space Structure Based on Multivariate Data Fusion

Reconstruction of the 3D virtual model collects the outlines of buildings and integrates point clouds with related images through multivariate data fusion. Reconstruction of the internal structure uses a normal segmentation method to detect the rooftop and roof structure lines, and then applies the corresponding algorithm for the specific reconstruction. During this process, the reconstruction results can also be edited to meet specific requirements: editing tools are provided for local modification of the automatically generated results, and the reconstruction results are rendered and exported as 3D format files with the support of the model.

3.3.1 Acquisition of 3D Laser Scanning Data

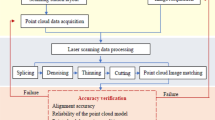

The laser scanning equipment runs according to the set parameter values. Data acquisition stores the data that have been read and identified in the system database. The flow of data acquisition is shown in Fig. 5.

As Fig. 5 shows, the scanning equipment is placed in front of the building to be tested, and the laser transmitter is turned on. Through the USB communication module, the software receives the point cloud data sent by the lidar, and the returned optical signal is identified immediately after being converted into an electronic signal. The structural elements within a building space include walls, floors and ceilings. When scanning, in addition to recording the scanned data, it is necessary to identify the attributes of the detected objects. Data acquisition of the spatial structure is then carried out with the scanning instrument, focusing mainly on ranging and angle measurement. The 3D laser scanner can be regarded as the core unit. The laser is emitted onto the surface of the target to be measured. According to the ranging principle and the actual needs of the building structure, the phase ranging method is adopted to measure distance. The laser beam is emitted continuously toward the target building, and the laser receiver then receives the reflected echo [13]. The round-trip time of the laser signal is calculated from the phase difference between the two laser beams, from which the distance to the target is obtained. If the angular frequency is ω, the distance to be measured is L, and the phase delay of a round trip is ϕ, the round-trip time is given by Formula (1).

Therefore, the distance L can be expressed by Formula (2).

In the formula, f is the signal frequency after modulation, N is the number of modulation half-wavelengths contained in the measuring line, and Δϕ is the residual phase delay, short of a full half-wavelength, generated by a round trip of the signal in the measuring line. Under a given modulation and standard atmospheric conditions, \( \frac{c}{4\pi f} \) is a constant. The precise angle θb is then obtained by calculation, as shown in Formula (3).

In the formula, Nr is the number of rotor teeth in the motor, m is the number of motor phases, and b is the number of line states of the continuous windings. In this way, laser pulse measurement can be carried out synchronously by an encoder controlled by a precise clock, and the scanning angle of each laser pulse in the horizontal and vertical directions can be obtained [14, 15]. The reconstruction parameters of each spatial structure are stored to complete the storage of the original data. The processed data are stored in the format (L, θb, φ, G), and the storage data files are generated according to the specified requirements.
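Formulas (1) and (2) are not reproduced in this text. As a hedged illustration, the sketch below assumes the standard phase-ranging relations that match the symbols defined above: round-trip time t = ϕ/ω with ω = 2πf, total round-trip phase ϕ = Nπ + Δϕ, and distance L = c·t/2 = (c / 4πf)(Nπ + Δϕ). The function names are hypothetical, and the vacuum speed of light stands in for the value of c under standard atmospheric conditions.

```python
import math

# Speed of light in vacuum (m/s); used here as an approximation for c in air.
C = 299_792_458.0


def round_trip_time(phase_delay, modulation_freq):
    """Round-trip time t = phi / omega, with omega = 2 * pi * f."""
    omega = 2.0 * math.pi * modulation_freq
    return phase_delay / omega


def phase_distance(n_half_waves, delta_phi, modulation_freq):
    """Distance L = c / (4 * pi * f) * (N * pi + delta_phi)."""
    scale = C / (4.0 * math.pi * modulation_freq)
    return scale * (n_half_waves * math.pi + delta_phi)


if __name__ == "__main__":
    f = 10e6                      # assumed 10 MHz modulation frequency
    n, d_phi = 3, math.pi / 2     # assumed example values for N and delta_phi
    t = round_trip_time(n * math.pi + d_phi, f)
    print(t, phase_distance(n, d_phi, f))
```

Both routes give the same distance, since C * t / 2 and the closed form differ only by algebraic rearrangement.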

3.3.2 Noise Filtering of Laser Scanning

During laser scanning, the main noise sources are mutual interference between ambient light sources and the laser light source in the tracking environment, which leads to pixel mixing. If the emissivity is too small or the incident angle on the measured target is too large, data will be lost. Occlusion within the building space creates scanning blind areas, and some areas cannot be scanned because of the gaps between laser scanning lines. During virtual reconstruction of building space, these interference factors lead to inaccurate reconstruction results. Therefore, the data obtained by laser scanning must be filtered.

Previous research shows that ranging information is distributed over all measurement directions in polar coordinates, and that the measurement data of adjacent time points are correlated. Within the same measurement, the information at adjacent scanning angles is also clearly correlated. This correlation makes it possible to remove interference during reconstruction. From the obtained ranging data, a 3×3 information analysis window is constructed, as given in Formula (4).

In the formula, ki,j is the distance between the scanning center and the jth obstacle. The subscript i represents the sampling time point of the ranging information, and j represents the position of the ranging information within the same group of data. The window contains nine data values that are correlated in time and space. The difference between ki,j and its adjacent data is calculated by Formula (5).

In the formula, p and q cannot both be 0 at the same time. Δkmin is the minimum difference between adjacent data in time and space, and χd is the standard deviation of the laser measurement. When Δkmin > χd, the measured value ki,j is regarded as noise and does not participate in the 3D reconstruction of the building spatial structure.

By constructing an information analysis window of the distances between the scanning center and obstacles and calculating the differences between adjacent data, each measurement can be compared against the standard deviation of the laser measurement. In this way, pulse interference noise and mixed pixel interference can be effectively filtered out, and noise filtering of the laser scan is completed.
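The window test described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the authors' implementation: the window is taken to hold the values k[i+p][j+q] for p, q in {-1, 0, 1}, Δkmin is taken as the smallest absolute difference between the center and its eight neighbors, and border samples without a full window are simply left unflagged. The function names are hypothetical.

```python
def min_neighbor_difference(k, i, j):
    """Smallest |k[i][j] - k[i+p][j+q]| over the 3x3 window, excluding p = q = 0."""
    diffs = []
    for p in (-1, 0, 1):
        for q in (-1, 0, 1):
            if p == 0 and q == 0:
                continue
            diffs.append(abs(k[i][j] - k[i + p][j + q]))
    return min(diffs)


def flag_noise(k, chi_d):
    """Mark interior samples whose minimum neighbor difference exceeds chi_d."""
    rows, cols = len(k), len(k[0])
    noisy = [[False] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            noisy[i][j] = min_neighbor_difference(k, i, j) > chi_d
    return noisy


if __name__ == "__main__":
    # Rows are sampling time points i, columns are positions j within a scan.
    ranges = [
        [5.0, 5.1, 5.0],
        [5.1, 9.0, 5.0],
        [5.0, 5.1, 5.1],
    ]
    print(flag_noise(ranges, chi_d=0.5))
```

Using the minimum rather than the mean difference means a sample is rejected only when it disagrees with every neighbor, so genuine depth edges, which agree with at least one side, are kept.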

3.3.3 Data Registration

To complete data registration, a standard pixel format is set first, which avoids rendering failures when the data are output. Plane segmentation is then performed on the image data to ensure the accuracy of registration, using a region growing segmentation method based on the point cloud. The conditions restricting region growth are specified before segmentation, and eigenvector estimation is performed on each point to be segmented [16, 17]. Within the regions to be segmented, seed points are selected at random or by Hough transform. Each neighborhood point is then tested for a feature measure similar to that of the seed point. If it is similar, it is assigned to the same region; otherwise it is rejected, and the next point is taken as a new region growing seed. When no new seed points can be found in the neighborhood, region growth is complete. All points identified as similar are thus grouped into one class. Finally, pairwise registration within each region is completed: from the feature description points in the two data sets, the correspondence between points is estimated, and the correct correspondences are then used to estimate the rigid transformation that completes registration.
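A simplified sketch of the region growing step follows. It makes several assumptions that the paper does not specify: the feature measure is a unit surface normal per point, similarity means the angle between normals is below a threshold, the neighborhood is a fixed search radius found by brute force, and seeds are taken in index order instead of being chosen at random or by Hough transform. Points that fail the similarity test stay available as seeds for later regions. The function name `region_growing` is hypothetical.

```python
import math
from collections import deque


def region_growing(points, normals, radius, max_angle_deg):
    """Label each point with a region index by growing from unlabeled seeds."""
    cos_limit = math.cos(math.radians(max_angle_deg))
    labels = [-1] * len(points)
    region = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue  # already absorbed by an earlier region
        labels[seed] = region
        queue = deque([seed])
        while queue:
            current = queue.popleft()
            for nb in range(len(points)):
                if labels[nb] != -1:
                    continue
                if math.dist(points[current], points[nb]) > radius:
                    continue
                # Unit normals assumed; abs() ignores the normal's sign.
                dot = sum(a * b for a, b in zip(normals[current], normals[nb]))
                if abs(dot) >= cos_limit:
                    labels[nb] = region
                    queue.append(nb)
        region += 1
    return labels


if __name__ == "__main__":
    # Three floor points (normal +z) next to three wall points (normal +x).
    pts = [(0.5, 0, 0), (1.0, 0, 0), (1.5, 0, 0),
           (0, 0, 0.5), (0, 0, 1.0), (0, 0, 1.5)]
    nrm = [(0, 0, 1)] * 3 + [(1, 0, 0)] * 3
    print(region_growing(pts, nrm, radius=0.8, max_angle_deg=10.0))
```

The brute-force neighbor search is quadratic in the number of points; a spatial index such as a k-d tree would be the usual replacement for real scans.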

3.3.4 Coordinate Transformation

Based on the results of data denoising and data registration, 3D coordinate transformation of the spatial structure is carried out. By function, 3D coordinate transformation falls into two types: one converts laser scanning data into 3D coordinates, and the other implements the editing tools [18, 19]. The data format collected by the laser scanner is (L, θb, φ, G). If the coordinate of the scanner's starting point is (x0, y0, z0) and the input target data are (L0, θb0, φ0, G0), the coordinates (x, y, z) of the target point can be obtained by the coordinate conversion algorithm. The solution for the x coordinate is shown in Formula (6).

Similarly, the solutions for coordinates y and z are shown in Formula (7) and Formula (8).

Suppose that the gray value of each pixel in the image is G0. With the above method, the matrix description of the target image is obtained by transforming the coordinates of each pixel in the target area.
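Formulas (6) to (8) are not reproduced in this text, so the exact angle convention is unknown. The sketch below assumes a common spherical-to-Cartesian convention in which θb is the horizontal angle measured in the x-y plane and φ is the vertical (elevation) angle above that plane, with the scanner origin added as an offset. The function name `scan_to_cartesian` is hypothetical, and a different convention would change which trigonometric function appears in each term.

```python
import math


def scan_to_cartesian(distance, theta_b, phi, origin=(0.0, 0.0, 0.0)):
    """Convert one (L, theta_b, phi) sample to (x, y, z) under the assumed convention.

    theta_b: horizontal angle in radians, phi: elevation angle in radians.
    """
    x0, y0, z0 = origin
    horizontal = distance * math.cos(phi)  # projection onto the x-y plane
    x = x0 + horizontal * math.cos(theta_b)
    y = y0 + horizontal * math.sin(theta_b)
    z = z0 + distance * math.sin(phi)
    return (x, y, z)


if __name__ == "__main__":
    # The gray value G is carried along unchanged with each converted point.
    sample = (2.0, math.pi / 2, 0.0, 128)
    L, theta_b, phi, gray = sample
    print(scan_to_cartesian(L, theta_b, phi, origin=(1.0, 1.0, 1.0)), gray)
```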

The editing tools provide translation, rotation and scaling. These transformations change the coordinate data, so the new coordinates must be calculated. Taking rotation as an example, the original coordinate is expressed as the column vector [X, Y, Z]^T, and it is rotated around the x-axis. The result can be expressed as in Formula (9).

In the formula, Rx is the rotation matrix, and its expression is shown in Formula (10).

In the formula, α is the rotation angle. The decomposition is shown in Formula (11).

In the same way, coordinate transformation about the y-axis and the z-axis can be realized.
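As an illustration of the rotation step, the sketch below uses the standard right-handed rotation matrix about the x-axis, with rows (1, 0, 0), (0, cos α, -sin α) and (0, sin α, cos α), applied to a column vector. Whether the paper's Formula (10) uses this sign convention cannot be confirmed from the text, so treat it as an assumption. The function names are hypothetical.

```python
import math


def rotation_x(alpha):
    """Standard right-handed rotation matrix about the x-axis (alpha in radians)."""
    c, s = math.cos(alpha), math.sin(alpha)
    return [
        [1.0, 0.0, 0.0],
        [0.0, c, -s],
        [0.0, s, c],
    ]


def apply_matrix(matrix, point):
    """Multiply a 3x3 matrix by a 3-element column vector."""
    return tuple(sum(row[k] * point[k] for k in range(3)) for row in matrix)


if __name__ == "__main__":
    # Component form: X' = X, Y' = Y cos(a) - Z sin(a), Z' = Y sin(a) + Z cos(a).
    print(apply_matrix(rotation_x(math.pi / 2), (0.0, 1.0, 0.0)))
```

Rotations about the y-axis and z-axis follow the same pattern with the fixed row and column moved to the corresponding axis.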

3.3.5 3D Rendering

In general, the internal structure of large buildings is very complex, so the internal structure must be constructed when modeling and rendering. It includes regular and irregular building components. Components that are difficult to model are retained where necessary, and a triangular mesh model can be used to preserve the original appearance of the building. According to the projection principle, all building components are placed in the same file for overall measurement control. Because the parameters of each component are expressed in the overall coordinate system, a complete virtual space inside the building can be formed by splicing the components in order [20]. Gaps of all sizes and intersections between components must be corrected. In this way, a complete 3D model of the building is constructed. Rendering parameters are set before 3D rendering. Because the reconstruction results are output in 3D form, perspective projection is selected as the rendering projection method. The gray value is set through the values of R, G and B; the value of R is shown in Formula (12).

Similarly, the values of G and B are shown in Formula (13) and Formula (14).

In these formulas, f is the projection frequency.

In addition to the viewpoint position and the aspect ratio of the viewport, the lighting, shadow, material, texture and other conditions of the rendering environment must be set during 3D rendering. In the rendering thread, the render scene function is called repeatedly. To make the scene more realistic, gridlines can be added. Finally, the rendered model is exported in general 3D file formats such as DWG, OBJ and STL, as well as in an intermediate file format recognized by TerraScan software.
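To make the role of the viewpoint and the viewport aspect ratio concrete, here is a minimal perspective projection sketch. It assumes a pinhole camera at the origin looking along the negative z-axis (the convention used by OpenGL-style pipelines) with a vertical field of view and a width-to-height aspect ratio; the paper does not state which rendering library or convention the system uses. The function name `project_point` is hypothetical.

```python
import math


def project_point(point, fov_y_deg, aspect):
    """Project a camera-space point to normalized image coordinates in [-1, 1].

    The camera sits at the origin and looks along -z (assumed convention).
    """
    x, y, z = point
    if z >= 0:
        raise ValueError("point must be in front of the camera (z < 0)")
    focal = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    depth = -z
    # Dividing by depth is what makes distant components appear smaller.
    return (focal / aspect * x / depth, focal * y / depth)


if __name__ == "__main__":
    near_corner = project_point((1.0, 1.0, -1.0), fov_y_deg=90.0, aspect=2.0)
    far_corner = project_point((1.0, 1.0, -4.0), fov_y_deg=90.0, aspect=2.0)
    print(near_corner, far_corner)
```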

3.4 Result Generation of Virtual Reconstruction of Building Space Structure

The system maintenance module ensures normal operation of the system. Maintenance covers the registry, the operating system, and the prevention and removal of viruses. Maintaining the system improves operating efficiency and saves time in the virtual reconstruction of space structure.

The system calls the 3D laser scanner to collect the data. In the system interface, the toolbar button for generating reconstruction results can be clicked directly.

Using the toolbar in Fig. 6, the reconstruction can be edited and parts of the spatial structure modified to obtain the desired reconstruction result.

4 System Test Results

In order to verify application performance of virtual reconstruction system of building space structure based on laser three-dimensional scanning under fusion of multivariate big data, experimental verification was performed.

4.1 Experimental Protocol

(1) Experimental hardware environment

The experiment was carried out on a machine with an Intel Core i5 M480 CPU @ 2.67 GHz, 8 GB of memory and 64-bit Windows 10, and the experiment time was set to 30 min. To ensure the reliability and validity of the results, the maximum coverage radius of the network equipment used in the experiment was set to 150 m, the transmission delay to 0.10 s, the signal-to-noise ratio of multipath interference to -10 dB, the pulse width to 1 ms, and the carrier frequency to 1100 kHz. Because various factors introduce errors into the experimental data, measurements were repeated and averaged to reduce the influence of error on the results.

(2) Source of experimental data

The data used in the experiment come from the International Architecture Database, hosted at the website archinform.net. These data are time-sensitive.

(3) Comparison index

a. Virtual reconstruction system running time: This indicator can fully reflect actual application performance of reconstruction system. The shorter the reconstruction time, the higher operating efficiency of the system.

b. Rendering effect: The rendering effect can reflect visual effect of virtual reconstruction of architectural space structure, which is of great significance for evaluating effectiveness of the system.

(4) Comparison method

To verify the advantages of the designed system in the virtual reconstruction of architectural space structure, a virtual space reconstruction system based on the position of the central eye (system 1) and a panoramic light field static photography system based on virtual reality (system 2) are used as comparison systems for a specific comparative analysis.

According to above experimental conditions, virtual reconstruction effect of building space structure is tested, and corresponding experimental conclusions are drawn.

4.2 Experimental Results and Analysis

This section first examines the running time of the virtual reconstruction system. The traditional virtual space reconstruction system based on central eye position and the virtual reality panoramic light field static photography system are set as system 1 and system 2, respectively. The proposed system based on 3D laser scanning (LRS) is compared with both. Several buildings are taken as reconstruction objects, and their internal architectural design drawings are taken as the reconstruction standard. The basic parameters of the reconstruction systems are kept consistent. The start time is the moment when the hardware starts collecting and reconstructing data. The end time is the moment when rendering of the reconstruction results and export in the specified three-dimensional format are complete. The statistical results are shown in Table 2.

The data in Table 2 show that as the number of tasks increases, the running time of the proposed virtual reconstruction system based on laser 3D scanning gradually increases, but it is always lower than that of the traditional systems. The shortest reconstruction time of the proposed system is only 1.0 s and the longest is only 6.5 s, whereas the reconstruction time of system 1 rises from 3.5 s to 15.4 s, a large increase, and the reconstruction time of system 2 is also significantly higher than that of the proposed system. This shows that the proposed system, under the fusion of multiple big data sources, effectively addresses the slow running speed and long processing time of existing virtual reconstruction systems for building space structure. The reason is that the system constructs an information analysis window for the distances between the scanning center and obstacles and calculates the differences between adjacent data, thereby effectively filtering out impulse interference noise and mixed pixel interference and shortening the running time.

In order to verify reconstruction effect of virtual reconstruction system of building space structure based on laser 3D scanning under fusion of multiple big data, rendering effect is used as an index to compare different systems. Results are shown in Fig. 7.

As shown in Fig. 7, the chromaticity distribution of the image color blocks obtained by system 1 is inhomogeneous, and the image is unevenly bright and dark. The chromaticity distribution of the system 2 image is likewise uneven, which indicates that the reconstructed images obtained by the two systems are poor. The system designed in this paper has excellent color uniformity and a better rendering effect, which shows that it has outstanding applicability. This is because the designed system preprocesses the images acquired by laser three-dimensional scanning to reduce the influence of noise such as thermal noise and undercurrent noise. It also reduces the impact of differences in light intensity and shooting angle on the image, thereby enhancing the reconstruction effect. The existing systems, by contrast, omit the image preprocessing step, which results in a poor reconstruction effect and demonstrates the superiority of the designed system.

5 Conclusion

To address the slow running speed, long processing time, and poor spatial structure rendering of existing virtual reconstruction systems for building space structure, a virtual reconstruction system based on laser 3D scanning technology under the fusion of multiple big data sources is designed. Three-dimensional laser scanning is a new approach to the virtual reconstruction of building space structure that greatly reduces the difficulty of reconstruction work. The main points of this article are as follows:

(1) In the hardware design, the image preprocessing module reduces noise interference and shortens reconstruction time.

(2) In the software design, the single-sheet mapping function and the 3D model reconstruction function are realized, and the output of reconstruction results is optimized.

(3) Experimental results show that the proposed system effectively addresses the long running time of traditional systems. Its shortest reconstruction time is only 1.0 s, its longest is only 6.5 s, and its rendering effect is better.

However, this reconstruction method still has problems such as a large volume of original data and data redundancy. In the near future, 3D laser technology is expected to play a greater role in building spatial structure reconstruction.

References

Hepp B, Niessner M, Hilliges O (2019) Plan3D: Viewpoint and Trajectory Optimization for Aerial Multi-View Stereo Reconstruction. ACM Transactions on Graphics 38(1):4.1–4.17

Tachella J, Altmann Y, Ren X, McCarthy A (2018) Bayesian 3D Reconstruction of Complex Scenes from Single-Photon Lidar Data. Siam Journal on Imaging Sciences 12(1):521–550

Vala J, Jarosova P (2018) Optimization Approaches to Some Problems of Building Design. Applications of Mathematics 63(3):305–331

Pan ML (2019) 3D Modeling and Simulation Technology of Single Structure Scene Image. Computer Simulation 36(2):166–170

Jia X (2020) Design of computer data acquisition and processing system based on GPS. Modern Electronics Technique 43(21):147–150+155

Nili VA, Mansouri E, Kavehvash Z, Fakharzadeh M, Shabany M, Khavasi A (2018) Low-cost three-dimensional millimeter-wave holographic imaging system based on a frequency-scanning antenna. Applied Optics 57(1):65

Xia ZP, Hu FY, Cheng C, Gu MM (2019) Virtual reality space reconstruction based on visual space orientation theory. Chinese Journal of Liquid Crystals and Displays 34(02):100–104

Overbeck RS, Erickson D, Evangelakos D, Pharr M (2018) A System for Acquiring, Processing, and Rendering Panoramic Light Field Stills for Virtual Reality. ACM Transactions on Graphics 37(6):1–15

Wang M, Wang CY, Du HH (2019) Virtual reconstruction system based on laser three-dimensional scanning building space structure. Laser Journal 266(11):174–177

Sun Y, Song H, Jara AJ, Bie R (2017) Internet of Things and Big Data Analytics for Smart and Connected Communities. IEEE Access 4(1):766–773

Qi L, Hu C, Zhang X, Khosravi MR, Wang T (2020) Privacy-Aware Data Fusion and Prediction With Spatial-Temporal Context for Smart City Industrial Environment. IEEE Transactions on Industrial Informatics 17(6):1

Mokarram M, Khosravi MR (2020) A cloud computing framework for analysis of agricultural big data based on Dempster–Shafer theory. The Journal of Supercomputing 24(6):2545–2565

Kiyono T, Asawa T, Oshio H (2021) Laser-Scanning-Based Method for Estimating the Distribution of the Convective-Heat-Transfer Coefficient on Full-Scale Building Walls. Boundary-Layer Meteorology 178(3):1–24

Thi T, Camillo R, Norbert P (2018) Integrated Change Detection and Classification in Urban Areas Based on Airborne Laser Scanning Point Clouds. Sensors 18(2):448

Shuai L, Shuai W, Xinyu L et al (2021) Human Memory Update Strategy: A Multi-Layer Template Update Mechanism for Remote Visual Monitoring. IEEE Transactions on Multimedia, online first. https://doi.org/10.1109/TMM.2021.3065580

Fan R, Ai X, Dahnoun N (2018) Road Surface 3D Reconstruction Based on Dense Subpixel Disparity Map Estimation. IEEE Transactions on Image Processing 27(6):3025–3035

Liu S, Wang S, Liu X et al (2021) Fuzzy Detection aided Real-time and Robust Visual Tracking under Complex Environments. IEEE Transactions on Fuzzy Systems 29(1):90–102

El Hazzat S, Merras M, El Akkad N, Saaidi A, Satori K (2018) 3D reconstruction system based on incremental structure from motion using a camera with varying parameters. The Visual Computer 34(10):1443–1460

Shuai L, Chunli G, Fadi A et al (2020) Reliability of response region: A novel mechanism in visual tracking by edge computing for IIoT environments. Mechanical Systems and Signal Processing 138:106537

Quenzel J, Nieuwenhuisen M, Droeschel D, Beul M, Houben S, Behnke S (2019) Autonomous MAV-based Indoor Chimney Inspection with 3D Laser Localization and Textured Surface Reconstruction. Journal of Intelligent & Robotic Systems 93(5):317–335

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liang, ., Woźniak, M. Virtual Reconstruction System of Building Spatial Structure Based on Laser 3D Scanning under Multivariate Big Data Fusion. Mobile Netw Appl 27, 607–616 (2022). https://doi.org/10.1007/s11036-021-01825-2

DOI: https://doi.org/10.1007/s11036-021-01825-2