Abstract

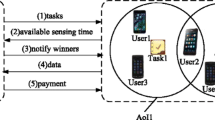

In this paper, we tackle the problem of stimulating users to join mobile crowdsourcing applications with personal devices such as smartphones and tablets. Wireless personal networks make it possible to exploit communication opportunities and to utilize the diverse spare resources of personal devices. However, it is challenging to motivate enough users to contribute the resources of their personal devices to achieve good quality of service. To address this problem, we propose an incentive framework based on a Stackelberg game to model the interaction between the server and the users. Traditional incentive mechanisms are designed for either a single task or multiple independent tasks, and thus fail to consider the interrelation among tasks. In this paper, we focus on the common, realistic scenario of multiple collaborative tasks, where each task requires a group of users to perform it collaboratively. Specifically, participants take task priority into account, and the server designs suitable reward functions to allocate the total payment. Depending on the available information about users' costs and the types of tasks, we present four incentive mechanisms for the different cases of this problem and prove that each has a Nash equilibrium solution that maximizes the utility of the server. Moreover, we propose online incentive mechanisms for real-time tasks. Through rigorous theoretical analysis and extensive simulations, we demonstrate that the proposed mechanisms achieve good performance and high computational efficiency in real-world applications.


References

IDC worldwide smartphone growth forecast to slow from a boil to a simmer as prices drop and markets mature. http://www.idc.com/getdoc.jsp?containerId=prUS25282214

Response times: The 3 important limits. http://www.nngroup.com/articles/response-times-3-important-limits/

Amintoosi H, Kanhere SS (2014) A reputation framework for social participatory sensing systems. Mobile Networks and Applications 19(1):88–100

Chai R, Wang X, Chen Q, Svensson T (2013) Utility-based bandwidth allocation algorithm for heterogeneous wireless networks. Science China Information Sciences 56(2):1–13

Cormen TH (2009) Introduction to algorithms. MIT Press

Duan L, Kubo T, Sugiyama K, Huang J, Hasegawa T, Walrand J (2012) Incentive mechanisms for smartphone collaboration in data acquisition and distributed computing. INFOCOM, Proceedings IEEE, pp. 1701–1709

Elkind E, Sahai A, Steiglitz K (2004) Frugality in path auctions. Proceedings of the fifteenth annual ACM-SIAM symposium on Discrete algorithms, pp. 701–709. Society for Industrial and Applied Mathematics

Feng Z, Zhu Y, Zhang Q, Ni ML, Vasilakos AV (2014) TRAC: Truthful auction for location-aware collaborative sensing in mobile crowdsourcing. INFOCOM, Proceedings IEEE

Jaimes LG, Vergara-Laurens I, Labrador MA (2012) A location-based incentive mechanism for participatory sensing systems with budget constraints. Pervasive Computing and Communications, IEEE International Conference on, pp. 103–108

Karlin AR, Kempe D (2005) Beyond VCG: Frugality of truthful mechanisms. Foundations of Computer Science. 46th Annual IEEE Symposium on, pp. 615–624

Ledlie J, Odero B, Minkov E, Kiss I, Polifroni J (2010) Crowd translator: on building localized speech recognizers through micropayments. ACM SIGOPS Operating Systems Review 43(4):84–89

Liu X, Ota K, Liu A, Chen Z (2015) An incentive game based evolutionary model for crowd sensing networks. Peer-to-Peer Networking and Applications, pp. 1–20

Luo T, Tan HP, Xia L (2014) Profit-maximizing incentive for participatory sensing. INFOCOM, Proceedings IEEE

Matyas S, Matyas C, Schlieder C, Kiefer P, Mitarai H, Kamata M (2008) Designing location-based mobile games with a purpose: collecting geospatial data with cityexplorer. Proceedings of the international conference on advances in computer entertainment technology, ACM, pp. 244–247

Nisan N, Roughgarden T, Tardos E, Vazirani VV (2007) Algorithmic game theory, vol. 1. Cambridge University Press Cambridge

Shi C, Lakafosis V, Ammar MH, Zegura EW (2012) Serendipity: Enabling remote computing among intermittently connected mobile devices. In: Proceedings of the thirteenth ACM international symposium on Mobile Ad Hoc Networking and Computing, pp. 145–154

Singla A, Krause A (2013) Truthful incentives in crowdsourcing tasks using regret minimization mechanisms. In: Proceedings of the 22nd international conference on World Wide Web, pp. 1167–1178

Yang D, Xue G, Fang X, Tang J (2012) Crowdsourcing to smartphones: incentive mechanism design for mobile phone sensing. In: Proceedings of the 18th annual international conference on Mobile computing and networking, pp. 173–184

Zhang G, Liu P, Ding E (2013) Pareto optimal time-frequency resource allocation for selfish wireless cooperative multicast networks. Science China Information Sciences 56(12):1–8

Zhang X, Yang Z, Wu C, Sun W, Liu Y, Liu K (2014) Robust trajectory estimation for crowdsourcing-based mobile applications. IEEE Trans Parallel Distrib Syst 25(7):1876–1885

Zhang X, Yang Z, Zhou Z, Cai H, Chen L, Li X (2014) Free market of crowdsourcing: Incentive mechanism design for mobile sensing

Zhang Y, van der Schaar M (2012) Reputation-based incentive protocols in crowdsourcing applications. INFOCOM, Proceedings IEEE, pp. 2140–2148

Zhao D, Li XY, Ma H (2014) How to crowdsource tasks truthfully without sacrificing utility: Online incentive mechanisms with budget constraint. INFOCOM, Proceedings IEEE, pp. 1213–1221


Ethics declarations

Funding

The China Postdoctoral Science Foundation under Grant No. 2015M570059.

Appendices

Appendix A: The proof of Theorem 1

Since \(R_{i} = R_{j}\) for any tasks i and j in this homogeneous case, \(R_{j} = \frac{R}{k}\) for both reward functions mentioned above. In Stage II, given the reward R and the number of completed tasks k, the utility of participant i is

where \(1 \le k \le M\). For each user, the individual rationality (IR) property requires \(u_{i} \ge 0\). Hence, \(\frac{R}{km_{0}} \ge c_{i}\). To obtain the best response strategy of user i, we compute the first-order derivative of \(u_{i}\) with respect to \(t_{i}\):

Since \(\frac{R}{km_{0}} \ge c_{i}\), we have \(\frac{du_{i}}{dt_{i}} \ge 0\). Hence, \(u_{i}\) is nondecreasing in \(t_{i}\) and reaches its maximum when \(t_{i} = k = M\); i.e., the best response strategy of user i is

The NE cost threshold is the solution of \(BR_{i} = 0\). Hence, we obtain \(c^{*} = \frac{R}{Mm_{0}}\).

Next, we analyze Stage I to obtain the maximum utility of the server. By the profitability property of the server, \(Mm_{0}c^{*} \le R \le Mv_{0}\), i.e., \(c^{*} \le \frac{v_{0}}{m_{0}}\). Let \(c_{0}\) be the \(m_{0}\)-th smallest unit cost among all N users. By the IR property of users, \(R \ge Mm_{0}c_{0}\). Since the server's utility decreases as R grows, it is maximized at the smallest feasible reward: \(\max\{u_{s}\} = M(v_{0} - m_{0}c_{0})\) when \(R^{*} = Mm_{0}c_{0}\).

When \(c_{0} > \frac{v_{0}}{m_{0}}\), the server cannot motivate \(m_{0}\) users to perform each task within the profitability bound \(R \le Mv_{0}\); i.e., it fails to find \(x_{j} \ge m_{0}\) users satisfying the IR property. When \(c_{0} \le \frac{v_{0}}{m_{0}}\), the server can recruit enough users to complete all tasks.
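The Stage I result reduces to a few lines of arithmetic. The sketch below is a minimal illustration, assuming the homogeneous setting of Theorem 1 (M identical tasks, each needing \(m_{0}\) users and worth \(v_{0}\) to the server) and a plain list of the N users' unit costs. The function name `homogeneous_max_utility` is hypothetical, and `heapq.nsmallest` stands in for the worst-case linear selection discussed in Lemma 1.

```python
import heapq
from typing import Optional, Sequence, Tuple


def homogeneous_max_utility(
    costs: Sequence[float], M: int, m0: int, v0: float
) -> Optional[Tuple[float, float, float]]:
    """Return (c0, R_star, max_u_s) for the homogeneous case, or None.

    Hypothetical helper following Appendix A: c0 is the m0-th smallest unit
    cost, R* = M * m0 * c0, and the server's maximum utility is
    M * (v0 - m0 * c0). None means the server cannot recruit m0 users per task.
    """
    if m0 < 1 or len(costs) < m0:
        return None  # fewer than m0 users in total
    # m0-th smallest unit cost (heap-based, O(N log m0)).
    c0 = heapq.nsmallest(m0, costs)[-1]
    if c0 > v0 / m0:
        return None  # paying m0 users would exceed a task's value v0
    r_star = M * m0 * c0
    return c0, r_star, M * (v0 - m0 * c0)
```

For unit costs [3.0, 1.0, 4.0, 2.0, 5.0] with M = 2, \(m_{0}\) = 3 and \(v_{0}\) = 12.0, the sketch returns \(c_{0} = 3.0\), \(R^{*} = 18.0\) and \(\max\{u_{s}\} = 6.0\); the Stage II threshold \(c^{*} = R^{*}/(Mm_{0})\) then equals \(c_{0}\). With \(v_{0}\) = 6.0 it returns None, since \(c_{0} > v_{0}/m_{0}\).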

Appendix B: The proof of Lemma 1

By Section 9.3 of the textbook [5], the i-th smallest element of an array of n > 1 elements can be determined in O(n) time in the worst case. Thus, Line 2 can be implemented to run in O(N) time. Line 3 also takes O(N) time, and Line 4 takes O(1) time. Overall, the total running time of the max-utility algorithm in this case is O(N).
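The worst-case linear-time selection cited from [5] is the median-of-medians algorithm. The following is a compact sketch of that technique, not the authors' implementation; the name `select` is illustrative, and the three-way partition is a choice made here so that repeated unit costs are handled correctly.

```python
from typing import List, Sequence


def select(values: Sequence[float], i: int) -> float:
    """Return the i-th smallest element (1-indexed) in worst-case O(n) time.

    Median-of-medians selection in the style of Section 9.3 of [5]. For
    clarity this sketch builds new lists instead of partitioning in place.
    """
    if not 1 <= i <= len(values):
        raise ValueError("i must satisfy 1 <= i <= len(values)")
    items: List[float] = list(values)
    while True:
        if len(items) <= 5:
            return sorted(items)[i - 1]
        # Split into groups of at most 5 and take each group's (lower) median.
        groups = [sorted(items[k:k + 5]) for k in range(0, len(items), 5)]
        medians = [g[(len(g) - 1) // 2] for g in groups]
        # The median of the medians is a pivot that discards a constant fraction.
        pivot = select(medians, (len(medians) + 1) // 2)
        # Three-way partition so that repeated costs are handled correctly.
        lows = [x for x in items if x < pivot]
        highs = [x for x in items if x > pivot]
        n_equal = len(items) - len(lows) - len(highs)
        if i <= len(lows):
            items = lows
        elif i <= len(lows) + n_equal:
            return pivot
        else:
            i -= len(lows) + n_equal
            items = highs
```

In the setting of Lemma 1, `select(costs, m0)` would yield \(c_{0}\), the \(m_{0}\)-th smallest unit cost.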

Appendix C: The proof of Theorem 2

From \(R^{*} = c_{i}^{\max} \cdot \sum\limits_{j\in T_{c}} m_{j}\), we obtain \(c_{i} \le R^{*}/\sum\limits_{j\in T_{c}} m_{j}\) for every \(i \in U_{s}\). Next, we prove that \(U_{s}\) remains unchanged as R increases from \(R_{L}\) to \(R^{*}\). That is, there is no different user set \(U_{s}^{\prime}\) that can complete the tasks in \(T_{c}\) with \(c_{i} \le R^{\prime}/\sum\limits_{j\in T_{c}} m_{j}\) for all \(i \in U_{s}^{\prime}\), where \(R_{L} < R^{\prime} < R^{*}\).

Proof by contradiction. Assume that there exists \(U_{s}^{\prime} \ne U_{s}\) with \(c_{i} \le R^{\prime}/\sum\limits_{j\in T_{c}} m_{j}\) for all \(i \in U_{s}^{\prime}\) such that the utility of the server is maximized. Since the server pays the smallest reward that keeps every user in \(U_{s}^{\prime}\) individually rational, we obtain \(\max\{c_{i}^{\prime}\} = R^{\prime}/\sum\limits_{j\in T_{c}} m_{j}\). Likewise, from \(c_{i} \le R^{*}/\sum\limits_{j\in T_{c}} m_{j}\), we obtain \(\max\{c_{i}\} = R^{*}/\sum\limits_{j\in T_{c}} m_{j}\). Since \(R^{\prime} < R^{*}\), \(\max\{c_{i}^{\prime}\} < \max\{c_{i}\}\). However, because \(U_{s}\) is the set of users with the minimum costs, \(\max\{c_{i}^{\prime}\} \ge \max\{c_{i}\}\). This is a contradiction, which proves the theorem.
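The quantities in Theorem 2 can be illustrated with a small sketch. It assumes, hypothetically, that each completed task j in \(T_{c}\) has its own pool of candidate users with known unit costs and needs \(m_{j}\) of them, that each task keeps its \(m_{j}\) cheapest candidates (all \(m_{j} \ge 1\)), and that a candidate is willing to participate at reward R when \(c_{i} \le R/\sum_{j\in T_{c}} m_{j}\). The function names are illustrative, and sorting each pool stands in for the linear-time selection used in Lemma 2.

```python
from typing import Dict, List, Tuple


def optimal_reward(
    pools: Dict[str, List[float]], demand: Dict[str, int]
) -> Tuple[float, Dict[str, List[float]]]:
    """Compute R* = c_max * sum(m_j) and the cheapest-cost user set U_s.

    Hypothetical sketch: pools[j] holds the unit costs of users able to perform
    task j, and demand[j] = m_j >= 1. Each task keeps its m_j cheapest candidates.
    """
    selected = {j: sorted(pools[j])[: demand[j]] for j in demand}
    if any(len(selected[j]) < demand[j] for j in demand):
        raise ValueError("some task has fewer than m_j candidates")
    c_max = max(c for users in selected.values() for c in users)
    return c_max * sum(demand.values()), selected


def all_tasks_staffed(
    pools: Dict[str, List[float]], demand: Dict[str, int], reward: float
) -> bool:
    """True if every task j has m_j candidates with c_i <= reward / sum(m_j)."""
    total = sum(demand.values())
    # Compare c * total <= reward to avoid rounding from dividing the reward.
    return all(
        sum(1 for c in pools[j] if c * total <= reward) >= demand[j]
        for j in demand
    )
```

With pools `{"a": [2.0, 1.0, 4.0], "b": [3.0, 1.5, 6.0, 2.5]}` and demands `{"a": 2, "b": 3}`, the sketch gives \(c^{\max} = 3.0\) and \(R^{*} = 15.0\). At any smaller reward, task b attracts only two willing users, mirroring the contradiction in the proof.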

Appendix D: The proof of Lemma 2

From the analysis of Lemma 1, Line 3 can be implemented to run in O(N) time. Hence, the for-loop (Lines 2-7) takes O(MN) time. Similarly, the following for-loop (Lines 9-15) also takes O(MN) time. Next, computing \(R^{*}\) takes O(N) time to find the maximum value, regardless of whether the reward function is the NU function or the VT function. Finally, computing the NE of the server's utility involves no loop and takes O(1) time. Overall, the total running time of the max-utility algorithm in this case is O(MN).

Appendix E: The proof of Theorem 3

Definition 3 (Best response dynamics)

Suppose user i holds a belief about the other users' strategies \(s_{-i} \in S_{-i}\). Then user i's strategy \(s^{*}_{i} \in S_{i}\) is a best response if, for every \(s^{\prime}_{i} \in S_{i}\), \(u_{i}\left(s^{*}_{i}, s_{-i}\right) \geq u_{i}\left(s^{\prime}_{i}, s_{-i}\right)\).

Each user repeatedly updates its strategy to its best response to the current strategies of the other users. This process is referred to as best-response dynamics. Its aim is to reach a stable solution in which every user's strategy is a best response, i.e., maximizes its own utility given the others' strategies. Such a solution, from which no user has an incentive to unilaterally deviate, is referred to as a Nash equilibrium (NE).

Proposition 1

Best-response dynamics always leads to a set of strategies that forms an NE solution.

To study the best response of user i, we compute the derivatives of \(u_{i}\) with respect to \(t_{i}\) as

From Eq. 30, the second-order derivative of \(u_{i}\) is negative, so \(u_{i}\) is strictly concave in \(t_{i}\). Hence, given any \(R > 0\), user i's utility has a unique maximum, if one exists, when \(x_{j} \ge m_{j}\).
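Definition 3 and Proposition 1 can be made concrete with a small simulation. Because Eq. 30 is not reproduced in this section, the sketch assumes, purely for illustration, the proportional-share utility \(u_{i} = R\,t_{i}/\sum_{k} t_{k} - c_{i} t_{i}\) used by Yang et al. for mobile phone sensing; it is not this paper's utility. Its second derivative, \(-2RT_{-i}/(t_{i} + T_{-i})^{3}\) with \(T_{-i} = \sum_{k \ne i} t_{k}\), is negative whenever others participate, matching the concavity argument above. Users update one at a time; the function names, starting point, and round limit are hypothetical choices for the sketch.

```python
import math
from typing import List


def best_response(R: float, cost: float, others: float) -> float:
    """Maximizer of u = R*t/(t + others) - cost*t over t >= 0 (needs others > 0).

    Setting du/dt = R*others/(t + others)**2 - cost = 0 gives
    t = sqrt(R*others/cost) - others; u is concave, so clip at 0.
    """
    return max(0.0, math.sqrt(R * others / cost) - others)


def best_response_dynamics(
    R: float, costs: List[float], tol: float = 1e-12, max_rounds: int = 10_000
) -> List[float]:
    """Let users update one at a time to their best response until nothing moves."""
    t = [1.0] * len(costs)  # arbitrary positive starting strategies
    for _ in range(max_rounds):
        largest_move = 0.0
        for i, c in enumerate(costs):
            others = sum(t) - t[i]
            if others <= 0.0:
                continue  # best response undefined when nobody else participates
            new_ti = best_response(R, c, others)
            largest_move = max(largest_move, abs(new_ti - t[i]))
            t[i] = new_ti
        if largest_move < tol:
            return t  # no user wants to deviate: an (approximate) NE
    raise RuntimeError("best-response dynamics did not converge")
```

For R = 10 and costs [1, 2, 4], the dynamics settle at t = (20/9, 10/9, 0), roughly (2.222, 1.111, 0): the most expensive user drops out, and neither remaining user can gain by deviating.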

Appendix F: The proof of Lemma 3

Based on Eq. 13, computing the probability that one task is completed takes O(N−M) time. Hence, computing \(E(u_{i})\) (Line 3) takes O(M(N−M)) time based on Eq. 15. To obtain the NE cost threshold, we need to find the maximum \(E(u_{i})\) over a series of cost thresholds. The inner for-loop (Lines 2-5) achieves this goal and takes O(|c|·M(N−M)) time, where |c| is the number of iterations of this loop. Based on Eq. 16, computing \(E(u_{s})\) (Line 6) takes O(M(N−M)) time. The outer for-loop (Lines 1-7) obtains the maximum \(E(u_{s})\) over a series of values of R and, since it contains the inner loop, takes O(|R|·|c|·M(N−M)) time, where |R| denotes the number of iterations of this loop. Finally, computing the NE of the expected server utility with \(c^{*}\) and \(R^{*}\) takes O(1) time. Overall, since M(N−M) ≤ MN, the total running time of the max-utility algorithm in this case is O(MN|c||R|). The time complexity of Algorithm 3 therefore depends on the granularity of the search over \(c^{*}\) and \(R^{*}\).
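The loop structure analyzed above (an outer search over R wrapping an inner search over the cost threshold) is the familiar backward-induction pattern for a two-stage Stackelberg game. The sketch below is a generic version under stated assumptions: the two expected-utility callables stand in for Eqs. 15 and 16, which are not reproduced in this section, and all names are hypothetical. It makes visible why the running time scales with |R|·|c| times the cost of one utility evaluation.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical signature: expected utility as a function of (cost threshold c, reward R).
Utility = Callable[[float, float], float]


def grid_stackelberg(
    r_grid: Sequence[float],
    c_grid: Sequence[float],
    expected_user_utility: Utility,
    expected_server_utility: Utility,
) -> Tuple[float, float, float]:
    """Backward induction over discretized R (outer loop) and c (inner loop).

    For each candidate reward R, the inner search picks the cost threshold c
    that maximizes the users' expected utility (Stage II); the outer loop keeps
    the R whose induced threshold maximizes the server's expected utility
    (Stage I). Returns (R_star, c_star, expected server utility).
    """
    best: Optional[Tuple[float, float, float]] = None
    for R in r_grid:
        # Stage II: users' threshold response to this reward (first maximizer wins ties).
        c_star = max(c_grid, key=lambda c: expected_user_utility(c, R))
        # Stage I: evaluate the server's expected utility at that response.
        u_s = expected_server_utility(c_star, R)
        if best is None or u_s > best[2]:
            best = (R, c_star, u_s)
    if best is None:
        raise ValueError("r_grid must be non-empty")
    return best
```

Finer grids approximate \(c^{*}\) and \(R^{*}\) more closely at a proportional cost in running time, which is the granularity trade-off noted for Algorithm 3.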

Appendix G: The proof of Lemma 4

Based on Eq. 18 or Eq. 21, computing the probability that task j is completed takes O(N−M) time. The for-loop (Lines 4-6) takes O((N−M)|c|) time, and finding \(c(j)^{*}\) in Line 7 takes O(|c|) time. Hence, the for-loop of Lines 3-8 runs in O(ηM(N−M)|c|) time. Lines 9-11 obtain the selected users \(Q_{j}\) and the completed tasks \(T_{c}\), which takes O(M·δN) time. Line 12 sums \(E(u_{i})_{j}\) over \(j \in T_{i}\) and hence takes O(ηN) time. Therefore, the for-loop of Lines 2-13, which obtains the NE cost threshold, takes O((N−M)|c|δM·ηN) time. The outermost for-loop (Lines 1-15), which computes the expected utility of the server, takes O(|R|(N−M)|c|δM·ηN) time. Overall, the time complexity of Algorithm 4 is O(|R|(N−M)|c|δM·ηN).


Cite this article

Luo, S., Sun, Y., Ji, Y. et al. Stackelberg Game Based Incentive Mechanisms for Multiple Collaborative Tasks in Mobile Crowdsourcing. Mobile Netw Appl 21, 506–522 (2016). https://doi.org/10.1007/s11036-015-0659-3
