Abstract

To learn about real world phenomena, scientists have traditionally used models with clearly interpretable elements. However, modern machine learning (ML) models, while powerful predictors, lack this direct elementwise interpretability (e.g. neural network weights). Interpretable machine learning (IML) offers a solution by analyzing models holistically to derive interpretations. Yet, current IML research is focused on auditing ML models rather than leveraging them for scientific inference. Our work bridges this gap, presenting a framework for designing IML methods—termed ’property descriptors’—that illuminate not just the model, but also the phenomenon it represents. We demonstrate that property descriptors, grounded in statistical learning theory, can effectively reveal relevant properties of the joint probability distribution of the observational data. We identify existing IML methods suited for scientific inference and provide a guide for developing new descriptors with quantified epistemic uncertainty. Our framework empowers scientists to harness ML models for inference, and provides directions for future IML research to support scientific understanding.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Scientists increasingly use machine learning (ML) in their daily work. This development is not limited to natural sciences like material science (Schmidt et al., 2019) or the geosciences (Reichstein et al., 2019), but extends to social sciences such as educational science (Luan & Tsai, 2021) and archaeology (Bickler, 2021).

When building predictive models for problems with complex data structures, ML outcompetes classical statistical models in both performance and convenience. Impressive recent examples of successful prediction models in science include the automated particle tracking at CERN (Farrell et al., 2018), or DeepMind’s AlphaFold, which has made substantial progress in predicting protein structures from amino acid sequences (Senior et al., 2020). In such examples, some see a paradigm shift towards theory-free science that “lets the data speak” (Anderson, 2008; Kitchin, 2014; Mayer-Schönberger & Cukier, 2013; Spinney, 2022). However, purely prediction driven research has its limits: In a survey with more than 1,600 scientists, almost 70% expressed the fear that the use of ML in science could lead to a reliance on pattern recognition without understanding (Van Noorden & Perkel, 2023). This is in line with the philosophy of science literature, which does recognize the importance of predictions (Douglas, 2009; Luk, 2017), but also emphasizes other goals such as explaining and understanding phenomena (Longino, 2018; Salmon, 1979; Shmueli, 2010; Toulmin, 1961).

The reason why understanding phenomena with ML is difficult is that, unlike traditional scientific models, ML models do not provide a cognitively accessible representation of the underlying causal mechanism (Boge, 2022; Hooker & Hooker, 2017; Molnar & Freiesleben, 2024). The link between the ML model and phenomenon is unclear, leading to the so-called opacity problem (Sullivan, 2022). Interpretable machine learning (IML, also called XAI, for eXplainable artificial intelligence) aims to tackle the opacity problem by analyzing individual model elements or inspecting specific model properties (Molnar, 2020). However, it often remains unclear how IML can help to address problems in science (Roscher et al., 2020) as IML methods are often designed with other purposes and stakeholders in mind, such as guiding engineers in model construction or offering decision support for end users (Zednik, 2021).

In spite of these challenges, scientists increasingly use IML for inferring which features are most predictive of crop yield (Shahhosseini et al., 2020; Zhang et al., 2019), personality traits (Stachl et al., 2020), or seasonal precipitation (Gibson et al., 2021), among others. Although researchers are aware that their IML analyses remain just model descriptions, it is often implied that the explanations, associations, or effects they uncover extend to the corresponding real-world properties. Unfortunately, drawing inferences with current IML raises epistemological issues (Molnar et al., 2022): it is often unclear what target quantity IML methods estimate (Lundberg et al., 2021), is it properties of the model or of the phenomenon (Chen et al., 2020; Hooker et al., 2021)? Moreover, theories to quantify the uncertainty of interpretations are underdeveloped (Molnar et al., 2020; Watson, 2022).

1.1 Contributions

In this paper, we present an account of scientific inference with IML inspired by ideas from philosophy of science and statistical inference. We focus on supervised learning on identically and independently distributed (i.i.d.) data relating predictors \(\varvec{X}\) and a target variable \(Y\). Our key contributions are:

-

We argue that ML cannot profit from the traditional approach to scientific inference via model elements because individual ML model parameters do not represent meaningful phenomenon properties. We observe that current IML methods generally do not offer an alternative route: while some do interpret the model as a whole beyond its elements, they are designed to support model audit and not scientific inference.

-

We identify the criteria that IML methods need to fulfill so that they provide access to the properties of the conditional probability distribution \(\mathbb {P}(Y\,|\,\varvec{X})\). We provide a constructive approach to derive new IML methods for scientific inference (we call them property descriptors), and identify for which estimands existing IML methods can be appropriated as property descriptors. We discuss how property descriptions can be estimated with finite data and quantify the resulting epistemic uncertainty.

1.2 Roadmap

This paper is addressed to an interdisciplinary audience, where various communities may find some sections of special interest:

-

Philosophers of science may start with our discussion in Sect. 3 on traditional scientific modeling and why its notion of representation is not longer applicable to ML models (see Sects. 4 and 5). Additionally, Sect. 11 offers insights on what causal understanding can be derived from ML model interpretations.

-

Researchers in IML may want to skip ahead to Sect. 9, where we offer a concise guide to selecting or developing IML methods apt for scientific inference. In preparation, sections Sects. 6 and 7 motivate our approach and Sect. 8 reviews some necessary mathematical background. We suggest future avenues for IML research in Sect. 12.

-

Finally, scientists will find in Table 2 a list of published IML methods that address a variety of practical inference questions, with Sect. 12 discussing limitations to consider in applications.

1.3 Terminology

For the purposes of our discussion below, a phenomenon is a real-world process whose aspects of interest can be described by random variables; these aspects possess distinct properties. Observations of the phenomenon are assimilated to draws from the unknown joint distribution of the random variables to form a dataset, or just data. Scientific inference describes the process of rationally inducing knowledge about a phenomenon from such observational data (via ML, or other types of models), providing a basis for scientific explanations. In this work, when we talk about ML, we focus exclusively on the supervised learning setting. A supervised ML model is a mathematical function with some free parameters that a learning algorithm optimizes (“trains”) based on existing labeled data in order to accurately predict future or withheld observations, i.e. to generalize beyond the initial data. While these predictions are often called ‘inferences’ in the deep learning literature, we here employ inference in its original sense in statistics: investigating unobserved variables and parameters to draw conclusions from data. In this sense, inference goes beyond prediction; it concerns the data structure and its uncertainties. When we talk about IML methods in this paper, we include all approaches that analyze trained ML models and their behavior. In contrast to Rudin (2019), we do not limit the scope of IML to inherently interpretable models. These brief conceptual remarks are meant to reduce ambiguity in our usage, we lay no claim as to their universality.

2 Related Work

Whether and how ML models, and specifically IML, can help obtain knowledge about the world is a debated topic in philosophy of science, statistics, causal inference, and within the IML community.

Philosophy of Science

According to Bailer-Jones and Bailer-Jones (2002) and Bokulich (2011) ML models are only suitable for prediction, since their parameters are merely instrumental and lack independent meaning. Conversely, Sullivan (2022) contends that nothing prevents us from gaining real-world knowledge with ML models as long as the link uncertainty—the connection between the phenomenon and the model—can be assessed. While Cichy and Kaiser (2019) and Zednik and Boelsen (2022) claim that IML can help in learning about the real world, they remain vague about how model and phenomenon are connected. We agree with Watson (2022) that only IML methods that evaluate the model on realistic data can allow insight into the phenomenon, but clarify that without further assumptions such methods only reveal associations learned by the ML model, not causal relationships in the world (Räz, 2022; Kawamleh, 2021). Our work makes precise that ML models can be described as epistemic representations of a certain phenomenon that allow us to perform valid inferences (Contessa, 2007) via interpretations.

Statistical Modeling and Machine Learning

Breiman (2001b) distinguishes between algorithmic (ML) and data (statistical) modeling. He illustrates, using a medical example, that interpreting high-performance ML models post-hoc can yield more accurate insights about the underlying phenomena compared to standard, inherently interpretable data models. Our paper provides an epistemic foundation for such post-hoc inferences. Shmueli (2010) distinguishes statistics and ML by their goals—prediction (ML) and explanation (statistics). Like Hooker and Mentch (2021), we argue against such a clear distinction and offer steps to integrate the two fields. To do so, we initially clarify what properties of a phenomenon can be addressed by IML in principle. Starting from theoretical estimands, we develop a guide for constructing IML methods for finite data, following the scheme in Lundberg et al. (2021). Finally, based on the approach by Molnar et al. (2023), we show how to obtain uncertainty estimates for IML-based inferences.

Causal Inference Using Machine Learning

ML is now widely used as a tool for estimating causal effects (Dandl, 2023; Knaus, 2022; Künzel et al., 2019). Double machine learning, for example, provides an ML-based unbiased and data-efficient estimator of the (conditional) average causal effect, assuming that all confounding variables are observed (Chernozhukov et al., 2018).Footnote 1 While these works focus on the construction of practical estimators for causal effects, we focus on the question what inferences can be drawn from interpreting individual ML models and how to design property descriptors for such inferences.

The Targeted Learning framework by (Van der Laan et al., 2011; Van der Laan & Rose, 2018) is closely related to our proposal and also to double ML (Díaz, 2020; Hines et al., 2022). Like us, they discourage the use of interpretable but misspecified parametric models for scientific inference. Instead, they suggest to directly estimate relevant properties (they call them parameters) of the joint data distribution that are motivated by causal questions. They estimate relevant aspects of the joint distribution from which the parameters can be derived using the so-called super learner (a weighted ensemble of ML models), and debias their estimator with the targeted maximum likelihood update (Hines et al., 2022; Van Der Laan & Rubin, 2006). Compared to targeted learning, we ask more specifically what inferences we can draw from interpreting individual ML models and how to match such interpretations with parameters of traditional scientific models. Also, we primarily provide a theoretical framework for IML tools and how they must be designed to gain insights into the data, whereas the work of Van der Laan et al. (2011) is more practical, providing unbiased estimators and implementations for a variety of inference tasks (Van Der Laan & Rubin, 2006). We further discuss the relationship between property descriptors and targeted learning in Sect. 12.

Interpretable Machine Learning

IML as a field has been widely criticized for being ill-defined, mixing different goals (e.g. transparency and causality), conflating several notions (e.g. simulatability and decomposability), and lacking proper standards for evaluation (Doshi-Velez & Kim, 2017; Lipton, 2018). Some even argued against the central IML leitmotif of analyzing trained ML models post hoc in order to explain them (Rudin, 2019). In this paper, we show that if we focus solely on interpretations for scientific inference, we can clearly define what estimand post hoc IML methods estimate and how well they do so.

Using IML for scientific inference is not a completely new idea. It has been proposed for IML methods like Shapley values (Chen et al., 2020), global feature importance (Fisher et al., 2019) and partial dependence plots (Molnar et al., 2023). Our framework generalizes these method-specific proposals to arbitrary IML methods and provides guidance on how to construct IML methods that enable inference.

3 The Traditional Approach to Scientific Inference Requires Model Elements That Meaningfully Represent

Models can be found everywhere in science, but what are they really? Like Bailer-Jones, we see a scientific model as an “interpretative description of a phenomenon that facilitates perceptual as well as intellectual access to that phenomenon” (Bailer-Jones 2003, p. 61). The way models provide access to phenomena is usually specified by some sort of representation (Frigg & Nguyen, 2021; Frigg & Hartmann, 2020). Indeed, models represent only some aspects of a phenomenon, not all of it—a good model is true to the aspects that are relevant to the model user (Bailer-Jones, 2003; Ritchey, 2012).

In scientific modeling, we noted a paradigm that many models implicitly follow—we call it the paradigm of elementwise representationality.

Definition

A model is elementwise representational (ER) if all model elements (variables, relations, and parameters) represent an element in the phenomenon (components, dependencies, properties).

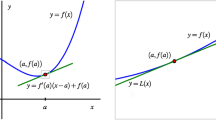

Figure 1 illustrates how models relate to the phenomenon when they are ER (in the example, two-body Newtownian dynamics is used to model the Earth-Moon system) : variables describe phenomenon components; mathematical relations between variables describe structural, causal or associational dependencies between components; parameters specify the mathematical relations and describe properties, like the strength, of the component dependencies. By distinguishing components, dependencies and properties, we closely follow inferentialist accounts of representation by Contessa (2007) and other philosophers like Achinstein (1968), Bailer-Jones (2003), Ducheyne (2012), Hughes (1997), Levy (2012), Stachowiak (1973). The upward arrows in Fig. 1 describe encoding into representations i.e. the translation of a phenomenon observation to a model configuration; The downward arrows describe decoding i.e. the translation of knowledge about the model into knowledge about the phenomenon.

Model and phenomenon sustain an encoding-decoding relationship. The main elements of a traditional, ER model, are shown in encoding-decoding correspondence to the phenomenon elements they represent (Contessa, 2007 Stachowiak, 1973). Phenomenon and model elements are illustrated with a simple example of two bodies in gravitational interaction and its classical, Newtonian mechanistic description. This physical example was chosen to illustrate the ER paradigm, we make no claim that our property descriptors presented later will achieve similar representational power

ER does not mean that each model element represents independently of the rest of the model. Instead, ER is model-relative. When we specify the rest of the model, ER implies that each model element has a fixed meaning in terms of the phenomenon. We also want to emphasize that ER is an extreme case. There are indeed models in science where not every model element represents but some parts of the overall model do. A typical non-representational element used in scientific models is a noise term. Instead of representing a specific component or a property of the phenomenon, the noise can be a placeholder for unexplained aspects of the phenomenon (Edmonds, 2006).

ER is obtained through model construction; ER models are usually “hand-crafted” based on background knowledge and an underlying scientific theory. Variables are selected carefully and sparsely during model construction, and relations are constrained to a relation class with few free parameters. When ER models need to account for additional phenomenon aspects, they are gradually extended so that large parts of the “old” model are preserved in the more expressive “new” model (Schwarz et al., 2009). ER even eases this model extension process because model interventions are intelligible on the level of model elements. Usually, ER is explicitly enforced in modeling: if there is a phenomenon element devoid of meaning, researchers either try to interpret it or exclude it from the model.

ER is so remarkable because it gives models capabilities that go beyond prediction. ER has a crucial role in translating model knowledge into phenomenon knowledge (surrogative reasoning Contessa 2007; Hughes 1997; Swoyer 1991). Scientists can analyze model elements and draw immediate conclusions about the corresponding phenomenon element (Frigg & Nguyen, 2021). However, only those properties of the phenomenon that have a model counterpart can be analyzed with this approach. Fortunately, as described above, ER models can be extended to account for further relevant phenomenon elements identified by the scientist.

Example Associational ER Model: Simple Linear Regression

The mechanistic causal model from Fig. 1 is ideal for illustrating what constitutes an ER model. However, ML models are associational in nature. To better isolate the differences in the ways ER and ML models represent, we now focus on an associational ER model, in this case a simple linear model.

Suppose we want to study which factors influence students’ skills in math, specifically focusing on language mastery (Peng et al., 2020). We adopt grades as quantitative proxies for the respective skills, and find in Cortez and Silva (2008) a suitable dataset reporting Portuguese and math grades on a 0–20 scale, along with 30 other variables such as student age, parents’ education, etc (see Appendix A for details). Here, the students’ characteristics like their math and Portuguese skills are the phenomenon components, genetic and environmental associations are the dependencies, and the strength or direction of these associations are instances of relevant properties.

We start with a linear regression with the Portuguese grade as the only predictor variable, denoted \(X_p\), and the math grade as the target, \(Y=\beta _0+\beta _1 X_p+\epsilon\), with \(\beta _0,\beta _1\in \mathbb {R}\). This linear relation is a reasonable, if crude, assumption for a first analysis in absence of prior insight.Footnote 2 Our model is ER except for the noise term, \(\epsilon \sim {\mathcal {N}}(0,\sigma ^2)\), which accounts for all variability in \(Y\) not due to \(X_p\) and thus is, by design, not ER. We train the model by finding the \({\hat{\beta }}_1,{\hat{\beta }}_0\) that minimize the mean-squared-error (MSE) of predictions on the training set:

The fitted regression coefficient \({\hat{\beta }}_1=0.77\) has a 95% confidence interval (CI) of \([0.63<\beta _1<0.91]\),Footnote 3 and represents the association as the expected increase in math grade for a unit increase in Portuguese grade. The bias \({\hat{\beta }}_0\) is also straightforward to interpret.Footnote 4 Thus, from \({\hat{\beta }}_1\) and its CI, we might conclude with some confidence that language and math skills are positively and strongly associated. This conclusion is contingent on the model being ER, but crucially, also on it capturing well the phenomenon. Although by optimizing the MSE we targeted an appropriate estimand, namely the conditional expectation \(\mathbb {E}_{Y\,|\,X_p}[Y\,|\,X_p]\), we estimated it with a rather crude model. Clearly, if the model is not highly predictive, it is ill-suited for reliable scientific inference. To improve performance, we can make the model more expressive by introducing additional variables, relations, or interaction parameters. As long as we preserve ER, we can draw scientific inferences directly from model elements. These inferences are only as valid as the modeling assumptions (e.g. target normality, homoscedasticity, or linearity).

4 The Elements of ML Models Do Not Meaningfully Represent

ER models suit our image of science as an endeavor aimed at understanding. ER enables us to reason about the phenomenon, and in causal models it even allows us to reason about effects of model or even real-world interventions. However, when constructing ER models, we require background knowledge about which components are relevant, and we usually need to severely restrict the class of relations that can be considered in modeling a given phenomenon. These difficulties might lead scientists to either limit their investigations to simple phenomena or to settle for overly simplistic models for complex phenomena and, as Breiman (2001b) and Van der Laan et al. (2011) cautioned, possibly draw wrong conclusions.

ML models, on the other hand, excel for complex problems with an unbounded number of components that entertain ambiguous and entangled relationships, i.e. ML models are highly expressive (Gühring et al., 2022). Indeed, given enough data, effective prediction with ML requires less subject-domain background knowledge than traditional modeling approaches (Bailer-Jones & Bailer-Jones, 2002). While the definition of a prediction task and the encoding of features are still largely based on domain knowledge, the choice of model class, hyperparameters and learning algorithms is often guided by the data types and aims to promote efficient learning for the respective modality, e.g. by selecting architectures such as CNNs for images, LSTMs for sequences or GNNs for relational data.

The gain in generality and convenience with ML comes at a price—ML models are generally far from being ER. As also argued in Bailer-Jones and Bailer-Jones (2002), Bokulich (2011) and Boge (2022), ML models (e.g. paradigmatically artificial neural networks) contain model elements such as weights that have no obvious phenomenon counterpart.

Example ML Associational Model: Artificial Neural Network (ANN)

Suppose we want to improve on the predictive performance of our simple linear model (Eq. 1), and opt instead for a dense three-layer neural network that uses all available features to predict math grades. To train it, we minimize the MSE on training data, but now use gradient descent with an adaptive learning rate for lack of an analytical solution. The trained model can be described as a function parameterized by \(31\times 31\) weight matrices \({\hat{W}}_1\), \({\hat{W}}_2\), \({\hat{W}}_3\) and \(31\times 1\) bias vectors \(\hat{\varvec{b}}_1,\hat{\varvec{b}}_2,\hat{\varvec{b}}_3\) using component-wise ReLU activations \({\text {ReLU}}(\varvec{x}_j) := \max (\varvec{x}_j,0)\):

While this ANN achieves a test-set MSE of just 8.9 compared to 16.0 for the simple linear model,Footnote 5 it is now highly unclear what aspects of our phenomenon the ANN parameters correspond to. Its weights and biases are very hard or even impossible to interpret: any individual weight might have a positive, neutral, or negative effect on the target, dependent on all other model elements. Similarly, the design of the architecture aims to maximize predictive performance, rather than to reflect any actual or even hypothesized dependencies between components of the phenomenon.

5 But Do ML Model Elements Really Not Represent?

One may believe that we went too fast here and argue that individual elements of ML models do have a natural phenomenon counterpart, one that only surfaces after extensive scrutiny. The underlying intuition is that human representations are near-optimal to perform prediction tasks and will be eventually rediscovered by ML algorithms. We find this unlikely: ER is not enforced in most state-of-the-art models (Leavitt & Morcos, 2020) and, even worse, some widely-employed ANN training techniques, such as dropout, purposefully discourage ER in order to gain robustness (Srivastava et al, 2014). High-capacity ML models like ANNs are indeed designed for distributed representation (Buckner & Garson, 2019; McClelland et al., 1987).

Still, it has been claimed that model elements represent high-level constructs constituted from low-level phenomenon components that are often called concepts (Buckner, 2018; Bau et al., 2017; Olah et al., 2020; Räz, 2023). The idea is that similar to the hierarchical schema we use to understand nature, with lower level components such as atoms combining to form higher level entities such as molecules, cells, and organisms, representations in deep nets evolve through layers from pixels to shapes to objects. If this were the case, model elements or aggregates of such elements could be reconnected to the respective phenomenon entities; ER would be restored by the representations of coarse-grained phenomenon components.

While empirical research on neural networks finds that some model elements are weakly associated with human concepts ( Bau et al., 2017; Bills et al., 2023; Kim et al., 2018; Mu & Andreas, 2020; Olah et al., 2017; Voss et al., 2021), often these elements are neither the only associated elements nor exclusively associated with one concept as shown in Fig. 2 (Bau et al., 2017; Donnelly & Roegiest, 2019; Olah et al., 2020). Moreover, intervening on these model elements generally does not have the expected effect on the prediction—the elements do not share the causal role of the “represented” concepts, even in prediction (Donnelly & Roegiest, 2019; Gale et al., 2020; Zhou et al., 2018). It is therefore questionable in what sense, or even whether, individual elements of ML models represent (Freiesleben, 2023). Moreover, this line of research that tries to identify concepts in model elements predominantly focuses on images, where nested concepts are arguably easy to identify for humans.

ML models are generally not ER. Three input images synthesized to maximally activate a given unit in a neural network (Olah et al., 2020) illustrate how “concepts” as different as cat faces, fronts of cars, or cat legs all elicit strong responses, suggesting neural network elements generally do not represent unique concepts (Mu & Andreas, 2020; Nguyen et al., 2016)

In sum, current ML algorithms do not enforce ER—hence, trained ML models rely on distributed representations and cannot be reduced to logical concept machines. An associative connection between a model element and a phenomenon concept should not be confused with their equivalence. While research on the representational correlates of model elements may indeed seem fascinating, analyzing single model elements appears to be a hopeless enterprise, at least if the goal is to support scientific inference.

6 IML Analyzes the Model as a Whole, but Does It Allow for Scientific Inference?

Let us accept that ML models are indeed not ER. Can we still exploit their predictive power for scientific inference? We think this is indeed possible. Our approach is to regard the model as representational of phenomenon aspects only as a whole—we call this holistic representationality (HR). The idea behind HR is not new; it underlies, for example, modern causal inference (Van der Laan et al., 2011). HR has its roots in what Heckman (2000) calls Marschak’s Maxim – it is possible to directly describe an aspect of the data distribution without first identifying its individual components through a parametric model. ML models represent one paradigmatic case of HR models (Starmans, 2011).

The current research program in IML takes an HR perspective on ML models: Many IML methods analyze the entire trained ML model post-hoc just as an input-output mapping (Scholbeck et al., 2019), sometimes leveraging additional useful properties such as differentiability (Alqaraawi et al., 2020).

Initial definitions of, for example, global feature effects (Friedman et al., 1991), feature importance (Breiman, 2001a), local feature contribution (Štrumbelj & Kononenko, 2014) or model behavior (Ribeiro et al., 2016) have been presented. However, in recent years, many researchers have pointed out that these methods lead to counterintuitive results, and offered alternative definitions (Alqaraawi et al., 2020; Apley & Zhu, 2020; Janzing et al., 2020; Goldstein et al., 2015; König et al., 2021; Molnar et al., 2023; Strobl et al., 2008; Slack et al., 2020).

We believe that these controversies stem from a lack of clarity about the goal of model interpretation. Are we interested in model properties to learn about the model (model audit) or do we want to use them as a gateway to learn about the underlying phenomenon (scientific inference)? These two goals must not be conflated.

The auditor examines model properties e.g. for debugging, to check if the model satisfies legal or ethical norms, or to improve understanding of the model by intervening on it (Raji et al., 2020). Another function that an audit can have is to assess the alignment between model properties and our (causal) background knowledge, such as certain monotonicity constraints (Tan et al., 2017), which is particularly important when ML is used in high-stakes decision making. In all those use-cases, auditors even take interest in model properties that have no corresponding phenomenon counterpart, such as single model elements or the behavior of the model when applied on unrealistic combinations of features. The scientist, on the other hand, wants to learn about model properties only in so far as they can be interpreted in terms of the phenomenon.

This does not imply that model audit is irrelevant for scientists. In fact, a careful model audit that shows what the model does and how reliable it is appears as a prerequisite for trustworthy scientific inference.

7 How to Think of Scientific Inference with HR Models?

We just argued that ML models are generally not ER and therefore do not allow for scientific inference in the traditional way. HR offers a viable alternative, however, the IML research community currently conflates different goals of model interpretation. Our plan in the following sections is to show how a HR perspective enables scientific inference using IML methods. Particularly, we show which IML methods qualify as property descriptors, i.e. quantify properties of the phenomenon, not just the model. Figure 3 describes our conceptual move: instead of matching phenomenon properties with model parameters as in ER models, we match them with external descriptions of the whole model.

In what follows, we will focus on scientific inference with trained ML models, as these constitute a paradigmatic and highly relevant category of HR models, even though our theory of property descriptors is generally applicable to any HR model as long as we know what it holistically represents.

Property descriptions distill phenomenon properties from HR models. Instead of explicitly encoding phenomenon properties as parameters like for ER models, HR models (e.g. ML models) encode phenomenon properties in the whole model. We propose that these encoded properties can be read out with property descriptions external to the model. Property descriptors can take on the inferential role of parameters, for example of coefficients in linear models

In scientific inference, we start from a question concerning a phenomenon and some relevant data about that phenomenon. Even though in simple cases fitting properly constructed ER models can provide interpretable answers, for complex phenomena, a lack of model capacity often results in poor answers. In contrast, while recent ML models are opaque, they have the required representational capacity, and multiple IML methods already exist to probe them in various ways. What is missing, and what we are proposing here, is a way to map the initial question to a relevant IML method so that we can perform scientific inference even with models just aimed at predictive performance.

The key missing ingredient for scientific inference is to link the phenomenon and the model. We propose to draw this link using statistical learning theory, which characterizes what optimal ML models can holistically represent. If the question can be in principle answered with an ideal model, we expect an approximate model to be able to answer it approximately. The problem is now reduced to qualifying what an approximate model is, and quantifying the error induced by the approximation. The same schema applies to using limited data as opposed to infinite data: we are fine with an approximate answer, so long as we can quantify the uncertainty.

8 What Aspects of Phenomena Do ML Models Holistically Represent?

Which aspects of a phenomenon ML models can represent under ideal circumstances depends on the data setting, the learning paradigm, and the loss function. We focus here on supervised learning from identically and independently distributed (i.i.d.) samples. In this widely useful setting, statistical learning theory provides us with optimal predictors (Hastie et al., 2009, p. 18–22) as a rigorous tool to address model representation.

Using the notation \({\varvec{X}{:}{=}(X_1,\ldots ,X_n)}\) for the input variables and \(Y\) for the target variable with ranges \(\mathcal {X}\), and \(\mathcal {Y}\), we now formalize what characterizes the optimal prediction model and how to train such models from labeled data.

Optimal Predictors

An optimal predictor \(m(\varvec{x})\) predicts realizations of the target \(Y\) from realizations of the input \(\varvec{X}\) with minimal expected prediction error i.e. \(m=\underset{{\hat{m}}\in \mathcal {M}}{\mathop {\textrm{arg}\,\textrm{min}}}\,\textrm{EPE}_{Y|\varvec{X}}({\hat{m}})\), with

where L is the loss function \(L(Y,m(\varvec{X})): \mathcal {X}\times \mathcal {Y} \rightarrow \mathbb {R}^+\) and \({\hat{m}}\) a model in the class \(\mathcal {M}\) of mappings from \(\mathcal {X}\) to \(\mathcal {Y}\). Table 1 recapitulates the optimal predictors for some standard loss functions.

Supervised Learning from Finite Data

In supervised learning, we seek to approximate an optimal predictor m by a model \({\hat{m}}\) based on a specific finite dataset \({\mathcal {D}{:}{=}\{ (\varvec{x}^{(1)},y^{(1)}),\dotsc ,(\varvec{x}^{(k)},y^{(k)})\}}\). This ‘training’ is carried out by a learning algorithm \(I:\Delta \rightarrow \mathcal {M}\), which maps the class \(\Delta\) of datasets of i.i.d. drawsFootnote 6, \((\varvec{x}^{(i)},y^{(i)})\sim (\varvec{X},Y\)), to a class of models \(\mathcal {M}\). Instead of the EPE itself, the learning algorithm minimizes the empirical risk on training data and is then evaluated based on the empirical risk on test data (i.e. data not contained in the training data), which constitutes a finite-data estimate of the EPE (Hastie et al., 2009).

9 Scientific Inference with ML in Four Steps

We have just outlined how (optimal) predictive models represent holistically aspects of the conditional distribution of the data, \(\mathbb {P}(Y\,|\,\varvec{X})\), see Table 1. In this section, we finally introduce property descriptors as the tools that allow us to investigate these aspects by describing their relevant properties. Property descriptors exploit the ML model to study general associations in our data, providing insight beyond raw prediction. We provide a four-step plan to construct such descriptors that is illustrated in Fig. 4.

We assume the following scenario: interested in answering a scientific question about a specific phenomenon, a researcher seeks to exploit an informative labeled dataset about the phenomenon, \({\mathcal {D}}\) together with a highly predictive (ML) supervised model trained on it, \({\hat{m}}\).

Our strategy encompasses the following key steps: 1. outlining the solution in an idealized context with an optimal predictor m together with prior probabilistic knowledge K, rather than a real model and real data, 2. demonstrating the feasibility of approximating this ideal under certain assumptions, 3. estimating the solution from finite data and a trained model, and 4. quantifying the uncertainty inherent in these approximations.

Step 1: Formalize Scientific Question As an Estimand

The first step in our approach is to formalize the scientific question, Q, as a statistical estimand (Lundberg et al., 2021). This estimand represents a probabilistic query on \(\varvec{X}\) and \(Y\).

Example: The Link Between Language and Math Skills

An educator is interested in how math skills relate to language skills. She has access to a relevant labeled dataset, consisting of student grades in Portuguese and math, represented by the random variables \(X_p\) and \(Y\) respectively. The question of what is the expected grade in math for any given Portuguese grade can be formalized as an estimand, the conditional expectation, \(Q:=\mathbb {E}_{Y\,|\,X_p}[Y\,|\,X_p]\).

Step 2: Identify the Estimand with a Property Descriptor

Having Q, we now ask if it can be derived from an optimal model m (e.g. one of those in Table 1). Clearly, if Q cannot be derived from m, using the actually available approximate \({\hat{m}}\) will be inviable. Even m alone will often not suffice. Since m represents aspects of \(\mathbb {P}(Y\,|\,\varvec{X})\), we also require probabilistic knowledge K about \(\mathbb {P}(\varvec{X})\) and sometimes even of \(\mathbb {P}(Y)\) to derive relevant inferences from m. Note that K is generally not available but must again be estimated from data. Following the reasoning with the ideal model, we consider whether the problem could be resolved assuming we have additional probabilistic knowledge K.

We deem an estimand identifiable with respect to available knowledge K if it can be derived from the optimal predictor m and K. To establish identifiability means to provide a constructive transformation of m and K into Q, ideally, the one with the most parsimonious requirements on K, since in the real world K is obtained by collecting data or positing assumptions. We call this constructive transformation a property descriptor, and demand that it be a continuous function \(g_K\) w.r.t. metrics \(d_{\mathcal {M}}\) and \(d_{\mathcal {Q}}\):Footnote 7

The output space \(\mathcal {Q}\) remains deliberately unspecified; depending on the particular scientific question, we might want \(\mathcal {Q}\) to be a set of real numbers, vectors, functions, probability distributions, etc.

Example: Property Descriptor for a Multivariable Model

Consider a multivariable model trained to minimize the MSE loss, such as our ANN (Eq. 2), which predicts math grades from all available features. The model approximates the conditional expectation \(m(\varvec{X})=\mathbb {E}_{Y\,|\,\varvec{X}}[Y\,|\,\varvec{X}]\).Footnote 8 This is an optimal predictor, but in contrast to our desired estimand Q, it uses the Portuguese grade \(X_p\) as well as other features, denoted \(\varvec{X}_{-p}\). We can obtain Q from m by marginalizing over \(\varvec{X}_{-p}\), i.e. our Q is identifiable if we have the necessary \(\mathbb {P}(\varvec{X}_{-p}\,|\,X_p):=K\) to compute the expectationFootnote 9

Here \(g_K\) is our property descriptor that takes m and K into Q. It is generally defined for an arbitrary model \({\hat{m}}\in \mathcal {M}\) as

Note that \(g_K({\hat{m}})\) is continuous on \(\mathcal {M}\) and in fact corresponds to the well-known conditional partial dependence plot (cPDP; also known as Apley & Zhu, 2020 M-plot, 2020).

Step 3: Estimate Property Descriptions from a Trained Model and Data

In reality, we rarely have optimal predictors, they are theoretical constructs. Instead, we have trained ML models that approximate these theoretical constructs. We call the application of a property descriptor to our concrete ML model, \(g_K({\hat{m}})\), a property description. Having assumed the continuity of property descriptors above guarantees that we obtain an approximately correct estimate when our ML model is close to the optimal model.

Similarly, we do not have access to an ideal K. Instead, we have to estimate it using data and inductive modeling assumptions (e.g. Rothfuss et al., 2019). Ultimately, our model and property descriptions may be evaluated using not just the training and test dataset \(\mathcal {D}\) (see Sect. 8), but also relevant available unlabeled data or artificially generated data. We call this bundle the evaluation data \(\mathcal {D}^*\supseteq \mathcal {D}\).

A way to estimate property descriptions with access only to the ML model and evaluation data is provided by property description estimators, which we assume to be unbiased estimators of \(g_K\), i.e. the function \({\hat{g}}_{\mathcal {D}^*}:\mathcal {M}\rightarrow \mathcal {Q}\) fulfills

Example: Practical Estimates of Property Descriptions

Our evaluation dataset \(\mathcal {D}^*\) comprises the initial training and test dataset \(\mathcal {D}\) as well as artificial instances created by the following manipulation: we make six full copies of the data, and jitter the Portuguese grade by \(1,-1,2,-2,3\) or \(-3\) respectively in each of them. This augmentation strategy reflects how we understand the Portuguese grade as noisy based on our background knowledge of how much student performance varies daily and teachers grade inconsistently. Let the students with jittered Portuguese grade i be \(\mathcal {D}^*_{|x_p=i}{:}{=}(x\in \mathcal {D}^* \,|\,x_p=i)\), then, we can calculate the property description estimator at i as the following conditional mean (an unbiased estimator of the conditional expectation):

The estimated answer to our initial question is plotted in Figure 5a. Math grades appear to depend on Portuguese grades only in the range \(x_p\in (8\text {--}17)\). However, Figure 5b shows that we have very sparse data in some regions (e.g. very few students scored below \(x_p=8\)), a fact that we must take into account before confirming this first impression.

a Shows the cPDP estimate of \(\mathbb {E}_{Y\,|\,X_p}[Y\,|\,X_p]\) via (4). Note that the grade jittering strategy described in the text allows us to evaluate e.g. \({\hat{m}}(x_p=3)\) even though we have no data for that value. b Shows the histogram of grades in Portuguese in the original dataset (Cortez & Silva, 2008)

Step 4: Quantify the Uncertainties in Property Descriptions

We have shown how we can estimate Q using an approximate ML model paired with a suitable evaluation dataset. But how good is our estimate?

In estimating Q using the ML model \({\hat{m}}\) instead of the optimal model m, we introduce a model error, \(\textrm{ME}[{\hat{m}}]:=d_{\mathcal {Q}}\bigl (g_K(m),g_K({\hat{m}})\bigr )\). Moreover, by using the evaluation data \(\mathcal {D}^*\) instead of knowledge K, we also introduce an estimation error, \(\textrm{EE}[\mathcal {D}^*]:=d_\mathcal {Q}\bigl (g_K({\hat{m}}),{\hat{g}}_{\mathcal {D}^*}({\hat{m}})\bigr )\).

In theory, the model error and the estimation error can be cleanly separated. In practice, however, they are often statistically dependent because the training and the evaluation data overlap. Fortunately, there exist various sample splitting approaches that allow to circumvent or minimize this dependence (James et al., 2023). Generally, neither the model error nor the estimation error can be computed perfectly; this would require access to the optimal model m and infinitely many data instances. Nevertheless, we can quantify them in expectation.

An intuitive approach to quantifying the expected errors is to decompose them into bias and variance contributions. For the bias-variance decomposition, we assume the metric \(d_\mathcal {Q}\) to be the squared error.Footnote 10 Considering the dataset that we entered into the learning algorithm as a random variable \(D\), we can decompose the expected model error as follows

where \({\hat{m}}{:}{=}I(\mathcal {D})\) is the trained model (output of a machine learning algorithm I for dataset \(\mathcal {D}\), Sect. 8). Similarly, considering the evaluation data as a random variable \(D^*\), we can also decompose the expected estimation error as follows

In this case, the bias term vanishes because the property description estimator is by definition unbiased w.r.t. the property descriptor.

There are indeed different approaches to quantify the uncertainties of property descriptions. The standard frequentist approach is to estimate the variance in above equations using refitted models and property descriptions with resampled data (Molnar et al., 2023). But there are also Bayesian approaches where uncertainty is directly baked into the prediction model: BART by Chipman et al. (2012), for example, uses a sum-of-trees to perform Bayesian inference and directly provides uncertainties for inferential quantities like marginal effects. Similarly, Gaussian processes provide predictive error-bars (Rasmussen & Nickisch, 2010), which offer a natural confidence measure for property descriptions (Moosbauer et al., 2021). Finally, Bayesian posteriors can even be obtained for neural network architectures (Gal & Ghahramani, 2016; Van Amersfoort et al., 2020) and leveraged to estimate the uncertainty of property descriptions like counterfactuals (Höltgen et al., 2021; Schut et al., 2021).

Example: Uncertainty in Property Descriptions

We will certainly obtain different cPDPs (Fig. 5a) if we use different models with similar performance, or different subsets of evaluation data, so how much can we then rely on these cPDPs?

The estimates of the variances of the cPDP by Molnar et al. (2023) allow us to calculate pointwise confidence intervals (Fig. 6). We can calculate a confidence interval at significance \(\alpha\) that only incorporates the estimation uncertainty due to finite data as follows:

where \(t_{1-\alpha /2}\) is the critical value of the t-statistic. We can also obtain a confidence interval that incorporates both model and estimation uncertainty:

For this combined confidence interval to be valid, the estimation of the property descriptions must be unbiased. While unbiasedness of the property description estimator and the ML algorithmFootnote 11 is sometimes sufficient to prove the unbiaseness of property descriptors (Molnar et al., 2023), there often is a tension between the bias-variance trade-off made to obtain global model fit versus the best estimate of the more localized property descriptions (Van der Laan et al., 2011). Fortunately, there exist various debiasing strategies using influence functions (Hines et al., 2022) like the targeted maximum likelihood update (Van Der Laan & Rubin, 2006) or orthogonalization (Chernozhukov et al., 2018).

Figure 6 shows that for students with Portuguese grades between 8 and 17, we can be very confident in our model and the relationship it identifies between math and Portuguese grade.Footnote 12 However, for Portuguese grades outside this range, the true value might be far off from our estimated value, as we can see from the width of the confidence intervals. For these grade ranges, gathering more data may reduce our uncertainty.

a Shows the cPDP (dots) and its estimation error due to Monte-Carlo integration (dashed lines, Eq. 5). b Shows the cPDP with both estimation and model error (Eq. 6). Confidence bands cover the true expected math grade in 95% of all cases. These plots jointly suggest that most of the uncertainty is due to model error

Synopsis of the Steps

We provide in Fig. 7 an overview of the functions and spaces involved in IML for scientific inference. We started from a phenomenon and formalized a scientific question—our estimand Q. Using a learning algorithm I on a dataset \(\mathcal {D}\) representative of the phenomenon, we trained an ML model \({\hat{m}}\) that approximates the optimal model m. We then set out to estimate Q from \({\hat{m}}\). We defined a property descriptor \(g_K\), that is, a function that allows to compute Q from m given K, respectively approximates Q from \({\hat{m}}\) given K. Because \(g_K\) requires probabilistic knowledge about \(\mathbb {P}(\varvec{X},Y)\), which can only be obtained from data, we introduced a property description estimator \({\hat{g}}_{\mathcal {D}^*}\)—a function estimating Q solely from a finite evaluation dataset \(\mathcal {D}^*\). Finally, we showed how the expected error due to our approximate modeling and finite-data-estimation can be quantified with respective confidence intervals \(\textrm{CI}_{\textrm{ME}[{\hat{m}}]}\) and \(\textrm{CI}_{\textrm{EE}[D^*]}\).

From datasets to inferences via ML models. Mappings (arrows) connect datasets with models and descriptions (points within sets represented as ovals; confidence intervals in shades of green). Practical IML descriptions \({\hat{g}}_{{\mathcal {D}}^\star }({\hat{m}})\) (bottom arrow) approximate with quantifiable uncertainty an estimand Q by using a model \({\hat{m}}\) fit on \({\mathcal {D}}\) together with an evaluation dataset \({\mathcal {D}}^*\)

10 Some IML Methods Already Allow Scientific Inference

Many estimands are relevant across a wide variety of scientific domains. The goal of practical IML research for inference should be to define practical property descriptors for such estimands and provide accessible implementations of these descriptors, including quantification of uncertainty. To find out about scientifically relevant estimands, IML researchers, statisticians, and scientists must interact closely.

In Table 2 we present a few examples of practical inference questions that can be addressed by existing IML methods, i.e., these methods can operate as property descriptors already. We distinguish between global and local questions about the phenomenon: global questions concern general associations (e.g.between math and Portuguese grade), local questions concern associations in the local neighborhood of an instance (e.g. between study time and math grade for a specific student), and appear with gray background. The last column identifies current IML methods that provide approximate answers, albeit often without uncertainty quantification. To draw scientific inferences, we ultimately need versions of IML methods that account for the dependencies in the data (Hooker et al., 2021).

Not only the IML literature has worked on property descriptors, but the fairness literature also discussed measures that can be described as property descriptors, e.g. statistical parity (see Verma and Rubin (2018) for an overview). Similarly, robustness measures under distribution shifts (see Freiesleben & Grote, (2023) for an overview) as well as methods that examine the system stability in physics-informed neural networks (Chen et al., 2018; Raissi et al., 2019) can be seen as property descriptors.

Example: Illustrating the Methods from Table 2 on the Grading Example

To illustrate how the IML methods from Table 2 can help to address general inferential questions, we will introduce them along our grading example. We will begin with a discussion of global questions before moving on to local questions.

cFI

We have seen in the pedagogical example above that the association between language and math skills can be inferred using the cPDP. Another question is whether language skill provides information about math skill that is not contained in other student features (e.g., study-time, absences, and parents educational background). This is a common question among education scientists (Peng, Lin, Ünal et al., 2020) and can be formalized by asking if language skill \(X_p\) is independent of math skill \(Y\), conditional on the remaining features \(\varvec{X}_{-p}\):

To test conditional independence, conditional feature importance (cFI) can be used (Ewald et al., 2024; König et al., 2021; Strobl et al., 2008; Watson & Wright, 2021). cFI compares the performance of the model before and after perturbing the feature of interest while preserving the dependencies with the remaining features. If the Portuguese grade is conditionally independent of the math grade, all relevant information in the Portuguese grade can be reconstructed from the remaining features so that the performance is not affected by the perturbation. Thus, if the cFI is nonzero, Portuguese grade must be conditionally dependent with the math grade. To account for the uncertainties, we rely on Molnar et al. (2023).

In Figure 8, we applied cFI to our ML model \({\hat{m}}\) and computed the respective confidence interval (quantifying both model and estimation uncertainty). The importance of the Portuguese grade for the math grade is significant according to the 90% confidence intervals, as zero is not contained in the interval. On this basis, the scientist may reject \(H_0\).

Conditional feature importance of the Portuguese grade with 90% confidence interval. The confidence interval, which takes into account the model and estimation uncertainty, does not contain the value zero. On this basis, a researcher may reject the null hypothesis that the Portuguese grade is conditionally independent of the math grade given the remaining features

SAGE

Similar inferential questions as with the cFI can be addressed with Shapley additive explanations (SAGE, Covert et al., 2020. In contrast to the cFI, however, SAGE averages conditional importance relative to each subset of the features, often referred to as coalitions. Even if a feature has a cFI of zero, the corresponding SAGE values can be positive because the feature has positive importance in at least one of the coalitions (Ewald et al., 2024).

SAGE values are based on so-called surplus contributions of a feature of interest p (say the Portuguese grade) with respect to a coalition S (e.g., the number of absences and neighborhood). More specifically, surplus contributions quantify how model performance changes when the model, which only has access to features in the coalition S, additionally gets access to j.Footnote 13

Notably, the surplus of a feature depends on the coalition S: Adding a dependent feature to the coalition decreases the surplus, e.g. the surplus of the current Portuguese grade is lower if we already know the Portuguese grade from last term. Conversely, adding a collaborating feature can increase a feature’s surplus, e.g., the effect of the mother being unemployed may be larger if the father is also unemployed. By averaging over the surplus of a feature across all possible coalitions (weighted by the number of possible feature orderings in which the coalition precedes the feature of interest), SAGE provides a broad insight into the importance of a feature. The idea behind SAGE is based on the Shapley value (Covert et al., 2020), a concept from cooperative game theory (Shapley et al., 1953).

PRIM

What defines the optimal math student? Education scientists have clear ideas about general indicators of student success in math, such as parents’ social and economic status, students’ habits and motivation, and cultural factors (Kuh et al., 2006). On this basis, they may construct a hypothetically optimal math student: highly educated parents who work in STEM professions, high study time and frustration tolerance, and a cultural environment that values education. One approach to test scientists’ intuitions about the optimal math student is to compare her expected success with the expected success of the optimal student(s) according to the data distribution. Patient rule induction (PRIM, Friedman & Fisher, 1999) estimates the student(s) with optimal conditions according to the data distribution, thus allowing scientists to test their hypotheses. Note however that the evaluation of such a test can be difficult: The optimal math student(s) according to scientists may lie outside of the data distribution, making their expected success difficult to estimate. In general, the estimation of high-dimensional feature vectors rather than scalars may be statistically less stable, leading to greater uncertainty.

ICE

Education scientists may wish to infer how study time statistically influences expected student success of individual students in math (Rohrer & Pashler, 2007). One way to formalize this question is by the conditional expectation of the math grade given a set of input features where study time is varied. This is exactly the quantity that individual conditional effect curves (ICE, Goldstein et al., 2015) estimate. However, caution is advised, as the variation of study time may break dependencies with other features, forcing the model to extrapolate (Hooker et al., 2021). On the basis of ICE curves, education scientists may hypothesize that for a subset of students, namely efficient learners, the effect of increasing study time on students’ performance saturates (Rohrer & Pashler, 2007); a hypothesis that can then be tested in a different study.

cSV and ICI

Education scientists want to understand the reasons behind the low expected success of some specific students in math (Saha et al., 2024). One way to approach this question is to investigate how knowing certain features (e.g. study time, language grades, or absences) affects the expected math grade for specific low performing students. One property descriptor that allows to approach this question is the conditional Shapley Value (cSV, Aas et al., 2021).

Like SAGE values, cSV are Shapley value methods based on averaging the surplus contribution of a feature j across all possible coalitions S; In contrast to SAGE surplus contributions, cSV surplus contributions do not quantify the surplus in prediction performance for all students, but the change in the predicted value for a specific student. In our example, the cSV of the Portuguese grade p for an individual x tells us how the expected value of the student’s math grade changes when we get access to the Portuguese grade \(x_p\), averaged over all possible coalitions \(x_S\) of features that we already know.

Individual conditional importance (ICI, Casalicchio et al., 2019) also estimates the importance of individual features for the expected math grade of a given student, but not the effect on the prediction itself, but on the accuracy of the prediction (in terms of its loss).

Counterfactuals

Education scientists are interested in identifying feasible conditions that increase the expected success in math of individual students (Saha et al., 2024). For a given student, this question can be framed as a search for similar alternative student features with higher expected math grades. This is the target estimand of plausible counterfactual explanations (Dandl et al., 2020). Analyzing the difference between the original instance and the alternative student features allows education scientists to identify features that are locally associated with higher student success. For example, plausible counterfactuals for a specific student with a bad math grade, 6 absences and a mediocre Portuguese grade suggest that a reduced number of absences to 3 would have yielded a better expected math grade. Counterfactuals do only reflect associations in the data and should not be interpreted causally (see Sect. 11), however, based on counterfactuals, scientists can derive hypotheses about causally relevant factors, which may then be tested in an experimental study. However, it should be noted that there can be (infinitely) large numbers of such counterfactual instances, and selecting among them requires domain knowledge (Mothilal et al., 2020).

The Delicate Line Between Exploration and Inference

Some of the examples just presented, particularly regarding local descriptors, are concerned with exploring data properties rather than drawing concrete inferences using statistical tests. A delicate distinction, according to which inference requires a constrained set of hypotheses to be tested, whereas exploration describes the search for hypotheses (Tredennick et al., 2021).Footnote 14 Our notion of inference—investigating unobserved variables and parameters to draw conclusions from data—is broader and encompasses data exploration. We motivated local property descriptors with these rather exploratory questions because the data support for local estimands is very limited, which leads to greater uncertainty and, consequently, to inferential tests with low statistical power. For this reason, local descriptors are rarely used in practice to test concrete hypotheses.

Disagreement Between Descriptors

There is a growing concern in the IML community about the fact that different IML methods disagree. For example, disagreement has been demonstrated for common feature attribution techniques such as Shapley values and LIME, but also for commonly used saliency maps (Adebayo et al., 2018; Ghorbani et al., 2019; Krishna et al., 2022). While at first glance this may indicate that the methods have limited use in science, they can only diverge meaningfully if they address different inferential questions. Given two property descriptors have the same estimand, they must by definition (given enough data) converge to the same property descriptions.

11 Property Descriptors Do Not Generally Provide Causal Inferences

With property descriptors, we can access a wealth of properties of the observational joint distribution that answer various scientific questions. While the observational joint probability distribution is indeed interesting, it remains on rung 1 of the so-called ladder of causation—the associational level (Pearl & Mackenzie, 2018). What scientists are often much more interested in, is answering causal questions, such as what is the average treatment effect (rung 2) (Imbens & Rubin, 2015) or even counterfactual, what-if questions (rung 3; Salmon, 1998, Woodward & Ross, 2021). In our example, we may be interested not only in how strongly students’ language and math skills are associated (rung 1), but also in how much the provision of tutoring in Portuguese affects students’ math skills (rung 2) or whether a specific student (who is not a native Portuguese speaker) would have done better in math had she received Portuguese tutoring at a young age (rung 3).

Supervised ML models only represent aspects of the observational distribution (rung 1) and, therefore, do not directly provide causal insights (Pearl, 2019). Consequently, property descriptions do not provide causal insights either. Many IML works that discuss causality (Janzing et al., 2020; Schwab & Karlen, 2019; Wang et al., 2021) are only concerned with causal effects on model predictions, which do not necessarily translate into a causal insight into the phenomenon (König et al., 2023)—they address only model audit.

Machine learning methods can still be used to gain causal insights into natural phenomena, however, only if additional causal assumptions are posed. For example, if the so-called backdoor criterion is fulfilled in the causal graph, we can identify the average causal effect from observational data using the backdoor adjustment formula (Pearl, 2009). Or, formulated in terms of the Potential Outcomes (PO) frameworkFootnote 15 by Rubin (1974): if conditional exchangeability is fulfilled, we can use the adjustment formula. Given the causal effect can be identified, there are various ML-based approaches to estimate it, like the T-learner, the S-learner, and doubly robust methods (see Künzel et al. (2019), Knaus (2022), Dandl (2023) for an overview). Prominently, there is double ML (Chernozhukov et al., 2018; Fink et al., 2023) and targeted learning (Van der Laan et al., 2011) that provide unbiased estimates of various identifiable causal estimands. Note, however, that to arrive at the necessary knowledge, we require interventional data and/or have to make strong, untestable assumptions (Rubin, 1974; Holland, 1986; Meek, 2013; Spirtes et al., 2000). Further, even if a causal estimand is identifiable and can therefore be estimated with ML, estimation from finite data may be challenging (Künzel et al., 2019).

In certain simplified scenarios, IML methods applied to associational ML models can be helpful for causal inference. Firstly, if all predictor variables are causally independent and the features cause the prediction target, the causal model interpretation implies the causal phenomenon interpretation. Secondly, associative models in combination with IML can help estimate causal effects even in the absence of causal independence if they are, in principle, identifiable by observation. For example, the partial dependence plot coincides with the so-called adjustment formula; It, therefore, identifies a causal effect if the backdoor criterion (conditional exchangeability) is met and the model optimally predicts the conditional expectation (Zhao & Hastie, 2021). Thirdly, when there is access to observational and interventional data during training, ML models trained with invariant risk minimization predict accurately in interventional environments (Arjovsky et al., 2019; Peters et al., 2016; Pfister et al., 2021). For such intervention-stable models, IML methods that provide insight into the effect of interventions on the prediction also describe causal effects on the underlying real-world components (König et al., 2023).

While supervised learning learns from a fixed dataset, reinforcement learning (RL) systems are designed to act and can, therefore, assess the effect of their interventions. As such, RL models can be designed to provide causal interpretations (Bareinboim et al., 2015; Gasse et al., 2021; Zhang & Bareinboim, 2017).

Finally, another way in which ML supports causal inference is by facilitating practical scientific inference based on potentially complex, but still ER, mechanistic models that are frequently implemented as numerical simulators. Indeed, simulators can represent complex, causal dynamics in an ER fashion, but often at the price of an intractable likelihood and, thus, expensive or even intractable inference. A variety of new methods for likelihood-free inference (Cranmer et al., 2020) allows us to estimate a full posterior distribution over ER parameters for increasingly complex models using ML.

12 Discussion

ER models enable straightforward scientific inference because their elements are meaningful: they directly represent elements of the underlying phenomenon. While ML models are generally not ER, property descriptors can offer an indirect route to scientific inference, provided whole-model properties have a corresponding phenomenon counterpart. We have shown how phenomenon representation can be accessed through optimal predictors and described how to practically construct property descriptors following four steps: the first two steps clarify how domain questions and property descriptors can be theoretically connected, step three shows how to practically estimate property descriptions with ML models and data, and step four allows to evaluate how much the estimated property descriptions may deviate from the ground truth. We highlighted some current IML methods that can already be seen as property descriptors and identified what inference questions they answer.

Scope for HR Modeling in Science

ML models represent just an extreme case where almost no element of the model is representational. There is a continuum between ER models and HR-only models—ranging from full ER models like Newton’s gravitational laws to intermediate statistical models containing higher-order interaction terms to full-blown HR models like deep neural networks. Our main message is: the four-step approach can be used to extend inference to any non ER model, whether ML or not.

Why should scientists use HR models at all? Rudin (2019) argued that whenever there is an HR model with high predictive accuracy, there is also an ER model that achieves similar performance. She backs up her argument with several examples, e.g. loan risk prediction (Rudin & Radin, 2019), recidivism prediction Zeng et al. (2017), finding pattern in EEG data (Li et al., 2017), and even image classification (Chen et al., 2019), where (relatively) interpretable models achieve performance comparable to less interpretable HR models. She therefore recommends favoring ER models in high-stakes settings.

In science, the stakes are quite high and interpretability is highly valued. Therefore, we believe that highly-predictive ER models, when available, should be favored in science over HR models. The problem is that while such powerful ER models may exist, they can be hard to find, especially in model classes with high complexity (Nearing et al., 2021). While reducing the complexity of the model class can help to find high-performing ER models in noisy environments (Semenova et al., 2024), this requires substantial domain knowledge about the data generating process, which is often not available. Choosing simplified models with little predictive performance just for the sake of inherent interpretability is no viable path (Breiman, 2001b). Interpreting models that poorly approximate the phenomenon will lead to unreliable conclusions (Cox, 2006; Good & Hardin, 2012).Footnote 16

However, there could be a reasonable compromise between ER and HR modeling, where some parts of the model are ER, while other parts, where less parametric assumptions are justified, are filled with powerful HR models: This approach is commonly taken in the intersection between causal modeling and ML, where the causal graph is ER whereas the functional dependencies are modeled with HR models (Peters et al., 2017). Similarly, using flexible ML models while enforcing constraints in the training process like additivity can lead to partially ER models (Hothorn et al., 2010; Van der Laan et al., 2011). In physics we may want to model a phenomenon with a classical ER model, namely ordinary differential equations, without constraining the function (Dai & Li, 2022), or we may want to enforce a preference for certain functions (e.g. exponential or sinus) without limiting the possible dependencies (Martius & Lampert, 2016).

Limitations of Our Framework

How Useful are Property Descriptors for Scientific Inference in Practice?

We have shown that, under certain assumptions, property descriptors provide insight into the phenomenon. However, we have not shown that property descriptors are the best approach to gain this insight. The cPDP, for example, could also be estimated directly from the data without interposing an ML model and a property descriptor. Does it make sense to take the detour via the ML model and property descriptors instead of directly estimating the quantity of interest, e.g. using targeted learning (Van der Laan et al., 2011)?

One case is when access to the model, the data, or computational resources is limited, which is common with proprietary models like ChatGPT or other sophisticated models like Alphafold (Senior et al., 2020) or ResNet (He et al., 2016). Due to the data and expertise that went into these models, they are ideal candidates for mining them for insights with IML tools. However, computing interpretations for these models can again be computationally very expensive. Another use case of property descriptors is when scientists have set out to obtain predictions but want to gain additional insights from their model at low cost.

Irrespective of whether scientists should use property descriptors to make concrete scientific inferences, ample evidence in the published scientific literature shows that scientists use IML tools and draw inferences based on these interpretations (Gibson et al., 2021; Roscher et al., 2020; Shahhosseini et al., 2020). Our paper can help clarifying what inferences scientists are enabled to draw from interpretations and what IML tools to use.

To make a fair comparison between the inferential qualities of targeted learning and property descriptors a systematic study would be needed. Under what conditions do estimates based on property descriptors (e.g. conditional feature importance, Strobl et al., 2008) differ from targeted learning approaches (e.g. LOCO, Lei et al., 2018, Verdinelli & Wasserman, 2024)? First works on this question (Hooker et al., 2021; Verdinelli & Wasserman, 2024) indicate that targeted learning may be better suited for standard inferential tasks like estimating feature importance.

How to Obtain Realistic Data?

Many IML methods (e.g. Shapley values, LIME) rely on probing the ML model on modified instances (Scholbeck et al., 2019). These artificial “data” may be useful to audit the model, even though may never occur in the real world. However, if we want to learn about the world, artificial data is supposed to credibly supplement observations. Like others (Hooker & Mentch, 2021; Hooker et al., 2021; Mentch & Hooker, 2016), we recommend respecting the dependency structure in the data if we strive to draw valid scientific inferences with IML.

However, obtaining realistic data is hard. Strategies such as our grade jitter strategy are useful, but require expert domain knowledge of the dependency structure in the data. Conditional density estimation techniques (e.g. probabilistic circuits Choi et al., 2020) or generative models (e.g. generative adversarial networks, normalizing flows, variational autoencoders, etc.) provide paths to generate realistic data without presupposing expert knowledge. Unfortunately, they are often computationally intensive. Also, current IML software implementations often only offer marginal versions of IML methods that are unsuited as property descriptors. We urge the IML research community to provide efficient implementations of conditional samplers and integrate them into IML packages.

Can We Use Property Descriptors to Encode Background Knowledge?

We showed how to use property descriptors to extract knowledge from models. However, the converse direction of incorporating knowledge into models is also central for scientific progress (Dwivedi et al., 2021; Nearing et al., 2021; Razavi, 2021). There are already approaches that allow to enforce monotonicity constraints (Chipman et al., 2022) or sparsity in the training process (Martius & Lampert, 2016). But also property descriptors can be used to constrain the set of allowable models.Footnote 17 For example, we could promote specific property descriptions during training by modifying the loss function to penalize models that deviate from them. Such strategies are indeed common in the fairness literature, where loss functions are designed to optimize for certain fairness metrics, which can be seen as property descriptors [see (Pessach & Shmueli, 2022) for an overview].

What About Non-Tabular Data?

For some data types, such as images, audio, or video data, it is extremely difficult to formalize the estimand only in terms of low-level features such as pixels or audio frequencies. To follow our approach, we need a translation of high-level concepts (e.g. objects in images or words in audio) that scientists can use to formulate their questions in terms of the low-level features (e.g. pixels or audio frequencies) that the model works with. Such translations are notoriously difficult to find, proposals either rely on labeled data to learn such representations (Jia et al., 2013; Koh et al., 2020; Zaeem & Komeili, 2021) or discuss constraints to learn them via unsupervised learning (Bengio et al., 2013; Schölkopf et al., 2021). We think that such a translation between low-level features and high-level concepts is one of the most pressing problems of IML research.

13 Conclusion

Traditional scientific models were designed to satisfy elementwise representationality, allowing scientists to learn about Nature by direct inspection of model elements. While ML models trained for prediction do not satisfy elementwise representationality, they do offer a unique ability to represent complex phenomena by digesting enormous amounts of noisy, multivariate and even multimodal observations. We have shown that it is still possible to learn about the phenomenon using them: all we need to do is to interrogate the model with suitable property descriptors. Our approach provides philosophers, IML researchers and scientists with a novel philosophical perspective on scientific representation with ML models and a valuable methodology for gaining insight into phenomena from such models using interpretation methods.

Notes

The double ML methodology has also been applied for other inferential problems, see Fink et al. (2023).

Note that our toy model is for illustration only, and not meant to reflect social science methodology.

This means that the proportion of CIs (each calculated from a theoretical, newly-sampled dataset) that contain the true parameter value \(\beta\) tends in the long-run to 95% (Heumann et al., 2016).

The expected math grade for a zero in Portuguese is \({\hat{\beta }}_0=0.80\). However, regressing on \(x_p-{\bar{x}}_p\) instead gives the more useful expected grade of an average Portuguese student (with \({\bar{x}}_p=12.55\)), \({\hat{\beta }}^\textrm{avg}_0=10.46\), with a 95% CI of \(10.05<\beta ^\textrm{avg}_0<10.88\).

Using a multiple linear model for fairer comparison results in a MSE of 12.4, reflecting a limited capacity to capture complex relationships.

The domain of I is only completely specified when the parameters that define the learning procedure and the search space of the algorithm (called hyperparameters in the context of \({\hat{m}}\)) are fixed. For our discussion, the reader may assume hyperparameters to have been fixed a priori by a human or an automated ML algorithm (Hutter et al., 2019).