Abstract

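Let \(Z(t)= \exp \left( \sqrt{ 2} B_H(t)- \left|t \right|^{2H}\right) , t\in \mathbb {R}\) with \(B_H(t),t\in \mathbb {R}\) a standard fractional Brownian motion (fBm) with Hurst parameter \(H \in (0,1]\) and define for x non-negative the Berman function

$$\begin{aligned} \mathcal {B}_{Z}(x)= \mathbb {E}{\left\{ \frac{\mathbb {I}{\left\{ \epsilon _0(RZ)> x\right\} }}{\epsilon _0(RZ)}\right\} }, \end{aligned}$$

where the random variable R independent of Z has survival function \(1/x,x\geqslant 1\) and

$$\begin{aligned} \epsilon _0(RZ)= \int _{\mathbb {R}} \mathbb {I}{\left\{ RZ(t)> 1\right\} } dt. \end{aligned}$$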
In this paper we consider a general random field (rf) Z that is a spectral rf of some stationary max-stable rf X and derive the properties of the corresponding Berman functions. In particular, we show that Berman functions can be approximated by the corresponding discrete ones and derive representations of these functions that are useful for the Monte Carlo simulations presented in this article.


1 Introduction

In the study of sojourns of rf’s in a series of papers by Berman, see, e.g., Berman (1982, 1992), a key random variable (rv) and a related constant appear. Specifically, let \(Z(t)= \exp ( \sqrt{ 2} B_{{H}}(t)- \left|t \right|^{2 H}), t\in \mathbb {R},\) with \(B_{H}\) a fractional Brownian motion (fBm) with Hurst parameter \(H\in (0,1]\), that is a centered Gaussian process with stationary increments, \(Var(B_H(t))=\left|t \right|^{2H},t\in \mathbb {R}\) and continuous sample paths. In view of Berman (1992, Thm 3.3.1, Eq. (3.3.6)) the following rv (hereafter \(\mathbb {I}{\left\{ \cdot \right\} }\) is the indicator function)

$$\begin{aligned} \epsilon _0(RZ)= \int _{\mathbb {R}} \mathbb {I}{\left\{ RZ(t)> 1\right\} } dt \end{aligned}$$
plays a crucial role in the analysis of extremes of Gaussian processes. Throughout this paper R is a 1-Pareto rv (\(\ln R\) is unit exponential) independent of any other random element.

The distribution function of \(\epsilon _0(RZ)\) is known only for \(H\in \{1/2,1\}\). For \(H=1\), as shown in Berman (1992, Eq. (3.3.23)), \(\epsilon _0(RZ)\) has probability density function (pdf) \( x^2 e^{- x^2/4}/(2 \sqrt{ \pi }),x>0\), whereas for \(H=1/2\) its pdf is calculated in Berman (1992, Eq. (5.6.9)).
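For \(H=1\) the sojourn can be written down explicitly, which gives a quick sanity check of this density. Since \(B_1(t)=tN\) with N standard normal, \(RZ(t)>1\) is equivalent to \(t^2-\sqrt{2}Nt-\ln R<0\), so \(\epsilon _0(RZ)\) is the distance between the two roots, \(\sqrt{2N^2+4\ln R}\). As \(2N^2\) and \(4\ln R\) are independent and distributed as \(2\chi ^2_1\) and \(2\chi ^2_2\), \(\epsilon _0(RZ)^2/2\) is \(\chi ^2_3\); the resulting density of \(\epsilon _0(RZ)\) is \(x^2e^{-x^2/4}/(2\sqrt{\pi })\) with mean \(4/\sqrt{\pi }\). The Python sketch below (standard library only; all function names are our own hypothetical helpers) computes the sojourn both from this closed form and by brute-force counting on a time grid, and estimates its mean by Monte Carlo.

```python
import math
import random


def sojourn_closed_form(n: float, log_r: float) -> float:
    """Length of {t : R*Z(t) > 1} for H = 1, i.e. Z(t) = exp(sqrt(2)*t*N - t**2).

    R*Z(t) > 1  <=>  t**2 - sqrt(2)*N*t - ln R < 0, an interval whose length is
    the distance between the two roots of this quadratic.
    """
    return math.sqrt(2.0 * n * n + 4.0 * log_r)


def sojourn_on_grid(n: float, log_r: float, step: float = 1e-3, half_width: float = 20.0) -> float:
    """Brute force: step * #{grid points t in [-half_width, half_width] with ln R + sqrt(2)*N*t - t**2 > 0}."""
    k_max = int(half_width / step)
    count = 0
    for k in range(-k_max, k_max + 1):
        t = k * step
        if log_r + math.sqrt(2.0) * n * t - t * t > 0.0:
            count += 1
    return step * count


def mean_sojourn(num_samples: int, seed: int = 1) -> float:
    """Monte Carlo mean of eps_0(RZ) for H = 1 using the closed form."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        n = rng.gauss(0.0, 1.0)
        log_r = rng.expovariate(1.0)  # ln R is unit exponential when R is 1-Pareto
        total += sojourn_closed_form(n, log_r)
    return total / num_samples


if __name__ == "__main__":
    rng = random.Random(0)
    n, log_r = rng.gauss(0.0, 1.0), rng.expovariate(1.0)
    print(sojourn_closed_form(n, log_r), sojourn_on_grid(n, log_r))
    print(mean_sojourn(100_000), 4.0 / math.sqrt(math.pi))
```

The grid version mirrors how a sojourn is approximated from a simulated path in general; for \(H<1\) no closed form of this kind is available and only the grid approach applies.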

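The so-called Berman function, defined for all \(x\geqslant 0\) (see Berman (1992, Eq. (3.0.2))) by

$$\begin{aligned} \mathcal {B}_{Z }(x)= \mathbb {E}{\left\{ \frac{\mathbb {I}{\left\{ \epsilon _0(RZ)> x\right\} }}{\epsilon _0(RZ)}\right\} }, \quad x\geqslant 0, \end{aligned}$$

also appears in Berman (1992, Thm 3.3.1, Eq. (3.3.6)).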

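An important property of the Berman function is that for \(x=0\) it equals the Pickands constant, see Berman (1992, Thm 10.5.1), i.e., \(\mathcal {B}_{Z }(0) = \mathcal {H}_{Z }, \) where \(\mathcal {H}_{Z } \) is the so-called generalised Pickands constant

$$\begin{aligned} \mathcal {H}_{Z }= \lim _{T\rightarrow \infty } \frac{1}{T} \mathbb {E}{\left\{ \sup _{t\in [0,T]} Z(t)\right\} }. \end{aligned}$$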
This fact is crucial since \(\mathcal {B}_{Z }(0)\) was the first known expression of \(\mathcal {H}_{Z } \) in terms of a single expectation, which is particularly useful for simulation purposes; see Falk et al. (2010), Dieker and Yakir (2014), and Hüsler and Piterbarg (2017) for details on classical Pickands constants.

Moreover, Berman’s representation of the Pickands constant yields tight lower bounds for \(\mathcal {H}_{Z }\), see Dȩbicki et al. (2019, Thm 1.1). As shown in Dȩbicki et al. (2019), for all \(x \geqslant 0\)

Motivated by the above definition, in this contribution we shall introduce the Berman functions for given \(\delta \geqslant 0\) with respect to some non-negative rf \(Z(t),t\in \mathbb {R}^d,d\geqslant 1\) with càdlàg sample paths (see e.g., Janson (2020), and Bladt et al. (2022) for the definition and properties of generalised càdlàg functions) such that

Specifically, for given non-negative \(\delta , x\) define

where

Here \(\lambda _0(dt)=\lambda (dt)\) is the Lebesgue measure on \(\mathbb {R}^d\), \(0 \mathbb {Z}^d=\mathbb {R}^d\) and \(\lambda _\delta (dt)/\delta ^d\) is the counting measure on \(\delta \mathbb {Z}^d\) if \(\delta >0\). Hence \(\mathcal {B}_{Z }^\delta (x),\delta >0\) is the discrete counterpart of \(\mathcal {B}_{Z }(x)\) and \(\mathcal {B}_{Z }^0(x)=\mathcal {B}_{Z }(x)\).
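To make the role of \(\lambda _\delta \) concrete: for \(\delta >0\) the integral \(\int _{\delta \mathbb {Z}^d} \mathbb {I}{\left\{ f(t)>1\right\} } \lambda _\delta (dt)\) is \(\delta ^d\) times the number of lattice points where f exceeds 1, and for a continuous f it approaches the Lebesgue measure of \(\{f>1\}\) as \(\delta \downarrow 0\). The Python sketch below illustrates this for \(d=1\) on a deterministic function; the function names and the truncation window are our own hypothetical choices, not notation from the paper.

```python
import math
from typing import Callable


def discrete_sojourn(f: Callable[[float], float], delta: float, window: float) -> float:
    """Integral of 1{f(t) > 1} against lambda_delta on [-window, window], d = 1.

    For delta > 0, lambda_delta puts mass delta on each point of delta*Z, so the
    integral is delta times the number of lattice points where f exceeds 1.
    """
    if delta <= 0.0:
        raise ValueError("this sketch only covers the lattice case delta > 0")
    k_max = math.floor(window / delta)
    return delta * sum(1 for k in range(-k_max, k_max + 1) if f(k * delta) > 1.0)


def example_path(t: float) -> float:
    """Deterministic stand-in for a path: f(t) > 1 exactly on (-1, 2), an interval of length 3."""
    return math.exp(2.0 - (t - 0.5) ** 2 * 8.0 / 9.0)


if __name__ == "__main__":
    for delta in (1.0, 0.5, 0.1, 0.001):
        print(delta, discrete_sojourn(example_path, delta, window=10.0))
```

As \(\delta \) decreases the lattice values approach 3, the Lebesgue measure of \(\{f>1\}\), which is the sense in which the discrete quantities approximate the case \(\delta =0\).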

In general, some further restrictions on the rf Z are needed for \(\mathcal {B}_{Z}^\delta (x), x\geqslant 0\) to be well-defined. A very tractable case, for which we can utilise results from the theory of max-stable stationary rf’s, is when Z is the spectral rf of a stationary max-stable rf \(X(t), t\in \mathbb {R}^d\), see (2.1) below.

An interesting special case is when \(\ln Z(t)\) is a Gaussian rf whose mean equals minus half of its variance function and whose centered part has stationary increments. We shall show in Lemma 4.3 that for such Z the corresponding Berman function \(\mathcal {B}_{Z }(x)\) appears in the tail asymptotics of the sojourn time of a related Gaussian rf.

Organisation of the rest of the paper. In Section 2 we first present in Theorem 2.1 a formula for Berman functions and then in Corollary 2.3 and Proposition 3.1 we show some continuity properties of those functions. In Theorem 2.5 and Lemma 2.4 we present two representations for Berman functions and discuss conditions for their positivity. Section 3 is dedicated to the approximation of Berman functions focusing on the Gaussian case. All the proofs are postponed to Section 4.

2 Main Results

Let the rf \(Z(t), t\in \mathbb {R}^d\) be as above, defined on the non-atomic complete probability space \((\Omega , \mathcal {F}, \mathbb {P})\). Let further \(X(t),t\in \mathbb {R}^d \) be a max-stable stationary rf, which has spectral rf Z in its de Haan representation (see e.g., de Haan (1984), Kulik and Soulier (2020))

$$\begin{aligned} X(t)= \max _{i\geqslant 1} \Gamma _i^{-1} Z^{(i)}(t), \quad t\in \mathbb {R}^d. \end{aligned}$$(2.1)
Here \(\Gamma _i= \sum _{k=1}^i \mathcal {V}_k\) with \(\mathcal {V}_k, k\geqslant 1\) mutually independent unit exponential rv’s being independent of \(\{Z^{(i)}\}_{i=1}^\infty \) which are independent copies of Z. For simplicity we shall assume that the marginal distributions of the rf X are unit Fréchet (equal to \(e^{-1/x },x>0\)) which in turn implies \(\mathbb {E}{\left\{ Z (t)\right\} } =1\) for all \(t\in \mathbb {R}^d\).
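The de Haan representation (2.1) also suggests a direct, truncated simulation of X from independent copies of Z. The Python sketch below does this at a single location t, assuming for illustration the log-Gaussian spectral rf \(Z(t)=\exp (\sqrt{2}B_{1/2}(t)-|t|)\) (the case \(H=1/2\)), for which \(\mathbb {E}{\left\{ Z(t)\right\} }=1\). Keeping only the first n points \(\Gamma _1<\dots <\Gamma _n\) is our own truncation and introduces a small bias; the function names are hypothetical. It compares the empirical distribution function with the unit Fréchet one, \(e^{-1/x}\).

```python
import math
import random


def simulate_x_at(t: float, n_points: int, rng: random.Random) -> float:
    """Truncated de Haan representation X(t) = max_{i <= n_points} Z_i(t) / Gamma_i at one location t.

    Spectral rf (assumed for illustration): Z_i(t) = exp(sqrt(2) * B_i(t) - |t|) with B_i a
    standard Brownian motion (H = 1/2), so Z_i(t) is lognormal with E{Z_i(t)} = 1.
    Gamma_i = V_1 + ... + V_i are the points of a unit-rate Poisson process.
    """
    gamma = 0.0
    best = 0.0
    for _ in range(n_points):
        gamma += rng.expovariate(1.0)
        b_t = rng.gauss(0.0, math.sqrt(abs(t)))  # B_i(t) ~ N(0, |t|)
        best = max(best, math.exp(math.sqrt(2.0) * b_t - abs(t)) / gamma)
    return best


def empirical_cdf(t: float, x: float, num_samples: int = 5000, n_points: int = 100, seed: int = 7) -> float:
    """Fraction of simulated X(t) values that are <= x."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(num_samples) if simulate_x_at(t, n_points, rng) <= x)
    return hits / num_samples


if __name__ == "__main__":
    for x in (1.0, 2.0):
        print(x, empirical_cdf(1.0, x), math.exp(-1.0 / x))  # empirical vs unit Frechet cdf
```

Truncation can only lower the simulated maximum, so the empirical distribution function is slightly biased upwards; for this lognormal Z the effect of discarding points beyond \(n=100\) is far below the Monte Carlo error.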

Suppose further that for all \(T>0\)

$$\begin{aligned} \mathbb {E}{\left\{ \sup _{t\in [0,T]^d} Z(t)\right\} } < \infty \end{aligned}$$

and Z almost surely has sample paths in the space D of non-negative càdlàg functions \(f: \mathbb {R}^d \mapsto [0, \infty )\) equipped with Skorohod’s \(J_1\)-topology. We shall denote by \(\mathcal {D}=\sigma (\pi _t, t\in T_0)\) the \(\sigma \)-field generated by the projection maps \(\pi _t: \pi _t f= f(t), f\in D\) with \(T_0\) a countable dense subset of \(\mathbb {R}^d\). In view of Hashorva (2018, Thm 6.9) with \(\alpha =1, L=B^{-1}\) (see also Planinić and Soulier (2018, Eq. (5.2))), the stationarity of X is equivalent to

valid for every measurable functional \(F: D \rightarrow [0,\infty ]\) such that \(F(cf)= F(f)\) for all \(f\in D, c>0\). Here we use the standard notation \(B^h Z(\cdot )= Z(\cdot -h), h\in \mathbb {R}^d\).

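We shall suppose next without loss of generality (see Hashorva (2021, Lem 7.1)) that

$$\begin{aligned} \mathbb {P}{\left\{ \sup _{t\in \mathbb {R}^d} Z(t)> 0\right\} }=1. \end{aligned}$$(2.4)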
Under the assumption that X is stationary \(\mathcal {B}_{Z }^\delta (x)\) is well-defined for all \(\delta ,x\) non-negative as we shall show below. We note first that, see e.g., Dȩbicki et al. (2019, 2022)

where \(\mathcal {H}_{Z}^\delta \) is the discrete counterpart of the classical Pickands constant \(\mathcal {H}_{Z}=\mathcal {H}_{Z}^0\). Hence for any \(x>0\) we have

Set below for \(\delta >0\)

$$\begin{aligned} S_\delta =S_\delta (Z)= \int _{\delta \mathbb {Z}^d} Z (t) \lambda _\delta (dt) \end{aligned}$$

and let \(S_0=S_0(Z)=\int _{ \mathbb {R}^d} Z (t) \lambda (dt)\). In view of (2.4) we have that \(S_0>0\) almost surely. Since we never consider the cases \(\delta >0\) and \(\delta =0\) simultaneously, we can assume that \(S_\delta >0\) almost surely (a spectral rf Z for X that guarantees this can be constructed, see Hashorva (2021, Lem 7.3)).

In view of Dȩbicki et al. (2022, Cor 2.1) if \(\mathbb {P}{\left\{ S_0=\infty \right\} } =1\), then \(\mathcal {H}_{ Z }=0\) implying

The next result states the existence and the positivity of Berman functions and presents a tractable formula that is useful for simulating them.

Theorem 2.1

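If \(\mathbb {P}{\left\{ S_0=\infty \right\} } <1\), then for any non-negative constants \(\delta , x\) we have

$$\begin{aligned} \mathcal {B}_{ Z }^\delta (x) =\int _0^\infty \mathbb {E}{\left\{ \frac{1}{ S_{{\delta }}} \mathbbm {I} \Bigl ( \int _{\delta \mathbb {Z}^d} \mathbbm {I}( Z(t)> s) \lambda _\delta (dt) > x \Bigr ) \right\} }\lambda (ds) < \infty . \end{aligned}$$(2.5)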
Moreover, (2.5) holds substituting \(S_\delta \) by \(S_\eta \), where \(\eta >0\) if \(\delta =0\) and \(\eta =k \delta , k\in \mathbb {N}\) if \(\delta >0\), provided that

almost surely.

Remark 2.2

(i) If \(x=0\), then we retrieve the results of Dȩbicki et al. (2022, Prop 2.1).

(ii) As shown in Dȩbicki et al. (2022), condition (2.6) holds in the particular case that \(Z(t)>0,t\in \mathbb {R}^d\) almost surely.

(iii) One example for Z, see for instance Dȩbicki et al. (2022), is

$$\begin{aligned} Z(t)= \exp ( V(t)- \sigma ^2_V(t)/2), \quad t\in \mathbb {R}^d, \end{aligned}$$
where \(V(t),t\in \mathbb {R}^d\) is a centered Gaussian rf with almost surely continuous trajectories and stationary increments, \(\sigma ^2_V(t)=Var(V(t))\) and \(\sigma _V(0)=0\). In this case \(Z(t)>0,t\in \mathbb {R}^d\) almost surely, condition (2.6) is satisfied and (2.5) reads

$$\begin{aligned} \mathcal {B}_{ Z }^\delta (x) =\int _0^\infty \mathbb {E}{\left\{ \frac{1}{ S_{{\delta }}} \mathbbm {I} \Bigl ( \int _{\delta \mathbb {Z}^d} \mathbbm {I}( V(t) - \sigma ^2_V(t)/2> \ln s) \lambda _\delta (dt) > x \Bigr ) \right\} }\lambda (ds) < \infty . \end{aligned}$$(2.7)

Corollary 2.3

If Z has almost surely continuous trajectories, then for all \(x_0\geqslant 0\)

Define next a probability measure \(\mu \) on \(\mathcal {D}\) by

Let \(\Theta \) be a rf with law \(\mu \). By the definition, \(\Theta \) also has càdlàg sample paths and, since D is Polish, in view of Varadarajan (1958, Lem. p. 1276) we can assume that \(\Theta \) is defined on the same probability space as Z. Recall that \(\lambda _\delta (dt)/\delta ^d\) is the counting measure on \(\delta \mathbb {Z}^d\) if \(\delta >0\) and \(\lambda _0\) is the Lebesgue measure on \(\mathbb {R}^d\). Since we can rewrite (2.5) as

where

and the law of \(\Theta \) is uniquely determined by the law of the max-stable stationary rf X and does not depend on the particular choice of Z, see Hashorva (2018, Lem A.1), it follows that if \(Z_*\) is another spectral rf for X, then

for all \(\delta \geqslant 0\). Assume next that \(\mathbb {P}{\left\{ S_0< \infty \right\} }=1\) and let

be a spectral rf of the max-stable rf \(X_\delta (t)= X(t), t\in \delta \mathbb {Z}^d\), where \(\bar{Q}_\delta \) is independent of a rv T, which has pdf \(p(s)>0, \,s\in {\delta \mathbb {Z}^d}\). We choose p to be continuous when \(\delta =0\). In view of Dȩbicki and Hashorva (2020, Thm 2.3) one possible construction is

with \(c=1\) if \(\delta =0\) and \(c=\delta ^d\) otherwise. Set below \(Q_\delta = \bar{Q}_\delta /c\).

Lemma 2.4

(i) If \(\mathbb {P}{\left\{ S_0< \infty \right\} }=1\), then for \( Q_\delta \) as above and all \(\delta ,x\) non-negative we have

$$\begin{aligned} \mathcal { B}_{ Z }^ \delta (x) = \int _0^\infty \mathbb {P}{\left\{ \int _{\delta \mathbb {Z}^d} \mathbbm {I}( Q_\delta (t)> s) \lambda _\delta (dt) > x \right\} }\lambda (ds) < \infty . \end{aligned}$$(2.13)

(ii) If \(\mathbb {P}{\left\{ S_0< \infty \right\} }>0\), then with \(V(t)= Z(t) |S_0 < \infty \) for all \(\delta ,x\) non-negative we have

$$\begin{aligned} \mathcal { B}_{ Z }^ \delta (x) = \mathbb {P}{\left\{ S_0(\Theta )< \infty \right\} } \mathcal { B}_{V}^ \delta (x) < \infty . \end{aligned}$$(2.14)

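Let in the following \(Y(t)= R \Theta (t)\) with R a 1-Pareto rv with survival function \(1/x, x \geqslant 1\) independent of \(\Theta \) and set hereafter

$$\begin{aligned} \epsilon _\delta (Y)= \int _{\delta \mathbb {Z}^d} \mathbbm {I}( Y(t)> 1) \lambda _\delta (dt), \quad \delta \geqslant 0. \end{aligned}$$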
Recall that when \(\delta =0\) we interpret \(\delta \mathbb {Z}^d\) as \(\mathbb {R}^d\). We establish below the Berman representation (1.1) for the general setup of this paper.

Theorem 2.5

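If \(\mathbb {P}{\left\{ S_0=\infty \right\} } <1\), then for all \( \delta , x\) non-negative

$$\begin{aligned} \mathcal { B}_{ Z }^\delta (x)= \mathbb {E}{\left\{ \frac{\mathbbm {I}( \epsilon _\delta (Y)> x)}{\epsilon _\delta (Y)}\right\} }. \end{aligned}$$(2.15)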
Corollary 2.6

Under the conditions of Theorem 2.5 we have that \(\epsilon _0 (Y )\) has a continuous distribution if Z has almost surely continuous trajectories. Moreover, \(\mathcal { B}_{ Z }^\delta (x) >0\) for all \(x\geqslant 0\) such that \(\mathbb {P}{\left\{ \epsilon _\delta (Y )>x\right\} } >0\).

Proposition 2.7

For all \(\delta \geqslant 0\) and \(x>0\) we have

Remark 2.8

(i) If \(x=0\), the lower bound in (2.16) holds with 1 in the numerator, see Dȩbicki et al. (2019), and Hashorva (2021).

(ii) If \(\mathbb {E}{\left\{ \epsilon ^p_\delta (Y)\right\} }\) is finite for some \(p>0\), then combining the upper bound in (2.16) with Markov's inequality gives the following upper bound

$$\begin{aligned} \mathcal { B}_{ Z }^\delta (x) \leqslant x^{-p-1}\mathbb {E}{\left\{ \epsilon ^p_\delta (Y)\right\} }, \quad x>0. \end{aligned}$$(2.17)

(iii) If \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }<\infty \) and \(\int _0^\infty e^{sx}(\mathcal { B}_{ Z }^\delta (x))^{1/2}dx<\infty \) for some \(s>0\), then for this s

$$\begin{aligned} \mathbb {E}{\left\{ e^{s\epsilon _\delta (Y)}\right\} }\leqslant 1+s(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} })^{1/2}\int _0^\infty e^{sx}(\mathcal { B}_{ Z }^\delta (x))^{1/2}dx. \end{aligned}$$

(iv) Since \(Y= R\Theta \), we can calculate in case of known \(\Theta \) the expectation of \(\epsilon _\delta (Y)\) as follows

$$\begin{aligned} \mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }&= \int _{\delta \mathbb {Z}^d} \mathbb {P}{\left\{ R\Theta (t)> 1\right\} } \lambda _\delta (dt) =\int _1^\infty \int _{\delta \mathbb {Z}^d}\mathbb {P}{\left\{ \Theta (t)> 1/r\right\} }\lambda _\delta (dt)r^{-2}dr. \end{aligned}$$
If \(Z(t)= \exp ( V(t)- \sigma ^2_V(t)/2), t\in \mathbb {R}^d\) is as in Remark 2.2, Item (iii), then in view of Dȩbicki et al. (2019, Lem 5.4), and Hashorva (2021, Eq. (5.3)) we have

$$\begin{aligned} \begin{aligned} \mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }&= \int _{\delta \mathbb {Z}^d}\int _1^\infty {\Psi }\left( \frac{\sigma _V(t)}{2}-\frac{\ln r}{\sigma _V(t)}\right) r^{-2}dr\,\lambda _\delta (dt) \\&= 2 \int _{\delta \mathbb {Z}^d} {\Psi }(\sigma _V(t)/2)\lambda _\delta (dt), \end{aligned} \end{aligned}$$(2.18)
where \(\Psi \) is the survival function of an N(0, 1) rv.
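Two steps in Remark 2.8 can be made explicit. Assuming the Berman representation (2.15), \(\mathcal {B}_Z^\delta (x)=\mathbb {E}\{\mathbb {I}(\epsilon _\delta (Y)>x)/\epsilon _\delta (Y)\}\) with \(\epsilon _\delta (Y)>0\) almost surely, Item (iii) follows from the Cauchy-Schwarz inequality combined with the tail formula for the moment generating function of a non-negative rv. The second equality in (2.18) follows, for fixed t with \(\sigma =\sigma _V(t)>0\), from the substitution \(w=\ln r\), an integration by parts and the identity \(e^{-w}\varphi (\sigma /2-w/\sigma )=\varphi (w/\sigma +\sigma /2)\), with \(\varphi \) the N(0, 1) pdf; at points where \(\sigma _V(t)=0\) both sides equal 1. A sketch of both computations:

```latex
\begin{aligned}
\mathbb{P}\{\epsilon_\delta(Y)>x\}
 &= \mathbb{E}\Bigl\{\Bigl(\frac{\mathbb{I}(\epsilon_\delta(Y)>x)}{\epsilon_\delta(Y)}\Bigr)^{1/2}
    \bigl(\epsilon_\delta(Y)\,\mathbb{I}(\epsilon_\delta(Y)>x)\bigr)^{1/2}\Bigr\}
 \leqslant (\mathcal{B}_Z^\delta(x))^{1/2}\,(\mathbb{E}\{\epsilon_\delta(Y)\})^{1/2},\\
\mathbb{E}\{e^{s\epsilon_\delta(Y)}\}
 &= 1+s\int_0^\infty e^{sx}\,\mathbb{P}\{\epsilon_\delta(Y)>x\}\,dx,\\[4pt]
\int_1^\infty \Psi\Bigl(\frac{\sigma}{2}-\frac{\ln r}{\sigma}\Bigr) r^{-2}\,dr
 &= \int_0^\infty \Psi\Bigl(\frac{\sigma}{2}-\frac{w}{\sigma}\Bigr) e^{-w}\,dw
  = \Psi\Bigl(\frac{\sigma}{2}\Bigr)+\frac{1}{\sigma}\int_0^\infty \varphi\Bigl(\frac{\sigma}{2}-\frac{w}{\sigma}\Bigr)e^{-w}\,dw\\
 &= \Psi\Bigl(\frac{\sigma}{2}\Bigr)+\frac{1}{\sigma}\int_0^\infty \varphi\Bigl(\frac{w}{\sigma}+\frac{\sigma}{2}\Bigr)dw
  = 2\,\Psi\Bigl(\frac{\sigma}{2}\Bigr).
\end{aligned}
```

Inserting the first line into the second gives the bound in Item (iii); integrating the last line over \(t\in \delta \mathbb {Z}^d\) with respect to \(\lambda _\delta \) gives (2.18).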

3 Approximation of \(\mathcal {B}_{Z }^\delta (x)\) and its Behaviour for Large x

We show first that \(\mathcal { B}_{ Z }=\mathcal { B}_{ Z }^{0}\) can be approximated by considering \(\mathcal { B}_{ Z }^{ \delta }(x)\) and letting \(\delta \downarrow 0\).

Proposition 3.1

For all \(x\geqslant 0\) we have that

We note in passing that for \(x=0\) we retrieve the approximation for Pickands constants derived in Dȩbicki et al. (2022). An approximation of \(\mathcal { B}_{ Z }^{ \delta }(x)\) can be obtained by letting \(T\rightarrow \infty \) and calculating the limit of

For such an approximation we shall discuss the rate of convergence to \(\mathcal { B}_{ Z }^{ \delta }(x)\) assuming further that

is as in Remark 2.2, Item (iii).

A1 \(\sigma ^2_V(t)\) is a continuous and strictly increasing function, and there exist \(\alpha _0\in (0,2]\) and \(A_0\in (0,\infty )\) such that

where \(\Vert \cdot \Vert \) is the Euclidean norm.

A2 There exists \(\alpha _\infty \in (0,2]\) such that

The following theorem constitutes the main finding of this section.

Theorem 3.2

Under A1-A2 we have for all \(\delta , x\) non-negative and \(\lambda \in (0,1)\)

Remark 3.3

(i) For \(x=0\) the rate of convergence in (3.1) agrees with the findings in Dȩbicki (2005).

(ii) The range of the parameter \(\lambda \in (0,1)\) in Theorem 3.2 cannot be extended to \(\lambda \geqslant 1\). Indeed, following Ling and Zhang (2020), for \(V(t)=\sqrt{2}B_{1}(t)\), \(\delta =0\), \(T>x\) and \(d=1\) we have

$$\begin{aligned} \mathcal { B}_{ Z }([0,T],x)= 2\Psi (x/\sqrt{2})+\sqrt{2}(T-x)\varphi (x/\sqrt{2}) \end{aligned}$$implying

$$\begin{aligned} \mathcal { B}_{ Z }(x)=\sqrt{2}\varphi (x/\sqrt{2}), \end{aligned}$$(3.2)where \(\varphi (\cdot )\) is the pdf of an N(0, 1) rv. Consequently, we have

$$\begin{aligned} \lim _{T\rightarrow \infty } \left| \mathcal { B}_{ Z }(x)-\frac{\mathcal { B}_{ Z }([0,T],x)}{T} \right| T ={|2\Psi (x/\sqrt{2}) -\sqrt{2}x \varphi (x/\sqrt{2})|} >0. \end{aligned}$$
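The representation (2.15) can be checked against the closed form (3.2) by simulation. For \(V(t)=\sqrt{2}B_1(t)\) we have \(Z(0)=1\), and we assume (as for the usual tilted definition of \(\mu \)) that \(\Theta \) can then be taken equal in law to Z. Since \(RZ(t)>1\) iff \(t^2-\sqrt{2}Nt-\ln R<0\), this gives \(\epsilon _0(Y)=\sqrt{2N^2+4\ln R}\) with N standard normal and \(\ln R\) unit exponential. The Python sketch below (standard library only; hypothetical function names) averages \(\mathbb {I}(\epsilon _0(Y)>x)/\epsilon _0(Y)\) and reports a standard error; here \(\mathbb {E}\{\epsilon _0(Y)^{-2}\}=1/2\), so the plain Monte Carlo average has finite variance.

```python
import math
import random
from typing import Tuple


def berman_h1_closed_form(x: float) -> float:
    """Closed form (3.2) for V = sqrt(2) B_1: sqrt(2) * phi(x / sqrt(2)) = exp(-x^2 / 4) / sqrt(pi)."""
    return math.exp(-x * x / 4.0) / math.sqrt(math.pi)


def berman_h1_monte_carlo(x: float, num_samples: int = 100_000, seed: int = 3) -> Tuple[float, float]:
    """Monte Carlo estimate of E{1(eps > x) / eps} with eps = sqrt(2 N^2 + 4 ln R).

    Returns the sample mean and its standard error.
    """
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(num_samples):
        n = rng.gauss(0.0, 1.0)
        log_r = rng.expovariate(1.0)  # ln R ~ Exp(1) for a 1-Pareto R
        eps = math.sqrt(2.0 * n * n + 4.0 * log_r)
        value = 1.0 / eps if eps > x else 0.0  # eps > x >= 0 also rules out division by zero
        total += value
        total_sq += value * value
    mean = total / num_samples
    variance = max(total_sq / num_samples - mean * mean, 0.0)
    return mean, math.sqrt(variance / num_samples)


if __name__ == "__main__":
    for x in (0.0, 1.0, 2.0, 4.0):
        estimate, std_err = berman_h1_monte_carlo(x)
        print(f"x={x}: MC {estimate:.4f} +/- {1.96 * std_err:.4f}, closed form {berman_h1_closed_form(x):.4f}")
```

For \(H<1\) no closed form for \(\epsilon _0(Y)\) is available, and the same average has to be taken over sojourns computed from simulated paths.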

In the rest of this section we focus on the log-Gaussian case with \(d=1\). In view of (3.2), for some finite positive constant C

The next result gives logarithmic bounds for \(\mathcal { B}_{ Z }^\delta (x)\) as \(x\rightarrow \infty \), which support this hypothesis.

Proposition 3.4

Suppose that \(d=1\) and V satisfies A1-A2. Then

and

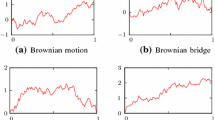

Fig. 1 \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\) as a function of \(\delta \in [0,2]\) for \(H\in \{0.5, 0.9\}\), and the upper bound (2.17) with \(p=1\) for Berman constants as a function of \(x\in [1,10]\) for \(H=0.5\), \(\delta =1\) and \(H=0.9\), \(\delta =10\), where \(V(t)=\sqrt{2}B_H(t)\)

Remark 3.5

(i) If we suppose additionally that \(\sigma _V^2\) is regularly varying at \(\infty \) with index \(\alpha >0\), then it follows from Proposition 3.4 that

$$\begin{aligned} - \frac{1}{2^{\alpha }}\leqslant \liminf _{x\rightarrow \infty }\frac{\ln (\mathcal { B}_{ Z }^\delta (x))}{\sigma ^2_V(x)}\leqslant \limsup _{x\rightarrow \infty }\frac{\ln (\mathcal { B}_{ Z }^\delta (x))}{\sigma ^2_V(x)}\leqslant -\frac{3-2\sqrt{2}}{2^{\alpha +1}}. \end{aligned}$$

(ii) It follows from the proof of Proposition 3.4 that under A1-A2

$$\begin{aligned} - \frac{1}{2}\leqslant \liminf _{x\rightarrow \infty }\frac{\ln (\mathbb {P}{\left\{ \epsilon _\delta (Y)>x\right\} })}{\sigma ^2_V(x/2)}\leqslant \limsup _{x\rightarrow \infty }\frac{\ln (\mathbb {P}{\left\{ \epsilon _\delta (Y)>x\right\} })}{\sigma ^2_V(x/2)}\leqslant -\frac{3-2\sqrt{2}}{2}. \end{aligned}$$

Example 3.6

Let \(V(t)=\sqrt{2}B_H(t)\) with \(H\in (0,1]\), i.e., \(\sigma ^2_V(t)=2|t|^{2H}\). Then \(\mathbb {E}{\left\{ \epsilon _0(Y)\right\} }=\frac{4^{1/(2H)+0.5}\,\Gamma (1/(2H)+0.5)}{\sqrt{\pi }}\), see Dȩbicki et al. (2019). For \(\delta >0\) we use (2.18) to compute \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\), see Table 1. The graph of \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\) as a function of \(\delta \) and the upper bound (2.17) with \(p=1\) for Berman constants as a function of \(x\in [1,10]\) are presented in Fig. 1.
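A minimal sketch of the computation behind Table 1, assuming \(d=1\) and \(V=\sqrt{2}B_H\), so that \(\sigma _V(t)/2=|t|^H/\sqrt{2}\). For \(\delta >0\), (2.18) is the lattice sum \(2\delta \sum _{k\in \mathbb {Z}}\Psi (\sigma _V(k\delta )/2)\), truncated here once the terms fall below a tolerance of our choosing. For \(\delta =0\) the function returns \(4^{1/(2H)+1/2}\Gamma (1/(2H)+1/2)/\sqrt{\pi }\), which is what the substitution \(u=|t|^H/\sqrt{2}\) in (2.18) gives; it equals \(4/\sqrt{\pi }\) for \(H=1\) and 4 for \(H=1/2\). The helper names are hypothetical, and the bound (2.17) with \(p=1\) is simply \(\mathbb {E}\{\epsilon _\delta (Y)\}/x^2\).

```python
import math


def psi(x: float) -> float:
    """Survival function of the standard normal distribution."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def mean_sojourn_fbm(delta: float, hurst: float, tol: float = 1e-12) -> float:
    """E{eps_delta(Y)} via (2.18) for d = 1 and V = sqrt(2) B_H, i.e. sigma_V(t) / 2 = |t|^H / sqrt(2).

    delta > 0: lattice sum 2 * delta * sum_{k in Z} Psi(sigma_V(k * delta) / 2), stopped once a
    term is below tol (slow for small H, where the terms decay slowly).
    delta == 0: closed form 4^(1/(2H) + 1/2) * Gamma(1/(2H) + 1/2) / sqrt(pi).
    """
    if delta == 0.0:
        a = 1.0 / (2.0 * hurst) + 0.5
        return 4.0 ** a * math.gamma(a) / math.sqrt(math.pi)
    total = psi(0.0)  # the k = 0 term, Psi(0) = 1/2
    k = 1
    while True:
        term = psi((k * delta) ** hurst / math.sqrt(2.0))
        total += 2.0 * term  # k and -k give the same term
        if term < tol:
            return 2.0 * delta * total
        k += 1


def markov_bound(x: float, delta: float, hurst: float) -> float:
    """Upper bound (2.17) with p = 1: B_Z^delta(x) <= E{eps_delta(Y)} / x^2."""
    return mean_sojourn_fbm(delta, hurst) / (x * x)


if __name__ == "__main__":
    for hurst in (0.5, 0.9):
        print(hurst, [round(mean_sojourn_fbm(d, hurst), 4) for d in (0.0, 0.5, 1.0, 2.0)])
```

Letting \(\delta \downarrow 0\) in the lattice sum recovers the closed form, which gives a cheap consistency check of the two formulas.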

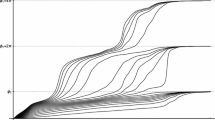

We simulated the Berman constant \(\mathcal { B}_{ Z } (x)\) using the estimator (2.15) for different x and H; see Table 2. In our simulation we generated \(N=20000\) trajectories by means of the Davies-Harte algorithm on the interval \([-64, 64]\) with step \(e=1/2^9=0.001953125\). Since for \(H<0.5\) the sample paths of fractional Brownian motion are very rough due to the negative correlation of the increments, the simulation cannot be trusted for H close to 0, and we estimated the Berman constant only for \(H\ge 0.4\) (see the half-width of the 95% confidence intervals in Table 2). Note that the estimator (2.15) for \(x=0\) differs from the estimator of the Pickands constant in Dieker and Yakir (2014); our simulation results for \(x=0\) can be compared with the results of Dieker and Yakir (2014) for the Pickands constant.

Example 3.7

Let \(X(t), t\in \mathbb {R}\) be a stationary Ornstein-Uhlenbeck process, i.e., a centered Gaussian process with covariance \(\mathbb {E}{\left\{ X(t) X(s)\right\} }=\exp (-|t-s|),s,t\in \mathbb {R}\). Then the random process

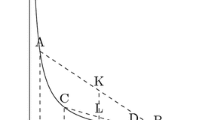

is Gaussian with stationary increments and variance \(\sigma ^2_V(t)=4(|t|+e^{-|t|}-1)\). Using (2.15) we simulated the Berman constant for \(\delta =0\) and different x, see Table 3, and for \(x=0\) and \(\delta \in \{0, 0.1, 0.2, 0.5, 1,2,5, 10\}\), see Table 4. We generated \(N=20000\) trajectories with step \(e=10^{-5}\) on the interval \([-15, 15]\). In Fig. 2 we graphed \(\mathcal { B}_{ Z }(x)\) and \(\frac{\ln (\mathcal { B}_{ Z }(x))}{\sigma _V^2(x/2)}\) as functions of x; the ratio appears to stabilise around \(-0.4\). Note that according to Remark 3.5 it should be between \(-0.5\) and \(-0.04289322\).

Using (2.18) we computed \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\) and \(1/\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\) for \(\delta \in \{0,0.1,0.2, 0.5,1,2,5,10\}\), see Table 5. The graph of the lower bound \(1/\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }\) of \(\mathcal { B}^{\delta }_{ Z }(0) \) for the integrated Ornstein-Uhlenbeck process as a function of \(\delta \in [0,10]\) is given in Fig. 2. The value of the constant \(\mathcal {B}_{ Z }(0)\) for the integrated Ornstein-Uhlenbeck process with the same parameters as here was simulated in Dȩbicki et al. (2003), resulting in the value 0.528.
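The same lattice sum handles the integrated Ornstein-Uhlenbeck case. The Python sketch below assumes \(d=1\) and \(\sigma ^2_V(t)=4(|t|+e^{-|t|}-1)\), so that \(\sigma _V(t)/2=(|t|+e^{-|t|}-1)^{1/2}\), and returns the lower bound \(1/\mathbb {E}\{\epsilon _\delta (Y)\}\) for \(\mathcal {B}^\delta _Z(0)\). For \(\delta =0\) the integral in (2.18) is replaced by a Riemann sum with a small step of our choosing, so that value is an approximation rather than an exact one; the function names are hypothetical.

```python
import math


def psi(x: float) -> float:
    """Survival function of the standard normal distribution."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def half_sigma_iou(t: float) -> float:
    """sigma_V(t) / 2 for sigma_V^2(t) = 4(|t| + exp(-|t|) - 1), the integrated OU case."""
    s = abs(t)
    return math.sqrt(max(s + math.expm1(-s), 0.0))  # expm1 keeps accuracy for small |t|


def mean_sojourn_iou(delta: float, tol: float = 1e-12) -> float:
    """E{eps_delta(Y)} via (2.18) for d = 1 as the lattice sum 2 * delta * sum_k Psi(sigma_V(k delta) / 2)."""
    if delta <= 0.0:
        raise ValueError("delta must be positive; approximate delta = 0 by a small delta")
    total = psi(0.0)
    k = 1
    while True:
        term = psi(half_sigma_iou(k * delta))
        total += 2.0 * term  # k and -k give the same term
        if term < tol:
            return 2.0 * delta * total
        k += 1


def pickands_lower_bound(delta: float, fine_step: float = 1e-3) -> float:
    """Lower bound 1 / E{eps_delta(Y)} for B_Z^delta(0); delta = 0 uses a Riemann sum with step fine_step."""
    return 1.0 / mean_sojourn_iou(delta if delta > 0.0 else fine_step)


if __name__ == "__main__":
    for delta in (0.0, 0.1, 0.2, 0.5, 1.0, 2.0, 5.0, 10.0):
        print(delta, round(pickands_lower_bound(delta), 4))
```

Since a lower bound cannot exceed the constant itself, the value for \(\delta =0\) should stay below the simulated value 0.528 quoted above, which is a simple plausibility check of the computation.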

4 Further Results and Proofs

Let in the following \(X(t),t\in \mathbb {R}^d\) be a max-stable stationary rf with càdlàg sample paths and spectral rf Z as in Theorem 2.1 and define \(\Theta \) as in (2.9). Define \(Y(t)=R \Theta (t),t\in \mathbb {R}^d\) with R a 1-Pareto rv (with survival function \(1/x, x\geqslant 1 \)) independent of \(\Theta \) and set \(M_{Y,\delta }= \sup _{t\in \delta \mathbb {Z}^d} Y(t)\). Note in passing that

since \(M_{Y, \delta } \geqslant R \Theta (0)> 1\) almost surely by the assumption on R and by the definition \(\mathbb {P}{\left\{ \Theta (0)=1\right\} }=1\).

Recall that \(S_\delta =S_\delta (Z)= \int _{\delta \mathbb {Z}^d} Z (t) \lambda _\delta (dt)\). In view of (2.4) we have that \(\mathbb {P}{\left\{ S_0>0\right\} }=1\). In the following, for any fixed \(\delta \geqslant 0\) (but not simultaneously for two different \(\delta \)’s) we shall assume that \(S_\delta >0\) almost surely, i.e., Z is such that \(\mathbb {P}{\left\{ \sup _{t\in \delta \mathbb {Z}^d} Z(t)> 0\right\} }=1\). Such a choice of Z is possible in view of Hashorva (2021, Lem 7.3).

A functional \(F:D \rightarrow [0,\infty ]\) is said to be shift-invariant if \(F(f(\cdot -h) )= F(f(\cdot ))\) for all \(h\in \mathbb {R}^d\) and all \(f\in D\).

We first state two lemmas and then give the postponed proofs.

Lemma 4.1

If \(\mathbb {P}{\left\{ S_0 < \infty \right\} }=1\), then \(\mathbb {P}{\left\{ S_\delta < \infty \right\} }=1, \delta >0\) and for all \(x>0\)

Moreover \(\mathbb {P}{\left\{ M_{Y, \delta }< \infty \right\} }=1\).

Proof of Lemma 4.1

In view of (2.4) \(S_0>0\) almost surely. By Dombry and Kabluchko (2017, Thm 3), the assumption that \(S_0< \infty \) almost surely is equivalent to \(Z(t) \rightarrow 0\) almost surely as \(\Vert t \Vert \rightarrow \infty \), with \(\Vert \cdot \Vert \) some norm on \(\mathbb {R}^d\). Hence \(S_\delta < \infty \) almost surely also follows from Dombry and Kabluchko (2017, Thm 3). By the definition of \(\Theta \) and the fact that \(\mathbb {P}{\left\{ S_0(Z) \in (0,\infty )\right\} }=1\) we have

implying that \(\mathbb {P}{\left\{ \lim _{\Vert t \Vert \rightarrow \infty } \Theta (t) =0\right\} }=1\). Consequently, \(\mathbb {P}{\left\{ \lim _{\Vert t \Vert \rightarrow \infty } Y(t) =0\right\} }=1\) and hence the claim follows. \(\square \)

Below we interpret \(\infty \cdot 0\) and 0/0 as 0. The next result is a minor extension of Soulier (2022, Lem 2.7).

Lemma 4.2

If \(\mathbb {P}{\left\{ S_0<\infty \right\} }=1\), then for every measurable shift-invariant functional F and all \( \delta ,x\) non-negative

Proof of Lemma 4.2

For every measurable functional \(F: D \rightarrow [0,\infty ]\) and all \( x> 0\)

is valid for all \(h \in \mathbb {R}^d\) with \(B^h Y(t)= Y(t-h), h,t\in \mathbb {R}^d\). Note in passing that \(B^h Y\) can be substituted by Y in the right-hand side of (4.4) if F is shift-invariant. The identity (4.4) is shown in Bladt et al. (2022); in the discrete setup it was first shown in Planinić and Soulier (2018) and Basrak and Planinić (2021), and for \(d=1\) in Soulier (2022).

Next, if \(x \in (0,1]\), since \(Y(0)=R> 1\) almost surely and by the assumption on the sample paths we have that \(\mathbb {P}{\left\{ \epsilon _\delta (Y/x)>0\right\} }=1,\) recall \(\mathbb {P}{\left\{ \Theta (0)=1\right\} }=1\). By Lemma 4.1 \(\mathbb {P}{\left\{ M_{Y, \delta } \in (1, \infty )\right\} }=1\), hence for all \(x> 1\) we have further that \( M_{Y, \delta } > x\) implies \(\epsilon _\delta (Y/x)>0\). Consequently, in view of (4.1) \(\epsilon _\delta (Y/x)/\epsilon _\delta (Y/x)\) is well defined on the event \(M_{Y, \delta }> x, x> 1\) and also it is well-defined for any \(x\in (0,1]\).

Recall that \(\lambda _\delta (dt)\) is the Lebesgue measure on \(\mathbb {R}^d\) if \(\delta =0\) and the counting measure multiplied by \(\delta ^d\) on \(\delta \mathbb {Z}^d\) if \(\delta >0\). Let us remark that for any shift-invariant functional F, the functional

is shift-invariant for all \(h\in \mathbb {R}^d\) if \(\delta =0\) and any shift \(h\in \delta \mathbb {Z}^d\) if \(\delta >0\). Thus applying the Fubini-Tonelli theorem twice and (4.4) with functional \(F^*\) we obtain for all \(\delta \geqslant 0,x>0\)

hence the proof follows. \(\square \)

Proof of Theorem 2.1

Let \(\delta \geqslant 0\) be fixed and consider for simplicity \(d=1\). By the assumption we have \(\mathbb {E}{\left\{ \sup _{t\in [0,T]} Z (t)\right\} }\!<\! \infty \) for all \(T\!>\!0\). Since we assume that \(\mathbb {P}{\left\{ \sup _{t \in \mathbb {R}} Z(t)\!>\!0\right\} }\!=\!1\), then \(\mathbb {P}{\left\{ S_0\!>\!0\right\} }=1\). Using the assumption we have \(\mathbb {P}{\left\{ S_\eta < \infty \right\} }>0\) for all \(\eta \geqslant 0\) and thus by (2.3) we obtain

Since also for any \(M>0\) and \(\eta >0\)

we conclude as above that for all \(\eta \geqslant 0\)

Next, for any \(x\geqslant 0\) and \(\eta \geqslant 0\)

where the last claim follows from Dȩbicki et al. (2022, Cor 2.1). We shall assume that \(S_\delta >0\) almost surely (this is possible as mentioned at the beginning of this section). For notational simplicity we consider next \(\delta =0\). For any \(M>0, T> 2M\) by the Fubini-Tonelli theorem and (2.3)

Thus we obtain

where \({\mathcal {H}^{{0}}_{ Z }}\) is the Pickands constant, see Dȩbicki et al. (2022, Prop 2.1) for the last formula.

Let us consider the second term

Further, assuming for simplicity that T is a positive integer we get

where the last convergence follows from (4.5). In the same way we show that \(L_{M,T}\rightarrow 0\) as \(M\rightarrow \infty \), which establishes the proof.

We prove next the second claim. In view of Dȩbicki et al. (2022, proof of Prop 2.1) almost surely for all \(\delta ,\eta \in [0,\infty )\)

Consequently, for any \(\delta ,\eta , x\) non-negative

We proceed next with the case \(\delta =0\), the other case follows with the same argument where it is important that \(\eta =k\delta \) for the shift transformation. Taking \(\delta =0, \eta >0\) we have

where we used (2.3) with \(h=r-v\) to obtain the second last equality above and (2.6) to get the last equality, hence the proof follows. \(\square \)

Proof of Corollary 2.3

Given \(x\geqslant 0\) consider the representation (2.5)

By the monotonicity in x of the function

in order to show the continuity of \(\mathcal {B}_{ Z }^0(x)\) it suffices to prove that

for almost all \(s>0\). Let us define the following measurable sets

Since Z has almost surely continuous trajectories we have \(A_s\cap A_{s'}=\emptyset \) if \(0<s<s'\) and \(x>0\). Hence there are at most countably many \(s>0\) such that \(\mathbb {P}{\left\{ A_s\right\} }>0\): otherwise, for some \(n\in \mathbb {N}\) there would be infinitely many pairwise disjoint sets \(A_s\) with \(\mathbb {P}{\left\{ A_s\right\} }>1/n\), so that \(\sum \mathbb {P}{\left\{ A_s\right\} }=\infty \), which is impossible. Thus we get (4.8) for almost all \(s>0\). The continuity at \(x=0\) follows from the right continuity of (4.7). \(\square \)

Proof of Lemma 2.4

Item (i): In view of (2.5) and substituting \(\Theta (t)=Q_\delta (t)/ S_\delta (\Theta )\) to (2.10) we get

Since \(S_\delta (Q_\delta )=1\) the claim follows.

Item (ii): If \( \mathbb {P}{\left\{ S_0< \infty \right\} } > 0\) we can define \(V(t)= Z(t) |{ S_0 < \infty }\) and set (recall \(S_0=S_0(Z), \mathbb {E}{\left\{ Z (0)\right\} }=1\))

For this choice of b by (2.3) we have

for all \(t\in \mathbb {R}\). Clearly, \(\mathbb {P}{\left\{ \sup _{t\in \mathbb {R}^d} V(t)>0\right\} } =1\). In view of Dombry and Kabluchko (2017) V is the spectral rf of a stationary max-stable rf \(X_*\) with càdlàg sample paths and moreover \(S_0(V)=\int _{\mathbb {R}^d} V (t) \lambda (dt)<\infty \) almost surely. In view of Dȩbicki et al. (2022, proof of Prop. 2.1) we have that

almost surely for all \(\delta >0\). Consequently, we obtain for all \(\delta >0\)

establishing the proof. \(\square \)

Proof of Theorem 2.5

Assume first that \(\mathbb {P}{\left\{ S_0< \infty \right\} }=1\). In view of (4.1) we have that \(\epsilon _\delta < \infty \) almost surely, hence as in Kulik and Soulier (2020), and Soulier (2022) where \(d=1\) is considered it follows that (2.12) holds with

with \(c=1\) if \(\delta =0\) and \(c=\delta ^d \) otherwise. Set below \(Q_\delta = \bar{Q}/c\) and for simplicity omit the subscript below writing simply \(M_Y\) instead of \(M_{Y,\delta }\). Since \(Y(t)/M_Y \leqslant 1\) almost surely for all \(t\in \delta \mathbb {Z}^d\) and \(\mathbb {P}{\left\{ M_Y\in (1,\infty )\right\} }=1\), in view of Lemma 2.4 we have using further the Fubini-Tonelli theorem and Lemma 4.2

The last equality follows from (recall \(M_Y\in (1,\infty )\) almost surely)

In view of (4.1) for all x non-negative such that \(\mathbb {P}{\left\{ \epsilon _\delta (Y)>x\right\} }>0\) we have that \(\mathcal { B}_{ Z }^\delta (x) \in (0,\infty )\), hence the proof follows.

Assume now that \(\mathbb {P}{\left\{ S_0< \infty \right\} }\in (0,1)\). In view of Lemma 2.4 we have

with \(V(t)= Z(t)|S_0 < \infty \), which is well-defined since \(\mathbb {P}{\left\{ S_0<\infty \right\} }>0\) by the assumption. Since \(S_0(V)< \infty \) almost surely and \( Y_*(t) =Y(t) |S_0(\Theta )< \infty ,t\in \mathbb {R}\) by the proof above

In view of Soulier (2022, Lem 2.5, Cor 2.9) and Hashorva (2021, Thm 3.8) and the above

and thus \(\epsilon _\delta (R \Theta )< \infty \) implies \(S_0< \infty \) almost surely. Hence the proof is complete. \(\square \)

Proof of Corollary 2.6

In view of (), the representation (2.15) and the finiteness of \(\mathcal {B}_Z^0(x)\) for all \(x\geqslant 0\), the monotone convergence theorem yields for all \(x_0\geqslant 0\)

consequently, since by our assumption Lemma 4.1 implies \(\mathbb {P}{\left\{ \epsilon _0 (Y ) \in (0,\infty )\right\} }=1\), then

follows establishing the claim. \(\square \)

Proof of Proposition 2.7

In order to prove (2.16) note first that for any non-negative rv U with df G and \(x\geqslant 0 \) such that \(\mathbb {P}{\left\{ U>x\right\} }>0\)

Consequently, we obtain for all \(x>0\)

establishing the proof of the lower bound (2.16). The proof of the upper bound follows from the fact that

where F is the distribution of \(\epsilon _\delta (Y)\). This completes the proof. \(\square \)

Proof of Proposition 3.1

Since \(\mathcal { B}_{ Z }^\delta (0)\) is the generalised Pickands constant \(\mathcal {H}_{ Z }^\delta \), the claim follows for \(x=0\) from Dȩbicki et al. (2022). In view of (2.14) we can assume without loss of generality that \(\mathbb {P}{\left\{ S_0 < \infty \right\} }=1\). Under this assumption, from the proof of Lemma 4.1 we have that \(Y(t)\rightarrow 0\) almost surely as \(\Vert t \Vert \rightarrow \infty \). Hence, almost surely, there exists a (random) M such that \(Y(t) < 1\) for all t with \(\Vert t \Vert > M\). Consequently, for all \(\delta \geqslant 0\)

Moreover, \(\epsilon _\delta (Y)< \infty \) almost surely for all \(\delta \geqslant 0\) implying \(\epsilon _\delta (Y) \rightarrow \epsilon _0(Y)\) almost surely as \(\delta \downarrow 0\). In view of Soulier (2022, Lem. 2.5, Cor. 2.9) and Hashorva (2021, Thm 3.8) for all \(\delta \geqslant 0\)

Applying Dȩbicki et al. (2022, Thm 2) and (4.9) yields

Hence \(1/\epsilon _{\delta }(Y), \delta >0 \) is uniformly integrable and therefore

establishing the proof. \(\square \)

4.1 Proof of Theorem 3.2

Suppose that \(V(t), t\in \mathbb {R}^d\) is a centered Gaussian field with stationary increments and variance function \(\sigma ^2_V(\cdot )\) that satisfies A1-A2. Then, by stationarity of increments \(\sigma ^2_V(\cdot )\) is negative definite, which combined with Schoenberg’s theorem, implies that for each \(u>0\)

is positive definite, and thus a valid covariance function of some centered stationary Gaussian rf \(X_u(t), t\in \mathbb {R}^d\), where \(s-t\) is meant component-wise. The proof of Theorem 3.2 is based on the analysis of the asymptotics of sojourn time of \(X_u(t)\). Since the idea of the proof is the same for continuous and discrete scenario, in order to simplify notation, we consider next only the case \(\delta =0\).

Before proceeding to the proof of Theorem 3.2, we need the following lemmas, in which \(Z(t)=\exp \left( V(t)-\frac{\sigma ^2_V(t)}{2}\right) \) is as in Remark 2.2, Item (iii).

Lemma 4.3

For all \(T>0\) and \(x\geqslant 0\):

(i)

$$\begin{aligned} \lim _{u\rightarrow \infty }\frac{\mathbb {P}{\left\{ \int _{[0,T]^d } \mathbb {I}{\left\{ X_u(t)>u\right\} } dt>x \right\} }}{\Psi (u)}=\mathcal {B}_Z([0,T]^d,x). \end{aligned}$$

(ii)

$$\begin{aligned}&\lim _{u\rightarrow \infty }\frac{\mathbb {P}{\left\{ \int _{[0,\ln (u)]^d } \mathbb {I}{\left\{ X_u(t)>u\right\} } dt>x \right\} }}{(\ln (u))^d\Psi (u)}=\mathcal {B}_Z(x),\\&\lim _{T\rightarrow \infty }\frac{\mathcal {B}_Z([0,T]^d,x)}{T^d}=\mathcal {B}_Z(x)\in (0,\infty ). \end{aligned}$$

Proof of Lemma 4.3

Item (i) follows straightforwardly from Dȩbicki et al. (2023, Lem. 4.1). The first claim in Item (ii) follows by an application of the double-sum technique to the sojourn functional, as demonstrated, e.g., in Dȩbicki et al. (2023, Prop. 3.1). The second claim in Item (ii) follows by the same argument as its counterpart in Dȩbicki et al. (2023, Lem. 4.2). \(\square \)

The following lemma is a slight modification of Piterbarg (1996, Lem. 6.3), adapted to the family \(X_u,\ u>0\). Let \({\textbf {i}}=(i_1,\ldots ,i_d)\), with \(i_1,\ldots ,i_d\in \{0,1,2,\ldots \}\), \(\mathcal {R}_{{\textbf {i}}}:=\prod _{k=1}^d [i_k T,(i_k+1)T]\) and

Lemma 4.4

There exists a constant \(C\in (0,\infty )\) such that, for sufficiently large u and all \({\textbf {i}},{\textbf {j}}\in \widehat{\mathcal {K}}\) with \({\textbf {i}}\ne {\textbf {j}}\), we have

Proof of Theorem 3.2

The proof consists of two steps, in which we derive asymptotic upper and lower bounds for the ratio

as \(u\rightarrow \infty \). We note that, by Lemma 4.3, the limit of the above ratio as \(u\rightarrow \infty \) is positive and finite.

Asymptotic upper bound. If \(T>0\), then for sufficiently large u

where \(\lceil \cdot \rceil \) is the ceiling function and the last inequality above follows from the stationarity of \(X_u\). Using the stationarity of \(X_u\) once more, we obtain

Next, by Lemma 4.4, for sufficiently large T, u and some \(\text {Const}_{0}>0\)

The upper bound for \(\Sigma _1\) follows by an argument similar to the one used in the proof of Piterbarg (1996, Lem. 6.3); thus we explain only the main steps. For the moment, consider the following probability

Then, for each \(\varepsilon >0\) and sufficiently large T, u,

where the above inequality follows by Lemma 4.4 and

which is a consequence of the stationarity of \(X_u\) and statement (i) of Lemma 4.3 applied with \(x=0\). Again, by the stationarity of \(X_u\), a bound as in (4.14) holds uniformly for all the summands in \(\Sigma _1\).

Applying the bounds (4.12), (4.13) and (4.14) to (4.10) leads to the following upper estimate

which is valid for all \(\varepsilon >0\) and T sufficiently large.

Asymptotic lower bound. Taking \(T>0\), for sufficiently large u

where in (4.16) we used the Bonferroni inequality.

Using \(\check{\mathcal {K}}\subset \widehat{\mathcal {K}}\) together with the upper bound for

derived in (4.11), we conclude that for each T sufficiently large and \(\varepsilon >0\),

Thus, by statement (ii) of Lemma 4.3 combined with (4.15) and (4.19), and since \(\varepsilon \) can take any value in (0, 1), we arrive at

for all \(\lambda \in (0,1)\), establishing the proof. \(\square \)
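The lower bound rests on the Bonferroni inequality \(\mathbb {P}{\left\{ \bigcup _i A_i\right\} }\geqslant \sum _i \mathbb {P}{\left\{ A_i\right\} }-\sum _{i<j}\mathbb {P}{\left\{ A_i\cap A_j\right\} }\), while the upper bound uses the union bound \(\mathbb {P}{\left\{ \bigcup _i A_i\right\} }\leqslant \sum _i \mathbb {P}{\left\{ A_i\right\} }\); in the double-sum method the pairwise terms are the ones that must be shown to be negligible. The Python sketch below checks both inequalities exactly on a finite probability space with equally likely outcomes. The events are generic random sets chosen for illustration, not the sojourn events over the cubes \(\mathcal {R}_{{\textbf {i}}}\) used in the proof, and the function name is hypothetical.

```python
import itertools

import numpy as np


def bonferroni_bounds(events):
    """events: boolean array of shape (m, N); row i is the event A_i over N equally likely outcomes.

    Returns (P(union), first-order sum S1, second-order Bonferroni lower bound S1 - S2).
    """
    p_union = events.any(axis=0).mean()
    s1 = events.mean(axis=1).sum()
    s2 = sum(
        (events[i] & events[j]).mean()  # P(A_i and A_j) for each pair i < j
        for i, j in itertools.combinations(range(events.shape[0]), 2)
    )
    return p_union, s1, s1 - s2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ev = rng.random((5, 10_000)) < 0.05  # five rare events, as for high-level exceedances
    p, s1, lower = bonferroni_bounds(ev)
    print(f"{lower:.4f} <= {p:.4f} <= {s1:.4f}")
```

For rare, weakly dependent events the two bounds are close, which is the regime in which the double-sum method produces matching upper and lower asymptotics.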

Proof of Proposition 3.4

The idea of the proof is to derive asymptotic upper and lower bounds for

as \(x\rightarrow \infty \) and then to apply Proposition 2.7. To simplify the notation, we consider only the case \(\delta =0\). Let \(Z(t)=V(t)-\sigma ^2_V(t)/2, t\in \mathbb {R}\), with V a centered Gaussian process with stationary increments that satisfies A1-A2, and let \(\mathcal {W}\) be an exponentially distributed rv with parameter 1, independent of V.

Logarithmic upper bound. Let \(A\in (0,1/2)\). We begin with the observation that

where in (4.20) we used that \(\{V(-t),t\geqslant 0\}{\mathop {=}\limits ^{d}} \{V(t),t\geqslant 0\} \) and the assumption that \(\sigma ^2_V\) is increasing. Next, by A1, for sufficiently large x and \(s,t\geqslant x/2\) such that \(|t-s|\leqslant 1\)

where Z is some centered stationary Gaussian process. Hence, by Slepian's inequality (see, e.g., Adler (1990, Cor. 2.4))

and by the Landau-Shepp theorem (see, e.g., Adler (1990, Eq. (2.3))), uniformly with respect to k

The above implies that

Thus, to optimize the value of A in (4.21), it now suffices to solve

which leads to (recall that \(A<1/2\))

Hence

which, combined with (2.16) in Proposition 2.7, completes the proof of the logarithmic upper bound.
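The Landau-Shepp theorem used above states that for an almost surely bounded centred Gaussian process X with maximal variance \(\sigma ^2\), \(\lim _{u\rightarrow \infty }u^{-2}\ln \mathbb {P}{\left\{ \sup X>u\right\} }=-1/(2\sigma ^2)\). The Python sketch below illustrates this rate for standard Brownian motion on [0, 1] (so \(\sigma ^2=1\)), for which the reflection principle gives the exact tail \(\mathbb {P}{\left\{ \sup _{t\in [0,1]}B(t)>u\right\} }=2(1-\Phi (u))\). This process is our illustrative choice, not the one appearing in the proof, and the function names are hypothetical.

```python
import math


def bm_sup_tail(u):
    """Exact P(sup_{t in [0,1]} B(t) > u) = 2(1 - Phi(u)) = erfc(u / sqrt(2)) (reflection principle)."""
    return math.erfc(u / math.sqrt(2.0))


def landau_shepp_ratio(u):
    """u^{-2} * log P(sup B > u); Landau-Shepp predicts the limit -1/(2 sigma^2) = -1/2 here."""
    return math.log(bm_sup_tail(u)) / u ** 2


if __name__ == "__main__":
    # Illustrative process (our choice): standard Brownian motion on [0, 1], sigma^2 = 1.
    for u in (2.0, 5.0, 10.0, 20.0, 30.0):
        print(f"u = {u:4.1f}   u^-2 log P = {landau_shepp_ratio(u):.5f}")
```

The ratio approaches \(-1/2\) slowly from below, because the polynomial prefactor of the Gaussian tail contributes a correction of order \(\ln (u)/u^2\).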

Logarithmic lower bound. Taking \(A> 1/2\), we have

Using that

and the fact that, by the stationarity of the increments of V,

we can apply the Borell inequality (see, e.g., Adler (1990, Thm 2.1)) uniformly to all the summands in (4.23) to obtain, for sufficiently large x (recall that \(\sigma ^{2}_V\) is assumed to be increasing),

as \(x\rightarrow \infty \).

Hence we arrive at

which, combined with (2.16) in Proposition 2.7 and the fact that \(\mathbb {E}{\left\{ \epsilon _\delta (Y)\right\} }<\infty \) (as follows from the proof of the logarithmic upper bound), implies

for all \(A>1/2\). This completes the proof. \(\square \)
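A standard form of the Borell inequality (cf. Adler (1990, Thm 2.1)) states that for an almost surely bounded centred Gaussian process X with maximal variance \(\sigma ^2\), \(\mathbb {P}{\left\{ \sup X>u\right\} }\leqslant \exp (-(u-\mathbb {E}{\left\{ \sup X\right\} })^2/(2\sigma ^2))\) for \(u>\mathbb {E}{\left\{ \sup X\right\} }\). The Python sketch below compares this bound with the exact tail for standard Brownian motion on [0, 1], where \(\sup B\) has the law of \(|N(0,1)|\), so that \(\mathbb {E}{\left\{ \sup B\right\} }=\sqrt{2/\pi }\) and \(\sigma ^2=1\). The example process and the function names are our illustrative choices.

```python
import math

# Illustrative process (our choice): E sup_{[0,1]} B = E|N(0,1)| = sqrt(2/pi), sigma^2 = 1.
MEAN_SUP = math.sqrt(2.0 / math.pi)


def bm_sup_tail(u):
    """Exact P(sup_{t in [0,1]} B(t) > u) = erfc(u / sqrt(2)) (reflection principle)."""
    return math.erfc(u / math.sqrt(2.0))


def borell_bound(u, mean_sup=MEAN_SUP, sigma2=1.0):
    """Borell bound exp(-(u - E sup X)^2 / (2 sigma^2)), stated for u > E sup X."""
    if u <= mean_sup:
        raise ValueError("the bound is stated for u > E sup X")
    return math.exp(-((u - mean_sup) ** 2) / (2.0 * sigma2))


if __name__ == "__main__":
    for u in (1.0, 2.0, 4.0, 8.0):
        print(f"u = {u:3.1f}   exact = {bm_sup_tail(u):.3e}   bound = {borell_bound(u):.3e}")
```

The bound is loose by a factor that grows polynomially in u, but it captures the Gaussian exponent \(-u^2/(2\sigma ^2)\) as \(u\rightarrow \infty \).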

Availability of Data and Material

The data obtained in the simulations and used for all the tables and graphs are available from the corresponding author.

References

Adler RJ (1990) An introduction to continuity, extrema, and related topics for general Gaussian processes. IMS Lecture Notes-Monograph Series, vol 12. Institute of Mathematical Statistics, Hayward, CA

Basrak B, Planinić H (2021) Compound Poisson approximation for regularly varying fields with application to sequence alignment. Bernoulli 27(2):1371–1408

Berman S (1982) Sojourns and extremes of stationary processes. Ann Probab 10(1):1–46

Berman S (1992) Sojourns and extremes of stochastic processes. The Wadsworth & Brooks/Cole Statistics/Probability Series. Wadsworth & Brooks/Cole Advanced Books & Software, Pacific Grove, CA

Bladt M, Hashorva E, Shevchenko G (2022) Tail measures and regular variation. Electron J Probab 27: Paper No. 64, 43 pp

de Haan L (1984) A spectral representation for max-stable processes. Ann Probab 12(4):1194–1204

Dȩbicki K (2005) Some properties of generalized Pickands constants. Teor Veroyatn Primen 50(2):396–404

Dȩbicki K, Hashorva E (2020) Approximation of Supremum of Max-Stable Stationary Processes & Pickands Constants. J Theoret Probab 33(1):444–464

Dȩbicki K, Hashorva E, Liu P, Michna Z (2023) Sojourn times of Gaussian related random fields. ALEA Latin Am J Probab Math Stat 20:249–289

Dȩbicki K, Hashorva E, Michna Z (2022) On the continuity of Pickands constants. J Appl Probab 59(1):187–201

Dȩbicki K, Michna Z, Peng X (2019) Approximation of Sojourn Times of Gaussian Processes. Methodol Comput Appl Probab 21(4):1183–1213

Dȩbicki K, Michna Z, Rolski T (2003) Simulation of the asymptotic constant in some fluid models. Stoch Models 19(3):407–423

Dieker AB, Yakir B (2014) On asymptotic constants in the theory of extremes for Gaussian processes. Bernoulli 20(3):1600–1619

Dombry C, Kabluchko Z (2017) Ergodic decompositions of stationary max-stable processes in terms of their spectral functions. Stochastic Process Appl 127(6):1763–1784

Falk M, Hüsler J, Reiss RD (2010) Laws of small numbers: extremes and rare events, 3rd edn. DMV Seminar, vol 23. Birkhäuser, Basel

Hashorva E (2018) Representations of max-stable processes via exponential tilting. Stochastic Process Appl 128(9):2952–2978

Hashorva E (2021) On extremal index of max-stable random fields. Lith Math J 61(2):217–238

Hüsler J, Piterbarg VI (2017) On shape of high massive excursions of trajectories of Gaussian homogeneous fields. Extremes 20(3):691–711

Janson S (2020) The space D in several variables: random variables and higher moments. Preprint at http://arxiv.org/abs/2004.00237

Kulik R, Soulier P (2020) Heavy tailed time series. Springer, Cham

Ling C, Zhang H (2020) On generalized Berman constants. Methodol Comput Appl Probab 22(3):1125–1143

Piterbarg VI (1996) Asymptotic methods in the theory of Gaussian processes and fields. Translations of Mathematical Monographs, vol 148. American Mathematical Society, Providence, RI. Translated from the Russian by V.V. Piterbarg, revised by the author

Planinić H, Soulier P (2018) The tail process revisited. Extremes 21(4):551–579

Soulier P (2022) The tail process and tail measure of continuous time regularly varying stochastic processes. Extremes 25(1):107–173

Varadarajan VS (1958) On a problem in measure-spaces. Ann Math Statist 29:1275–1278

Funding

Krzysztof Dȩbicki was partially supported by NCN Grant No 2018/31/B/ST1/00370 (2019-2023). Enkelejd Hashorva was partially supported by SNSF Grant 200021-196888.

Author information


Contributions

All authors contributed to the study conception and design. Material preparation, computer simulations and analysis were performed by all authors. The proofs were obtained by all authors during several joint research activities. In particular, all authors contributed to all the proofs and simulations. All authors read and approved the final manuscript.


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.


Cite this article

Dȩbicki, K., Hashorva, E. & Michna, Z. On Berman Functions. Methodol Comput Appl Probab 26, 2 (2024). https://doi.org/10.1007/s11009-023-10059-6
