Abstract

We introduce a flexible method to simultaneously infer both the drift and volatility functions of a discretely observed scalar diffusion. We introduce spline bases to represent these functions and develop a Markov chain Monte Carlo algorithm to infer, a posteriori, the coefficients of these functions in the spline basis. A key innovation is that we use spline bases to model transformed versions of the drift and volatility functions rather than the functions themselves. The output of the algorithm is a posterior sample of plausible drift and volatility functions that are not constrained to any particular parametric family. The flexibility of this approach provides practitioners a powerful investigative tool, allowing them to posit a variety of parametric models to better capture the underlying dynamics of their processes of interest. We illustrate the versatility of our method by applying it to challenging datasets from finance, paleoclimatology, and astrophysics. In view of the parametric diffusion models widely employed in the literature for those examples, some of our results are surprising since they call into question some aspects of these models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Diffusion processes have found wide application across the engineering, natural and social sciences (Kloeden and Platen 1992; van Zanten 2013). For instance, they have been successfully used in neuroscience to model the membrane potential of neurons (Lansky and Ditlevsen 2008), in molecular dynamics to model the angles between atoms evolving in a force field (Papaspiliopoulos et al. 2012), and in astrophysics to describe quasar variability over time (Kelly et al. 2009). Within econometrics they have modelled asset prices and interest rates, and have been used to price financial instruments (Karatzas and Shreve 1998a). Other applications include paleoclimatology, where they are used to model glacial cycles in energy balance models and variability in the intensity of El Niño and the Southern Oscillation (Imkeller and Monahan 2002).

In general all of these applications aim to infer from discrete temporal observations \(\mathcal {D} = \{v_{t_i}\}_{i=0}^N\) the coefficients of an underlying diffusion model, V, that is a Markov solution to the stochastic differential equation (SDE)

where b and \(\sigma \) are the drift and volatility coefficients.

If we restrict ourselves to parametric models for b and \(\sigma \) (i.e. the functional forms of the coefficients are known up to the value of a parameter vector \(\theta \)) then inference is still challenging. In particular, for a given parametrization the likelihood of the observations \(\mathcal {D}\) will typically not be known in closed form, and as a consequence a considerable literature has developed to tackle this problem. Within a frequentist paradigm, approaches include estimating functions (Bibby et al. 2010), maximization of approximate likelihood functions (Dacunha-Castelle and Florens-Zmirou 1986; Aït-Sahalia 2002, 2008),and simulation-based schemes (Pedersen 1995; Durham and Gallant 2002; Beskos et al. 2006b, 2009). In the Bayesian literature Roberts and Stramer (2001) proposed a Markov chain Monte Carlo (MCMC) approach using data-augmentation, also utilized by Bladt and Sørensen (2014), Golightly and Wilkinson (2008), Sermaidis et al. (2013), andvan der Meulen and Schauer (2017).

However, for many practical problems the functional form of b and \(\sigma \) are either unknown or disputed within the applied literature. Specification of a good finite-dimensional model is challenging, particularly outside the context of physical phenomena in the natural sciences. In finance for instance, finding realistic models is particularly difficult, as exemplified by disputes surrounding models for interest rates or stock prices (Bali and Wu 2006; Durham and Gallant 2002). In such a situation a non-parametric method of inference may be more attractive as we need make no restrictive assumptions about functional forms for the drift and diffusion coefficients. From the perspective of a practitioner these methods are particularly appealing as the functional form that arises can be used to identify a plausible and interpretable parametric family, or to gain direct insight about the dynamics of the underlying process.

The frequentist, non-parametric literature is dominated by kernel-type estimators. Examples include those of Banon (1978), Stanton (1997), locally linear smoothers with adaptive bandwidth (Spokoiny 2000), and estimators derived via penalized likelihood (Comte et al. 2007). Showing consistency and contraction rates of the estimators is, however, non-trivial (Dalalyan and Kutoyants 2002; Gobet et al. 2004; Tuan 1981; van Zanten 2001).

The literature on Bayesian, non-parametric inference for diffusions is not as well developed as its frequentist counterpart (see van Zanten 2013, for an over view), and to our knowledge methods of inference only for the drift coefficient have been described to date in the setting of low-frequency observations. In Papaspiliopoulos et al. (2012) the unknown drift function is equipped with a prior measure in function space, which is assumed to be Gaussian with mean 0 and covariance defined by a certain differential operator, and a data augmentation scheme (Roberts and Stramer 2001) is then used to compute an MCMC approximation to the posterior. Consistency results for this setting are shown by Pokern et al. (2013), and improved contraction rates are derived by van Waaij and van Zanten (2016). van der Meulen et al. (2014) proposed an algorithmic modification to the procedure of Papaspiliopoulos et al. (2012), using a different basis expansion for the drift function and employing random truncation of this expansion (with a truncation point equipped with a prior and explored with a reversible jump step). Contraction rates for this approach were derived in van der Meulen et al. (2018). Some additional references establishing consistency and contraction rates include (Gugushvili and Spreij 2014; Koskela et al. 2019; Nickl and Söhl 2017; Nickl and Ray 2020; van der Meulen and van Zanten 2013). Finally, Gugushvili et al. (2018) recently proposed a Bayesian method of inference of the diffusion coefficient for high frequency financial datasets.

In the Bayesian setting when dealing with real-world datasets of discretely observed diffusions, the simultaneous estimation of both b and \(\sigma \) in (1) is a challenging problem, and one which current Bayesian non-parametric approaches can not address. In this paper we propose a flexible Bayesian algorithm for simultaneous estimation of both drift and diffusion coefficients for discretely observed diffusions, without any restriction on the observation frequency, drawing on the strengths of both parametric and non-parametric paradigms. Our approach is parametrized in a way that is flexible in adapting to subtle patterns in the data, yet once a set of hyperparameters is fixed the model becomes parametric and consistency results and simplicity of implementation of the regular parametric approach apply. The method has the additional advantage that it avoids the need to work with a discretised version of (1). Substantial emphasis of our work is put into achieving an efficient, self-contained, and user-friendly algorithm for inference on the functional form of b and \(\sigma \) for SDEs. Visualization of the functional form of b and \(\sigma \) is a powerful investigative tool for practitioners, allowing them to better understand the dynamics of their process of interest, and give them insight as to what may be appropriate parametric models.

Our method has two crucial components. The first component is the introduction of a spline basis (De Boor 1978) to model (indirectly) b and \(\sigma \). Splines are compactly supported piece-wise polynomial bases which offer us a great deal of flexibility in modelling functions, and critically are able to capture their local behaviour. The second component is that a scalar diffusion V can be equivalently defined either via the pair of functions \((b(\cdot ),\sigma (\cdot ))\) or via \((A(\cdot ),\eta (\cdot ))\), where

\(\eta \) is commonly known as the Lamperti transformation (Lamperti 1964). In this paper we model the drift and volatility functions by expressing A and \(\eta \) in spline bases. The Lamperti transformation is crucial in avoiding potential issues of degeneracy in the methodology we subsequently develop. Briefly, the issue is as follows. Conceptually we are augmenting the parameter space, to be explored by an MCMC algorithm, with the path space which takes the entire sample path of the diffusion as a latent variable. However, the volatility coefficient is completely determined by this sample path via its quadratic variation, at least in the range of the path. Thus a Gibbs-type algorithm that attempts to alternate between updates of the sample path and updates of \(\sigma \) is ‘reducible’: the sample path allows for only one possible \(\sigma \) and \(\sigma \) can no longer be updated; neither can any new sample paths compatible with other choices for \(\sigma \) be proposed. One solution is to propose updates not to the latent sample path but to its underlying driving Brownian motion, which can be done without reference to any parameters determining \(\sigma \); the Lamperti transformation arises naturally in constructing the mapping between Brownian motion and the original diffusion. See Appendix and extensive discussion in Roberts and Stramer (2001) for further details. Once one accepts the need to parametrise V using \(\eta \), then A (or equivalently \(\alpha \)) is the only free function remaining.

An additional benefit of the parameterization in (2) is that it is possible to enforce monotonicity of \(\eta \) in the spline basis (via so-called I-splines), and thus guarantee \(\sigma \) be positive. In view of the definition of \(\eta \) as an integrated positive function this property is essential. Without this reparametrization, a direct spline representation of \(\sigma \) could become negative in some regions of the state space. As discussed in our methodological sections the transformation (2), together with the availability of easily computable bounds on functions built with splines, have the additional advantage that we can avoid any time-discretization of (1).

Within the framework of a spline basis representation of A and \(\eta \), we provide an MCMC algorithm for sampling from the posterior of the basis parameters, given \(\mathcal {D}\). We proceed via data-augmentation (Roberts and Stramer 2001; Sermaidis et al. 2013), alternately updating the basis parameters and the latent sample path connecting observations in \(\mathcal {D}\). Under conditions on b and \(\sigma \) which we show to hold in their implied spline representations, it is in fact possible to implement an algorithm using only a finite-dimensional surrogate for each sample path, circumventing the need to discretize the model.

We benchmark the performance of our algorithm and its accuracy in recovering the coefficients of a true, generating SDE on an illustrative example. We then apply the method to three real-world datasets: from finance, on the evolution of the short-term interest rate through three-month treasury bills; from paleoclimatology, looking at the fluctuations of historical temperatures on the Northern Hemisphere; and from astrophysics, examining quasar light variability. These showcase a broad range of potential applications. The results obtained on the financial dataset are largely in agreement with the conclusions drawn from the use of competing, frequentist methods. However, for the two other examples we show that the parametric models commonly used in the literature may need revisiting.

This paper is organized as follows: in Section 2 we present our flexible family of diffusions, together with our choice of spline bases; in Section 3 we then develop an MCMC algorithm (Algorithm 1) targeting the coefficients of the spline bases; in Section 4 we discuss a number of practical considerations in the implementation of Algorithm 1, including the particular choices of knots together with their location, and regularization with appropriate choices of prior; in Section 5 we consider our methodology applied to the broad range of real-world examples we discussed above; finally, in Section 6 we outline natural continuations of our work from both a methodological and application perspective. Technical details where appropriate are collated in the Appendix.

2 A Flexible Family of Diffusions

As noted in the introduction, we first apply the transformation (2) to represent \(b:\mathbbm {R}\rightarrow \mathbbm {R}\) and \(\sigma :\mathbbm {R}\rightarrow \mathbbm {R}_{\ge 0}\) as \(A:\mathbbm {R}\rightarrow \mathbbm {R}\) and \(\eta :\mathbbm {R}\rightarrow \mathbbm {R}_{\ge 0}\). We then represent A and \(\eta \) as follows:

where \(u = (u_1,\dots ,u_{M_{\theta }})\) and \(h = (h_1,\dots ,h_{M_{\xi }})\) are two, possibly distinct, sets of (twice-differentiable) basis functions, \(\theta \) and \(\xi \) are vectors of coefficients, and \(\bar{v}\in \mathbbm {R}\) is a free parameter used for centering \(\eta _{\xi }\) (more details about \(\bar{v}\) are given in Section 4.1). We take a Bayesian approach: given a choice of bases u and h and a prior \(\pi (\theta ,\xi )\) on the parameters of interest, our interest is in the posterior \(\pi (\theta ,\xi | \mathcal {D})\). We will develop an MCMC algorithm targeting this distribution.

An additional aim is to avoid any form of time-discretization of the SDE in (1), circumventing the need to analyse any introduced bias. Using a data-augmentation scheme constitutes one way to achieve this; however, as we shall see, there are a series of practical problems that first need to be overcome. In particular it must be possible to compute a series of quantities in closed form: the Jacobian of the Lamperti transformation, \(D_\xi =1/\sigma _\xi \); the integrand in the exponent of a Radon–Nikodym derivative of the law of diffusion X with respect to Wiener law, \(G_\theta :=\left( \alpha _\theta ^2+\alpha _\theta '\right) /2\); and global upper and lower bounds on \(G_\theta \). The first two are easy to derive with any choice of (sufficiently differentiable) basis functions and are given by

where \('\) applied to a vector denotes component-wise derivative. It is the need to compute global upper and lower bounds on \(G_\theta \) that substantially narrows down the possible choices for the basis u. On one hand, so long as all of \(u_i\) \((i=1,\dots , M_{\theta })\) have bounded first and second order derivatives, i.e. \(\Vert u'\Vert _\infty :=\sup \{ |u'_i(y)|;y\in \mathbbm {R}, i\in \{1,\dots ,M_{\theta }\}\}<\infty \) and \(\Vert u''\Vert _\infty <\infty \), it is always possible to bound \(G_\theta \) by:

On the other hand, the bounds above are almost always too crude and render the algorithm impossible to implement in practice. The choice of basis u is therefore dictated by the need for tight bounds on \(G_\theta \).

In this paper we propose to use splines as bases u and h. Splines address all of the issues discussed above. In particular, unlike general bases for which bounds on \(G_{\theta }\) need to be computed from (5) (and are thus limited by the computational issues that arise from the explosion of those bounds with the number of included basis terms), the maxima and minima of \(G_{\theta }\) can be efficiently identified in the spline basis. Ultimately this yields tight bounds regardless of the number of included basis functions, and offers more degrees of freedom than many alternatives. Additionally, it is possible to regularize splines, exerting direct control over the desired level of flexibility of \(A_{\theta }\) and \(\eta _{\xi }\) (see Section 4.2 for details). Finally, it is possible to directly equip any function expanded in a spline basis with a monotonicity property, substantially reducing the size of the function space for \(\eta _{\xi }\) a priori; this is crucial in light of (2) with \(\eta \) an integral of a positive function.

Naturally, a piecewise polynomial basis can be defined in many ways. Perhaps the most common family of splines are the so-called B-splines (De Boor 1978). B-splines are piecewise polynomial curves with compact support for which numerically efficient algorithms exist. For us they are a natural choice as we can simply choose the compact support to include the range of the data plus some margin to avoid edge effects. This allows us to model a flexible class of functions, and gives us analytical tractability (including derivatives) and a user-selectable degree of smoothness.

B-splines are controlled by two sets of hyper-parameters: basis order (signifying the maximal order of any polynomial used) and the placement and number of knots (positions at which different polynomial basis’ elements are spliced together). The two jointly control the maximal flexibility of functions that can be modelled using the chosen basis. In principle any desired degree of flexibility can be achieved by simply fixing the basis order and the number and density of knots to high enough levels (Micula and Micula 2012). For robustness and stability of coefficients the De Boor’s recursion formula (De Boor 1978) defining B-splines is most commonly used in practice. We follow this convention to define the basis u, and define the basis h via related I-splines to guarantee monotonicity. We describe both spline types in detail below.

B-splines (or related M-Splines) are defined by the number and locations of knots, as well as the order of the polynomials used. Let \(\kappa _i \in \mathbbm {R}\) \((i=1,\dots ,\mathcal {K})\) denote the knots (heuristically these are the positions at which splines are anchored), and let \(B_i(x|k)\) denote the ith B-spline of the kth order evaluated at x. The notation for M-spline is defined analogously. M-splines and B-splines are defined by the recurrence relations:

I-splines are integrated M-splines (He and Shi 1998; Ramsay 1988) and thus the ith I-spline of the kth order evaluated at x is given by:

Setting \(h_i(\cdot ):=I_i(\cdot |k)\) and restricting the coefficients \(\xi \) to be non-negative guarantees \(\xi ^Th(\cdot )\) to be monotonically increasing. This restriction can be imposed easily by exponentiating coefficients \(\xi \), i.e. rather than defining \(\eta _{\xi }\) via (3) we write, with abuse of notation:

where exponentiation of a vector is taken to be componentwise.

Between each pair of knots \([\kappa _i,\kappa _{i+1})\), a spline is simply a polynomial. Consequently, to find tight bounds on \(G_{\theta }\) we can break up the domain of \(A_{\theta }\) into intervals \([\kappa _i,\kappa _{i+1})\) \((i=1,\dots ,\mathcal {K}-1)\), so that \(G_{\theta }\) becomes a polynomial on each of them, and then use standard methods to find local extrema of \(G_{\theta }\). Global bounds \(l_{\theta }\le G_{\theta }\) and \(r_{\theta }\ge G_{\theta }-l_{\theta }\) are then given by the minimum (resp. maximum) of all local bounds. Numerical root-finding schemes might in principle give inaccurate results; however, there exist methods that quantify the error bounds and an additional margin can then be added to offset possibly incurred errors (Rump 2003).

3 Inference Algorithm

Following the introduction of our family of diffusions in Section 2, in this section we develop an MCMC algorithm targeting the posterior for \(\theta \) and \(\xi \), the coefficients of the spline bases. We use a data-augmentation scheme in which the unobserved parts of the paths \(\mathcal {A}:=\{V_t;t\in (t_i,t_{i+1}), i =0,\dots ,N-1\}\) are treated as missing values. By targeting the joint posterior distribution \(\pi (\mathcal {A},\theta ,\xi |\mathcal {D})\) via Gibbs sampling, the distribution of interest, \(\pi (\theta ,\xi |\mathcal {D})\), would be admitted as a marginal. However, as noted by Roberts and Stramer (2001), a naïve augmentation scheme \((\theta ,\xi ,\mathcal {D})\rightarrow (\theta ,\xi ,\mathcal {D},\mathcal {A})\) would cause two problems: (i) it prompts for the imputation of an infinite-dimensional object \(\mathcal {A}\), which is obviously impossible to achieve on a computer; and (ii) it leads to a chain which cannot mix because \(\mathcal {A}\) fully determines the diffusion coefficient. Any update of \(\xi \) conditioned on \(\mathcal {A}\) is then degenerate as the conditional density is given by a point mass at a current value of \(\xi \).

We have set up our spline basis carefully so that these problems can be solved, as follows. First we employ the transformation \(\eta \) as defined in (2). It can be shown that \(X_t = \eta (V_t)\) is a diffusion satisfying

in particular X has unit volatility independent of the parameters \((\theta ,\xi )\) (Roberts and Stramer 2001). Second, we augment the parameters not with \(\mathcal {A}\) but instead with the alternative: \((\theta ,\xi ,\mathcal {D})\rightarrow (\theta ,\xi ,\mathcal {D},\mathcal {S}(\mathcal {A}))\), where \(\mathcal {S}(\mathcal {A})\) denotes a finite-dimensional surrogate for the unobserved path \(\mathcal {A}\). By an appropriate choice of surrogate, we can ensure that it is possible to sample \(\mathcal {S}(\mathcal {A})\) without having to discretize time as would be necessary when sampling \(\mathcal {A}\) directly, and we can also ensure that the output is an almost surely finite-dimensional random variable (termed a skeleton) from which an entire path \(\mathcal {A}\) can be reconstructed if needed. We use a path-space rejection sampler with proposals based on Brownian bridges (Beskos et al. 2008). This specific approach (in which a skeleton is used) was suggested and developed in Beskos et al. (2006b) and Sermaidis et al. (2013).

Inference is then performed by Gibbs-type updates, alternately (a) updating unknown parameters by drawing from \(\pi (\theta ,\xi |\mathcal {S}(\mathcal {A}),\mathcal {D})\) and then (b) imputing the unobserved path by sampling from \(\pi (\mathcal {S}(\mathcal {A})|\theta ,\xi ,\mathcal {D})\). As required, the marginal distribution of the parameter chain converges to the posterior distribution \(\pi (\theta ,\xi |\mathcal {D})\). For step (a) we use a Metropolis–Hastings step, following Sermaidis et al. (2013) (see Appendix). Step (b) is where we employ path-space rejection sampling. We summarise the approach in Algorithm 1, which we will now describe in detail.

The algorithm accepts as input \(\kappa ^{(\theta )}\) and \(\kappa ^{(\xi )}\), used to denote the vectors of knots for drift and volatility coefficients respectively, and \(\mathcal {O}^{(\theta )}\) and \(\mathcal {O}^{(\xi )}\), which denote the orders of the respective bases. Computations performed for steps 2 and 4 depend directly on this quadruplet of fixed parameters.

First considering the parameter update step (Algorithm 1 Step 3), often it is not possible to sample from \(\pi (\theta ,\xi |\mathcal {S}(\mathcal {A}),\mathcal {D})\) directly. (More precisely, this conditional density is given up to a constant by the right-hand side of (21), treated as a function of \((\theta ,\xi )\) only. Evidently the dependence on \(\theta \) and \(\xi \) is rather complicated in general.) However, because the joint density \(\pi (\theta ,\xi ,\mathcal {S}(\mathcal {A}),\mathcal {D})\) can be computed in closed form (see (21) and Sermaidis et al. (2013, Thm 3)), it is possible to employ a Metropolis–Hastings correction. To update a large number of parameters at once we further exploit gradient information to improve the quality of proposals. In particular, we employ a Metropolis-adjusted Langevin algorithm (MALA; Roberts and Tweedie 1996) and update all coordinates of \(\xi \) and \(\theta \) at once by defining the proposal \(q((\theta ^{(n-1)},\xi ^{(n-1)},\cdot )\) via

where I is the identity matrix of appropriate size and \(\delta _1,\dots ,\delta _4\) are tuning parameters. To compute \(\nabla _\theta \log (\pi (\theta ^{},\xi ^{},\mathcal {S}(\mathcal {A})^{},\mathcal {D}))\) and \(\nabla _\xi \log (\pi (\theta ^{},\xi ^{},\mathcal {S}(\mathcal {A})^{},\mathcal {D}))\) in a spline context we require only the quantities \(\nabla _\theta l_{\theta }\) and \(\nabla _\theta r_{\theta }\). It is not always possible to find the closed form expressions for those two quantities, but instead a finite difference scheme can be employed.

Now consider the imputation of the unobserved path (Algorithm 1 Step 2). The following arguments may be found in Beskos and Roberts (2005), Beskos et al. (2006a), Beskos et al. (2008), and Sermaidis et al. (2013), and we give only a brief summary. To explain the idea, suppose for the moment that we have discrete observations directly from (9); that is, \(\eta _{\xi }\) is simply the identity and we are interested in inference of \(\theta \) only. To impute the finite-dimensional surrogate variable \(\mathcal {S}(\mathcal {A})\) it is now enough to employ N independent path-space rejection samplers, each for a separate interval \((t_i,t_{i+1})\), \(i=0,\dots ,N-1\), so for simplicity consider a single interval [0, t] with \(X_0 = x\) and \(X_t = y\). Denoting the law of the bridge \((X|X_0 = x, X_t = y)\) under (9) by \(\mathbbm {P}^{(t,x,y)}\) and a Brownian bridge connecting the same points by \(\mathbbm {W}^{(t,x,y)}\), we have that

We recognise the right-hand side as the probability that a Poisson process of unit intensity on \([0,t]\times [0,r_{\theta }]\) has zero points beneath the graph of \(s\mapsto G_{\theta }(X_s) - l_{\theta }\), allowing for a rejection sampler to be implemented using Brownian bridge proposals and with acceptance probability (12) (even though the expression is intractable with finite resources), an example of retrospective simulation. This motivates the choice of \(\mathcal {S}(\mathcal {A})\) as

where \(\{\psi _{j}, \chi _{j}\}_{j=1}^{\varkappa }\) is a unit-intensity Poisson Point Process on \([0,t]\times [0,r_{\theta }]\) and \(Z \sim \mathbbm {W}^{(t,x,y)}\). (By convention \(\{\cdot \}_{j=1}^0:=\emptyset \).) By inspection of (12), this choice of \(\mathcal {S}(\mathcal {A})\) can now be simulated by rejection: (i) Simulate the Poisson process \(\{\psi _{j}, \chi _{j}\}_{j=1}^{\varkappa }\), (ii) Simulate Z at the times \(\psi _1,\dots ,\psi _{\varkappa }\); (iii) Accept \(\mathcal {S}(\mathcal {A})\) if \(G_{\theta }(Z_{\psi _j})-l_{\theta }< \chi _j\) for each \(j=1,\dots ,\varkappa \).

In the more general case, when we have discrete observations from a diffusion of the form (1) rather than (9), considerable further complication is introduced by the fact that, following an application of the Lamperti transformation, the datapoints \(\{\eta _{\xi }(v_{t_i}):\, i=1,\dots ,N\}\) now depend on a parameter of interest. Brownian bridges of the form \(\mathbbm {W}^{(t_{i+1}-t_i,\eta _{\xi }(v_{t_i}),\eta _{\xi }(v_{t_{i+1}}))}\) are now inapplicable as dominating measure in each interval. This issue is resolved by a further re-parametrization which in some contexts is known as noise outsourcing; details are given in the Appendix.

We now provide a formal verification that path-space rejection sampling theory can be applied in our spline context, which suffices for path-space rejection sampling within MCMC.

Theorem 1

Let a diffusion model be defined by the Lamperti transformation \(\eta _{\xi }\) and an anti-derivative of a drift of a Lamperti-transformed diffusion \(A_{\theta }\) as in (2). Suppose further that \(\eta _{\xi }\) and \(A_{\theta }\) can be expanded in I-spline and B-spline bases as in (3), with bases orders fixed to \(\mathcal {O}^{(\xi )}\ge 3\) and \(\mathcal {O}^{(\theta )}\ge 3\). Then it is possible to simulate exactly from \(\pi (\mathcal {S}(\mathcal {A})|\theta ,\xi ,\mathcal {D})\).

Proof

Denote the domain over which \(\eta _{\xi }\) is defined with \(\mathcal {R}^{(\xi )}:=[\kappa ^{(\xi )}_1, \kappa ^{(\xi )}_{\mathcal {K}^{(\xi )}}]\) and similarly the domain over which \(A_{\theta }\) is defined with \(\mathcal {R}^{(\theta )}:=[\kappa ^{(\theta )}_1, \kappa ^{(\theta )}_{\mathcal {K}^{(\theta )}}]\). The algorithm never evaluates \(\eta _{\xi }\), \(A_{\theta }\) nor any of their derivatives outside of these two intervals (see Section 4.1) and consequently the extensions of \(\eta _{\xi }\) and \(A_{\theta }\) to \(\mathbbm {R}\) can be assumed to satisfy all the relevant regularity conditions outside of \(\mathcal {R}^{(\xi )}\) and \(\mathcal {R}^{(\theta )}\).

It follows directly from the definitions (6) and (7) that the \(i^{th}\) order I-spline defines a \(\mathcal {C}^{i}\) function (on \(\mathcal {R}^{(\xi )}\)) and \(i^{th}\) order B-spline defines a \(\mathcal {C}^{i-1}\) function (on \(\mathcal {R}^{(\theta )}\)). Consequently, with the choices \(\mathcal {O}^{(\xi )}\ge 3\) and \(\mathcal {O}^{(\theta )}\ge 3\), \(\eta _{\xi }\) is at least \(\mathcal {C}^{3}\) on \(\mathcal {R}^{(\xi )}\) and \(A_{\theta }\) is at least \(\mathcal {C}^{2}\) on \(\mathcal {R}^{(\theta )}\). By the construction in (8) we know \(\eta _{\xi }'(v)\) is positive, and since

it follows that \(\sigma _{\xi }\) is at least \(\mathcal {C}^{2}\) on \(\mathcal {R}^{(\xi )}\) and \(b_{\theta ,\xi }\) is at least \(\mathcal {C}^{1}\) on \(\mathcal {R}^{(\theta )}\). This implies that \(b_{\theta ,\xi }\) and \(\sigma _{\xi }\) are both locally Lipschitz and since their extensions to \(\mathbbm {R}\) are arbitrary it follows that the SDE defined indirectly through \(A_{\theta }\) and \(\eta _{\xi }\) admits a unique solution (Karatzas and Shreve 1998b, Section 8.2).

To sample from \(\pi (\mathcal {S}(\mathcal {A})|\theta ,\xi ,\mathcal {D})\) imposes additional conditions (Beskos et al. 2006b): \(\alpha _{\theta }\) must be at least \(\mathcal {C}^{1}\), \(\exists \,\widetilde{A}_\theta \) such that \(\widetilde{A}_\theta '=\eta _{\xi }\), \(\exists \,l_{\theta }>-\infty \) such that \(l_{\theta }\le \inf _{u\in \mathbbm {R}}G_{\theta }(u)\), and \(\exists \,r_{\theta }<\infty \) such that \(r_{\theta }\ge \sup _{u\in \mathbbm {R}}G_{\theta }(u)-l_{\theta }\). Clearly, as \(A_{\theta }\) is at least \(\mathcal {C}^{2}\) on \(\mathcal {R}^{(\theta )}\), \(\alpha _{\theta }:=A_{\theta }'\) is at least \(\mathcal {C}^{1}\). Additionally, by construction \(\widetilde{A}_\theta =A_{\theta }\) and the existence of the requisite bounds follows for instance from (5).

We remark that our choice of spline bases yields a relatively simple form for \(\mathcal {S}(\mathcal {A})\); in applications of rejection sampling of diffusions elsewhere it is often necessary to simulate additional information about the diffusion such as its local extrema. Here, the availability of tight global bounds on \(G_\theta \) as a consequence of our choice of spline bases in Section 2 obviate this complication.

4 Practical Considerations

4.1 Choice of Bases

The choice of bases can be regarded as a choice of functional prior on \(\eta _{\xi }(\cdot )\) and \(A_{\theta }(\cdot )\). Bases defined on a compact interval C correspond to priors which are supported only on functions vanishing outside C. In principle, C can be made arbitrarily large, eliminating the influence of the truncation of the prior’s support. This however requires identification of the regions over which \(A_{\theta }\) and \(\eta _{\xi }\) need to be evaluated. We refer to those two regions as \(C({A_{\theta }})\) and \(C({\eta _{\xi }})\) respectively. In the case of \(\eta _{\xi }\) the procedure is simple: we need only ever evaluate \(\eta _{\xi }\) on the range of observations, so we can make an empirical choice \(C({\eta _{\xi }}):=[\min \{\mathcal {D}\}-\delta , \max \{\mathcal {D}\}+\delta ]\), where \(\delta \ge 0\) is some margin that allows us to avoid edge effects from the usage of splines.

Determining \(C(A_\theta )\) is more difficult because region over which \(A_\theta \) is evaluated depends on \(\eta _\xi \). We take a pragmatic approach and try to identify a \(C(A_\theta )\) which is large enough to draw the same inferential conclusions. Noting that there is little value in making \(C(A_\theta )\) so large that it includes basis elements u defined over regions without observations, this gives us a natural way to proceed. We begin by centering \(C({A_{\theta }})\) around the origin by using \(\bar{v}\) in (3) as an anchor. For simplicity we set \(\bar{v}:=\frac{1}{N+1}\sum _{i=0}^Nv_{t_i}\), noting that the choice of anchor is one of convenience and any choice will lead to the same results. Next we initialise \(C({A_{\theta }})\leftarrow [-\mathcal {R},\mathcal {R}]\), for some \(\mathcal {R}\in \mathbbm {R}_+\), and simply proceed with Algorithm 1. If at any point \(A_{\theta }\) (or any of its derivatives) needs to be evaluated outside of \([-\mathcal {R},\mathcal {R}]\) we halt Algorithm 1, double \(\mathcal {R}\), and re-execute.

4.2 Placement of Knots

We now consider the choice of locations and total number of knots as well as the order of polynomials. These award different degrees of flexibility to \(\eta _{\xi }\) and \(A_{\theta }\). A standard approach when using splines is to keep the order of polynomials moderate (anything beyond third order is rarely used; Hastie et al. 2001, Section 5.2). Once \(C(A_{\theta })\) and \(C(\eta _{\xi })\) have been settled, it is a priori reasonable to space knots equally across these intervals, except at the boundaries where knots can be duplicated to relax any continuity requirements there (see Appendix to Ch. 5 of Hastie et al. 2001). We take this approach to knot placement throughout our experiments; indeed, it can be viewed as an advantage of our method that good results can be obtained without the need to first fine-tune knot placement. Similarly, a user would ideally not want to have to perform extensive experimentation to determine the number of knots. A Bayesian approach to this issue is to allow the user to specify too many knots and to employ a prior which induces an appropriate regularization. We use a Gaussian process prior on the integrated squared derivatives of the fitted function (in this case \(A_{\theta }\) and \(\eta _{\xi }\)):

Here, \(\cdot ^{(k)}\) denotes kth order derivative (with respect to x) and \(\lambda _{i,k}\), \(i=1,2\); \(k=1,\dots ,K\), are the tuning hyper-parameters. For splines, the integrals above become:

where matrices:

are available in closed forms (Hastie et al. 2001, Section 5.4).

We found that additional, stronger prior information is required for the basis functions supported on the edges of the intervals \(C(A_{\theta })\) and \(C(\eta _{\xi })\) (i.e. intervals \([\kappa _{i},\kappa _{i+1})\) with extreme values of i). For these, it is possible that only a few observations (or in extreme cases none) fall on the interior of \([\kappa _{i},\kappa _{i+1})\). Consequently, to guarantee the convergence of the Markov chains we impose an additional prior for these functions and shrink the respective \(\theta _i\) and \(\xi _i\) towards 0. This is accomplished by defining diagonal matrices P and \(\widetilde{P}\) with non-negative diagonal elements, where large values indicate strong shrinkage of respective basis element towards 0. An additional penalty \(\theta ^TP\theta +(e^\xi )^T\widetilde{P}(e^\xi )\) can then be added on to (14).

As a result, we end up with a prior of the form:

We have chosen for simplicity a prior for which \(\theta \) and \(\xi \) are independent. For example, placing independent priors on parameters of b and \(\sigma \) (rather than of \(\eta \) and A) would, from (2), induce priors on \(\theta \) and \(\xi \) which are not necessarily independent. However, if the data contains evidence of some unassumed dependence then this should of course ultimately reveal itself in the posterior. It would be straightforward to incorporate any prior knowledge about a correlation between \(\theta \) and \(\xi \) into the prior if desired; Algorithm 1, and specifically Eq. (21), does not rely on a product form for \(\pi (\theta ,\xi )\). Similarly, one could consider priors other than the Gaussian form appearing in (16) though this could potentially introduce computational costs elsewhere. For example, a Gaussian prior ensures that the derivatives appearing in the MALA updates (10)–(11) remain well-behaved while other priors may not.

We follow a number of heuristics to reduce the dimensionality of the hyper-parameters in (16) following Hastie et al. (2001). In practice it is often sufficient to penalize only one integrated derivative of the \(k^*\)-th order and set other \(\lambda _{i,k}=0\) \((k\ne k^*)\). Additionally, only extreme entries on the diagonals of P and \(\widetilde{P}\) need to be set to non-zero values and the algorithm is often quite robust to the actual values chosen. Consequently, the problem of parameter tuning is often reduced to dimension 3–4, and the final search for the most fitting values for the hyper-parameters can be completed by validation; that is, by splitting the dataset into training and testing parts, training the model on the former, evaluating the likelihood on the latter, and keeping the model with the highest likelihood attained on the test dataset.

4.3 Computational Cost

The computational cost of the algorithm will depend on all of its parameters and hyperparameters in a complicated way in general. However, we can pick out the main influences on this cost by noting that the most expensive part of Algorithm 1 is typically Step 2, which employs a rejection sampler for each of the N inter-observation intervals in order to simulate a set of skeleton points of a diffusion bridge. For a diffusion X satisfying (9) with say \(X_{0} = x\) and \(X_{\Delta _i} = y\), the acceptance probability for a proposed surrogate \(\mathcal {S}(\mathcal {A})\), when using \(\mathbbm {W}^{(\Delta _i,x,y)}\) as a proposal law, is

This probability decays exponentially in \(\Delta _i\); thus, we should expect the efficiency of the algorithm to diminish exponentially in the observation spacing. Conversely, as \(\Delta _i\) decreases the latent bridge better resembles a Brownian bridge and the acceptance probability (17) goes to 1 as \(\Delta _i \rightarrow 0\). It is further worth noting that, owing to the Markov property of the diffusion, the bridge between each pair of observations can be treated independently. Therefore for a fixed observation spacing the computational cost of Step 2 is at most O(N) in the number of observations N as \(N\rightarrow \infty \). If there are opportunities to exploit parallelization in the implementation then this cost can be reduced further. One can exploit the linear cost in N to counteract the exponential cost in \(\Delta _i\) by imputing additional datapoints between existing observations; see Sermaidis et al. (2013, Sections 3.4 and 4) for this and other strategies on boosting efficiency, and Peluchetti and Roberts (2012) for an extensive empirical study.

5 Numerical Experiments

5.1 Illustrative Dataset

We begin our numerical experiments by considering an illustrative dataset to determine whether our methodology can recover the (known) underlying generative mechanism. We simulate 2001 equally spaced observations with inter-observation distance set to 0.1 from the SDE:

Observations of the underlying process, generated according to the SDE (18). Dashed line indicates the split between the training (left) and test (right) dataset

The simulated data is plotted in Fig. 1. (Recall the partition of the data into separate training and testing parts for the purpose of hyperparameter tuning; see Section 4.2.) The observations range between \(-1.53\) and 1.51. Because of the well-behaved form of the drift and diffusion coefficient we could set the total number of knots to a moderate value and closely recover the two functions without resorting to strong priors. However, because in general we might have no prior information about the underlying process we follow the general principles presented in Section 4 of over-specifying the total number and density of knots and relying on the regularization property of priors to see how faithfully the truth can be recovered. For bases h we set 15 equidistant knots between \(-2\) and 2, and further place three additional knots on each extreme value (in total there are four knots on \(-2\) and four on 2). The addition of extra margins on both sides of the observed range increases the flexibility of the function \(\eta _{\xi }\) near the end-points, contributing to a speed-up of Algorithm 1. Similarly, for basis u we set 15 equidistant knots between \(-4\) and 4 and place an additional 4 knots on each of the extreme values (on \(-4\) and 4).

Fits to the illustrative dataset. Dashed lines represent the true functions used to generate the data. The MCMC chain was run for 600,000 iterations and 30 random draws from the last 300,000 steps were plotted (solid curves). The upper right plot is on the Lamperti-transformed axis, \(x=\eta _{\xi }(v)\). Clouds of observations are plotted to illustrate the differences in the amount of data falling in different regions of space. For the observation scatterplot, the y-axis has no direct interpretation and is simply a jitter added to aid visualization

The order of the polynomial basis h is set to 3, which results in splines of the 3rd order approximating the function \(1/\sigma _\xi (\cdot )\). The order of basis u is set to 4 so that splines approximating the drift function \(\alpha _{\theta }(\cdot )\) are also of 3rd order, under the reasoning that the functions \(1/\sigma _{\xi }(\cdot )\) and \(\alpha _{\theta }(\cdot )\) could be expected to have similar smoothness properties a priori. With these orders all derivatives needed by the algorithm for various computations exist and do not vanish.Additionally, the choice is in agreement with a common principle of keeping the order of the polynomials moderate (Hastie et al. 2001, Section 5.4). \(\bar{v}\) was set to 0. We ran the algorithm for \(M=600,000\) iterations exploring various choices of hyper-parameters, specifically \(\lambda _{1,3} \in \{10^{-3},10^{-2},\dots ,10^2\}\) and \(\lambda _{2,2} \in \{10^{-4},10^{-3},\dots ,10^2\}\), and the final estimates (resulting in the highest values of averaged, noisy estimates of the likelihood) are presented in Fig. 2. Here \(\lambda _{1,3}=0.1\), \(\lambda _{2,2}=0.1\) were used for the prior and all other \(\lambda _{i,k}\) were set to zero.

Recall that the algorithm aims to directly infer \(A_{\theta }\) and \(\eta _{\xi }\) (\(\alpha _{\theta }\) and \(\eta _{\xi }\) are given in the top row of Fig. 2). The posterior draws closely resemble the true functions \(\alpha \) and \(\eta _{\xi }\) on the range of observations. Functions \(b_{\theta ,\xi }\) and \(\sigma _{\xi }\) are computed as byproducts from \(A_{\theta }\) and \(\eta _{\xi }\) via identities (13) and hence small deviations of the latter from the truth result in larger deviations of \(b_{\theta ,\xi }\) and \(\sigma _{\xi }\). This, together with the less dense observations around the origin, is the reason why the posterior draws of the drift coefficient exhibit increased uncertainty in this region.

5.2 Finance Dataset

In this section we consider modelling the U.S. short-term riskless interest rate. Bali and Wu (2006) review some of the methods proposed in the econometrics literature for modelling this process. Following Stanton (1997), we use the three-month U.S. Treasury bills (T-bills) as its proxy. For the sake of fair comparison, we use the same test dataset as Stanton (1997): daily recordings of U.S. Treasury bills’ rate from 1965 to 1995 (Fig. 3).

Following our methodology, we set the polynomial orders of bases u and h to 4 and 3 respectively, following the reasoning as in Section 5.1. We also set the knots of basis u and h respectively to:

where \(_{(n)}\) in the subscript denotes the multiplicity of a knot (which in absence of the subscript is by default set to 1). \(\bar{v}\) was set to 5. Tuning parameters were set to \(\lambda _{1,3}=6000\), \(\lambda _{2,2}=1\) (with all others set to 0) and Algorithm 1 was run for 200,000 iterations. The results are given in Fig. 4.

We plot the 95% empirical credible regions for the purpose of visualising the uncertainty regarding presence of non-linearities. We recover the results of Stanton (1997) quite closely, though minor differences are present. Just as in Stanton (1997, Fig. 5) we observe clear evidence for non-linearity of the volatility term, manifesting itself in a rapid increase towards greater values at higher levels of interest rate (Fig. 4, bottom left panel). This result intuitively makes sense: extraordinarily high interest rates are expected to be observed only during the most uncertain times for the financial markets. Indeed, the very highest interest rates in Fig. 3 fall in the late 70 s and early 80 s—a time of high inflation, contractionary monetary policy, and an ensuing recession of the U.S. economy. Unlike Stanton (1997) however, we note that the volatility term appears nearly flat for a range of interest rates: 4–9%. Additionally, it is apparent that for smaller values of interest rates (2.5–8%) the drift coefficient acts as a gentle mean reversion term (bottom right panel, compared with Stanton (1997, Fig. 4)). It flattens out at medium interest rates (8–15%) and then changes to a very strong mean reversion term for large values of interest rates (15%+), preventing them from exploding to infinity. Relatively stronger mean reversion is required to counteract the increased level of volatility.

Fits to the U.S. Treasury bills dataset. Algorithm 1 was run for 200,000 iterations and 30 random draws from the last 100,000 steps were plotted. The format of the plot is the same as in Fig. 2

5.3 Paleoclimatology Dataset

In this section we analyse isotopic records from ice cores drilled and studied under the NorthGreenland Ice Core Project (Andersen et al. 2004). The data consists of the estimates of the historical levels of \(\delta ^{18}O\) present in Greenland’s ice cores during their formation, dating back 123,000 BP (before present) until present. \(\delta ^{18}O\) is a ratio of isotopes of oxygen (18 and 16) as compared to some reference level (with a known isotopic composition) and is a commonly used measure of the temperature of precipitation.

The data (Fig. 5) shows oscillations between two states (so called Dansgaard-Oeschger (DO) events): stadial (cold) and interstadial (warm), and it exhibits sharp shifts between the two. Presently employed global circulation models are unable to reconstruct this phenomenon, which puts into question some of the conclusions that could be drawn from such models (Ditlevsen and Ditlevsen 2009). Consequently, one of the scientific goals is to understand the mechanisms causing DO events (see Ditlevsen and Ditlevsen (2009) and references therein for some hypotheses put forth). SDEs are one of the tools used for this purpose (Alley et al. 2001; Ditlevsen et al. 2007). An example often employed in the literature is a stochastic resonance model, such as a double-well potential, possibly with an additional periodic component in the drift (Alley et al. 2001; Ditlevsen et al. 2005, 2007; Krumscheid et al. 2015). The validity of such diffusion models does not yet seem to have reached a consensus. Recently, García et al. (2017) have fitted a non-parametric diffusion model to these data, however the method used by the authors is based on Euler–Maruyama discretization and introduces difficult to quantify bias, which might be substantial. We fit our exact and flexible model with the aim of finding an appropriate family of parametric diffusions, and without a priori assuming the form of a stochastic resonance model.

Again following our methodology, the orders of bases u and h were set to 4 and 3 respectively, and knots for \(A_{\theta }\) and \(\eta _{\xi }\) were placed respectively at:

\(\bar{v}\) was set to \(-40\). The regularization parameters were set to \(\lambda _{1,3}=5000\), \(\lambda _{2,2}=1\) and Algorithm 1 was run for 300,000 iterations. The results are given in Fig. 6.

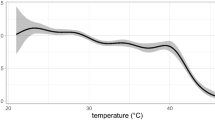

The results are somewhat surprising. The drift parameter indeed appears to be consistent with that of a double-well potential model (this behaviour is more pronounced for the drift of a Lamperti-transformed diffusion), producing the observed separation of stadial and interstadial states. However, the volatility coefficient appears to be an equally strong non-linear contributor. It spikes around \(-41\), which is the trough between the stadial and interstadial regions, allowing for more frequent transitions between two states than would have otherwise been possible under a regular double-well potential model. Our approach shows that existing stochastic resonance dynamic models are not adequate. This could perhaps be an indication that a richer class of models is needed to explain the NGRIP data, such as via more sophisticated drift and volatility coefficients or via a multi-dimensional diffusion model.

Fits to the \(\delta ^{18}O\) dataset. Algorithm 1 was run for 300,000 iterations and 30 random draws from the last 150,000 steps were plotted. The format of the plot is the same as in Fig. 2

5.4 Astrophysics Dataset

Active galactic nuclei (AGNs) are luminous objects sitting at the centres of galaxies. Quasars comprise a subset of the brightest of AGNs. The level of luminosity emitted by those objects varies over time. However, reasons for their variability are unclear (Kelly et al. 2009).

Observations of light curves of NGC 5548 (optical continuum at 5100Å in units of \(10^{-15}\) ergs s\(^{-1}\) cm\(^{-2}\) Å\(^{-1}\)). Data taken from AGN Watch Database (AGN Watch 2003)

Fits to the NGC 5548 dataset. Algorithm 1 was run for 400,000 iterations and 30 random draws from the last 200,000 steps were plotted. The format of the plot is the same as in Fig. 2

Kelly et al. (2009) performed a comprehensive study of the optical light curves of quasars, under the assumption that they can be described by an Ornstein–Uhlenbeck process. This choice was dictated not by an understanding of the mechanism governing the phenomenon, but instead by seeking a model exhibiting three properties: (i) a continuous-time process, (ii) consistent with the empirical evidence for spectral density being proportional to \(S(f)\propto 1/f^2\), and (iii) parsimonious enough to apply to large datasets. Additionally, as theauthors note, “much of the mathematical formalism of accretion physics is in the language of differential equations, suggesting that stochastic differential equations are a natural choice for modeling quasar light curves”. Naturally, this raises the question of whether more complex diffusion processes could provide better fits to the data. We fitted our flexible model to an observation of a single quasar NGC 5548 (Fig. 7, from Kelly et al. (2009, Fig. 4, left)), taken from the AGN Watch Database (AGN Watch 2003). Our aim was to investigate whether fitting a flexible model would exhibit significant deviation from the assumed OU process.

The orders of bases u and h were set to 4 and 3 respectively, and knots for \(A_{\theta }\) and \(\eta _{\xi }\) were placed respectively at:

\(\bar{v}\) was set to \(-40\). The regularization parameters were set to \(\lambda _{1,3}=100\), \(\lambda _{2,2}=1\), and Algorithm 1 was run for 400,000 iterations. The results are given in Fig. 8. The drift of a Lamperti-transformed diffusion indeed appears to be consistent with a simple mean-reversion term \(-a(X-b)\) of the OU process; however, the volatility term exhibits strongly non-linear behaviour. In particular, in two regions of space: 7–9 and 13–15, the volatility is elevated resulting in a high irregularity of the drift of an underlying process. These results strongly suggest that an OU process is too restrictive a model for the given data.

6 Conclusion

In this article we introduced a flexible Bayesian algorithm for simultaneous inference of both drift and diffusion coefficients of an SDE from discrete observations. The method avoids any time-discretization error and does not make any assumptions about the frequency or spacing of the observations; it is therefore naturally suited to handle time series data with missing observations. Key to our approach is to model indirectly using a spline basis suitable transformations of the drift and diffusion coefficients. We developed, with consideration of practical issues, an MCMC algorithm for sampling from the posterior of the basis parameters, given discrete observations from an SDE. We hope that visualization of potential functional forms for b and \(\sigma \) will be a powerful investigative tool for practitioners, allowing them to refine their understanding of the processes they are studying.

The range of real-world examples considered in the numerical section of this paper demonstrate the breadth of applicability of our methodology. In the illustrative example of Section 5.1, we showed that even in the presence of severe over-specification of the number of knots the recovery of true functions is possible. Analysis of the financial dataset resulted in conclusions largely in agreement with what has already been observed in the literature through the use of other, frequentist, non-parametric methods. Our methodology suggests that for the paleoclimatology and astrophysics examples a richer class of statistical models to those currently used by practitioners seems to be needed. This was aided by our ability to visualise plausible posterior functional forms of the drift and diffusion coefficients.

A substantial direction to extend our methodology would be to broaden its applicability from scalar to multi-dimensional processes. Grounds for optimism are that for a d-dimensional diffusion of gradient type (i.e. for which a potential \(A_{\theta }:\mathbbm {R}^d\rightarrow \mathbbm {R}\) exists), the potential can be modelled through multivariate interpolations of B-splines. However, a potential complication is the modelling of the Lamperti transformation, which must satisfy additional conditions analogous to the monotonicity property required in dimension one (see Aït-Sahalia 2008).

Another natural direction would be to develop a companion methodology for noisy observations from an SDE, as opposed to observations without noise (Beskos et al. 2006b, 2009), a very common framework in many applications.

Data Availibility Statement

The US Treasury Bill data can be accessed at https://home.treasury.gov. Our Paleoclimatology example uses data from Andersen et al. (2004), and data for the Astophysics example is taken from AGN Watch Database (AGN Watch 2003).

Change history

21 February 2022

The original version of this paper was updated due to the article title on the journal’s webpage (the html but not the pdf) has an obvious mistake where there is a missing space between two of the words.

References

AGN Watch (2003) Light Curves of NGC 5548, optical continuum at 5100Å. http://www.astronomy.ohio-state.edu/~agnwatch/data.html, update Version: 2003-08-15. Accessed 19 April 2021

Aït-Sahalia Y (2002) Maximum likelihood estimation of discretely sampled diffusions: A closed-form approximation approach. Econometrica 70(1):223–262

Aït-Sahalia Y (2008) Closed-form likelihood expansions for multivariate diffusions. Ann Stat 36(2):906–937

Alley R, Anandakrishnan S, Jung P (2001) Stochastic resonance in the north atlantic. Paleoceanography 16(2):190–198

Andersen KK, Azuma N, Barnola JM et al (2004) High-resolution record of the northern hemisphere climate extending into the last interglacial period. Nature 431:147–151

Bali TG, Wu L (2006) A comprehensive analysis of the short-term interest-rate dynamics. J Bank Financ 30(4):1269–1290. https://doi.org/10.1016/j.jbankfin.2005.05.003

Banon G (1978) Nonparametric identification for diffusion processes. SIAM J Control Optim 16(3):380–395. https://doi.org/10.1137/0316024

Beskos A, Roberts GO (2005) Exact simulation of diffusions. Ann Appl Probab 15(4):2422–2444

Beskos A, Papaspiliopoulos O, Roberts GO (2008) A factorisation of diffusion measure and finite sample path constructions. Methodol Comput Appl Probab 10(1):85–104

Beskos A, Papaspiliopoulos O, Roberts G (2009) Monte Carlo maximum likelihood estimation for discretely observed diffusion processes. Ann Stat 37(1):223–245

Beskos A, Papaspiliopoulos O, Roberts GO (2006a) Retrospective exact simulation of diffusion sample paths with applications. Bernoulli pp 1077–1098

Beskos A, Papaspiliopoulos O, Roberts GO et al (2006b) Exact and computationally efficient likelihood-based estimation for discretely observed diffusion processes (with discussion). J R Stat Soc B (Stat Methodol) 68(3):333–382

Bibby BM, Jacobsen M, Sørensen M (2010) Estimating functions for discretely sampled diffusion-type models. In: Handbook of financial econometrics: Tools and Techniques. Elsevier, pp 203–268

Bladt M, Sørensen M (2014) Simple simulation of diffusion bridges with application to likelihood inference for diffusions. Bernoulli 20(2):645–675

Comte F, Genon-Catalot V, Rozenholc Y (2007) Penalized nonparametric mean square estimation of the coefficients of diffusion processes. Bernoulli 13(2):514–543

Dacunha-Castelle D, Florens-Zmirou D (1986) Estimation of the coefficients of a diffusion from discrete observations. Stochastics: An International Journal of Probability and Stochastic Processes 19(4):263–284

Dalalyan A, Kutoyants YA (2002) Asymptotically efficient trend coefficient estimation for ergodic diffusion. Math Methods Statist 11(4):402–427

De Boor C (1978) A practical guide to splines, vol 27. springer-verlag New York

Ditlevsen PD, Ditlevsen OD (2009) On the stochastic nature of the rapid climate shifts during the last ice age. J Clim 22(2):446–457

Ditlevsen PD, Kristensen MS, Andersen KK (2005) The recurrence time of Dansgaard-Oeschger events and limits on the possible periodic component. J Clim 18(14):2594–2603

Ditlevsen PD, Andersen KK, Svensson A (2007) The DO-climate events are probably noise induced: statistical investigation of the claimed 1470 years cycle. Clim Past 3(1):129–134

Durham GB, Gallant AR (2002) Numerical techniques for maximum likelihood estimation of continuous-time diffusion processes. J Bus Econ Stat 20(3):297–338

García CA, Otero A, Félix P, et al (2017) Nonparametric estimation of stochastic differential equations with sparse Gaussian processes. Phys Rev E 96(2):022,104. https://doi.org/10.1103/PhysRevE.96.022104

Gobet E, Hoffmann M, Reiß M (2004) Nonparametric estimation of scalar diffusions based on low frequency data. Ann Stat 32(5):2223–2253

Golightly A, Wilkinson DJ (2008) Bayesian inference for nonlinear multivariate diffusion models observed with error. Comput Stat Data Anal 52(3):1674–1693

Gugushvili S, Spreij P (2014) Nonparametric Bayesian drift estimation for multidimensional stochastic differential equations. Lith Math J 54(2):127–141. https://doi.org/10.1007/s10986-014-9232-1

Gugushvili S, van der Meulen F, Schauer M et al (2018) Nonparametric Bayesian volatility learning under microstructure noise. arXiv:1805.05606

Hastie T, Tibshirani R, Friedman J (2001) The elements of statistical learning. Springer series in statistics New York, NY, USA

He X, Shi P (1998) Monotone B-spline smoothing. J Am Stat Assoc 93(442):643–650

Imkeller P, Monahan AH (2002) Conceptual stochastic climate models. Stochastics Dyn 02(3):311–326. https://doi.org/10.1142/S0219493702000443

Karatzas I, Shreve SE (1998a) Methods of mathematical finance, vol 39. Springer

Karatzas I, Shreve SE (1998b) Brownian motion and stochastic calculus. Springer

Kelly BC, Bechtold J, Siemiginowska A (2009) Are the variations in quasar optical flux driven by thermal fluctuations? Astrophys J 698(1):895

Kloeden P, Platen E (1992) Numerical Solution of Stochastic Differential Equations. Springer-Verlag, Applications of Mathematics

Koskela J, Spanò D, Jenkins PA (2019) Consistency of Bayesian nonparametric inference for discretely observed jump diffusions. Bernoulli 25(3):2183–2205

Krumscheid S, Pradas M, Pavliotis G et al (2015) Data-driven coarse graining in action: Modeling and prediction of complex systems. Phys Rev E 92(4):042,139

Lamperti J (1964) A simple construction of certain diffusion processes. Journal of Mathematics of Kyoto University 4(1):161–170

Lansky P, Ditlevsen S (2008) A review of the methods for signal estimation in stochastic diffusion leaky integrate-and-fire neuronal models. Biol Cybern 99(4–5):253

Micula G, Micula S (2012) Handbook of splines, vol 462. Springer Science & Business Media

Nickl R, Ray K (2020) Nonparametric statistical inference for drift vector fields of multi-dimensional diffusions. Ann Stat 48(3):1383–1408

Nickl R, Söhl J (2017) Nonparametric Bayesian posterior contraction rates for discretely observed scalar diffusions. Ann Stat 45(4):1664–1693. https://doi.org/10.1214/16-AOS1504

Papaspiliopoulos O, Pokern Y, Roberts GO et al (2012) Nonparametric estimation of diffusions: a differential equations approach. Biometrika 99(3):511–531

Pedersen AR (1995) A new approach to maximum likelihood estimation for stochastic differential equations based on discrete observations. Scand J Stat, pp 55–71

Peluchetti S, Roberts GO (2012) A study of the efficiency of exact methods for diffusion simulation. In: Plaskota L, Woźniakowski H (eds) Monte Carlo and Quasi-Monte Carlo Methods 2010. Springer, Berlin Heidelberg, pp 161–187

Pokern Y, Stuart AM, van Zanten JH (2013) Posterior consistency via precision operators for Bayesian nonparametric drift estimation in SDEs. Stoch Process Appl 123(2):603–628

Ramsay JO (1988) Monotone regression splines in action. Stat Sci 3(4):425–441

Roberts GO, Stramer O (2001) On inference for partially observed nonlinear diffusion models using the metropolis-hastings algorithm. Biometrika 88(3):603–621

Roberts GO, Tweedie RL (1996) Exponential convergence of Langevin distributions and their discrete approximations. Bernoulli 2(4):341–363

Rump SM (2003) Ten methods to bound multiple roots of polynomials. J Comput Appl Math 156(2):403–432

Sermaidis G, Papaspiliopoulos O, Roberts GO et al (2013) Markov Chain Monte Carlo for Exact Inference for Diffusions. Scand J Stat 40(2):294–321

Spokoiny VG (2000) Adaptive drift estimation for nonparametric diffusion model. Ann Stat 28(3):815–836. https://doi.org/10.1214/aos/1015951999

Stanton R (1997) A nonparametric model of term structure dynamics and the market price of interest rate risk. J Financ 52(5):1973–2002. https://doi.org/10.1111/j.1540-6261.1997.tb02748.x

Tuan PD (1981) Nonparametric estimation of the drift coefficient in the diffusion equation. Series Statistics 12(1):61–73. https://doi.org/10.1080/02331888108801571

van der Meulen F, Schauer M (2017) Bayesian estimation of discretely observed multi-dimensional diffusion processes using guided proposals. Electron J Stat 11(1):2358–2396

van der Meulen F, van Zanten H (2013) Consistent nonparametric Bayesian inference for discretely observed scalar diffusions. Bernoulli 19(1):44–63. https://doi.org/10.3150/11-BEJ385

van der Meulen F, Schauer M, van Zanten H (2014) Reversible jump MCMC for nonparametric drift estimation for diffusion processes. Comput Stat Data Anal 71:615–632. https://doi.org/10.1016/j.csda.2013.03.002

van der Meulen F, Schauer M, van Waaij J (2018) Adaptive nonparametric drift estimation for diffusion processes using Faber-Schauder expansions. Stat Infer Stoch Process 21(3):603–628. https://doi.org/10.1007/s11203-017-9163-7

van Waaij J, van Zanten H (2016) Gaussian process methods for one-dimensional diffusions: Optimal rates and adaptation. Electron J Stat 10(1):628–645. https://doi.org/10.1214/16-EJS1117

van Zanten H (2001) Rates of convergence and asymptotic normality of kernel estimators for ergodic diffusion processes. J Nonparametr Stat 13(6):833–850. https://doi.org/10.1080/10485250108832880

van Zanten H (2013) Nonparametric Bayesian methods for one-dimensional diffusion models. Math Biosci 243(2):215–222. https://doi.org/10.1016/j.mbs.2013.03.008

Acknowledgements

The authors would like to thank Dr Marcin Mider for substantial contributions to the development of this work. All three were supported by The Alan Turing Institute under the EPSRC grant EP/N510129/1. Gareth Roberts was additionally supported under the EPSRC grants EP/K034154/1, EP/K014463/1, EP/R034710/1 and EP/R018561/1.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing Interest

The authors: Paul Jenkins, Murray Pollock and Gareth Roberts have no competing interests to disclose, and contributed equally to all aspects of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Details of Algorithm 1

Appendix: Details of Algorithm 1

1.1 Updating the Latent Diffusion Path

Here we give further details of Step 2 of Algorithm 1 when we have discrete observations from a diffusion of the form (1). See Section 3 for some intuition. As noted in that Section, following an application of the Lamperti transformation the datapoints \(\{\eta _{\xi }(v_{t_i}):\, i=1,\dots ,N\}\) now depend on a parameter of interest, reintroducing the very problem of degeneracy that the Lamperti transformation was designed to avoid. To resolve this, the general idea is to decouple the dependence of the source of randomness from the parameter \(\xi \) when constructing the variable \(\mathcal {S}(\mathcal {A})\). To this end, the innovation process is defined:

with \(\bigotimes \) denoting a product space and \(\mathcal {C}(B;A)\) a space of continuous functions from \(A\rightarrow B\) (notice that Z is independent of \(\xi \)), together with a function:

We construct \(\Psi \) and Z so that \(V^{\circ }:=\Psi (Z;\xi )\) serves as a proposal from \(\pi (\mathcal {A}|\theta ,\xi ,\mathcal {D})\) within a rejection sampler. In practice this can be achieved by instead using a finite-dimensional summary of \(V^{\circ }\) as a proposal from \(\pi (\mathcal {S}(\mathcal {A})|\theta ,\xi ,\mathcal {D})\).

In order to construct the pair \((Z,\Psi (\cdot ;\xi ))\), a diffusion V solving (1) is first transformed to a diffusion \(X:=\{\eta _{\xi }(V_t), t\in [0,T]\}\) via the Lamperti transformation (2). The set of transformed observations is then defined as \(\widetilde{\mathcal {D}}_{\xi }:=\{x^{\xi }_i:\, i=0,\dots ,N\}:=\{\eta _{\xi }(v_{t_i}):\, i=0,\dots ,N\}\). Finally, the centering functions are defined as:

and a map \(\varsigma (\cdot ;\xi ):\bigotimes _{i=0}^{N-1}\mathcal {C}(\mathbbm {R};[0,\Delta _i])\rightarrow \mathcal {C}(\mathbbm {R};[0,T])\) as:

(with \(\varsigma (Z;\xi )_0:=V_0\)). Roberts and Stramer (2001) define the innovation process as draws from the measure \(\bigotimes _{i=0}^{N-1}\mathbbm {W}^{(\Delta _i,0,0)}\), and \(\Psi (\cdot ;\xi )\) to be given by \(\Psi (Z;\xi ):=\eta _{\xi }^{-1}\circ \varsigma (Z;\xi )\), where we recall that \(\mathbbm {W}^{(t,x,y)}\) denotes the law induced by a Brownian bridge from x to y over the interval [0, t].

To impute the finite-dimensional surrogate variable \(\mathcal {S}(\mathcal {A})\) it is now enough to employ N independent path-space rejection samplers, each for a separate interval \((t_i,t_{i+1})\), \(i=0,\dots ,N-1\), and whenever a proposal path \(V^{\circ }\) needs to be revealed at a time-point \(t\in (t_i,t_{i+1})\), an innovation process \(Z^{[i]}\) is sampled at time \(t-t_i\) in order to obtain \(V^{\circ }_t=\Psi (Z;\xi )_t\). Since path-space rejection sampling reveals proposals only at a discrete collection of (random) time-points, the simulated innovation process is given by the following proposal surrogate random variable \(\mathcal {S}^{\circ }(\mathcal {A})\):

Here \(\{\psi _{i,j}, \chi _{i,j}\}_{j=1}^{\varkappa _i}\) is a Poisson Point Process on \([0,\Delta _i]\times [0,1]\) with intensity \(r_{\theta }(\Upsilon _i)\) (where \(r_{\theta }(\Upsilon _i)\) is a local upper bound on \(G_{\theta }-l_{\theta }\), with \(G_{\theta }\) and \(l_{\theta }\) as defined in Section 2). \(\Upsilon _i\) is an additional random element containing information about the path Z enabling the upper bound \(r_{\theta }\) to be computed (for instance an interval which constrains a Brownian bridge path, \(Z^{[i]}\), to a given interval; see Beskos et al. (2008) for more details). This proposal is then accepted with probability proportional to the Radon–Nikodym derivative between the proposal and the target laws, as described in Section 3.

1.2 Updating the Parameters

With the surrogate defined as in (20), Sermaidis et al. (2013) derive a closed form expression for the joint density of the imputed data \(\mathcal {S}(\mathcal {A})\), parameters \((\theta ,\xi )\) and observations \(\mathcal {D}\),which becomes:

Here we denote by \(\mathcal {N}_t(x)\) as a Gaussian density with variance t and mean 0 evaluated at x, and \(D_{\xi }(\cdot ):=(\sigma _{\xi }(\cdot ))^{-1}=\eta _{\xi }'(\cdot )\). Additionally, recall from (2) the notation for the anti-derivative of the drift of X.

The density (21) can then be used to sample from \(\pi (\xi ,\theta |\mathcal {S}(\mathcal {A}),\mathcal {D})\) in the parameter-update step. In particular, to sample from \(\pi (\theta ,\xi |\mathcal {S}(\mathcal {A})^{(n-1)},\mathcal {D})\) at the nth iteration of the Markov chain we can draw \((\theta ^{\circ },\xi ^{\circ })\sim q((\theta ^{(n-1)},\xi ^{(n-1)}),\cdot )\) from some proposal kernel (we did this as per (10) and (11), but could be another proposal such as a random walk) and then employ a Metropolis–Hastings correction with the acceptance probability given by Algorithm 1 Step 4.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jenkins, P.A., Pollock, M. & Roberts, G.O. Flexible Bayesian Inference for Diffusion Processes using Splines. Methodol Comput Appl Probab 25, 83 (2023). https://doi.org/10.1007/s11009-023-10056-9

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11009-023-10056-9

Keywords

- Markov chain Monte Carlo

- Stochastic differential equation

- Path-space rejection sampling

- Interest-rate modelling

- Climate modelling

- Quasar light curve modelling