Abstract

In modeling port dynamics it seems reasonable to assume that ships arrive on a somewhat scheduled basis and that there is a constant lay period during which each vessel can arrive at the port, uniformly at random. In the present paper, we study the counting process N(t) which represents the number of scheduled vessels arriving during the time interval (0, t], \(t>0\). Specifically, we provide explicit expressions for the probability generating function, the probability distribution and the expected value of N(t). In some cases of interest, we also obtain the probability law of the stationary counting process representing the number of arrivals in a time interval of length t when the initial time is an arbitrarily chosen instant. This leads to various results concerning the autocorrelations of the random variables \(X_i\), \(i\in \mathbb {Z}\), which give the actual interarrival time between the \((i-1)\)-th and the i-th vessel arrival. Finally, we provide an application to a stochastic model for the queueing behavior at the port, given by a queueing system characterized by stationary interarrival times \(X_i\), exponential service times and an infinite number of servers. In this case, we obtain some results on the average number of customers and on the probability of an empty queue.



1 Introduction

Every year about 11 billion tons of goods are transported by ship. Large volumes of products such as grain, oil, chemicals and manufactured goods are shipped by sea, so that around \(90\%\) of international trade is handled by bulk ports worldwide. Bulk ports must be able to accommodate the vessels and to load or unload materials in a reasonable time. They provide berth facilities for ships, but berth capacity is limited and costly, so terminal operators try to minimize berth utilization. Queueing theory has been used since the early 1960s as a tool to optimize port handling service systems. The use of queueing systems in modeling port dynamics relies on interpreting the ships as clients, the berths as servers and the time spent by a ship at the berth as the service time. Over time many authors have provided solutions for different types of systems. In certain cases it has been possible to obtain the exact solution (for instance, Frankel (1987) and Tsinker (2004)). Other researchers proposed approximations (see Artalejo et al. (2008), de la Peña-Zarzuelo et al. (2020)), statistical models or computational techniques (see Dahal et al. (2003), Wadhwa (1992)). In some recent papers, attention has been devoted to the management of customer waiting times: the clients of a queueing system are regarded as active entities able to join or leave the queue under particular conditions. The customers’ decisions about waiting for service may be influenced by the observed queue length or by knowledge of crucial information about the system, such as the arrival and service rates (cf. Bountali and Economou (2019) and Dimitrakopoulos et al. (2021)). Furthermore, customers may also be characterized by an impatience time, which describes their intolerance to delay. If the waiting time reaches the impatience time, the customer leaves the system without receiving any service.
Examples of this kind of queueing system can be found in Bar-Lev et al. (2013) and Inoue et al. (2018).

The random nature of vessel arrivals and terminal service processes has a significant impact on port performance, often leading to significant handling delays. Hence, many arrival planning strategies have been proposed to mitigate such undesirable effects (see, for instance, Lang and Veenstra (2010) and van Asperen et al. (2003)). In many studies, vessel arrivals in a certain area are assumed to follow a stationary Poisson process, which is generally not a realistic assumption, since ships may be expected to arrive in greater numbers during certain days or months of the year than during other periods, cf. Goerlandt and Kujala (2011). Besides the exponential distribution, other distributions, such as the Erlang-2 distribution, have been considered to describe the ships’ interarrival times, cf. van Vianen et al. (2014). However, as suggested by Altiok (2000) and Jagerman and Altiok (2003), vessels arrive on a somewhat scheduled basis, with one vessel expected at regular time intervals. Unfortunately, due to bad weather conditions, natural phenomena or unexpected failures, vessels do not generally arrive at their scheduled times, so that each vessel is assigned a constant lay period during which it is supposed to arrive at the port, uniformly at random. Some examples of pre-scheduled random arrival processes describing public and private transportation systems can be found in Guadagni et al. (2011) and Lancia et al. (2018).

Motivated by the above-mentioned studies, in this paper we investigate a stochastic model describing vessel arrivals and port dynamics. We assume that the vessels are scheduled to arrive at multiples of a given period \(a>0\), whereas each actual arrival time exceeds the scheduled one by a random delay, uniformly distributed over the lay period. Unlike a previous model in which the delay is uniform over (0, a) (cf. Jagerman and Altiok (2003)), in the present investigation we consider the more general case in which it is uniform over (0, ka), \(k\in \mathbb {N}\).

We mainly focus on the determination of explicit forms of the conditional probability distribution and the mean of the counting process describing the vessel arrivals. It should be noted that the analytical results available in the literature in this area are scarce and fragmentary. Nevertheless, we are able to find several explicit results on the main probability distributions of interest. In particular, in some special cases we determine (i) the unconditional probability distribution of the vessel arrival process, (ii) the probability distribution of the stationary process which represents the number of vessel arrivals when the initial time is an arbitrarily chosen instant, (iii) the distribution, the variance and the autocorrelation of the interarrival times between consecutive vessel arrivals. Obtaining such explicit analytical results is a strength of the paper, compared with other articles in this area where only approximations obtained by means of simulation techniques are available.

In addition, with reference to a vessel queueing system with infinite servers, whose service times are exponentially distributed, we propose an application of the main results to determine the mean number of vessels in the queue seen at the arrival of a given ship. This is helpful to deal with resource allocation problems in the port.

1.1 Plan of the Paper

We assume that the actual arrival time of the vessel scheduled to arrive at time \(\varepsilon _i:=i a\), \(a>0\), \(i\in \mathbb {Z}\), is given by \(A_i= i a + Y_i-y_0\), \(i\in \mathbb {Z}\), where the random variables \(Y_i\) are independent and identically distributed, with uniform distribution on (0, ka), \(k\in \mathbb {N}\), and where \(y_0\) denotes the realization of \(Y_0\). Section 2 is devoted to the study of the counting process N(t), which represents the number of scheduled vessels arriving during the time interval (0, t], \(t\ge 0\), where 0 is an arrival instant. We provide the explicit expression of the probability generating function of N(t), its probability distribution and expected value. Furthermore, we develop some cases of interest based on specific choices of k, i.e. \(k=1\) and \(k=2\).

For the same choices of k, in Section 3 we obtain the probability law of a stationary counting process, say \(N_e(t)\), which gives the number of arrivals in a time interval of length t when the initial time is an arbitrarily chosen instant. The knowledge of the distribution of \(N_e(t)\) allows us to obtain some useful results concerning the variance and the correlations of the random variables \(X_i\), representing the actual interarrival time between the \((i-1)\)-th and the i-th vessel arrival. In particular, for \(k=1\) we find that the correlations \(\rho _h =\textrm{Corr}(X_i,X_{i+h})\), \(i\in {\mathbb Z}\), \(h\in {\mathbb N}\), vanish for \(h\ge 2\). Moreover, in the case \(k=2\) they vanish for \(h\ge 4\), and are negative and increasing in h for \(h=1,2,3\).

Finally, in Section 4 we consider an application of the previous results to a queueing system, say SHIP/M/\(\infty\), in which the service times are i.i.d. exponential with parameter \(\mu > 0\) and there is an infinite number of servers. Denoting by \(Q_n\) the number of customers at the port seen by the arrival of the n-th customer, the knowledge of \(\mathbb {E}\left[ N(t)\right]\) allows us to obtain the explicit expression of the average number of customers \(\mathbb {E}(Q_n)\), together with an upper and a lower bound for the probability of an empty queue. Due to the stationarity of the interarrival times \(X_i\), \(\mathbb {E}(Q_n)\) does not depend on n. Moreover, it depends on the parameters a and \(\mu\) only through their product, it is decreasing in \(\mu a>0\) and increasing in \(k\in \mathbb {N}\).

2 The Model

Consider a system able to receive an infinite sequence of units, such as a stochastic flow of vessel arrivals occurring at epochs in the time domain \((-\infty ,\infty )\). Specifically, for a fixed \(a>0\), the i-th vessel is scheduled to arrive at the port at the time

$$\varepsilon _i:=i a,\qquad i\in \mathbb {Z}, \tag{1}$$

and it is expected to show up during a lay period of length \(\omega >0\). The lay period is assumed to be the same for every ship. Each vessel arrives at the port, independently of the others, at a random time uniformly distributed within its lay period. The assumption of uniformity of vessel arrivals within the lay period is justified by recalling that captains are only given the window of arrival and not a scheduled arrival time (see Jagerman and Altiok (2003)).

In the sequel, we denote by \(A_i\), \(i\in \mathbb {Z}\), the actual (rescaled) arrival time of the i-th vessel, which is scheduled to arrive at time (1). Specifically, we assume that (cf. Jagerman and Altiok (2003))

$$A_i=i a+Y_i-y_0,\qquad i\in \mathbb {Z}. \tag{2}$$

As specified, the r.v.’s \(Y_i\), \(i \in \mathbb {Z}\), representing the elapsed arrival time of the i-th vessel, are independent and identically distributed, uniform over \((0,\omega )\), with distribution function \(F_Y(\cdot )\). The random variable \(Y_0\) describes the elapsed arrival time of the 0-th vessel, and \(y_0\) denotes its realization used for the rescaling in Eq. (2). Hence, we have \(A_0=0\), so that the arrival of the vessel scheduled at time \(-y_0\) actually occurs at time \(t=0\).

Note that, since vessels whose lay periods overlap can arrive in any order, the order of the actual arrivals may differ from the scheduled one. Hence, whereas the vessels are scheduled to arrive in increasing order, their actual arrival times are not necessarily ordered. Let \(X_i\), \(i \in \mathbb {Z}\), be the actual interarrival time between the \((i-1)\)-th and the i-th vessel arrival, with \(X_1\) denoting the time length between time \(t=0\) and the subsequent arrival. It is known that \(\{X_i\}\) is a stationary sequence of identically distributed random variables. Moreover, the corresponding counting process N(t), which represents the number of scheduled vessels arriving during the time interval (0, t], with 0 an arrival point, is a non-stationary process in continuous time (see Cox and Lewis (1966)).
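The possible reordering of arrivals can be illustrated with a small simulation. The following Python sketch is ours, not the authors’: it assumes \(\omega =ka\), drops the rescaling by \(y_0\) (which only shifts the time origin), and uses hypothetical helper names. For \(k=1\) the lay periods do not overlap, so no vessel overtakes the next one, whereas for \(k\ge 2\) overtaking occurs with positive probability.

```python
import random

def simulate_arrivals(num, a, k, seed=0):
    """Arrival times i*a + Y_i, i = 0, ..., num-1, with Y_i ~ U(0, k*a) i.i.d."""
    rng = random.Random(seed)
    return [i * a + rng.uniform(0.0, k * a) for i in range(num)]

def overtaking_fraction(arrivals):
    """Fraction of consecutive scheduled pairs (i, i+1) that arrive in reversed order."""
    swaps = sum(1 for x, y in zip(arrivals, arrivals[1:]) if x > y)
    return swaps / (len(arrivals) - 1)

def interarrival_times(arrivals, k):
    """Interarrival times of the *ordered* arrivals; 2k values are trimmed at each
    end, where vessels outside the simulated index range would be missing."""
    ordered = sorted(arrivals)
    xs = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return xs[2 * k: len(xs) - 2 * k]

if __name__ == "__main__":
    for k in (1, 2, 3):
        arr = simulate_arrivals(50_000, a=1.0, k=k, seed=k)
        xs = interarrival_times(arr, k)
        print(k, overtaking_fraction(arr), sum(xs) / len(xs))
```

For \(k=1\) the overtaking fraction is exactly 0, and in all cases the sample mean of the ordered interarrival times should be close to a, consistent with one scheduled vessel per period.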

2.1 Probability Generating Function

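In this section we determine the probability generating function (pgf) of N(t), \(t>0\), conditional on \(Y_0=y_0\). Recalling that the arrival events are independent, we have

$$G_N(t,z|y_0):=\mathbb {E}\left[ z^{N(t)}\,|\,Y_0=y_0\right] =\prod _{i\in \mathbb {Z}\setminus \{0\}}\left[ 1-(1-z)\,\mathbb {P}\left( 0<A_i\le t\,|\,Y_0=y_0\right) \right] , \tag{3}$$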

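where, due to Eq. (2),

$$\mathbb {P}\left( 0<A_i\le t\,|\,Y_0=y_0\right) =F_Y(t+y_0-ia)-F_Y(y_0-ia),\qquad i\in \mathbb {Z}\setminus \{0\}. \tag{4}$$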

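From Eqs. (3) and (4), for \(t>0\), \(z\in (0,1)\), \(y_0\in (0,\omega )\), the pgf of N(t) can be expressed as

$$G_N(t,z|y_0)=\prod _{i\in \mathbb {Z}\setminus \{0\}}\left\{ 1-(1-z)\left[ F_Y(t+y_0-ia)-F_Y(y_0-ia)\right] \right\} , \tag{5}$$

where only finitely many factors differ from 1.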
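Since, given \(Y_0=y_0\), N(t) is a sum of independent Bernoulli indicators, Eqs. (3), (4) and (5) also yield a simple numerical route to the conditional distribution: each factor \(1-(1-z)q_i\) is the pgf of a Bernoulli\((q_i)\) variable, so the law of N(t) is the convolution of these Bernoulli laws (a Poisson-binomial distribution). The following Python sketch assumes uniform \(Y_i\) on \((0,ka)\); the function names are hypothetical and the code is only meant as a numerical cross-check of the closed forms derived below.

```python
import math

def arrival_probs(t, y0, a, k):
    """q_i = P(0 < A_i <= t | Y_0 = y0) for A_i = i*a + Y_i - y0, Y_i ~ U(0, k*a), i != 0."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)   # F_Y for the uniform law on (0, omega)
    qs = []
    for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1):
        if i == 0:
            continue                                 # vessel 0 arrives at time 0, not in (0, t]
        q = cdf(t + y0 - i * a) - cdf(y0 - i * a)    # Eq. (4)
        if q > 0.0:
            qs.append(q)
    return qs

def conditional_pmf(t, y0, a, k):
    """p_{y0}(n, t) for n = 0, 1, ..., as the convolution of the Bernoulli(q_i) laws."""
    pmf = [1.0]
    for q in arrival_probs(t, y0, a, k):
        nxt = [0.0] * (len(pmf) + 1)
        for n, p in enumerate(pmf):
            nxt[n] += p * (1.0 - q)                  # vessel i does not arrive in (0, t]
            nxt[n + 1] += p * q                      # vessel i arrives in (0, t]
        pmf = nxt
    return pmf

pmf = conditional_pmf(t=1.0, y0=0.5, a=1.0, k=3)
print(pmf[0])   # approximately 0.46296 (= 25/54)
```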

In the sequel we shall assume that \(\omega =k a\), with \(k\in \mathbb {N}\), \(a>0\), so that \(y_0\in (0,ka)\). Under such assumption, it is possible to obtain the explicit expression of \(G_N(t,z|y_0)\), for different ranges of values of t.

Theorem 2.1

Assuming that \(\omega =ka\), for \((n-1)a<y_0<na\), with \(n=1,\dots ,k\), the pgf \(G_N(t,z|y_0)\) given in (5) can be expressed in the following way:

(i)

for \(0 \le t<k a-y_0\),

$$\begin{aligned} G_N(t,z|y_0)=(-1)^{s\mathop{-}n}\left[ \frac{1-z}{k}\right] ^{2s\mathop{-}2n} \left( n-\frac{y_0}{a}-\frac{k}{1-z}\right) _{{s\mathop{-}n}} \left( n-\frac{t+y_0}{a}+\frac{k}{1-z}\right) _{{s\mathop{-}n}} \\ \times \left[ 1-(1-z)\frac{t}{ka}\right] ^{k\mathop{-}s\mathop{+}n\mathop{-}1}, \end{aligned}$$

(ii)

for \(k a-y_0\le t<(k+n-1) a-y_0\),

$$\begin{aligned} G_N(t,z|y_0)=(-1)^{-n\mathop{+}s}\left[ \frac{1-z}{k}\right] ^{2 s\mathop{-}1\mathop{-}2 n} \left( n-\frac{y_0}{a}-\frac{k}{1-z}\right) _{s\mathop{-}n} \left( n-\frac{t+y_0}{a}+\frac{k}{1-z}\right) _{s\mathop{-}n}\\ \times \frac{\left[ 1-(1-z)\frac{t}{ka}\right] ^{n\mathop{-}s\mathop{+}k}}{\left( \frac{y_0}{a}+\frac{k z}{1-z}\right) }, \end{aligned}$$

(iii)

for \(t\ge (k+n-1)a-y_0\),

$$G_N(t,z|y_0)=\left[ \frac{1-z}{k}\right] ^{2k\mathop{-}1} \frac{z^{s\mathop{-}k\mathop{-}n}}{\left( \frac{y_0}{a}+\frac{k z}{1\mathop{-}z}\right) } \left( n-\frac{y_0}{a}-\frac{k}{1-z}\right) _{k} \left( -s+1+\frac{t+y_0}{a}-\frac{k}{1-z}\right) _{k},$$

where \(s=\lfloor (t+y_0)/a \rfloor +1\), with \(\lfloor \cdot \rfloor\) denoting the integer part function, \((x)_n:=\frac{\Gamma (x+n)}{\Gamma (x)}\) is the Pochhammer symbol, and \(\Gamma (\cdot )\) is the Gamma function.

Proof

Starting from Eq. (5), the pgf of N(t) can be rewritten as

where \(F_{W_j}(t)\) is the distribution function of a random variable \(W_j\) uniformly distributed over the interval \(\left[ ja-y_0, (k+j)a-y_0\right]\), \(\textbf{1}_A\) is the indicator function of the set A and we assume

Hence, the proof follows by considering different ranges of values of t. \(\square\)
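The three cases of Theorem 2.1 can be checked numerically against a direct evaluation of the product (5). The Python sketch below is a hypothetical helper, not code from the paper; it implements the Pochhammer symbol of nonnegative integer order as a finite product, which avoids evaluating the Gamma function at its poles, and it assumes that \(y_0/a\) is not an integer, as in the theorem.

```python
import math

def poch(x, m):
    """Pochhammer symbol (x)_m = x (x+1) ... (x+m-1) for integer m >= 0."""
    out = 1.0
    for j in range(m):
        out *= x + j
    return out

def pgf_theorem(t, z, y0, a, k):
    """G_N(t, z | y0) from the three cases of Theorem 2.1 (omega = k a, 0 <= z < 1)."""
    n = math.floor(y0 / a) + 1            # (n-1) a < y0 < n a
    s = math.floor((t + y0) / a) + 1
    w = 1.0 - z
    u = (t + y0) / a
    lin = 1.0 - w * t / (k * a)
    den = y0 / a + k * z / w
    if t < k * a - y0:                                          # case (i)
        return ((-1) ** (s - n) * (w / k) ** (2 * s - 2 * n)
                * poch(n - y0 / a - k / w, s - n) * poch(n - u + k / w, s - n)
                * lin ** (k - s + n - 1))
    if t < (k + n - 1) * a - y0:                                # case (ii)
        return ((-1) ** (s - n) * (w / k) ** (2 * s - 1 - 2 * n)
                * poch(n - y0 / a - k / w, s - n) * poch(n - u + k / w, s - n)
                * lin ** (n - s + k) / den)
    return ((w / k) ** (2 * k - 1) * z ** (s - k - n) / den     # case (iii)
            * poch(n - y0 / a - k / w, k) * poch(1 - s + u - k / w, k))

def pgf_product(t, z, y0, a, k):
    """Direct evaluation of the product (5) for uniform Y_i on (0, k a)."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)
    g = 1.0
    for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1):
        if i != 0:
            g *= 1.0 - (1.0 - z) * (cdf(t + y0 - i * a) - cdf(y0 - i * a))
    return g

for (k, y0, t) in [(3, 0.5, 1.0), (2, 1.5, 1.0), (2, 1.5, 2.0)]:
    print(k, y0, t, pgf_theorem(t, 0.4, y0, 1.0, k), pgf_product(t, 0.4, y0, 1.0, k))
```

The three printed pairs exercise cases (i), (ii) and (iii), respectively, and the two columns should agree up to rounding.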

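The knowledge of the pgf of N(t) is useful to determine upper bounds on the probability that the number of scheduled vessels arriving during a time interval (0, t] reaches a given threshold. Indeed, from the well-known Chernoff bound, for \(m\in \mathbb {N}\) we have

$$\mathbb {P}\left[ N(t)\ge m\,|\,Y_0=y_0\right] \le \inf _{z\ge 1}\frac{G_N(t,z|y_0)}{z^{m}}=:B_m(t\,|\,y_0). \tag{6}$$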

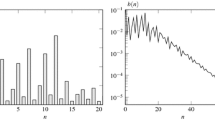

An example of the upper bound \(B_m(t\,|\,y_0)\) is given in Fig. 1, where it is increasing in t, as expected. Finally, we remark that in case (iii) of Theorem 2.1 one has \(G_N(t+a,z|y_0)=z\,G_N(t,z|y_0)\), since increasing t by a increases s by 1 and leaves \(-s+1+(t+y_0)/a\) unchanged; hence \(B_{m\mathop{+}1}(t+a\,|\,y_0)=B_m(t\,|\,y_0)\).

Fig. 1 Bound \(B_m(t\,|\,y_0)\), given in Eq. (6), as a function of t, for \(k=5\), \(y_0=2\), \(a=1\), \(n=3\) and \(m=10\), with some exact values of \(\mathbb {P}\left[ N(t) \ge m\,|\,Y_0=y_0\right]\) shown below
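A minimal sketch of how the bound (6) might be evaluated in practice, assuming \(\omega =ka\): the infimum over \(z\ge 1\) is approximated by a minimum over a finite grid on \([1,z_{\max }]\) (the grid and \(z_{\max }\) are our arbitrary choices, so the returned value can only overestimate \(B_m\), while remaining a valid upper bound), and the exact tail probability is computed from the product form (5) for comparison. Function names are hypothetical.

```python
import math

def arrival_probs(t, y0, a, k):
    """q_i = P(0 < A_i <= t | Y_0 = y0), i != 0, for Y_i ~ U(0, k a)."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)
    return [cdf(t + y0 - i * a) - cdf(y0 - i * a)
            for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1)
            if i != 0]

def tail_and_chernoff(t, m, y0, a, k, z_max=20.0, steps=4000):
    """Return (P[N(t) >= m | y0], grid approximation of B_m(t | y0))."""
    qs = arrival_probs(t, y0, a, k)
    pmf = [1.0]                                   # Poisson-binomial law of N(t)
    for q in qs:
        pmf = [(pmf[n] * (1.0 - q) if n < len(pmf) else 0.0)
               + (pmf[n - 1] * q if n >= 1 else 0.0)
               for n in range(len(pmf) + 1)]
    tail = sum(pmf[m:])
    grid = (1.0 + (z_max - 1.0) * j / steps for j in range(steps + 1))
    # z^{-m} G_N(t, z | y0), with G_N written as the product of Bernoulli pgfs
    bound = min(math.prod(1.0 - (1.0 - z) * q for q in qs) / z ** m for z in grid)
    return tail, bound

print(tail_and_chernoff(t=8.0, m=10, y0=2.5, a=1.0, k=5))
```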

The model (2) of the vessels’ arrival times is based on the assumption that the r.v.’s \(Y_i\), representing the elapsed arrival time of the i-th vessel, \(i \in \mathbb {Z}\), are uniformly distributed over \((0, \omega )\). It would be desirable to relax this assumption by considering other distributions for \(Y_i\), in order to obtain more flexible models for the vessel arrivals. For instance, one can assume that \(Y_i\) has a decreasing triangular probability density function (pdf), to describe arrivals that are more likely close to the scheduled arrival times. Unfortunately, the determination of the pgf becomes considerably more difficult even in this case, as shown in the following remark.

Remark 2.1

If the random variables \(Y_i\) are independent and identically distributed, with decreasing triangular pdf on (0, ka), \(k\in \mathbb {N}\), such that

$$f_Y(y)=\frac{2(ka-y)}{(ka)^2},\qquad 0<y<ka,$$

then, due to Eq. (5), the pgf of N(t), \(t>0\), can be expressed as

where

and

In this case, the determination of the explicit form of the pgf is quite difficult and leads to heavy calculations since the functions \(\phi _{ij}(t)\), \(i=1,2\), have a quadratic dependence on t.

2.2 Probability Distribution

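For the model (2), with the r.v.’s \(Y_i\) uniformly distributed over \((0, \omega )\), in this section we obtain the conditional probability distribution of N(t) given that \(Y_0=y_0\), denoted by

$$p_{y_0}(n,t):=\mathbb {P}\left[ N(t)=n\,|\,Y_0=y_0\right] ,\qquad n\in \mathbb {N}_0,\; t\ge 0. \tag{7}$$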

Although the expressions for \(p_{y_0}(n,t)\) are rather cumbersome, they involve only finite sums and hypergeometric functions that can be computed with any scientific software. In particular, we recall that the series of the Gauss hypergeometric function \({}_{2}F_{1}(a,b; \, c; \, z)\), defined in Eq. (25), terminates if either a or b is a nonpositive integer, in which case the function reduces to a polynomial.
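As an illustration of the last remark, a terminating \({}_{2}F_{1}\) series can be summed exactly in a few lines. In this hypothetical Python helper, a, b, c are the hypergeometric parameters of Eq. (25), not the scheduling period a; the code only handles the terminating case and assumes that c is not a nonpositive integer. General-purpose implementations are available, for instance, as scipy.special.hyp2f1 or in mpmath.

```python
def hyp2f1_terminating(a, b, c, z):
    """Gauss 2F1(a, b; c; z) when a or b is a nonpositive integer (finite sum)."""
    if not (float(a).is_integer() and a <= 0):
        a, b = b, a                          # the series is symmetric in a and b
    if not (float(a).is_integer() and a <= 0):
        raise ValueError("neither a nor b is a nonpositive integer")
    term, total = 1.0, 1.0
    for j in range(int(-a)):
        # ratio of consecutive terms: (a+j)(b+j) z / ((c+j)(j+1))
        term *= (a + j) * (b + j) * z / ((c + j) * (j + 1))
        total += term
    return total

print(hyp2f1_terminating(-3, 2.5, 2.5, 0.2))   # approximately 0.512, i.e. (1 - 0.2)**3
```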

The following proposition provides the explicit expressions of the conditional probability distribution of N(t).

Proposition 2.1

Let us assume \((n-1)a<y_0<na\), with \(n=1,\dots ,k\). Denoting by \(s=\lfloor (t+y_0)/a \rfloor +1\), we have

(i)

for \(0\le t<k a-y_0\),

$$\begin{aligned} p_{y_0}(0,t)=\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\,\frac{\Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( 1+k-s+\frac{y_0}{a}\right) }\,\frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) }{\Gamma \left( 1-k-s+\frac{t+y_0}{a}\right) }\, \left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}, \end{aligned}$$

(ii)

for \(k a -y_0 \le t<(k+n-1) a-y_0\),

$$\begin{aligned} p_{y_0}(0,t)=\frac{(-1)^{s\mathop{-}n} }{k^{2 s\mathop{-}1\mathop{-}2 n}}\,\frac{a}{y_0}\,\left( 1-\frac{t}{ka}\right) ^{n\mathop{-}s\mathop{+}k}\frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) \Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( -k-s+1+\frac{t+y_0}{a}\right) \Gamma \left( -s+1+k+\frac{y_0}{a}\right) }, \end{aligned}$$

(iii)

for \(t\ge (k+n-1) a -y_0\),

$$p_{y_0}(0,t)=0.$$

Moreover, for \(h\ge 1\), it results

(i)

for \(0\le t<k a-y_0\),

$$p_{y_0}(h,t)={\mathcal P}_1(h,t,n,a,y_0),$$

(ii)

for \(k a -y_0 \le t<(k+n-1) a-y_0\),

$$p_{y_0}(h,t)={\mathcal P}_2(h,t,n,a,y_0),$$

(iii)

for \(t\ge (k+n-1) a -y_0\),

$$p_{y_0}(h,t)={\mathcal P}_3(h,t,n,a,y_0),$$

where, for brevity, the expressions of \({\mathcal P}_i(h,t,n,a,y_0)\), \(i=1,2,3\), and the proofs are provided in Appendix A.

Figures 2, 3 and 4 show some plots of the probability \(p_{y_0}(h,t)\) for different choices of the involved parameters, evaluated by means of the explicit expressions obtained in Proposition 2.1. Similarly, the exact values of \(\mathbb {P}\left[ N(t) \ge m\,|\,Y_0=y_0\right]\) shown in Fig. 1 have been evaluated by means of Proposition 2.1.

Remark 2.2

Tables 1, 2, 3 and 4 provide a comparison between the exact state probabilities \(p_{y_0}(h,t)\) obtained in Proposition 2.1 and the probabilities \(\hat{p}_{y_0}(h,t)\) evaluated via numerical inversion of the pgf \(G_N(t,z|y_0)\) by means of Theorem 1 of Abate and Whitt (1992). The results show that the numerical inversion provides a good approximation of the exact distribution for small values of h.
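For readers who wish to reproduce a comparison of this kind, the following Python sketch inverts a pgf numerically by applying the trapezoidal rule to the Cauchy integral on a circle of radius \(r<1\). This is the idea underlying the Fourier-series method of Abate and Whitt (1992), but the parameter choice below (m = 2n nodes, \(r=10^{-\gamma /m}\)) is ours and is not claimed to coincide with their Theorem 1. As test input we use the product-form pgf (5) with uniform \(Y_i\); all function names are hypothetical.

```python
import cmath
import math

def arrival_probs(t, y0, a, k):
    """q_i = P(0 < A_i <= t | Y_0 = y0), i != 0, for Y_i ~ U(0, k a)."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)
    return [cdf(t + y0 - i * a) - cdf(y0 - i * a)
            for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1)
            if i != 0]

def pgf(z, qs):
    """Product of Bernoulli pgfs, i.e. Eq. (5) written with q_i = P(0 < A_i <= t | y0)."""
    g = 1.0
    for q in qs:
        g *= 1.0 - (1.0 - z) * q
    return g

def invert_pgf(G, n, gamma=8.0):
    """Approximate p_n = [z^n] G(z) by the trapezoidal rule on |z| = r with m = 2n nodes.
    The aliasing error sum_{l>=1} p_{n+lm} r^{lm} is at most about 10**(-gamma)."""
    m = max(2 * n, 2)
    r = 10.0 ** (-gamma / m)
    acc = 0j
    for idx in range(m):
        w = cmath.exp(2j * math.pi * idx / m)     # m-th root of unity
        acc += G(r * w) * w ** (-n)
    return (acc / (m * r ** n)).real

def exact_pmf(qs):
    pmf = [1.0]
    for q in qs:
        pmf = [(pmf[n] * (1.0 - q) if n < len(pmf) else 0.0)
               + (pmf[n - 1] * q if n >= 1 else 0.0)
               for n in range(len(pmf) + 1)]
    return pmf

qs = arrival_probs(t=4.0, y0=0.5, a=1.0, k=3)
exact = exact_pmf(qs)
for n in range(len(exact)):
    print(n, exact[n], invert_pgf(lambda z: pgf(z, qs), n))
```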

In the next theorem, the limiting behavior of \(G_N(t,z|y_0)\) is analyzed for \(t\rightarrow 0^{+}\) and \(t\rightarrow +\infty\).

Theorem 2.2

Assuming \(\omega =k a\), with \(k\in \mathbb {N}\) and \(a>0\), for \((n-1)a<y_0<na\), with \(n=1,\dots ,k\), the pgf of N(t) exhibits the following limiting behavior

where

Proof

It follows from Theorem 2.1.

Inspection of (9) shows that the pgf \(G_N(t,z|y_0)\) has no singularity for large t.

Remark 2.3

Note that, in agreement with Eq. (A.13) of Jagerman and Altiok (2003), under the assumptions of Theorem 2.2, from (8) one has the large deviation limit

2.3 Mean Values

Making use of Theorem 2.1, we can now determine the mean of N(t), conditional on \(Y_0=y_0\).

Proposition 2.2

For \(\omega =ka\), \(a>0\), \(k\in {\mathbb N}\), the conditional mean of N(t), given that \(Y_0=y_0\), is given by

Proof

Recalling Theorem 2.1, we consider the case \(0\le t<k a -y_0\) and \(s\ge n\). We have

where

with \(\psi ^{(0)}(z)\) denoting the digamma function (see, for instance, Eq. (6.3.1) of Abramowitz and Stegun (1992)). By considering Eq. (6.3.6) of Abramowitz and Stegun (1992), the differences of the polygamma functions in \(A_3(z)\) can be expressed as

and

so that, by means of L’Hôpital’s rule, we have

Finally, since \(\lim _{z\rightarrow 1} A_1(z)=(-1)^{s-n}\), the thesis follows. The other cases can be treated similarly.

Equation (10) shows that \(\mathbb {E}\left[ N(t)|y_0\right]\) is piecewise linear, continuous, increasing and convex for \(t \ge 0\). The unconditional expected value of N(t) is provided in the following proposition.

Proposition 2.3

For \(\omega =ka\), \(a>0\), \(k\in {\mathbb N}\), the expected value of N(t) is given by

Proof

The proof follows from Eq. (10) and recalling that \(Y_0\sim U(0,k a)\).

From Eq. (11) we note that \(\mathbb {E}\left[ N(t)\right]\) is a continuous, increasing and convex function of t, and that

in agreement with a well-known result on point processes (see Cox and Lewis (1966)).
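Since, given \(Y_0=y_0\), N(t) is a sum of independent indicators, \(\mathbb {E}\left[ N(t)|y_0\right]\) equals the sum of the probabilities (4), and the unconditional mean follows by averaging over \(Y_0\sim U(0,ka)\). The Python sketch below (hypothetical names; \(\omega =ka\) assumed; midpoint rule in \(y_0\)) evaluates both means numerically and can be used to check (10) and (11) and their stated shape properties; the closed forms themselves are not reproduced here.

```python
import math

def arrival_probs(t, y0, a, k):
    """q_i = P(0 < A_i <= t | Y_0 = y0), i != 0, for Y_i ~ U(0, k a)."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)
    return [cdf(t + y0 - i * a) - cdf(y0 - i * a)
            for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1)
            if i != 0]

def conditional_mean(t, y0, a, k):
    """E[N(t) | Y_0 = y0] as the sum of the Bernoulli success probabilities."""
    return sum(arrival_probs(t, y0, a, k))

def unconditional_mean(t, a, k, grid=400):
    """E[N(t)] by averaging the conditional mean over Y_0 ~ U(0, k a) (midpoint rule)."""
    h = k * a / grid
    return sum(conditional_mean(t, (j + 0.5) * h, a, k) for j in range(grid)) / grid

for t in (0.5, 1.0, 2.0, 5.0, 20.0):
    print(t, unconditional_mean(t, a=1.0, k=3))
```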

2.4 Some Cases of Interest

Aiming to provide a more detailed description of the considered model, hereafter we provide the explicit expressions of the probability generating function (5) and of the state probabilities of N(t) (given in Proposition 2.1) in two cases: (i) \(k=1\) and (ii) \(k=2\). These cases refer to the assumption that \(Y_j\) is uniformly distributed over (0, a) and (0, 2a), respectively.

Note that for \(k=1\) the vessels’ arrival times are uniformly distributed over non-overlapping time intervals, which ensures that the interarrival times between consecutive vessel arrivals are distributed as the difference between two uniform random variables.

Proposition 2.4

Under the assumptions of Theorem 2.1, in case \(k=1\), \(a>0\), we have

Moreover,

and, for \(n\ge 1\)

Proof

The proof follows from Theorem 2.1 and Proposition 2.1 for \(k=1\).

The case \(k=2\) can be analyzed under two different scenarios: \(y_0\in (0,a)\) and \(y_0\in (a,2 a)\).

Proposition 2.5

Under the assumptions of Theorem 2.1, in case \(k=2\), \(a>0\) and assuming \(y_0\in (0,a)\), one has

The state probabilities given in Proposition 2.1 have the following expressions

and, for \(n\ge 2\),

where, for brevity, the expression of \({\mathcal N}_1(h,t,a,y_0)\) is provided in Appendix B.

If \(k=2\), \(a>0\) and \(y_0\in (a,2 a)\), we have

Moreover, one has

and for \(n\ge 2\)

where the expression of \({\mathcal N}_2(h,t,a,y_0)\) is given in Appendix B.

Proof

The proof follows from Theorem 2.1 and Proposition 2.1 for \(k=2\).

The probabilities \(p_{y_0}(h,t)\) obtained in Proposition 2.5 are plotted in Fig. 5 for \(a=1\) and two different choices of \(y_0\). For \(h=0\) the probabilities \(p_{y_0}(h,t)\) are decreasing in t. Moreover, for \(h\ge 1\), the curves consist of straight and curved segments joined continuously. This shape is partially inherited from the triangular distribution arising in the case \(k=1\), when the times between consecutive vessel arrivals are distributed as the difference between two uniform random variables.

Fig. 5 Probabilities \(p_{y_0}(n,at)\) obtained in Proposition 2.5 for \(a=1\), \(n=0,1,2\) with \(y_0=0.5\) (upper panels) and \(y_0=1.5\) (lower panels)

3 The Stationary Arrival Counting Process

In the previous section we obtained the probability distribution of the counting process that describes the vessel arrivals, conditional on an arrival occurring at time 0. Unfortunately, due to the complexity of the expressions in Proposition 2.1, the unconditional distribution is very hard to obtain in closed form in the general setting. However, given the relevance of the unconditional distribution in applications, hereafter we derive it in some special cases. We expect these results to give a first insight into the properties and behavior of the considered processes.

Unlike Section 2.2, which was devoted to the conditional probability (7), in this section we deal with the (unconditional) distribution

$$p(n,t):=\mathbb {P}\left[ N(t)=n\right] ,\qquad n\in \mathbb {N}_0,\; t\ge 0. \tag{15}$$

In general, this distribution can easily be obtained in a formal way, but its explicit form requires hard calculations due to the lengthy and complicated expressions obtained in Proposition 2.1. Furthermore, we also focus on the stationary arrival counting process \(N_e(t)\), which represents the number of arrivals in a time interval of length t when the initial time is an arbitrarily chosen instant. This process is useful since it allows one to derive relevant information on the interarrival times between consecutive vessel arrivals. Let us denote by

$$\widetilde{p}(n,t):=\mathbb {P}\left[ N_e(t)=n\right] ,\qquad n\in \mathbb {N}_0,\; t\ge 0, \tag{16}$$

the probability law of \(N_e(t)\). The probability distributions of the counting processes N(t) and \(N_e(t)\) are linked by means of the following relations (see Chapter 4 of Cox and Lewis (1966)):

where X has the same distribution as the interarrival times \(X_i\). Note that, due to (12), for all \(i\in {\mathbb Z}\) one has

Hence, from (17) one has \(\int _0^{\infty }p(n,t) \,\textrm{d}t =a\) for all \(n\in \mathbb {N}_0\), and

where \(\widetilde{T}_{k\mathop{+}1}\) is the first-passage-time of \(N_e(t)\) through state \(k+1\).
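The identity \(\int _0^{\infty }p(n,t) \,\textrm{d}t =a\) can also be checked numerically. Intuitively, the integral is the expected time that N(t) spends in state n, i.e. the expected length of the interarrival interval following the n-th arrival after time 0, and each \(X_i\) has mean a. The Python sketch below (hypothetical names; \(\omega =ka\) assumed) approximates p(n, t) by averaging the conditional law over a midpoint grid for \(Y_0\sim U(0,ka)\) and integrates in t with the trapezoidal rule, truncating at \(t=(n+k+2)a\); beyond \(t=(n+k+1)a\) at least n+1 vessels have surely arrived, so p(n, t) vanishes there.

```python
import math

def arrival_probs(t, y0, a, k):
    """q_i = P(0 < A_i <= t | Y_0 = y0), i != 0, for Y_i ~ U(0, k a)."""
    omega = k * a
    cdf = lambda x: min(max(x / omega, 0.0), 1.0)
    return [cdf(t + y0 - i * a) - cdf(y0 - i * a)
            for i in range(math.floor((y0 - omega) / a), math.ceil((t + y0) / a) + 1)
            if i != 0]

def conditional_pmf(t, y0, a, k):
    """p_{y0}(n, t), n = 0, 1, ..., via the Poisson-binomial recursion."""
    pmf = [1.0]
    for q in arrival_probs(t, y0, a, k):
        pmf = [(pmf[n] * (1.0 - q) if n < len(pmf) else 0.0)
               + (pmf[n - 1] * q if n >= 1 else 0.0)
               for n in range(len(pmf) + 1)]
    return pmf

def integrated_state_prob(n, a, k, dt=0.02, grid=80):
    """Trapezoidal approximation of int_0^inf p(n, t) dt, with
    p(n, t) = E[p_{Y_0}(n, t)], Y_0 ~ U(0, k a) (midpoint rule in y0)."""
    horizon = (n + k + 2) * a
    steps = int(round(horizon / dt))
    h = k * a / grid
    total = 0.0
    for s in range(steps + 1):
        t = s * dt
        p = 0.0
        for j in range(grid):
            pmf = conditional_pmf(t, (j + 0.5) * h, a, k)
            p += pmf[n] if n < len(pmf) else 0.0
        weight = 0.5 if s in (0, steps) else 1.0   # trapezoidal weights
        total += weight * dt * p / grid
    return total

for k in (1, 2):
    print(k, [round(integrated_state_prob(n, a=1.0, k=k), 3) for n in (0, 1)])
```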

Let us now obtain some results concerning the correlations of the random variables \(X_i\). Since the available expressions for the distribution of N(t) are very heavy, we consider only a few instances. Hence, for both cases \(k=1\) and \(k=2\), hereafter we focus on the expressions of (i) the (unconditional) probability law of N(t), (ii) the probability density function of the interarrival times \(X_i\), (iii) the probability law of \(N_e(t)\), and (iv) the variance and the autocorrelation coefficients of \(X_i\).

3.1 Case \(k=1\)

Proposition 3.1

In the case \(k=1\), for \(a>0\), the probability law of N(t), defined in Eq. (15), is given by

and, for \(n\in \mathbb {N},\)

Proof

The thesis follows from Eqs. (13) and (14) and recalling that \(Y_0\sim U(0,a)\).

Remark 3.1

From Proposition 3.1, we have that the mode of p(n, t), \(t\ge 0\), is given by

As an immediate consequence of Proposition 3.1, we obtain the following results about the density of the interarrival times.

Corollary 3.1

Under the assumptions of Proposition 3.1, the probability density function of the interarrival times \(X_i\), for \(i\in \mathbb {Z}\), is given by

Proof

The thesis follows noting that \(\mathbb {P}(X_i>t)=\mathbb {P}(X_1>t)=p(0,t)\), for all \(i\in \mathbb {Z}\).

The following proposition provides, for \(n\in {\mathbb {N}_0}\), the explicit expression of the probabilities \(\widetilde{p}(n,t)\) introduced in Eq. (16).

Proposition 3.2

For \(k=1\), \(a>0\), the probability law of \(N_e(t)\) is given by

and, for \(n\in \mathbb {N}\),

Proof

The result follows from Proposition 3.1 and Eq. (18), due to Eq. (17).

Figure 6 shows some plots of the probabilities \(\widetilde{p}(n,t)\) obtained in Proposition 3.2 for \(k=1\) and different values of n.

Let us now provide the mean first-passage-time of \(N_e(t)\) through level \(n+1\), \(n\in \mathbb {N}_0\), which is asymptotically linear in n.

Corollary 3.2

Under the assumptions of Proposition 3.1, from (19) one has

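With reference to the interarrival times \(X_i\), \(i\in {\mathbb Z}\), the following proposition provides the variance and the autocorrelation coefficients

$$\rho _h=\textrm{Corr}(X_i,X_{i+h})=\frac{\textrm{Cov}(X_i,X_{i+h})}{\textrm{Var}(X_i)},\qquad i\in {\mathbb Z},\; h\in {\mathbb N}. \tag{21}$$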

Proposition 3.3

In the case \(k=1\), \(a>0\), the variance of \(X_i\), \(i\in {\mathbb Z}\), is

and the autocorrelation coefficients (21) are given by

Proof

Due to Eq. 8 of Jagerman and Altiok (2003), and recalling Eq. (20), we have

From this result, and by means of Eq. 9 of Jagerman and Altiok (2003), we obtain

The thesis then follows.
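As an independent sanity check (not part of the proof above), note that for \(k=1\) the lay periods are disjoint, so the arrival order is preserved and \(X_i=a+Y_i-Y_{i-1}\). With \(\textrm{Var}(Y_i)=a^2/12\) this gives \(\textrm{Var}(X_i)=a^2/6\), \(\rho _1=-1/2\) and \(\rho _h=0\) for \(h\ge 2\). The Monte Carlo sketch below estimates these quantities from simulated ordered arrivals; the sample size and seed are arbitrary, and the helper names are hypothetical.

```python
import random
import statistics

def interarrival_sample(num, a, k, seed=0):
    """Interarrival times of the ordered arrivals i*a + Y_i, Y_i ~ U(0, k*a)."""
    rng = random.Random(seed)
    arrivals = sorted(i * a + rng.uniform(0.0, k * a) for i in range(num))
    xs = [later - earlier for earlier, later in zip(arrivals, arrivals[1:])]
    return xs[2 * k: len(xs) - 2 * k]        # trim edge effects of the finite simulation

def variance_and_autocorrelations(xs, max_lag):
    """Sample variance and sample autocorrelations rho_1, ..., rho_max_lag."""
    mean = statistics.fmean(xs)
    d = [x - mean for x in xs]
    var = statistics.fmean(v * v for v in d)
    rhos = [statistics.fmean(d[i] * d[i + h] for i in range(len(d) - h)) / var
            for h in range(1, max_lag + 1)]
    return var, rhos

xs = interarrival_sample(100_000, a=1.0, k=1, seed=42)
var, rhos = variance_and_autocorrelations(xs, 3)
print(var, [round(r, 3) for r in rhos])      # compare with a^2/6 and (-1/2, 0, 0)
```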

3.2 Case \(k=2\)

Proposition 3.4

In the case \(k=2\), \(a>0\), the probability law of N(t), defined in Eq. (15), is given by

and for \(n\ge 3\)

Proof

The proof follows from Proposition 2.5 recalling that \(Y_0\sim U(0,2a)\).

Remark 3.2

Due to Proposition 3.4, the mode of p(n, t), \(t\ge 0\), is given by

The following result thus holds.

Corollary 3.3

Under the assumptions of Proposition 3.4, the probability density function of the interarrival times \(X_i\) for \(i\in \mathbb {Z}\) is given by

The next proposition provides the explicit expression of the probabilities \(\widetilde{p}(n,t)\), defined in Eq. (16).

Proposition 3.5

For \(k=2\), \(a>0\), the probability law of \(N_e(t)\) is given by

and for \(n\ge 3\)

Proof

The result follows from Proposition 3.4, recalling Eq. (17).

The plots of some probabilities of \(N_e(t)\) given in Proposition 3.5 are shown in Fig. 7.

Hereafter we give the mean first-passage-time of \(N_e(t)\) through level \(n+1\), which for \(n\ge 3\) coincides with that of the case \(k=1\).

Corollary 3.4

Under the assumptions of Proposition 3.4, from (19) one has

Also in this case, similarly to Proposition 3.3, we are able to provide the variance and the autocorrelation coefficients \(\rho _h\) of the interarrival times.

Proposition 3.6

For \(k=2\), \(a>0\), the variance of the interarrival times is given by

and the autocorrelation coefficients (21) are given by

Proof

Due to Eq. 8 of Jagerman and Altiok (2003), and recalling Proposition 3.5, we have

The expression of the autocorrelation coefficients \(\rho _i\), \(i=1,2,3\), follows from Eq. (9) of Jagerman and Altiok (2003) and noting that

Moreover, for \(h\ge 4\), one has

so that

Remark 3.3

Note that for both cases \(k=1\) and \(k=2\) we have \(\sum _{h=1}^{+\infty }\rho _h=-\frac{1}{2}\), in agreement with a well-known result on the autocorrelations of stationary time series, cf. Hassani (2009).
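The relation in Remark 3.3 can be illustrated numerically for \(k=2\), where the arrival order is no longer preserved. The Python sketch below (hypothetical names, arbitrary sample size and seed) estimates \(\rho _h\) from simulated ordered arrivals; according to Proposition 3.6, the estimates for \(h=1,2,3\) should be negative up to Monte Carlo error, those for \(h\ge 4\) close to zero, and their sum close to \(-1/2\).

```python
import random
import statistics

def interarrival_sample(num, a, k, seed=0):
    """Interarrival times of the ordered arrivals i*a + Y_i, Y_i ~ U(0, k*a)."""
    rng = random.Random(seed)
    arrivals = sorted(i * a + rng.uniform(0.0, k * a) for i in range(num))
    xs = [later - earlier for earlier, later in zip(arrivals, arrivals[1:])]
    return xs[2 * k: len(xs) - 2 * k]        # trim edge effects of the finite simulation

def autocorrelations(xs, max_lag):
    """Sample autocorrelations rho_1, ..., rho_max_lag."""
    mean = statistics.fmean(xs)
    d = [x - mean for x in xs]
    var = statistics.fmean(v * v for v in d)
    return [statistics.fmean(d[i] * d[i + h] for i in range(len(d) - h)) / var
            for h in range(1, max_lag + 1)]

xs = interarrival_sample(100_000, a=1.0, k=2, seed=7)
rhos = autocorrelations(xs, 8)
print([round(r, 3) for r in rhos], round(sum(rhos), 3))   # sum should be close to -0.5
```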

4 Application to the SHIP/M/\(\infty\) Queue

This section provides an application of the previous results, in which we assume that the counting process N(t) introduced in Section 2 is the arrival process of a suitable queueing system having an infinite number of servers. Furthermore, we assume that the service times are independent and identically distributed, having exponential distribution with parameter \(\mu >0\). Henceforth, the resulting system is called the SHIP/M/\(\infty\) queue. The assumption of an infinite number of servers approximates a real situation in which the port facilities are large enough to ensure service even for a large number of arriving vessels. Clearly, a more realistic model would include a finite number of servers. Hence, the following analysis of the SHIP/M/\(\infty\) queue can be viewed as a first step toward the investigation of the bulk port queueing mechanism.

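Hereafter, we aim to study the number \(Q_n\) of customers at the port seen by the arrival of the n-th customer. The main result is based on Eq. (2.1) of Brandt (1987), which, upon a correction, reads

$$Q_n=\sum _{i=1}^{+\infty }\textbf{1}_{\left\{ S_{n-i}>X_{n-i+1}+X_{n-i+2}+\cdots +X_n\right\} }, \tag{22}$$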

where \(S_{n-i}\) denotes the service time of the \((n-i)\)-th customer. The expected value of \(Q_n\) is obtained in the following proposition. It is worth mentioning that \(\mathbb {E}(Q_n)\) does not depend on n, since by assumption the interarrival times \(X_i\) are stationary and the service times \(S_j\) are i.i.d.

Proposition 4.1

In the case of the SHIP/M/\(\infty\) queue, for \(k\in \mathbb {N}\) and \(\mu >0\), the expected number of customers at the port seen by the arrival of the n-th customer is

Proof

Due to Eq. (22), one has

Hence, recalling that \(\left\{ X_i\right\}\) is a stationary sequence and that \(S_n\), \(n\in {\mathbb Z}\), is a sequence of independent random variables exponentially distributed with parameter \(\mu\), we have

so that the thesis follows from Eq. (11).

Note that the mean number of customers at the port seen by the arrival of a customer depends on the parameters \(\mu\) and a only through their product. Moreover, \(\mathbb {E}(Q_n)\) is decreasing in \(\mu a\); it tends to 0 as \(\mu a\rightarrow +\infty\) and to \(+\infty\) as \(\mu a\rightarrow 0^{+}\). Furthermore, \(\mathbb {E}(Q_n)\) is increasing in \(k\in \mathbb {N}\) and it tends to \(1/(\mu a )\) as \(k\rightarrow \infty\).
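These properties can be explored by simulation without using the closed form (23). The Python sketch below is a hypothetical Monte Carlo estimator, not the authors’ method: conditionally on the arrival times, the customer arriving at \(A_j\) is still in service at a later arrival instant \(A_r\) with probability \(e^{-\mu (A_r-A_j)}\), so by (22) \(\mathbb {E}(Q_n)\) can be estimated by averaging \(\sum _{j<r}e^{-\mu (A_r-A_j)}\) over many arrivals r, which has lower variance than sampling the service times directly. The look-back horizon, burn-in, sample size and seed are our choices.

```python
import math
import random

def mean_customers_seen(a, mu, k, num=20_000, seed=0):
    """Monte Carlo estimate of E(Q_n) for the SHIP/M/infinity queue (omega = k a)."""
    rng = random.Random(seed)
    arrivals = sorted(i * a + rng.uniform(0.0, k * a) for i in range(num))
    horizon = 30.0 / mu                    # exp(-mu * horizon) = exp(-30) is negligible
    first = int(horizon / a) + 2 * k + 1   # burn-in: the look-back window is fully simulated
    last = num - 2 * k                     # unsimulated later vessels could arrive before these
    total = 0.0
    for r in range(first, last):
        j, acc = r - 1, 0.0
        while j >= 0 and arrivals[r] - arrivals[j] < horizon:
            acc += math.exp(-mu * (arrivals[r] - arrivals[j]))   # P(S_j > A_r - A_j)
            j -= 1
        total += acc
    return total / (last - first)

for k in (1, 2, 4, 8):
    print(k, mean_customers_seen(a=1.0, mu=1.0, k=k))   # compare with 1/(mu a) = 1
```

With a fixed seed, rescaling a by c and \(\mu\) by 1/c rescales all simulated times by c, so the estimate is unchanged up to rounding, mirroring the dependence on \(\mu a\) only.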

Knowing \(\mathbb {E}(Q_n)\) allows one to determine the parameters for which the expected number of customers equals a given value, say q. Indeed, thanks to (23), the equation \(\mathbb {E}(Q_n)=q\), for \(q>0\), can be solved numerically. Fig. 8 shows an example of the solution in terms of \(\mu a\). The solution is decreasing in q and increasing in k. This means that a small number of customers is expected when k is small and \(\mu a\) is large, i.e. when the mean service time \(1/\mu\) is small compared with a and when overlaps in the vessel arrivals are rare.
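Because \(\mathbb {E}(Q_n)\) is decreasing in \(\mu a\) and ranges from \(+\infty\) to 0, the equation \(\mathbb {E}(Q_n)=q\) has a unique root, provided the mean is also continuous. Bisection finds this root reliably. The sketch below takes the mean as a function of \(x=\mu a\). Eq. (23) is not reproduced here, so the example plugs in the limit \(1/(\mu a)\) for \(k\rightarrow \infty\) as a stand-in, since its root \(x=1/q\) is known. The helper name `solve_mu_a` is hypothetical.

```python
from typing import Callable


def solve_mu_a(mean_queue: Callable[[float], float], q: float,
               lo: float = 1e-9, hi: float = 1.0, tol: float = 1e-12) -> float:
    """Solve mean_queue(x) = q for x = mu*a by bisection (hypothetical helper).

    Assumes mean_queue is continuous and decreasing on (0, inf), tending to
    +inf as x -> 0 and to 0 as x -> inf, as stated for E(Q_n).
    """
    if q <= 0:
        raise ValueError("q must be positive")
    while mean_queue(hi) > q:   # enlarge the bracket to the right
        hi *= 2.0
    while mean_queue(lo) < q:   # and to the left, if needed
        lo /= 2.0
    while hi - lo > tol * max(1.0, hi):
        mid = 0.5 * (lo + hi)
        if mean_queue(mid) > q:  # mean still too large: the root lies to the right
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Stand-in for Eq. (23): the k -> infinity limit 1/(mu*a), whose root is 1/q.
x_star = solve_mu_a(lambda x: 1.0 / x, q=2.0)
```

Here `x_star` is approximately 0.5. Bisection only uses the monotonicity stated in the text, so replacing the stand-in with an implementation of Eq. (23) for finite k requires no change to the solver.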

In the following proposition we provide a lower and an upper bound for the probability of an empty queue.

Proposition 4.2

In the case of the SHIP/M/\(\infty\) queue, for \(k\in \mathbb {N}\) and \(\mu >0\), the probability of an empty queue satisfies

where \(\phi _1\) is given in terms of (23) and, for brevity, the expression of \(\phi _2\) is provided in Appendix C.

Proof

The thesis follows from Eq. (22).

Remark 4.1

From Proposition 4.2 we have

which suggests that, for large a and \(\mu\), one has \({\mathbb P}(Q_n=0)\approx 1\).

Proposition 4.3

In the case of the SHIP/M/\(\infty\) queue, for \(k=1\) and \(\mu >0\), one has

and, for \(k=2\),

where \(g(a,\mu )=\left[ 4a \mu \,e^{3a\mu }(4a^3\mu ^3-2a^2\mu ^2-a\mu +2) -e^{2a\mu }(a\mu +2)^2 +2e^{a\mu }(a\mu -2)^2 -(a\mu -2)^2\right]\).
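The function \(g\) depends on a and \(\mu\) only through \(x=a\mu\), so it can be coded as a function of the product. The sketch below is a direct transcription of the bracket defining \(g(a,\mu )\) in Proposition 4.3. Note that \(g=0\) at \(a\mu =0\), because the constant terms \(-4+8-4\) cancel. For small \(a\mu\) the four terms therefore nearly cancel, and floating-point evaluation loses relative accuracy there. For large \(a\mu\), the factor \(e^{3a\mu }\) overflows a double once \(3a\mu\) exceeds about 709.

```python
import math


def g(a: float, mu: float) -> float:
    """Bracketed function g(a, mu) of Proposition 4.3, evaluated via x = a*mu."""
    x = a * mu
    return (4.0 * x * math.exp(3.0 * x) * (4.0 * x**3 - 2.0 * x**2 - x + 2.0)
            - math.exp(2.0 * x) * (x + 2.0) ** 2
            + 2.0 * math.exp(x) * (x - 2.0) ** 2
            - (x - 2.0) ** 2)


# g depends on a and mu only through their product:
same = g(2.0, 0.5) == g(1.0, 1.0)
```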

Proof

The thesis is a consequence of Proposition 4.2.

In Fig. 9, we plot the bounds \(\phi _1(k,a,\mu )\) and \(\phi _2(k,a,\mu )\) for different choices of the parameters, using the expressions obtained in Propositions 4.2 and 4.3. The figure shows that the bounds approach 1 more slowly as k increases.

5 Concluding Remarks

In this paper we have studied the model of port dynamics proposed in Jagerman and Altiok (2003). In this model, each vessel has a scheduled arrival time but may arrive at the port, uniformly, at any instant of a constant lay period. In particular, we have considered the counting process N(t), which represents the number of scheduled vessels arriving during the time interval (0, t], \(t>0\), and we have obtained the explicit expression of its probability generating function. The latter allowed us to obtain the probability distribution of N(t) and its expected value. Unlike other papers, which rely on approximations or simulation techniques, we have obtained explicit analytical results. Starting from the probability law of N(t), standard computations have allowed us to obtain the probability law of the stationary counting process related to N(t). We have also derived various results concerning the autocorrelations of the random variables \(\{X_i\}\), \(i\in {\mathbb Z}\), giving the actual interarrival time between the \((i-1)\)-th and the i-th vessel arrival.

The final part of the paper has been devoted to the queueing system characterized by stationary interarrival times \(\{X_i\}\), exponential service times and an infinite number of servers. The latter assumption is a suitable formal approximation of a real situation in which the port facilities are large enough to serve even a large number of arriving vessels. Some results on the average number of customers at the port and on the probability of an empty queue have been obtained.

The present results can be further exploited in two directions: the more general case in which the lay period \(\omega\) is a real number (not necessarily a multiple of the parameter a), and the more realistic SHIP/M/N queue. This will be the object of future investigation.

Data Availability

Data sharing is not applicable to this article since no datasets were generated or analyzed during the current study.

References

Abate J, Whitt W (1992) Numerical inversion of probability generating functions. Oper Res Lett 12:245–251. https://doi.org/10.1016/0167-6377(92)90050-D

Abramowitz M, Stegun IA (1992) Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York

Altiok T (2000) Tandem queues in bulk port operations. Ann Oper Res 93:1–14. https://doi.org/10.1023/A:1018915605231

Artalejo JR, Economou A, Gomez-Corral A (2008) Algorithmic analysis of the Geo/Geo/c retrial queue. Eur J Oper Res 189(3):1042–1056. https://doi.org/10.1016/j.ejor.2007.01.060

Bar-Lev SK, Blanc H, Boxma O, Janssen G, Perry D (2013) Tandem queues with impatient customers for blood screening procedures. Methodol Comput Appl Probab 15(2):423–451. https://doi.org/10.1007/s11009-011-9250-y

Bountali O, Economou A (2019) Strategic customer behavior in a two-stage batch processing system. Queueing Syst 93:3–29. https://doi.org/10.1007/s11134-019-09615-0

Brandt A (1987) On stationary queue length distributions for G/M/s/r queues. Queueing Syst 2:321–332. https://doi.org/10.1007/BF01150044

Cox DR, Lewis PAW (1966) The Statistical Analysis of Series of Events. Methuen’s Monographs on Applied Probability and Statistics, London

Dahal K, Galloway S, Burt G, McDonald J, Hopkins I (2003) A port system simulation facility with an optimization capability. Int J Comput Intell Appl 3(4):395–410. https://doi.org/10.1142/S1469026803001099

de la Peña-Zarzuelo I, Freire-Seoane MJ, López-Bermúdez B (2020) New queuing theory applied to port terminals and proposal for practical application in container and bulk terminals. J Waterw Port Coast Ocean Eng 146(1):1–7. https://doi.org/10.1061/(ASCE)WW.1943-5460.0000535

Dimitrakopoulos Y, Economou A, Leonardos S (2021) Strategic customer behavior in a queueing system with alternating information structure. Eur J Oper Res 291(3):1024–1040. https://doi.org/10.1016/j.ejor.2020.10.054

Frankel EG (1987) Port Planning and Development. Wiley, New York

Goerlandt F, Kujala P (2011) Traffic simulation based ship collision probability modeling. Reliab Eng Syst Saf 96:91–107. https://doi.org/10.1016/j.ress.2010.09.003

Guadagni G, Ndreca S, Scoppola B (2011) Queueing systems with pre-scheduled random arrivals. Math Methods Oper Res 73:1–18. https://doi.org/10.1007/s00186-010-0330-5

Hansen ER (1975) A Table of Series and Products. Prentice-Hall Inc., Englewood Cliffs, NJ

Hassani H (2009) Sum of the sample autocorrelation function. Random Operators / Stochastic Eqs. 17:125–130. https://doi.org/10.1515/ROSE.2009.008

Inoue Y, Boxma O, Perry D, Zacks S (2018) Analysis of \(M^x/G/1\) queues with impatient customers. Queueing Syst 89(3–4):303–350. https://doi.org/10.1007/s11134-017-9565-7

Jagerman D, Altiok T (2003) Vessel arrival process and queueing in marine ports handling bulk materials. Queueing Syst 45:223–243. https://doi.org/10.1023/A:1027324618360

Lancia C, Guadagni G, Ndreca S, Scoppola B (2018) Asymptotics for the late arrivals problem. Math Methods Oper Res 88:475–493. https://doi.org/10.1007/s00186-018-0643-3

Lang N, Veenstra A (2010) A quantitative analysis of container vessel arrival planning strategies. OR Spectrum 32:477–499. https://doi.org/10.1007/s00291-009-0186-3

Prudnikov AP, Brychkov YA, Marichev OI (1986) Integrals and Series, Vol. 1: Elementary Functions. Gordon & Breach Science Publishers, New York

Tsinker GP (2004) Port engineering: Planning, construction, maintenance, and security. Wiley, New York

van Asperen E, Dekker R, Polman M, de Swaan Arons H, Waltman L (2003) Arrival Processes for Vessels in a Port Simulation. ERIM Report Series Research in Management, No. ERS-2003-067-LIS

van Vianen T, Ottjes J, Lodewijks G (2014) Simulation-based determination of the required stockyard size for dry bulk terminals. Simul Model Pract Theory 42:119–128. https://doi.org/10.1016/j.simpat.2013.12.010

Wadhwa LC (1992) Planning operations of bulk loading terminals by simulation. J Waterw Port Coast Ocean Eng 118(3):300–315. https://doi.org/10.1061/(ASCE)0733-950X(1992)118:3(300)

Acknowledgements

The authors are members of the group GNCS of INdAM (Istituto Nazionale di Alta Matematica).

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement. This work is partially supported by MIUR–PRIN 2017, Project Stochastic Models for Complex Systems (no. 2017JFFHSH).

Ethics declarations

Conflicts of Interest

All the authors declare no financial competing interests. The authors B.M. and P.P. declare no non-financial competing interests. The author A.D.C. is a member of the Editorial Board of MCAP.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

A. Conditional Distribution of N(t)

In this appendix we provide the explicit expressions of the conditional probabilities of N(t) under different scenarios.

A.1 Expression of \({\mathcal P}_1(h,t,n,a,y_0)\)

Let us assume \((n-1)a<y_0<na\), for \(n=1,\dots ,k\), and \(0\le t<k a-y_0\). Setting \(s=\lfloor (t+y_0)/a \rfloor +1\), we have

(i)

if \(1\le h\le n-s+k-1\),

$$\begin{aligned}{} {\mathcal P}_1(h,t,n,a,y_0)&=\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\,\frac{\Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( 1+k-s+\frac{y_0}{a}\right) }\,\frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) }{\Gamma \left( 1-k-s+\frac{t+y_0}{a}\right) }\, \left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\\{} & \quad \times \left( \frac{t}{k a-t}\right) ^h {n\mathop{-}s\mathop{+}k\mathop{-}1\choose h}{{}_{2} \, F_{1}\left( -h,-2s+2n, k+n-s-h, 1-\frac{k a}{t}\right) }\\{} & {} \quad+\frac{(-1)^{s-n}}{k^{2s\mathop{-}2n}} \left( 1-\frac{t}{k a}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1} \sum _{m\mathop{=}1}^{h}\delta _m(t)\\{} & {} \quad\times \left( \frac{t}{ka-t}\right) ^{h-m} \left( {\begin{array}{c}n-s+k-1\\ h-m\end{array}}\right) {{}_{2}F_{1}\left( -h+m,-2s+2n, k+n-s-h+m, 1-\frac{k a}{t}\right) }, \end{aligned}$$

(ii)

if \(h=n-s+k\)

$$\begin{aligned}{} {\mathcal P}_1(h,t,n,a,y_0)&=(-1)^{s\mathop{-}n\mathop{+}1}\frac{\Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( 1+k-s+\frac{y_0}{a}\right) }\cdot \frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) }{\Gamma \left( 1-k-s+\frac{t+y_0}{a}\right) }\cdot \frac{(2s-2n)}{k^{2s\mathop{-}2n}}\\{} & {} \quad\times \left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\,\left( \frac{t}{ka-t}\right) ^{n\mathop{-}s\mathop{+}k\mathop{-}1}{{}_{2}F_{1}\left( 2n-2s+1,1-k-n+s,2,1-\frac{ka}{t}\right) }\\{} & {} \quad+\frac{(-1)^{s-n}}{k^{2s\mathop{-}2n}}\left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\sum _{m\mathop{=}1}^{n\mathop{-}s\mathop{+}k}\delta _m(t)\\{} & {} \quad \times \left( \frac{t}{ka-t}\right) ^{n\mathop{-}s\mathop{+}k\mathop{-}m} \left( {\begin{array}{c}n-s+k-1\\ n-s+k-m\end{array}}\right) {{}_{2}F_{1}\left( -n+s-k+m,-2s+2n,m,1-\frac{ka}{t}\right) }, \end{aligned}$$

(iii)

if \(n-s+k+1\le h\le s-n+k-1\)

$$\begin{aligned}{} {\mathcal P}_1(h,t,n,a,y_0)&=\frac{\Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( 1+k-s+\frac{y_0}{a}\right) }\,\frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) }{\Gamma \left( 1-k-s+\frac{t+y_0}{a}\right) }\,\frac{(-1)^{h\mathop{-}k\mathop{+}1}}{k^{2s\mathop{-}2n}}\\&\quad \times \left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\,\left( \frac{t}{ka-t}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\left( {\begin{array}{c}2s-2n\\ h-k-n+s+1\end{array}}\right) \\{} & \quad\times {{}_{2}F_{1}\left( h+1+n-k-s,1-k-n+s,2+h-k-n+s,1-\frac{ka}{t}\right) }\\{} & \quad+\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\sum _{m\mathop{=}\max \{1,h\mathop{-}(n\mathop{-}s\mathop{+}k\mathop{-}1)\}}^h \delta _m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m} \\{} & \quad \times \left( {\begin{array}{c}n-s+k-1\\ h-m\end{array}}\right) {{}_{2}F_{1}\left( -h+m,-2s+2n,k+n-s-h+m,1-\frac{ka}{t}\right) } \\{} & {} \quad+\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\sum _{m\mathop{=}1}^{h\mathop{-}(n\mathop{-}s\mathop{+}k)}\delta _m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m} \\{} & {} \quad\times \left( 1-\frac{ka}{t}\right) ^{h\mathop{-}m\mathop{-}k\mathop{-}n\mathop{+}s\mathop{+}1}\left( {\begin{array}{c}2s-2n\\ h-m-k-n+s+1\end{array}}\right) \\{} & \quad \times {{}_{2}F_{1}\left( h-m+1+n-k-s,1-k-n+s,2+h-m-k-n+s,1-\frac{ka}{t}\right) }, \end{aligned}$$

(iv)

if \(h\ge s-n+k\)

$$\begin{aligned}{} {\mathcal P}_1(h,t,n,a,y_0)&=\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\sum _{m\mathop{=}h\mathop{-}(n\mathop{-}s\mathop{+}k\mathop{-}1)}^h \delta _m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m}\\& \quad \times \left( {\begin{array}{c}n-s+k-1\\ h-m\end{array}}\right) {{}_{2}F_{1}\left( -h+m,-2s+2n, k+n-s-h+m,1-\frac{ka}{t}\right) } \\ & \quad +\frac{(-1)^{s\mathop{-}n}}{k^{2s\mathop{-}2n}}\left( 1-\frac{t}{ka}\right) ^{k\mathop{+}n\mathop{-}s\mathop{-}1}\sum _{m\mathop{=}h\mathop{-}(s\mathop{-}n\mathop{+}k\mathop{-}1)}^{h\mathop{-}n\mathop{+}s\mathop{-}k} \delta _m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m} \\{} & \quad\times \left( 1-\frac{ka}{t}\right) ^{h\mathop{-}m\mathop{+}s\mathop{-}k\mathop{-}n\mathop{+}1}\left( {\begin{array}{c}2s-2n\\ h-m-k-n+s+1\end{array}}\right) \\{} & \quad \times {{}_{2}F_{1}\left( h-m+1+n-k-s,1-k-n+s,2+h-m-k-n+s,1-\frac{ak}{t}\right) }, \end{aligned}$$

where

is the Gauss hypergeometric function and we have set

with

for \({\mathcal S}(j,r)\) denoting the Stirling number of the first kind.
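In the displays of Appendix A.1, every \({}_2F_1\) has a numerator parameter that is a non-positive integer, such as \(-h\), \(-2s+2n\) or \(1-k-n+s\). Under the assumptions of A.1, one has \(n\le s\le k\). The hypergeometric series therefore terminates, and each \({}_2F_1\) is a polynomial in its argument. The sketch below evaluates \({}_2F_1(-d,b;c;z)\) for an integer \(d\ge 0\) as a finite sum. By the symmetry \({}_2F_1(\alpha ,\beta ;c;z)={}_2F_1(\beta ,\alpha ;c;z)\), the non-positive parameter can always be passed first. The function name is ours, and it assumes that no denominator factor \(c+j\) vanishes before the series stops.

```python
from fractions import Fraction


def hyp2f1_terminating(degree: int, b, c, z):
    """Gauss hypergeometric function 2F1(-degree, b; c; z) for integer degree >= 0.

    Since (-degree)_j = 0 for j > degree, the series is a polynomial in z.
    Assumes c + j != 0 for j = 0, ..., degree - 1.
    Works with int, float or Fraction arguments; Fraction gives exact results.
    """
    if degree < 0:
        raise ValueError("degree must be a non-negative integer")
    term = Fraction(1)
    total = Fraction(1)
    for j in range(degree):
        # term_{j+1} / term_j = (-degree + j)(b + j) z / ((c + j)(j + 1))
        term = term * (-degree + j) * (b + j) * z / ((c + j) * (j + 1))
        total += term
    return total


# Chu-Vandermonde check: 2F1(-3, 2; 5; 1) = (5 - 2)_3 / (5)_3 = 2/7.
value = hyp2f1_terminating(3, 2, 5, 1)
```

For a factor such as \({}_2F_1(-h+m,-2s+2n,\dots )\) one would call `hyp2f1_terminating(h - m, 2*n - 2*s, c, z)`, with c and z taken from the display.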

Proof

Due to Eq. (52.2.1) of Hansen (1975), we have

Considering that

after some calculations Eq. (28) becomes

With a similar reasoning, it can be shown that

Hence, due to Eqs. (29) and (30), we have

where \(\delta _m(t)\) has been defined in Eq. (26). Moreover, we have

where

Finally, recalling Eqs. (31) and (32), the proof follows from Theorem 2.1 after some calculations.

A.2 Expression of \({\mathcal P}_2(h,t,n,a,y_0)\)

For \((n-1)a<y_0<na\), with \(n=1,\dots ,k\), and \(k a -y_0 \le t<(k+n-1) a-y_0\), with \(s=\lfloor (t+y_0)/a \rfloor +1\), recalling Eq. (33) we have

(i)

if \(1\le h\le k-n+s-1\),

$$\begin{aligned}{} {} {\mathcal P}_2(h,t,n,a,y_0)&=\frac{(-1)^{-n\mathop{+}s\mathop{-}1}}{k^{-2n\mathop{+}2 s\mathop{-}1}}\,\frac{a}{y_0}\, \left( 1-\frac{t}{ka}\right) ^{n\mathop{-}s\mathop{+}k} \left( \frac{t}{ka-t}\right) ^h \theta _h(n-s+k;k-2n+s) \\ & {} \quad \times \frac{\Gamma \left( 1-k-n+\frac{t+y_0}{a}\right) \Gamma \left( 1+k-n+\frac{y_0}{a}\right) }{\Gamma \left( -k-s+1+\frac{t+y_0}{a}\right) \Gamma \left( -s+1+k+\frac{y_0}{a}\right) } +\frac{(-1)^{-n\mathop{+}s\mathop{-}1}}{k^{-2n\mathop{+}2 s\mathop{-}1}}\left( 1-\frac{t}{ka}\right) ^{n\mathop{-}s\mathop{+}k} \\{} & \quad \times \sum _{m\mathop{=}\max \, \{h\mathop{-}k\mathop{+}n\mathop{-}s\mathop{+}1,1\}}^h\tilde{\delta }_m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m} \theta _{h-m}(n-s+k;-1-2n+2 s), \end{aligned}$$

(ii)

if \(h\ge k-n+s\)

$$\begin{aligned}{} {\mathcal P}_2(h,t,n,a,y_0)&=\frac{(-1)^{2k\mathop{-}n\mathop{-}s\mathop{+}1}}{k^{-2n\mathop{+}2 s\mathop{-}1}}\left( 1-\frac{t}{ka}\right) ^{n\mathop{-}s\mathop{+}k} \\ & \quad \times \!\!\! \sum _{m\mathop{=}\max \, \{h\mathop{-}k\mathop{+}n\mathop{-}s\mathop{+}1,1\}}^h\tilde{\delta }_m(t) \left( \frac{t}{ka-t}\right) ^{h\mathop{-}m} \theta _{h-m}(n-s+k; \, -1-2n+2 s), \end{aligned}$$

where we set

with

and where \(\beta _m^{q} (k;w_1;w_2)\) is defined in Eq. (27).

Proof

The proof proceeds similarly as for \({\mathcal P}_1(h,t,n,a,y_0)\) and so is omitted.

A.3 Expression of \({\mathcal P}_3(h,t,n,a,y_0)\)

Let us assume \((n-1)a<y_0<na\) (with \(n=1,\dots ,k\)) and \(t\ge (k+n-1) a -y_0\), and set \(s=\lfloor (t+y_0)/a \rfloor +1\). Recalling Eqs. (27) and (34), we have

(i)

if \(1\le h\le s-1-k-n\)

$${\mathcal P}_3(h,t,n,a,y_0)=0,$$

(ii)

if \(s-k-n\le h\le s-1+k-n\)

$$\begin{aligned}{} {\mathcal P}_3(h,t,n,a,y_0)&=(-1)^{h\mathop{-}s\mathop{+}1\mathop{+}n\mathop{+}k} \left( \frac{1}{k}\right) ^{2k\mathop{-}1} \left( {\begin{array}{c}2k-1\\ h-s+k+n\end{array}}\right) \left( \frac{t+y_0}{a}-s+1\right) _k \\{} & \quad \times \sum _{r\mathop{=}0}^{n\mathop{-}1}\frac{(n-1)!}{(n-1-r)!} \left( n-\frac{y_0}{a}\right) _{k\mathop{-}r\mathop{-}1} \\& \quad + \left( \frac{1}{k}\right) ^{2k\mathop{-}2} \sum _{m\mathop{=}\max \{0,h\mathop{-}s\mathop{+}1\mathop{+}n\mathop{-}k\}}^{h\mathop{-}s\mathop{+}k\mathop{+}n}\left( {\begin{array}{c}2k-1\\ h-s+k+n-m\end{array}}\right) (-1)^{h\mathop{-}s\mathop{-}m\mathop{+}k\mathop{+}n} \delta ^{*}_m(t), \end{aligned}$$

(iii)

if \(h\ge s+k-n\)

$$\begin{aligned}{} & {} {\mathcal P}_3(h,t,n,a,y_0)=\left( \frac{1}{k}\right) ^{2k\mathop{-}2} \sum_{m\mathop{=}\max \{0,h-s\mathop{+}1\mathop{+}n\mathop{-}k\}}^{h\mathop{-}s\mathop{+}k\mathop{+}n}\left( {\begin{array}{c}2k-1\\ h-s+k+n-m\end{array}}\right) (-1)^{h\mathop{-}s\mathop{-}m\mathop{+}k\mathop{+}n} \delta ^{*}_m(t), \end{aligned}$$

where

with \(\beta _m^j (k; \, w_1; \, w_2)\) and \(\eta _m^i\left( l_1; \, l_2; \, w_1; \, w_2\right)\) defined in Eqs. (27) and (34), respectively.

The proof proceeds similarly as for \({\mathcal P}_1(h,t,n,a,y_0)\) and so is omitted.
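Appendices A.1 to A.3 split the conditional probabilities according to the position of \(y_0\) and the length t. The hypothetical helper below only encodes that case analysis. It returns which of \({\mathcal P}_1\), \({\mathcal P}_2\), \({\mathcal P}_3\) applies, together with the indices n and \(s=\lfloor (t+y_0)/a\rfloor +1\), and it does not evaluate the probabilities themselves. Values of \(y_0\) that are exact multiples of a are rejected, since the appendix assumes strict inequalities.

```python
import math


def conditional_regime(t: float, y0: float, a: float, k: int):
    """Classify (t, y0) into the regimes of Appendix A (hypothetical helper).

    Returns (label, n, s): n is the index with (n - 1) a < y0 < n a,
    s = floor((t + y0) / a) + 1, and label is "P1", "P2" or "P3".
    """
    if k < 1 or a <= 0 or t < 0 or not (0 < y0 < k * a):
        raise ValueError("need k >= 1, a > 0, t >= 0 and 0 < y0 < k a")
    ratio = y0 / a
    if ratio == math.floor(ratio):
        raise ValueError("y0 must not be a multiple of a")
    n = math.floor(ratio) + 1
    s = math.floor((t + y0) / a) + 1
    if t < k * a - y0:
        label = "P1"                      # A.1: 0 <= t < k a - y0
    elif t < (k + n - 1) * a - y0:
        label = "P2"                      # A.2: empty range when n = 1
    else:
        label = "P3"                      # A.3: t >= (k + n - 1) a - y0
    return label, n, s


print(conditional_regime(t=1.0, y0=1.5, a=1.0, k=2))
```

For n = 1 the range of \({\mathcal P}_2\) is empty, since then \(ka-y_0=(k+n-1)a-y_0\). In the \({\mathcal P}_3\) regime, case (i) of A.3 shows that the probability vanishes for \(h\le s-1-k-n\).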

B. Conditional Probabilities of N(t)

In this appendix we provide the explicit expressions of the conditional probabilities of N(t) in the case \(k=2\).

B.1 Expression of \({\mathcal N}_1(h,t,a,y_0)\)

Let us assume \(k=2\), \(a>0\) and \(y_0\in (0,a)\). For \(h\ge 2\) we have

B.2 Expression of \({\mathcal N}_2(h,t,a,y_0)\)

Let us assume \(k=2\), \(a>0\) and \(y_0\in (a,2 a)\). For \(h\ge 2\) we have

C. Upper Bound for the Probability of Empty Queue

In this appendix we give the explicit expression of the upper bound \(\phi _2(k,a,\mu )\) for the probability of an empty queue, defined in Eq. (24). To this end, we need the following preliminary result.

Lemma C.1

Recalling Eq. (7), we have

where

is the confluent hypergeometric function.

Proof

The proof follows from Theorem 2.1 noting that

and recalling the definition of the Pochhammer symbol, the second relationship of Section I.4.6 of Prudnikov et al. (1986) and the expansion 24.1.3 of Abramowitz and Stegun (1992).
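The proof of Lemma C.1 combines the Pochhammer symbol with Stirling numbers of the first kind. We assume the signed convention of Abramowitz and Stegun, in which \(x(x-1)\cdots (x-n+1)=\sum _{m=0}^{n}{\mathcal S}(n,m)\,x^m\). Under this convention, the rising factorial \((x)_n=x(x+1)\cdots (x+n-1)\) satisfies \((x)_n=\sum _{m=0}^{n}(-1)^{n-m}{\mathcal S}(n,m)\,x^m\). The sketch computes a row of these numbers by the recurrence \({\mathcal S}(j+1,r)={\mathcal S}(j,r-1)-j\,{\mathcal S}(j,r)\) and checks the expansion. The function names are ours.

```python
from fractions import Fraction


def stirling1_row(n: int) -> list:
    """Signed Stirling numbers of the first kind [S(n, 0), ..., S(n, n)]."""
    row = [1]  # S(0, 0) = 1
    for j in range(n):
        # S(j + 1, r) = S(j, r - 1) - j * S(j, r)
        row = [(row[r - 1] if r >= 1 else 0) - j * (row[r] if r <= j else 0)
               for r in range(j + 2)]
    return row


def pochhammer(x, n: int):
    """Rising factorial (x)_n = x (x + 1) ... (x + n - 1), with (x)_0 = 1."""
    result = 1
    for i in range(n):
        result *= x + i
    return result


def pochhammer_via_stirling(x, n: int):
    """(x)_n = sum_m (-1)**(n - m) * S(n, m) * x**m (signed convention assumed)."""
    row = stirling1_row(n)
    return sum((-1) ** (n - m) * row[m] * x**m for m in range(n + 1))


print(stirling1_row(4))  # [0, -6, 11, -6, 1]
```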

The expression of \(\phi _2(k,a,\mu )\) is provided in the following proposition.

Proposition C.1

For all \(k\in \mathbb {N}\), \(a,\mu >0\) we have

where \(\gamma (s,\cdot )\) denotes the lower incomplete gamma function, \({\mathcal S}(n,k)\) are the Stirling numbers of the first kind, and \({}_{1}F_{1}\) is defined in (35). Moreover, we have set

and

where \(\Gamma (s,\cdot )\) is the upper incomplete gamma function. Furthermore, the following limits hold

Proof

The proof follows from Lemma C.1, by taking into account the expansion 24.1.3 of Abramowitz and Stegun (1992) and recalling that \(Y_0\sim U(0,k a)\).
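Proposition C.1 involves \(\gamma (s,\cdot )\), \(\Gamma (s,\cdot )\) and \({}_1F_1\). For numerical work with moderate arguments, a minimal sketch can use Kummer's series \({}_1F_1(\alpha ;\beta ;z)=\sum _{j\ge 0}\frac{(\alpha )_j}{(\beta )_j}\frac{z^j}{j!}\). It can also use the standard identity \(\gamma (s,x)=s^{-1}x^s e^{-x}\,{}_1F_1(1;1+s;x)\), whose series has positive terms for \(x>0\) and hence no cancellation. The upper function then follows from \(\Gamma (s,x)=\Gamma (s)-\gamma (s,x)\). This subtraction loses relative accuracy when \(\Gamma (s,x)\) is small, i.e. for large x. The function names are ours. In production code one would rather call a library routine, such as those in `scipy.special`.

```python
import math


def hyp1f1(alpha: float, beta: float, z: float,
           rtol: float = 1e-16, max_terms: int = 10_000) -> float:
    """Kummer's function 1F1(alpha; beta; z) by direct power series.

    Assumes beta is not a non-positive integer; intended for moderate |z|.
    """
    term, total = 1.0, 1.0
    for j in range(max_terms):
        term *= (alpha + j) / (beta + j) * z / (j + 1)
        total += term
        if abs(term) <= rtol * abs(total):
            break
    return total


def lower_gamma(s: float, x: float) -> float:
    """gamma(s, x) = x**s * exp(-x) / s * 1F1(1; 1 + s; x), for s > 0, x >= 0."""
    return x**s * math.exp(-x) / s * hyp1f1(1.0, 1.0 + s, x)


def upper_gamma(s: float, x: float) -> float:
    """Gamma(s, x) = Gamma(s) - gamma(s, x); inaccurate when the result is tiny."""
    return math.gamma(s) - lower_gamma(s, x)
```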

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Di Crescenzo, A., Martinucci, B. & Paraggio, P. Vessels Arrival Process and its Application to the SHIP/M/\(\infty\) Queue. Methodol Comput Appl Probab 25, 38 (2023). https://doi.org/10.1007/s11009-023-10003-8

DOI: https://doi.org/10.1007/s11009-023-10003-8