Abstract

Recent advances in satellite technologies, statistical and mathematical models, and computational resources have paved the way for the operational use of satellite data in monitoring and forecasting natural hazards. We present a review of the use of satellite data for Earth observation in the context of preventive geohazard monitoring and disaster evaluation and assessment. We describe the techniques exploited to extract ground displacement information from satellite radar images and the applicability of such data to the study of natural hazards such as landslides, earthquakes, volcanic activity, and ground subsidence. In this context, statistical techniques, ranging from time series analysis to spatial statistics, as well as continuum or discrete physics-based models adopting deterministic or stochastic approaches, are irreplaceable tools for modeling and simulating natural hazard scenarios from a mathematical perspective. In addition, the huge amount of data collected nowadays and the complexity of the models and methods needed for an effective analysis pose new computational challenges. The synergy among statistical methods, mathematical models, and optimized software, enriched with the assimilation of satellite data, is essential for building predictive and timely monitoring models for risk analysis.

1 Motivation

Satellite Earth observation is increasingly used by the research community, civil protection authorities, international organizations, and industry to monitor and forecast natural hazards and to develop disaster risk management strategies; see, for example, Van Westen (2000), Joyce et al. (2009), Tomás and Li (2017), and references therein. In recent decades, such applications have benefited from advances in remote sensing technologies and data processing algorithms, which have opened the way to a wider variety of fields exploiting these methodologies. Collaboration among international authorities and institutes has led to initiatives aimed at applying satellite Earth observation to geohazard monitoring. Among them, we mention the International Charter Space and Major Disasters, the Committee on Earth Observation Satellites (CEOS), and conferences such as the International Forum on Satellite Earth Observations for Geohazards (Bally 2014), held in 2012 and organized by the European Space Agency (ESA) and the Group on Earth Observations (GEO).

The present paper provides an updated overview of the literature on the use of satellite data for natural hazard monitoring and forecasting. The review is ambitiously aimed both at mathematicians and statisticians interested in exploring this research field and at geoscientists seeking an informed account of the state-of-the-art mathematical and statistical techniques used therein. In particular, we focus on ground displacement measurements obtained through interferometric processing of synthetic aperture radar (SAR) data, analyze the natural hazards that can be studied by means of this type of data, and review the statistical techniques, mathematical models, and computational methods used to address such problems.

The results of the literature search highlight the importance of Earth observation satellite data in monitoring and forecasting natural hazards, the central role of ground displacement measurements derived from SAR data in the study of many geohazards, and recent advances in numerical and computational models and methods in this field. The growth of methodological and technical aspects in this area of research creates the need for automated and fast processing methodologies. Indeed, the societal impact of these analyses is limited by the capability of delivering timely results that can support hazard mitigation without relying solely on visual inspection of the data by experts.

Often, experimental data analyses need to account for the underlying physical principles, whereas physics-based numerical models require calibrating their parameters on observed data to be accurate and predictive. In this regard, the scientific literature on Earth observation satellite data for monitoring and forecasting natural hazards is still lacking. Indeed, advanced statistical methodologies, physics-based models, and numerical and computational techniques have seldom been combined to overcome the issues arising from the complexity and high dimensionality of Earth observation data and from the need for fast and accurate responses. This gap opens new research directions and challenges that the scientific community should tackle soon: in monitoring and forecasting natural hazards, it is of utmost importance to support civil protection authorities in their decision-making processes by means of reliable, physically sound, and prompt responses stemming from mathematical analyses integrated with evidence from Earth observation satellite data.

The paper is organized as follows: Sect. 2 provides an overview of Earth observation satellite data, with a focus on interferometric processing of SAR data; Sect. 3 analyzes the application of these data to natural hazard monitoring and forecasting, with a focus on landslides, earthquakes, volcanic activity, and ground subsidence; Sect. 4 focuses on the statistical models and methods currently used in this field of research; Sect. 5 describes physics-based approaches used to model natural hazards, with special attention to the integration of satellite data into such models; Sect. 6 draws the conclusions of the paper.

2 Earth Observation Satellite Data

Satellites are equipped with systems that can collect different types of “images” through either passive sensors, which detect naturally available radiation such as sunlight reflected by the Earth's surface (e.g., visible, infrared, multispectral, and hyperspectral sensors), or active sensors, which exploit radiation emitted by the sensor itself (e.g., radar and LiDAR). Different types of satellite data provide different information about the soil, vegetation, infrastructure, atmosphere, and sea. In particular, SAR images can be exploited to assess ground motion, thus measuring the effect of geological processes on the ground surface.

The observation of deformations and motions of ground surface masses is of paramount importance for monitoring and forecasting many geohazards, and the aim of the present paper is to provide a focused review of the use of ground deformation measurements derived from Earth observation satellite SAR data for monitoring and forecasting natural hazards such as landslides, earthquakes, volcanic activity, and ground subsidence.

This review does not cover atmospheric and climate analyses, which investigate the composition of the atmosphere using data from remote sensing instruments. Examples include the study of the distribution of aerosols in the atmosphere using data collected by the Multiangle Imaging Spectroradiometer (MISR) and the Moderate Resolution Imaging Spectroradiometer (MODIS) (Nguyen et al. 2012), the estimation of the CO\(_2\) mole fraction in the atmosphere using data collected by the Greenhouse Gases Observing Satellite (GOSAT) and the Atmospheric Infrared Sounder (AIRS) (Nguyen et al. 2014), the prediction of total column ozone using data collected by the Total Ozone Mapping Spectrometer (TOMS) (Huang et al. 2002), the estimation of terrestrial latent heat flux using data collected by MODIS and Landsat (Xu et al. 2018), and the analysis of sea surface temperature from MODIS and the Advanced Microwave Scanning Radiometer-Earth Observing System (AMSR-E) (Ma and Kang 2018).

2.1 SAR Data

SAR is a remote sensing system mounted on Earth observation satellites that collects images of the ground by measuring the echo of a microwave pulse sent to the target by the SAR system itself. SAR technology enables the collection of images regardless of daylight or weather conditions, since microwaves can penetrate clouds. The temporal frequency of acquisition of SAR images depends on the repeat cycle of the satellite (i.e., the time between two passes of the satellite over the same geographical location), which ranges from a few days to a few weeks, and on sensor characteristics, such as the tilt angle and swath width, that affect the revisit time (the time between two observations of the same point on the Earth). The revisit frequency also depends on latitude: since the ground tracks of near-polar orbits converge toward the poles, the revisit frequency is higher at high latitudes and lower near the equator. The spatial resolution of SAR images depends on the technical characteristics of the SAR system used. For example, the ERS mission, the first ESA program in Earth observation for environmental monitoring, comprised two satellites (ERS-1 and ERS-2) operating in a 35-day repeat cycle and carrying a SAR operating in the C-band (frequency of 5.3 GHz, wavelength of 5.6 cm); its spatial resolution is 26 m in range (across track) and between 6 and 30 m in azimuth (along track). Figure 1 shows the geometric configuration of the SAR sensor of the ERS satellite.
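As a quick consistency check of the ERS figures, the wavelength follows from the carrier frequency through \(\lambda = c/f\). Moreover, since the radar signal travels the sensor-to-target path twice, one full interferometric phase cycle corresponds to half a wavelength of motion along the line of sight:

\[
\lambda = \frac{c}{f} \approx \frac{3.0 \times 10^{8}\ \mathrm{m\,s^{-1}}}{5.3 \times 10^{9}\ \mathrm{Hz}} \approx 5.66\ \mathrm{cm}, \qquad \Delta d_{2\pi} = \frac{\lambda}{2} \approx 2.8\ \mathrm{cm}.
\]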

Geometric configuration of the SAR sensor of the ERS satellite. Figure adapted from the ESA Earth online website (https://earth.esa.int)

SAR images are collected by many Earth observation programs, whether currently active, already concluded, or planned for future launch. Some examples are ERS (ESA), Envisat (ESA), TerraSAR-X (DLR), TanDEM-X (DLR), Radarsat (CSA), JERS (JAXA), ALOS (JAXA), COSMO-SkyMed (ASI), and Sentinel (ESA). Many of these programs make the collected data freely available; see, for example, the Copernicus Programme (https://www.copernicus.eu), the European system for monitoring the Earth. This program aims at providing policymakers, researchers, and commercial and private users with environmental data collected in near real time on a global scale from Earth observation satellites (the Sentinel families) and in situ sensors. In particular, the Sentinel-1 mission comprises a constellation of two polar-orbiting satellites operating day and night and performing C-band SAR imaging. One of the advantages of the Sentinel-1 mission is the Terrain Observation with Progressive Scans (TOPS) mode, the acquisition scheme of the Sentinel-1 wide swath and extra wide swath modes. The TOPS mode steers the antenna beam in the along-track direction, which provides large-area mapping and is designed to enhance imaging performance in terms of signal-to-noise ratio and azimuth ambiguity (Prats-Iraola et al. 2012; Scheiber et al. 2014; Yagüe-Martínez et al. 2016).

Moreover, NHAZCA S.r.l. and Geocento Ltd developed a tool (https://www.sarinterferometry.com/insar-feasibility-tool) for searching, among the SAR data made available by various satellite Earth observation programs, for data sets suitable for interferometric analysis.

2.2 Interferometric Processing of SAR Data

The raw data collected by SAR sensors (the amplitude, phase, and polarization of the backscattered signal) can be processed by means of various image processing techniques to retrieve information about the scanned region. Interferometry is a technique that obtains topographic and kinematic information about the ground surface from the phase difference between SAR images. Indeed, the phase of the signal depends on the distance between the sensor and the target, thus providing information on the relative position of the target with respect to the satellite. The interferometric analysis of SAR images of the same region (InSAR) was first developed to measure topography, thus producing digital elevation models of the scanned area. As a further development, the technique has been extended to the estimation of surface displacement. This extension is called differential InSAR (DInSAR), since it uses differential interferograms, in which the topographic contribution (known from a digital elevation model or estimated) is compensated to recover only the component due to deformation. Originally, DInSAR was used to investigate single ground displacement events by analyzing two SAR images acquired at different passes of the satellite and producing maps of the surface deformation that occurred between the two acquisitions (repeat-pass interferometry). More advanced multi-temporal or multi-interferogram InSAR techniques estimate time series of surface deformation maps by analyzing a stack of SAR images of the same region collected over a period of time.
The deformation measurements are obtained through a phase-unwrapping procedure that reconstructs the displacements from the phase differences (measured in radians and known only modulo \(2\pi\)); the resulting values are relative, i.e., differences with respect to a suitably selected master image and reference point.
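To make the unwrapping and referencing steps concrete, the following Python sketch handles a single pixel's phase history in one dimension. It assumes (hypothetically) that the wrapped phases are in radians, that after removing the reference point the phase change between consecutive epochs stays below \(\pi\), and that a positive phase change means motion away from the sensor; sign conventions differ between processors. Operational chains unwrap in two dimensions, which is considerably harder than the `numpy.unwrap` call used here, and the function name `relative_los_displacement` is illustrative.

```python
import numpy as np


def relative_los_displacement(pixel_phase, reference_phase, wavelength_m):
    """Relative LOS displacement (metres) of a pixel from wrapped phase histories.

    pixel_phase, reference_phase: wrapped phases (radians) of the target pixel and
    of a stable reference point, one value per acquisition, first = master epoch.
    Assumed convention (illustrative): positive values = motion away from sensor.
    """
    pixel_phase = np.asarray(pixel_phase, dtype=float)
    reference_phase = np.asarray(reference_phase, dtype=float)
    # Differencing with the reference point removes contributions common to both
    # (e.g. a spatially smooth atmospheric delay); re-wrap into (-pi, pi].
    diff = np.angle(np.exp(1j * (pixel_phase - reference_phase)))
    # 1D (Itoh) unwrapping: add multiples of 2*pi wherever consecutive samples
    # jump by more than pi.
    unwrapped = np.unwrap(diff)
    # Express the series relative to the master (first) acquisition.
    unwrapped -= unwrapped[0]
    # Two-way travel path: a full 2*pi cycle corresponds to lambda / 2 of LOS motion.
    return unwrapped * wavelength_m / (4.0 * np.pi)
```

The sketch only works when motion between acquisitions is slow relative to the wavelength; fast deformation violates the \(\pi\) condition and produces unwrapping errors.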

One of the advantages of InSAR is that it provides very precise measurements of surface deformation: the measured ground displacement can reach centimetric or millimetric precision, depending on the wavelength of the electromagnetic signal used. It should be noted that InSAR measures the projection of the actual ground motion onto the line of sight (LOS) of the sensor. Indeed, the phase difference between two SAR images of the same area collected at different times represents the change in the distance between the target and the satellite, so only the component of the ground motion along the direction of the microwave pulse emitted by the SAR system can be detected. In other words, InSAR measures how much the ground moves toward or away from the sensor, which is not necessarily located at the local zenith.
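The projection onto the line of sight can be written as a dot product with a unit vector. The minimal Python sketch below assumes a right-looking sensor, an east-north-up frame, a heading angle measured clockwise from north along the flight direction, an incidence angle measured from the local vertical, and positive LOS values for motion toward the sensor; these conventions are assumptions of the sketch and vary between data providers.

```python
import numpy as np


def los_unit_vector(incidence_deg, heading_deg):
    """Unit vector from ground target to sensor in east-north-up coordinates.

    Assumes a right-looking SAR; heading is the flight direction measured
    clockwise from north, incidence is measured from the local vertical.
    """
    theta = np.radians(incidence_deg)
    alpha = np.radians(heading_deg)
    return np.array([-np.sin(theta) * np.cos(alpha),
                     np.sin(theta) * np.sin(alpha),
                     np.cos(theta)])


def project_to_los(displacement_enu, incidence_deg, heading_deg):
    """LOS displacement (same units as input); positive = toward the sensor."""
    return float(np.dot(displacement_enu, los_unit_vector(incidence_deg, heading_deg)))
```

For an illustrative ascending geometry with 30° incidence and a heading of -12°, the unit vector is roughly (-0.49, -0.10, 0.87): a vertical uplift of 1 cm appears as about 0.87 cm in the LOS, while north-south motion is barely visible.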

Because of temporal decorrelation of the signal due to changes in the dielectric properties of the reflecting surface over time, thermal noise, the atmospheric phase screen, or other disturbances, time series of surface deformation cannot be retrieved for all the pixels of the SAR images. Indeed, time series of surface deformation can be computed only for targets that remain coherent along the stack of SAR images; such targets correspond, for example, to rocks (natural reflectors) or buildings (artificial reflectors), which act as permanent reflectors by maintaining constant dielectric properties over the monitoring period. For portions of the ground covered by forests or agricultural fields, coherence along the stack is not preserved because the radar backscatter contains a contribution from the vegetation layer. Another source of coherence loss between SAR images is the baseline, i.e., the separation between the orbital positions of different acquisitions. Indeed, the correlation between the images is affected by the component of the baseline perpendicular to the look direction (effective baseline), which introduces spatial decorrelation that corrupts the interferograms.
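Coherence is commonly estimated as the magnitude of the normalized complex cross-correlation of two co-registered single-look complex (SLC) images over a small window. The Python sketch below uses a square boxcar window, a simplifying assumption, and returns values only where the window fits entirely inside the image; the function name is illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def coherence_map(slc1, slc2, window=5):
    """Coherence magnitude of two co-registered complex SAR images.

    Uses a window x window boxcar estimator:
        |sum(s1 * conj(s2))| / sqrt(sum(|s1|^2) * sum(|s2|^2)),
    evaluated only where the window lies fully inside the image.
    """
    s1 = np.asarray(slc1, dtype=complex)
    s2 = np.asarray(slc2, dtype=complex)

    def box_sum(a):
        # Sum over every window x window patch (valid region only).
        return sliding_window_view(a, (window, window)).sum(axis=(-2, -1))

    cross = box_sum(s1 * np.conj(s2))
    power1 = box_sum(np.abs(s1) ** 2)
    power2 = box_sum(np.abs(s2) ** 2)
    return np.abs(cross) / np.sqrt(power1 * power2)
```

Values near 1 indicate a stable scatterer, whereas values near 0, typical of vegetated or changing surfaces, indicate decorrelated phase. Small windows bias the estimate upward when the true coherence is low.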

Many techniques have been developed for computing time series of surface deformation for stable targets; the reader may refer to Crosetto et al. (2016) for a review. Some examples are persistent scatterer interferometry (PSI) algorithms (Ferretti et al. 2001; Hooper et al. 2004; Crosetto et al. 2010) and small baseline subset (SBAS) algorithms (Berardino et al. 2002; Casu et al. 2006). PSI considers a single reference acquisition and a stack of SAR images spanning a long time interval and baselines up to the decorrelation baseline (the orbital separation at which the interferometric phase is pure noise), and identifies stable scatterers by analyzing the time series of amplitude values under a deformation model that is linear in time. SBAS, instead, combines differential interferograms produced by pairs of SAR images separated by small baselines and identifies as stable scatterers the pixels exhibiting sufficiently high phase coherence, under a deformation model that assumes spatial and temporal smoothness.
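The two ideas can be illustrated with a short Python sketch. The first function computes the amplitude dispersion index \(D_A = \sigma_A/\mu_A\), a standard proxy for phase stability used to pre-select persistent scatterer candidates (the 0.25 threshold is illustrative). The second inverts unwrapped small-baseline interferograms for a phase time series by least squares, solving for mean phase velocities between consecutive acquisitions and taking the minimum-norm solution, in the spirit of the SVD-based inversion of Berardino et al. (2002). Function names are hypothetical, and the interferograms are assumed to be already unwrapped and referenced to the same point.

```python
import numpy as np


def amplitude_dispersion(amplitude_stack):
    """Amplitude dispersion index D_A = sigma_A / mu_A along time (axis 0)."""
    amps = np.asarray(amplitude_stack, dtype=float)
    return amps.std(axis=0) / amps.mean(axis=0)


def ps_candidates(amplitude_stack, threshold=0.25):
    """Boolean mask of persistent scatterer candidates (low amplitude dispersion)."""
    return amplitude_dispersion(amplitude_stack) < threshold


def sbas_invert(times, pairs, ifg_phase):
    """Least-squares phase time series from small-baseline interferograms.

    times: sorted acquisition times; pairs: (i, j) index pairs with i < j;
    ifg_phase: unwrapped phase of each pair, i.e. phi(t_j) - phi(t_i).
    Returns phi(t_k) for every acquisition, with phi(t_0) = 0.
    """
    times = np.asarray(times, dtype=float)
    dt = np.diff(times)
    # Unknowns: mean phase velocity in each interval [t_k, t_{k+1}].
    design = np.zeros((len(pairs), dt.size))
    for row, (i, j) in enumerate(pairs):
        design[row, i:j] = dt[i:j]
    # lstsq uses an SVD and returns the minimum-norm solution if the
    # interferogram network splits into disconnected subsets.
    velocity, *_ = np.linalg.lstsq(design, np.asarray(ifg_phase, dtype=float), rcond=None)
    return np.concatenate(([0.0], np.cumsum(velocity * dt)))
```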

The number of identified stable targets depends on the technical properties of the sensor, such as the wavelength of the radar signal, and on the processing technique used. For instance, longer wavelengths are less sensitive to the vegetation layer because of their greater canopy penetration, thus preserving coherence over longer time spans. In this respect, Hanssen (2005) discusses the feasibility of InSAR analysis and the factors affecting its precision and reliability. Moreover, recently developed techniques aim at extending the spatial coverage of the motion results: examples are Van Leijen and Hanssen (2007), who propose two methods with adaptive deformation models for the selection of persistent scatterers, and Sowter et al. (2013), who propose the intermittent SBAS technique, which relaxes the pixel selection criterion to retrieve motion time series for a wider range of reflectors (see Cigna and Sowter 2017 for a comparison between the performance of SBAS and intermittent SBAS). Concerning the extension in coverage due to improvements in sensor technology, the reader may refer, for example, to Bovenga et al. (2012) for a comparison between InSAR results obtained from X-band and C-band satellite radar sensors in the context of landslide hazard assessment.

Recent advancements in PSI and SBAS algorithms have been introduced to enhance the coverage of InSAR measurements. In this regard, Ferretti et al. (2011) propose the SqueeSAR algorithm, which jointly processes point scatterers and distributed scatterers, thus improving the density and quality of measurement points over non-urban areas, and Fornaro et al. (2014) extend SqueeSAR, enabling the identification of multiple scattering mechanisms from the analysis of the covariance matrix. Extensions of the SBAS algorithm have been proposed by Lauknes et al. (2010), who achieve better robustness using an \(L_1\)-norm cost function; by Shirzaei (2012), who enhances accuracy through new methods for identifying stable pixels and wavelet-based filters for reducing artifacts; and by Falabella et al. (2020), who extend its usability to low-coherence regions using a weighted least-squares approach. Moreover, Hetland et al. (2012) propose an approach, called multiscale InSAR time series, that extracts spatially and temporally continuous ground deformation fields using a wavelet decomposition in space and a general parametrization in time. Other extensions address the large volume of data produced by wide-swath satellite missions with short revisit times, such as Sentinel-1, which poses computational challenges for systematic near-real-time monitoring of the Earth's surface and calls for efficient processing techniques, such as those proposed by Ansari et al. (2018) and references therein.
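To illustrate why an \(L_1\)-norm cost adds robustness, the Python sketch below approximates an \(L_1\) solution with iteratively reweighted least squares (IRLS). This is a generic, swapped-in solver chosen for brevity, not necessarily the optimization scheme used by Lauknes et al. (2010); the `eps` floor on residuals is an assumption that keeps the weights finite, and the function name is hypothetical.

```python
import numpy as np


def l1_solve_irls(A, b, n_iter=50, eps=1e-8):
    """Approximate argmin_x ||A x - b||_1 by iteratively reweighted least squares."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)  # ordinary L2 starting point
    for _ in range(n_iter):
        residual = np.abs(A @ x - b)
        # Weighting each squared residual by 1/|r| turns sum(w r^2) into sum(|r|).
        sqrt_w = 1.0 / np.sqrt(np.maximum(residual, eps))
        x, *_ = np.linalg.lstsq(A * sqrt_w[:, None], b * sqrt_w, rcond=None)
    return x
```

For instance, estimating a single constant from the values 1, 1, 1, 1, and 100 gives 20.8 with ordinary least squares but converges to the median value 1 with this sketch. This illustrates how a gross error, such as an unwrapping error in one interferogram of a redundant network, is down-weighted rather than spread over the whole solution.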

Recent developments in InSAR techniques allow for the estimation of the three-dimensional deformation of the surface. In this framework, the first proposals combine SAR images acquired at different incidence angles in both ascending and descending orbits (Wright et al. 2004; Gray 2011). These approaches have low sensitivity to the north–south component of the ground deformation because, with near-polar orbits, the line of sight is nearly perpendicular to the north–south direction. Approaches proposed to overcome this limitation are pixel-offset tracking techniques (Fialko et al. 2001, 2005; Strozzi et al. 2002; Hu et al. 2010), which exploit correlations in the amplitude of SAR images, and multi-aperture InSAR (Bechor and Zebker 2006; Hu et al. 2012; Jung et al. 2014; Mastro et al. 2020), which splits the SAR signal spectrum into separate sub-bands to create forward- and backward-looking interferograms. Other approaches infer the three-dimensional surface displacement field by integrating InSAR data with other sources of information, such as GPS data (Gudmundsson et al. 2002; Spata et al. 2009) or optical images (Grandin et al. 2009). These techniques, developed to study single deformation episodes, have been extended to the estimation of the temporal evolution of the three-dimensional surface displacement; some examples are the pixel-offset SBAS technique (Casu et al. 2011; Casu and Manconi 2016), the multidimensional SBAS methodology (Samsonov and d’Oreye 2012), approaches based on the Kalman filter (Hu et al. 2013), the minimum-acceleration approach (Pepe et al. 2016), and the combination of interferograms from multiple orbit tracks with different incidence angles (Ozawa and Ueda 2011).
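The limited north–south sensitivity can be seen in a minimal Python sketch that combines one ascending and one descending LOS measurement. Two equations cannot resolve three components, so the sketch assumes, as a simplification, that the north component is negligible and solves for east and up only. The geometry conventions (right-looking sensor, east-north-up frame, heading clockwise from north, LOS positive toward the sensor) and the angle values mentioned below are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np


def los_unit_vector(incidence_deg, heading_deg):
    """Ground-to-sensor unit vector (east, north, up) for a right-looking SAR."""
    theta = np.radians(incidence_deg)
    alpha = np.radians(heading_deg)  # flight direction, clockwise from north
    return np.array([-np.sin(theta) * np.cos(alpha),
                     np.sin(theta) * np.sin(alpha),
                     np.cos(theta)])


def decompose_east_up(los_asc, los_desc, geom_asc, geom_desc):
    """East and up displacement from ascending/descending LOS values.

    geom_* = (incidence_deg, heading_deg). The north component is assumed to be
    negligible and is dropped, leaving a 2 x 2 linear system.
    """
    u_asc = los_unit_vector(*geom_asc)
    u_desc = los_unit_vector(*geom_desc)
    system = np.array([[u_asc[0], u_asc[2]],
                       [u_desc[0], u_desc[2]]])
    east, up = np.linalg.solve(system, np.array([los_asc, los_desc], dtype=float))
    return float(east), float(up)
```

With 35° incidence and headings of -12° (ascending) and 192° (descending), both unit vectors have a north component of only about -0.12. Any real north motion therefore leaks into the east and up estimates instead of being resolved.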

InSAR processing is usually performed with commercial software implementing state-of-the-art interferometric techniques. Examples include the software provided by TRE ALTAMIRA (https://site.tre-altamira.com), GAMMA (https://www.gamma-rs.ch), and Aresys (http://www.aresys.it/).

3 Natural Hazards Monitoring and Forecasting Through InSAR Data

InSAR measurements of surface deformation can be exploited to monitor and forecast natural and man-made hazards. Indeed, they are extensively used to study landslides, earthquakes, volcanic activity, and ground subsidence or heave (see, for example, Chen et al. 2000; Lu et al. 2010). InSAR data enable the observation and precise quantification of the ground deformations produced by such events and provide useful information both for preventive natural hazard monitoring and for disaster evaluation and assessment. In particular, the analysis of such data enables the identification of early warning signals or triggering factors, the observation of their behavior, and the a posteriori assessment of the extent of the consequences or of the effectiveness of mitigation measures.

The importance of InSAR data for hazard monitoring is shown by their use by local public authorities. For instance, in Italy (one of the European countries most affected by natural hazards), two examples are the Piedmont and Tuscany regional administrations. InSAR data are currently used for land monitoring by Arpa Piemonte, the regional agency for environmental protection (http://www.arpa.piemonte.it/approfondimenti/temi-ambientali/geologia-e-dissesto/telerilevamento). Moreover, under the agreement “Monitoring ground deformation in the Tuscany Region with satellite radar data,” the regional government of Tuscany requested, funded, and supported the development of a monitoring system able to perform continuous and systematic analysis of InSAR data obtained from Sentinel-1 acquisitions (Raspini et al. 2018; Bianchini et al. 2018). At the national level, the project “Progetto Piano Straordinario di Telerilevamento” of the Ministry of the Environment and for Protection of the Land and Sea (http://www.pcn.minambiente.it/mattm/progetto-piano-straordinario-di-telerilevamento/) used InSAR data to monitor areas with high landslide risk (Costantini et al. 2017).

For monitoring other natural hazards such as floods, avalanches, and wildfires, the analysis of ground displacements obtained from InSAR data is not as meaningful as in the aforementioned cases. In these cases, other types of data are preferred, such as those obtained from SAR images through processing techniques other than interferometry, or data collected by other types of sensors. For example, the amplitude component of SAR images provides information about the reflectivity properties (such as dielectric constant and roughness) of the target, enabling the identification of flooded regions (because of the different reflectivity properties of wet and dry soil) and of avalanches (because of the different reflectivity properties of compact snow and of the snow involved in the avalanche). Flood data are analyzed, for example, in Kussul et al. (2008), Pulvirenti et al. (2011), Stephens et al. (2012), and Vishnu et al. (2019), where the amplitude component of SAR images is segmented to assess flood extent. Examples concerning avalanche monitoring are Eckerstorfer and Malnes (2015) and Wang et al. (2018), where avalanches are detected using the amplitude component of SAR images. The reader may also refer to Eckerstorfer et al. (2016) for a review of remote sensing for avalanche detection using optical, LiDAR, and radar sensors. Other kinds of data used for the study of the aforementioned natural hazards are optical, thermal, and LiDAR images. As for wildfires, see, for example, Sifakis et al. (2011) for an analysis of data from the MSG-SEVIRI sensor (which collects data in 12 spectral channels) to monitor wildfires, and Schroeder et al. (2011) for the use of Landsat multispectral data in monitoring forest disturbance.
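The cited studies rely on their own segmentation schemes. As a generic, swapped-in illustration of amplitude-based flood mapping, the Python sketch below applies Otsu's histogram threshold to backscatter converted to decibels, exploiting the fact that calm open water reflects the radar pulse specularly away from the sensor and therefore appears dark. A single global threshold is a simplifying assumption, and the function names are hypothetical.

```python
import numpy as np


def otsu_threshold(values, n_bins=256):
    """Otsu's global threshold: maximise the between-class variance of a histogram."""
    hist, edges = np.histogram(np.asarray(values, dtype=float).ravel(), bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    n_low = np.cumsum(hist).astype(float)        # pixels at or below each bin
    n_high = n_low[-1] - n_low                   # pixels above each bin
    sum_low = np.cumsum(hist * centers)
    mean_low = np.divide(sum_low, n_low, out=np.zeros_like(sum_low), where=n_low > 0)
    mean_high = np.divide(sum_low[-1] - sum_low, n_high,
                          out=np.zeros_like(sum_low), where=n_high > 0)
    between = n_low * n_high * (mean_low - mean_high) ** 2
    k = int(np.argmax(between))
    return edges[k + 1]  # upper edge of the last "low" bin


def flood_mask(sigma0_linear):
    """Flag pixels darker than the Otsu threshold of backscatter in decibels."""
    sigma0_db = 10.0 * np.log10(np.asarray(sigma0_linear, dtype=float))
    return sigma0_db < otsu_threshold(sigma0_db)
```

A global threshold works when water and land form two distinct histogram modes; wind-roughened water or flooded vegetation breaks this assumption.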

3.1 Landslides

Ground displacement InSAR data are widely used to monitor slow-moving landslides. Indeed, InSAR ground deformation measurements have been analyzed for landslide risk assessment by monitoring mountain slopes, as in Colesanti et al. (2003), Rott and Nagler (2006), Hu et al. (2017), and Nobile et al. (2018), or by checking and updating landslide hazard and risk maps and inventory maps, as in Cascini et al. (2009) and Lu et al. (2014b).

The interest in the topic of landslide monitoring and forecast through the analysis of InSAR data is proven by projects such as the ESA CAT-1 project (ID: 9099) “Landslides forecasting analysis by displacement time series derived from satellite and terrestrial InSAR data” (Mazzanti et al. 2011) and the MUSCL project (Monitoring Urban Subsidence, Cavities and Landslides by remote sensing) within the Fifth Framework Programme for Research and Development of the European Commission (Rott et al. 2002).

Tofani et al. (2013b) highlight the usefulness of InSAR data in landslide mapping, monitoring, and hazard analysis by presenting the results of a questionnaire about the use of remote sensing in current landslide studies in Europe. Besides the prevalent use of radar imagery for landslide studies, Tofani et al. (2013b) underline the use of other remote sensing data such as aerial photos, optical imagery, and meteorological sensors, which are not covered in this review.

3.2 Earthquakes

In the case of active seismic regions, the analysis of InSAR ground deformation data enables one to evaluate the strain accumulated in the ground and to identify faults that are not visible through optical images, thus providing a valuable tool for estimating seismic risk. For example, Moro et al. (2017) identify earthquake early warning signals from ground velocity and acceleration maps obtained from surface deformation data derived from InSAR processing. Moreover, InSAR data can be used to assess the effects of an earthquake and of the events associated with it. The first example is provided by Massonnet et al. (1993), who exploit InSAR data to detect the co-seismic ground displacements produced by an earthquake that occurred in 1992 in Landers, California. As a more recent example, Kuang et al. (2019) use InSAR data to study a seismic event and the landslides and ground deformations induced by it.

3.3 Volcanic Activity

In the case of volcanic activity, InSAR data allow one to monitor ground deformations produced by the inflation and deflation of the volcano that precede and follow an eruption, thus providing relevant information about magma dynamics. Moreover, continuous monitoring of the deformations allows for the study of volcanic evolution during quiescent periods, and ground deformation data collected during eruptions give useful information to assess the extent and severity of their consequences. In this field of application, InSAR also offers the advantage of collecting images in the presence of the volcanic gases released during an eruption, which obstruct optical measurements. InSAR data are used in Chaussard et al. (2013) and Lu et al. (2002) to study time-dependent volcanic deformations, and in Schaefer et al. (2015) to measure ground deformation during volcanic eruptions in order to evaluate volcanic slope instabilities. De Novellis et al. (2019), instead, exploit InSAR data to study a volcanic eruption and its related seismic phenomena.

3.4 Ground Subsidence

InSAR ground deformation data can be used to study land subsidence or heave, which could be caused by natural processes such as sediment compaction or man-made interventions, for example, mining activity, geothermal field exploitation, or fluid extraction from aquifers or oil reservoirs. Some examples of application in this context are provided by Fielding et al. (1998), who study ground subsidence in oil fields due to the extraction of large volumes of fluid from shallow depths, Carnec and Fabriol (1999), who study land subsidence in a geothermal reservoir, Lu and Danskin (2001), Motagh et al. (2008), and Lubis et al. (2011), who study ground deformation produced by water reservoir dynamics and exploitation, and Perski et al. (2009), who analyze ground deformation induced by mining activity in a salt mine.

4 Statistical Models and Methods

The statistical analysis of ground deformation data derived from InSAR processing of satellite images is mostly performed qualitatively by expert visual interpretation of the surface motion maps, with the help of standard descriptive and graphical statistics tools. However, automated procedures would allow continuous real-time monitoring, thus enhancing the impact of remote sensing on natural hazard mitigation. Indeed, developing decision support systems that automatically analyze real-time data acquired from monitoring instruments to identify early warning signals and emergency conditions is of paramount importance in the mitigation of the socioeconomic impact of natural hazards (Groat 2004), but poses computational and methodological challenges, related for example to the characterization of the spatial structure of the data and to the proper handling of the outliers in the detection of emergencies (Brenning and Dubois 2008). This is in fact a relatively new direction of research, and there are only a few examples in the literature of statistical techniques applied to the analysis of InSAR ground deformation time series in the context of monitoring and forecasting natural hazards.

The statistical models applied to InSAR data can be divided into two categories: time series analysis methods and spatial statistics methods.

4.1 Time Series Analysis

Time series of ground deformation measurements derived from multi-temporal InSAR can be used to study the trend of motion of the region under study in order to identify specific deformation patterns or change points related to natural hazards.

In this setting, a first step of the analysis consists of identifying specific trends of motion and classifying the time series of the data set into classes featuring similar behavior. This task has been performed manually by experts (see, for example, Cigna et al. 2011) and, more recently, through time series classification algorithms. The need for automated classification techniques arose from the difficulty of visually classifying large data sets in a systematic way.

One example is Milone and Scepi (2011), who apply a k-medoids clustering technique to time series of ground deformation obtained through InSAR processing. The application considered in the article poses challenges related to the size of the data set (18,452 time series). To address this problem, the authors choose a clustering technique specifically developed for large data sets: the clustering large applications (CLARA) algorithm, which combines a sampling approach (reducing the size of the problem by randomly selecting subsamples of the original data) with the k-medoids algorithm.
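A compact Python sketch of the CLARA idea follows: k-medoids is run on several random subsamples, each candidate set of medoids is scored on the full data set, and the best set is kept. For brevity, it swaps the PAM swap search of the original CLARA for a simpler alternating k-medoids update, uses the Euclidean distance between equally sampled time series, and adopts a default sample size of \(40 + 2k\). These are assumptions of the sketch, not details of Milone and Scepi (2011), and the function names are hypothetical.

```python
import numpy as np


def _kmedoids(dist, k, rng, n_iter=100):
    """Alternating k-medoids on a precomputed distance matrix (swapped in for PAM)."""
    medoids = rng.choice(dist.shape[0], size=k, replace=False)
    for _ in range(n_iter):
        labels = np.argmin(dist[:, medoids], axis=1)
        new = medoids.copy()
        for c in range(k):
            members = np.flatnonzero(labels == c)
            if members.size:
                # New medoid: the member with the smallest total distance to its cluster.
                within = dist[np.ix_(members, members)].sum(axis=1)
                new[c] = members[np.argmin(within)]
        if np.array_equal(new, medoids):
            break
        medoids = new
    return medoids


def clara(series, k, n_samples=5, sample_size=None, seed=0):
    """CLARA-style clustering of time series (rows of `series`), Euclidean distance."""
    X = np.asarray(series, dtype=float)
    n = X.shape[0]
    size = min(n, sample_size or 40 + 2 * k)
    rng = np.random.default_rng(seed)
    best_cost, best_medoids = np.inf, None
    for _ in range(n_samples):
        idx = rng.choice(n, size=size, replace=False)
        sub = X[idx]
        dist = np.linalg.norm(sub[:, None, :] - sub[None, :, :], axis=-1)
        medoids = idx[_kmedoids(dist, k, rng)]
        # Score the candidate medoids on the FULL data set, not just the sample.
        to_medoids = np.linalg.norm(X[:, None, :] - X[medoids][None, :, :], axis=-1)
        cost = to_medoids.min(axis=1).sum()
        if cost < best_cost:
            best_cost, best_medoids = cost, medoids
    to_best = np.linalg.norm(X[:, None, :] - X[best_medoids][None, :, :], axis=-1)
    return best_medoids, np.argmin(to_best, axis=1)
```

Only sample-sized distance matrices are ever built, while the full data set is touched only through distances to the \(k\) medoids. This is what keeps the cost manageable for tens of thousands of time series.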

Other works deal with the problem of grouping time series into classes of trends using methods based on statistical testing. Following this idea, Berti et al. (2013) present an automated technique to classify ground deformation time series through a conditional sequence of statistical tests aimed at comparing the time series in the data set to a set of predefined displacement patterns. The idea is that the distinctive predefined target trends describe different styles of ground deformation that can be interpreted in terms of the underlying physical process. To implement this method, Berti et al. (2013) identify six reference trends (shown in Fig. 2): uncorrelated, linear, quadratic, bilinear, discontinuous with constant velocity, and discontinuous with variable velocity. These reference trends have been identified by expert visual inspection of 1,000 time series from the available data set. The classification procedure consists of a sequence of standard statistical tests aimed at automatically assigning each time series to one of the six reference trends.

Fig. 2 Examples of ground displacement time series following the six reference trends. Figure adapted from Berti et al. (2013)
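The idea of assigning each series to one of a few predefined trend shapes can be sketched as follows. Berti et al. (2013) use a conditional sequence of statistical tests; the sketch instead swaps in a simpler technique, model selection by the Bayesian information criterion (BIC), over five of the six reference trends (the discontinuous trend with variable velocity is omitted). The breakpoint of the bilinear and discontinuous models is found by grid search and counted as an extra parameter; all names are hypothetical.

```python
import numpy as np


def _rss(A, y):
    """Residual sum of squares of the least-squares fit y ~ A."""
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    r = y - A @ coef
    return max(float(r @ r), 1e-12)   # guard against log(0) for noise-free input


def _bic(rss, n, k):
    return n * np.log(rss / n) + k * np.log(n)


def classify_trend(t, y, min_seg=5):
    """Pick the reference trend with the lowest BIC (breakpoint counted as a parameter)."""
    n = len(t)
    one = np.ones(n)
    scores = {
        "uncorrelated": _bic(_rss(one[:, None], y), n, 1),
        "linear": _bic(_rss(np.column_stack([one, t]), y), n, 2),
        "quadratic": _bic(_rss(np.column_stack([one, t, t**2]), y), n, 3),
        "bilinear": np.inf,
        "discontinuous": np.inf,
    }
    for tb in t[min_seg:n - min_seg]:   # grid search over candidate breakpoints
        hinge = np.column_stack([one, t, np.maximum(t - tb, 0.0)])   # slope change at tb
        step = np.column_stack([one, t, (t >= tb).astype(float)])    # offset at tb
        scores["bilinear"] = min(scores["bilinear"], _bic(_rss(hinge, y), n, 4))
        scores["discontinuous"] = min(scores["discontinuous"], _bic(_rss(step, y), n, 4))
    return min(scores, key=scores.get), scores
```

BIC penalizes the richer models, so a breakpoint model is preferred only when it reduces the misfit by more than its extra parameters cost; a testing sequence such as the one of Berti et al. (2013) plays the same role with explicit significance levels.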

Following a similar idea, Chang and Hanssen (2015) propose a probabilistic approach to InSAR time series classification based on multiple hypothesis testing. The set of reference trends (in this case called a library of canonical kinematic models) is much richer than the previous one: starting from six canonical functions (accounting for linearity, seasonal periodicity, discontinuities, and nonlinearity), the full set of competing models is obtained by nested combinations, so that the number of reference trends can reach the order of hundreds. The optimal model is identified with the B-method of testing, a multiple hypothesis testing technique that compares models with different numbers of degrees of freedom simultaneously while fixing the same discriminatory power for all the alternative hypotheses; here the null hypothesis is steady-state behavior, and the models in the library constitute the alternative hypotheses. This formulation poses computational challenges related both to the large amount of data and to the cardinality of the library; to address them, an efficient numerical implementation is devised in which, thanks to the formulation of the testing procedure, the matrices involved in the computation of the test statistics are computed only once.

These works aim at identifying groups of locations featuring different types of deformation trends, in order to discriminate locations with irregular or fast-rate trends from stable ones. A further step in the analysis consists of quantifying how far the time series deviate from expected behaviors, in order to identify early warning signals or anomalous behaviors. To this aim, Cigna et al. (2012) develop a semiautomated methodology that defines two deviation indexes quantifying the divergence of a time series from a historical trend of motion. The effectiveness of this methodology has also been demonstrated by Tapete and Casagli (2013), who apply it to assess the structural stability of archaeological monuments and historical buildings. The methodology is based on the error quantification of a linear regression estimate. In practice, the algorithm fixes a priori a breaking time \(t_b\) and aims at identifying and quantifying the deviation of the portion of the time series following \(t_b\) (updated pattern) from the linear trend estimated on the portion preceding \(t_b\) (historical pattern). Two possibilities are considered: the time series may show changes in velocity and/or acceleration at \(t_b\) (movements that are precursors of major events), or it may show a discontinuity at \(t_b\) (a sudden change influencing the time series locally). To account for the two cases, two deviation indexes are defined. The first deviation index (\(\mathrm{DI}_1\)) accounts for the distances of the points of the updated pattern from the regression line estimated on the historical pattern; it is computed as the sum of the quantities \(\Delta _i\) shown in the first panel of Fig. 3, normalized by the standard error of the regression \(s\):

\[ \mathrm{DI}_1 = \frac{1}{s}\sum_{i} \Delta_i , \]

where the sum runs over the points of the updated pattern.

The second deviation index (\(\mathrm{DI}_2\)) accounts for the difference between the values of the two regression lines, estimated from the historical and the updated patterns, evaluated at \(t_b\), as shown in the second panel of Fig. 3. The two deviation indexes provide significant information in the analysis of different geological contexts, such as the identification of eruption precursor signals and the assessment of tectonic events.

Fig. 3 Graphical representation of the computation of \(\mathrm{DI}_1\) (left panel) and \(\mathrm{DI}_2\) (right panel). Figure adapted from Cigna et al. (2012)
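A minimal sketch of the two deviation indexes, under stated assumptions: \(\Delta_i\) is taken as the absolute vertical distance of each updated point from the extrapolated historical line, and \(\mathrm{DI}_2\), the gap at \(t_b\) between the updated and historical regression lines, is normalized by \(s\) in the same way as \(\mathrm{DI}_1\). Both choices are assumptions for illustration and may differ from the exact definitions of Cigna et al. (2012); the function name is hypothetical.

```python
import numpy as np


def deviation_indexes(t, y, t_b):
    """Return (DI1, DI2) for a breaking time t_b (sketch; see assumptions above)."""
    hist, upd = t < t_b, t >= t_b
    # Historical regression line and its standard error s (n - 2 degrees of freedom).
    b1, b0 = np.polyfit(t[hist], y[hist], 1)
    res = y[hist] - (b0 + b1 * t[hist])
    s = np.sqrt(res @ res / (hist.sum() - 2))
    # DI1: distances of updated points from the extrapolated historical line, over s.
    delta = np.abs(y[upd] - (b0 + b1 * t[upd]))    # assumption: absolute vertical distance
    di1 = delta.sum() / s
    # DI2: gap at t_b between the updated-pattern line and the historical line.
    c1, c0 = np.polyfit(t[upd], y[upd], 1)
    di2 = ((c0 + c1 * t_b) - (b0 + b1 * t_b)) / s  # assumption: normalized by s like DI1
    return di1, di2
```

A series that keeps its historical trend yields a small \(\mathrm{DI}_2\), while an offset at \(t_b\) produces a large \(|\mathrm{DI}_2|\) whose sign gives the direction of the jump.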

The aforementioned works rely on algorithms that consider only the temporal dependence of the data and do not use their spatial dependence. However, in all cases, the spatial distribution of the data is exploited for interpretation, by representing the results obtained (such as the cluster number or the value of a deviation index) on maps and providing a geological interpretation of coherent regions. This highlights the importance of the spatial dependence of the data and the need to include it in the analysis.

4.2 Spatial Statistics

Spatial statistics techniques have been applied in the setting of DInSAR processing of two SAR images to analyze surface deformation occurring between the two acquisition times. One example is provided by Yaseen et al. (2013), who apply geostatistical techniques to DInSAR measurements of earthquake-induced ground deformation. With the aim of characterizing and understanding the mechanisms of the causative faults, Yaseen et al. (2013) study coseismic displacements obtained from InSAR processing of pre- and post-earthquake SAR images. In this context, kriging is applied to interpolate values missing because of coseismic temporal decorrelation, thus providing data for estimating the parameters of a slip distribution model through the inversion of coseismic displacements.
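How kriging fills such gaps can be seen in a minimal ordinary kriging sketch, assuming an exponential variogram whose sill and scale are known (in practice they are fitted to the empirical variogram of the displacement field). This is a generic illustration, not the specific setup of Yaseen et al. (2013); function and parameter names are hypothetical.

```python
import numpy as np


def exp_variogram(h, sill=1.0, scale=1.0, nugget=0.0):
    """Exponential variogram model; gamma(0) = 0 by definition."""
    return np.where(h > 0, nugget + sill * (1.0 - np.exp(-h / scale)), 0.0)


def ordinary_kriging(xy, z, xy0, **vario):
    """Predict z at the points xy0 from observations z at locations xy.

    Solves [Gamma 1; 1^T 0] [w; mu] = [gamma0; 1] for each target point, so the
    weights sum to one (unbiasedness) and the estimation variance is minimized.
    """
    n = len(z)
    d = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=2)
    K = np.ones((n + 1, n + 1))
    K[:n, :n] = exp_variogram(d, **vario)
    K[n, n] = 0.0
    est, var = [], []
    for p in np.atleast_2d(np.asarray(xy0, dtype=float)):
        g = np.ones(n + 1)
        g[:n] = exp_variogram(np.linalg.norm(xy - p, axis=1), **vario)
        sol = np.linalg.solve(K, g)
        w, mu = sol[:n], sol[n]
        est.append(w @ z)
        var.append(w @ g[:n] + mu)   # ordinary kriging variance
    return np.array(est), np.array(var)
```

With a zero nugget, kriging is an exact interpolator: at an observed pixel it returns the measured value with zero variance, and the variance grows with the distance from the data, which is useful for flagging poorly constrained fill-in values.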

In the setting of multi-temporal InSAR, where the data depend both on space and time, spatial statistics tools have been applied to automatically analyze and characterize ground displacement and help interpretation.

One of the methods applied in this context is PSI Hotspot and Cluster Analysis (PSI-HCA), a spatial statistical approach proposed by Lu et al. (2012) to analyze InSAR data and map landslides automatically and efficiently. The approach is based on the Getis–Ord \(G^*_i\) statistic and on kernel density estimation. In practice, the \(G^*_i(d)\) statistic is computed for each spatial location in the data set as follows:

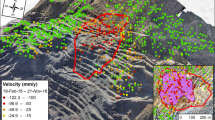

where \(x_i\) is the velocity measured at the spatial location under consideration, \(x_j\) are the velocities measured at the \(n_{ij}\) spatial locations within a distance d from the spatial location under consideration, and \(\bar{x}^*\) and \(s^*\) are the mean and the standard deviation, respectively, of the velocities measured at the n spatial locations of the whole data set. The \(G^*_i(d)\) statistic is therefore a measure of association between the spatial location under consideration and its neighbors within a distance d. The distance d is chosen on the basis of the topography of the study region, using a digital terrain model. A smooth hotspot map is finally obtained through kernel density estimation with a quadratic kernel function. Regions of the hotspot map featuring high absolute values highlight areas affected by landslides, with the sign indicating the direction of the displacement (towards or away from the sensor for positive or negative values, respectively). Figure 4 shows, as an example, the hotspot map obtained by Lu et al. (2012) applying PSI-HCA to InSAR data for the Arno River basin area.

Fig. 4 Example of hotspot map obtained with PSI-HCA applied to ascending and descending InSAR data on a study region covering the Pistoia–Prato–Firenze and Mugello basins in the Arno River basin. Reprinted from Lu et al. (2012)
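The \(G^*_i(d)\) step of the procedure can be sketched directly from the definitions above, assuming binary weights (a point counts as a neighbor if it lies within distance d, the point itself included) and planar coordinates. The kernel density smoothing and the topography-driven choice of d are omitted; the function name is hypothetical.

```python
import numpy as np


def getis_ord_gi_star(xy, v, d):
    """G*_i(d) for every point, with binary weights w_ij = 1 if dist(i, j) <= d (j = i included)."""
    n = len(v)
    xbar = v.mean()
    s = np.sqrt(np.mean(v**2) - xbar**2)              # s*: standard deviation over all n points
    dist = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=2)
    W = (dist <= d).astype(float)
    n_ij = W.sum(axis=1)                               # neighbours within d, point itself included
    num = W @ v - n_ij * xbar
    den = s * np.sqrt((n * n_ij - n_ij**2) / (n - 1))  # zero if every point is within d of i
    return num / den
```

The dense distance matrix keeps the sketch short but scales quadratically with the number of scatterers; for large PSI data sets a spatial index (for example a k-d tree) would be the natural replacement.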

Another example of InSAR data analysis accounting for both spatial and temporal dependence is provided by Cohen-Waeber et al. (2018), who apply independent and principal component analysis to detect spatial and temporal patterns in surface ground deformation time series in a study of slow-moving landslides. The modes identified in the analysis account for continuous linear motion and for seasonal or annual cycles correlated with precipitation, which induces soil swelling and changes in pore pressure. The resulting components relate to different geomechanical processes, providing insights into the characterization of landslide dynamics. This technique is therefore useful for improving hazard forecasting, as it separates common spatiotemporal modes of displacement variation and thereby helps isolate the underlying driving mechanisms.
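The principal component part of this analysis can be sketched as a singular value decomposition of the epochs-by-points displacement matrix: each mode pairs a time function with a spatial map. This minimal sketch assumes a complete matrix (no missing epochs) and omits the independent component rotation; the function name is hypothetical.

```python
import numpy as np


def spatiotemporal_pca(D, n_modes=2):
    """D: (n_epochs, n_points) displacements. Returns temporal modes, spatial maps, variance ratios."""
    Dc = D - D.mean(axis=0)                      # remove each point's temporal mean
    U, S, Vt = np.linalg.svd(Dc, full_matrices=False)
    var_ratio = S**2 / np.sum(S**2)
    temporal = U[:, :n_modes] * S[:n_modes]      # time functions, scaled by singular values
    spatial = Vt[:n_modes]                       # spatial patterns (unit norm)
    return temporal, spatial, var_ratio[:n_modes]
```

For a synthetic field combining a creeping sector (linear trend) and a seasonally swelling sector, the two leading modes capture essentially all the variance; note that principal components are only constrained to be orthogonal, so physically distinct signals may still be mixed, which is the motivation for the additional independent component step.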

4.3 Integration of Other Data

InSAR data can be integrated with data obtained from other sources. Some examples of the integration of InSAR and GPS data are found in Polcari et al. (2016), Peyret et al. (2008), and He et al. (2007). Del Soldato et al. (2018b) integrate InSAR data with data from different sources (such as GPS, aerial optical photographs, and traditional measurements carried out through geological and geomorphological surveys) in the context of a historical study of landslide evolution. Tofani et al. (2013a) integrate InSAR data with data collected by ground-based monitoring instrumentation, such as inclinometers and piezometers, to characterize and monitor a landslide. Del Soldato et al. (2018a) combine InSAR data and GNSS (Global Navigation Satellite System) to investigate subsidence-related ground motions. Novellino et al. (2017) combine InSAR data with aerial photograph data and field surveys in order to update a landslide inventory.

4.4 Software Performance Optimization

In recent years, the spatial and temporal resolution of Earth observation instruments has increased. Since processing data from SAR systems may become a computationally heavy task, adopting proper techniques to speed up the analysis has become essential (Plaza et al. 2011). The computational challenges of processing remote sensing data are reviewed in Ma et al. (2015) and mainly concern the following: the high dimensionality of the data; the complexity of mathematical models and analysis methods, such as those described in the present and next sections; efficient data storage and the use of dedicated or parallel hardware architectures, such as field-programmable gate arrays (FPGAs) and graphics processing units (GPUs) (Lee et al. 2011); and the implementation of efficient and strongly scalable algorithms that exploit the specific properties of the underlying hardware and provide accurate solutions quickly, up to real-time computing.

In order to effectively apply advanced statistical techniques to large-scale data, an efficient implementation relying on modern programming techniques is required. Examples of such techniques are expression templates and object factories, which guarantee wide flexibility and extensibility together with high computational efficiency. Many libraries have been written with the Rcpp package (Eddelbuettel 2013) to integrate high-performance C++ code into the R statistical programming framework, thus providing a more user-friendly processing interface. Samatova et al. (2006) present parallel statistical computing techniques for climate modeling. Although they have been applied to different classes of problems, such methods can inspire the development of optimized computational techniques for Earth observation data analysis.

A rapidly growing research field in this framework relies on machine learning and neural network models (Ma et al. 2015). The main challenge in this scenario lies in providing a sufficiently representative training set, possibly integrated with simulation results obtained from physics-based differential models. In the remote sensing domain, Kussul et al. (2016) propose a methodology for solving large-scale classification and area estimation problems by means of a deep learning paradigm: the application concerns the generation of high-resolution land cover and land use maps for the territory of Ukraine from 1990 to 2010 and 2015. Similar techniques, using more general SAR data, have been applied to hydro-geological risk management, such as the spatial prediction of flash floods in tropical areas. For instance, Ngo et al. (2018) and Price et al. (2018) present automatic techniques for detecting anomalies, such as road damage, from SAR imagery. A deep learning approach using COSMO-SkyMed SAR data for ship classification and navigation control is proposed in Wang et al. (2017); the techniques proposed therein could also be instrumental in the analysis of ground displacement.

5 Physics-Based Models and Methods

Natural events have spatial, temporal, and spatiotemporal variability that requires effective modeling techniques for proper estimation and decision planning according to scientific principles.

All Earth natural events, including landslides, earthquakes, volcanic activity, and other geological phenomena, are intrinsically stochastic and affected by variability, irregularity, and any other type of uncertainty (Sen 2016). However, it is often convenient to introduce proper idealization concepts and assumptions so that uncertain phenomena become graspable by the physical knowledge available: in such an idealized deterministic framework, for a given set of circumstances the outcome of an event is uniquely predictable.

Such events are frequently described by mathematical models derived from physical principles and simulated by computer software of arbitrary complexity, depending on the accuracy needed and the time available to produce prompt responses. To enhance the accuracy and realism of the predictions produced by numerical simulations, InSAR data can be used to calibrate the large set of parameters, often affected by huge variability, that characterize the physical models adopted. The first part of this section presents state-of-the-art deterministic and stochastic models and methods integrating physical knowledge that have been proposed in the literature for monitoring and forecasting the natural hazards covered by the present review. Finally, Sect. 5.5 reviews different strategies that have been proposed to integrate satellite data within physical models.

5.1 Landslides

The main difficulties in physics-based models for propagating landslides arise from the complex nature of slope movements and the computational effort required to provide accurate results from simulating their time- and space-dependent behavior (van Asch et al. 2007). Approaches for landslide simulation are usually based either on discrete or on continuum models.

Continuum approaches commonly involve modeling the complex landslide material as a continuum fluid medium governed by elastoplastic and rheological properties characterized by a limited number of parameters, as described in Cremonesi et al. (2017) and Hungr (1995). The typical physical laws taken into account are equations governing the conservation of mass, momentum, and energy that, depending on the complexity of the model, can be formulated for the solid, fluid, and gas phases, as in Pudasaini (2012) and Pitman and Le (2005), or for a single homogenized phase. For instance, the motion of rigid viscoplastic landslide material can be described using the Navier–Stokes equations for an incompressible fluid:

\[
\begin{aligned}
\varrho \left( \frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u} \right) - \nabla\cdot\boldsymbol{\sigma}_D + \nabla p &= \varrho\,\mathbf{b} && \text{in } \Omega_t,\\
\nabla\cdot\mathbf{u} &= 0 && \text{in } \Omega_t,
\end{aligned}
\tag{1}
\]

where \(\mathbf {u}\) is the velocity of the material particles, \(p\) the pressure, \(\varrho \) the density, \(\varvec{\sigma }_D\) the deviatoric part of the Cauchy stress tensor, and \(\mathbf {b}\) a forcing term (e.g., gravity). Equation (1) is complemented by proper boundary conditions, as described in Cremonesi et al. (2017), and is solved in an arbitrary Lagrangian–Eulerian fashion, that is, over a computational domain \(\Omega _t\) that moves at each time \(t\) with velocity \(\mathbf {r}\). Tracking an evolving free surface is a hard task and makes Eulerian approaches less convenient than Lagrangian ones, which, on the other hand, are more computationally demanding. In Crosta et al. (2009), the arbitrary Lagrangian–Eulerian approach is used to model entrainment and deposition in rock and debris avalanches. Cremonesi et al. (2017) present a Lagrangian finite element (FE) technique with a basal slip model for three-dimensional simulations of landslides. As alternatives to the standard FE method for elastoplastic flows (Crosta et al. 2003), whose computational cost would otherwise be prohibitive, different approaches have been developed, such as depth averaging (Quecedo et al. 2004) for landslides whose depth is much smaller than their length, or mesh-free methods (Wang et al. 2019) for large-deformation modeling.

In discrete methods, the landslide is modeled as a discrete set of particles of different shapes interacting with each other through contact forces, whose physical description is crucial to account for the actual material properties. In Taboada and Estrada (2009), a discrete element (DE) model is adopted to simulate the motion of rock avalanches, which are modeled, on the basis of granular physics and shear experiments, as a dense granular flow of dry frictional and cohesive particles; different phases are identified, from slope failure to avalanche triggering and motion. A similar model is adopted in Lu et al. (2014a) to address catastrophic slope failure under heavy rainfall: although the simulation provides scenario-based run-out paths, particle velocities, and landslide-affected areas, such a discrete model has numerical limitations due to the complex mechanisms of landslides, particularly when the pore pressure increases. A different discrete approach, based on molecular dynamics, is proposed in Martelloni et al. (2013) for shallow landslides caused by rainfall. Here, the interaction between particles is modeled through a suitable potential function that accounts for a realistic range of viscosities; the two-dimensional algorithm presented produces velocity and acceleration patterns typical of real landslides.
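To make the role of contact forces concrete, the following is a deliberately minimal DE sketch: a vertical column of equal spheres settling under gravity on a rigid floor, with a linear spring-dashpot normal contact law (a common textbook choice, assumed here; it is not the specific model of the works cited above). The stiffness, damping ratio, and time step are hypothetical values, and tangential friction, cohesion, and pore pressure are ignored.

```python
import numpy as np


def settle_column(n=10, r=0.05, m=1.0, k=1e5, zeta=0.7, g=9.81, dt=1e-4, t_end=3.0):
    """Explicit DE simulation of n spheres stacked along z; returns final heights and velocities."""
    c = 2.0 * zeta * np.sqrt(k * m)                  # dashpot coefficient from a damping ratio
    z = r + 0.1 + np.arange(n) * (2 * r + 0.01)      # start slightly apart, above the floor
    v = np.zeros(n)
    for _ in range(int(t_end / dt)):
        f = np.full(n, -m * g)                       # gravity
        # Particle-floor contact (floor at z = 0).
        delta0 = r - z[0]
        if delta0 > 0:
            f[0] += max(k * delta0 - c * v[0], 0.0)  # no tensile (attractive) contact force
        # Contacts between vertical neighbours: overlap and its rate of change.
        overlap = 2 * r - (z[1:] - z[:-1])
        rel_v = v[1:] - v[:-1]                       # negative while the particles approach
        fn = np.where(overlap > 0, np.maximum(k * overlap - c * rel_v, 0.0), 0.0)
        f[1:] += fn                                  # pushes the upper particle up
        f[:-1] -= fn                                 # and the lower particle down
        v += dt * f / m                              # semi-implicit (symplectic) Euler
        z += dt * v
    return z, v
```

The time step must resolve the contact oscillation period \(2\pi\sqrt{m/k}\) (about 0.02 s here), which is why stiff contacts make DE simulations of large particle assemblies computationally expensive.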

5.2 Earthquakes

Mathematical models of seismic wave propagation are irreplaceable tools for investigating the Earth’s structure and constructing ground shaking scenarios for earthquakes. Earthquake ground motion prediction plays a central role in seismic hazard analysis and has been employed within both probabilistic and deterministic frameworks to estimate the expected ground motion at a site given an earthquake of known magnitude, distance, faulting style, etc. A reference introduction to computational seismology, ranging from the one-dimensional wave equation to state-of-the-art parallel numerical techniques, can be found in Igel (2017). Finite difference (FD), FE, and hybrid FD/FE methods have dominated the literature on earthquake motion modeling in the past few decades, opening the way for numerical seismic exploration and structural modeling, as in Moczo et al. (2007).
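As the simplest instance of the FD approach, the one-dimensional wave equation \(\partial^2 u/\partial t^2 = c^2\,\partial^2 u/\partial x^2\) can be solved with the classical second-order explicit scheme. The sketch below assumes a homogeneous medium, fixed ends, and an initial Gaussian pulse at rest; all parameter values and names are illustrative.

```python
import numpy as np


def wave_1d_fd(c=1.0, L=10.0, nx=501, t_end=3.0, cfl=0.9):
    """Explicit second-order FD solution of u_tt = c^2 u_xx with u = 0 at both ends."""
    x = np.linspace(0.0, L, nx)
    dx = x[1] - x[0]
    dt = cfl * dx / c                        # CFL condition c*dt/dx <= 1 for stability
    nt = int(round(t_end / dt))
    r2 = (c * dt / dx) ** 2
    u_prev = np.exp(-((x - L / 2) / 0.3) ** 2)   # initial Gaussian pulse, zero initial velocity
    u = u_prev.copy()
    # First step from a Taylor expansion, using the zero initial velocity.
    u[1:-1] = u_prev[1:-1] + 0.5 * r2 * (u_prev[2:] - 2 * u_prev[1:-1] + u_prev[:-2])
    for _ in range(nt - 1):
        u_next = np.zeros_like(u)            # fixed ends: u = 0 at x = 0 and x = L
        u_next[1:-1] = 2 * u[1:-1] - u_prev[1:-1] + r2 * (u[2:] - 2 * u[1:-1] + u[:-2])
        u_prev, u = u, u_next
    return x, u, nt * dt
```

By d'Alembert's solution, the pulse splits into two halves of amplitude 0.5 travelling at speed \(c\), so after a time \(T\) the right-going peak sits at \(L/2 + cT\), which gives a simple correctness check for the scheme.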

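Mathematical models for elastodynamics analyses in seismology are often derived from the equations of motion, as presented in Mazzieri (2012), namely the equilibrium equations for an elastic medium subjected to an external seismic force \(\mathbf {f}\):

\[ \varrho \frac{\partial^2 \mathbf{u}}{\partial t^2} + \mathbf{f}^{\mathrm{visc}}\!\left(\mathbf{u}, \frac{\partial \mathbf{u}}{\partial t}\right) - \nabla\cdot\boldsymbol{\sigma}(\mathbf{u}) = \mathbf{f}, \tag{2} \]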

where \(\varrho \) is the density, \(\mathbf {u}\) is the soil displacement, \(\varvec{\sigma }(\mathbf {u})\) is the stress tensor accounting for the elastic properties of the soil, and \(\mathbf {f}^\mathrm {visc}\) represents the viscoelastic volume forces depending on the displacement and the velocity. Viscoelastic FE models have been used in Hu et al. (2004) to analyze the post-seismic deformation fields of the great 1960 Chile earthquake, providing promising results on the characterization of viscosity parameters and post-seismic seaward motion.

More sophisticated mathematical models for seismic analysis combine the accuracy of spectral element techniques (Mazzieri et al. 2011) with the flexibility of discontinuous Galerkin FE methods (Wollherr et al. 2018 and Antonietti et al. 2018). Such versatility, together with the ongoing progress of computational power, is likely to enable three-dimensional numerical simulations of different seismic excitation scenarios, such as the series of earthquakes analyzed by Paolucci et al. (2014) that occurred in Haiti and Chile in 2010, New Zealand in 2010–2011, Japan in 2011, and Italy in 2012.

Coupled multi-physics approaches modeling the Earth structure, the fault structure, stress states during large earthquakes, and the consequent tsunami generation have been proposed in Uphoff et al. (2017) and in Ulrich et al. (2019) for the 2004 Sumatra and 2018 Sulawesi earthquakes and tsunamis, respectively.

Advancements in computational seismology have led to the development of high-performance software for modeling seismic wave propagation and earthquake dynamics, such as the spectral element simulator SPEED (Mazzieri et al. 2013), used for elastodynamics applications like the ones shown in Fig. 5, and the discontinuous Galerkin package SeisSol (Breuer et al. 2014). Another example is given in Komatitsch et al. (2010), where a high-order FE method is implemented on a large parallel GPU cluster.

Fig. 5 Example of elasto-dynamics applications of SPEED. Reprinted from Paolucci et al. (2014)

5.3 Volcanic Activity

Lava flow simulations can be used for planning evacuation or countermeasures in order to mitigate the risks during effusive eruptions. Costa and Macedonio (2005a) present an overview of the main physics-based approaches for lava flow prediction, from simple probabilistic models to more complex computational fluid dynamics simulations.

Stochastic methods are shown to provide approximate maximum slope scenarios in a very narrow computational time frame; they are also used to produce hazard maps with lava invasion probabilities for different geographic sites. Such models are usually based on Monte Carlo or Lattice Boltzmann simulation methods and rely on the assumption that topography plays the major role in determining the lava flow path: the flow is assumed to propagate randomly starting from a source point, and upward paths are prevented whereas downward paths have a higher probability along the maximum slope direction (see, e.g., Favalli et al. 2005).
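The maximum-slope idea can be sketched as a small Monte Carlo experiment on a gridded DEM: each simulated path starts at the vent and repeatedly moves to a lower neighboring cell, chosen at random with probability proportional to the downhill slope, while upward (and flat) moves are forbidden. The slope-proportional weighting is an assumption made for illustration (other stochastic codes instead perturb the DEM randomly and follow the steepest descent); the fraction of paths crossing each cell approximates an invasion probability. Names are hypothetical.

```python
import numpy as np


def lava_invasion_probability(dem, source, n_paths=500, max_steps=200, seed=0):
    """Fraction of random downhill paths from `source` that visit each DEM cell."""
    rng = np.random.default_rng(seed)
    ny, nx = dem.shape
    hits = np.zeros(dem.shape, dtype=float)
    nbrs = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    for _ in range(n_paths):
        i, j = source
        visited = np.zeros(dem.shape, dtype=bool)
        visited[i, j] = True
        for _ in range(max_steps):
            cand, drops = [], []
            for di, dj in nbrs:
                a, b = i + di, j + dj
                if 0 <= a < ny and 0 <= b < nx:
                    drop = (dem[i, j] - dem[a, b]) / np.hypot(di, dj)   # downhill slope
                    if drop > 0:                 # upward and flat moves are not allowed
                        cand.append((a, b))
                        drops.append(drop)
            if not cand:
                break                            # local minimum or grid edge reached
            p = np.array(drops) / np.sum(drops)  # steeper descent is more likely
            i, j = cand[rng.choice(len(cand), p=p)]
            visited[i, j] = True
        hits += visited
    return hits / n_paths
```

Because elevation strictly decreases along every path, paths cannot loop, and the cost grows only linearly with the number of paths, which is why such models deliver hazard maps within a narrow computational time frame.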

On the other hand, continuum models require a higher computational effort, but they are able to accurately predict the flow front velocity by taking into account possible human intervention scenarios (lava diversion, natural or artificial barriers, etc.). Following the transport theory, lava can be reasonably modeled as an incompressible non-Newtonian fluid in the presence of a free surface. During heat loss, which occurs by radiation, lava cools and undergoes phase transitions that transform its fluid dynamics properties, which requires a different modeling approach. As already described in Sect. 5.1 for landslides, the physical equations that need to be simulated are the mass, momentum, and energy conservation laws (see Costa and Macedonio 2005a and references therein). An introduction to geomorphological fluid mechanics with a focus on lava flows is contained in Balmforth et al. (2001).

Due to the high complexity of the physical processes involved, the numerical solution of the complete conservation equations for real lava flows is often unfeasible. Simplified models have been derived to overcome the computational difficulties: in Costa and Macedonio (2005b) a two-dimensional depth-averaged model for lava thickness, velocities, and temperature is proposed, representing a good compromise between the full three-dimensional description and the need to contain the computational costs. This approach, which is valid in the limit \(H_{*}^2 / L_{*}^2 \ll 1\) (where \(H_{*}\) is the undisturbed fluid height and \(L_{*}\) the characteristic length in the flow direction), consists of solving the mass, momentum, and energy balance equations integrated over the lava thickness. Given a terrain bed surface of height \(H\), the fluid height \(h\) (measured along the vertical direction \(z\)) evolves according to the depth-averaged mass balance

\[ \frac{\partial h}{\partial t} + \frac{\partial (h\,u_x)}{\partial x} + \frac{\partial (h\,u_y)}{\partial y} = 0, \]

where \(u_x\) and \(u_y\) are the depth-averaged fluid velocity components along the \(x\) and \(y\) directions, respectively, which satisfy proper approximations to the balance equations. Similar shallow-depth approximation models for temperature-dependent lava flow advance are also proposed in Bernabeu et al. (2016).
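The structure of the depth-averaged mass balance \(\partial h/\partial t + \partial (h u_x)/\partial x + \partial (h u_y)/\partial y = 0\) can be illustrated in one dimension with a first-order upwind finite-volume update. In this sketch the depth-averaged velocity is prescribed and non-negative (an assumption; in the full model it comes from the momentum balance), and the names are hypothetical.

```python
import numpy as np


def advance_thickness(h, u, dx, dt, n_steps):
    """First-order upwind finite-volume update of dh/dt + d(h u)/dx = 0 for u >= 0."""
    assert np.all(u >= 0) and np.max(u) * dt / dx <= 1.0   # upwind direction and CFL limit
    h = h.copy()
    for _ in range(n_steps):
        flux = u * h                          # flux leaving each cell through its right face
        h[1:] -= dt / dx * (flux[1:] - flux[:-1])
        h[0] -= dt / dx * flux[0]             # no inflow through the left boundary
    return h
```

Writing the update in flux form guarantees that the total lava volume changes only through the domain boundaries; the two-dimensional model adds the analogous flux difference in the \(y\) direction.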

A different approach, presented in Carcano et al. (2013), consists of modeling a multiphase flow to describe the injection and dispersal of a hot and high-velocity gas/pyroclast mixture in the atmosphere: the gas phase is assumed to be composed of atmospheric air mixed with different chemical components leaving the crater, such as water vapor and carbon dioxide, whereas the pyroclasts are modeled as solid particles of given size, density, and thermal properties.

Cellular automata, a concept born in the field of artificial intelligence, are increasingly used in the literature for geological investigation. The research community has recently shown great interest in cellular automata models for lava flow prediction: in such models, the three-dimensional space is populated by “living” cells to which different parameters are associated, such as spatial coordinates, lava thickness and temperature, and fluxes to and from neighbor cells. A set of rules determining the temporal evolution of such cell parameters is prescribed starting from elementary physical principles, for example: the lava flow from one cell to another occurs by means of a hydrostatic pressure gradient, the altitude of the cells is increased as soon as the lava cools down and solidifies, and temperature varies through physical laws describing thermal radiation. One key example is the MAGFLOW cellular automaton model, which was employed to investigate the Etna volcano lava flows in 2001 (Vicari et al. 2007), 2004 (Del Negro et al. 2008), and 2006 (Herault et al. 2009). Although the validation and interpretation of these models remain key problems (Dewdney 2008), the sensitivity of the MAGFLOW model was analyzed in depth in Bilotta et al. (2012).
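A toy version of the hydrostatic flow rule can be written in a few lines: each cell sends lava to its four neighbors in proportion to the difference in total head (terrain elevation plus lava thickness), never sending more than it holds. This is a hypothetical minimal rule for illustration only; it omits the thermal, rheological, and solidification rules described above and is not the MAGFLOW update. The relaxation factor `alpha` is an assumed parameter.

```python
import numpy as np

SHIFTS = [(-1, 0), (1, 0), (0, -1), (0, 1)]   # von Neumann neighbourhood


def _shifted(a, di, dj, fill):
    """Return b with b[i, j] = a[i + di, j + dj], or `fill` where that index is outside the grid."""
    ny, nx = a.shape
    b = np.full_like(a, fill)
    b[max(-di, 0):ny + min(-di, 0), max(-dj, 0):nx + min(-dj, 0)] = \
        a[max(di, 0):ny + min(di, 0), max(dj, 0):nx + min(dj, 0)]
    return b


def ca_step(H, h, alpha=0.2):
    """One update of a minimal, mass-conserving lava-spreading automaton."""
    head = H + h                                    # terrain elevation plus lava thickness
    out = np.zeros((len(SHIFTS),) + h.shape)
    for k, (di, dj) in enumerate(SHIFTS):
        nb_head = _shifted(head, di, dj, np.inf)    # no flow across the grid boundary
        out[k] = alpha * np.maximum(head - nb_head, 0.0)
    total = out.sum(axis=0)
    # A cell cannot send away more lava than it holds.
    scale = np.where(total > h, h / np.where(total > 0, total, 1.0), 1.0)
    out *= scale
    h_new = h - out.sum(axis=0)
    for k, (di, dj) in enumerate(SHIFTS):
        h_new += _shifted(out[k], -di, -dj, 0.0)    # lava arrives in the neighbouring cell
    return h_new
```

Every unit of lava leaving a cell is added to a neighbor, so the total volume is conserved exactly; on an inclined plane the flow drifts downslope, while on flat ground a pile spreads radially.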

Given the wide variety of numerical methods available for lava flow prediction, assessing their validity is of the utmost importance: this issue has been addressed by proposing different benchmark cases, either analytical (as in Dietterich et al. 2017) or experimentally driven (as in Kavanagh et al. 2018), that can be used to discriminate the range of applications and the scenarios covered by each model.

5.4 Ground Subsidence

Physics-based models for subsidence available in the literature have been used to investigate the mechanical response of the ground, such as the distributions of displacement, stress, and strain: Yao et al. (1993) propose a mechanical FE analysis of surface subsidence arising from mining an inclined seam, with a comparison to empirical models. In Sayyaf et al. (2014) the land subsidence phenomenon is investigated following the theory of porous media, considering both a solid and a fluid phase: the equations solved have the same form as Eq. (2), where the total density \(\varrho \) is given by

\[ \varrho = n\,\rho_W + (1-n)\,\rho_S , \]

\(n\) being the ground porosity, and \(\rho _W\) and \(\rho _S\) the water and the solid density, respectively.
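As a purely illustrative check of this mixture rule, assume a porosity \(n = 0.3\), a water density \(\rho_W = 1000\ \mathrm{kg\,m^{-3}}\), and a grain density \(\rho_S = 2650\ \mathrm{kg\,m^{-3}}\) (hypothetical values, typical of quartz sand); the total density of the saturated medium is then

\[ \varrho = n\,\rho_W + (1-n)\,\rho_S = 0.3 \cdot 1000 + 0.7 \cdot 2650 = 300 + 1855 = 2155\ \mathrm{kg\,m^{-3}}. \]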

Coulthard (1999) presents applications of mathematical models in investigating phenomena induced by underground mining and tunneling, including subsidence, stresses generated when an open stope is filled or when two adjacent stopes are mined, interactions among different tunnels, and effects of under-mining a pre-existing tunnel. Such models are based on the analysis of nonlinear stresses, which can be used to assist the design of excavations and rock support mechanisms.

A model for subsidence prediction caused by extraction of hydrocarbons is discussed in Fokker and Orlic (2006). Such a semi-analytic model applies the viscoelastic equations to a multi-layered subsurface with physical parameters varying across the different layers. The numerical method discussed therein has few unknowns, so the computations turn out to be very fast and fill the gap between analytic single-layered and more elaborate FE models.

The differences between multi-seam and single-seam mining-induced subsidence profiles have been investigated in Ghabraie et al. (2017) by means of sand-plaster physical models, comparing different mining configurations. The proposed characterization of multi-seam subsidence is shown to be useful for enhancing subsidence prediction methods. Similar sand-plaster approaches were compared with FE models in Ghabraie et al. (2015) to study the subsidence mechanisms generated by the extraction of longwall panels. Marketos et al. (2015) focus on understanding the macroscopic mechanisms that lead to ground displacements induced by rock salt flow; to this end, an FE model is used in which the rock salt layer is represented by a viscoelastic Maxwell material. In Ferronato et al. (2008), a class of elastoplastic interface models at a regional scale is integrated into an FE geomechanical porous medium model in order to analyze the role of the stress variation induced by gas/oil production in land subsidence. An interesting hybrid continuum/discrete approach is proposed in Vyazmensky et al. (2007): the surface subsidence associated with block caving mining is studied by coupling a continuum FE model of the rock to a discrete network of evolving soil fractures. To reduce the computational effort required to simulate subsidence induced by mining under an open-pit final slope, a DE model is presented in Xu et al. (2016), in which the friction, contact, and gravity forces acting on a discrete set of jointed rock masses (rather than a continuum medium) are modeled.

Ground subsidence induced by natural events has also been analyzed. In Gambolati and Teatini (1998), the evolution of soil compaction in Venice, the Po River delta, and Ravenna was simulated through a one-dimensional FE model driven by groundwater flow and calibrated through experimental physical parameters. Land subsidence by aquifer compaction was studied in Shearer (1998) through an extension of MODFLOW (a popular FD package for groundwater flow released in 1983, see McDonald and Harbaugh 2003) and applied to a simulation of the port of Hangu in China. Another example of models for natural subsidence concerns the saltwater intrusion process in the phreatic aquifer and was applied to the Po River Plain in Giambastiani et al. (2007), where a numerical model enabled the quantification of density-dependent groundwater flow, hydraulic head, salinity distribution, seepage, and salt load fluxes to the surface water system.

5.5 Data Assimilation

In the context of natural hazard monitoring and forecasting, it is of paramount importance to exploit information coming both from the observation and sensing of the area under study and the prior knowledge about the phenomenon under consideration. Many works deal with the integration of Earth observation satellite data and known models of the dynamics of the specific natural hazard. This enables one to describe the dynamics of the phenomenon by resorting to physical models that characterize the laws governing its evolution or to numerical simulations that emulate its behavior, where the physical parameters involved are calibrated on experimental data that enhance the predictive properties of the model itself.

In the spirit of assimilating satellite data into physical laws, Moretto et al. (2017) analyze, in the context of slow-moving landslides, the integration of InSAR data with failure forecasting methods (FFMs) based on creep theory, which characterizes the velocity and acceleration of the slope. Elliott et al. (2016) review the role of satellite data in monitoring and forecasting active tectonics and earthquakes, and its use (Fig. 6) for modeling the strain distribution via elastic dislocation theory, which models fault slip in the Earth's crust.
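A classic FFM of this type is the inverse-velocity method, which follows from Voight's empirical relation dv/dt = A v^α between the acceleration and velocity of a creeping slope. For α = 2 the inverse velocity 1/v decreases linearly in time and vanishes at failure, so the failure time can be forecast by extrapolating a straight-line fit of 1/v to zero. The sketch assumes α = 2 and a synthetic, hypothetical velocity series; it is not necessarily the procedure of Moretto et al. (2017), and real InSAR series would additionally require filtering and the identification of the onset of acceleration.

```python
import numpy as np

def inverse_velocity_failure_time(t, v):
    """Forecast the failure time by linear extrapolation of the inverse velocity.

    Assumes the accelerating phase follows dv/dt = A * v**2 (Voight's relation with
    alpha = 2), for which 1/v decreases linearly in time and reaches zero at failure.
    """
    inv_v = 1.0 / np.asarray(v, dtype=float)
    slope, intercept = np.polyfit(t, inv_v, 1)     # least-squares line 1/v = slope*t + intercept
    if slope >= 0:
        raise ValueError("inverse velocity is not decreasing: no failure forecast")
    return -intercept / slope                       # time at which the fitted line crosses zero

# Synthetic, hypothetical slope: exact solution v(t) = v0 / (1 - A v0 t) of dv/dt = A v^2.
A, v0 = 0.02, 0.5                  # A [1/mm], v0 [mm/day]
t_fail_true = 1.0 / (A * v0)       # 100 days
t = np.arange(0.0, 80.0, 6.0)      # one acquisition every 6 days, ending before failure
v = v0 / (1.0 - A * v0 * t)
rng = np.random.default_rng(1)
v_noisy = v * (1.0 + rng.normal(0.0, 0.05, t.size))   # 5 % multiplicative noise

t_fail_est = inverse_velocity_failure_time(t, v_noisy)
print(f"true failure time: {t_fail_true:.1f} days, forecast: {t_fail_est:.1f} days")
```

Because the forecast is an extrapolation, its uncertainty grows with the distance between the last acquisition and the predicted failure time, which is one reason frequent revisit times matter for operational use.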

Fig. 6 Use of satellite data for observing and interpreting earthquake faulting and deformation. Reprinted from Elliott et al. (2016)
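The simplest instance of elastic dislocation theory applied to geodetic data is the two-dimensional screw-dislocation model of interseismic deformation across a long strike-slip fault: the fault is locked down to a depth D and slips at a rate s below it, giving a fault-parallel surface velocity v(x) = (s/π) arctan(x/D) at distance x from the fault. The sketch below assumes this model and a synthetic, hypothetical velocity profile, and recovers s and D by a grid search over D with a linear least-squares estimate of s at each depth. Studies of the kind reviewed by Elliott et al. (2016) often rely on more complete three-dimensional dislocation models.

```python
import numpy as np

def screw_dislocation_velocity(x, slip_rate, locking_depth):
    """Fault-parallel surface velocity of a 2D screw dislocation in an elastic half-space.

    The fault is locked from the surface down to locking_depth and slips at slip_rate below it.
    """
    return slip_rate / np.pi * np.arctan(x / locking_depth)

def fit_screw_dislocation(x, v_obs, depths):
    """Grid search over locking depth; for each depth the slip rate enters linearly
    and is solved by least squares. Returns (slip_rate, locking_depth, rms)."""
    best = None
    for D in depths:
        g = np.arctan(x / D) / np.pi                 # design vector for the slip rate
        s = np.dot(g, v_obs) / np.dot(g, g)          # one-parameter least squares
        rms = np.sqrt(np.mean((v_obs - s * g) ** 2))
        if best is None or rms < best[2]:
            best = (s, D, rms)
    return best

# Synthetic, hypothetical profile: 30 mm/yr slip rate, 15 km locking depth, 1 mm/yr noise.
rng = np.random.default_rng(2)
x = np.linspace(-100.0, 100.0, 81)                   # distance from the fault [km]
v_obs = screw_dislocation_velocity(x, 30.0, 15.0) + rng.normal(0.0, 1.0, x.size)

s_est, D_est, rms = fit_screw_dislocation(x, v_obs, depths=np.arange(1.0, 40.5, 0.5))
print(f"slip rate ~ {s_est:.1f} mm/yr, locking depth ~ {D_est:.1f} km, rms {rms:.2f} mm/yr")
```

Separating the nonlinear parameter (D) from the linear one (s) keeps the search one-dimensional; the same idea is commonly used when fitting fault geometry and slip to InSAR data.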

Segall (2013) investigates the use of satellite data in the study of volcanic deformation with the aim of forecasting eruptions, and underlines the importance of including the underlying physicochemical processes in the analysis by means of deterministic models in model-based forecasts. Other examples of the integration of satellite data into numerical models are the following. Wright and Flynn (2003) compare numerical model results based on the dual-band method with data from a hyperspectral imaging system that provides thermal measurements of the lava surface, in order to retrieve the end-member thermal components of the lava, which determine its temperature distribution. Woo et al. (2012) integrate InSAR data with three-dimensional numerical models of mining-induced ground deformation, using satellite ground motion data to calibrate a predictive numerical model for the assessment of ground subsidence. Rutqvist et al. (2015) present a numerical analysis of an enhanced geothermal system aimed at monitoring and guiding cold-water injection strategies, in which InSAR ground-surface deformation data are used to calibrate the model parameters that characterize the hydraulic and mechanical properties of the reservoir, such as the bulk modulus.
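The dual-band method models the radiance of a lava pixel in each of two short-wave infrared bands as a mixture of a hot component at temperature T_h covering a fraction f of the pixel and a cooler crust at T_c: L_i = f B(λ_i, T_h) + (1 − f) B(λ_i, T_c), where B is the Planck function. Assuming T_c is known, the two equations can be solved for f and T_h. The sketch below does this by bisection on T_h; the band wavelengths, temperatures, and bracketing interval are illustrative assumptions, and atmospheric and emissivity corrections are ignored, so it is not the processing chain of Wright and Flynn (2003).

```python
import numpy as np

H = 6.62607015e-34     # Planck constant [J s]
C = 2.99792458e8       # speed of light [m/s]
KB = 1.380649e-23      # Boltzmann constant [J/K]

def planck(wavelength, T):
    """Black-body spectral radiance B(lambda, T) [W m^-2 sr^-1 m^-1]."""
    return 2 * H * C**2 / wavelength**5 / np.expm1(H * C / (wavelength * KB * T))

def dual_band(L1, L2, lam1, lam2, T_cold, T_max=2000.0, n_iter=100):
    """Solve L_i = f*B(lam_i, T_hot) + (1 - f)*B(lam_i, T_cold) for (f, T_hot).

    For a trial T_hot, band 1 gives f in closed form; bisection on T_hot then
    drives the band-2 residual to zero. Assumes T_cold is known and that the
    residual changes sign on (T_cold, T_max].
    """
    def f_of(T_hot):
        return (L1 - planck(lam1, T_cold)) / (planck(lam1, T_hot) - planck(lam1, T_cold))

    def residual(T_hot):
        f = f_of(T_hot)
        return f * planck(lam2, T_hot) + (1 - f) * planck(lam2, T_cold) - L2

    lo, hi = T_cold + 1.0, T_max
    if residual(lo) * residual(hi) > 0:
        raise ValueError("no sign change: adjust T_cold or T_max")
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if residual(lo) * residual(mid) <= 0:
            hi = mid
        else:
            lo = mid
    T_hot = 0.5 * (lo + hi)
    return f_of(T_hot), T_hot

# Synthetic, hypothetical pixel: 2 % of the area at 1100 K, the rest at 500 K.
lam1, lam2 = 1.65e-6, 2.2e-6            # short-wave infrared band centers [m]
f_true, T_hot_true, T_cold = 0.02, 1100.0, 500.0
L1 = f_true * planck(lam1, T_hot_true) + (1 - f_true) * planck(lam1, T_cold)
L2 = f_true * planck(lam2, T_hot_true) + (1 - f_true) * planck(lam2, T_cold)

f_est, T_est = dual_band(L1, L2, lam1, lam2, T_cold)
print(f"hot fraction {f_est:.4f}, hot temperature {T_est:.1f} K")
```

Two bands allow only two unknowns, which is why one end-member temperature must be fixed a priori; hyperspectral data, as in the study cited above, provide more bands to constrain the thermal components.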

6 Conclusions

This review shows the importance of Earth observation satellite data in monitoring and forecasting natural hazards. We focus on the analysis of InSAR measurements of ground displacement, which enables the study of many natural geohazards such as landslides, earthquakes, volcanic activity, and ground subsidence. Moreover, we describe recent advances in numerical and computational models and methods for these applications.

A promising field of research concerns techniques for the analysis of time series of ground deformation, described in Sect. 4.1. With these methods, InSAR data are used not only for monitoring or a posteriori assessment but also for forecasting natural disasters. These techniques thus pave the way for the operational use of InSAR technology by civil protection authorities and for a concrete impact of the results of these analyses on society.

This direction of research raises new challenges. Delivering timely results requires automated and fast data processing methodologies, while at the same time ensuring that decisions rest on solid and reliable analyses that exploit and integrate statistical methodologies and physics-based models.

References

Ansari H, De Zan F, Bamler R (2018) Distributed scatterer interferometry tailored to the analysis of big InSAR data. In: 12th European conference on synthetic aperture radar EUSAR 2018, VDE, pp 1–5

Antonietti PF, Ferroni A, Mazzieri I, Paolucci R, Quarteroni A, Smerzini C, Stupazzini M (2018) Numerical modeling of seismic waves by discontinuous spectral element methods. ESAIM Proc Surv 61:1–37

Bally P (2014) Satellite Earth observation for geohazard risk management: the Santorini conference, Santorini, Greece, 21–23 May 2012. European Space Agency

Balmforth N, Burbidge A, Craster R (2001) Shallow lava theory. In: Balmforth N, Provenzale A (eds) Geomorphological fluid mechanics. Lecture Notes in Physics, vol 582. Springer, pp 164–187

Bechor NB, Zebker HA (2006) Measuring two-dimensional movements using a single InSAR pair. Geophys Res Lett 33(16)

Berardino P, Fornaro G, Lanari R, Sansosti E (2002) A new algorithm for surface deformation monitoring based on small baseline differential SAR interferograms. IEEE Trans Geosci Remote Sens 40(11):2375–2383

Bernabeu N, Saramito P, Smutek C (2016) Modelling lava flow advance using a shallow-depth approximation for three-dimensional cooling of viscoplastic flows. Geol Soc Lond Spec Publ 426(1):409–423

Berti M, Corsini A, Franceschini S, Iannacone J (2013) Automated classification of Persistent Scatterers Interferometry time series. Natl Hazards Earth Syst Sci 13(8):1945–1958

Bianchini S, Raspini F, Solari L, Del Soldato M, Ciampalini A, Rosi A, Casagli N (2018) From picture to movie: twenty years of ground deformation recording over Tuscany region (Italy) with satellite InSAR. Front Earth Sci 6:177

Bilotta G, Cappello A, Hérault A, Vicari A, Russo G, Del Negro C (2012) Sensitivity analysis of the MAGFLOW Cellular Automaton model for lava flow simulation. Environ Model Softw 35:122–131

Bovenga F, Wasowski J, Nitti D, Nutricato R, Chiaradia M (2012) Using COSMO/SkyMed X-band and ENVISAT C-band SAR interferometry for landslides analysis. Remote Sens Environ 119:272–285

Brenning A, Dubois G (2008) Towards generic real-time mapping algorithms for environmental monitoring and emergency detection. Stochastic Environ Res Risk Assess 22(5):601–611

Breuer A, Heinecke A, Rettenberger S, Bader M, Gabriel AA, Pelties C (2014) Sustained petascale performance of seismic simulations with SeisSol on SuperMUC. In: International supercomputing conference, Springer, pp 1–18

Carcano S, Bonaventura L, Esposti Ongaro T, Neri A (2013) A semi-implicit, second-order-accurate numerical model for multiphase underexpanded volcanic jets. Geosci Model Dev 6(6):1905–1924

Carnec C, Fabriol H (1999) Monitoring and modeling land subsidence at the Cerro Prieto geothermal field, Baja California, Mexico, using SAR interferometry. Geophys Res Lett 26(9):1211–1214

Cascini L, Fornaro G, Peduto D (2009) Analysis at medium scale of low-resolution DInSAR data in slow-moving landslide-affected areas. ISPRS J Photogram Remote Sens 64(6):598–611

Casu F, Manconi A (2016) Four-dimensional surface evolution of active rifting from spaceborne SAR data. Geosphere 12(3):697–705

Casu F, Manconi A, Pepe A, Lanari R (2011) Deformation time-series generation in areas characterized by large displacement dynamics: the SAR amplitude pixel-offset SBAS technique. IEEE Trans Geosci Remote Sens 49(7):2752–2763

Casu F, Manzo M, Lanari R (2006) A quantitative assessment of the SBAS algorithm performance for surface deformation retrieval from DInSAR data. Remote Sens Environ 102(3–4):195–210

Chang L, Hanssen RF (2015) A probabilistic approach for InSAR time-series postprocessing. IEEE Trans Geosci Remote Sens 54(1):421–430

Chaussard E, Amelung F, Aoki Y (2013) Characterization of open and closed volcanic systems in Indonesia and Mexico using InSAR time series. J Geophys Res Solid Earth 118(8):3957–3969

Chen Y, Zhang G, Ding X, Li Z (2000) Monitoring earth surface deformations with InSAR technology: principles and some critical issues. J Geospatial Eng 2(1):3–22

Cigna F, Del Ventisette C, Liguori V, Casagli N, Lasaponara R, Vlcko J, Meisina C (2011) Advanced radar-interpretation of InSAR time series for mapping and characterization of geological processes. Natl Hazards Earth Syst Sci 11(3)

Cigna F, Sowter A (2017) The relationship between intermittent coherence and precision of ISBAS InSAR ground motion velocities: ERS-1/2 case studies in the UK. Remote Sens Environ 202:177–198

Cigna F, Tapete D, Casagli N (2012) Semi-automated extraction of Deviation Indexes (DI) from satellite Persistent Scatterers time series: tests on sedimentary volcanism and tectonically-induced motions. Nonlinear Process Geophys 19(6):643–655

Cohen-Waeber J, Bürgmann R, Chaussard E, Giannico C, Ferretti A (2018) Spatiotemporal patterns of precipitation-modulated landslide deformation from independent component analysis of InSAR time series. Geophys Res Lett 45(4):1878–1887

Colesanti C, Ferretti A, Prati C, Rocca F (2003) Monitoring landslides and tectonic motions with the Permanent Scatterers Technique. Eng Geol 68(1–2):3–14

Costa A, Macedonio G (2005a) Computational modeling of lava flows: a review. Spec Pap Geol Soc Am 396:209

Costa A, Macedonio G (2005b) Numerical simulation of lava flows based on depth-averaged equations. Geophys Res Lett 32(5)

Costantini M, Ferretti A, Minati F, Falco S, Trillo F, Colombo D, Novali F, Malvarosa F, Mammone C, Vecchioli F et al (2017) Analysis of surface deformations over the whole Italian territory by interferometric processing of ERS, Envisat and COSMO-SkyMed radar data. Remote Sens Environ 202:250–275

Coulthard M (1999) Applications of numerical modelling in underground mining and construction. Geotechn Geol Eng 17(3):373–385

Cremonesi M, Ferri F, Perego U (2017) A basal slip model for Lagrangian finite element simulations of 3D landslides. Int J Numer Anal Methods Geomech 41(1):30–53

Crosetto M, Monserrat O, Cuevas-González M, Devanthéry N, Crippa B (2016) Persistent scatterer interferometry: a review. ISPRS J Photogram Remote Sens 115:78–89

Crosetto M, Monserrat O, Iglesias R, Crippa B (2010) Persistent scatterer interferometry. Photogram Eng Remote Sens 76(9):1061–1069

Crosta G, Imposimato S, Roddeman D (2003) Numerical modelling of large landslides stability and runout. Natl Hazards Earth Syst Sci 3(6):523–538

Crosta G, Imposimato S, Roddeman D (2009) Numerical modelling of entrainment/deposition in rock and debris-avalanches. Eng Geol 109(1–2):135–145

De Novellis V, Atzori S, De Luca C, Manzo M, Valerio E, Bonano M, Cardaci C, Castaldo R, Di Bucci D, Manunta M et al (2019) DInSAR analysis and analytical modelling of Mt. Etna displacements: the December 2018 volcano-tectonic crisis. Geophys Res Lett

Del Negro C, Fortuna L, Herault A, Vicari A (2008) Simulations of the 2004 lava flow at Etna volcano using the MAGFLOW cellular automata model. Bull Volcanol 70(7):805–812

Del Soldato M, Farolfi G, Rosi A, Raspini F, Casagli N (2018a) Subsidence evolution of the Firenze-Prato-Pistoia plain (Central Italy) combining PSI and GNSS data. Remote Sens 10(7):1146

Del Soldato M, Riquelme A, Bianchini S, Tomàs R, Di Martire D, De Vita P, Moretti S, Calcaterra D (2018b) Multisource data integration to investigate one century of evolution for the Agnone landslide (Molise, southern Italy). Landslides 15(11):2113–2128

Dewdney A (2008) Cellular automata. In: Jørgensen SE, Fath BD (eds) Encyclopedia of ecology. Academic Press, Oxford, pp 541–550

Dietterich HR, Lev E, Chen J, Richardson JA, Cashman KV (2017) Benchmarking computational fluid dynamics models of lava flow simulation for hazard assessment, forecasting, and risk management. J Appl Volcanol 6(1):9

Eckerstorfer M, Bühler Y, Frauenfelder R, Malnes E (2016) Remote sensing of snow avalanches: recent advances, potential, and limitations. Cold Regions Sci Technol 121:126–140

Eckerstorfer M, Malnes E (2015) Manual detection of snow avalanche debris using high-resolution Radarsat-2 SAR images. Cold Regions Sci Technol 120:205–218

Eddelbuettel D (2013) Seamless R and C++ integration with Rcpp. Springer

Elliott J, Walters R, Wright T (2016) The role of space-based observation in understanding and responding to active tectonics and earthquakes. Nat Commun 7:13844

Falabella F, Serio C, Zeni G, Pepe A (2020) On the use of weighted least-squares approaches for differential interferometric SAR analyses: the weighted adaptive variable-length (WAVE) technique. Sensors 20(4):1103

Favalli M, Pareschi MT, Neri A, Isola I (2005) Forecasting lava flow paths by a stochastic approach. Geophys Res Lett 32(3)

Ferretti A, Fumagalli A, Novali F, Prati C, Rocca F, Rucci A (2011) A new algorithm for processing interferometric data-stacks: SqueeSAR. IEEE Trans Geosci Remote Sens 49(9):3460–3470

Ferretti A, Prati C, Rocca F (2001) Permanent scatterers in SAR interferometry. IEEE Trans Geosci Remote Sens 39(1):8–20

Ferronato M, Gambolati G, Janna C, Teatini P (2008) Numerical modelling of regional faults in land subsidence prediction above gas/oil reservoirs. Int J Numer Anal Methods Geomech 32(6):633–657

Fialko Y, Sandwell D, Simons M, Rosen P (2005) Three-dimensional deformation caused by the Bam, Iran, earthquake and the origin of shallow slip deficit. Nature 435(7040):295–299

Fialko Y, Simons M, Agnew D (2001) The complete (3-D) surface displacement field in the epicentral area of the 1999 Mw 7.1 Hector Mine earthquake, California, from space geodetic observations. Geophys Res Lett 28(16):3063–3066

Fielding EJ, Blom RG, Goldstein RM (1998) Rapid subsidence over oil fields measured by SAR interferometry. Geophys Res Lett 25(17):3215–3218

Fokker PA, Orlic B (2006) Semi-analytic modelling of subsidence. Math Geol 38(5):565–589

Fornaro G, Verde S, Reale D, Pauciullo A (2014) CAESAR: an approach based on covariance matrix decomposition to improve multibaseline-multitemporal interferometric SAR processing. IEEE Trans Geosci Remote Sens 53(4):2050–2065

Gambolati G, Teatini P (1998) Numerical analysis of land subsidence due to natural compaction of the Upper Adriatic Sea basin. In: CENAS. Springer, pp 103–131

Ghabraie B, Ren G, Smith JV (2017) Characterising the multi-seam subsidence due to varying mining configuration, insights from physical modelling. Int J Rock Mech Min Sci 93:269–279

Ghabraie B, Ren G, Zhang X, Smith J (2015) Physical modelling of subsidence from sequential extraction of partially overlapping longwall panels and study of substrata movement characteristics. Int J Coal Geol 140:71–83

Giambastiani BM, Antonellini M, Essink GHO, Stuurman RJ (2007) Saltwater intrusion in the unconfined coastal aquifer of Ravenna (Italy): a numerical model. J Hydrol 340(1):91–104