Abstract

Let \( (G_n)_{n=0}^{\infty } \) be a polynomial power sum, i.e. a simple linear recurrence sequence of complex polynomials with power sum representation \( G_n = f_1\alpha _1^n + \cdots + f_k\alpha _k^n \) and polynomial characteristic roots \( \alpha _1,\ldots ,\alpha _k \). For a fixed polynomial p, we consider sets \( \left\{ a,b,c \right\} \) consisting of three non-zero polynomials such that \( ab+p, ac+p, bc+p \) are elements of \( (G_n)_{n=0}^{\infty } \). We will prove that under a suitable dominant root condition there are only finitely many such sets if neither \( f_1 \) nor \( f_1 \alpha _1 \) is a perfect square.

1 Introduction

A Diophantine n-tuple is a set \( \left\{ a_1,\ldots ,a_n \right\} \) consisting of n positive integers with the property that \( a_i a_j + 1 \) is a perfect square for all \( 1 \le i < j \le n \). More generally, for a given integer m, a D(m)-n-tuple is a set \( \left\{ a_1,\ldots ,a_n \right\} \) consisting of n positive integers with the property that \( a_i a_j + m \) is a perfect square for all \( 1 \le i<j \le n \). For \( m = 1 \) we recover the Diophantine n-tuples defined above. The study of Diophantine tuples dates back to antiquity and is by now well established. Early contributions are due to Diophantus of Alexandria, Fermat and Euler, and many others have since contributed to this beautiful subject. The original result of Diophantus is that \( \left\{ 1,33,68,105 \right\} \) is a D(256)-quadruple. The first D(1)-quadruple, the set \( \left\{ 1,3,8,120 \right\} \), is due to Fermat. The usual questions are for which n Diophantine n-tuples exist at all and, if they do, whether there are finitely or infinitely many of them. It was proved recently (cf. [19]) that \( n \le 4 \) for a D(1)-n-tuple, and this bound is sharp; by Euler, there are even infinitely many Diophantine quadruples. For arbitrary m the situation is much less clear. Dujella proved in [5, 6] an upper bound for n depending (logarithmically) on m. More precise results are only available for special values of m, e.g. for D(4)-tuples (cf. [1]) or \( D(-1) \)-tuples (cf. [2]).
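The two classical examples quoted above can be checked directly. The following minimal sketch (the function names `is_perfect_square` and `is_dm_tuple` are ours, chosen for illustration) tests whether every pairwise product plus m is a perfect square, using only the Python standard library.

```python
from itertools import combinations
from math import isqrt


def is_perfect_square(n: int) -> bool:
    """Return True if n is the square of a non-negative integer."""
    return n >= 0 and isqrt(n) ** 2 == n


def is_dm_tuple(elements: list[int], m: int) -> bool:
    """Check the D(m) property: a_i * a_j + m is a perfect square for all i < j."""
    return all(is_perfect_square(a * b + m) for a, b in combinations(elements, 2))


# Fermat's D(1)-quadruple and Diophantus' D(256)-quadruple from the text
print(is_dm_tuple([1, 3, 8, 120], 1))      # True
print(is_dm_tuple([1, 33, 68, 105], 256))  # True
```

For instance, \( 8 \cdot 120 + 1 = 961 = 31^2 \) and \( 68 \cdot 105 + 256 = 7396 = 86^2 \).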

One can consider many other variants of Diophantine tuples, e.g. by considering algebraic integers or polynomials instead of integers, or higher or perfect powers instead of squares. For a survey of Diophantine tuples and their variants we refer to [4].

In what follows we consider complex polynomials. Let \( p \in \mathbb {C}[X] \) be given. A D(p)-n-tuple in \( \mathbb {C}[X] \) is a set \( \left\{ a_1,\ldots ,a_n \right\} \) consisting of n non-zero complex polynomials such that \( a_i a_j + p \) is a perfect square for all \( 1 \le i<j \le n \) and such that there is no polynomial \( q \in \mathbb {C}[X] \) for which \( p/q^2\), \(a_1/q\), ..., \(a_n/q \) are all constant polynomials. Again, one is interested in the same questions as above. In [7] it is proven that \( n \le 10 \) for a D(1)-n-tuple in \( \mathbb {C}[X] \); this bound was reduced to 7 in [10]. It is not clear what the true upper bound should be. For arbitrary p no upper bound is known so far. The situation changes if one considers, e.g., linear polynomials p (see [11]) or integer polynomials (cf. for example [5, 8, 9, 20]).
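As a small illustration of the polynomial setting (our own example, not taken from the cited works), the set \( \left\{ X, X+2, 4X+4 \right\} \) is a D(1)-triple in \( \mathbb {C}[X] \), since \( X(X+2)+1 = (X+1)^2 \), \( X(4X+4)+1 = (2X+1)^2 \) and \( (X+2)(4X+4)+1 = (2X+3)^2 \). The sketch below verifies these identities with polynomials stored as integer coefficient lists (lowest degree first). It checks a supplied square-root certificate rather than computing square roots; all helper names are hypothetical.

```python
from itertools import combinations

Poly = list[int]  # coefficients, lowest degree first: [c0, c1, ...] = c0 + c1*X + ...


def trim(f: Poly) -> Poly:
    """Drop trailing zero coefficients (the zero polynomial becomes [])."""
    f = list(f)
    while f and f[-1] == 0:
        f.pop()
    return f


def add(f: Poly, g: Poly) -> Poly:
    n = max(len(f), len(g))
    return trim([(f[i] if i < len(f) else 0) + (g[i] if i < len(g) else 0) for i in range(n)])


def mul(f: Poly, g: Poly) -> Poly:
    if not f or not g:
        return []
    out = [0] * (len(f) + len(g) - 1)
    for i, x in enumerate(f):
        for j, y in enumerate(g):
            out[i + j] += x * y
    return trim(out)


def check_dp_tuple(elements: list[Poly], p: Poly, roots: dict) -> bool:
    """Check a_i * a_j + p == r_ij ** 2 for the supplied square roots r_ij."""
    for i, j in combinations(range(len(elements)), 2):
        lhs = add(mul(elements[i], elements[j]), p)
        r = roots[(i, j)]
        if lhs != mul(r, r):
            return False
    return True


a, b, c = [0, 1], [2, 1], [4, 4]                         # X, X + 2, 4X + 4
roots = {(0, 1): [1, 1], (0, 2): [1, 2], (1, 2): [3, 2]}  # X + 1, 2X + 1, 2X + 3
print(check_dp_tuple([a, b, c], [1], roots))  # True
```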

Since the sequence of squares is itself a linear recurrence sequence, one can ask the questions about the existence of Diophantine tuples and the finiteness of their number not only for squares but also when \( a_i a_j + 1 \) is required to take values in an arbitrary fixed linear recurrence sequence. This situation was first considered in [18]. For instance, in [15], which builds on [14] and [16], the authors consider sets \( \left\{ a,b,c \right\} \) of positive integers satisfying \( 1< a< b < c \) such that \( ab+1, ac+1, bc+1 \) are values of a linear recurrence sequence of Pisot type with Binet formula \( G_n=f_1\alpha _1^n + \cdots + f_k\alpha _k^n \). They give three independent conditions under which there are only finitely many such sets. One of these conditions requires that neither \( f_1 \) nor \( f_1 \alpha _1 \) is a perfect square; we use this condition in our statement, too. To give an idea of the origin of the conditions in [15] (in particular of the one we also use), we very roughly sketch the argument. One assumes, to the contrary, that there are infinitely many sets \( \left\{ a,b,c \right\} \) such that \( ab+1 = G_x, ac+1 = G_y, bc+1 = G_z \). It follows that \( \min \left( x,y,z \right) \) tends to infinity while x, y, z are all of the same size. On the other hand, the multi-recurrence \( ((G_y-1)(G_z-1)/(G_x-1)) \) takes infinitely many square values, namely \( c^2 \). Using an approach based on the Subspace theorem, this is only possible if a functional identity in x, y, z holds (compare e.g. with Theorem 2 in [3]). Comparing “leading terms” gives a contradiction unless one of the assumptions is satisfied. If neither \( f_1 \) nor \( f_1 \alpha _1 \), which are the possible leading coefficients of the above multi-recurrence, is a perfect square, we get a contradiction at once (see again Theorem 2 in [3]).
For large k, the irreducibility of the characteristic polynomial gives enough freedom in the functional identity for other arguments to work. As we will see below, this last argument cannot be transferred to our situation.

In the present paper we consider a function field variant of Diophantine tuples with values in recurrences. Namely, we study sets \( \left\{ a,b,c \right\} \) consisting of three non-zero complex polynomials with the property that \( ab+1, ac+1, bc+1 \) are elements of a given linear recurrence sequence of polynomials. We prove that, under some conditions on the recurrence sequence, there are only finitely many such sets. In fact, we prove slightly more: the same result holds if 1 is replaced by an arbitrary fixed polynomial p. We mention that the result remains true if \( \mathbb {C}\) is replaced by an arbitrary algebraically closed field of characteristic 0.

2 Results

For basic concepts and notions related to linear recurrences we refer to [24]. We call \( (G_n)_{n=0}^{\infty } \) a polynomial power sum if it is a simple linear recurrence sequence of complex polynomials with power sum representation

\[ G_n = f_1\alpha _1^n + \cdots + f_k\alpha _k^n \tag{2.1} \]

such that \( \alpha _1, \ldots , \alpha _k \in \mathbb {C}[X] \) are polynomials and \( f_1,\ldots ,f_k \in \mathbb {C}(X) \). Our main result is the following:

Theorem 2.1

Let \( (G_n)_{n=0}^{\infty } \) be a polynomial power sum given as in (2.1). Assume either that the order of this sequence is \( k \ge 3 \) and the dominant root condition \( \deg \alpha _1> \deg \alpha _2 > \deg \alpha _3 \ge \deg \alpha _4 \ge \cdots \ge \deg \alpha _k \) is fulfilled, or that the order is \( k = 2 \) and we have \( \deg \alpha _1> \deg \alpha _2 > 0 \). Moreover, let \( p \in \mathbb {C}[X] \) be a given polynomial. If neither \( f_1 \) nor \( f_1 \alpha _1 \) is a square in \( \mathbb {C}(X) \), then there are only finitely many sets \( \left\{ a,b,c \right\} \) consisting of three non-zero polynomials such that \( ab+p\), \(ac+p\), \(bc+p \) are all elements of \( (G_n)_{n=0}^{\infty } \).

Note that we do not require the linear recurrence sequence to be non-degenerate, i.e. there may be two indices i and j such that \( \alpha _i \) and \( \alpha _j \) differ only by a constant factor. The only non-degeneracy properties we need are those given by the dominant root condition.

Moreover, the dominant root condition cannot be dropped in this situation. Without it, the statement of the theorem no longer holds, as the following example illustrates. Let \( A_n\), \( B_n\), \( C_n \) be three linear recurrence sequences of polynomials. Then the products \( A_nB_n\), \(A_nC_n\), \(B_nC_n \) are also linear recurrence sequences of polynomials. Let \( A_nB_n = s_1 \sigma _1^n + \cdots + s_k \sigma _k^n \) be the Binet representation. Consider now a new linear recurrence sequence \( D_n \) obtained from \( A_nB_n \) by the following procedure: replace each summand \( s_i \sigma _i^n \) by the term

\[ \frac{s_i}{3}\, \sigma _i^n + \frac{s_i}{3}\, (\zeta _3 \sigma _i)^n + \frac{s_i}{3}\, (\zeta _3^2 \sigma _i)^n = s_i \sigma _i^n \cdot \frac{1 + \zeta _3^n + \zeta _3^{2n}}{3}, \]

where \( \zeta _3 \) is a primitive third root of unity. For indices of the form 3u this new sequence \( D_n \) takes the same values as \( A_nB_n \), and it is zero otherwise. In other words \( D_{3u} = A_{3u}B_{3u} \) and \( D_{3u+1} = 0 = D_{3u+2} \). In the same manner we construct linear recurrence sequences \( E_n \) and \( F_n \) such that \( E_{3u+1} = A_{3u}C_{3u} \) and \( E_{3u} = 0 = E_{3u+2} \) as well as \( F_{3u+2} = B_{3u}C_{3u} \) and \( F_{3u} = 0 = F_{3u+1} \). Last but not least we define the sequence \( G_n := D_n + E_n + F_n + 1 \). Thus we have

\[ G_{3u} = A_{3u}B_{3u} + 1, \qquad G_{3u+1} = A_{3u}C_{3u} + 1, \qquad G_{3u+2} = B_{3u}C_{3u} + 1. \]

First note that \( G_n \) has no dominant root, since by the construction above each characteristic root (possibly except 1) comes with two others which differ from it only by the factors \( \zeta _3 \) and \( \zeta _3^2 \), respectively, and therefore have the same degree. If we choose the simple sequences \( A_n,B_n,C_n \) in such a way that all characteristic roots are squares in \( \mathbb {C}[X] \), then all characteristic roots of \( G_n \) are squares in \( \mathbb {C}[X] \) as well. Thus, writing \( G_n \) in the form (2.1), \( f_1 \) is a square in \( \mathbb {C}(X) \) if and only if \( f_1 \alpha _1 \) is a square in \( \mathbb {C}(X) \). Moreover, choose \( A_n,B_n,C_n \) such that all occurring characteristic roots are pairwise distinct, non-constant and pairwise without common zero. Furthermore, let all coefficients in the Binet formulas of \( A_n,B_n,C_n \) be non-constant polynomials without multiple zeros, and assume that these coefficients are pairwise distinct and pairwise without common zero. Additionally, no complex number \( z \in \mathbb {C}\) should be a zero of both a characteristic root and a coefficient. By this construction, no coefficient of a non-constant characteristic root of \( G_n \) is a square in \( \mathbb {C}(X) \). Nevertheless, there are obviously infinitely many sets \( \left\{ a,b,c \right\} \) such that \( ab+1, ac+1, bc+1 \) are all elements of \( (G_n)_{n=0}^{\infty } \): taking \( a = A_{3u} \), \( b = B_{3u} \), \( c = C_{3u} \) gives \( ab+1 = G_{3u} \), \( ac+1 = G_{3u+1} \) and \( bc+1 = G_{3u+2} \). For instance, one can choose

to get an explicit example of the above described generic construction.
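The construction rests on the roots-of-unity filter \( (1+\zeta _3^{m}+\zeta _3^{2m})/3 \), which equals 1 if 3 divides m and 0 otherwise. The numerical sketch below illustrates it under simplifying assumptions of ours: polynomials are replaced by complex numbers (think of evaluating at a fixed point), and \( A_n = 2^n \), \( B_n = 3^n \), \( C_n = 5^n \) are hypothetical single-term power sums. The helper `filtered_power_sum` builds a power sum with characteristic roots \( \sigma , \zeta _3\sigma , \zeta _3^2\sigma \) whose value is \( s\sigma ^{n-r} \) for \( n \equiv r \pmod 3 \) and 0 otherwise; this gives \( D_n, E_n, F_n \) with shifts r = 0, 1, 2.

```python
import cmath

ZETA3 = cmath.exp(2j * cmath.pi / 3)  # primitive third root of unity


def filtered_power_sum(s: complex, sigma: complex, r: int, n: int) -> complex:
    """Evaluate sum_{j=0}^{2} s / (3 * sigma**r * ZETA3**(j*r)) * (ZETA3**j * sigma)**n.

    This equals s * sigma**(n - r) if n = r (mod 3) and 0 otherwise
    (up to floating-point rounding).
    """
    total = 0j
    for j in range(3):
        root = ZETA3**j * sigma                          # characteristic root
        coeff = s / (3 * sigma**r * ZETA3 ** (j * r))    # its coefficient
        total += coeff * root**n
    return total


def G(n: int, alpha: complex = 2, beta: complex = 3, gamma: complex = 5) -> complex:
    """Toy instance with A_n = alpha**n, B_n = beta**n, C_n = gamma**n."""
    d = filtered_power_sum(1, alpha * beta, 0, n)   # D_n
    e = filtered_power_sum(1, alpha * gamma, 1, n)  # E_n
    f = filtered_power_sum(1, beta * gamma, 2, n)   # F_n
    return d + e + f + 1


for u in range(4):
    A, B, C = 2 ** (3 * u), 3 ** (3 * u), 5 ** (3 * u)
    assert cmath.isclose(G(3 * u), A * B + 1, rel_tol=1e-9)
    assert cmath.isclose(G(3 * u + 1), A * C + 1, rel_tol=1e-9)
    assert cmath.isclose(G(3 * u + 2), B * C + 1, rel_tol=1e-9)
print("ok")  # prints "ok"
```

Each of \( D_n, E_n, F_n \) has three characteristic roots of equal absolute value (equal degree in the polynomial case), which is exactly why \( G_n \) has no dominant root.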

We remark that our preliminary assumptions are in a sense the opposite of those in [15]: there the characteristic polynomial is irreducible, whereas in our case it splits into linear factors over the ground field. Concerning the conclusion, Theorem 2.1 can be seen as a function field analogue of Theorem 2 in [15]. We are unable to prove the result under the condition of Theorem 3 in [15], i.e. for sufficiently large k.

Our result is ineffective in the sense that our method of proof neither produces an upper bound for the number of solutions nor gives a method to actually find them.

Finally, we mention that we follow the method of proof and the arguments of [15] very closely. The application of the Subspace theorem is replaced by an application of Proposition 3.2 below. One difference is that we work directly with the identity \( (G_x-p)(G_y-p)(G_z-p) = (abc)^2 \), similarly to [18], which is better suited to the dominant root situation in our case. Moreover, a further simplification arises since we do not have to parametrize x, y, z by a single parameter to identify “leading terms”. Lastly, the contradiction follows at once since the coefficients in our functional identity are (complex) constants.

3 Preliminaries

We start with a few quick remarks on the height in function fields in one variable over \( \mathbb {C}\), i.e. finite extensions of the rational function field \( \mathbb {C}(X) \). As such function fields correspond uniquely to regular projective irreducible algebraic curves, it is no surprise that the genus will play a role in the proposition below.

For the convenience of the reader we give a short summary of valuations and heights, which can also be found, e.g., in [12] and [13]. As references for the theory of heights and for other number theoretic aspects of function fields we refer to [21, 22] or [23].

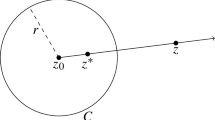

For \( c \in \mathbb {C}\) and \( f(X) \in \mathbb {C}(X) \) denote by \( \nu _c(f) \) the unique integer such that \( f(X) = (X-c)^{\nu _c(f)} p(X) / q(X) \) with \( p(X),q(X) \in \mathbb {C}[X] \) such that \( p(c)q(c) \ne 0 \). Further, let \( \nu _{\infty }(f) = \deg q - \deg p \) if \( f(X) = p(X) / q(X) \). Up to equivalence, these functions \( \nu \) are all valuations of \( \mathbb {C}(X) \). If \( \nu _c(f) > 0 \), then c is called a zero of f, and if \( \nu _c(f) < 0 \), then c is called a pole of f. For a finite extension F of \( \mathbb {C}(X) \), each valuation of \( \mathbb {C}(X) \) extends to at most \( [F : \mathbb {C}(X)] \) valuations of F (up to equivalence). This again gives, up to equivalence, all valuations of F. In both \( \mathbb {C}(X) \) and F the sum formula

\[ \sum _{\nu } \nu (f) = 0 \]

holds for \( f \ne 0 \), where \( \sum _{\nu } \) means that the sum is taken over all valuations up to equivalence (i.e. over the functions defined above) in the function field under consideration. Each valuation (again up to equivalence) of a function field corresponds to a place and vice versa; the places can be thought of as the equivalence classes of valuations. Moreover, we write \( \deg f = -\nu _{\infty }(f) \) for all \( f(X) \in \mathbb {C}(X) \).
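To make the valuations of \( \mathbb {C}(X) \) concrete, here is a small sketch for rational functions given in factored form \( f = \lambda \prod _c (X-c)^{e_c} \); over \( \mathbb {C} \) every non-zero rational function can be written this way. The representation as a `Counter` of exponents and the helper names are our own simplification. The sketch reads off \( \nu _c(f) = e_c \) and \( \nu _{\infty }(f) = -\sum _c e_c \), and checks the sum formula and \( \deg f = -\nu _{\infty }(f) \) on an example.

```python
from collections import Counter

# A non-zero rational function lambda * prod (X - c)**e_c is stored as the
# Counter {c: e_c}; the non-zero constant lambda has valuation 0 everywhere.
INF = "inf"


def valuations(f: Counter) -> dict:
    """Return all non-zero valuations nu_c(f) (finite c) together with nu_inf(f)."""
    vals = {c: e for c, e in f.items() if e != 0}
    vals[INF] = -sum(f.values())  # nu_inf(p/q) = deg q - deg p
    return vals


def degree(f: Counter) -> int:
    """deg f = -nu_inf(f), i.e. deg p - deg q for f = p/q."""
    return -valuations(f)[INF]


# f = (X - 1)**2 * X / (X + 3)**4: zeros at 1 and 0, a pole of order 4 at -3
f = Counter({1: 2, 0: 1, -3: -4})
v = valuations(f)
print(v)                # {1: 2, 0: 1, -3: -4, 'inf': 1}
print(sum(v.values()))  # 0  (sum formula)
print(degree(f))        # -1
```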

The proof in the next section will make use of height functions in function fields. We therefore define the height of an element \( f \in F^* \) by

\[ \mathcal {H}(f) := -\sum _{\nu } \min \left( 0, \nu (f) \right), \]

where the sum is taken over all valuations (up to equivalence) on the function field \( F/\mathbb {C}\). Additionally we define \( \mathcal {H}(0) = \infty \). This height function satisfies some basic properties that are listed in the lemma below, which is proven in [17]:

Lemma 3.1

Denote as above by \( \mathcal {H}\) the height on \( F/\mathbb {C}\). Then for \( f,g \in F^* \) the following properties hold:

- (a) \( \mathcal {H}(f) \ge 0 \) and \( \mathcal {H}(f) = \mathcal {H}(1/f) \),
- (b) \( \mathcal {H}(f) - \mathcal {H}(g) \le \mathcal {H}(f+g) \le \mathcal {H}(f) + \mathcal {H}(g) \),
- (c) \( \mathcal {H}(f) - \mathcal {H}(g) \le \mathcal {H}(fg) \le \mathcal {H}(f) + \mathcal {H}(g) \),
- (d) \( \mathcal {H}(f^n) = \left| n \right| \cdot \mathcal {H}(f) \),
- (e) \( \mathcal {H}(f) = 0 \iff f \in \mathbb {C}^* \),
- (f) \( \mathcal {H}(A(f)) = \deg A \cdot \mathcal {H}(f) \) for any \( A \in \mathbb {C}[T] {\setminus } \left\{ 0 \right\} \).
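For rational functions in \( \mathbb {C}(X) \), the height with the usual definition \( \mathcal {H}(f) = -\sum _{\nu } \min (0, \nu (f)) \) (the standard choice in the literature cited above, which we assume here) counts the poles with multiplicity, including the pole at infinity; for \( f = p/q \) with coprime p, q it equals \( \max (\deg p, \deg q) \). The sketch below uses a factored representation (our own simplification, helper names hypothetical) and spot-checks properties (a), (c), (d) and (e) of Lemma 3.1 on examples. It is an illustration, not a proof.

```python
from collections import Counter


def valuations(f: Counter) -> list[int]:
    """Non-zero valuations of lambda * prod (X - c)**e_c, plus nu_inf."""
    return [e for e in f.values() if e != 0] + [-sum(f.values())]


def height(f: Counter) -> int:
    """H(f) = -sum over all places of min(0, nu(f)) (assumed definition)."""
    return -sum(min(0, v) for v in valuations(f))


def mul(f: Counter, g: Counter) -> Counter:
    """Multiply two rational functions by adding exponents (constants ignored)."""
    h = Counter(f)
    h.update(g)  # Counter.update adds counts, including negative ones
    return h


def power(f: Counter, n: int) -> Counter:
    return Counter({c: n * e for c, e in f.items()})


f = Counter({1: 2, 0: 1, -3: -4})  # (X - 1)^2 X / (X + 3)^4, so H(f) = max(3, 4) = 4
g = Counter({-3: 1, 2: -1})        # (X + 3) / (X - 2),       so H(g) = 1

print(height(f), height(g))                                          # 4 1
assert height(f) == height(power(f, -1))                             # (a)
fg = mul(f, g)
assert height(f) - height(g) <= height(fg) <= height(f) + height(g)  # (c)
assert height(power(f, -3)) == 3 * height(f)                         # (d), n = -3
assert height(Counter()) == 0                                        # (e), constants
```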

When proving our theorem, we will use the following function field analogue of the Schmidt subspace theorem. A proof for this proposition can be found in [25]:

Proposition 3.2

(Zannier) Let \( F/\mathbb {C}\) be a function field in one variable, of genus \( \mathfrak {g}\), let \( \varphi _1, \ldots , \varphi _n \in F \) be linearly independent over \( \mathbb {C}\) and let \( r \in \left\{ 0,1, \ldots , n \right\} \). Let S be a finite set of places of F containing all the poles of \( \varphi _1, \ldots , \varphi _n \) and all the zeros of \( \varphi _1, \ldots , \varphi _r \). Put \( \sigma = \sum _{i=1}^{n} \varphi _i \). Then

\[ \sum _{\nu \in S} \left( \nu (\sigma ) - \min _{i=1,\ldots ,n} \nu (\varphi _i) \right) \le \binom{n}{2} \left( \# S + 2\mathfrak {g} - 2 \right) + \sum _{i=r+1}^{n} \mathcal {H}(\varphi _i). \]

4 Proof

In this section we prove Theorem 2.1. Before we begin, let us remark that we use some ideas from [15] which are quite useful in our situation as well. Since several arguments are simpler in our function field setting and the proof is therefore not too long, we present it without interruption.

Proof of Theorem 2.1

We prove the statement indirectly and therefore assume that there are infinitely many sets with the required properties. First note that, without loss of generality, we may assume that the inequality \( \deg a \le \deg b \le \deg c \) holds for infinitely many of these sets. For each such triple there exist non-negative integers x, y, z such that

\[ ab + p = G_x, \qquad ac + p = G_y, \qquad bc + p = G_z. \tag{4.1} \]

If all three indices x, y, z were bounded by a constant, there could be only finitely many sets \( \left\{ a,b,c \right\} \): by (4.1) we have \( a^2 = (G_x-p)(G_y-p)/(G_z-p) \) and analogous expressions for \( b^2 \) and \( c^2 \), so a, b, c are determined up to sign by \( G_x, G_y, G_z \). Hence \( \max \left\{ x,y,z \right\} \rightarrow \infty \).

Since \( \alpha _1 \) is the dominant root, for large enough n, i.e. for \( n \ge n_0 \), the degree satisfies the (in)equality

\[ \deg G_n = \deg f_1 + n \deg \alpha _1 > \deg p. \tag{4.2} \]

Now, as \( \max \left\{ x,y,z \right\} \rightarrow \infty \), we get \( \max \left\{ \deg G_x, \deg G_y, \deg G_z \right\} \rightarrow \infty \). Thus \( \deg c \rightarrow \infty \), and consequently \( z \rightarrow \infty \) as well as \( y \rightarrow \infty \). Therefore, passing to an infinite subset, we may assume that both y and z are at least \( n_0 \), so that Eq. (4.2) is applicable to them.
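As a quick illustration of the dominant root condition, the following sketch (our own toy example; all names are hypothetical) evaluates a polynomial power sum \( G_n = f_1\alpha _1^n + f_2\alpha _2^n + f_3\alpha _3^n \) with \( \deg \alpha _1 > \deg \alpha _2 > \deg \alpha _3 \), storing polynomials as integer coefficient lists, and checks that \( \deg G_n = \deg f_1 + n \deg \alpha _1 \) once the dominant term \( f_1\alpha _1^n \) takes over. For simplicity the \( f_i \) are taken to be polynomials rather than general rational functions.

```python
Poly = list[int]  # integer coefficients, lowest degree first


def trim(f: Poly) -> Poly:
    f = list(f)
    while f and f[-1] == 0:
        f.pop()
    return f


def add(f: Poly, g: Poly) -> Poly:
    n = max(len(f), len(g))
    return trim([(f[i] if i < len(f) else 0) + (g[i] if i < len(g) else 0) for i in range(n)])


def mul(f: Poly, g: Poly) -> Poly:
    if not f or not g:
        return []
    out = [0] * (len(f) + len(g) - 1)
    for i, x in enumerate(f):
        for j, y in enumerate(g):
            out[i + j] += x * y
    return trim(out)


def power(f: Poly, n: int) -> Poly:
    result = [1]
    for _ in range(n):
        result = mul(result, f)
    return result


def deg(f: Poly) -> int:
    return len(trim(f)) - 1  # the zero polynomial gets -1 as a sentinel


def power_sum(coeffs: list[Poly], roots: list[Poly], n: int) -> Poly:
    """G_n = f_1 alpha_1^n + ... + f_k alpha_k^n with polynomial coefficients f_i."""
    total: Poly = []
    for f_i, alpha_i in zip(coeffs, roots):
        total = add(total, mul(f_i, power(alpha_i, n)))
    return total


f = [[1, 1], [3], [-2]]           # f_1 = X + 1, f_2 = 3, f_3 = -2
alpha = [[1, 0, 1], [0, 1], [1]]  # alpha_1 = X^2 + 1, alpha_2 = X, alpha_3 = 1
for n in range(1, 8):
    assert deg(power_sum(f, alpha, n)) == deg(f[0]) + n * deg(alpha[0])
print([deg(power_sum(f, alpha, n)) for n in range(5)])  # [1, 3, 5, 7, 9]
```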

Recalling the ordering \( \deg a \le \deg b \le \deg c \), we get \( \deg G_x \le \deg G_y \le \deg G_z \). Now again using (4.2) and taking into account that a, b, c are pairwise distinct yields \( x< y < z \).

Let us for the moment assume that x is bounded by a constant. So \( G_x \) is the same fixed value for infinitely many sets. This implies that a and b are fixed for infinitely many sets. Therefore

is constant for infinitely many sets. Dividing by \( f_1 \) and using the power sum representation of the recurrence sequence, we can rewrite this equation in the form

If the left hand side of Eq. (4.3) is non-zero, then it has degree at least \( y \deg \alpha _1 \). However, the degree of the right hand side is at most \( C_0 + z \deg \alpha _2 \), where \( C_0 \) is a constant. Since \( \deg G_y = \deg a + \deg c \) and \( \deg G_z = \deg b + \deg c \), the equation

\[ (z-y)\deg \alpha _1 = \deg G_z - \deg G_y = \deg b - \deg a \]

holds, which implies that \( \rho := z-y \) is constant (recall that a and b are fixed). Consequently, the degree of the right hand side of Eq. (4.3) is at most \( C_1 + y \deg \alpha _2 \) for a new constant \( C_1 \). Since \( \deg \alpha _1 > \deg \alpha _2 \), this is only possible if both sides of Eq. (4.3) are zero. Therefore, \( b = a \alpha _1^{\rho } \). Considering the equation

dividing this by a and replacing b by \( a \alpha _1^{\rho } \) yields

The left hand side of Eq. (4.4) has constant degree whereas the degree of the right hand side is \( \rho \deg \alpha _1 + \deg f_2 + y \deg \alpha _2 \). This is a contradiction for large y, implying that x cannot be bounded by a constant.

Consequently, for every fixed constant there remains an infinite subset of our sets for which all three indices x, y, z exceed this constant. In particular, we may assume that no index is smaller than \( n_0 \).

We have already seen that \( x< y < z \). In the next step we prove that z cannot grow much faster than x. To this end, let

\[ g = \gcd \left( G_y - p, G_z - p \right) \]

be the greatest common divisor of these two polynomials. Now we distinguish between two cases. Firstly, assume \( y \le \kappa z \), where \( \kappa \) is a rational number in the interval (0, 1) which will be determined later. It holds that

Secondly, assume that \( y > \kappa z \). Then \( z-y < z - \kappa z = (1-\kappa ) z \). By the definition of g as a greatest common divisor, g also divides \( (G_z-p) - \alpha _1^{z-y} (G_y-p) \), and therefore

We want to choose \( \kappa \) in such a way that \( \kappa \deg \alpha _1 = \deg \alpha _1 - \kappa \). Hence we set

\[ \kappa := \frac{\deg \alpha _1}{\deg \alpha _1 + 1} \in (0,1), \]

which yields in both of our cases

If we denote \( \widetilde{g} = \gcd \left( G_x-p, G_z-p \right) \), then \( G_z-p \) is a divisor of \( g \widetilde{g} \) since \( c \mid g \), \( b \mid \widetilde{g} \) and \( G_z-p = bc \). This gives us

as well as by using inequality (4.5) that

and

Thus z is bounded above by \( x/C_6 \), which means that the three indices grow at a similar rate.
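The divisibility step used in this argument can be checked on toy data: with \( g = \gcd (G_y-p, G_z-p) \) and \( \widetilde{g} = \gcd (G_x-p, G_z-p) \), we have \( c \mid g \) and \( b \mid \widetilde{g} \), so \( G_z - p = bc \) divides \( g\widetilde{g} \) and \( \deg (G_z-p) \le \deg g + \deg \widetilde{g} \). The sketch below (our own example; helper names hypothetical) takes arbitrary polynomials a, b, c with rational coefficients, uses ab, ac, bc in place of \( G_x-p, G_y-p, G_z-p \), computes both gcds with the Euclidean algorithm over \( \mathbb {Q} \), and verifies the divisibility.

```python
from fractions import Fraction

# Polynomials over Q as coefficient lists, lowest degree first.


def trim(f):
    """Convert to Fractions and drop trailing zeros (zero polynomial -> [])."""
    f = [Fraction(x) for x in f]
    while f and f[-1] == 0:
        f.pop()
    return f


def mul(f, g):
    f, g = trim(f), trim(g)
    if not f or not g:
        return []
    out = [Fraction(0)] * (len(f) + len(g) - 1)
    for i, x in enumerate(f):
        for j, y in enumerate(g):
            out[i + j] += x * y
    return trim(out)


def poly_rem(f, g):
    """Remainder of f modulo a non-zero g (long division over Q)."""
    r, g = trim(f), trim(g)
    while len(r) >= len(g):
        shift = len(r) - len(g)
        factor = r[-1] / g[-1]
        for i, y in enumerate(g):
            r[i + shift] -= factor * y
        r = trim(r)
    return r


def poly_gcd(f, g):
    """Monic gcd via the Euclidean algorithm (f, g not both zero)."""
    f, g = trim(f), trim(g)
    while g:
        f, g = g, poly_rem(f, g)
    return [x / f[-1] for x in f]


def deg(f):
    return len(trim(f)) - 1


# Toy data: a = X^2 (X - 1), b = X (X + 1), c = X^3
a, b, c = [0, 0, -1, 1], [0, 1, 1], [0, 0, 0, 1]
ab, ac, bc = mul(a, b), mul(a, c), mul(b, c)  # stand-ins for G_x - p, G_y - p, G_z - p
g = poly_gcd(ac, bc)        # gcd(G_y - p, G_z - p), divisible by c
g_tilde = poly_gcd(ab, bc)  # gcd(G_x - p, G_z - p), divisible by b
assert poly_rem(mul(g, g_tilde), bc) == []  # bc divides g * g_tilde
print(deg(bc), deg(g) + deg(g_tilde))       # 5 8
```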

Combining the three equations in (4.1), we can express the polynomials a, b, c in terms of elements of the linear recurrence sequence \( (G_n)_{n=0}^{\infty } \) as follows:

\[ a = \frac{\sqrt{G_x-p}\,\sqrt{G_y-p}}{\sqrt{G_z-p}}, \qquad b = \frac{\sqrt{G_x-p}\,\sqrt{G_z-p}}{\sqrt{G_y-p}}, \qquad c = \frac{\sqrt{G_y-p}\,\sqrt{G_z-p}}{\sqrt{G_x-p}}. \]

When using the square root symbol in an equation, we mean that the equation holds for a suitable choice of one of the two possible square roots, which differ only by the factor \( -1 \). This choice may vary from one equation to another.
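The first of these representations says that \( a^2 = (G_x-p)(G_y-p)/(G_z-p) \), with the division being exact in \( \mathbb {C}[X] \). The sketch below checks this, together with \( (G_x-p)(G_y-p)(G_z-p) = (abc)^2 \), on hypothetical toy data \( a = X \), \( b = X+2 \), \( c = 4X+4 \), \( p = X^2+5 \), using exact polynomial division over \( \mathbb {Q} \); all helper names are ours.

```python
from fractions import Fraction


def trim(f):
    """Convert to Fractions and drop trailing zeros (zero polynomial -> [])."""
    f = [Fraction(x) for x in f]
    while f and f[-1] == 0:
        f.pop()
    return f


def add(f, g, sign=1):
    """Return f + sign * g."""
    f, g = trim(f), trim(g)
    n = max(len(f), len(g))
    return trim([(f[i] if i < len(f) else 0) + sign * (g[i] if i < len(g) else 0) for i in range(n)])


def mul(f, g):
    f, g = trim(f), trim(g)
    if not f or not g:
        return []
    out = [Fraction(0)] * (len(f) + len(g) - 1)
    for i, x in enumerate(f):
        for j, y in enumerate(g):
            out[i + j] += x * y
    return trim(out)


def poly_divmod(f, g):
    """Quotient and remainder of f by a non-zero g over Q."""
    r, g = trim(f), trim(g)
    q = [Fraction(0)] * max(len(r) - len(g) + 1, 0)
    while len(r) >= len(g):
        shift = len(r) - len(g)
        factor = r[-1] / g[-1]
        q[shift] += factor
        for i, y in enumerate(g):
            r[i + shift] -= factor * y
        r = trim(r)
    return trim(q), r


a, b, c, p = [0, 1], [2, 1], [4, 4], [5, 0, 1]  # X, X + 2, 4X + 4, X^2 + 5
Gx, Gy, Gz = add(mul(a, b), p), add(mul(a, c), p), add(mul(b, c), p)  # ab+p, ac+p, bc+p

# a^2 = (G_x - p)(G_y - p) / (G_z - p), and the division is exact
a_squared, remainder = poly_divmod(mul(add(Gx, p, -1), add(Gy, p, -1)), add(Gz, p, -1))
print(remainder == [] and a_squared == mul(a, a))  # True
```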

For this reason, we rewrite the expression \( \sqrt{G_n-p} \) in a more convenient form. This is done by applying the (formal) multinomial series expansion to the power sum representation of our recurrence sequence:

where we use the notation \( \underline{h} = (h_1,\ldots ,h_k) \) and

The next step is now to calculate a lower bound for the valuation \( \nu _{\infty } \) of the quantity above, which we will need later on. Since \( \gamma _{h_1,\ldots ,h_k} \in \mathbb {C}\), we get

where \( C_7 = \min \left\{ \deg f_1 - \deg f_2, \ldots , \deg f_1 - \deg f_k, \deg f_1 - \deg p \right\} \). Note that for our purpose we can assume \( n + C_7 > 0 \).
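One natural route to such an expansion (we assume this is the idea, without reproducing the exact coefficients \( \gamma _{h_1,\ldots ,h_k} \)) is to write \( G_n - p = f_1\alpha _1^n (1+t) \) with \( t = \sum _{i \ge 2} (f_i/f_1)(\alpha _i/\alpha _1)^n - p/(f_1\alpha _1^n) \), apply the binomial series \( \sqrt{1+t} = \sum _{m \ge 0} \binom{1/2}{m} t^m \), and expand each \( t^m \) by the multinomial theorem. As a sanity check of the underlying one-variable series, the sketch computes \( \binom{1/2}{m} \) exactly and verifies that the truncated series squares to \( 1+t \) modulo \( t^N \).

```python
from fractions import Fraction


def binom_half(m: int) -> Fraction:
    """Generalized binomial coefficient binom(1/2, m) = prod_{i<m} (1/2 - i) / m!."""
    result = Fraction(1)
    for i in range(m):
        result *= (Fraction(1, 2) - i) / (i + 1)
    return result


def sqrt_series(n_terms: int) -> list[Fraction]:
    """Coefficients of sqrt(1 + t) up to t^(n_terms - 1)."""
    return [binom_half(m) for m in range(n_terms)]


def square_truncated(coeffs: list[Fraction]) -> list[Fraction]:
    """Square a power series and truncate to the same number of terms."""
    n = len(coeffs)
    return [sum(coeffs[i] * coeffs[k - i] for i in range(k + 1)) for k in range(n)]


s = sqrt_series(8)
print([str(x) for x in s[:5]])  # ['1', '1/2', '-1/8', '1/16', '-5/128']
assert square_truncated(s) == [1, 1] + [0] * 6  # (sqrt(1 + t))^2 = 1 + t mod t^8
```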

Combining the representations of a, b, c and \( \sqrt{G_n-p} \) obtained so far yields the following representation of the product abc of the elements of a triple:

For the valuation we get the lower bound

Let \( J > 0 \) be a number to be fixed later. Then there exists a natural number L, depending on J, such that

Define \( \varphi _0 = abc \) as well as

for \( j=1,\ldots ,L \). Furthermore, put

Let S be a finite set of places of \( F = \mathbb {C}(X, \sqrt{\alpha _1}, \sqrt{f_1}) \) containing all places lying over the zeros of \( \alpha _1,\ldots ,\alpha _k \), the zeros and poles of \( f_1,\ldots ,f_k \), the zeros of p, and all infinite places. Note that F contains both possible values of each of these square roots as soon as it contains one of them, since they differ only by the factor \( -1 \). If \( \varphi _0, \ldots , \varphi _L \) are linearly independent over \( \mathbb {C}\), then applying Proposition 3.2 gives the inequality

In order to get also a lower bound for this expression we look at

which gives us

Now, recalling inequality (4.6), we compare the lower with the upper bound to get

Therefore we set \( J := 1 + \frac{C_{11}}{C_6} \deg \alpha _1 \) and note that the right hand side does not depend on J. Plugging this definition into the last inequality yields

which is a contradiction since we have already proven that x cannot be bounded by a constant and that therefore we may assume that all three indices are greater than any fixed constant.

Thus \( \varphi _0, \ldots , \varphi _L \) must be linearly dependent over \( \mathbb {C}\). Without loss of generality we may assume that \( \varphi _1, \ldots , \varphi _L \) are linearly independent, since otherwise we could have grouped them together before the previous step. Hence, in a relation of linear dependence, the coefficient of abc must be non-zero, so we can write

\[ abc = \sum _{j=1}^{L} \lambda _j \varphi _j \]

for \( \lambda _j \in \mathbb {C}\).

We distinguish between two cases considering the parity of \( x+y+z \). If \( x+y+z \) is even, then we have

which contradicts the assumption in our theorem that \( f_1 \) is not a square in \( \mathbb {C}(X) \). When \( x+y+z \) is odd, we get

which contradicts the assumption in our theorem that \( f_1 \alpha _1 \) is not a square in \( \mathbb {C}(X) \). All in all, there can be only finitely many sets satisfying the required properties. \(\square \)

Change history

17 May 2022

A Correction to this paper has been published: https://doi.org/10.1007/s10998-022-00468-4

References

M. Bliznac Trebješanin, A. Filipin, Nonexistence of \(D(4)\)-quintuples. J. Number Theory 194, 170–217 (2019)

N.C. Bonciocat, M. Cipu, M. Mignotte, There is no Diophantine \(D(-1)\)-quadruple. arXiv:2010.09200

P. Corvaja, U. Zannier, Diophantine equations with power sums and Universal Hilbert Sets. Indag. Math. 9, 317–332 (1998)

A. Dujella, Diophantine \( m \)-tuples. https://web.math.pmf.unizg.hr/~duje/dtuples.html

A. Dujella, On the size of Diophantine \( m \)-tuples. Math. Proc. Camb. Philos. Soc. 132, 23–33 (2002)

A. Dujella, Bounds for the size of sets with the property \(D(n)\). Glas. Mat. Ser. III 39, 199–205 (2004)

A. Dujella, C. Fuchs, F. Luca, A polynomial variant of a problem of Diophantus for pure powers. Int. J. Number Theory 4, 57–71 (2008)

A. Dujella, C. Fuchs, R.F. Tichy, Diophantine \( m \)-tuples for linear polynomials. Period. Math. Hungar. 45, 21–33 (2002)

A. Dujella, C. Fuchs, P.G. Walsh, Diophantine \( m \)-tuples for linear polynomials. II. Equal degrees. J. Number Theory 120, 213–228 (2006)

A. Dujella, A. Jurasić, On the size of sets in a polynomial variant of a problem of Diophantus. Int. J. Number Theory 6, 1449–1471 (2010)

A. Filipin, A. Jurasić, On the size of Diophantine \( m \)-tuples for linear polynomials. Miskolc Math. Notes 17(2), 861–876 (2016)

C. Fuchs, S. Heintze, Perfect powers in polynomial power sums. in “Lie Groups, Number Theory, and Vertex Algebra” (D. Adamovic, A. Dujella, A. Milas, P. Pandzic, eds.), Amer. Math. Soc., Contemp. Math. 768, 89–104 (2021)

C. Fuchs, S. Heintze, On the growth of linear recurrences in function fields. Bull. Austr. Math. Soc. 104(1), 11–20 (2021)

C. Fuchs, C. Hutle, N. Irmak, F. Luca, L. Szalay, Only finitely many tribonacci Diophantine triples exist. Math. Slovaca 67, 853–862 (2017)

C. Fuchs, C. Hutle, F. Luca, Diophantine triples in linear recurrences of Pisot type. Res. Number Theory 4, Art. 29 (2018)

C. Fuchs, C. Hutle, F. Luca, L. Szalay, Diophantine triples and \( k \)-generalized Fibonacci sequences. Bull. Malays. Math. Sci. Soc. 41, 1449–1465 (2018)

C. Fuchs, C. Karolus, D. Kreso, Decomposable polynomials in second order linear recurrence sequences. Manuscr. Math. 159(3), 321–346 (2019)

C. Fuchs, F. Luca, L. Szalay, Diophantine triples with values in binary recurrences. Ann. Sci. Norm. Super. Pisa Cl. Sci. (5) 7, 579–608 (2008)

B. He, A. Togbé, V. Ziegler, There is no Diophantine quintuple. Trans. Am. Math. Soc. 371, 6665–6709 (2019)

A. Jurasić, Diophantine \( m \)-tuples for quadratic polynomials. Glas. Mat. Ser. III 46, 283–309 (2011)

S. Lang, Introduction to Algebraic and Abelian Functions. Grad. Texts in Math., vol. 89, 2nd edn. (Springer, New York, 1982)

S. Lang, Fundamentals of Diophantine Geometry (Springer, New York, 1983)

M. Rosen, Number Theory in Function Fields. Grad. Texts in Math., vol. 210 (Springer, New York, 2002)

W.M. Schmidt, Linear recurrence sequences, in Diophantine Approximation (Cetraro, 2000), Lecture Notes in Math., vol. 1819 (Springer, Berlin, 2003), pp. 171–247

U. Zannier, On composite lacunary polynomials and the proof of a conjecture of Schinzel. Invent. Math. 174(1), 127–138 (2008)

Funding

Open access funding provided by Paris Lodron University of Salzburg.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article has been revised: The first author’s affiliation has been corrected.

Supported by Austrian Science Fund (FWF): I4406.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fuchs, C., Heintze, S. A polynomial variant of diophantine triples in linear recurrences. Period Math Hung 86, 289–299 (2023). https://doi.org/10.1007/s10998-022-00460-y

DOI: https://doi.org/10.1007/s10998-022-00460-y