Abstract

In this paper, we propose systematic approaches for learning imbalanced data based on a two-regime process: regime 0, which generates excess zeros (the majority class), and regime 1, which contributes to generating an outcome of one (the minority class). The proposed model contains two latent equations: a split probit (logit) equation in the first stage and an ordinary probit (logit) equation in the second stage. Because boosting often improves prediction accuracy relative to a single classifier, we combine a boosting strategy with the two-regime process and develop the zero-inflated probit boost (ZIPBoost) and zero-inflated logit boost (ZILBoost) methods. We show that the weight functions of ZIPBoost have the desired properties for good predictive performance: like AdaBoost, they upweight misclassified examples and downweight correctly classified examples. We also show that the weight functions of ZILBoost have properties similar to those of LogitBoost, so that the algorithm focuses more on hard-to-classify examples in the next iteration, resulting in improved prediction accuracy. We show that the relative performance of ZIPBoost and ZILBoost depends on the excess kurtosis of the data distribution. Furthermore, we show the convergence and time complexity of our proposed methods. We demonstrate their performance using a Monte Carlo simulation, a mergers and acquisitions (M&A) data application, and imbalanced datasets from the Keel repository. The experimental results show that our proposed methods yield better prediction accuracy than other learning algorithms.

1 Introduction

Most canonical classifiers assume that the number of examples in each of the different classes is approximately the same. Unfortunately, class imbalances are present in many real-life situations (Fernández et al., 2018). Class imbalance refers to the dominance of one class (i.e., the majority class) over the other (i.e., the minority class). It occurs when the prior probability of belonging to the majority class is significantly higher than that of belonging to the minority class (Koziarski et al., 2021). The presence of class imbalance is known to deteriorate the prediction of the minority class (Thanathamathee & Lursinsap, 2013). The minority class is usually overlooked. One of the main issues in imbalance problems is that, despite its rareness, the minority class is generally of more interest from an application perspective, as it may contain important and useful knowledge (Krawczyk, 2016). Thus, it is necessary to correct the prediction of the minority class. Furthermore, because any dataset with an unequal class distribution is technically considered imbalanced, proposing new learning methods for imbalanced data is an important topic in the machine learning community.

In this study, we propose systematic approaches to learning imbalanced data. The systematic imbalanced learning method allows us to think more mechanistically about the data generation processes used to produce imbalanced examples. For instance, in the case of the survival rate of sea turtle eggs, ones are recorded for survivors and zeros for hatchlings that did not survive to adulthood. Since the estimated survival rate of hatchlings is about 0.1% (1 one and 999 zeros), the zero (failure) examples outnumber the one (success) examples (Janzen, 1993); the case of successful hatchlings can be characterized as an imbalanced classification. In this case, the survival process consists of two regimes. First, on the beach, hatchlings must escape natural predators, such as birds, crabs, raccoons, and foxes, to make it to the sea (regime 0); second, once in the water, few hatchlings survive to adulthood as a result of anthropogenic activities, such as overexploitation for food, the pet trade, and the threat of global climate change (regime 1) (Stanford et al., 2020). Turtles typically experience the highest mortality rates during the hatchling and early life stages (Gibbons, 1987; Heppell et al., 1996; Perez-Heydrich et al., 2012); most of the majority class (i.e., the excess zeros) is generated in regime 0. However, the survival rate increases rapidly as turtles grow and age (Brooks et al., 1988; Congdon et al., 1994); after passing the first hurdle (regime 0), the minority class (i.e., ones) enters regime 1. Thus, the minority class comprises survivors, whereas the majority class consists of hatchlings that either failed to reach the water or did not survive to adulthood in the water. Nature operates in this way.

Therefore, in some cases, it is ideal to propose a method that generates two models (regimes). First, a probit (or logit) model is generated for the excessive zero examples (e.g., predicting whether a hatchling will escape from predators); this is identified as regime 0 for the majority class. Then, another probit (or logit) model is generated for the underrepresented examples (e.g., predicting whether or not those hatchlings who graduate from the first regime would survive to adulthood); this is identified as regime 1 for the minority class. Finally, the two models are combined. Notably, each of the two models may use a different set of predictors. In the above example, the factors related to natural predators are more critical to survival in regime 0 than in regime 1. Similarly, the factors related to human activities are more critical to survival in regime 1 than in regime 0. For more details, see Fig. 1.

We argue that systematic approaches that rely on data-generating processes may be appropriate for imbalance learning in particular cases (e.g., survival of sea turtle eggs). We then develop zero-inflated probit boost (ZIPBoost) and zero-inflated logit boost (ZILBoost) methods to account for imbalance learning based on two distinct regimes. More specifically, the proposed ZIPBoost (ZILBoost) uses a two-regime process that combines a split probit (logit) model for regime 0 with an ordinary probit (logit) model for regime 1. Since boosting (e.g., LogitBoost and AdaBoost) is known to improve accuracy compared with a single classifier, we combine a boosting strategy (incremental learning rules) with the framework of the two-regime process.

Notably, we show that the weight functions of ZIPBoost have the desired properties for achieving good predictive performance. Similar to AdaBoost, the weight functions upweight misclassified examples and downweight correctly classified examples. We present these properties as propositions. The properties make the algorithm focus more on misclassified examples during iterations, resulting in a reduction in errors. Since ZIPBoost involves updating two functions—one for probabilities in the split probit model and the other for probabilities in the ordinary probit model—we apply cyclic coordinate descent, which is a repeated application of the Newton–Raphson method. In the case of imbalanced data, it is known that the logit model outperforms the probit model for a leptokurtic distribution (a distribution with positive excess kurtosis), whereas the probit model is preferred for a platykurtic distribution (a distribution with negative excess kurtosis) (Chen & Tsurumi, 2010). Thus, we also introduce the ZILBoost algorithm, wherein the two probit models in ZIPBoost are replaced with two logit models. We show that the weight functions of ZILBoost have similar properties to LogitBoost. The weight functions upweight examples that have low confidence (i.e., the fitted values are around zero) and downweight examples that have high confidence (i.e., the fitted values are not around zero). These properties imply that the algorithm will prioritize examples that are hard to classify in the next iteration, leading to improved prediction accuracy. Like ZIPBoost, ZILBoost requires updating two functions, and thus, we employ cyclic coordinate descent. We use a simulation to demonstrate that the excess kurtosis of the data distribution determines the relative performance of ZIPBoost and ZILBoost. In addition, we present the convergence and time complexity of the proposed methods.

We demonstrate the performance of our proposed methods through experiments comprising a Monte Carlo simulation, a real data application for predicting M&A outcomes, and imbalanced datasets from the Keel repository. For comparison, we consider standard learning algorithms, including AdaBoost, LogitBoost, and ProbitBoost, and existing approaches for learning imbalanced data, such as AdaC2, SMOTEBoost, and generative adversarial networks (GANs). The results from the experiments show that our proposed methods provide the best prediction accuracy. We believe that when data are generated from a two-regime process, our proposed methods outperform existing methods in terms of predictive performance.

To implement the proposed methods, it is necessary to have prior information on two different sets of predictors that affect the probabilities of belonging to either regime 0 or 1. If researchers do not have access to knowledge of the optimal predictor splits, they can empirically determine the splits that provide the best predictive performance on data. However, we note that this data-driven approach to the predictor splits may be computationally expensive. For more details, please see Sect. 5.3. Furthermore, the proposed methods can be generalized to multiclass problems, but we note that other updating schemes should be employed to reduce the computational burden. This study also provides the possibility for future work on the refinement functions of the proposed methods.

This paper is organized as follows. We review the existing approaches to imbalanced learning in Sect. 2. In Sect. 3, we set up the problem. In Sect. 4, we introduce the proposed methods (ZIPBoost and ZILBoost). We present experiments in Sect. 5. In Sect. 6, we conclude the paper with suggestions for future work.

2 Related work

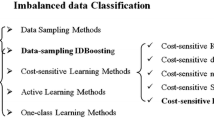

Numerous efforts have been made in the machine learning community to classify the minority class correctly in the presence of class imbalance. The resulting techniques can be broadly categorized into four groups based on how they tackle the class imbalance problem (Fernández et al., 2018).

First, data-level approaches try to rebalance the class distribution by resampling the imbalanced examples (e.g., Batista et al., 2004; Fernández et al., 2008; Koziarski & Woźniak, 2017; Napierała et al., 2010; Stefanowski & Wilk, 2008). Notably, data-level approaches include sampling methods consisting of oversampling, undersampling, and a combination of both. Oversampling attempts to increase the size of the minority class, whereas undersampling discards the examples in the majority class. Among the sampling methods, the Synthetic Minority Over-sampling TEchnique (SMOTE), proposed by Chawla et al. (2002), is quite popular. SMOTE generates synthetic minority examples through linear interpolation. However, the presence of disjoint data distribution and outliers is known to hinder improvements in classification using synthetic examples (Koziarski, 2020). Recently, the GANs approach has been adopted to deal with the imbalance problem (e.g., Frid-Adar et al., 2018). The GANs approach consists of the following two components (Huang et al., 2022): (1) a generator that attempts to generate data similar to the real imbalanced data, and (2) a discriminator that attempts to discriminate between the real imbalanced data and the generated data. Unlike conventional oversampling methods used to address class imbalance, GANs may not suffer from overfitting, because their training is based on adversarial learning between the two components. Data-level approaches do not require modification of the learning algorithm, because sampling methods alter data distribution to train a classifier under class balance. However, the sampling methods have a limitation in that the resampled data may follow a distribution that is different from that of the original data (Sun et al., 2015).
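The linear interpolation step that SMOTE relies on can be illustrated with a minimal sketch. It is a simplification rather than the reference implementation of Chawla et al. (2002): it interpolates toward the single nearest minority neighbor instead of sampling among k nearest neighbors, and the helper names `nearest_minority_neighbor` and `smote_like_sample` as well as the toy points are hypothetical.

```python
import random

def nearest_minority_neighbor(x, minority):
    """Closest other minority point by squared Euclidean distance (k = 1, an assumption)."""
    others = [p for p in minority if p is not x]
    return min(others, key=lambda p: sum((a - b) ** 2 for a, b in zip(x, p)))

def smote_like_sample(x, neighbor, rng):
    """Synthetic point on the line segment between x and its neighbor."""
    lam = rng.random()  # interpolation factor drawn from [0, 1)
    return [a + lam * (b - a) for a, b in zip(x, neighbor)]

rng = random.Random(0)
minority = [[1.0, 2.0], [1.5, 2.5], [4.0, 0.5]]  # toy minority examples
base = minority[0]
neighbor = nearest_minority_neighbor(base, minority)
synthetic = smote_like_sample(base, neighbor, rng)
```

Because every synthetic point lies on a segment between two existing minority examples, an outlier or a disjoint minority cluster can pull synthetic examples into regions dominated by the majority class, which is the limitation noted above.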

Second, algorithm-level approaches aim to modify existing classification algorithms to bias learning toward the minority class (e.g., Barandela et al., 2003; Lin et al., 2002; Liu et al., 2000). For instance, a support vector machine (SVM), one of the popular classification methods, can be combined with different classification strategies, such as kernel modifications and weighting schemes based on the importance of each example for classification, to alleviate the tendency to classify a minority example as the majority class while learning imbalanced data (Hwang et al., 2011; Liu & He, 2022). For algorithmic approaches, it is vital to have sufficient knowledge of the causes of bias from the underlying mechanisms of the original algorithms so that appropriate modifications can be considered (Krawczyk, 2016). Without a precise identification of the reasons for a failure in classifying the minority class, classifiers still tend to predict the majority class at the cost of losing the minority class’s predictive power.

Third, cost-sensitive approaches consist of a combination of data-level transformations and algorithm-level adaptations (e.g., Chawla et al., 2008; Lee et al., 2020; Ling et al., 2006; Zhang et al., 2008). The classification algorithm is biased toward the minority class by adding costs to instances and is modified to account for misclassification costs. More precisely, to build a cost-sensitive classifier, different misclassification costs for different classes are incorporated into the learning process, for example, by setting the cost of misclassifying a minority example higher than that of misclassifying a majority example. One of the cost-sensitive approaches is the cost-sensitive decision tree, in which different misclassification costs can be used for splitting or pruning criteria (López et al., 2012). However, the major drawback of this approach is that it assumes a known cost matrix, which is unavailable in most cases (Krawczyk et al., 2014; Pei et al., 2021; Saber et al., 2020; Sun et al., 2007). It is therefore difficult to find the optimal cost matrix for handling the class imbalance problem (Ren et al., 2022).

Fourth, ensemble-based approaches are hybrid methods that usually combine an ensemble learning algorithm with a data-level (or cost-sensitive) approach (e.g., Wang & Japkowicz, 2010; Wang et al., 2014). For example, ensemble-based approaches include combinations of cost-sensitive approaches with boosting or bagging, which are ensemble learning algorithms designed to improve predictive accuracy. This combination processes imbalanced data before utilizing multiple learning algorithms. To accept costs in the training process, cost-sensitive ensembles guide cost minimization using the ensemble learning algorithm (Galar et al., 2012). Despite this, ensemble learning methods, such as boosting and bagging, can play a role only in producing more accurate predictions than stand-alone learning algorithms. Specifically, unless the combined data-level (or cost-sensitive) approach appropriately addresses the imbalance problem, ensemble-based approaches are unable to resolve the imbalance problem. For example, cost-sensitive ensembles still require an optimal cost matrix.

However, the existing approaches do not account for the possibility that two different types of zeros exist, as they do not distinguish between zeros that may stem from regime 0 (e.g., a hatchling that did not escape from predators on the beach) and zeros generated from regime 1 (e.g., a hatchling that graduated from the first regime but did not ultimately survive to adulthood in the water). In other words, the existing approaches assume that only one regime generates the majority and minority classes. Departing from the existing approaches, we propose systematic approaches to address the imbalance problem for classification by assuming that two distinct regimes produce the majority and minority classes.

3 Problem setup

In this section, we describe the two-regime process and illustrate how boosting can be applied to the process.

3.1 The two-regime process

In this study, we assume that the sample is obtained from a two-regime process: regime 0, which generates excess zeros, and regime 1, which contributes to generating a minority class. We present the distribution of fictitious data from the two-regime process in Fig. 1. We consider the fictitious data to be imbalanced, as an outcome of 0 significantly outweighs an outcome of 1: Among the 500 examples, 400 examples (80%) have an outcome of 0, while only 100 examples (20%) have an outcome of 1. The majority class is an outcome of 0. This two-regime process assumes the following: (1) For the instances of the outcome of 0, regime 0 generates the gray portion (300 examples, representing the excess zeros) and regime 1 generates the black portion (100 examples), and (2) for the instances of the outcome of 1, regime 1 generates the black portion (100 examples, representing the minority class). Thus, in this two-regime process, zeros are composed of two parts: gray and black portions of the zero bar. The black portions of the zero and one bars indicate the examples in regime 1, which passed the first hurdle (regime 0). Notably, in the absence of excess zeros (i.e., without the gray portion), the data would seem balanced, since the zero and one bars have the same height with 100 examples for each outcome. In this case, canonical classifiers, including ordinary probit or logit models, would perform well in predicting outcomes. However, in the presence of a gray portion, it becomes essential to differentiate between the gray portion and the black portion of the zero bar. To this end, a systematic approach is required to identify whether examples belong to the gray portion or black portion of the zero and one bars, as well as to ascertain whether examples in the black portion have an outcome of 0 or 1.
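The composition of the fictitious data can be mimicked with a short simulation. This is only a sketch under stated assumptions: the regime probability 0.4 (200 of 500 examples in regime 1) and the within-regime success probability 0.5 (100 of those 200 examples) are read off the counts described above, covariates are ignored, and the function name `simulate_two_regime` is hypothetical.

```python
import random

def simulate_two_regime(n, p_regime1, p_one_given_regime1, seed=0):
    """Draw n units from a covariate-free two-regime process and count each part."""
    rng = random.Random(seed)
    counts = {"regime0_zero": 0, "regime1_zero": 0, "regime1_one": 0}
    for _ in range(n):
        if rng.random() >= p_regime1:
            counts["regime0_zero"] += 1   # excess zero (gray portion)
        elif rng.random() < p_one_given_regime1:
            counts["regime1_one"] += 1    # minority class (black portion of the one bar)
        else:
            counts["regime1_zero"] += 1   # zero generated in regime 1 (black portion of the zero bar)
    return counts

counts = simulate_two_regime(n=500, p_regime1=0.4, p_one_given_regime1=0.5)
observed_zeros = counts["regime0_zero"] + counts["regime1_zero"]
observed_ones = counts["regime1_one"]
```

In the observed data only `observed_zeros` and `observed_ones` are available; the split of the zeros into their two sources is latent, which is why a model of both regimes is needed.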

More specifically, let us consider a sample of \(N\) observed units with binary outcomes 0 and 1 and assume that a zero outcome is inflated. To put it differently, the sample represents a class imbalance whose majority is a zero outcome. In this setting, let \({q}^{*}\) be a latent variable representing the propensity for regime 1:

\[{q}^{*}={\varvec{x}}^{\prime}{\varvec{\beta}}+u, \tag{1}\]

where \({\varvec{x}}\) indicates a vector of covariates that cause inflated zeros for the majority class, \({\varvec{\beta}}\) is a vector of coefficients, and \(u\) represents the error term. Equation (1) represents the splitting equation (SE), which accounts for excess zeros. For example, the SE identifies whether a hatchling will reach the water or not. The value of \({q}^{*}\) defines two regimes, indicated by \(q\in \{\text{0,1}\}\). A unit with \({q}^{*}\le 0\) belongs to regime 0 (i.e., \(q=0\)), and its observed zero is an inflated case. A unit with \({q}^{*}>0\) belongs to regime 1 (i.e., \(q=1\)), in which either of the possible outcomes, 0 or 1, may be observed. The probability of belonging to regime 1 is defined as

\[\text{Pr}\left(q=1|{\varvec{x}}\right)=\text{Pr}\left({q}^{*}>0|{\varvec{x}}\right)=\text{Pr}\left(u>-{\varvec{x}}^{\prime}{\varvec{\beta}}|{\varvec{x}}\right).\]

For those with \(q=1\), the observed outcome is determined by the underlying latent variable \({\widetilde{y}}^{*}\) defined as follows:

\[{\widetilde{y}}^{*}={\varvec{z}}^{\prime}{\varvec{\gamma}}+\varepsilon, \tag{2}\]

where \({\varvec{z}}\) indicates a vector of covariates that generate the minority class, \({\varvec{\gamma}}\) is a vector of coefficients, and \(\varepsilon \) represents the error term. We refer to Eq. (2) as the outcome equation (OE) (Hill et al., 2011). For example, the OE identifies whether a hatchling will survive to adulthood or not. To state it differently, depending on the value of \({\widetilde{y}}^{*}\) for those with \(q=1\), one of the possible outcomes is observed. Under regime 1, the possible outcomes, \(\widetilde{y}\), are defined as follows:

\[\widetilde{y}=\begin{cases}1 & \text{if } {\widetilde{y}}^{*}>0,\\ 0 & \text{if } {\widetilde{y}}^{*}\le 0.\end{cases}\]

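Notably, zero outcomes can be generated from either \(q\) in the SE or \(\widetilde{y}\) in the OE, but the two sources cannot be distinguished in the observed data. The full probabilities for observed outcomes, \(y\), are then jointly based on the results from the SE and OE:

\[\begin{aligned}\text{Pr}\left(y=0|{\varvec{x}},{\varvec{z}}\right)&=\text{Pr}\left(q=0|{\varvec{x}}\right)+\text{Pr}\left(q=1|{\varvec{x}}\right)\text{Pr}\left(\widetilde{y}=0|{\varvec{z}},q=1\right),\\ \text{Pr}\left(y=1|{\varvec{x}},{\varvec{z}}\right)&=\text{Pr}\left(q=1|{\varvec{x}}\right)\text{Pr}\left(\widetilde{y}=1|{\varvec{z}},q=1\right).\end{aligned} \tag{3}\]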

The SE and OE can be modeled using probit or logit models. The choice between probit and logit models depends on the characteristics of the data distribution. For example, if the data distribution exhibits negative excess kurtosis, a probit model is preferred over a logit model, while a logit model is deemed more suitable for data with positive excess kurtosis (Chen & Tsurumi, 2010). When the probit model is applied, Eq. (3) becomes

\[\begin{aligned}\text{Pr}\left(y=0|{\varvec{x}},{\varvec{z}}\right)&=\left[1-\varPhi \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\right]+\varPhi \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\left[1-\varPhi \left({\varvec{z}}^{\prime}{\varvec{\gamma}}\right)\right]=1-\varPhi \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\varPhi \left({\varvec{z}}^{\prime}{\varvec{\gamma}}\right),\\ \text{Pr}\left(y=1|{\varvec{x}},{\varvec{z}}\right)&=\varPhi \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\varPhi \left({\varvec{z}}^{\prime}{\varvec{\gamma}}\right),\end{aligned} \tag{4}\]

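where \(\varPhi (\cdot )\) is the cumulative distribution function of the standard univariate normal distribution. Notably, the parameters of Eq. (4) have been shown to be consistently and efficiently estimated through maximum likelihood estimation (Harris & Zhao, 2007). Based on the probabilities in Eq. (4), the log-likelihood function to find an optimal solution is defined as

\[l\left(f\right)=\sum_{i=1}^{N}\left\{\left(1-{y}_{i}\right)\text{ln}\left[1-\varPhi \left({f}_{1}\left({{\varvec{x}}}_{i}\right)\right)\varPhi \left({f}_{2}\left({{\varvec{z}}}_{i}\right)\right)\right]+{y}_{i}\,\text{ln}\left[\varPhi \left({f}_{1}\left({{\varvec{x}}}_{i}\right)\right)\varPhi \left({f}_{2}\left({{\varvec{z}}}_{i}\right)\right)\right]\right\}, \tag{5}\]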

where \({f}_{1}\left({\varvec{x}}\right)={{\varvec{x}}}^{\boldsymbol{^{\prime}}}{\varvec{\beta}}\), \({f}_{2}\left({\varvec{z}}\right)={{\varvec{z}}}^{\boldsymbol{^{\prime}}}{\varvec{\gamma}}\), and \(f\in \{{f}_{1}\left({\varvec{x}}\right),{f}_{2}\left({\varvec{z}}\right)\}\).
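As a concrete illustration, the probit version of the two-regime probabilities and the resulting log-likelihood can be evaluated in a few lines. The sketch assumes the product form \(\text{Pr}(y=1|{\varvec{x}},{\varvec{z}})=\varPhi ({f}_{1})\varPhi ({f}_{2})\), which is the form implied by the Hessian expressions in Sect. 4.1.1; the function names and the toy inputs are hypothetical.

```python
import math

def norm_cdf(t):
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def zip_probabilities(f1, f2):
    """Return (Pr(y=0), Pr(y=1)) for fitted values f1 = x'beta and f2 = z'gamma."""
    p_one = norm_cdf(f1) * norm_cdf(f2)
    return 1.0 - p_one, p_one

def log_likelihood(ys, f1s, f2s):
    """Sum of log probabilities of the observed outcomes (natural logarithm)."""
    total = 0.0
    for y, f1, f2 in zip(ys, f1s, f2s):
        p_zero, p_one = zip_probabilities(f1, f2)
        total += math.log(p_one) if y == 1 else math.log(p_zero)
    return total

# With f1 = f2 = 0, each regime probability is 0.5, so Pr(y=1) = 0.25.
p_zero, p_one = zip_probabilities(0.0, 0.0)
ll = log_likelihood([0, 0, 0, 1], [0.0] * 4, [0.0] * 4)
```

Even with both fitted values at zero, the model already assigns three quarters of the probability mass to the zero outcome, which reflects how the splitting stage inflates zeros.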

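If the SE and OE are modeled by the logit model, Eq. (4) can be rewritten as

\[\begin{aligned}\text{Pr}\left(y=0|{\varvec{x}},{\varvec{z}}\right)&=1-\varLambda \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\varLambda \left({\varvec{z}}^{\prime}{\varvec{\gamma}}\right),\\ \text{Pr}\left(y=1|{\varvec{x}},{\varvec{z}}\right)&=\varLambda \left({\varvec{x}}^{\prime}{\varvec{\beta}}\right)\varLambda \left({\varvec{z}}^{\prime}{\varvec{\gamma}}\right),\end{aligned}\]

with \(\varLambda \left(t\right)={e}^{t}/\left(1+{e}^{t}\right)\) denoting the cumulative distribution function of the standard logistic distribution,

and the log-likelihood function is

\[l\left(f\right)=\sum_{i=1}^{N}\left\{\left(1-{y}_{i}\right)\text{ln}\left[1-\varLambda \left({f}_{1}\left({{\varvec{x}}}_{i}\right)\right)\varLambda \left({f}_{2}\left({{\varvec{z}}}_{i}\right)\right)\right]+{y}_{i}\,\text{ln}\left[\varLambda \left({f}_{1}\left({{\varvec{x}}}_{i}\right)\right)\varLambda \left({f}_{2}\left({{\varvec{z}}}_{i}\right)\right)\right]\right\},\]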

where \({f}_{1}\left({\varvec{x}}\right)={{\varvec{x}}}^{\boldsymbol{^{\prime}}}{\varvec{\beta}}\), \({f}_{2}\left({\varvec{z}}\right)={{\varvec{z}}}^{\boldsymbol{^{\prime}}}{\varvec{\gamma}}\), and \(f\in \{{f}_{1}\left({\varvec{x}}\right),{f}_{2}\left({\varvec{z}}\right)\}\).

3.2 Boosting with cyclic coordinate descent

Boosting is an ensemble method that combines many weak classifiers to generate a powerful learning rule (Oentaryo et al., 2014). Notably, it is widely acknowledged that ensembles of many classifiers, such as boosting, often exhibit higher prediction accuracy compared to individual models that produce a single classifier (Guelman, 2012; Provost & Domingos, 2003; Ren et al., 2016). Thus, we employ boosting to update \({f}_{1}\left(\mathbf{x}\right)\) and \({f}_{2}\left(\mathbf{z}\right)\) to obtain the final classifier.

To this end, we use the expected negative log-likelihood as a loss function, denoted as \(E\left[-l\left(f\right)|{\varvec{x}},{\varvec{z}}\right]\). Hence, the expected log-likelihood maximization problem becomes the expected negative log-likelihood minimization problem, and the minimizers \({f}_{1}^{*}\left({\varvec{x}}\right)\) and \({f}_{2}^{*}\left({\varvec{z}}\right)\) of \(E\left[-l\left(f\right)|{\varvec{x}},{\varvec{z}}\right]\) are the maximizers of the expected log-likelihood.

In the iterative process of updating \({f}_{1}\left({\varvec{x}}\right)\) and \({f}_{2}\left({\varvec{z}}\right)\), we use a cyclic coordinate descent algorithm. At each iteration, this sequential scheme updates one of the two functions while keeping the other fixed, which reduces the multivariate optimization to a sequence of univariate problems (Tang & Wu, 2014). Owing to its computational efficiency, cyclic coordinate descent has gained popularity for solving problems with more than one parameter (Massias et al., 2020; Saha & Tewari, 2013). In each update of \({f}_{1}\left({\varvec{x}}\right)\) and \({f}_{2}\left({\varvec{z}}\right)\), the Newton–Raphson method is applied (Wu, 2013; Wu & Lange, 2010).

To minimize the expected negative log-likelihood, the update schemes based on the Newton–Raphson method at iteration \(m\) are defined as follows:

where \(D\left(.\right)\) and \(H\left(.\right)\) are the gradient and Hessian of the objective function, respectively. Given initial values of 0 for \({f}_{1}^{0}({\varvec{x}})\) and \({f}_{2}^{0}({\varvec{z}})\), the final values after \(M\) iterations are \({f}_{1}^{M}\left({\varvec{x}}\right)={\sum }_{m=1}^{M}{f}_{1}^{m}({\varvec{x}})\) and \({f}_{2}^{M}\left({\varvec{z}}\right)={\sum }_{m=1}^{M}{f}_{2}^{m}({\varvec{z}})\), and the predicted probabilities of observing each possible outcome for unit \(i\) are calculated based on \({f}_{1}^{M}\left({\varvec{x}}\right)\) and \({f}_{2}^{M}\left({\varvec{z}}\right)\). The final classifier is \(\widehat{y}=\) \(\underset{j\in \left\{\text{0,1}\right\}}{\text{argmax}}\text{Pr}(y=j|{\varvec{x}},{\varvec{z}})\), where \(\widehat{y}\) represents the predicted outcome and \(j\) indicates possible outcomes.
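The alternating update can be sketched on a deliberately small problem. The example below is not the full boosting procedure: it assumes a probit two-regime model with constant fitted values (no covariates and no weak learners), replaces the expectation with a sample share of ones, and divides by the absolute value of the second derivative (with a small floor) as a safeguard. The names `newton_step` and `fit_constants` are hypothetical.

```python
import math

def pdf(t):
    """Standard normal density."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def cdf(t):
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def newton_step(f_own, f_other, share_ones):
    """One Newton-Raphson step on f_own with f_other held fixed."""
    p_own, p_other, d_own = cdf(f_own), cdf(f_other), pdf(f_own)
    p_one = p_own * p_other
    # First derivative of the average negative log-likelihood with respect to f_own.
    grad = (1 - share_ones) * d_own * p_other / (1 - p_one) - share_ones * d_own / p_own
    # Second derivative, written with the G_0 / G_1 terms of the probit Hessian.
    g1 = f_own * p_own + d_own
    g0 = -f_own + p_other * g1
    hess = ((1 - share_ones) * d_own * p_other * g0 / (1 - p_one) ** 2
            + share_ones * d_own * g1 / p_own ** 2)
    return f_own - grad / max(abs(hess), 1e-8)

def fit_constants(share_ones, cycles=50):
    """Cyclic coordinate descent: update f1 with f2 fixed, then f2 with f1 fixed."""
    f1 = f2 = 0.0  # initial values of 0, as in the text
    for _ in range(cycles):
        f1 = newton_step(f1, f2, share_ones)
        f2 = newton_step(f2, f1, share_ones)
    return f1, f2

f1, f2 = fit_constants(share_ones=0.2)
fitted_share = cdf(f1) * cdf(f2)
```

Because the toy objective is symmetric in its two arguments, one step function serves both coordinates. Without covariates only the product of the two probabilities is identified, so the sketch checks `fitted_share` rather than the individual values of `f1` and `f2`.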

4 Proposed methods

In this section, we propose two novel methods, ZIPBoost and ZILBoost, that integrate the two-regime process with boosting techniques to reduce the misclassification of the minority class. We also show the convergence of the proposed methods.

4.1 ZIPBoost

ZIPBoost consists of two types of iterations: one with respect to the SE and the other with respect to the OE. The objective function in ZIPBoost is the expected negative log-likelihood, \(E\left[-l\left(f\right)|{\varvec{x}},{\varvec{z}}\right]\), where \(l\left(f\right)\) is defined as in Eq. (5). Like AdaBoost, the observation weights for the SE and OE increase when units are misclassified and decrease for units that are correctly classified.

4.1.1 Splitting equation iterations

ZIPBoost starts by fitting the SE, \({f}_{1}\left({\varvec{x}}\right)\). For notational simplicity, we write \({f}_{x}\) for \({f}_{1}({\varvec{x}})\) and \({f}_{z}\) for \({f}_{2}({\varvec{z}})\). Using the properties of the cumulative distribution function and the probability density function of the standard normal distribution, we have \(\varPhi \left(-f\left(\cdot \right)\right)= 1-\varPhi \left(f\left(\cdot \right)\right)\), \(\varphi \left(-f\left(\cdot \right)\right)=\varphi \left(f\left(\cdot \right)\right),\) and \({\varphi }^{\prime}\left(f\left(\cdot \right)\right)=-f\left(\cdot \right)\varphi \left(f\left(\cdot \right)\right)\), where \(\varPhi \left(\cdot \right)\) represents the standard normal cumulative distribution function and \(\varphi \left(\cdot \right)\) indicates the standard normal probability density function. We use the natural logarithm in the negative log-likelihood function. The gradient of the objective function is defined as

\[D\left({f}_{x}\right)=E\left[\left(1-y\right)\frac{\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)}{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)}-y\frac{\varphi \left({f}_{x}\right)}{\varPhi \left({f}_{x}\right)}\,\Big|\,{\varvec{x}}\right].\]

For the derivation of the gradient \(D\left({f}_{x}\right)\), please see Appendix A.

In addition, the Hessian is defined as

Equation (8) indicates that when \(y=0\), \(h\left({f}_{x}\right)\) can be written as \(h\left({f}_{x}\right)=-\frac{\left\{{f}_{x}\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\left[1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\right]-{\varphi }^{2}\left({f}_{x}\right){\varPhi }^{2}\left({f}_{z}\right)\right\}}{{\left\{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\right\}}^{2}}\) and when \(y=1\), \(h\left({f}_{x}\right)\) can be written as \(h\left({f}_{x}\right)=-\frac{\left\{-{f}_{x}\varphi \left({f}_{x}\right)\varPhi \left({f}_{x}\right)-{\varphi }^{2}\left({f}_{x}\right)\right\}}{{\left\{\varPhi \left({f}_{x}\right)\right\}}^{2}}.\) Therefore,

\[H\left({f}_{x}\right)=E\left[\left(1-y\right)\frac{\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right){G}_{0}\left({f}_{x}\right)}{{\left\{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\right\}}^{2}}+y\frac{\varphi \left({f}_{x}\right){G}_{1}\left({f}_{x}\right)}{{\left\{\varPhi \left({f}_{x}\right)\right\}}^{2}}\,\Big|\,{\varvec{x}}\right], \tag{9}\]

where \({G}_{0}\left({f}_{x}\right)=-{f}_{x}+{f}_{x}\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)+\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right),\) \({G}_{1}\left({f}_{x}\right)={f}_{x}\varPhi \left({f}_{x}\right)+\varphi \left({f}_{x}\right)\), and \({G}_{0}\left({f}_{x}\right)=-{f}_{x}+\varPhi \left({f}_{z}\right){G}_{1}\left({f}_{x}\right)\).
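The closed-form expressions for \(h\left({f}_{x}\right)\) can be checked numerically against a second-order finite difference of the per-observation negative log-likelihood. This is a sanity check under the assumption that \(\text{Pr}(y=1)=\varPhi ({f}_{x})\varPhi ({f}_{z})\); the function names, the evaluation points, and the step size are hypothetical choices.

```python
import math

def pdf(t):
    """Standard normal density."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def cdf(t):
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-t / math.sqrt(2.0))

def neg_loglik(y, fx, fz):
    """Per-observation negative log-likelihood of the probit two-regime model."""
    p_one = cdf(fx) * cdf(fz)
    return -math.log(p_one) if y == 1 else -math.log(1.0 - p_one)

def h_fx(y, fx, fz):
    """Closed-form second derivative with respect to fx, written with G_0 and G_1."""
    g1 = fx * cdf(fx) + pdf(fx)
    if y == 1:
        return pdf(fx) * g1 / cdf(fx) ** 2
    g0 = -fx + cdf(fz) * g1
    return pdf(fx) * cdf(fz) * g0 / (1.0 - cdf(fx) * cdf(fz)) ** 2

def second_difference(y, fx, fz, step=1e-4):
    """Central finite-difference approximation of the second derivative in fx."""
    return (neg_loglik(y, fx + step, fz) - 2.0 * neg_loglik(y, fx, fz)
            + neg_loglik(y, fx - step, fz)) / step ** 2

# Each tuple holds (closed form, finite difference) at one evaluation point.
checks = [(h_fx(y, fx, fz), second_difference(y, fx, fz))
          for y in (0, 1) for fx in (-1.0, 0.5) for fz in (-0.5, 1.0)]
max_gap = max(abs(a - b) for a, b in checks)
```

A small `max_gap` indicates that the factored form with \({G}_{0}\) and \({G}_{1}\) agrees with direct differentiation at the chosen points.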

Descent methods require convexity of the loss function to guarantee optimality. The convexity can be proven by showing that the Hessian of \(E\left[-l\left(f\right)|{\varvec{x}}\right]\) is positive definite. However, the Hessian in Eq. (9) is indefinite: when \(y=0\), \(h\left({f}_{x}\right)>0\) if \({f}_{x}\le 0\), but \(h\left({f}_{x}\right)\) can be negative if \({f}_{x}>0\) (for instance, when \(\varPhi \left({f}_{z}\right)\) is close to zero), whereas \(h\left({f}_{x}\right)>0\) for all \({f}_{x}\) when \(y=1\), because \({G}_{1}\left({f}_{x}\right)>0\). This means that our algorithm can converge to saddle points (Dauphin et al., 2014). Thus, we use the absolute value of the Hessian to force the matrix to be positive definite. Notably, for non-convex functions in the Newton–Raphson method, the eigenvalues of the Hessian can be replaced with their absolute values (Paternain et al., 2019; Wright & Nocedal, 2006). In our setting, since the Hessian is a \(1\times 1\) matrix and its eigenvalue is the value of the Hessian itself, the modification is achieved by taking the absolute value, represented as \(|h\left({f}_{x}\right)|\).

Based on the gradient and the modified Hessian, the Newton–Raphson method is applied to minimize the expected negative log-likelihood as follows:

where \({E}_{w}(\cdot |{\varvec{x}})\) indicates the weighted conditional expectation such that \({E}_{w}\left(g\left({\varvec{x}},y\right)|{\varvec{x}}\right)=\frac{\text{E}\left[\text{w}\left({\varvec{x}}, y\right)\text{g}\left({\varvec{x}},y\right)|{\varvec{x}}\right]}{\text{E}\left[\text{w}\left({\varvec{x}},y\right)|{\varvec{x}}\right]},\boldsymbol{ }\text{with w}\left({\varvec{x}}, y\right)>0\).

Furthermore, the weights are expected to increase for misclassification but decrease for correct classification. Thus, we provide the property of the weight function, \(|h\left({f}_{x}\right)|\):

\[W\left({f}_{x}\right)=\left|h\left({f}_{x}\right)\right|. \tag{10}\]

When \(y=0,\) Eq. (10) becomes

\[W\left({f}_{x}\right)=\left|\frac{\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right){G}_{0}\left({f}_{x}\right)}{{\left\{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\right\}}^{2}}\right|.\]

The weight function with \(y=0\) upweights the misclassified units and downweights the units that are correctly classified. We summarize these properties in the following propositions:

Proposition 1

(Correct classification) We have \(\underset{{f}_{x}\to -\infty }{\text{lim}}W\left({f}_{x}\right)=0\).

Proposition 2

(Correct classification) Given \({f}_{z}\ll -N\), where \(N\) is an arbitrarily large positive number, \(\underset{{f}_{x}\to \infty }{\text{lim}}W\left({f}_{x}\right)=0\).

Proposition 1 holds because \(\varphi \left({f}_{x}\right)\to 0\) and \(\varPhi \left({f}_{x}\right)\to 0\), and Proposition 2 holds because \(\varphi \left({f}_{x}\right)\to 0\) and \(\varPhi \left({f}_{x}\right)\to 1\), but \(\varPhi \left({f}_{z}\right)\approx 0\).

Proposition 3

(Misclassification) Given \({f}_{z}\gg N\), where \(N\) is an arbitrarily large positive number, \(\underset{{f}_{x}\to \infty }{\text{lim}}W\left({f}_{x}\right)=1\).

Proof

Given \({f}_{z}\gg N\), \(\underset{{f}_{x}\to \infty }{\text{lim}}W\left({f}_{x}\right)=\underset{{f}_{x}\to \infty }{\text{lim}}\left|\frac{-{f}_{x}\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)+{f}_{x}\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)+{\varphi }^{2}\left({f}_{x}\right){\varPhi }^{2}\left({f}_{z}\right)}{{\left\{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\right\}}^{2}}\right|=\frac{0}{0}\), which is an indeterminate form, since \(\varPhi \left({f}_{x}\right)\varPhi ({f}_{z}) \to 1\) and \(\varphi \left({f}_{x}\right)\to 0\) as \({f}_{x}\to \infty \). Thus, following Zheng and Liu (2012), we apply L’Hôpital’s rule repeatedly:

where the second, sixth, ninth, and tenth equations hold by L’Hôpital’s rule. □

Propositions 1 and 2 describe the weight function with \(y=0\) in the SE iterations when a unit is correctly classified (i.e., the predicted outcome is 0). As \({f}_{x}\) decreases, it is more likely that the unit is identified as an inflated case, resulting in the correct classification. In such a case, the weight function downweights the unit. When \({f}_{x}\) increases, the algorithm will correctly classify the unit only if \({f}_{z}\) is sufficiently small (i.e., \(\varPhi \left({f}_{z}\right)\approx 0)\). This implies that when \({f}_{z}\) is smaller than an arbitrarily large negative number, \(-N\), the weight for the unit decreases as \({f}_{x}\) increases. On the other hand, Proposition 3 describes the weight function with \(y=0\) in the case of misclassification. When \({f}_{x}\) increases and \({f}_{z}\) is sufficiently large (i.e., \(\varPhi \left({f}_{z}\right)\approx 1)\), it is more likely that the unit is misclassified as an outcome of 1, and hence, its weight is increased.
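The three limits for \(y=0\) can be illustrated numerically. The sketch below evaluates the weight \(W\left({f}_{x}\right)=|h\left({f}_{x}\right)|\) at a few large arguments. It is a numerical illustration rather than a proof; the evaluation points (\(\pm 10\) and \(20\)) stand in for the limits, the function name `weight_y0` is hypothetical, and \(1-\varPhi ({f}_{x})\varPhi ({f}_{z})\) is rewritten as a sum of tail probabilities purely to avoid floating-point cancellation.

```python
import math

def pdf(t):
    """Standard normal density."""
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def upper_tail(t):
    """1 - Phi(t), computed with erfc so that small tails stay accurate."""
    return 0.5 * math.erfc(t / math.sqrt(2.0))

def cdf(t):
    """Standard normal cumulative distribution function."""
    return upper_tail(-t)

def weight_y0(fx, fz):
    """|h(f_x)| for y = 0 in the probit splitting equation."""
    # 1 - Phi(fx) * Phi(fz) = [1 - Phi(fx)] + Phi(fx) * [1 - Phi(fz)]
    p_zero = upper_tail(fx) + cdf(fx) * upper_tail(fz)
    # G_0 = -fx + fx * Phi(fx) * Phi(fz) + pdf(fx) * Phi(fz), regrouped around p_zero
    g0 = cdf(fz) * pdf(fx) - fx * p_zero
    return abs(pdf(fx) * cdf(fz) * g0) / p_zero ** 2

w_prop1 = weight_y0(-10.0, 0.0)    # f_x very negative: correct classification
w_prop2 = weight_y0(10.0, -10.0)   # f_x large, f_z very negative: correct classification
w_prop3 = weight_y0(10.0, 20.0)    # f_x and f_z large: misclassification
```

The first two weights are numerically indistinguishable from zero, while the third is already close to one at these finite values, in line with Propositions 1 to 3.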

When \(y=1,\) Eq. (10) becomes

\[W\left({f}_{x}\right)=\left|\frac{\varphi \left({f}_{x}\right){G}_{1}\left({f}_{x}\right)}{{\left\{\varPhi \left({f}_{x}\right)\right\}}^{2}}\right|.\]

The weight function with \(y=1\) increases the weights for the misclassified units and decreases the weights for the units that are correctly classified. We summarize these properties in the following propositions:

Proposition 4

(Correct classification) We have \(\underset{{f}_{x}\to \infty }{\text{lim}}W\left({f}_{x}\right)=0.\)

The proof of Proposition 4 is trivial because \(\varphi \left({f}_{x}\right)\to 0\).

Proposition 5

(Misclassification) We have \(\underset{{f}_{x}\to -\infty }{\text{lim}}W\left({f}_{x}\right)=1\).

Proof

The proof of Proposition 5 is similar to that of Proposition 3. For more details, please see Appendix B.

Proposition 4 provides the weight function with \(y=1\) for correct classification. An increase in \({f}_{x}\) indicates a higher likelihood of passing the first hurdle (regime 0), and hence, the weight for the unit is decreased. Proposition 5 shows the weight function with \(y=1\) in the case of misclassification. When \({f}_{x}\) decreases, it is more likely that the unit, whose actual outcome is 1, is mistakenly identified as an inflated case. In such a case, our algorithm will increase its weight in the next iteration.

4.1.2 Outcome equation iterations

Next, the algorithm updates \({f}_{2}\left({\varvec{z}}\right)\) based on the previously updated \({f}_{1}\left({\varvec{x}}\right)\). The gradient of the objective function is defined as

We provide details on the derivation of the gradient \(D\left({f}_{z}\right)\) in Appendix A.

Furthermore, the Hessian is defined as

When \(y=0, h\left({f}_{z}\right)\) in Eq. (11) becomes \(h\left({f}_{z}\right)=-\frac{\left\{{f}_{z}\varphi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\left[1-\varPhi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\right]-{\varphi }^{2}\left({f}_{z}\right){\varPhi }^{2}\left({f}_{x}\right)\right\}}{{\left\{1-\varPhi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\right\}}^{2}}\), and when \(y=1,\) it becomes \(h\left({f}_{z}\right)=-\frac{\left\{-{f}_{z}\varphi \left({f}_{z}\right)\varPhi \left({f}_{z}\right)-{\varphi }^{2}\left({f}_{z}\right)\right\}}{{\left\{\varPhi \left({f}_{z}\right)\right\}}^{2}}\). Therefore, the Hessian can be rewritten as

where \({G}_{0}\left({f}_{z}\right)=-{f}_{z}+{f}_{z}\varPhi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)+\varphi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\) and \({G}_{1}\left({f}_{z}\right)={f}_{z}\varPhi \left({f}_{z}\right)+\varphi \left({f}_{z}\right)\), so that, equivalently, \({G}_{0}\left({f}_{z}\right)=-{f}_{z}+\varPhi \left({f}_{x}\right){G}_{1}\left({f}_{z}\right)\).

As in Sect. 4.1.1, the Hessian in Eq. (12) is not positive definite, which means that our objective function, \(E\left[-l\left(f\right)|{\varvec{z}}\right]\), is not convex. Thus, we use a modified Hessian, obtained by taking the absolute value of the Hessian.

Based on the gradient and the modified Hessian, the Newton–Raphson method is applied to minimize the negative log-likelihood with respect to \({f}_{2}({\varvec{z}})\) as follows:

where \({E}_{w}(\cdot |{\varvec{z}})\) indicates the weighted conditional expectation such that \({E}_{w}\left(g\left({\varvec{z}}, y\right)|{\varvec{z}}\right)=\frac{\text{E}\left[\text{w}\left({\varvec{z}}, y\right)\text{g}\left({\varvec{z}},y\right)|{\varvec{z}}\right]}{\text{E}\left[\text{w}\left({\varvec{z}},y\right)|{\varvec{z}}\right]},\) with \(\text{w}\left({\varvec{z}}, y\right)>0\).

Furthermore, we provide the properties of the weight function, \(|h\left({f}_{z}\right)|\), which is defined as

The weight function with \(y=0\) can be rewritten as

and has the properties of increasing the weights for the misclassified units and decreasing the weights for the correctly classified units as follows:

Proposition 6

(Correct classification) We have \(\underset{{f}_{z}\to -\infty }{\text{lim}}W\left({f}_{z}\right)=0\).

Proposition 7

(Correct classification) Given \({f}_{x}\ll -N,\) where \(N\) is an arbitrarily large positive number, \(\underset{{f}_{z}\to \infty }{\text{lim}}W\left({f}_{z}\right)=0\).

Proposition 6 holds because \(\varphi \left({f}_{z}\right)\to 0\) and \(\varPhi \left({f}_{z}\right)\to 0\), and Proposition 7 holds because \(\varphi \left({f}_{z}\right)\to 0\) and \(\varPhi \left({f}_{z}\right)\to 1\), but \(\varPhi \left({f}_{x}\right)\approx 0\).

Proposition 8

(Misclassification) Given \({f}_{x}\gg N,\) where \(N\) is an arbitrarily large positive number, \(\underset{{f}_{z}\to \infty }{\text{lim}}W\left({f}_{z}\right)=1\).

Proof

The proof of Proposition 8 is similar to that of Proposition 3. For more details, please see Appendix B.

Propositions 6 and 7 provide the weight functions with \(y=0\) in the OE iterations in the case of correct classification. As \({f}_{z}\) decreases, our algorithm predicts an outcome of 0 for the unit, implying that the observed outcome and the predicted outcome are identical. Thus, its weight is decreased. When \({f}_{z}\) increases, correct classification occurs only if \({f}_{x}\) is sufficiently small (i.e., \(\varPhi \left({f}_{x}\right)\approx 0\)). In other words, for a given \({f}_{x}\) smaller than an arbitrarily large negative number, \(-N\), the weight for the unit is decreased, as the unit is identified as an inflated case. However, with a sufficiently large value of \({f}_{x}\) (i.e., \(\varPhi \left({f}_{x}\right)\approx 1\)), an increase in \({f}_{z}\) implies a higher possibility of being misclassified as an outcome of 1, and the weight for the unit is increased, as shown in Proposition 8.

The weight function with \(y=1\) can be rewritten as

and we provide the properties that upweight the misclassified units and downweight the correctly classified units as follows:

Proposition 9

(Correct classification) \(\underset{{f}_{z}\to \infty }{\text{lim}}W\left({f}_{z}\right)=0\).

It is easy to see that Proposition 9 holds because \(\varphi \left({f}_{z}\right)\to 0\).

Proposition 10

(Misclassification) \(\underset{{f}_{z}\to -\infty }{\text{lim}}W\left({f}_{z}\right)=1\).

Proof

To prove Proposition 10, we apply L’Hôpital’s rule repeatedly since we have an indeterminate form due to the fact that \(\varPhi \left({f}_{z}\right)\to 0\) and \(\varphi \left({f}_{z}\right)\to 0\) as \({f}_{z}\to -\infty \). Please see Appendix B.

Proposition 9 provides the weight function with \(y=1\) in the OE iterations for correct classification. As \({f}_{z}\) increases, it is more likely that the predicted outcome of the unit is 1, leading to a decrease in the corresponding weight. On the other hand, Proposition 10 shows an increase in weights for misclassification. When \({f}_{z}\) decreases, the unit is more likely to be misclassified as an outcome of 0. In such a case, our algorithm will increase its weight.

4.1.3 Pseudo-code for ZIPBoost

We summarize the proposed algorithm ZIPBoost by presenting the pseudo-code in Algorithm 1. The algorithm requires a set of samples \(\left({{\varvec{x}}}_{i},{{\varvec{z}}}_{i},{y}_{i}\right)\) for \(i\in \{1,\dots ,N\}\), where \({{\varvec{x}}}_{i}\) and \({{\varvec{z}}}_{i}\) are the sets of variables for the SE and OE, respectively, and the maximum number of iterations, \(M\). In Step A, we set the initial fitted values of \({f}_{1}^{0}\left({{\varvec{x}}}_{i}\right)\) and \({f}_{2}^{0}\left({{\varvec{z}}}_{i}\right)\) to zero.

Next, in Step B, we run the ZIPBoost iterations to sequentially update the fitted values for the SE (Lines 3–6) and for the OE (Lines 7–10). More precisely, for each iteration of \(m\in \{1,\dots ,M\}\), we first run the SE iterations with the transformed response \({q}_{i,se}^{m}\) in Line 3 and weights for the SE \({w}_{i,se}^{m}\) in Line 4, which are defined as follows:

and

where \({G}_{0}\left({f}_{x}\right)=-{f}_{x}+{f}_{x}\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)+\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\) and \({G}_{1}\left({f}_{x}\right)={f}_{x}\varPhi \left({f}_{x}\right)+\varphi \left({f}_{x}\right)\), so that, equivalently, \({G}_{0}\left({f}_{x}\right)=-{f}_{x}+\varPhi \left({f}_{z}\right){G}_{1}\left({f}_{x}\right)\).

Since the update scheme is based on the Newton–Raphson method, the classifier can be obtained by fitting a weighted least squares regression of the transformed response \({q}_{i,se}^{m}\) on \({{\varvec{x}}}_{i}\) with the weight \({w}_{i,se}^{m}\). Thus, the optimal classifier, \({g}^{m}({\varvec{x}};\beta )\) in Line 5, is

Based on \({g}^{m}({{\varvec{x}}}_{i};\beta )\), we update the probability for the SE, \({f}_{1}^{m}({{\varvec{x}}}_{i})\), in Line 6. Then, we have

Given \({f}_{1}^{m}({{\varvec{x}}}_{i})\), we run the OE iterations to update \({f}_{2}\left({{\varvec{z}}}_{i}\right)\) with the transformed response \({y}_{i,oe}^{m}\) in Line 7 and weights \({w}_{i,oe}^{m}\) in Line 8, which are defined as

and

where \({G}_{0}\left({f}_{z}\right)=-{f}_{z}+{f}_{z}\varPhi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)+\varphi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)\) and \({G}_{1}\left({f}_{z}\right)={f}_{z}\varPhi \left({f}_{z}\right)+\varphi \left({f}_{z}\right)\), so that, equivalently, \({G}_{0}\left({f}_{z}\right)=-{f}_{z}+\varPhi \left({f}_{x}\right){G}_{1}\left({f}_{z}\right)\).

As in the SE iterations, we fit a weighted least squares regression of the transformed response \({y}_{i,oe}^{m}\) on \({{\varvec{z}}}_{i}\) with the weight \({w}_{i,oe}^{m}\). The optimal classifier for the OE, \({g}^{m}({{\varvec{z}}}_{i};\gamma )\) in Line 9, is

We update the probability for the OE, \({f}_{2}^{m}({{\varvec{z}}}_{i})\), in Line 10 as follows:

In Step C, after \(M\) iterations, the probability of belonging to each class can be calculated based on the fitted values of \({f}_{1}^{M}\left({{\varvec{x}}}_{i}\right)\) and \({f}_{2}^{M}\left({{\varvec{z}}}_{i}\right)\). Using these estimated final values, the final probability and the corresponding predicted class for unit \(i\) are produced.
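As a minimal sketch of Step C, the snippet below combines the two fitted values into a class probability. It assumes \(P\left(y=1\right)=\varPhi \left({f}_{1}\right)\varPhi \left({f}_{2}\right)\), which is the form implied by the \(1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)\) terms in the \(y=0\) expressions above. The 0.5 cutoff and the name `predict_zip` are assumptions made for illustration, since the classification threshold is not stated in this section.

```python
from statistics import NormalDist

_STD = NormalDist()


def predict_zip(f1, f2, threshold=0.5):
    """Return (P(y=1), predicted class) from the final fitted values.

    f1 is the SE fitted value f_1^M(x_i), f2 the OE fitted value f_2^M(z_i).
    The threshold of 0.5 is an illustrative assumption.
    """
    p_one = _STD.cdf(f1) * _STD.cdf(f2)   # both regimes must point to an outcome of 1
    return p_one, int(p_one >= threshold)


print(predict_zip(0.0, 0.0))   # initial values from Step A: both CDFs equal 0.5
print(predict_zip(3.0, 3.0))
```

Under the same assumption, a ZILBoost version would replace the normal CDF with the logistic function.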

We note that, assuming \(k\ll N\) (i.e., the sample size is much larger than the number of variables), the overall time complexity of ZIPBoost is \(O({k}^{2}NM)\). The bottleneck is the estimation of \({g}^{m}({{\varvec{x}}}_{i};\beta )\) and \({g}^{m}({{\varvec{z}}}_{i};\gamma )\) in Lines 5 and 9, which requires fitting a weighted least squares regression in each iteration of Step B at a cost of \(O({k}^{2}N)\) steps per fit.

During iterations, the weights might become extremely small, especially in regions where units are perfectly classified, leading to potential computational problems. More precisely, in cases of perfect classification, the weights are close to zero (they are bounded below by zero), so the denominator of the transformed response can be so small that the transformed response is not well defined. To avoid the numerical problems involved in defining the transformed response, we adopt a lower threshold of \(2\times \text{machine-zero}\) on the weights, following the work of Friedman et al. (2000). In addition, the transformed response can take extreme values, resulting in numerical instability. To be specific, the transformed responses for SE and OE in ZIPBoost can be rewritten as \({q}_{i,se}=\frac{1}{\left|{f}_{x}+\frac{\varphi \left({f}_{x}\right)}{\varPhi \left({f}_{x}\right)}\right|}\) and \({y}_{i,oe}=\frac{1}{\left|{f}_{z}+\frac{\varphi \left({f}_{z}\right)}{\varPhi \left({f}_{z}\right)}\right|}\) when \(y=1\), respectively. If \({f}_{x}\) or \({f}_{z}\) is very negative, \({q}_{i,se}\) or \({y}_{i,oe}\) becomes very large. When \(y=0\), we have \({q}_{i,se}=\frac{-1}{\left|-{f}_{x}+\frac{\varphi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)}{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)}\right|}\) and \({y}_{i,oe}=\frac{-1}{\left|-{f}_{z}+\frac{\varphi \left({f}_{z}\right)\varPhi \left({f}_{x}\right)}{1-\varPhi \left({f}_{x}\right)\varPhi \left({f}_{z}\right)}\right|}\). For a unit with large \({f}_{x}\) and \({f}_{z}\) values, the denominators approach zero, so \({q}_{i,se}\) and \({y}_{i,oe}\) take large negative values. Therefore, following Friedman et al. (2000), we restrict the working responses to the interval \(\left[-4, 4\right]\). That is, the transformed responses are truncated below at -4 and above at 4.

4.2 ZILBoost

ZILBoost also proceeds with the iterations for the SE and OE sequentially, using the expected negative log-likelihood, \(E\left[-l\left(f\right)|{\varvec{x}},{\varvec{z}}\right]\), as the objective function, where \(l\left(f\right)\) is defined in Eq. (7). The weight function operates similarly to that of LogitBoost: the observation weights increase for those with \({f}_{1}({\varvec{x}})\) or \({f}_{2}\left({\varvec{z}}\right)\) close to zero, whereas the weights decrease for those with \({f}_{1}({\varvec{x}})\) or \({f}_{2}\left({\varvec{z}}\right)\) far from zero.

4.2.1 Splitting equation iterations

The algorithm starts by updating \({f}_{1}\left({\varvec{x}}\right)\). The gradient of the objective function is defined as

and the Hessian is defined as

The derivation of the gradient \(D\left({f}_{x}\right)\) is provided in Appendix A.

Equation (17) indicates that when \(y=0\), \(h\left({f}_{x}\right)\) can be written as

and when \(y=1\), \(h\left({f}_{x}\right)\) can be written as

Therefore,

where \(L\left({f}_{x}\right)={\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-3}{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\left(\text{exp}\left(-{f}_{x}\right)-1\right)+{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-4}{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-2}\).

Since we cannot guarantee the positive definiteness of the Hessian in Eq. (19), we replace \(h\left({f}_{x}\right)\) with its absolute value in our algorithm. Based on the gradient and the modified Hessian, the Newton–Raphson method is applied to minimize the expected negative log-likelihood as follows:

where \({E}_{w}(\cdot |{\varvec{x}})\) indicates the weighted conditional expectation such that \({E}_{w}\left(g\left({\varvec{x}},y\right)|{\varvec{x}}\right)=\frac{E\left[w\left({\varvec{x}},y\right)g\left({\varvec{x}},y\right)|{\varvec{x}}\right]}{E\left[w\left({\varvec{x}},y\right)|{\varvec{x}}\right]}\), with \(w\left({\varvec{x}},y\right)>0\).

The weight function of ZILBoost, \(|h\left({f}_{x}\right)|\), is similar to that of LogitBoost in that the algorithm upweights the units that are hard to classify (i.e., the fitted values are around zero) and downweights the units that can be classified with high confidence (i.e., the fitted values are not around zero).

From Eq. (18), we can infer that the weight function with \(y=1\) is similar to the probability density function (PDF) of the logistic distribution with a location parameter of 0 and a scale parameter of 1. The PDF of the logistic distribution attains its maximum at the center, 0, and is symmetric around zero. Consequently, the maximum weights will be assigned to units whose fitted values are zero.

Next, we provide the property of the weight function, \(|h\left({f}_{x}\right)|\), when \(y=0\) and \({f}_{z}\gg N\). In this case, whether a unit is misclassified depends on the value of \({f}_{x}\) because \({f}_{z}\) is greater than an arbitrarily large positive number, resulting in \({\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\) being approximately 1. Assuming this, let us rewrite the weight function as follows:

Let \(1+\text{exp}\left(-{f}_{x}\right)=K\). Then, we can rewrite Eq. (20) as

which is similar to the PDF of the logistic distribution with a location parameter of 0 and a scale parameter of 1.

In addition, we provide the property of the weight function with \(y=0\) and \({f}_{z}\ll -N\). In this case, \({f}_{x}\) does not play an important role in the classification because the strongly negative value of \({f}_{z}\) indicates that the predicted class for the unit would be zero. Because \({f}_{z}\) is smaller than an arbitrarily large negative number, \({\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\approx 0\), which reduces the weight function to zero.
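These three cases can be illustrated numerically. The Python sketch below uses \(L\left({f}_{x}\right)\) as defined above and assumes that the \(y=0\) Hessian takes the form \(\text{exp}\left(-{f}_{x}\right)L\left({f}_{x}\right)/{\left\{1-p\right\}}^{2}\) with \(p={\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\). This form is obtained here by differentiating \(-\text{log}\left(1-p\right)\) twice and is not copied from Eq. (19). The function name `zil_weight` is illustrative.

```python
import math


def zil_weight(f_own, f_other, y):
    """|h| for the logit two-regime model, for the equation being updated."""
    e = math.exp(-f_own)
    k = 1.0 + e                                   # K = 1 + exp(-f_own)
    if y == 1:
        return e / k ** 2                         # logistic PDF (location 0, scale 1)
    s_other = 1.0 / (1.0 + math.exp(-f_other))    # (1 + exp(-f_other))^{-1}
    big_l = k ** -3 * s_other * (e - 1.0) + k ** -4 * s_other ** 2   # L(f_own)
    p = s_other / k                               # P(y = 1)
    return abs(e * big_l) / (1.0 - p) ** 2


f = 1.3
print(zil_weight(f, 0.0, 1))     # y = 1: logistic PDF at f
print(zil_weight(f, 40.0, 0))    # y = 0, f_z very large: matches the logistic PDF
print(zil_weight(f, -40.0, 0))   # y = 0, f_z very negative: weight near zero
```

In all three cases the largest weights fall on fitted values near zero, which is the LogitBoost-like behavior described in this subsection.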

4.2.2 Outcome equation iterations

Next, the algorithm updates \({f}_{z}\) given the updated value of \({f}_{x}\). For updating \({f}_{z}\), the gradient of the objective function is defined as

For the derivation of the gradient \(D\left({f}_{z}\right)\), please see Appendix A.

The Hessian is defined as

According to Eq. (21), when \(y=0\), \(h\left({f}_{z}\right)\) can be rewritten as

and when \(y=1\), \(h\left({f}_{z}\right)\) can be rewritten as

Thus, the Hessian of the objective function is as follows:

where \(L\left({f}_{z}\right)={\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-3}{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}\left(\text{exp}\left(-{f}_{z}\right)-1\right)+{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-4}{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-2}\).

As before, we use the modified Hessian, given that the Hessian in Eq. (22) is not positive definite. Based on the gradient and the modified Hessian, we apply the Newton–Raphson method to minimize the expected negative log-likelihood as follows:

where \({E}_{w}(\cdot |{\varvec{z}})\) indicates the weighted conditional expectation such that \({E}_{w}\left(g\left({\varvec{z}},y\right)|{\varvec{z}}\right)=\frac{E\left[w\left({\varvec{z}},y\right)g\left({\varvec{z}},y\right)|{\varvec{z}}\right]}{E\left[w\left({\varvec{z}},y\right)|{\varvec{z}}\right]}\), with \(w\left({\varvec{z}},y\right)>0\).

Since the Hessian serving as the weight function in Eq. (22) is akin to the one in Eq. (19) used during the SE iterations, it is easy to see that the update for \({f}_{z}\) results in increased weights for units with low classification confidence and decreased weights for those with high classification confidence.

4.2.3 Pseudo-code for ZILBoost

In this section, we summarize the ZILBoost algorithm through pseudo-code in Algorithm 2. The pseudo-code of ZILBoost is similar to that of ZIPBoost presented in Sect. 4.1.3 except for the formulas for the transformed response variables, \({q}_{i,se}^{m}\) and \({y}_{i,oe}^{m}\), and the weight functions, \({w}_{i,se}^{m}\) and \({w}_{i,oe}^{m}\). For the SE iterations, the transformed response and the weight function are defined as

and

where \(L\left({f}_{i,x}^{m-1}\right)={\left(1+\text{exp}\left(-{f}_{1}^{m-1}\left({{\varvec{x}}}_{i}\right)\right)\right)}^{-3}{\left(1+\text{exp}\left(-{f}_{2}^{m-1}\left({{\varvec{z}}}_{i}\right)\right)\right)}^{-1}\left(\text{exp}\left(-{f}_{1}^{m-1}\left({{\varvec{x}}}_{i}\right)\right)-1\right)+{\left(1+\text{exp}\left(-{f}_{1}^{m-1}\left({{\varvec{x}}}_{i}\right)\right)\right)}^{-4}{\left(1+\text{exp}\left(-{f}_{2}^{m-1}\left({{\varvec{z}}}_{i}\right)\right)\right)}^{-2}\). Using \({q}_{i,se}^{m}\) and \({w}_{i,se}^{m}\), we obtain the optimal classifier by fitting a weighted least squares regression such that

For the OE iterations, the algorithm uses the following transformed response and the weight function:

and

where \(L\left({f}_{i,z}^{m-1}\right)={\left(1+\text{exp}\left(-{f}_{2}^{m-1}\left({{\varvec{z}}}_{i}\right)\right)\right)}^{-3}{\left(1+\text{exp}\left(-{f}_{1}^{m}\left({{\varvec{x}}}_{i}\right)\right)\right)}^{-1}\left(\text{exp}\left(-{f}_{2}^{m-1}\left({{\varvec{z}}}_{i}\right)\right)-1\right)+{\left(1+\text{exp}\left(-{f}_{2}^{m-1}\left({{\varvec{z}}}_{i}\right)\right)\right)}^{-4}{\left(1+\text{exp}\left(-{f}_{1}^{m}\left({{\varvec{x}}}_{i}\right)\right)\right)}^{-2}\). As in the SE iterations, we fit a weighted least squares regression to obtain the optimal classifier as

After \(M\) iterations, using \({f}_{1}^{M}\left({{\varvec{x}}}_{i}\right)\) and \({f}_{2}^{M}\left({{\varvec{z}}}_{i}\right)\), the probabilities of belonging to each class can be calculated, and the corresponding predicted class for unit \(i\) is determined.

Similar to ZIPBoost, assuming \(k\ll N\) (the sample size is much larger than the number of variables), the overall time complexity of ZILBoost is \(O({k}^{2}NM)\).

Similar to ZIPBoost, we set a lower threshold of \(2\times \text{machine-zero}\) on the weights. Furthermore, the transformed responses for SE and OE in ZILBoost can be defined as \({q}_{i,se}=1+\text{exp}(-{f}_{x})\) and \({y}_{i,oe}=1+\text{exp}(-{f}_{z})\) when \(y=1\). In this case, they become very large when \({f}_{x}\) or \({f}_{z}\) is very negative. For \(y=0\), \({q}_{i,se}=-\left(1+\text{exp}\left(-{f}_{x}\right)\right)\left(1-{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\right)/\left|-1+\text{exp}\left(-{f}_{x}\right)+{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}\right|\) and \({y}_{i,oe}=-\left(1+\text{exp}\left(-{f}_{z}\right)\right)\left(1-{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}\right)/\left|-1+\text{exp}\left(-{f}_{z}\right)+{\left(1+\text{exp}\left(-{f}_{z}\right)\right)}^{-1}{\left(1+\text{exp}\left(-{f}_{x}\right)\right)}^{-1}\right|\), so the transformed responses can take extremely large negative values when \({f}_{x}\) and \({f}_{z}\) are both large. Thus, to maintain numerical stability, we limit the range of the working responses to \(\left[-4, 4\right]\).

4.3 Convergence of proposed methods

ZIPBoost and ZILBoost follow the modified Newton method, \({f}_{x}^{m+1}={f}_{x}^{m}-\frac{D\left({f}_{x}^{m}\right)}{\left|H\left({f}_{x}^{m}\right)\right|}\) for SE and \({f}_{z}^{m+1}={f}_{z}^{m}-\frac{D\left({f}_{z}^{m}\right)}{\left|H\left({f}_{z}^{m}\right)\right|}\) for OE, where \(D\left({f}_{x}^{m}\right)=\frac{\partial E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}}\), \(H\left({f}_{x}^{m}\right)=\frac{\partial }{\partial {f}_{x}}\left[\frac{\partial E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}}\right]\), \(D\left({f}_{z}^{m}\right)=\frac{\partial E[-l(f)|{\varvec{z}}] }{\partial {f}_{z}}\), and \(H\left({f}_{z}^{m}\right)=\frac{\partial }{\partial {f}_{z}}\left[\frac{\partial E[-l(f)|{\varvec{z}}] }{\partial {f}_{z}}\right]\). Here, we show the convergence of the proposed methods to a local minimum. As the iterations for the SE and OE in the proposed methods rely on the same update scheme, we provide only the convergence proof for the SE iteration, \({f}_{x}^{m}\); the proof for the OE iteration is analogous.

Let us define a function \(g\left({f}_{x}^{m}\right)={f}_{x}^{m}-\frac{D\left({f}_{x}^{m}\right)}{\left|H\left({f}_{x}^{m}\right)\right|}\) so that \({f}_{x}^{m+1}=g\left({f}_{x}^{m}\right)\). For the convergence, we use the fact that \(g\left({f}_{x}^{m}\right)\) is a contraction in a neighborhood of a local minimum. We define a contraction in Definition 1.

Definition 1

A function \(g(x)\) is called a contraction in the interval \([a,b]\) if there exists a number \(L\in [0,1)\) such that

\(\left|g\left(x\right)-g\left(y\right)\right|\le L\left|x-y\right|\)

for any \(x,y\in [a,b]\).

However, in our setting, it is challenging to apply Definition 1 directly to prove that \(g\left({f}_{x}^{m}\right)\) is a contraction. Thus, we rely on Theorem 1, which gives a sufficient condition for the contraction property in Definition 1 (for more details, see Babajee & Dauhoo, 2006).

Theorem 1

(Babajee & Dauhoo, 2006). If \(g\) is differentiable and a number \(L\in [0,1)\) exists such that \(\left|{g}^{\prime}\left(x\right)\right|\le L\) for all \(x\in [a,b]\), then \(g\) is a contraction on \([a,b]\).

Proof

Let \(x,y\in [a,b]\) and assume \(x<y\). According to the mean value theorem, we have \(\frac{g\left(x\right)-g\left(y\right)}{x-y}={g}^{\prime}(c)\) for some \(c\in (x,y)\). If \(\left|{g}^{\prime}\left(x\right)\right|\le L\) for all \(x\in [a,b]\), then \(\left|{g}^{\prime}\left(c\right)\right|\le L\).

Therefore, we have \(\left|\frac{g\left(x\right)-g\left(y\right)}{x-y}\right|\le L\), and equivalently, \(\left|g\left(x\right)-g\left(y\right)\right|\le L\left|x-y\right|\), which is the definition of the contraction. □

Based on Theorem 1, we now show that the iteration \(g({f}_{x}^{m})\) converges to a local minimum by following Süli and Mayers (2003, Theorem 1.5).

Theorem 2

Let \({f}_{x}^{*}\) be the actual solution of \(D\left({f}_{x}^{*}\right)=0\), and assume \(\left|{f}_{x}^{0}-{f}_{x}^{*}\right|<\delta \), where \({f}_{x}^{0}\) is an initial guess and \(\delta \) is an arbitrary positive number. If \(g\left({f}_{x}^{m}\right)\) is a contraction on \(\left({f}_{x}^{*}-\delta , {f}_{x}^{*}+\delta \right)\), the iteration converges to \({f}_{x}^{*}\).

Proof

Let us assume there exists a solution \({f}_{x}^{*}\) satisfying the following three conditions: (i) \({\left.\frac{\partial E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}}\right|}_{{f}_{x}={f}_{x}^{*}} =D\left({f}_{x}^{*} \right)=0\), (ii) \({\left.\frac{{\partial }^{2}E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}^{2}}\right|}_{{f}_{x}={f}_{x}^{*}}=H\left({f}_{x}^{*}\right)>0\), and (iii) \(\frac{{\partial }^{3}E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}^{3}}\) is bounded near \({f}_{x}^{*}\). We also assume that an initial guess \({f}_{x}^{0}\) is sufficiently close to the solution \({f}_{x}^{*}\), satisfying \(\left|{f}_{x}^{0}-{f}_{x}^{*}\right|<\delta \). We note that the standard Newton method also requires this assumption for convergence (Casella & Bachmann, 2021).

First, we show that \(g({f}_{x}^{m})\) is a contraction using Theorem 1. We know that \(g({f}_{x}^{m})\) is differentiable since the third-order derivatives can be defined (see Footnote 1). It remains to show that there exists a number \(L\in [0,1)\) such that \(\left|{g}^{\prime}\left({f}_{x}^{m}\right)\right|\le L\) for all \({f}_{x}\in [{f}_{x}^{*}-\delta , {f}_{x}^{*}+\delta ]\). With \({g}^{\prime}\left({f}_{x}^{m}\right)=1-\frac{H\left({f}_{x}^{m}\right)}{\left|H\left({f}_{x}^{m}\right)\right|}+\frac{D\left({f}_{x}^{m} \right)\frac{H\left({f}_{x}^{m}\right)}{\left|H\left({f}_{x}^{m}\right)\right|}{\left. \frac{{\partial }^{3}E[-l(f)|{\varvec{x}}] }{\partial {f}_{x}^{3}}\right|}_{{f}_{x}={f}_{x}^{m}}}{{\left(\left|H\left({f}_{x}^{m}\right)\right|\right)}^{2}}\), we can show \(\underset{{f}_{x}\to {f}_{x}^{*}}{\text{lim}}{g}^{\prime}\left({f}_{x}^{m}\right)=0\): because \(D\left({f}_{x}^{*} \right)=0\) and \(H\left({f}_{x}^{*}\right)>0\), the ratio \(\frac{H\left({f}_{x}^{m}\right)}{\left|H\left({f}_{x}^{m}\right)\right|}\) converges to 1, so the first two terms cancel, and the last term converges to 0. This result implies that \({g}^{\prime}\left({f}_{x}^{m}\right)\) is near zero for \({f}_{x}\) around \({f}_{x}^{*}\), and there exists an interval \([{f}_{x}^{*}-\delta , {f}_{x}^{*}+\delta ]\) where \(\left|{g}^{\prime}\left({f}_{x}^{m}\right)\right|<L<1\) for all \({f}_{x}\in [{f}_{x}^{*}-\delta , {f}_{x}^{*}+\delta ]\). This means that the assumptions of Theorem 1 hold, and hence, we conclude that \(g({f}_{x}^{m})\) is a contraction on \([{f}_{x}^{*}-\delta , {f}_{x}^{*}+\delta ]\).

Next, we show that the iteration \(g({f}_{x}^{m})\) converges to \({f}_{x}^{*}\). For \({f}_{x}^{*}\), we have \(g\left({f}_{x}^{*}\right)={f}_{x}^{*}-\frac{D\left({f}_{x}^{*}\right)}{\left|H\left({f}_{x}^{*}\right)\right|}={f}_{x}^{*}\) since \(D\left({f}_{x}^{*}\right)=0\) and \(H\left({f}_{x}^{*}\right)>0\). Thus,

Since \(L<1\), we have \(\underset{m\to \infty }{\text{lim}}\left|{f}_{x}^{m}-{f}_{x}^{*}\right|=0\), implying that \({f}_{x}^{m}\to {f}_{x}^{*}\). Therefore, the iteration by the modified Newton method leads to convergence to \({f}_{x}^{*}\). □
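The convergence argument can be illustrated on a one-dimensional example. The Python sketch below applies the modified Newton map \(g\left({f}_{x}\right)={f}_{x}-D\left({f}_{x}\right)/\left|H\left({f}_{x}\right)\right|\) to the expected negative log-likelihood of the probit two-regime model at a single point \({\varvec{x}}\). The true probability `pi_one` \(=P\left(y=1|{\varvec{x}}\right)\) and the value `cdf_z` \(=\varPhi \left({f}_{z}\right)\) are held fixed. Both values, the starting point, and the function names are assumptions chosen for illustration. In this setup the stationary point satisfies \(\varPhi \left({f}_{x}^{*}\right)\varPhi \left({f}_{z}\right)=P\left(y=1|{\varvec{x}}\right)\).

```python
from statistics import NormalDist

_STD = NormalDist()


def gradient_and_hessian(f_x, pi_one, cdf_z):
    """D and H of E[-l(f)|x] with respect to f_x, for fixed Phi(f_z) = cdf_z."""
    pdf, cdf = _STD.pdf(f_x), _STD.cdf(f_x)
    s1 = pdf / cdf                               # -D contribution when y = 1
    s0 = -pdf * cdf_z / (1.0 - cdf * cdf_z)      # -D contribution when y = 0
    d = -(pi_one * s1 + (1.0 - pi_one) * s0)
    h = pi_one * s1 * (f_x + s1) + (1.0 - pi_one) * s0 * (f_x + s0)
    return d, h


def modified_newton(f0, pi_one, cdf_z, n_iter=20):
    """Iterate f <- f - D(f) / |H(f)| and return the whole path."""
    path = [f0]
    for _ in range(n_iter):
        d, h = gradient_and_hessian(path[-1], pi_one, cdf_z)
        path.append(path[-1] - d / abs(h))
    return path


pi_one, cdf_z = 0.2, 0.8
f_star = _STD.inv_cdf(pi_one / cdf_z)            # solves Phi(f*) * cdf_z = pi_one
path = modified_newton(0.0, pi_one, cdf_z)
for m, f in enumerate(path[:6]):
    print(m, f, abs(f - f_star))                 # distance to f* at each iteration
```

Starting from \({f}_{x}^{0}=0\), the printed distances to \({f}_{x}^{*}\) fall quickly, which is the local behavior that Theorem 2 describes. The sketch is not a substitute for the proof and says nothing about starting points far from \({f}_{x}^{*}\).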

5 Computational experiment

In this section, we show that our proposed methods outperform benchmark methods, such as AdaBoost, LogitBoost, ProbitBoost, AdaC2, SMOTEBoost, and GANs, using a Monte Carlo simulation, a real data application for predicting M&A outcomes, and imbalanced datasets from the Keel repository. We implemented all algorithms in R (version 4.2.2) on a macOS system with an M1 chip and 16 GB of RAM.

5.1 Monte Carlo simulation

We simulate hypothetical data with the zero-inflated case to demonstrate the performance of ZIPBoost and ZILBoost. We include AdaBoost (Freund & Schapire, 1996), LogitBoost (Friedman et al., 2000), ProbitBoost (Zheng & Liu, 2012), AdaC2 (Sun et al., 2007), SMOTEBoost (Chawla et al., 2003), and GANs (Goodfellow et al., 2014) as benchmark models. AdaBoost, LogitBoost, and ProbitBoost update classifiers based on prediction error without accounting for class imbalance. AdaC2 is a variant of AdaBoost with unequal misclassification costs for majority and minority classes. In addition to AdaC2, several other cost-sensitive learning algorithms based on AdaBoost have been proposed, such as AdaCost, AdaC1, and AdaC3. However, this simulation study considers only AdaC2 as one of the benchmark models since previous research shows that AdaC2 outperforms other modifications of AdaBoost (e.g., Sun et al., 2007; Yin et al., 2013). Since AdaC2 embeds unequal misclassification costs for each class in a cost matrix, we select costs that maximize the F-score (see Footnote 2) in the training data following Sun et al. (2007). Meanwhile, SMOTEBoost, a combination of SMOTE (an oversampling method) and boosting, requires the oversampling rate, which is the ratio of the number of synthetic minority examples to that of the original minority examples (Gao et al., 2014), and the number of nearest neighbors. Based on prior studies (e.g., Wei et al., 2021), we set the oversampling rate to the rounded-down value of the imbalance ratio (IR), that is, the ratio of the number of the majority examples to that of the minority examples. Along with the oversampling rate, the synthetic examples were generated based on the five nearest neighbors. To train weak learners with the synthetic examples, we experimented with various learning algorithms, including classification and regression tree (CART), C5.0 decision tree (DT), random forest (RF), naïve Bayes (NB), and SVM.
We also considered combinations of GANs and learning algorithms as benchmarks. Specifically, we trained the GANs to generate synthetic examples and, thus, balance distributions across the two classes. We applied learning algorithms, including the generalized boost method (GBM), logit, DT, RF, and SVM, for classification after adding the synthetic examples to the dataset.

We generated the dataset 1,000 times following the data-generating process (DGP):

For \(i\in \{1,\dots , 1000\}\),

We examined the performance under various proportions of the minority class in the data, namely 5%, 10%, 20%, 30%, and 40%, by adjusting the parameter values. Specifically, we set \({\beta }_{1}\), \({\beta }_{2},{\beta }_{3}, {\gamma }_{1},{\gamma }_{2},\) and \({\gamma }_{3}\) to 0.5, –3.5, –1.5, –2, 1.5, and 0.5, respectively, across all cases. In addition, we adjusted the values of \({\beta }_{0}\) and \({\gamma }_{0}\) to change the proportions of the minority class: \(\left({\beta }_{0},{\gamma }_{0}\right)=(-5,-5)\) for the 5% minority examples, \(\left({\beta }_{0},{\gamma }_{0}\right)=(-3,-2.5)\) for the 10% minority examples, \(\left({\beta }_{0},{\gamma }_{0}\right)=(-1.5, 0.1)\) for the 20% minority examples, \(\left({\beta }_{0},{\gamma }_{0}\right)=(0, 1.5)\) for the 30% minority examples, and \(\left({\beta }_{0},{\gamma }_{0}\right)=(2, 2.5)\) for the 40% minority examples. In addition, \({x}_{i1},{x}_{i2}\), \({x}_{i3}, {z}_{i1},{z}_{i2},\) and \({z}_{i3}\) are iid with \(N\left(0,2\right)\), and \({u}_{i}\) and \({\varepsilon }_{i}\) are iid with \(N\left(0,1\right).\) The observed outcome \({y}_{i}\) is determined as

We considered a zero outcome as the majority class. To assess the predictive performance of our proposed methods against other benchmark methods, we used the first 500 observations as the training set and the remaining 500 observations as the test set. The predictive performance was measured by F-scores and Matthews correlation coefficient (MCC) in the training and test data. The F-score is widely considered an appropriate measure for handling imbalance problems since it does not rely on the true negative rate (Waegeman et al., 2014). While prioritizing accurate predictions for the minority class, we aimed to maintain precision in the majority class. Therefore, we used the F-scores measured in both the minority class and the majority class. For instance, the F-score measured in the minority class indicates that the minority class is considered the positive class so that the proportion of correctly classified majority examples does not affect the F-score. To examine the average performance over all classes, we considered a macro F-score that averages the F-scores measured in each class. All the F-scores range from 0 to 1, with a higher value indicating better accuracy.

In addition to the F-scores, we evaluated the classification performance using the MCC. Since the MCC is calculated based on all the information in the confusion matrix, it has been regarded as a summary of a model’s predictive performance and is thus widely used in the presence of class imbalance (Boughorbel et al., 2017). The MCC takes values from –1 to 1: a value of 1 indicates that all examples are classified correctly, while a value below zero indicates that the classifier performs worse than a random classifier.
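For reference, the MCC can be written in terms of the four confusion-matrix counts. The sketch below uses the standard formula; the function name `mcc` and the convention of returning 0 when the denominator vanishes are assumptions made for illustration.

```python
import math


def mcc(y_true, y_pred, positive=1):
    """Matthews correlation coefficient from the binary confusion matrix."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Assumed convention: report 0 when any marginal count is zero.
    return (tp * tn - fp * fn) / denom if denom else 0.0


y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]

mcc_example = mcc(y_true, y_pred)                      # 0.375
mcc_perfect = mcc(y_true, y_true)                      # 1.0
mcc_inverted = mcc(y_true, [1 - t for t in y_true])    # -1.0
```

Unlike the F-score, all four cells (including true negatives) enter the calculation, so the value is unchanged if the labels of the two classes are swapped.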

Regarding predictors, our proposed approaches considered \({x}_{1},{x}_{2}\), and \({x}_{3}\) for the SE iterations and \({z}_{1},{z}_{2},\) and \({z}_{3}\) for the OE iterations, assuming that \({x}_{1},{x}_{2}\), and \({x}_{3}\) are predictors that may generate the inflated zeros in regime 0, and \({z}_{1},{z}_{2},\) and \({z}_{3}\) predict the minority class in regime 1. Other benchmark models utilized all predictors, \({x}_{1},{x}_{2}\), \({x}_{3}, {z}_{1},{z}_{2},\) and \({z}_{3}\), to construct the final classifiers.
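The simulation design can be sketched in a few lines of Python. This is an illustrative reconstruction under stated assumptions, not the authors' code: (i) \(\beta\) is paired with the \(x\) predictors in the SE and \(\gamma\) with the \(z\) predictors in the OE; (ii) \(N(0,2)\) is read as a normal distribution with standard deviation 2; and (iii) the outcome equals one only when both latent variables are positive, in line with the two-regime description. The function name `simulate` and the seed are hypothetical.

```python
import random

BETA = (0.5, -3.5, -1.5)    # slopes on x1..x3 (SE); pairing is an assumption
GAMMA = (-2.0, 1.5, 0.5)    # slopes on z1..z3 (OE); pairing is an assumption


def simulate(beta0, gamma0, n=1000, sd=2.0, seed=0):
    """Draw n observations (x, z, y) from the assumed two-regime process."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = [rng.gauss(0.0, sd) for _ in range(3)]
        z = [rng.gauss(0.0, sd) for _ in range(3)]
        # Latent split equation (regime selection) with N(0, 1) error.
        q_star = beta0 + sum(b * v for b, v in zip(BETA, x)) + rng.gauss(0.0, 1.0)
        # Latent outcome equation with N(0, 1) error.
        y_star = gamma0 + sum(g * v for g, v in zip(GAMMA, z)) + rng.gauss(0.0, 1.0)
        # Assumed observation rule: y = 1 only if both latent variables are positive.
        y = 1 if (q_star > 0 and y_star > 0) else 0
        rows.append((x, z, y))
    return rows


# Intercepts reported for the 30% minority setting.
data = simulate(beta0=0.0, gamma0=1.5)
train, test = data[:500], data[500:]   # first 500 for training, rest for testing
minority_share = sum(y for _, _, y in data) / len(data)
```

Under these assumptions, the minority share for the \((0, 1.5)\) setting comes out at roughly 30% in large samples, which is in line with the stated target; other readings of the design may give different proportions.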

We first illustrate the performance of ZIPBoost and ZILBoost over several iterations in Fig. 2. To this end, we provide the F-scores measured on each class and the macro F-score at each number of iterations. Notably, ZIPBoost and ZILBoost converged rapidly, which we attribute to the sequential application of the Newton–Raphson method to update the probability functions of the SE and OE.

We note that early stopping conditions can be added to the proposed algorithms. The proposed methods converge rapidly, which is an advantage of the Newton method (Rohde & Wand, 2016), so a large number of iterations may be unnecessary. In practice, an early stopping condition can be integrated in several ways. First, iterations can stop when all gradients for the SE and OE reach zero, because a zero gradient indicates that no further improvement is possible (London et al., 2023). Second, early stopping can rely on a validation set (Drucker, 2002). Because algorithms generally aim for optimal performance on unseen data, we can hold out a subsample of the training data as a validation set and stop at the iteration where predictive performance on the validation set reaches its maximum or the error rate reaches its minimum.
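The two stopping rules can be sketched as follows. This is a generic illustration rather than part of the proposed algorithms: the helper names `gradients_vanished` and `best_iteration`, the numerical tolerance `tol`, and the `patience` window are all assumptions introduced here, since in floating-point arithmetic gradients rarely reach exactly zero and validation scores can fluctuate.

```python
def gradients_vanished(grad_se, grad_oe, tol=1e-8):
    """Rule 1: stop when every SE and OE gradient is (numerically) zero."""
    return all(abs(g) <= tol for g in list(grad_se) + list(grad_oe))


def best_iteration(val_scores, patience=5):
    """Rule 2: return the 1-based iteration with the best validation score.

    Scanning stops once the score has not improved for `patience` iterations.
    """
    best_score = float("-inf")
    best_iter = 0
    for m, score in enumerate(val_scores, start=1):
        if score > best_score:
            best_score, best_iter = score, m
        elif m - best_iter >= patience:
            break
    return best_iter


# Hypothetical macro F-scores on a validation set, one per boosting iteration.
val_scores = [0.50, 0.62, 0.70, 0.71, 0.71, 0.70, 0.69, 0.69, 0.68, 0.90]
stop_at = best_iteration(val_scores, patience=3)   # 4
```

A small `patience` saves iterations but can miss a late improvement (the final score in the toy sequence is never examined), so the window is a trade-off between training time and the risk of stopping too early.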

The results of the simulation using the test data are presented in Table 1. To save space, we report the performance of the proposed methods on the training data and the training time in Appendix C1. The number of iterations for each boosting method was set to 100. Across all datasets, our algorithms returned the final classifier within 1 s, similar to AdaBoost, LogitBoost, and ProbitBoost, regardless of the proportion of the minority class. For AdaC2, we did not find large differences in training time across the proportions of the minority class; it required about 7–8 s to generate the final classifier, including the initial cost matrix search. The training time of SMOTEBoost varied with the learning algorithm and the proportion of minority examples. When synthetic examples were generated from the 10% minority examples and CART was used to construct the classifier from the augmented data, the final classifier was provided within 2 s. However, the training time of SMOTEBoost-SVM exceeded 14 s when the minority class comprised 30% of the dataset. GANs required the longest training time, ranging from about 6 to 29 s in most cases, except when synthetic examples were generated from the 20% minority examples.

In terms of predictive performance, we found that the prediction accuracy for the minority class from most of the models improved with an increase in the proportion of the minority class. AdaBoost, SMOTEBoost-C5.0, and SMOTEBoost-RF achieved perfect classification on the training data, regardless of the proportion of the minority class. AdaC2 resulted in perfect classification when the proportion of the minority class was less than or equal to 20%. SMOTEBoost-SVM achieved a macro F-score of 1 and an MCC of 1 when the size of the minority class was extremely small (i.e., 5% minority examples), whereas GANs-RF produced zero classification error when the number of minority examples was greater than or equal to 20% of the dataset. ZIPBoost and ZILBoost showed moderate performance across all proportions of the minority examples.

On the test data, our proposed methods showed superior predictive performance compared to the benchmark models for both classes. The proposed methods achieved the highest F-scores for both classes, as well as the highest macro F-score and MCC, in all cases. We also found that the performance of the two proposed methods was similar. This may be because the data-generating process for the OE follows a normal distribution, which has zero excess kurtosis. For more details, see Appendix D, where we discuss the relative performance of ZIPBoost and ZILBoost depending on the excess kurtosis of the OE data-generating process. The results indicate that our proposed methods improved the accuracy of predicting the minority class without a significant sacrifice in predicting the majority class, in comparison to the benchmark models. Even the benchmark models that achieved perfect classification on the training data were less accurate than the proposed methods in terms of the macro F-score and MCC.

Classifiers built using conventional boosting methods are trained to minimize overall misclassification error at the expense of neglecting the minority class (Song et al., 2011; Sun et al., 2007). AdaC2 also requires obtaining a cost matrix based on an F-score from the training data without considering the inherent class imbalance, which may result in overfitting. Furthermore, oversampling methods, including SMOTE and GANs, may not be optimal for handling imbalance problems in the presence of two distinct DGPs. SMOTE is vulnerable to disjoint data distributions (Koziarski, 2020), which the two-regime process may cause, and GANs can fail to learn the true distribution of the minority class (Yang & Zhou, 2021), which results in synthetic examples that inadequately represent the minority class.

Therefore, our proposed methods outperform the benchmark methods because the benchmark methods do not reflect the existence of the two-regime process that causes the excess zeros.

5.2 M&A data

We examine the performance of ZIPBoost and ZILBoost using real data to predict M&A outcomes. Notably, most M&A deals end up being successful, making failures relatively rare occurrences. Nevertheless, the misclassification of the failures can induce substantial costs because it may be accompanied by missed opportunities to look for other potential deals (Lee et al., 2020).

We considered M&A deals spanning from 2009 to 2014. The information on M&A deals was collected from the Securities Data Company’s (SDC) U.S. Mergers and Acquisitions database and coupled with financial data from Compustat. To construct the final sample, we started by retaining the first takeover announcement during the sample period. We also excluded cases in which the acquirer and target firm tickers were identical. Since Compustat provides financial information for public firms, matching with Compustat restricts our sample to takeovers in which both the acquirer and the target firm were publicly held. Finally, we included only deals with a completed or withdrawn status. The final sample consists of 411 completed deals and 56 withdrawn takeover deals.

In this application, the target variable is whether a takeover was completed successfully or withdrawn. We assign values of zero and one to represent successful and withdrawn takeovers, respectively, and this leads to a zero-inflated case in which approximately 86% of the sample has zero outcomes. Following a previous study (Lee et al., 2020), we assumed that the completion of takeovers may be driven by either deal characteristics or the financial characteristics of the acquirer or target firm. More specifically, some takeovers may be completed mainly due to deal characteristics, whereas others are completed based on financial characteristics, without deal characteristics forcing the decision. For example, the presence of a termination fee may lead to the completion of a takeover (Butler & Sauska, 2014). On the other hand, without such a termination fee, the decision to complete the deal may hinge on the financial performance of the acquirer or target firm, possibly leading to deal withdrawal. Thus, we included two types of predictors: (1) M&A-related predictors to account for successfully completed cases (zeros; majority class) and (2) financial performance-related predictors to account for withdrawn cases (ones; minority class).

The majority of completed cases (SE) are likely to be driven by factors associated with M&A-related predictors based on the literature review (Bugeja, 2005; Gao et al., 2021; Renneboog & Vansteenkiste, 2019; Renneboog & Zhao, 2014; Stahl et al., 2012). More specifically, hostile deals may face resistance from target firms (Renneboog & Zhao, 2014; Stahl et al., 2012), making nonhostile deals more likely to succeed. Tender offers also convey confidence in the deal (Renneboog & Vansteenkiste, 2019). We considered additional factors that can affect the probability of completing a deal, such as relative deal size, which indicates the risk to which an acquirer and target firm can be exposed (Gao et al., 2021), and an increase in the target firm’s share price prior to a merger announcement, which reduces the probability of bid competition and price revision (Bugeja, 2005). Thus, \({q}^{*}\) is likely to be related to the presence of a termination fee, a termination fee imposed on the acquirer, a termination fee imposed on the target firm, a hostile deal, a tender offer, relative deal size (i.e., deal size relative to the size of the acquirer), and the target firm’s share price one day prior to the announcement. In mathematical notation, for each M&A deal \(i\), we assumed the following data-generating process for SE:

where \({\text{fee}}_{i}\) indicates a dummy variable for the presence of a termination fee, \({\text{fee}}_{\text{acq},i}\) is a termination fee imposed on the acquirer, \({\text{fee}}_{\text{target},i}\) is a termination fee imposed on the target firm, \({\text{hostile}}_{i}\) indicates hostile deals, \({\text{tender}}_{i}\) indicates tender offers, \({\text{dealsize}}_{i}\) represents relative deal size, \({\text{share}}_{i}\) indicates the target firm’s share price one day prior to the announcement, and \({u}_{i}\) is random error.

Regarding the withdrawn cases (OE) conditional on M&A-related predictors, the existing literature typically focuses on financial predictors (Baker & Wurgler, 2006; Rodrigues & Stevenson, 2013). We used financial characteristics of the acquirer and target firms, such as dividend per share, the ratio of inventory to total assets, the market-to-book ratio, the price-to-earnings ratio, the growth rate in sales over the past year, the ratio of capital expenditure to operating revenue, invested capital turnover, dividend yield, and the logarithm of total assets. Following Lee et al. (2020), we used the difference in financial performance between the acquirer and the target firm to measure the dyadic relationship between them, since the extent of this difference can be used to predict the completion of deals. More precisely, the purpose of M&As between two firms (i.e., acquirers and target firms) is to strategically combine their resources. Such interfirm relationships require firms to get better at identifying the potential sources of dyadic conflict that arise during M&A negotiations (Lee et al., 2020). This implies that evaluating these dyadic conflicts is important when assessing why two firms engaged in a takeover process, which could be either completed or withdrawn. Thus, \({\widetilde{{y}_{i}}}^{*}\) is likely to be associated with dividend, the ratio of inventory to total assets, the market-to-book ratio, the price-to-earnings ratio, the growth rate in sales, the ratio of capital expenditure to operating revenue, capital turnover, dividend yield, and total assets. In mathematical notation, for each M&A deal \(i\), we assumed the following data-generating process for OE:

where \({\text{dividend}}_{i}\) indicates dividend per share, \({\text{inventory}}_{i}/{\text{assets}}_{i}\) indicates the ratio of inventory to total assets, \(\text{M}/\text{B} {\text{ratio}}_{i}\) represents the market-to-book ratio, \(\text{P}/\text{E} {\text{ratio}}_{i}\) represents the price-to-earnings ratio, \({\text{growth}}_{i}\) indicates the growth rate in sales over the past year, \({\text{expenditure}}_{i}/{\text{revenue}}_{i}\) indicates the ratio of capital expenditure to operating revenue, \(\text{capital} {\text{turnover}}_{i}\) indicates invested capital turnover, \({\text{yield}}_{i}\) represents dividend yield, \(\text{log}\left({\text{assets}}_{i}\right)\) indicates the log of total assets, and \({\varepsilon }_{i}\) is random error. Based on \({q}_{i}^{*}\) and \({\widetilde{{y}_{i}}}^{*}\), the outcome of deal \(i\), \({y}_{i}\), is determined as

To demonstrate the predictive performance of our proposed approaches, we used the examples from 2009 to 2012 as the training set (314 deals: 273 completed and 41 withdrawn) and the examples from 2013 and 2014 as the test set (153 deals: 138 completed and 15 withdrawn). The goal of this application was to predict the outcomes of M&A deals in 2013 and 2014. As in the simulation study, we considered the following benchmark models: AdaBoost, LogitBoost, ProbitBoost, AdaC2, SMOTEBoost, and GANs. We also considered different learning algorithms to build classifiers using SMOTEBoost and GANs. For AdaC2, we derived the cost matrix based on the F-score. For SMOTEBoost, the oversampling rate was fixed to 500, which is a rounded-down value of the IR in the training data, with five nearest neighbors. To train GANs, we used a batch size of 20 since we had only 41 withdrawn cases in the training set. In terms of predictors, the benchmark models used all M&A- and financial-related variables.