Abstract

State-of-the-art deep neural networks (DNNs) are highly effective at tackling many real-world tasks. However, their widespread adoption in mission-critical contexts is limited by two major weaknesses: their susceptibility to adversarial attacks and their opaqueness. The former raises concerns about DNNs’ security and generalization in real-world conditions, while the latter directly impacts interpretability. The lack of interpretability diminishes user trust, as it is challenging to have confidence in a model’s decision when its reasoning is not aligned with human perspectives. In this research, we (1) examine the effect of adversarial robustness on interpretability, and (2) present a novel approach for improving DNNs’ interpretability that is based on the regularization of neural activation sensitivity. We compare the interpretability of models trained using our method with that of standard models and models trained using state-of-the-art adversarial robustness techniques. Our results show that adversarially robust models are more interpretable than standard models, and that models trained using our proposed method are more interpretable still. (Code provided in supplementary material.)


1 Introduction

In recent years, deep neural networks (DNNs) have increasingly been used to tackle many complex tasks in various domains, including tasks previously thought to be solvable only by humans, with accuracy often surpassing that of humans. These domains include computer vision, natural language processing, and anomaly detection, where DNNs have been employed in a variety of use cases such as medical diagnosis, text translation, self-driving cars, fraud detection, and malware detection.

There are two major obstacles limiting wider adoption of DNNs in mission-critical tasks: (1) their vulnerability to adversarial attacks (Goodfellow et al., 2014; Carlini & Wagner, 2017; Kurakin et al., 2016; Chen et al., 2019; Brendel et al., 2017) and, more generally, concerns about their robustness when confronted with real-world data, and (2) their opaqueness, which makes it difficult to trust their output (Gerlings et al., 2021; Ribeiro et al., 2016).

Extensive research has been performed to address the first obstacle, with studies mainly proposing approaches for the detection of adversarial examples (Metzen et al., 2017; Feinman et al., 2017; Song et al., 2017; Fidel et al., 2019; Katzir & Elovici, 2018) and methods for training robust models (Goodfellow et al., 2014; Madry et al., 2017; Salman et al., 2020; Wong et al., 2020; Altinisik et al., 2022; Wang et al., 2020; Ding et al., 2020). To address the second obstacle, research has focused on creating a priori, interpretable models and developing methods capable of providing post-hoc explanations for existing models (Smilkov et al., 2017; Sundararajan et al., 2017; Lundberg & Lee, 2017; Ribeiro et al., 2016).

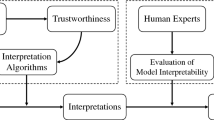

The terms interpretability and explainability are often incorrectly used interchangeably. We adopt the definitions proposed by Gilpin et al. (2018): Interpretability is a measure of how well a human can understand the way a system (in our case, a DNN) functions. Explainability of DNNs is a field of research that aims to answer the question of why a DNN produces a specific output for a given input (e.g., by assigning contribution scores to different neurons of the network signifying their importance in steering the network towards that output), or to describe what the network “learns” (e.g., by producing visualizations of concepts learned by specific neurons; Olah et al., 2017). We evaluate improved interpretability in DNNs by showing that conventional explainability techniques yield explanations that are more comprehensible to humans, as assessed both visually and through quantitative metrics.

In this paper, we examine the relationship between the aforementioned obstacles through quantitative and qualitative analyses of the effect of a model’s robustness (achieved via adversarial training and Jacobian regularization) on its interpretability, and by introducing a regularization-based approach that is conceptually similar to other approaches aimed at improving adversarial robustness but has a substantial effect on the model’s interpretability.

Recent research has shown that adversarial robustness positively affects interpretability (Zhang & Zhu, 2019; Tsipras et al., 2018; Allen-Zhu & Li, 2022; Noack et al., 2021; Margeloiu et al., 2020). However, most studies evaluated their methods using low-resolution datasets such as the MNIST (LeCun et al., 1998) and CIFAR-10 (Krizhevsky et al., 2009) datasets or only provided anecdotal evidence of improved interpretability by presenting saliency maps of a few input images.

The main contributions of this paper are as follows: (1) We provide a quantitative comparison, based on well-accepted metrics, of the interpretability of adversarially trained models vs. standard models on high-resolution image datasets; (2) We find that Jacobian regularization is nearly as effective as adversarial training for improving model interpretability; (3) We propose a novel regularization-based approach that outperforms both adversarial training and Jacobian regularization in terms of the interpretability of the trained model, with a similarly low decline in accuracy. We believe that these contributions are significant since: (1) to the best of our knowledge, our study is the first to show quantitatively, rather than via merely anecdotal evidence, the enhanced interpretability of adversarially trained models, and (2) the proposed approach further improves interpretability without the overhead of adversarial training.

2 Background

2.1 Post-hoc explainability techniques for DNNs

There are many methods for explaining the predictions of machine learning models, and more specifically DNNs. In the computer vision domain, explanations usually consist of a saliency/attribution map that assigns a score to each input pixel based on its positive or negative effect on encouraging the model to classify the input as a specific class. Some of the most prominent methods, which we use in our evaluation, include: Integrated Gradients (IG) (Sundararajan et al., 2017), SHapley Additive exPlanations (SHAP) (Lundberg & Lee, 2017) (and specifically Gradient SHAP (GS)), Layer-wise Relevance Propagation (LRP) (Bach et al., 2015), and Gradient-weighted Class Activation Mapping (GradCam) (Selvaraju et al., 2017). Additional information on the various explanation methods used in the evaluation is provided in Appendix A.
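To make the notion of an attribution map concrete, the following is a minimal sketch of Integrated Gradients on a toy function, not on a DNN. The function `f`, its analytic gradient `grad_f`, the all-zeros baseline, and the midpoint Riemann sum are illustrative assumptions; libraries such as Captum compute the gradients by automatic differentiation instead.

```python
from typing import Callable, List, Sequence


def integrated_gradients(
    grad_fn: Callable[[Sequence[float]], List[float]],
    x: Sequence[float],
    baseline: Sequence[float],
    steps: int = 50,
) -> List[float]:
    """Midpoint Riemann-sum approximation of Integrated Gradients."""
    total = [0.0] * len(x)
    for s in range(steps):
        alpha = (s + 0.5) / steps  # midpoint of the s-th sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            total[i] += g
    # Scale the average gradient along the path by the input difference.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


# Hypothetical toy "model": f(x) = x0^2 + 3*x1, with its analytic gradient.
def f(x: Sequence[float]) -> float:
    return x[0] ** 2 + 3.0 * x[1]


def grad_f(x: Sequence[float]) -> List[float]:
    return [2.0 * x[0], 3.0]


attributions = integrated_gradients(grad_f, x=[2.0, 1.0], baseline=[0.0, 0.0])
```

In this toy case the attributions sum to `f(x) - f(baseline)`, which is the completeness property that Sundararajan et al. (2017) prove for Integrated Gradients.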

2.2 Adversarial evasion attacks and defenses

Adversarial evasion attacks are methods of producing adversarial examples, which are model inputs that closely resemble valid inputs but result in drastically different model outputs. Formally, given a classifier \(M(\cdot ): \mathbb {R}^d \rightarrow \mathbb {R}^C\), an input sample \(x \in \mathbb {R}^d\), and a correct class label c, we denote \(\delta \in \mathbb {R}^d\) an adversarial perturbation and \(x' = x + \delta \) an adversarial example if:

$$\arg \max _{k} M(x')_k \ne c \quad \text {and} \quad ||\delta || \le \epsilon $$

where \(||\cdot ||\) is a distance metric and \(\epsilon > 0\) is the maximum perturbation size allowed. \(\epsilon \) is set to a small positive value so that the resulting adversarial example is indistinguishable from the original sample to the naked eye, which makes it potentially useful in various adversarial scenarios.
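The two conditions in this definition (a bounded perturbation and a changed prediction) can be checked directly. The sketch below assumes the usual reading that the perturbation norm is at most \(\epsilon \) and that the predicted class differs from c; `toy_predict` and the sample values are hypothetical.

```python
from typing import Callable, Sequence


def lp_norm(v: Sequence[float], p: float) -> float:
    """L_p norm of a vector; p may be float('inf')."""
    if p == float("inf"):
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)


def is_adversarial(
    predict: Callable[[Sequence[float]], int],
    x: Sequence[float],
    x_adv: Sequence[float],
    true_label: int,
    eps: float,
    p: float = float("inf"),
) -> bool:
    """True if x_adv stays within eps of x and is no longer classified as true_label."""
    delta = [a - b for a, b in zip(x_adv, x)]
    return lp_norm(delta, p) <= eps and predict(x_adv) != true_label


# Hypothetical toy classifier: class 1 if x0 + x1 > 1, else class 0.
def toy_predict(x: Sequence[float]) -> int:
    return int(x[0] + x[1] > 1.0)


x = [0.5, 0.45]      # classified as 0
x_adv = [0.55, 0.5]  # classified as 1, each coordinate moved by about 0.05
```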

Countering adversarial attacks has been the subject of a great deal of research. Studies have largely aimed at proposing methods capable of detecting adversarial examples or methods for training robust models. The latter is relevant to our study, and two such methods are highlighted below.

Adversarial training (Goodfellow et al., 2014; Madry et al., 2017) is a method in which a model is trained to correctly classify adversarial examples by presenting them to the model during the training process. More precisely, this method solves the saddle point problem:

$$\min _{\theta } \; \mathbb {E}_{(x, c) \sim \mathcal {D}} \left[ \max _{||\delta || \le \epsilon } \mathcal {L}(\theta , x + \delta , c) \right] $$

where \(\theta \) denotes the model parameters, \(\mathcal {D}\) the data distribution, and \(\mathcal {L}\) the training loss. The aim is to find model parameters that minimize the expected value of the worst-case increase in model loss due to input perturbations. Practically, the method consists of modifying the standard training loss so that it is applied to adversarial examples constructed from the training batch samples instead of the original training samples. Madry et al. (2017) used projected gradient descent (PGD) to generate adversarial examples during model training. More recent studies have advanced the original adversarial training approach by addressing the inherent tendency of adversarially trained models to overfit (Altinisik et al., 2022). These advancements include adversarial training with a variable perturbation budget to maximize margins from decision boundaries (Wang et al., 2020), and differentiating between correctly classified and misclassified examples during adversarial training (Ding et al., 2020).
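The inner maximization is typically approximated with a few PGD steps. The following is a minimal sketch of an \(L_\infty \) PGD attack on a toy logistic-regression model with an analytic input gradient. The model, the step size, and the choice to start from the clean sample rather than a random point are simplifying assumptions, so this is not the implementation used by Madry et al. (2017).

```python
import math
from typing import List, Sequence


def _prob(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Predicted probability of class 1 under logistic regression."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def logistic_loss(w: Sequence[float], b: float, x: Sequence[float], y: int) -> float:
    p = _prob(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def input_gradient(w: Sequence[float], b: float, x: Sequence[float], y: int) -> List[float]:
    """Gradient of the logistic loss with respect to the input x."""
    p = _prob(w, b, x)
    return [(p - y) * wi for wi in w]


def pgd_linf(w, b, x, y, eps: float, step: float, iters: int) -> List[float]:
    """Maximize the loss inside the L_inf ball of radius eps around x."""
    x_adv = list(x)
    for _ in range(iters):
        grad = input_gradient(w, b, x_adv, y)
        # Ascent step along the sign of the gradient.
        x_adv = [xa + step * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        # Projection back onto the L_inf ball around the clean sample.
        x_adv = [min(max(xa, xi - eps), xi + eps) for xa, xi in zip(x_adv, x)]
    return x_adv


w, b = [2.0, -1.0], 0.0  # hypothetical model parameters
x, y = [1.0, 1.0], 1     # hypothetical clean sample and label
x_adv = pgd_linf(w, b, x, y, eps=0.1, step=0.05, iters=5)
```

Adversarial training would then evaluate the training loss on `x_adv` instead of `x` when updating the model parameters.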

Jacobian regularization (Jakubovitz & Giryes, 2018; Hoffman et al., 2019) is a method in which the Frobenius norm of the Jacobian matrix containing the partial derivatives of the model’s logits over the inputs is added as an extra loss term, resulting in the minimization of the Jacobian norm during model training. This was found to effectively push the model’s decision boundaries away from the data manifold (Hoffman et al., 2019) thus improving the model’s robustness to adversarial attacks.
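As an illustration of the quantity being penalized, the sketch below estimates the Frobenius norm of the input-output Jacobian with central finite differences. The finite-difference estimator, the toy linear model, and the weight `lam` are assumptions made for readability; practical implementations rely on automatic differentiation.

```python
import math
from typing import Callable, List, Sequence


def jacobian_frobenius_norm(
    model: Callable[[Sequence[float]], List[float]],
    x: Sequence[float],
    h: float = 1e-5,
) -> float:
    """Frobenius norm of d(logits)/d(inputs), estimated by central differences."""
    squared_sum = 0.0
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        out_plus, out_minus = model(x_plus), model(x_minus)
        for op, om in zip(out_plus, out_minus):
            squared_sum += ((op - om) / (2.0 * h)) ** 2
    return math.sqrt(squared_sum)


# Hypothetical linear "model" with weight matrix [[1, 2], [3, 4]];
# its Jacobian is the weight matrix itself.
def toy_model(x: Sequence[float]) -> List[float]:
    return [1.0 * x[0] + 2.0 * x[1], 3.0 * x[0] + 4.0 * x[1]]


def regularized_loss(ce_loss: float, jac_norm: float, lam: float) -> float:
    """Cross-entropy plus a weighted Jacobian-norm penalty (lam is hypothetical)."""
    return ce_loss + lam * jac_norm


norm = jacobian_frobenius_norm(toy_model, [0.3, -0.7])
```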

3 Related work

The observation that adversarially robust models are more interpretable is not new. Zhang and Zhu (2019) showed that adversarially trained convolutional neural networks (CNNs) produce explanations that rely on the global shape of the input images, in contrast to standard CNNs which focus more on textures that are inherently more sensitive to small perturbations.

Tsipras et al. (2018) observed that by virtue of the constraints imposed by adversarial training, which have the effect of reducing sensitivity to small perturbations, adversarially trained models are more aligned with human vision, which is evident in the produced explanations that emphasize features that are more human-perceivable.

In Allen-Zhu and Li (2022), the authors studied the effect of adversarial training on feature-level explanations of internal CNN layers and showed that adversarially trained models produce feature-level explanations that are “purified,” in that they are much less noisy and better represent high-level visual concepts.

Noack et al. (2021) proposed a method for leveraging model explanations to improve robustness, by adding terms to the training loss that penalize the cosine of the angle between the explanation vector and the loss gradient, as well as the norm of the loss gradient vector. They showed that minimizing these two terms improves adversarial robustness. Margeloiu et al. (2020) explored the impact of adversarial training on the interpretability of CNNs for skin cancer diagnosis, demonstrating that adversarially trained CNNs produce clearer and more visually consistent saliency maps, especially highlighting melanoma characteristics.

The work in the literature most similar to ours is that of Zhang et al. (2020) who proposed augmenting adversarial training with the regularization of the sensitivity of the top-k sensitive neurons of each DNN layer in order to improve model robustness to adversarial attacks. While conceptually similar to our method of regularizing neuron sensitivities, our work differs in the following respects: (1) We regularize the sensitivity of all neurons, not just the top-k sensitive ones. (2) We regularize the sensitivity of the neurons by measuring sensitivity to random noise instead of adversarial examples, which is computationally lighter. (3) We regularize neuron sensitivity in order to improve interpretability rather than robustness. (4) In contrast to merely providing visual representations of a limited set of input samples as done by Zhang et al., we perform a quantitative evaluation to validate the improvement in interpretability observed. Furthermore, we performed our evaluation on multiple datasets, including a medical dataset.

4 Proposed method

4.1 Method overview

The proposed method aims to improve the interpretability of neural network classifiers. We introduce NsLoss, a novel regularization term that penalizes the classifier for high sensitivity of the network’s neurons to input perturbations. Thus, we define a new training loss function as follows:

$$Loss = CELoss + \lambda \cdot NsLoss$$

where CELoss is the standard cross-entropy loss, NsLoss is our new loss term, and \(\lambda \) is the weight of the NsLoss term.

We apply the proposed method on a pretrained model by continuing to train it with the custom loss function for a predefined number of epochs using a standard stochastic gradient descent-based optimizer and a relatively low learning rate to allow the model’s interpretability to improve without “unlearning” the classification task.

4.2 NsLoss regularization term

The inputs and parameters used to compute the NsLoss are as follows:

- M: The model being trained.

- X: Batch of samples for which the loss is computed.

- \(\epsilon _{ns}\): Hyperparameter specifying the radius of the \(L_p\) sphere from which random perturbations used to compute the loss are sampled. An \(L_p\) sphere around a point \(x_0\) with radius r is the set \(\{x \in \mathbb {R}^d: ||x-x_0||_p = r\}\).

- N: Number of perturbations to generate for each sample.

We begin by computing the normalized sensitivity of each neuron in the model to random perturbations of the input within an \(L_p\) sphere with radius \(\epsilon _{ns}\). Given the j-th neuron of the i-th layer of the model:

1. For every sample \(x \in X\), generate N random samples \(x_1, x_2,..., x_N\) s.t. \(||x-x_k||_p = \epsilon _{ns}\), and store them in tensors \(X_p^{(k)}\) for \(k \in [1, N]\). (Note that the "p" in \(X_p\) stands for "perturbed" and is not related to the order p of the \(L_p\) sphere from which the random samples are drawn.)

2. Evaluate \(M_{i,j}(X)\) and \(M_{i,j}(X_p^{(k)})\), the activations of the j-th neuron in the i-th layer of the model, on each original and randomly perturbed sample, respectively.

3. Compute the mean absolute activation of neuron i, j on batch X:

   $$MA_{i,j} \leftarrow \frac{\sum _{m=1}^{|X|}{|M_{i,j}(X[m])|}}{|X|} \tag{3}$$

   where X[m] is the m-th sample in batch X.

4. Compute the mean absolute difference between the activations of the neuron on the perturbed and original samples:

   $$MD_{i,j} \leftarrow \frac{\sum _{k=1}^{N}\sum _{m=1}^{|X|}{|M_{i,j}(X[m])-M_{i,j}(X_p^{(k)}[m])|}}{N \cdot |X|} \tag{4}$$

5. Compute the sensitivity of the neuron:

   $$NS_{i,j} \leftarrow \frac{MD_{i,j}}{\epsilon _{ns} \cdot |M_i| \cdot MA_{i,j}} \tag{5}$$

   where \(|M_i|\) is the number of neurons in the i-th layer of M.

Figure 1 illustrates the process described so far for a single neuron i, j and example x.
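The five steps above can be sketched in plain Python for a single layer. This is an illustrative sketch rather than the authors' implementation: it covers only the \(L_2\) case, `toy_layer` is a hypothetical two-neuron layer, and the small floor on \(MA_{i,j}\) that avoids division by zero for inactive neurons is an added assumption.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def sample_on_l2_sphere(x: Sequence[float], eps: float, rng: random.Random) -> Vector:
    """Return a point at L2 distance eps from x, in a random direction."""
    noise = [rng.gauss(0.0, 1.0) for _ in x]
    norm = math.sqrt(sum(n * n for n in noise))
    return [xi + eps * n / norm for xi, n in zip(x, noise)]


def neuron_sensitivities(
    layer: Callable[[Sequence[float]], Vector],
    batch: Sequence[Sequence[float]],
    eps_ns: float,
    n_perturb: int,
    rng: random.Random,
) -> Vector:
    """Compute NS_{i,j} (Eq. 5) for every neuron j of one layer i."""
    clean = [layer(x) for x in batch]  # activations on the original samples
    # Steps 1-2: N perturbed copies of the batch and their activations.
    perturbed = [
        [layer(sample_on_l2_sphere(x, eps_ns, rng)) for x in batch]
        for _ in range(n_perturb)
    ]
    width, size = len(clean[0]), len(batch)
    sensitivities = []
    for j in range(width):
        ma = sum(abs(act[j]) for act in clean) / size  # Eq. 3
        md = sum(
            abs(clean[m][j] - perturbed[k][m][j])
            for k in range(n_perturb)
            for m in range(size)
        ) / (n_perturb * size)  # Eq. 4
        # Floor on MA guards against inactive neurons; this guard is an assumption.
        sensitivities.append(md / (eps_ns * width * max(ma, 1e-12)))  # Eq. 5
    return sensitivities


# Hypothetical two-neuron linear layer; the second neuron is 3x the first.
def toy_layer(x: Sequence[float]) -> Vector:
    z = x[0] + 2.0 * x[1]
    return [z, 3.0 * z]


ns = neuron_sensitivities(toy_layer, [[1.0, 2.0], [0.5, -1.0]],
                          eps_ns=0.1, n_perturb=5, rng=random.Random(0))
```

Because NS is normalized by the mean absolute activation, the two toy neurons receive the same sensitivity even though one has activations three times larger than the other.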

Finally, we compute the NsLoss as follows:

The final loss is simply the mean neuron sensitivity weighted by the neuron’s mean absolute activation, which accounts for the neuron’s contribution to the model’s output. For simplicity, the description of the algorithm provided here computes the sensitivity of each neuron separately. In practice, the aggregated loss can be computed using tensor operations on entire input batches and model layers, which makes the time spent on loss computation negligible compared to the model forward passes.

Fig. 1 A simplified illustration of the process used to compute neuron activation differences (MD) and sensitivity (NS) for a single neuron i, j with a single example x. A neuron’s sensitivity is measured based on its behavior, i.e., the variation in its activation values for a given input x and the corresponding N perturbations \(x^k_p\) sampled from the surrounding \(L_p\) sphere of radius \(\epsilon _{ns}\), a sphere with the distance metric \(||\cdot ||_p\). \(M_{ij}(\cdot )\) denotes the activation value of neuron j in layer i for a given input; each activation value is presented in a different color, e.g., the activation of the last perturbation \(x^3_p\) is presented in blue. Then, MD is used to calculate the activation differences, and NS is used to assess a neuron’s sensitivity based on the computed MD and normalization factors.

4.3 NsLoss regularization’s effectiveness

The NsLoss regularization term is constructed in a way that penalizes the model for small random input perturbations that cause large differences in the activations of the model’s output neurons (logits) and internal neurons. This has the obvious effect of optimizing the model to minimize the activation differences and, as a result, minimizing the magnitude of the model’s gradients with respect to inputs in the vicinity of the training set and, by generalization, in the vicinity of the test set. Once aggregated on the entire training set during the training process, this is expected to have the effect of minimizing the model’s gradients in an \(\epsilon _{ns}\) neighborhood of the entire data manifold, where \(\epsilon _{ns}\) is the hyperparameter specifying the radius of the \(L_p\) sphere from which random input perturbations are sampled during the computation of the NsLoss regularization term.
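The link between small activation differences and small input gradients can be made explicit with a first-order expansion. The following is a sketch under the assumptions that each activation \(M_{i,j}\) is differentiable near x and that the perturbation \(\delta \) is small; it is an informal argument, not a derivation taken from the method description.

```latex
% First-order expansion of the activation around x, with \|\delta\|_p = \epsilon_{ns}:
M_{i,j}(x+\delta) - M_{i,j}(x) \approx \nabla_x M_{i,j}(x)^{\top} \delta

% Hoelder's inequality with 1/p + 1/q = 1 bounds the activation difference:
\left| M_{i,j}(x+\delta) - M_{i,j}(x) \right|
  \lesssim \|\delta\|_p \, \|\nabla_x M_{i,j}(x)\|_q
  = \epsilon_{ns} \, \|\nabla_x M_{i,j}(x)\|_q
```

Under these assumptions, the activation difference for a random \(\delta \) is approximately the projection of the gradient onto a random direction of length \(\epsilon _{ns}\), so penalizing its average magnitude over many random perturbations pushes the gradient magnitude down around each training sample.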

4.4 Hyperparameters

A description of the hyperparameters used in our approach is provided below.

- N: The number of perturbed samples used to estimate the neuron sensitivity; \(N=5\) was used in all of our experiments. The value of N linearly affects the training computation budget. We performed anecdotal experimentation with values larger than five and found no improvement in the interpretability metrics.

- \(\epsilon _{ns}\): The radius of the \(L_p\) sphere from which perturbations for neuron sensitivity computation are generated. We chose values aligned with best practices from the adversarial robustness literature, as these values correspond to negligible perceptual artifacts in the perturbed images.

- p: The order of the distance measure \(L_p\). A common choice for p is 2 or \(\infty \).

- \(\lambda \): The weight of the loss term. We aim to select the highest value of \(\lambda \) that does not harm the model’s cross-entropy loss or its accuracy on the validation set. The following protocol is used to select the value of \(\lambda \):

  1. For a standard model, compute the values of NsLoss on 10 random batches from the training and validation sets and store the average value as \(NsLoss_0\).

  2. Choose \(\lambda _0 = \frac{\log _2{NumClasses}}{NsLoss_0}\).

  3. Perform a binary search by selecting values of \(\lambda \) that are higher and lower than \(\lambda _{0}\) but in the same order of magnitude. As an illustrative example, if \(\lambda _0 = 5\), we can choose a lower value of 1 and a higher value of 10. Note that the exact choice of values is less important as long as the smaller value has a negligible influence on the model’s accuracy, while the larger value leads to a deterioration in the model’s accuracy. For each such \(\lambda \), train the model for an epoch. If the training cross-entropy loss reaches the cross-entropy of a random guess (\(\log _2{NumClasses}\)), then the \(\lambda \) value is too high; otherwise it can be increased further.
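The starting point of this protocol is simple arithmetic and can be sketched as follows. The helper names are hypothetical, and choosing the bracketing values as the surrounding powers of ten is one possible reading of "same order of magnitude" that reproduces the 1 and 10 of the example for \(\lambda _0 = 5\).

```python
import math
from typing import Tuple


def initial_lambda(num_classes: int, ns_loss_0: float) -> float:
    """lambda_0 = log2(NumClasses) / NsLoss_0 (step 2 of the protocol)."""
    return math.log2(num_classes) / ns_loss_0


def search_bracket(lambda_0: float) -> Tuple[float, float]:
    """Lower and higher candidate values: the powers of ten around lambda_0."""
    k = math.floor(math.log10(lambda_0))
    return 10.0 ** k, 10.0 ** (k + 1)


def lambda_too_high(train_ce_loss: float, num_classes: int) -> bool:
    """Step 3 stopping rule: the loss has reached that of a random guess."""
    return train_ce_loss >= math.log2(num_classes)


lam0 = initial_lambda(num_classes=10, ns_loss_0=0.5)  # hypothetical NsLoss_0
low, high = search_bracket(5.0)
```

Each candidate value would then be tried for one training epoch, with `lambda_too_high` deciding whether to move the search down or up.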

For adversarial training and Jacobian regularization, we used standard hyperparameters from the relevant literature (Madry et al., 2017; Hoffman et al., 2019).

5 Evaluation

The objective of our experiments is to examine the effect of Adversarial Training, Jacobian regularization, and our proposed NsLoss regularization term on the quality of the explanations both qualitatively (i.e., by observing visual improvement) and quantitatively (by using objective metrics). We also compare the results to those obtained by a baseline model.

5.1 Evaluation setup

We assess the effectiveness of our method across three diverse datasets: Imagenette (Howard, 2019), a collection of high-resolution images from a 10-class subset of ImageNet (Deng et al., 2009); CIFAR10 (Krizhevsky et al., 2009), a widely used dataset for image classification; and a subset of the HAM10000 dermatology dataset (Tschandl et al., 2018). The HAM10000 subset focuses on three balanced classes: melanoma, melanocytic nevi, and benign keratosis, as recommended by Margeloiu et al. (2020).

For the models, we employ the VGG19 (Simonyan & Zisserman, 2014), PreResNet10 (He et al., 2016) and DenseNet (Huang et al., 2018) architectures - all pretrained on ImageNet. These models were fine-tuned on Imagenette, CIFAR10 and the aforementioned HAM10000 subset. On Imagenette, the models achieved clean test accuracy rates of 96.2%, 96.8% and 99.3% for VGG19, PreResNet10 and DenseNet, respectively. On HAM10000, the VGG19 and PreResNet10 models obtained clean test accuracy rates of 87.6% and 84.0%, respectively. On CIFAR10, the VGG19 and PreResNet10 models obtained clean test accuracy rates of 92.4% and 91.5% respectively.

We use the VGG19, PreResNet10 and DenseNet architectures, since they achieve near state-of-the-art performance on image classification tasks and are different enough to demonstrate that our method does not overfit to a specific model architecture. To train robust models, we use (1) the adversarial training method of Madry et al. (2017), as implemented by the “robustness” library (Engstrom et al., 2019), to retrain the standard models against a PGD adversary, and (2) the implementation of Jacobian regularization presented by Hoffman et al. (2019). Tables 11, 12, 13, 14, 15, 16, 17 in Appendix B summarize the configurations and hyperparameters used for all the methods evaluated.

5.2 Evaluation metrics

Several recent studies (Carvalho et al., 2019; Montavon et al., 2018; Alvarez-Melis & Jaakkola, 2018) attempted to determine what properties an attribution-based explanation should have. These studies showed that the use of just one metric is insufficient to produce explanations that are meaningful to humans. Bhatt et al. (2020) established three desirable criteria for feature-based explanation functions: low sensitivity, high faithfulness, and low complexity. Therefore, we evaluate the different techniques based on these three well-studied properties:

1. Sensitivity: measures how much the explanations vary within a small local neighborhood of the input when the model prediction remains approximately the same (Alvarez-Melis & Jaakkola, 2018; Yeh et al., 2019). In our evaluation, we use the max-sensitivity, avg-sensitivity (Yeh et al., 2019), and Local Lipschitz estimate (Alvarez-Melis & Jaakkola, 2018) metrics.

2. Faithfulness: estimates how the presence (or absence) of features influences the prediction score, i.e., whether removing highly important features results in a degradation of model accuracy (Bhatt et al., 2020; Bach et al., 2015; Alvarez-Melis & Jaakkola, 2018). In our evaluation, we use the faithfulness correlation (Bhatt et al., 2020) and faithfulness estimate (Alvarez-Melis & Jaakkola, 2018) metrics.

3. Complexity: captures the complexity of explanations, i.e., how many features are used to explain a model’s prediction (Chalasani et al., 2020; Bhatt et al., 2020). In our evaluation, we use the metrics of complexity (Bhatt et al., 2020) and sparseness (Chalasani et al., 2020).
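The two complexity metrics can be illustrated on a toy attribution vector. The sketch below assumes the common formulations, with complexity as the entropy of the normalized absolute attributions (Bhatt et al., 2020) and sparseness as the Gini index of the absolute attributions (Chalasani et al., 2020); the exact conventions of a given evaluation library may differ.

```python
import math
from typing import List, Sequence


def complexity(attributions: Sequence[float]) -> float:
    """Entropy of the fractional contribution of each feature (lower is better)."""
    total = sum(abs(a) for a in attributions)
    fractions = [abs(a) / total for a in attributions]
    return -sum(p * math.log(p) for p in fractions if p > 0.0)


def sparseness(attributions: Sequence[float]) -> float:
    """Gini index of the absolute attributions (higher is better)."""
    values: List[float] = sorted(abs(a) for a in attributions)
    d, total = len(values), sum(values)
    weighted = sum(
        (v / total) * ((d - k + 0.5) / d) for k, v in enumerate(values, start=1)
    )
    return 1.0 - 2.0 * weighted


uniform = [1.0, 1.0, 1.0, 1.0]  # every feature equally important
focused = [0.0, 0.0, 0.0, 1.0]  # a single feature carries the explanation
```

An explanation concentrated on one feature (`focused`) has zero entropy and a high Gini index, whereas a uniform explanation has maximal entropy and a Gini index of zero.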

5.3 Explainability methods

Various explanation methods are used in our evaluation, including Integrated Gradients (IG) (Sundararajan et al., 2017), Gradient SHAP (GS) (Lundberg & Lee, 2017), Layer-wise Relevance Propagation (LRP) (Bach et al., 2015), and GradCam (Selvaraju et al., 2017). For the implementation, we employ the Captum library (Kokhlikyan et al., 2020). We note that the implementation of Gradient SHAP we used (the Captum library) applies SmoothGrad (Smilkov et al., 2017) by default.

We argue that since the majority of local explanation methods use model gradients, any improvement observed on these explanation methods when using the proposed method is likely to be successfully transferred to other gradient-based methods (Tables 1, 2).

5.4 Quantitative evaluation results

We start by examining the performance of the compared methods, considering the three aforementioned explanation-quality criteria (i.e., sensitivity, faithfulness, and complexity), applied to the values of the methods’ respective explanations.

Tables 3, 4, 5 summarize the results on the Imagenette dataset for the PreResNet10, DenseNet and VGG19 models, respectively. For the HAM10000 dataset, we report the results in Tables 6 and 7 for VGG19 and PreResNet10. On the CIFAR10 dataset, we report the results for the VGG19 and PreResNet10 models in Tables 8 and 9.

The scores were computed and averaged over the entire test set. The Quantus library (Hedström et al., 2022) was employed for XAI evaluation, and either Integrated Gradients (IG), Gradient SHAP (GS), or Layer-wise Relevance Propagation (LRP) was used as the base attribution method. To facilitate meaningful comparisons, all of the compared models were retrained to have roughly the same test accuracy on natural images (except for the standard model), which is presented in the ninth column. To support the hypothesis that robust models tend to be more interpretable than standard models, we also provide the robust accuracy for models retrained with Jacobian Regularization and Adversarial Training, obtained using the AutoAttack (Croce & Hein, 2020) library, in the tenth column. The robust accuracy is computed by running AutoAttack on each sample in the test set to obtain an adversarial test set and then computing the accuracy of the model on the adversarial test set with the original target labels.

In each table, the methods (standard, NsLoss \(L_2\), NsLoss \(L_{\infty }\), JacobReg, and Adversarial Training) are listed in the first column, and the respective values for the metrics max-sensitivity (Max), avg-sensitivity (Avg) (Yeh et al., 2019), local Lipschitz estimate (LL) (Alvarez-Melis & Jaakkola, 2018), faithfulness correlation (Corr) (Bhatt et al., 2020), faithfulness estimate (Est) (Alvarez-Melis & Jaakkola, 2018), complexity (Comp) (Bhatt et al., 2020), and sparseness (Spr) (Chalasani et al., 2020) are presented in columns 2–8.

For the sensitivity criterion, lower values are better; for the faithfulness criterion, higher values are better, and for the complexity criterion, lower complexity values and higher sparseness values are better. (\(\uparrow \)) indicates that a higher value is better, and (\(\downarrow \)) indicates that a lower value is better. A value in bold is the best score in a column, and an underlined value is the second best.

Prior to discussing the results for the seven \(<dataset, model~architecture>\) pairs, the radar plot in Fig. 2 and Tables 1 and 2 provide a high-level view of the results by normalizing and averaging the different metrics’ values over all models and datasets. To create the aggregated results, we first normalize the values of each metric for all methods and for each \(<dataset, model~architecture>\) pair into the range of [0,100] (note that after the normalization, for all metrics, a higher value is better). Then, we aggregate all seven experiments by calculating the mean of each metric for each method over the seven \(<dataset, model~architecture>\) pairs. The radar plot is based on the mean values for each of the eight metrics presented in Tables 1 and 2. Note that due to our normalization, these results no longer represent absolute metric values but rather represent the relative scores of the evaluated methods on each metric.
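The aggregation can be sketched as follows. The text does not specify the normalization, so this sketch assumes min-max scaling across methods, with lower-is-better metrics inverted so that a higher normalized score is always better. The method names A, B, C and all numbers are made-up placeholders, not values from the tables.

```python
from typing import Dict, List


def normalize_metric(scores: Dict[str, float], higher_is_better: bool) -> Dict[str, float]:
    """Min-max normalize one metric across methods into [0, 100]."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:  # all methods tie on this metric
        return {method: 100.0 for method in scores}
    normalized = {}
    for method, value in scores.items():
        frac = (value - lo) / (hi - lo)
        normalized[method] = 100.0 * (frac if higher_is_better else 1.0 - frac)
    return normalized


def aggregate(per_experiment: List[Dict[str, float]]) -> Dict[str, float]:
    """Mean normalized score of each method over all experiments."""
    methods = per_experiment[0].keys()
    return {m: sum(e[m] for e in per_experiment) / len(per_experiment) for m in methods}


# Placeholder max-sensitivity values (lower is better) for two experiments.
experiment_1 = {"A": 0.8, "B": 0.5, "C": 0.2}
experiment_2 = {"A": 0.6, "B": 0.3, "C": 0.3}
mean_scores = aggregate([
    normalize_metric(experiment_1, higher_is_better=False),
    normalize_metric(experiment_2, higher_is_better=False),
])
```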

We further evaluate the statistical significance of these results. Since we cannot assume a normal distribution of the data, we first computed the Friedman test (Friedman, 1937), which resulted in a P value of 0.00003 (rounded), meaning that the different methods are significantly different. Then we performed the Wilcoxon signed-rank test (Woolson, 2007) for each pair of methods, and adjusted the P values for multiple tests using the Benjamini-Hochberg method (Benjamini & Hochberg, 1995). Based on the results, all method pairs are significantly different at a significance level of 0.05, except for the pairs \(<NsLoss L_2,~NsLoss L_\infty>\) and \(<JacobReg,~Adv. Training>\) (the P values of all pairs are presented in Table 18 in Appendix C). This demonstrates the statistical significance of our results. From Fig. 2 and Tables 1 and 2 we can clearly see that (1) both Adversarial Training and Jacobian Regularization significantly improve interpretability over a standard baseline model; and (2) both the NsLoss \(L_2\) and NsLoss \(L_\infty \) methods further significantly improve interpretability compared to Adversarial Training and Jacobian Regularization.
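For readers unfamiliar with the multiple-testing correction, the Benjamini-Hochberg adjustment can be written in a few lines. The P values below are arbitrary placeholders, not the values reported in Table 18, and the function is a sketch of the standard step-up procedure rather than the code used for the analysis.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float]) -> List[float]:
    """Return Benjamini-Hochberg adjusted P values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending P
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


raw = [0.01, 0.04, 0.03, 0.005]  # placeholder pairwise P values
adjusted = benjamini_hochberg(raw)
significant = [p <= 0.05 for p in adjusted]
```

A pair of methods is declared significantly different when its adjusted P value does not exceed the chosen level, here 0.05.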

In Tables 3, 4, 5, we evaluate the PreResNet10, DenseNet and VGG19 models trained on the Imagenette dataset using the five different methods presented in Tables 11, 12, 13 respectively, using Integrated Gradients (IG), Gradient SHAP (GS) and Layer-wise Relevance Propagation (LRP) as the respective base attribution methods.

Our NsLoss methods demonstrate strong performance across key metrics.

In terms of the sensitivity criterion, the NsLoss variants show strong results. On the PreResNet10 model, NsLoss \(L_{\infty }\) and adversarial training achieve the best results for the max and avg sensitivity metrics, while NsLoss \(L_{\infty }\) is the best for the Local Lipschitz estimate metric. On the DenseNet model, NsLoss \(L_2\) and NsLoss \(L_{\infty }\) achieve the best and second-best results for max and avg sensitivity, and the third- and second-best results for the Local Lipschitz estimate metric. On the VGG19 model, JacobReg performs the best based on the max and avg sensitivity metrics, but NsLoss \(L_2\) and \(L_{\infty }\) are close competitors, achieving the second-best results.

In terms of the faithfulness criterion, the NsLoss variants exhibit superior performance. On the PreResNet10 model, NsLoss \(L_{\infty }\) achieves the highest scores for both metrics. On the DenseNet model, NsLoss \(L_2\) has the best and second-best results for faithfulness estimate and faithfulness correlation, respectively. On the VGG19 model, NsLoss \(L_2\) performs the best based on the faithfulness correlation metric (Corr) and is the second best based on the faithfulness estimate metric (Est), while NsLoss \(L_{\infty }\) performs the best based on the faithfulness estimate metric (Est) and is second best based on the faithfulness correlation metric (Corr).

In terms of the complexity criterion, both NsLoss variants obtain the lowest complexity (Comp) and highest sparseness (Spr) values on both the PreResNet10 and DenseNet models. On the VGG19 model, NsLoss \(L_2\) obtains the best results in terms of both complexity (Comp) and sparseness (Spr), followed closely by NsLoss \(L_{\infty }\) and adversarial training. NsLoss \(L_2\) is second-best for all three sensitivity metrics, and NsLoss \(L_2\) and \(L_\infty \) are best and second-best interchangeably on the two faithfulness metrics.

In Tables 6, 7, we evaluate the VGG19 and PreResNet10 models trained on the HAM10000 dataset using the different methods presented in Tables 14, 15 respectively, with integrated gradients and gradient SHAP serving as the respective base attribution methods. It is worth noting that despite extensive hyperparameter tuning attempts, when performing Adversarial Training, we were unable to achieve a substantial improvement in terms of the robustness or interpretability metrics without experiencing a decrease in the clean accuracy to around 53%. This renders the interpretability metrics of Adversarial Training problematic to compare with and thus we mark them in gray and ignore them when choosing the best and second-best scores for each column. Nevertheless, we do provide the results for completeness.

Across both models, our NsLoss method is a robust performer in terms of the interpretability criteria. In terms of the sensitivity criterion, NsLoss often performs on par with or better than JacobReg and adversarial training, achieving the best or nearly best scores on both models. In terms of the faithfulness criterion, NsLoss leads on the VGG19 model and is a close second on the PreResNet10 model, suggesting a high level of fidelity in its explanations. JacobReg shows competitive performance here but does not consistently outshine NsLoss. In terms of the complexity criterion, NsLoss stands out, particularly on the VGG19 model, obtaining the lowest complexity (Com) and highest sparsity (Spr) values. On the PreResNet10 model, it closely follows JacobReg but achieves slightly better performance in terms of sparsity (Spr). In contrast, adversarial training often demonstrates low sensitivity but lags in terms of faithfulness and complexity, making it less balanced overall. JacobReg performs well in terms of faithfulness but does not consistently outperform NsLoss on other metrics.

In Tables 8 and 9, we present the performance comparison for the CIFAR10 dataset, using the VGG19 and PreResNet10 models described in Tables 16 and 17, with Gradient SHAP and LRP used as the base attribution methods. For VGG19, JacobReg performs best in both the max and avg sensitivity metrics and NsLoss \(L_{\infty }\) performs best on the LL metric, whereas for almost all other criteria and metrics, both the NsLoss \(L_2\) and \(L_{\infty }\) methods outperform the alternatives. For PreResNet10, NsLoss \(L_2\) and \(L_\infty \) perform best for all interpretability metrics, each alternating between best and second-best results. These observations highlight NsLoss’ ability to deliver feature-based explanations that are simultaneously low in sensitivity, high in faithfulness, and low in complexity, making it a viable candidate for generating explanations that may be easier for humans to interpret.

5.5 Qualitative evaluation results

Figures 3 and 4 present the attribution maps of images from Imagenette’s test set for the VGG19 and PreResNet10 models presented in Tables 13 and 11 respectively. Figure 5 presents the attribution maps of images from HAM10000’s test set for the VGG19 models presented in Table 14. The attribution maps generated by our method are framed in red. The NsLoss attribution maps for the parachute images (top two rows in Fig. 3), as well as the skin lesions in Figs. 5, 6 demonstrate the ability of the NsLoss-trained model to capture the key region of interest in the image (the parachute or lesion itself) and produce an attribution map that focuses precisely on that region, while ignoring the background. While the other methods were also able to capture the region of interest in the image, these regions were rather noisy and less sharp.

Following Smilkov et al. (2017), we use visual coherence to indicate that the salient areas highlight mainly the object of interest rather than the background. As can be seen in the attribution maps of the church images (bottom two rows in Fig. 3), the dogs in Fig. 4, and the skin lesions in Fig. 5, the standard saliency maps show quite poor visual coherence, as they focus mainly on the background rather than on the object itself. In contrast, the non-standard methods provide more visually coherent maps; however, the results presented in these figures, along with the findings included in the supplementary material, show that NsLoss consistently provides the most visually coherent and least noisy maps compared to the other methods, regardless of the explainability method used.

Fig. 3 Comparison of the attribution maps obtained for four images from the Imagenette test set by the VGG19 model trained using the standard, NsLoss \(L_2\), JacobReg, and adversarial training methods. The labels on the left indicate the attribution method used - integrated gradients (IG), layer-wise relevance propagation (LRP), gradient SHAP (GS) and GradCam (GC)

Fig. 4 Comparison of the feature maps obtained by the PreResNet10 (He et al., 2016) model, which was trained using the standard, NsLoss \(L_2\), JacobReg, and adversarial training methods, on images of dogs from the Imagenette (Howard, 2019) test set. The labels on the left indicate the attribution method used - integrated gradients (IG) and gradient SHAP (GS)

Fig. 5 Attribution map comparison obtained by the VGG19 model (Simonyan & Zisserman, 2014) trained using the standard, NsLoss, JacobReg, and adversarial training methods on images of skin diseases from the HAM10000 (Tschandl et al., 2018) test set. The labels on the left indicate the image class and attribution method used - integrated gradients (IG) (Sundararajan et al., 2017), layer-wise relevance propagation (LRP) (Bach et al., 2015) and Gradient SHAP (GS) (Lundberg & Lee, 2017). The attribution maps generated by our method are framed in red (Color figure online)

Fig. 6 Feature maps obtained on samples from CIFAR10 by the VGG19 model, which was trained using the methods described in Table 16. The row labels indicate the attribution method used (IG, GS)

5.6 Hyperparameter studies

Effect of the regularization term and training length. NsLoss makes use of several hyperparameters. We examine the effect of (1) \(\lambda \), which is the weight of the regularization term, and (2) the number of training epochs on select explanation-quality metrics.

Regularization term weight (\(\lambda \)). In Table 10, it can be seen that as \(\lambda \) increases, there is a gradual improvement in the results on all explanation-quality metrics: the max-sensitivity scores decrease, and the faithfulness estimation and sparseness increase, suggesting an improvement in the model’s interpretability. However, this improvement is accompanied by a certain decrease in the model’s accuracy. Therefore, the \(\lambda \) value must be carefully chosen; accordingly, we suggest that readers follow the protocol described in Sect. 4.4.

Training epochs. Figure 7 presents plots for three attribution quality metrics and clean test accuracy over 80 training epochs. It can be seen that the max-sensitivity values decrease relatively quickly, right from the first epoch. Both the faithfulness estimation and sparseness continue to improve moderately as the number of epochs increases. The results show that there is an interpretability-accuracy trade-off, and a gradual drop in accuracy can be seen. Therefore, the training process should be monitored, choosing the “sweet spot” where there is a balance between the desired interpretability quality and the required accuracy of the model.
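One simple way to operationalize this monitoring is to log the metrics per checkpoint and select the checkpoint with the best interpretability score among those that still meet an accuracy floor. The sketch below is only an illustration of that idea, not the protocol of Sect. 4.4; the function name `pick_checkpoint`, the accuracy floor, and all numbers in `history` are hypothetical placeholders rather than results from this paper.

```python
def pick_checkpoint(history, min_accuracy):
    """Return the logged checkpoint with the lowest max-sensitivity
    among those whose clean accuracy is at least `min_accuracy`.
    Returns None if no checkpoint meets the accuracy floor."""
    eligible = [h for h in history if h["accuracy"] >= min_accuracy]
    if not eligible:
        return None
    return min(eligible, key=lambda h: h["max_sensitivity"])


# Made-up monitoring log (placeholder values, for illustration only).
history = [
    {"epoch": 10, "accuracy": 0.93, "max_sensitivity": 0.80},
    {"epoch": 40, "accuracy": 0.91, "max_sensitivity": 0.45},
    {"epoch": 80, "accuracy": 0.86, "max_sensitivity": 0.30},
]

best = pick_checkpoint(history, min_accuracy=0.90)
print(best)
```

In practice the selection key could combine several metrics (for example, faithfulness and sparseness as well); a single metric is used here only to keep the sketch short.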

Fig. 7 The effect of the number of training epochs on test accuracy and on a subset of explanation-quality metrics. The scores were obtained by training PreResNet10 for 80 epochs using the NsLoss method with hyperparameter \(\lambda =60\). (a) clean accuracy over epochs, (b) faithfulness estimation (Alvarez Melis & Jaakkola, 2018) over epochs, (c) max-sensitivity (Yeh et al., 2019) over epochs, (d) sparseness (Chalasani et al., 2020) over epochs

6 Conclusions and future work

Our experimental results validate the effectiveness of adversarial training, Jacobian regularization, and our novel regularization-based approach (NsLoss) in improving the model’s interpretability by changing the model’s behavior such that state-of-the-art explainability methods produce explanations that are more focused and better aligned with human perception. This supports the results of prior research and hypotheses about the positive effect of adversarial training on model interpretability and sets the stage for further research in the field. Moreover, our comprehensive evaluation quantitatively demonstrated the superiority of our proposed method using well-accepted metrics for measuring the quality of explanations, and provided representative qualitative evidence of its capabilities based on saliency map visualizations.

Future work may include: (1) evaluating our method on other computer vision tasks and modern model architectures such as vision transformers (Dosovitskiy et al., 2021); we expect to see very similar results on other model architectures, datasets and tasks, such as object detection, pose estimation, and image captioning; (2) applying NsLoss to other domains (beyond computer vision), which should be fairly straightforward, as the method makes no assumptions about the nature of the input or the model’s architecture; (3) exploring the effect of NsLoss regularization on the nature of the features learned by the model, as has been done in prior research on adversarially trained models (Allen-Zhu & Li, 2022; Zhang & Zhu, 2019; Tsipras et al., 2018). Extrapolation of the results presented in this paper leads us to believe that NsLoss-trained models learn features that are better aligned with human perception than adversarially trained models; (4) investigating the effect of using adversarial perturbations instead of random perturbations for the proposed regularization method; (5) training a model to predict a single score from the various interpretability metrics/features that will be aligned with human rating of interpretability; and (6) finding a training-time method that will achieve state-of-the-art results on both adversarial robustness and interpretability.

Availability of data and material

All datasets used are publicly available.

Code availability

Code will be made public.

References

Allen-Zhu, Z., & Li, Y. (2022). Feature purification: How adversarial training performs robust deep learning. In 2021 IEEE 62nd annual symposium on foundations of computer science (FOCS). https://doi.org/10.1109/focs52979.2021.00098.

Altinisik, E., Messaoud, S., Sencar, H.T., & Chawla, S. (2022). A3T: Accuracy aware adversarial training.

Alvarez Melis, D., & Jaakkola, T. (2018). Towards robust interpretability with self-explaining neural networks. Advances in Neural Information Processing Systems 31.

Alvarez-Melis, D., & Jaakkola, T.S. (2018). On the robustness of interpretability methods. arXiv preprint arXiv:1806.08049.

Bach, S., Binder, A., Montavon, G., Klauschen, F., Müller, K.-R., & Samek, W. (2015). On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE, 10(7), e0130140.

Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate - a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B, 57, 289–300. https://doi.org/10.2307/2346101

Bhatt, U., Weller, A., & Moura, J.M. (2020). Evaluating and aggregating feature-based model explanations. arXiv preprint arXiv:2005.00631.

Brendel, W., Rauber, J., & Bethge, M. (2017). Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. arXiv preprint arXiv:1712.04248.

Carlini, N., & Wagner, D. (2017). Adversarial examples are not easily detected: Bypassing ten detection methods. In Proceedings of the 10th ACM workshop on artificial intelligence and security (pp. 3–14). ACM.

Carvalho, D. V., Pereira, E. M., & Cardoso, J. S. (2019). Machine learning interpretability: A survey on methods and metrics. Electronics, 8(8), 832.

Chalasani, P., Chen, J., Chowdhury, A.R., Wu, X., & Jha, S. (2020). Concise explanations of neural networks using adversarial training. In International conference on machine learning (pp. 1383–1391). PMLR.

Chen, J., Jordan, M.I., & Wainwright, M.J. (2019). HopSkipJumpAttack: A query-efficient decision-based attack.

Croce, F., & Hein, M. (2020). Reliable evaluation of adversarial robustness with an ensemble of diverse parameter-free attacks. In ICML.

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248–255). IEEE.

Ding, G.W., Sharma, Y., Lui, K.Y.C., & Huang, R. (2020). MMA training: Direct input space margin maximization through adversarial training.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2021). An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale.

Engstrom, L., Ilyas, A., Salman, H., Santurkar, S., & Tsipras, D. (2019). Robustness (Python Library). https://github.com/MadryLab/robustness.

Feinman, R., Curtin, R.R., Shintre, S., & Gardner, A.B. (2017). Detecting adversarial samples from artifacts. arXiv preprint arXiv:1703.00410.

Fidel, G., Bitton, R., & Shabtai, A. (2019). When explainability meets adversarial learning: Detecting adversarial examples using SHAP signatures.

Friedman, M. (1937). The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association, 32(200), 675–701. https://doi.org/10.1080/01621459.1937.10503522

Gerlings, J., Shollo, A., & Constantiou, I. (2021). Reviewing the need for explainable artificial intelligence (xAI). In Proceedings of the 54th Hawaii international conference on system sciences. https://doi.org/10.24251/hicss.2021.156.

Gilpin, L.H., Bau, D., Yuan, B.Z., Bajwa, A., Specter, M., & Kagal, L. (2018). Explaining explanations: An overview of interpretability of machine learning. In 2018 IEEE 5th international conference on data science and advanced analytics (DSAA) (pp. 80–89). IEEE.

Goodfellow, I.J., Shlens, J., & Szegedy, C. (2014). Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572.

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Identity mappings in deep residual networks. Lecture Notes in Computer Science, 630–645. https://doi.org/10.1007/978-3-319-46493-0_38

Hedström, A., Weber, L., Bareeva, D., Motzkus, F., Samek, W., Lapuschkin, S., & Höhne, M.M.-C. (2022). Quantus: An explainable AI toolkit for responsible evaluation of neural network explanations. arXiv preprint arXiv:2202.06861.

Hoffman, J., Roberts, D.A., & Yaida, S. (2019). Robust learning with jacobian regularization.

Howard, J. (2019). https://github.com/fastai/imagenette/.

Huang, G., Liu, Z., Maaten, L., & Weinberger, K.Q. (2018). Densely connected convolutional networks.

Jakubovitz, D., & Giryes, R. (2018). Improving dnn robustness to adversarial attacks using jacobian regularization. Lecture Notes in Computer Science (pp. 525–541). https://doi.org/10.1007/978-3-030-01258-8_32

Katzir, Z., & Elovici, Y. (2018). Detecting adversarial perturbations through spatial behavior in activation spaces. arXiv preprint arXiv:1811.09043.

Kokhlikyan, N., Miglani, V., Martin, M., Wang, E., Alsallakh, B., Reynolds, J., Melnikov, A., Kliushkina, N., Araya, C., & Yan, S., et al. (2020). Captum: A unified and generic model interpretability library for pytorch. arXiv preprint arXiv:2009.07896.

Krizhevsky, A., Hinton, G., et al. (2009). Learning multiple layers of features from tiny images. Citeseer: Technical report.

Kurakin, A., Goodfellow, I., & Bengio, S. (2016). Adversarial machine learning at scale.

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P., et al. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278–2324.

Lundberg, S.M., & Lee, S.-I. (2017). A unified approach to interpreting model predictions. In I. Guyon, U.V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Eds.), Advances in neural information processing systems 30 (pp. 4765–4774). Curran Associates, Inc. http://papers.nips.cc/paper/7062-a-unified-approach-to-interpreting-model-predictions.pdf.

Madry, A., Makelov, A., Schmidt, L., Tsipras, D., & Vladu, A. (2017). Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083.

Margeloiu, A., Simidjievski, N., Jamnik, M., & Weller, A. (2020). Improving interpretability in medical imaging diagnosis using adversarial training. arXiv preprint arXiv:2012.01166.

Metzen, J.H., Genewein, T., Fischer, V., & Bischoff, B. (2017). On detecting adversarial perturbations. arXiv preprint arXiv:1702.04267.

Montavon, G., Samek, W., & Müller, K.-R. (2018). Methods for interpreting and understanding deep neural networks. Digital Signal Processing, 73, 1–15.

Noack, A., Ahern, I., Dou, D., & Li, B. (2021). An empirical study on the relation between network interpretability and adversarial robustness. SN Computer Science, 2(1), 1–13.

Olah, C., Mordvintsev, A., & Schubert, L. (2017). Feature visualization. Distill. https://doi.org/10.23915/distill.00007. https://distill.pub/2017/feature-visualization

Ribeiro, M.T., Singh, S., & Guestrin, C. (2016). “Why should I trust you?”. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining - KDD ’16. https://doi.org/10.1145/2939672.2939778.

Salman, H., Ilyas, A., Engstrom, L., Kapoor, A., & Madry, A. (2020). Do adversarially robust imagenet models transfer better?

Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., & Batra, D. (2017). Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision (pp. 618–626).

Shapley, L. S. (1951). Notes on the N-Person Game II: The Value of an N-Person Game. Santa Monica, CA: RAND Corporation. https://doi.org/10.7249/RM0670

Simonyan, K., & Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

Smilkov, D., Thorat, N., Kim, B., Viégas, F., & Wattenberg, M. (2017). Smoothgrad: removing noise by adding noise. arXiv preprint arXiv:1706.03825.

Song, Y., Kim, T., Nowozin, S., Ermon, S., & Kushman, N. (2017). Pixeldefend: Leveraging generative models to understand and defend against adversarial examples. arXiv preprint arXiv:1710.10766.

Sundararajan, M., Taly, A., & Yan, Q. (2017). Axiomatic attribution for deep networks. In International conference on machine learning (pp. 3319–3328). PMLR.

Tschandl, P., Rosendahl, C., & Kittler, H. (2018). The ham10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Scientific Data, 5(1), 1–9.

Tsipras, D., Santurkar, S., Engstrom, L., Turner, A., & Madry, A. (2018). Robustness may be at odds with accuracy. In International conference on learning representations.

Wang, Y., Zou, D., Yi, J., Bailey, J., Ma, X., & Gu, Q. (2020). Improving adversarial robustness requires revisiting misclassified examples. In 8th international conference on learning representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net. https://openreview.net/forum?id=rklOg6EFwS.

Wong, E., Rice, L., & Kolter, J.Z. (2020). Fast is better than free: Revisiting adversarial training.

Woolson, R.F. (2007). Wilcoxon signed-rank test. Wiley encyclopedia of clinical trials, 1–3.

Yeh, C.-K., Hsieh, C.-Y., Suggala, A., Inouye, D.I., & Ravikumar, P.K. (2019). On the (in) fidelity and sensitivity of explanations. Advances in Neural Information Processing Systems 32.

Zhang, T., & Zhu, Z. (2019). Interpreting adversarially trained convolutional neural networks.

Zhang, C., Liu, A., Liu, X., Xu, Y., Yu, H., Ma, Y., & Li, T. (2020). Interpreting and improving adversarial robustness of deep neural networks with neuron sensitivity. IEEE Transactions on Image Processing, 30, 1291–1304.

Funding

Open access funding provided by Ben-Gurion University.

Author information

Contributions

Code development, experimental design, and manuscript writing were collaborative efforts, with equal contributions from O.M. and G.F. Ideation was supported by R.B., while all authors participated in the thorough review of the manuscript.

Ethics declarations

Conflict of interest

Not applicable.

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Editor: Sarunas Girdzijauskas.

Appendices

A Post-hoc explainability techniques for DNNs

There are many methods for explaining the predictions of machine learning models, and more specifically DNNs. In the computer vision domain, explanations usually consist of a saliency/attribution map, which scores each input pixel based on its positive or negative effect on encouraging the model to classify the input as a specific class. Some of the most prominent methods, which we use in our evaluation, include Integrated Gradients (IG) (Sundararajan et al., 2017), SHapley Additive exPlanations (SHAP) (Lundberg & Lee, 2017) (and specifically Gradient SHAP), Layer-wise Relevance Propagation (LRP) (Bach et al., 2015), and Gradient-weighted Class Activation Mapping (GradCam) (Selvaraju et al., 2017).

A.1 Integrated gradients

Integrated Gradients (IG) is a popular technique for explaining the predictions of machine learning models, and more specifically, deep neural networks. The method was proposed by Sundararajan et al. (2017). IG is a post-hoc explanation technique that assigns importance scores to the input features of a model by integrating the model’s gradients along a path from a baseline image to the input image.

The baseline image represents the absence of the input features, and it can be chosen in several ways. One common choice is to use a black image, but other approaches, such as using an average image, have been proposed. To compute the importance score for each input feature, IG calculates the gradient of the model’s output with respect to the input feature and integrates it along a path between the baseline image and the input image.

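Formally, the importance score for feature i can be computed as:

\[\textrm{IG}_i(x) = (x_i - x'_i) \int _{0}^{1} \frac{\partial f\left( x' + \alpha (x - x')\right) }{\partial x_i} \, d\alpha \qquad (7)\]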

Here, f represents the model, x represents the input image, \(x'\) represents the baseline image, and \(\alpha \) is a parameter that controls the interpolation between the baseline image and the input image. The integral in Eq. 7 can be approximated using numerical integration methods, such as the trapezoidal rule or Simpson’s rule.

By computing the path integral between the baseline image and the input image, IG obtains multiple estimates of the importance of every feature. This avoids the problem of saturated local gradients, which can occur in the backpropagation process when the gradients become too small to be informative. IG has been shown to be a versatile and effective method for explaining the predictions of deep neural networks across different domains, including computer vision, natural language processing, and genomics.
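To make the numerical approximation concrete, the following Python sketch approximates IG with the trapezoidal rule on a straight-line path from the baseline to the input. It is a minimal illustration under stated assumptions: the toy function `model`, the finite-difference helper `grad`, and the step count are hypothetical choices made here, and a real DNN would use automatic differentiation instead of finite differences. The last lines check the completeness property of IG, namely that the attributions sum (approximately) to the difference between the model output at the input and at the baseline.

```python
import math


def model(x):
    """Hypothetical toy differentiable model with two input features."""
    z = 2.0 * x[0] - x[1] + 0.5 * x[0] * x[1]
    return 1.0 / (1.0 + math.exp(-z))


def grad(f, x, eps=1e-6):
    """Central finite-difference estimate of the gradient of f at x."""
    g = []
    for i in range(len(x)):
        hi, lo = list(x), list(x)
        hi[i] += eps
        lo[i] -= eps
        g.append((f(hi) - f(lo)) / (2.0 * eps))
    return g


def integrated_gradients(f, x, baseline, steps=200):
    """Trapezoidal-rule approximation of integrated gradients
    along the straight line from `baseline` to `x`."""
    n = len(x)
    total = [0.0] * n
    for s in range(steps + 1):
        alpha = s / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        g = grad(f, point)
        # Endpoints get half weight in the trapezoidal rule.
        weight = 0.5 if s in (0, steps) else 1.0
        for i in range(n):
            total[i] += weight * g[i]
    # Scale the averaged gradient by the input-baseline difference.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.5, -0.5]
baseline = [0.0, 0.0]
attributions = integrated_gradients(model, x, baseline)

# Completeness: attributions should sum to f(x) - f(baseline).
completeness_gap = abs(sum(attributions) - (model(x) - model(baseline)))
print(attributions, completeness_gap)
```

Increasing `steps` tightens the approximation at the cost of more gradient evaluations, which is the usual accuracy-cost trade-off when applying IG to large models.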

A.2 SHapley additive explanations

Gradient SHAP (Lundberg & Lee, 2017) is a local interpretability method that approximates the Shapley values (Shapley, 1951) of the input features. Shapley values are a game-theoretic concept that measures the contribution of each player to the overall outcome of a game. In the context of machine learning, Shapley values measure the contribution of each input feature to the output prediction of a model.

To compute the Shapley values, we need to evaluate the model’s output for all possible subsets of features and compare the contribution of each feature to the model’s prediction. However, this is not feasible, as the number of possible feature subsets grows exponentially with the number of features.
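The cost of exact computation can be seen in a small sketch. The Python code below computes exact Shapley values by enumerating every coalition of features, which requires a number of model evaluations that grows as \(2^n\). It uses the classical Shapley formula rather than Gradient SHAP, and the toy model `f`, the input `x`, the all-zeros `baseline`, and the masking scheme in `value_fn` (absent features are replaced by their baseline value) are illustrative assumptions.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values via enumeration of all feature coalitions."""
    players = range(n_features)
    phi = [0.0] * n_features
    for i in players:
        others = [p for p in players if p != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size: |S|! (n-|S|-1)! / n!
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


# Hypothetical toy model with one additive term and one interaction term.
x = [2.0, 1.0, 3.0]
baseline = [0.0, 0.0, 0.0]


def f(v):
    return 3.0 * v[0] + v[1] * v[2]


def value_fn(subset):
    """Model output when only the features in `subset` are present."""
    masked = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return f(masked)


phi = shapley_values(value_fn, len(x))
print(phi)
```

With three features the enumeration is trivial, but an image with thousands of pixels would make it intractable, which is what motivates sampling- and gradient-based approximations.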

Gradient SHAP addresses this problem by approximating the Shapley values through expected gradients computed over a set of background samples. In particular, it computes the expected value of the gradient of the output prediction with respect to each input feature, when adding Gaussian noise to each feature.

Formally, let x be the input instance, f(x) be the output prediction of the model, and \(x'\) be a baseline instance that represents the absence of features. The Gradient SHAP score for feature i is given by:

where K is the number of background samples, \(x^{(k)}\) is the k-th background sample, \(\Delta _i f(x^{(k)})\) is the difference between the output prediction of the model for the input \(x^{(k)}\) with the i-th feature set to 1 and the output prediction for the baseline \(x'\), and \(w_k\) is a weight assigned to each background sample.

The weights are proportional to the difference between the expected output prediction of the model for the input \(x^{(k)}\) and the expected output prediction for the baseline \(x'\). Specifically, \(w_k\) is given by:

where \(\Delta _j f(x^{(k)})\) is the difference between the output prediction of the model for the input \(x^{(k)}\) with the j-th feature set to 1 and the output prediction for the baseline \(x'\).

Gradient SHAP has been shown to have several desirable properties, including local accuracy, missingness, and consistency (Shapley, 1951; Lundberg & Lee, 2017).

A.3 Layer-wise relevance propagation

LRP (Layer-wise Relevance Propagation) is an explanation technique for feed-forward neural networks that can be applied to various types of inputs, such as images, videos, or text. The LRP algorithm redistributes the prediction score of a particular class back towards the input features, based on the contribution of each input feature. The redistribution uses the weights and the neural activations computed in the forward pass. Relevance is propagated backward, from each layer to the layer preceding it, until the input layer is reached. The propagation is subject to a conservation rule: the sum of relevances remains constant at each step of back-propagation from the output layer towards the input layer.

In practice, LRP can use different rules to distribute the relevance scores. One such rule is LRP-\(\gamma \), where \(\gamma \) is a tuning parameter that can be adjusted to favor positive attributions over negative ones. As \(\gamma \) is increased, negative contributions are gradually canceled out. The LRP-\(\gamma \) rule can be defined as follows:

\[R_j = \sum _k \frac{a_j \left( w_{jk} + \gamma w_{jk}^{+}\right) }{\sum _{0,j} a_j \left( w_{jk} + \gamma w_{jk}^{+}\right) } R_k\]

where j and k are neurons at two consecutive layers of the network, \(a_j\) are the activations, \(w_{jk}\) are the weights, \(w_{jk}^{+} = \max (w_{jk}, 0)\) is the positive part of a weight, and \(R_k\) are the relevances. The rule redistributes the relevance score \(R_k\) of the k-th neuron to the j-th neuron, based on the activation \(a_j\) and the weight \(w_{jk}\) between the two neurons. The parameter \(\gamma \) controls the trade-off between positive and negative contributions.
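As an illustration of how such a rule operates, the following Python sketch applies the standard LRP-\(\gamma \) redistribution to a single fully connected layer. It assumes a bias-free layer so that relevance is conserved exactly; the function name `lrp_gamma` and all activations, weights, and relevances are hypothetical values chosen for the example.

```python
def lrp_gamma(a, w, r_upper, gamma=0.25, eps=1e-12):
    """Redistribute upper-layer relevances r_upper[k] to lower-layer
    neurons j using the LRP-gamma rule for one bias-free dense layer.

    a[j]       -- activation of lower-layer neuron j
    w[j][k]    -- weight from neuron j to upper-layer neuron k
    r_upper[k] -- relevance of upper-layer neuron k
    """
    n_lower, n_upper = len(a), len(r_upper)
    r_lower = [0.0] * n_lower
    for k in range(n_upper):
        # Contributions with positive weights boosted by gamma.
        contrib = [a[j] * (w[j][k] + gamma * max(w[j][k], 0.0))
                   for j in range(n_lower)]
        z = sum(contrib)
        if abs(z) < eps:
            continue  # no signal reached neuron k; nothing to redistribute
        for j in range(n_lower):
            r_lower[j] += contrib[j] / z * r_upper[k]
    return r_lower


a = [1.0, 2.0, 0.5]
w = [[0.5, -1.0],
     [0.25, 0.75],
     [-1.0, 0.5]]
r_upper = [0.6, 0.4]

r_lower = lrp_gamma(a, w, r_upper, gamma=0.25)
r_plain = lrp_gamma(a, w, r_upper, gamma=0.0)     # no positive boost
r_large = lrp_gamma(a, w, r_upper, gamma=100.0)   # strong positive boost
print(r_lower, r_plain, r_large)
```

Comparing `r_plain` with `r_large` shows the effect described above: as \(\gamma \) grows, the negative relevances shrink towards zero while the total relevance stays the same.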

Overall, LRP is a powerful technique for explaining the behavior of deep neural networks, as it provides a way to understand how each input feature contributes to the output prediction. Its flexibility in handling different types of inputs and its model-awareness make it a widely used method in the field of deep learning.

A.4 Gradient-weighted class activation mapping (GradCam)

GradCam (Selvaraju et al., 2017) is an extension of the Class Activation Mapping (CAM) technique, which produces a coarse localization map by computing the weighted sum of the activation maps in the last convolutional layer of the model. GradCam improves on CAM by using the gradients of the output class with respect to the activation maps in the last convolutional layer to obtain more precise and fine-grained localization.

Formally, given an input image x, let \(f_k(x)\) be the activation map of the last convolutional layer for the k-th class, and \(y_k\) be the output score of the k-th class. The GradCam saliency map \(L_{GradCam}^k(x)\) for the k-th class is defined as:

where \(\text {ReLU}(x) = \max (x, 0)\), and \(\frac{\partial y_k}{\partial f_{i,j}}\) is the gradient of the output score for class k with respect to the activation map at position (i, j).

Note that unlike CAM, GradCam can be applied to any layer in the network that has spatial dimensions, not just the last convolutional layer. This allows for a more fine-grained interpretation of the model’s decisions by identifying the specific regions of the input that are important for each class.
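The computation can be sketched in a few lines of Python. The code below follows the standard formulation of Selvaraju et al. (2017): each activation map is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and a ReLU is applied. The function name `grad_cam` and the tiny 2x2 activation and gradient values are hypothetical; in a real setting the gradients would come from back-propagation through the model.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from per-channel activation maps and the gradients
    of the class score with respect to those maps.

    Both arguments are lists of channels, each an H x W nested list.
    """
    n_channels = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weights: global average pooling of the gradients.
    weights = [sum(sum(row) for row in g) / (height * width)
               for g in gradients]
    cam = [[0.0] * width for _ in range(height)]
    for m in range(n_channels):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weights[m] * activations[m][i][j]
    # ReLU keeps only regions with a positive influence on the class.
    return [[max(v, 0.0) for v in row] for row in cam]


# Two hypothetical 2x2 activation maps and their gradients.
activations = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel opposes the class
]

cam = grad_cam(activations, gradients)
print(cam)
```

Locations dominated by the second channel are zeroed out by the ReLU, so only the regions that support the class remain in the map. In practice the map is then upsampled to the input resolution for visualization.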

In summary, post-hoc explainability techniques, such as Integrated Gradients, SHAP, LRP, and GradCam, provide valuable insights into the behavior of DNNs by identifying the input features that contribute the most to the model’s output. These methods have been extensively used to analyze the performance of DNNs in various tasks and domains, including computer vision, natural language processing, and speech recognition. However, they are not without limitations, and their results should always be interpreted with caution, as they are based on approximations and assumptions about the behavior of the model.

B Configurations used to train the models evaluated

See Tables 11, 12, 13, 14, 15, 16, 17.

C Statistical significance study

See Table 18.

D Additional qualitative results

The figures below are additional qualitative results obtained on the Imagenette dataset and the HAM10000 dataset, for different explainability methods and architectures. The heatmaps, left to right, correspond to the Standard, NsLoss, JacobReg, and Adversarial Training methods.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Moshe, O., Fidel, G., Bitton, R. et al. Improving interpretability via regularization of neural activation sensitivity. Mach Learn 113, 6165–6196 (2024). https://doi.org/10.1007/s10994-024-06549-4

DOI: https://doi.org/10.1007/s10994-024-06549-4