Abstract

The success of deep learning in the computer vision and natural language processing communities can be attributed to training very deep neural networks with millions or billions of parameters on massive amounts of data. However, a similar trend has largely eluded deep reinforcement learning (RL), where larger networks do not lead to performance improvements. Previous work has shown that this is mostly due to instability during the training of deep RL agents with larger networks. In this paper, we attempt to understand and address the training of larger networks for deep RL. We first show that naively increasing network capacity does not improve performance. Then, we propose a novel method that consists of (1) wider networks with DenseNet connections, (2) decoupling representation learning from the training of RL, and (3) a distributed training method to mitigate overfitting problems. Using this three-fold technique, we show that we can train very large networks that result in significant performance gains. We present several ablation studies to demonstrate the efficacy of the proposed method and to provide some intuition for the reasons behind the performance gain. We show that our proposed method outperforms other baseline algorithms on several challenging locomotion tasks.

1 Introduction

We have witnessed considerable improvements in the fields of computer vision (CV) (Chen et al., 2020; Krizhevsky et al., 2012; He et al., 2016a; Huang et al., 2017), natural language processing (NLP) (Devlin et al., 2019; Brown et al., 2020; Kaplan et al., 2020; Zhou et al., 2023), and robotics (Ahn et al., 2022; Brohan et al., 2022) in the last decade. These developments can largely be attributed to training very large neural networks with millions (or even billions or trillions) of parameters on huge amounts of data, using appropriate optimization techniques to stabilize training (Neyshabur et al., 2019). In general, the motivation for training larger networks comes from the intuition that larger networks allow better solutions because they enlarge the space of possible solutions. However, neural network training largely relies on finding good minimizers of highly non-convex loss functions, which are also shaped by choices such as the network architecture and batch size. This has driven a lot of research in these communities towards understanding the underlying reasons for these performance gains (Li et al., 2018; Lu et al., 2017; Nguyen & Hein, 2017; Zhang et al., 2017).

In striking contrast, the deep reinforcement learning (DRL) community has not reported a similar trend with regard to training larger networks. Some studies have reported that deep RL agents experience instability when trained with larger networks (Achiam et al., 2019; Henderson et al., 2018; Sinha et al., 2020; van Hasselt et al., 2018). As an example, Fig. 1 shows the results of a Soft Actor-Critic (SAC) (Haarnoja et al., 2018) agent that uses a multi-layer perceptron (MLP) for function approximation, as the number of layers increases while the number of units per layer is fixed at 256 (see also the loss surface). These plots show that naively using deeper networks leads to poor performance for a deep RL agent. Consequently, how to train deep RL agents with larger networks is not well understood, which is limiting in several ways. As a result, most of the reported work in the literature ends up using similar hyperparameters, such as the network structure and the number and size of layers.

Training curves of SAC agents with different numbers of layers and a fixed unit size (256) on the Ant-v2 environment, and the loss function surface (Li et al., 2018) of the deepest (16-layer) Q-network. The training curves suggest that simply building a deeper MLP with a fixed number of units does not improve the performance of DRL, whereas building a larger network is generally effective in supervised learning. The loss surface shows that deeper networks have a more complex loss surface that could be susceptible to the choice of hyperparameters (Li et al., 2018). Motivated by this, we conduct an extensive study on how to train larger networks that contribute to performance gains for RL agents.

Our work is motivated by this limitation. We explore the interplay between the size, structure, training, and performance of deep RL agents to provide some intuition and guidelines for using larger networks.

We present a large-scale study and provide empirical evidence for the use of larger networks to train DRL agents. First, we highlight the challenges that one might encounter when using larger networks to train deep RL agents. To circumvent these problems, we integrate a three-fold approach: decoupling feature representation from RL to efficiently produce high-dimensional features, employing DenseNet architecture to propagate richer information, and using distributed training methods to collect more on-policy transitions to reduce overfitting. Our method is a novel architecture that combines these three elements, and we demonstrate that our proposed method significantly improves the performance of RL agents in continuous control tasks. We also conduct an ablation study to show which component contributes to the performance gain. In this paper, we consider learning from state vectors, i.e., not from high-dimensional observations, such as images.

Our contributions can be summarized as follows:

-

We conduct a large-scale study on employing larger networks for DRL agents and empirically show that, contrary to deeper networks, wider networks can improve performance.

-

We propose a novel framework that synergistically combines recently proposed techniques to stabilize training: decoupling representation learning from RL, DenseNet architecture, and distributed training. Although each of these components has previously been proposed, the combination is novel and we demonstrate that it significantly improves performance.

-

We analyze the performance gain of our method using the effective rank of features and visualizations of the loss function landscapes of RL agents.

2 Related work

Our work is broadly motivated by Henderson et al. (2018), which empirically demonstrates that DRL algorithms are sensitive to training choices such as architectures, hyperparameters, and activation functions. That paper compares performance across different numbers of units and layers and shows that larger networks do not consistently improve performance. This runs contrary to intuition given recent progress on computer vision tasks such as ImageNet (Deng et al., 2009), where larger and more complex network architectures have been shown to achieve better performance (He et al., 2016a; Huang et al., 2017; Krizhevsky et al., 2012; Tan & Le, 2019).

van Hasselt et al. (2018) identify a deadly triad of function approximation, bootstrapping, and off-policy learning. When these three properties are combined, learning can be unstable and potentially diverge, with value estimates becoming unbounded. Several previous works have attempted to address this issue with techniques such as target networks (Mnih et al., 2015), double Q-learning (Van Hasselt et al., 2016), and n-step learning (Hessel et al., 2018). Our challenge of training larger networks is specifically related to function approximation; however, because the elements of the deadly triad are entangled in a complex manner, we also have to deal with related problems. Regarding network size, some studies investigated the effect of making MLPs larger for continuous control tasks (Achiam et al., 2019; Fu et al., 2019) and concluded that larger networks tend to perform better but also become more unstable and prone to divergence. In a related investigation, van Hasselt et al. (2018) employed CNNs as function approximators for Atari games and also observed instability when enlarging DQN-based networks (Mnih et al., 2015). The study also found that Double Q-Networks can sometimes stabilize training, although this effect was not consistent across network sizes. Similar studies of on-policy methods were performed by Andrychowicz et al. (2021) and Liu et al. (2021), showing that networks that are too small or too large can cause a significant drop in policy performance. Whereas these studies are limited to relatively small networks (hundreds of units with several layers), we conduct a more thorough study of much larger networks, specifically focusing on learning from state vectors.

Unsupervised learning has been used to train large networks that learn powerful representations for downstream tasks in natural language processing (Devlin et al., 2019; Radford et al., 2019) and computer vision (Chen et al., 2020; He et al., 2020). In the context of RL, auxiliary tasks, such as predicting the next state conditioned on past states and actions, have been widely studied to improve the sample efficiency of RL algorithms (Ha & Schmidhuber, 2018; Jaderberg et al., 2017b; Li et al., 2022; Shelhamer et al., 2017). Researchers have generally focused on learning good representations of the state input that produce low-dimensional features (Lesort et al., 2018; Munk et al., 2016). In contrast, Ota et al. (2020) propose an online feature extractor network (OFENet) that intentionally increases input dimensionality and demonstrate that a larger feature size improves both the sample efficiency and the control performance of RL. We leverage this idea and use larger inputs (or features) for RL agents, as well as larger networks for the policy and value function networks.

AutoRL approaches (Parker-Holder et al., 2022), which dynamically adjust hyperparameters during training, can also be used to build large networks. For example, Wan et al. (2022) combine a population-based method (Jaderberg et al., 2017a) with a Bayesian optimization framework to optimize hyperparameters, including the network architecture, to maximize returns during training. Mohan et al. (2023) empirically demonstrate that hyperparameter landscapes change over the course of training, which requires AutoRL algorithms to adjust hyperparameters dynamically. Although this work focuses on proposing a framework to train large networks while improving the performance of RL algorithms, the proposed framework can also be easily combined with these AutoRL approaches.

3 Method

While recent studies suggest that larger networks for DRL agents have the potential to improve performance, it is non-trivial to alleviate some potential issues that lead to instability when using larger networks to train RL agents.

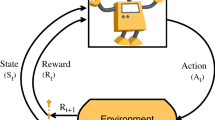

Our method is based on three key ideas: (1) decoupling representation learning from RL, (2) allowing better feature propagation through a suitable network architecture, and (3) collecting large amounts of more on-policy data via distributed training to avoid overfitting in larger networks. We first obtain good features separately from RL using an auxiliary task and then propagate the features more efficiently by employing the DenseNet (Huang et al., 2017) architecture. Additionally, we use a distributed RL framework that can mitigate the potential overfitting problem. In the following, we describe in detail the three elements that we use to train larger networks for deep RL agents. Our proposed approach is shown schematically in Fig. 2.

Proposed framework to train larger networks for deep RL agents. We combine three elements. First, we decouple representation learning from RL to extract an informative feature \(z_{s_t}\) from the current state \(s_t\) using a feature extractor network that is trained with an auxiliary task to predict the next state \(s_{t+1}\). Second, we use large networks with the DenseNet architecture, which allows for stronger feature propagation. Finally, we employ an Ape-X-like distributed training framework to mitigate the overfitting problems that tend to occur in larger networks and to collect more on-policy data, which can improve performance. FC refers to a fully connected layer.

3.1 Decoupling representation learning from RL

While the simplicity of learning the entire pipeline in an end-to-end fashion is appealing, updating all parameters of a large network using only a scalar reward signal can result in very inefficient training (Stooke et al., 2020). Decoupling unsupervised pretraining from downstream tasks is common in computer vision (He et al., 2020; Henaff, 2020) and has proven to be very efficient. Taking inspiration from this, we adopt the online feature extractor network (OFENet) (Ota et al., 2020) to learn meaningful features separately from RL training.

OFENet learns the representation vectors of states \(z_{s_t}\) and state-action pairs \(z_{s_t,a_t}\), and provides them to the agent instead of the original inputs \(s_t\) and \(a_t\), delivering significant performance improvements in continuous robot control tasks. As the representation vectors \(z_{s_t}\) and \(z_{s_t,a_t}\) are designed to have much higher dimensionality than the original inputs, OFENet matches our philosophy of providing a larger solution space that allows us to find a better policy. The representations are obtained by learning the mappings \(z_{s_t}=\phi _s(s_t)\) and \(z_{s_t,a_t}=\phi _{s,a}(s_t,a_t)\). Here, \(\phi _s\) and \(\phi _{s,a}\) are neural networks of arbitrary architecture with parameters \(\theta _{\phi _s}\) and \(\theta _{\phi _{s,a}}\), trained by minimizing the loss of an auxiliary task that predicts the next state \(s_{t+1}\) from the state-action representation \(z_{s_t,a_t}\):

\[L_{\text {aux}} = \mathbb{E}_{(s_t, a_t) \sim p, \pi }\left[ \left\| f_{\text {pred}}(z_{s_t,a_t}) - s_{t+1} \right\| ^2 \right] ,\]

where \(f_{\text {pred}}\) is a linear function of the representation \(z_{s_t, a_t}\). The auxiliary task is learned concurrently with the downstream RL task. In our experiments, we allow the input dimensionality to be much larger than in Ota et al. (2020). Furthermore, we also increase the network size of the RL agents. For more details, interested readers are referred to Ota et al. (2020).
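To make the auxiliary objective concrete, the following is a minimal pure-Python sketch that evaluates the loss for a batch of precomputed representations. It assumes that \(f_{\text {pred}}\) is a single linear layer with a hypothetical weight matrix `W` and bias `b`, and that the vectors `z_sa` come from \(\phi _{s,a}\). Gradient-based training of \(\phi _s\), \(\phi _{s,a}\), and \(f_{\text {pred}}\) is omitted, and the helper names are illustrative rather than taken from the OFENet codebase.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def f_pred(W: Matrix, b: Vector, z: Sequence[float]) -> Vector:
    """Hypothetical linear prediction head: W z + b maps a representation to next-state space."""
    return [sum(w * v for w, v in zip(row, z)) + b_i for row, b_i in zip(W, b)]


def auxiliary_loss(W: Matrix, b: Vector,
                   batch: Sequence[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Batch estimate of E[ ||f_pred(z_{s_t,a_t}) - s_{t+1}||^2 ].

    Each batch element is a pair (z_sa, s_next); z_sa is assumed to be the
    output of phi_{s,a} for a transition sampled from the replay buffer.
    """
    total = 0.0
    for z_sa, s_next in batch:
        pred = f_pred(W, b, z_sa)
        total += sum((p - s) ** 2 for p, s in zip(pred, s_next))
    return total / len(batch)


if __name__ == "__main__":
    # Toy setting: a 4-dimensional representation predicting a 2-dimensional next state.
    W = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    b = [0.0, 0.0]
    batch = [([1.0, 2.0, 3.0, 4.0], [1.0, 2.0]),
             ([0.0, 0.0, 1.0, 1.0], [1.0, 0.0])]
    print(auxiliary_loss(W, b, batch))  # 0.5
```

The first transition is predicted exactly and the second misses by 1 in one coordinate, so the mean squared error is 0.5. Because the head is linear, most of the representational capacity must come from \(\phi _s\) and \(\phi _{s,a}\).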

3.2 Distributed training

In general, larger networks need more data to improve the accuracy of function approximation (Deng et al., 2009; Hernandez et al., 2021). MLPs with many hidden layers are particularly prone to overfitting the training data, often performing worse than shallow networks (Ramchoun et al., 2017). In the context of RL, overfitting remains a problem even though we train and evaluate in the same environment: the agent is trained only on the limited trajectories it has experienced, which cannot cover the entire state-action space of the environment (Liu et al., 2021). Fu et al. (2019) showed that overfitting to the experience replay does occur. To mitigate this problem, Fedus et al. (2020) empirically showed that having more on-policy data in the replay buffer, i.e., collecting more than one transition per policy update, can improve the performance of the RL agent. However, collecting these transitions sequentially makes training extremely slow.

In light of these studies, we employ a distributed RL framework, which decouples learning from transition collection by running many actors in parallel on different environment instances (Horgan et al., 2018; Kapturowski et al., 2019). In particular, we use the Ape-X framework (Horgan et al., 2018), in which multiple actors collect transitions in parallel and a single learner receives experiences from a distributed prioritized replay (Schaul et al., 2016) (see Fig. 2). This increases the amount of data collected close to the current policy, i.e., more on-policy data, which can improve the performance of off-policy RL agents (Fedus et al., 2020) and mitigate rank collapse in Q-networks (Aviral Kumar et al., 2021). Note that more on-policy data can also be obtained without distributed training by collecting more than one transition per policy update, as shown in Fedus et al. (2020), but this is much slower. Furthermore, while we adopt the distributed training framework of Horgan et al. (2018), we do not use the RL algorithm used there; instead, we use two widely used off-policy RL algorithms in our experiments: Soft Actor-Critic (SAC) (Haarnoja et al., 2018) and the Twin Delayed Deep Deterministic policy gradient algorithm (TD3) (Fujimoto et al., 2018).
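The Ape-X learner samples from a prioritized replay buffer. As a sketch of only the sampling rule, the code below implements the proportional prioritization of Schaul et al. (2016), \(P(i) = p_i^{\alpha } / \sum _k p_k^{\alpha }\). It deliberately omits the importance-sampling correction and the sum-tree structure that a practical buffer would use. The function names and the value \(\alpha = 0.6\) in the example are illustrative assumptions, not settings reported in this paper.

```python
import random
from typing import List, Sequence


def sampling_probabilities(priorities: Sequence[float], alpha: float) -> List[float]:
    """Proportional prioritization: P(i) = p_i**alpha / sum_k p_k**alpha."""
    scaled = [p ** alpha for p in priorities]
    total = sum(scaled)
    return [s / total for s in scaled]


def sample_indices(priorities: Sequence[float], alpha: float, batch_size: int,
                   rng: random.Random) -> List[int]:
    """Draw transition indices (with replacement) according to P(i)."""
    probs = sampling_probabilities(priorities, alpha)
    return rng.choices(range(len(priorities)), weights=probs, k=batch_size)


if __name__ == "__main__":
    # Priorities are typically derived from absolute TD errors (plus a small epsilon).
    priorities = [0.1, 1.0, 2.0, 0.5]
    print(sampling_probabilities(priorities, alpha=0.6))
    print(sample_indices(priorities, alpha=0.6, batch_size=8, rng=random.Random(0)))
```

Setting \(\alpha = 0\) recovers uniform sampling, while larger \(\alpha\) concentrates updates on transitions with large TD errors.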

3.3 Network architectures

Tremendous developments have been made in the computer vision community in designing sophisticated architectures that enable the training of very large networks by making the gradients better behaved, such as skip connections and batch normalization (Ioffe & Szegedy, 2015; He et al., 2016a; Huang et al., 2017; Tan & Le, 2019). We focus specifically on the Dense Convolutional Network (DenseNet) architecture, which alleviates the vanishing-gradient problem, strengthens feature propagation, and reduces the number of parameters (Huang et al., 2017). DenseNet uses skip connections that directly connect each layer to all subsequent layers as \(y_{i} = f_i^{\text {dense}}([ y_0, y_1,\ldots , y_{i-1}])\), where \(y_i\) is the output of the \(i\)-th layer; that is, the outputs of all preceding layers are concatenated into a single tensor. Here, \(f_i^{\text {dense}}\) is a composite function that consists of a sequence of convolutions, Batch Normalization (BN) (Ioffe & Szegedy, 2015), and an activation function. An advantage of DenseNet is its improved flow of information and gradients throughout the network, which makes large networks easier to train. We borrow this architecture to train large networks for RL agents.
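The following sketch runs a forward pass in which every layer reads the concatenation of the input and all earlier outputs, which makes the dense connectivity \(y_i = f_i^{\text {dense}}([y_0, \ldots , y_{i-1}])\) concrete for the fully connected case. It is a simplification under stated assumptions. Each \(f_i^{\text {dense}}\) is a single fully connected layer followed by ReLU, with no bias and no batch normalization. The weights are seeded random toy values, and the helper names are hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def init_layer(in_dim: int, out_dim: int, rng: random.Random) -> Matrix:
    """Weight matrix of shape (out_dim, in_dim) with small uniform entries."""
    bound = 1.0 / in_dim ** 0.5
    return [[rng.uniform(-bound, bound) for _ in range(in_dim)] for _ in range(out_dim)]


def relu_layer(W: Matrix, x: Vector) -> Vector:
    """f(x) = ReLU(W x); bias and batch normalization are omitted for brevity."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W]


def build_dense_mlp(in_dim: int, growth: int, n_layers: int, seed: int = 0) -> List[Matrix]:
    """Layer i sees in_dim + i * growth inputs because all earlier outputs are concatenated."""
    rng = random.Random(seed)
    return [init_layer(in_dim + i * growth, growth, rng) for i in range(n_layers)]


def dense_forward(layers: List[Matrix], x: Vector) -> Vector:
    """Return the concatenation [y_0, y_1, ..., y_L], where y_0 = x."""
    features = list(x)
    for W in layers:
        y = relu_layer(W, features)
        features = features + y  # dense connection: append the new output
    return features


if __name__ == "__main__":
    layers = build_dense_mlp(in_dim=3, growth=4, n_layers=2)
    out = dense_forward(layers, [0.5, -1.0, 2.0])
    print(len(out))  # 3 + 2 * 4 = 11
```

The output width grows linearly as `in_dim + n_layers * growth`, and the raw input is always preserved in the first `in_dim` entries. This direct path from the input (and every intermediate feature) to later layers is what improves information and gradient flow.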

Although there are existing examples of applying the DenseNet architecture to DRL agents, the potential of this approach has yet to be fully investigated. Sinha et al. (2020) proposed a modification to the DenseNet architecture in their D2RL algorithm, wherein the state or the state-action pair is concatenated to each hidden layer of the multi-layer perceptron (MLP), except the final linear layer. In contrast to this modified version, Ota et al. (2020) strictly adhered to the original DenseNet architecture, using dense connections that concatenate all previous layer outputs for OFENet training. Similarly, our approach uses the original DenseNet architecture to represent the policy and value function networks. The schematic of the DenseNet architecture is also shown in Fig. 2.

4 Experimental settings

In this section, we summarize the settings used in our experiments. We run each experiment independently with five random seeds and report the average and \(\pm 1\) standard deviation, shown as solid lines and shaded regions, respectively, in training curves. The horizontal axis of a training curve is the number of gradient steps, which differs from the number of environment interaction steps only when we use distributed replay.

4.1 Metrics

We evaluate the experimental results on two metrics: the average return and the recently proposed effective rank (Aviral Kumar et al., 2021) of the feature matrices of Q-networks. Aviral Kumar et al. (2021) showed that when MLPs approximating policy and value functions are trained with bootstrapping, the effective rank of their features decreases, and that this rank collapse of the feature matrix results in poorer performance. The effective rank is computed as \({\text {srank}}_{\delta }(\Phi )=\min \left\{ k: \frac{\sum _{i=1}^{k} \sigma _{i}(\Phi )}{\sum _{i=1}^{d} \sigma _{i}(\Phi )} \ge 1-\delta \right\}\), where \(\sigma _{i}(\Phi )\) are the singular values of the feature matrix \(\Phi\) in decreasing order, \(d\) is the feature dimension, and \(\Phi\) consists of the features of the penultimate layer of the Q-networks. As in Aviral Kumar et al. (2021), we use \(\delta =0.01\) to calculate the effective rank in our experiments.
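As a minimal sketch of the metric, the function below computes \({\text {srank}}_{\delta }\) from a list of singular values. It assumes those values have already been obtained, for example with `numpy.linalg.svd(Phi, compute_uv=False)`. The code itself is pure Python and sorts the values in decreasing order so that the definition above applies directly.

```python
from typing import Sequence


def effective_rank(singular_values: Sequence[float], delta: float = 0.01) -> int:
    """srank_delta: smallest k whose top-k singular values cover >= 1 - delta of the total."""
    sv = sorted(singular_values, reverse=True)
    total = sum(sv)
    if total == 0:
        return 0  # convention for an all-zero feature matrix
    cumulative = 0.0
    for k, sigma in enumerate(sv, start=1):
        cumulative += sigma
        if cumulative / total >= 1.0 - delta:
            return k
    return len(sv)  # guard against floating-point round-off


if __name__ == "__main__":
    # Singular values of a hypothetical penultimate-layer feature matrix Phi.
    print(effective_rank([10.0, 5.0, 1.0, 0.01]))  # 3
```

In the example, the top three singular values already account for more than 99% of the total, so the fourth, near-zero direction does not count toward the effective rank. A collapsing Q-network shows this number shrinking as training proceeds.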

4.2 Implementations

4.2.1 RL agents

The hyperparameters of the RL algorithms are the same as in their original papers, except that TD3 uses a batch size of 256 instead of 100, as in Ota et al. (2020). Also, for a fair comparison with Ota et al. (2020), before training the RL agents we fill the replay buffer with transitions collected by a random policy for 10K time steps for SAC and 100K time steps for TD3. The network architectures and optimizers of the baselines are also the same as those used in the original papers (Fujimoto et al., 2018; Haarnoja et al., 2018; Ota et al., 2020). Our implementation of RL agents is based on the public codebase used by Ota et al. (2020).

4.2.2 OFENet

For OFENet, we also follow the implementation of Ota et al. (2020): all OFENet networks used in our experiments consist of 8-layer DenseNet architectures with Swish activation (Ramachandran et al., 2017). To implement OFENet, we refer to the official codebase provided by Ota et al. (2020). We also use target networks (Mnih et al., 2015) to stabilize OFENet training, since the distribution of experiences stored in the shared replay buffer can change more rapidly in the distributed training setting described in Sect. 3.2. Target networks are updated at each training step by slowly tracking the learned networks: \(\theta ^{\prime } \leftarrow \tau \theta +(1-\tau )\theta ^{\prime }\), where \(\theta\) are the parameters of the current OFENet and \(\theta ^{\prime }\) are the target network parameters. We use the target smoothing coefficient \(\tau =0.005\), the same value used to update the target value networks in SAC (Haarnoja et al., 2018); we did not tune this parameter.
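The target update above is a Polyak (exponential moving) average. The sketch below applies it to a flat list of parameters; the flat-list representation and the function name are illustrative assumptions. If the online parameters stay fixed, the target after \(n\) updates has moved a fraction \(1-(1-\tau )^n\) of the way toward them. With \(\tau = 0.005\), the averaging horizon is therefore on the order of \(1/\tau = 200\) updates.

```python
from typing import List, Sequence


def soft_update(target: Sequence[float], online: Sequence[float],
                tau: float = 0.005) -> List[float]:
    """One Polyak-averaging step: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(online, target)]


if __name__ == "__main__":
    target, online = [0.0], [1.0]
    for step in range(1, 601):
        target = soft_update(target, online)
        if step in (1, 200, 600):
            # With a fixed online value of 1.0, target = 1 - (1 - tau)**step.
            print(step, round(target[0], 4))
```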

4.2.3 Distributed training

The distributed training setting we use is similar to Stooke and Abbeel (2018), which collects experiences using \(N^{\text {core}}\) cores, each running \(N^{\text {env}}\) environments. Specifically, we use \(N^{\text {core}}=2\) and \(N^{\text {env}}=32\). Figure 3 shows the schematic of the distributed training. Since actions are computed with the latest network parameters, the collected experiences are closer to on-policy data.
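One way to quantify "more on-policy data" is the age of the oldest policy in the replay buffer, a quantity analyzed by Fedus et al. (2020). It is the number of gradient updates since the oldest stored transition was collected. For a full FIFO buffer, this age equals the replay capacity times the replay ratio (gradient updates per environment transition). The sketch below compares standard off-policy training (one transition per gradient step) with a hypothetical setting in which all \(N^{\text {core}} \times N^{\text {env}} = 64\) environments each contribute one transition per gradient step. The \(10^6\) capacity is also an assumed value. Neither the capacity nor this exact ratio is reported here, and the real asynchronous ratio depends on hardware throughput.

```python
def replay_ratio(gradient_steps: int, transitions: int) -> float:
    """Gradient updates applied per environment transition collected."""
    return gradient_steps / transitions


def oldest_policy_age(capacity: int, ratio: float) -> float:
    """Gradient updates since the oldest transition in a full FIFO buffer was collected."""
    return capacity * ratio


if __name__ == "__main__":
    capacity = 1_000_000  # hypothetical replay capacity
    n_core, n_env = 2, 32
    standard = oldest_policy_age(capacity, replay_ratio(1, 1))
    distributed = oldest_policy_age(capacity, replay_ratio(1, n_core * n_env))
    print(standard, distributed)  # 1000000.0 15625.0
```

Under these assumptions, the oldest data in the buffer was generated by a policy 64 times more recent than in standard training. This is the sense in which distributed collection makes the replayed data closer to on-policy.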

Schematic of asynchronous training. We use \(N^{\text {core}}=2\) cores for collecting experiences, where each core has \(N^{\text {env}}=32\) environments. Since the network parameters are shared, and training and transition collection are decoupled, the collected experiences are closer to on-policy data than in standard off-policy training, where the agent collects one transition per gradient step.

4.3 Visualizing loss surface of Q-function networks

Li et al. (2018) proposed a method to visualize the curvature of the loss function by introducing filter normalization. The authors empirically demonstrated that the non-convexity of loss functions can be problematic and that the sharpness of the loss surface correlates well with test error and generalization error. In light of this, we also visualize the loss surfaces of the networks to understand why deeper networks do not lead to better performance while wider networks result in high-performance policies (Fig. 5).

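To visualize the loss surface of our Q-networks, we use the authors’ implementation with the loss

\[J_{Q}(\theta )=\mathbb{E}_{\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) \sim \mathcal{D}}\left[ \frac{1}{2}\left( Q_{\theta }\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) -{\hat{Q}}\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) \right) ^{2}\right] ,\]

with

\[{\hat{Q}}\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) =r\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) +\gamma \, \mathbb{E}_{{\textbf{s}}_{t+1} \sim p}\left[ V_{\bar{\psi }}\left( {\textbf{s}}_{t+1}\right) \right] ,\]

where we follow the notation of the SAC paper (Haarnoja et al., 2018). To compute this objective \(J_{Q}(\theta )\), we collect all transitions used in training the deeper and wider networks, compute the target values \({\hat{Q}}\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right)\) after training has been completed, and store the tuples \(\left( s_t, a_t, {\hat{Q}}\left( {\textbf{s}}_{t}, {\textbf{a}}_{t}\right) \right)\) for all transitions. Then, we use the authors’ implementation to visualize the loss with the stored transitions and the trained weights of the Q-network. Please refer to Li et al. (2018) for more details.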
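At its core, the filter-normalized visualization of Li et al. (2018) does two things. It draws a random direction, rescales each of its filters to the norm of the corresponding filter in the trained weights, and then evaluates the loss along that direction. The sketch below is a minimal 1D version under stated simplifications. The "Q-network" is a hypothetical two-layer ReLU model \(Q(x) = W_2\,\mathrm{ReLU}(W_1 x)\) with \(x = [s; a]\), where each output neuron's weight row plays the role of a filter. The stored \((x, \hat{Q})\) tuples are toy values, and the loss is \(J_Q\) with the targets held fixed. The authors' implementation handles real networks, biases, and 2D surfaces; none of the names below come from it.

```python
import math
import random
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Params = Tuple[Matrix, Matrix]  # (W1, W2): hidden-layer and output-layer weights


def filter_normalize(direction: Matrix, weights: Matrix) -> Matrix:
    """Rescale each row of `direction` (one output neuron, i.e., one "filter")
    so that its norm equals the norm of the corresponding row of `weights`."""
    normalized = []
    for d_row, w_row in zip(direction, weights):
        d_norm = math.sqrt(sum(d * d for d in d_row)) or 1.0  # guard against a zero row
        w_norm = math.sqrt(sum(w * w for w in w_row))
        normalized.append([d * w_norm / d_norm for d in d_row])
    return normalized


def q_value(params: Params, x: Sequence[float]) -> float:
    """Toy two-layer Q-network Q(x) = W2 ReLU(W1 x), where x = [s; a]."""
    W1, W2 = params
    h = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W1]
    return sum(w * v for w, v in zip(W2[0], h))


def td_loss(params: Params, data: Sequence[Tuple[Sequence[float], float]]) -> float:
    """J_Q: mean of 0.5 * (Q(s, a) - Q_hat(s, a))^2 over stored (x, Q_hat) tuples."""
    return sum(0.5 * (q_value(params, x) - q_hat) ** 2 for x, q_hat in data) / len(data)


def loss_slice(params: Params, data, alphas: Sequence[float], seed: int = 0) -> List[float]:
    """Evaluate J_Q(theta + alpha * d) along one filter-normalized random direction d."""
    rng = random.Random(seed)
    direction = tuple(
        filter_normalize([[rng.gauss(0.0, 1.0) for _ in row] for row in W], W) for W in params
    )
    losses = []
    for alpha in alphas:
        shifted = tuple(
            [[w + alpha * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, D)]
            for W, D in zip(params, direction)
        )
        losses.append(td_loss(shifted, data))
    return losses


if __name__ == "__main__":
    # Hypothetical trained weights and stored (x, Q_hat) tuples, for illustration only.
    W1 = [[0.5, -0.2, 0.1], [0.3, 0.4, -0.1]]
    W2 = [[1.0, -0.5]]
    data = [([1.0, 0.0, 0.5], 0.3), ([0.0, 1.0, -0.5], -0.1), ([0.5, 0.5, 0.0], 0.2)]
    alphas = [-1.0, -0.5, 0.0, 0.5, 1.0]
    for alpha, loss in zip(alphas, loss_slice((W1, W2), data, alphas)):
        print(f"alpha={alpha:+.1f}  J_Q={loss:.4f}")
```

Normalizing per filter rather than globally makes perturbation sizes comparable across layers and across networks of different widths. This is what allows loss surfaces of the deeper and wider networks to be compared on the same axes.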

5 Experimental results

In this section, we present the results of numerical experiments that answer the following questions:

-

Can RL agents benefit from the usage of larger networks during training? More concretely, can using larger networks lead to better policies for DRL agents?

-

What characterizes a good architecture that facilitates better performance when using larger networks?

-

Can our method work across different RL algorithms as well as different tasks, including sparse reward settings?

-

How does the proposed framework perform in terms of wall clock time and sample efficiency?

5.1 Impact of network size on performance

In the first set of experiments, we try to investigate whether increasing the size of the network always leads to poor performance. We quantitatively measure the effectiveness of increasing the network size by changing the number of units \(N^{\text {unit}}\) and layers \(N^{\text {layer}}\), while the other parameters are fixed.

Figure 1a shows the training curves when increasing the number of layers while the unit size is fixed to \(N^{\text {unit}}=256\). As described in Sect. 1, performance becomes worse as the network becomes deeper. In Fig. 4a, we show the effect of increasing the number of units while the number of layers is fixed to \(N^{\text {layer}}=2\). Contrary to the results when making the network deeper, we observe a consistent improvement when making the network wider. To investigate more thoroughly, we also conduct a grid search over \(N^\text {unit}\in \left\{ 128, 256, 512, 1024, 2048 \right\}\) and \(N^\text {layer} \in \left\{ 1, 2, 4, 8, 16 \right\}\), and report the performance in Fig. 5. We can see a monotonic improvement in performance when widening networks at almost all depths.
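The grid above varies depth and width independently. The sketch below makes the resulting network sizes explicit by counting the parameters of a plain MLP Q-network built from fully connected layers with biases and a scalar output. The input size of 119 is a hypothetical value chosen for illustration, not one reported in this paper.

```python
def mlp_param_count(in_dim: int, n_unit: int, n_layer: int, out_dim: int = 1) -> int:
    """Number of weights and biases in an MLP with `n_layer` hidden layers of `n_unit` units."""
    if n_layer < 1:
        raise ValueError("n_layer must be at least 1")
    count = in_dim * n_unit + n_unit                      # input -> first hidden layer
    count += (n_layer - 1) * (n_unit * n_unit + n_unit)   # hidden -> hidden layers
    count += n_unit * out_dim + out_dim                   # last hidden -> linear output
    return count


if __name__ == "__main__":
    in_dim = 119  # hypothetical Q-network input size (state and action concatenated)
    for n_layer in (1, 2, 4, 8, 16):
        row = [mlp_param_count(in_dim, n_unit, n_layer) for n_unit in (128, 256, 512, 1024, 2048)]
        print(n_layer, row)
```

The count grows linearly in \(N^{\text {layer}}\) but quadratically in \(N^{\text {unit}}\). With this hypothetical input size, the 16-layer, 256-unit network has 1,017,857 parameters, whereas the 2-layer, 2048-unit network has 4,444,161.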

Grid search results of the maximum average return at one million training steps over different numbers of units and layers for the SAC agent on the Ant-v2 environment. A deeper MLP (see horizontally) does not consistently improve performance, while a wider MLP (see vertically) generally does.

This result is in line with the general belief that deeper networks are more difficult to train and more susceptible to the choice of hyperparameters (Ramchoun et al., 2017). This could be attributed to the initialization of the networks: it is known that the wider a layer, the closer the network is to the ideal conditions under which the initialization scheme was derived (Glorot & Bengio, 2010). To understand why deeper networks are harder to train than wider networks, we investigate the loss surface curvatures (Li et al., 2018) of both. We show the loss surfaces of SAC agents trained on the Ant environment for the deeper network (\(N^{\text {layer}}=16, N^{\text {unit}}=256\)) in Fig. 1b and the wider network (\(N^{\text {layer}}=2, N^{\text {unit}}=2048\)) in Fig. 4b, as well as on the HalfCheetah-v2 environment in Fig. 6, using the visualization method of Li et al. (2018) with the TD error of the SAC Q-functions as the loss. These figures show that wider networks have a nearly convex surface, while deeper networks have a more complex loss surface, which could be susceptible to the choice of hyperparameters (Li et al., 2018). Deeper and wider networks have also been compared in several works (He et al., 2016a; Li et al., 2018; Nguyen & Hein, 2017; Wu et al., 2019; Zagoruyko & Komodakis, 2016), which found that wider networks tend to generalize better owing to their smoother loss functions.

From these results, we observe and conclude that larger networks can be effective in improving deep RL performance. In particular, we achieve consistent performance gains when we widen individual layers rather than going deeper. Consequently, we fix the number of layers to \(N^{\text {layer}}=2\), and only change the number of units to learn larger networks in the following experiments.

Loss landscapes of models trained on HalfCheetah-v2 for one million steps, visualized using the technique in Li et al. (2018)

5.2 Architecture comparison

In the next set of experiments, we try to investigate the role of a synergistic combination of connectivity architecture, state representation, and distributed training to allow the usage of larger networks for training deep RL agents. A brief introduction to these techniques is described in Sect. 3.

5.2.1 Connectivity architecture

We first compare four connectivity architectures: standard MLP, MLP-ResNet, MLP-DenseNet, and MLP-D2RL, which is a recently proposed architecture to improve RL performance. MLP-ResNet is a modified version of Residual Networks (ResNet) (He et al., 2016a, b), which has a skip connection that bypasses the nonlinear transformations with an identity function: \(y_{i} = f_i^{\text {res}}(y_{i-1}) + y_{i-1}\), where \(y_i\) is the output of the \(i\)-th layer, and \(f_i^{\text {res}}\) is a residual module consisting of a fully connected layer and a nonlinear activation function. An advantage of this architecture is that the gradient can flow directly through the identity mapping from the top layers to the bottom layers. MLP-D2RL is identical to Sinha et al. (2020), and MLP-DenseNet is our proposed architecture defined in Sect. 3.3. We compare these four architectures on both small networks (\(N^{\text {unit}}=128\), denoted by S) and large networks (\(N^{\text {unit}}=2048\), denoted by L).
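One practical difference between the four connectivity patterns is the input width each hidden layer receives. The sketch below tabulates this under explicit assumptions. Every architecture's first hidden layer reads the raw input. MLP-ResNet keeps the width at \(N^{\text {unit}}\) because residual sums do not change dimensionality. MLP-D2RL re-concatenates the raw input to every later hidden layer. MLP-DenseNet appends each layer's \(N^{\text {unit}}\) outputs to its input. The output layer is excluded, and the function name and architecture labels are hypothetical.

```python
from typing import List


def hidden_input_dims(arch: str, in_dim: int, n_unit: int, n_layer: int) -> List[int]:
    """Input width seen by each hidden layer under different connectivity patterns."""
    dims = []
    for i in range(n_layer):
        if i == 0:
            dims.append(in_dim)  # every architecture starts from the raw input
        elif arch in ("mlp", "resnet"):
            dims.append(n_unit)  # plain stacking; residual sums keep the width at n_unit
        elif arch == "d2rl":
            dims.append(n_unit + in_dim)  # raw input re-concatenated to each hidden layer
        elif arch == "densenet":
            dims.append(in_dim + i * n_unit)  # all earlier outputs concatenated
        else:
            raise ValueError(f"unknown architecture: {arch}")
    return dims


if __name__ == "__main__":
    for arch in ("mlp", "resnet", "d2rl", "densenet"):
        print(arch, hidden_input_dims(arch, in_dim=119, n_unit=2048, n_layer=4))
```

Only the dense pattern lets later layers see every earlier feature directly. The price is that input widths, and therefore parameter counts, grow with depth.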

Figure 7 shows the average return (Fig. 7a) and the effective rank (Fig. 7b) during training. The results show that our MLP-DenseNet achieves the highest return for both small and large networks while mitigating rank collapse to a degree comparable to MLP-D2RL. Thus, MLP-DenseNet is the best of these four architectures, and we employ it for both the policy network and the value function network in the following experiments.

5.2.2 Decoupling representation learning from RL

Next, we evaluate the effectiveness of using OFENet (see Sect. 3.1) to decouple representation learning from RL. To evaluate performance across network sizes, we vary the number of units over \(N^{\text {unit}} \in \left\{ 256, 1024, 2048 \right\}\), denoted S, M, and L, respectively, and compare these against baseline SAC agents that do not use an OFENet-like structure with the auxiliary loss and are trained only from the scalar reward signal. In other words, the baseline agents are identical to the DenseNet architecture of the previous connectivity comparison experiment.

The results in Fig. 8 show that separating representation learning from RL improves control performance and mitigates rank collapse of Q-networks regardless of network size. Thus, we conclude that using larger representations, learned with the auxiliary task (see Sect. 3.1), contributes to improving performance on downstream RL tasks.

To investigate further, we also conduct a grid search over different numbers of units for both SAC and OFENet (Fig. 9). The baseline is the SAC agent without OFENet (leftmost column). Performance improves relative to the baseline agent (reading horizontally), but it saturates at an average return of around 8000. In the following experiments, we add distributed replay with the aim of attaining higher performance.

Fig. 10 Grid search results of the average maximum return over different numbers of units for SAC and OFENet with ApeX-like distributed training. Compared to Fig. 9, adding distributed RL yields monotonic improvement as SAC or OFENet is widened

5.2.3 Distributed RL

Finally, we add distributed replay (Horgan et al., 2018) to further improve performance while using larger networks. We use an implementation similar to Stooke and Abbeel (2018), which collects experiences using \(N^{\text {core}}\) cores, each running \(N^{\text {env}}\) environments. Specifically, we use \(N^\text {core}=2\) and \(N^{\text {env}}=32\).
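The data-collection layout described above can be sketched as follows: \(N^{\text{core}} = 2\) cores, each stepping \(N^{\text{env}} = 32\) environments and writing into a shared replay buffer. Only the two counts come from the text. The toy environment, the placeholder policy, and the helper names are hypothetical. The cores are also simulated sequentially here, whereas an implementation in the style of Stooke and Abbeel (2018) would run them in parallel processes. Ape-X additionally uses prioritized replay, which is omitted.

```python
from collections import deque

import numpy as np

N_CORE, N_ENV = 2, 32  # values stated in the text


class ToyVecEnv:
    """Hypothetical stand-in for N_ENV environments stepped together on one core."""

    def __init__(self, n_env, obs_dim, seed):
        self.rng = np.random.default_rng(seed)
        self.obs = self.rng.normal(size=(n_env, obs_dim))

    def step(self, actions):
        next_obs = self.obs + 0.1 * actions + 0.01 * self.rng.normal(size=self.obs.shape)
        rewards = -np.linalg.norm(next_obs, axis=1)
        transitions = list(zip(self.obs, actions, rewards, next_obs))
        self.obs = next_obs
        return transitions


def placeholder_policy(obs):
    """Hypothetical policy: push each state toward the origin."""
    return -obs


def collect_round(vec_envs, policy, replay):
    """One collection round: every core steps all of its environments once."""
    for env in vec_envs:
        replay.extend(env.step(policy(env.obs)))


replay = deque(maxlen=1_000_000)  # shared replay buffer
vec_envs = [ToyVecEnv(N_ENV, obs_dim=3, seed=core) for core in range(N_CORE)]
for _ in range(10):
    collect_round(vec_envs, placeholder_policy, replay)
print(len(replay))  # 2 cores * 32 envs * 10 rounds = 640
```

Each collection round adds \(N^{\text{core}} \times N^{\text{env}} = 64\) transitions. This is why the distributed variant fills its replay buffer with recent experience much faster than a single-environment agent does.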

As in the previous experiments, we perform a grid search over different numbers of units for SAC and OFENet with distributed replay (Fig. 10). We also compare the training curves of the three network sizes S, M, and L (Fig. 11). Note that the horizontal axis in Fig. 11 is the number of gradient updates applied to the network, not the number of environment interactions. Comparing Figs. 10 and 9, distributed training clearly yields further performance gains for all network sizes. Furthermore, performance improves monotonically as we increase the number of units for both SAC and OFENet. Thus, adding distributed replay contributes further performance gains when training larger networks.

5.2.4 How about generalization to different RL algorithms and environments?

To quantitatively measure the effectiveness of our method across RL algorithms and tasks, we evaluate two popular RL algorithms, SAC and TD3 (Haarnoja et al., 2018; Fujimoto et al., 2018), on five locomotion tasks in MuJoCo (Todorov et al., 2012). We denote our method by Ours. It uses the largest network from the previous experiments (\(N^{\text {units}}=2048\)) for both OFENet and the RL algorithm. We compare the proposed method against two baselines. The first is the original RL algorithm, denoted by Original. The second is OFENet, which, to the best of our knowledge, achieves the current state-of-the-art performance on these tasks.

We plot the training curves in Fig. 12 and list the highest average returns in Table 1. In both, SAC (Ours) and TD3 (Ours) achieve the best performance in almost all environments. The proposed method works with both RL algorithms and is thus agnostic to the choice of training algorithm. In particular, our method achieves much higher episode returns in Ant-v2 and Humanoid-v2, which are harder environments with larger state/action spaces and more training examples. Interestingly, the proposed method does not achieve reasonable solutions in Hopper-v2, which has the lowest dimensionality among the five environments. We conjecture that performance on lower-dimensional problems saturates early, so additional techniques cannot provide any significant gain.

5.3 Ablation study

Since our method integrates several ideas into a single agent, we conduct additional experiments to understand which components contribute to the performance gain. Our method consists of three elements: feature representation learning using OFENet, the DenseNet architecture, and distributed training. We also evaluate a variant that does not increase the network size, to confirm that a larger network improves performance. Figure 13 shows the ablation study for SAC on the Ant-v2 environment. Full is our method, which combines all three proposed elements and uses large networks (\(N^{\text {unit}}=2048, N^{\text {layer}}=2\)) for the SAC agent. sac is the original SAC implementation.

w/o Ape-X removes the Ape-X-like distributed training. Distributed RL collects more experiences from policies close to the current one. We therefore attribute the significant performance gain to learning from more on-policy data, which Fedus et al. (2020) also showed empirically. We also believe that receiving more novel experiences helps the agent generalize across the state-action space. In other words, without distributed training the agent still obtains new experiences by interacting with the environment, but far fewer than in the distributed, more on-policy setting. It therefore overfits to a limited set of trajectories. This problem becomes worse in harder environments with larger state/action spaces and with larger neural networks. Fu et al. (2019) have also empirically demonstrated this issue.

w/o OFENet removes OFENet and trains the entire architecture using only the scalar reward signal. The much lower return shows that learning large networks from the scalar reinforcement signal alone is difficult. It also shows that training the bottom layers (close to the input) with an auxiliary task, which yields informative features, enables better learning of the control policy.

w/o Larger NN reduces the number of units from \(N^{\text {unit}}=2048\) to 256 for both OFENet and SAC. This also significantly decreases performance; therefore, we can conclude that the use of larger networks is essential to achieve high performance.

Finally, w/o DenseNet replaces the MLP-DenseNet defined in Sect. 3.3 with the standard MLP architecture. The result shows that strengthening feature propagation does contribute to improving performance.

Note that while the full method improves performance the most, each component (decoupling representation learning from RL, distributed training, and the network architecture) also contributes to the performance gain.

Fig. 13 Training curves of SAC variants on Ant-v2. Each element contributes to the performance gain. Our combination of the DenseNet architecture, distributed training, and decoupled feature representation (shown as Full) allows us to train larger networks that perform significantly better than the baseline SAC algorithm (shown as sac)
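Each ablation variant switches off exactly one element of Full. The configuration sketch below makes that structure explicit. The field names are hypothetical and serve only to show which setting each variant changes. The values are the ones stated above: 2048 versus 256 units, and two layers for the SAC agent.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical switches for the three proposed elements plus network size."""
    use_ofenet: bool = True    # decoupled representation learning (Sect. 3.1)
    use_densenet: bool = True  # MLP-DenseNet instead of a plain MLP (Sect. 3.3)
    use_apex: bool = True      # Ape-X-like distributed training
    n_units: int = 2048        # units per layer for OFENet and SAC
    n_layers: int = 2          # layers of the SAC networks


FULL = AgentConfig()
ABLATIONS = {
    "Full": FULL,
    "w/o Ape-X": replace(FULL, use_apex=False),
    "w/o OFENet": replace(FULL, use_ofenet=False),
    "w/o Larger NN": replace(FULL, n_units=256),
    "w/o DenseNet": replace(FULL, use_densenet=False),
}


def changed_fields(cfg, reference=FULL):
    """Names of the fields in which `cfg` differs from the reference configuration."""
    return [f.name for f in fields(cfg) if getattr(cfg, f.name) != getattr(reference, f.name)]


for name, cfg in ABLATIONS.items():
    print(f"{name:14s} changes {changed_fields(cfg)}")
```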

5.4 Sparse reward setting

So far, we have evaluated the proposed framework in dense reward settings. In this section, we apply it to the sparse reward settings of the multi-goal environments of Plappert et al. (2018), in which a 7-DoF robot manipulator (Fetch) performs Reach, Slide, Push, and PickAndPlace tasks. Since our framework does not change the underlying RL algorithm, it can be naturally combined with plug-and-play methods. Specifically, we combine it with Hindsight Experience Replay (HER) (Andrychowicz et al., 2017), a standard method for solving RL problems with sparse rewards.
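For readers less familiar with HER, the sketch below shows its "future" relabeling strategy on a single episode with a Fetch-style sparse reward: 0 within a distance threshold of the goal, -1 otherwise. The 0.05 threshold is assumed to match the Fetch environments' default. future_p = 0.8, which relabels roughly four out of five transitions, is a common HER setting assumed here. Neither value, nor any of the helper names, is taken from this paper's implementation.

```python
import numpy as np

DISTANCE_THRESHOLD = 0.05  # success radius of the Fetch tasks (assumed default)


def sparse_reward(achieved_goal, desired_goal, threshold=DISTANCE_THRESHOLD):
    """Fetch-style sparse reward: 0 when within `threshold` of the goal, else -1."""
    dist = np.linalg.norm(achieved_goal - desired_goal, axis=-1)
    return -(dist > threshold).astype(np.float32)


def her_future_relabel(achieved_goals, desired_goal, rng, future_p=0.8):
    """HER 'future' strategy for one episode.

    achieved_goals: (T + 1, goal_dim) array of achieved goals ag_0, ..., ag_T.
    For each transition t (s_t -> s_{t+1}), with probability future_p the goal is
    replaced by ag_k for a random k in [t + 1, T]; rewards are then recomputed.
    """
    T = len(achieved_goals) - 1
    goals = np.repeat(desired_goal[None, :], T, axis=0)
    for t in range(T):
        if rng.random() < future_p:
            k = rng.integers(t + 1, T + 1)  # upper bound is exclusive
            goals[t] = achieved_goals[k]
    rewards = sparse_reward(achieved_goals[1:], goals)
    return goals, rewards


rng = np.random.default_rng(0)
# An episode in which the object never comes near a far-away desired goal.
achieved = np.stack([np.linspace(0.0, 1.0, 11)] * 3, axis=1)  # shape (11, 3)
goal = np.array([5.0, 5.0, 5.0])
print(sparse_reward(achieved[1:], goal).sum())  # -10.0: no original transition succeeds
goals, rewards = her_future_relabel(achieved, goal, rng)
print(rewards)  # relabeled transitions with k = t + 1 now carry reward 0
```

Relabeling turns an episode with no successes into one with some successful (reward 0) transitions, which is what makes learning from sparse rewards tractable.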

Figure 14 shows the success rates in the four sparse reward environments. The proposed framework enables the agent to learn a better policy than the baseline method. The baseline is a naive combination of HER and SAC that uses none of the three components of our framework. In particular, in the more difficult settings (Slide, Push, and PickAndPlace), our method quickly converges to a success rate of almost 100%, while the original algorithm does not achieve the goal within 300 thousand steps.

5.5 Analysis on computation and sample efficiency

In the final experiment, we evaluate the performance of the proposed framework with respect to wall clock time and sample efficiency.

5.5.1 Environmental and gradient steps per second

First, we analyze the number of environment and gradient steps per second for four methods. Table 2 compares the gradient and environment steps per second, averaged over all environments, for Ours, Ours w/o ApeX, OFENet, and Original. Ours w/o ApeX is our framework without the ApeX-like distributed training. Compared with it, Ours collects \(88\) (\(\approx 1362.0 / 15.4\)) times more transitions per second by employing the distributed training framework. Consequently, more on-policy transitions are stored in the replay buffer than without distributed training, which is important for training a high-performance policy. Ours also takes more gradient steps than Ours w/o ApeX, because our framework performs policy updates and transition collection asynchronously. This improves performance with respect to wall clock time.
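The asynchrony that lets Ours take more gradient steps than Ours w/o ApeX can be illustrated with two threads that share a replay buffer. One thread only collects, the other only updates, and neither waits for the other. In the sketch below, the environment step and the gradient step are replaced by hypothetical `time.sleep` stand-ins. A single actor thread also replaces the multiple collection cores, so the resulting counts say nothing about the numbers in Table 2.

```python
import random
import threading
import time
from collections import deque


def run_async(duration_s=0.3, batch_size=32):
    """Hypothetical actor/learner pair: collection and updates never wait on each other."""
    buffer = deque(maxlen=100_000)  # shared replay buffer
    lock = threading.Lock()
    stop = threading.Event()
    counts = {"env_steps": 0, "grad_steps": 0}

    def actor():
        while not stop.is_set():
            time.sleep(0.0005)  # stand-in for one environment step
            with lock:
                buffer.append(counts["env_steps"])  # stand-in for a transition
            counts["env_steps"] += 1

    def learner():
        while not stop.is_set():
            with lock:
                batch = random.sample(list(buffer), batch_size) if len(buffer) >= batch_size else None
            if batch is None:
                time.sleep(0.001)  # not enough data yet; try again shortly
                continue
            time.sleep(0.002)  # stand-in for one gradient step on `batch`
            counts["grad_steps"] += 1

    threads = [threading.Thread(target=actor), threading.Thread(target=learner)]
    for th in threads:
        th.start()
    time.sleep(duration_s)
    stop.set()
    for th in threads:
        th.join()
    return counts


print(run_async())
```

Counts vary from run to run and from machine to machine. The learner never blocks on data collection, and the actor never blocks on updates, except briefly for the lock around the shared buffer.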

5.5.2 Wall clock time performance

Next, we compare the methods with respect to wall clock time, that is, we compare models trained for a fixed period of time. Specifically, each method is given the time that the naive baseline SAC (Original) takes to complete its training.

Table 3 shows that our method, SAC (Ours), achieves the best performance in almost all environments, even though it takes \(2.3\) (\(\approx 43.9 / 19.3\)) times fewer gradient steps than Original. This is made possible by the distributed training framework. Thanks to the asynchronous data collection, our method collects more on-policy transitions, which prevents the agent from overfitting its networks to the experience replay (see Sect. 3.2 for details). Thus, the proposed framework is effective with respect to wall clock time.

5.5.3 Sample efficiency

Next, we analyze sample efficiency by comparing our method with the baselines at the same number of environment steps, without regard to wall clock time.

Table 4 shows the performance of each method after a fixed number of environment steps (1 million for Hopper-v2 and Walker2D-v2, and 3 million for the others). Note that the returns of SAC (Original) and SAC (OFENet) are identical to those in Table 1, because these methods do not use the distributed training framework. Compared with the baselines (OFENet and Original), our proposed framework performs worse. This is because the asynchronous training architecture (Fig. 3) collects a huge number of samples very quickly: the proposed framework collects data 88 times faster than the naive baseline. As a result, for the same number of environment steps, our method applies far fewer gradient steps than the compared baselines (approximately 100 times fewer). There are simply not enough updates to train the model, which results in poor performance.
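The gap in gradient steps under a fixed sample budget follows from simple arithmetic. The number of updates applied while collecting \(E\) environment steps equals \(E\) divided by the collection rate, multiplied by the update rate. The sketch below uses the two collection rates quoted in Sect. 5.5.1 (1362.0 and 15.4 transitions per second). As a simplifying assumption, it gives both runs the same hypothetical update rate. It is not a reproduction of Table 4.

```python
def grad_steps_within_budget(env_budget, env_steps_per_s, grad_steps_per_s):
    """Gradient steps applied while `env_budget` environment steps are collected,
    assuming both rates stay constant throughout training."""
    wall_clock_s = env_budget / env_steps_per_s
    return wall_clock_s * grad_steps_per_s


budget = 3_000_000  # environment steps, as used for most tasks in Table 4
update_rate = 20.0  # hypothetical gradient steps per second, same for both runs
no_apex = grad_steps_within_budget(budget, env_steps_per_s=15.4, grad_steps_per_s=update_rate)
ours = grad_steps_within_budget(budget, env_steps_per_s=1362.0, grad_steps_per_s=update_rate)
print(f"{no_apex:,.0f} vs {ours:,.0f} gradient steps; ratio {no_apex / ours:.1f}")
```

Under this assumption, the update deficit equals the collection speed-up, about 88×. This is of the same order as the roughly hundredfold gap in gradient steps described above.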

Finally, to show the effectiveness of the other two components of the proposed framework, namely the DenseNet architecture and the decoupling of representation learning from RL, we also report results without distributed training, denoted by Ours w/o ApeX in Table 4. Because it does not use distributed training, Ours w/o ApeX collects data in the same way as the baselines. Even so, it achieves much higher performance at the same number of environment steps. One can therefore choose whether to use the framework with or without distributed training depending on how important sample efficiency is. Our full framework is not very sample efficient due to the nature of distributed training, but the proposed method without distributed training still performs much better than the baselines. When sample efficiency is less of a concern, the distributed framework further improves performance by supplying large amounts of diverse, on-policy data.

6 Conclusion

Deep learning has catalyzed major breakthroughs in computer vision and natural language processing by making use of massive neural networks trained on huge amounts of data. Although these domains have greatly benefited from larger networks, the RL community has not witnessed a similar trend toward using larger networks to train high-performance agents. This is mainly due to the instability that arises when larger networks are used to train RL agents. In this paper, we studied the problem of training RL agents with larger networks. We proposed a novel framework for doing so, while reflecting on some of the important design choices that one has to make when using such networks. The proposed framework consists of three elements. First, we decouple representation learning from RL using an auxiliary loss that predicts the next state. This yields more informative features for learning control policies than training the entire network from a scalar reward signal. Second, the learned representation is propagated through the DenseNet architecture, which consists of very wide networks. Third, a distributed training framework provides large amounts of on-policy data whose distribution is much closer to the current policy. This enables the RL agent to mitigate overfitting and enhances generalization to novel scenarios. Our experiments demonstrate that this novel combination achieves significantly higher performance than current state-of-the-art algorithms across different off-policy RL algorithms and continuous control tasks. While this paper focused on learning from state vectors, we plan to apply the proposed framework to high-dimensional observations, such as images, in future work.
Furthermore, motivated by the surge of interest in applying Transformer-based networks to RL agents (Li et al., 2023; Chen et al., 2021; Shang et al., 2022; Konan et al., 2023), we also plan to incorporate a self-attention-based architecture into our framework. We believe that our approach can help toward training larger networks for deep RL agents.

Availability of data and material

Not applicable.

Code availability

Upon acceptance, we will upload the code to GitHub.

Notes

Code used for these plots can be found at https://github.com/tomgoldstein/loss-landscape.

References

Achiam, J., Knight, E., & Abbeel, P. (2019). Towards characterizing divergence in deep Q-learning. arXiv preprint arXiv:1903.08894

Ahn, M., Brohan, A., Brown, N., Chebotar, Y., Cortes, O., David, B., Finn, C., Fu, C., Gopalakrishnan, K., Hausman, K., Herzog, A., Ho, D., Hsu, J., Ibarz, J., Ichter, B., Irpan, A., Jang, E., Ruano, R.J., Jeffrey, K., Jesmonth, S., Joshi, N., Julian, R., Kalashnikov, D., Kuang, Y., Lee, K.-H., Levine, S., Lu, Y., Luu, L., Parada, C., Pastor, P., Quiambao, J., Rao, K., Rettinghouse, J., Reyes, D., Sermanet, P., Sievers, N., Tan, C., Toshev, A., Vanhoucke, V., Xia, F., Xiao, T., Xu, P., Xu, S., Yan, M., & Zeng, A. (2022). Do as I can, not as I say: Grounding language in robotic affordances. arXiv preprint arXiv:2204.01691

Andrychowicz, M., Raichuk, A., Stańczyk, P., Orsini, M., Girgin, S., Marinier, R., Hussenot, L., Geist, M., Pietquin, O., Michalski, M., Gelly, S., & Bachem, O. (2021). What matters in on-policy reinforcement learning? A large-scale empirical study. In Proceedings of international conference on learning representations (ICLR).

Andrychowicz, M., Wolski, F., Ray, A., Schneider, J., Fong, R., Welinder, P., McGrew, B., Tobin, J., Abbeel, P., & Zaremba, W. (2017). Hindsight experience replay. In Proceedings of advances in neural information processing systems (NeurIPS) (Vol. 30).

Kumar, A., Agarwal, R., Ghosh, D., & Levine, S. (2021). Implicit under-parameterization inhibits data-efficient deep reinforcement learning. In Proceedings of international conference on learning representations (ICLR).

Brohan, A., Brown, N., Carbajal, J., Chebotar, Y., Dabis, J., Finn, C., Gopalakrishnan, K., Hausman, K., Herzog, A., Hsu, J., Ibarz, J., Ichter, B., Irpan, A., Jackson, T., Jesmonth, S., Joshi, N., Julian, R., Kalashnikov, D., Kuang, Y., Leal, I., Lee, K.-H., Levine, S., Lu, Y., Malla, U., Manjunath, D., Mordatch, I., Nachum, O., Parada, C., Peralta, J., Perez, E., Pertsch, K., Quiambao, J., Rao, K., Ryoo, M., Salazar, G., Sanketi, P., Sayed, K., Singh, J., Sontakke, S., Stone, A., Tan, C., Tran, H., Vanhoucke, V., Vega, S., Vuong, Q., Xia, F., Xiao, T., Xu, P., Xu, S., Yu, T., & Zitkovich, B. (2022). RT-1: Robotics transformer for real-world control at scale. arXiv preprint arXiv:2212.06817

Brown, T.B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCandlish, S., Radford, A., Sutskever, I., & Amodei, D. (2020). Language models are few-shot learners. In H. Larochelle, M. Ranzato, R. Hadsell, M. Balcan, & H. Lin (Eds.), Proceedings of advances in neural information processing systems (NeurIPS).

Chen, T., Kornblith, S., Norouzi, M., & Hinton, G. (2020). A simple framework for contrastive learning of visual representations. In Proceedings of international conference on machine learning (ICML) (pp. 1597–1607).

Chen, T., Kornblith, S., Swersky, K., Norouzi, M., & Hinton, G. (2020). Big self-supervised models are strong semi-supervised learners. In Proceedings of advances in neural information processing systems (NeurIPS).

Chen, L., Lu, K., Rajeswaran, A., Lee, K., Grover, A., Laskin, M., Abbeel, P., Srinivas, A., & Mordatch, I. (2021). Decision transformer: Reinforcement learning via sequence modeling. Advances in Neural Information Processing Systems, 34, 15084–15097.

Deng, J., Dong, W., Socher, R., Li, L., Li, K., & Fei-Fei, L. (2009). ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on computer vision and pattern recognition.

Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 conference of the North American chapter of the association for computational linguistics: Human language technologies, volume 1 (long and short papers).

Fedus, W., Ramachandran, P., Agarwal, R., Bengio, Y., Larochelle, H., Rowland, M., & Dabney, W. (2020). Revisiting fundamentals of experience replay. In Proceedings of the 37th international conference on machine learning (pp. 3061–3071).

Fu, J., Kumar, A., Soh, M., & Levine, S. (2019). Diagnosing bottlenecks in deep Q-learning algorithms. In Proceedings of international conference on machine learning (ICML).

Fujimoto, S., van Hoof, H., & Meger, D. (2018). Addressing function approximation error in actor-critic methods. In Proceedings of international conference on machine learning (ICML).

Glorot, X., & Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In Y.W. Teh, & M. Titterington (Eds.), Proceedings of the international conference on artificial intelligence and statistics (AISTATS). Proceedings of machine learning research (Vol. 9, pp. 249–256). PMLR. https://proceedings.mlr.press/v9/glorot10a.html

Ha, D., & Schmidhuber, J. (2018). Recurrent world models facilitate policy evolution. In Proceedings of advances in neural information processing systems (NeurIPS).

Haarnoja, T., Zhou, A., Abbeel, P., & Levine, S. (2018). Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. arXiv preprint arXiv:1801.01290

He, K., Fan, H., Wu, Y., Xie, S., & Girshick, R. (2020). Momentum contrast for unsupervised visual representation learning. In Proceedings of IEEE conference on computer vision and pattern recognition (CVPR).

He, K., Zhang, X., Ren, S., & Sun, J. (2016a). Deep residual learning for image recognition. In Proceedings of IEEE conference on computer vision and pattern recognition (CVPR) (pp. 770–778). https://doi.org/10.1109/CVPR.2016.90

He, K., Zhang, X., Ren, S., & Sun, J. (2016b). Identity mappings in deep residual networks. In Proceedings of European conference on computer vision (ECCV).

Henaff, O. (2020). Data-efficient image recognition with contrastive predictive coding. In Proceedings of international conference on machine learning (ICML) (pp. 4182–4192).

Henderson, P., Islam, R., Bachman, P., Pineau, J., Precup, D., & Meger, D. (2018). Deep reinforcement learning that matters. In Proceedings of AAAI conference on artificial intelligence.

Hernandez, D., Kaplan, J., Henighan, T., & McCandlish, S. (2021). Scaling laws for transfer. arXiv preprint arXiv:2102.01293

Hessel, M., Modayil, J., van Hasselt, H., Schaul, T., Ostrovski, G., Dabney, W., Horgan, D., Piot, B., Azar, M. G., & Silver, D. (2018). Rainbow: Combining improvements in deep reinforcement learning. In Proceedings of AAAI conference on artificial intelligence.

Horgan, D., Quan, J., Budden, D., Barth-Maron, G., Hessel, M., Van Hasselt, H., & Silver, D. (2018). Distributed prioritized experience replay. arXiv preprint arXiv:1803.00933.

Huang, G., Liu, Z., Van Der Maaten, L., & Weinberger, K. Q. (2017). Densely connected convolutional networks. In 2017 IEEE Conference on computer vision and pattern recognition (CVPR) (pp. 2261–2269). https://doi.org/10.1109/CVPR.2017.243

Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of international conference on machine learning (ICML).

Jaderberg, M., Dalibard, V., Osindero, S., Czarnecki, W. M., Donahue, J., Razavi, A., Vinyals, O., Green, T., Dunning, I., Simonyan, K., Fernando, C., & Kavukcuoglu, K. (2017a). Population based training of neural networks. arXiv preprint arXiv:1711.09846.

Jaderberg, M., Mnih, V., Czarnecki, W. M., Schaul, T., Leibo, J. Z., Silver, D., & Kavukcuoglu, K. (2017b). Reinforcement learning with unsupervised auxiliary tasks. In Proceedings of international conference on learning representations (ICLR).

Kaplan, J., McCandlish, S., Henighan, T., Brown, T.B., Chess, B., Child, R., Gray, S., Radford, A., Wu, J., & Amodei, D. (2020). Scaling laws for neural language models. arXiv preprint arXiv:2001.08361

Kapturowski, S., Ostrovski, G., Dabney, W., Quan, J., & Munos, R. (2019). Recurrent experience replay in distributed reinforcement learning. In Proceedings of international conference on learning representations (ICLR).

Konan, S. G., Seraj, E., & Gombolay, M. (2023). Contrastive decision transformers. In K. Liu, D. Kulic, & J. Ichnowski (Eds.), Proceedings of the 6th conference on robot learning. Proceedings of machine learning research (Vol. 205, pp. 2159–2169). PMLR. https://proceedings.mlr.press/v205/konan23a.html

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. In F. Pereira, C. J. C. Burges, L. Bottou, & K. Q. Weinberger (Eds.), Proceedings of advances in neural information processing systems (NeurIPS) (Vol. 25, pp. 1097–1105). Curran Associates Inc.

Lesort, T., Díaz-Rodríguez, N., Goudou, J.-F., & Filliat, D. (2018). State representation learning for control: An overview. arXiv preprint arXiv:1802.04181

Li, H., Xu, Z., Taylor, G., Studer, C., & Goldstein, T. (2018). Visualizing the loss landscape of neural nets. In Proceedings of advances in neural information processing systems (NeurIPS) (pp. 6391–6401).

Li, W., Luo, H., Lin, Z., Zhang, C., Lu, Z., & Ye, D. (2023). A survey on transformers in reinforcement learning. Transactions on Machine Learning Research (Survey Certification).

Li, X., Shang, J., Das, S., & Ryoo, M. (2022). Does self-supervised learning really improve reinforcement learning from pixels? Advances in Neural Information Processing Systems, 35, 30865–30881.

Liu, Z., Li, X., Kang, B., & Darrell, T. (2021). Regularization matters in policy optimization. In Proceedings of international conference on learning representations (ICLR).

Lu, Z., Pu, H., Wang, F., Hu, Z., & Wang, L. (2017). The expressive power of neural networks: A view from the width. In Proceedings of advances in neural information processing systems (NeurIPS) (pp. 6232–6240).

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., Petersen, S., Beattie, C., Sadik, A., Antonoglou, I., King, H., Kumaran, D., Wierstra, D., Legg, S., & Hassabis, D. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529–533.

Mohan, A., Benjamins, C., Wienecke, K., Dockhorn, A., & Lindauer, M. (2023). AutoRL hyperparameter landscapes. arXiv preprint arXiv:2304.02396

Munk, J., Kober, J., & Babuška, R. (2016). Learning state representation for deep actor-critic control. In IEEE Conference on decision and control (CDC) (pp. 4667–4673).

Neyshabur, B., Li, Z., Bhojanapalli, S., LeCun, Y., & Srebro, N. (2019). The role of over-parametrization in generalization of neural networks. In Proceedings of international conference on learning representations (ICLR). https://openreview.net/forum?id=BygfghAcYX

Nguyen, Q., & Hein, M. (2017). The loss surface of deep and wide neural networks. In Proceedings of international conference on machine learning (ICML) (pp. 2603–2612).

Ota, K., Oiki, T., Jha, D., Mariyama, T., & Nikovski, D. (2020). Can increasing input dimensionality improve deep reinforcement learning? In Proceedings of international conference on machine learning (ICML) (pp. 7424–7433).

Parker-Holder, J., Rajan, R., Song, X., Biedenkapp, A., Miao, Y., Eimer, T., Zhang, B., Nguyen, V., Calandra, R., Faust, A., Hutter, F., & Lindauer, M. (2022). Automated reinforcement learning (AutoRL): A survey and open problems. Journal of Artificial Intelligence Research, 74, 517–568.

Plappert, M., Andrychowicz, M., Ray, A., McGrew, B., Baker, B., Powell, G., Schneider, J., Tobin, J., Chociej, M., Welinder, P., Kumar, V., & Zaremba, W. (2018). Multi-goal reinforcement learning: Challenging robotics environments and request for research. arXiv preprint arXiv:1802.09464

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language models are unsupervised multitask learners.

Ramachandran, P., Zoph, B., & Le, Q.V. (2017). Searching for activation functions. arXiv preprint arXiv:1710.05941

Ramchoun, H., Idrissi, M. A. J., Ghanou, Y., & Ettaouil, M. (2017). New modeling of multilayer perceptron architecture optimization with regularization: An application to pattern classification. IAENG International Journal of Computer Science, 44(3), 261–269.

Schaul, T., Quan, J., Antonoglou, I., & Silver, D. (2016). Prioritized experience replay. In Proceedings of international conference on learning representations (ICLR).

Shang, J., Kahatapitiya, K., Li, X., & Ryoo, M. S. (2022). Starformer: Transformer with state-action-reward representations for visual reinforcement learning. In European conference on computer vision (pp. 462–479). Springer.

Shelhamer, E., Mahmoudieh, P., Argus, M., & Darrell, T. (2017). Loss is its own reward: Self-supervision for reinforcement learning. In Proceedings of international conference on learning representations (ICLR).

Sinha, S., Bharadhwaj, H., Srinivas, A., & Garg, A. (2020). D2RL: Deep dense architectures in reinforcement learning. arXiv preprint arXiv:2010.09163

Stooke, A., & Abbeel, P. (2018). Accelerated methods for deep reinforcement learning. arXiv preprint arXiv:1803.02811

Stooke, A., Lee, K., Abbeel, P., & Laskin, M. (2020). Decoupling representation learning from reinforcement learning. arXiv preprint arXiv:2009.08319

Tan, M., & Le, Q. (2019). EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of international conference on machine learning (ICML) (pp. 6105–6114). PMLR.

Todorov, E., Erez, T., & Tassa, Y. (2012). MuJoCo: A physics engine for model-based control. In Proceedings of IEEE/RSJ international conference on intelligent robots and systems (IROS).

van Hasselt, H., Doron, Y., Strub, F., Hessel, M., Sonnerat, N., & Modayil, J. (2018). Deep reinforcement learning and the deadly triad. CoRR.

Van Hasselt, H., Guez, A., & Silver, D. (2016). Deep reinforcement learning with double q-learning. In Thirtieth AAAI conference on artificial intelligence.

Wan, X., Lu, C., Parker-Holder, J., Ball, P. J., Nguyen, V., Ru, B., & Osborne, M. (2022). Bayesian generational population-based training. In International conference on automated machine learning (pp. 14–1). PMLR.

Wu, Z., Shen, C., & Van Den Hengel, A. (2019). Wider or deeper: Revisiting the resnet model for visual recognition. Pattern Recognition, 90, 119–133.

Zagoruyko, S., & Komodakis, N. (2016). Wide residual networks. In Proceedings of British machine vision conference (BMVC).

Zhang, C., Bengio, S., Hardt, M., Recht, B., & Vinyals, O. (2017). Understanding deep learning requires rethinking generalization. In Proceedings of international conference on learning representations (ICLR).

Zhou, C., Li, Q., Li, C., Yu, J., Liu, Y., Wang, G., Zhang, K., Ji, C., Yan, Q., He, L., Peng, H., Li, J., Wu, J., Liu, Z., Xie, P., Xiong, C., Pei, J., Yu, P., & Sun, L. (2023). A comprehensive survey on pretrained foundation models: A history from bert to chatgpt. arXiv preprint arXiv:2302.09419

Funding

This work is not funded by any institution.

Author information

Contributions

Kei Ota participated in developing the paper’s idea, implementing codes, doing experiments, and writing the paper. Devesh K. Jha and Asako Kanezaki participated in developing the paper’s idea and writing the paper.

Ethics declarations

Conflict of interest

Not applicable.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Yes.

Additional information

Editor: Lijun Zhang.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ota, K., Jha, D.K. & Kanezaki, A. A framework for training larger networks for deep Reinforcement learning. Mach Learn 113, 6115–6139 (2024). https://doi.org/10.1007/s10994-024-06547-6

DOI: https://doi.org/10.1007/s10994-024-06547-6