Abstract

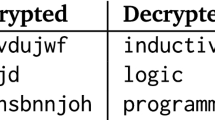

A magic value in a program is a constant symbol that is essential for the execution of the program but has no clear explanation for its choice. Learning programs with magic values is difficult for existing program synthesis approaches. To overcome this limitation, we introduce an inductive logic programming approach to efficiently learn programs with magic values. Our experiments on diverse domains, including program synthesis, drug design, and game playing, show that our approach can (1) outperform existing approaches in terms of predictive accuracies and learning times, (2) learn magic values from infinite domains, such as the value of pi, and (3) scale to domains with millions of constant symbols.


1 Introduction

A magic value in a program is a constant symbol that is essential for the correct execution of the program but has no clear explanation for its choice. For instance, consider the problem of classifying lists. Figure 1 shows positive and negative examples. Figure 2 shows a hypothesis which discriminates between the positive and negative examples. Learning this hypothesis involves the identification of the magic number 7.

Magic values are fundamental to many areas of knowledge, including physics and mathematics. For instance, the value of pi is essential to compute the area of a disk. Likewise, the gravitational constant is essential to identify whether an object subject to its weight is in mechanical equilibrium. Similarly, consider the classical AI task of learning to play games. To play the game connect four, a learner must correctly understand the rules of this game, which implies that they must discover the magic value four, i.e. four tokens in a row.

Although fundamental to AI, learning programs with magic values is difficult for existing program synthesis approaches. For instance, many recent inductive logic programming (ILP) (Muggleton, 1991; Cropper & Dumancic, 2022) approaches first enumerate all possible rules allowed in a program (Corapi et al., 2011; Kaminski et al., 2018; Raghothaman et al., 2019; Evans & Grefenstette, 2018) and then search for a subset of them. For example, ASPAL (Corapi et al., 2011) precomputes every possible rule and uses an answer set solver to find a subset of them. Other approaches similarly represent constants as unary predicate symbols (Evans & Grefenstette, 2018; Cropper & Morel, 2021). Both approaches suffer from two major limitations. First, they need a finite and tractable number of constant symbols to search through, which is clearly infeasible for large and infinite domains, such as when reasoning about continuous values. Second, they might generate rules with irrelevant magic values that never appear in the data, and thus suffer from performance issues. Older ILP approaches similarly struggle with magic values. For instance, for Progol (Muggleton, 1995) to learn a rule with a constant symbol, that constant must appear in the bottom clause of an example. Progol, therefore, struggles to learn recursive programs with constant values. It can also struggle when the bottom clause grows extremely large due to many potential magic values.

The goal of this paper, and therefore its main contribution, is to overcome these limitations by introducing an ILP approach that can efficiently learn programs with magic values, including values from infinite and continuous domains. The key idea of our approach, which is heavily inspired by Aleph’s lazy evaluation approach (Srinivasan & Camacho, 1999), is to not enumerate all possible magic values but to instead generate hypotheses with variables in place of constant symbols that are later filled in by a learner. In other words, the learner first builds a partial general hypothesis and then lazily fills in the specific details (the magic values) by examining the given data. For instance, reconsider the task of identifying the magic number 7 in a list. The learner first constructs a partial intermediate hypothesis as the one shown in Fig. 3. In the first clause, the first-order variable B is marked as a constant with the internal predicate @magic. However, it is not bound to any particular constant symbol. The value for this magic variable is lazily identified by executing this hypothesis on the examples.

As the example in Fig. 3 illustrates, the key advantages of our approach compared to existing ones are that it (1) does not rely on enumeration of all constant symbols but only considers candidate constant values which can be obtained from the examples, (2) can learn programs with magic values from large and infinite domains, and (3) can learn magic values for recursive programs.
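The lazy identification of magic values can be sketched in a few lines of Python. This is a minimal illustration, not MagicPopper's implementation: it assumes a single partial rule of the form `f(A) :- member(A,B), @magic(B)`, and the example lists and function names are hypothetical. Candidate values for `B` are taken only from the positive examples, never from an enumeration of all constants.

```python
# Hypothetical examples: a list is positive when it contains the unknown magic number.
positives = [[1, 7, 3], [7, 2], [4, 5, 7]]
negatives = [[1, 2, 3], [4, 5], [2, 9]]


def candidate_values(positives):
    """Values of B for which member(A, B) holds on at least one positive example."""
    return {value for example in positives for value in example}


def find_magic_values(positives, negatives):
    """Keep the candidates that cover every positive and no negative example."""
    return sorted(
        b
        for b in candidate_values(positives)
        if all(b in e for e in positives) and not any(b in e for e in negatives)
    )


print(find_magic_values(positives, negatives))  # [7]
```

Only six candidate values are tested here, regardless of how many constants the domain contains.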

To implement our approach, we build on the learning from failures (LFF) (Cropper & Morel, 2021) approach. LFF is a constraint-driven ILP approach where the goal is to accumulate constraints on the hypothesis space. A LFF learner continually generates and tests hypotheses, from which it infers constraints. For instance, if a hypothesis is too general (i.e. entails a negative example), then a generalisation constraint prunes generalisations of this hypothesis from the hypothesis space.

Current LFF approaches (Cropper & Morel, 2021; Cropper, 2022; Purgał et al., 2022) cannot, however, reason about partial hypotheses, such as the one shown in Fig. 3. They must instead enumerate candidate constant symbols using unary predicate symbols. Current approaches, therefore, suffer from the same limitations as other recent ILP approaches, i.e. they struggle to scale to large and infinite domains. We, therefore, extend the LFF constraints to prune such intermediate partial hypotheses. Each constraint prunes sets of intermediate hypotheses, each of which represents the set of its instantiations. We prove that these extended constraints are optimally sound: they do not prune optimal solutions from the hypothesis space.

We implement our magic value approach in MagicPopper, which, as it builds on the LFF learner Popper, supports predicate invention (Cropper & Morel, 2021) and learning recursive programs. MagicPopper can learn programs with magic values from domains with millions of constant symbols and scale to infinite domains. For instance, we show that MagicPopper can learn (an approximation of) the value of pi. In addition, in contrast to existing approaches, MagicPopper does not need to be told which arguments may be bound to magic values but can instead automatically identify them if any are needed. This fully automatic approach, however, comes at a cost: it can increase learning times and lower predictive accuracies.

1.1 Contributions

We claim that our approach can improve learning performance when learning programs with magic values. To support our claim, we make the following contributions:

1. We introduce a procedure for learning programs in domains with large and potentially infinite numbers of constant symbols.

2. We extend the LFF hypothesis constraints to additionally prune hypotheses with constant symbols. We prove the optimal soundness of these constraints.

3. We implement our approach in MagicPopper, which supports learning recursive programs and predicate invention.

4. We experimentally show on multiple domains (including program synthesis, drug design, and game playing) that our approach can (1) scale to large search spaces with millions of constant symbols, (2) learn from infinite domains, and (3) outperform existing systems in terms of predictive accuracies and learning times when learning programs with magic values.

2 Related work

2.1 Numeric discovery

Early discovery systems identified relevant numerical values using a fixed set of basic operators, such as linear regression, which combined existing numerical values. The search followed a combinatorial design (Langley et al., 1983; Zytkow, 1987) or was based on beam search guided by heuristics, such as correlation (Nordhausen & Langley, 1990) or qualitative proportionality (Falkenhainer & Michalski, 1986). These systems could rediscover physical laws with magic values. However, the class of learnable concepts was limited. BACON (Langley et al., 1983), for instance, cannot learn disjunctions representing multiple equations. Conversely, MagicPopper can learn recursive programs and perform predicate invention. Moreover, MagicPopper can take as input normal logic program background knowledge and is not restricted to a fixed set of predefined operators.

2.2 Symbolic regression

Symbolic regression searches a space of mathematical expressions, using genetic programming algorithms (Augusto & Barbosa, 2000) or formulating the problem as a mixed integer non-linear program (Austel et al., 2017). However, these approaches cannot learn recursive programs nor perform predicate invention and are restricted to learning mathematical expressions.

2.3 Program synthesis

Program synthesis (Shapiro, 1983) approaches based on the enumeration of the search space (Si et al., 2019; Ellis et al., 2021) struggle to learn in domains with a large number of constant symbols. For instance, the Apperception engine (Evans et al., 2021) disallows constant symbols in learned hypotheses, apart from the initial conditions represented as ground facts. To improve the likelihood of identifying relevant constants, Hemberg et al. (2019) manually identify a set of constants from the problem description. Compared to generate-and-test approaches, analytical approaches do not enumerate all candidate programs and can be faster (Kitzelmann, 2009).

Several program synthesis systems consider partial programs in the search. Neo (Feng et al., 2018) constructs partial programs, successively fills their unassigned parts, and prunes partial programs which have no feasible completion. By contrast, MagicPopper only fills partial hypotheses with constant symbols. Moreover, MagicPopper evaluates hypotheses based on logical inference only while Neo also uses statistical inference. Finally, Neo cannot learn recursive programs. Perhaps the most similar work is Sketch (Solar-Lezama, 2009), which uses a SAT solver to search for suitable constants given a partial program. This approach expects as input a skeleton of a solution: it is given a partial program and the task is to fill in the magic values with particular constant symbols. Conversely, MagicPopper learns both the program and the magic values.

2.4 ILP

2.4.1 Bottom clauses

Early ILP approaches, such as Progol (Muggleton, 1995) and Aleph (Srinivasan, 2001), use bottom clauses (Muggleton, 1995) to identify magic values. The bottom clause is the logically most-specific clause that explains an example. By constructing the bottom clause, these approaches restrict the search space and, in particular, identify a subset of relevant constant symbols to consider. However, this bottom clause approach has multiple limitations. First, the bottom clause may grow large which inhibits scalability. Second, this approach cannot use constants that do not appear in the bottom clause, which is constructed from a single example. Third, this approach struggles to learn recursive programs and does not support predicate invention. Finally, as they rely on mode declarations (Muggleton, 1995) to build the bottom clause, they need to be told which argument of which relations should be bound to a constant.

2.4.2 Lazy evaluation

The most related work is an extension of Aleph that supports lazy evaluation (Srinivasan & Camacho, 1999). During the construction of the bottom clause, Aleph replaces constant symbols with existentially quantified output variables. During the refinement search of the bottom clause, Aleph finds substitutions for these variables by executing the partial hypothesis on the positive and negative examples. In other words, instead of enumerating all constant symbols, lazy evaluation only considers constant symbols computable from the examples. Therefore, lazy evaluation provides better scalability to large domains. This approach can identify constant symbols not seen in the bottom clause. Moreover, in contrast with MagicPopper, it can also identify constant symbols whose value arises from reasoning from multiple examples, such as coefficients in linear regression or numerical inequalities. It can also predict output numerical variables using custom loss functions measuring error (Srinivasan et al., 2006). However, this approach inherits some of the aforementioned limitations of bottom clause approaches, including limited learning of recursion and lack of predicate invention. Moreover, the user needs to provide a definition capable of computing appropriate constant symbols from lists of inputs, such as a definition for computing a threshold or regression coefficients from data. The user must also provide a list of variables that should be lazily evaluated or bound to constant symbols in learned hypotheses.

2.4.3 Regression

First-order regression (Karalič & Bratko, 1997) and structural regression tree (Kramer, 1996) predict continuous numerical values from examples and background knowledge. First-order regression builds a logic program that can include literals performing linear regression, whereas MagicPopper cannot perform linear regression. Structural regression tree builds trees with a numerical value assigned to each leaf. In contrast with MagicPopper, these two approaches do not learn optimal programs.

2.4.4 Logical decision and clustering trees

Tilde (Blockeel & De Raedt, 1998) and TIC (Blockeel et al., 1998) are logical extensions of decision tree learners and can learn hypotheses with constant values as part of the nodes that split the examples. These nodes are conjunctions built from the mode declarations. Tilde and TIC evaluate each candidate node, and select the one which results in the best split of the examples. Tilde can also use a discretisation procedure to find relevant numerical constants from large, potentially infinite domains, while making the induction process more efficient (Blockeel & De Raedt, 1997). However, this approach only handles numerical values while MagicPopper can handle magic values of any type. Moreover, Tilde cannot learn recursive programs and struggles to learn from small numbers of examples.

2.4.5 Meta-interpretive learning

Meta-interpretive learning (MIL) (Muggleton et al., 2014) uses meta-rules, which are second-order clauses acting as program templates, to learn programs. A MIL learner induces programs by searching for substitutions for the variables in meta-rules. These variables usually denote predicate variables, i.e. variables that can be bound to a predicate symbol. For instance, the MIL learner Metagol finds variable substitutions by constructing a proof of the examples. Metagol can learn programs with magic values by also allowing some variables in meta-rules to be bound to constant symbols. With this approach, Metagol, therefore, never considers constants which do not appear in the proof of at least one positive example and thus does not enumerate all constants in the search space. Our magic value approach is similar in that we construct a hypothesis with variables in it, then find substitutions for these variables by testing the hypothesis on the training examples. However, a key difference is that Metagol needs a user-provided set of meta-rules as input to precisely define the structure of a hypothesis, which is often difficult to provide, especially when learning programs with relations of arity greater than two. Moreover, Metagol does not remember failed hypotheses during the search and might consider again hypotheses which have already been proved incomplete or inconsistent. Conversely, MagicPopper can prune the hypothesis space upon failure of completeness or consistency with the examples, which can improve learning performance.

2.4.6 Meta-level ILP

To overcome the limitations of older ILP systems, many recent ILP approaches are meta-level (Cropper et al., 2020) approaches, which predominantly formulate the ILP problem as a declarative search problem. A key advantage of these approaches is a greater ability to learn recursive and optimal programs. Many of these recent approaches precompute every possible rule in a hypothesis (Corapi et al., 2011; Kaminski et al., 2018; Evans & Grefenstette, 2018; Raghothaman et al., 2019). For instance, ASPAL (Corapi et al., 2011) precomputes every possible rule in a hypothesis space, which means it needs to ground rules with respect to every allowed constant symbol. This pure enumeration approach is intractable for domains with a large number of constant symbols and impossible for domains with infinitely many. Moreover, the variables which should be bound to constants must be provided as part of the mode declarations by the user (Corapi et al., 2011).

Other recent meta-level ILP systems, such as \(\delta \)ILP (Evans & Grefenstette, 2018) and Popper (Cropper & Morel, 2021), do not directly allow constant symbols in clauses but instead require that constant symbols are provided as unary predicates. These unary predicates are assumed to be user-provided. Moreover, since the size of the search space is exponential in the number of predicate symbols, this approach does not scale and, in particular, cannot handle domains with an infinite number of constant symbols. Conversely, MagicPopper identifies relevant constant symbols by executing hypotheses over the positive examples, and can scale to infinite domains. In addition, it does not express constant symbols with additional predicates and thus can learn shorter hypotheses.

3 Problem setting

Logic preliminaries

We assume familiarity with logic programming (Lloyd, 2012) but restate some key terminology. A variable is a string of characters starting with an uppercase letter. A function symbol is a string of characters starting with a lowercase letter. A predicate symbol is a string of characters starting with a lowercase letter. The arity n of a function or predicate symbol p is the number of arguments it takes. A unary or monadic predicate is a predicate with arity one. A constant symbol is a function symbol with arity zero. A term is a variable or a function symbol of arity n immediately followed by a tuple of n terms. An atom is a tuple \(p(t_1, ..., t_n)\), where p is a predicate of arity n and \(t_1\), ..., \(t_n\) are terms, either variables or constants. An atom is ground if it contains no variables. A literal is an atom or the negation of an atom. A clause is a set of literals. A constraint is a clause without a positive literal. A definite clause is a clause with exactly one positive literal. A program is a set of definite clauses. A substitution \(\theta = \{v_1 / t_1, ..., v_n/t_n \}\) is the simultaneous replacement of each variable \(v_i\) by its corresponding term \(t_i\). A clause \(C_1\) subsumes a clause \(C_2\) if and only if there exists a substitution \(\theta \) such that \(C_1 \theta \subseteq C_2\). A program \(H_1\) subsumes a program \(H_2\), denoted \(H_1 \preceq H_2\), if and only if \(\forall C_2 \in H_2, \exists C_1 \in H_1\) such that \(C_1\) subsumes \(C_2\). A program \(H_1\) is a specialisation of a program \(H_2\) if and only if \(H_2 \preceq H_1\). A program \(H_1\) is a generalisation of a program \(H_2\) if and only if \(H_1 \preceq H_2\).
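The subsumption relation defined above can be checked by a small backtracking search. The following Python sketch is illustrative only and makes several assumptions: a literal is a hypothetical triple `(is_positive, predicate, args)`, a clause is a list of such literals, a program is a list of clauses, and terms are restricted to variables and constants written as strings. The terms of the subsumed clause are treated as fixed.

```python
def is_variable(term):
    """Variables start with an uppercase letter, as in the preliminaries."""
    return term[:1].isupper()


def match(literal, target, theta):
    """Return theta extended so that literal under theta equals target, else None."""
    (sign, pred, args), (t_sign, t_pred, t_args) = literal, target
    if (sign, pred, len(args)) != (t_sign, t_pred, len(t_args)):
        return None
    theta = dict(theta)
    for term, t_term in zip(args, t_args):
        if is_variable(term):
            # A variable must map to the same term everywhere in the clause.
            if theta.setdefault(term, t_term) != t_term:
                return None
        elif term != t_term:
            return None
    return theta


def subsumes(c1, c2, theta=None):
    """True if some substitution theta maps every literal of c1 into c2."""
    theta = {} if theta is None else theta
    if not c1:
        return True
    for target in c2:
        extended = match(c1[0], target, theta)
        if extended is not None and subsumes(c1[1:], c2, extended):
            return True
    return False


def program_subsumes(h1, h2):
    """H1 subsumes H2 if every clause of H2 is subsumed by some clause of H1."""
    return all(any(subsumes(c1, c2) for c1 in h1) for c2 in h2)


# f(A) :- head(A,B)   versus   f(A) :- head(A,b), tail(A,C)
general = [(True, "f", ("A",)), (False, "head", ("A", "B"))]
specific = [(True, "f", ("A",)), (False, "head", ("A", "b")), (False, "tail", ("A", "C"))]
print(subsumes(general, specific), subsumes(specific, general))  # True False
```

In this example the program containing `specific` is a specialisation of the program containing `general`, using the substitution `{B/b}`.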

3.1 Learning from failures

Our problem setting is the learning from failures (LFF) (Cropper & Morel, 2021) setting, which in turn is based upon the learning from entailment setting (Muggleton & De Raedt, 1994). LFF uses hypothesis constraints to restrict the hypothesis space. LFF assumes a meta-language \({\mathcal {L}}\), which is a language about hypotheses. Hypothesis constraints are expressed in \({\mathcal {L}}\). A LFF input is defined as:

Definition 1

A LFF input is a tuple \((E^{+},E^{-},B,{{\mathcal {H}}},C)\) where \(E^{+}\) and \(E^{-}\) are sets of ground atoms representing positive and negative examples respectively, B is a definite program representing background knowledge, \({{\mathcal {H}}}\) is a hypothesis space, and C is a set of hypothesis constraints expressed in the meta-language \({\mathcal {L}}\).

Given a set of hypothesis constraints C, we say that a hypothesis H is consistent with C if, when written in \({{\mathcal {L}}}\), H does not violate any constraint in C. We call \({{\mathcal {H}}}_{C}\) the subset of \({\mathcal {H}}\) consistent with C. We define a LFF solution:

Definition 2

Given a LFF input \((E^{+},E^{-},B,{{\mathcal {H}}},C)\), a LFF solution is a hypothesis \(H \in {{\mathcal {H}}}_C\) such that H is complete with respect to \(E^+\) (\(\forall e \in E^+, B\cup H \models e\)) and consistent with respect to \(E^-\) (\(\forall e \in E^-, B\cup H \not \models e\)).

Conversely, given a LFF input, a hypothesis H is incomplete when \(\exists e \in E^{+}, H \cup B \not \models e\), and is inconsistent when \(\exists e \in E^{-}, H \cup B \models e\).

In general, there might be multiple solutions given a LFF input. We associate a cost to each hypothesis and prefer the ones with minimal cost. We define an optimal solution:

Definition 3

Given a LFF input \((E^{+},E^{-},B,{{\mathcal {H}}},C)\) and a cost function cost : \({{\mathcal {H}}} \rightarrow \mathbb {R}\), a LFF optimal solution \(H_1\) is a LFF solution such that, for all LFF solutions \(H_2\), \(cost(H_1)\le cost(H_2)\).

A common bias is to express the cost as the size of a hypothesis. In the following, we use this bias, and we measure the size of a hypothesis as the number of literals in it.

3.1.1 Constraints

A hypothesis that is not a solution is called a failure. A LFF learner identifies constraints from failures to restrict the hypothesis space. We distinguish several kinds of failures, among which are the following. If a hypothesis is incomplete, a specialisation constraint prunes its specialisations, as they are provably also incomplete. If a hypothesis is inconsistent, a generalisation constraint prunes its generalisations, as they are provably also inconsistent. A hypothesis is totally incomplete when \(\forall e \in E^{+}, H \cup B \not \models e\). If a hypothesis is totally incomplete, a redundancy constraint prunes hypotheses that contain one of its specialisations as a subset (Cropper & Morel, 2021). These constraints are optimally sound: they do not prune optimal solutions from the hypothesis space (Cropper & Morel, 2021).
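The mapping from failures to constraints can be summarised with a short Python sketch. It is a simplification under stated assumptions: a hypothesis is modelled as a Python predicate that stands in for the entailment test \(B \cup H \models e\), and the function name and examples are hypothetical. The sketch only reports which kinds of constraints apply; it does not build the constraints themselves.

```python
def constraints_from_failure(hypothesis, positives, negatives):
    """Return the kinds of constraints that a tested hypothesis gives rise to."""
    covered = [e for e in positives if hypothesis(e)]
    constraints = []
    if len(covered) < len(positives):
        # Incomplete: specialisations are also incomplete.
        constraints.append("specialisation")
    if any(hypothesis(e) for e in negatives):
        # Inconsistent: generalisations are also inconsistent.
        constraints.append("generalisation")
    if not covered:
        # Totally incomplete: redundant inside larger hypotheses.
        constraints.append("redundancy")
    return constraints


# Hypothetical data: the hypothesis accepts lists of length 2 only.
positives = [["a", "b"], ["a", "b", "c"]]
negatives = [["a"]]
print(constraints_from_failure(lambda lst: len(lst) == 2, positives, negatives))
# ['specialisation']
```

A hypothesis for which the returned list is empty is a solution, since it is both complete and consistent.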

Example 1

(Hypothesis constraints) We call \(c_2\) the unary predicate which holds when its argument is the number 2. Consider the following positive examples \(E^+\) and the hypothesis \(H_0\):

The second example is a list of length 3 while the hypothesis \(H_0\) only entails lists of length 2. Therefore, the hypothesis \(H_0\) does not cover the second positive example and thus is incomplete. We can soundly prune all its specialisations as they also are incomplete. In particular, we can prune the specialisations \(H_1\) and \(H_2\):

4 Magic evaluation

The constraints described in the previous section prune hypotheses. In particular, they can prune hypotheses with constant symbols as shown in Example 1. However, hypotheses that are identical except for their constant symbols are treated independently despite their similarities.

For instance, Popper could consider all of the hypotheses represented on the left of Fig. 4. Each of these hypotheses would be considered independently. For each of them, Popper learns constraints which prune specialisations, generalisations, or redundancy of this single hypothesis but do not apply to other hypotheses. By contrast, as shown on the right of Fig. 4, MagicPopper represents all these hypotheses jointly as a single one by using variables in place of constant symbols. Thus, MagicPopper reasons simultaneously about hypotheses with similar program structure but different constant symbols.

MagicPopper extends specialisation, generalisation, and redundancy constraints to apply to such partial hypotheses.

Moreover, the unary predicate symbols used by Popper must be provided as bias: it is assumed the user can provide a finite and tractable number of them. Conversely, MagicPopper represents the set of hypotheses with similar structure but with different constant symbols as a single one, and therefore can handle infinite constant domains.

In this section, we introduce MagicPopper’s representation, present these extended constraints, and prove they are optimally sound.

4.1 Magic variables

A LFF learner uses a meta-language \({\mathcal {L}}\) to reason about hypotheses. We extend this meta-language \({\mathcal {L}}\) to represent partial hypotheses with unbound constant symbols. We define a magic variable:

Definition 4

A magic variable is an existentially quantified first-order variable.

A magic variable is a placeholder for a constant symbol. It marks a variable as a constant but does not require the particular constant symbol to be identified. Particular constant symbols can be identified at a later stage. We represent magic variables with the unary predicate symbol @magic. For example, in the following program H, the variable B marked with the syntax @magic is a magic variable:

This magic variable is not yet bound to any particular value. The use of the predicate symbol @magic allows us to concisely represent the set of all possible substitutions of a variable.

The predicate symbol @magic is an internal predicate. For this reason, literals with this predicate symbol are not taken into account in the rule size. For instance, the hypothesis H above has size 2. Therefore, compared to approaches that use additional unary body literals to identify constant symbols, our representation represents hypotheses with constant symbols with fewer literals.
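The effect of ignoring @magic literals on hypothesis size can be shown with a small Python sketch. The rule representation (a head string paired with a list of body literal strings), the example rules, and the unary predicate name `c_7` are assumptions made for illustration.

```python
def rule_size(head, body):
    """Count the head plus the body literals, skipping internal @magic literals."""
    return 1 + sum(1 for literal in body if not literal.startswith("@magic("))


def hypothesis_size(rules):
    """Size of a hypothesis: total number of counted literals over all rules."""
    return sum(rule_size(head, body) for head, body in rules)


# The same rule written with a magic variable and with a unary constant predicate.
magic_rule = [("f(A)", ["head(A,B)", "@magic(B)"])]
unary_rule = [("f(A)", ["head(A,B)", "c_7(B)"])]
print(hypothesis_size(magic_rule), hypothesis_size(unary_rule))  # 2 3
```

Under a size-based cost, the magic-variable form is reached earlier in a search ordered by increasing size than its unary-predicate counterpart.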

4.2 Magic hypotheses

A magic hypothesis is a hypothesis with at least one magic variable. An instantiated hypothesis, or instantiation, is the result of substituting magic variables with constant symbols in a magic hypothesis. Magic evaluation is the process of identifying a relevant subset of substitutions for magic variables in a magic hypothesis to form instantiations.

Example 2

(Magic hypothesis) The magic hypothesis H above may have the following corresponding instantiated hypotheses, or instantiations, \(I_1\) and \(I_2\):

Magic hypotheses allow us to represent the hypothesis space more compactly and to reason about the set of all instantiations of a magic hypothesis simultaneously. For instance, the magic hypothesis H above represents concisely all its instantiations, including \(I_1\) and \(I_2\), amongst many other ones. The only instantiation of a non-magic hypothesis is itself.

In practice, we are not interested in all instantiations of a magic hypothesis, but only in a subset of relevant instantiations. In the following, we consider a magic evaluation procedure which only considers instantiations that, together with the background knowledge, entail at least one positive example. We show we can ignore other instantiations.

4.3 Constraints

To improve learning performance, we prune the hypothesis space with constraints (Cropper & Morel, 2021). Given our hypothesis representation, each constraint prunes a set of magic hypotheses, each of which represents the set of its instantiations. In other words, for each magic hypothesis pruned, we eliminate all its instantiations. We identify constraints that are optimally sound in that they do not eliminate optimal solutions from the hypothesis space. Specifically, we consider extensions of specialisation, generalisation, and redundancy constraints for magic hypotheses. We describe them in turn. The proofs are in the appendix.

4.3.1 Extended specialisation constraint

We first extend specialisation constraints. If all the instantiations of a magic hypothesis which, together with the background knowledge, entail at least one positive example are incomplete, then all specialisations of this hypothesis are incomplete:

Proposition 1

(Extended specialisation constraint) Let \((E^{+},E^{-},B,{{\mathcal {H}}},C)\) be a LFF input and let \(H_1 \in {{\mathcal {H}}}_C\) and \(H_2 \in {{\mathcal {H}}}_C\) be two magic hypotheses such that \(H_1 \preceq H_2\). If all instantiations \(I_1\) of \(H_1\) such that \(\exists e \in E^{+}, B\cup I_1 \models e\) are incomplete, then all instantiations of \(H_2\) are also incomplete.

We provide an example to illustrate this proposition.

Example 3

(Extended specialisation constraint) Consider the dyadic predicate head which takes as input a list and returns its first element. Consider the following positive examples \(E^+\) and the magic hypothesis \(H_0\):

This hypothesis holds for lists whose first element is a particular constant symbol to be determined. This hypothesis \(H_0\) has the following two instantiations \(I_{0,1}\) and \(I_{0,2}\) covering at least one positive example:

The first instantiation \(I_{0,1}\) holds for lists whose head is the element b. This instantiation covers the first positive example. The second instantiation \(I_{0,2}\) holds for lists whose head is the element c. It covers the second positive example. However, each of these instantiations is incomplete and too specific. Therefore, no instantiation of \(H_0\) can entail all the positive examples. As such, all specialisations of \(H_0\) can be pruned, including magic hypotheses such as \(H_1\) and \(H_2\):
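The check described in Example 3 can be sketched in Python. The positive examples below are hypothetical lists chosen to match the description (one list starting with b, one starting with c), the magic hypothesis is assumed to be `f(A) :- head(A,B), @magic(B)`, and all function names are illustrative.

```python
# Hypothetical positive examples matching the description in Example 3.
positives = [["b", "a", "d"], ["c", "a"]]


def instantiate(constant):
    """Instantiation of f(A) :- head(A,B), @magic(B) with B bound to `constant`."""
    return lambda lst: bool(lst) and lst[0] == constant


def relevant_instantiations(positives):
    """Bind B only to constants obtained by running head/2 on positive examples."""
    return {lst[0]: instantiate(lst[0]) for lst in positives if lst}


def all_incomplete(instantiations, positives):
    """True if no relevant instantiation covers every positive example."""
    return all(
        not all(covers(e) for e in positives)
        for covers in instantiations.values()
    )


instantiations = relevant_instantiations(positives)
prune_specialisations = all_incomplete(instantiations, positives)
print(sorted(instantiations), prune_specialisations)  # ['b', 'c'] True
```

Only two instantiations are tested, and because both are incomplete, the extended specialisation constraint applies to the magic hypothesis as a whole.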

4.3.2 Extended generalisation constraint

We now extend generalisation constraints. If all the instantiations of a magic hypothesis which, together with the background knowledge, entail at least one positive example are inconsistent, then we can prune non-recursive generalisations of this hypothesis, as they are either inconsistent or non-optimal:

Proposition 2

(Extended generalisation constraint) Let \((E^{+},E^{-},B,{{\mathcal {H}}},C)\) be a LFF input and let \(H_1 \in {{\mathcal {H}}}_C\) and \(H_2 \in {{\mathcal {H}}}_C\) be two magic hypotheses such that \(H_2\) is non-recursive and \(H_2 \preceq H_1\). If all instantiations \(I_1\) of \(H_1\) such that \(\exists e \in E^{+}, B\cup I_1 \models e\) are inconsistent, then all instantiations of \(H_2\) are inconsistent or non-optimal.

We illustrate generalisation constraints with the following example and give a counter-example to explain why recursive hypotheses cannot be pruned.

Example 4

(Extended generalisation constraint) Consider the following positive examples \(E^+\), the negative examples \(E^-\) and the magic hypothesis \(H_0\):

This hypothesis \(H_0\) has the following two instantiations \(I_{0,1}\) and \(I_{0,2}\) covering at least one of these positive examples:

The first instantiation \(I_{0,1}\) holds for lists whose head is the element b. This instantiation covers the first positive example and the first negative example. The second instantiation \(I_{0,2}\) holds for lists whose head is the element c. It covers the second positive example and the second negative example. Each of these instantiations is inconsistent and thus is too general. As such, all non-recursive generalisations of \(H_0\) can be pruned. In particular, the magic hypotheses \(H_1\) and \(H_2\) below are non-recursive generalisations of \(H_0\) and can be pruned:

However, there might exist other instantiations of \(H_0\) which do not cover any positive examples but are not inconsistent, such as \(I_{0,3}\):

This instantiation could be used to construct a recursive solution, such as I:

The instantiation I holds for lists which contain the element a at any position.
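Example 4 and its counter-example can be reproduced with a short Python sketch. The example lists are hypothetical and chosen to match the description, the magic hypothesis is assumed to be `f(A) :- head(A,B), @magic(B)`, and the recursive hypothesis is modelled directly as a recursive Python function.

```python
# Hypothetical examples matching the description in Example 4.
positives = [["b", "a", "d"], ["c", "a"]]
negatives = [["b", "d"], ["c", "e"]]


def head_is(constant):
    """Instantiation of f(A) :- head(A,B), @magic(B) with B bound to `constant`."""
    return lambda lst: bool(lst) and lst[0] == constant


def contains(constant):
    """Recursive hypothesis: f(A) :- head(A,constant).  f(A) :- tail(A,B), f(B)."""
    def covers(lst):
        if not lst:
            return False
        return lst[0] == constant or covers(lst[1:])
    return covers


# Relevant instantiations bind B to the head of some positive example.
relevant = sorted({lst[0] for lst in positives})
all_inconsistent = all(
    any(head_is(c)(n) for n in negatives) for c in relevant
)

# The instantiation with B = a covers no positive example but is consistent,
# and the recursive hypothesis built from it is a solution.
recursive = contains("a")
is_solution = all(recursive(p) for p in positives) and not any(
    recursive(n) for n in negatives
)
print(relevant, all_inconsistent, is_solution)  # ['b', 'c'] True True
```

The run shows why the constraint is restricted to non-recursive generalisations: the recursive hypothesis is a solution even though every relevant instantiation of the magic hypothesis is inconsistent.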

4.3.3 Extended redundancy constraint

We extend redundancy constraints for magic hypotheses. If a magic hypothesis has no instantiations which, together with the background knowledge, entail at least one positive example, we show that it is redundant when included in any non-recursive hypothesis.

Proposition 3

(Extended redundancy constraint) Let \((E^{+},E^{-},B,{{\mathcal {H}}},C)\) be a LFF input, \(H_1 \in {{\mathcal {H}}}_C\) be a magic hypothesis. If \(H_1\) has no instantiation \(I_1\) such that \(\exists e \in E^{+}, B\cup I_1 \models e\), then all non-recursive magic hypotheses \(H_2\) which contain a specialisation of \(H_1\) as a subset are non-optimal.

We illustrate this proposition with the following example and provide a counter-example to explain why recursive hypotheses cannot be pruned.

Example 5

(Extended redundancy constraint) Consider the following positive examples \(E^+\) and the magic hypothesis \(H_0\):

This hypothesis \(H_0\) holds for lists which contain a single element which is a particular constant to be determined. However, both examples have length greater than or equal to 2. Therefore, among the possible instantiations of the hypothesis \(H_0\), there are no instantiations which, together with the background knowledge, cover at least one positive example. \(H_0\) cannot entail any of the positive examples and is redundant when included in a non-recursive hypothesis. As such, all non-recursive hypotheses which contain a specialisation of \(H_0\) as a subset are non-optimal. In particular, the magic hypotheses \(H_1\) and \(H_2\) below contain a specialisation of \(H_0\) as a subset. They are non-optimal and can be pruned:

However, there might exist other instantiations of \(H_0\) which are not redundant in recursive hypotheses. For instance, the following recursive instantiated hypothesis I includes an instantiation of \(H_0\) as a subset but may still be optimal:

This instantiation holds for lists whose last element is the constant c. It covers both positive examples but none of the negative examples.

While extended specialisation constraints are sound, extended redundancy and generalisation constraints are only optimally sound. They might prune solutions from the hypothesis space but do not prune optimal solutions.

4.3.4 Constraint summary

We summarise our constraint framework as follows. Given a magic hypothesis H, the learner can infer the following extended constraints under the following conditions:

1. If all instantiations of H which, together with the background knowledge, entail at least one positive example are incomplete, then, according to Proposition 1, we can prune all its specialisations.

2. If all instantiations of H which, together with the background knowledge, entail at least one positive example are inconsistent, then, according to Proposition 2, we can prune all its non-recursive generalisations.

3. If the magic hypothesis H has no instantiation which, together with the background knowledge, entails at least one positive example, then, according to Proposition 3, we can prune all non-recursive hypotheses which contain one of its specialisations as a subset.

While Proposition 1 can prune recursive hypotheses, Proposition 2 and Proposition 3 do not prune recursive hypotheses. Therefore, pruning is stronger when recursion is disabled.
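The three conditions above can be sketched as a small decision function. This is a minimal illustration and not MagicPopper's code: the `Outcome` record and the function name are hypothetical, the input is assumed to list only instantiations that already entail at least one positive example, and mapping the empty case to condition 3 alone is a simplification.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    """Hypothetical test result for one instantiation that entails
    at least one positive example."""
    complete: bool    # entails every positive example
    consistent: bool  # entails no negative example


def extended_constraints(outcomes: List[Outcome]) -> List[str]:
    """Return the names of the extended constraints that can be inferred."""
    if not outcomes:
        # Condition 3: no instantiation entails a positive example.
        return ["redundancy"]
    constraints = []
    if all(not o.complete for o in outcomes):
        # Condition 1: prune all specialisations.
        constraints.append("specialisation")
    if all(not o.consistent for o in outcomes):
        # Condition 2: prune non-recursive generalisations only.
        constraints.append("generalisation")
    return constraints


# Two instantiations, both inconsistent, one of them complete.
print(extended_constraints([Outcome(True, False), Outcome(False, False)]))
# ['generalisation']
```

When the returned list is empty, no extended constraint applies to the magic hypothesis.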

We have described our representation of the hypothesis space with magic hypotheses. We have extended specialisation, generalisation, and redundancy constraints to prune magic hypotheses and we have demonstrated these extended constraints are optimally sound. The next section theoretically evaluates the gain over the size of the search space of using magic hypotheses compared to identifying constant symbols with unary predicates.

4.4 Theoretical analysis

Our representation includes magic hypotheses which contain magic variables. Each magic variable stands for the set of its substitutions. Therefore, in contrast to existing approaches, we do not enumerate constant symbols in the hypothesis space. Our experiments focus on comparing MagicPopper with approaches which enumerate possible constant symbols with unary body predicates. In this section, we theoretically evaluate the reduction in the size of the hypothesis space obtained by using magic variables instead of enumerating all candidate constant symbols as unary predicates.

Proposition 4

Let \(D_b\) be the number of body predicates available in the search space, m be the maximum number of body literals allowed in a clause, c the number of constant symbols available, and n the maximum number of clauses allowed in a hypothesis. Then the maximum number of hypotheses in the hypothesis space can be multiplied by a factor of \((\frac{D_b+c}{D_b})^{mn}\) if representing constants with unary predicate symbols, one per allowed constant symbol, compared to using magic variables.

A proof of Proposition 4 is in the appendix. Proposition 4 shows that allowing magic variables can reduce the size of the hypothesis space compared to enumerating constant symbols through unary predicate symbols. The ratio is an increasing function of the number of constant symbols available and of the complexity of hypotheses, measured as the number of clauses allowed in hypotheses n and the number of body literals allowed in clauses m. A similar analysis can be conducted for approaches which enumerate constant symbols in the arguments of clauses. More generally, Proposition 4 suggests that enumerating constant symbols can increase the size of the hypothesis space compared to using magic variables.
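To get a feel for how quickly the factor in Proposition 4 grows, it can be evaluated directly. The snippet below is a minimal sketch; the function name and the parameter values are illustrative assumptions and are not taken from the experiments.

```python
from fractions import Fraction


def hypothesis_space_factor(d_b: int, c: int, m: int, n: int) -> Fraction:
    """Evaluate ((D_b + c) / D_b)^(m * n) exactly.

    d_b: number of body predicates, c: number of constant symbols,
    m: maximum body literals per clause, n: maximum clauses per hypothesis.
    """
    if d_b <= 0:
        raise ValueError("d_b must be positive")
    return Fraction(d_b + c, d_b) ** (m * n)


# Illustrative values only: 10 body predicates, 2 body literals, 1 clause.
print(hypothesis_space_factor(10, 10, 2, 1))  # 4
print(hypothesis_space_factor(10, 90, 2, 1))  # 100
```

With these assumed values, raising the number of constants from 10 to 90 raises the factor from 4 to 100, which illustrates the dependence on c described above.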

5 Implementation

We now describe MagicPopper, which implements our magic evaluation idea. We first describe Popper, on which MagicPopper is based.

5.1 Popper

Popper (Cropper & Morel, 2021) is a LFF learner. It takes as input a LFF input, which contains a set of positive (\(E^+\)) and negative (\(E^-\)) examples, background knowledge (B), a bound over the size of hypotheses allowed in \({{\mathcal {H}}}\), and a set of hypotheses constraints (C). Popper represents hypotheses in a meta-language \({\mathcal {L}}\). This meta-language \({\mathcal {L}}\) contains literals head_literal/4 and body_literal/4 representing head and body literals respectively. These literals have arguments (Clause,Pred,Arity,Vars) and denote that there is a head or body literal in the clause Clause, with the predicate symbol Pred, arity Arity, and variables Vars. For instance, the following set of literals:

represents the following clause with id 0:

To generate hypotheses, Popper uses an ASP program P whose models are hypothesis solutions represented in the meta-language \({\mathcal {L}}\). In other words, each model (answer set) of P represents a hypothesis. A simplified version of the base ASP program (without the predicate declarations which are problem specific) is represented in Fig. 5. Popper uses a generate, test, and constrain loop to find a solution. First, it generates a hypothesis as a solution to the ASP program P with the ASP system Clingo (Gebser et al., 2014). Popper searches for hypotheses by increasing size, the size being evaluated as the number of literals in a hypothesis. Popper tests this hypothesis against the examples, typically using Prolog. If the hypothesis is a solution, it is returned. Otherwise, the hypothesis is a failure: Popper identifies the kind of failure and builds constraints accordingly. For instance, if the hypothesis is inconsistent (entails a negative example) Popper builds a generalisation constraint. Popper adds these constraints to the ASP program P to constrain the subsequent generate steps. This loop repeats until a hypothesis solution is found or until there are no more models to the ASP program P.
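The generate, test, and constrain loop can be sketched in a few lines. This is a toy illustration and not Popper's implementation: Popper encodes hypotheses and constraints in ASP and generates them with Clingo, whereas here hypotheses are hypothetical integer identifiers assumed to arrive in order of increasing size, and constraints are a plain set of pruned identifiers.

```python
from typing import Callable, Iterable, Optional, Set


def generate_test_constrain(
    candidates: Iterable[int],
    is_solution: Callable[[int], bool],
    pruned_by_failure: Callable[[int], Set[int]],
) -> Optional[int]:
    """Return the first unpruned candidate that passes the test, or None."""
    pruned: Set[int] = set()
    for hypothesis in candidates:        # generate, by increasing size
        if hypothesis in pruned:
            continue                     # ruled out by an earlier constraint
        if is_solution(hypothesis):      # test
            return hypothesis
        # constrain: drop the failure and everything it rules out
        pruned |= {hypothesis} | pruned_by_failure(hypothesis)
    return None                          # no candidates left


tested = []

def toy_test(h: int) -> bool:
    tested.append(h)
    return h == 5

# Toy constraint: the failure of hypothesis 1 also rules out hypothesis 2.
result = generate_test_constrain(
    range(1, 8), toy_test, lambda h: {h + 1} if h == 1 else set()
)
print(result, tested)  # 5 [1, 3, 4, 5]
```

Hypothesis 2 is never tested because the failure of hypothesis 1 pruned it, which is the effect the constraints are meant to have on subsequent generate steps.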

Simplified Popper ASP base program for single clause programs. There is exactly one head literal per clause. There are at most N body literals per clause, where N is a user-provided parameter describing the maximum number of body literals allowed in a clause. Our modification is highlighted in bold: we allow at most M variables to be magic variables, where M is a user-provided parameter

5.2 MagicPopper

MagicPopper builds on Popper to support magic evaluation. MagicPopper likewise follows a generate, test, and constrain loop to find a solution. We describe in turn how each of these steps works.

5.2.1 Generate

Figure 5 shows our modification to Popper’s base ASP encoding in bold. In addition to head_literal/4 and body_literal/4, MagicPopper can express magic_literal/2. Magic literals have arguments (Clause,Var) and denote that the variable Var in the clause Clause is a magic variable. There can be at most M magic literals in a clause, where M is a user-defined parameter with default value 4. This setting expresses the trade-off between search complexity and expressivity.

In addition to the standard Popper input and a maximum number of magic values per clause, MagicPopper can receive information about which variables can be magic variables. This information can be provided with three different settings: Arguments, Types, and All. For instance, given the predicate declarations represented in Fig. 6, Fig. 7 illustrates how the user can provide additional bias with each of these settings. A user can specify individually a list of some arguments of some predicates (Arguments) or a list of variable types (Types). Otherwise, if no information is given, MagicPopper treats any variable as a potential magic variable (All). For any of these settings, MagicPopper searches for a subset of the variables specified by the user for the magic variables. Therefore, All always considers a larger hypothesis space than Arguments and Types. Arguments is the setting closest to mode declarations (Muggleton, 1995; Blockeel & De Raedt, 1998; Srinivasan, 2001; Corapi et al., 2011). Mode declarations, however, impose a stricter bias: while Arguments treats the flagged arguments as potential magic values, mode declarations specify an exact list of arguments which must be constant symbols.Footnote 2 With the All setting, MagicPopper can automatically identify which variables to treat as magic variables at the expense of more search. In Sect. 6.7, we experimentally evaluate the impact on learning performance of these different settings. In Sect. 6.8, we evaluate the impact on learning performance of allowing magic values (All setting) when they are unnecessary.

Example of the different bias settings for MagicPopper. Variables that can be magic variables are represented in bold. Arguments can treat as magic variables some specified arguments of specified predicates. Types can treat as a magic variable any variable of the specified types. All expects no additional information and may treat any variable as a magic variable

The output of the generate step is a hypothesis which may contain magic variables, such as the one shown on the right of Fig. 4. By contrast, most ILP approaches (Corapi et al., 2011; Evans & Grefenstette, 2018; Cropper & Morel, 2021) cannot generate hypotheses with magic variables but instead require enumerating constant symbols. Popper and \(\delta \) -ILP use unary predicates to represent constant symbols, as shown on the left of Fig. 4. Aspal precomputes all possible rules with some arguments grounded to constant symbols. Conversely, owing to the use of magic variables, MagicPopper benefits from a more compact representation of the hypothesis space.

5.2.2 Test

Magic evaluation is executed during the test step. To identify substitutions for magic variables, we add magic variables as new head arguments. We execute the resulting program on the positive examples. We save the substitutions for the new head variables. We then bind these substitutions to their corresponding magic variables and remove the additional head arguments.

Example 6

(Magic evaluation) Consider the magic hypothesis \(H_1\) below:

We add magic variables as new head variables. \(H_1\) thus becomes \(H_1^\prime \).

We execute \(H_1^\prime \) on the positive examples to find substitutions for the magic variable B. Assume the single positive example f([a, b, c]). We transform it into f([a, b, c], B) and we find the substitution 3 for the variable B. We bind this value to the magic variable in the hypothesis, which results in the following instantiation:

Example 7

(Magic evaluation of recursive hypothesis) Similarly, the recursive hypothesis \(H_2\) below becomes \(H_2^{\prime }\).

We execute \(H_2^\prime \) on the positive examples to find substitutions for the magic variables B and D.

With this procedure, MagicPopper only identifies constants which can be obtained from the positive examples. In this sense, MagicPopper does not consider irrelevant constant symbols.
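The idea of collecting only bindings that arise from the positive examples can be sketched as follows. This is not MagicPopper's Prolog procedure: the sketch assumes, for illustration, a hypothesis of the form f(A) :- length(A,B) with magic variable B, which is consistent with the substitution 3 found in Example 6 but is an assumption, and it models "executing the hypothesis with B as an extra head argument" as a hypothetical Python function returning the bindings for one example.

```python
from typing import Callable, Iterable, List, Set, Tuple

Example = Tuple[str, ...]  # a list example such as [a, b, c]


def length_bindings(example: Example) -> Set[int]:
    """Bindings for B in the assumed hypothesis f(A) :- length(A, B)."""
    return {len(example)}


def magic_evaluation(
    bindings_for: Callable[[Example], Set[int]],
    positives: Iterable[Example],
) -> List[int]:
    """Collect every binding obtained from at least one positive example."""
    found: Set[int] = set()
    for example in positives:
        found |= bindings_for(example)
    return sorted(found)


print(magic_evaluation(length_bindings, [("a", "b", "c")]))  # [3]
print(magic_evaluation(length_bindings, [("a", "e"), ()]))   # [0, 2]
```

Constants that no positive example produces, such as a length of 7 here, are never proposed, which is the sense in which irrelevant constant symbols are not considered.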

Example 8

(Relevant instantiations) Given the positive examples \(E^+~=~\{f([a,e]),f([])\}\), we consider only the two instantiations \(I_{1,1}\) and \(I_{1,2}\) for the magic hypothesis \(H_1\):

We use Prolog to execute programs because of its ability to use lists and handle large, potentially infinite, domains. As a consequence of using Prolog, our reasoning to deduce candidate magic values is based on backward chaining, in contrast to systems that rely on forward chaining (Corapi et al., 2011; Evans & Grefenstette, 2018; Kaminski et al., 2018; Evans et al., 2021).

A limitation of the aforementioned approach is the execution time of learned programs to identify all possible bindings. This approach is especially expensive when a hypothesis contains multiple magic variables, in which case one must consider the combinations of their possible bindings.

Example 9

(Execution time complexity) Consider the hypothesis H:

The hypothesis H is the disjunction of two clauses, each of which contains one magic value, respectively B1 and B2. Since B1 and B2 can be bound to different constant symbols, this hypothesis is allowed in the search space despite having two clauses with the exact same literals. More generally, we allow identical clauses with magic variables.

This hypothesis means that any of two particular elements appears in a list. We search for substitutions for the magic variables B1 and B2. We call n the size of input lists. The number of substitutions for the magic variable B1 in the first clause is O(n). Similarly, the number of substitutions for the magic variable B2 in the second clause is O(n). Therefore, the number of instantiations for H is \(O(n^2)\).
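The quadratic growth can be made concrete with a small enumeration. The sketch below assumes that each magic variable may be bound to any element of the input list; the function name is hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple


def joint_instantiations(
    candidates_per_variable: Sequence[Sequence[str]],
) -> List[Tuple[str, ...]]:
    """Enumerate every combination of bindings, one per magic variable."""
    return list(product(*candidates_per_variable))


elements = ["a", "b", "c"]                      # an input list of size n = 3
pairs = joint_instantiations([elements, elements])  # bindings for (B1, B2)
print(len(pairs))  # 9
```

With n candidate bindings for each of B1 and B2, the enumeration has n × n entries, and each additional magic variable multiplies the count by a further factor of n.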

5.2.3 Constrain

If MagicPopper identifies that a hypothesis has no instantiation, no complete instantiation, or no consistent instantiation, it generates constraints as explained in Sect. 4.3.4. Additionally, MagicPopper generates a banish constraint if no other constraints can be inferred. The banish constraint prunes this single hypothesis from the hypothesis space. In other words, it ensures that the same hypothesis will not be generated again in subsequent generate steps (Cropper & Morel, 2021). These constraints prune the hypothesis space and constrain the following iterations.

6 Experiments

We now evaluate our approach.

6.1 Experimental design

Our main claim is that MagicPopper can improve learning performance compared to current ILP systems when learning programs with magic values. Our experiments, therefore, aim to answer the question:

Q1: How well does MagicPopper perform compared to other approaches?

To answer Q1, we compare MagicPopper against Metagol, Aleph, and Popper.Footnote 3 MagicPopper uses different biases than Metagol and Aleph. Therefore, a direct comparison is difficult and our results should be interpreted as indicative only. By contrast, as MagicPopper is based on Popper, the comparison against Popper is more controlled. The experimental difference between the two is the addition of our magic evaluation procedure and the use of extended constraints.

A key limitation of approaches that enumerate all possible constants allowed in a rule (Corapi et al., 2011; Evans & Grefenstette, 2018; Cropper & Morel, 2021) is difficulty learning programs from infinite domains. By contrast, we claim that MagicPopper can learn in infinite domains. Therefore, our experiments aim to answer the question:

Q2: Can MagicPopper learn in infinite domains?

To answer Q2, we consider several tasks in infinite and continuous domains that require magic values as real numbers or integers.

Proposition 4 shows that our magic evaluation procedure can reduce the search space and thus improve learning performance compared to using unary body predicates. We thus claim that MagicPopper can improve scalability compared to Popper. To explore this claim, our experiments aim to answer the question:

Q3: How well does MagicPopper scale?

To answer Q3, we vary the number of (1) constant symbols in the background knowledge, (2) magic values in the target hypotheses, and (3) training examples. We use as baseline Popper. We compare our experimental results with our theoretical analysis from Sect. 4.4.

Unlike existing approaches, MagicPopper does not need to be told which variables may be magic variables but can automatically identify this information. However, it can use this information if provided by a user. To evaluate the importance of this additional information, our experiments aim to answer the question:

Q4: What effect does additional bias about magic variables have on the learning performance of MagicPopper?

To investigate Q4, we compare different settings for MagicPopper, each of which assumes different information regarding which variables may be magic variables. We use as baseline Popper.

Our approach should improve learning performance when learning programs with magic values. However, in practical applications, it is unknown whether magic values are necessary. To evaluate the cost in performance when magic values are unnecessary, our experiments aim to answer the question:

Q5: What effect does allowing magic values have on the learning performance when magic values are unnecessary?

To answer Q5, we compare the learning performance of MagicPopper and Popper on problems that should not require magic values. We set MagicPopper to allow any variable to potentially be a magic value.

6.1.1 Experimental settings

Given p positive and n negative examples, tp true positives and tn true negatives, we define the predictive accuracy as \(\frac{1}{2}(\frac{tp}{p}+\frac{tn}{n})\). We measure mean predictive accuracies, mean learning times, and standard errors of the mean over 10 repetitions. We use an 8-Core 3.2 GHz Apple M1 and a single CPU.Footnote 4
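The accuracy measure defined above can be computed as in the following minimal sketch. The function name is an assumption; the formula is the one given in the text.

```python
def predictive_accuracy(tp: int, p: int, tn: int, n: int) -> float:
    """Return (tp / p + tn / n) / 2 for p positive and n negative examples."""
    if p <= 0 or n <= 0:
        raise ValueError("p and n must be positive")
    return 0.5 * (tp / p + tn / n)


# A hypothesis that accepts every example: all positives are covered,
# no negative is rejected.
print(predictive_accuracy(tp=10, p=10, tn=0, n=10))  # 0.5
```

Under this measure, a hypothesis that accepts everything, or rejects everything, scores 0.5 regardless of how unbalanced the test set is.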

6.1.2 Systems settings

Aleph

Aleph is allowed constant symbols through the mode declarations or lazy evaluation.

Metagol

Metagol needs as input second-order clauses called metarules. We provide Metagol with a set of almost universal metarules for a singleton-free fragment of monadic and dyadic Datalog (Cropper & Tourret, 2020) and additional curry metarules to identify constant symbols as existentially quantified first-order variables.

Popper and MagicPopper

Both systems use Popper 2.0.0 (also known as Popper+) (Cropper & Hocquette, 2022). We provide Popper with one unary predicate symbol for each constant symbol available in the background knowledge. We set for both systems the same parameters bounding the search space (maximum number of variables and maximum number of literals in the body of clauses). Therefore, since MagicPopper does not count magic literals in program sizes, it considers a larger search space than Popper. We provide both systems with types for predicate symbols. In particular, unary predicates provided to Popper are typed. Therefore, to ensure a fair comparison, we provide MagicPopper with a list of types to describe the set of variables which may be magic variables. As explained in Sect. 5.2, we could have instead provided MagicPopper with a list of arguments of particular predicate symbols to describe the set of variables which may be magic variables. Providing a list of predicate arguments would have been a setting closer to mode declarations, which Aleph uses. However, when specifying types for magic variables, the search space is larger than when specifying particular arguments of some predicate symbols. Moreover, our setting specifies which variables can be magic variables, and MagicPopper searches for a subset of these variables. Conversely, modes specify which variables must be constant symbols. In this sense, this setting for MagicPopper considers a larger hypothesis space than Aleph. In Sect. 6.7, we evaluate and compare the effect on learning performance of these different settings for specifying magic variables.

6.2 Q1: comparison with other systems

6.2.1 Experimental domains

We compare MagicPopper against state-of-the-art ILP systems. This experiment aims to answer Q1. We consider several domains. Full descriptions of these domains are in the appendix. We use a timeout of 600s for each task.

IGGP

In inductive general game playing (IGGP) (Cropper et al., 2020), agents are given game traces from the general game playing competition (Genesereth & Björnsson, 2013). The task is to induce a set of game rules that could have produced these traces. We use four IGGP games which contain constant symbols: md (minimal decay), buttons, coins, and gt-centipede. We learn the next relation in each game, the goal relation for buttons, coins, gt-centipede and the legal relation for gt-centipede. These tasks involve the identification of respectively 5, 31, 3, 14, 6, 4, 29 and 8 magic values. Figures 8 and 9 represent examples of some target hypotheses. We measure balanced accuracies and learning times.

KRK

The task is to learn a chess pattern in the king-rook-king (krk) endgame, which is the chess ending with white having a king and a rook and black having a king. We learn the concept of rook protection by its king (Hocquette & Muggleton, 2020). An example target solution is presented in Fig. 10. This task involves identifying 4 magic values.

Program synthesis: list, powerof2 and append

For list, we learn a hypothesis describing the existence of the magic number ‘7’ in a list. Figure 2 in the introduction shows an example solution. For powerof2, we learn a hypothesis which describes whether a number is of the form \(2^k\), with k integer. These two problems involve learning a recursive hypothesis. For append, we learn that lists must have a particular suffix of size 2. For list, there are 4000 constants in the background knowledge. Examples are lists of size 500. For powerof2, examples are numbers between 2 and 1000, there are 1000 constants in the background knowledge. For append, examples are lists of size 10, there are 1000 constants in the background knowledge.

6.2.2 Results

Table 1 shows the learning times. It shows that MagicPopper can solve each of the tasks in at most 100s, and often in a few seconds. To put these results into perspective, an approach that precomputes the hypothesis space (Corapi et al., 2011) would need to precompute at least \((\#\text {preds} \times \#\text {constants})^{\#\text {literals}}\) rules. For instance, for buttons-next, this approach would need to precompute at least \((5 \times 16)^{10} \approx 10^{19}\) rules, which is infeasible. Conversely, MagicPopper solves this task in 3 seconds.
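As a quick check of the buttons-next estimate, reading 5 as the number of predicates, 16 as the number of constants, and 10 as the number of literals:

\[ (5 \times 16)^{10} = 80^{10} = 8^{10} \times 10^{10} = 1{,}073{,}741{,}824 \times 10^{10} \approx 1.07 \times 10^{19}. \]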

Popper is based on the enumeration of possible constant symbols: it uses unary predicate symbols, one for each possible constant symbol. Compared to Popper, MagicPopper has shorter learning times on ten tasks (md, buttons-goal, coins-goal, gt-centipede-goal, gt-centipede-legal, gt-centipede-next, krk, list, powerof2 and append) and longer learning times on two tasks (buttons-next and coins-next). A paired t-test confirms the significance of the difference for these ten tasks at the \(p<0.01\) level. For instance, MagicPopper can solve the krk problem in 6s while Popper requires almost 35s.

There are three main reasons for this improvement. First, MagicPopper reasons about magic hypotheses while Popper cannot. Each magic hypothesis represents the set of its possible instantiations, which alleviates the need to enumerate all possible constant symbols. The constraints MagicPopper formulates eliminate magic hypotheses, which prunes more instantiated programs. Second, compared to Popper, MagicPopper does not need additional unary predicates to represent constant symbols. This feature allows MagicPopper to learn shorter hypotheses with constant symbols as arguments instead. For instance, in the krk experiment, MagicPopper typically learns a hypothesis with 3 body literals while Popper typically needs 6 body literals, including 3 body literals to represent constant symbols. Popper thus needs to search up to a larger depth compared to MagicPopper. As demonstrated by Proposition 4, these two reasons lead to a smaller hypothesis space. Finally, MagicPopper tests hypotheses against the positive examples and only considers instantiations which, together with the background knowledge, entail at least one positive example. In this sense, MagicPopper never considers irrelevant constant symbols. For these three reasons, MagicPopper considers fewer hypotheses which explains the shorter learning times.

However, given a search bound, MagicPopper searches a larger space than Popper since it does not count the magic literals in the program size. MagicPopper considers the same programs as Popper, but also programs with magic values whose size would exceed the search bound if representing magic values with unary predicate symbols. Therefore, MagicPopper can require longer running time than Popper, which is the case for two tasks (buttons-next and coins-next).

Aleph restricts the possible constants to constants appearing in the bottom clause, which is the logically most-specific clause that explains an example. Aleph also can identify constant symbols through a lazy evaluation procedure (Srinivasan & Camacho, 1999), which has inspired our magic evaluation procedure. Therefore, Aleph does not consider irrelevant constant symbols but only symbols that can be obtained from the examples. Compared to Aleph, MagicPopper has shorter learning times on four tasks (buttons-next, coins-next, list, append). A paired t-test confirms the significance of the difference in learning times for these tasks at the \(p<0.01\) level. However, in contrast to Aleph, MagicPopper searches for optimal solutions. Moreover, MagicPopper is given a weaker bias about which variables can be magic variables.

Metagol identifies relevant constant symbols by constructing a proof for the positive examples. Therefore, it also considers only relevant constant symbols that can be obtained from the examples. Compared to MagicPopper, Metagol has longer learning times on six tasks, similar learning times on three tasks, and shorter learning times on three tasks.

Table 2 shows the predictive accuracies. MagicPopper achieves accuracies higher than or equal to those of Metagol, Aleph, and Popper, except on gt-centipede-goal. This improvement can be explained by the fact that MagicPopper can learn in domains other systems cannot handle. For instance, MagicPopper supports learning with predicate symbols of arity more than two, which is necessary for the IGGP games and the krk domain. By contrast, Metagol cannot learn hypotheses with arity greater than 2 given the set of metarules provided. Compared to MagicPopper, Aleph struggles to learn recursive hypotheses. However, Aleph performs well on the tasks which do not require recursion, reaching similar or better accuracy than MagicPopper on seven tasks (md, buttons-goal, gt-centipede-goal, gt-centipede-legal, gt-centipede-next, krk, and append). Finally, compared to Popper, MagicPopper can achieve higher accuracies. For instance, on the list problem, MagicPopper reaches 100% accuracy while Popper achieves the default accuracy. Since it does not enumerate constant symbols, MagicPopper can search a smaller space than Popper, and thus its learning time can be shorter. Therefore, it is more likely to find a solution before timeout. Also, according to the Blumer bound (Blumer et al., 1989), given two hypothesis spaces of different sizes, searching the smaller space can result in higher predictive accuracy compared to searching the larger one if a target hypothesis is in both.

Given these results, we can positively answer Q1 and confirm that MagicPopper can outperform existing approaches in terms of learning times and predictive accuracies when learning programs with magic values.

6.3 Q2: learning in infinite domains

We evaluate the performance of MagicPopper in infinite domains and compare it against the performance of Popper, Aleph, and Metagol. This experiment aims to answer Q1 and Q2. We consider five tasks. Full descriptions are in the appendix. We use a timeout of 600s for each of these tasks.

6.3.1 Experimental domains

Learning Pi

The goal of this task is to learn a mathematical equation over real numbers expressing the relation between the radius of a disk and its area.

This task involves identifying the magic value pi up to floating-point precision. We allow a precision error of \(10^{-3}\). Figure 11 shows an example solution.

Equilibrium

The task is to identify a relation describing mechanical equilibrium for an object subject to its weight and other forces whose values are known. This task involves identifying the gravitational constant g up to floating-point precision. We allow a precision error of \(10^{-3}\). Figure 12 shows an example of the target hypothesis.

Drug design

The goal of this task is to identify molecule properties representing suitable medicinal activity. An example is a molecule which is represented by the atoms it contains and the pairwise distance between these atoms. Atoms have varying types. Figure 13 shows an example solution. This task involves identifying two magic values representing the particular atom types “o” and “h” and one magic value representing a specific distance between two atoms.

Program Synthesis: next and sumk

For next, we learn a hypothesis for identifying the element following a magic value in a list. For example, given the magic value 4.543, we may have the positive example next([1.246, 4.543, 2.156],2.156). Figure 14 shows an example solution. Examples are lists of size 500 of float numbers. For sumk, we learn a relation describing that two elements of a list have a sum equal to k, where k is an integer magic value. Examples are lists of size 50 of integer numbers. Figure 15 shows an example of target hypothesis.

6.3.2 Results

Tables 1 and 2 show the results. They show that, compared to Popper, MagicPopper achieves higher accuracy.Footnote 5 Popper cannot identify hypotheses with magic values in infinite domains because it cannot represent an infinite number of constant symbols. Thus, it achieves the default accuracy. Metagol cannot learn hypotheses with arity greater than 2 given the metarules provided and therefore struggles on these tasks. It also struggles when the proof length is large, such as when examples are lists of large size. Aleph, through the use of lazy evaluation, performs well on the tasks which do not require recursion, especially pi and equilibrium. However, it struggles on next and sumk, which both require recursion. The learning time of MagicPopper is better than that of Aleph on one of the two tasks Aleph can solve, but worse on the other. However, in contrast to Aleph, MagicPopper searches for optimal hypotheses. Moreover, MagicPopper searches a larger search space since it is given as bias the types of variables which can be magic variables while Aleph is given the arguments of some predicate symbols through the mode declarations.

These results demonstrate that MagicPopper can identify magic values in infinite domains. These results confirm our answer to Q1. Also, we positively answer Q2.

6.4 Q3: scalability with respect to the number of constant symbols

We now evaluate how well our approach scales. First, we evaluate how well our approach scales with the number of constant symbols. To do so, we need domains in which we can control the number of constant symbols. We consider two domains: list and md. In the list experiment, described in Sect. 6.2.1, we use an increasingly larger set of constant symbols disjoint from \(\{7\}\) in the background knowledge. In the md experiment, also described in Sect. 6.2.1, we vary the number of next values available. We use a timeout of 60s for each task. Full details are in the appendix.

6.4.1 Results

Figures 16 and 17 show the learning times of Popper, MagicPopper, Aleph, and Metagol versus the number of constant symbols. These results show that MagicPopper has a significantly shorter learning time than Popper. Popper needs a unary predicate symbol in the background knowledge for each constant symbol, so its search space grows with the number of constant symbols. Moreover, Popper considers each candidate constant symbol individually and exhaustively. Therefore, Popper cannot scale to background knowledge that includes a large number of constant symbols. It is overwhelmed by 800 constant symbols in the list domain and 200 constant symbols in the md domain, and it systematically reaches timeout beyond these points. By contrast, MagicPopper does not consider every constant symbol but only the relevant ones, which can be identified by executing the hypotheses on the examples. Thus, it scales better and can learn from domains with more than 3 million constant symbols. This result supports Proposition 4, which demonstrated that allowing magic variables can reduce the size of the hypothesis space compared to adding unary predicate symbols, and that the difference in the size of the search spaces increases with the number of constant symbols available in the background knowledge.
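The difference between enumerating every constant symbol and reading candidate constants off the examples can be sketched as follows. This is a simplified illustration, not MagicPopper's implementation: it assumes the target concept is "the list contains the magic value M", and the function names are hypothetical.

```python
def candidate_constants(positives):
    """Constants for which 'list contains M' covers at least one positive example."""
    seen = set()
    for xs in positives:
        seen.update(xs)
    return seen


def learn_magic_member(positives, negatives):
    """Magic values M such that every positive list contains M and no negative does."""
    return sorted(
        m
        for m in candidate_constants(positives)
        if all(m in xs for xs in positives)
        and not any(m in xs for xs in negatives)
    )


positives = [[3, 7, 1], [7, 9], [2, 7]]
negatives = [[3, 1], [9, 2]]
print(learn_magic_member(positives, negatives))
```

In this sketch only the five values occurring in the positive examples are ever tested, however many constant symbols the background knowledge declares.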

Figures 18 and 19 show the predictive accuracy of Popper, MagicPopper, Aleph, and Metagol versus the number of constant symbols. Popper rapidly converges to the default accuracy (50%) since it reaches timeout. Conversely, MagicPopper consistently achieves maximal accuracy and outperforms all other systems. In the md domain, negative examples must be sampled from a large number of constant symbols, which can also explain the drops in accuracy. Aleph struggles to learn recursive programs, which explains its low predictive accuracy in the list domain. Moreover, Aleph is based on the construction of a bottom clause. The bottom clause can grow very large in both domains as the number of constant symbols increases, which can overwhelm the search. Metagol can learn programs with constant symbols using the curry metarules. It performs well and scales to a large number of constant symbols in the list experiment. However, the metarules provided are not expressive enough to support learning with higher-arity predicates, which in particular prevents Metagol from learning a solution for md for any of the numbers of constants tested.

These results confirm our answer to Q1. They also show that the answer to Q3 is that MagicPopper can scale well with the number of constant symbols, up to millions of constant symbols.

6.5 Q3: scalability with respect to the number of magic values

To evaluate scalability with respect to the number of magic values, we vary the number of magic values within the target hypothesis. We vary the number of magic values along two dimensions: (1) the number of magic values within one clause, and (2) the number of magic values in different independent clauses.

6.5.1 Magic values in one clause

We first evaluate scalability with respect to the number of magic values in the same clause. We learn hypotheses of the form presented in Fig. 20, where the number of body literals varies. There are 100 constants in the background knowledge. Lists have size 100. We use a timeout of 60s for each task. Full experimental details are in the appendix.

Results

Figures 21 and 22 show the learning times and predictive accuracies. These results show that, for a small number of magic values, MagicPopper achieves shorter learning times than Popper. This results in higher predictive accuracies, since Popper might not find a solution before timeout. From 3 magic values onwards, both systems reach timeout and their performance is similar. When increasing the number of magic values, the number of body literals increases and more search is needed. In particular, Popper requires twice as many body literals as MagicPopper, as it needs unary predicates to represent constant symbols. MagicPopper evaluates magic values within the same clause jointly. For each positive example, it considers the Cartesian product of the possible values of the magic variables. The complexity is of the order \(O(n^k)\), where n is the size of lists and k is the number of magic values. The complexity is thus exponential in the number of magic values, which limits scalability when increasing the number of magic values. These results show that MagicPopper can scale as well as Popper with respect to the number of magic values in the same clause, thus answering Q3. However, scalability is limited for both systems. More generally, scalability with respect to the number of magic values is limited for large inseparable programs, such as programs with several magic values in the same clause or in recursive clauses with the same head predicate symbol.
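The \(O(n^k)\) growth can be illustrated with a short Python sketch. It assumes, for illustration only, that each of the k magic variables may be bound to any distinct element of an example list; the function names are hypothetical and do not come from MagicPopper.

```python
from itertools import product


def joint_bindings(example, k):
    """All k-tuples of candidate values for k magic variables, drawn from one list."""
    return product(sorted(set(example)), repeat=k)


def count_bindings(n, k):
    """Number of joint bindings when a list has n distinct elements."""
    return n ** k


print(len(list(joint_bindings([1, 2, 3], 2))))
print(count_bindings(100, 3))
```

With lists of size 100, as in this experiment, three magic values in one clause already give up to a million joint bindings per example under this assumption, which is consistent with both systems reaching timeout from that point.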

6.5.2 Magic values in multiple clauses

We now evaluate scalability with respect to the number of magic values in different independent clauses. We learn hypotheses of the form presented in Fig. 23, where the number of clauses varies. There are 500 constants in the background knowledge. Lists have size 500. Each clause is independent. We use a timeout of 60s for each task. Full experimental details are in the appendix.

Results

Figure 24 shows the learning times. The accuracy is maximal for both systems for any of the numbers of magic values tested. This result shows that MagicPopper and Popper both can handle a large number of magic values in different clauses, up to at least 70. Moreover, MagicPopper significantly outperforms Popper in terms of learning times. For instance, MagicPopper can learn a hypothesis with 50 magic values in 50 different clauses in about 2s, while Popper requires 14s. This result shows that MagicPopper can scale well, in particular better than Popper, with respect to the number of magic values in different clauses, thus answering Q3.

As the number of magic values increases, the target hypothesis has more clauses. Both systems must consider an increasingly larger number of programs to test. However, MagicPopper considers magic programs and only considers instantiations which cover at least one example, which is more efficient than enumerating all possible instantiations.

We use a version of Popper (Cropper, 2022) which learns non-separable programs independently and then combines them. This strategy is efficient for learning disjunctions of independent clauses, which explains the difference in scale from the previous experiment. For non-separable hypotheses, MagicPopper must evaluate magic variables jointly, as described in the previous experiment.
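A rough back-of-the-envelope comparison shows why independent clauses scale so differently from magic values in a single clause. The sketch below assumes, purely for illustration, that each clause has one magic variable with n candidate values; the function names are hypothetical.

```python
def joint_candidates(n, k):
    """Bindings to test if k magic variables must be evaluated jointly."""
    return n ** k


def independent_candidates(n, k):
    """Bindings to test if each of k clauses is learned on its own."""
    return n * k


print(joint_candidates(500, 2))
print(independent_candidates(500, 50))
```

Under this assumption, 50 independent clauses with 500 candidate values each require far fewer tests than just two magic variables evaluated jointly.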

6.6 Q3: scalability with respect to the number of examples

This experiment aims to evaluate how well MagicPopper scales with the number of examples. We learn the same hypothesis as in Sect. 6.4. This task involves learning a recursive hypothesis to identify a magic value in a list. We compare Popper and MagicPopper. We use the same material and methods as in Sect. 6.4. We vary the number of examples: for n between 1 and 3000, we sample n positive examples and n negative ones. Lists have size at most 50, and there are 200 constant symbols in the background knowledge. We use a timeout of 60s for each task.

6.6.1 Results

Figures 25 and 26 show the results. They show that both MagicPopper and Popper can learn with up to thousands of examples. However, MagicPopper reaches timeout from 4000 examples while Popper reaches timeout from 9000 examples. Their accuracies consequently drop to the default accuracy from these respective points. This result shows that MagicPopper has worse scalability than Popper with respect to the number of examples, thus answering Q3. For both Popper and MagicPopper, we observe a linear increase in the learning time with the number of examples. When increasing the number of examples, executing the candidate hypotheses over the examples takes more time. In particular, MagicPopper searches for substitutions for the magic variables which cover at least one positive example. Therefore, with more examples, potentially more bindings for magic variables can be identified, and these bindings must then be tested against a larger number of remaining examples. MagicPopper eventually needs to consider every constant symbol as a candidate constant. Moreover, since MagicPopper does not count the magic literals towards the program size, it can consider a larger number of programs with constant symbols than Popper for any given program size bound, which also explains why its learning time increases faster than that of Popper. This result highlights one limitation of MagicPopper.

6.7 Q4: effect of the bias about magic variables

In contrast to mode-directed approaches (Muggleton, 1995; Srinivasan & Camacho, 1999; Corapi et al., 2011), MagicPopper does not need to be provided as input which variables should be magic variables but instead can automatically identify them. It can, however, use this additional information if given as input. We investigate the impact of this additional bias on learning performance and thus aim to answer Q4.

6.7.1 Material and methods

We consider the domains presented in Sect. 6.2.1. We compare three variants of MagicPopper:

- All: we allow any variable to potentially be a magic variable.

- Types: we allow any variable of manually chosen types to potentially be a magic variable. For instance, for md, we allow any variable of type agent, action and int to potentially be a magic variable.

- Arguments: we manually specify a list of arguments of some predicate symbols that can potentially be magic variables. For instance, for md, we flag the second argument of next and the second and third arguments of does.

Arguments is most closely related to mode-declaration approaches, which expect a specification for each argument of each predicate. However, the specifications of Arguments are more flexible, since MagicPopper treats the flagged variables as potential magic variables and searches for a subset of these variables to bind to constant symbols. By contrast, mode declarations are stricter and specify exactly which arguments must be constants. Types is comparable to Popper, which is provided with types for the unary predicates in our experiments. Types, Arguments and mode declarations all require a user to specify some information about which variables can be bound to constant symbols.

The variables which may be a magic variable in Arguments are a subset of those of Types, which themselves are a subset of those of All. In this sense, the search space is increasingly larger. We compare learning times and predictive accuracies for each of these systems. We provide learning times of Popper as a baseline. We use a timeout of 600s per task.

6.7.2 Results

Table 3 shows the learning times. These results show that in general, All requires learning times longer than or equal to Types, which in turn requires learning times longer than or equal to Arguments. For instance, All reaches timeout on the task list, while Types and Arguments require 2s and 1s respectively. In some experiments, such as krk, Types and Arguments have equivalent bias, because the arguments specified are the only arguments of the types specified. Popper is provided with types for the unary predicates in these domains and is thus most comparable with Types. Yet, Types outperforms Popper in terms of learning times. This result can be explained by the fact that Types is a variant of MagicPopper. As such, it considers magic hypotheses, each of which represents the set of its instantiations. Therefore, in contrast to Popper, Types does not enumerate all possible candidate constants. Moreover, Types only considers instantiations which, together with the background knowledge, entail at least one positive example, while Popper considers every possible constant in the search space equally. Also, because Types does not require additional unary predicates, it can express hypotheses more compactly and can search up to a smaller depth. Popper can even have longer learning times than All, although All searches a larger space. For instance, Popper requires 18s to solve the task powerof2 while All solves it in less than 1s.

Table 4 shows the predictive accuracies. These results show All can achieve lower predictive accuracies than Types and Arguments. For instance, All reaches 92% accuracy on coins-next while Types and Arguments reach 100% accuracy. There are two main reasons explaining this difference. First, All has a more expressive language, and in particular can express more specific hypotheses through the use of more constant symbols. It is thus more prone to overfitting. Second, All searches a larger search space. It consequently might not find an optimal solution before timeout. Moreover, according to the Blumer bound (Blumer et al., 1989), searching a larger search space can result in lower predictive accuracies.
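For reference, a commonly stated form of the Blumer bound for a finite hypothesis space is given below. The symbols \(\mathcal{H}\), \(m\), \(\epsilon\) and \(\delta\) are introduced here for illustration and are not notation from the experiments: \(\mathcal{H}\) is the hypothesis space, \(m\) the number of training examples, \(\epsilon\) the error bound and \(\delta\) the allowed failure probability. With probability at least \(1-\delta\), any hypothesis in \(\mathcal{H}\) consistent with the \(m\) examples has error at most \(\epsilon\), provided that

```latex
\[
  m \;\ge\; \frac{1}{\epsilon}\left(\ln|\mathcal{H}| + \ln\frac{1}{\delta}\right)
  \qquad\text{equivalently}\qquad
  \epsilon \;\le\; \frac{\ln|\mathcal{H}| + \ln(1/\delta)}{m}.
\]
```

For a fixed number of examples, the guaranteed error bound therefore grows with \(\ln|\mathcal{H}|\), which is the sense in which a larger search space, such as that of All, can lead to lower predictive accuracy.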

We can conclude that MagicPopper can benefit from additional bias about which variables should be magic variables, and in particular it can achieve better learning performance. We thus can positively answer Q4. This experiment illustrates the impact of more bias. More bias can help reduce the search space and thus improve learning performance. However, this bias must be user provided.

More generally, choosing an appropriate bias is a key challenge in ILP (Cropper & Dumancic, 2022).

6.8 Q5: effect on learning performance for problems which do not require magic values

Our approach can improve learning performance for problems which require magic values. However, magic values are not always required and it is not always known whether a good solution requires magic values. We investigate in this experiment the impact on learning performance of unnecessarily allowing magic values and thus aim to answer Q5.

6.8.1 Material and methods