Abstract

Receiver operating characteristic (ROC) curves are used ubiquitously to evaluate scores, features, covariates or markers as potential predictors in binary problems. We characterize ROC curves from a probabilistic perspective and establish an equivalence between ROC curves and cumulative distribution functions (CDFs). These results support a subtle shift of paradigms in the statistical modelling of ROC curves, which we view as curve fitting. We propose the flexible two-parameter beta family for fitting CDFs to empirical ROC curves and derive the large sample distribution of minimum distance estimators in general parametric settings. In a range of empirical examples the beta family fits better than the classical binormal model, particularly under the vital constraint of the fitted curve being concave.

1 Introduction

Through all realms of science and society, the assessment of the predictive ability of scores or features for binary outcomes is of critical importance. To give but a few examples, biomarkers are used to diagnose diseases, weather forecasts serve to anticipate extreme precipitation events, judges need to assess recidivism in convicts, banks use customers’ particulars to assess credit risk, and email messages are to be identified as spam or legitimate. In these and myriads of similar settings, receiver operating characteristic (ROC) curves are key tools in the evaluation of predictive potential (Fawcett 2006; Flach 2016).

A ROC curve is simply a plot of the hit rate (HR) against the false alarm rate (FAR) across the range of thresholds for a real-valued score. Specifically, consider the joint distribution \(\mathbbm {Q}\) of the pair (S, Y), where the score S is real-valued, and the event Y is binary, with the implicit understanding that higher values of S provide stronger support for the event to materialize (\(Y = 1\)). The joint distribution \(\mathbbm {Q}\) of (S, Y) is characterized by the prevalence \(\pi _1 = \mathbbm {Q}(Y = 1) \in (0,1)\) along with the conditional cumulative distribution functions (CDFs)

\[ F_1(s) = \mathbbm {Q}(S \le s \mid Y = 1) \quad \text{and} \quad F_0(s) = \mathbbm {Q}(S \le s \mid Y = 0). \]

Any threshold value s can be used to predict a positive outcome (\(Y = 1\)) if \(S > s\) and a negative outcome (\(Y = 0\)) if \(S \le s\), to yield a classifier with hit rate (HR),

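\[ \mathrm{HR}(s) = \mathbbm {Q}(S > s \mid Y = 1) = 1 - F_1(s), \]

and false alarm rate (FAR),

\[ \mathrm{FAR}(s) = \mathbbm {Q}(S > s \mid Y = 0) = 1 - F_0(s). \]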

Terminologies abound and differ markedly between communities. For example, the hit rate has also been referred to as true positive rate (TPR), sensitivity or recall. The false alarm rate is also known as false positive rate (FPR) or fall-out and equals one minus the true negative rate, specificity, or selectivity.

The term raw ROC diagnostic refers to the set-theoretic union of the points of the form \((\mathrm{FAR}(s), \mathrm{HR}(s))'\) within the unit square (see Notes). The ROC curve is a linearly interpolated raw ROC diagnostic, and therefore it also is a point set that may or may not admit a direct interpretation as a function. However, if \(F_1\) and \(F_0\) are continuous and strictly increasing, the raw ROC diagnostic and the ROC curve can be identified with a function R, where \(R(0) = 0\),

\[ R(p) = 1 - F_1(F_0^{-1}(1 - p)) \quad \text{for} \quad p \in (0,1), \tag{1} \]

and \(R(1) = 1\). High hit rates and low false alarm rates are desirable, so the area under the ROC curve (AUC) is a widely used, positively oriented measure of the predictive potential of a score or feature. In data analytic practice, the measure \(\mathbbm {Q}\) is the empirical distribution of a sample \((s_i, y_i)_{i=1}^n\) of real-valued scores \(s_i\) and corresponding binary observations \(y_i\). In this setting, it suffices to consider the unique values of \(s_1, \ldots , s_n\) to generate the raw ROC diagnostic, and linear interpolation yields the empirical ROC curve, as illustrated in our data examples.
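The empirical construction described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' replication code: the function names `empirical_roc` and `auc` and the four-point toy sample are assumptions made for this example. The classifier predicts a positive outcome when the score exceeds the threshold, the thresholds run through the unique score values, and one threshold below all scores is added to reach the point (1, 1).

```python
from typing import List, Sequence, Tuple


def empirical_roc(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """Raw ROC points (FAR(s), HR(s)) at the unique scores, ordered from (0, 0) to (1, 1)."""
    n1 = sum(labels)
    n0 = len(labels) - n1
    if n0 == 0 or n1 == 0:
        raise ValueError("both outcomes must occur in the sample")
    # Unique scores from largest to smallest, plus a threshold below all scores.
    thresholds = sorted(set(scores), reverse=True) + [float("-inf")]
    points = []
    for t in thresholds:
        # Predict Y = 1 if S > t; count hits and false alarms.
        hits = sum(1 for s, y in zip(scores, labels) if y == 1 and s > t)
        false_alarms = sum(1 for s, y in zip(scores, labels) if y == 0 and s > t)
        points.append((false_alarms / n0, hits / n1))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the linearly interpolated ROC curve (trapezoidal rule)."""
    return sum((a1 - a0) * (b0 + b1) / 2 for (a0, b0), (a1, b1) in zip(points, points[1:]))


# Toy sample (hypothetical values chosen for illustration).
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_labels = [0, 0, 1, 1]
toy_points = empirical_roc(toy_scores, toy_labels)
toy_auc = auc(toy_points)
```

For the toy sample the raw ROC diagnostic consists of five points, and linear interpolation between them gives an AUC of 0.75.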

The remainder of our note is organized as follows. Section 2 establishes rigorous versions of fundamental theoretical results that thus far have been available informally or in special cases only. In particular, we demonstrate an equivalence between ROC curves and CDFs. In Sect. 3 we introduce the flexible yet parsimonious two-parameter beta model, which uses the CDFs of beta distributions to model ROC curves, and we discuss estimation and testing in this context. The paper closes with data examples in Sect. 4.

2 Fundamental properties of ROC curves

Consider the random vector (S, Y) where S is a real-valued score, feature, covariate, or marker, and Y is the binary response. As before, we refer to the joint distribution of (S, Y) as \(\mathbbm {Q}\). Let \(\pi _0 = 1 - \pi _1 = \mathbbm {Q}(Y = 0)\), and let

\[ F(s) = \mathbbm {Q}(S \le s) = \pi _0 F_0(s) + \pi _1 F_1(s) \]

denote the marginal cumulative distribution function (CDF) of the score S. We write \(G(s-)\) for the left-hand limit of a function G at \(s \in \mathbbm {R}\), and we let \((a,b)'_{(1)} = a\) and \((a,b)'_{(2)} = b\) denote coordinate projections.

2.1 Raw ROC diagnostics and ROC curves

In this setting ROC diagnostics concern the points of the form \((\mathrm{FAR}(s),\mathrm{HR}(s))'\) within the unit square. Formally, the raw ROC diagnostic for the bivariate distribution \(\mathbbm {Q}\) is the point set

\[ R^* = \left\{ (\mathrm{FAR}(s), \mathrm{HR}(s))' : s \in \mathbbm {R} \right\}. \tag{2} \]

The raw ROC diagnostic along with a single marginal does not characterize \(\mathbbm {Q}\), due to its well known invariance properties (Fawcett 2006; Krzanowski and Hand 2009). However, the raw ROC diagnostic along with both marginal distributions determines \(\mathbbm {Q}\).

Theorem 1

The joint distribution \(\mathbbm {Q}\) of (S, Y) is characterized by the raw ROC diagnostic and the marginal distributions of S and Y.

Proof

The mapping \(g : [0,1]^2 \rightarrow [0,1]\) defined by \((a,b)' \mapsto (1 - a) \pi_0 + (1 - b) \pi_1\) induces a bijection between the raw ROC diagnostic \(R^*\) and the range of F. Therefore, it suffices to note that \(\mathbbm {Q}(S \le s, Y \le y) = 0\) for \(y < 0\),

\[ \mathbbm {Q}(S \le s, Y \le y) = \pi _0 F_0(s) = \pi _0 \left( 1 - g^{-1}(F(s))_{(1)} \right) \]

for \(y \in [0,1)\), and \(\mathbbm {Q}(S \le s, Y \le y) = F(s)\) for \(y \ge 1\). \(\square\)

Briefly, a ROC curve is obtained from the raw ROC diagnostic by linear interpolation. Formally, the full ROC diagnostic or ROC curve is the point set

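\[ R = \{ (0,0)' \} \cup \Big\{ \bigcup _{s \in \mathbbm {R}} L_s \Big\} \cup \{ (1,1)' \} \tag{3} \]

within the unit square, where

\[ L_s = \left\{ \alpha \, (\mathrm{FAR}(s), \mathrm{HR}(s))' + (1 - \alpha ) \, (\mathrm{FAR}(s-), \mathrm{HR}(s-))' : \alpha \in [0,1] \right\} \]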

is a possibly degenerate, nondecreasing line segment. The choice of linear interpolation to complete the raw ROC diagnostic into the ROC curve (3) is natural and persuasive, as the line segment \(L_s\) represents randomized combinations of the classifiers associated with its end points.

The raw ROC diagnostic can be recovered from the ROC curve and the two marginal distributions, as the mapping g in the proof of Theorem 1 induces a bijection between the raw ROC diagnostic and the range of F that can be expressed in terms of \(\pi _1\) and \(\pi _0\). From this simple fact the following result is immediate.

Corollary 1

The joint distribution \(\mathbbm {Q}\) of (S, Y) is characterized by the ROC curve and the marginal distributions of S and Y.

Given a ROC curve R, an obvious task is to find CDFs \(F_0\) and \(F_1\) that realize R. For a particularly simple and appealing construction, let \(F_0\) be the CDF of the uniform distribution on the unit interval, and define \(F_1\) as \(F_1(s) = 0\) for \(s \le 0\),

\[ F_1(s) = 1 - R_+(1 - s) \quad \text{for} \quad s \in (0,1), \tag{4} \]

and \(F_1(s) = 1\) for \(s \ge 1\), where the function \(R_+ : (0,1) \rightarrow [0,1]\) is induced by the ROC curve at hand, in that \(R_+(s) = \inf \left\{ b : (a,b)' \in R, a \ge s \right\}\).

2.2 Concave ROC curves

We proceed to elucidate the critical role of concavity in the interpretation and modelling of ROC curves. Its significance is well known and has been reviewed in monographs (Pepe 2003; Zhou et al. 2011). Nevertheless, we are unaware of any rigorous treatment in the extant literature that applies in both continuous and discrete settings, which is what we address now.

In the regular setting we suppose that \(F_1\) and \(F_0\) have continuous, strictly positive Lebesgue densities \(f_1\) and \(f_0\) in the interior of an interval, which is their common support. For every s in the interior of the support, we can define the likelihood ratio,

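\[ \mathrm{LR}(s) = \frac{f_1(s)}{f_0(s)}, \]

and the conditional event probability,

\[ \mathrm{CEP}(s) = \mathbbm {Q}(Y = 1 \mid S = s) = \frac{\pi _1 f_1(s)}{\pi _0 f_0(s) + \pi _1 f_1(s)}. \]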

We note the equivalence of the following three conditions:

(a) The ROC curve is concave.

(b) The likelihood ratio is nondecreasing.

(c) The conditional event probability is nondecreasing.

Theorem 2

In the regular setting statements (a), (b), and (c) are equivalent.

Next we consider the discrete setting where we assume that the support of the score S is finite or countably infinite. This setting includes, but is not limited to, the case of empirical ROC curves. For every s in the discrete support of S, we can define the likelihood ratio,

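\[ \mathrm{LR}(s) = \frac{\mathbbm {Q}(S = s \mid Y = 1)}{\mathbbm {Q}(S = s \mid Y = 0)}, \]

and the conditional event probability,

\[ \mathrm{CEP}(s) = \mathbbm {Q}(Y = 1 \mid S = s). \]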

Theorem 3

In the discrete setting statements (a), (b), and (c) are equivalent.

We skip proofs as Corollary 2.8 of Mösching and Dümbgen (2021) implies the equivalence of (a) and (b) in both settings. Furthermore, \(\mathrm{LR}(s) \propto {\mathrm{CEP}}(s)/(1 - {\mathrm{CEP}}(s))\), and the function \(c \mapsto c/(1-c)\) is nondecreasing, which yields the equivalence of (b) and (c).
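The proportionality invoked here can be written out in the regular setting. The short derivation below assumes the Bayes-rule form \(\mathrm{CEP}(s) = \pi _1 f_1(s) / (\pi _0 f_0(s) + \pi _1 f_1(s))\) and \(\mathrm{LR}(s) = f_1(s)/f_0(s)\); the discrete case is analogous with probability mass functions in place of densities.

```latex
\[
\frac{\mathrm{CEP}(s)}{1 - \mathrm{CEP}(s)}
= \frac{\pi_1 f_1(s)}{\pi_0 f_0(s)}
= \frac{\pi_1}{\pi_0} \, \mathrm{LR}(s),
\qquad \text{so that} \qquad
\mathrm{CEP}(s) = \frac{\pi_1 \, \mathrm{LR}(s)}{\pi_0 + \pi_1 \, \mathrm{LR}(s)} .
\]
```

Since both \(c \mapsto c/(1-c)\) and \(r \mapsto \pi _1 r / (\pi _0 + \pi _1 r)\) are increasing, either quantity is nondecreasing in s exactly when the other is.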

The critical role of concavity in the interpretation and modelling of ROC curves stems from the monotonicity condition (c) on the conditional event probability, which needs to be invoked to justify the thresholding that lies at the heart of ROC analysis. When theoretical models are fit to empirical ROC curves, the model parameters can be restricted to guarantee concavity. Empirical ROC curves typically fail to be concave, but can be morphed into their concave hull, by subjecting a score to the pool-adjacent violators algorithm, thereby converting it into an isotonic, calibrated probabilistic classifier (Fawcett and Niculescu-Mizil 2007).
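The pool-adjacent-violators step mentioned above can be sketched as follows. This is an illustrative implementation under stated assumptions, not code from the paper or from Fawcett and Niculescu-Mizil (2007): the function name `pav_calibrate` and the toy data are hypothetical, tied scores are pooled before the algorithm runs, and the output maps each unique score to an isotonic estimate of the conditional event probability.

```python
from typing import Dict, List, Sequence


def pav_calibrate(scores: Sequence[float], labels: Sequence[int]) -> Dict[float, float]:
    """Isotonic (nondecreasing) estimate of the event probability at each unique score."""
    # Pool tied scores: label sum and count per unique score.
    groups: Dict[float, List[int]] = {}
    for s, y in zip(scores, labels):
        entry = groups.setdefault(s, [0, 0])
        entry[0] += y
        entry[1] += 1
    # Each block stores [label_sum, count, member_scores].
    blocks: List[list] = []
    for s in sorted(groups):
        total, count = groups[s]
        blocks.append([total, count, [s]])
        # Merge adjacent blocks while the earlier block has the larger mean.
        while len(blocks) > 1 and blocks[-2][0] * blocks[-1][1] > blocks[-1][0] * blocks[-2][1]:
            total, count, members = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2].extend(members)
    return {s: total / count for total, count, members in blocks for s in members}


# Toy data (hypothetical): the raw event frequencies are not monotone in the score.
toy_scores = [1, 2, 3, 4, 5, 6]
toy_labels = [0, 1, 0, 1, 1, 0]
calibrated = pav_calibrate(toy_scores, toy_labels)
```

In the toy example the scores 2 and 3 are pooled to the value 1/2 and the scores 4, 5 and 6 to the value 2/3, so the recalibrated score satisfies the monotonicity condition (c) by construction.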

2.3 An equivalence between ROC curves and probability measures

We move on to provide concise and practically relevant characterizations of ROC curves.

Theorem 4

There is a one-to-one correspondence between ROC curves and probability measures on the unit interval.

Proof

Given a ROC curve, we remove any vertical line segments, except for the respective upper endpoints, to yield the CDF of a probability measure on the unit interval. Conversely, given the CDF of a probability measure on the unit interval, we interpolate vertically at any jump points to obtain a ROC curve. This mapping is a bijection, and save for the symmetries in (4) it is realized by the construction in the proof of Corollary 1. \(\square\)

We say that a curve C in the Euclidean plane is nondecreasing if \(a_0 \le a_1\) is equivalent to \(b_0 \le b_1\) for points \((a_0, b_0)', (a_1, b_1)' \in C\). The following result then is immediate.

Corollary 2

The ROC curves are the nondecreasing curves in the unit square that connect the points \((0,0)'\) and \((1,1)'\).

Natural analogues apply under the further constraints of either strict or non-strict concavity. From applied perspectives, these results support a shift of paradigms in the statistical modelling of ROC curves. In extant practice, the emphasis is on modelling the conditional distributions \(F_0\) and \(F_1\). Our characterizations suggest a subtle but important change of perspective, in that ROC modelling can be approached as an exercise in curve fitting, with any nondecreasing curve that connects \((0,0)'\) to \((1,1)'\) being a permissible candidate.

3 Parametric models, estimation, and testing

The binormal model is by far the most frequently used parametric model and “plays a central role in ROC analysis” (Pepe 2003, p. 81). Specifically, the binormal model assumes that \(F_1\) and \(F_0\) are Gaussian with means \(\mu _1 \ge \mu _0\) and strictly positive variances \(\sigma _0^2\) and \(\sigma _1^2\), respectively. We are in the regular setting of Sect. 2.2, and the resulting ROC curve is represented by the function \(R : [0,1] \rightarrow [0,1]\) with \(R(0) = 0\),

\[ R(p) = \Phi (\mu + \sigma \, \Phi ^{-1}(p)) \quad \text{for} \quad p \in (0,1), \tag{5} \]

and \(R(1) = 1\), where \(\Phi\) is the CDF of the standard normal distribution, \(\mu = (\mu _1 - \mu _0)/\sigma _1 \ge 0\) is a scaled difference in expectations, and \(\sigma = \sigma _0/ \sigma _1\) is the ratio of the respective standard deviations. The associated area under the curve is \(\mathrm{AUC}(\mu ,\sigma ) = \Phi (\mu /\sqrt{1 + \sigma ^2})\). A binormal ROC curve is concave only if \(\sigma = 1\) or, equivalently, if \(F_0\) and \(F_1\) differ in location only.
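As a numerical illustration, the sketch below evaluates the binormal curve in the form \(R(p) = \Phi (\mu + \sigma \, \Phi ^{-1}(p))\), which is the form consistent with the partial derivatives quoted in Sect. 3.2, and compares a midpoint-rule AUC with the closed form. The function names and the parameter values are assumptions chosen for this example; only the Python standard library is used.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # cdf is Phi, inv_cdf is Phi^{-1}


def binormal_roc(p: float, mu: float, sigma: float) -> float:
    """Binormal ROC curve with R(0) = 0 and R(1) = 1."""
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    return STD_NORMAL.cdf(mu + sigma * STD_NORMAL.inv_cdf(p))


def binormal_auc(mu: float, sigma: float) -> float:
    """Closed-form AUC, Phi(mu / sqrt(1 + sigma^2))."""
    return STD_NORMAL.cdf(mu / (1.0 + sigma ** 2) ** 0.5)


def numerical_auc(mu: float, sigma: float, steps: int = 10000) -> float:
    """Midpoint-rule approximation of the area under the binormal curve."""
    h = 1.0 / steps
    return h * sum(binormal_roc((i + 0.5) * h, mu, sigma) for i in range(steps))


# With sigma = 1 the curve stays on or above the diagonal on a grid of points.
above_diagonal = all(binormal_roc(i / 100, 1.0, 1.0) >= i / 100 for i in range(101))
# With sigma = 2 (sigma_0 > sigma_1) the curve dips below the diagonal near p = 0.
dips_low = binormal_roc(0.01, 1.0, 2.0) < 0.01
# With sigma = 0.5 (sigma_1 > sigma_0) it dips below the diagonal near p = 1.
dips_high = binormal_roc(0.999, 1.0, 0.5) < 0.999
```

The two unequal-variance cases show the curve crossing below the diagonal at one end of the unit interval, which rules out concavity.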

Our next result demonstrates that this restriction is unavoidable if location-scale families are used to model the class conditional distributions.

Proposition 1

Given any strictly increasing CDF F on \(\mathbbm {R}\), let \(F_0(s) = F((s - \mu _0)/\sigma _0)\) and \(F_1(s) = F((s - \mu _1)/\sigma _1)\) for some \(\mu _0, \mu _1 \in \mathbbm {R}\) and \(\sigma _0, \sigma _1 > 0\). Then the ROC curve associated with the conditional CDFs \(F_0\) and \(F_1\) is non-concave whenever \(\sigma _0 \not = \sigma _1\).

Proof

If \(\mathrm{HR}(s) < \mathrm{FAR}(s)\) for some \(s \in \mathbbm {R}\), the ROC curve extends below the diagonal and thus is non-concave. We note that \(\mathrm{HR}(s)< \mathrm{FAR}(s) \iff F_0(s)< F_1(s) \iff (s - \mu _0)/\sigma _0 < (s - \mu _1)/\sigma _1\) and consider two cases. If \(\sigma _1 > \sigma _0\) then \(F_0(s) < F_1(s)\) for \(s < (\mu _0 \sigma _1 - \mu _1 \sigma _0)/(\sigma _1 - \sigma _0)\); if \(\sigma _0 > \sigma _1\) then \(F_0(s) < F_1(s)\) for \(s > (\mu _1 \sigma _0 - \mu _0 \sigma _1)/(\sigma _0 - \sigma _1)\). \(\square\)

3.1 The beta model

Motivated and supported by the characterizations in Sect. 2, we propose a curve fitting approach to the statistical modelling of ROC curves, with the two-parameter family of the cumulative distribution functions (CDFs) of beta distributions being a particularly attractive model. Specifically, consider the beta family with ROC curves represented by the function

\[ R(p) = B_{\alpha ,\beta }(p) = \int _0^p b_{\alpha ,\beta }(q) \, \mathrm{d}q \quad \text{for} \quad p \in [0,1], \tag{6} \]

where \(b_{\alpha ,\beta }(q) \propto q^{\alpha - 1} (1-q)^{\beta - 1}\) is the density of the beta distribution with parameter values \(\alpha > 0\) and \(\beta > 0\). This type of ROC curve arises as a special case of the setting in the case study by Zou et al. (2004, p. 1263), which models the class conditional distributions via beta densities. A beta ROC curve is concave if \(\alpha \le 1\) and \(\beta \ge 2 - \alpha\), and its AUC value is \(\mathrm{AUC}(\alpha ,\beta ) = \beta / (\alpha + \beta )\) (Vogel 2019, Appendix 3.C). The condition for concavity is much less stringent than for the binormal family, where it constrains the admissible parameter space to a single dimension.
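The beta ROC curve and its AUC can be checked numerically with the standard library alone. The sketch below is an illustration under stated assumptions: the function names are hypothetical, the beta CDF is computed by a midpoint rule after the substitution \(q = u^{1/\alpha }\) (which removes the singularity of the density at zero when \(\alpha < 1\)), and the code is restricted to \(\beta \ge 1\), which covers the concave case quoted above.

```python
import math


def beta_cdf(p: float, alpha: float, beta: float, steps: int = 2000) -> float:
    """Beta CDF B_{alpha,beta}(p) by the midpoint rule; this sketch assumes beta >= 1."""
    if alpha <= 0.0 or beta < 1.0:
        raise ValueError("sketch requires alpha > 0 and beta >= 1")
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    # int_0^p q^(a-1) (1-q)^(b-1) dq = (1/a) * int_0^(p^a) (1 - u^(1/a))^(b-1) du
    upper = p ** alpha
    h = upper / steps
    integral = h * sum(
        (1.0 - ((i + 0.5) * h) ** (1.0 / alpha)) ** (beta - 1.0) for i in range(steps)
    )
    # Normalizing constant: the beta function, via log-gamma for stability.
    log_norm = math.lgamma(alpha) + math.lgamma(beta) - math.lgamma(alpha + beta)
    return integral / (alpha * math.exp(log_norm))


def beta_auc(alpha: float, beta: float) -> float:
    """Closed-form AUC of the beta ROC curve, beta / (alpha + beta)."""
    return beta / (alpha + beta)


def numerical_beta_auc(alpha: float, beta: float, grid: int = 200) -> float:
    """Midpoint-rule area under the beta ROC curve."""
    return sum(beta_cdf((i + 0.5) / grid, alpha, beta) for i in range(grid)) / grid


def satisfies_concavity_condition(alpha: float, beta: float) -> bool:
    """Sufficient condition for concavity as stated in the text."""
    return alpha <= 1.0 and beta >= 2.0 - alpha
```

For example, with \(\alpha = 1/2\) and \(\beta = 2\) the curve has the closed form \(R(p) = \tfrac{3}{2} (\sqrt{p} - p^{3/2}/3)\), so \(R(1/4) = 0.6875\), and the AUC is \(2/2.5 = 0.8\).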

If further flexibility is desired, mixtures of beta CDFs, i.e., functions of the form

\[ p \mapsto \sum _{k=1}^n w_k \int _0^p b_{\alpha _k,\beta _k}(q) \, \mathrm{d}q, \quad p \in [0,1], \]

where \(w_1, \ldots , w_n > 0\) with \(w_1 + \cdots + w_n = 1\), \(\alpha _1, \ldots , \alpha _n > 0\), and \(\beta _1, \ldots , \beta _n > 0\), approximate any regular ROC curve to any desired accuracy, as demonstrated by the following result. Recall from Sect. 2.2 that in the regular setting the ROC curve can be identified with the function R in (1), where \(F_1\) and \(F_0\) have continuous, strictly positive Lebesgue densities \(f_1\) and \(f_0\) in the interior of an interval, which is their common support. A ROC curve is regular if it arises in this way and strongly regular if furthermore the derivative \(R'\) is bounded.

Theorem 5

For every strongly regular ROC curve R there is a sequence of mixtures of beta CDFs with integer parameters that converges uniformly to R.

Proof

We apply the construction in the proof of Corollary 1 and define \(F_1\) as in (4). Due to the assumption of strong regularity, \(F_1\) admits a density on (0, 1) that can be extended to a continuous function \(f_1\) on [0, 1]. The arguments in Bernstein’s probabilistic proof of the Weierstrass approximation theorem (Levasseur 1984) show that as \(n \rightarrow \infty\) the sequence

\[ m_n(q) = \sum _{k=0}^n f_1 \! \left( \frac{k}{n} \right) \binom{n}{k} q^k (1-q)^{n-k} \]

converges to \(f_1(q)\) uniformly in \(q \in [0,1]\). Furthermore, \(a_n = \int _0^1 m_n(q) \, \mathrm{d}q \rightarrow \int _0^1 f_1(q) \, \mathrm{d}q = 1\) as \(n \rightarrow \infty\), and for \(n = 1, 2, \ldots\) the mapping \(p \mapsto M_n(p) = \int _0^p m_n(q) \, \mathrm{d}q / a_n\) represents a mixture of beta CDFs. The uniform convergence of \(m_n\) to \(f_1\) implies that for every \(\epsilon > 0\) there exists an \(n'\) such that

for all integers \(n > n'\) uniformly in \(p \in [0,1]\). The statement of the theorem follows. \(\square\)
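The Bernstein construction behind this proof can be tried out numerically. The sketch below relies on the identity \(\binom{n}{k} q^k (1-q)^{n-k} = b_{k+1,n-k+1}(q)/(n+1)\), so that a degree-n Bernstein polynomial of a density is, after normalization, a mixture of beta densities with integer parameters. The function names and the target curve \(R(p) = 1 - (1-p)^3\) are assumptions chosen for illustration, and the construction is applied directly to the density of the target curve.

```python
import math
from typing import Callable, List, Tuple


def beta_cdf_int(p: float, a: int, b: int) -> float:
    """CDF of Beta(a, b) for positive integers a, b, via the binomial tail identity."""
    n = a + b - 1
    return sum(math.comb(n, j) * p ** j * (1.0 - p) ** (n - j) for j in range(a, n + 1))


def bernstein_mixture(density: Callable[[float], float], n: int) -> List[Tuple[float, int, int]]:
    """Components (weight, alpha, beta) of the normalized degree-n Bernstein polynomial."""
    raw = [density(k / n) / (n + 1) for k in range(n + 1)]
    a_n = sum(raw)  # integral of the Bernstein polynomial over [0, 1]
    # Components with zero weight are dropped, so all weights are strictly positive.
    return [(w / a_n, k + 1, n - k + 1) for k, w in enumerate(raw) if w > 0.0]


def mixture_cdf(p: float, components: List[Tuple[float, int, int]]) -> float:
    return sum(w * beta_cdf_int(p, a, b) for w, a, b in components)


def sup_error(n: int) -> float:
    """Largest gap on a grid between the mixture and the target R(p) = 1 - (1 - p)^3."""
    components = bernstein_mixture(lambda q: 3.0 * (1.0 - q) ** 2, n)
    grid = [i / 100 for i in range(101)]
    return max(abs(mixture_cdf(p, components) - (1.0 - (1.0 - p) ** 3)) for p in grid)


error_small_n = sup_error(5)
error_large_n = sup_error(50)
```

For this target the approximation error shrinks at rate 1/n, so moving from n = 5 to n = 50 reduces the largest gap by roughly a factor of ten.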

3.2 Minimum distance estimation

For the parametric estimation of ROC curves for continuous scores various methods have been proposed, including maximum likelihood, approaches based on generalized linear models, and minimum distance estimation (Pepe 2003; Zhou et al. 2011). Here we pursue the minimum distance estimator, which is much in line with our curve fitting approach.

We assume a parametric model in the regular setting of Sect. 2.2, where now the ROC curve depends on a parameter \(\theta \in \Theta \subseteq \mathbbm {R}^k\). Specifically, we suppose that for each \(\theta \in \Theta\) the ROC curve is represented by a smooth function

\[ R(p;\theta ) = 1 - F_{1,\theta }(F_{0,\theta }^{-1}(1 - p)), \quad p \in (0,1), \]

where \(F_{1,\theta }\) and \(F_{0,\theta }\) admit continuous, strictly positive densities \(f_{1,\theta }\) and \(f_{0,\theta }\) in the interior of an interval, which is their common support. We also require that the true parameter value \(\theta _0\) is in the interior of the parameter space \(\Theta\), where the derivative

\[ R'(p;\theta ) = \frac{\partial R(p;\theta )}{\partial p} \]

exists and is finite for \(p \in (0,1)\), and the partial derivative \(R_{(i)}(p;\theta )\) with respect to component i of the parameter vector \(\theta = (\theta _1, \ldots , \theta _k)\) exists and is continuous for \(i = 1, \ldots , k\) and \(p \in (0,1)\).

We adopt the asymptotic scenario of Hsieh and Turnbull (1996), where at sample size n there are \(n_0\) and \(n_1 = n - n_0\) independent draws from \(F_{0,\theta }\) and \(F_{1,\theta }\) with corresponding binary outcomes of zero and one, respectively, where \(\lambda _n = n_0/n_1\) converges to some \(\lambda \in (0,\infty )\) as \(n \rightarrow \infty\). For \(\theta \in \Theta\) we define the difference process \(\xi _n(p;\theta ) = \hat{R}_n(p) - R(p;\theta )\), where the function \(\hat{R}_n(p)\) represents the empirical ROC curve. The minimum distance estimator \(\hat{\theta }_n = (\hat{\theta }_1, \dots , \hat{\theta }_k)_n\) then satisfies

\[ \Vert \xi _n(\cdot \, ;\hat{\theta }_n) \Vert = \min _{\theta \in \Theta } \Vert \xi _n(\cdot \, ;\theta ) \Vert , \]

where \(\Vert \xi _n(\cdot \, ;\theta ) \Vert = (\int _0^1 \xi _n(p;\theta )^2 \, \mathrm{d}p)^{1/2}\) is the standard \(L_2\)-norm. If n is large, \(\hat{\theta }_n\) exists and is unique with probability approaching one (Millar 1984), and so we follow the extant literature in ignoring issues of existence and uniqueness.
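A minimum distance fit can be sketched for the binormal family as follows. This is an illustration under several assumptions, not the authors' implementation: the optimizer is a coarse grid search rather than a numerical minimizer, the empirical ROC curve is evaluated in its step-function form \(1 - \hat{F}_1(\hat{F}_0^{-1}(1-p))\) rather than with linear interpolation, the \(L_2\)-norm is approximated by a midpoint rule, and the data are deterministic normal quantiles rather than random draws. All function and variable names are hypothetical.

```python
import bisect
import math
from statistics import NormalDist

N01 = NormalDist()  # standard normal: cdf is Phi, inv_cdf is Phi^{-1}


def empirical_roc_on_grid(neg, pos, m=100):
    """Step-function empirical ROC curve evaluated at the m midpoints of [0, 1]."""
    neg, pos = sorted(neg), sorted(pos)
    values = []
    for i in range(m):
        p = (i + 0.5) / m
        # Empirical (1 - p)-quantile of the scores with outcome zero.
        k = max(math.ceil((1.0 - p) * len(neg)), 1)
        threshold = neg[k - 1]
        # Hit rate: share of outcome-one scores strictly above the threshold.
        values.append((len(pos) - bisect.bisect_right(pos, threshold)) / len(pos))
    return values


def l2_distance(mu, sigma, roc_hat, z_grid):
    """Midpoint-rule approximation of the L2 norm of R_hat - R(.; mu, sigma)."""
    total = sum((r - N01.cdf(mu + sigma * z)) ** 2 for r, z in zip(roc_hat, z_grid))
    return math.sqrt(total / len(roc_hat))


def fit_binormal(neg, pos, m=100):
    """Grid search for the (mu, sigma) minimizing the L2 distance; step size 0.05."""
    roc_hat = empirical_roc_on_grid(neg, pos, m)
    z_grid = [N01.inv_cdf((i + 0.5) / m) for i in range(m)]
    candidates = ((0.05 * a, 0.05 * b) for a in range(0, 61) for b in range(4, 41))
    best = min(candidates, key=lambda th: l2_distance(th[0], th[1], roc_hat, z_grid))
    return best[0], best[1], l2_distance(best[0], best[1], roc_hat, z_grid)


# Deterministic toy data: scores follow N(0, 1) given Y = 0 and N(2, 4) given Y = 1,
# which corresponds to mu = (2 - 0) / 2 = 1 and sigma = 1 / 2 in the binormal model.
n = 400
neg_scores = [N01.inv_cdf((i + 0.5) / n) for i in range(n)]
pos_scores = [2.0 + 2.0 * N01.inv_cdf((i + 0.5) / n) for i in range(n)]
mu_hat, sigma_hat, d_hat = fit_binormal(neg_scores, pos_scores)
```

The grid search is chosen here only for transparency. In practice a general-purpose optimizer would replace it, and the same distance function can be reused with any other parametric family by swapping the model curve.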

The minimum distance estimator has a multivariate normal limit distribution in this setting, as suggested by the asymptotic result of Hsieh and Turnbull (1996) that under the usual \(\sqrt{n}\) scaling the difference process \(\xi _n(p; \theta )\) has limit

at \(\theta = \theta _0\), where \(B_1\) and \(B_2\) are independent copies of a Brownian bridge. In contrast to the results in Section 4 of Hsieh and Turnbull (1996), which concern minimum distance estimation for the binormal model and ordinal dominance curves, the following theorem applies to general parametric families and ROC curves.

Theorem 6

In the above setting the minimum distance estimator \(\hat{\theta }_n\) satisfies

as \(n \rightarrow \infty\), where the matrices A and C have entries

for \(i, j = 1, \ldots , k\), respectively, and where

is the covariance function of the process \(W(p;\theta )\) in (7) at \(\theta = \theta _0\).

Proof

We are in the setting of Theorem 2.2 of Hsieh and Turnbull (1996), according to which there exists a probability space with sequences \((B_{1,n})\) and \((B_{2,n})\) of independent versions of Brownian bridges such that

almost surely, and uniformly in p on every interval \([a,b] \subset (0,1)\). We proceed to verify the regularity conditions for Theorem 3.6 of Millar (1984). As regards the identifiability condition (3.2) and the differentiability condition (3.5) it suffices to note that \(\xi _n(p; \theta ) - \xi _n(p; \theta _0) = R(p;\theta _0) - R(p;\theta )\) is nonrandom, continuously differentiable with respect to p and the components of the parameter vector \(\theta\), and independent of n. The boundedness condition (3.3) is trivially satisfied and the convergence condition (3.4) is implied by (11). Finally, we apply (2.17), (2.18), (2.19), and (2.20) in Section II of Millar (1984) to yield (8) and (9), where the covariance function of the process in (7) is

whence \(K(s,t;\theta _0)\) is as stated in (10). \(\square\)

This result allows for asymptotic inference about model parameters, by plugging in \(\hat{\theta }_n\) for \(\theta _0\) in the expression for the asymptotic covariance. For the binormal model (5) we have \(\theta = (\mu ,\sigma )\), \(R_{(\mu )}(p;\theta ) = \varphi (\mu + \sigma \, \Phi ^{-1}(p))\), \(R_{(\sigma )}(p;\theta ) = \Phi^{-1}(p) \varphi (\mu + \sigma \, \Phi^{-1}(p))\), and \(R'(p;\theta ) = \sigma \, \varphi (\mu + \sigma \, \Phi ^{-1}(p)) / \varphi (\Phi ^{-1}(p))\), where \(\varphi\) is the standard normal density, so that the integrals in (9) can readily be evaluated numerically. Under the beta model (6) we have \(\theta = (\alpha ,\beta )\) and \(R'(p;\theta ) = b_{\alpha ,\beta }(p)\). While closed form expressions for the partial derivatives of \(R(p;\theta )\) with respect to \(\alpha\) and \(\beta\) exist, they are difficult to evaluate, and we approximate them with finite differences.

3.3 Testing goodness-of-fit

A natural question is whether a given parametric model fits the data at hand, and for doing this we propose a simple Monte Carlo test that applies to any parametric model class \(\mathcal {C}\). Specifically, given a dataset of size n with \(n_0\) instances where the binary outcome is zero and \(n_1 = n - n_0\) instances where it is one, our goodness-of-fit test proceeds as follows. We use the notation of Sect. 3.2 and denote the number of Monte Carlo replicates by M.

1. Fit a model from class \(\mathcal {C}\) to the empirical ROC curve, to yield the minimum distance estimate \(\theta _\mathrm{data}\). Compute \(d_\mathrm{data}\) as the \(L_2\)-distance between the fitted and the empirical ROC curve.

2. For \(m = 1, \ldots , M\),

   (a) draw a sample of size n under \(\theta _\mathrm{data}\), with \(n_0\) and \(n_1\) instances from \(F_{0,\theta _\mathrm{data}}\) and \(F_{1,\theta _\mathrm{data}}\) and associated binary outcomes of zero and one, respectively,

   (b) fit a model from class \(\mathcal {C}\) to the empirical ROC curve of this sample, to yield the minimum distance estimate, and

   (c) compute \(d_m\) as the \(L_2\)-distance between the fitted and the empirical ROC curve of this sample.

3. Find a p-value based on the rank of \(d_\mathrm{data}\) when pooled with \(d_1, \ldots , d_M\). Specifically, \(p = (\# \{ i = 1, \ldots , M : d_\mathrm{data} \le d_i \} + 1) / (M+1)\).

Under the null hypothesis of the ROC curve being generated by a random sample within class \(\mathcal {C}\) the Monte Carlo p-value is very nearly uniform, as is readily seen in simulation experiments.
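The test procedure can be organized as in the following sketch. The p-value formula is the one given in step 3; everything else is an assumption made for illustration. In particular, `simulate_distance` is a hypothetical user-supplied callable that performs steps 2(a) to 2(c) for one replicate (draw a sample under the fitted parameter, refit, and return the resulting \(L_2\)-distance), and the uniform toy simulator at the end merely stands in for it.

```python
import random
from typing import Callable, List


def monte_carlo_p_value(d_data: float, d_sim: List[float]) -> float:
    """p = (#{i : d_data <= d_i} + 1) / (M + 1) for simulated distances d_1, ..., d_M."""
    exceed = sum(1 for d in d_sim if d_data <= d)
    return (exceed + 1) / (len(d_sim) + 1)


def goodness_of_fit_test(
    d_data: float,
    simulate_distance: Callable[[random.Random], float],
    n_replicates: int = 999,
    seed: int = 0,
) -> float:
    """Run M Monte Carlo replicates and return the rank-based p-value."""
    rng = random.Random(seed)
    # Each call covers steps 2(a)-(c): simulate, refit, and measure the distance.
    d_sim = [simulate_distance(rng) for _ in range(n_replicates)]
    return monte_carlo_p_value(d_data, d_sim)


# Toy stand-in for the simulation step (hypothetical): distances uniform on [0, 1).
def toy_simulator(rng: random.Random) -> float:
    return rng.random()


p_extreme = goodness_of_fit_test(2.0, toy_simulator)   # d_data exceeds every replicate
p_typical = goodness_of_fit_test(-1.0, toy_simulator)  # d_data below every replicate
```

With M = 999 replicates the smallest attainable p-value is 1/1000, which arises when the observed distance exceeds all simulated distances.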

4 Empirical examples

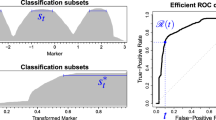

Fig. 1 Empirical (black), fitted binormal (red) and fitted beta (blue) ROC curves in the unrestricted (solid) and concave (dashed) case for the datasets from Table 1 (Color figure online)

Basic information about the datasets in our empirical examples is given in Table 1. In the dataset from Etzioni et al. (1999), the ratio of free to total prostate-specific antigen (PSA) two years prior to diagnosis in serum from patients later found to have prostate cancer is compared to age-matched controls. The datasets from Sing et al. (2005, Figure 1a) and Robin et al. (2011, Figure 1) are prominent examples in the widely used ROCR and pROC packages in R. They concern a score from a linear support vector machine (SVM) trained to predict the usage of HIV coreceptors, and the S100\(\beta\) biomarker as it relates to a binary clinical outcome. The dataset from Vogel et al. (2018, Figure 6d) considers probability of precipitation forecasts from the European Centre for Medium-Range Weather Forecasts (ECMWF) for the binary event of precipitation occurrence in the West Sahel region.

Figure 1 shows binormal and beta ROC curves fitted to the empirical ROC curves, both in the unrestricted case and under the constraint of concavity. The respective unrestricted and restricted minimum distance estimates, the fit in terms of the \(L_2\)-distance to the empirical ROC curve, and the p-value from the goodness-of-fit test of Sect. 3.3 with \(M = 999\) Monte Carlo replicates, are given in Table 1. In the unrestricted case, the binormal and beta fits are visually nearly indistinguishable. The fitted binormal ROC curves fail to be concave and change markedly when concavity is enforced. For the beta ROC curves, the differences between restricted and unrestricted fits are less pronounced, and in the example from Vogel et al. (2018) the unrestricted fit is concave. For this dataset, our goodness-of-fit test rejects both the unrestricted and the concave binormal model, but does not reject the beta model—so the use of the more flexible beta family is of relevance and import. Generally, in the constrained case the improvement in the fit under the more flexible beta model as compared to the classical binormal model is substantial.

For more detailed analyses of these datasets, which include a demonstration of the use of our asymptotic results to generate confidence bands, as well as four-parameter extensions of the beta family, we refer to Vogel (2019, Section 3.4). Omar and Ivrissimtzis (2019) discuss the use of parametric families of ROC curves specifically in machine learning. Datasets and code in R (R Core Team 2021) for replicating our results and implementing the proposed estimators and tests are available online (Vogel and Jordan 2021).

Notes

We use the notation \((a,b)'\) for a column vector in \(\mathbbm {R}^2\).

References

Etzioni, R., Pepe, M., Longton, G., Hu, C., & Goodman, G. (1999). Incorporating the time dimension in receiver operating characteristic curves: A case study of prostate cancer. Medical Decision Making, 19, 242–251.

Fawcett, T. (2006). An introduction to ROC analysis. Pattern Recognition Letters, 27, 861–874.

Fawcett, T., & Niculescu-Mizil, A. (2007). PAV and the ROC convex hull. Machine Learning, 68, 97–106.

Flach, P. A. (2016). ROC analysis. In C. Sammut & G. I. Webb (Eds.), Encyclopedia of Machine Learning and Data Mining. Boston: Springer.

Hsieh, F., & Turnbull, B. W. (1996). Nonparametric and semiparametric estimation of the receiver operating characteristic curve. Annals of Statistics, 24, 25–40.

Krzanowski, W. J., & Hand, D. J. (2009). ROC Curves for Continuous Data. Boca Raton: CRC Press.

Levasseur, K. M. (1984). A probabilistic proof of the Weierstrass approximation theorem. American Mathematical Monthly, 91, 249–250.

Millar, P. W. (1984). A general approach to the optimality of minimum distance estimators. Transactions of the American Mathematical Society, 286, 377–418.

Mösching, A., & Dümbgen, L. (2021). Estimation of a likelihood ratio ordered family of distributions — with a connection to total positivity. Preprint, arXiv:2007.11521v2.

Omar, L., & Ivrissimtzis, I. (2019). Using theoretical ROC curves for analysing machine learning binary classifiers. Pattern Recognition Letters, 128, 447–451.

Pepe, M. S. (2003). The Statistical Evaluation of Medical Tests for Classification and Prediction. Oxford: Oxford University Press.

R Core Team. (2021). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

Robin, X., Turck, N., Hainard, A., Tiberti, N., Lisacek, F., Sanchez, J.-C., & Müller, M. (2011). pROC: An open-source package for R and S\(+\) to analyze and compare ROC curves. BMC Bioinformatics, 12, 77.

Sing, T., Sander, O., Beerenwinkel, N., & Lengauer, T. (2005). ROCR: Visualizing classifier performance in R. Bioinformatics, 21, 3940–3941.

Vogel, P. (2019). Assessing Predictive Performance: From Precipitation Forecasts Over the Tropics to Receiver Operating Characteristic Curves and Back. PhD Thesis, Karlsruhe Institute of Technology, Faculty of Mathematics, available online at https://publikationen.bibliothek.kit.edu/1000091649.

Vogel, P., & Jordan, A. I. (2021). Replication package for “Receiver operating characteristic (ROC) curves: Equivalences, beta model, and minimum distance estimation” (version v0.1.0) [data set]. Zenodo. https://doi.org/10.5281/zenodo.4681331

Vogel, P., Knippertz, P., Fink, A. H., Schlueter, A., & Gneiting, T. (2018). Skill of global raw and postprocessed ensemble predictions of rainfall over northern tropical Africa. Weather and Forecasting, 33, 369–388.

Zhou, X.-H., Obuchowski, N. A., & McClish, D. K. (2011). Statistical Methods in Diagnostic Medicine (2nd ed.). Hoboken: Wiley.

Zou, K. H., Wells, W. M., III, Kikinis, R., & Warfield, S. K. (2004). Three validation metrics for automated probabilistic image segmentation of brain tumours. Statistics in Medicine, 23, 1259–1282.

Acknowledgements

We are grateful to the handling editors, Tom Fawcett, Hendrik Blockeel and Peter Flach, two anonymous referees, Alexander I. Jordan, Johannes Resin, Dirk Tasche and Johanna Ziegel, and our project collaborators Andreas H. Fink, Peter Knippertz and Andreas Schlueter for a wealth of comments and encouragement. Furthermore, we thank Alexander I. Jordan for code review.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Funded by the Klaus Tschira Foundation and Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)–Project-ID 257899354–TRR 165.

Editors: Tom Fawcett, Hendrik Blockeel

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gneiting, T., Vogel, P. Receiver operating characteristic (ROC) curves: equivalences, beta model, and minimum distance estimation. Mach Learn 111, 2147–2159 (2022). https://doi.org/10.1007/s10994-021-06115-2


DOI: https://doi.org/10.1007/s10994-021-06115-2