Abstract

Semi-supervised learning is the branch of machine learning concerned with using labelled as well as unlabelled data to perform certain learning tasks. Conceptually situated between supervised and unsupervised learning, it permits harnessing the large amounts of unlabelled data available in many use cases in combination with typically smaller sets of labelled data. In recent years, research in this area has followed the general trends observed in machine learning, with much attention directed at neural network-based models and generative learning. The literature on the topic has also expanded in volume and scope, now encompassing a broad spectrum of theory, algorithms and applications. However, no recent surveys exist to collect and organize this knowledge, impeding the ability of researchers and engineers alike to utilize it. Filling this void, we present an up-to-date overview of semi-supervised learning methods, covering earlier work as well as more recent advances. We focus primarily on semi-supervised classification, where the large majority of semi-supervised learning research takes place. Our survey aims to provide researchers and practitioners new to the field as well as more advanced readers with a solid understanding of the main approaches and algorithms developed over the past two decades, with an emphasis on the most prominent and currently relevant work. Furthermore, we propose a new taxonomy of semi-supervised classification algorithms, which sheds light on the different conceptual and methodological approaches for incorporating unlabelled data into the training process. Lastly, we show how the fundamental assumptions underlying most semi-supervised learning algorithms are closely connected to each other, and how they relate to the well-known semi-supervised clustering assumption.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In machine learning, a distinction has traditionally been made between two major tasks: supervised and unsupervised learning (Bishop 2006). In supervised learning, one is presented with a set of data points consisting of some input x and a corresponding output value y. The goal is, then, to construct a classifier or regressor that can estimate the output value for previously unseen inputs. In unsupervised learning, on the other hand, no specific output value is provided. Instead, one tries to infer some underlying structure from the inputs. For instance, in unsupervised clustering, the goal is to infer a mapping from the given inputs (e.g. vectors of real numbers) to groups such that similar inputs are mapped to the same group.

Semi-supervised learning is a branch of machine learning that aims to combine these two tasks (Chapelle et al. 2006b; Zhu 2008). Typically, semi-supervised learning algorithms attempt to improve performance in one of these two tasks by utilizing information generally associated with the other. For instance, when tackling a classification problem, additional data points for which the label is unknown might be used to aid in the classification process. For clustering methods, on the other hand, the learning procedure might benefit from the knowledge that certain data points belong to the same class.

As is the case for machine learning in general, a large majority of the research on semi-supervised learning is focused on classification. Semi-supervised classification methods are particularly relevant to scenarios where labelled data is scarce. In those cases, it may be difficult to construct a reliable supervised classifier. This situation occurs in application domains where labelled data is expensive or difficult obtain, like computer-aided diagnosis, drug discovery and part-of-speech tagging. If sufficient unlabelled data is available and under certain assumptions about the distribution of the data, the unlabelled data can help in the construction of a better classifier. In practice, semi-supervised learning methods have also been applied to scenarios where no significant lack of labelled data exists: if the unlabelled data points provide additional information that is relevant for prediction, they can potentially be used to achieve improved classification performance.

A plethora of learning methods exists, each with their own characteristics, advantages and disadvantages. The most recent comprehensive survey of the area was published by Zhu in 2005 and last updated in 2008 [see Zhu (2008)]. The book by Chapelle et al. (2006b) and the introductory book by Zhu and Goldberg (2009) also provide good bases for studying earlier work on semi-supervised learning. More recently, Subramanya and Talukdar (2014) provided an overview of several graph-based techniques, and Triguero et al. (2015) reviewed and analyzed pseudo-labelling techniques, a class of semi-supervised learning methods.

Since the survey by Zhu (2008) was published, some important developments have taken place in the field of semi-supervised learning. Across the field, new learning approaches have been proposed, and existing approaches have been extended, improved, and analyzed in more depth. Additionally, the rise in popularity of (deep) neural networks (Goodfellow 2017) for supervised learning has prompted new approaches to semi-supervised learning, driven by the simplicity of incorporating unsupervised loss terms into the cost functions of neural networks. Lastly, there has been increased attention for the development of robust semi-supervised learning methods that do not degrade performance, and for the evaluation of semi-supervised learning methods for practical purposes.

In this survey, we aim to provide the reader with a comprehensive overview of the current state of the research area of semi-supervised learning, covering early work and recent advances, and providing explanations of key algorithms and approaches. We present a new taxonomy for semi-supervised classification methods that captures the assumptions underlying each group of methods as well as the way in which they relate to existing supervised methods. In this, we provide a perspective on semi-supervised learning that allows for a more thorough understanding of different approaches and the connections between them. Furthermore, we shed new light on the fundamental assumptions underlying semi-supervised learning, and show how they connect to the so-called cluster assumption.

Although we aim to provide a comprehensive survey on semi-supervised learning, we cannot possibly cover every method in existence. Due to the sheer size of the literature on the topic, this would not only be beyond the scope of this article, but also distract from the key insights which we wish to provide to the reader. Instead, we focus on the most influential work and the most important developments in the area over the past twenty years.

The rest of this article is structured as follows. The basic concepts and assumptions of semi-supervised learning are covered in Sect. 2, where we also make a connection to clustering. In Sect. 3, we present our taxonomy of semi-supervised learning methods, which forms the conceptual basis for the remainder of our survey. Inductive methods are covered in Sects. 4 through 6. We first consider wrapper methods (Sect. 4), followed by unsupervised preprocessing (Sect. 5), and finally, we cover intrinsically semi-supervised methods (Sect. 6). Sect. 7 covers transductive methods, which form the second major branch of our taxonomy. Semi-supervised regression and clustering are discussed in Sect. 8. Finally, in Sect. 9, we provide some prospects for the future of semi-supervised learning.

2 Background

In traditional supervised learning problems, we are presented with an ordered collection of l labelled data points \(D_L = ((x_i, y_i))_{i=1}^l\). Each data point \((x_i, y_i)\) consists of an object \(x_i \in \mathcal {X}\) from a given input space \(\mathcal {X}\), and has an associated label \(y_i\), where \(y_i\) is real-valued in regression problems and categorical in classification problems. Based on a collection of these data points, usually called the training data, supervised learning methods attempt to infer a function that can successfully determine the label \(y^*\) of some previously unseen input \(x^*\).

In many real-world classification problems, however, we also have access to a collection of u data points, \(D_U = (x_i)_{i=l+1}^{l+u}\), whose labels are unknown. For instance, the data points for which we want to make predictions, usually called the test data, are unlabelled by definition. Semi-supervised classification methods attempt to utilize unlabelled data points to construct a learner whose performance exceeds the performance of learners obtained when using only the labelled data. In the remainder of this survey, we denote with \(X_L\) and \(X_U\) the collection of input objects for the labelled and unlabelled samples, respectively.Footnote 1

There are many cases where unlabelled data can help in constructing a classifier. Consider, for example, the problem of document classification, where we wish to assign topics to a collection of text documents (such as news articles). Assuming our documents are represented by the set of words that appear in it, one could train a simple supervised classifier that, for example, learns to recognize that documents containing the word “neutron” are usually about physics. This classifier might work well on documents containing terms that it has seen in the training data, but will inherently fail when a document does not contain predictive words that also occurred in the training set. For example, if we encounter a physics document about particle accelerators that does not contain the word “neutron”, the classifier is unable to recognize it as a document concerning physics. This is where semi-supervised learning comes in. If we consider the unlabelled data, there might be documents that connect the word “neutron” to the phrase “particle accelerator”. For instance, the word “neutron” would often occur in a document that also contains the word “quark”. Furthermore, the word “quark” would regularly co-occur with the phrase “particle accelerator”, which guides the classifiers towards classifying these documents as revolving around physics as well, despite having never seen the phrase “particle accelerator” in the labelled data.

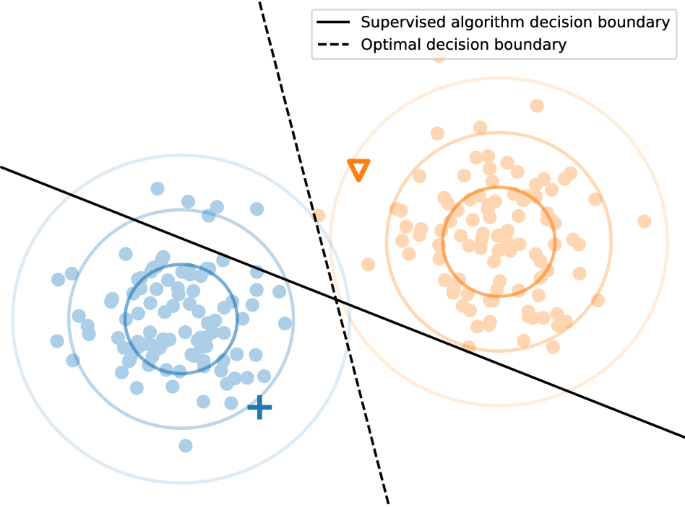

Figure 1 provides some further intuition towards the use of unlabelled data for classification. We consider an artificial classification problem with two classes. For both classes, 100 samples are drawn from a 2-dimensional Gaussian distribution with identical covariance matrices. The labelled data set is then constructed by taking one sample from each class. Any supervised learning algorithm will most likely obtain as the decision boundary the solid line, which is perpendicular to the line segment connecting the two labelled data points and intersects it in the middle. However, this is quite far from the optimal decision boundary. As is clear from this figure, the clusters we can infer from the unlabelled data can help us considerably in placing the decision boundary: assuming that the data stems from two Gaussian distributions, a simple semi-supervised learning algorithm can infer a close-to-optimal decision boundary.

A basic example of binary classification in the presence of unlabelled data. The unlabelled data points are coloured according to their true label. The coloured, unfilled circles depict the contour curves of the input data distribution corresponding to standard deviations of 1, 2 and 3 (Color figure online)

2.1 Assumptions of semi-supervised learning

A necessary condition of semi-supervised learning is that the underlying marginal data distribution p(x) over the input space contains information about the posterior distribution p(y|x). If this is the case, one might be able to use unlabelled data to gain information about p(x), and thereby about p(y|x). If, on the other hand, this condition is not met, and p(x) contains no information about p(y|x), it is inherently impossible to improve the accuracy of predictions based on the additional unlabelled data (Zhu 2008).

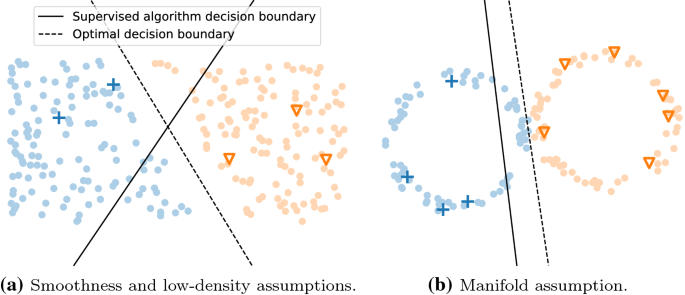

Fortunately, the previously mentioned condition appears to be satisfied in most learning problems encountered in the real world, as is suggested by the successful application of semi-supervised learning methods in practice. However, the way in which p(x) and p(y|x) interact is not always the same. This has given rise to the semi-supervised learning assumptions, which formalize the types of expected interaction (Chapelle et al. 2006b). The most widely recognized assumptions are the smoothness assumption (if two samples x and \(x'\) are close in the input space, their labels y and \(y'\) should be the same), the low-density assumption (the decision boundary should not pass through high-density areas in the input space), and the manifold assumption (data points on the same low-dimensional manifold should have the same label). These assumptions are the foundation of most, if not all, semi-supervised learning algorithms, which generally depend on one or more of them being satisfied, either explicitly or implicitly. Throughout this survey, we will elaborate on the underlying assumptions utilized by each specific learning algorithm. The assumptions are explained in more detail below; a visual representation is provided in Fig. 2.

2.1.1 Smoothness assumption

The smoothness assumption states that, for two input points \(x, x' \in \mathcal X\) that are close by in the input space, the corresponding labels \(y, y'\) should be the same. This assumption is also commonly used in supervised learning, but has an extended benefit in the semi-supervised context: the smoothness assumption can be applied transitively to unlabelled data. For example, assume that a labelled data point \(x_1 \in X_L\) and two unlabelled data points \(x_2, x_3 \in X_U\) exist, such that \(x_1\) is close to \(x_2\) and \(x_2\) is close to \(x_3\), but \(x_1\) is not close to \(x_3\). Then, because of the smoothness assumption, we can still expect \(x_3\) to have the same label as \(x_1\), since proximity—and thereby the label—is transitively propagated through \(x_2\).

2.1.2 Low-density assumption

The low-density assumption implies that the decision boundary of a classifier should preferably pass through low-density regions in the input space. In other words, the decision boundary should not pass through high-density regions. The assumption is defined over p(x), the true distribution of the input data. When considering a limited set of samples from this distribution, it essentially means that the decision boundary should lie in an area where few data points are observed. In that light, the low-density assumption is closely related to the smoothness assumption; in fact, it can be considered the counterpart of the smoothness assumption for the underlying data distribution.

Suppose that a low-density area exists, i.e. an area \(R \subset \mathcal {X}\) where p(x) is low. Then very few observations are expected to be contained in R, and it is thus unlikely that any pair of similar data points in R is observed. If we place the decision boundary in this low-density area, the smoothness assumption is not violated, since it only concerns pairs of similar data points. For high-density areas, on the other hand, many data points can be expected. Thus, placing the decision boundary in a high-density region violates the smoothness assumption, since the predicted labels would then be dissimilar for similar data points.

The converse is also true: if the smoothness assumption holds, then any two data points that lie close together have the same label. Therefore, in any densely populated area of the input space, all data points are expected to have the same label. Consequently, a decision boundary can be constructed that passes only through low-density areas in the input space, thus satisfying the low-density assumption as well. Due to their close practical relation, we depict the low-density assumption and the smoothness assumption in a single illustration in Fig. 2.

2.1.3 Manifold assumption

In machine learning problems where the data can be represented in Euclidean space, the observed data points in the high-dimensional input space \(\mathbb {R}^d\) are usually concentrated along lower-dimensional substructures. These substructures are known as manifolds: topological spaces that are locally Euclidean. For instance, when we consider a 3-dimensional input space where all points lie on the surface of a sphere, the data can be said to lie on a 2-dimensional manifold. The manifold assumption in semi-supervised learning states that (a) the input space is composed of multiple lower-dimensional manifolds on which all data points lie and (b) data points lying on the same manifold have the same label. Consequently, if we are able to determine which manifolds exist and which data points lie on which manifold, the class assignments of unlabelled data points can be inferred from the labelled data points on the same manifold.

2.2 Connection to clustering

In semi-supervised learning research, an additional assumption that is often included is the cluster assumption, which states that data points belonging to the same cluster belong to the same class (Chapelle et al. 2006b). We argue, however, that the previously mentioned assumptions and the cluster assumption are not independent of each other but, rather, that the cluster assumption is a generalization of the other assumptions.

Consider an input space \(\mathcal {X}\) with some objects \(X \subset \mathcal {X}\), drawn from the distribution p(x). A cluster, then, is a set of data points \(C \subseteq X\) that are more similar to each other than to other data points in X, according to some concept of similarity (Anderberg 1973). Determining clusters corresponds to finding some function \(f:X \rightarrow \mathcal {Y}\) that maps each input in \(x \in X\) to a cluster with label \(y = f(x)\), where each cluster label \(y \in \mathcal {Y}\) uniquely identifies one cluster. Since we do not have direct access to p(x) to determine a suitable clustering, we need to rely on some concept of similarity between data points in \(\mathcal {X}\), according to which we can assign clusters to similar data points.

The concept of similarity we choose, often implicitly, dictates what constitutes a cluster. Although the efficacy of any particular clustering method for finding these clusters depends on many other factors, the concept of similarity uniquely defines the interaction between p(x) and p(y|x). Therefore, whether two points belong to the same cluster can be derived from their similarity to each other and to other points. From our perspective, the smoothness, low-density, and manifold assumptions boil down to different definitions of the similarity between points: the smoothness assumption states that points that are close to each other in input space are similar; the low-density assumption states that points in the same high-density area are similar; and the manifold assumption states that points that lie on the same low-dimensional manifold are similar. Consequently, the semi-supervised learning assumptions can be seen as more specific instances of the cluster assumption: that similar points tend to belong to the same group.

One could even argue that the cluster assumption corresponds to the necessary condition for semi-supervised learning: that p(x) carries information on p(y|x). In fact, assuming the output space \(\mathcal {Y}\) contains the labels of all possible clusters, the necessary condition for semi-supervised learning to succeed can be seen to be the necessary condition for clustering to succeed. In other words: if the data points (both unlabelled and labelled) cannot be meaningfully clustered, it is impossible for a semi-supervised learning method to improve on a supervised learning method.

2.3 When does semi-supervised learning work?

The primary goal of semi-supervised learning is to harness unlabelled data for the construction of better learning procedures. As it turns out, this is not always easy or even possible. As mentioned earlier, unlabelled data is only useful if it carries information useful for label prediction that is not contained in the labelled data alone or cannot be easily extracted from it. To apply any semi-supervised learning method in practice, the algorithm then needs to be able to extract this information. For practitioners and researchers alike, this begs the question: when is this the case?

Unfortunately, it has proven difficult to find a practical answer to this question. Not only is it difficult to precisely define the conditions under which any particular semi-supervised learning algorithm may work, it is also rarely straightforward to evaluate to what extent these conditions are satisfied. However, one can reason about the applicability of different learning methods on various types of problems. Graph-based methods, for example, typically rely on a local similarity measure to construct a graph over all data points. To apply such methods successfully, it is important that a meaningful local similarity measure can be devised. In high-dimensional data, such as images, where Euclidean feature distance is rarely a good indicator of the similarity between data points, this is often difficult. As can be seen in the literature, most semi-supervised learning approaches for images rely on a weak variant of the smoothness assumption that requires predictions to be invariant to minor perturbations in the input (Rasmus et al. 2015; Laine and Aila 2017; Tarvainen and Valpola 2017). Semi-supervised extensions of supervised learning algorithms, on the other hand, generally rely on the same assumption as their supervised counterparts. For instance, both supervised and semi-supervised support vector machines rely on the low-density assumption, which states that the decision boundary should lie in a low-density region of the decision space. If a supervised classifier performs well in such cases, it is only natural to use the semi-supervised extension to the algorithm.

As is the case for supervised learning algorithms, no method has yet been discovered to determine a priori what learning method is best-suited for any particular problem. What is more, it is impossible to guarantee that the introduction of unlabelled data will not degrade performance. Such performance degradation has been observed in practice, and its prevalence is likely under-reported due to publication bias (Zhu 2008). The problem of potential performance degradation has been identified in multiple studies (Zhu 2008; Chapelle et al. 2006b; Singh et al. 2009; Li and Zhou 2015; Oliver et al. 2018), but remains difficult to address. It is particularly relevant in scenarios where good performance can be achieved with purely supervised classifiers. In those cases, the potential performance degradation is much larger than the potential performance gain.

The main takeaway from these observations is that semi-supervised learning should not be seen as a guaranteed way of achieving improved prediction performance by the mere introduction of unlabelled data. Rather, it should be treated as another direction in the process of finding and configuring a learning algorithm for the task at hand. Semi-supervised learning procedures should be part of the suite of algorithms considered for use in a particular application scenario, and a combination of theoretical analysis (where possible) and empirical evaluation should be used to choose an approach that is well suited to the given situation.

2.4 Empirical evaluation of semi-supervised learning methods

When evaluating and comparing machine learning algorithms, a multitude of decisions influence the relative performance of different algorithms. In supervised learning, these include the selection of data sets, the partitioning of those data sets into training, validation and test sets, and the extent to which hyperparameters are tuned. In semi-supervised learning, additional factors come into play. First, in many benchmarking scenarios, a decision has to be made which data points should be labelled and which should remain unlabelled. Second, one can choose to evaluate the performance of the learner on the unlabelled data used for training (which is by definition the case in transductive learning), or on a completely disjoint test set. Additionally, it is important to establish high-quality supervised baselines to allow for proper assessment of the added value of the unlabelled data. In practice, excessively limiting the scope of the evaluation can lead to unrealistic perspectives on the performance of the learning algorithms. Recently, Oliver et al. (2018) established a set of guidelines for the realistic evaluation of semi-supervised learning algorithms; several of their recommendations are included here.

In practical use cases, the partitioning of labelled and unlabelled data is typically fixed. In research, data sets used for evaluating semi-supervised learning algorithms are usually obtained by simply removing the labels of a large amount of data points from an existing supervised learning data set. In earlier research, the data sets from the UCI Machine Learning Repository were often used (Dua and Graff 2019). In more recent research on semi-supervised image classification, the CIFAR-10/100 (Krizhevsky 2009) and SVHN (Netzer et al. 2011) data sets have been popular choices. Additionally, two-dimensional toy datasets are sometimes used to demonstrate the viability of a new approach. Typically, these toy data sets consist of an input distribution where data points from each class are concentrated along a one-dimensional manifold. For instance, the popular half-moon data set consists of data points drawn from two interleaved half circles, each associated with a different class.

As has been observed in practice, the choice of data sets and their partitioning can have significant impact on the relative performance of different learning algorithms (see, e.g. Chapelle et al. 2006b; Triguero et al. 2015). Some algorithms may work well when the amount of labelled data is limited and perform poorly when more labelled data is available; others may excel on particular types of data sets but not on others. To provide a realistic evaluation of semi-supervised learning algorithms, researchers should thus evaluate their algorithms on a diverse suite of data sets with different quantities of labelled and unlabelled data.

In addition to the choice of data sets and their partitioning, it is important that a strong baseline is chosen when evaluating the performance of a semi-supervised learning method. After all, it is not particularly relevant to practitioners whether the introduction of unlabelled data improves the performance of any particular learning algorithm. Rather, the central question is: does the introduction of unlabelled data yield a learner that is better than any other learner—be it supervised or semi-supervised. As pointed out by Oliver et al. (2018), this calls for the inclusion of state-of-the-art, properly tuned supervised baselines when evaluating the performance of semi-supervised learning algorithms.

Several studies have independently evaluated the performance of different semi-supervised learning methods on various data sets. Chapelle et al. (2006b) empirically compared eleven diverse semi-supervised learning algorithms, using supervised support vector machines and k-nearest neighbours as their baseline. They included semi-supervised support vector machines, label propagation and manifold regularization techniques, applying hyperparameter optimization for each algorithm. Comparing the performance of the algorithms on eight different data sets, the authors found that no algorithm uniformly outperformed the others. Substantial performance improvements over the baselines were observed on some data sets, while performance was found to be degraded on others. Relative performance also varied with the amount of unlabelled data.

Oliver et al. (2018) compared several semi-supervised neural networks, including the mean teacher model, virtual adversarial training and a wrapper method called pseudo-label, on two image classification problems. They reported substantial performance improvements for most of the algorithms, and observed that the error rates typically declined as more unlabelled data points were added (without removing any labelled data points). Performance degradations were observed only when there was a mismatch between the classes present in the labelled data and the classes present in the unlabelled data. These results are promising indeed: they indicate that, in image classification tasks, unlabelled data can be employed by neural networks to consistently improve performance. It is an interesting avenue for future research to investigate whether these consistent performance improvements can also be obtained for other types of data. Furthermore, it is an open question whether the assumptions underlying these semi-supervised neural networks could be exploited to consistently improve the performance of other learning methods.

3 Taxonomy of semi-supervised learning methods

Over the past two decades, a broad variety of semi-supervised classification algorithms has been proposed. These methods differ in the semi-supervised learning assumptions they are based on, in how they make use of unlabelled data, and in the way they relate to supervised algorithms. Existing categorizations of semi-supervised learning methods generally use a subset of these properties and are typically relatively flat, thereby failing to capture similarities between different groups of methods. Furthermore, the categorizations are often fine-tuned towards existing work, making them less suited for the inclusion of new approaches.

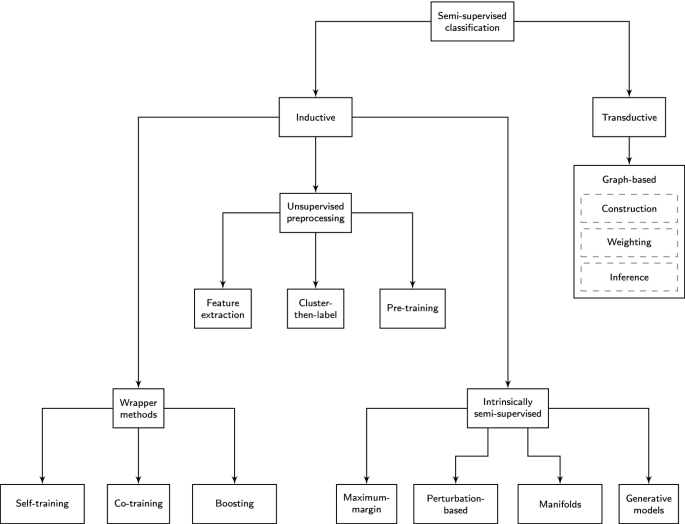

Visualization of the semi-supervised classification taxonomy. Each leaf in the taxonomy corresponds to a specific type of approach to incorporating unlabelled data into classification methods. In the leaf corresponding to transductive, graph-based methods, the dashed boxes represent distinct phases of the graph-based classification process, each of which has a multitude of variations

In this survey, we propose a new way to represent the spectrum of semi-supervised classification algorithms. We attempt to group them in a clear, future-proof way, allowing researchers and practitioners alike to gain insight into the way semi-supervised learning methods relate to each other, to existing supervised learning methods, and to the semi-supervised learning assumptions. The taxonomy is visualized in Fig. 3. At the highest level, it distinguishes between inductive and transductive methods, which give rise to distinct optimization procedures: the former attempt to find a classification model, whereas the latter are solely concerned with obtaining label predictions for the given unlabelled data points. At the second level, it considers the way the semi-supervised learning methods incorporate unlabelled data. This distinction gives rise to three distinct classes of inductive methods, each of which is related to supervised classifiers in a different way.

The first distinction we make in our taxonomy, between inductive and transductive methods, is common in the literature on semi-supervised learning (see, e.g. Chapelle et al. 2006b; Zhu 2008; Zhu and Goldberg 2009). The former, like supervised learning methods, yield a classification model that can be used to predict the label of previously unseen data points. The latter do not yield such a model, but instead directly provide predictions. In other words, given a data set consisting of labelled and unlabelled data, \(X_L, X_U \subseteq \mathcal {X}\), with labels \(\mathbf {y}_L \in \mathcal {Y}^l\) for the l labelled data points, inductive methods yield a model \(f: \mathcal {X} \mapsto \mathcal {Y}\), whereas transductive methods produce predicted labels \(\hat{\mathbf {y}}_U\) for the unlabelled data points in \(X_U\). Accordingly, inductive methods involve optimization over prediction models, whereas transductive methods optimize directly over the predictions \(\hat{\mathbf {y}}_U\).

Inductive methods, which generally extend supervised algorithms to include unlabelled data, are further differentiated in our taxonomy based on the way they incorporate unlabelled data: either in a preprocessing step, directly inside the objective function, or via a pseudo-labelling step. The transductive methods are in all cases graph-based; we group these based on the choices made in different stages of the learning process. In the remainder of this section, we will elaborate on the grouping of semi-supervised learning methods represented in the taxonomy, which forms the basis for our discussion of semi-supervised learning methods in the remainder of this survey.

3.1 Inductive methods

Inductive methods aim to construct a classifier that can generate predictions for any object in the input space. Unlabelled data may be used when training this classifier, but the predictions for multiple new, previously unseen examples are independent of each other once training has been completed. This corresponds to the objective in supervised learning methods: a model is built in the training phase and can then be used for predicting the labels of new data points.

3.1.1 Wrapper methods

A simple approach to extending existing, supervised algorithms to the semi-supervised setting is to first train classifiers on labelled data, and to then use the predictions of the resulting classifiers to generate additional labelled data. The classifiers can then be re-trained on this pseudo-labelled data in addition to the existing labelled data. Such methods are known as wrapper methods: the unlabelled data is pseudo-labelled by a wrapper procedure, and a purely supervised learning algorithm, unaware of the distinction between originally labelled and pseudo-labelled data, constructs the final inductive classifier. This reveals a key property of wrapper methods: most of them can be applied to any given supervised base learner, allowing unlabelled data to be introduced in a straightforward manner. Wrapper methods form the first part of the inductive side of the taxonomy, and are covered in Sect. 4.

3.1.2 Unsupervised preprocessing

Secondly, we consider unsupervised preprocessing methods, which either extract useful features from the unlabelled data, pre-cluster the data, or determine the initial parameters of a supervised learning procedure in an unsupervised manner. Like wrapper methods, they can be used with any supervised classifier. However, unlike wrapper methods, the supervised classifier is only provided with originally labelled data points. These methods are covered in Sect. 5.

3.1.3 Intrinsically semi-supervised methods

The last class of inductive methods we consider directly incorporate unlabelled data into the objective function or optimization procedure of the learning method. Many of these methods are direct extensions of supervised learning methods to the semi-supervised setting: they extend the objective function of the supervised classifier to include unlabelled data. Semi-supervised support vector machines (S3VMs), for example, extend supervised SVMs by maximizing the margin not only on labelled, but also on unlabelled data. There are intrinsically semi-supervised extensions of many prominent supervised learning approaches, including SVMs, Gaussian processes and neural networks, and we describe these in Sect. 6. We further group the methods inside this category based on the semi-supervised learning assumptions on which they rely.

3.2 Transductive methods

Unlike inductive methods, transductive methods do not construct a classifier for the entire input space. Instead, their predictive power is limited to exactly those objects that it encounters during the training phase. Therefore, transductive methods have no distinct training and testing phases. Since supervised learning methods are by definition not supplied with unlabelled data until the testing phase, no clear analogies of transductive algorithms exist in supervised learning.

Since no model of the input space exists in transductive learners, information has to be propagated via direct connections between data points. This observation naturally gives rise to a graph-based approach to transductive methods: if a graph can be defined in which similar data points are connected, information can then be propagated along the edges of this graph. In practice, all transductive methods we discuss are either explicitly graph-based or can implicitly be understood as such. We note that inductive graph-based methods also exist; we cover them in Sect. 6.3. Inductive as well as transductive graph-based methods are typically premised on the manifold assumption: the graphs, constructed based on the local similarity between data points, provide a lower-dimensional representation of the potentially high-dimensional input data.

Transductive graph-based methods generally consist of three steps: graph construction, graph weighting and inference. In the first step, the set of objects, X, is used to construct a graph where each node represents a data point and pairwise similar data points are connected by an edge. In the second step, these edges are weighted to represent the extent of the pairwise similarity between the respective data points. In the third step, the graph is used to assign labels to the unlabelled data points. Different methods for carrying out these three steps are discussed in detail in Sect. 7.

4 Wrapper methods

Wrapper methods are among the oldest and most widely known algorithms for semi-supervised learning (Zhu 2008). They utilize one or more supervised base learners and iteratively train these with the original labelled data as well as previously unlabelled data that is augmented with predictions from earlier iterations of the learners. The latter is commonly referred to as pseudo-labelled data. The procedure usually consists of two alternating steps of training and pseudo-labelling. In the training step, one or more supervised classifiers are trained on the labelled data and, possibly, pseudo-labelled data from previous iterations. In the pseudo-labelling step, the resulting classifiers are used to infer labels for the previously unlabelled objects; the data points for which the learners were most confident of their predictions are pseudo-labelled for use in the next iteration.

A significant advantage of wrapper methods is that they can be used with virtually any supervised base learner. The supervised base learner can be entirely unaware of the wrapper method, which simply passes pseudo-labelled samples to the base learner as if they were regular labelled samples. Although some wrapper methods require the base learner to provide probabilistic predictions, many wrapper methods relying on multiple base learners do not. For any particular wrapper method, the semi-supervised learning assumptions underlying it are dependent on the base learners that are used. In that sense, a wrapper method cannot be considered a learning method on its own: it only becomes a complete learning method when it is combined with a particular set of base learners.

A comprehensive survey of wrapper methods was published recently by Triguero et al. (2015). In addition to providing an overview of such methods, they also proposed a categorization and taxonomy of wrapper methods, which is based on (1) how many classifiers are used, (2) whether different types of classifiers are used, and (3) whether they use single-view or multi-view data (i.e. whether the data is split into multiple feature subsets). This taxonomy provides valuable insight into the space of wrapper methods.

We present a less complex taxonomy, focused on the three relatively independent types of wrapper methods that have been studied in the literature. Firstly, we consider self-training, which uses one supervised classifier that is iteratively re-trained on its own most confident predictions. Secondly, we consider co-training, an extension of self-training to multiple classifiers that are iteratively re-trained on each other’s most confident predictions. The classifiers are supposed to be sufficiently diverse, which is usually achieved by operating on different subsets of the given objects or features. Lastly, we consider pseudo-labelled boosting methods. Like traditional boosting methods, they build a classifier ensemble by constructing individual classifiers sequentially, where each individual classifier is trained on both labelled data and the most confident predictions of the previous classifiers on unlabelled data.

4.1 Self-training

Self-training methods (sometimes also called “self-learning” methods) are the most basic of pseudo-labelling approaches (Triguero et al. 2015). They consist of a single supervised classifier that is iteratively trained on both labelled data and data that has been pseudo-labelled in previous iterations of the algorithm.

At the beginning of the self-training procedure, a supervised classifier is trained on only the labelled data. The resulting classifier is used to obtain predictions for the unlabelled data points. Then, the most confident of these predictions are added to the labelled data set, and the supervised classifier is re-trained on both the original labelled data and the newly obtained pseudo-labelled data. This procedure is typically iterated until no more unlabelled data remain.

Self-training was first proposed by Yarowsky (1995) as an approach to word sense disambiguation in text documents, predicting the meaning of words based on their context. Since then, several applications and variations of self-training have been put forward. For instance, Rosenberg et al. (2005) applied self-training to object detection problems, and showed improved performance over a state-of-the-art (at that time) object detection model. Dópido et al. (2013) developed a self-training approach for hyperspectral image classification. They used domain knowledge to select a set of candidate unlabelled samples, and pseudo-labelled the most informative of these samples with the predictions made by the trained classifier.

The self-training paradigm admits a multitude of design decisions, including the selection of data to pseudo-label, the re-use of pseudo-labelled data in later iterations of the algorithm, and stopping criteria (see, e.g. Rosenberg et al. 2005; Triguero et al. 2015). The selection procedure for data to be pseudo-labelled is of particular importance, since it determines which data end up in the training set for the classifier. In typical self-training settings, where this selection is made based on prediction confidence, the quality of the confidence estimates significantly influences algorithm performance. In particular, the ranking of prediction probabilities for the unlabelled samples should reflect the true confidence ranking.

If well-calibrated probabilistic predictions are available, the respective probabilities can be used directly. In this case, the self-training approach is iterative and not incremental, as label probabilities for unlabelled data points are re-estimated in each step. In that case, the approach becomes similar to expectation-maximization (EM; Dempster et al. 1977). It has been particularly well studied in the context of naïve Bayes classifiers, which are inherently probabilistic (Nigam and Ghani 2000; Nigam et al. 2000, 2006). Wu et al. (2012b) recently applied semi-supervised EM with a naïve Bayes classifier to the problem of detecting fake product reviews on e-commerce websites.

Algorithms that do not natively support robust probabilistic predictions may require adaptations to benefit from self-training. Decision trees are a prime example of this: without any modifications or pruning, prediction probability estimates, which are generally calculated from the fraction of samples in a leaf with a certain label, are generally of low quality. This can be mainly attributed to the fact that most decision tree learning algorithms explicitly attempt to minimize the impurity in tree nodes, thereby encouraging small leaves and highly biased probability estimates (Provost and Domingos 2003). Tanha et al. (2017) attempted to overcome this problem in two distinct ways. Firstly, they applied several existing methods, such as grafting and Laplace correction, to directly improve prediction probability estimates. Secondly, they used a local distance-based measure to determine the confidence ranking between instances: the prediction confidence of an unlabelled data point is based on the absolute difference in the Mahalanobis distances between that point and the labelled data from each class. They showed improvements in performance of both decision trees and random forests (ensembles of decision trees) using this method (Tanha et al. 2017).

Leistner et al. (2009) also utilized self-training to improve random forests. Instead of labelling the unlabelled data \(\mathbf {x} \in X_U\) with the label predicted to be most likely, they pseudo-label each unlabelled data point independently for each tree according to the estimated posterior distribution \(p(y|\mathbf {x})\). Furthermore, they proposed a stopping criterion based on the out-of-bag-error: when the out-of-bag-error (which is an unbiased estimate of the generalization error) increases, training is stopped.

The base learners in self-training are by definition agnostic to the presence of the wrapper method. Consequently, they have to be completely re-trained in each self-training iteration. However, when a classifier can be trained incrementally (i.e. optimizing the objective function over individual data points or subsets of the given data), an iterative pseudo-labelling approach similar to self-training can be applied. Instead of re-training the entire algorithm in each iteration, data points can be pseudo-labelled throughout the training process. This approach was applied to neural networks by Lee (2013), who proposed the pseudo-label approach. Since the pseudo-labels predicted in the earlier training stages are generally less reliable, the weight of the pseudo-labelled data is increased over time. The pseudo-label approach exhibits clear similarities to self-training, but differs in the sense that the classifier is not re-trained after each pseudo-labelling step: instead, it is fine-tuned with new pseudo-labelled data, and therefore technically deviates from the wrapper method paradigm.

Limited studies regarding the theoretical properties of self-training algorithms exist. Haffari and Sarkar (2007) performed a theoretical analysis of several variants of self-training and showed a connection with graph-based methods. Culp and Michailidis (2008) analyzed the convergence properties of a variant of self-training with several base learners, and considered the connection to graph-based methods as well.

4.2 Co-training

Co-training is an extension of self-training to multiple supervised classifiers. In co-training, two or more supervised classifiers are iteratively trained on the labelled data, adding their most confident predictions to the labelled data set of the other supervised classifiers in each iteration. For co-training to succeed, it is important that the base learners are not too strongly correlated in their predictions. If they are, their potential to provide each other with useful information is limited. In the literature, this condition is usually referred to as the diversity criterion (Wang and Zhou 2010). Zhou and Li (2010) provided a survey of semi-supervised learning methods relying on multiple base learners. They jointly refer to these methods as disagreement-based methods, referring to the observation that co-training approaches exploit disagreements between multiple learners: they exchange information through unlabelled data, for which different learners predict different labels.

To promote classifier diversity, earlier co-training approaches mainly relied on the existence of multiple different views of the data, which generally correspond to distinct subsets of the feature set. For instance, when handling video data, the data can be naturally decomposed into visual and audio data. Such co-training methods belong to the broader class of multi-view learning approaches, which includes a broad range of supervised learning algorithms as well. A comprehensive survey of multi-view learning was produced by Xu et al. (2013). We cover multi-view co-training methods in Sect. 4.2.1. In many real-world problem scenarios, no distinct views of the data are known a priori. Single-view co-training methods address this problem either by automatically splitting the data into different views, or by promoting diversity in the learning algorithms themselves; we cover these methods in Sect. 4.2.2. We also briefly discuss co-regularization methods, in which multiple classifiers are combined into a single objective function, in Sect. 4.2.3.

4.2.1 Multi-view co-training

The basic form of co-training was proposed by Blum and Mitchell (1998). In their seminal paper, they proposed to construct two classifiers that are trained on two distinct views, i.e. subsets of features, of the given data. After each training step, the most confident predictions for each view are added to the set of labelled data for the other view. Blum and Mitchell applied the co-training algorithm to the classification for university web pages, using the web page text and the anchor text in links to the web page from external sources as two distinct views. This algorithm and variants thereof have been successfully applied in several fields, most notably natural language processing (Kiritchenko and Matwin 2001; Mihalcea 2004; Wan 2009).

The original co-training algorithm by Blum and Mitchell (1998) relies on two main assumptions to succeed: (1) each individual subset of features should be sufficient to obtain good predictions on the given data set, and (2) the subsets of features should be conditionally independent given the class label. The first assumption can be understood trivially: if one of the two feature subsets is insufficient to form good predictions, a classifier using that set can never contribute positively to the overall performance of the combined approach. The second assumption is related to the diversity criterion: if the feature subsets are conditionally independent given the class label, the predictions of the individual classifiers are unlikely to be strongly correlated. Formally, for any data point \(\mathbf {x}_i = \mathbf {x}^{(1)}_i \times \mathbf {x}^{(2)}_i\), decomposed into \(\mathbf {x}^{(1)}_i\) and \(\mathbf {x}^{(2)}_i\) for the first and second feature subset, respectively, the conditional independence assumption amounts to \(p(\mathbf {x}^{(1)}_i | \mathbf {x}^{(2)}_i, y_i) = p(\mathbf {x}^{(1)}_i | y_i)\). Dasgupta et al. (2002) showed that, under the previously mentioned assumptions, generalization error can be decreased by promoting agreement among the individual learners.

In practice, the second assumption is generally not satisfied: even if a natural split of features exists, such as in the experimental setup used by Blum and Mitchell (1998), it is unlikely that information contained in one view provides no information about the other view when conditioned on the class label (Du et al. 2011). Considering the university web page classification example, the anchor text of a link to a web page can indeed be expected to contain clues towards the content of the web page, even if it is known that the web page is classified as a faculty member’s home page. For example, if the link’s anchor text is “Dean of the Engineering Faculty”, one is more likely to find information about the dean of the engineering faculty than about any other person in the text of that page. Thus, several alternatives to this assumption have been considered.

Abney (2002) showed that a weak independence assumption is sufficient for successful co-training. Balcan et al. (2005) further relaxed the conditional independence assumption, showing that a much weaker assumption, which they dub the expansion assumption, is sufficient and to some extent necessary. The expansion assumption states that the two views are not highly correlated, and that individual classifiers never confidently make incorrect predictions.

Du et al. (2011) studied empirical methods to determine to what degree the sufficiency and independence assumptions hold. They proposed several methods for automatically splitting the feature set into two views, and showed that the resulting empirical independence and sufficiency is positively correlated with the performance of the co-trained algorithm, indicating that feature splits optimizing sufficiency and independence lead to good classifiers.

4.2.2 Single-view co-training

As shown by Du et al. (2011), co-training can be successful even when no natural split in a given feature set is known a priori. This observation is echoed throughout the literature on co-training, and many different approaches to applying co-training in this so-called single-view setting exist.

Chen et al. (2011) attempted to alleviate the need for pre-defined disjoint feature sets by automatically splitting the feature set in each co-training iteration. They formulated a single optimization problem closely related to co-training, incorporating both the requirement that the feature sets should be disjoint and the expansion property of Balcan et al. (2005). They showed promising results for this approach on a partially synthetic data set, where multiple views of each data point are automatically generated. Wang and Zhou (2010) reasoned about sufficient and necessary conditions for co-training to succeed, approaching co-training from a graph-based perspective, where label propagation is alternately applied to each learner. A downside of this approach is that, although inspired by co-training, it cannot be applied to an arbitrary supervised learning algorithm without modification: the operations resembling co-training are embedded in the objective function, which is optimized directly.

Several techniques have been proposed for splitting single-view data sets into multiple views. For instance, Wang et al. (2008b) suggested to generate k random projections of the data, and use these as the views for k different classifiers. Zhang and Zheng (2009) proposed to project the data onto a lower-dimensional subspace using principal component analysis and to construct the pseudo-views by greedily selecting the transformed features with maximal variance. Yaslan and Cataltepe (2010) do not transform the data to a different basis, but select the features for each view iteratively, with preference given to features with high mutual information with respect to the given labels.

Further approaches to apply algorithms resembling co-training to data sets where no explicit views are available focus on other ways of introducing diversity among the classifiers. For example, one can use different hyperparameters for the supervised algorithms (Wang and Zhou 2007; Zhou and Li 2005a), or use different algorithms altogether (Goldman and Zhou 2000; Xu et al. 2012; Zhou and Goldman 2004). Wang and Zhou (2007) provided both theoretical and empirical analyses on why co-training can work in single-view settings. They showed that the diversity between the learners is positively correlated with their joint performance. Zhou and Li (2005b) proposed tri-training, where three classifiers are alternately trained. When two of the three classifiers agree on their prediction for a given data point, that data point is passed to the other classifier along with the respective label. Crucially, tri-training does not rely on probabilistic predictions of individual classifiers, and can thus be applied to a much broader range of supervised learning algorithms.

The authors of the tri-training approach proposed to extend it to more than three learners—notably, to random forests (Li and Zhou 2007). The approach, known as co-forest, starts by training the decision trees independently on all labelled data. Then, in each iteration, each classifier receives pseudo-labelled data based on the joint prediction of all other classifiers on the unlabelled data: if the fraction of classifiers predicting a class \(\hat{y}_i\) for an unlabelled data point \(\mathbf {x}_i\) exceeds a certain threshold, the pseudo-labelled data point \((\mathbf {x}_i, y_i)\) is passed to the classifier. The decision trees are then all re-trained on their labelled and pseudo-labelled data. In the next iteration, all previously pseudo-labelled data is treated as unlabelled again. We note that, as the number of trees approaches infinity, this approach becomes a form of self-training.

Co-forest includes a mechanism for reducing the influence of possibly mislabelled data points in the pseudo-labelling step by weighting the newly labelled data based on prediction confidence. Deng and Zu Guo (2011) attempted to further prevent the influence of possibly mislabelled data points by removing “suspicious” pseudo-labellings. After each pseudo-labelling step, the prediction for each pseudo-labelled data point \(\mathbf {x}_i\) is compared to the (pseudo-)labels of its k nearest neighbours (both labelled and pseudo-labelled); in case of a mismatch, the pseudo-label is removed from \(\mathbf {x}_i\).

We note that in existing literature concerning co-forest, the size of the forest has always been limited to six trees. It has been empirically shown that, in supervised random forests, performance can substantially improve as the number of trees is increased (Oshiro et al. 2012). Therefore, it is likely that increasing the number of trees in co-forest will substantially affect relative performance compared to random forests.

4.2.3 Co-regularization

Co-training methods reduce disagreement between classifiers by passing information between them, in the form of pseudo-labelled data. Furthermore, the implicit objective of co-training is to minimize the error rate of the ensemble of classifiers. Sindhwani et al. proposed to make these properties explicit in a single objective function (Sindhwani et al. 2005; Sindhwani and Rosenberg 2008). They propose co-regularization, a regularization framework in which both the ensemble quality and the disagreement between base learners are simultaneously optimized. The key idea is to use an objective function comprised of two terms: one that penalizes incorrect predictions made by the ensemble, and another that directly penalizes different predictions of the base classifiers. To handle per-view noise within this framework, Yu et al. (2011) introduced Bayesian co-training, which uses a graphical model for combining data from multiple views and a kernel-based method for co-regularization. This model was extended to handle different noise levels per data point by Christoudias et al. (2009).

Co-training can be seen as a greedy optimization strategy for the co-regularization objective. The two components of the objective function are minimized in an alternating fashion: the prediction error of the ensemble is minimized by training the base learners independently, and the disagreement between classifiers is minimized by propagating predictions from one classifier to the others as if they were ground truth. We note, however, that the general co-regularization objective does not have to be optimized using a wrapper method, and many co-regularization algorithms use different approaches (see, e.g. Sindhwani and Rosenberg 2008; Yu et al. 2011).

4.3 Boosting

Ensemble classifiers consist of multiple base classifiers, which are trained and then used to form combined predictions (Zhou 2012). The simplest form of ensemble learning trains k base classifiers independently and aggregates their predictions. Beyond this simplistic approach, two main branches of supervised ensemble learning exist: bagging and boosting (Zhou 2012). In bagging methods, each base learner is provided with a set of l data points, which are sampled, uniformly at random with replacement, from the original data set (bootstrapping). The base classifiers are trained independently. When training is completed, their outputs are aggregated to form the prediction of the ensemble. In boosting methods, on the other hand, each base learner is dependent on the previous base learners: it is provided with the full data set, but with weights applied to the data points. The weight of a data point \(x_i\) is based on the performance of the previous base learners on \(x_i\), such that larger weights get assigned to data points that were incorrectly classified. The final prediction is obtained as a linear combination of the predictions of the base classifiers.

Technically, boosting methods construct a weighted ensemble of classifiers \(h_t\) in a greedy fashion. Let \(F_{T-1}(\mathbf {x}) = \sum _{t=1}^{T-1} \alpha _t \cdot h_t(\mathbf {x})\) denote the ensemble of classifiers \(h_t\) with weight \(\alpha _t\) at time \(T-1\). Furthermore, let \(\ell (\hat{y}, y)\) denote the loss function for predicting label \(\hat{y}\) for a data point with true label y. In each iteration of the algorithm, an additional classifier \(h_T\) is added to the ensemble with a certain weight \(\alpha _T\), such that the cost function

is minimized. Note that, at time T, the ensemble \(F_{T-1}\) is fixed. With particular choices of loss functions, such as \(\ell (\hat{y},y) = \exp (-\hat{y} \cdot y)\), the optimization problem yields a weighted classification problem for determining \(h_T\), and allows us to express the optimal \(\alpha _T\) in terms of the loss of \(h_T\) on the training data.

By definition, base learners in bagging methods are trained independently. Therefore, the only truly semi-supervised bagging method would apply self-training to individual base learners. Co-training, however, can be seen to be closely related to bagging methods: the only way classifiers interact is by the exchange of pseudo-labelled data; other than that, the classifiers can be trained independently and simultaneously. However, most co-training methods do not use bootstrapping, a defining characteristic of bagging methods. In boosting, on the other hand, there is an inherent dependency between base learners. Consequently, boosting methods can be readily extended to the semi-supervised setting, by introducing pseudo-labelled data after each learning step; this idea gives rise to the class of semi-supervised boosting methods.

Semi-supervised boosting methods have been studied extensively over the past two decades. The success achieved by supervised boosting methods, such as AdaBoost (Freund and Schapire 1997), gradient boosting, and XGBoost (Chen and Guestrin 2016), provides ample motivation for bringing boosting to the semi-supervised setting. Furthermore, the pseudo-labelling approach of self-training and co-training can be easily extended to boosting methods.

4.3.1 SSMBoost

The first effort towards semi-supervised boosting methods was made by Grandvalet et al., who extended AdaBoost to the semi-supervised setting. They proposed a semi-supervised boosting algorithm (Grandvalet et al. 2001), which they later extended and motivated from the perspective of gradient boosting (d’Alché Buc et al. 2002). A loss function is defined for unlabelled data, based on the predictions of the current ensemble and on the predictions of the base learner under construction. Experiments were conducted with multiple loss functions; the authors reported the strongest results using the expected loss of the new, combined classifier. The weighted error \(\epsilon _t\) for base classifier \(h_t\) is thus adapted to include the unlabelled data points, causing the weight term \(\alpha _t\) to depend on the unlabelled data as well.

Crucially, SSMBoost does not assign pseudo-labels to the unlabelled data points. As a result, it requires semi-supervised base learners to make use of the unlabelled data and is therefore intrinsically semi-supervised, in contrast to most other semi-supervised boosting algorithms, which are wrapper methods. Nevertheless, SSMBoost is included here, because it forms the foundation for all other forms of semi-supervised boosting algorithms, which do not require semi-supervised base learners.

4.3.2 ASSEMBLE

The ASSEMBLE algorithm, short for Adaptive Supervised Ensemble, pseudo-labels the unlabelled data points after each iteration, and uses these pseudo-labelled data points in the construction of the next classifier, thus alleviating the need for semi-supervised base learners (Bennett et al. 2002). As shown by its authors, ASSEMBLE effectively maximizes the classification margin in function space.

Since pseudo-labels are used in ASSEMBLE, it is not trivial to decide which unlabelled data points to pass to the next base learner. Bennett et al. (2002) proposed to use bootstrapping—i.e. sampling, uniformly at random, with replacement, l data points from the \(l + u\) labelled and unlabelled data points.

4.3.3 SemiBoost

The semi-supervised boosting algorithm SemiBoost addresses the problem of selecting data points to be used by the base learners by relying on the manifold assumption, utilizing principles from graph-based methods (Mallapragada et al. 2009). Each unlabelled data point is assigned a pseudo-label, and the corresponding prediction confidence is calculated based on a predefined neighbourhood graph that encodes similarity between data points. Then, a subset of these pseudo-labelled data points is added to the set of labelled data points for training the next base learner. The probability of a sample being selected for this subset is proportional to its prediction confidence. SemiBoost was successfully applied to object tracking in videos by Grabner et al. (2008).

SemiBoost uses the standard boosting classification model, expressing the final label prediction as a linear combination of the predictions of the individual learners. Its cost function, however, is highly dissimilar from the previously described semi-supervised boosting methods. Mallapragada et al. (2009) argue that a successful labelling of the test data should conform to the following three requirements. Firstly, the predicted labels of the unlabelled data should be consistent for unlabelled data points that are close to each other. Secondly, the predicted labels of the unlabelled data should be consistent with the labels of nearby labelled data points. And, thirdly, the predicted labels for the labelled data points should correspond to their true labels. These requirements are expressed in the form of a constrained optimization problem, where the first two are captured by the objective function, and the last is imposed as a constraint. In other words, the SemiBoost algorithm uses boosting to solve the optimization problem

where \(\mathcal {L}_U\) and \(\mathcal {L}_L\) are the cost functions expressing the inconsistency across the unlabelled and the combined labelled and unlabelled data, respectively, and \(\lambda \in \mathbb {R}\) is a constant governing the relative weight of the cost terms; A is an \(n \times n\) symmetric matrix denoting the pairwise similarities between data points. Lastly, \(F_T\) denotes the joint prediction function of the ensemble of classifiers at time T. We note that the optimization objective in Eq. 1 is very similar to the cost functions encountered in graph-based methods (see Sects. 6.3 and 7) in that it favours classifiers that consistently label data points on the same manifold. In graph-based methods, however, no distinction is generally made between labelled-unlabelled and unlabelled-unlabelled pairs.

4.3.4 Other semi-supervised boosting methods

The three previously discussed methods form the core of semi-supervised boosting research. Further work in the area includes RegBoost, which, like SemiBoost, includes local label consistency in its objective function (Chen and Wang 2011). In RegBoost, this term is also dependent on the estimated local density of the marginal distribution p(x). Several attempts have been made to extend the label consistency regularization to the multiclass setting (Tanha et al. 2012; Valizadegan et al. 2008).

5 Unsupervised preprocessing

We now turn to a second category of inductive methods, known as as unsupervised preprocessing, which, unlike wrapper methods and intrinsically semi-supervised methods, use the unlabelled data and labelled data in two separate stages. Typically, the unsupervised stage comprises either the automated extraction or transformation of sample features from the unlabelled data (feature extraction), the unsupervised clustering of the data (cluster-then-label), or the initialization of the parameters of the learning procedure (pre-training).

5.1 Feature extraction

Since the early days of machine learning, feature extraction has played an important role in the construction of classifiers. Feature extraction methods attempt to find a transformation of the input data such that the performance of the classifier improves or such that its construction becomes computationally more efficient. Feature extraction is an expansive research topic that has been covered by several books and surveys. We focus on a small number of particularly prominent techniques and refer the reader to the existing literature on feature extraction methods for further information (see, e.g. Guyon and Elisseeff 2006; Sheikhpour et al. 2017).

Many feature extraction methods operate without supervision, i.e. without taking into account labels. Principal component analysis, for example, transforms the input data to a different basis, such that they are linearly uncorrelated, and orders the principal components based on their variance (Wold et al. 1987). Other traditional feature extraction algorithms operate on the labelled data and try to extract features with high predictive power (see, e.g. Guyon and Elisseeff 2006).

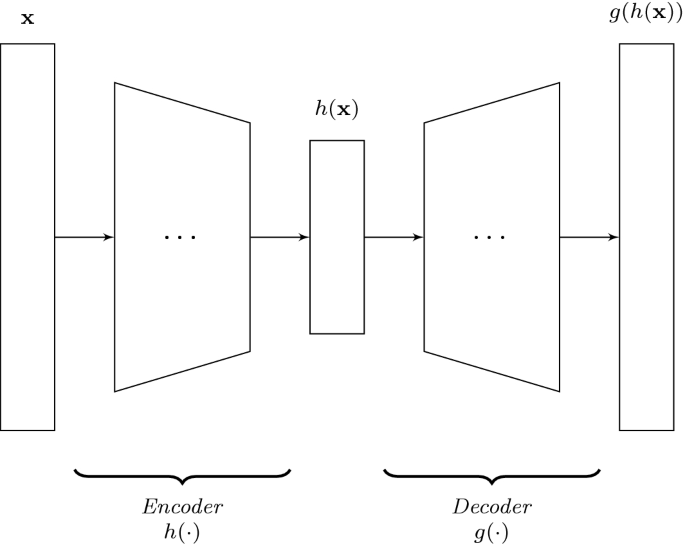

Recent semi-supervised feature extraction methods have mainly been focused on finding latent representations of the input data using deep neural networks (in Sect. 6.2.1, we further discuss neural networks). The most prominent example of this is the autoencoder: a neural network with one or more hidden layers that has the objective of reconstructing its input. By including a hidden layer with relatively few nodes, usually called the representation layer, the network is forced to find a way to compactly represent its input data. Once the network is trained, features are provided by the representation layer. A schematic representation of a standard autoencoder is provided in Fig. 4.

The network can be considered to consist of two parts: the encoder h, which maps an input vector \(\mathbf {x}\) to its latent representation \(h(\mathbf {x})\), and the decoder g, which attempts to map the latent representation back to the original \(\mathbf {x}\). The network is trained by optimizing a loss function penalizing the reconstruction error: a measure of inconsistency between the input \(\mathbf {x}\) and the corresponding reconstruction \(g(h(\mathbf {x}))\). Once the network is trained, the latent representation of any \(\mathbf {x}\) can be found by simply propagating it through the encoder part of the network to obtain \(h(\mathbf {x})\). A popular type of autoencoders is the denoising autoencoder, which is trained on noisy versions of the input data, penalizing the reconstruction error of the reconstructions against the noiseless originals (Vincent et al. 2008). Another variant, the contractive autoencoder, directly penalizes the sensitivity of the autoencoder to perturbations in the input (Rifai et al. 2011b).

Autoencoders attempt to find a lower-dimensional representation of the input space without sacrificing substantial amounts of information. Thus, they inherently act on the assumption that the input space contains lower-dimensional substructures on which the data lie. Furthermore, when applied as a preprocessing step to classification, they assume that two samples on the same lower-dimensional substructure have the same label. These observations indicate that the assumptions underlying autoencoders are closely related to the semi-supervised manifold assumption.

In some domains, data is not inherently represented as a meaningful feature vector. Since many common classification methods require such a representation, feature extraction is a necessity in those cases. The feature extraction step, then, consists of finding an embedding of the given object into a vector space by taking into account the relations between different input objects. Examples of such approaches can be found in natural language processing (Collobert et al. 2011; Mikolov et al. 2013) and network science (Grover and Leskovec 2016; Perozzi et al. 2014; Wang et al. 2016).

5.2 Cluster-then-label

Clustering and classification have traditionally been regarded as relatively disjoint research areas. However, many semi-supervised learning algorithms use principles from clustering to guide the classification process. Cluster-then-label approaches form a group of methods that explicitly join the clustering and classification processes: they first apply an unsupervised or semi-supervised clustering algorithm to all available data, and use the resulting clusters to guide the classification process.

Goldberg et al. (2009) first cluster the labelled data and a subset of the unlabelled data. A classifier is then trained independently for each cluster on the labelled data contained in it. Finally, the unlabelled data points are classified using the classifiers for their respective clusters. In the clustering step, a graph is constructed over the data points using the Hellinger distance; size-constrained spectral clustering is then applied to the resulting graph. Since the clustering is only used to segment the data, after which individual learners are applied to each cluster, the approach supports any supervised base learner.

Demiriz et al. (1999) first cluster the data in a semi-supervised manner, favouring clusters with limited label impurity (i.e. a high degree of consistency in the labels of the data points within a given cluster), and use the resulting clusters in classification. Dara et al. (2002) proposed a more elaborate preprocessing step, applying self-organizing maps (Kohonen 1998) to the labelled data in an iterative fashion. The unlabelled data points are then mapped, yielding a cluster assignment for each of them. If the cluster to which an unlabelled data point \(x_i\) is mapped contains only data points with the same label, that label is also assigned to \(x_i\). This process can be iterated, after which the resulting label assignments can be used to train an inductive classifier (in the work of Dara et al., a multilayer perceptron). We note that this approach can be regarded as a wrapper method (see Sect. 4).

5.3 Pre-training

In pre-training methods, unlabelled data is used to guide the decision boundary towards potentially interesting regions before applying supervised training.

This approach naturally applies to deep learning methods, where each layer of the hierarchical model can be considered a latent representation of the input data. The most commonly known algorithms corresponding to this paradigm are deep belief networks and stacked autoencoders. Both methods are based on artificial neural networks and aim to guide the parameters (weights) of a network towards interesting regions in model space using the unlabelled data, before fine-tuning the parameters with the labelled data.

Pre-training approaches have deep roots in the field of deep learning. Since the early 2000s, neural networks with multiple hidden layers (deep neural networks) have been gaining an increasing amount of attention. However, due to their high number of tunable parameters, training these networks has often been challenging: convergence tended to be slow, and trained networks were prone to poor generalization (Erhan et al. 2010). Early on, these problems were commonly addressed by employing unsupervised pre-training methods. Since then, this has been mostly superseded by the application of weight sharing, regularization methods and different activation functions. Consequently, the work we cover in this section mainly stems from the first decade of the 2000s. However, the underlying principles still apply, and are still used in other methods (such as ladder networks, see Sect. 6.2.2).

Deep belief networks consist of multiple stacked restricted Boltzmann machines (RBMs), which are trained layer-by-layer with unlabelled data in a greedy fashion (Hinton et al. 2006). The resulting weights are then used as the initialization for a deep neural network with the same architecture augmented by an additional output layer, enabling the model to be trained in a supervised manner on the labelled data.

Stacked autoencoders are very similar to deep belief networks, but they use autoencoders as their base models instead of RBMs. The autoencoders are trained layer-by-layer, where the encoding \(h(\mathbf {x})\) produced by each autoencoder is passed as the input to the next autoencoder, which is then trained to reconstruct it with minimal error. Finally, these trained autoencoders are combined, an output layer is added (as in deep belief networks), and the resulting network is trained on the labelled data in a supervised manner. The paradigm works with multiple types of autoencoders, including denoising and contractive autoencoders (Vincent et al. 2008; Rifai et al. 2011b).

Based on an empirical analysis of deep belief networks and stacked autoencoders, Erhan et al. (2010) suggested that unsupervised pre-training guides the neural network model towards regions in model space that provide better generalization. Deep neural networks are often motivated from the perspective that they learn a higher-level representation of the data at each layer. Thus, each layer of the network can be considered to contain a different representation of the input data. Both deep belief networks and stacked autoencoders attempt to guide the model in the extraction of these hierarchical representations, pushing the model towards the extraction of representations that are deemed informative. From that perspective, pre-training methods are closely related to the unsupervised feature extraction methods described earlier: they both use unlabelled data in an attempt to extract meaningful information from the input data. Crucially, however, the parameters used for unsupervised preprocessing can be changed in the supervised fine-tuning phase of pre-training methods, whereas they remain fixed after the unsupervised phase of feature extraction approaches.