Abstract

Trust-region methods have yielded state-of-the-art results in policy search. A common approach is to use the KL-divergence to bound the trust region, resulting in a natural gradient policy update. We show that the natural gradient and trust region optimization are equivalent if we use the natural parameterization of a standard exponential policy distribution in combination with compatible value function approximation. Moreover, we show that standard natural gradient updates may reduce the entropy of the policy according to an unsuitable schedule, leading to premature convergence. To control entropy reduction, we introduce a new policy search method called compatible policy search (COPOS), which bounds entropy loss. The experimental results show that COPOS yields state-of-the-art results in challenging continuous control tasks and in discrete partially observable tasks.



1 Introduction

The natural gradient (Amari 1998) is an integral part of many reinforcement learning (Kakade 2001; Bagnell and Schneider 2003; Peters and Schaal 2008; Geist and Pietquin 2010) and optimization (Wierstra et al. 2008) algorithms. The natural gradient makes gradient updates invariant to affine transformations of the parameter space, and it is also often used to define a trust region for the policy update. The trust region is defined by a bound on the Kullback–Leibler (KL) divergence (Peters et al. 2010; Schulman et al. 2015) between the new and old policy. It is well known that the Fisher information matrix, which is used to compute the natural gradient, yields a second-order approximation of the KL divergence. Such trust-region optimization is common in policy search and has been used successfully to optimize neural network policies.

However, many properties of the natural gradient are still under-explored, such as compatible value function approximation (Sutton et al. 1999) for neural networks, the approximation quality of the KL-divergence, and the online performance of the natural gradient. We analyze the convergence of the natural gradient analytically and empirically. We show that the natural gradient converges slowly unless we add an entropy regularization term. This entropy regularization term results in a new update rule which ensures that the policy loses entropy at the correct pace, leading to convergence to a good policy. We further show that the natural gradient is the optimal (and not an approximate) solution to a trust region optimization problem for log-linear models. This holds if the natural parameters of the distribution are optimized and compatible value function approximation is used.

We analyze compatible value function approximation for neural networks and show that this approximation is composed of two terms: a state value function that is subtracted from a state-action value function. While it is well known that the compatible function approximation represents an advantage function, its exact structure was unclear. We show that, using compatible value function approximation, we can derive algorithms similar to trust-region policy search methods that obtain the policy update in closed form. A summary of our contributions is as follows:

- It is well known that the second-order Taylor approximation to trust-region optimization with a KL-divergence bound leads to an update direction identical to the natural gradient. What has not been known is that, when using the natural parameterization of an exponential policy together with compatible features, we can compute the step size of the natural gradient that solves the trust-region update exactly for the log-linear parameters.

- When an entropy bound is used in addition to the common KL-divergence bound, the compatible features allow us to compute the exact update for the trust-region problem in the log-linear case. For a state-independent covariance, they also allow this in the non-linear case.

- Our new algorithm, Compatible Policy Search (COPOS), is based on the above insights. It outperforms comparison methods in both continuous control and partially observable discrete-action experiments, because entropy control allows for principled exploration.

2 Preliminaries

This section provides the background needed to understand our compatible policy search approach. We first review Markov decision process (MDP) basics and introduce the optimization objective. We then show how trust region methods that use a KL-divergence bound address challenges in updating the policy. Next, we present the classic policy gradient update and introduce the natural gradient and its connection to the KL-divergence bound. Moreover, we introduce compatible value function approximation and connect it to the natural gradient. Finally, we show how to solve the optimization problem that results from adding an entropy bound to control exploration.

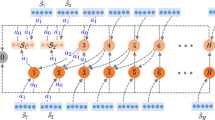

Following standard notation, we denote an infinite-horizon discounted Markov decision process (MDP) by the tuple \((\mathcal {S}, \mathcal {A}, p, r, p_0, \gamma )\), where \(\mathcal {S}\) is a finite set of states and \(\mathcal {A}\) is a finite set of actions. \(p(s_{t+1} | s_t, a_t)\) denotes the probability of moving from state \(s_t\) to \(s_{t+1}\) when the agent executes action \(a_t\) at time step t. We assume that \(p(s_{t+1} | s_t, a_t)\) is stationary and unknown, but that we can sample from it either in simulation or from a physical system. \(p_0(s)\) denotes the initial state distribution, \(\gamma \in (0,1)\) the discount factor, and \(r(s_t,a_t)\) the real-valued reward in state \(s_t\) when the agent executes action \(a_t\). The goal is to find a stationary policy \(\pi (a_t|s_t)\) that maximizes the expected discounted reward \(\mathbb {E}_{s_0, a_0, \ldots } \left[ \sum _{t=0}^{\infty } \gamma ^t r(s_t, a_t) \right] \), where \(s_0 \sim p_0(s)\), \(s_{t+1} \sim p(s_{t+1} | s_t, a_t)\) and \(a_t \sim \pi (a_t| s_t)\). In the following, we use the notation for the continuous case. Here, \(\mathcal {S}\) and \(\mathcal {A}\) denote finite-dimensional real-valued vector spaces, \(\varvec{s}\) denotes the real-valued state vector and \(\varvec{a}\) the real-valued action vector. For discrete states and actions, the integrals below can be replaced by sums.

The expected reward can be defined as (Schulman et al. 2015)

where \(p_{\pi }(\varvec{s})\) denotes a (discounted) state distribution induced by policy \(\pi \) and

denote the state-action value function \(Q^{\pi }(\varvec{s}_t, \varvec{a}_t)\), value function \(V^{\pi }(\varvec{s}_t)\), and advantage function \(A^{\pi }(\varvec{s}_t, \varvec{a}_t)\).
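For reference, the three functions named above have the following standard definitions (as in Schulman et al. 2015). Actions after time t are drawn from \(\pi \) and states from \(p\):

```latex
Q^{\pi}(\varvec{s}_t, \varvec{a}_t) = \mathbb{E}_{\varvec{s}_{t+1}, \varvec{a}_{t+1}, \ldots}\Big[\sum_{h=0}^{\infty} \gamma^{h}\, r(\varvec{s}_{t+h}, \varvec{a}_{t+h})\Big],
\qquad
V^{\pi}(\varvec{s}_t) = \mathbb{E}_{\varvec{a}_t \sim \pi(\cdot \mid \varvec{s}_t)}\big[Q^{\pi}(\varvec{s}_t, \varvec{a}_t)\big],
\qquad
A^{\pi}(\varvec{s}_t, \varvec{a}_t) = Q^{\pi}(\varvec{s}_t, \varvec{a}_t) - V^{\pi}(\varvec{s}_t).
```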

The goal in policy search is to find a policy \(\pi (\varvec{a}| \varvec{s})\) that maximizes Eq. (1). Since maximizing Eq. (1) directly is too challenging, policy search usually computes a new, improved policy \(\pi \) in each iteration, based on samples generated using the old policy \(\pi _{\text {old}}\). However, the estimates for \(p_{\pi }(\varvec{s})\) and \(Q^{\pi }(\varvec{s}, \varvec{a})\) are based on the old policy; that is, \(p_{\pi _{\text {old}}}(\varvec{s})\) and \(Q^{\pi _{\text {old}}}(\varvec{s}, \varvec{a})\) are what is actually used in Eq. (1). They may therefore not be valid for the new policy. A solution is to use trust-region optimization methods, which keep the new policy sufficiently close to the old policy. Trust-region optimization for policy search was first introduced in the relative entropy policy search (REPS) algorithm (Peters et al. 2010). Many variants of this algorithm exist (Akrour et al. 2016; Abdolmaleki et al. 2015; Daniel et al. 2016; Akrour et al. 2018). All these algorithms bound the KL-divergence of the policy update. This keeps the update stable, because the new policy cannot move too far into regions that have not been seen before. Moreover, the bound prevents the policy from becoming too greedy. Trust region policy optimization (TRPO) (Schulman et al. 2015) uses this bound to optimize neural network policies. The policy update can be formulated as finding a policy that maximizes the objective in Eq. (1) under the KL constraint:

where \(Q^{\pi _{\text {old}}}(\varvec{s},\varvec{a})\) denotes the expected discounted future reward (Q-values) of the old policy \(\pi _{\text {old}}\), \(p_{\pi _{\text {old}}}(\varvec{s})\) the (discounted) state distribution under the old policy, and \(\epsilon \) is a constant hyper-parameter. For sufficiently small \(\epsilon \), the Q-value and state distribution estimates generated using the old policy remain valid for the new policy, since the new and old policy are close to each other.
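One common way to write this problem follows REPS-style derivations; the KL direction \(\mathrm{KL}(\pi \,\|\, \pi _{\text {old}})\) is an assumption here. It is given below together with its closed-form solution, obtained with a Lagrange multiplier \(\eta \) for the KL bound and a per-state normalization multiplier:

```latex
\begin{aligned}
&\max_{\pi}\; \mathbb{E}_{p_{\pi_{\text{old}}}(\varvec{s})}\Big[\int \pi(\varvec{a}\mid\varvec{s})\, Q^{\pi_{\text{old}}}(\varvec{s},\varvec{a})\, d\varvec{a}\Big]
\quad \text{s.t.}\quad
\mathbb{E}_{p_{\pi_{\text{old}}}(\varvec{s})}\Big[\mathrm{KL}\big(\pi(\cdot\mid\varvec{s}) \,\|\, \pi_{\text{old}}(\cdot\mid\varvec{s})\big)\Big] \le \epsilon,\\
&\pi(\varvec{a}\mid\varvec{s}) \propto \pi_{\text{old}}(\varvec{a}\mid\varvec{s}) \exp\!\big(Q^{\pi_{\text{old}}}(\varvec{s},\varvec{a}) / \eta\big).
\end{aligned}
```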

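Policy gradient We consider parameterized policies \(\pi _{\varvec{\theta }}(\varvec{a} | \varvec{s})\) with parameter vector \(\varvec{\theta }\). A policy can be improved by modifying the policy parameters in the direction of the policy gradient, which is computed w.r.t. Eq. (1). The “vanilla” policy gradient (Williams 1992; Sutton et al. 1999) obtained by the likelihood ratio trick is given by \(\nabla _{\varvec{\theta }} J = \mathbb {E}_{p_{\pi _{\text {old}}}(\varvec{s}), \pi _{\text {old}}(\varvec{a}|\varvec{s})}\left[ \nabla _{\varvec{\theta }} \log \pi _{\varvec{\theta }}(\varvec{a}|\varvec{s})\, Q^{\pi _{\text {old}}}(\varvec{s},\varvec{a})\right] \).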

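The Q-values can be computed by Monte-Carlo estimates (high variance), that is, \(Q^{\pi _{\text {old}}}(\varvec{s}_t,\varvec{a}_t)\approx \sum _{h = 0}^\infty \gamma ^h r_{t + h}\). Alternatively, they can be estimated by policy evaluation techniques (typically with higher bias). We can further subtract a state-dependent baseline \(V(\varvec{s})\), which can decrease the variance of the gradient estimate while leaving it unbiased, that is, \(\nabla _{\varvec{\theta }} J = \mathbb {E}_{p_{\pi _{\text {old}}}(\varvec{s}), \pi _{\text {old}}(\varvec{a}|\varvec{s})}\left[ \nabla _{\varvec{\theta }} \log \pi _{\varvec{\theta }}(\varvec{a}|\varvec{s}) \big (Q^{\pi _{\text {old}}}(\varvec{s},\varvec{a}) - V(\varvec{s})\big )\right] \).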
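The sketch below illustrates the Monte-Carlo estimate and the baseline subtraction described above. It computes discounted returns \(\sum _h \gamma ^h r_{t+h}\) for one finite trajectory, truncating the infinite sum at the trajectory end (an assumption), and then subtracts a state-dependent baseline. The function names and the baseline values are hypothetical placeholders rather than a learned \(V(\varvec{s})\).

```python
import numpy as np


def discounted_returns(rewards, gamma):
    """Monte-Carlo estimate G_t = sum_h gamma^h r_{t+h}, truncated at the trajectory end."""
    returns = np.zeros(len(rewards))
    running = 0.0
    # Sweep backwards so that G_t = r_t + gamma * G_{t+1} reuses the later return.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def baseline_subtracted(rewards, baseline, gamma):
    """Monte-Carlo Q-value estimates minus a state-dependent baseline V(s_t)."""
    return discounted_returns(rewards, gamma) - np.asarray(baseline, dtype=float)


# Usage on a hypothetical three-step trajectory with hypothetical baseline values.
rewards = np.array([1.0, 0.0, 2.0])
q_hat = discounted_returns(rewards, gamma=0.5)
adv_hat = baseline_subtracted(rewards, baseline=[1.0, 1.0, 1.0], gamma=0.5)
```

The backward sweep costs O(T) per trajectory, whereas summing each return separately would cost O(T²).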

Natural gradient Contrary to the “vanilla” policy gradient, the natural gradient (Amari 1998) method uses the steepest descent direction in a Riemannian manifold. This makes learning effective and helps avoid plateaus. The natural gradient can be obtained by using a second-order Taylor approximation of the KL divergence, that is, \(\mathbb {E}_{p(\varvec{s})}\left[ \text {KL}\big (\pi _{\varvec{\theta }+ \varvec{\alpha }}(\cdot |\varvec{s})|| \pi _{\varvec{\theta }}(\cdot |\varvec{s})\big ) \right] \approx \frac{1}{2}\varvec{\alpha }^T \varvec{F} \varvec{\alpha },\) where \(\varvec{F}\) is the Fisher information matrix (Amari 1998). The natural gradient is then defined as the update direction that is most correlated with the standard policy gradient while keeping the approximate KL bounded, that is,

resulting in

where \(\eta \) is a Lagrange multiplier.
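Here the constraint uses the second-order approximation \(\tfrac{1}{2}\varvec{\alpha }^T\varvec{F}\varvec{\alpha } \le \epsilon \); the factor \(\tfrac{1}{2}\) only rescales \(\eta \). Written out this way, the Lagrangian argument gives the familiar closed form below. Assuming a positive definite \(\varvec{F}\), the explicit value of \(\eta \) follows from making the constraint active:

```latex
\max_{\varvec{\alpha}}\; \varvec{\alpha}^T \nabla_{\varvec{\theta}} J
\quad \text{s.t.} \quad \tfrac{1}{2}\,\varvec{\alpha}^T \varvec{F} \varvec{\alpha} \le \epsilon
\;\;\Longrightarrow\;\;
\nabla_{\varvec{\theta}} J - \eta\, \varvec{F} \varvec{\alpha} = 0
\;\;\Longrightarrow\;\;
\varvec{\alpha} = \eta^{-1} \varvec{F}^{-1} \nabla_{\varvec{\theta}} J,
\qquad
\eta = \sqrt{\frac{\nabla_{\varvec{\theta}} J^T \varvec{F}^{-1} \nabla_{\varvec{\theta}} J}{2\epsilon}}.
```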

Compatible value function approximation It is well known that we can obtain an unbiased gradient with typically smaller variance if compatible value function approximation is used (Sutton et al. 1999). An approximation of the Monte-Carlo estimates \(\tilde{G}^{\pi _\text {old}}_{\varvec{w}}(\varvec{s}, \varvec{a}) = \varvec{\phi }(\varvec{s}, \varvec{a})^T \varvec{w}\) is compatible with the policy \(\pi _{\varvec{\theta }}(\varvec{a}| \varvec{s})\) if the features \(\varvec{\phi }(\varvec{s}, \varvec{a})\) are given by the log gradients of the policy, that is, \(\varvec{\phi }(\varvec{s}, \varvec{a}) = \nabla _{\varvec{\theta }} \log \pi _{\varvec{\theta }}(\varvec{a}| \varvec{s})\). The parameter \(\varvec{w}\) of the approximation \(\tilde{G}^{\pi _\text {old}}_{\varvec{w}}(\varvec{s}, \varvec{a})\) is the solution of the least squares problem

Peters and Schaal (2008) showed that, with compatible value function approximation, the inverse of the Fisher information matrix cancels with the matrix spanned by the compatible features and, hence, \(\nabla _{\varvec{\theta }} J_{\text {NAC}} = \eta ^{-1} \varvec{w}^*\). Another interesting observation concerns what is being approximated. The compatible value function approximation is in fact not an approximation of the Q-function but of the advantage function \(A^{\pi _\text {old}}(\varvec{s}, \varvec{a}) = Q^{\pi _\text {old}}(\varvec{s}, \varvec{a}) - V^{\pi _\text {old}}(\varvec{s})\), as the compatible features always have zero mean. In Sect. 3, we show how, with compatible value function approximation, the natural gradient directly gives an exact solution to the trust region optimization problem. This removes the need to search for the step size that satisfies the KL-divergence bound.
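The sketch below makes the cancellation of the Fisher matrix concrete. It uses the stateless Gaussian \(\pi (a) \propto \exp (-0.5 B a^2 + b a)\), whose compatible features are its sufficient statistics \((-0.5a^2, a)\) minus their mean. The code fits \(\varvec{w}\) by least squares and checks that \(\varvec{F}^{-1}\) times the vanilla gradient estimate returns the same \(\varvec{w}\). The reward coefficients, sample size and function names are hypothetical, and \(\mathbb {E}_{\pi }[\cdot ]\) is replaced by a sample mean.

```python
import numpy as np


def psi(a):
    """Sufficient statistics (-0.5 a^2, a) of pi(a) proportional to exp(-0.5 B a^2 + b a)."""
    return np.stack([-0.5 * a**2, a], axis=1)


def fit_compatible(actions, targets):
    """Least-squares fit of targets on compatible features phi = psi - E_pi[psi].

    E_pi[psi] is approximated by the sample mean of psi over the drawn actions.
    Returns the weights w, a Fisher estimate F = E[phi phi^T] and the vanilla
    gradient estimate g = E[phi * target].
    """
    feats = psi(actions)
    phi = feats - feats.mean(axis=0)            # zero-mean compatible features
    w, *_ = np.linalg.lstsq(phi, targets, rcond=None)
    n = len(actions)
    fisher = phi.T @ phi / n
    grad = phi.T @ targets / n
    return w, fisher, grad


rng = np.random.default_rng(0)
B, b = 1.0, 0.5                                 # old policy: mean b / B, variance 1 / B
R, r = 2.0, 1.0                                 # hypothetical quadratic reward coefficients
actions = rng.normal(b / B, np.sqrt(1.0 / B), size=5000)
targets = -0.5 * R * actions**2 + r * actions   # noise-free Monte-Carlo targets

w, fisher, grad = fit_compatible(actions, targets)
natural_grad = np.linalg.solve(fisher, grad)    # F^{-1} g, which reproduces w
```

Because the features are centered, any constant offset in the targets (the value-function part) does not affect \(\varvec{w}\).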

Entropy regularization Recently, some approaches (Abdolmaleki et al. 2015; Akrour et al. 2016; Mnih et al. 2016; O’Donoghue et al. 2016) have used an additional bound on the entropy of the resulting policy. The entropy bound can be beneficial since it limits the change in exploration, potentially preventing premature convergence to a greedy policy. In this case, the trust region problem is given by

where the second constraint limits the expected loss in entropy of the new distribution. Here, \(H(\cdot )\) denotes the Shannon entropy in the discrete case and the differential entropy in the continuous case. Applying an entropy constraint only on \(\pi (\varvec{a}|\varvec{s})\) while adjusting \(\beta \) according to \(\pi _{\text {old}}(\varvec{a}|\varvec{s})\) is equivalent, as in (Akrour et al. 2016, 2018). The policy update rule for this constrained optimization problem can be derived with the method of Lagrange multipliers:

where \(\eta \) and \(\omega \) are Lagrange multipliers (Akrour et al. 2016). \(\eta \) is associated with the KL-divergence bound \(\epsilon \) and \(\omega \) with the entropy bound \(\beta \). Note that for \(\omega = 0\) the entropy bound is not active; therefore, the solution is equivalent to the standard trust region solution. It has been observed that the entropy bound is needed to prevent the premature convergence issues connected with the natural gradient. We show that these premature convergence issues are inherent to the natural gradient, as it always reduces the entropy of the distribution. In contrast, entropy control can prevent this.
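The sketch below shows one form of this problem consistent with the description above. The entropy constraint is written as a bound on the expected entropy loss relative to \(\pi _{\text {old}}\); the exact form used in the paper is an assumption. It is shown together with the stationarity condition of its Lagrangian, where \(\lambda (\varvec{s})\) enforces normalization. That condition yields the update with exponent \(\eta /(\eta +\omega )\):

```latex
\begin{aligned}
&\max_{\pi}\; \mathbb{E}_{p_{\pi_{\text{old}}}(\varvec{s})}\Big[\int \pi(\varvec{a}\mid\varvec{s})\, Q^{\pi_{\text{old}}}(\varvec{s},\varvec{a})\, d\varvec{a}\Big]\\
&\text{s.t.}\quad
\mathbb{E}_{p_{\pi_{\text{old}}}(\varvec{s})}\Big[\mathrm{KL}\big(\pi(\cdot\mid\varvec{s}) \,\|\, \pi_{\text{old}}(\cdot\mid\varvec{s})\big)\Big] \le \epsilon,
\qquad
\mathbb{E}_{p_{\pi_{\text{old}}}(\varvec{s})}\Big[H\big(\pi_{\text{old}}(\cdot\mid\varvec{s})\big) - H\big(\pi(\cdot\mid\varvec{s})\big)\Big] \le \beta,\\
&Q^{\pi_{\text{old}}}(\varvec{s},\varvec{a}) - \eta\big(\log\pi(\varvec{a}\mid\varvec{s}) - \log\pi_{\text{old}}(\varvec{a}\mid\varvec{s}) + 1\big) - \omega\big(\log\pi(\varvec{a}\mid\varvec{s}) + 1\big) - \lambda(\varvec{s}) = 0\\
&\;\;\Longrightarrow\;\;
\pi(\varvec{a}\mid\varvec{s}) \propto \pi_{\text{old}}(\varvec{a}\mid\varvec{s})^{\frac{\eta}{\eta+\omega}} \exp\!\Big(\frac{Q^{\pi_{\text{old}}}(\varvec{s},\varvec{a})}{\eta+\omega}\Big).
\end{aligned}
```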

3 Compatible policy search with natural parameters

In this section, we analyze the natural gradient update equations for exponential family distributions. This analysis reveals an important connection between the natural gradient and trust region optimization: both are equivalent if we use the natural parameterization of the distribution in combination with compatible value function approximation. This is an important insight, as the natural gradient then provides the optimal solution for a given trust region, not just an approximation as is commonly believed. For example, Schulman et al. (2015) have to use a line search to fit the natural gradient update to the KL-divergence bound. Moreover, this insight can be combined with the entropy bound to control policy exploration and to obtain a closed-form update for compatible log-linear policies. Compatible value function approximation also offers variance-reduction advantages that plain Monte-Carlo estimates cannot provide; we leave exploiting them to future work. We also analyze the online performance of the natural gradient and show that entropy regularization can converge exponentially faster. Finally, we present our new algorithm, Compatible Policy Search (COPOS), which builds on these insights.

3.1 Equivalence of natural gradients and trust region optimization

We first consider soft-max distributions that are log-linear in the parameters (for example, Gaussian distributions or the Boltzmann distribution) and subsequently extend our results to non-linear soft-max distributions, for example given by neural networks. A log-linear soft-max distribution can be represented as

Note that Gaussian distributions can also be represented this way [see, for example, Eq. (9)]; however, the natural parameterization is commonly not used for Gaussian distributions. Typically, the Gaussian is parameterized by the mean \(\varvec{\mu }\) and the covariance matrix \(\varvec{\varSigma }\). However, the natural parameterization and our analysis suggest using the precision matrix \(\varvec{B} = \varvec{\varSigma }^{-1}\) and the linear vector \(\varvec{b} = \varvec{\varSigma }^{-1} \varvec{\mu }\) instead, in order to benefit from the favorable properties of the natural gradient.

It makes sense to study the exact form of the compatible approximation for these log-linear models. The compatible features are given by

As we can see, the compatible feature space always has zero mean, which is in line with the observation that the compatible approximation \(\tilde{G}^{\pi _\text {old}}_{\varvec{w}}(\varvec{s}, \varvec{a})\) is an advantage function. Moreover, the structure of the features suggests that the advantage function is composed of a term for the Q-function \(\tilde{Q}_{\varvec{w}}(\varvec{s}, \varvec{a}) = \varvec{\psi }(\varvec{s}, \varvec{a})^ T\varvec{w}\) and a term for the value function \(\tilde{V}_{\varvec{w}}(\varvec{s}) = \mathbb {E}_{\pi (\cdot |\varvec{s})}\left[ \tilde{Q}_{\varvec{w}}(\varvec{s}, \varvec{a})\right] \), that is,

We can now directly use the compatible advantage function \(\tilde{G}^{\pi _\text {old}}_{\varvec{w}}(\varvec{s}, \varvec{a})\) in our trust region optimization problem given in Eq. (3). The resulting policy is then given by

Note that the value function part of \(\tilde{G}_{\varvec{w}}\) does not influence the updated policy. Hence, if we use the natural parameterization of the distributions in combination with compatible function approximation, then we directly get a parametric update of the form

Furthermore, the suggested update is equivalent to the natural gradient update: The natural gradient is the optimal solution for a given trust region problem and not just an approximation. However, this statement only holds if we use natural parameters and compatible value function approximation. Moreover, the update needs only the Q-function part of the compatible function approximation.
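The chain of steps above can be written compactly under the log-linear form \(\pi _{\varvec{\theta }} \propto \exp (\varvec{\psi }^T\varvec{\theta })\). The compatible features follow because the gradient of the log-normalizer is \(\mathbb {E}_{\pi }[\varvec{\psi }]\). The state-only term \(\tilde{V}_{\varvec{w}}\) is absorbed into the normalizer of the KL-constrained solution \(\pi \propto \pi _{\text {old}}\exp (\tilde{G}_{\varvec{w}}/\eta )\). This is a derivation sketch, not a verbatim copy of the paper's equations:

```latex
\begin{aligned}
\pi_{\varvec{\theta}}(\varvec{a}\mid\varvec{s}) &\propto \exp\big(\varvec{\psi}(\varvec{s},\varvec{a})^T\varvec{\theta}\big),
\qquad
\varvec{\phi}(\varvec{s},\varvec{a}) = \nabla_{\varvec{\theta}}\log\pi_{\varvec{\theta}}(\varvec{a}\mid\varvec{s})
= \varvec{\psi}(\varvec{s},\varvec{a}) - \mathbb{E}_{\pi_{\varvec{\theta}}(\cdot\mid\varvec{s})}\big[\varvec{\psi}(\varvec{s},\cdot)\big],\\
\tilde{G}_{\varvec{w}}(\varvec{s},\varvec{a}) &= \varvec{\phi}(\varvec{s},\varvec{a})^T\varvec{w} = \tilde{Q}_{\varvec{w}}(\varvec{s},\varvec{a}) - \tilde{V}_{\varvec{w}}(\varvec{s}),\\
\pi_{\text{new}}(\varvec{a}\mid\varvec{s}) &\propto \pi_{\varvec{\theta}_{\text{old}}}(\varvec{a}\mid\varvec{s})\exp\big(\tilde{G}_{\varvec{w}}(\varvec{s},\varvec{a})/\eta\big)
\propto \exp\big(\varvec{\psi}(\varvec{s},\varvec{a})^T(\varvec{\theta}_{\text{old}} + \eta^{-1}\varvec{w})\big),\\
\varvec{\theta}_{\text{new}} &= \varvec{\theta}_{\text{old}} + \eta^{-1}\varvec{w}.
\end{aligned}
```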

We can do a similar analysis for the optimization problem with entropy regularization given in Eq. (4) using Eq. (5). The optimal policy given the compatible value function approximation is now given by

In comparison to the standard natural gradient, the influence of the old parameter vector is diminished by the factor \(\eta / (\eta + \omega )\) which will play an important role for our further analysis.
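The factor \(\eta /(\eta +\omega )\) comes from the entropy-regularized solution \(\pi \propto \pi _{\text {old}}^{\eta /(\eta +\omega )}\exp (\tilde{G}_{\varvec{w}}/(\eta +\omega ))\) of Sect. 2. Substituting the log-linear form \(\pi _{\varvec{\theta }} \propto \exp (\varvec{\psi }^T\varvec{\theta })\) and \(\tilde{G}_{\varvec{w}} = \tilde{Q}_{\varvec{w}} - \tilde{V}_{\varvec{w}}\) into it gives the parametric update below. This is a derivation sketch; for \(\omega = 0\) it reduces to \(\varvec{\theta }_{\text {old}} + \eta ^{-1}\varvec{w}\):

```latex
\begin{aligned}
\pi_{\text{new}}(\varvec{a}\mid\varvec{s})
&\propto \exp\!\Big(\tfrac{\eta}{\eta+\omega}\,\varvec{\psi}(\varvec{s},\varvec{a})^T\varvec{\theta}_{\text{old}}
+ \tfrac{1}{\eta+\omega}\,\varvec{\psi}(\varvec{s},\varvec{a})^T\varvec{w}\Big)
= \exp\!\Big(\varvec{\psi}(\varvec{s},\varvec{a})^T\,\frac{\eta\,\varvec{\theta}_{\text{old}} + \varvec{w}}{\eta+\omega}\Big),\\
\varvec{\theta}_{\text{new}} &= \frac{\eta}{\eta+\omega}\,\varvec{\theta}_{\text{old}} + \frac{1}{\eta+\omega}\,\varvec{w}.
\end{aligned}
```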

3.2 Compatible approximation for neural networks

So far, we have only considered models that are log-linear in the parameters (ignoring the normalization constant). For more complex models, we need to introduce non-linear parameters \(\varvec{\beta }\) for the feature vector, that is, \(\varvec{\psi }(\varvec{s}, \varvec{a}) = \varvec{\psi }_{\varvec{\beta }}(\varvec{s}, \varvec{a})\). We are particularly interested in Gaussian policies in the continuous case and softmax policies in the discrete case, as they are the standard for continuous and discrete actions, respectively. In the continuous case, there are several options. We could use a Gaussian with a constant variance whose mean is parameterized by a neural network. We could also use a non-linear interpolation of linear feedback controllers with Gaussian noise, or Gaussians with state-dependent variance. For simplicity, we focus on Gaussians with a constant covariance \(\varvec{\varSigma }\). The mean is a product of neural network (or any other non-linear function) features \(\varphi _i(\varvec{s})\) and a mixing matrix \(\varvec{K}\) that could be part of the neural network output layer. The policy and the log policy are then

where \(\varvec{K} = (\varvec{k}_1, \ldots , \varvec{k}_N)\) and \(\varvec{U} = \varvec{K}^T \varvec{\varSigma }^{-1}\). To compute \(\varvec{\psi }(\varvec{s}, \varvec{a})\) we note that

To get \(\varvec{\psi }(\varvec{s}, \varvec{a})\), we note that some parts of Eq. (9), and thus of \(\nabla _{\varvec{\theta }} \log \pi _{\varvec{\theta }}(\varvec{a}| \varvec{s})\), do not depend on \(\varvec{a}\). We ignore those parts when computing \(\varvec{\psi }(\varvec{s}, \varvec{a})\). The reason is that \(\varvec{\psi }(\varvec{s}, \varvec{a})^T \varvec{\theta }\) is the state-action value function, and action-independent parts of the state-action value function do not influence the optimal action choice. Thus we get

We then take the gradient w.r.t. the log-linear parameters \(\varvec{\theta }= (\varvec{\varSigma }^{-1}, \varvec{U})\) resulting in \(\nabla _{\varvec{\varSigma }^{-1}} \log \hat{\pi }(\varvec{a}| \varvec{s}) = - 0.5 \varvec{a} \varvec{a}^T\), \(\nabla _{\varvec{U}} \log \hat{\pi }(\varvec{a}| \varvec{s}) = \varvec{a} \varvec{\varphi }(\varvec{s})^T\), and \(\varvec{\psi }(\varvec{s}, \varvec{a}) = [- \text {vec}[0.5 \varvec{a} \varvec{a}^T], \text {vec}[\varvec{a} \varvec{\varphi }(\varvec{s})^T]]^T\), where \(\text {vec}[\cdot ]\) concatenates matrix columns into a column vector.
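The sketch below numerically checks the feature vector \(\varvec{\psi }(\varvec{s},\varvec{a})\) above. It builds \([-\text {vec}[0.5\,\varvec{a}\varvec{a}^T], \text {vec}[\varvec{a}\varvec{\varphi }(\varvec{s})^T]]\) and verifies that its inner product with the log-linear parameters reproduces the action-dependent part of the Gaussian log-density. The linear block is paired with \(\text {vec}[\varvec{\varSigma }^{-1}\varvec{K}]\), which is \(\varvec{U}^T\) for \(\varvec{U} = \varvec{K}^T\varvec{\varSigma }^{-1}\); the transpose makes the vec-ordering match. Random vectors stand in for the network features \(\varvec{\varphi }(\varvec{s})\), and all function names are hypothetical.

```python
import numpy as np


def psi_gaussian(a, feat):
    """Action-dependent statistics [-vec(0.5 a a^T), vec(a feat^T)]; vec stacks columns."""
    quad = -np.outer(0.5 * a, a).ravel(order="F")
    lin = np.outer(a, feat).ravel(order="F")
    return np.concatenate([quad, lin])


def theta_gaussian(cov, K):
    """Log-linear parameters paired with psi: [vec(Sigma^-1), vec(Sigma^-1 K)]."""
    prec = np.linalg.inv(cov)
    return np.concatenate([prec.ravel(order="F"), (prec @ K).ravel(order="F")])


def gaussian_logpdf(a, mean, cov):
    """Log density of N(mean, cov), used only to check the decomposition."""
    diff = a - mean
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (diff @ np.linalg.solve(cov, diff) + logdet + len(a) * np.log(2 * np.pi))


rng = np.random.default_rng(1)
d, N = 2, 3                                  # action dimension, number of features (hypothetical)
K = rng.normal(size=(d, N))                  # mixing matrix: mean = K @ feat
L = rng.normal(size=(d, d))
cov = L @ L.T + d * np.eye(d)                # a valid constant covariance
feat = rng.normal(size=N)                    # stand-in for network features varphi(s)
theta = theta_gaussian(cov, K)
a1, a2 = rng.normal(size=d), rng.normal(size=d)
# Action-independent terms cancel in the difference, so psi^T theta must match it.
lhs = gaussian_logpdf(a1, K @ feat, cov) - gaussian_logpdf(a2, K @ feat, cov)
rhs = psi_gaussian(a1, feat) @ theta - psi_gaussian(a2, feat) @ theta
```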

Note that the variances and the linear parameters of the mean are contained in the parameter vector \(\varvec{\theta }\) and can be updated by the update rule in Eq. (7) explained above. However, for obtaining the update rules for the non-linear parameters \(\varvec{\beta }\), we first have to compute the compatible basis, that is,

Note that, due to the \(\log \) operator, the derivative is linear w.r.t. the log-linear parameters \(\varvec{\theta }\). For the Gaussian distribution in Eq. (8), the gradient of the action-dependent parts of the log policy in Eq. (11) becomes

Now, to find the update rule for the non-linear parameters, we write the update rule for the policy using Eq. (5). In it, we use the value function formed by multiplying the compatible basis in Eq. (11) by \(\varvec{w}_{\beta }\), the part of the compatible approximation vector that is responsible for \(\varvec{\beta }\):

where, from Eq. (12) to Eq. (13), we dropped the action-independent parts, which can be seen as part of the distribution normalization. Note that Eq. (15) represents the first-order Taylor approximation of Eq. (14) at \(\varvec{w}_{\varvec{\beta }} / \eta = 0\). Moreover, note that a rescaling of the energy function \(\varvec{\psi }_{\varvec{\beta }}(\varvec{s}, \varvec{a})^T \varvec{\theta }\) is implemented by the update of the parameters \(\varvec{\theta }\). It can hence often be ignored in the update for \(\varvec{\beta }\). The approximate update rule for \(\varvec{\beta }\) is thus

Hence, we can conclude that the natural gradient is an approximate trust region solution for the non-linear parameters \(\varvec{\beta }\) as the first order Taylor approximation of the energy function is replaced by the real energy function after the update. Still, for the parameters \(\varvec{\theta }\), which in the end dominate the mean and covariance of the policy, the natural gradient is the exact trust region solution.
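The first-order argument of this subsection can be spelled out under its stated assumptions. Only the \(\varvec{w}_{\varvec{\beta }}\) part of the compatible approximation is shown, since \(\varvec{\theta }\) is handled by the log-linear update, and the rescaling factor \(\eta /(\eta +\omega )\) is left to \(\varvec{\theta }\). This yields the additive step below; it is our reconstruction of the reasoning rather than a quotation of the paper's Eqs. (12) to (16):

```latex
\begin{aligned}
\pi(\varvec{a}\mid\varvec{s})
&\propto \pi_{\text{old}}(\varvec{a}\mid\varvec{s})^{\frac{\eta}{\eta+\omega}}
\exp\!\Big(\tfrac{1}{\eta+\omega}\,\nabla_{\varvec{\beta}}\big(\varvec{\psi}_{\varvec{\beta}}(\varvec{s},\varvec{a})^T\varvec{\theta}\big)^T\varvec{w}_{\varvec{\beta}}\Big)\\
&\propto \exp\!\Big(\tfrac{\eta}{\eta+\omega}\Big[\varvec{\psi}_{\varvec{\beta}}(\varvec{s},\varvec{a})^T\varvec{\theta}
+ \nabla_{\varvec{\beta}}\big(\varvec{\psi}_{\varvec{\beta}}(\varvec{s},\varvec{a})^T\varvec{\theta}\big)^T\tfrac{\varvec{w}_{\varvec{\beta}}}{\eta}\Big]\Big)\\
&\approx \exp\!\Big(\tfrac{\eta}{\eta+\omega}\,\varvec{\psi}_{\varvec{\beta}+\varvec{w}_{\varvec{\beta}}/\eta}(\varvec{s},\varvec{a})^T\varvec{\theta}\Big),
\qquad
\varvec{\beta}_{\text{new}} = \varvec{\beta}_{\text{old}} + \eta^{-1}\varvec{w}_{\varvec{\beta}}.
\end{aligned}
```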

3.3 Compatible value function approximation in practice

Algorithm 1 shows the Compatible Policy Search (COPOS) approach (see Appendix B for a more detailed description of the discrete-action version of the algorithm). In COPOS, the policy updates in Eqs. (7) and (16) require \(\varvec{w}\), \(\eta \), and \(\omega \). To estimate \(\varvec{w}\), we could use the compatible function approximation. In this paper, however, we do not estimate the value function explicitly. Instead, we estimate \(\varvec{w}\) as a natural gradient using the conjugate gradient method, which avoids computing the inverse of the Fisher information matrix explicitly (see, for example, Schulman et al. 2015). As discussed before, \(\eta \) and \(\omega \) are Lagrange multipliers associated with the KL-divergence and entropy bounds. In the log-linear case with compatible natural parameters, we can compute them exactly using the dual of the optimization objective in Eq. (4); in the non-linear case, we compute them approximately. In the continuous-action case, the basic dual includes integration over both actions and states. Because of the compatible value function, however, we can integrate over actions in closed form, since terms that do not depend on the action can be eliminated. The dual then reduces to an integral over states only, which allows computing \(\eta \) and \(\omega \) efficiently; see “Appendix A” for details. Since \(\eta \) is only approximate for the non-linear parameters, in the continuous-action experiments we additionally performed an alternating optimization, repeated twice. In step (1), we ran a binary search to satisfy the KL-divergence bound while keeping \(\omega \) fixed. In step (2), we re-optimized \(\omega \) while keeping \(\eta \) fixed; this is exact, since \(\omega \) depends only on the log-linear parameters. For discrete actions, an additional line search to update the non-linear parameters was sufficient.
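The sketch below shows how \(\varvec{w}\) can be obtained as a natural gradient without inverting the Fisher matrix. The conjugate gradient routine solves \(\varvec{F}\varvec{x} = \varvec{g}\) using Fisher-vector products only. The random score vectors, the damping constant and the function names are illustrative assumptions, not the COPOS implementation.

```python
import numpy as np


def conjugate_gradient(fvp, g, iters=50, tol=1e-20):
    """Solve F x = g for symmetric positive definite F, given only fvp(v) = F v."""
    x = np.zeros_like(g)
    r = g.copy()             # residual g - F x for the initial guess x = 0
    p = r.copy()             # first search direction
    rr = r @ r
    for _ in range(iters):
        Fp = fvp(p)
        alpha = rr / (p @ Fp)
        x += alpha * p
        r -= alpha * Fp
        rr_new = r @ r
        if rr_new < tol:     # squared residual small enough: stop
            break
        p = r + (rr_new / rr) * p
        rr = rr_new
    return x


rng = np.random.default_rng(2)
scores = rng.normal(size=(1000, 5))          # stand-in for per-sample log-policy gradients
scores -= scores.mean(axis=0)
g = rng.normal(size=5)                       # stand-in for the vanilla policy gradient
damping = 1e-3                               # hypothetical damping for numerical stability


def damped_fvp(v):
    # F v = E[phi phi^T] v computed as Phi^T (Phi v) / n, never forming F explicitly.
    return scores.T @ (scores @ v) / len(scores) + damping * v


x = conjugate_gradient(damped_fvp, g)
```

Only matrix-vector products are needed, so for a neural network policy the same routine can run on Fisher-vector products obtained by automatic differentiation.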

4 Analysis and illustration of the update rules

We now analyze the performance of both update rules, with and without entropy regularization, in more detail using a simple stateless Gaussian policy \(\pi (a) \propto \exp (-0.5 B a^2 + b a)\) for a scalar action a. Our reward function \(R(a) = -0.5 R a^2 + r a\) is also quadratic in the action. We assume an infinite number of samples, so that the compatible function approximation is estimated perfectly. In this case \(\tilde{G}_{\varvec{w}}\) is given by \(\tilde{G}_{\varvec{w}}(a) = R(a) = -0.5 R a^2 + r a\) and \(\varvec{w} = [R,r]\). For \(R > 0\), the reward function is maximized at \(a^* = R^{-1}r\).

Natural gradients For now, we will analyze the performance of the natural gradient if \(\eta \) is a constant and not optimized for a given KL-bound. After n update steps, the parameters of the policy are given by \(B_n = B_0 + n R / \eta \), \(b_n = b_0 + n r / \eta \). The distance between the mean \(\mu _n = B_n^{-1} b_n\) of the policy and the optimal solution \(a^*\) is

We can see that the learned solution approaches the optimal solution, however, very slowly and heavily depending on the precision \(B_0\) of the initial solution. The reason for this effect can be explained by the update rule of the precision. As we can see, the precision \(B_n\) is increased at every update step. This shrinking variance in turn decreases the step-size for the next update.
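Substituting \(B_n = B_0 + nR/\eta \), \(b_n = b_0 + nr/\eta \) and \(a^* = r/R\) into \(d_n = \mu _n - a^*\) makes the slow decay explicit. This worked step is consistent with the quantities above; the paper's Eq. (17) may be written in a different but equivalent form:

```latex
d_n = \mu_n - a^*
= \frac{b_0 + n r/\eta}{B_0 + n R/\eta} - \frac{r}{R}
= \frac{R\,b_0 - r\,B_0}{R\,(B_0 + nR/\eta)}
= \frac{\mu_0 - a^*}{1 + nR/(\eta B_0)} .
```

The distance shrinks only as O(1/n), and a larger initial precision \(B_0\) slows it further.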

Entropy regularization We perform a similar analysis for the entropy regularization update rule. We start with constant parameters \(\eta \) and \(\omega \) and consider the trust region case later. After n iterations, the updates for the entropy regularization result in the following parameters

The distance \(d_n = \mu _n - a^*\) can again be expressed as a function of n:

with \(c_2 > 1\). Hence, this update rule also converges to the correct solution. Contrary to the natural gradient, however, the part of the denominator that depends on n grows exponentially. As the old parameter vector is always multiplied by a factor smaller than one, the influence of the initial precision \(B_0\) vanishes, whereas \(B_0\) dominates the convergence of the natural gradient. While the natural gradient always decreases the variance, entropy regularization avoids this entropy loss and can even increase the variance.
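In this toy problem, the constant-parameter entropy update is \(\theta _{n+1} = (\eta \theta _n + w)/(\eta +\omega )\). Unrolling this recursion gives the closed form below. The identification \(c_2 = (\eta +\omega )/\eta \) is our reading of the constant in the text and may differ in form from the paper's expression:

```latex
\begin{aligned}
B_n &= c^n B_0 + \frac{R}{\omega}\,(1 - c^n), \qquad b_n = c^n b_0 + \frac{r}{\omega}\,(1 - c^n), \qquad c = \frac{\eta}{\eta+\omega},\\
d_n &= \frac{b_n}{B_n} - \frac{r}{R} = \frac{c^n\,(b_0 - a^* B_0)}{B_n}
= \frac{b_0 - a^* B_0}{B_0 + \frac{R}{\omega}\,(c_2^{\,n} - 1)}, \qquad c_2 = c^{-1} = \frac{\eta+\omega}{\eta} > 1.
\end{aligned}
```

As n grows, \(B_n \to R/\omega \), so the policy variance approaches \(\omega /R\) instead of shrinking to zero.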

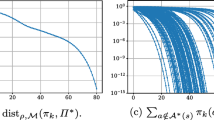

Empirical evaluation of constant updates We plotted the behavior of both update rules, and of the standard policy gradient, for this simple toy task in Fig. 1 (top). We use \(\eta = 10\) and \(\omega = 1\) for the natural gradient and the entropy regularization, and a learning rate of \(\alpha = 1000\) for the policy gradient. We estimate the standard policy gradients from 1000 samples. Entropy regularization performs favorably, speeding up learning in the beginning by increasing the entropy. With constant parameters \(\eta \) and \(\omega \), the algorithm drives the entropy to a given target value. The policy gradient performs better than the natural gradient because it does not always reduce the variance and can even increase it. However, the KL-divergence of the standard policy gradient updates appears uncontrolled.
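The constant-parameter part of this toy experiment can be reproduced in outline with the sketch below. It iterates the two log-linear updates with \(\eta = 10\) and \(\omega = 1\) as stated above. The reward coefficients \(R, r\) and the initial policy \(B_0, b_0\) are hypothetical choices, since the text does not list them, and the vanilla policy gradient baseline is omitted.

```python
import numpy as np


def run_updates(B0, b0, R, r, eta, omega, n_iters):
    """Iterate the log-linear updates for pi(a) proportional to exp(-0.5 B a^2 + b a).

    omega = 0 gives the natural gradient step theta += w / eta; omega > 0 gives
    theta = (eta * theta + w) / (eta + omega). Returns the means and entropies.
    """
    B, b = B0, b0
    means, entropies = [], []
    for _ in range(n_iters):
        B = (eta * B + R) / (eta + omega)
        b = (eta * b + r) / (eta + omega)
        means.append(b / B)
        # Differential entropy of a Gaussian with variance 1 / B.
        entropies.append(0.5 * np.log(2 * np.pi * np.e / B))
    return np.array(means), np.array(entropies)


R, r = 1.0, 5.0                      # hypothetical reward: optimum a* = r / R = 5
B0, b0 = 4.0, 0.0                    # hypothetical initial policy N(0, 0.25)
a_star = r / R
mu_ng, H_ng = run_updates(B0, b0, R, r, eta=10.0, omega=0.0, n_iters=200)
mu_ent, H_ent = run_updates(B0, b0, R, r, eta=10.0, omega=1.0, n_iters=200)
d_ng, d_ent = np.abs(mu_ng - a_star), np.abs(mu_ent - a_star)
```

With these choices, the natural gradient steadily loses entropy and its distance to \(a^*\) decays roughly as 1/n. The entropy-regularized update instead increases the variance toward \(\omega /R\) and its distance decays geometrically.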

Comparison of different update rules with and without entropy regularization in the toy example of Sect. 4. The figures show the “Distance” between the optimal and current policy mean [see Eq. (17)], the expected “Reward”, the expected “Entropy”, and the expected “KL-divergence” between previous and current policy over 200 iterations (x-axis). Top: Policy updates with constant learning rates and no trust region. Comparison of the natural gradient (blue), natural gradient with entropy regularization (green) and vanilla policy gradient (red). Bottom: Policy updates with trust region. Comparison of the natural gradient (blue), natural gradient where the entropy is controlled to a set value (green), natural gradient with zero entropy loss (cyan) and vanilla policy gradient (red). To summarize, without entropy regularization the natural gradient decreases the entropy too fast

Empirical evaluation of trust region updates In the trust region case, we minimized the Lagrange dual at each iteration, yielding \(\eta \) and \(\omega \). For the policy gradient, we chose at each iteration the highest learning rate for which the KL-bound was still met. For entropy regularization, we tested two setups. In setup (1), we fixed the entropy of the policy (that is, \(\beta = 0\)). In setup (2), we slowly drove the entropy of the policy to 0. Figure 1 (bottom) shows the results. The natural gradient still suffers from slow convergence, because it gradually decreases the entropy of the policy. The standard gradient again performs better as it increases the entropy, outperforming even the zero-entropy-loss natural gradient. For entropy control, even more sophisticated scheduling could be used, such as the step-size control of CMA-ES (Hansen and Ostermeier 2001), a heuristic that works well.
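In the text, \(\eta \) (and \(\omega \)) are obtained from the Lagrange dual. The sketch below uses a simpler stand-in for the KL-only case (\(\omega = 0\)). It bisects on \(\eta \) so that the natural-gradient step in the toy problem satisfies \(\mathrm{KL}(\pi _{\text {new}}\,\|\,\pi _{\text {old}}) = \epsilon \). When the KL bound is active, this gives the same \(\eta \), because the KL of the step \(\varvec{\theta } + \varvec{w}/\eta \) decreases monotonically in \(\eta \). The function names, bracket and iteration count are assumptions.

```python
import numpy as np


def gaussian_kl(B1, b1, B0, b0):
    """KL(N1 || N0) for scalar Gaussians given as precision B and linear term b."""
    mu1, var1 = b1 / B1, 1.0 / B1
    mu0, var0 = b0 / B0, 1.0 / B0
    return 0.5 * (np.log(var0 / var1) + (var1 + (mu1 - mu0) ** 2) / var0 - 1.0)


def eta_for_kl_bound(B, b, R, r, epsilon, lo=1e-6, hi=1e6, iters=200):
    """Bisect in log space for the eta whose natural-gradient step meets KL = epsilon.

    The step (B, b) + (R, r) / eta shrinks as eta grows, so the KL decreases with eta.
    Assumes R > 0, so the new precision B + R / eta stays positive.
    """
    for _ in range(iters):
        mid = np.sqrt(lo * hi)
        if gaussian_kl(B + R / mid, b + r / mid, B, b) > epsilon:
            lo = mid          # step too large: increase eta
        else:
            hi = mid          # step within the bound: try a smaller eta
    return hi


# Usage on hypothetical values: old policy N(0, 1), reward coefficients R = 1, r = 5.
eta = eta_for_kl_bound(1.0, 0.0, 1.0, 5.0, epsilon=0.05)
```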

5 Related work

Similar to classical reinforcement learning, the leading contenders in deep reinforcement learning can be divided into four groups. The first are value-function-based methods, such as Q-learning with the deep Q-network (DQN) (Mnih et al. 2015). The second are actor-critic methods (Wu et al. 2017; Tangkaratt et al. 2018; Abdolmaleki et al. 2018). The third are policy gradient methods, such as deep deterministic policy gradient (DDPG) (Silver et al. 2014; Lillicrap et al. 2015). The fourth are policy search methods based on information-theoretic/trust region methods, such as proximal policy optimization (PPO) (Schulman et al. 2017) and trust region policy optimization (TRPO) (Schulman et al. 2015).

Trust region optimization was introduced in the relative entropy policy search (REPS) method (Peters et al. 2010). TRPO and TNPG (Schulman et al. 2015) are the first methods to apply trust region optimization successfully to neural networks. In contrast to TRPO and TNPG, we derive our method from the compatible value function approximation perspective. TRPO and TNPG differ from our approach, in that they do not use an entropy constraint and do not consider the difference between the log-linear and non-linear parameters for their update. On the technical level, compared to TRPO, we can update the log-linear parameters (output layer of neural network and the covariance) with an exact update step while TRPO does a line search to find the update step. Moreover, for the covariance we can find an exact update to enforce a specific entropy and thus control exploration while TRPO does not bound the entropy, only the KL-divergence. PPO also applies an adaptive KL penalty term.

Kakade (2001); Bagnell and Schneider (2003); Peters and Schaal (2008); Geist and Pietquin (2010) have also suggested similar update rules based on the natural gradient for the policy gradient framework. Wu et al. (2017) applied approximate natural gradient updates to both the actor and critic in an actor-critic framework but did not utilize compatible value functions or an entropy bound. Peters and Schaal (2008); Geist and Pietquin (2010) investigated the idea of compatible value functions in combination with the natural gradient but used manual learning rates instead of trust region optimization. The approaches in (Abdolmaleki et al. 2015; Akrour et al. 2016) use an entropy bound similar to ours. However, the approach in (Abdolmaleki et al. 2015) is a stochastic search method, that is, it ignores sequential decisions and views the problem as black-box optimization, and the approach in (Akrour et al. 2016) is restricted to trajectory optimization. Moreover, both of these approaches do not explicitly handle non-linear parameters such as those found in neural networks. The entropy bound used in (Tangkaratt et al. 2018) is similar to ours, however, their method depends on second order approximations of a deep Q-function, resulting in a much more complex policy update that can suffer from the instabilities of learning a non-linear Q-function.

To encourage exploration, one can in general add an entropy term to the objective. In the experiments, we compare against TRPO with such an additive entropy term. In preliminary experiments, we also tried to control entropy in TRPO by combining the entropy and KL-divergence constraints into a single constraint, without success.

6 Experiments

In the experiments, we focused on the following research question: does the proposed entropy regularization approach improve performance compared to methods that do not explicitly control the entropy? To select comparison methods, we followed Duan et al. (2016) and chose four gradient-based methods, namely Trust Region Policy Optimization (TRPO) (Schulman et al. 2015), Truncated Natural Policy Gradient (TNPG) (Duan et al. 2016; Schulman et al. 2015), REINFORCE (VPG) (Williams 1992), and Reward-Weighted Regression (RWR) (Kober and Peters 2009), as well as two gradient-free black-box optimization methods, namely the Cross Entropy Method (CEM) (Rubinstein 1999) and the Covariance Matrix Adaptation Evolution Strategy (CMA-ES) (Hansen and Ostermeier 2001). We used rllab for the algorithm implementations. We ran experiments both in challenging continuous control tasks and in discrete partially observable tasks, which we discuss next.

Average return and differential entropy of comparison methods over 10 random seeds in continuous Roboschool tasks (see Fig. 3 for the other continuous tasks and Table 1 for a summary). Shaded areas denote bootstrapped \(95\%\) confidence intervals. Algorithms were executed for 1000 iterations with 10,000 time steps (samples) per iteration.

Average return and differential entropy of comparison methods over 10 random seeds in continuous Roboschool tasks (see Fig. 2 for the other continuous tasks and Table 1 for a summary). Shaded areas denote bootstrapped \(95\%\) confidence intervals. Algorithms were executed for 1000 iterations with 10,000 time steps (samples) per iteration.

Continuous tasks. In the continuous case, we ran experiments in eight different Roboschool environments, which provide continuous control tasks of increasing difficulty and action dimensionality without requiring a paid license.

We ran all evaluations, with 10 random seeds per method, for 1000 iterations of 10,000 samples each. In all problems, we used the Gaussian policy defined in Eq. (8) for COPOS and TRPO (denoted by \(\pi _1(a|s)\)), with \(\max (10, \text {action dimensions})\) neural network outputs as basis functions, a neural network with two hidden layers of 32 tanh neurons each, and a diagonal precision matrix. For TRPO and the other methods, except COPOS, we also evaluated a policy (denoted for TRPO by \(\pi _2(a|s)\)) in which the neural network directly specifies the mean and the diagonal covariance is parameterized by log standard deviations. We used high, identical initial variances for all policies (other initializations we tried were not successful). We set \(\epsilon = 0.01\) (Schulman et al. 2015) in all experiments. For COPOS, we used two equality entropy constraints: \(\beta = \epsilon \) and \(\beta =\)auto. With \(\beta =\)auto, we assume a positive initial entropy and schedule the entropy to reach the negative of the initial entropy after 1000 iterations. Since we always initialize the variances to one, higher-dimensional problems have higher initial entropy; \(\beta =\)auto therefore reduces the entropy faster in high-dimensional problems, effectively scaling the reduction with the action dimensionality. Table 1 summarizes the results in the continuous tasks: COPOS outperforms the comparison methods in most of the environments. Figures 2 and 3 show learning curves and performance of COPOS compared to the other methods. COPOS prevents both too fast and too slow entropy reduction while outperforming the comparison methods. Table 4 in “Appendix C” shows additional results for experiments in which different constant entropy bonuses were added to the reward function of TRPO; none of them succeeded, which highlights the need for principled entropy control.
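The \(\beta =\)auto schedule can be made concrete with the differential entropy of a diagonal Gaussian, \(H = \frac{1}{2}\sum_i \log(2\pi e \sigma_i^2)\), which equals \(\frac{k}{2}\log(2\pi e) \approx 1.42k\) nats for unit variances in \(k\) action dimensions. The sketch below assumes that the equality entropy constraint removes exactly \(\beta\) nats per iteration, so that a linear schedule from \(H_0\) to \(-H_0\) over 1000 iterations gives \(\beta = 2H_0/1000\); the function names are hypothetical.

```python
import numpy as np


def diag_gaussian_entropy(variances):
    """Differential entropy (nats) of a Gaussian with diagonal covariance."""
    variances = np.asarray(variances, dtype=float)
    return 0.5 * np.sum(np.log(2.0 * np.pi * np.e * variances))


def auto_entropy_targets(action_dim, n_iters=1000, init_var=1.0):
    """Hypothetical linear 'beta = auto' schedule from H0 down to -H0.

    Returns the per-iteration entropy reduction beta and the target entropy
    after each of the n_iters updates.
    """
    h0 = diag_gaussian_entropy(np.full(action_dim, init_var))
    beta = 2.0 * h0 / n_iters                   # nats removed per iteration
    targets = h0 - beta * np.arange(1, n_iters + 1)
    return beta, targets


# Example action dimensionalities (illustrative only).
for k in (1, 6, 17):
    beta, targets = auto_entropy_targets(k)
    print(k, round(beta, 5), round(targets[-1], 3))
```

Since \(H_0\) grows linearly in \(k\), so does \(\beta\), which is the dimensionality scaling described above.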

Discrete control task. Partial observability often requires efficient exploration, because non-myopic actions can yield long-term rewards; this is challenging for model-free methods. The Field Vision Rock Sample (FVRS) task (Ross et al. 2008) is a partially observable Markov decision process (POMDP) benchmark. For the discrete action experiments, we used a softmax policy parameterized by a fully connected feed-forward neural network with two hidden layers of 30 tanh units each. The input to the network is the observation history and the current position of the agent in the grid. To obtain the hyperparameters \(\beta \) and \(\epsilon \), and the scaling factor for TRPO with additive entropy regularization (denoted “TRPO ent reg”), we performed a grid search on smaller instances of FVRS. See “Appendix B” for more details about the setup. The results in Table 2 and Fig. 4 show that COPOS outperforms the comparison methods because it maintains higher entropy. FVRS has previously been used with model-based online POMDP algorithms (Ross et al. 2008), but not with model-free algorithms. The best model-based results in Ross et al. (2008), scaled to correspond to our rewards (COPOS results in parentheses), are 2.275 (1.94) in FVRS(5,5) and 2.34 (2.45) in FVRS(5,7).
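A forward pass of the discrete policy architecture described above (two hidden layers of 30 tanh units followed by a softmax over actions) can be sketched in NumPy as follows. The input dimension, number of actions, and weight initialization are hypothetical placeholders, since the encoding of the observation history and agent position is not specified here.

```python
import numpy as np


def init_softmax_policy(input_dim, n_actions, hidden=30, seed=0):
    """Weights for two tanh hidden layers and a linear softmax output layer."""
    rng = np.random.default_rng(seed)
    sizes = [input_dim, hidden, hidden, n_actions]
    # Hypothetical 1/sqrt(fan_in) Gaussian initialization, zero biases.
    return [(rng.normal(scale=1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def action_probabilities(params, x):
    """Softmax action probabilities for a batch of encoded inputs x."""
    h = x
    for W, b in params[:-1]:
        h = np.tanh(h @ W + b)
    W, b = params[-1]
    logits = h @ W + b
    logits -= logits.max(axis=-1, keepdims=True)   # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=-1, keepdims=True)


def entropy(p):
    """Entropy (nats) of each categorical distribution in the batch."""
    return -(p * np.log(p)).sum(axis=-1)


# Hypothetical sizes: 12 input features, 5 discrete actions, batch of 7.
params = init_softmax_policy(input_dim=12, n_actions=5)
x = np.random.default_rng(1).normal(size=(7, 12))
p = action_probabilities(params, x)
print(p.shape, entropy(p).mean())
```

The policy entropy computed this way is the quantity that an entropy bound keeps from collapsing too early.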

7 Conclusions and future work

We showed that the natural gradient and trust region optimization are equivalent when we use the natural parameterization of a standard exponential policy distribution in combination with compatible value function approximation. Furthermore, we demonstrated that natural gradient updates may reduce the entropy of the policy according to a schedule that can lead to premature convergence. To combat the problem of poor entropy scheduling in trust region methods, we proposed a new compatible policy search method, COPOS, that controls the entropy of the policy using an entropy bound. In both challenging high-dimensional continuous tasks and discrete tasks, the approach yielded state-of-the-art results due to better entropy control. In future work, an exciting direction is to apply efficient approximations to compute the natural gradient (Bernacchia et al. 2018). Moreover, we have started work on applying the proposed algorithm in challenging partially observable environments, found for example in autonomous driving (Dosovitskiy et al. 2017), where exploration and sample efficiency are crucial for finding high-quality policies.

References

Abdolmaleki, A., Lioutikov, R., Peters, J., Lau, N., Reis, L., & Neumann, G. (2015). Model-based relative entropy stochastic search. In Advances in Neural Information Processing Systems (NIPS), MIT Press.

Abdolmaleki, A., Springenberg, J. T., Tassa, Y., Munos, R., Heess, N., & Riedmiller, M. (2018). Maximum a posteriori policy optimisation. In Proceedings of the international conference on learning representations (ICLR).

Akrour, R., Abdolmaleki, A., Abdulsamad, H., & Neumann, G. (2016). Model-free trajectory optimization for reinforcement learning. In Proceedings of the international conference on machine learning (ICML).

Akrour, R., Abdolmaleki, A., Abdulsamad, H., Peters, J., & Neumann, G. (2018). Model-free trajectory-based policy optimization with monotonic improvement. Journal of Machine Learning Research, 19(14), 1–25.

Amari, S. (1998). Natural gradient works efficiently in learning. Neural Computation, 10(2), 251–276.

Bagnell, J. A., & Schneider, J. (2003). Covariant policy search. In Proceedings of the international joint conference on artificial intelligence (IJCAI).

Bernacchia, A., Lengyel, M., & Hennequin, G. (2018). Exact natural gradient in deep linear networks and its application to the nonlinear case. In Advances in Neural Information Processing Systems (NIPS), Curran Associates, Inc., pp. 5945–5954.

Boyd, S., & Vandenberghe, L. (2004). Convex optimization. Cambridge: Cambridge University Press.

Daniel, C., Neumann, G., Kroemer, O., & Peters, J. (2016). Hierarchical relative entropy policy search. Journal of Machine Learning Research (JMLR), 17(93), 1–50.

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., & Koltun, V. (2017). CARLA: An open urban driving simulator. In Conference on robot learning, pp. 1–16.

Duan, Y., Chen, X., Houthooft, R., Schulman, J., & Abbeel, P. (2016). Benchmarking deep reinforcement learning for continuous control. In Proceedings of the 33rd international conference on machine learning, ICML 2016, New York City, NY, USA, June 19–24, 2016, pp. 1329–1338. http://jmlr.org/proceedings/papers/v48/duan16.html.

Geist, M., & Pietquin, O. (2010). Revisiting natural actor-critics with value function approximation. In International conference on modeling decisions for artificial intelligence, Springer, pp. 207–218.

Hansen, N., & Ostermeier, A. (2001). Completely derandomized self-adaptation in evolution strategies. Evolutionary Computation, 9(2), 159–195.

Kakade, S. (2001). A natural policy gradient. In T. G. Dietterich, S. Becker, & Z. Ghahramani (Eds.), Advances in neural information processing systems 14 (NIPS 2001) (pp. 1531–1538). Cambridge: MIT Press.

Kober, J., & Peters, J. R. (2009). Policy search for motor primitives in robotics. In D. Koller, D. Schuurmans, Y. Bengio, & L. Bottou (Eds.), Advances in neural information processing systems 21 (pp. 849–856). Red Hook: Curran Associates, Inc.

Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., & Wierstra, D. (2015). Continuous control with deep reinforcement learning. arXiv:1509.02971.

Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529–533.

Mnih, V., Badia, A. P., Mirza, M., Graves, A., Lillicrap, T., Harley, T., Silver, D., & Kavukcuoglu, K. (2016). Asynchronous methods for deep reinforcement learning. In International conference on machine learning, pp. 1928–1937.

O’Donoghue, B., Munos, R., Kavukcuoglu, K., & Mnih, V. (2016). PGQ: Combining policy gradient and q-learning. arXiv:1611.01626.

Peters, J., & Schaal, S. (2008). Natural actor-critic. Neurocomputing, 71(7–9), 1180–1190.

Peters, J., Mülling, K., & Altun, Y. (2010). Relative entropy policy search. In AAAI, Atlanta, pp. 1607–1612.

Ross, S., Pineau, J., Paquet, S., & Chaib-Draa, B. (2008). Online planning algorithms for POMDPs. Journal of Artificial Intelligence Research, 32, 663–704.

Rubinstein, R. (1999). The cross-entropy method for combinatorial and continuous optimization. Methodology and Computing in Applied Probability, 1(2), 127–190.

Schulman, J., Levine, S., Abbeel, P., Jordan, M., & Moritz, P. (2015). Trust region policy optimization. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15), pp. 1889–1897.

Schulman, J., Wolski, F., Dhariwal, P., Radford, A., & Klimov, O. (2017). Proximal policy optimization algorithms. arXiv:1707.06347.

Silver, D., Lever, G., Heess, N., Degris, T., Wierstra, D., & Riedmiller, M. (2014). Deterministic policy gradient algorithms. In ICML.

Sutton, R. S., McAllester, D., Singh, S., & Mansour, Y. (1999). Policy gradient methods for reinforcement learning with function approximation. In Proceedings of the 12th international conference on neural information processing systems, MIT Press, Cambridge, MA, USA, NIPS’99, pp. 1057–1063.

Tangkaratt, V., Abdolmaleki, A., & Sugiyama, M. (2018). Guide Actor-Critic for Continuous Control. In Proceedings of the international conference on learning representations (ICLR).

Wierstra, D., Schaul, T., Peters, J., & Schmidhuber, J. (2008). Natural evolution strategies. In IEEE congress on evolutionary computation, IEEE, pp. 3381–3387.

Williams, R. J. (1992). Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine Learning, 8(3–4), 229–256.

Wu, Y., Mansimov, E., Grosse, R. B., Liao, S., & Ba, J. (2017). Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation. In Advances in neural information processing systems (NIPS), pp. 5279–5288.

Acknowledgements

This work was supported by the EU Horizon 2020 project RoMaNS and the ERC StG SKILLS4ROBOTS (project references #645582 and #640554), and by the German Research Foundation project PA 3179/1-1 (ROBOLEAP).

Additional information

Editors: Karsten Borgwardt, Po-Ling Loh, Evimaria Terzi, Antti Ukkonen.

Appendices

Solution for the Lagrange multipliers

To solve the optimization problem with a KL-divergence bound and an entropy bound, we minimize its dual with respect to the Lagrange multipliers associated with the two bounds. For the continuous action case, we first show how we optimize the multipliers exactly when only log-linear parameters are updated, then how we find an approximate solution when non-linear parameters are updated as well; finally, we discuss the discrete action case.

1.1 Computing Lagrange multipliers \(\eta \) and \(\omega \) for log-linear parameters

We minimize the dual of the optimization objective (see, e.g., Akrour et al. (2016) for a similar dual)

w.r.t. \(\eta \) and \(\omega \). Note that action-independent parts of \(\log \pi (\varvec{a}| \varvec{s})\) in Eq. (18) do not affect the choice of \(\eta \) and \(\omega \), so we discard them.

For a Gaussian policy

we get

where

and k is the dimensionality of the actions. The final expression follows by completing the square.
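To illustrate the completing-the-square step, the sketch below assumes (for illustration only) that the old policy at a fixed state is \(\mathcal{N}(\varvec{\mu}, \varvec{\varSigma})\) and that the advantage is quadratic in the action, \(A(\varvec{a}) = -\frac{1}{2}\varvec{a}^T \varvec{R}\varvec{a} + \varvec{a}^T \varvec{r}\), where \(\varvec{R}\) and \(\varvec{r}\) are hypothetical names not used in the paper. Collecting the quadratic and linear terms of \(\frac{\eta}{\eta+\omega}\log \pi_{\text{old}}(\varvec{a}) + \frac{A(\varvec{a})}{\eta+\omega}\) shows that the tilted density is again Gaussian, with covariance \((\eta+\omega)(\eta\varvec{\varSigma}^{-1} + \varvec{R})^{-1}\) and mean \((\eta\varvec{\varSigma}^{-1} + \varvec{R})^{-1}(\eta\varvec{\varSigma}^{-1}\varvec{\mu} + \varvec{r})\).

```python
import numpy as np


def tilted_gaussian(mu, Sigma, R, r, eta, omega):
    """Mean and covariance of the normalised density
    pi_old(a)^(eta/(eta+omega)) * exp(A(a)/(eta+omega)),
    assuming pi_old = N(mu, Sigma) and A(a) = -0.5 a^T R a + a^T r.
    Requires eta * Sigma^{-1} + R to be positive definite.
    """
    prec_old = np.linalg.inv(Sigma)
    M = eta * prec_old + R                    # (eta + omega) times the new precision
    cov = (eta + omega) * np.linalg.inv(M)    # from the quadratic term in a
    mean = np.linalg.solve(M, eta * prec_old @ mu + r)  # from the linear term in a
    return mean, cov


# Example with a 2-D action; all numbers are illustrative.
mu = np.array([0.5, -1.0])
Sigma = np.diag([2.0, 0.5])
R = np.array([[0.3, 0.1], [0.1, 0.2]])
r = np.array([1.2, 0.0])
mean, cov = tilted_gaussian(mu, Sigma, R, r, eta=4.0, omega=1.0)
print(mean, np.diag(cov))
```

Larger \(\omega\) inflates the covariance (higher entropy), while larger \(\eta\) pulls the solution towards the old policy, which matches the roles of the entropy and KL multipliers.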

1.2 Computing \(\eta \) and \(\omega \) for non-linear parameters

As for the log-linear parameters, we minimize the dual

w.r.t. \(\eta \) and \(\omega \). As before, action-independent parts of \(\log \pi (\varvec{a}| \varvec{s})\) in Eq. (18) do not affect the choice of \(\eta \) and \(\omega \), so we discard them.

For our linear Gaussian policy with constant covariance

we have

where \(\text {const} = - \log \sqrt{(2\pi )^k |\varvec{\varSigma }|}\) and \(\varvec{U} = \varvec{K}^T \varvec{\varSigma }^{-1}\). Therefore,

where the expression splits into an action-value part and a value part, according to whether each term depends on \(\varvec{a}\). Using the action-value part \(\frac{\partial }{\partial \varvec{\beta }} \varvec{\varphi }(\varvec{s})^T \varvec{U} \varvec{a}\) to estimate \(\varvec{w}_3\), we get

where \(\varvec{w}_a(s)^T = \varvec{w}_3^T \frac{\partial \varvec{\varphi }(\varvec{s})^T}{\partial \varvec{\beta }} \varvec{U}\). By completing the square we get

where

and k is the dimensionality of actions.
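As an illustration of regressing on the action-value part of the score, the sketch below assumes a hypothetical one-layer feature map \(\varvec{\varphi}(\varvec{s}) = \tanh(\varvec{B}\varvec{s})\), whose weights \(\varvec{B}\) play the role of the non-linear parameters \(\varvec{\beta}\). It computes the gradient of \(\varvec{\varphi}(\varvec{s})^T \varvec{U} \varvec{a}\) with respect to \(\varvec{B}\) as compatible features and fits \(\varvec{w}_3\) to advantage estimates by ordinary least squares. The feature map, the function names, and the least-squares estimator are our assumptions, not the paper's specification.

```python
import numpy as np


def action_value_features(B, U, s, a):
    """Gradient w.r.t. B of the action-dependent term phi(s)^T U a.

    Hypothetical feature map phi(s) = tanh(B s) with B of shape (d, n);
    U = K^T Sigma^{-1} has shape (d, k); s has shape (n,), a has shape (k,).
    """
    phi = np.tanh(B @ s)
    # d/dB_jl sum_j phi_j (U a)_j = (1 - phi_j^2) * (U a)_j * s_l
    return np.outer((1.0 - phi ** 2) * (U @ a), s).ravel()


def fit_compatible_weights(B, U, states, actions, advantages):
    """Least-squares fit of advantage estimates onto the compatible features."""
    X = np.stack([action_value_features(B, U, s, a) for s, a in zip(states, actions)])
    w, *_ = np.linalg.lstsq(X, advantages, rcond=None)
    return w


# Synthetic check: advantages generated exactly from known weights.
rng = np.random.default_rng(0)
d, n, k, N = 3, 2, 2, 200
B = rng.normal(size=(d, n))
U = rng.normal(size=(d, k))
states = rng.normal(size=(N, n))
actions = rng.normal(size=(N, k))
w_true = rng.normal(size=d * n)
X = np.stack([action_value_features(B, U, s, a) for s, a in zip(states, actions)])
w = fit_compatible_weights(B, U, states, actions, X @ w_true)
print(np.allclose(w, w_true))
```

The value part of the score does not depend on \(\varvec{a}\), so dropping it only removes a state-dependent baseline from the regression targets.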

1.3 Derivation of the dual for the discrete action case

To derive the dual of our trust region optimization problem with entropy regularization and discrete actions, we start from the following program, in which the expectations are written as integrals and the compatible value function is used for the returns.

Since we are in the discrete action case, we use sums for brevity, but the same derivation can also be done with integrals. Using the method of Lagrange multipliers (Boyd and Vandenberghe 2004), we obtain the following Lagrangian

We now differentiate the Lagrangian with respect to \(\pi (\varvec{a} | \varvec{s})\) and obtain the following system

Setting it to zero and rearranging terms results in

where the last term can be seen as a normalization constant

Substituting Eqs. (47) and (48) into Eq. (45) yields the dual (similar to Akrour et al. 2016) used for optimization
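To make the discrete-action dual concrete, the sketch below minimizes a dual of this form numerically over a batch of sampled states. It assumes inequality constraints \(\mathbb{E}_s[\mathrm{KL}(\pi \| \pi_{\text{old}})] \le \epsilon\) and \(\mathbb{E}_s[H(\pi)] \ge \mathbb{E}_s[H(\pi_{\text{old}})] - \beta\) (the experiments used an equality entropy constraint), so that the optimal policy is \(\pi(a|s) \propto \pi_{\text{old}}(a|s)^{\eta/(\eta+\omega)} \exp(A(s,a)/(\eta+\omega))\) and the dual is \(g(\eta,\omega) = \eta\epsilon - \omega(\bar H_{\text{old}} - \beta) + (\eta+\omega)\,\mathbb{E}_s[\log Z(s)]\), with \(Z(s)\) the normalization constant. Constant terms and sign conventions may differ from Eqs. (45)-(48), and the function and argument names are hypothetical. By the envelope theorem, the partial derivatives of \(g\) are the constraint slacks at the current policy, which gives an exact gradient.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import logsumexp


def solve_discrete_dual(log_p_old, adv, eps, beta):
    """Return (eta, omega, new policy) for a batch of N sampled states.

    log_p_old: (N, K) log-probabilities of the old softmax policy
    adv:       (N, K) advantage estimates A(s, a), assumed given
    eps:       bound on the mean KL(pi || pi_old)
    beta:      allowed drop of the mean entropy relative to pi_old
    """
    p_old = np.exp(log_p_old)
    h_old = -(p_old * log_p_old).sum(axis=1).mean()

    def tilted(eta, omega):
        # pi(a|s) ∝ pi_old(a|s)^(eta/(eta+omega)) * exp(A(s,a)/(eta+omega))
        logits = (eta * log_p_old + adv) / (eta + omega)
        log_z = logsumexp(logits, axis=1)
        return logits - log_z[:, None], log_z

    def dual(x):
        eta, omega = x
        log_pi, log_z = tilted(eta, omega)
        g = eta * eps - omega * (h_old - beta) + (eta + omega) * log_z.mean()
        pi = np.exp(log_pi)
        kl = (pi * (log_pi - log_p_old)).sum(axis=1).mean()
        ent = -(pi * log_pi).sum(axis=1).mean()
        # Envelope theorem: dg/deta = KL slack, dg/domega = entropy slack.
        return g, np.array([eps - kl, ent - (h_old - beta)])

    res = minimize(dual, x0=np.array([1.0, 1.0]), jac=True, method="L-BFGS-B",
                   bounds=[(1e-6, None), (0.0, None)], options={"ftol": 1e-12})
    eta, omega = res.x
    log_pi, _ = tilted(eta, omega)
    return eta, omega, np.exp(log_pi)


# Example on random data (illustrative only).
rng = np.random.default_rng(0)
raw = rng.normal(size=(64, 4))
log_p_old = raw - logsumexp(raw, axis=1, keepdims=True)
adv = rng.normal(size=(64, 4))
eta, omega, pi = solve_discrete_dual(log_p_old, adv, eps=0.01, beta=0.001)
print(eta, omega)
```

The dual is jointly convex in \((\eta, \omega)\) and has only two variables, so a bounded quasi-Newton method such as SciPy's L-BFGS-B is a reasonable choice here.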

Technical details for discrete action experiments

Here, we provide details on the experiments with discrete actions. Table 3 lists the hyperparameters used in the Field Vision Rock Sample (FVRS) experiments, and Algorithm 2 describes the discrete action algorithm in detail.

Additional continuous control experiments with a TRPO entropy bonus

Table 4 shows additional results for continuous control in the Roboschool environments. In these experiments, an additional entropy bonus is added to the reward function of TRPO.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pajarinen, J., Thai, H.L., Akrour, R. et al. Compatible natural gradient policy search. Mach Learn 108, 1443–1466 (2019). https://doi.org/10.1007/s10994-019-05807-0

DOI: https://doi.org/10.1007/s10994-019-05807-0