Abstract

The Projective Clustering Ensemble (PCE) problem is a recent clustering advance aimed at combining the two powerful tools of clustering ensembles and projective clustering. PCE has been formalized as either a two-objective or a single-objective optimization problem. Two-objective PCE has been recognized as more accurate than its single-objective counterpart, although it is unable to jointly handle the object-based and feature-based cluster representations.

In this paper, we push forward the current PCE research, aiming to overcome the limitations of all existing PCE formulations. We propose a novel single-objective PCE formulation so that (i) the object-based and feature-based cluster representations are jointly considered, and (ii) the resulting optimization strategy follows a metacluster-based methodology borrowed from traditional clustering ensembles. As a result, the proposed formulation is better suited to the PCE problem than the existing ones, which leads to improved effectiveness. Experiments on benchmark datasets show that the proposed approach achieves better average accuracy than all existing PCE methods, as well as better efficiency than the most accurate existing metacluster-based PCE method on larger datasets.


1 Introduction

After more than four decades, a large number of algorithms has been developed for data clustering, focusing on different aspects such as data types, algorithmic features, and application targets (Gan et al. 2007). In the last few years, there has been an increasing interest in developing advanced tools for data clustering. In this respect, projective clustering and clustering ensembles represent two of the most important directions of research.

The goal of projective clustering (or projected clustering) (Ng et al. 2005; Yiu and Mamoulis 2005; Achtert et al. 2006; Domeniconi et al. 2007; Moise et al. 2008) is to discover projective clusters, i.e., subsets of the input data having different (possibly overlapping) subsets of features (subspaces) associated with them. Projective clustering is closely related to the subspace clustering problem (Agrawal et al. 1998; Parsons et al. 2004; Kriegel et al. 2009; Moise et al. 2009), as both detect clusters that exist in different subspaces; however, the goal of subspace clustering is to search for all clusters in all meaningful subspaces, whereas projective clustering methods output a single partition of the input dataset. Projective clustering aims to solve issues that typically arise in high-dimensional data, such as sparsity and concentration of distances (Beyer et al. 1999; Hinneburg et al. 2000; Tomasev et al. 2011). Existing projective clustering methods (Kriegel et al. 2009; Moise et al. 2009) can be classified into four main approaches: bottom-up (it finds subspaces recognized as “interesting” and assigns each data object to the most similar subspace, Moise et al. 2008; Sequeira and Zaki 2004), top-down (it finds the subspaces starting from the full feature space, Ng et al. 2005; Liu et al. 2000; Yip et al. 2004, 2005; Achtert et al. 2006; Aggarwal et al. 1999; Woo et al. 2004; Böhm et al. 2004), soft (it produces soft data clusterings, Moise et al. 2008; Chen et al. 2008, and/or clusterings having differently weighted feature-to-cluster assignments, Domeniconi et al. 2007; Chen et al. 2008), and hybrid (it combines elements of both projective and subspace clustering approaches, Procopiuc et al. 2002; Achtert et al. 2007; Kriegel et al. 2005).

The problem of clustering ensembles (Strehl and Ghosh 2002; Topchy et al. 2005; Domeniconi and Al-Razgan 2009; Ghosh and Acharya 2011), also known as consensus clustering (Nguyen and Caruana 2007) or aggregation clustering (Gionis et al. 2007), is stated as follows: given a set of clustering solutions, or ensemble, to derive a consensus clustering that properly summarizes the solutions in the ensemble. The input ensemble is typically generated by varying one or more aspects of the clustering process, such as the clustering algorithm, the parameter setting, and the number of features, objects or clusters. The majority of the existing clustering ensemble methods follow the instance-based approach or the metacluster-based approach, or a combination of both (i.e., hybrid). In particular, the metacluster-based approach lies in the principle “to cluster clusters” (Bradley and Fayyad 1998; Strehl and Ghosh 2002; Boulis and Ostendorf 2004), whereby a new dataset is inferred whose objects are the clusters that belong to the clustering solutions in the ensemble. This dataset is then partitioned in order to produce a set of metaclusters (i.e., sets of clusters), and the consensus clustering is finally computed by assigning each data object to the metacluster that optimizes a specific criterion (e.g., majority voting).

Projective clustering and clustering ensembles have been recently treated in a unified framework (Gullo et al. 2009, 2013). The underlying motivation of that study is that many real-world application problems are high dimensional and lack a-priori knowledge. Examples are: clustering of multi-view data, privacy preserving clustering, news or document retrieval based on pre-defined categorizations, and distributed clustering of high-dimensional data. To address both issues simultaneously, the problem of projective clustering ensembles (PCE) is hence formalized, whose goal is to compute a projective consensus clustering from an ensemble of projective clustering solutions. Intuitively, each projective cluster is characterized by a distribution of memberships of the objects as well as a distribution over the features that belong to the subspace of that cluster. Figure 1 illustrates a projective clustering ensemble with three projective clustering solutions, which are obtained according to different views over the same dataset. A projective cluster is graphically represented as a rectangle filled with a color gradient, where higher intensities correspond to larger membership values of objects to the cluster. Clusters of the same clustering may overlap with their gradient (i.e., objects can have multiple assignments with different degrees of membership), and colors change to denote that different groupings of objects are associated with different feature subspaces. In the figure, a projective consensus clustering is derived by suitably “aggregating” the ensemble members. In particular, the first projective consensus cluster is derived by summarizing \(C'_{1}\), \(C''_{2}\), and \(C_{2}'''\), the second is derived from \(C'_{2}\), \(C''_{3}\), and \(C_{3}'''\), and the third is derived from \(C'_{4}\), \(C''_{1}\), and \(C_{1}'''\). 
Note that the resulting color in each projective consensus cluster resembles a merge of colors in the original projective clusters, which means that a projective consensus cluster is associated with a subset of features shared by the objects in the original clusters.

Fig. 1 Illustration of a projective clustering ensemble and derived consensus clustering. Each gradient refers to the cluster memberships over all objects. Colors denote different feature subspaces associated with the projective clusters. (The color version of this figure is available only in the electronic edition)

Two formulations of PCE have been proposed in Gullo et al. (2013), namely two-objective PCE and single-objective PCE. The former consists in the optimization of two objective functions, which separately consider the data object clustering and the feature-to-cluster assignment. The latter embeds in one objective function the object-based and feature-based representations of the various clusters. A heuristic developed for two-objective PCE, called MOEA-PCE, has been shown to be particularly accurate, although it has drawbacks concerning efficiency, parameter setting, and interpretability of results. In contrast, the heuristic developed for single-objective PCE, called EM-PCE, has shown better efficiency while being outperformed by two-objective PCE in terms of effectiveness. An attempt to improve the accuracy of single-objective PCE has been proposed in Gullo et al. (2010); the approach is based on a corrective term designed to achieve a better balance between the object-to-cluster assignment and the feature-to-cluster assignment when measuring the error of a candidate projective consensus clustering. Despite the achieved improvement in accuracy, the heuristics in Gullo et al. (2010) are still outperformed by two-objective PCE, thus suggesting that the path indicated by the two-objective formulation is the one to be followed. Nevertheless, the two-objective PCE suffers from a major weakness: it does not take into consideration the interrelation between the object-based and the feature-based cluster representations. In a nutshell, according to the early two-objective PCE formulation, consensus clusterings whose clusters have both object-based and feature-based representations that comply well with the input ensemble but that are not correctly “coupled” with one another might mistakenly be recognized as ideal. This fact can lead to projective consensus clustering solutions that contain conceptual flaws in their cluster composition. By preventing this scenario, one can improve the two-objective PCE formulation and corresponding methods.

Contributions

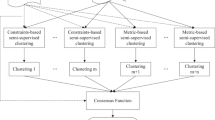

In this paper, we pursue a new approach to the study of PCE, which is motivated by our insights on the theoretical foundations of two-objective and single-objective PCE formulations. Our aim is to provide a stronger tie between the PCE and the traditional clustering ensemble problem. By investigating the opportunity of adapting existing approaches for clustering ensembles to the PCE problem, we propose a new single-objective formulation of PCE which resembles a metacluster-based clustering ensemble approach and extends our first attempt in this regard (Gullo et al. 2011). The key idea underlying our proposal is to define a function that measures the distance of a projective clustering solution from a given ensemble, in such a way that the object-based and the feature-based cluster representations are considered as a whole. We show that the new PCE formulation is theoretically sound and has advantages over the ones proposed previously (Gullo et al. 2011, 2013). It enables the development of heuristic algorithms that can exploit the results obtained by the majority of existing clustering ensemble methods. Specifically, we define a heuristic that follows a metacluster-based approach, called Enhanced MetaCluster-Based Projective Clustering Ensembles (E-CB-PCE), which computes the consensus clustering starting from a partition (i.e., metaclustering) inferred from the set of clusters belonging to the ensemble components. Compared to the previously developed metacluster-based heuristics in Gullo et al. (2011), E-CB-PCE involves more constraints aimed at ensuring a total coverage of the ensemble components in terms of clusters selected for deriving the projective consensus clustering solution. Experimental results have revealed that the proposed E-CB-PCE is on average more accurate than all previous PCE methods, according to each of the selected assessment criteria. Moreover, E-CB-PCE has been shown to improve the efficiency of its most direct competitor CB-PCE (Gullo et al. 2011) on larger datasets by up to two orders of magnitude.

Organization of the paper

The remainder of this paper is organized as follows. Section 2 provides the background on the PCE problem and its early single-objective and two-objective formulations. Section 3 focuses on the proposed metacluster-based PCE approach. Section 4 presents the two developed heuristics for the metacluster-based PCE along with an analysis of their computational complexity. Section 5 describes the experimental evaluation and presents the results, and Sect. 6 concludes the paper. Finally, we give the proofs of all the theoretical results of the paper in the Appendix.

2 Early Projective Clustering Ensembles (PCE)

Let \(\mathcal{D}\) be a set of data objects, where each \(\mathbf {o} \in \mathcal{D}\) is an \(|\mathcal{F}|\)-dimensional point defined over a feature space \(\mathcal{F}\). A projective cluster \(C\) defined over \(\mathcal{D}\) is a pair \(\langle \varGamma_{C}, \varDelta_{C} \rangle\), where

- \(\varGamma_{C}\) denotes the object-based representation of \(C\). It is a \(|\mathcal{D}|\)-dimensional real-valued vector whose components \(\varGamma_{C,\mathbf{o}} \in [0,1]\), \(\forall \mathbf {o} \in\mathcal{D}\), represent the object-to-cluster assignment of \(\mathbf{o}\) to \(C\), i.e., the probability \(\Pr(C|\mathbf{o})\) that the object \(\mathbf{o}\) belongs to \(C\);

- \(\varDelta_{C}\) denotes the feature-based representation of \(C\). It is an \(|\mathcal{F}|\)-dimensional real-valued vector whose components \(\varDelta_{C,f} \in [0,1]\), \(\forall f \in\mathcal{F}\), represent the feature-to-cluster assignment of the \(f\)-th feature to \(C\), i.e., the probability \(\Pr(f|C)\) that the feature \(f\) is informative for cluster \(C\) (\(f\) belongs to the subspace associated with \(C\)).

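The object-based (\(\varGamma_{C}\)) and the feature-based (\(\varDelta_{C}\)) representations of a projective cluster \(C\) implicitly define the projective cluster representation matrix (for short, projective matrix) \(\mathbf{X}_{C}\) of \(C\). \(\mathbf{X}_{C}\) is a \(|\mathcal{D}| \times|\mathcal{F}|\) matrix that stores, \(\forall \mathbf {o} \in\mathcal{D}\), \(f \in\mathcal{F}\), the probability of the intersection of the events “object \(\mathbf{o}\) belongs to \(C\)” and “feature \(f\) belongs to the subspace associated with \(C\)”. Under the assumption of independence between the two events, such a probability is equal to the product of \(\Pr(C|\mathbf{o}) = \varGamma_{C,\mathbf{o}}\) and \(\Pr(f|C) = \varDelta_{C,f}\). Hence, given \(\mathcal{D} = \{\mathbf {o}_{1}, \ldots, \mathbf {o}_{|\mathcal {D}|} \}\) and \(\mathcal{F} = \{1, \ldots, |\mathcal{F}| \}\), the matrix \(\mathbf{X}_{C}\) can formally be defined as:

\[
\mathbf{X}_{C} =
\begin{pmatrix}
\varGamma_{C,\mathbf{o}_{1}} \varDelta_{C,1} & \cdots & \varGamma_{C,\mathbf{o}_{1}} \varDelta_{C,|\mathcal{F}|} \\
\vdots & \ddots & \vdots \\
\varGamma_{C,\mathbf{o}_{|\mathcal{D}|}} \varDelta_{C,1} & \cdots & \varGamma_{C,\mathbf{o}_{|\mathcal{D}|}} \varDelta_{C,|\mathcal{F}|}
\end{pmatrix}
\tag{1}
\]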

Two results relate the projective matrix to the object-based and feature-based representations of a projective cluster. They are formalized in the following two propositions, which we will exploit in the remainder of the paper.

Proposition 1

For any two projective clusters \(C\), \(C'\) it holds that \(\mathbf{X}_{C} = \mathbf{X}_{C'}\) if and only if \(\varGamma_{C} = \varGamma_{C'}\) and \(\varDelta_{C} = \varDelta_{C'}\).

Proposition 2

For any projective cluster \(C\), its object-based representation \(\varGamma_{C}\) and feature-based representation \(\varDelta_{C}\) can uniquely be derived from its projective matrix \(\mathbf{X}_{C}\) as follows:

where \(\mathbf{X}_{C}(i,j)\) denotes the element \((i,j)\) of the matrix \(\mathbf{X}_{C}\).
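The relation between a projective cluster and its projective matrix can be illustrated with a minimal Python sketch. It takes each matrix entry to be the product of the object assignment and the feature assignment, as described above. The recovery step additionally assumes that the feature weights of a cluster sum to one and that the matrix is not all zeros; this normalization is an assumption made here for illustration, since the exact expressions of Proposition 2 are not reproduced in this text. The function names and the numeric values are hypothetical.

```python
def projective_matrix(gamma, delta):
    """Return X_C as a list of rows, with X_C[i][j] = gamma[i] * delta[j]."""
    return [[g * d for d in delta] for g in gamma]


def recover_representations(X):
    """Recover (gamma, delta) from X.

    ASSUMPTION (not taken from the paper): sum(delta) == 1 and X is not all zeros.
    """
    # Row sums equal gamma[i] * sum(delta), i.e. gamma[i] under the assumption.
    gamma = [sum(row) for row in X]
    total = sum(gamma)
    # Column sums equal delta[j] * sum(gamma); dividing by the grand total
    # removes the sum(gamma) factor.
    delta = [sum(row[j] for row in X) / total for j in range(len(X[0]))]
    return gamma, delta


gamma = [0.9, 0.1, 0.5]        # hypothetical object-to-cluster assignments
delta = [0.7, 0.2, 0.1, 0.0]   # hypothetical feature weights, summing to 1
X = projective_matrix(gamma, delta)
gamma_rec, delta_rec = recover_representations(X)
```

Under these assumptions the recovered vectors match the original ones up to floating-point error, which is the kind of uniqueness that Propositions 1 and 2 state.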

A projective clustering solution, denoted by \(\mathcal{C}\), is defined as a set of projective clusters that satisfy the following conditions:

The semantics of any projective clustering \(\mathcal{C}\) is that for each projective cluster \(C \in\mathcal{C}\), the objects belonging to C are close to each other if (and only if) they are projected onto the subspace associated with C.

A projective ensemble \(\mathcal{E}\) is defined as a set of projective clustering solutions. No information about the ensemble generation strategy (algorithms and/or setups), nor original feature values of the objects within \(\mathcal{D}\) are provided along with \(\mathcal{E}\). Moreover, each projective clustering solution in \(\mathcal{E}\) may contain in general a different number of clusters.

The goal of PCE is to derive a projective consensus clustering that properly summarizes the projective clustering solutions within the input projective ensemble.

2.1 Single-objective PCE

A first PCE formulation proposed in Gullo et al. (2013) is based on a single-objective function:

where

and α>1 is a positive integer that ensures non-linearity of the objective function w.r.t. Γ C,o . To solve the optimization problem based on the above function, the EM-based Projective Clustering Ensembles (EM-PCE) heuristic is defined. EM-PCE iteratively looks for the optimal values of Γ C,o (resp. Δ C,f ) while keeping Δ C,f (resp. Γ C,o ) fixed, until convergence.

Weaknesses of single-objective PCE

The objective function at the basis of the problem in (3) does not allow for a perfect balance between object- and feature-to-cluster assignments when measuring the error of a candidate projective consensus clustering solution. This weakness is formally shown in Gullo et al. (2010) and avoided by adjusting (3) with a corrective term. The final form of the problem based on the corrected objective function is the following:

where

The above optimization problem is tackled in Gullo et al. (2010) by proposing two different heuristics. The first one, called E-EM-PCE, follows the same scheme as the EM-PCE algorithm for the early single-objective PCE formulation. The second heuristic, called E-2S-PCE, consists of two sequential steps that handle the object-to-cluster and the feature-to-cluster assignments separately.

2.2 Two-objective PCE

PCE is also formulated in Gullo et al. (2013) as a two-objective optimization problem, whose functions take into account the object-based (function Ψ o ) and the feature-based (function Ψ f ) cluster representations of a given projective ensemble \(\mathcal{E}\), respectively:

where the argmin function is over all possible projective clustering solutions \(\mathcal{C}\) that satisfy the conditions reported in (2) (this makes the search space in principle infinite), and

Functions \(\overline{\psi}_{o}\) and \(\overline{\psi}_{f}\) are defined as \(\overline{\psi}_{o}(\mathcal{C}', \mathcal{C}'') = \frac{1}{2}( \psi _{o}(\mathcal{C}',\mathcal{C}'') + \psi_{o} (\mathcal{C}'', \mathcal{C}') )\) and \(\overline{\psi}_{f}(\mathcal{C}', \mathcal{C}'') = \frac{1}{2} ( \psi_{f}(\mathcal{C}',\mathcal{C}'') + \psi_{f} (\mathcal{C}'', \mathcal {C}') )\), respectively, where

and \(J (\mathbf {u}, \mathbf {v} ) = (\mathbf {u} \mathbf {v}^{\mathrm{T}} ) / (\|\mathbf {u}\|_{2}^{2} + \|\mathbf {v}\|_{2}^{2} - \mathbf {u} \mathbf {v}^{\mathrm{T}} ) \in[0,1]\) denotes the extended Jaccard similarity coefficient (also known as Tanimoto coefficient) between any two real-valued vectors u and v (Strehl et al. 2000).
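The extended Jaccard coefficient defined above translates directly into code. The following is a small sketch in plain Python; the function name and the handling of two all-zero vectors (returning 1.0) are choices made here, not part of the original definition.

```python
def extended_jaccard(u, v):
    """Extended Jaccard (Tanimoto) similarity between two real-valued vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    denom = sum(a * a for a in u) + sum(b * b for b in v) - dot
    if denom == 0:
        # Both vectors are all-zero; returning 1.0 is a convention chosen here.
        return 1.0
    return dot / denom


same = extended_jaccard([1, 0, 1], [1, 0, 1])        # identical vectors
disjoint = extended_jaccard([1, 0], [0, 1])          # orthogonal vectors
partial = extended_jaccard([1, 0, 1], [1, 1, 0])     # one shared component
```

The denominator can be rewritten as the squared Euclidean distance between u and v plus their scalar product, which is why the coefficient stays in [0,1] for the non-negative vectors used in this setting.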

The problem defined in (7) is solved by a heuristic, called MOEA-PCE, in which a Pareto-based Multi-Objective Evolutionary Algorithm is exploited to avoid combining the two objective functions into a single one.

Weaknesses of two-objective PCE

Experimental evidence in Gullo et al. (2013) has shown that the two-objective PCE formulation is much more accurate than the single-objective counterpart. Nevertheless, the original two-objective PCE also suffers from an important conceptual issue, which was first identified in Gullo et al. (2011) and which we further investigate in this paper. The existence of this issue indicates that the accuracy of two-objective PCE can still be improved, which is a major goal of this work. We illustrate this issue in the following example.

Example 1

Let \(\mathcal{E}\) be a projective ensemble defined over a set \(\mathcal {D}\) of data objects and a set \(\mathcal{F}\) of features. Suppose that \(\mathcal{E}\) contains only one projective clustering solution \(\mathcal {C}\) and that \(\mathcal{C}\) in turn contains two projective clusters C′ and C″, whose object- and feature-based representations are different from one another, i.e., \(\exists \mathbf {o} \in\mathcal{D}\) s.t. Γ C′,o ≠Γ C″,o , and \(\exists f \in\mathcal{F}\) s.t. Δ C′,f ≠Δ C″,f .

Let us consider two candidate projective consensus clusterings \(\mathcal {C}_{1} = \{C'_{1}, C''_{1} \}\) and \(\mathcal{C}_{2} = \{C'_{2}, C''_{2} \}\). We assume that \(\mathcal{C}_{1} = \mathcal{C}\), whereas \(\mathcal{C}_{2}\) is defined as follows. Cluster \(C'_{2}\) has object- and feature-based representations given by Γ C′ (i.e., the object-based representation of the first cluster C′ within \(\mathcal{C}\)) and Δ C″ (i.e., the feature-based representation of the second cluster C″ within \(\mathcal{C}\)), respectively; cluster \(C''_{2}\) has object- and feature-based representations given by Γ C″ (i.e., the object-based representation of the second cluster C″ within \(\mathcal{C}\)) and Δ C′ (i.e., the feature-based representation of the first cluster C′ within \(\mathcal{C}\)), respectively. According to (8), it is easy to see that:

Thus, both candidates \(\mathcal{C}_{1}\) and \(\mathcal{C}_{2}\) minimize the objectives of the early two-objective PCE formulation reported in (7), and hence, they are both recognized as optimal solutions. This conclusion is conceptually wrong, because only \(\mathcal{C}_{1}\) should be recognized as an optimal solution, since only \(\mathcal{C}_{1}\) exactly corresponds to the unique solution of the ensemble. Conversely, \(\mathcal{C}_{2}\) is not representative of the ensemble \(\mathcal{E}\), as the object- and the feature-based representations of its clusters are inversely associated to each other w.r.t. the associations in \(\mathcal{C}\). Indeed, in \(\mathcal{C}_{2}\), \(C'_{2} = \langle \varGamma_{C'}, \varDelta_{C''} \rangle\) and \(C''_{2} = \langle \varGamma_{C''}, \varDelta_{C'} \rangle\), whereas the solution \(\mathcal{C} \in \mathcal{E}\) is such that \(C' = \langle \varGamma_{C'}, \varDelta_{C'} \rangle\) and \(C'' = \langle \varGamma_{C''}, \varDelta_{C''} \rangle\).

The issue described in the above example arises because the two-objective PCE formulation ignores that the object-based and the feature-based representations of a projective cluster are strictly coupled to one another and, therefore, they need to be considered as a whole. In other words, in order to effectively evaluate the quality of a candidate projective consensus clustering, detecting the correct object-based and feature-based representations of the various clusters in a standalone fashion is not enough; instead, a mapping between the two (i.e., which object-based representation a feature-based representation should be coupled with and vice versa) should be properly discovered as well. For this purpose, the objective functions Ψ o and Ψ f should not be kept separated, but they should somehow be put in relation to one another. We show next how this can be overcome by employing a metacluster-based PCE formulation.

3 Metacluster-based PCE

3.1 Early metacluster-based PCE formulation

In our previous work (Gullo et al. 2011), we attempted to solve the main drawback of two-objective PCE shown in Example 1 by proposing the following alternative formulation based on a single-objective function:

where Ψ of is a function designed to measure the “distance” of any well-defined projective clustering solution \(\mathcal{C}\) from \(\mathcal{E}\) in terms of both data clustering and feature-to-cluster assignment. To define Ψ of , we resorted to the early two-objective PCE formulation and adapted the (asymmetric) measure therein involved to the new setting:

where

and

In order to measure the similarity between any pair C′,C″ of projective clusters jointly in terms of object-based representation and feature-based representation, the corresponding projective matrices X C′ and X C″ are compared to each other. To accomplish this, the two matrices are first linearized as mono-dimensional vectors, and then compared by means of some distance measure between real-valued vectors. We resorted to the Tanimoto similarity coefficient (also known as extended Jaccard coefficient), as it represents a trade-off solution between Euclidean and Cosine measures in terms of scale/translation invariance (Strehl et al. 2000). Moreover, it has a fixed-range codomain ([0,1]), which is a desirable property in the design of the proposed objective function. More precisely, the generalized definition of the Tanimoto coefficient operating on real-valued matrices is as follows:

where \(\mathbf {{X}}_{i} \hat{\mathbf {{X}}}_{i}^{\mathrm{T}}\) denotes the scalar product between the i-th rows of matrices X and \(\hat{\mathbf {{X}}}\).
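One way to write the generalized coefficient is sketched below. It is obtained by applying the vector form of \(J\) from Sect. 2.2 to the two linearized matrices and expressing the scalar products row by row, as described above. The notation, in particular writing the squared norms as sums over rows, is an assumption of this sketch and may differ from the authors' typesetting.

```latex
\[
\hat{J}\bigl(\mathbf{X}, \hat{\mathbf{X}}\bigr) =
\frac{\sum_{i=1}^{|\mathcal{D}|} \mathbf{X}_{i} \hat{\mathbf{X}}_{i}^{\mathrm{T}}}
     {\sum_{i=1}^{|\mathcal{D}|} \|\mathbf{X}_{i}\|_{2}^{2}
      + \sum_{i=1}^{|\mathcal{D}|} \|\hat{\mathbf{X}}_{i}\|_{2}^{2}
      - \sum_{i=1}^{|\mathcal{D}|} \mathbf{X}_{i} \hat{\mathbf{X}}_{i}^{\mathrm{T}}}
\]
```

Since summing the row-wise scalar products gives the scalar product of the linearized vectors, this expression coincides with \(J\) evaluated on those vectors.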

It is easy to note that the PCE formulation reported in (9) is well-suited to measure the quality of a candidate consensus clustering in terms of both object-to-cluster and feature-to-cluster assignments as a whole. As shown in Gullo et al. (2011), this allows for overcoming the conceptual disadvantages of both early single-objective and two-objective PCE. Particularly, the issue described in Example 1 does not arise anymore with the PCE formulation in (9). Indeed, considering again the two candidate projective consensus clusterings \(\mathcal{C}_{1}\) and \(\mathcal{C}_{2}\) of Example 1, it is straightforward to see that:

and hence, \(\mathcal{C}_{1}\) would be correctly recognized as an optimal solution, whereas \(\mathcal{C}_{2}\) would not.
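The contrast between the two candidates of Example 1 can be checked numerically with a small sketch. The vectors below are hypothetical. `separate_match` and `joint_distance` are simplified stand-ins written for this illustration and are not the objective functions of the paper: the first compares object-based and feature-based representations in isolation, the second compares projective matrices through the Tanimoto coefficient.

```python
def outer(gamma, delta):
    """Projective matrix with entries gamma[i] * delta[j]."""
    return [[g * d for d in delta] for g in gamma]


def tanimoto(X, Y):
    """Tanimoto similarity of two equally sized, non-zero matrices after flattening."""
    x = [a for row in X for a in row]
    y = [b for row in Y for b in row]
    dot = sum(a * b for a, b in zip(x, y))
    return dot / (sum(a * a for a in x) + sum(b * b for b in y) - dot)


# Hypothetical ensemble solution C = {C', C''}.
g1, d1 = [1.0, 1.0, 0.0, 0.0], [0.8, 0.2, 0.0]
g2, d2 = [0.0, 0.0, 1.0, 1.0], [0.0, 0.3, 0.7]
solution = [(g1, d1), (g2, d2)]   # candidate C_1, identical to C
swapped = [(g1, d2), (g2, d1)]    # candidate C_2, feature vectors exchanged


def separate_match(candidate, reference):
    """True if object vectors and feature vectors coincide when compared separately."""
    same_objects = sorted(g for g, _ in candidate) == sorted(g for g, _ in reference)
    same_features = sorted(d for _, d in candidate) == sorted(d for _, d in reference)
    return same_objects and same_features


def joint_distance(candidate, reference):
    """Sum over reference clusters of the Tanimoto distance to the closest candidate cluster."""
    return sum(
        min(1.0 - tanimoto(outer(g, d), outer(gc, dc)) for gc, dc in candidate)
        for g, d in reference
    )


separately_ok = separate_match(swapped, solution)
dist_c1 = joint_distance(solution, solution)
dist_c2 = joint_distance(swapped, solution)
```

In this toy setting the swapped candidate is indistinguishable from the true solution when the two representations are inspected separately, whereas its matrix-based distance is strictly positive while that of the first candidate is zero.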

Weaknesses of early metacluster-based PCE formulation

Although the early metacluster-based PCE formulation in (9) mitigates the issues of two-objective PCE, we will show how to further improve that formulation. For this purpose, next we provide a detailed analysis of the theoretical properties of the formulation in (9), with the ultimate goal of defining an enhanced metacluster-based PCE formulation that discards the controversial aspects of the earlier formulation.

Let us denote the expression \(1 - \hat{J} (\mathbf {{X}}_{C'},\mathbf {{X}}_{C''})\) with T(X C′,X C″) or, more simply, T(C′,C″), for any two clusters C′, C″. Since \(\hat{J} \in[0,1]\), T(⋅,⋅) is regarded as Tanimoto distance (Gullo et al. 2011). Combining (10), (11), and (12), we have that:

By discarding the constant terms and observing that

we can rewrite Ψ of as:

Thus, the objective function Ψ of corresponds to a sum of two objective functions \(\varPsi'_{\mathit{of}}\) and \(\varPsi''_{\mathit{of}}\), which are the focus of the following discussion. Let us introduce the variable \(x(\hat{C}, C) \in\{0,1\}\), which is 1 if cluster \(\hat{C}\), belonging to any projective clustering solution within the ensemble \(\mathcal{E}\), is “mapped” to the cluster C of the candidate projective consensus clustering \(\mathcal{C}\), and 0 otherwise. The meaning of the “mapping” between clusters is clarified next.

Explaining the \(\varPsi'_{\mathit{of}}\) function

The \(\varPsi'_{\mathit{of}}\) function models a modified version of the K-Means problem (Jain and Dubes 1988), where (i) the input dataset for the clustering task corresponds to the set of projective clusters belonging to all solutions in the ensemble \(\mathcal{E}\), i.e., the set \(\{\hat {C}\mid \hat{C} \in\hat{\mathcal{C}}, \hat{\mathcal{C}} \in\mathcal{E}\} \), (ii) each “object” in such a dataset is hence a projective cluster represented by its projective matrix, (iii) the centers have the form of projective matrices satisfying the constraints defined in (2), and (iv) the distance between objects and centers is computed according to the Tanimoto distance T(⋅,⋅), rather than the classic squared Euclidean distance. Formally, the \(\varPsi'_{\mathit{of}}\) function models the following optimization problem:

Due to the analogies with K-Means, it is easy to see that the solution for the above problem is a set of K “center” projective matrices (representing the various clusters in the optimal \(\mathcal {C}^{*}\)) that minimize the sum of the Tanimoto distances between each projective cluster in the ensemble and its closest center. In the following example we show that such a problem might not be particularly appropriate in the context of PCE.
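The K-Means-like cost described above can be sketched as follows. The sketch only evaluates the cost of a given set of centers; it does not search for the optimal centers and does not enforce the constraints in (2). The toy ensemble is hypothetical and mimics the situation discussed next, where clusters of the same solution are alike; all names are illustrative.

```python
def tanimoto_distance(X, Y):
    """1 - Tanimoto similarity of two equally sized, non-zero matrices after flattening."""
    x = [a for row in X for a in row]
    y = [b for row in Y for b in row]
    dot = sum(a * b for a, b in zip(x, y))
    return 1.0 - dot / (sum(a * a for a in x) + sum(b * b for b in y) - dot)


def psi_prime(centers, ensemble):
    """Sum, over every cluster of every ensemble solution, of the distance to its closest center."""
    return sum(
        min(tanimoto_distance(X_hat, X_c) for X_c in centers)
        for solution in ensemble
        for X_hat in solution
    )


# Hypothetical ensemble: clusters are identical within a solution
# and dissimilar across solutions.
A = [[1.0, 0.0], [0.0, 0.0]]
B = [[0.0, 1.0], [0.0, 0.0]]
C = [[0.0, 0.0], [1.0, 0.0]]
ensemble = [[A, A, A], [B, B, B], [C, C, C]]

# Centers that mirror the ensemble solutions themselves.
per_solution_centers = [A, B, C]

# Centers that mix one cluster from each solution (entry-wise average, a crude stand-in).
mixed = [[(A[i][j] + B[i][j] + C[i][j]) / 3 for j in range(2)] for i in range(2)]
mixed_centers = [mixed, mixed, mixed]

cost_per_solution = psi_prime(per_solution_centers, ensemble)
cost_mixed = psi_prime(mixed_centers, ensemble)
```

With unconstrained assignments, the centers that simply replicate the ensemble solutions achieve the lower cost, which is the behavior questioned in the following example.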

Example 2

Figure 2(a) shows an ensemble composed of three projective clustering solutions. Projective clusters in each solution are depicted as colored rectangles whose color shade corresponds to a certain representation provided by its corresponding projective matrix. Similar shades denote similar projective matrices, and, therefore, similar clusters. In this example, the clusters within the same projective clustering solution are highly similar to each other, and are highly dissimilar from the clusters of the other solutions. Assuming K=3 clusters in the output projective consensus clustering, the optimal partition of the ensemble of Fig. 2(a) according to the problem defined in (15)–(16) would correspond to the one identified by the solutions in the ensemble themselves. This leads to the optimal consensus clustering reported in Fig. 2(b), whose clusters C 1, C 2, and C 3 take the colors red, green, and blue as a result of the summarization of the sets \(\{C'_{1}, C'_{2}, C'_{3}\}\), \(\{C''_{1}, C''_{2}, C''_{3}\}\), \(\{C'''_{1}, C'''_{2}, C'''_{3}\}\), respectively. This solution is actually not very intuitive for PCE, since a good projective consensus clustering is supposed to summarize the information available from the ensemble by putting in relation clusters that belong to different solutions of the ensemble. Therefore, it would be much more meaningful if the consensus clustering had the form reported in Fig. 2(c), whose grey-shaded clusters \(C^{*}_{1}\), \(C^{*}_{2}\), and \(C^{*}_{3}\) derive from mixing the red, green, and blue colors of the sets \(\{C'_{1}, C''_{1}, C'''_{1}\}\), \(\{C'_{2}, C''_{2}, C'''_{2}\}\), \(\{C'_{3}, C''_{3}, C'''_{3}\}\), respectively.

Fig. 2 (a) A projective ensemble where, for each projective cluster, different projective matrix representations are depicted with different color shades, (b) optimal projective consensus clustering according to the \(\varPsi'_{\mathit{of}}\) objective function, (c) expected projective consensus clustering. (The color version of this figure is available only in the electronic edition)

Explaining the \(\varPsi''_{\mathit{of}}\) function

The optimization problem defined by the \(\varPsi''_{\mathit{of}}\) function in (14) can be rewritten as follows:

Let us informally explain what is given above. Consider first the case where the number K of clusters in the output consensus clustering is equal to 1, and consider all subsets \(\mathbf{C}\) of \(\{\hat{C}\mid \hat{C} \in\mathcal{C}, \mathcal{C} \in\mathcal {E} \}\) that satisfy the following condition: each solution within the ensemble \(\mathcal{E}\) has exactly one cluster in \(\mathbf{C}\). For each such \(\mathbf{C}\), let \(\mathbf {{X}}_{\mathbf{C}}\) denote the projective matrix that (i) satisfies (2), and (ii) minimizes the sum \(d_{\mathbf{C}}\) of the Tanimoto distances between itself and (the projective matrices of) all members of \(\mathbf{C}\). The problem defined by \(\varPsi''_{\mathit{of}}\) (for K=1) aims to find a matrix \(\mathbf {{X}}^{*}_{\mathbf{C}}\) such that \(d_{\mathbf{C}}\) is minimum; the final output would be a projective clustering \(\mathcal {C}^{*}\) composed of only one cluster whose projective matrix corresponds to the matrix \(\mathbf {{X}}^{*}_{\mathbf{C}}\). This interpretation can easily be generalized to the case K>1; indeed, in this case, all K optimal solution matrices (i.e., projective clusters in \(\mathcal{C}^{*}\)) would necessarily have the same form, as formally stated in the next proposition.

Proposition 3

Given a projective ensemble \(\mathcal{E}\), let ϒ denote the set of all clusterings obtained by taking exactly one cluster from each ensemble member, i.e., \(\varUpsilon= \{ \mathbf{C}\mid \mathbf{C} \subseteq\{\hat{C}\mid \hat{C} \in\hat{\mathcal{C}} \wedge\hat{\mathcal{C}} \in\mathcal{E} \} \wedge|\hat{\mathcal{C}} \cap\mathbf{C}| = 1, \forall\hat{\mathcal {C}} \in\mathcal{E} \}\). Moreover, let \(\mathbf {{X}}^{*} = \arg \min_{\hat{X}} \min_{\mathbf{C} \in\varUpsilon} \sum_{C \in\mathbf{C}} T(\hat{\mathbf {{X}}},\mathbf {{X}}_{C})\ \mbox{\textit{s.t.}}\ \hat{\mathbf {{X}}}\ \mbox{\textit{satisfies} (2)}\). Given an integer K≥1, it holds that the optimal projective consensus clustering for the optimization problem defined in (17)–(18) is \(\mathcal{C}^{*} = \{C_{1}^{*}, \ldots, C_{K}^{*} \}\) s.t. \(\mathbf {{X}}_{C^{*}_{1}} = \cdots= \mathbf {{X}}_{C^{*}_{K}} = \mathbf {{X}}^{*}\).
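The inner minimization over \(\varUpsilon\) in Proposition 3 can be explored with a short sketch. For a fixed candidate matrix, it enumerates every selection that takes exactly one cluster from each ensemble member and keeps the cheapest one. It also checks a shortcut that is an observation made here rather than a statement from the paper: since the choice within one member does not affect the others, the minimum over selections equals the sum of the per-member minima. The toy ensemble and all names are hypothetical, and the outer search for the optimal matrix is not attempted.

```python
from itertools import product


def tanimoto_distance(X, Y):
    """1 - Tanimoto similarity of two equally sized, non-zero matrices after flattening."""
    x = [a for row in X for a in row]
    y = [b for row in Y for b in row]
    dot = sum(a * b for a, b in zip(x, y))
    return 1.0 - dot / (sum(a * a for a in x) + sum(b * b for b in y) - dot)


def selection_cost_bruteforce(X_hat, ensemble):
    """Minimum, over all one-cluster-per-member selections, of the summed distance to X_hat."""
    return min(
        sum(tanimoto_distance(X_hat, X_c) for X_c in selection)
        for selection in product(*ensemble)  # one cluster from each ensemble member
    )


def selection_cost_decomposed(X_hat, ensemble):
    """Same quantity, computed member by member."""
    return sum(
        min(tanimoto_distance(X_hat, X_c) for X_c in solution)
        for solution in ensemble
    )


# Hypothetical ensemble: the "red" cluster R is identical in all solutions,
# while the "green" and "blue" clusters vary slightly from one solution to the next.
R = [[1.0, 0.0], [0.0, 0.0]]
G1, G2, G3 = [[0.0, 1.0], [0.0, 0.0]], [[0.0, 0.9], [0.0, 0.1]], [[0.0, 0.8], [0.0, 0.2]]
B1, B2, B3 = [[0.0, 0.0], [1.0, 0.0]], [[0.0, 0.0], [0.9, 0.1]], [[0.0, 0.0], [0.8, 0.2]]
ensemble = [[R, G1, B1], [R, G2, B2], [R, G3, B3]]

cost_red = selection_cost_bruteforce(R, ensemble)
cost_green = selection_cost_bruteforce(G1, ensemble)
cost_green_fast = selection_cost_decomposed(G1, ensemble)
```

In this toy ensemble the red matrix reaches cost zero and the green one does not, so an objective of this kind would favor repeating the red cluster, in line with what Proposition 3 states for K>1.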

The above result provides a clearer explanation of the optimization problem based on the objective function \(\varPsi''_{\mathit{of}}\). Some issues that may arise with \(\varPsi''_{\mathit{of}}\) are discussed in the following example.

Example 3

The ensemble illustrated in Fig. 3(a) contains three projective clustering solutions whose clusters are very different from each other (different color shades), but are similar to some clusters from other solutions in the ensemble. In particular, \(C'_{1}\), \(C''_{1}\), \(C'''_{1}\) are exactly the same (red shade), and the other groups of similar clusters are \(C'_{2}\), \(C''_{2}\), \(C'''_{2}\) (green shade), and \(C'_{3}\), \(C''_{3}\), \(C'''_{3}\) (blue shade). According to Proposition 3, the optimal solution for the optimization problem based on \(\varPsi''_{\mathit{of}}\) with K=3 is the one depicted in Fig. 3(b), as the clusters in the red-shaded group are more similar to each other than those in the green-shaded and blue-shaded groups. This solution is conceptually far away from the ideal one reported in Fig. 3(c), which is expected to be composed of three different clusters, each one summarizing a group of clusters with different shades.

(a) A projective ensemble where, for each projective cluster, different projective matrix representations are depicted with different color shades, (b) optimal projective consensus clustering according to the \(\varPsi''_{\mathit{of}}\) objective function, (c) expected projective consensus clustering. (The color version of this figure is available only in the electronic edition)

3.2 Enhanced metacluster-based PCE formulation

We have discussed above the limitations that arise when the functions \(\varPsi'_{\mathit{of}}\) and \(\varPsi''_{\mathit{of}}\) are treated separately (Gullo et al. 2011). Combining \(\varPsi'_{\mathit{of}}\) and \(\varPsi''_{\mathit{of}}\) into a single function partly mitigates their respective undesired effects. Nevertheless, it is hard to determine to what extent each of the two objective functions actually helps to hide the weaknesses of the other. Hence, it is more appropriate to have a problem formulation based on a single objective function which does not suffer from either of the drawbacks illustrated in Examples 2 and 3. We achieve this goal by proposing a modified metacluster-based PCE formulation, whose details are discussed next.

Let us first consider again the issue due to the function \(\varPsi '_{\mathit{of}}\) described in Example 2. We recall that a major problem in this case is that the summarization provided by the clusters in the optimal consensus clustering might relate clusters from the same solution in the ensemble, rather than coupling clusters from different ensemble components. This arises because the mappings of the various clusters in the ensemble to the center matrices are unconstrained (cf. the formal definition of the problem in (15)–(16)). The issue can be overcome by defining such mappings so as to guarantee that each center is associated with (at least) one cluster from each different solution in the ensemble. These constraints actually correspond to those defined in (18) for the optimization problem based on function \(\varPsi''_{\mathit{of}}\).

Focusing now on the function \(\varPsi''_{\mathit{of}}\), a possible solution to the issue described in Example 3 is to constrain the clusters forming the output projective consensus clustering to be different from each other. Alternatively, one can observe that the issue of Example 3 is mainly due to the fact that not all clusters in the ensemble are required to be mapped to a cluster of the projective consensus clustering. Based on this consideration, we can fix the issue by resorting to some constraints of the problem based on the other function \(\varPsi '_{\mathit{of}}\), particularly the constraints listed in (16). Thus, in conclusion, the constraints reported in (16) and (18) represent a solution to the issues pertaining to the functions \(\varPsi''_{\mathit{of}}\) and \(\varPsi'_{\mathit{of}}\), respectively: (16) addresses the issue of Example 3, whereas (18) addresses the issue of Example 2. As such, we define our enhanced metacluster-based PCE formulation by involving both these constraints. We hereinafter refer to this problem as CB-PCE enhanced.

Problem 1

(CB-PCE enhanced)

Given a projective ensemble \(\mathcal{E}\) defined over a set \(\mathcal {D}\) of objects and a set \(\mathcal{F}\) of features, and an integer K>0, find a projective clustering solution \(\mathcal{C}^{*}\) such that \(|\mathcal{C}^{*}| = K\) and:

Note that the constraints (20) and (21) have been slightly modified w.r.t. the original ones in (16) and (18), in order to handle the cases where the number of clusters of some ensemble solutions is smaller or larger than the number of clusters in the output consensus clustering. In summary, the constraints in (20) force each cluster \(\hat{C}\) of the ensemble to be mapped to at least one cluster in the candidate projective consensus clustering \(\mathcal {C}\), while the constraints in (21) ensure that each cluster \(C \in\mathcal{C}\) is coupled with at least one cluster from each ensemble solution \(\hat{\mathcal{C}}\).
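As an illustration of the two families of constraints just summarized, the following Python sketch checks whether a candidate mapping satisfies them. It is not part of the original formulation: the encoding of an ensemble cluster as a `(solution_id, cluster_id)` pair, the representation of the mapping as a list of metaclusters, and the function name `check_constraints` are all assumptions made here for illustration.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical encoding: an ensemble cluster is a (solution_id, cluster_id) pair.
ClusterId = Tuple[int, int]


def check_constraints(ensemble: Dict[int, List[int]],
                      metaclusters: List[Set[ClusterId]]) -> Tuple[bool, bool]:
    """Return (ok20, ok21) for a candidate mapping.

    ensemble maps each solution id to the ids of its clusters;
    metaclusters[k] holds the ensemble clusters mapped to the k-th
    cluster of the candidate consensus clustering.
    """
    all_clusters = {(s, c) for s, cs in ensemble.items() for c in cs}
    covered = set().union(*metaclusters)
    # (20): every ensemble cluster is mapped to at least one consensus cluster.
    ok20 = all_clusters <= covered
    # (21): every consensus cluster is coupled with at least one cluster
    # from each ensemble solution.
    ok21 = all(any(s == sol for (s, _) in m)
               for m in metaclusters for sol in ensemble)
    return ok20, ok21
```

Because both conditions are stated as "at least one", a cluster may appear in several metaclusters, which is what allows ensemble solutions with fewer than K clusters to be handled.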

4 Heuristics for CB-PCE enhanced

The CB-PCE enhanced problem can formally be shown to be NP-hard. The detailed proof, which is reported in the Appendix, is based on a reduction from the Jaccard Median problem (Chierichetti et al. 2010). This reduction shows that CB-PCE enhanced remains hard even on a restricted version of the problem where the input dataset is a singleton and the number of clusters in both the output projective consensus clustering and each ensemble solution is 1.

Lemma 1

Let CB-PCE restricted be a special version of the CB-PCE enhanced problem where (i) \(|\mathcal{D}|= 1\), (ii) K=1 (K denotes the number of clusters in the output projective consensus clustering), (iii) \(|\hat{\mathcal{C}}| = 1\), \(\forall\hat{\mathcal{C}} \in \mathcal{E}\), (iv)  , \(\forall\hat{C},\hat{C}'\), where  , and (v) \(\varDelta _{\hat{C}, f} = \frac {1}{n_{\mathcal{E}}}\), \(\forall f \in\mathcal{F}\), \(\forall\hat{C} \in\hat{\mathcal{C}}\), \(\forall\hat{\mathcal{C}} \in\mathcal{E}\). CB-PCE restricted is NP-hard.

Theorem 1

CB-PCE enhanced is NP-hard.

The above results prompted us to develop heuristics for approximating CB-PCE enhanced. In this respect, consider the formulation of CB-PCE enhanced reported in (19)–(21) and suppose that, for any input ensemble \(\mathcal{E}\), the optimal mappings between the clusters \(\hat{C}\) within \(\mathcal{E}\) and the clusters C of the output projective consensus partition are available, i.e., suppose we know in advance the optimal \(x(\hat{C},C)\) values, \(\forall\hat{\mathcal{C}} \in\mathcal{E}\), \(\forall\hat{C} \in\hat{\mathcal{C}}\), \(\forall C \in\mathcal{C}\). Within this view, a metacluster might be derived for each \(C \in \mathcal{C}\) that contains all clusters \(\hat{C}\) in the ensemble that are mapped to C (i.e., such that \(x(\hat{C}, C) = 1\)). In this way, the optimum for CB-PCE enhanced would be found by computing, for each metacluster, the projective matrix that minimizes the sum of the distances from all members of that metacluster under the constraints in (2). Unfortunately, the optimal \(x(\hat{C}, C)\) mappings cannot be known in advance, as they are part of the optimization process. Moreover, computing the optimal projective matrices given an optimal mapping is not feasible either, since it is easy to observe from Lemma 1 that even this subproblem is NP-hard.

Nevertheless, the above reasoning interestingly reveals that CB-PCE enhanced can conceptually be split into two different sub-problems: the first one concerning the mapping of the clusters within the ensemble to the clusters in the output projective consensus clustering, and the second one consisting in finding optimal projective matrices given that mapping. To tackle these sub-problems, we improve upon the CB-PCE heuristic originally introduced in Gullo et al. (2011), as it still complies with the two-step nature of CB-PCE enhanced.

4.1 The CB-PCE heuristic

Algorithm 1 sketches the CB-PCE heuristic defined in Gullo et al. (2011). For clarity of presentation, the following symbols are used in addition to the notation provided in Sect. 2: M denotes a set of metaclusters (i.e., a set of sets of clusters), \(\mathcal{M} \in\mathbf{M}\) denotes a metacluster (i.e., a set of clusters), and \(M \in\mathcal{M}\) denotes a cluster (i.e., a set of data objects). The mapping of clusters in the ensemble to clusters in the output projective consensus clustering is approximated in CB-PCE by exploiting a basic idea in traditional metacluster-based clustering ensembles, namely grouping all clusters in the ensemble in order to form a set of metaclusters (cf. Sect. 2). This is achieved by invoking the function metaclusters (Line 2), which aims to cluster the set of clusters from all solutions within the input ensemble \(\mathcal{E}\). This function exploits the matrix P of pairwise distances between all clusters in \(\mathcal{E}\) (Line 1), which are computed using the Tanimoto distance defined in (13).

The second conceptual step of the CB-PCE enhanced problem consists in finding a suitable projective matrix representation for each cluster in the output consensus clustering, given the various metaclusters (Lines 4–8). As shown above, the ideal solution concerning the direct optimization of the sum of the Tanimoto distances is NP-hard. Therefore the idea underlying CB-PCE is to derive projective matrices by focusing on the optimization of a different criterion which is easier to solve and well-suited to find reasonable and effective approximations. Specifically, the solution provided by CB-PCE is to adapt the widely used majority voting (Strehl and Ghosh 2002) to the context at hand.

Deriving projective matrices from metaclusters

For each metacluster we derive a projective matrix that optimizes the majority voting criterion. This is sufficient to obtain an approximation of the solution of the problem, since deriving the corresponding object- and feature-based cluster representations given a projective matrix can be performed easily by using Proposition 2. However, due to the use of the majority voting criterion, it is possible to derive object- and feature-based representation vectors directly, without requiring the computation of the projective matrix.

The object- and feature-based representations of each projective cluster to be included in the output consensus clustering \(\mathcal {C}^{*}\) are denoted as \(\boldsymbol{\varGamma}^{*}_{\mathcal{M}}\) and \(\boldsymbol{\varDelta}^{*}_{\mathcal{M}}\), \(\forall\mathcal{M} \in\mathbf{M}\), respectively. More precisely, \(\boldsymbol{\varGamma}^{*}_{\mathcal{M}}\) (resp. \(\boldsymbol{\varDelta}^{*}_{\mathcal{M}}\)) is the object-based (resp. feature-based) representation of the projective cluster within \(\mathcal {C}^{*}\) corresponding to the metacluster \(\mathcal{M}\). Let us derive the values of \(\boldsymbol{\varGamma}^{*}_{\mathcal{M}}\) first. Since the ensemble can in principle contain projective clusterings that are soft at the clustering level, the majority voting criterion leads to the definition of the following optimization problem:

where

and α>1 is an integer that guarantees the non-linearity of the objective function Q w.r.t. \(\varGamma _{\mathcal{M}, \mathbf {o}}\), needed to ensure \(\varGamma ^{*}_{\mathcal{M}, \mathbf {o}} \in[0,1]\) (rather than {0,1}). The final solution for such a problem is stated in the next theorem. The details of its derivation are in the Appendix.

Theorem 2

The optimum of the problem defined in (22)–(24) is (\(\forall\mathcal{M}\), ∀o):

A similar argument applies to the feature-based representation \(\boldsymbol{\varDelta}^{*}_{\mathcal{M}}\). In this case, the problem to be solved is as follows:

where \(B_{\mathcal{M}, f} = |\mathcal{M}|^{-1} \sum_{M \in\mathcal{M}} (1-\varDelta _{M, f})\) and β plays the same role as α in the function Q. The solution of such a problem is similar to that derived for \(\varGamma _{\mathcal{M},\mathbf {o}}^{\ast}\).

Theorem 3

The optimum of the problem defined in (25)–(27) is (\(\forall\mathcal{M}\), ∀f):
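To give a feel for how soft majority-voting assignments of this kind behave, the following Python sketch works under explicitly assumed definitions and should not be read as a restatement of Theorems 2 and 3. It assumes that \(A_{\mathcal{M},\mathbf{o}}\) is defined analogously to \(B_{\mathcal{M},f}\), i.e., as the average of \(1-\varGamma_{M,\mathbf{o}}\) over the clusters of the metacluster, that the objective has the form \(Q = \sum_{\mathcal{M}}\sum_{\mathbf{o}} \varGamma_{\mathcal{M},\mathbf{o}}^{\alpha} A_{\mathcal{M},\mathbf{o}}\), and that the assignments of each object are non-negative and sum to one over the metaclusters. Under these assumptions a standard Lagrangian argument yields assignments proportional to \(A_{\mathcal{M},\mathbf{o}}^{-1/(\alpha-1)}\). The function names are hypothetical.

```python
from typing import List


def average_disagreement(metacluster: List[List[float]]) -> List[float]:
    """A[o] = mean over the clusters M of the metacluster of (1 - Gamma[M][o]).

    ASSUMPTION: this mirrors the definition of B given in the text.
    """
    n = len(metacluster)
    return [sum(1.0 - g[o] for g in metacluster) / n
            for o in range(len(metacluster[0]))]


def soft_assignments(A: List[List[float]], alpha: int = 2) -> List[List[float]]:
    """Minimize sum_m sum_o Gamma[m][o]**alpha * A[m][o] subject to
    sum_m Gamma[m][o] = 1 for every o (ASSUMED form of the problem).
    """
    k, n = len(A), len(A[0])
    p = 1.0 / (alpha - 1)
    out = [[0.0] * n for _ in range(k)]
    for o in range(n):
        zeros = [m for m in range(k) if A[m][o] == 0.0]
        if zeros:
            # Full agreement in some metacluster(s): share the unit mass there.
            for m in zeros:
                out[m][o] = 1.0 / len(zeros)
            continue
        weights = [A[m][o] ** (-p) for m in range(k)]
        total = sum(weights)
        for m in range(k):
            out[m][o] = weights[m] / total
    return out
```

The same routine would apply to the feature-based case by passing the \(B_{\mathcal{M},f}\) values and β in place of the \(A_{\mathcal{M},\mathbf{o}}\) values and α, again under the assumed form of the problem.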

4.2 The E-CB-PCE heuristic

Although CB-PCE provides an approximation to CB-PCE enhanced that reasonably exploits its intrinsic two-step nature, it does not take into account the new findings of this work. In particular, a major issue of CB-PCE is due to the approximation of the optimal mappings between clusters in the ensembles and clusters in the projective consensus clustering: it satisfies only the constraints listed in (20), and it ignores the constraints in (21). Indeed, the clustering of all the clusters in the ensemble in CB-PCE is carried out by using a standard clustering algorithm, and this is clearly not sufficient to enforce that each output metacluster must contain at least one cluster from each different projective clustering solution of the ensemble, which is what the constraints in (21) require. For this purpose, we describe next a modified version of CB-PCE, called Enhanced CB-PCE (E-CB-PCE). E-CB-PCE follows the overall scheme of CB-PCE reported in Algorithm 1. The only difference is that it incorporates a well-suited (local-search) procedure to be used as the metaclusters subroutine in Line 2, whose main goal is to produce metaclusters satisfying the constraints in (21) along with those in (20).

The outline of the proposed method is given in Function 2. The set of metaclusters is initialized in such a way that the constraints in (20)–(21) are satisfied (Line 1). A score V for the set of metaclusters is computed, corresponding to the sum of all pairwise Tanimoto distances between the clusters in the same metacluster (Line 2). The procedure performs an iterative step aimed at improving the score V (Lines 3–20). In particular, we employ a local search that moves a projective cluster M from its source metacluster \(\mathcal{M}_{s}\) to a target metacluster \(\mathcal{M}_{t} \neq\mathcal{M}_{s}\) (Lines 7–15). The move that causes the largest decrease in the score V is performed (Lines 16–19). The method terminates when no valid move that improves the current score V is available, i.e., a local minimum of V has been reached.

The evaluation of the move of a cluster M from its source metacluster \(\mathcal{M}_{s}\) to a target metacluster \(\mathcal{M}_{t} \neq\mathcal {M}_{s}\) is performed by the method evaluateMove presented in Function 3. This method returns the relative score \(\hat{V}\) of the move along with a “swap” projective cluster \(\hat{M}\) within \(\mathcal{M}_{t}\) that aims to replace M in \(\mathcal{M}_{s}\). If no swapping is required, no swap cluster is returned (i.e., \(\hat{M} = \mathbf{nil}\)). The move is evaluated by distinguishing between two cases. Let us denote with \(\mathcal{C}_{M}\) the projective clustering solution in the input ensemble which contains M. If the source metacluster \(\mathcal{M}_{s}\) contains one or more clusters of \(\mathcal{C}_{M}\) besides M, removing M from \(\mathcal{M}_{s}\) does not violate the constraints in (21). Therefore, in this case, the score \(\hat{V}\) is computed as the sum of the Tanimoto distances of M from all the clusters in the new metacluster \(\mathcal {M}_{t}\) minus the distances between M and all the members of the old metacluster \(\mathcal{M}_{s}\) (Lines 4–5). Otherwise, if \(\mathcal{M}_{s}\) does not contain any other cluster of \(\mathcal{C}_{M}\), the move evaluation also takes into account that a “swap” is needed to ensure that the constraints in (21) are satisfied (Lines 7–8). More precisely, M needs to be replaced with another cluster from \(\mathcal{C}_{M}\) that currently belongs to the target metacluster \(\mathcal{M}_{t}\). Such a cluster from \(\mathcal{M}_{t}\) is chosen in such a way that the resulting score \(\hat{V}\) is minimized.
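The local search and the move evaluation described above can be sketched in Python as follows. This is a minimal illustration rather than a transcription of Functions 2 and 3: it assumes that every ensemble cluster belongs to exactly one metacluster, that the caller supplies an initial set of metaclusters already satisfying (20)–(21), and that a symmetric matrix `dist` of pairwise Tanimoto distances has been precomputed. All identifiers (`evaluate_move`, `local_search`, `solution_of`) are hypothetical.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Set, Tuple


def score(metaclusters: List[Set[int]], dist: Sequence[Sequence[float]]) -> float:
    """Sum of pairwise distances between clusters sharing a metacluster."""
    return sum(dist[a][b] for m in metaclusters
               for a, b in combinations(sorted(m), 2))


def evaluate_move(c: int, src: Set[int], dst: Set[int],
                  solution_of: Sequence[int],
                  dist: Sequence[Sequence[float]]
                  ) -> Optional[Tuple[float, Optional[int]]]:
    """Return (score change, swap cluster or None); None if no valid move."""
    leave = sum(dist[c][x] for x in src if x != c)
    # Case 1: src keeps another cluster of c's solution, so (21) still holds.
    if any(x != c and solution_of[x] == solution_of[c] for x in src):
        return sum(dist[c][x] for x in dst) - leave, None
    # Case 2: a cluster of the same solution must be swapped back into src.
    best = None
    for s in dst:
        if solution_of[s] != solution_of[c]:
            continue
        delta = (sum(dist[c][x] for x in dst if x != s) - leave
                 + sum(dist[s][y] for y in src if y != c)
                 - sum(dist[s][x] for x in dst if x != s))
        if best is None or delta < best[0]:
            best = (delta, s)
    return best


def local_search(metaclusters: List[Set[int]], solution_of: Sequence[int],
                 dist: Sequence[Sequence[float]], eps: float = 1e-12
                 ) -> List[Set[int]]:
    """Repeatedly apply the move with the largest score decrease."""
    metaclusters = [set(m) for m in metaclusters]
    while True:
        best = None  # (delta, cluster, source index, target index, swap)
        for i, src in enumerate(metaclusters):
            for c in src:
                for j, dst in enumerate(metaclusters):
                    if i == j:
                        continue
                    res = evaluate_move(c, src, dst, solution_of, dist)
                    if res is not None and (best is None or res[0] < best[0]):
                        best = (res[0], c, i, j, res[1])
        if best is None or best[0] >= -eps:
            return metaclusters  # local minimum of the score reached
        _, c, i, j, swap = best
        metaclusters[i].remove(c)
        metaclusters[j].add(c)
        if swap is not None:
            metaclusters[j].remove(swap)
            metaclusters[i].add(swap)
```

Since every accepted move strictly decreases the score and the number of configurations is finite, the loop terminates. The sketch recomputes the distance sums for each candidate move, which is simpler but slower than an implementation that caches them.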

4.3 Computational analysis

The bottleneck of the early CB-PCE heuristic is the computation of the pairwise Tanimoto distances between the clusters in the ensemble (Line 1 in Algorithm 1). For any single pair of clusters, the Tanimoto distance in principle takes \(\mathcal{O}(|\mathcal{D}||\mathcal{F}|)\) time, as it requires considering all the elements of the \(|\mathcal{D}| \times|\mathcal{F}|\) projective matrices of those clusters. Nevertheless, we show in Proposition 4 that the Tanimoto distance formula can be rewritten in such a way that its computation takes instead \(\mathcal{O}(|\mathcal{D}| + |\mathcal {F}|)\) time.

Proposition 4

For any two projective clusters C, C′ it holds that:

where

Thanks to the above result, the proposed E-CB-PCE improves upon the efficiency of the early CB-PCE. In particular, E-CB-PCE can now achieve a better time complexity for the Tanimoto distance of a single pair of clusters without introducing any approximation, which was the solution exploited in our previous work to obtain a speed-up (cf. FCB-PCE heuristic in Gullo et al. 2011).
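The intuition behind this speed-up can be illustrated with a short Python sketch. It relies on two assumptions that are not restated in this section: that the projective matrix of a cluster is the outer product of its object-based and feature-based representation vectors, and that the Tanimoto distance of (13) has the usual form \(1 - \langle X, X'\rangle / (\|X\|^{2} + \|X'\|^{2} - \langle X, X'\rangle)\). Under these assumptions every matrix inner product factorizes into a product of two vector inner products. The function names are hypothetical, and at least one of the two matrices is assumed to be non-zero.

```python
from typing import Sequence

Vector = Sequence[float]


def _dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def tanimoto_naive(g1: Vector, d1: Vector, g2: Vector, d2: Vector) -> float:
    """O(|D||F|): visits every entry of the two projective matrices."""
    inner = norm1 = norm2 = 0.0
    for a1, a2 in zip(g1, g2):            # objects
        for b1, b2 in zip(d1, d2):        # features
            x1, x2 = a1 * b1, a2 * b2     # entries (ASSUMED outer product)
            inner += x1 * x2
            norm1 += x1 * x1
            norm2 += x2 * x2
    return 1.0 - inner / (norm1 + norm2 - inner)


def tanimoto_factored(g1: Vector, d1: Vector, g2: Vector, d2: Vector) -> float:
    """O(|D| + |F|): uses only inner products of the representation vectors."""
    inner = _dot(g1, g2) * _dot(d1, d2)
    norm1 = _dot(g1, g1) * _dot(d1, d1)
    norm2 = _dot(g2, g2) * _dot(d2, d2)
    return 1.0 - inner / (norm1 + norm2 - inner)
```

Both functions return the same value up to floating-point rounding; only the second avoids materializing the \(|\mathcal{D}| \times|\mathcal{F}|\) matrices.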

Time complexity of E-CB-PCE

Next we discuss in detail the computational complexity of the proposed E-CB-PCE. We are given: a set \(\mathcal{D}\) of data objects, each one defined over a feature space \(\mathcal{F}\), a projective ensemble \(\mathcal{E}\) defined over \(\mathcal{D}\) and \(\mathcal{F}\), and a positive integer K representing the number of clusters in the output projective consensus clustering. It is also reasonable to assume that the size \(|\mathcal{C}|\) of each solution \(\mathcal{C}\) in \(\mathcal{E}\) is \(\mathcal{O}(K)\). The complexity of E-CB-PCE can be broken down into three stages:

1. Pre-processing: it concerns the computation of the pairwise distances between clusters, by applying the Tanimoto distance T(⋅,⋅) to projective matrices. According to Proposition 4, any single distance computation can be performed in \(\mathcal{O}(|\mathcal{D}| + |\mathcal{F}|)\) time. Thus, computing the overall pairwise matrix takes \(\mathcal{O}(K^{2} |\mathcal{E}|^{2} (|\mathcal{D}|+|\mathcal{F}|))\) time;

2. Meta-clustering: it concerns the clustering of the \(\mathcal{O}(K |\mathcal{E}|)\) clusters of all the solutions in the ensemble according to the procedure described in Function 2. This procedure is based on a local-search optimization strategy of an objective function that is quadratic in the number of all clusters within the input ensemble. Thus, its time complexity is \(\mathcal {O}(I K^{2} |\mathcal{E}|^{2})\), where I is the number of iterations to convergence;

3. Post-processing: it concerns the assignment of objects and features to the metaclusters based on Theorems 2 and 3. According to those theorems, both the object and the feature assignments need to look up all the clusters in each metacluster only once; thus, for each object and for each feature, it takes \(\mathcal{O}(K|\mathcal{E}|)\) time. Performing this step for all objects and features leads to a total cost of \(\mathcal{O}(K|\mathcal{E}| (|\mathcal{D}|+|\mathcal{F}|))\) for the entire post-processing step.

It can be noted that the first step is an offline phase, i.e., a phase to be performed only once in case of a multi-run execution, whereas the second and third are online steps. Thus, as summarized in Table 1 (where we also report the complexities of the earlier MOEA-PCE, EM-PCE (Gullo et al. 2013), E-EM-PCE, E-2S-PCE (Gullo et al. 2010), and CB-PCE, FCB-PCE (Gullo et al. 2011) methods), we can conclude that the offline, online, and total (i.e., offline + online) complexities of E-CB-PCE are \(\mathcal{O}(K^{2} |\mathcal{E}|^{2} (|\mathcal {D}| + |\mathcal{F}|))\), \(\mathcal{O}(K|\mathcal{E}|(IK|\mathcal{E}| + |\mathcal{D}| + |\mathcal{F}|))\), and \(\mathcal{O}(K^{2} |\mathcal {E}|^{2} (I + |\mathcal{D}| + |\mathcal{F}|))\), respectively.
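For completeness, these totals follow from summing the per-stage bounds stated above; the short derivation below only rearranges those bounds. The online cost is the sum of the meta-clustering and post-processing stages:

$$\mathcal{O}\bigl(I K^{2} |\mathcal{E}|^{2}\bigr) + \mathcal{O}\bigl(K|\mathcal{E}| (|\mathcal{D}|+|\mathcal{F}|)\bigr) = \mathcal{O}\bigl(K|\mathcal{E}|(IK|\mathcal{E}| + |\mathcal{D}| + |\mathcal{F}|)\bigr). $$

Adding the offline pre-processing cost, and observing that \(K|\mathcal{E}| \leq K^{2}|\mathcal{E}|^{2}\) so that the post-processing term is dominated by the pre-processing term, gives the total:

$$\mathcal{O}\bigl(K^{2} |\mathcal{E}|^{2} (|\mathcal{D}|+|\mathcal{F}|)\bigr) + \mathcal{O}\bigl(I K^{2} |\mathcal{E}|^{2}\bigr) + \mathcal{O}\bigl(K|\mathcal{E}| (|\mathcal{D}|+|\mathcal{F}|)\bigr) = \mathcal{O}\bigl(K^{2} |\mathcal{E}|^{2} (I + |\mathcal{D}| + |\mathcal{F}|)\bigr). $$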

5 Experimental evaluation

We evaluated the accuracy and efficiency of the proposed E-CB-PCE algorithm and compared it with the early PCE methods, i.e., MOEA-PCE, EM-PCE (Gullo et al. 2009, 2013), E-EM-PCE, E-2S-PCE (Gullo et al. 2010), and CB-PCE (Gullo et al. 2011). In the following, we introduce our evaluation methodology, which includes the selected datasets, the strategy used for generating the projective ensembles, the setup of the proposed algorithm, and the assessment criteria for the projective consensus clusterings. Finally, we discuss the experimental results obtained.

5.1 Evaluation methodology

5.1.1 Datasets

We selected 22 publicly available datasets with different characteristics in terms of number of objects, features, and classes. In the summary provided in Table 2, the first fifteen datasets are from the UCI Machine Learning Repository (Asuncion and Newman 2010), the next four datasets are from the UCR Time Series Classification/Clustering Page (Keogh et al. 2003), whereas the last three datasets are synthetically generated and selected from Müller et al. (2009). Note that the synthetic datasets originally had overlapping clusters. We selected for each dataset the maximal subset of data objects forming a partition and the corresponding natural subspace for each cluster in the partition.

5.1.2 Projective ensemble generation

We adopted a basic strategy for projective ensemble generation, which consists in selecting a (projective) clustering algorithm and varying the parameter(s) of that algorithm in order to guarantee the diversity of the solutions within the projective ensemble. We were not interested in comparing projective clustering algorithms and assessing the impact of their performance on projective ensemble generation, since generating projective ensembles with the highest quality is not a goal of this work; nevertheless, we resorted to a state-of-the-art algorithm, LAC, whose effectiveness in the context of projective clustering has already been proven (Domeniconi et al. 2007). The diversity of the projective clustering solutions was ensured by randomly choosing the initial centroids and varying LAC's parameter h.

Note that LAC yields projective clusterings that have hard object-to-cluster assignments and have weighted feature-to-cluster assignments. Therefore, in order to test the ability of the proposed algorithm to also deal with soft clustering solutions and with solutions having unweighted feature-to-cluster assignments, we generated each projective ensemble \(\mathcal{E}\) as a composition of four equally-sized subsets, denoted as \(\mathcal{E}_{1}\), \(\mathcal{E}_{2}\), \(\mathcal{E}_{3}\), and \(\mathcal{E}_{4}\) and defined as follows:

- \(\mathcal{E}_{1}\) contains solutions that have hard object-to-cluster assignments and weighted feature-to-cluster assignments, i.e., solutions as provided by standard LAC;

- \(\mathcal{E}_{2}\) contains solutions that have hard object-to-cluster assignments and unweighted feature-to-cluster assignments. Starting from a LAC solution \(\mathcal{C}\) defined over a set \(\mathcal{D}\) of data objects and a set \(\mathcal {F}\) of features, a projective clustering \(\mathcal{C}'\) having unweighted feature-to-cluster assignments is derived such that \(\varDelta _{C', f} = \mathbf{I} [\varDelta _{C',f} \geq|\mathcal{F}|^{-1}\sum _{f' \in\mathcal{F}} \varDelta _{C', f'} ]\), \(\forall C' \in\mathcal{C}',\ \forall f \in\mathcal{F}\), where I[A] is the indicator function, which is equal to 1 when the event A is true, and 0 otherwise;

- \(\mathcal{E}_{3}\) contains solutions that have soft object-to-cluster assignments and weighted feature-to-cluster assignments. Starting from a LAC solution \(\mathcal{C}\), a soft projective clustering \(\mathcal{C}''\) is derived by computing the \(\varGamma _{C'', \mathbf {o}}\) values (\(\forall C'' \in\mathcal{C}''\), \(\forall \mathbf {o} \in\mathcal{D}\)), proportionally to the distance of o from the centroids \(\overline{C}''\) of the clusters \(C''\):

$$\varGamma _{C'', \mathbf {o}} = \frac{\sum_{f \in\mathcal{F}} ( o_f - \overline {C}_f^{\prime\prime} )^2}{\sum_{C \in\mathcal{C}''}\sum_{f \in\mathcal {F}} ( o_f - \overline{C}_f )^2}, $$

where the f-th feature \(\overline{C}_{f}\) of the centroid of any cluster C is defined as \(\overline{C}_{f} = |C|^{-1} \sum_{\mathbf {o} \in C} o_{f}\);

- \(\mathcal{E}_{4}\) contains solutions that have soft object-to-cluster assignments and unweighted feature-to-cluster assignments. The solutions are derived from standard LAC solutions according to the methods employed for generating \(\mathcal{E}_{2}\) and \(\mathcal{E}_{3}\), respectively.
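The two transformations used to obtain \(\mathcal{E}_{2}\) and \(\mathcal{E}_{3}\) from a LAC solution can be sketched in Python as follows. The code is only an illustration of the formulas given above: the function names and the list-based data layout are assumptions made here, and the soft memberships are computed exactly as the formula is printed, i.e., proportionally to the squared distance of the object from each centroid. The object is assumed not to coincide with all centroids, so that the normalizing sum is non-zero.

```python
from typing import List, Sequence


def unweighted_feature_assignments(delta: Sequence[float]) -> List[float]:
    """E2-style step: set a feature to 1 if its weight is at least the
    mean weight of the cluster, and to 0 otherwise."""
    mean = sum(delta) / len(delta)
    return [1.0 if w >= mean else 0.0 for w in delta]


def soft_object_assignments(obj: Sequence[float],
                            centroids: Sequence[Sequence[float]]) -> List[float]:
    """E3-style step: memberships of one object w.r.t. all cluster centroids,
    following the printed formula (squared distance over the sum of squared
    distances to all centroids)."""
    sq = [sum((x - c) ** 2 for x, c in zip(obj, cen)) for cen in centroids]
    total = sum(sq)
    return [s / total for s in sq]
```

A solution for \(\mathcal{E}_{4}\) would then be obtained by applying both functions to the same LAC solution.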

For each dataset, we generated 10 different projective ensembles; all results we present in the following correspond to averages over these projective ensembles.

5.1.3 Assessment criteria

We assessed the quality of a projective consensus clustering \(\mathcal{C}\) using both external and internal cluster validity criteria: the former is based on the similarity of \(\mathcal{C}\) w.r.t. a reference classification, whereas the latter is based on the average similarity w.r.t. the solutions in the input projective ensemble \(\mathcal{E}\).

Similarity w.r.t. the reference classification (external evaluation)

This evaluation stage exploits the availability of a reference classification, hereinafter denoted as \(\widetilde{\mathcal{C}}\), for any given dataset \(\mathcal{D}\). Note that all the selected datasets are coupled with a reference classification that provides information about the ideal object-to-cluster assignments \(\varGamma _{\widetilde{C}, \mathbf {o}}\) (\(\forall\widetilde{C} \in\widetilde{\mathcal{C}}\), \(\forall \mathbf {o} \in\mathcal{D}\)), which are hard assignments. The \(\varDelta _{\widetilde{C},f}\) feature-to-cluster assignments are instead defined according to the following approaches:

- For the synthetic datasets N30, D75, and S2500, which already provide information about the ideal subspaces assigned to each group of objects identified by the reference classification, these subspaces are directly used to define unweighted \(\varDelta _{\widetilde{C},f}\) feature-to-cluster assignments in \(\widetilde {\mathcal{C}}\).

- For the remaining datasets, the \(\varDelta _{\widetilde{C},f}\) values are derived by applying the procedure suggested in Domeniconi et al. (2007) to the reference classification \(\widetilde{\mathcal{C}}\): given the \(\varGamma _{\widetilde{C}, \mathbf {o}}\) values (\(\forall\widetilde{C} \in\widetilde {\mathcal{C}}\), \(\forall \mathbf {o} \in\mathcal{D}\)) originally provided along with \(\widetilde{\mathcal{C}}\), the \(\varDelta _{\widetilde{C},f}\) values are computed as:

$$\varDelta _{\widetilde{C}, f} = \frac{\exp (-U(\widetilde{C},f)/{h} )}{\sum_{f' \in\mathcal{F}} \exp (-U(\widetilde{C},f')/{h} )}, $$

where the LAC parameter h is set to 0.2 and:

$$U(\widetilde{C},f) = \biggl(\sum_{\mathbf {o} \in\mathcal{D}}\varGamma _{\widetilde{C}, \mathbf {o}} \biggr)^{ -1} \sum_{\mathbf {o} \in \mathcal{D}} \varGamma _{\widetilde{C},\mathbf {o}} (\overline {C}_{f}-o_{f} )^2, \qquad \overline{C}_{f} = \biggl(\sum_{\mathbf {o} \in\mathcal{D}} \varGamma _{\widetilde{C},\mathbf {o}} \biggr)^{ -1} \sum_{\mathbf {o} \in \mathcal{D}} \varGamma _{\widetilde{C},\mathbf {o}} \times o_{f}. $$
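The computation of the reference feature-to-cluster assignments for one class can be sketched in Python as follows. The sketch follows the two formulas above term by term; the function name and the list-based data layout are assumptions made here for illustration, and the class is assumed to contain at least one object with non-zero membership.

```python
import math
from typing import List, Sequence


def reference_feature_weights(data: Sequence[Sequence[float]],
                              gamma: Sequence[float],
                              h: float = 0.2) -> List[float]:
    """Feature weights of one reference class.

    data[i][f] is the f-th feature of the i-th object and gamma[i] is the
    membership of that object in the class.
    """
    total = sum(gamma)
    n_features = len(data[0])
    # Membership-weighted centroid of the class.
    centroid = [sum(g * o[f] for g, o in zip(gamma, data)) / total
                for f in range(n_features)]
    # U(C, f): membership-weighted average squared deviation along feature f.
    u = [sum(g * (centroid[f] - o[f]) ** 2 for g, o in zip(gamma, data)) / total
         for f in range(n_features)]
    # Exponential weighting with parameter h, normalized over the features.
    e = [math.exp(-v / h) for v in u]
    z = sum(e)
    return [x / z for x in e]
```

Features along which the class is more compact (smaller U) receive larger weights, and the weights of each class sum to one.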

In order to compute the similarity between a projective consensus clustering \(\mathcal{C}\) and a reference classification \(\widetilde {\mathcal{C}}\), we resort to the popular F1-measure (van Rijsbergen 1979). In particular, we provide here a definition of the F1-measure that enables a comparison between projective clusterings having soft object/feature-to-cluster assignments. Given a projective cluster \(C \in\mathcal{C}\), the precision P(C) and the recall R(C) are defined as:

and the F1-measure is defined as:

The values of the F1-measure belong to the interval [0,1], where larger values indicate more accurate projective consensus clusterings. The overlap(⋅,⋅) and size(⋅) functions quantify the degree of overlap between two projective clusters and the size of a projective cluster, respectively. Such functions are defined based on three ways of comparing the various projective clusters, namely object-based (o), feature-based (f), and object & feature-based (of), which respectively account for the object-based representations only of the projective clusters to be compared, the feature-based representation only, or both. We hence define variants of the overlap(⋅,⋅) and the size(⋅) functions to handle each of the three cases:

- Object-based (measure \(F1_{o}\)): \(\mathit{overlap}(C',C'') = \sum_{\mathbf {o} \in \mathcal{D}} \varGamma _{C',\mathbf {o}} \varGamma _{C'', \mathbf {o}}\), \(\mathit{size}(C) = \sum_{\mathbf {o} \in\mathcal{D}} \varGamma _{C,\mathbf {o}}\);

- Feature-based (measure \(F1_{f}\)): \(\mathit{overlap}(C',C'') = \sum_{f \in \mathcal{F}} \varDelta _{C',f} \varDelta _{C'', f}\), \(\mathit{size}(C) = \sum_{f \in \mathcal{F}} \varDelta _{C, f}\);

- Object & feature-based (measure \(F1_{\mathit{of}}\)): \(\mathit{overlap}(C',C'') = ( \sum _{\mathbf {o} \in\mathcal{D}} \varGamma _{C',\mathbf {o}} \varGamma _{C'', \mathbf {o}} )\times (\sum_{f \in\mathcal{F}} \varDelta _{C',f} \varDelta _{C'', f} )\), \(\mathit{size}(C) = ( \sum_{\mathbf {o} \in\mathcal{D}} \varGamma _{C,\mathbf {o}} ) ( \sum_{f \in \mathcal{F}} \varDelta _{C, f} )\).
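The three variants of the overlap(⋅,⋅) and size(⋅) functions listed above translate directly into code, as in the Python sketch below. The way precision and recall are combined in `f1_measure` is an assumption made here for illustration only: each consensus cluster is matched with the reference class that maximizes its F1 value, with precision taken as overlap divided by the size of the consensus cluster and recall as overlap divided by the size of the reference class, and the result is averaged over the consensus clusters. The dictionary-based cluster layout and all function names are likewise hypothetical.

```python
from typing import Dict, List, Sequence

# Hypothetical layout: {"gamma": object weights, "delta": feature weights}.
Cluster = Dict[str, Sequence[float]]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def overlap(c1: Cluster, c2: Cluster, mode: str) -> float:
    """mode is 'o' (object-based), 'f' (feature-based) or 'of' (both)."""
    objs = _dot(c1["gamma"], c2["gamma"])
    feats = _dot(c1["delta"], c2["delta"])
    return {"o": objs, "f": feats, "of": objs * feats}[mode]


def size(c: Cluster, mode: str) -> float:
    objs = sum(c["gamma"])
    feats = sum(c["delta"])
    return {"o": objs, "f": feats, "of": objs * feats}[mode]


def f1_measure(consensus: List[Cluster], reference: List[Cluster],
               mode: str = "of") -> float:
    """ASSUMED aggregation: best-matching reference class per consensus
    cluster, averaged over the consensus clusters."""
    total = 0.0
    for c in consensus:
        best = 0.0
        for ref in reference:
            ov = overlap(c, ref, mode)
            if ov > 0.0:
                p = ov / size(c, mode)      # precision (assumed form)
                r = ov / size(ref, mode)    # recall (assumed form)
                best = max(best, 2.0 * p * r / (p + r))
        total += best
    return total / len(consensus)
```

With hard object assignments and unweighted feature assignments, overlap reduces to the size of an intersection and size to a cardinality, so the sketch coincides with the usual set-based F1 computation in that special case.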

Similarity w.r.t. the projective ensemble solutions (internal evaluation)

Any valid projective consensus clustering \(\mathcal{C}\) should comply with the information available from the input projective ensemble \(\mathcal{E}\). In this respect, we carried out an evaluation stage to measure the average similarity between a projective consensus clustering and the solutions within \(\mathcal{E}\). We define the object & feature-based measure \(\overline{F1}_{\mathit{of}}\) (object-based \(\overline{F1}_{o}\) and feature-based \(\overline{F1}_{f}\) are defined similarly) as follows:

All these measures range in [0,1]; moreover, the larger the values of \(\overline{F1}_{\mathit{of}}\), \(\overline{F1}_{o}\), or \(\overline {F1}_{f}\), the larger the similarity between the projective consensus clustering \(\mathcal{C}\) and the solutions within the projective ensemble, and hence the better the quality of \(\mathcal{C}\).

5.1.4 Parameter setting

To set the parameters α and β of the proposed E-CB-PCE, we adopted a leave-one-dataset-out approach: for each dataset, the performance of E-CB-PCE on the other datasets was assessed for different values of the parameter(s), and the value(s) that achieved the maximum \(F1_{\mathit{of}}\) were then used to obtain a projective clustering solution for the left-out dataset. In general, we observed that the settings were scarcely influenced by any specific dataset, which indicates that a relatively easy setup can be performed on new datasets for which a reference classification or other a-priori knowledge is not available. In particular, the best-performance scores were mostly reached by setting both α and β to 2. For the competing methods, we set the parameters as suggested in their respective papers.
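The leave-one-dataset-out selection can be sketched in Python as follows. The sketch assumes that the \(F1_{\mathit{of}}\) scores have already been computed for every dataset and every candidate parameter setting, and that the scores of the remaining datasets are aggregated by their mean; this aggregation rule, the function name, and the dictionary layout are assumptions made here, since the text does not specify them. At least two datasets are required.

```python
from typing import Dict, Hashable


def leave_one_dataset_out(scores: Dict[str, Dict[Hashable, float]]
                          ) -> Dict[str, Hashable]:
    """scores[dataset][setting] holds the F1_of score of one parameter
    setting on one dataset; the result maps each left-out dataset to the
    setting with the best mean score on all the other datasets."""
    chosen = {}
    for held_out in scores:
        others = [d for d in scores if d != held_out]
        settings = list(scores[others[0]])
        chosen[held_out] = max(
            settings,
            key=lambda s: sum(scores[d][s] for d in others) / len(others),
        )
    return chosen
```

A setting could be, for instance, a pair `(alpha, beta)`; the dataset labels used to index `scores` are arbitrary.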

5.2 Results

Accuracy

Tables 3, 4, 5 give the results of the external evaluation w.r.t. the reference classification (assessment criteria \(F1_{\mathit{of}}\), \(F1_{o}\), and \(F1_{f}\), respectively), and Tables 6, 7, 8 give the results of the internal evaluation w.r.t. the projective ensemble solutions (assessment criteria \(\overline{F1}_{\mathit{of}}\), \(\overline {F1}_{o}\), and \(\overline{F1}_{f}\), respectively). All algorithms involved in the comparison are nondeterministic, thus all tables contain average results over 50 different runs along with the corresponding standard deviations (in parentheses). Moreover, to improve the readability of the results, for each competitor and assessment criterion, we summarize the average gain of E-CB-PCE w.r.t. the competing method in Table 9.

As observed in the summary reported in Table 9, E-CB-PCE achieved better average accuracy performance w.r.t. both MOEA-PCE and CB-PCE, thus showing that the most recent findings of this work incorporated into the proposed E-CB-PCE actually give the expected outcome. E-CB-PCE was more accurate than both MOEA-PCE and CB-PCE on 16 out of 22 datasets on average, while achieving average gains up to 0.147 (\(\overline{F1}_{\mathit{of}}\) assessment criterion) and 0.078 (\(F1_{f}\) assessment criterion) w.r.t. MOEA-PCE and CB-PCE, respectively.

Larger improvements were produced by E-CB-PCE w.r.t. the early single-objective PCE methods, i.e., EM-PCE, E-EM-PCE, and E-2S-PCE. The average gains achieved by E-CB-PCE w.r.t. EM-PCE, E-EM-PCE, and E-2S-PCE reported in Table 9 were in general larger than those observed w.r.t. the remaining competing methods MOEA-PCE and CB-PCE.

Looking at the standard deviations reported in Tables 3–8, it can be observed that the proposed E-CB-PCE was quite insensitive to its random component. The standard deviations were on the order of \(10^{-3}\) in most cases, and on the order of \(10^{-2}\) in the remaining cases.

Efficiency

Table 10 shows the runtimes (in milliseconds) of the various algorithms involved in the comparison. As expected, the proposed E-CB-PCE was slower than EM-PCE on most datasets, while clearly outperforming MOEA-PCE. The runtimes of E-CB-PCE were one or two orders of magnitude smaller than those of MOEA-PCE on average, and up to four orders of magnitude smaller on Isolet. MOEA-PCE was more efficient than E-CB-PCE on only one dataset (Glass), and even there the runtimes of the two methods remained of the same order of magnitude.

Compared to CB-PCE, the proposed E-CB-PCE was faster on 11 datasets: one order of magnitude faster on 3 of them (i.e., Multiple-Features, Waveform, Amazon), and two orders of magnitude faster on 4 of them (i.e., Isolet, Gisette, p53-Mutants, Arcene). In general, we observed that the performance of E-CB-PCE mainly depended on the number of iterations needed for Function 2 to converge. In particular, due to the nature of the local-search moves performed at each iteration, the runtime of Function 2 could offset the performance gain achieved by E-CB-PCE w.r.t. CB-PCE in the other steps of the heuristic. However, as observed in the measurements reported, this mostly affected the smaller datasets.

6 Conclusion

In our previous work (Gullo et al. 2009) we introduced a framework in which projective clustering and clustering ensembles are addressed simultaneously. This resulted in the formulation of a new problem called projective clustering ensembles (PCE). Since the original formulation, research efforts have been made to design a single-objective function that keeps the object-based and the feature-based cluster representations joined together, and at the same time facilitates the adaptation of a conventional clustering ensemble approach to the PCE problem. In this paper, we presented the latest advance of PCE by proposing a metacluster-based formulation and related heuristics, which are theoretically and experimentally proven to best fit the PCE problem.

We expect that alternative approaches to the PCE problem will be developed in the next few years. One general direction we envision is moving the burden of the computation from the clustering side to a proper representation model. In this respect, we argue that an approach worth exploring is tensorial models and related tensor decomposition methods (Kolda and Bader 2009). In a nutshell, a tensor model (e.g., a third-order tensor) can provide an integrated representation of the relevant dimensions in the input ensemble (i.e., the objects, the features, and the clusters); in addition, during the tensor decomposition, a consensus clustering, or even multiple consensus clusterings, can be induced in a straightforward manner. Interestingly, the ability of some existing tensor decomposition methods to generate an unfolding of the tensor in which the correlations among all dimensions (i.e., aspects in the ensemble) are preserved, might play an important role in establishing natural mappings between the clusters in the input ensemble and the clusters of the consensus clustering. Moreover, the common problem due to the unavailability of feature relevance values could be relaxed in a tensor modeling, thus enabling a new generation of clustering ensemble methods.

Notes

Vectorial notation here denotes row vectors.

The meaning of the colors here is different from Fig. 1, where different colors and gradients refer to the distinct parts of the two-fold projective cluster representation, i.e., the feature-to-cluster assignment and the object-to-cluster assignment, respectively. Here, instead, we focus on the unified view of the representation provided by the projective matrix.

An alternative way of obtaining \(\varGamma ^{*}_{\mathcal{M}, \mathbf {o}} \in[0,1]\) is to employ regularization terms (Li and Mukaidono 1999).

In Table 1, t denotes the population size for the genetic algorithm at the basis of MOEA-PCE.

References

Achtert, E., Böhm, C., Kriegel, H., Kröger, P., Müller-Gorman, I., & Zimek, A. (2006). Finding hierarchies of subspace clusters. In Proc. European conf. on principles and practice of knowledge discovery in databases (PKDD) (pp. 446–453).

Achtert, E., Böhm, C., Kriegel, H., Kröger, P., Müller-Gorman, I., & Zimek, A. (2007). Detection and visualization of subspace cluster hierarchies. In Proc. int. conf. on database systems for advanced applications (DASFAA) (pp. 152–163).

Aggarwal, C. C., Procopiuc, C. M., Wolf, J. L., Yu, P. S., & Park, J. S. (1999). Fast algorithms for projected clustering. In Proc. ACM SIGMOD int. conf. on management of data (pp. 61–72).

Agrawal, R., Gehrke, J., Gunopulos, D., & Raghavan, P. (1998). Automatic subspace clustering of high dimensional data for data mining applications. In Proc. ACM SIGMOD int. conf. on management of data (pp. 94–105).

Asuncion, A., & Newman, D. (2010). UCI Machine Learning Repository. http://archive.ics.uci.edu/ml/.

Beyer, K. S., Goldstein, J., Ramakrishnan, R., & Shaft, U. (1999). When is “Nearest neighbor” meaningful? In Proc. int. conf. on database theory (ICDT) (pp. 217–235).

Böhm, C., Kailing, K., Kriegel, H. P., & Kröger, P. (2004). Density connected clustering with local subspace preferences. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 27–34).

Boulis, C., & Ostendorf, M. (2004). Combining multiple clustering systems. In Proc. European conf. on principles and practice of knowledge discovery in databases (PKDD) (pp. 63–74).

Bradley, P. S., & Fayyad, U. M. (1998). Refining initial points for K-means clustering. In Proc. int. conf. on machine learning (ICML) (pp. 91–99).

Chen, L., Jiang, Q., & Wang, S. (2008). A probability model for projective clustering on high dimensional data. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 755–760).

Chierichetti, F., Kumar, R., Pandey, S., & Vassilvitskii, S. (2010). Finding the Jaccard median. In Proc. ACM-SIAM symposium on discrete algorithms (SODA) (pp. 293–311).

Domeniconi, C., & Al-Razgan, M. (2009). Weighted cluster ensembles: methods and analysis. ACM Transactions on Knowledge Discovery from Data (TKDD), 2(4), 17.

Domeniconi, C., Gunopulos, D., Ma, S., Yan, B., Al-Razgan, M., & Papadopoulos, D. (2007). Locally adaptive metrics for clustering high dimensional data. Data Mining and Knowledge Discovery, 14(1), 63–97.

Gan, G., Ma, C., & Wu, J. (2007). Data clustering: theory, algorithms, and applications. ASA-SIAM series on statistics and applied probability.

Ghosh, J., & Acharya, A. (2011). Cluster ensembles. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 1(4), 305–315.

Gionis, A., Mannila, H., & Tsaparas, P. (2007). Clustering aggregation. ACM Transactions on Knowledge Discovery from Data (TKDD), 1(1), 4.

Gullo, F., Domeniconi, C., & Tagarelli, A. (2009). Projective clustering ensembles. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 794–799).

Gullo, F., Domeniconi, C., & Tagarelli, A. (2010). Enhancing single-objective projective clustering ensembles. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 833–838).

Gullo, F., Domeniconi, C., & Tagarelli, A. (2011). Advancing data clustering via projective clustering ensembles. In Proc. ACM SIGMOD int. conf. on management of data (pp. 733–744).

Gullo, F., Domeniconi, C., & Tagarelli, A. (2013). Projective clustering ensembles. Data Mining and Knowledge Discovery (DAMI), 26(3), 452–511.

Hinneburg, A., Aggarwal, C. C., & Keim, D. A. (2000). What is the nearest neighbor in high dimensional spaces? In Proc. int. conf. on very large data bases (VLDB) (pp. 506–515).

Jain, A., & Dubes, R. (1988). Algorithms for clustering data. New York: Prentice Hall.

Keogh, E., Xi, X., Wei, L., & Ratanamahatana, C. A. (2003). The UCR time series classification/clustering page. http://www.cs.ucr.edu/~eamonn/time_series_data/.

Kolda, T. G., & Bader, B. W. (2009). Tensor decompositions and applications. SIAM Review, 51, 455–500.

Kriegel, H., Kröger, P., Renz, M., & Wurst, S. (2005). A generic framework for efficient subspace clustering of high-dimensional data. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 250–257).

Kriegel, H., Kröger, P., & Zimek, A. (2009). Clustering high-dimensional data: a survey on subspace clustering, pattern-based clustering, and correlation clustering. ACM Transactions on Knowledge Discovery from Data (TKDD), 3(1), 1–58.

Li, R. P., & Mukaidono, M. (1999). Gaussian clustering method based on maximum-fuzzy-entropy interpretation. Fuzzy Sets and Systems, 102(2), 253–258.

Liu, B., Xia, Y., & Yu, P. S. (2000). Clustering through decision tree construction. In Proc. int. conf. on information and knowledge management (CIKM) (pp. 20–29).

Moise, G., Sander, J., & Ester, M. (2008). Robust projected clustering. Knowledge and Information Systems, 14(3), 273–298.

Moise, G., Zimek, A., Kröger, P., Kriegel, H. P., & Sander, J. (2009). Subspace and projected clustering: experimental evaluation and analysis. Knowledge and Information Systems (KAIS), 21(3), 299–326.

Müller, E., Günnemann, S., Assent, I., & Seidl, T. (2009). Evaluating clustering in subspace projections of high dimensional data. Proceedings of the VLDB Endowment (PVLDB), 2(1), 1270–1281. http://dme.rwth-aachen.de/en/OpenSubspace/evaluation.

Ng, E. K. K., Fu, A. W. C., & Wong, R. C. W. (2005). Projective clustering by histograms. IEEE Transactions on Knowledge and Data Engineering (TKDE), 17(3), 369–383.

Nguyen, N., & Caruana, R. (2007). Consensus clustering. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 607–612).

Parsons, L., Haque, E., & Liu, H. (2004). Subspace clustering for high dimensional data: a review. SIGKDD Explorations, 6(1), 90–105.

Procopiuc, C. M., Jones, M., Agarwal, P. K., & Murali, T. M. (2002). A Monte Carlo algorithm for fast projective clustering. In Proc. ACM SIGMOD int. conf. on management of data (pp. 418–427).

van Rijsbergen, C. (1979). Information retrieval. Stoneham: Butterworths.

Sequeira, K., & Zaki, M. (2004). SCHISM: a new approach for interesting subspace mining. In Proc. IEEE int. conf. on data mining (ICDM) (pp. 186–193).

Strehl, A., & Ghosh, J. (2002). Cluster ensembles—a knowledge reuse framework for combining multiple partitions. Journal of Machine Learning Research, 3, 583–617.

Strehl, A., Ghosh, J., & Mooney, R. (2000). Impact of similarity measures on web-page clustering. In Proc. of the AAAI workshop on artificial intelligence for web search (pp. 58–64).

Tomasev, N., Radovanovic, M., Mladenic, D., & Ivanovic, M. (2011). The role of hubness in clustering high-dimensional data. In Proc. Pacific-Asia conf. on advances in knowledge discovery and data mining (PAKDD) (pp. 183–195).

Topchy, A. P., Jain, A. K., & Punch, W. F. (2005). Clustering ensembles: models of consensus and weak partitions. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 27(12), 1866–1881.

Woo, K., Lee, J., Kim, M., & Lee, Y. (2004). FINDIT: a fast and intelligent subspace clustering algorithm using dimension voting. Information and Software Technology, 46(4), 255–271.

Yip, K. Y., Cheung, D. W., & Ng, M. K. H. (2004). A practical projected clustering algorithm. IEEE Transactions on Knowledge and Data Engineering (TKDE), 16(11), 1387–1397.

Yip, K. Y., Cheung, D. W., & Ng, M. K. (2005). On discovery of extremely low-dimensional clusters using semi-supervised projected clustering. In Proc. IEEE int. conf. on data engineering (ICDE) (pp. 329–340).

Yiu, M. L., & Mamoulis, N. (2005). Iterative projected clustering by subspace mining. IEEE Transactions on Knowledge and Data Engineering (TKDE), 17(2), 176–189.

Additional information

Editors: Emmanuel Müller, Ira Assent, Stephan Günnemann, Thomas Seidl, Jennifer Dy.

Appendix: Proofs

A.1 Proofs of Sect. 2

Proposition 1

For any two projective clusters \(C\), \(C'\) it holds that \(X_{C} = X_{C'}\) if and only if \(\varGamma_{C} = \varGamma_{C'}\) and \(\varDelta_{C} = \varDelta_{C'}\).

Proof

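Let us prove both directions of the implication. Proving \(X_{C} = X_{C'} \Leftarrow \varGamma_{C} = \varGamma_{C'} \wedge \varDelta_{C} = \varDelta_{C'}\) is immediate: by definition, \(X_{C} = \varGamma_{C}^{\mathrm{T}} \varDelta_{C}\) and \(X_{C'} = \varGamma_{C'}^{\mathrm{T}} \varDelta_{C'}\). Regarding \(X_{C} = X_{C'} \Rightarrow \varGamma_{C} = \varGamma_{C'} \wedge \varDelta_{C} = \varDelta_{C'}\), we first note that:

\[
X_{C} = X_{C'} \ \Leftrightarrow\ \varGamma_{C,\mathbf{o}_{i}}\,\varDelta_{C,j} = \varGamma_{C',\mathbf{o}_{i}}\,\varDelta_{C',j}, \quad \forall i \in [1..|\mathcal{D}|],\ \forall j \in [1..|\mathcal{F}|]. \tag{28}
\]

By summing the statements in (28) over j, we obtain:

\[
\varGamma_{C,\mathbf{o}_{i}} \sum_{j=1}^{|\mathcal{F}|} \varDelta_{C,j} = \varGamma_{C',\mathbf{o}_{i}} \sum_{j=1}^{|\mathcal{F}|} \varDelta_{C',j}, \quad \forall i,
\]

which implies \(\varGamma _{C,\mathbf {o}_{i}}= \varGamma _{C',\mathbf {o}_{i}}, \forall i\), since \(\sum_{j=1}^{|\mathcal{F}|} \varDelta _{C,j} = \sum_{j=1}^{|\mathcal{F}|}\varDelta _{C',j} = 1\) by definition. Similarly, summing the statements in (28) over i leads to:

\[
\varDelta_{C,j} \sum_{i=1}^{|\mathcal{D}|} \varGamma_{C,\mathbf{o}_{i}} = \varDelta_{C',j} \sum_{i=1}^{|\mathcal{D}|} \varGamma_{C',\mathbf{o}_{i}}, \ \forall j \quad \Rightarrow \quad \varDelta_{C,j} = \varDelta_{C',j}, \ \forall j,
\]

where the last derivation holds since the result \(\varGamma _{C,\mathbf {o}_{i}}= \varGamma _{C',\mathbf {o}_{i}}, \forall i\), shown above clearly implies \(\sum_{i=1}^{|\mathcal{D}|} \varGamma _{C,\mathbf {o}_{i}} = \sum_{i=1}^{|\mathcal{D}|}\varGamma _{C',\mathbf {o}_{i}}\). □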
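The summation argument used in this proof can be checked numerically on a small example. The sketch below is illustrative only: it assumes that the projective matrix has entries \(X_{C}(i,j) = \varGamma_{C,\mathbf{o}_{i}} \varDelta_{C,j}\), that \(\varDelta_{C}\) sums to one, and that at least one \(\varGamma_{C,\mathbf{o}_{i}}\) is nonzero; the function names and the toy vectors are hypothetical.

```python
def projective_matrix(gamma, delta):
    """Build X with X[i][j] = gamma[i] * delta[j] (assumed definition)."""
    return [[g * d for d in delta] for g in gamma]


def recover(X):
    """Recover (gamma, delta) from X.

    Row sums give gamma because delta sums to one; column sums divided by
    the grand total give delta (requires a nonzero grand total).
    """
    gamma = [sum(row) for row in X]
    total = sum(gamma)
    delta = [sum(row[j] for row in X) / total for j in range(len(X[0]))]
    return gamma, delta


# Toy cluster: three objects, two features; delta sums to one.
gamma = [1.0, 0.5, 0.0]
delta = [0.25, 0.75]

X = projective_matrix(gamma, delta)
gamma_rec, delta_rec = recover(X)
print(gamma_rec, delta_rec)
```

Since the pair recovered from the matrix coincides with the pair used to build it, two clusters with equal projective matrices must have equal representations, which is the nontrivial direction of the proposition.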

Proposition 2

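For any projective cluster \(C\), its object-based representation \(\varGamma_{C}\) and feature-based representation \(\varDelta_{C}\) can uniquely be derived from its projective matrix \(X_{C}\) as follows:

\[
\varGamma_{C,\mathbf{o}_{i}} = \sum_{j=1}^{|\mathcal{F}|} X_{C}(i,j), \ \forall i, \qquad \varDelta_{C,j} = \frac{\sum_{i=1}^{|\mathcal{D}|} X_{C}(i,j)}{\sum_{i=1}^{|\mathcal{D}|} \sum_{j'=1}^{|\mathcal{F}|} X_{C}(i,j')}, \ \forall j,
\]

where \(X_{C}(i,j)\) denotes the element \((i,j)\) of the matrix \(X_{C}\).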

Proof

The first statement holds since: