Abstract

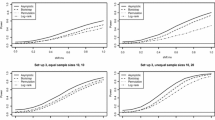

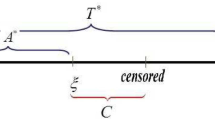

In the analysis of censored survival data, simultaneous confidence bands are useful devices to help determine the efficacy of a treatment over a control. Semiparametric confidence bands are developed for the difference of two survival curves using empirical likelihood and compared with the nonparametric counterpart. Simulation studies are presented to show that the proposed semiparametric approach is superior, with the new confidence bands giving empirical coverage closer to the nominal level. Further comparisons reveal that the semiparametric confidence bands are tighter and, hence, more informative. For censoring rates between 10 and 40 %, the semiparametric confidence bands provide a relative reduction in enclosed area amounting to between 2 and 10 % over their nonparametric bands, with increased reduction attained for higher censoring rates. The methods are illustrated using an University of Massachusetts AIDS data set.

Similar content being viewed by others

References

Andersen PK, Borgan O, Gill RD, Keiding N (1993) Statistical models based on counting processes., Springer series in statisticsSpringer, New York

Bhattacharya R, Subramanian S (2014) Two-sample location-scale estimation from semiparametric random censorships model. J Multivar Anal 132:25–38

Claeskens G, Hjort NL (2008) Model selection and model averaging., Cambridge series in statistical and probabilistic mathematicsCambridge University Press, Cambridge

Collett D (2002) Modelling binary data. CRC Press, Boca Raton

Cox DR, Snell EJ (1989) Analysis of binary data. Chapman and Hall, London

Dabrowska DM, Doksum KA, Song J (1989) Graphical comparison of cumulative hazards for two populations. Biometrika 76:763–773

Dikta G (1998) On semiparametric random censorship models. J Stat Plan Inference 66:253–279

Dikta G, Kvesic M, Schmidt C (2006) Bootstrap approximations in model checks for binary data. J Am Stat Assoc 101:521–530

Dikta G (2014) Asymptotically efficient estimation under semi-parametric random censorship models. J Multivar Anal 124:10–24

Einmahl JHJ, McKeague IW (1999) Confidence tubes for multiple quantile plots via empirical likelihood. Ann Stat 27:1348–1367

Hjort NL, McKeague IW, Van Keilegom I (2009) Extending the scope of empirical likelihood. Ann Stat 37:1079–1111

Hollander M, McKeague IW, Yang J (1997) Likelihood ratio-based confidence bands for survival functions. J Am Stat Assoc 92:215–226

Hosmer DW, Lemeshow S, May S (2008) Applied survival analysis: regression modeling of time to event data. Wiley, New York

Kalbfleisch J, Prentice R (2002) The statistical analysis of failure time data. Wiley, New York

Lee J, Hyun S (2011) Confidence bands for the difference of two survival functions under the additive risk model. J Appl Stat 38:785–797

Li G (1995) On nonparametric likelihood ratio estimation of survival probabilities for censored data. Stat Probab Lett 25:95–104

Lin DY, Fleming TR, Wei LJ (1994) Confidence bands for survival curves under the proportional hazards model. Biometrika 81:73–81

McKeague IW, Zhao Y (2002) Simultaneous confidence bands for ratios of survival functions via empirical likelihood. Stat Probab Lett 60:405–415

McKeague IW, Zhao Y (2005) Comparing distribution functions via empirical likelihood. Int J Biostat 1(1):Article 5

Mondal S, Subramanian S (2014) Model assisted Cox regression. J Multivar Anal 123:281–303

Mondal S, Subramanian S (2015) Simultaneous confidence bands for Cox regression from semiparametric random censorship. Lifetime Data Anal. doi:10.1007/s10985-015-9323-2

Owen A (1988) Empirical likelihood ratio confidence intervals for a single functional. Biometrika 75:237–249

Owen A (1991) Empirical likelihood for linear models. Ann Stat 19:1725–1747

Parzen MI, Wei LJ, Ying Z (1997) Simultaneous confidence intervals for the difference of two survival functions. Scand J Stat 24:309–314

Qin G, Tsao M (2003) Empirical likelihood inference for median regression models for censored survival data. J Multivar Anal 85:416–430

Shen J, He S (2006) Empirical likelihood for the difference of two survival functions under right censorship. Stat Prob Lett 76:169–181

Subramanian S (2004) The missing censoring-indicator model of random censorship. In: Balakrishnan N, Rao CR (eds) Advances in survival analysis, handbook of statistics, vol 23. Elsevier, Amsterdam, pp 123–141

Subramanian S, Dikta G (2009) Inverse censoring weighted median regression. Stat Methodol 6:594–603

Subramanian S (2012) Model-based likelihood confidence intervals for survival functions. Stat Probab Lett 82:626–635

Subramanian S, Zhang P (2013) Model-based confidence bands for survival functions. J Stat Plan Inference 143:1166–1185

Thomas DR, Grunkemeier GL (1975) Confidence interval estimation of survival probabilities for censored data. J Am Stat Assoc 70:865–871

Yang S (2013) Semiparametric inference on the absolute risk reduction and the restricted mean survival difference. Lifetime Data Anal 19:219–241

Yang S, Prentice RL (2005) Semiparametric analysis of short-term and long-term hazard ratios with two sample survival data. Biometrika 92:1–17

Yuan M (2005) Semiparametric censorship model with covariates. Test 14:489–514

Zhang M-J, Klein JP (2001) Confidence bands for the difference of two survival curves under proportional hazards model. Lifetime Data Anal 7:243–254

Acknowledgments

We express our sincere thanks to an Associate Editor and a reviewer whose comments improved the overall quality of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Proof of Eq. (2.13)

Then, suppressing the dependence on t, we can write \(R = \tilde{R}_1 + \tilde{R}_2 + \tilde{R}_3 +\tilde{R}_4\), where

However, writing \(\tilde{R}_1 = R_1 - \breve{R}_1\), where

we see that

Next we write \(\tilde{R}_1 + \tilde{R}_2 \equiv R_1 + R_2\), where \(R_2=\tilde{R}_2 - \breve{R}_1\). Then, after some algebra, we obtain

Applying exactly the same technique, we have that \(\tilde{R}_3+\tilde{R}_4\equiv R_3+R_4\), where

Therefore, we have shown that \(R(t)=R_1+R_2+R_3+R_4\). It now follows that

We prove a number of lemmas. Recall that \(\tilde{\kappa }_i(t)=\sum _{j=1}^{n_i}I(Z_{ij}\le t),i=1,2\). Define

\(\square \)

Lemma 1

The process \(n_i^{1/2}(\hat{\zeta }_i-F_i)\) is asymptotically equivalent to \(S_i\cdot n_i^{1/2}(\hat{\Lambda }_i-\Lambda _i)\).

Proof

Add and subtract \(\int _0^t S_i(s) d\hat{\Lambda }_i(s)\) and apply the Duhamel equation to get

Interchanging the order of integration, we get, uniformly for \(t\in [0,t_i]\) such that \(y_i(t_i)>0\),

Applying Lenglart’s inequality, it follows that

\(\square \)

Noting that \(\int _0^t S_i^2(s) d\Lambda _i(s)=(1-S_i^2(t))/2\), next define

Lemma 2

The process \(n_i^{1/2}\left( \hat{\zeta }_i^{(2)}-\frac{1-S_i^2}{2}\right) \) is asymptotically equivalent to \(S_i^2n_i^{1/2} (\hat{\Lambda }_i-\Lambda _i)\).

Proof

Add and subtract \(\int _0^t S_i^2(s) d\hat{\Lambda }_i(s)\) and apply the Duhamel equation to get

Interchanging the order of integration, we get, uniformly for \(t\in [0,t_i]\) such that \(y_i(t_i)>0\),

Note that the integrand above equals \(S_i^2(u) - S_i^2(t)\). It follows that

\(\square \)

Recall that \(\Vert h\Vert _{\tau _1}^{\tau _2} = \sup _{t\in [\tau _1,\tau _2]}|h(t)|\). For our next result, define

Lemma 3

For \(i=1,2\), we have \(\Vert \hat{\zeta }_i-\tilde{\zeta }_i\Vert _{\tau _1}^{\tau _2} = o_p(n^{-1/2})\).

Proof

Following McKeague and Zhao (2005), see their Eq. (A.6), we can show that

Under continuity of \(H_i\), the first quantity on the right side of Eq. (5.4) is bounded above by

From Eq. (5.1), the second quantity on the right side of Eq. (5.4) is bounded above by \(\hat{\zeta }_i(\tau _2)\ {\buildrel \mathrm{a.s.} \over \longrightarrow }\ F_i(\tau _2)\), see also Theorem 2.4 of Dikta (1998) and Lemma 1. Thus, \(\hat{\zeta }_i(\tau _2)=O_p(1)\) which, combined with Eqs. (5.4) and (5.5), completes the proof. \(\square \)

Lemma 4

The Lagrange multiplier, \(\hat{\lambda }(t)\equiv \hat{\lambda }\), solving Eq. (2.12), satisfies

Proof

For our semiparametric setting, we adapt the approach of McKeague and Zhao (2005), who follow Li (1995). From Eq. (2.12), write \(\alpha (t)=I_1(t)+I_2(t)\), where

First assume that \(\hat{\lambda }> 0\). Since \(\mathrm{log}(1-x) + x\) is decreasing for \(x \in (0,1)\), we have that

It follows that

Since, for \(x>0\), the inequality \(n_1/(n_1+x)\le n_2/(n_2+x)\) holds whenever \(n_1\le n_2\), we have the following lower bound for the first term on the right hand side of Eq. (5.8):

Note that the second term on the right hand side of Eq. (5.8) equals \(-\tilde{\zeta }_1(t)\), see Eq. (5.3). Using the fact that \(-\mathrm{log} (1-x) \ge x\) when \(0 \le x < 1\), we obtain

Since \(n_1/(n_1-x)\ge n_2/(n_2-x)\), when \(x>0\) and \(n_1\le n_2\), we obtain the lower bound

From Eqs. (5.8)–(5.10), we obtain

From the right hand side of Eq. (5.11), combining the first and third terms gives a nonnegative number. Since \(1/(1 - x) \ge 1 + x\) when \(x < 1\), the fourth term is bounded below by

provided that \(|\hat{\lambda }|\hat{S}_2(T_{2j})/n_2<1\) almost surely. To show this, assume no ties and note from Eq. (2.14) that \(0<\hat{\lambda }<\min _{j:T_{2j}\le t}\{(r_{2j}-d_{2j}\hat{m}_{2j})/\hat{S}_2(T_{2j})\}\). Also, \(\bar{Y}_2(t)/\hat{S}_2(t){\buildrel \mathrm{a.s.} \over \longrightarrow }1-G_2(t)<1\) uniformly over \([\tau _1,\tau _2]\). For large enough \(n_2\), \(\epsilon \) sufficiently small, and some \(T_{2l}\ne 0\), we have

almost surely. We therefore obtain a lower bound for \(\alpha (t)\) given by

from which Eq. (5.6) follows. Proof of Eq. (5.7) can be shown by analogous techniques. \(\square \)

Lemma 5

The Lagrange multiplier, \(\hat{\lambda }(t)\equiv \hat{\lambda }\), solving Eq. (2.12), satisfies \(\Vert \hat{\lambda }\Vert _{\tau _1}^{\tau _2} = O_p(n^{1/2})\).

Proof

That the denominators of Eqs. (5.6) and (5.7) are each \(O_p(1)\), uniformly for \(t\in [\tau _1,\tau _2]\), follows from \(\Vert \hat{\Lambda }_i-\Lambda _i\Vert _0^{\tau _2}=o(1)\) almost surely (cf. Theorem 2.4 of Dikta 1998) and Lemma 2. It remains to show that the numerators of Eqs. (5.6) and (5.7) are each \(O_p(n^{-1/2})\), uniformly for \(t\in [\tau _1,\tau _2]\). By applying Lemma 3, it suffices to show that \(\alpha (t) + \hat{\zeta }_1(t) - \hat{\zeta }_2(t) = O_p(n^{-1/2})\). Let \(n_i/n\rightarrow p_i\) as \(n\rightarrow \infty \). We then have by Lemma 1 and results from Sect. 2.1 that

where W is the zero-mean Gaussian process with covariance function given by Eq. (2.17). \(\square \)

1.1 Proof of Theorem 1

From Eq. (2.12), we can write \(\alpha (t)=f_1(\hat{\lambda })-f_2(-\hat{\lambda })\), where

Note that \(f_i(0) = - \tilde{\zeta }_i(t)\) [cf. Eq. (5.3)]. Before applying a Taylor’s expansion, we note that

Recall that \(\gamma _i(t)\) is defined by Eq. (2.15). Write \(n_if_i^{'}(0)=\tilde{\gamma }_i(t)\), so that

For \(i=1,2\), let \(|\hat{\xi }_i|\le |\hat{\lambda }|\). Taylor’s expansion about 0 yields

Applying the Glivenko–Cantelli lemma to \(r_{ij}\) and Lemma 5, we have \(\Vert f_i''(\hat{\xi }_i)\Vert _{\tau _1}^{\tau _2}=O_p(n_i^{-2})\). Therefore, by Lemma 5, it follows that \(\Vert f^{''}_i(\hat{\xi }_i)\hat{\lambda }^2\Vert _{\tau _1}^{\tau _2}=O_p(n_i^{-1})=O_p(n^{-1})\). Furthermore, \(\tilde{\gamma }_i(t)\) is uniformly consistent for \(\gamma _i(t)\) over \([0,\tau _2]\). It follows from Eq. (5.14) and Eq. (2.16) that

Solving for \(\hat{\lambda }\), we obtain

To complete the Proof of Theorem 1, consider Eq. (2.13). Using Taylor expansions of \(\log (1+x)\) and \(\log (1-x)\) about 0, the leading term of \(-2R(t)\) is a product of \(\hat{\lambda }^2\) and a random scaling factor expressed as a double sum, and equals

Each single sum can be expressed as an integral as in the Proof of Lemma 1. Applying Eq. (2.6.10) of Andersen et al. (1993), it follows that the double sum equals \(\sigma _\mathrm{d}^2(t)/n +o_p(1)\), uniformly over \([0, \tau _2]\). Therefore, applying Eq. (5.15), the leading term of \(-2R(t)\) equals

The other terms of \(-2R(t)\) are proportional to

each of which is \(o_p(1)\), uniformly for \(t\in [0,\tau _2]\). For example, when \(l=3\), we have

which, uniformly for \(t\in [0,\tau _2]\), equals

Now apply Lemma 3 and Eq. (5.12) to complete the Proof of Theorem 1. \(\square \)

1.2 Large sample justification of the multiplier bootstrap

Write \(\mathbb {\hat{H}}^*_i(t) = L^*_{n_i, 1}(t) + L^*_{n_i, 2}(t)\), where \(L^*_{n_i, 1}(t)\) and \(L^*_{n_i, 2}(t)\) are defined by Eqs. (2.20) and (2.21). To show that \({\mathbb {W}}^*\) defined by Eq. (2.19) has the limit distribution as that of \(W/\sigma _\mathrm{d}\), it suffices to show that \({\hat{\mathbb {H}}}^*_i(\cdot )\) has the same weak limit as \(\hat{\mathbb {H}}_i(t)=L_{n_i,1}(t)+L_{n_i,2}(t)+o_p(1)\). Let \({\mathbb {P}}_{n_i}\), \({\mathbb {E}}_{n_i}\), \(\mathrm{Cov}_{n_i}\), and \(\mathrm{Var}_{n_i}\) be the probability measure, expectation, covariance, and variance with respect to the bootstrap, that is, conditioned on the sample \(\{(Z_{ij}, \delta _{ij}), j = 1, \ldots , n_i\}\). To show that \(\hat{\mathbb {H}}_i^*(t)\) has the limiting covariance structure given by Eq. (2.1), note that

Strong consistency of \(\hat{\varvec{\theta }}_i\), assumption \(A_6\) of Dikta (1998) and the arguments in the Proof of Theorem 2.4 of Dikta (1998) imply that \(\Vert m_i(\cdot , \varvec{\hat{\theta }}_i)-m_i(\cdot , \varvec{\theta }_i)\Vert _{0}^{\tau _2}=o(1)\) almost surely. Likewise, \(\hat{\alpha }(\cdot ,\cdot )\) is strongly uniformly consistent over \([0,\tau _2]\times [0,\tau _2]\). Finally, \(\Vert \bar{Y}_i-y_i\Vert _0^{\tau _2}=o(1)\) almost surely. The first quantity on the right hand side of Eq. (5.17) can be computed to yield

By the strong law of large numbers, for almost all sample sequences \(\{Z_{ij},\delta _{ij}, 1\le j\le n_i\}\), \({\mathbb {E}}_{n_i}(L^*_{n_i,1}(s), L^*_{n_i,2}(t))\) converges to the first term on the right hand side of Eq. (2.1). The second quantity on the right hand side of Eq. (5.17) can be computed to yield

By the strong law of large numbers, for almost all sample sequences \(\{Z_{ij},\delta _{ij}, 1\le j\le n_i\}\), \({\mathbb {E}}_{n_i}(L^*_{n_i,2}(s), L^*_{n_i,2}(t))\) converges to the second term on the right hand side of Eq. (2.1). One of the cross-product moment terms on the right hand side of Eq. (5.17) is given by

with the other term given in an analogous way. The aforementioned arguments, followed by applying iterated conditional expectation with conditioning by \(Z_{i1}\), implies that the two cross-moment terms in Eq. (5.17) are each zero.

To show that \(\hat{\mathbb {H}}^*_i(\cdot )\) converges weakly to a zero-mean Gaussian process we verify Lindeberg’s condition and tightness. We can write \(\mathbb {\hat{H}}^*_i(t) = L^*_{n_i,1}(t) + L^*_{n_i,2}(t) = \sum _{j=1}^{n_i}B_{ij}(t)G_{ij}\), where

Let \(s^2_i = \sum _{j=1}^{n_i} \mathrm{Var}_{n_i}[B_{ij}(t)G_{ij}] = \sum _{j=1}^{n_i} B_{ij}^2(t)\). As in Mondal and Subramanian (2015), it can be shown that for almost all sample sequences \(\{Z_{ij},\delta _{ij}, 1\le j\le n_i\}\), for any \(\eta _i > 0\),

Let \(K \ge 3\). To verify tightness, we follow Mondal and Subramanian (2015) to show that

The sum on the right hand side of inequality (5.19) is given by

which can be shown equal to

Therefore, the left hand side of inequality (5.19) is finite and tightness is verified.

Rights and permissions

About this article

Cite this article

Ahmed, N., Subramanian, S. Semiparametric simultaneous confidence bands for the difference of survival functions. Lifetime Data Anal 22, 504–530 (2016). https://doi.org/10.1007/s10985-015-9348-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10985-015-9348-6