Abstract

Particulate isotopic analysis in nuclear forensics has developed rapidly during the past two decades due to technical advances in determining the isotopic composition of individual particles. This paper introduces basic statistical concepts that can be applied by analysts to understand the importance of statistical adequacy when interpretating particle data. While these basic statistical methods provide a useful point-of-entry to particle data analysis, more sophisticated statistical and modeling approaches are needed to extract maximal information from such datasets in the future.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Processes within the nuclear fuel cycle such as isotope enrichment, fuel reprocessing, and reactor operation result in the generation of actinide bearing particles. While nuclear facilities are engineered with controls to mitigate exposure of both workers and the environment to the release of such particles (e.g., hot cells, glove boxes, ventilation, containment buildings), in practice not every single particle can be realistically contained. Small quantities of particulate can be released during routine operations [1,2,3], as has been demonstrated by particulate studies of soils, air filters, as well as water and river sediment samples surrounding nuclear facilities [3,4,5,6]. In addition, significantly larger quantities of radioactive particulate have been released into the environment by above-ground nuclear testing or following nuclear accidents such as Chornobyl or Windscale [7, 8].

Isotopic and elemental analysis of actinide particles can provide useful information regarding the specific nuclear characteristics of their source, as the signatures recorded within a particle reflect its unique history, possibly providing information on the enrichment, fuel composition, reactor type, or fission processes of the source. Isotopic analysis of actinide particles has developed rapidly during the past several decades due to technical advances that make it possible to determine reliable elemental and isotopic compositions from individual particles [9, 10]. Early isotope measurements were established using fission track analysis for particle identification, coupled with thermal ionization mass spectrometry (TIMS) [3, 11, 12]. This is a time-consuming technique that requires irradiation and micro-sampling of particles and can typically provide analysts with only relatively small datasets on the timescale characteristic of a dedicated analytical campaign. The advent of rapid acquisition techniques such as secondary ionization mass spectrometry (SIMS) and laser ablation–inductively coupled plasma–mass spectrometry (LA-ICP-MS) now permits analysis of many individual particles from a single sample collection or location [10, 13,14,15,16,17,18,19,20].

As with all methodologies, analysts are constantly engaged in optimization efforts to reduce analysis time/costs; increase the size of datasets; enhance sensitivity to analytes-of-interest; mitigate bias resulting from spectral interferences and other analytical artifacts; and improve measurement precision/repeatability while maintaining accuracy [19]. Analytical workflows that apply multiple techniques or analysis modes in succession are of particular interest as they can enable rapid sample throughput by pre-screening for distinct particle subpopulations [15, 21,22,23]. For example, in SIMS analysis, automated particle measurement (APM) capabilities permit quick surveying of an entire sample planchet by ion imaging to simultaneously 1) determine precise particle locations, and 2) estimate both individual particle compositions and the population’s compositional variance. The APM results are then used to drive higher-precision, more time-intensive, conventional microbeam analysis of targeted single particles [22, 24].

A key objective of modern particle studies is to determine the overall distribution and frequency of isotopic compositions within a particle population, since this improves the fidelity with which we can make inferences about its source (Fig. 1). Such population distributions might aid in identifying important minor components in a source composition; detecting contamination signatures; or helping to deconvolute particle populations with a complex history. Here, we define ‘population’ in its statistical sense [25], as the collection of all objects that are of interest. Using this definition, we classify a radionuclide particle population as all particles that are produced from a source. This could be a finite number of particles contained within a glove box, or the effectively infinite number of particles produced during a nuclear accident like Chornobyl. Our aim is to assess some of the properties of the source based on a relatively small sub-set of the particles that have been collected and analyzed (Fig. 1). We term this sub-set of particles the sample [25].

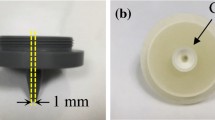

Conceptual illustration of the relationship between the source composition and the population distribution of a sample of particles derived from the source. In this example, the particle source (a fuel pellet) and a sample of particles collected from it should have matching compositions and other material properties. If this is the case, then particle analysis will provide important information about the source of the particles

Not surprisingly, the wide range of analytical techniques and sampling methods employed for particle analysis over the past decades has resulted in a variable number of particles being analyzed and interpreted across different studies. Although some source materials have a complex compositional population, analysts are often tasked with making inferences about the characteristics of a source from a limited number of particle measurements (e.g., n < 20). With what certainty can it be asserted that such “low-n” particle datasets adequately capture the compositional distribution of the source populations? We attempt to address this question by introducing relevant, basic statistical concepts that may be used in the forensic analysis of radionuclide particle populations. Many of the concepts we describe originate from statistical approaches already utilized within Earth Sciences, particularly detrital zircon geochronology studies from the past two decades [26,27,28]. These concepts include recognizing the importance of compositional variation in the source material and the statistical adequacy of sample sizes. To introduce these concepts, we present examples of isotopic measurements using simulated particle data to examine the effect of sampling size on interpretation/comparison of particle samples.

Compositional variation in the particle source and its impact on particle populations

If there is inherent compositional variation in the source that generates a radionuclide particle population, or if the composition of the source changes over time, such compositional variation should be reflected in a particle population derived from said source. Indeed, the efficacy of particle studies rests on the assumption that data obtained from the analysis of a set of particles mirrors the compositional variance of the source material. This presents a potentially significant challenge to nuclear safeguards, particularly the ability to detect signatures of undeclared activities within the already broad variations in composition commonly generated in nuclear reactors, enrichment plants, and reprocessing facilities [29, 30].

For example, a Gas Centrifuge Enrichment Plant (GCEP) may contain particles exhibiting a broad range of uranium isotope compositions [29, 30] as a result of small quantities of process material leaking from different points in the enrichment cascade. Although sampling trials by the IAEA [30] have demonstrated the practical utility of particle samples for assessing declared enrichment levels, interpretation of such enrichment data can be complicated by the presence of legacy particles from past production operations, recycling of reactor material, or possible cross-contamination between enrichments plants. Accurate safeguards characterization of a GCEP’s enrichment activities therefore requires sampling of the entire range of particles occurring within the facility.

Compositional variation is also anticipated in particles generated by nuclear reactor operations. For example, in some reactors, the localized burn-up at the rim of UO2 fuel pellets can be 2–3 times higher than its average [31], resulting from the strong resonance absorption of epithermal neutrons by 238U at the rim of the pellet [32]. This so-called “rim-effect” can result in large radial variation in the isotopic composition of fission products across an individual fuel pellet. On a larger scale, the heterogeneity of the neutron flux spectrum within a nuclear reactor, coupled with neutron leakage at the end fuel assemblies, can result in non-uniform axial-burnup profiles in spent fuels [33, 34]. In pressurized water reactors, this phenomenon is characterized by a near-cosine, axial-shaped flux that depletes fuel more rapidly at the center of a fuel assembly than at its ends [33]. Variations in the geometry of neutron absorbers and shuffling of fuel assemblies during reactor operations can further complicate the burn-up history, resulting in complex three-dimensional isotopic variation in fuel assemblies across the reactor. Similar phenomena come into play in other reactor designs including RBMK, BWR and MAGNOX reactors, resulting in complex compositional variation in spent fuels.

It is therefore conceivable and oft likely that particle populations derived from reactor operations or spent reactor fuels will display broad compositional variation. In such cases, measuring a limited number of particles could significantly bias analytical results with respect to the true average burn-up composition of a reactor, thus leading analysts to spurious conclusions about the facility’s burnup history.

In addition, there are subtler effects to consider that might contribute to complexity in particle populations. For example, the degree to which a particle population mirrors the compositional variation of its source could be dependent on characteristics of the source material and the nature of the release. Released particles might be present in different physio-chemical forms varying in size, structure, and charge properties [9]. These factors will all influence the particulate environmental mobility and their relative propensity for dispersion. Particle populations derived from powders and friable source materials, such as uranium oxide pellets, are likely to have compositional distributions that closely match the variance of the source as these materials are relatively well mixed compared to metals. In solid materials, such as metals, particles will be predominately derived from the material’s surface, and as such may introduce an intrinsic compositional bias, especially in materials that exhibit internal compositional zoning or heterogeneity.

Consequently, there is a crucial need for analysts to consider whether particle datasets constitute a representative sample of the particle population’s source. Such an assessment requires an understanding of the statistical adequacy of the size of the dataset being interpreted.

Particle populations and the importance of sampling size

Figure 2 illustrates some of the problems intrinsic to the isotopic characterization of particle populations when using a sub-set of the total population. Assume Fig. 2A represents a mono-isotopic particle population from a low enrichment uranium fuel source; this population contains an unknown number of particles of uranium with a singular isotopic composition. Characterizing the isotopic signature of this population is simple: a sample comprising one particle can be analyzed for uranium isotopic composition, upon which it can be stated that the isotopic composition of every particle in the source is known with a precision limited only by the sensitivity of the analytical instrument/method. Now consider the particle population shown in Fig. 2B, where the source population consists of bimodal particles with two different isotopic compositions; most particles have one isotopic composition, and a small proportion of particles has a second distinct isotopic composition. Accurately characterizing the signature of this second fuel source requires that the particles sampled and analyzed be representative of the isotopic composition/distribution of the population. In this regard, a third more complex multimodal source population can be envisioned, with particles spanning a larger compositional range and with several more narrowly defined compositional groups (Fig. 2C). In this case the analysis of hundreds of particles would be required to fully characterize the population.

This concept illustrates a challenge faced by analysts of radionuclide particle datasets, as the compositional variation/distribution of the particle source is often unknown with little to no a priori constraints. Moreover, particle sample size can be inherently small, often limited to analysis of tens of particles. While the absence of a particular composition or compositional groups in a dataset can be highly informative to analysts, such “non-observations” might result simply from an inadequate number of analyses and/or from biases in sample processing, particle analysis, and/or data reduction and interpretation. Given these possibilities, with what certainty then can a particle dataset be said to adequately capture the compositional variation/distribution of its source?

To explore the dimension of sample size in particle data analysis, we implemented a Monte Carlo modeling approach to create a series of synthetic datasets intended to simulate uranium particle analyses by large geometry–secondary ion mass spectrometry (LG-SIMS; Fig. 3). In this scenario we focused modeling efforts on a relatively simple case of 10,000 uranium particles with a variable 235U/238U composition. The dataset was generated by first randomly assigning a grain size to each particle within a range typically observed in particles sourced from enrichment plants, 0.5–2.0 µm diameter [5]. Second, the number of 235U/238U compositional modes in the population were specified as well as their modal abundance in the population. The modal abundances were set so that the mode with smallest abundance varied from 1 to 10% of the total population. The compositional modes varied between 235U and 238U ratios of 0.003 and 0.004 with a standard deviation around each modal composition of 2%. These modes were then randomly assigned to each particle in the population. For each of these steps randomization was accomplished using the ‘randperm’ function in MATLAB. This method allows randomization of compositional modes across all particle sizes in the population, although we note the possibility for size–compositional correlation in real-world samples. Using the assigned particle size and composition, a corresponding measurement uncertainty was assigned to each particle to simulate results of LG-SIMS particle analysis. We note that, in reality, it is impossible to fully model the discrete changes in ablation and transmission rates typical of SIMS instrumentation; nonetheless, these model terms represent a good approximation of actual SIMS analytical conditions. Figure 4 illustrates the typical output from a synthetic dataset of 1000 particles graphed as a probability density plot.

We then used the synthetic particle population to assess the effect of sample size by repeatedly modeling a series of sample analyses of between 2 and 200 particles randomly drawn from the full, 10,000-particle synthetic dataset. The resulting compositional distribution was then compared against the model distribution of the synthetic population. The model was set so that the abundances of the smallest component in the population varied from 1 to 10%. At each sample size the compositional distribution was compared for 100 iterations, for a total of 10,000 analyses.

The modeling results are illustrated in Fig. 5, wherein the probability of missing the minor particle component is plotted as a function of the number of particles analyzed (n). This visualization allows us to estimate the number of particle analyses required to have a given probability of detecting a minor component in a population. Not surprisingly, the likelihood of detecting the minor population component diminishes both with decreasing n and decreasing minor component percentage. These results are consistent with previous numerical simulation studies used to assess the probability of missing a minor age component within a detrital zircon population [27, 35, 36]. We believe these same simulation approaches and findings are broadly applicable to radionuclide particle analyses. For example, Dodson et al. [36] expressed the likelihood of missing a component in a population as a function of a standard binominal probability formula;

where the probability (P) that a component in the population is missed is a function of the number of particles that are analyzed (n) for a given proportion of the total number of fractions (f) within a population. This equation has been used previously to argue that at least 60 particles from a sample must be measured in order to reduce the probability of failing to identify a component at a fraction of 1 in 20 (f = 0.05) to P < 5% (Fig. 5) [36]. The results of our modelling closely match those expected from Eq. 1. We note that the requirement to identify the 1 in 20 component fraction previously used in geological investigations may represent a rather extreme case for actinide particle analysis, especially when the analyst has some prior knowledge of the particle source. However, it is not unreasonable for us to expect wide compositional variation in some cases, such as the interpretation of particles from spent fuel sources or enrichment facilities previously outlined, or in complex environmental samples containing a mixture of naturally and anthropogenic-sourced uranium-bearing particulates.

Our modeling data confirm that in cases where a variation in isotopic composition is anticipated, the interpretation of particle data might require a larger sample size than is achievable or was analyzed. We believe this to be a particularly important consideration when the number of radionuclide particles available to be analyzed (n) is extremely low. For example, fission track analysis for particle identification coupled with thermal ionization mass spectrometry (FT-TIMS) is a commonly used particle analysis technique by the IAEA for safeguards monitoring [3]. However, this is a time-consuming technique requiring the irradiation and micro-sampling of particles, typically providing analysts with only small datasets of no more than 10–20 individual particles per sample. If we consider the relatively extreme case of the interpretation of a dataset of 10 particles, then we can determine the probability of missing a 1:10 fraction of the population to be ~ 30% and is considerably larger for smaller fractions (Fig. 5). These results highlight the utility of automated particle mapping techniques that can collect large datasets that maybe more representative of a sample [22, 24].

It is important to note some of the limitations of the synthetic modelling presented in Fig. 5, as well as the standard binominal probability formula. For example, Vermeesch [27], shows that in the case of identifying multiple components within a population, then the required sample size can be much larger than that estimated by the binominal formula. In addition, our synthetic LG-SIMS dataset by necessity represents a sanitized analytical population when compared to complex, real-world datasets. In reality, particle populations might be characterized by complications that need to be accounted for in statistical/modeling treatments, including particle clustering/agglomeration, the number of compositional modes within the population, and their compositional difference (or delta) [37]. Most importantly, binomial probability theory does not account for any of the critical “dimensions-of-interest” relevant to empirical particle analysis as described above, including but not limited to two important components 1) measurement quality/precision, and 2) the compositional difference between the two particle groups. This is especially pertinent for the interpretation of LG-SIMS APM data, where the lower count rates associated with beam rastering can inhibit the ability to distinguish between isotopic endmembers [24].

To illustrate this point, a second Monte Carlo model was developed to quantify the effect of measurement precision and delta in resolving a minor particle group from a majority group (Fig. 6). This second MCM approach assumes 500 total particle analyses and a 1% minor particle group. However, the model imposes additional layers of empirical complexity arising from 1) counting-statistics based analytical uncertainties on the target test analyte, 235U/238U, for an assumed quasi-uniform distribution of particle sizes (e.g., representative of LG-SIMS analysis), and 2) a variable compositional “delta” between the major and minor particle groups. The model was then run 10,000 times for each combination of compositional “delta” value and imposed number of minor endmember particles measured. For each simulation step, the mean square weighted deviation (MSWD) was calculated for the entire 500-particle dataset. The MSWD is a powerful metric for assessing whether analytical data are statistically consistent with derivation from a singular normal distribution [38,39,40].

Rephrased in the context of environmental particle analysis, the MSWD can communicate probabilistically the following outcomes: 1) Homogeneity: observed data variance is explained by associated analytical uncertainties, consistent with a compositionally homogenous particle population at the level of said uncertainties; 2) Overdispersion/heterogeneity: observed measurement variance exceeds that anticipated from analytical uncertainties, indicating either underestimated uncertainty or (more likely) a compositionally heterogeneous population; or 3) Under-dispersion: observed measurement variance is less than that anticipated from analytical uncertainties, indicating overestimation of said uncertainties. The MSWD is frequently applied in isotope geochemistry to assess the goodness-of-fit of geochronological “isochrons” [41] and weighted-mean calculations [42], wherein multiple measurements are integrated to constrain events in deep geologic time with maximal precision. For univariate normally distributed data, the expectation value of the MSWD is 1 with an associated 95% confidence level of [2 √(2/(N − 1))], where N is the number of analyses.

A snapshot of one such simulation output is shown in Fig. 6A, which imposed a compositional “delta” of 3% and 5 minor particle group measurements. The MSWD value of 1.06 for this simulation is identical to the expectation MSWD value of 1.00 ± 0.13 at the 95% confidence level, meaning that the 5 particles measured from the 1% group cannot be differentiated from the major 99% particle group at this level of statistical adequacy. This simulation output then registers as a minor particle group “nonindentification”. This sample treatment is then applied over the entirety of the modeled dimensionality and, upon completion, for each model state population statistics are applied to determine the probability of minor endmember identification. The results are then summarized in a three-dimensional surface plot in (x, y, z), where x is the number of minor endmember particles measured; y is the compositional "delta” between the major and minor particle groups; and z is the probability of minor particle group detection via the MSWD metric (Fig. 6B). The obvious initial insight from this preliminary modeling exercise is that the answer to the “needle in a haystack” question is more complicated than suggested by simple binomial probability theory; indeed, analytical uncertainty alone masks the presence of the minor particle group across much of the assessed dimensionality. For example, in the 10,000 Monte Carlo simulations run with the same conditions as Fig. 6A (i.e., 3% compositional “delta”, 5 minor particle group measurements), the probability of minor component detection via the MSWD metric was only about 33%. Most importantly, this preliminary modeling treatment captures only a fraction of the dimensionality relevant to empirical particle analysis and points to the need for more comprehensive exploration of the statistical adequency of particle measurements.

Statistical tools for comparison of particle datasets

Analysts may be asked to quantitatively compare particle data from different samples or against model data to determine their degree of similarity. There are several tools that can be used for this type of statistical comparison. At the broadest scale, a visual approach may be all that is required and can be achieved using several different methods including: i) simple three-isotope plots (e.g., 235U/238U vs. 234U/238U); ii) histograms with frequency or proportion of isotopic compositions plotted against analyst-selected binned compositions; iii) as probability density plots (PDPs); iv) as kernel density plots that first plot isotopic composition in a frequency plot and then impose a kernel of some bandwidth to constrain the shape of the distribution.

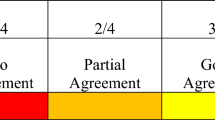

Using a visual-only approach for comparison can however be problematic, as this relatively qualitative method can be influenced by biases or pre-conceptions of the analysts. Moreover, and especially in the case of “low-n” datasets typical of particle population characterization (see above), purely visual/graphical comparison does not communicate quantitative uncertainty/confidence in the comparative assessment. A more quantifiable approach then is to use statistical tools for comparison. Quartile–Quartile (Q–Q) plots [43] and Kolmogorov–Smrinov (K–S) tests [44] represent two simple yet powerful approaches. A Q–Q plot is a graphical technique for determining if two datasets come from populations with a common distribution, in which the quantiles of the two datasets are plotted against one other. K–S tests are nonparametric tests that quantify differences between cumulative distributions to examine either 1) the goodness-of-fit of a dataset to a theoretical distribution, or 2) whether two discrete populations are statistically equivalent. Placed in the context of environmental particle analysis the K–S test estimates the probability that two samples could have been selected at random from the same population. The test generates a p-value that relates to the probability that the observed maximum difference between the samples is due to sampling error versus a true difference between the populations. If p is > 0.05 (95% confidence), then the test fails to reject the null hypothesis that the particles were from the same population.

The utility of Q–Q & K–S tests for comparison of particle data is illustrated in Fig. 7, where we compare two synthetic particle datasets mimicking spent fuel particles from a BWR and PWR reactor. The synthetic spent fuel particle data were generated using compositions from the SFCOMPO 2.0 database [45] for the Calvert Cliffs (PWR) and Cooper-1 (BWR) to fit an axial burn-up profile for each reactor. A synthetic dataset of 10,000 particles was then randomly distributed along the simulated burn-up profile. This produced a continuous distribution of particles, from which between 25 and 250 particles were randomly drawn for comparison tests, the results of which are illustrated in Fig. 7.

At relatively large sample sizes (500 particles, 250 particles from each population) both the Q–Q and K–S tests perform well in differentiating between the two reactors (Fig. 7). For example, at a sample size of 250 particles the p value (< 0.001) generated by the two-sample K–S test indicates that the samples are statistically different (Fig. 7C). In addition, Fig. 7A illustrates the usefulness of Q–Q plots in illustrating the difference between two populations, in this case the BWR particles have consistently higher 235U/238U values than the corresponding PWR particles. However, as illustrated in Fig. 7B–D, there is a significant penalty to the statistical power of both tests with smaller sample size, in part due to the insensitivity of the tests to the distribution at the tails. In the K–S test illustrated in Fig. 7D, the analysis of 25 particles from each reactor particle population (50 total) generates a high p value (0.468) and fails to reject the null hypothesis that the particles were from the same population. Similarly, the level the scatter in the Q–Q plot becomes more difficult to interpret and again emphasizes the need for analysts to understand statistical adequacy when comparing particle datasets.

Conclusions

In this work we used synthetic modeling of particle data and basic statistical principals to demonstrate the importance of sample size in the analysis and interpretation of particle datasets. Analysts should both be aware of the statistical adequacy of their datasets and understand the limitations of their data, especially when n is small. We note that there can be significant complexity in particle populations derived from nuclear processes, and any attempt to interpret data derived thereof requires 1) an appreciation of the physical characteristics of the source material, and 2) statistical approaches capable of handling multidimensional data. Crucially, the basic probability/statistical methods we describe represent only a small fraction of the methods that can (and should) be applied to this problem. While these basic statistical methods provide a valuable basis for preliminary data assessment, development of sophisticated statistical tools specifically tailored to particle analysis is needed to further advance objective particle dataset interpretation.

References

IAEA (2011) Radioactive particles in the environment: sources, particle characterization and analytical techniques. International Atomic Energy Agency, Vienna

Tamborini G (2004) SIMS analysis of uranium and actinides in microparticles of different origin. Microchim Acta 145(1):237–242

Donohue DL (1998) Strengthening IAEA safeguards through environmental sampling and analysis. J Alloys Compd 271–273:11–18

Salbu B, Krekling T (1998) Characterisation of radioactive particles in the environment†. Analyst 123(5):843–850

Stebelkov V (2005) Informativeness of morphology analysis of uranium microparticles from industrial dust at nuclear plants. In: GLOBAL 2005: Proceedings of the international conference on nuclear energy systems for future generation and global sustainability. Atomic Energy Society of Japan, Japan

Byrnes I et al (2020) Characterization of radioactive particles from the Dounreay nuclear reprocessing facility. Sci Total Environ 727:138488

Steinhauser G (2018) Anthropogenic radioactive particles in the environment. J Radioanal Nucl Chem 318(3):1629–1639

Pöllänen R et al (1995) Transport of large particles released in a nuclear accident. Finn Centre Radiat Nucl Saf

Salbu B, Lind OC (2020) Analytical techniques for charactering radioactive particles deposited in the environment. J Environ Radioact 211:106078

Park R et al (2022) Quantitative and isotopic analysis of single micrometer-sized uranium particles using multiple mass spectrometric techniques. J Radioanal Nucl Chem

Stetzer O et al (2004) Determination of the 235U content in uranium oxide particles by fission track analysis. Nucl Instrum Methods Phys Res Sect A 525(3):582–592

Lee C-G et al (2006) Improved method of fission track sample preparation for detecting particles containing fissile materials in safeguards environmental samples. Jpn J Appl Phys 45(3L):L294

Konegger-Kappel S, Prohaska T (2016) Spatially resolved analysis of plutonium isotopic signatures in environmental particle samples by laser ablation-MC-ICP-MS. Anal Bioanal Chem 408(2):431–440

Lloyd NS et al (2009) Precise and accurate isotopic analysis of microscopic uranium-oxide grains using LA-MC-ICP-MS. J Anal At Spectrom 24(6):752–758

Esaka F, Magara M (2014) Secondary ion mass spectrometry combined with alpha track detection for isotope abundance ratio analysis of individual uranium-bearing particles. Talanta 120:349–354

Hubert A et al (2014) Measurement of the isotopic composition of uranium micrometer-size particles by femtosecond laser ablation-inductively coupled plasma mass spectrometry. Spectrochim Acta Part B 93:52–60

Boulyga SF, Prohaska T (2008) Determining the isotopic compositions of uranium and fission products in radioactive environmental microsamples using laser ablation ICP–MS with multiple ion counters. Anal Bioanal Chem 390(2):531–539

Wimpenny J et al (2023) Rapid isotopic analysis of uranium particles by laser ablation MC-ICP-MS. J Anal At Spectrom 38:827

Groopman EE, Williamson TL, Simons DS (2022) Improved uranium particle analysis by SIMS using O3− primary ions. J Anal At Spectrom 37(10):2089–2102

Pointurier F, Pottin A-C, Hubert A (2011) Application of nanosecond-UV laser ablation-inductively coupled plasma mass spectrometry for the isotopic analysis of single submicrometer-size uranium particles. Anal Chem 83(20):7841–7848

Hedberg PML et al (2015) Multiple ion counting measurement strategies by SIMS—a case study from nuclear safeguards and forensics. J Anal At Spectrom 30(12):2516–2524

Peres P et al (2013) Nuclear safeguards applications using LG-SIMS with automated screening capabilities. Surf Interface Anal 45(1):561–565

Gao J et al (2023) Comparison of AMS, TIMS, and SIMS techniques for determining uranium isotope ratios in individual particles. J Mass Spectrom 58(2):e4905

Tenner TJ et al (2023) New particle working standards for NWAL particle laboratory calibration and quality control—LANL LG-SIMS characterization and evaluation. In: Proceedings of the INMM & ESARDA Joint Annual Meeting 2023: pp. 1–10

Walpole RE, Myers RH, Myers SL (2012) Probability and statistics for engineers and scientists. Prentice Hall International

Pullen A et al (2014) What happens when n = 1000? Creating large-n geochronological datasets with LA-ICP-MS for geologic investigations. J Anal At Spectrom 29(6):971–980

Vermeesch P (2004) How many grains are needed for a provenance study? Earth Planet Sci Lett 224(3):441–451

Ibañez-Mejia M et al (2018) Use and abuse of detrital zircon U-Pb geochronology—a case from the Río Orinoco delta, eastern Venezuela. Geology 46(11):1019–1022

Donohue DL (2002) Peer reviewed: strengthened nuclear safeguards. Anal Chem 74(1):28A-35A

Bush W et al (2001) IAEA experience with environmental sampling at gas centrifuge enrichment plants in the European Union. In: Proceedings of the Symposium on International Safeguards: Verification and Nuclear Material Security

Rondinella VV, Wiss T (2010) The high burn-up structure in nuclear fuel. Mater Today 13(12):24–32

Lassmann K et al (1994) The radial distribution of plutonium in high burnup UO2 fuels. J Nucl Mater 208(3):223–231

Wagner JC, DeHart M (2000) Review of axial burnup distribution considerations for burnup credit calculations. Oak Ridge National Lab (ORNL), Oak Ridge

Wu S-C, Chao D-S, Liang J-H (2018) Compound effects of operating parameters on burnup credit criticality analysis in boiling water reactor spent fuel assemblies. Nucl Eng Technol 50(1):18–24

Andersen T (2005) Detrital zircons as tracers of sedimentary provenance: limiting conditions from statistics and numerical simulation. Chem Geol 216(3):249–270

Dodson MH et al (1988) A search for ancient detrital zircons in Zimbabwean sediments. J Geol Soc 145(6):977–983

Aitchison J et al (2000) Math Geol 32(3):271–275

Wendt I, Carl C (1991) The statistical distribution of the mean squared weighted deviation. Chem Geol Isot Geosci Sect 86(4):275–285

McLean NM, Bowring JF, Bowring SA (2011) An algorithm for U-Pb isotope dilution data reduction and uncertainty propagation. Geochem Geophys Geosyst 12(6)

Spencer CJ, Yakymchuk C, Ghaznavi M (2017) Visualising data distributions with kernel density estimation and reduced chi-squared statistic. Geosci Front 8(6):1247–1252

Borg LE et al (2011) Chronological evidence that the Moon is either young or did not have a global magma ocean. Nature 477(7362):70–72

Keller CB, Schoene B, Samperton KM (2018) A stochastic sampling approach to zircon eruption age interpretation. Geochem Perspect Lett 8:31–35

Wilk MB, Gnanadesikan R (1968) Probability plotting methods for the analysis of data. Biometrika 55(1):1–17

Berger VW, Zhou Y (2014) Kolmogorov–Smirnov test: overview. Wiley statsref

Michel-Sendis F et al (2017) SFCOMPO-2.0: An OECD NEA database of spent nuclear fuel isotopic assays, reactor design specifications, and operating data. Ann Nuclear Energy 110:779–788

Acknowledgements

The authors gratefully acknowledge the valuable suggestions provided by an anonymous reviewer that improved the quality of the paper. This work was supported by the U.S. Department of Energy through the Los Alamos National Laboratory. Los Alamos National Laboratory is operated by Triad National Security, LLC, for the National Nuclear Security Administration of U.S. Department of Energy (Contract No. 89233218CNA000001). Savannah River National Laboratory is managed by Battelle Savannah River Alliance, LLC under Contract No. 89303321CEM000080 with the U.S. Department of Energy. This document is approved for unlimited public release through Los Alamos National Laboratory under LA-UR-23-22433.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Inglis, J.D., Samperton, K.M., Reinhard, A.A. et al. An introduction to the significance of sample size in particle analyses for nuclear forensics and radiological investigations. J Radioanal Nucl Chem 332, 3371–3381 (2023). https://doi.org/10.1007/s10967-023-08999-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10967-023-08999-x