Abstract

The laboratory for instrumental neutron activation analysis at the Reactor Institute Delft, Delft University of Technology, uses a network of 3 gamma-ray spectrometers with well-type detectors and 2 gamma-ray spectrometers with coaxial detectors, all equipped with modern sample changers, as well as 2 spectrometers with coaxial detectors at the two fast rabbit systems. A wide variety of samples is processed through the system, each following a specific, optimized (and thus different) analytical protocol and using different combinations of the spectrometer systems. The gamma-ray spectra are analyzed by several qualified operators. The laboratory therefore needs to anticipate the occurrence of random and systematic inconsistencies in the results (such as bias, non-linearity or wrong assignments due to spectral interferences) resulting from differences in operator performance, selection of analytical protocol and experimental conditions. This has been accomplished by taking advantage of the systematic processing of internal quality control samples, such as certified reference materials and blanks, in each test run. The data from these internal quality control analyses have been stored in a databank since 1991, and are now used to assess various method performance indicators as measures of the method’s robustness.


Introduction

Instrumental neutron activation analysis (INAA) distinguishes itself from other methods for multi-element determination by, amongst other things, the fact that the chemical matrix of the test portion has no, or a barely noticeable, influence on the trueness of the results. Calibration, based on the comparative method or on the single comparator method [1, 2], is therefore ‘once and for all’, making the technique suitable for analyzing a large variety of matrices. Because the chemical matrix often has no effect on the trueness of the results, there is also no need for quality control (“trueness control”) samples whose matrix closely matches the unknown sample matrix. The quality control sample is selected on the basis of (i) a known amount of the element(s) of interest, (ii) suitability of the material for irradiation and measurement under the same conditions as the real samples and (iii) detectability of the radionuclide(s) of the element(s) of interest under these conditions.

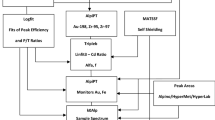

The laboratory for instrumental neutron activation analysis at the Reactor Institute Delft, Delft University of Technology, uses a network of 3 gamma-ray spectrometers with well-type detectors and 2 gamma-ray spectrometers with coaxial detectors, all equipped with modern sample changers, as well as 2 spectrometers with coaxial detectors at the two fast rabbit systems. Details on the system have been published in the past [3]. Activated samples are often measured using more than one detector, and all spectra recorded are analysed and interpreted simultaneously [4]. The spectrometers with the sample changers are automated and can all run 24 h per day and 7 days per week. The quality management system, implemented at the end of the 1980s [5] and accredited since 1993, currently meets the requirements of NEN-EN-ISO/IEC 17025:2005.

The in-house developed gamma-ray spectrum analysis and interpretation software [4] has a module for graphical inspection of the outcome of quality control sample analyses [6]. The control charts can display (i) the mass fraction of an element as a function of analysis date, (ii) the mass fraction of an element for all types of quality control materials analyzed and (iii) the (normalized) mass fraction of all elements as a function of their value. The underlying databases with measurement results of all quality control samples have been kept up to date since their introduction in the early 1990s, and the number of options for selecting parameters to be displayed in a control chart has been expanded. The number of quality control samples has also increased considerably, with (certified) reference materials forming the largest category.

A wide variety of samples is processed through the system, each following a specific, optimized (and thus different) analytical protocol and using different combinations of the spectrometer systems. The gamma-ray spectra are analyzed by several qualified operators; between 1991 and 2010 there were approximately 15 persons, some of them for a period of 1–2 years, others for a longer period. The operators select the analytical protocol (selection of irradiation, decay and counting time, and of the detector geometry or geometries to be used). Though the spectrum analysis and peak fitting are highly automated, manual intervention is sometimes necessary, e.g. for complex multiplets. The laboratory therefore has to anticipate continuously that the human factor may affect the quality of the results. Demonstrating the robustness of this system is a continuous challenge.

The software and charts allow for trend analysis and for assessment of analytical performance indicators such as trueness, precision, reproducibility and linearity. In addition, the bias of the method, the linearity and repeatability are assessed quantitatively. These indicators are averaged values derived from analyses of many different types of control samples, and for many elements also at a range of mass fractions and/or in the presence of interferences. As such, they implicitly reflect to some extent the robustness of the INAA method itself.

Quality assessment

Data sorting

An overview of the selectable parameters and the sorting functions for the control charts is given in Table 1, together with an indication of the information that may be derived. Examples of control charts have been published before [6].

Trueness

The bias of the method is assessed through the analysis over time of a large set of certified reference materials. For each element in each material, the weighted mean of the measured mass fractions and its external and internal standard errors are calculated. The mean mass fraction is then divided by the certified mass fraction to obtain a bias factor with its standard error; the larger of the internal and external standard errors is used to this end. The weighted mean of the bias factors for a given element in all reference materials is then calculated, with its internal standard error. Also, the overall χ²ᵣ is calculated from the zeta-scores of the group averages and the certified mass fractions.
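The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the laboratory's software: the function names are hypothetical, inverse-variance weighting is assumed, the external standard error is assumed to be the internal one scaled by the square root of the reduced chi-squared, and the uncertainty of the certified value is ignored.

```python
import math

def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean with internal and external standard errors."""
    weights = [1.0 / s ** 2 for s in sigmas]
    wsum = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / wsum
    # Internal SE: what the reported uncertainties predict for the mean.
    se_int = math.sqrt(1.0 / wsum)
    n = len(values)
    if n > 1:
        # External SE: internal SE scaled by the observed scatter (assumed form).
        chi2_r = sum(w * (v - mean) ** 2 for w, v in zip(weights, values)) / (n - 1)
        se_ext = se_int * math.sqrt(chi2_r)
    else:
        se_ext = 0.0
    return mean, se_int, se_ext

def bias_factor(values, sigmas, certified):
    """Bias factor (measured / certified) with the larger of the two standard errors."""
    mean, se_int, se_ext = weighted_mean(values, sigmas)
    se = max(se_int, se_ext)
    return mean / certified, se / certified

# Illustrative (made-up) numbers: three results for a material certified at 10.0
factor, factor_se = bias_factor([10.2, 9.9, 10.1], [0.2, 0.2, 0.2], 10.0)
```

In this made-up example the scatter is smaller than the reported uncertainties predict, so the internal standard error is the larger one and is the one carried forward.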

The data taken into consideration are the grand total, the data from the last 10 years, the last 5 years, the last 2 years and the data from the last year. This is necessary because the method and its calibration factors are continuously evolving.

The test is performed in the course of the annual evaluation of the first line internal quality control. The Laboratory for INAA has defined an acceptance criterion for the bias: an element passes the test when the observed bias factor with its standard error implies a 67% probability of the true value lying between 0.95 and 1.05 (so that an observed bias factor of 1.00 ± 0.05 just passes the test). An element fails the trueness test when the bias factor deviates from unity by more than 5%, and at the same time deviates from unity by more than 3 standard deviations. In other cases, the test is inconclusive.
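The three-way outcome of this criterion (pass, fail, inconclusive) can be expressed as a small decision function. The sketch below assumes that the bias factor is normally distributed around the observed value with the quoted standard error, which the text does not state explicitly; the function name and its parameters are hypothetical.

```python
from statistics import NormalDist

def classify(value, std_err, target=1.0, tolerance=0.05, p_pass=0.67, n_sigma=3.0):
    """Return 'pass', 'fail' or 'inconclusive' for an observed value and its
    standard error (std_err must be > 0)."""
    dist = NormalDist(mu=value, sigma=std_err)
    # Probability that the true value lies inside target +/- tolerance.
    p_inside = dist.cdf(target + tolerance) - dist.cdf(target - tolerance)
    if p_inside >= p_pass:
        return "pass"
    deviation = abs(value - target)
    # Fail only if the deviation is both large and statistically significant.
    if deviation > tolerance and deviation > n_sigma * std_err:
        return "fail"
    return "inconclusive"

print(classify(1.00, 0.05))  # the borderline case quoted in the text
print(classify(1.10, 0.01))  # large, significant deviation
print(classify(1.04, 0.05))  # too imprecise to decide
```

With these assumptions an observed bias factor of 1.00 ± 0.05 corresponds to a probability of about 68%, which is just above the 67% threshold.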

In any case, if the data from the last year prove the method to be unbiased for some element, failure to meet a criterion in earlier years is acceptable. The data obtained in the last year might be too few to draw a conclusion for some elements, in which case data from previous years will do.

Range and linearity

The INAA method is, from first principles, linear in that the induced radioactivity is linearly proportional to the amount of element present. This linearity also applies to the measured peak area and to the algorithm that determines the peak area [7, 8]. The Laboratory for INAA’s requirement for the linearity is therefore more stringent than for the trueness: the contribution of non-linearity to the bias at any concentration level must be smaller than 0.25%.

Even at very low levels, accurate measurements of elemental mass fractions can be obtained by determining peak areas at the energies in the spectrum where the radionuclide of interest is expected to show up. The precision of such measurements below the detection limits will be very poor, but some end-users of the INAA data prefer this to only having a detection limit at their disposal. This leads to the Laboratory’s requirement for the working range: it should be from 0 to 1 kg/kg.

Still there are a few caveats. If the element of interest is a major constituent of the material to be analyzed, the neutron self-shielding behavior and/or the gamma-ray self-absorption properties of the sample may become dependent on the mass of the element present, and the induced activity and/or the measured peak area would no longer be linearly proportional to its mass. Also, if the element to be determined is the major source of radioactivity in the material, the count rates in the measurement will be determined by the element concentration and linearity might be at risk due to dead time.

Neutron self-shielding, gamma-ray self-absorption and dead time and the requirements for the trueness of the corresponding correction methods are discussed separately below.

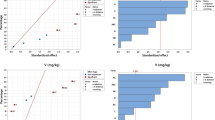

The verification of the linearity is based on a similar test as for the trueness: the bias factors found in that test are correlated to the certified concentrations by linear regression.

An element passes the test when the slope of the regression line with its combined standard uncertainty implies a 67% probability of the true value lying between −0.005 and 0.005 (so that the slope 0.000 ± 0.005 just passes the test). An element fails the linearity test when the slope deviates from zero by more than 0.005, and at the same time deviates from zero by more than 3 standard deviations. In other cases, the test is inconclusive.
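As an illustration of the regression step, the sketch below fits a weighted least-squares line to bias factors as a function of certified concentration and returns the slope with its standard error. The weighting by the standard errors of the bias factors, the function name and the example numbers are assumptions; the text does not specify how the regression is weighted or on which concentration scale it is carried out.

```python
import math

def weighted_slope(x, y, sigma_y):
    """Weighted least-squares slope of y versus x and its standard error."""
    w = [1.0 / s ** 2 for s in sigma_y]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    slope_se = math.sqrt(1.0 / sxx)  # follows from the assumed uncertainties of y
    return slope, slope_se

# Made-up bias factors at three concentration levels (arbitrary units)
slope, slope_se = weighted_slope([0.0, 1.0, 2.0], [1.000, 1.002, 1.004], [0.01, 0.01, 0.01])
```

The slope and its uncertainty would then be compared with the ±0.005 window and the three-standard-deviation rule stated above.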

Repeatability and reproducibility

The INAA software reports the combined standard uncertainty for each element. The combined standard uncertainty, or “precision”, is calculated from the known sources of the type A evaluations of uncertainty in the analysis procedure (counting statistics being the main one). If a sample is analyzed repeatedly by the same analysis protocol, this “precision” can be interpreted as the repeatability of the method.

The requirement for the precision is that the standard error of the mean value, SEMext (i.e. the observed variation), should not significantly exceed SEMint (the variation expected from calculations) for analysis runs of a homogeneous material, performed the same way.

If SEMext > SEMint, then the implied unexplained variation should be less than 0.5%.

If SEMint > SEMext, then, for the test to be conclusive, it should be demonstrated that an additional source of variation of 0.5% would have increased SEMext significantly in the χ²ᵣ sense, using

where N is the number of observations, and V is the extra variability.

Reproducibility is tested the same way and at the same time, by selecting a homogeneous material and measurements thereof by different procedures, detectors and technicians. The requirement is that the unexplained variation arrived at from the results should be less than 1%.

Robustness

The robustness of the method depends mostly on the performance of the operators, as outlined in the Introduction. The related induced variance is already incorporated in the variance of the trueness, repeatability and reproducibility. The only external factor potentially affecting the INAA results is the climate in the counting room. Temperature has an effect on the energy calibration of the detectors, which might lead to misidentification of radionuclides. Since this is the most serious error that could be made, the requirement is that it should never happen. This requirement is tested along with the trueness of the method as described above: if robustness were lacking, the method would fail that test.

Regular review

All tests described here are performed annually as integral part of the annual evaluation of the results of the first line internal quality control. This is necessary because the method and its calibration factors are continuously evolving. The data taken into consideration are the grand total, the data from the last 10 years, the last 5 years, the last 2 years and the data from the last year. If a test for a more recent dataset is inconclusive, the dataset from the next larger time span can be used to decide on validity. Failures to pass a test are studied in depth and corrective actions may be defined.
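The fall-back from a recent dataset to a longer time span can be pictured as a simple cascade. The sketch below is one possible reading of the rule, assuming that the first conclusive outcome, starting from the most recent period, decides; the function name, the labels and the outcome strings are hypothetical.

```python
def decide(outcomes_by_window):
    """Return (window, outcome) for the first conclusive test result.

    outcomes_by_window: list of (label, outcome) pairs ordered from the
    shortest, most recent time span to the grand total; outcome is one of
    'pass', 'fail', 'inconclusive' or 'no data'.
    """
    for label, outcome in outcomes_by_window:
        if outcome in ("pass", "fail"):
            return label, outcome
    # Nothing conclusive in any time span.
    return None, "inconclusive"

# Made-up example: last year inconclusive, last 2 years pass
result = decide([
    ("last year", "inconclusive"),
    ("last 2 years", "pass"),
    ("last 5 years", "fail"),
    ("last 10 years", "fail"),
    ("grand total", "fail"),
])
```

In this example the older failures do not override the more recent pass, in line with the statement that a failure in earlier years is acceptable when recent data show the method to be unbiased.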

Results

Trueness

An example of the raw data from the program is shown in Table 2. The data shown (here for an evaluated period of one year only, 2010) are, for each element, the bias factor and its standard error, an indication of whether the element passes the trueness test, the slope of the regression line when correlating bias factors to certified concentrations and its uncertainty, an indication of whether the element passes the linearity test, the number of reference materials involved, and the lowest and highest certified concentrations for the element present in the dataset.

Of the 67 elements in the scope, 51 pass the trueness test for the entire 1991–2010 period. For some, the test is inconclusive (the bias factor found is too imprecise; 2 cases, i.e. Er and Re), or there are no reference data at all (9 cases, i.e. Ge, Ir, Nb, Os, Rh, Ru, Te, Tm and Y). Other elements (5 cases, i.e. Cu, Gd, S, Si and U) failed the test. Over the year 2010, 38 elements passed the trueness test; the test was inconclusive for 13 elements and no reference data were available for 16 other elements.

Linearity

The same raw data as discussed above demonstrate the linearity of the method. Not a single element fails the test in any of the time spans.

Repeatability, reproducibility

In order to exclude sample-to-sample variations from this test, a “multi-standard” in-house material was used for this test, which is based on a solution with 20 elements present, routinely used as an internal stability check in a wide range of analysis procedures.

Of the 20 elements, over e.g. the period 2007–2008, Cr exhibits the smallest SEMint = 0.096%, with SEMext = 0.085%, at N = 169 observations, including all analysis procedures, detectors and analysts. A χ²ᵣ = 0.78 was calculated from

Since SEMint is (slightly) larger than SEMext in this case, the question is what amount of extra variation V would raise SEMext significantly in the χ²ᵣ sense. The answer is V = 0.475%, which would give a χ²ᵣ = 1.18, calculated from

which is the threshold value for significance at α = 0.05 at N = 169.

The requirement for the magnitude of unexplained variation is that it should be less than 1%, as stated for reproducibility above, so that the requirement for reproducibility turns out to be fulfilled.

For the repeatability test, the Cr results in multi-standard were used again, but this time only the results obtained in one specific end-user oriented project were utilized (all analyses by same person, same detector and only with slight variations in decay time).

SEMext = 0.09%, and SEMint = 0.22%, at N = 36 observations. By the same procedure as described above, the amount of extra variation that would significantly increase SEMext is V = 0.35% (which would give a χ²ᵣ = 1.42, being the threshold value for α = 0.05 at N = 36), so that the repeatability criterion is met.
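The χ²ᵣ figures quoted for the two Cr datasets can be cross-checked with a short script. Two assumptions are made here that are not stated in the text: that the observed χ²ᵣ equals the squared ratio SEMext/SEMint, and that the threshold is the one-sided (1 − α) quantile of a chi-squared distribution with N − 1 degrees of freedom, divided by N − 1. The quantile is obtained with the Wilson-Hilferty approximation so that only the standard library is needed; the function names are hypothetical.

```python
from statistics import NormalDist

def chi2r_observed(sem_ext, sem_int):
    """Reduced chi-squared taken as the squared ratio of external to internal SEM."""
    return (sem_ext / sem_int) ** 2

def chi2r_threshold(n_obs, alpha=0.05):
    """Approximate one-sided critical value of the reduced chi-squared
    for n_obs - 1 degrees of freedom (Wilson-Hilferty approximation)."""
    k = n_obs - 1
    z = NormalDist().inv_cdf(1.0 - alpha)
    a = 2.0 / (9.0 * k)
    return (1.0 - a + z * a ** 0.5) ** 3

print(round(chi2r_observed(0.085, 0.096), 2))  # all procedures, N = 169
print(round(chi2r_threshold(169), 3))          # threshold at N = 169
print(round(chi2r_threshold(36), 3))           # threshold at N = 36
```

Under these assumptions the script gives values close to the quoted 0.78, 1.18 and 1.42, which suggests that the thresholds in the text are conventional χ² critical values at α = 0.05.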

It may be noted that the reproducibility of these measurements is 0.085% × √169 = 1.1%, and that the repeatability is 0.09% × √36 = 0.5%. In both cases, the RID software overestimates these values, with 0.096% × √169 = 1.2% and 0.22% × √36 = 1.3%: it stays on the safe side when reporting “precision”.

Cross-sensitivity

Some typical examples of potential errors due to the presence of interferences are shown in Table 3. It is well known that in NAA the determination of La via 140La suffers interference from the presence of U, because the fission product 140Ba that is formed decays via 140La. The determination of Zn via 65Zn suffers interference from the presence of Sc, which results in 46Sc and causes difficulties due to the limited energy resolution of the detectors (the gamma-ray energies of 65Zn and 46Sc are 1115 and 1120 keV, respectively). The determination of Co via 60Co suffers interference from the presence of Br and the formation of 82Br, leading to a spectral interference at the 1332.5 keV line of 60Co by a coincidence summing peak at 1330.8 keV (554.3 + 776.5 keV). Deviations from linearity of 0.0005 and less are considered negligible in view of the validity criterion of 0.005, so no effort was made to show the values beyond four significant digits.

Discussion

Of the 67 elements in the scope, 51 as determined within the Laboratory for INAA passed the trueness test. The 16 elements that did not pass the trueness test currently receive special treatment until they pass the test at some point in the future, e.g. as a result of improvements to the system:

- When one of the seven elements (i.e. Er, Re, Cu, Gd, S, Si and U) with bias information available is reported to an end-user, it must be ascertained that the reported trueness does not imply a smaller possible bias than the one found in this test.

- For the nine elements (i.e. Ge, Ir, Nb, Os, Rh, Ru, Te, Tm and Y) where there are no data in this test: aliquots from single-element calibrators with metrologically traceable property values are included in the analysis runs if mass fractions are requested. These calibrators have been added to the database of reference materials, so that information that can be used in this test will be collected over time.

- One way or the other, efforts are being made to validate the method for these 16 elements.

The performance of INAA passes the linearity test without exception. For some elements, not enough data is available to draw a conclusion. However, not a single element fails the test. The mass fraction ranges used for the test span 6 orders of magnitude for some elements (like Al and Br). This result was expected from the scientific understanding of the technique. The INAA method also passes the cross-sensitivity, reproducibility and repeatability tests.

The approach described in this paper was accepted by the Dutch Council for Accreditation for compliance with the requirements in Clause 5.4 “Test and Calibration Methods and Method Validation” of ISO/IEC 17025:2005. However, it should be noted that in principle the tests do not provide a conclusive answer on the validity of the routine test results, since this validity depends on the target uncertainty as defined by the customer.

The methods described in this paper are also implemented in the k0-IAEA software [9].

Conclusion

Having a database of analysis results obtained from CRMs available makes it possible to assess a variety of quality parameters such as robustness, trueness, repeatability and linearity. Such databases allow inspection of trends and also of the effectiveness of corrective actions, e.g. renewed calibration following an unacceptable bias. Together, these assessments constitute the “validation” of the method. This paper demonstrates how that was done to the satisfaction of the Dutch Council for Accreditation.

References

1. De Bruin M, Korthoven PJM (1972) Anal Chem 44:2382–2385

2. Obrusník I, Blaauw M, Bode P (1991) J Radioanal Nucl Chem 152:507–518

3. Bode P (2000) J Radioanal Nucl Chem 245:127–132

4. Blaauw M (1994) Nucl Instr Meth 353:269–271

5. Bode P, Van Dalen JP (1994) J Radioanal Nucl Chem 179:141–148

6. Bode P (1997) J Radioanal Nucl Chem 215:87–94

7. Blaauw M, Gelsema SJ (1999) Nucl Instr Meth A422:417–422

8. Blaauw M (1999) Nucl Instr Meth A432:74–76

9. Rossbach M, Blaauw M, Bacchi M, Lin X (2007) J Radioanal Nucl Chem 274:657–662

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


Bode, P., Blaauw, M. Performance and robustness of a multi-user, multi-spectrometer system for INAA. J Radioanal Nucl Chem 291, 299–305 (2012). https://doi.org/10.1007/s10967-011-1178-8


DOI: https://doi.org/10.1007/s10967-011-1178-8