Abstract

Self-serving cognitive distortions are biased or rationalizing beliefs and thoughts that originate from an individual’s persistence in immature stages of moral judgment during adolescence and adulthood, increasing the individual’s engagement in antisocial or immoral conduct. To date, the literature examining trajectories of cognitive distortions over time and their precursors is limited. This study sought to fill this gap by examining effortful control and community violence exposure as individual and environmental precursors to developmental trajectories of cognitive distortions in adolescence. The sample consisted of 803 Italian high school students (349 males; Mage = 14.19, SD = 0.57). Three trajectories of cognitive distortions were identified: (1) moderately high and stable cognitive distortions (N = 311), (2) moderate and decreasing cognitive distortions (N = 363), and (3) low and decreasing cognitive distortions (N = 129). Both low effortful control and high exposure to community violence were significant predictors of the moderately high and stable trajectory of cognitive distortions. These results point to the importance of considering moral development as a process involving multiple levels of individual ecology, highlighting the need to further explore how dispositional and environmental factors might undermine developmental processes of morality.

Introduction

Social-cognitive theories posit that people act upon their interpretation of social events (Crick and Dodge 1994). According to Bandura’s (1986) social-cognitive formulations, the cognitive evaluation of events that take place in the child’s environment, how the child interprets these events, and how competent the child feels in responding in various ways provide the basis for the child’s purposeful action. Growing up in a violent environment may lead children to see the world as a hostile and dangerous place (Guerra et al. 2003; Schwartz et al. 2000) and to regard violence itself as a useful means of conflict resolution (Dodge et al. 2006). The internalization of these schemas of the world, along with the development of normative beliefs about violence, amplifies the risk of behaving aggressively. In terms of such cognitive process models, self-serving cognitive distortions represent schemas that influence the individual’s encoding, interpretation, attribution, and evaluation and thereby affect the individual’s behavior in social situations (Barriga et al. 2001). However, to date, few studies have systematically examined the precursors of biases affecting moral cognition (Dragone et al. 2020; Hyde et al. 2010). Nonetheless, it is noteworthy that the processing of information for behaving in a specific way is a function of one’s ability to assemble action-relevant information that can be used to guide action. This capability supports the exhibition of self-control by directing attention, delaying or suppressing inappropriate responses, and moderating emotional arousal (Wikström and Treiber 2009). Overall, a number of studies have suggested a key role of self-regulatory abilities in the development of moral emotions and behavior (Eisenberg et al. 2016), whereas studies examining possible associations between self-regulation and moral cognition are scarce.
Starting from these considerations and using a social-cognitive perspective, the current investigation is intended to fill the gap in the literature by investigating the role of exposure to community violence and self-regulatory abilities—representing risk factors at environmental and individual levels of the individual’s ecology—in predicting specific longitudinal trajectories of moral cognitive distortions.

Cognitive Distortions in the Framework of Moral Developmental Delay

The notion of self-serving cognitive distortions was introduced by Gibbs et al. to describe young offenders’ inaccurate or biased ways of attending to or conferring meaning upon experiences (e.g., Barriga and Gibbs 1996; Gibbs et al. 1995). Such cognitive errors were defined as “self-serving” as they assist the individual to self-justify acts that are in contrast with moral beliefs, such as antisocial behaviors, thus protecting the self from developing a negative self-image. Following Gibbs’ (2013) theory of moral development, self-serving cognitive distortions originate from a delay that occurs in moral development.

There are several aspects of Gibbs’ approach to the study of morality that are common to other current developmentalist theories (e.g., social domain theory; Smetana 2006; Turiel 1998), such as the importance of social experiences in constructing children’s and adolescents’ moral judgments (Arsenio and Lemerise 2004; Dodge and Rabiner 2004). However, unlike other moral theorists who emphasize the role of the social context, Gibbs retains Kohlberg’s cognitive-developmental perspective (Kohlberg 1984), hypothesizing four stages of moral development, grouped into immature and mature levels (Gibbs 2013). This stage-based perspective has been supported in reviews of studies conducted in over 40 countries around the world (Gibbs 2013), which specifically show an age trend in moral decision-making from immature to mature stages. Starting from a re-conceptualization of Kohlberg’s (1984) moral developmental theory, Gibbs assumes that the sequence of developmental stages identified by Kohlberg is not an obligatory process and that a moral delay can occur, with the earliest levels of morality persisting into adolescence and adulthood. As reported by Gibbs (2013), in this stage of arrested moral development, adolescents display cognitive biases (i.e., cognitive distortions) that lead them to judge their moral transgressions as acceptable and not to experience deviant behavior as dissonant with common moral standards. Organizing the extant literature on cognitive distortions, Gibbs et al. (1995) introduced a four-category typological model of self-serving cognitive distortions: self-centered, blaming others, minimizing/mislabeling, and assuming the worst. “Self-Centered” cognitive distortions are defined as attitudes wherein the individual focuses on his/her own opinions, expectations, needs, and rights to such an extent that the opinions or needs of others are rarely considered or respected.
“Blaming Others” involves cognitive schemas of misattributing the blame for one’s own behavior to sources outside the individual (i.e., external locus of control). “Minimizing” is defined as distortions in which antisocial behavior is seen as an acceptable, perhaps necessary, way to achieve certain goals. “Mislabeling” is defined as a belittling and dehumanizing way of referring to others. Finally, “Assuming the Worst” represents cognitive distortions in which the individual attributes hostile intentions to others, considers the worst-case scenario as inevitable, or sees his/her own behavior as beyond improvement.

In the literature, there are several other theoretical accounts of self-serving cognitive distortions (e.g., Bandura’s moral disengagement or Sykes and Matza’s neutralization theory) that, however, do not fully overlap with Gibbs et al.’s (1995) work. Specifically, a first consideration in comparing Bandura’s moral disengagement perspective with Gibbs et al.’s self-serving cognitive distortions concerns the moral functioning hypothesized to underlie the cognitive mechanisms that lead individuals to behave (or not behave) aggressively. Indeed, according to Bandura, moral (or immoral) agency depends on self-regulatory processes of individual moral standards and anticipatory self-sanctions that must be activated to come into play (Bandura 2002), rather than depending on moral reasoning. As a result, selective activation and disengagement of internal control would permit various types of conduct with the same moral standards. Self-serving cognitive distortions, as theorized by Gibbs et al. (1995), instead seem to be relatively stable cognitive mechanisms that, once internalized, are applied by the individual in interactions within the social environment. Furthermore, the relationship between cognitive distortions and antisocial or aggressive behavior is conceptualized quite differently across models. While other theories posit that cognitive distortions precede (or occur during) antisocial behavior, Gibbs et al. (1995) suggest the possibility of multidirectional causality, so that self-serving cognitive distortions may precede and/or follow behavior.
In their attempt to integrate various neutralization concepts into a single moral neutralization approach, Ribeaud and Eisner (2010) noted that self-serving cognitive distortions, as conceptualized by Gibbs et al., did not totally overlap with Sykes and Matza’s (1957) neutralization techniques or Bandura’s (2002) moral disengagement, in that they are theorized to more specifically conflate moral rationalizations with biased information processing. Assuming the worst, in particular, partly overlaps with Bandura’s concept of external attribution of blame but extends beyond it by including the attribution of hostile intentions to others, which is typical of social information processing models (Crick and Dodge 1994).

Effortful Control and Community Violence as Precursors of Trajectories of Cognitive Distortions

According to the social-cognitive perspective, there are several individual and contextual factors that may contribute to the emergence of certain specific cognitive routines, scripts, and schemas (Huesmann 1998). In the stage-based framework of moral cognitive development theorized by Gibbs, cognitive distortions are supposed to decline with maturation of the prefrontal cortex, thus suggesting that self-regulatory abilities, which are related to the functioning of the prefrontal cortex, are required for the maturation of moral decisions (Gibbs 2013). However, studies that investigate moral cognition together with self-regulatory abilities are not common in the literature. Overall, developmental researchers have studied self-regulation from a temperamental perspective. Research in the moral field has primarily investigated the role of self-regulatory abilities in the development of moral emotions and behavior (Eisenberg et al. 2016), with a specific focus on the role of effortful control. Rothbart et al. defined effortful control as the self-regulation component of temperament, pertaining to the ability to voluntarily inhibit behavior when appropriate (called “inhibitory control”), activate behavior when needed (called “activation control”), and/or focus or shift attention as needed (called “attentional control”) (Rothbart et al. 2011). Empirical studies have shown that effortful control is related to adolescent sympathy and prosocial behaviors (Carlo et al. 2012; Eisenberg 2010). Indeed, effortful control would enable children to modulate emotional responses adequately to focus on the needs of others (experiencing sympathy) and assist others (acting in morally desirable ways with others). To our knowledge, little is known about whether and how effortful control is associated with the development of moral cognition, although there is empirical evidence supporting the hypothesis that they could be related.
First, both low effortful control and cognitive distortions have been found to predict aggressive adolescent behavior (e.g., Esposito et al. 2017; Hardy et al. 2015). Furthermore, social-cognitive models hypothesize that children who are poor at regulating emotions or lacking in focused attention are more likely to fail at information processing and to show biases in the evaluation of social events (Wikström and Treiber 2009). However, to date, these relationships have not been examined in a systematic way.

By contrast, how context may influence the acquisition of pro-violent attitudes is well explained by social-cognitive theories, such as observational learning theory (Bandura 1978). What children learn from models they observe within their daily contexts is not only specific behaviors but also complex social scripts that, once established through repeated exposure, are easily retrieved from memory to serve as cognitive guides for behavior (Dodge et al. 2006). Through inferences they make from repeated observations, children also develop beliefs about the world in general and about what kind of behavior is acceptable. Overall, it is theorized that being exposed to violent contexts increases the likelihood that children will (1) incorporate aggressive social scripts, (2) develop hostile and unsafe world schemas for interpreting environmental cues and making attributions about others’ intentions, and (3) acquire normative beliefs about violence, suggesting the appropriateness of behaving aggressively (Huesmann 1998). This process results in beliefs that are more approving of violence, more positive moral evaluations of aggressive acts, and more justification for inappropriate behavior that is inconsistent with society’s and the individual’s moral norms. This is defined by Huesmann and Kirwil (2007) as “cognitive desensitization to violence.” Indeed, although desensitization is more properly used to refer to emotional changes that occur with repeated violence exposure, it is also possible to talk about “desensitization” when the changes concern cognitive aspects that lead the individual to develop stronger pro-violent attitudes (i.e., attitudes approving violence as a means of regulating interpersonal contacts; Huesmann 1998). Consistent with this idea, several years before, Ng-Mak et al. (2002) formulated the “pathologic adaptation” model, identifying repeated exposure to community violence as a precursor to a normalization of violence through moral disengagement, which, on the one hand, leads youths to experience less emotional arousal in response to violence and, on the other, facilitates aggressive behavior. However, the development of moral disengagement as a consequence of repeated exposure to violence has not yet been tested empirically in a systematic way.

Beginning with the idea that potential precursors of moral disengagement should be experiences that directly model or at least expose children to attitudes and beliefs condoning the use of antisocial behavior (e.g., distribution and selling of illegal drugs, using violence as a primary conflict resolution strategy), Hyde et al. (2010) focused on neighborhood impoverishment as a potential precursor of moral disengagement, finding a significant association. Moreover, Wilkinson and Carr (2008) tried to raise this point using qualitative data from male violent offenders, reporting that individuals respond to exposure to violence in many ways, some of which seemed to be consistent with traditional concepts of moral disengagement.

Nonetheless, several studies have found significant associations between community violence and acceptance of violence cognitions, or bias of social information processing (see, for example, Allwood and Bell 2008; Bradshaw et al. 2009), whereas Bacchini et al. (2013) showed that higher levels of exposure to community violence as a witness, along with the perception of higher levels of deviancy among peers, reduced the strength of moral criteria for judging moral violations. Overall, to the best of our knowledge, only one study (Dragone et al. 2020) has systematically examined how being exposed to community violence is associated with cognitive distortions as intended in their moral dimension (Arsenio and Lemerise 2004; Ribeaud and Eisner 2010), finding a significant, albeit marginal, association of witnessing community violence with cognitive distortions over time. Furthermore, this relationship was not bidirectional (i.e., cognitive distortions did not predict violence exposure over time), shedding light on the importance of further investigating the predictive relationship between violence experiences within the community and the development of cognitive distortions over time.

The Present Study

The central goal of this study was to investigate how low effortful control and exposure to community violence were associated with developmental trajectories of moral cognitive distortions. As this was the first study focusing on self-serving cognitive distortions as theorized by Gibbs, no specific hypotheses were made about developmental trajectories. Overall, it was expected that membership in the most “distorted” group (e.g., high levels of cognitive distortions that show stability or an increase over time) would be predicted by low effortful control and high frequency of exposure to community violence. Adolescent gender and social desirability were included as controls to ensure that the associations of effortful control and violence exposure with trajectories of cognitive distortions were adjusted for their potential confounding effects.

Methods

Participants and Procedure

The participants were part of an ongoing longitudinal study (ALP; Arzano Longitudinal Project) aimed at investigating the determinants and pathways of typical and atypical development from early to late adolescence. The study design included four data points (one-year intervals) from two cohorts of adolescents who were enrolled in grade nine (Time 1 of the study) in 2013 and 2016, respectively. At Time 1 (T1), the sample consisted of 803 Italian adolescents (349 males and 454 females; Mage = 14.19, SD = 0.57) attending several public schools in the metropolitan area of Naples (Italy). Most of the sample was recruited from two high schools (34.4% and 41.9%), whereas the remaining proportion came from 20 other schools in the same geographic area. The neighborhoods served by these schools are characterized by serious social problems, such as high unemployment (41%), high school-dropout rates (18.8%), and the presence of organized crime, with rates that are among the highest in Italy [Istituto Nazionale di Statistica (ISTAT) 2016]. National statistics are also supported by findings of prior empirical research, documenting that adolescents living in Naples are massively exposed to neighborhood violence in their everyday life (Bacchini and Esposito 2020).

Data collection took place during the spring of 2013 and 2016 (T1), 2014 and 2017 (Time 2; T2), 2015 and 2018 (Time 3; T3), and 2016 and 2019 (Time 4; T4). Parents’ written consent and adolescents’ assent were obtained prior to the administration of questionnaires, which was conducted during classroom sessions by trained assistants. Additionally, participants were informed about the voluntary nature of participation and their right to discontinue at any point without penalty. The socioeconomic condition of participants’ families reflected the Italian national profile [Istituto Nazionale di Statistica (ISTAT) 2016]. Approximately 50% of the fathers and mothers had a low level of education (middle school diploma or less), 28% had a high school diploma, and approximately 8% had a university degree.

Measures

Effortful control

To evaluate temperamental effortful control at T1, adolescents were asked to rate items from the long version of the Early Adolescent Temperament Questionnaire-Revised (EATQ-R) (Ellis and Rothbart 2001). Items were rated on a 5-point Likert scale ranging from “almost never true” (1) to “almost always true” (5). The multi-componential structure of effortful control emerged from the factor analysis of the questionnaire (Ellis and Rothbart 2001). The measure was computed by averaging item ratings of the activation control (e.g., “If I have a hard assignment to do, I get started right away”), attention control (e.g., “I pay close attention when someone tells me how to do something”), and inhibitory control (e.g., “When someone tells me to stop doing something, it is easy for me to stop”) scales, after recoding inversely formulated items (α = 0.77, ωh = 0.67). Items were translated from English into Italian by two native Italian speakers, experts in psychology and fluent in English, and then back-translated by a native English speaker to ensure their comparability to the English version.
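
The scoring rule described above (recode inversely formulated items, then average) can be illustrated with a minimal Python sketch. The item identifiers and the choice of which item is reverse-keyed are hypothetical placeholders, not the actual EATQ-R scoring key, and the function name `score_scale` is introduced here only for illustration.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # 5-point Likert scale, as described in the text


def score_scale(responses, reversed_items=frozenset()):
    """Average item ratings after recoding inversely formulated items.

    responses: dict mapping item id -> rating (1..5)
    reversed_items: ids of inversely formulated items (hypothetical here)
    """
    recoded = []
    for item, rating in responses.items():
        if not SCALE_MIN <= rating <= SCALE_MAX:
            raise ValueError(f"rating out of range for {item}: {rating}")
        if item in reversed_items:
            # Mirror the rating around the scale midpoint: 1<->5, 2<->4, 3 stays 3.
            rating = SCALE_MIN + SCALE_MAX - rating
        recoded.append(rating)
    return mean(recoded)


# Hypothetical responses; item ids are placeholders, not EATQ-R item numbers.
example = {"act_1": 4, "att_1": 5, "inh_1": 2, "inh_2": 1}
print(score_scale(example, reversed_items={"inh_2"}))
```

The same averaging logic, without reverse-keyed items, would apply to any scale score defined as a simple item mean.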

Exposure to community violence

Exposure to community violence was self-reported during T1 using the Exposure to Community Violence Questionnaire (Esposito et al. 2017), consisting of 12 items. Adolescents were asked to report the frequency (from 1 = “never” to 5 = “more than five times”) of being a victim or a witness of violent incidents that had occurred during the last year in their neighborhood. Sample items were, “How many times have you been chased by gangs, other kids, or adults?” and “How many times have you seen somebody get robbed?” For each participant, items of each scale were averaged to form a global score of exposure to community violence (α = 0.85, ωh = 0.68).

Self-serving cognitive distortions

At each time point, participants responded to the 39 items in the “How I Think Questionnaire” (HIT) (Barriga et al. 2001; Italian validation by Bacchini et al. 2016), measuring self-serving cognitive distortions. Each item was rated on a 6-point Likert scale from “agree strongly” to “disagree strongly.” A sample item was: “If someone leaves a car unlocked, they are asking to have it stolen.” The mean response to the 39 items is the overall HIT score, with higher scores indicating higher levels of cognitive distortions (αs ranged from 0.95 to 0.97; ωh ranged from 0.84 to 0.90).

Social desirability

As a control variable, participants were asked to complete 12 items from the Lie scale of the Big Five Questionnaire (Caprara et al. 1993) to assess social desirability bias. Items were rated on a 5-point Likert-type scale ranging from “very false for me” to “very true for me”. Sample items were: “I’ve always gotten along with everyone” and “I’ve never told a lie.” The scale score was created by averaging item scores, with higher scores reflecting higher levels of socially desirable responding (α = 0.77; ωh = 0.78).

Attrition Analysis

All T1 participants completed the HIT Questionnaire at T2. Seventy-two (9%) of the T1 and T2 participants did not complete the questionnaire at T3, and 151 (18.8%) did not complete it at T4. Attrition was mainly due to the absence of adolescents from school during assessments. Little’s test (Little and Rubin 2002) of whether data were missing completely at random (MCAR), run in SPSS 21, was significant, χ2 (26) = 73.69, p < 0.001, suggesting that the data were not missing completely at random. Independent t-tests comparing mean differences between missing and non-missing cases suggested that participants who dropped out on measures of cognitive distortions at T3 and T4 reported higher levels of cognitive distortions at the previous time point, and higher levels of community violence exposure, effortful control, and social desirability at T1 (all ps < 0.05).

Analytic Approach

The main analyses were conducted in Mplus 8 (Muthén and Muthén 1998-2017). Missing data were handled using full-information maximum-likelihood (FIML) method with the assumption that the data were missing at random (MAR; Little and Rubin 1989). As indicated in previous work (Wang and Bodner 2007), FIML is an especially useful missing-data treatment in longitudinal designs because the outcome scores for dropouts tend to be correlated with their own previously recorded responses from earlier waves (i.e., an MAR pattern).

Latent growth mixture models (GMMs) were used to identify distinct developmental trajectories (or classes) of cognitive distortions (Muthén 2004; Muthén and Muthén 2000). Extending the logic of multiple-group growth models, where groups are defined a priori, the GMM identifies classes of individuals post hoc, such that individuals in the same class have similar trajectories and individuals in different classes have sufficiently divergent trajectories (Grimm et al. 2017). Using a person-centered approach, GMMs are extremely useful for developmental researchers, in that they allow researchers not only to identify different classes of intra-individual (within-person) change, but also to test hypotheses about inter-individual (between-person) differences in that intra-individual change by examining (1) antecedents, or predictors, and (2) consequences, or distal outcomes, of class membership (Wickrama et al. 2016).

The GMM analysis consisted of three steps. First, simple growth models were run to determine the growth parameters for the GMM. Specifically, three unconditional (without any covariate) latent growth models were tested and compared: (1) intercept only, in which each individual has an intercept, but no change over time is estimated, (2) intercept and linear slope, which allows individual scores to change linearly over time and permits individuals to differ in their rates of change, and (3) intercept and quadratic slope, in which the rate of change is assumed to be non-linear. To compare these nested models, the value of −2log likelihood (−2LL) was used to perform χ2 tests with degrees of freedom equal to the difference in the number of degrees of freedom between the models.
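
As an illustration of the nested-model comparison described above, the following Python sketch computes the difference in −2LL between a restricted and a fuller model and the corresponding upper-tail χ2 probability. It uses only the standard library; the function names and the −2LL values in the usage line are hypothetical and are not taken from the study’s tables.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square with integer df >= 1."""
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 1:
        k, sf = 1, math.erfc(math.sqrt(half))  # closed form for df = 1
    else:
        k, sf = 2, math.exp(-half)             # closed form for df = 2
    while k < df:
        # Recurrence: sf_{k+2}(x) = sf_k(x) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
        sf += math.exp((k / 2.0) * math.log(half) - half - math.lgamma(k / 2.0 + 1.0))
        k += 2
    return sf


def likelihood_ratio_test(neg2ll_restricted, neg2ll_full, df_diff):
    """Return the chi-square statistic (change in -2LL) and its p value."""
    stat = neg2ll_restricted - neg2ll_full
    return stat, chi2_sf(stat, df_diff)


# Hypothetical -2LL values for a no-growth model versus a linear growth model.
stat, p = likelihood_ratio_test(5210.4, 5150.2, df_diff=3)
print(f"chi2 = {stat:.1f}, p = {p:.3g}")
```

A small p value would indicate that the fuller model fits significantly better than the restricted one.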

Second, once the linear growth model had been selected as the best-fitting model, five specific models were fit to examine class differences within certain parameters of the linear growth model. The first model (M1; Table 2) was the baseline (invariance) model, where all estimated parameters were invariant across classes. This model treats the data as if there is only one class. The second model (M2; Table 3) was a latent class growth model (LCG), in which means are estimated and within-class variances are fixed to zero. This model assumes that all individual trajectories within a class are homogeneous. In the third model (M3; Table 3), the means of the intercept and slope are class specific. That is, individuals are probabilistically placed in classes that differ in their baseline levels and rates of change.

The fourth model (M4; Table 3) is the means and covariances model, where the average trajectories, the magnitude of between-person differences in the intercept and slope, and the association between intercepts and slopes within each class are class specific. Finally, in the fifth model (M5; Table 3), classes are allowed to differ in all estimated parameters of the linear growth model: means, covariances, and residual variances. The residual variances, in particular, provide information about within-person fluctuations in scores over time. As recommended by Grimm et al. (2017), within each model type, models with various numbers of classes were fit, starting with two-class models and increasing the number of latent classes incrementally until the model encountered convergence issues or the model fit indicated that additional classes were unlikely to produce viable results.

Models were compared based on fit criteria and the interpretation of model parameters. First, model convergence was examined. Second, the information criteria were examined: BIC, AIC, and sample-size-adjusted BIC. In general, lower values indicate a better fitting model. Third, likelihood ratio tests [Lo-Mendell-Rubin (LMR-LRT), or the bootstrap likelihood ratio test], which provide additional information for model selection within each model type (e.g., M2 models), were examined. Statistically significant values indicate that dropping one class from the model would significantly worsen the model fit. Thus, a probability lower than 0.001 for a two-class model indicates that it is preferred to the one-class model, and so on.
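
For reference, the three information criteria mentioned above can be computed from a model’s log likelihood, its number of free parameters, and the sample size. The sketch below uses the standard definitions, with the sample-size-adjusted BIC replacing n by (n + 2)/24; the function name and the numeric inputs other than the sample size of 803 are hypothetical.

```python
import math


def information_criteria(log_likelihood, n_params, n_obs):
    """Return AIC, BIC, and sample-size-adjusted BIC (lower is better)."""
    neg2ll = -2.0 * log_likelihood
    return {
        "AIC": neg2ll + 2 * n_params,
        "BIC": neg2ll + n_params * math.log(n_obs),
        # Adjusted BIC substitutes (n + 2) / 24 for n in the penalty term.
        "saBIC": neg2ll + n_params * math.log((n_obs + 2) / 24),
    }


# Hypothetical log likelihoods and parameter counts for two candidate models.
candidates = {
    "two-class": information_criteria(-2600.0, 11, 803),
    "three-class": information_criteria(-2570.0, 17, 803),
}
best = min(candidates, key=lambda name: candidates[name]["BIC"])
print(best, candidates[best])
```

Because the BIC penalty grows with the logarithm of the sample size, it tends to favor more parsimonious models than the AIC when the sample is large.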

Fourth, the entropy statistic and the average posterior probabilities were examined. Specifically, entropy is a standardized index (i.e., ranging from 0 to 1) of model-based classification accuracy. Higher values indicate improved enumeration accuracy, which indicates clear class separation (Nagin 2005). The entropy statistic is based on estimated posterior probabilities for each class. For example, a probability of 0.91 suggests that 91% of subjects in the assigned class fit that category, while 9% of the subjects in that given class are not accurately described by that category (Fanti and Henrich 2010). Overall, the model with lower information criteria, higher entropy and average posterior probability values, and statistically significant p values for the likelihood ratio tests shows the best fit. However, this information was also supplemented with substantive knowledge of the phenomena being studied to identify the model that best represented the data, as generally recommended (Grimm et al. 2017; Muthén 2003).
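
To make the classification indices concrete, the sketch below computes the relative entropy statistic and the average posterior probability for each most-likely class from a matrix of posterior class probabilities. It assumes the commonly used relative entropy formula, 1 − Σ(−p ln p) / (n ln K); the function names and the example posterior probabilities are hypothetical.

```python
import math


def relative_entropy(posteriors):
    """Relative entropy in [0, 1]; posteriors is a list of per-person rows
    of class posterior probabilities (each row sums to 1)."""
    n = len(posteriors)
    k = len(posteriors[0])
    if k < 2:
        raise ValueError("at least two classes are required")
    total = 0.0
    for row in posteriors:
        for p in row:
            if p > 0.0:  # treat 0 * ln(0) as 0
                total -= p * math.log(p)
    return 1.0 - total / (n * math.log(k))


def average_posterior_by_assigned_class(posteriors):
    """Mean posterior probability of the assigned (most likely) class."""
    sums, counts = {}, {}
    for row in posteriors:
        assigned = max(range(len(row)), key=lambda c: row[c])
        sums[assigned] = sums.get(assigned, 0.0) + row[assigned]
        counts[assigned] = counts.get(assigned, 0) + 1
    return {c: sums[c] / counts[c] for c in sums}


# Hypothetical posterior probabilities for four adolescents and three classes.
post = [[0.92, 0.06, 0.02], [0.10, 0.85, 0.05], [0.05, 0.15, 0.80], [0.88, 0.10, 0.02]]
print(relative_entropy(post))
print(average_posterior_by_assigned_class(post))
```

Values of both indices close to 1 indicate that individuals are assigned to their most likely class with little ambiguity.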

After selecting the optimal model, the third and final step was to extend the GMM to include covariates as predictors of trajectory class membership. Some researchers (Li and Hser 2011; Lubke and Muthén 2007; Muthén 2004) have recommended using the model with covariates when determining the appropriate number of latent classes, whereas others (Enders and Tofighi 2007) have argued that class enumeration should be done without covariates. The latter approach was used because it ensures that the latent classes are based on the longitudinal trajectories and not the covariates (Grimm et al. 2017).

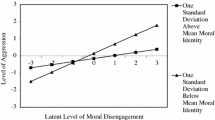

Following this suggestion, auxiliary variables were included as predictors in the GMM using a three-step approach (Nylund-Gibson et al. 2014). The first step consisted of the estimation of the unconditional (without covariates) mixture model. In the second step, individual probabilities of trajectory class membership estimated from the latent class posterior probabilities were used to classify individuals into one or another class, while retaining knowledge of the uncertainty of that classification (in principle, the standard error of that classification). Third, a new latent GMM was formulated that examined the relationships among the covariates and the latent class variable. Thus, the effects of covariates can be studied while ensuring both that the latent class variable is derived only from the repeated measures and that the uncertainty inherent in the classification is taken into consideration. Specifically, adolescent gender, exposure to community violence, and effortful control at T1 were added as predictors of longitudinal trajectories of cognitive distortions (see Fig. 1). According to this procedure, when a predictor is included in a GMM, a multinomial regression is performed to investigate the influence of a predictor on between-class variation.
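
The multinomial regression link mentioned above can be sketched as follows: each non-reference class has a logit relative to the reference class, class membership probabilities follow from a softmax over those logits, and an odds ratio is the exponentiated coefficient. This sketch illustrates only the multinomial logit itself, not the classification-error correction of the three-step approach; the class labels, predictor names, and coefficient values are hypothetical and are not estimates from the study.

```python
import math


def class_probabilities(coefs, x, reference="class2"):
    """Class membership probabilities from multinomial logit coefficients.

    coefs: dict class -> (intercept, {predictor: beta}), relative to `reference`
    x: dict predictor -> value for one individual
    """
    logits = {reference: 0.0}  # the reference class has a logit of zero
    for cls, (intercept, betas) in coefs.items():
        logits[cls] = intercept + sum(b * x[name] for name, b in betas.items())
    m = max(logits.values())  # subtract the maximum for numerical stability
    expd = {c: math.exp(v - m) for c, v in logits.items()}
    z = sum(expd.values())
    return {c: e / z for c, e in expd.items()}


def odds_ratio(beta):
    """Odds ratio for a one-unit increase in the predictor."""
    return math.exp(beta)


# Hypothetical coefficients (not the values reported in the study's tables).
coefs = {
    "class1": (-0.2, {"violence_exposure": 0.6, "effortful_control": -0.5}),
    "class3": (-1.0, {"violence_exposure": -0.3, "effortful_control": 0.7}),
}
person = {"violence_exposure": 1.5, "effortful_control": 3.0}
print(class_probabilities(coefs, person))
print(odds_ratio(0.6))
```

Under this parameterization, a positive coefficient for a class means that higher values of the predictor raise the odds of belonging to that class rather than to the reference class.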

Results

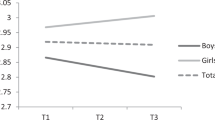

Descriptive statistics and correlations between all variables used in the study are shown in Table 1. As can be observed, all the study’s variables were significantly associated with each other. Females reported marginally significantly lower levels of community violence exposure and of cognitive distortions at T2 and T3.

The model fit information for all estimated models is presented in Table 2. The significant change of −2LL between the no-growth and linear growth models suggested that the linear growth model fit the data significantly better than the no-growth model. The change of −2LL was also marginally significant between the linear and quadratic growth models. However, the inspection of the other model fit indices and parameters indicated a better fit for the linear model.

When examining the model convergence of GMM, as noted by asterisks in Table 3, several models did not properly converge. Specifically, the variance of the slope was negative in one or more of the classes in the two- and three-class means models (M3) and covariance models (M4). In the four-class M4 and M5, the best log likelihood value was not replicated, indicating that additional classes are unlikely to produce viable results. Given these results, our evaluation was then specifically focused on M2 and M5.

With respect to the information criteria (BIC, AIC, and sample size adjusted BIC), it was evident that there was a decrease when moving from one to two classes and from two to three classes in both models (M2 and M5). The likelihood ratio tests for M2 and M5 indicate that the two-class model was preferred to the one-class model (all ps < 0.001), and the three-class model was preferred to the two-class model (p = 0.05 and < 0.001, respectively). The entropy statistic was higher for the two-class LCG model (0.77) compared with the three-class LCG and two- and three-class means, covariances, and residual variance models. However, for the three-class models, the entropy value was the same for M2 and M5; diagonal probabilities for the three-class M5 ranged from 0.87 to 0.91, and from 0.82 to 0.91 in the three-class M2.

Based on this information, the three-class means, covariances, and residual variance model (M5) was selected as the best fitting model. The three identified trajectory classes are shown in Fig. 2: (1) moderately high and stable cognitive distortions (N = 311), (2) moderate and decreasing cognitive distortions (N = 363), and (3) low and decreasing cognitive distortions (N = 129).

Parameter estimates from this model are shown in Table 4. Specifically, the mean yearly rate of change in cognitive distortions was not statistically significant for class 1. Classes 2 and 3 included adolescents with a significant negative mean rate of change over time. In classes 1 and 2, there was a significant negative covariance between the intercept and slope, indicating that CD levels tend to decrease more slowly over time in adolescents who had higher values at T1. Moreover, there were significant between-person differences in the intercept in all classes, whereas significant between-person differences in the slope were found for classes 1 and 2.
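For reference, the class-specific linear growth model behind these estimates can be written as follows. The notation is introduced here for illustration and is not the authors' own: $y_{ti}$ is the cognitive distortion score of adolescent $i$ at wave $t$, $k$ indexes the latent class, and the time scores $\lambda_t = 0, 1, 2$ assume three yearly waves coded from T1.

```latex
\begin{align}
y_{ti} &= \eta_{0i} + \lambda_t \, \eta_{1i} + \varepsilon_{ti},
  \qquad \varepsilon_{ti} \sim N(0, \theta_k) \\
\eta_{0i} &= \alpha_{0k} + \zeta_{0i} \\
\eta_{1i} &= \alpha_{1k} + \zeta_{1i} \\
\begin{pmatrix} \zeta_{0i} \\ \zeta_{1i} \end{pmatrix}
  &\sim N\!\left( \begin{pmatrix} 0 \\ 0 \end{pmatrix},
  \begin{pmatrix} \psi_{00k} & \psi_{01k} \\ \psi_{01k} & \psi_{11k} \end{pmatrix} \right)
\end{align}
```

In this notation, $\alpha_{1k}$ is the mean rate of change in class $k$, $\psi_{00k}$ and $\psi_{11k}$ are the between-person variances of the intercept and slope, and $\psi_{01k}$ is the intercept-slope covariance. Allowing $\alpha$, $\psi$, and $\theta$ to differ across classes corresponds to the means, covariances, and residual variance specification (M5).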

Precursors of Developmental Trajectories of Cognitive Distortions

Table 5 presents the logistic coefficients and odds ratios resulting from the multinomial logit regression analysis, in which classes were regressed on exposure to community violence and effortful control at T1, controlling for gender and social desirability. In interpreting the multinomial coefficients, class 2 (moderate and decreasing) was used as the reference class. Specifically, the log-odds of being in class 1 (moderately high and stable) rather than class 2 increased with the amount of exposure to community violence. Similarly, low levels of effortful control and being male increased the log-odds of being in class 1 relative to class 2, whereas high effortful control increased the log-odds of being in class 3. No significant associations were found between social desirability and trajectory class membership.
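To make the interpretation of these coefficients concrete, the sketch below shows how multinomial logit coefficients with class 2 as the reference translate into odds ratios and predicted class probabilities. All coefficient values are hypothetical and were chosen only to mirror the direction of the reported effects; they are not the estimates in Table 5, and the predictor names are illustrative.

```python
import math

# Hypothetical coefficients (NOT the Table 5 estimates). Class 2 is the
# reference class, so its logit is fixed at zero.
COEFS = {
    "class1": {"intercept": -0.2, "violence": 0.5, "effortful_control": -0.6, "male": 0.4},
    "class3": {"intercept": -1.0, "violence": 0.0, "effortful_control": 0.7, "male": 0.0},
}

def odds_ratio(beta):
    """Odds ratio implied by a logit coefficient."""
    return math.exp(beta)

def class_probabilities(predictors, coefs=COEFS, reference="class2"):
    """Predicted class membership probabilities for one adolescent."""
    logits = {reference: 0.0}
    for cls, params in coefs.items():
        logits[cls] = params["intercept"] + sum(
            params[name] * value for name, value in predictors.items()
        )
    denominator = sum(math.exp(value) for value in logits.values())
    return {cls: math.exp(value) / denominator for cls, value in logits.items()}

# Standardized predictor values for two hypothetical male adolescents.
low_exposure = {"violence": -1.0, "effortful_control": 0.0, "male": 1.0}
high_exposure = {"violence": 1.0, "effortful_control": 0.0, "male": 1.0}
print(odds_ratio(COEFS["class1"]["violence"]))
print(class_probabilities(low_exposure))
print(class_probabilities(high_exposure))
```

An odds ratio above 1 for a predictor means that higher values raise the odds of belonging to that class rather than to the reference class, which is how the effect of community violence exposure on class 1 membership is read.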

Discussion

Self-serving cognitive distortions have been described as thinking errors that originate from an individual’s persistence in immature moral judgment stages during adolescence and adulthood, increasing the risk of the individual’s engagement in antisocial or immoral conduct (Gibbs 2013). The aim of the current study was to investigate the trajectories of moral cognitive distortions in adolescence, and the simultaneous contribution of effortful control and community violence exposure as individual- and environmental-level factors, respectively, in making adolescents more prone to using cognitive distortions when interpreting social situations. Using a person-centered approach, developmental trajectories of cognitive distortions in adolescence were first identified. Then, effortful control and exposure to community violence were tested as potential predictors of those trajectories, controlling for adolescent gender and social desirability.

Overall, three trajectories were identified to best explain variation in cognitive distortions over time. Approximately 45% of the sample demonstrated a moderately high and relatively stable trajectory of cognitive distortions, whereas 39% reported initially moderate levels of cognitive distortions that decreased over time. Finally, a small number of participants (16%) showed low initial levels of cognitive distortions, with a decreasing tendency over time.

The identified trajectories were partially consistent with those identified by Paciello et al. (2008). Those authors found a general decline of moral disengagement over time that, in their opinion, could reflect changes in cognitive and social structures that occur during adolescence and that, in turn, promote moral reasoning and then moral agency (Eisenberg 2000). Following Gibbs’ theory, the declining trajectories found in the current study, which included about half of the study’s sample, could be interpreted as the expression of a developmental process through which, along with other cognitive and socioemotional achievements, youths progress from a relatively superficial level, characterized by schemas that generate a greater use of egocentric and pragmatic self-serving thinking errors or cognitive distortions, to a more mature level of interpersonal and sociomoral reasoning, in which they can take on the roles or consider the perspectives of others (Gibbs 1995). This perspective, which recognizes that moral maturity develops over time, is also supported by research adopting the moral domain approach (Tisak and Turiel 1988) with children of different ages. More specifically, moral domain theorists have shown that understanding within the moral domain develops from a focus on concrete harm in early childhood to an understanding of fairness in later childhood (Smetana 2006). Unlike cognitive-developmental theorists, however, domain theory does not elaborate on the cognitive or affective processes that allow this development to occur.

The other half of the study’s sample followed the trajectory with initially moderately high and, over time, stable cognitive distortions. This finding highlights a high likelihood that high school youths display a persistent and pronounced egocentric bias that consolidates into cognitive distortions (Barriga et al. 2000), perhaps reflecting a prolonged immature or superficial moral judgment stage. The condition in which cognitive distortions persist over time has been described by Gibbs as a “moral developmental delay,” which has been widely documented among antisocial and delinquent youth (Gibbs 2013).

Predictors of Cognitive Distortions Trajectories

When examining how effortful control and community violence exposure were related to developmental trajectories of cognitive distortions, the results indicated that both factors affected the likelihood of following one developmental trajectory rather than another. Regarding effortful control, two main results emerged: first, high effortful control was associated with initially lower and, over time, decreasing levels of cognitive distortions, supporting the hypothesis that moral development progresses along with cognitive and socioemotional development, as suggested by Gibbs (2013); and second, low effortful control predicted moderately high and stable cognitive distortions over time, indicating that the persistence of thinking errors during adolescence could depend on an individual’s failure to moderate emotional responses, direct attention, organize information, and delay impulsive action (Wikström and Treiber 2009).

Furthermore, a high frequency of exposure to community violence was a significant risk factor for being in the class with higher and relatively stable cognitive distortions, relative to the moderate and decreasing class. This finding is consistent with the hypothesis that youths could become desensitized to violence after repeated exposure (Huesmann and Kirwil 2007; Mrug et al. 2015), as well as with previous findings highlighting a close association between community violence and the development of positive attitudes toward violence (Allwood and Bell 2008), normative beliefs about violence (McMahon et al. 2013), or hostile attributional bias (Bradshaw et al. 2009). Although a relatively large number of studies have examined single indicators of the alteration of youths’ cognitive processes through their experiences of violence, the results of this study extend prior findings by addressing this issue from a moral perspective. This finding is supported by a recent study that investigated the longitudinal and reciprocal effects between community violence exposure and cognitive distortions in a sample of Italian adolescents (Dragone et al. 2020). In more detail, the authors found that violence exposure within the community significantly predicted cognitive distortions one year later, whereas cognitive distortions did not predict violence exposure longitudinally. This approach allows for the speculation that growing up in a violent neighborhood, like aversive parenting or deviant peers in previous studies (Ribeaud and Eisner 2015), might undermine the normative process of moral development, thus causing the moral delay hypothesized by Gibbs, which consolidates into self-serving cognitive distortions (Gibbs 2004).

Overall, these findings support the need to design and implement preventive interventions aimed at strengthening youth moral cognition and enhancing individual self-regulatory abilities when facing social problems. Examples of such programs have been widely documented in the literature (Gibbs 2013; Steinberg 2014). Within Gibbs’ theoretical framework, a school-based program, “EQUIP for Educators,” has been developed and implemented in several contexts (Van der Velden et al. 2010). EQUIP for Educators is an adapted version of the original EQUIP program designed for the treatment of juvenile offenders (Equipping Youth to Help One Another; Gibbs et al. 1995). One of the main strengths of this program, in line with this study’s findings, is that it takes into account and attempts to transform the distorted and harmful culture in which cognitive distortions develop and persist over time. Through the establishment of a mutual help approach, this psychoeducational program aims to equip youth with mature moral judgment and with skills to manage anger and correct cognitive distortions.

With respect to control variables, males were more likely to show high and stable levels of cognitive distortions over time, whereas gender was not a significant predictor of showing low and decreasing cognitive distortions. These results are consistent with gender differences found in the previous literature: while moral judgment does not appear to vary according to gender and cognitive deficits do seem to represent risk factors for both genders (Barriga et al. 2001), males generally self-report more cognitive distortions than females (Lardén et al. 2006; Owens et al. 2012). Further studies are still needed to understand how these differences originate.

Limitations and Future Directions

In interpreting these findings, some limitations must be considered. First, all measurements in the study relied exclusively on adolescent self-reports. Although the effects were controlled for social desirability in the current study, future studies may benefit from using a multi-informant approach (e.g., parent reports for temperamental constructs) jointly with self-report measures. Furthermore, data concerning the frequency of exposure to community violence could benefit from a more objective and comprehensive description of violence in the everyday lives of adolescents, including official data from national census agencies and police departments. Another limitation concerns the generalizability of the results, as the study included a sample from a limited geographic area in Southern Italy. As described above, the context where data were collected is characterized by serious social problems that expose adolescents to high-risk situations (e.g., violence exposure, youth delinquency, gang affiliation). This might shape culture-specific beliefs and values that in turn might influence an individual’s cognitions and behaviors (Bacchini et al. 2015). More research is needed to confirm that the explanatory model proposed in this study applies to populations from other, possibly differing, cultural contexts. Furthermore, based on the current findings, future studies should investigate potential gender-related differences in patterns of development of cognitive distortions.

Conclusion

The current findings extend the literature concerning the role of individual self-regulatory mechanisms and environmental factors in the development of moral cognition. Using a person-centered approach, the findings of the present study indicated that: (1) most adolescents exhibited declining levels of self-serving cognitive distortions over time, and (2) adolescents who exhibited initially higher levels of cognitive distortions also showed a tendency to remain stable over time; they were typically male youth, with low effortful control and a high frequency of exposure to community violence. These results point to the importance of considering moral development as a process involving multiple levels of individual ecology. Future studies may deepen the investigation of other social, cognitive, and biological factors that could influence trajectories of moral cognition, both to expand knowledge about the development and persistence of biases in moral cognition in adolescence (and their adjustment-related outcomes) and to design appropriate interventions that could prevent adolescents from developing these biases. The findings of the current study call specific attention to the need to support children and adolescents in developing skills that could help them to cope successfully with violence and high-risk environments, including self-regulatory abilities, social problem-solving skills, and moral education.

References

Allwood, M. A., & Bell, D. J. (2008). A preliminary examination of emotional and cognitive mediators in the relations between violence exposure and violent behaviors in youth. Journal of Community Psychology, 36(8), 989–1007. https://doi.org/10.1002/jcop.20277.

Arsenio, W. F., & Lemerise, E. A. (2004). Aggression and moral development: integrating social information processing and moral domain models. Child Development, 75(4), 987–1002. https://doi.org/10.1111/j.1467-8624.2004.00720.x.

Bacchini, D., Affuso, G., & Aquilar, S. (2015). Multiple forms and settings of exposure to violence and values: unique and interactive relationships with antisocial behavior in adolescence. Journal of Interpersonal Violence, 30(17), 3065–3088. https://doi.org/10.1177/0886260514554421.

Bacchini, D., Affuso, G., & De Angelis, G. (2013). Moral vs. non-moral attribution in adolescence: environmental and behavioural correlates. European Journal of Developmental Psychology, 10(2), 221–238. https://doi.org/10.1080/17405629.2012.744744.

Bacchini, D., De Angelis, G., Affuso, G., & Brugman, D. (2016). The structure of self-serving cognitive distortions: a validation of the “How I Think” questionnaire in a sample of Italian adolescents. Measurement and Evaluation in Counseling and Development, 49(2), 163–180. https://doi.org/10.1177/0748175615596779.

Bacchini, D., & Esposito, C. (2020). Growing up in violent contexts: differential effects of community, family, and school violence on child adjustment. In N. Balvin & D. Christie (Eds.), Children and peace. Peace Psychology Book Series. Cham: Springer.

Bandura, A. (1978). Social learning theory of aggression. Journal of Communication, 28(3), 12–29.

Bandura, A. (1986). The explanatory and predictive scope of self-efficacy theory. Journal of Social and Clinical Psychology, 4(3), 359–373. https://doi.org/10.1521/jscp.1986.4.3.359.

Bandura, A. (2002). Social cognitive theory in cultural context. Applied Psychology, 51(2), 269–290. https://doi.org/10.1111/1464-0597.00092.

Barriga, A. Q., & Gibbs, J. C. (1996). Measuring cognitive distortion in antisocial youth: development and preliminary validation of the “How I Think” questionnaire. Aggressive Behavior, 22(5), 333–343.

Barriga, A. Q., Gibbs, J. C., Potter, G. B., & Liau, A. K. (2001). How I think (HIT) questionnaire manual. Champaign, IL: Research Press.

Barriga, A. Q., Landau, J. R., Stinson, B. L., Liau, A. K., & Gibbs, J. C. (2000). Cognitive distortion and problem behaviors in adolescents. Criminal Justice and Behavior, 27(1), 36–56. https://doi.org/10.1177/0093854800027001003.

Bradshaw, C. P., Rodgers, C. R., Ghandour, L. A., & Garbarino, J. (2009). Social–cognitive mediators of the association between community violence exposure and aggressive behavior. School Psychology Quarterly, 24(3), 199–210. https://doi.org/10.1037/a0017362.

Caprara, G. V., Barbaranelli, C., Borgogni, L., & Perugini, M. (1993). The “Big Five Questionnaire”: a new questionnaire to assess the five factor model. Personality and Individual Differences, 15(3), 281–288. https://doi.org/10.1016/0191-8869(93)90218-r.

Carlo, G., Crockett, L. J., Wolff, J. M., & Beal, S. J. (2012). The role of emotional reactivity, self‐regulation, and puberty in adolescents’ prosocial behaviors. Social Development, 21, 667–685. https://doi.org/10.1111/j.1467-9507.2012.00660.x.

Crick, N. R., & Dodge, K. A. (1994). A review and reformulation of social information-processing mechanisms in children’s social adjustment. Psychological Bulletin, 115(1), 74–101. https://doi.org/10.1037/0033-2909.115.1.74.

Dodge, K. A., Coie, J. D., & Lynam, D. (2006). Aggression and antisocial behavior in youth. In W. Damon, R. M. Lerner, & N. Eisenberg (Eds.), Handbook of child psychology: vol. 3. social, emotional, and personality development (6th ed., pp. 719–788). New York: John Wiley.

Dodge, K. A., & Rabiner, D. L. (2004). Returning to roots: on social information processing and moral development. Child Development, 75(4), 1003–1008.

Dragone, M., Esposito, C., De Angelis, G., Affuso, G., & Bacchini, D. (2020). Pathways linking exposure to community violence, self-serving cognitive distortions and school bullying perpetration: a three-wave study. International Journal of Environmental Research and Public Health, 17(1), 188–205. https://doi.org/10.3390/ijerph17010188.

Eisenberg, N. (2000). Emotion, regulation, and moral development. Annual Review of Psychology, 51(1), 665–697. https://doi.org/10.1146/annurev.psych.51.1.665.

Eisenberg, N. (2010). Empathy-related responding: links with self-regulation, moral judgment, and moral behavior. In M. Mikulincer & P. R. Shaver (Eds.), Prosocial motives, emotions, and behavior: the better angels of our nature (pp. 129–148). American Psychological Association. https://doi.org/10.1037/12061-007.

Eisenberg, N., Smith, C. L., & Spinrad, T. L. (2016). Effortful control: relations with emotion regulation, adjustment, and socialization in childhood. In K. D. Vohs & R. F. Baumeister (Eds.), Handbook of self-regulation: research, theory and applications (3rd ed., pp. 458–478). New York: Guilford Press.

Ellis, L. K., & Rothbart, M. K. (2001). Revision of the early adolescent temperament questionnaire [Poster session]. Biennial Meeting of the Society for Research in Child Development, Minneapolis, MN, United States.

Enders, C. K., & Tofighi, D. (2007). Centering predictor variables in cross-sectional multilevel models: a new look at an old issue. Psychological Methods, 12(2), 121–138. https://doi.org/10.1037/1082-989X.12.2.121.

Esposito, C., Bacchini, D., Eisenberg, N., & Affuso, G. (2017). Effortful control, exposure to community violence, and aggressive behavior: exploring cross‐lagged relations in adolescence. Aggressive Behavior, 43(6), 588–600. https://doi.org/10.1002/ab.21717.

Fanti, K. A., & Henrich, C. C. (2010). Trajectories of pure and co-occurring internalizing and externalizing problems from age 2 to age 12: findings from the National Institute of Child Health and Human Development Study of Early Child Care. Developmental Psychology, 46(5), 1159–1175. https://doi.org/10.1037/a0020659.

Gibbs, J. C. (1995). The cognitive developmental perspective. In W. M. Kurtines & J. L. Gewirtz (Eds.), Moral development: an introduction (pp. 27–48). Boston: Allyn & Bacon.

Gibbs, J. C. (2004). Moral reasoning training: the values component. In P. Goldstein, R. Nensén, B. Daleflod & M. Kalt (Eds.), New perspectives on aggression replacement training: practice, research, and application (pp. 50–72). West Sussex: Wiley.

Gibbs, J. C. (2013). Moral development and reality: beyond the theories of Kohlberg, Hoffman, and Haidt (3rd ed.). New York: Oxford University Press. https://doi.org/10.1093/acprof:osobl/9780199976171.001.0001.

Gibbs, J. C., Potter, G. B., & Goldstein, A. P. (1995). The EQUIP program: teaching youth to think and act responsibly through a peer-helping approach. Champaign, IL: Research Press. https://doi.org/10.1037/e552692012-085.

Grimm, K. J., Ram, N., & Estabrook, R. (2017). Growth modeling: structural equation and multilevel modeling approaches. London: The Guilford Press.

Guerra, N. G., Huesmann, R. L., & Spindler, A. (2003). Community violence exposure, social cognition, and aggression among urban elementary school children. Child Development, 74, 1561–1576. https://doi.org/10.1111/1467-8624.00623.

Hardy, S. A., Bean, D. S., & Olsen, J. A. (2015). Moral identity and adolescent prosocial and antisocial behaviors: interactions with moral disengagement and self-regulation. Journal of Youth and Adolescence, 44, 1542–1554. https://doi.org/10.1007/s10964-014-0172-1.

Huesmann, L. R. (1998). The role of social information processing and cognitive schema in the acquisition and maintenance of habitual aggressive behavior. New York: Academic Press.

Huesmann, L. R., & Kirwil, L. (2007). Why observing violence increases the risk of violent behavior in the observer. Cambridge: Cambridge University Press.

Hyde, L. W., Shaw, D. S., & Moilanen, K. L. (2010). Developmental precursors of moral disengagement and the role of moral disengagement in the development of antisocial behavior. Journal of Abnormal Child Psychology, 38(2), 197–209. https://doi.org/10.1007/s10802-009-9358-5.

Istituto Nazionale di Statistica (ISTAT) (2016). Bes 2016. Il benessere equo e sostenibile in Italia [Bes 2016. The equitable and sustainable welfare in Italy]. Roma: Istituto Nazionale di Statistica. https://www.istat.it/it/files/2016/12/BES-2016.pdf.

Kohlberg, L. (1984). Essays on moral development: vol. 2. The psychology of moral development. San Francisco: Harper & Row.

Lardén, M., Melin, L., Holst, U., & Långström, N. (2006). Moral judgement, cognitive distortions and empathy in incarcerated delinquent and community control adolescents. Psychology, Crime & Law, 12(5), 453–462. https://doi.org/10.1080/10683160500036855.

Li, L., & Hser, Y. I. (2011). On inclusion of covariates for class enumeration of growth mixture models. Multivariate Behavioral Research, 46(2), 266–302. https://doi.org/10.1080/00273171.2011.556549.

Little, R. J., & Rubin, D. B. (1989). The analysis of social science data with missing values. Sociological Methods & Research, 18(2-3), 292–326.

Little, R. J., & Rubin, D. B. (2002). Statistical analysis with missing data. New York: Wiley. https://doi.org/10.1002/9781119013563.

Lubke, G., & Muthén, B. O. (2007). Performance of factor mixture models as a function of model size, covariate effects, and class-specific parameters. Structural Equation Modeling, 14(1), 26–47. https://doi.org/10.1080/10705510709336735.

McMahon, S. D., Todd, N. R., Martinez, A., Coker, C., Sheu, C. F., Washburn, J., & Shah, S. (2013). Aggressive and prosocial behavior: community violence, cognitive, and behavioral predictors among urban African American youth. American Journal of Community Psychology, 51(3-4), 407–421. https://doi.org/10.1007/s10464-012-9560-4.

Mrug, S., Madan, A., Cook, E. W., & Wright, R. A. (2015). Emotional and physiological desensitization to real-life and movie violence. Journal of Youth and Adolescence, 44(5), 1092–1108. https://doi.org/10.1007/s10964-014-0202-z.

Muthén, B. (2003). Statistical and substantive checking in growth mixture modeling: comment on Bauer and Curran. Psychological Methods, 8(3), 369–377. https://doi.org/10.1037/1082-989X.8.3.369.

Muthén, B. (2004). Latent variable analysis. In D. Kaplan (Ed.), Handbook of quantitative methodology for the social sciences (pp. 345–368). Newbury Park, CA: Sage. https://doi.org/10.4135/9781412986311.n19.

Muthén, L. K., & Muthén, B. O. (1998-2017). Mplus user’s guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Muthén, B., & Muthén, L. K. (2000). Integrating person‐centered and variable‐centered analyses: growth mixture modeling with latent trajectory classes. Alcoholism: Clinical and Experimental Research, 24(6), 882–891. https://doi.org/10.1111/j.1530-0277.2000.tb02070.x.

Nagin, D. (2005). Group-based modeling of development. Cambridge: Harvard University Press.

Ng-Mak, D. S., Salzinger, S., Feldman, R., & Stueve, A. (2002). Normalization of violence among inner-city youth: a formulation for research. American Journal of Orthopsychiatry, 72, 92–101. https://doi.org/10.1037/0002-9432.72.1.92.

Nylund-Gibson, K., Grimm, R., Quirk, M., & Furlong, M. (2014). A latent transition mixture model using the three-step specification. Structural Equation Modeling: A Multidisciplinary Journal, 21(3), 439–454. https://doi.org/10.1080/10705511.2014.915375.

Owens, L., Skrzypiec, G., & Wadham, B. (2012). Thinking patterns, victimisation and bullying among adolescents in a South Australian metropolitan secondary school. International Journal of Adolescence and Youth, 19(2), 190–202. https://doi.org/10.1080/02673843.2012.719828.

Paciello, M., Fida, R., Tramontano, C., Lupinetti, C., & Caprara, G. V. (2008). Stability and change of moral disengagement and its impact on aggression and violence in late adolescence. Child Development, 79(5), 1288–1309. https://doi.org/10.1111/j.1467-8624.2008.01189.x.

Ribeaud, D., & Eisner, M. (2010). Risk factors for aggression in pre-adolescence: risk domains, cumulative risk and gender differences—results from a prospective longitudinal study in a multi-ethnic urban sample. European Journal of Criminology, 7(6), 460–498. https://doi.org/10.1177/1477370810378116.

Ribeaud, D., & Eisner, M. (2015). The nature of the association between moral neutralization and aggression: a systematic test of causality in early adolescence. Merrill-Palmer Quarterly, 61(1), 68–84. https://doi.org/10.13110/merrpalmquar1982.61.1.0068.

Rothbart, M. K., Ellis, L. K., & Posner, M. I. (2011). Temperament and self-regulation. In K. D. Vohs & R. F. Baumeister (Eds.), Handbook of self-regulation: research, theory, and applications (pp. 441–460). New York, NY: Guilford Press.

Schwartz, D., Dodge, K. A., Pettit, G. S., & Bates, J. E. (2000). Friendship as a moderating factor in the pathway between early harsh home environment and later victimization in the peer group. Developmental Psychology, 36(5), 646–662. https://doi.org/10.1037/0012-1649.36.5.646.

Smetana, J. G. (2006). Social-cognitive domain theory: consistencies and variations in children’s moral and social judgments. In M. Killen & J. G. Smetana (Eds.), Handbook of moral development (pp. 119–153). Mahwah, NJ: Lawrence Erlbaum Associates Publishers.

Steinberg, L. (2014). Age of opportunity: lessons from the new science of adolescence. New York: Houghton Mifflin Harcourt.

Sykes, G., & Matza, D. (1957). Techniques of neutralization: a theory of delinquency. American Sociological Review, 22(6), 664–670. https://doi.org/10.2307/2089195.

Tisak, M. S., & Turiel, E. (1988). Variation in seriousness of transgressions and children’s moral and conventional concepts. Developmental Psychology, 24(3), 352–357. https://doi.org/10.1037/0012-1649.24.3.352.

Turiel, E. (1998). The development of morality. In W. Damon & N. Eisenberg (Eds.), Handbook of child psychology, vol. 3: social, emotional, and personality development (5th ed., pp. 863–932). New York: Wiley.

Van der Velden, F., Brugman, D., Boom, J., & Koops, W. (2010). Effects of EQUIP for educators on students’ self-serving cognitive distortions, moral judgment, and antisocial behavior. Journal of Research in Character Education, 8(1), 77–95.

Wang, M., & Bodner, T. E. (2007). Growth mixture modeling: Identifying and predicting unobserved subpopulations with longitudinal data. Organizational Research Methods, 10(4), 635–656.

Wickrama, K. K., Lee, T. K., O’Neal, C. W., & Lorenz, F. O. (2016). Higher-order growth curves and mixture modeling with Mplus: a practical guide. New York: Routledge.

Wikström, P. O. H., & Treiber, K. H. (2009). Violence as situational action. International Journal of Conflict and Violence (IJCV), 3(1), 75–96. https://doi.org/10.4119/ijcv-2794.

Wilkinson, D. L., & Carr, P. J. (2008). Violent youths’ responses to high levels of exposure to community violence: what violent events reveal about youth violence. Journal of Community Psychology, 36(8), 1026–1051. https://doi.org/10.1002/jcop.2027.

Acknowledgements

Open access funding provided by Università degli Studi di Napoli Federico II within the CRUI-CARE Agreement.

Authors’ Contributions

C.E. conceived of the study, participated in its design and coordination, performed the statistical analysis and drafted the paper; G.A. participated in the design and coordination of the study and helped to interpret the data and draft the paper; M.D. performed the measurement and helped to draft the paper; D.B. conceived of the study, participated in its design and coordination and helped to draft the manuscript. All authors read and approved the final manuscript.

Data Sharing and Declaration

This article’s data will not be deposited.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. The study was approved by the Ethics Committee of University of Naples “Federico II,” Department of Humanistic Studies.

Informed Consent

Written informed consent was obtained from the parents. Informed assent was obtained from all adolescent participants included in the study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Esposito, C., Affuso, G., Dragone, M. et al. Effortful Control and Community Violence Exposure as Predictors of Developmental Trajectories of Self-serving Cognitive Distortions in Adolescence: A Growth Mixture Modeling Approach. J Youth Adolescence 49, 2358–2371 (2020). https://doi.org/10.1007/s10964-020-01306-x

DOI: https://doi.org/10.1007/s10964-020-01306-x