Abstract

In this paper, we study the existence and uniqueness of the random periodic solution for a stochastic differential equation with a one-sided Lipschitz condition (also known as monotonicity condition) and the convergence of its numerical approximation via the backward Euler–Maruyama method. The existence of the random periodic solution is shown as the limit of the pull-back flows of the SDE and the discretized SDE, respectively. We establish a convergence rate of the strong error for the backward Euler–Maruyama method with order of convergence 1/2.


1 Introduction

Periodicity appears in a wide range of natural phenomena such as oscillations and waves, and it also underlies many complicated ensembles such as biological and economic systems. However, periodic behavior is often subject to random perturbation or the influence of noise. Physicists have long attempted to study random perturbations of periodic solutions by considering a first linear approximation or asymptotic expansions in the small-noise regime, but this approach is restricted to small fluctuations (cf. Van Kampen [13], Weiss and Knobloch [16]). Only recently was the random periodic solution endowed with a proper definition (cf. Zhao and Zheng [19], Feng, Zhao and Zhou [8]), which is compatible with the definitions of both the stationary solution (also termed a random fixed point) and the deterministic periodic solution. It gives a rigorous and clearer understanding of physically interesting problems involving random phenomena with a periodic nature, and it also represents a long-time limit of the underlying random dynamical system.

Let us recall the definition of the random periodic solution for stochastic semi-flows given in [8]. Let H be a separable Banach space. Denote by \((\Omega ,\mathcal{F},{\mathbb {P}},(\theta _s)_{s\in {\mathbb {R}}})\) a metric dynamical system, where \(\theta _s:\Omega \rightarrow \Omega \) is assumed to be measurably invertible for all \(s\in {\mathbb {R}}\). Denote \(\Delta :=\{(t,s)\in {\mathbb {R}}^2, s\le t\}\). Consider a stochastic semi-flow \(u: \Delta \times \Omega \times H\rightarrow H\), which satisfies the following standard condition

\[ u(t,r,\omega )=u(t,s,\omega )\circ u(s,r,\omega ) \tag{1} \]

for all \(r\le s\le t\), \(r, s,t\in {\mathbb {R}}\), for a.e. \(\omega \in \Omega \). We do not assume the map \(u(t,s,\omega ): H\rightarrow H\) to be invertible for \((t,s)\in \Delta ,\ \omega \in \Omega \).

Definition 1

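A random periodic path of period \(\tau \) of the semi-flow \(u: \Delta \times \Omega \times H\rightarrow H\) is an \(\mathcal{F}\)-measurable map \(y:{\mathbb {R}}\times \Omega \rightarrow H\) such that

\[ u(t,s,\omega )\,y(s,\omega )=y(t,\omega ), \qquad y(s+\tau ,\omega )=y(s,\theta _\tau \omega ), \qquad (t,s)\in \Delta , \tag{2} \]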

for any \(\omega \in \Omega \).

Building on this new concept, there has been further progress toward understanding the random periodicity of various stochastic systems. The existence of random periodic solutions to stochastic differential equations (SDEs) and stochastic partial differential equations (SPDEs) with additive noise was first studied in [8] and [4]. Instead of following the traditional geometric method of establishing the Poincaré mapping, a new analytical method for coupled infinite horizon forward-backward integral equations was introduced. This was followed by the study of anticipating random periodic solutions (cf. Feng, Wu and Zhao [6, 7]). Regarding applications, Chekroun, Simonnet and Ghil [3] applied random periodic results to climate dynamics, and Wang [14] observed random periodic behavior in the study of bifurcations of stochastic reaction-diffusion equations.

In general, random periodic solutions cannot be found explicitly. One may treat a numerical approximation that stays sufficiently close to the true solution as a good substitute for studying the stochastic dynamics. It is worth mentioning that this is a numerical approximation of an infinite time horizon problem. Classical numerical approaches, including the Euler–Maruyama method and a modified Milstein method, for simulating random periodic solutions of a dissipative system under a global Lipschitz condition have been investigated in [5], which was the first paper in which numerical schemes were used to approximate a random periodic trajectory.

In this paper, we study the random periodic solutions of stochastic differential equations with weakened conditions on the drift term compared to [5] and simulate them via the backward Euler–Maruyama method. Let \(W :{\mathbb {R}} \times \Omega \rightarrow {\mathbb {R}}^d\) be a standard two-sided Wiener process on the probability space \((\Omega , {\mathcal {F}}, {\mathbb {P}})\), with the filtration defined by \({\mathcal {F}}_s^{t}:=\sigma \{W_u-W_v:s<v\le u<t\}\) and \({\mathcal {F}}^{t}={\mathcal {F}}^{t}_{-\infty }=\vee _{s\le t}{\mathcal {F}}^{t}_s\). Throughout this paper, we shall use \(\vert \cdot \vert \) for the Euclidean norm, \(\Vert u\Vert :=\sqrt{{\mathbb {E}}[\vert u\vert ^2]}\) and \(\Vert u\Vert _p:=\root p \of {{\mathbb {E}}[\vert u\vert ^p]}\). We are interested in the \({\mathbb {R}}^d\)-valued random periodic solution to an SDE of the form

\[ \mathrm{d}X_t^{t_0} = -A X_t^{t_0}\,\mathrm{d}t + f(t,X_t^{t_0})\,\mathrm{d}t + g(t)\,\mathrm{d}W_t, \qquad X_{t_0}^{t_0}=\xi , \tag{3} \]

where \(\xi \) is an \({\mathcal {F}}^{t_0}\)-measurable random initial condition. In addition, A, f, g and \(\xi \) satisfy the following assumptions:

Assumption 1

The linear operator \(A :{\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) is self-adjoint and positive definite.

Assumption 1 implies the existence of a positive, nondecreasing sequence \((\lambda _i)_{i\in [d]} \subset {\mathbb {R}}\) such that \(0<\lambda _1 \le \lambda _2 \le \cdots \le \lambda _d\), and of an orthonormal basis \((e_i)_{i\in [d]}\) of \( {\mathbb {R}}^d\) such that \(A e_i = \lambda _i e_i\) for every \(i \in [d]\), where \([d]:=\{1,\ldots ,d\}\).

Assumption 2

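The mapping \(f :{\mathbb {R}} \times {\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) is continuous and periodic in time with period \(\tau \). Moreover, there exists a \(C_f \in (0,\infty )\) such that

\[ \langle f(t,u_1)-f(t,u_2),u_1-u_2\rangle \le C_f\vert u_1-u_2\vert ^2, \qquad \langle f(t,u),u\rangle \le C_f(1+\vert u\vert ^2) \]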

for all \(u,u_1, u_2 \in {\mathbb {R}}^d\) and \(t \in [0,\tau )\).

Assumption 3

The diffusion coefficient function \(g :{\mathbb {R}} \rightarrow {\mathbb {R}}\) is continuous and periodic in time with period \(\tau \). Moreover, we assume there exists a constant \(\sigma >0\) such that \(\sup _{s\in [0,\tau )}\vert g(s)\vert <\sigma \) and \(\vert g(t_1)-g(t_2)\vert \le \sigma \vert t_2-t_1\vert \) for all \(t_1,t_2 \in [0,\tau )\).

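It is well known that under these assumptions the solution \(X_{\cdot }^{t_0} :[t_0,T] \times \Omega \rightarrow {\mathbb {R}}^d\) to (3) is uniquely determined by the variation-of-constants formula

\[ X_t^{t_0}(\xi ) = e^{-A(t-t_0)}\xi + \int _{t_0}^{t} e^{-A(t-s)} f(s,X_s^{t_0})\,\mathrm{d}s + \int _{t_0}^{t} e^{-A(t-s)} g(s)\,\mathrm{d}W_s. \tag{4} \]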

1.1 The Pull-Back

We know there exists a standard \({\mathbb {P}}\)-preserving ergodic Wiener shift \(\theta \) such that \(\theta _t (\omega )(s)=W_{t+s}-W_{t}\) for \(s,t\in {\mathbb {R}}\). We will show that when \(k\rightarrow \infty \), the pull-back \(X^{-k\tau }_t(\xi )\) has a limit \(X^* _t\) in \(L^2(\Omega )\) and \(X^* _t\) is the random periodic solution of SDE (3), satisfying

To achieve it, we need additional assumptions on \(\xi \) and f.

Assumption 4

\(C_f<\lambda _1\).

Assumption 5

There exists a constant \(C_\xi \) such that \(\Vert \xi \Vert <C_\xi \).

Assumption 6

There exists a constant \({\hat{C}}_f\) such that \(\Big \vert f(t,u)-\frac{\langle f(t,u),u\rangle }{\vert u\vert ^2}u\Big \vert \le {\hat{C}}_f(1+\vert u\vert )\) for \(u\in {\mathbb {R}}^d, t\in [0,\tau )\).

Assumption 6 together with Assumptions 1 to 3 ensures the existence of a global semiflow generated from SDE (3) with additive noise [11]. Section 3 is devoted to the first main result, which claims the existence and uniqueness of random periodic solutions to the SDE (3) under the one-sided Lipschitz condition on the drift.

Theorem 7

Under Assumptions 1 to 6, there exists a unique random periodic solution \(X^{*}_t(\cdot )\in L^2(\Omega )\) such that the solution of (3) satisfies

In Sect. 4, we derive additional properties of the solution, such as the uniform boundedness of a higher moment of \(X^{-k\tau }_t\) and solution regularity, under an additional Assumption 13, which imposes superlinearity of f and assumes a larger lower bound for \(\lambda _1\) compared to Assumption 4. Those properties will play an important role in proving the order of convergence of the backward Euler–Maruyama method in Theorem 19.

1.2 The Backward Euler–Maruyama

For stiff ordinary differential equations, the implicit method is preferred due to its good performance even on a time grid with a large step size [15]. For its stochastic counterpart such as (3), we shall approximate the solution using the backward Euler–Maruyama method, the simplest version of implicit methods for SDEs.

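Let us fix an equidistant partition \({\mathcal {T}}^h:=\{jh,\ j\in {\mathbb {Z}} \}\) with stepsize \(h\in (0,1)\). Note that \({\mathcal {T}}^h\) stretches along the real line because eventually we are dealing with an infinite time horizon problem in the form of (5). Then to simulate the solution to (3) starting at \(-k\tau \), the backward Euler–Maruyama method on \({\mathcal {T}}^h\) is given by the recursion

\[ {\hat{X}}_{-k\tau +(j+1)h}^{-k\tau } = {\hat{X}}_{-k\tau +jh}^{-k\tau } - Ah\,{\hat{X}}_{-k\tau +(j+1)h}^{-k\tau } + h f\big ((j+1)h, {\hat{X}}_{-k\tau +(j+1)h}^{-k\tau }\big ) + g(jh)\,\Delta W_{-k\tau +jh} \tag{7} \]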

for all \(j \in {\mathbb {N}}\), where the initial value \({\hat{X}}_{-k\tau }^{-k\tau } = \xi \), and \(\Delta W_{-k\tau +jh}:=W_{-k\tau +(j+1)h}-W_{-k\tau +jh}\). Note that due to the periodicity of f (cf. Assumption 2), we write \(f(-k\tau +jh, {\hat{X}}_{-k\tau +jh}^{-k\tau } )\) as \(f(jh, {\hat{X}}_{-k\tau +jh}^{-k\tau })\), and similarly for the g term.
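As a minimal sketch of what a single step of such a drift-implicit recursion involves, the Python snippet below advances a scalar example by one step. It assumes the usual backward Euler–Maruyama form, in which the new value z solves z + hAz - h f(t+h, z) = x + g ΔW. The scalar `LAM` standing in for A, the constant `SIGMA` standing in for g, the drift `f`, and the helper names `solve_implicit` and `bem_step` are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

LAM = 10.0    # assumed scalar stand-in for A (so lambda_1 = 10)
SIGMA = 0.05  # assumed constant diffusion coefficient g


def f(t, x):
    # Hypothetical 1-periodic drift satisfying a one-sided Lipschitz condition.
    return np.sin(2.0 * np.pi * t) - x**3


def solve_implicit(t_next, rhs, h):
    """Find z with z + h*LAM*z - h*f(t_next, z) = rhs by bisection."""
    def G(z):
        return z + h * LAM * z - h * f(t_next, z)

    # G is strictly increasing with slope >= 1, so the root lies within
    # distance |rhs - G(0)| of the origin.
    radius = abs(rhs - G(0.0)) + 1e-12
    lo, hi = -radius, radius
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if G(mid) < rhs:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def bem_step(t, x, h, dW):
    """One backward Euler-Maruyama step from time t to t + h."""
    return solve_implicit(t + h, x + SIGMA * dW, h)


rng = np.random.default_rng(0)
h = 0.05
dW = rng.normal(0.0, np.sqrt(h))
x_next = bem_step(-10.0, 0.2, h, dW)
# Residual of the implicit equation at the computed value.
residual = x_next + h * LAM * x_next - h * f(-10.0 + h, x_next) - (0.2 + SIGMA * dW)
```

Bisection is chosen here only because the monotonicity of the scalar map gives an explicit bracket for the root; in higher dimensions a Newton-type solver would be the more natural choice.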

The implementation of (7) requires solving a nonlinear equation at each iteration. Theorem 16 ensures the well-posedness of difference equation (7) under Assumptions 1 to 4. We explore the random periodicity of its solution in Sect. 5 and prove the second main result in our paper:

Theorem 8

Under Assumptions 1 to 5, for any \(h\in (0,1)\) with \(\tau =nh\), \(n\in {\mathbb {N}}\), the backward Euler–Maruyama method (7) admits a random periodic solution on \({\mathcal {T}}^h\).

We also determine a strong order 1/2 for the backward Euler–Maruyama method in Theorem 19 and Corollary 20. Theorem 3.4 and Theorem 4.2 in [5] impose a condition on the size of h (it must be sufficiently small) because they rely on explicit numerical methods. In contrast, the backward Euler–Maruyama method allows a flexible choice of the stepsize h, even in the infinite horizon case.

Finally, we assess the performance of the backward Euler–Maruyama method via a numerical experiment and compare it with that of the classical Euler–Maruyama method for various stepsizes. The result shows that the backward Euler–Maruyama method converges to the random periodic solution even when the stepsize is fairly large, while the Euler–Maruyama method diverges.

2 Preliminaries

In this section, we present a few useful mathematical tools for later use.

Lemma 9

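(The Grönwall inequality: a continuous version [1]) Let I denote a time interval of the form \([I_-,I^+]\). Let a, b and u be real-valued functions defined on I. Assume that a and b are continuous and that the negative part of a is integrable on every closed and bounded subinterval of I. If b is nonnegative and u satisfies the inequality

\[ u(t)\le a(t)+\int _{I_-}^{t} b(s)u(s)\,\mathrm{d}s, \qquad t\in I, \tag{8} \]

then

\[ u(t)\le a(t)+\int _{I_-}^{t} a(s)b(s)\exp \Big (\int _s^t b(r)\,\mathrm{d}r\Big )\,\mathrm{d}s, \qquad t\in I. \tag{9} \]

If, in addition, the function a is non-decreasing, then

\[ u(t)\le a(t)\exp \Big (\int _{I_-}^{t} b(s)\,\mathrm{d}s\Big ), \qquad t\in I. \tag{10} \]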

Lemma 10

(The Grönwall inequality: a discrete version [17, 18]) Consider two nonnegative sequences \((u_n)_{n\in {\mathbb {N}}}, (a_n)_{n\in {\mathbb {N}}} \subset {\mathbb {R}}\) which for some given \(w \in [0,\infty )\) satisfy

Then, for all \(n \in {\mathbb {N}}\), it also holds true that

where \(c_j:=\frac{1}{(1+w)^j}\) for \(j\in {\mathbb {N}}\).

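A simple but crucial identity for the analysis of the backward Euler–Maruyama method, valid for all \(a,b\in {\mathbb {R}}^d\), is

\[ \vert a\vert ^2-\vert b\vert ^2+\vert a-b\vert ^2 = 2\langle a-b,a\rangle . \tag{11} \]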
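Assuming the equality in question is the standard identity \(\vert a\vert ^2-\vert b\vert ^2+\vert a-b\vert ^2 = 2\langle a-b,a\rangle \) (which follows by expanding \(\vert a-b\vert ^2=\vert a\vert ^2-2\langle a,b\rangle +\vert b\vert ^2\)), the following short Python check evaluates both sides for random vectors. The vectors and variable names are purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
a = rng.normal(size=5)  # plays the role of the new iterate
b = rng.normal(size=5)  # plays the role of the previous iterate

# Left side: |a|^2 - |b|^2 + |a - b|^2
lhs = np.dot(a, a) - np.dot(b, b) + np.dot(a - b, a - b)
# Right side: 2 <a - b, a>
rhs = 2.0 * np.dot(a - b, a)
identity_gap = abs(lhs - rhs)
```

In the analysis of an implicit scheme, a is typically the new iterate and b the previous one, so the inner product on the right can be replaced by the increment of the scheme.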

3 Existence and Uniqueness of the Random Periodic Solution

In this section, we focus on the existence and uniqueness of the random periodic solution to SDE (3). To achieve it, we first show there is a uniform bound for the second moment of its solution under necessary assumptions.

Lemma 11

For SDE (3) with given initial condition \(\xi \) and satisfying Assumptions 1 to 5, we have

where \(K_2:=\frac{\sigma ^2+2C_f}{2\lambda _1}\).

Proof of Lemma 11

Applying Itô formula to \(e^{2\lambda _1 t}\Vert X_{t}^{-k\tau }(\xi )\Vert ^2\) and taking the expectation yield

Note that \(2(\lambda _1I-A)\) is non-positive definite. Then making use of Assumptions 2 and 3 gives

Denote \(K_1:= e^{-2\lambda _1 k\tau }\big (\Vert \xi \Vert ^2-\frac{\sigma ^2+2C_f}{2\lambda _1}\big )\), \(K_2:=\frac{\sigma ^2+2C_f}{2\lambda _1}\) and \(K_3:=2C_f\). Note that \(K_3\le 2\lambda _1\) because of Assumption 4. By the Grönwall inequality, we have that

Note that \(K_1e^{2\lambda _1 k\tau }+K_2=\Vert \xi \Vert ^2\). By Assumption 5, it leads to

\(\square \)

Then we explore the solution dependence on initial conditions.

Lemma 12

Let Assumptions 1 to 3 hold. Denote by \(X_t^{-k\tau }\) and \(Y_t^{-k\tau }\) two solutions of SDE (3) with different initial values \(\xi \) and \(\eta \). Then

In addition, if Assumption 4 holds, then for every \(\epsilon >0\), there exists a \(t\ge -k\tau \) such that it holds

whenever \({\tilde{t}}\ge t\).

Proof of Lemma 12

Define \(E_t^{-k\tau }:=X_t^{-k\tau }-Y_t^{-k\tau }\). From (4), we have that

Similarly to the proof of Lemma 11, we apply the Itô formula to \(e^{2\lambda _1 t}\vert E_t^{-k\tau }\vert ^2\), take the expectation, make use of Assumption 2 and get

Applying Eqn. (10) gives the desired inequality. The claim in (14) follows if Assumption 4 holds. \(\square \)

With Lemmas 11, 12 and Assumption 6, the main result Theorem 7 can be shown by following the same argument in the proof of Theorem 2.4 in [5].

4 More Results on the Solution

In this section, we explore some properties of the solution to (3) for later analysis.

Assumption 13

There exists a constant \(q\in (1,\infty )\) and a positive L such that

for \(t_1,t_2\in [0,\tau )\) and \(u_1,u_2\in {\mathbb {R}}^d\). In addition, there exists a positive number \(p\in [4q-2,\infty )\) such that

The first property we will show is the uniform boundedness for the p-th moment of the SDE solution.

Proposition 14

Under Assumptions 1 to 5 and 13, the solution to (3) satisfies

Proof of Proposition 14

From the proof of Lemma 11, we know that

Then applying Itô formula to \(e^{p\lambda _1 t}\vert X_{t}^{-k\tau }\vert ^p=\big (e^{2\lambda _1 t}\vert X_{t}^{-k\tau }\vert ^2\big )^{p/2}\) and taking into consideration \(2(\lambda _1I-A)\) being non-positive definite give

Now by the Young inequality

and the inequality from fundamental calculus,

we have that

where \({\hat{K}}_1:= e^{-p\lambda _1 k\tau }\big (\Vert \xi \Vert ^p_p-\gamma _p\big )\). Because of Assumption 13, the rest simply follows the same way as the end of the proof for Lemma 11. \(\square \)

Following a similar argument as in Proposition 5.4 and 5.5 [2], we can easily get the following bounds for analysis later.

Proposition 15

Let Assumptions 1 to 5 and 13 hold. Then there exists a positive constant \(C_{q,A,f}\) which depends on q, d, A,\(C_f\) only, such that

for all \(t_1,t_2\ge -k\tau \). Moreover,

for all \(t_3,t_4\in [t_1,t_2]\).

5 The Random Periodic Solution of the Backward Euler–Maruyama Scheme

In this section, we will prove that the backward Euler–Maruyama method (7) admits a unique discretized random periodic solution. To achieve this, let us first show the existence and uniqueness of the solution to the targeted scheme.

Theorem 16

(Well-posedness) Let Assumptions 1 to 4 be satisfied. Then for any \(h\in (0,1)\), there exists a unique \({\mathbb {R}}^d\)-valued sequence \(({\hat{X}}^{-k\tau }_{-k\tau +jh})_{j\in {\mathbb {N}}}\) satisfying the difference equation (7) on the associated time grid \({\mathcal {T}}^h\).

Proof of Theorem 16

Let \(h \in (0,1)\) and define \(G_t :{\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) by \(G_t(\zeta ) = \zeta +Ah\zeta - h f(t, \zeta )\) for all \(\zeta \in {\mathbb {R}}^d\) and \(t\in [0,\tau )\). Then it holds

Because of Assumption 4, we have \(L_{G_t} :=1 +\lambda _1h- C_f h>1\). Hence, the uniform monotonicity theorem (cf. Proposition 3.5 in [10]) is applicable. In particular, the sequence \(({\hat{X}}^{-k\tau }_{-k\tau +jh})_{j\in {\mathbb {N}}}\) defined by

for every \(j \in {\mathbb {N}}\) satisfies (7). \(\square \)
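The monotonicity behind this argument can be checked numerically. The sketch below assumes that the bound in question is the uniform monotonicity estimate \(\langle G_t(\zeta _1)-G_t(\zeta _2),\zeta _1-\zeta _2\rangle \ge L_{G_t}\vert \zeta _1-\zeta _2\vert ^2\) with \(L_{G_t}=1+\lambda _1h-C_fh\), and tests it in one dimension for a hypothetical drift whose derivative in x is bounded above by an assumed constant `C_F`. The drift, the constants and the variable names are illustrative only.

```python
import numpy as np

LAM, C_F, h = 10.0, 2.0, 0.5  # assumed: scalar A = LAM and C_f = C_F < LAM


def f(t, x):
    # Hypothetical drift; its x-derivative 2 - 3x^2 is at most C_F = 2,
    # so (f(t, x1) - f(t, x2)) * (x1 - x2) <= C_F * (x1 - x2)^2.
    return 2.0 * x - x**3 + np.sin(2.0 * np.pi * t)


def G(t, z):
    # Scalar version of the map G_t(z) = z + A*h*z - h*f(t, z).
    return z + h * LAM * z - h * f(t, z)


rng = np.random.default_rng(2)
z1 = rng.uniform(-5.0, 5.0, size=1000)
z2 = rng.uniform(-5.0, 5.0, size=1000)
t = 0.3

# Monotonicity gap: <G(z1) - G(z2), z1 - z2> - L_G * |z1 - z2|^2 should be >= 0.
lower = (1.0 + LAM * h - C_F * h) * (z1 - z2) ** 2
gap = (G(t, z1) - G(t, z2)) * (z1 - z2) - lower
min_gap = gap.min()
```

A nonnegative `min_gap` (up to rounding) is consistent with the map being uniformly monotone with constant larger than 1, which is what makes each implicit step uniquely solvable for any \(h\in (0,1)\) in this example.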

The next Lemma claims there is a uniform bound for the second moment of the numerical solution under necessary assumptions.

Lemma 17

Under Assumptions 1 to 5, for any \(h\in (0,1)\), it holds for the backward Euler–Maruyama method (7) on \({\mathcal {T}}^h\) that

Proof of Lemma 17

First note that from (11), we have that for any \(N\in {\mathbb {N}}\)

From (7), we have that

Note that \({\mathbb {E}}\langle g\big ((N-1)h\big )\Delta W_{-k\tau +(N-1)h},{\hat{X}}_{-k\tau +(N-1)h}^{-k\tau }\rangle =0\). Taking the expectation of both sides of (22) and making use of Assumption 2 give

Then cancelling the same term on both sides gives

Let \(\alpha :=\frac{2C_f+\sigma ^2}{2(\lambda _1-C_f)}\). Rearranging the terms above gives

By iteration, this leads to

Because of Assumptions 4 and 5, the term on the right-hand side above can be bounded by \(\Vert \xi \Vert ^2+\alpha \), which is independent of k, N and h. \(\square \)

The next result shows two numerical solutions starting from different initial conditions can be arbitrarily close after sufficiently many iterations.

Lemma 18

Under Assumptions 1 to 5, let \({\hat{X}}_{-k\tau +Nh}^{-k\tau }\) and \({\hat{Y}}_{-k\tau +Nh}^{-k\tau }\) be two solutions of the backward Euler–Maruyama scheme on \({\mathcal {T}}^h\) with different initial values. Then for every \(\epsilon >0\) there exists an \(N^*\) such that for any \(N\ge N^*\), \(\Vert {\hat{X}}_{-k\tau +Nh}^{-k\tau }-{\hat{Y}}_{-k\tau +Nh}^{-k\tau }\Vert <\epsilon \).

Proof of Lemma 18

Define \(D_N:={\hat{X}}_{-k\tau +Nh}^{-k\tau }-{\hat{Y}}_{-k\tau +Nh}^{-k\tau }\). Let us use (11) again, which allows us to examine the following term:

Following a similar argument as in the proof of Lemma 17, this leads to

By iteration, we have

Because of \(\lambda _1>C_f\), the assertion follows. \(\square \)

Proof of Theorem 8

First we shall show that there exists a limit of \({\hat{X}}_{t}^{-k\tau }\) in \(L^2(\Omega )\). Note from Lemma 17, it holds \({\hat{X}}_{-k\tau +Nh}^{-k\tau }\in L^2(\Omega )\) for \(N\in {\mathbb {N}}\). For \(t=-k\tau +Nh\), by using the semi-flow property we have for \(m\in {\mathbb {N}}\)

Both sides are the same process and \({\hat{X}}^{-k\tau }_t\) on the RHS has a different initial condition. Denote \(M:=nk\), then by Lemma 18 we have for \(\epsilon >0\) there exists a \(M^*\) such that for \(M\ge M^*\)

Then we construct the Cauchy sequence \(({\hat{X}}^{-k\tau }_t)_{k\in {\mathbb {N}}}\) converging to some limit \({\hat{X}}^*_t\) in \(L^2(\Omega )\). Also it is not hard to show that the convergence is independent of the initial point. For \(k\rightarrow \infty \), we have from Lemma 18

Now let us verify the random periodicity of the backward Euler–Maruyama scheme by induction. Let us examine two terms \({\hat{X}}_{-k\tau +Nh}^{-k\tau }(\theta _\tau \omega )\) and \({\hat{X}}_{-(k-1)\tau +Nh}^{-(k-1)\tau }(\omega )\), where \(t=-k\tau +Nh\). For \({\hat{X}}_{-k\tau +Nh}^{-k\tau }(\theta _\tau \omega )\), we have the expression

where

For \({\hat{X}}_{-(k-1)\tau +Nh}^{-(k-1)\tau }(\omega )\), we have its expression given by

By induction and by the pathwise uniqueness of the solution of the backward Euler–Maruyama scheme (Theorem 16), we have that

Finally from (5) and the fact \(t=-k\tau +Nh\), we have

Therefore, \({\hat{X}}_{t}^*(\theta _\tau \omega )={\hat{X}}_{t+\tau }^{*}( \omega )\) \({\mathbb {P}}\)-a.s. \(\square \)

6 Error Analysis

Theorem 19

Under Assumptions 1 to 5 and 13, for any \(h\in (0,1)\) with \(\tau =nh\), \(n\in {\mathbb {N}}\), there exists a constant C that depends on q, A, f, g and d such that the backward Euler–Maruyama method (7) approximates the true solution of (3) on \({\mathcal {T}}^h\) with

Proof of Theorem 19

First note that

Define \(e_N:=X^{-k\tau }_{-k\tau +Nh}-{\hat{X}}^{-k\tau }_{-k\tau +Nh}\). Then

By Young's inequality

and Assumption 2, we are able to choose \(\epsilon _0^2:=h(\lambda _1-C_f)/3\) such that

By Proposition 15, we know there exists a constant C depending on q, A, f and g such that

Note that \(\beta \) is bounded because of Proposition 15. Then from (11) and the estimate above, we have that

Define \({\hat{\alpha }}:=\frac{\beta h}{(\lambda _1-C_f)^2}\). The inequality above can be rearranged to

By iteration and assuming \({\hat{X}}^{-k\tau }_{-k\tau }=X^{-k\tau }_{-k\tau }=\xi \), we have

Finally due to Assumption 4 (alternatively, Assumption 13), we have \(\Vert e_N\Vert ^2\le \frac{\beta h}{(\lambda _1-C_f)^2}.\) Then the assertion follows. \(\square \)

Corollary 20

Under Assumptions 1 to 6 and 13, for any \(h\in (0,1)\) with \(\tau =nh\), \(n\in {\mathbb {N}}\), there exists a constant C that depends on q, A, f, g and d such that the exact and numerical random periodic solutions of (3) and (7) given in Theorems 7 and 8 satisfy

Proof of Corollary 20

The result simply follows from

\(\square \)

7 Numerical Analysis

In this section, we consider the following one-dimensional SDE example

It is easily verified that the associated period is 1 and that Assumptions 1 to 6 and 13 are fulfilled with \(\lambda _1=10\), \(C_f=2\) and \(\sigma =0.05\). Thus, (28) has a random periodic solution according to Theorem 7, and its backward Euler–Maruyama simulation also admits a random periodic path. First, let us show that the scheme converges to its random periodic path regardless of its initial condition. To this end, we choose the time grid between \(t_0=-10\) and \(T=0\) with stepsize 0.05, generate a Brownian realization on the time grid, and set the two initial conditions to 0.2 and \(-0.3\). The two simulated paths shown in Fig. 1 are obtained by applying the backward Euler–Maruyama method (7) iteratively on the time grid, with the given initial conditions and a shared Brownian realization. As shown in Fig. 1, the two paths coincide shortly after the start. Note that in theory \({\hat{X}}_{t}^{*}={\hat{X}}_{t}^{-\infty }\), but we take the pull-back time \(-10\) as this is already enough to generate a good convergence to the random periodic paths for \(t\ge -9\).
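The experiment with two initial conditions and a shared Brownian realization can be sketched as follows. Since the concrete example SDE is not reproduced here, the snippet uses a hypothetical stand-in with scalar A = 10, constant g = 0.05 and drift sin(2πt) - x³; the function name `bem_path` and all constants other than the grid, the stepsize and the two initial values are assumptions made for illustration.

```python
import numpy as np

LAM, SIGMA, h = 10.0, 0.05, 0.05  # assumed scalar A, constant g; stepsize from the text


def bem_path(x0, t0, dW):
    """Backward Euler-Maruyama path for the hypothetical drift sin(2*pi*t) - x^3."""
    x = np.empty(len(dW) + 1)
    x[0] = x0
    for j, dw in enumerate(dW):
        # Right-hand side of the implicit equation (1 + h*LAM)*z + h*z^3 = c.
        c = x[j] + SIGMA * dw + h * np.sin(2.0 * np.pi * (t0 + (j + 1) * h))
        z = c / (1.0 + h * LAM)  # Newton start: solution of the linear part
        for _ in range(50):
            z -= ((1.0 + h * LAM) * z + h * z**3 - c) / (1.0 + h * LAM + 3.0 * h * z**2)
        x[j + 1] = z
    return x


rng = np.random.default_rng(3)
n_steps = 200  # grid from t0 = -10 to T = 0 with h = 0.05
dW = rng.normal(0.0, np.sqrt(h), size=n_steps)  # one shared Brownian realization

path_a = bem_path(0.2, -10.0, dW)
path_b = bem_path(-0.3, -10.0, dW)

gap_after_one_period = abs(path_a[20] - path_b[20])  # at t = -9
final_gap = abs(path_a[-1] - path_b[-1])             # at t = 0
```

For this stand-in, the difference of the two paths contracts by at least the factor 1/(1 + h·LAM) per step, so the gap at t = -9 is already far smaller than the initial gap of 0.5.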

As discussed in [5], there are two ways to demonstrate the periodicity. The easier approach is to simulate the processes \({\hat{X}}_{t}^{*}(\omega )=X^{-30}_t(\omega ,0.2)\) for \(t\in [-4,-1]\) and \({\hat{X}}_{t}^{*}(\theta _{-1}\omega )=X^{-30}_t(\theta _{-1}\omega ,0.2)\) for \(t\in [-3,0]\). We can observe in Fig. 2 that the two segmented processes are identical, owing to \({\hat{X}}_{t-1}^{*}(\omega )={\hat{X}}_{t}^{*}(\theta _{-1}\omega )\).
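The comparison of the two segments can be sketched in Python by shifting the Brownian increments by one period. The snippet again uses a hypothetical stand-in SDE (scalar A = 10, constant g = 0.05, drift sin(2πt) - x³, period 1); `bem_path` and the chosen number of steps per period are assumptions for illustration. The key point is that the increment of \(\theta _{-1}\omega \) over \([s,s+h]\) equals the increment of \(\omega \) over \([s-1,s-1+h]\).

```python
import numpy as np

LAM, SIGMA, n = 10.0, 0.05, 20  # assumed scalar A, constant g, steps per period
h = 1.0 / n                     # tau = 1 = n * h


def bem_path(x0, t0, dW):
    """Backward Euler-Maruyama path for the hypothetical drift sin(2*pi*t) - x^3."""
    x = np.empty(len(dW) + 1)
    x[0] = x0
    for j, dw in enumerate(dW):
        c = x[j] + SIGMA * dw + h * np.sin(2.0 * np.pi * (t0 + (j + 1) * h))
        z = c / (1.0 + h * LAM)  # Newton start: solution of the linear part
        for _ in range(50):
            z -= ((1.0 + h * LAM) * z + h * z**3 - c) / (1.0 + h * LAM + 3.0 * h * z**2)
        x[j + 1] = z
    return x


rng = np.random.default_rng(4)
dW = rng.normal(0.0, np.sqrt(h), size=31 * n)  # increments of omega on [-31, 0]

# Pull-back path driven by omega: uses the increments on [-30, 0].
x_omega = bem_path(0.2, -30.0, dW[n:])
# Pull-back path driven by theta_{-1} omega: uses the increments on [-31, -1].
x_shift = bem_path(0.2, -30.0, dW[: 30 * n])

# Compare the value at t - 1 under omega with the value at t under the
# shifted noise, for all grid points t in [-3, 0].
idx_t = np.arange(27 * n, 30 * n + 1)
periodicity_gap = np.max(np.abs(x_omega[idx_t - n] - x_shift[idx_t]))
```

A negligible `periodicity_gap` indicates that, after the pull-back has converged, the two segments coincide, as the random periodicity relation predicts.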

The other way to check random periodicity of path X with period \(\tau \) is to verify whether or not \({\hat{X}}^{*}(t,\theta _{-t}\omega )\) is periodic with period \(\tau \). To test it, we need to consider \(X_t^{t_0}(\theta _{-t}\omega )\). Note that for any fixed \(r\in {\mathbb {R}}\) we have that

Now set \(t_0=0\), and \(X_{0}^{0}(\theta _{-r}\omega )=x_0\). For each fixed r, we simulate the path of Eqn. (29) through the backward Euler–Maruyama method up to \(t=r\). Then we obtain the evaluation of \({\hat{X}}^{0}(r,\theta _{-r}\omega )\). To allow convergence, we look at the path pattern from \(t=2\) to \(t=5\) in Fig. 3. Apparently we have obtained a periodic pull-back path as expected, which in turn shows the random periodicity of the original path.

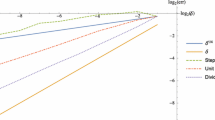

Finally, we test the order of convergence of the backward Euler–Maruyama method and compare its performance with that of the (forward) Euler–Maruyama method. To this end, we first generated a reference solution with a small step size of \(h_{\text {ref}}=2^{-15}\). This reference solution was then compared to numerical solutions with larger step sizes \(h\in \{2^{-i}: i=4,5,6,7,8\}\). The error plot is shown in Fig. 4. We plot the Monte Carlo estimates of the root-mean-squared errors versus the underlying temporal step size, i.e., the number i on the x-axis indicates that the corresponding simulation is based on the temporal step size \(h = 2^{-i}\). Both methods exhibit an order of convergence above 1, which exceeds the theoretical order of convergence. When the stepsize is large, say \(h=2^{-4}\), the Euler–Maruyama method has an error of 0.048, which is almost five times the error of 0.011 from the backward Euler–Maruyama method. Indeed, if we relax the stepsize to \(h=2^{-3}\), the Euler–Maruyama method diverges while the backward Euler–Maruyama method still converges as expected. This further supports Theorem 8 and the advantage of the backward Euler–Maruyama method: it converges regardless of the size of the stepsize (\(h<1\)).
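A scaled-down version of such a strong-error test can be sketched in Python as follows. It is not the experiment reported above: it uses a hypothetical stand-in SDE (scalar A = 10, constant g = 0.05, drift sin(2πt) - x³), a coarser reference step \(2^{-10}\), a short horizon and only 100 sample paths so that it runs quickly. The names `bem_endpoint`, `errors` and `observed_order` are illustrative.

```python
import numpy as np

LAM, SIGMA = 10.0, 0.05  # assumed scalar A and constant g
T0, T1 = -2.0, 0.0       # short pull-back horizon to keep the sketch cheap


def bem_endpoint(x0, dW, h):
    """Vectorized backward Euler-Maruyama; dW has shape (paths, steps)."""
    x = np.full(dW.shape[0], x0)
    for j in range(dW.shape[1]):
        c = x + SIGMA * dW[:, j] + h * np.sin(2.0 * np.pi * (T0 + (j + 1) * h))
        z = c / (1.0 + h * LAM)  # Newton start: solution of the linear part
        for _ in range(20):
            z = z - ((1.0 + h * LAM) * z + h * z**3 - c) / (1.0 + h * LAM + 3.0 * h * z**2)
        x = z
    return x


rng = np.random.default_rng(5)
paths, ref_level = 100, 10
h_ref = 2.0 ** -ref_level
steps_ref = int((T1 - T0) / h_ref)
dW_ref = rng.normal(0.0, np.sqrt(h_ref), size=(paths, steps_ref))
x_ref = bem_endpoint(0.2, dW_ref, h_ref)

levels = [4, 5, 6, 7]
errors = []
for i in levels:
    block = 2 ** (ref_level - i)
    # Sum fine increments so coarse and reference paths share the same noise.
    dW = dW_ref.reshape(paths, -1, block).sum(axis=2)
    x_h = bem_endpoint(0.2, dW, 2.0 ** -i)
    errors.append(np.sqrt(np.mean((x_h - x_ref) ** 2)))

# Least-squares slope of log2(error) against log2(h) = -i.
observed_order = np.polyfit([-i for i in levels], np.log2(errors), 1)[0]
```

Summing the fine increments in blocks ensures that every step size sees the same Brownian path as the reference solution, which is what makes the root-mean-squared difference an estimate of the strong error rather than of a difference in distribution.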

Fig. 4 Numerical experiment for simulating the random periodic solution of SDE (28): step sizes versus \(L^2\) error

Data Availability

The datasets generated and/or analyzed during the current study are available in the GitHub repository, https://github.com/yuewu57/RPS_BackwardEuler.

References

Bellman, R.: The stability of solutions of linear differential equations. Duke Math. J. 10(4), 643–647 (1943). https://doi.org/10.1215/s0012-7094-43-01059-2

Beyn, W.J., Isaak, E., Kruse, R.: Stochastic C-stability and B-consistency of explicit and implicit Euler-type schemes. J. Sci. Comput. 67(3), 955–987 (2016). https://doi.org/10.1007/s10915-015-0114-4

Chekroun, M.D., Simonnet, E., Ghil, M.: Stochastic climate dynamics: random attractors and time-dependent invariant measures. Phys. D 240(21), 1685–1700 (2011). https://doi.org/10.1016/j.physd.2011.06.005

Feng, C.R., Zhao, H.Z.: Random periodic solutions of SPDEs via integral equations and Wiener–Sobolev compact embedding. J. Funct. Anal. 262(10), 4377–4422 (2012). https://doi.org/10.1016/j.jfa.2012.02.024

Feng, C.R., Liu, Y., Zhao, H.Z.: Numerical approximation of random periodic solutions of stochastic differential equations. Z. Angew. Math. Phys. 68(5), 1–32 (2017). https://doi.org/10.1007/s00033-017-0868-7

Feng, C.R., Wu, Y., Zhao, H.Z.: Anticipating random periodic solutions—I. SDEs with multiplicative linear noise. J. Funct. Anal. 271(2), 365–417 (2016). https://doi.org/10.1016/j.jfa.2016.04.027

Feng, C.R., Wu, Y., Zhao, H.Z.: Anticipating Random Periodic Solutions—II. SPDEs with Multiplicative Linear Noise, arXiv:1803.00503

Feng, C.R., Zhao, H.Z., Zhou, B.: Pathwise random periodic solutions of stochastic differential equations. J. Differ. Equ. 251, 119–149 (2011). https://doi.org/10.1016/j.jde.2011.03.019

Ortega, J.M., Rheinboldt, W.C.: Iterative solution of nonlinear equations in several variables, Reprint of the 1970 original. Classics in Applied Mathematics, 30. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA, 2000. xxvi+572 pp

Riedel, S., Wu, Y.: Semi-implicit Taylor schemes for stiff rough differential equations, arXiv:2006.13689

Scheutzow, M., Schulze, S.: Strong completeness and semi-flows for stochastic differential equations with monotone drift. J. Math. Anal. Appl. 446(2), 1555–1570 (2017). https://doi.org/10.1016/j.jmaa.2016.09.049

Stuart, A.M., Humphries, A.R.: Dynamical Systems and Numerical Analysis. Cambridge Monographs on Applied and Computational Mathematics, 2. Cambridge University Press, Cambridge, 1996. xxii+685 pp

Van Kampen, N.G.: Stochastic Processes in Physics and Chemistry. Lecture Notes in Mathematics, vol. 888. North-Holland, Amsterdam (1981)

Wang, B.X.: Existence, stability and bifurcation of random complete and periodic solutions of stochastic parabolic equations. Nonlinear Anal. 103, 9–25 (2014). https://doi.org/10.1016/j.na.2014.02.013

Wanner, G., Hairer, E.: Solving ordinary differential equations II. Springer, Berlin, 1996. xvi+614 pp

Weiss, J.B., Knobloch, E.: A stochastic return map for stochastic differential equations. J. Stat. Phys. 58, 863–883 (1990). https://doi.org/10.1007/BF01026555

Willett, D., Wong, J.S.W.: On the discrete analogues of some generalizations of Grönwall’s inequality. Monatsh. Math. 69, 362–367 (1965). https://doi.org/10.1007/BF01297622

Yevik, A., Zhao, H.Z.: Numerical approximations to the stationary solutions of stochastic differential equations. SIAM J. Numer. Anal. 49(4), 1397–1416 (2011). https://doi.org/10.1137/100797886

Zhao, H.Z., Zheng, Z.H.: Random periodic solutions of random dynamical systems. J. Differ. Equ. 246(5), 2020–2038 (2009). https://doi.org/10.1016/j.jde.2008.10.011

Funding

This work is supported by the Alan Turing Institute under EPSRC Grant EP/N510129/1 and by EPSRC through the Project EP/S026347/1, titled “Unparameterised multi-modal data, high order signatures, and the mathematics of data science.”


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, Y. Backward Euler–Maruyama Method for the Random Periodic Solution of a Stochastic Differential Equation with a Monotone Drift. J Theor Probab 36, 605–622 (2023). https://doi.org/10.1007/s10959-022-01178-w


DOI: https://doi.org/10.1007/s10959-022-01178-w

Keywords

- Random periodic solution

- Stochastic differential equations

- Monotone drift

- Backward Euler–Maruyama method