Abstract

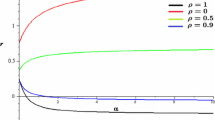

We consider a system of interacting Moran models with seed-banks. Individuals live in colonies and are subject to resampling and migration as long as they are active. Each colony has a seed-bank into which individuals can retreat to become dormant, suspending their resampling and migration until they become active again. The colonies are labelled by \({\mathbb {Z}}^d\), \(d \ge 1\), playing the role of a geographic space. The sizes of the active and the dormant population are finite and depend on the location of the colony. Migration is driven by a random walk transition kernel. Our goal is to study the equilibrium behaviour of the system as a function of the underlying model parameters. In the present paper, we show that under a mild condition on the sizes of the active populations the system is well defined and has a dual. The dual consists of a system of interacting coalescing random walks in an inhomogeneous environment that switch between an active state and a dormant state. We analyse the dichotomy of coexistence (= multi-type equilibria) versus clustering (= mono-type equilibria) and show that clustering occurs if and only if two random walks in the dual starting from arbitrary states eventually coalesce with probability one. The presence of the seed-bank enhances genetic diversity. In the dual this is reflected by the presence of time lapses during which the random walks are dormant and do not move.



1 Background, Motivation and Outline

Dormancy is an evolutionary trait observed in plants, bacteria and other microbial populations, where an organism enters a reversible state of low metabolic activity as a response to adverse environmental conditions. The dormant state of an organism in a population is characterized by interruption of basic reproduction and phenotypic development during periods of environmental stress [24, 29]. The dormant organisms reside in what is called a seed-bank of the population. After a varying and possibly large number of generations, dormant organisms can be resuscitated under more favourable conditions, leave the seed-bank and resume reproduction. This strategy is known to have important implications for the genetic diversity and overall fitness of the underlying population [23, 24], since the seed-bank of a population often acts as a buffer against evolutionary forces such as genetic drift, selection and environmental variability. The importance of dormancy has led to several attempts to model seed-banks from a mathematical perspective ([1, 2]; see also [3] for a broad overview).

In [2] and [1], the Fisher–Wright model with seed-bank was introduced and analysed. In the Fisher–Wright model with seed-bank, individuals live in a colony, are subject to resampling where they adopt each other’s type, and move in and out of the seed-bank where they suspend resampling. The seed-bank acts as a repository for the genetic information of the population. Individuals that reside inside the seed-bank are called dormant and those that reside outside are called active. Both the long-time behaviour and the genealogy of the population were analysed for the continuum model obtained by letting the size of the colony tend to infinity, called the Fisher–Wright diffusion with seed-bank.

In [13,14,15], the continuum model was extended to a spatial setting in which individuals live in multiple colonies, labelled by a countable Abelian group playing the role of a geographic space. In the spatial model with seed-banks, each colony is endowed with its own seed-bank and individuals are allowed to migrate between colonies. The goal was to understand the change in behaviour compared to the spatial model without seed-bank.

Most papers on seed-banks deal with the large-colony-size limit, for which the evolution is described by a system of coupled SDEs. In [19], a multi-colony Fisher–Wright model with seed-banks was introduced where the colony sizes are finite. However, this model is restricted to homogeneous population sizes and a finite geographic space. The present paper introduces an individual-based spatial model with seed-banks in continuous time where the sizes of the underlying populations are finite and vary across colonies. The latter makes the model more interesting from a biological perspective, but raises extra technical challenges. The key tool that we use to tackle these challenges is stochastic duality [4, 11]. The spatial model introduced in this paper fits in the realm of interacting particle systems, which often admit additional structures such as duality [25, 28]. In particular, our spatial model can be viewed as a hybrid of the well-known Voter Model and the generalized Symmetric Exclusion Process, 2j-SEP, \(j\in {\mathbb {N}}/2\) [5, 11, 26]. Both the Voter Model and the 2j-SEP enjoy the stochastic duality property, and our system inherits this as well: it is dual to a system consisting of coalescing random walks with repulsive interactions. The resulting dual process bears a striking resemblance to the dual processes of the Voter Model and the 2j-SEP, because the original process is a modified hybrid of the two. It has been recognized in the literature [1, 2, 23, 24, 31] that qualitatively different behaviour may occur when the exit time of a typical individual from the seed-bank can become large. In the present paper, we are able to model this phenomenon as well, due to the inhomogeneity in the seed-bank sizes. Our main goals are the following:

- (1) Introduce a model with seed-banks whose size is finite and depends on the geographic location of the colony. Prove existence and uniqueness of the process via well-posedness of an associated martingale problem and duality with a system of interacting coalescing random walks.
- (2) Identify a criterion for coexistence (= convergence towards multi-type equilibria) and clustering (= convergence towards mono-type equilibria). Show that there is a one-parameter family of equilibria controlled by the density of types.
- (3) Identify the domain of attraction of the equilibria.
- (4) Identify the parameter regime under which the criterion for clustering is met. In case of clustering, find out how fast the mono-type clusters grow in space-time. In case of coexistence, establish mixing properties of the equilibria.

In the present paper, we settle (1) and (2). In [18], we will address (3) and (4). We focus on the situation where the individuals can be of two types. The extension to infinitely many types, called the Fleming–Viot measure-valued diffusion, only requires standard adaptations and will not be considered here.

The paper is organized as follows: In Sect. 2, we give a quick definition of the model and state our main theorems about the well-posedness, the duality and the clustering criterion. In Sect. 3, we give a more detailed definition of the model, prove that the martingale problem associated with its generator is well-posed, establish duality with an interacting seed-bank coalescent, demonstrate that the system exhibits a dichotomy between clustering and coexistence, and formulate a necessary and sufficient condition for clustering to prevail in terms of the dual, called the clustering criterion. Sections 4–6 are devoted to the proof of our main theorems.

2 Main Theorems

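In Sect. 2.1, we give a quick definition of the system. In Sect. 2.2, we argue that under mild conditions on the sizes of the active population, the system is well defined and has a dual that consists of finitely many interacting coalescing random walks. In Sect. 2.3, we state when the system converges to an equilibrium and characterize, in terms of the dual, whether this equilibrium is mono-type or multi-type.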

2.1 Quick Definition of the Multi-colony System

Individuals live in colonies labelled by \({\mathbb {Z}}^d\), \(d \ge 1\), which plays the role of a geographic space. (In what follows, \({\mathbb {Z}}^d\) can be replaced by any countable Abelian group.) Each colony has an active population and a dormant population. Each individual carries one of two types: \(\heartsuit \) and \(\spadesuit \). Individuals are subject to:

- (1) Active individuals in any colony resample with active individuals in any colony.
- (2) Active individuals in any colony exchange with dormant individuals in the same colony.

For (1) we assume that each active individual at colony i at rate a(i, j) uniformly draws an active individual at colony j and adopts its type. For (2) we assume that each active individual at colony i at rate \(\lambda \) uniformly draws a dormant individual at colony i and the two individuals trade places while keeping their type (i.e. the active individual becomes dormant and the dormant individual becomes active). Note that dormant individuals do not resample.

At each colony i, we register the pair \((X_i(t),Y_i(t))\), representing the number of active, respectively, dormant individuals of type \(\heartsuit \) at time t at colony i. We write \((N_i,M_i)\) to denote the size of the active, respectively, dormant population at colony i. The resulting Markov process is denoted by

\[ Z = (Z(t))_{t\ge 0}, \qquad Z(t) = \big(X_i(t),Y_i(t)\big)_{i\in {\mathbb {Z}}^d}, \tag{2.1} \]

and lives on the state space

\[ {\mathcal {X}} = \prod _{i\in {\mathbb {Z}}^d} [N_i]\times [M_i], \]

where \([n] = \{0,1,\ldots ,n\}\), \(n \in {\mathbb {N}}\). In Sect. 3.2, we will show that under mild assumptions on the model parameters, the Markov process in (2.1) is well defined and has a dual \((Z^*(t))_{t \ge 0}\). The latter consists of finite collections of particles that perform interacting coalescing random walks, with rates that are controlled by the model parameters.

Let \({\mathcal {P}}\) be the set of probability distributions on \({\mathcal {X}}\) defined by

We say that (2.1) exhibits clustering if the distribution of Z(t) converges to a limiting distribution \(\mu \in {\mathcal {P}}\) as \(t\rightarrow \infty \). Otherwise, we say that it exhibits coexistence. In Sect. 3.2, we will show that clustering is equivalent to coalescence occurring eventually with probability 1 in the dual consisting of two particles. This will be the main route to the dichotomy.

For simplicity, we let the exchange rate \(\lambda \in (0,\infty )\) be the same for every colony, and let the migration kernel be translation invariant and irreducible.

Assumption 2.1

(Homogeneous migration) The migration kernel \(a(\cdot ,\cdot )\) satisfies:

- \(a(\cdot ,\cdot )\) is irreducible in \({\mathbb {Z}}^d\).
- \(a(i,j) = a(0,j-i)\) for all \(i,j\in {\mathbb {Z}}^d \).
- \(c:=\displaystyle \sum _{i\in {\mathbb {Z}}^d} a(0,i) < \infty \) and \(a(0,0)=\frac{1}{2}\). \(\Box \)

The second assumption ensures that genetic information moves between colonies in a spatially homogeneous way, while the third ensures that the total rate of resampling is finite and that resampling also takes place within a colony. Since it is crucial for our analysis that the population sizes remain constant, we view migration as a change of types without the individuals actually moving themselves. In this way, genetic information moves between colonies, while the individuals themselves stay put.
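For a concrete kernel, Assumption 2.1 can be checked mechanically. The sketch below is our own illustration, restricted (as an assumption) to \(d=1\) and to a finitely supported kernel given as a map \(k \mapsto a(0,k)\), so that translation invariance holds by construction. Irreducibility on \({\mathbb {Z}}\) is tested via the elementary fact that a subsemigroup of \({\mathbb {Z}}\) containing both positive and negative elements is the subgroup generated by them; hence the non-zero jumps must include both signs and have greatest common divisor 1. The function name `check_assumption_2_1` is hypothetical.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def check_assumption_2_1(kernel):
    """Check Assumption 2.1 for a translation-invariant kernel on Z (d = 1).

    kernel maps a displacement k to a(0, k); a(i, j) is read as a(0, j - i),
    so translation invariance holds by construction. Only finitely supported
    kernels are handled (a simplifying assumption of this sketch).
    """
    if any(rate < 0 for rate in kernel.values()):
        raise ValueError("migration rates must be non-negative")
    # c = sum_k a(0, k): automatically finite for a finite support.
    c = sum(kernel.values())
    a00_is_half = kernel.get(0, 0) == Fraction(1, 2)
    # Irreducibility on Z: the non-zero jumps must generate Z as a semigroup,
    # which holds iff they include both signs and have gcd equal to 1.
    steps = [k for k, rate in kernel.items() if k != 0 and rate > 0]
    both_signs = any(k > 0 for k in steps) and any(k < 0 for k in steps)
    irreducible = both_signs and reduce(gcd, steps, 0) == 1
    return {"c": c, "a00_is_half": a00_is_half, "irreducible": irreducible}


# Nearest-neighbour migration with a(0, 0) = 1/2.
nn = {0: Fraction(1, 2), 1: Fraction(1, 4), -1: Fraction(1, 4)}
print(check_assumption_2_1(nn))
```

A kernel that only jumps by \(\pm 2\) fails the irreducibility test, since the walk then never leaves the even or the odd sites.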

We write \(K_i = \frac{N_i}{M_i}\), \(i\in {\mathbb {Z}}^d\), to denote the ratio of the size of the active and the dormant population in colony i.

2.2 Well-Posedness and Duality

Theorem 2.2

(Well-posedness and duality) Suppose that Assumption 2.1 is in force. Then, the Markov process \((Z(t))_{t\ge 0}\) in (2.1) has a factorial moment dual \((Z^*(t))_{t\ge 0}\) living in the state space \({\mathcal {X}}^*\subset {\mathcal {X}}\) consisting of all configurations with finite mass, and the martingale problem associated with (2.1) is well posed under either of the following two conditions:

- (a) \(\lim _{\Vert i\Vert \rightarrow \infty } \Vert i\Vert ^{-1} \log N_i = 0\) and \(\sum _{i\in {\mathbb {Z}}^d} \mathrm{e}^{\delta \Vert i\Vert } a(0,i)<\infty \) for some \(\delta >0\);
- (b) \(\sup _{i\in {\mathbb {Z}}^d\backslash \{0\}} \Vert i\Vert ^{-\gamma } N_i < \infty \) and \(\sum _{i\in {\mathbb {Z}}^d} \Vert i\Vert ^{d+\gamma +\delta } a(0,i)<\infty \) for some \(\gamma >0\) and some \(\delta >0\).

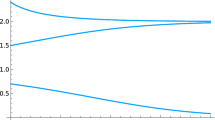

Theorem 2.2 provides us with two sufficient conditions under which the system is well-defined and has a tractable dual. It shows a trade-off: the more we restrict the tails of the migration kernel, the less we need to restrict the sizes of the active population. The sizes of the dormant population play no role because all the events (resampling, migration and exchange) in our model are initiated by active individuals and dormant individuals do not feel the spatial extent of the geographic space. Theorem 3.10, Corollary 3.11 and Theorem 3.13 in Sect. 3.2 contain the fine details.

2.3 Equilibrium: Coexistence Versus Clustering

Theorem 2.3

(Equilibrium) If the initial distribution of the system is such that each active and each dormant individual adopts a type with the same probability independently of other individuals, then the system admits a one-parameter family of equilibria.

- The family of equilibria is parameterized by the probability to have one of the two types.
- The system converges to a mono-type equilibrium if and only if two random walks in the dual starting from arbitrary states eventually coalesce with probability one.

Theorem 2.3 tells us that the system converges to an equilibrium when it is started from a specific class of initial distributions, namely products of binomials. It also provides a criterion in terms of the dual that determines whether the equilibrium is mono-type or multi-type. Theorem 3.14, Corollary 3.15 and Theorem 3.17 in Sect. 3.2 contain the fine details.
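The initial laws in Theorem 2.3 are easy to sample: if every active and every dormant individual independently carries type \(\heartsuit \) with probability \(\theta \), then \(X_i\) and \(Y_i\) are independent binomials with parameters \((N_i,\theta )\) and \((M_i,\theta )\). The sketch below draws such a configuration on a finite window of colonies. The window, the colony sizes and the names `binomial_initial_state` and `theta` are illustrative assumptions of ours; the actual system lives on all of \({\mathbb {Z}}^d\).

```python
import random


def binomial_initial_state(sizes, theta, rng):
    """Sample (X_i, Y_i) on a finite window of colonies.

    sizes maps a colony label i to (N_i, M_i). Every active and every dormant
    individual independently gets type heart with probability theta, so that
    X_i ~ Bin(N_i, theta) and Y_i ~ Bin(M_i, theta), all independent.
    """
    if not 0 <= theta <= 1:
        raise ValueError("theta must be a probability")
    state = {}
    for i, (n_i, m_i) in sizes.items():
        x_i = sum(rng.random() < theta for _ in range(n_i))
        y_i = sum(rng.random() < theta for _ in range(m_i))
        state[i] = (x_i, y_i)
    return state


# Colony sizes varying with i on the window {-5, ..., 5} (illustrative only).
window = {i: (3 + abs(i), 2 + i % 3) for i in range(-5, 6)}
print(binomial_initial_state(window, 0.3, random.Random(1)))
```

Here \(\theta \) plays the role of the type density that indexes the one-parameter family of equilibria.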

3 Basic Theorems: Duality, Well-Posedness and Clustering Criterion

In Sect. 3.1, we define and analyse the single-colony model. In Sect. 3.2, we do the same for the multi-colony model. Our focus is on well-posedness, duality and convergence to equilibrium.

3.1 Single-Colony Model

3.1.1 Definition: Resampling and Exchange

Consider two populations, called active and dormant, consisting of N and M haploid individuals, respectively. Individuals in the population carry one of two genetic types: \(\heartsuit \) and \(\spadesuit \). Dormant individuals reside inside the seed-bank, active individuals reside outside. The dynamics of the single-colony Moran model with seed-bank is as follows:

- Each individual in the active population carries a resampling clock that rings at rate 1. When the clock rings, the individual chooses an active individual uniformly at random and adopts its type.
- Each individual in the active population also carries an exchange clock that rings at rate \(\lambda \). When the clock rings, the individual chooses a dormant individual uniformly at random and the two swap states: the active individual becomes dormant and the chosen dormant individual becomes active. During the exchange, both individuals retain their type.

Since the sizes of the two populations remain constant, we only need two variables to describe the dynamics of the population, namely the numbers of type-\(\heartsuit \) individuals in the active and in the dormant population (see Table 1).

Let x and y denote the number of individuals of type \(\heartsuit \) in the active and the dormant population, respectively. After a resampling event, (x, y) can change to \((x-1,y)\) or \((x+1,y)\), while after an exchange event (x, y) can change to \((x-1,y+1)\) or \((x+1,y-1)\). Both changes in the resampling event occur at rate \(x\frac{N-x}{N}\). In the exchange event, however, to see (x, y) change to \((x-1,y+1)\), an exchange clock of a type-\(\heartsuit \) individual in the active population has to ring (which happens at rate \(\lambda x\)), and that individual has to choose a type-\(\spadesuit \) individual in the dormant population (which happens with probability \(\frac{M-y}{M}\)). Hence, the total rate at which (x, y) changes to \((x-1,y+1)\) is \(\lambda x \frac{M-y}{M}\). By the same argument, the total rate at which (x, y) changes to \((x+1,y-1)\) is \(\lambda (N-x)\frac{y}{M}\).

For convenience we multiply the rate of resampling by a factor \(\frac{1}{2}\), in order to make it compatible with the Fisher–Wright model. Thus, the generator G of the process is given by

where

describes the Moran resampling of active individuals at rate \(\frac{1}{2}\) and

describes the exchange between active and dormant individuals at rate \(\lambda \). From here onwards, we denote the Markov process associated with the generator G by

\[ Z = (Z(t))_{t\ge 0} = \big((X(t),Y(t))\big)_{t\ge 0}, \]

where X(t) and Y(t) are the number of type-\(\heartsuit \) active and dormant individuals at time t, respectively. The process Z has state space \([N]\times [M]\), where \([N]=\{0,1,\ldots ,N\}\) and \([M]=\{0,1,\ldots ,M\}\). Note that Z is well defined because it is a continuous-time Markov chain with finitely many states.
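Collecting the rates derived above, with the factor \(\frac{1}{2}\) on resampling, the generator G acting on functions \(f:[N]\times [M]\rightarrow {\mathbb {R}}\) should read as follows. This is our reconstruction from the rates in the text rather than the paper's own display, and the labels \(G_{\mathrm {Res}}\) and \(G_{\mathrm {Exc}}\) for the resampling and exchange parts are ours.

```latex
\begin{aligned}
(Gf)(x,y) &= (G_{\mathrm{Res}}f)(x,y) + (G_{\mathrm{Exc}}f)(x,y),\\
(G_{\mathrm{Res}}f)(x,y) &= \tfrac{1}{2}\,x\,\tfrac{N-x}{N}\,\big[f(x-1,y)-f(x,y)\big]
  + \tfrac{1}{2}\,(N-x)\,\tfrac{x}{N}\,\big[f(x+1,y)-f(x,y)\big],\\
(G_{\mathrm{Exc}}f)(x,y) &= \lambda\,x\,\tfrac{M-y}{M}\,\big[f(x-1,y+1)-f(x,y)\big]
  + \lambda\,(N-x)\,\tfrac{y}{M}\,\big[f(x+1,y-1)-f(x,y)\big].
\end{aligned}
```

All rates vanish whenever a jump would leave \([N]\times [M]\) (for instance, the first resampling term vanishes at \(x=0\)), so no boundary conventions are needed.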

3.1.2 Duality and Equilibrium

The classical Moran model is known to be dual to the block-counting process of the Kingman coalescent. In this section, we show that the single-colony Moran model with seed-bank also has a coalescent dual.

Definition 3.1

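(Block-counting process) The block-counting process of the interacting seed-bank coalescent (defined in Definition 3.5) is the continuous-time Markov chain

\[ Z^* = (Z^*(t))_{t\ge 0} = (n_t,m_t)_{t\ge 0}, \]

taking values in the state space \([N] \times [M]\) with transition rates

\[ (n,m) \longrightarrow \begin{cases} (n-1,m+1) & \text{at rate } \lambda \, n\, \frac{M-m}{M},\\[2pt] (n+1,m-1) & \text{at rate } \lambda K\, m\, \frac{N-n}{N},\\[2pt] (n-1,m) & \text{at rate } \frac{1}{2}\, n\, \frac{n-1}{N}, \end{cases} \tag{3.6} \]

where \(K=\frac{N}{M}\) is the ratio of the sizes of the active and the dormant population. \(\square \)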

The first two transitions in (3.6) correspond to exchange, the third transition to resampling. Later in this section we describe the associated interacting seed-bank coalescent process, which gives the genealogy of Z.
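Because the block-counting process lives on a finite set, quantities such as the mean time until a sample is reduced to a single lineage (\(n+m=1\)) can be computed exactly by first-step analysis. The sketch below does this in rational arithmetic, using the rates \((n,m)\rightarrow (n-1,m+1)\) at \(\lambda n\frac{M-m}{M}\), \((n,m)\rightarrow (n+1,m-1)\) at \(\lambda K m\frac{N-n}{N}\) and \((n,m)\rightarrow (n-1,m)\) at \(\frac{1}{2}n\frac{n-1}{N}\), which match the particle description given later in this section (Fig. 1). It assumes \(N\ge 2\): for \(N=1\) two lineages can never be active together and hence never merge. The helper names are hypothetical.

```python
from fractions import Fraction


def dual_rates(n, m, N, M, lam):
    """Transition rates of the block-counting process Z* = (n, m)."""
    rates = {}
    if n >= 1 and m < M:  # an active lineage enters the seed-bank
        rates[(n - 1, m + 1)] = lam * n * Fraction(M - m, M)
    if m >= 1 and n < N:  # a dormant lineage becomes active
        rates[(n + 1, m - 1)] = lam * Fraction(N, M) * m * Fraction(N - n, N)
    if n >= 2:  # two active lineages coalesce
        rates[(n - 1, m)] = Fraction(n * (n - 1), 2 * N)
    return rates


def expected_time_to_one_lineage(N, M, lam):
    """Mean time until n + m = 1, for every start (n, m) with n + m >= 2."""
    if N < 2:
        raise ValueError("need N >= 2; otherwise active lineages cannot meet")
    states = [(n, m) for n in range(N + 1) for m in range(M + 1) if n + m >= 2]
    index = {s: k for k, s in enumerate(states)}
    size = len(states)
    # First-step analysis: q(s) T(s) - sum_{s'} r(s, s') T(s') = 1.
    A = [[Fraction(0)] * size for _ in range(size)]
    b = [Fraction(1)] * size
    for s in states:
        row = index[s]
        for target, rate in dual_rates(*s, N, M, lam).items():
            A[row][row] += rate
            if target in index:  # states with n + m = 1 have T = 0
                A[row][index[target]] -= rate
    # Gauss-Jordan elimination in exact rational arithmetic.
    for col in range(size):
        pivot = next(r for r in range(col, size) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(size):
            if r != col and A[r][col] != 0:
                factor = A[r][col] / A[col][col]
                A[r] = [u - factor * v for u, v in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return {s: b[index[s]] / A[index[s]][index[s]] for s in states}


times = expected_time_to_one_lineage(N=2, M=1, lam=1)
print(times)
```

For \(N=2\), \(M=1\), \(\lambda =1\) the system is small enough to solve by hand: from \((2,0)\) the chain either coalesces (rate \(\frac{1}{2}\)) or moves to \((1,1)\) (rate 2), and \((1,1)\) returns to \((2,0)\) at rate 1, which gives mean times 6, 7 and 9 from \((2,0)\), \((1,1)\) and \((2,1)\).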

The following result gives the duality between Z and \(Z^*\).

Theorem 3.2

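(Duality) The process Z is dual to the process \(Z^*=(n_t,m_t)_{t\ge 0}\) via the duality relation

\[ {\mathbb {E}}\left[\frac{\binom{X(t)}{n}}{\binom{N}{n}}\,\frac{\binom{Y(t)}{m}}{\binom{M}{m}}\right] = {\mathbb {E}}\left[\frac{\binom{X}{n_t}}{\binom{N}{n_t}}\,\frac{\binom{Y}{m_t}}{\binom{M}{m_t}}\right], \qquad t\ge 0, \tag{3.7} \]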

where \({\mathbb {E}}\) stands for generic expectation. On the left the expectation is taken over Z with initial state \(Z(0)=(X,Y)\in [N]\times [M]\); on the right the expectation is taken over \(Z^*\) with initial state \(Z^*(0)=(n,m)\in [N]\times [M]\).

Note that the duality relation fixes the factorial moments and thereby the mixed moments of the random vector (X(t), Y(t)). This enables us to determine the equilibrium distribution of Z.

Although the above duality is new in the literature on seed-banks, the notion of factorial duality is not uncommon in mathematical models involving finite and fixed population sizes [8, 12]. Similar types of dualities are often found for other models too (e.g. self-duality of independent random walks, exclusion and inclusion processes, etc. [11]). Remarkably, in the special case where \(N=M=2j\) for some \(j\in {\mathbb {N}}/2,\) Giardinà et al. (2009) [11, Section 3.2] identified the same duality relation as in (3.7) as a self-duality for the generalized 2j-SEP on two sites. This is not surprising, since the exchange rates between active and dormant individuals defined in Table 1 are precisely the rates (up to rescaling) for the 2j-SEP on two sites. We refer the reader to Sect. 4.1 for further insight into this.
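For a finite chain, a duality relation of this type follows from the generator identity \(G D(\cdot ,w)(z) = G^* D(z,\cdot )(w)\) for all states z and w, where \(D((x,y),(n,m)) = \binom{x}{n}\binom{y}{m}/\big(\binom{N}{n}\binom{M}{m}\big)\) is the factorial-moment function. The sketch below checks this identity exactly, with rational arithmetic, for small N and M. It is our own numerical sanity check, not part of the paper's proof; the rates are those of Sect. 3.1.1 (resampling at rate \(\frac{1}{2}\)) and of the block-counting process, and all function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def moran_seedbank_rates(x, y, N, M, lam):
    """Jump rates of Z = (X, Y): resampling at rate 1/2, exchange at rate lam."""
    rates = {}
    res = Fraction(x * (N - x), 2 * N)
    if res:  # non-zero only when 0 < x < N
        rates[(x - 1, y)] = res
        rates[(x + 1, y)] = res
    if x > 0 and y < M:  # active heart swaps with dormant spade
        rates[(x - 1, y + 1)] = lam * x * Fraction(M - y, M)
    if y > 0 and x < N:  # active spade swaps with dormant heart
        rates[(x + 1, y - 1)] = lam * (N - x) * Fraction(y, M)
    return rates


def dual_rates(n, m, N, M, lam):
    """Jump rates of the block-counting process Z* = (n, m)."""
    rates = {}
    if n >= 1 and m < M:
        rates[(n - 1, m + 1)] = lam * n * Fraction(M - m, M)
    if m >= 1 and n < N:
        rates[(n + 1, m - 1)] = lam * Fraction(N, M) * m * Fraction(N - n, N)
    if n >= 2:
        rates[(n - 1, m)] = Fraction(n * (n - 1), 2 * N)
    return rates


def duality_fn(z, w, N, M):
    """D((x, y), (n, m)) = C(x, n) C(y, m) / (C(N, n) C(M, m))."""
    (x, y), (n, m) = z, w
    return Fraction(comb(x, n) * comb(y, m), comb(N, n) * comb(M, m))


def apply_generator(rates, f, s):
    """(Af)(s) = sum over targets t of rates[t] * (f(t) - f(s))."""
    return sum((r * (f(t) - f(s)) for t, r in rates.items()), Fraction(0))


def check_duality(N, M, lam):
    """Compare G acting on z with G* acting on w, for all pairs (z, w)."""
    grid = [(a, b) for a in range(N + 1) for b in range(M + 1)]
    for z in grid:
        for w in grid:
            lhs = apply_generator(moran_seedbank_rates(*z, N, M, lam),
                                  lambda s: duality_fn(s, w, N, M), z)
            rhs = apply_generator(dual_rates(*w, N, M, lam),
                                  lambda s: duality_fn(z, s, N, M), w)
            if lhs != rhs:
                return False
    return True


print(check_duality(3, 2, Fraction(7, 10)))
```

Exact fractions are used so that the comparison tests equality rather than closeness.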

Proposition 3.3

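(Convergence of moments) For any \((X,Y),(n,m)\in [N]\times [M]\) with \((n,m)\ne (0,0)\),

\[ \lim _{t\rightarrow \infty } {\mathbb {E}}\left[\frac{\binom{X(t)}{n}}{\binom{N}{n}}\,\frac{\binom{Y(t)}{m}}{\binom{M}{m}}\right] = \frac{X+Y}{N+M}, \]

where the expectation is taken over Z with initial state \(Z(0)=(X,Y)\).

Since the vector (X(t), Y(t)) takes values in \([N]\times [M]\), which has \((N+1)(M+1)\) points, and a distribution on this finite set is determined by its mixed factorial moments of orders \((n,m)\in [N]\times [M]\), the above proposition determines the limiting distribution of (X(t), Y(t)).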

Corollary 3.4

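(Equilibrium) Suppose that Z starts from initial state \((X,Y)\in [N]\times [M]\). Then, (X(t), Y(t)) converges in law as \(t\rightarrow \infty \) to a random vector \((X_\infty ,Y_\infty )\) whose distribution is given by

\[ {\mathbb {P}}\big((X_\infty ,Y_\infty )=(N,M)\big) = \frac{X+Y}{N+M}, \qquad {\mathbb {P}}\big((X_\infty ,Y_\infty )=(0,0)\big) = \frac{N+M-X-Y}{N+M}. \]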

Note that the equilibrium behaviour of Z is the same as for the classical Moran model without seed-bank. The fixation probability of type \(\heartsuit \) is \(\tfrac{X+Y}{N+M}\), which is nothing but the initial frequency of type-\(\heartsuit \) individuals in the entire population. Even though the presence of the seed-bank delays the time of fixation, because the seed-bank is finite it has no significant effect on the overall qualitative behaviour of the process. We will see in Sect. 3.2 that the situation is different in the multi-colony model.
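A direct way to see the fixation probability is that \(X+Y\) is a martingale: resampling moves \(X\) up or down by one at equal rates, and exchange leaves \(X+Y\) unchanged. Since \((0,0)\) and \((N,M)\) are the only absorbing states, optional stopping gives \(\tfrac{X+Y}{N+M}\). The Monte Carlo sketch below, a hypothetical helper of ours, illustrates this; it only follows the jump chain, because the holding times do not affect where the chain is absorbed.

```python
import random


def simulate_until_fixation(x, y, N, M, lam, rng):
    """Run the single-colony chain from (x, y) until one type has fixed.

    Returns True if the chain is absorbed in (N, M), i.e. all individuals are
    of type heart. Resampling carries the factor 1/2 used in the generator G.
    """
    while (x, y) not in {(0, 0), (N, M)}:
        moves = [
            ((x - 1, y), 0.5 * x * (N - x) / N),
            ((x + 1, y), 0.5 * x * (N - x) / N),
            ((x - 1, y + 1), lam * x * (M - y) / M),
            ((x + 1, y - 1), lam * (N - x) * y / M),
        ]
        # Only the jump chain matters for the absorbing state, so the
        # exponential holding times are not sampled. Zero-rate moves are
        # never selected by random.choices.
        targets = [target for target, _ in moves]
        weights = [rate for _, rate in moves]
        x, y = rng.choices(targets, weights=weights)[0]
    return (x, y) == (N, M)


rng = random.Random(2024)
runs = 4000
hits = sum(simulate_until_fixation(2, 1, 4, 3, 1.0, rng) for _ in range(runs))
print(hits / runs, 3 / 7)
```

With \(N=4\), \(M=3\) and \((X,Y)=(2,1)\), the estimate should be close to \(\tfrac{3}{7}\).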

3.1.3 Interacting Seed-Bank Coalescent

In our model, the genealogy of a sample taken from the finite population of \(N+M\) individuals is governed by a partition-valued coalescent process, similar to the genealogy of the classical Moran model. However, due to the presence of the seed-bank, blocks of a partition are marked as A (active) and D (dormant). Unlike in the genealogy of the classical Moran model, the blocks interact with each other. This interaction is present because of the restriction to finite size of the active and the dormant population. For this reason, we name the block process an interacting seed-bank coalescent. For convenience, we will use the word lineage to refer to a block in a partition.

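Let \({\mathcal {P}}_k\) be the set of partitions of \(\{1,2,\ldots ,k\}\). For \(\xi \in {\mathcal {P}}_k\), denote the number of lineages in \(\xi \) by \(|\xi |\). Furthermore, for \(j,k,l\in {\mathbb {N}}\), define

\[ {\mathcal {M}}_{j,k,l} = \Big \{\varvec{u}\in \{A,D\}^j \,:\, |\{r : u_r = A\}| \le k,\ |\{r : u_r = D\}| \le l\Big \}. \]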

The state space of the process is \({\mathcal {P}}_{N,M}=\{(\xi ,\varvec{u})\,:\,\xi \in {\mathcal {P}}_{N+M},\varvec{u}\in {\mathcal {M}}_{|\xi |,N,M}\}\). Note that \({\mathcal {P}}_{N,M}\) contains only those marked partitions of \(\{1,2,\ldots ,N+M\}\) that have at most N active lineages and M dormant lineages. This is because we can only sample at most N active and M dormant individuals from the population.
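To make the constraint in \({\mathcal {P}}_{N,M}\) concrete, the sketch below enumerates marked partitions for small N and M by brute force: every partition of \(\{1,\ldots ,N+M\}\) together with every A/D marking of its blocks that uses at most N marks A and at most M marks D. Attaching the marks to the blocks in the order in which the generator lists them is a convention of this sketch, and the function names are hypothetical. For \(N=2\), \(M=1\) the count is 14: one one-block partition with 2 admissible markings, three two-block partitions with 3 each, and one three-block partition with 3.

```python
from itertools import product


def set_partitions(elements):
    """Yield every partition of the list `elements` as a list of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        yield [[first]] + partition  # `first` opens a new block
        for k in range(len(partition)):  # or joins an existing block
            yield partition[:k] + [[first] + partition[k]] + partition[k + 1:]


def marked_partitions(N, M):
    """Yield (partition, marks) pairs with at most N blocks marked A and
    at most M blocks marked D; marks[r] is the mark of the r-th block."""
    for partition in set_partitions(list(range(1, N + M + 1))):
        for marks in product("AD", repeat=len(partition)):
            if marks.count("A") <= N and marks.count("D") <= M:
                yield partition, marks


print(sum(1 for _ in marked_partitions(2, 1)))
```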

Before we give the formal definition, let us adopt some notation. For \(\pi ,\pi ^\prime \in {\mathcal {P}}_{N,M}\), we say that \(\pi \succ \pi ^\prime \) if \(\pi ^\prime \) can be obtained from \(\pi \) by merging two active lineages. Similarly, we say that \(\pi \bowtie \pi ^\prime \) if \(\pi ^\prime \) can be obtained from \(\pi \) by altering the state of a single lineage (\(A\rightarrow D\) or \(D\rightarrow A\)). We write \(|\pi |_A\) and \(|\pi |_D\) to denote the number of active and dormant lineages present in \(\pi \), respectively.

Definition 3.5

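(Interacting seed-bank coalescent) The interacting seed-bank coalescent is the continuous-time Markov chain with state space \({\mathcal {P}}_{N,M}\) characterized by the following transition rates:

\[ \pi \longrightarrow \pi ^\prime \quad \text{at rate} \quad \begin{cases} \frac{1}{N} & \text{if } \pi \succ \pi ^\prime ,\\[2pt] \lambda \left(1-\frac{|\pi |_D}{M}\right) & \text{if } \pi \bowtie \pi ^\prime \text{ and an active lineage becomes dormant},\\[2pt] \lambda K\left(1-\frac{|\pi |_A}{N}\right) & \text{if } \pi \bowtie \pi ^\prime \text{ and a dormant lineage becomes active}. \end{cases} \]

\(\square \)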

The factor \(1-\frac{|\pi |_D}{M}\) in the rate at which a single active lineage of \(\pi \) becomes dormant reflects the fact that, as the seed-bank fills up, it becomes more difficult for an active lineage to enter it. Similarly, as the number of active lineages decreases due to coalescence, it becomes easier for a dormant lineage to leave the seed-bank and become active. Thus, there is a repulsive interaction between lineages in the same state (A or D), which makes the coalescent tricky to study. As N, M get large, the interaction becomes weak: when both time and the parameters are scaled properly, the coalescent converges weakly as \(N,M\rightarrow \infty \) to the seed-bank coalescent described in [1], where the interaction is no longer present.

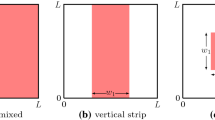

Fig. 1 Scheme of transitions for an interacting particle system with an active reservoir of size \(N=6\) and a dormant reservoir of size \(M=2\), so that \(K=\tfrac{N}{M}=\tfrac{6}{2}=3\). The effective rate for each of n active particles to become dormant is \(\lambda \tfrac{M-m}{M}\) when the dormant reservoir has m particles. Similarly, the effective rate for each of m dormant particles to become active is \(\lambda K \tfrac{N-n}{N}\) when the active reservoir has n particles.

We can also describe the coalescent in terms of an interacting particle system with the help of a graphical representation (see Fig. 1). The interacting particle system consists of two reservoirs, called active reservoir and dormant reservoir, having N and M labelled sites, respectively, each of which can be occupied by at most one particle. The particles in the active and dormant reservoir are called active and dormant particles, respectively. The active particles can coalesce with each other, in the sense that if an active particle occupies a labelled site where an active particle is present already, then the two particles are glued together to form a single particle at that site. Active particles can become dormant by moving to an empty site in the dormant reservoir, while dormant particles can become active by moving to an empty site in the active reservoir. The transition rates are as follows:

- An active particle tries to coalesce with another active particle at rate \(\frac{1}{2}\) by choosing a labelled site in the active reservoir uniformly at random. If the chosen site is empty or is its own site, then nothing happens; otherwise, it coalesces with the active particle present at the chosen site.
- An active particle tries to become dormant at rate \(\lambda \) by choosing a labelled site in the dormant reservoir uniformly at random. If the chosen site is empty, then it moves there and becomes dormant; otherwise, it remains in the active reservoir.
- A dormant particle tries to become active at rate \(\lambda K\) by choosing a labelled site in the active reservoir uniformly at random. If the chosen site is empty, then it moves there and becomes active; otherwise, it remains in the dormant reservoir.

Clearly, the particles interact with each other because of the finite capacity of the two reservoirs. If \(N,M\rightarrow \infty \), then the probability of choosing an empty site in a reservoir tends to 1, and so the system converges (after proper scaling) to a particle system in which the particles move independently between the two reservoirs.
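The labelled-site description lends itself to direct simulation. The sketch below, a hypothetical helper of ours and not code from the paper, gives every particle its own clocks, attempts a move to a uniformly chosen site when a clock rings, and discards the attempt when the rules above forbid it. Its numbers of active and dormant particles should then follow the block-counting process of Definition 3.1. For example, for \(N=2\), \(M=1\), \(\lambda =1\) and two active particles, first-step analysis on those rates gives an expected time of 6 until one particle remains, which the Monte Carlo mean should approach. The sketch assumes \(N\ge 2\).

```python
import random


def clock_ring(active, dormant, lam, rng):
    """Advance the labelled-site particle system by one clock ring.

    active / dormant are lists of booleans (True = site occupied) of lengths
    N and M. Each active particle carries a coalescence clock (rate 1/2) and
    an exchange clock (rate lam); each dormant particle a clock of rate lam*K.
    Returns the exponential time until the ring.
    """
    N, M = len(active), len(dormant)
    act = [s for s, occupied in enumerate(active) if occupied]
    dor = [s for s, occupied in enumerate(dormant) if occupied]
    weights = [0.5 * len(act), lam * len(act), lam * (N / M) * len(dor)]
    wait = rng.expovariate(sum(weights))
    kind = rng.choices(["coalesce", "sleep", "wake"], weights=weights)[0]
    if kind == "coalesce":
        s, target = rng.choice(act), rng.randrange(N)
        if target != s and active[target]:
            active[s] = False  # glued onto the particle at `target`
    elif kind == "sleep":
        s, target = rng.choice(act), rng.randrange(M)
        if not dormant[target]:
            active[s], dormant[target] = False, True
    else:
        s, target = rng.choice(dor), rng.randrange(N)
        if not active[target]:
            dormant[s], active[target] = False, True
    return wait


def time_to_one_particle(N, M, lam, n0, m0, rng):
    """Run from n0 active and m0 dormant particles until one is left."""
    if N < 2:
        raise ValueError("need N >= 2 for active particles to coalesce")
    active = [k < n0 for k in range(N)]
    dormant = [k < m0 for k in range(M)]
    t = 0.0
    while sum(active) + sum(dormant) > 1:
        t += clock_ring(active, dormant, lam, rng)
    return t


rng = random.Random(7)
runs = 4000
print(sum(time_to_one_particle(2, 1, 1.0, 2, 0, rng) for _ in range(runs)) / runs)
```

Attempts that are discarded still consume a clock ring, which is what makes the effective rates carry the factors \(\tfrac{M-m}{M}\), \(\tfrac{N-n}{N}\) and \(\tfrac{n-1}{N}\).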

Note that if we define \(n_t=\) number of active particles at time t and \(m_t=\) number of dormant particles at time t, then \(Z^*=(n_t,m_t)_{t\ge 0}\) is the block-counting process defined in Definition 3.1. Also, if we remove the labels of the sites in the two reservoirs and represent the particle configuration by an element of \({\mathcal {P}}_{N,M}\), then we obtain the interacting seed-bank coalescent described in Definition 3.5. Even though it is natural to describe the genealogical process via a partition-valued stochastic process, we will stick with the interacting particle system description of the dual, since this will be more convenient for the multi-colony model.

3.2 Multi-colony Model

In this section, we consider multiple colonies, each with their own seed-bank. Each colony has an active population and a dormant population. We take \({\mathbb {Z}}^d\) as the underlying geographic space where the colonies are located (any countable Abelian group will do). With each colony \(i\in {\mathbb {Z}}^d\) we associate a variable \((X_i,Y_i)\), with \(X_i\) and \(Y_i\) the number of type-\(\heartsuit \) active and dormant individuals, respectively, at colony i. Let \((N_i,M_i)\) denote the size of the active and the dormant population at colony i. In each colony, active individuals are subject to resampling and migration, and to exchange with dormant individuals that are in the same colony. Dormant individuals are not subject to resampling and migration.

Since it is crucial for our duality to keep the population sizes constant, we consider migration of types without the individuals actually moving themselves. To be precise, by a migration from colony j to colony i we mean that an active individual from colony i randomly chooses an active individual from colony j and adopts its type. In this way, the genetic information moves from colony j to colony i, while the individuals themselves stay put.

3.2.1 Definition: Resampling, Exchange and Migration

We assume that each active individual at colony i resamples from colony j at rate a(i, j), adopting the type of a uniformly chosen active individual at colony j. Here, the migration kernel \(a(\cdot ,\cdot )\) is assumed to satisfy Assumption 2.1. After a migration to colony i, the only variable that is affected is \(X_i\), the number of type-\(\heartsuit \) active individuals at colony i. The final state can be either \(X_i-1\) or \(X_i+1\), depending on whether a type-\(\heartsuit \) active individual from colony i chooses a type-\(\spadesuit \) active individual from colony j or a type-\(\spadesuit \) active individual from colony i chooses a type-\(\heartsuit \) active individual from colony j. The rate at which \(X_i\) changes to \(X_i-1\) due to a migration from colony j is

\[ a(i,j)\, X_i\, \frac{N_j-X_j}{N_j}, \]

while the rate at which \(X_i\) changes to \(X_i+1\) due to a migration from colony j is

\[ a(i,j)\, (N_i-X_i)\, \frac{X_j}{N_j}. \]

Note that for \(i=j\) the migration rate in either direction is

\[ a(i,i)\, X_i\, \frac{N_i-X_i}{N_i} = \frac{1}{2}\, X_i\, \frac{N_i-X_i}{N_i}, \]

which is the same as the effective birth and death rate in the single-colony Moran model. Thus, the resampling within each colony is already taken care of via the migration.
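Summing over j gives the total rates at which \(X_i\) goes down or up. The sketch below does this on a ring \({\mathbb {Z}}/L{\mathbb {Z}}\), a hypothetical finite stand-in for \({\mathbb {Z}}^d\) (the geographic space of the paper is infinite), reading the verbal description as: \(X_i\) decreases at rate \(a(i,j)X_i\frac{N_j-X_j}{N_j}\) and increases at rate \(a(i,j)(N_i-X_i)\frac{X_j}{N_j}\). With only the self-term \(a(0,0)=\frac{1}{2}\), both totals reduce to the single-colony rate \(\frac{1}{2}X_i\frac{N_i-X_i}{N_i}\). The function name is ours.

```python
from fractions import Fraction


def migration_rates(X, N, kernel, i):
    """Total rates at which X_i decreases / increases by one via resampling.

    X[j] and N[j] are the type-heart count and size of the active population
    at colony j of a ring Z/LZ (a finite stand-in for Z^d, our assumption).
    kernel[k] = a(0, k), with j = i + k taken modulo L; a(i, j) = a(0, j - i).
    """
    L = len(X)
    down = up = Fraction(0)
    for k, rate in kernel.items():
        j = (i + k) % L
        # A heart at i copies a spade at j (down), or a spade at i copies
        # a heart at j (up); j = i is resampling within colony i.
        down += rate * X[i] * Fraction(N[j] - X[j], N[j])
        up += rate * (N[i] - X[i]) * Fraction(X[j], N[j])
    return down, up


nn = {0: Fraction(1, 2), 1: Fraction(1, 4), -1: Fraction(1, 4)}
print(migration_rates([0, 4, 1], [3, 4, 2], nn, 1))
```

In the example, colony 1 is entirely of type \(\heartsuit \), so it can only lose \(\heartsuit \) individuals, through copying from its \(\spadesuit \)-containing neighbours.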

It remains to define the associated exchange mechanism between the active and the dormant individuals in a colony. The exchange mechanism is the same as in the single-colony model, i.e. in each colony each active individual at rate \(\lambda \) performs an exchange with a dormant individual chosen uniformly from the seed-bank of that colony. For simplicity, we take the exchange rate \(\lambda \) to be the same in each colony.

The state space \({\mathcal {X}}\) of the process is

\[ {\mathcal {X}} = \prod _{i\in {\mathbb {Z}}^d} [N_i]\times [M_i]. \]

A configuration \(\eta \in {\mathcal {X}}\) is denoted by \(\eta = (X_i,Y_i)_{i\in {\mathbb {Z}}^d}\), with \(X_i\in [N_i]\) and \(Y_i\in [M_i]\).

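For each \(i\in {\mathbb {Z}}^d\), let \(\varvec{\delta }_{i,A}=(X_n,Y_n)_{n\in {\mathbb {Z}}^d}\) and \(\varvec{\delta }_{i,D}=({\hat{X}}_n,{\hat{Y}}_n)_{n\in {\mathbb {Z}}^d}\) be the configurations defined as

\[ (X_n,Y_n) = \begin{cases} (1,0) & \text{if } n=i,\\ (0,0) & \text{if } n\ne i, \end{cases} \qquad ({\hat{X}}_n,{\hat{Y}}_n) = \begin{cases} (0,1) & \text{if } n=i,\\ (0,0) & \text{if } n\ne i. \end{cases} \]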

For two configurations \(\eta = ({\bar{X}}_i,{\bar{Y}}_i)_{i\in {\mathbb {Z}}^d}\) and \(\xi = ({\hat{X}}_i,{\hat{Y}}_i)_{i\in {\mathbb {Z}}^d}\), we define \(\eta \pm \xi := (X_i,Y_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}\) by setting, for each \(i\in {\mathbb {Z}}^d\),

Throughout the remainder of this paper, we adopt the convention given in (3.14) for addition and subtraction of configurations in \({\mathcal {X}}\).

The generator L for the process, acting on functions in

is given by

where

describes the resampling of active individuals in different colonies (= migration),

describes the resampling of active individuals in the same colony, and

describes the exchange of active and dormant individuals in the same colony.
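Putting together the rates of Sect. 3.2.1, and using the configurations \(\varvec{\delta }_{i,A}\), \(\varvec{\delta }_{i,D}\) with the addition convention (3.14), one would expect the three parts of L to act on a configuration \(\eta = (X_i,Y_i)_{i\in {\mathbb {Z}}^d}\) as follows. This is our reconstruction from the stated rates, not the paper's display (3.16); the labels \(L_{\mathrm {Mig}}\), \(L_{\mathrm {Res}}\), \(L_{\mathrm {Exc}}\) are hypothetical, and the domain \({\mathcal {D}}\) in (3.15) is not reproduced.

```latex
\begin{aligned}
(Lf)(\eta) &= (L_{\mathrm{Mig}}f)(\eta) + (L_{\mathrm{Res}}f)(\eta) + (L_{\mathrm{Exc}}f)(\eta),\\
(L_{\mathrm{Mig}}f)(\eta) &= \sum_{i\in\mathbb{Z}^d}\sum_{j\ne i} a(i,j)\Big[X_i\,\tfrac{N_j-X_j}{N_j}\big(f(\eta-\boldsymbol{\delta}_{i,A})-f(\eta)\big)
  + (N_i-X_i)\,\tfrac{X_j}{N_j}\big(f(\eta+\boldsymbol{\delta}_{i,A})-f(\eta)\big)\Big],\\
(L_{\mathrm{Res}}f)(\eta) &= \sum_{i\in\mathbb{Z}^d} a(i,i)\,X_i\,\tfrac{N_i-X_i}{N_i}\big[f(\eta-\boldsymbol{\delta}_{i,A})+f(\eta+\boldsymbol{\delta}_{i,A})-2f(\eta)\big],\\
(L_{\mathrm{Exc}}f)(\eta) &= \sum_{i\in\mathbb{Z}^d} \lambda\Big[X_i\,\tfrac{M_i-Y_i}{M_i}\big(f(\eta-\boldsymbol{\delta}_{i,A}+\boldsymbol{\delta}_{i,D})-f(\eta)\big)
  + (N_i-X_i)\,\tfrac{Y_i}{M_i}\big(f(\eta+\boldsymbol{\delta}_{i,A}-\boldsymbol{\delta}_{i,D})-f(\eta)\big)\Big].
\end{aligned}
```

With \(a(i,i)=a(0,0)=\frac{1}{2}\), the resampling part at each colony coincides with the single-colony resampling rate, while the exchange part is the single-colony exchange applied colony by colony.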

From now on, we denote the process associated with the generator L by

\[ Z = (Z(t))_{t\ge 0}, \qquad Z(t) = \big(X_i(t),Y_i(t)\big)_{i\in {\mathbb {Z}}^d}, \]

with \(X_i(t)\) and \(Y_i(t)\) representing the number of type-\(\heartsuit \) active and dormant individuals at colony i at time t, respectively. Since Z is an interacting particle system, in order to show existence and uniqueness of the process, we can in principle follow the method described by Liggett in [25, Chapter I, Section 3]. However, for Liggett’s method to work, a uniform bound on the sizes \((N_i, M_i)_{i\in {\mathbb {Z}}^d}\) is needed, which we want to avoid. Fortunately, if L is a Markov pregenerator (see [25, Definition 2.1]), then we can construct the process by providing a unique solution to the martingale problem for L. The following proposition tells us that L is indeed a Markov pregenerator and thus prepares the ground for proving the well-posedness of the martingale problem for L.

Proposition 3.6

(Pregenerator) The generator L defined in (3.16), acting on functions in \({\mathcal {D}}\) defined in (3.15), is a Markov pregenerator.

The existence of solutions to the martingale problem will be shown by using the techniques described in [25]. In order to establish uniqueness of the solution, we will need to exploit the dual process.

3.2.2 Duality

The dual process is a block-counting process associated with a spatial version of the interacting seed-bank coalescent described in Sect. 3.1.3. We briefly describe the spatial coalescent process in terms of an interacting particle system. At each site \(i\in {\mathbb {Z}}^d\), there are two reservoirs, an active reservoir and a dormant reservoir, with \(N_i\in {\mathbb {N}}\) and \(M_i\in {\mathbb {N}}\) labelled locations, respectively. Each location in a reservoir can accommodate at most one particle. As before, we refer to the particles in an active and dormant reservoir as active particles and dormant particles, respectively. The dynamics of the interacting particle system is as follows (see Fig. 2).

-

An active particle at site \(i\in {\mathbb {Z}}^d\) becomes dormant at rate \(\lambda \) by moving to a random labelled location (out of \(M_i\) many) in the dormant reservoir at site i when the chosen labelled location is empty; otherwise, it remains in the active reservoir.

-

A dormant particle at site \(i\in {\mathbb {Z}}^d\) becomes active at rate \(\lambda K_i\) with \(K_i=\tfrac{N_i}{M_i}\) by moving to a random labelled location (out of \(N_i\) many) in the active reservoir at site i when the chosen labelled location is empty; otherwise, it remains in the dormant reservoir.

-

An active particle at site i chooses a random labelled location (out of \(N_j\) many) from the active reservoir at site j at rate a(i, j) and does the following:

-

If the chosen location in the active reservoir at site j is empty, then the particle moves to site j and thereby migrates from the active reservoir at site i to the active reservoir at site j.

-

If the chosen location in the active reservoir at site j is occupied by a particle, then it coalesces with that particle.


Note that an active particle can migrate between different sites in \({\mathbb {Z}}^d\) and can coalesce with another active particle even when they are at different sites in \({\mathbb {Z}}^d\). For simplicity, we will impose the same assumptions on the migration kernel \(a(\cdot ,\cdot )\) as stated in Assumption 2.1. A configuration \((\eta _i)_{i\in {\mathbb {Z}}^d}\) of the particle system is an element of \(\prod _{i\in {\mathbb {Z}}^d}\{0,1\}^{N_i}\times \{0,1\}^{M_i}\). For \(i\in {\mathbb {Z}}^d\), \(\eta _i\) represents the state of the labelled locations in the active and the dormant reservoir at site i (1 means occupied by a particle, 0 means empty).
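The verbal description above translates into transition rates for the block-counting process. Let \(n_j\) and \(m_j\) count the active and dormant particles at site j. A uniformly chosen labelled location in the active (resp. dormant) reservoir at j is then empty with probability \((N_j-n_j)/N_j\) (resp. \((M_j-m_j)/M_j\)). The Python sketch below simulates the resulting chain with the Gillespie algorithm. It is a minimal illustration under assumptions that are not part of the model:

- \({\mathbb {Z}}^d\) is replaced by a ring of \(L\ge 3\) sites.
- The kernel is nearest-neighbour with \(a(i,i\pm 1)=\tfrac12\) and \(a(i,i)=0\).
- The names `ring_kernel`, `dual_events` and `simulate_dual` are hypothetical.

The rates are our reading of the bullet points, not a transcription of the rates in Definition 3.7.

```python
import random


def ring_kernel(i, L):
    """Hypothetical nearest-neighbour kernel a(i, i+-1) = 1/2 on a ring of L >= 3 sites."""
    return {(i + 1) % L: 0.5, (i - 1) % L: 0.5}


def dual_events(n, m, N, M, lam):
    """List of (rate, new_n, new_m) for every transition out of the block-counting state (n, m)."""
    L = len(n)
    events = []
    for i in range(L):
        if n[i] > 0:
            # Active particle picks one of M_i dormant locations; it moves only if that one is empty.
            rate = lam * n[i] * (M[i] - m[i]) / M[i]
            if rate > 0:
                new_n, new_m = n[:], m[:]
                new_n[i] -= 1
                new_m[i] += 1
                events.append((rate, new_n, new_m))
            for j, a_ij in ring_kernel(i, L).items():
                # Chosen active location at j is empty: migrate from i to j.
                rate = n[i] * a_ij * (N[j] - n[j]) / N[j]
                if rate > 0:
                    new_n = n[:]
                    new_n[i] -= 1
                    new_n[j] += 1
                    events.append((rate, new_n, m[:]))
                # Chosen active location at j is occupied: coalesce, so one block disappears.
                rate = n[i] * a_ij * n[j] / N[j]
                if rate > 0:
                    new_n = n[:]
                    new_n[i] -= 1
                    events.append((rate, new_n, m[:]))
        if m[i] > 0:
            # Dormant particle wakes up at rate lam * K_i, K_i = N_i / M_i, if the target is empty.
            rate = lam * (N[i] / M[i]) * m[i] * (N[i] - n[i]) / N[i]
            if rate > 0:
                new_n, new_m = n[:], m[:]
                new_n[i] += 1
                new_m[i] -= 1
                events.append((rate, new_n, new_m))
    return events


def simulate_dual(n0, m0, N, M, lam, t_max, rng):
    """Gillespie simulation up to time t_max; returns final (n, m) and (time, #blocks) pairs."""
    assert len(n0) >= 3, "ring_kernel assumes at least three sites"
    n, m, t = list(n0), list(m0), 0.0
    history = [(t, sum(n) + sum(m))]
    while True:
        events = dual_events(n, m, N, M, lam)
        total = sum(rate for rate, _, _ in events)
        if total == 0:
            break
        t += rng.expovariate(total)  # exponential holding time with the total rate
        if t > t_max:
            break
        # Pick an event with probability proportional to its rate.
        u = rng.random() * total
        for rate, new_n, new_m in events:
            u -= rate
            if u <= 0:
                break
        n, m = new_n, new_m
        history.append((t, sum(n) + sum(m)))
    return n, m, history


rng = random.Random(7)
n, m, history = simulate_dual([2, 1, 0, 0, 1], [0, 1, 0, 0, 0], [3] * 5, [2] * 5,
                              lam=1.0, t_max=100.0, rng=rng)
print(history[0][1], history[-1][1])  # initial and final number of blocks
```

Coalescence is the only transition that removes a block, so the block count recorded in `history` never increases and drops by at most one per event.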

Below we give the definition of the block-counting process associated with the spatial coalescent process described above. Although it is an interesting problem to construct the block-counting process starting from a configuration with infinitely many particles, we will restrict ourselves to configurations with finitely many particles only because this makes the state space countable. Thus, the block-counting process is a continuous-time Markov chain on a countable state space, and hence, in the definition below, it suffices to specify the possible transitions and their respective rates only.

Definition 3.7

(Dual) The dual process

is a continuous-time Markov chain with state space

and with transition rates

where the configurations \(\varvec{\delta }_{i,A},\varvec{\delta }_{i,D}\in {\mathcal {X}}^* \subset {\mathcal {X}}\) are as in (3.13), and additions and subtractions of configurations are performed in accordance with (3.14). \(\square \)

Here, \(n_i(t)\) and \(m_i(t)\) are the number of active and dormant particles at site \(i\in {\mathbb {Z}}^d\) at time t. The first transition describes the coalescence of an active particle at site i with other active particles elsewhere. The second and third transitions describe the movement of particles between the active and the dormant reservoir at site i. The fourth transition describes the migration of an active particle from site i to site j. The following lemma tells us that the dual process \(Z^*\) is a well-defined and non-explosive (equivalent to uniqueness) Feller process on the countable state space \({\mathcal {X}}^*\).

Lemma 3.8

(Uniqueness of dual) There exists a unique minimal Feller process \((Z^*(t))_{t\ge 0}\) on \({\mathcal {X}}^*\) with transition rates given in (3.23).

Before we proceed we recall the definition of the martingale problem.

Definition 3.9

(Martingale problem) Suppose that \((L,{\mathcal {D}})\) is a Markov pregenerator, and let \(\eta \in {\mathcal {X}}\). A probability measure \({\mathbb {P}}_\eta \) (or, equivalently, a process with law \({\mathbb {P}}_\eta \)) on \(D([0,\infty ),{\mathcal {X}})\) is said to solve the martingale problem for L with initial point \(\eta \) if

-

\({\mathbb {P}}_\eta [\xi _{(\cdot )}\in D([0,\infty ),{\mathcal {X}}): \xi _0=\eta ]=1\).

-

\((f(\eta _t)-\int _{0}^{t} (Lf)(\eta _s)\,\mathrm{d}s)_{t \ge 0}\) is a martingale relative to \(({\mathbb {P}}_\eta ,({\mathcal {F}}_t)_{t\ge 0})\) for all \(f\in {\mathcal {D}}\), where \((\eta _t)_{t\ge 0}\) is the coordinate process on \(D([0,\infty ),{\mathcal {X}})\) and \(({\mathcal {F}}_t)_{t\ge 0}\) is the filtration given by \({\mathcal {F}}_t:=\sigma (\eta _s\,|\,s\le t)\) for \(t\ge 0.\) \(\Box \)
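Taking expectations in the second condition gives \({\mathbb {E}}_\eta [f(\eta _t)]-f(\eta )={\mathbb {E}}_\eta [\int _0^t (Lf)(\eta _s)\,\mathrm{d}s]\). The Python sketch below checks this identity numerically for a toy two-state chain, with jumps \(0\rightarrow 1\) at rate `a` and \(1\rightarrow 0\) at rate `b`. This chain is not the seed-bank model, but its transition probabilities are explicit. The time integral is evaluated with Simpson's rule, and all names are hypothetical.

```python
import math


def prob_in_one(x0, t, a, b):
    """P(X_t = 1 | X_0 = x0) for the toy chain with jumps 0 -> 1 at rate a and 1 -> 0 at rate b."""
    pi1 = a / (a + b)
    p0 = 1.0 if x0 == 1 else 0.0
    return pi1 + (p0 - pi1) * math.exp(-(a + b) * t)


def generator(f, a, b):
    """Return ((Lf)(0), (Lf)(1)) for f given as the pair (f(0), f(1))."""
    return (a * (f[1] - f[0]), b * (f[0] - f[1]))


def dynkin_gap(x0, t, a, b, f, steps=1000):
    """E[f(X_t)] - f(x0) - int_0^t E[(Lf)(X_s)] ds, the integral computed by Simpson's rule.

    For a solution of the martingale problem this difference is zero; numerically it
    should vanish up to quadrature error. `steps` must be even.
    """
    def expect(g, s):
        p = prob_in_one(x0, s, a, b)
        return (1 - p) * g[0] + p * g[1]

    Lf = generator(f, a, b)
    h = t / steps
    integral = expect(Lf, 0.0) + expect(Lf, t)
    for k in range(1, steps):
        integral += (4 if k % 2 else 2) * expect(Lf, k * h)
    integral *= h / 3
    return expect(f, t) - f[x0] - integral


print(abs(dynkin_gap(0, 2.0, 1.5, 0.5, (3.0, -1.0))) < 1e-8)  # True
```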

The following theorem gives the duality relation between the dual process \(Z^*\) and any solution to the martingale problem for \((L,{\mathcal {D}})\). This type of duality is sometimes referred to as martingale duality.

Theorem 3.10

(Duality relation) Let the process Z with law \({\mathbb {P}}_\eta \) be a solution to the martingale problem for \((L,{\mathcal {D}})\) starting from initial state \(\eta =(X_i,Y_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}\). Let \(Z^*\) be the dual process with law \({\mathbb {P}}^\xi \) starting from initial state \(\xi =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\). For \(t\ge 0\), let \(\varGamma (t)\) be the random variable defined by

Suppose that the sizes \((N_i)_{i\in {\mathbb {Z}}^d}\) of the active populations are such that for any \(T>0\),

Then, for any \(t\ge 0\),

where the expectations are taken with respect to \({\mathbb {P}}_\eta \) and \({\mathbb {P}}^\xi \), respectively.

Note that the duality function is a product over all colonies of the duality function that appeared in the single-colony model. The infinite products are well defined: all but finitely many factors are 1, because of our assumption that there are only finitely many particles in the dual process. Also note that there is no restriction on \((M_i)_{i\in {\mathbb {Z}}^d}\), the sizes of the dormant populations. This is because dormant individuals do not migrate and therefore do not feel the spatial extent of the system.

At first glance, it may seem that (3.25) places a severe restriction on \((N_i)_{i\in {\mathbb {Z}}^d}\), the sizes of the active populations. However, this is not the case. The following corollary provides us with a large class of active population sizes for which Theorem 3.10 is true under mild assumptions on the migration kernel \(a(\cdot ,\cdot )\).

Corollary 3.11

(Duality criterion) Suppose that Assumption 2.1 is in force. Then, (3.25) and consequently the duality relation in (3.26) hold for every \((N_i)_{i\in {\mathbb {Z}}^d} \in {\mathcal {N}}\), where

-

(a)

either

$$\begin{aligned} {\mathcal {N}} := \left\{ (N_i)_{i\in {\mathbb {Z}}^d} \in {\mathbb {N}}^{{\mathbb {Z}}^d}:\, \lim _{\Vert i\Vert \rightarrow \infty } \frac{1}{\Vert i\Vert } \log N_i = 0\right\} \end{aligned}$$(3.27)

when \(\sum _{i\in {\mathbb {Z}}^d} \mathrm{e}^{\delta \Vert i\Vert }a(0,i) <\infty \) for some \(\delta >0\),

-

(b)

or

$$\begin{aligned} {\mathcal {N}} := \left\{ (N_i)_{i\in {\mathbb {Z}}^d} \in {\mathbb {N}}^{{\mathbb {Z}}^d}:\, \sup _{i\in {\mathbb {Z}}^d\backslash \{0\}}\frac{N_i}{\Vert i\Vert ^\delta } < \infty \right\} \end{aligned}$$(3.28)

when \(\sum _{i\in {\mathbb {Z}}^d} \Vert i\Vert ^\gamma a(0,i) <\infty \) for some \(\delta >0 \) and \(\gamma >d+\delta \).

Corollary 3.11 shows a trade-off: the more we restrict the tails of the migration kernel, the less we need to restrict the sizes of the active populations.
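As an illustration of this trade-off, consider the following setting, which is not part of the model. Take \(d=1\) and a symmetric power-law kernel \(a(0,i)=c_\alpha |i|^{-(1+\alpha )}\) for \(i\ne 0\), with \(\alpha >0\) and a normalising constant \(c_\alpha >0\). We do not check the remaining requirements of Assumption 2.1. Then

$$\begin{aligned} \sum _{i\in {\mathbb {Z}}} |i|^\gamma a(0,i) = 2c_\alpha \sum _{k=1}^{\infty } k^{\gamma -1-\alpha } <\infty \quad \Longleftrightarrow \quad \gamma <\alpha . \end{aligned}$$

Hence a \(\gamma \) with \(1+\delta <\gamma <\alpha \) exists if and only if \(\alpha >1+\delta \). For \(\alpha >1\), case (b) therefore allows active population sizes of order \(|i|^\delta \) for every \(\delta <\alpha -1\). Case (a) does not apply to this kernel, because \(\sum _{k\ge 1}\mathrm{e}^{\delta k}k^{-(1+\alpha )}=\infty \) for every \(\delta >0\). By contrast, a finite-range kernel such as \(a(0,\pm 1)=\tfrac12\) has all exponential moments, so case (a) admits subexponential growth, e.g. \(N_i=\lceil \mathrm{e}^{\sqrt{\Vert i\Vert }}\rceil \).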

3.2.3 Well-Posedness

We use a martingale problem for the generator L defined in (3.16), in the sense of [9, p.173], to construct Z. The following proposition gives existence of solutions for any choice of the reservoir sizes. As for the uniqueness of solutions, we will see that a restriction on the sizes of the active populations is required.

Proposition 3.12

(Existence) Let L be the generator defined in (3.16) acting on the set of local functions \({\mathcal {D}}\) defined in (3.15). Then, for all \(\eta \in {\mathcal {X}}\) there exists a solution \({\mathbb {P}}_\eta \) (a probability measure on \(D([0,\infty ),{\mathcal {X}})\)) to the martingale problem of \((L,{\mathcal {D}})\) with initial state \(\eta \).

The following theorem gives the well-posedness of the martingale problem for \((L,{\mathcal {D}})\) under a restricted class of sizes of the active populations and thus proves the existence of a unique Feller Markov process describing our multi-colony model.

Theorem 3.13

(Well-posedness) Let \((N_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {N}}\) and \((M_i)_{i\in {\mathbb {Z}}^d}\in {\mathbb {N}}^{{\mathbb {Z}}^d}\), and let L be the generator defined in (3.16) acting on the set of local functions \({\mathcal {D}}\) defined in (3.15). Then, the following hold:

-

For all \(\eta \in \prod _{i\in {\mathbb {Z}}^d}[N_i]\times [M_i]\), there exists a unique solution Z in \(D([0,\infty ),{\mathcal {X}})\) of the martingale problem for \((L,{\mathcal {D}})\) with initial state \(\eta \).

-

Z is Feller and strong Markov, and its generator is an extension of \((L,{\mathcal {D}})\).

In view of the above result, from here onwards, we implicitly assume that the restriction on \((N_i)_{i\in {\mathbb {Z}}^d}\) to \({\mathcal {N}}\) is always in force.

3.2.4 Equilibrium

Let us set \(Z_i(t):=(X_i(t),Y_i(t))\) for \(i\in {\mathbb {Z}}^d\) and denote by \(\mu (t)\) the distribution of Z(t). Further, for each \(\theta \in [0,1]\) and \(i\in {\mathbb {Z}}^d,\) let \(\nu _{\theta }^i\) be the probability measure on \([N_i]\times [M_i]\) defined as

For \(\theta \in [0,1]\), let \(\nu _\theta \) be the distribution on \({\mathcal {X}}\) defined by \(\displaystyle \nu _\theta :=\bigotimes _{i\in {\mathbb {Z}}^d}\nu _\theta ^i\) and set

Let \(D\,:\,{\mathcal {X}}\times {\mathcal {X}}^*\rightarrow [0,1]\) be the function defined by

Theorem 3.14

(Convergence to equilibrium) Suppose that \(\mu (0)=\nu _\theta \in {\mathcal {J}}\) for some \(\theta \in [0,1]\). Then, there exists a probability measure \(\nu \) determined by the parameter \(\theta \) such that

-

\(\lim \limits _{t\rightarrow \infty }\mu (t) = \nu \).

-

\(\nu \) is an equilibrium for the process Z.

-

\({\mathbb {E}}_\nu [D(Z(0);\eta )] = \lim \limits _{t\rightarrow \infty } {\mathbb {E}}^\eta [\theta ^{|Z^*(t)|}]\), where \(D(\cdot ,\cdot )\) is defined in (3.31), the expectation on the right-hand side is taken with respect to the dual process \(Z^*\) started at configuration \(\eta =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\), and \(|Z^*(t)|:=\sum _{i\in {\mathbb {Z}}^d} [n_i(t)+m_i(t)]\) is the total number of dual particles present at time t.
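The third item is easiest to appreciate at time \(t=0\). The definitions of \(\nu _\theta ^i\) and of the duality function in (3.31) are not reproduced in this excerpt. We therefore make two assumptions, both as in the classical Moran duality:

- \(\nu _\theta ^i\) is the product of a Binomial\((N_i,\theta )\) and a Binomial\((M_i,\theta )\) law.
- Each local factor of D has the form \(\binom{x}{n}/\binom{\alpha }{n}\).

Under these assumptions, the binomial factorial moments give \({\mathbb {E}}_{\nu _\theta }[D(Z(0);\eta )]=\theta ^{|\eta |}\) with \(|\eta |=\sum _i(n_i+m_i)\). Combined with the duality relation, this is consistent with the appearance of \(\theta ^{|Z^*(t)|}\) on the right-hand side. The Python sketch below checks the identity in exact rational arithmetic. All function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def local_factor(x, n, size):
    """Assumed single-site factor binom(x, n) / binom(size, n); it equals 0 when n > x."""
    return Fraction(comb(x, n), comb(size, n))


def binomial_pmf(k, size, theta):
    """P(Bin(size, theta) = k) as an exact fraction."""
    return comb(size, k) * theta**k * (1 - theta) ** (size - k)


def expected_duality(xi, sizes, theta):
    """E[D(Z; xi)] when the coordinates of Z are independent binomials (assumption).

    xi: list of (n_i, m_i) for the finitely many sites carrying dual particles.
    sizes: list of (N_i, M_i) for the same sites.
    Sites without dual particles contribute a factor 1 and are omitted.
    """
    total = Fraction(1)
    for (n_i, m_i), (N_i, M_i) in zip(xi, sizes):
        e_active = sum(binomial_pmf(x, N_i, theta) * local_factor(x, n_i, N_i)
                       for x in range(N_i + 1))
        e_dormant = sum(binomial_pmf(y, M_i, theta) * local_factor(y, m_i, M_i)
                        for y in range(M_i + 1))
        total *= e_active * e_dormant
    return total


theta = Fraction(1, 3)
xi = [(1, 2), (0, 1), (2, 0)]
sizes = [(3, 4), (2, 2), (5, 1)]
print(expected_duality(xi, sizes, theta) == theta ** 6)  # True: 6 dual particles in total
```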

Corollary 3.15

Let \(\nu \) be the equilibrium measure of Z in Theorem 3.14 corresponding to \(\theta \in [0,1]\). Then,

3.2.5 Clustering Criterion

We next analyse the long-time behaviour of the multi-colony Moran model with seed-banks. Our aim is to identify the nature of the equilibrium. More precisely, we investigate whether different types can coexist in equilibrium. The measures \(\bigotimes _{i\in {\mathbb {Z}}^d}\delta _{(0,0)}\) and \(\bigotimes _{i\in {\mathbb {Z}}^d}\delta _{(N_i,M_i)}\) are the trivial equilibria, in which the system concentrates on only one of the two types. When the system converges to an equilibrium that is not a mixture of these two trivial equilibria, we say that coexistence happens. For \(i\in {\mathbb {Z}}^d,\) let us denote the frequency of type-\(\heartsuit \) active and dormant individuals at colony i at time t by \(x_i(t):=\tfrac{X_i(t)}{N_i}\) and \( y_i(t):=\tfrac{Y_i(t)}{M_i}\), respectively.

Definition 3.16

(Clustering and Coexistence) The system is said to exhibit clustering if the following hold:

-

\(\lim \limits _{t\rightarrow \infty }{\mathbb {P}}_\eta (x_i(t)\in \{0,1\})=1,\quad \lim \limits _{t\rightarrow \infty }{\mathbb {P}}_\eta (y_i(t)\in \{0,1\})=1\),

-

\(\lim \limits _{t\rightarrow \infty }{\mathbb {P}}_\eta (x_i(t)\ne x_j(t))=0,\quad \lim \limits _{t\rightarrow \infty }{\mathbb {P}}_\eta (y_i(t)\ne y_j(t))=0\),

-

\(\lim \limits _{t\rightarrow \infty }{\mathbb {P}}_\eta (x_i(t)\ne y_j(t))=0\),

for all \(i,j\in {\mathbb {Z}}^d\) and any initial configuration \(\eta \in {\mathcal {X}}\). Otherwise, the system is said to exhibit coexistence. \(\square \)

The above conditions make sure that if an equilibrium exists, then it is a mixture of the two trivial equilibria.

The following criterion, which follows from Corollary 3.11, gives an equivalent condition for clustering.

Theorem 3.17

(Clustering criterion) The system clusters if and only if in the dual process defined in Definition 3.7 two particles, starting from any locations in \({\mathbb {Z}}^d\) and any states (active or dormant), coalesce with probability 1.

Note that the system clusters if and only if the genetic variability at time t between any two colonies converges to 0 as \(t\rightarrow \infty \). From the duality relation in Theorem 3.10, it follows that this quantity is determined by the state of the dual process starting from two particles.

4 Proofs: Duality and Equilibrium for the Single-Colony Model

Section 4.1 contains the proof of Theorem 3.2, which follows the algebraic approach to duality described in [4, 30]. Section 4.2 contains the proofs of Proposition 3.3 and Corollary 3.4, both of which use the duality in the single-colony model.

4.1 Duality and Change of Representation

Before we proceed with the proof of Theorem 3.2 and other results related to stochastic duality, it is worth stressing the importance of duality theory. Originally introduced in the context of interacting particle systems, duality theory has gained popularity over the last decade in various fields, ranging from statistical physics and stochastic analysis to population genetics. One reason for this wide interest is the simplification that duality provides: it often allows one to extract information about a complex stochastic process through a simpler one. To date, the literature offers two systematic approaches to duality, namely the pathwise construction and the Lie-algebraic framework. The former is more practical and more widespread in mathematical population genetics [7, 16, 20, 21]. The latter has been developed more recently, reveals deeper mathematical structures behind duality and often provides a larger class of duality functions (see, for example, [4, 10, 17, 30] for a general overview and further references). In what follows, we adopt the Lie-algebraic framework suggested by Carinci et al. (2015) [4] and prepare the ground for this setting. The downside is that this approach does not capture the underlying genealogy of the original process. On the other hand, it offers the opportunity to obtain a larger class of duality functions by applying symmetries from the Lie algebra to an already existing duality function [11]. In this paper, we refrain from exploring the latter aspect of the Lie-algebraic framework.

We start by briefly recalling that a (real) Lie algebra \({\mathfrak {g}}\) is a linear space over \({\mathbb {R}}\) endowed with a so-called Lie bracket \([\cdot ,\cdot ]:\,{\mathfrak {g}}\times {\mathfrak {g}}\rightarrow {\mathfrak {g}}\) that is bilinear, skew-symmetric and satisfies the Jacobi identity [30]. By bilinearity, a Lie bracket is uniquely characterized by its action on pairs of basis elements of \({\mathfrak {g}}\). By skew-symmetry, it suffices to specify this action on pairs of distinct basis elements. A well-known example of a (real) Lie algebra is the \(\mathfrak {su}(2)\)-algebra, which is the three-dimensional vector space over \({\mathbb {R}}\) defined by the action of a Lie bracket on its basis elements \(\{J^+, J^-, J^0\}\) as

For \(\alpha \in {\mathbb {N}}\), let \(V_\alpha \) be the linear space of all functions \( f:\,[\alpha ] \rightarrow {\mathbb {R}}\), and let \(\mathfrak {gl}(V_\alpha )\) denote the space of all linear operators on \(V_\alpha \). Note that \(\mathfrak {gl}(V_\alpha )\) is a \((1+\alpha )^2\)-dimensional Lie algebra with the natural choice of Lie bracket given by \([A,B]:=AB-BA\) for \(A,B\in \mathfrak {gl}(V_\alpha )\). Let us define the operators \(J^{\alpha ,\pm },J^{\alpha ,0},A^{\alpha ,\pm },A^{\alpha ,0} \in \mathfrak {gl}(V_\alpha )\) acting on \( f\,:\,[\alpha ] \rightarrow {\mathbb {R}}\) as

It is straightforward to see that

which are the same commutation relations as in (4.1). Thus, for each \(\alpha \in {\mathbb {N}}\), the Lie homomorphism \(\phi _\alpha :\,\mathfrak {su}(2)\rightarrow \mathfrak {gl}(V_\alpha )\) defined by its action on the generators \(\{J^+, J^-, J^0\}\) given by

is a finite-dimensional representation of \(\mathfrak {su}(2)\). Similarly, we can verify that \(\{A^{\alpha ,+},A^{\alpha ,-},A^{\alpha ,0}\}\), \(\alpha \in {\mathbb {N}}\), form a representation of the dual \(\mathfrak {su}(2)\)-algebra (defined by the commutation relations in (4.1), but with opposite signs).
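Since \(\mathfrak {gl}(V_\alpha )\) can be identified with the space of real \((1+\alpha )\times (1+\alpha )\) matrices, the claim that \([A,B]=AB-BA\) is a Lie bracket can be spot-checked numerically. The Python sketch below tests bilinearity, skew-symmetry and the Jacobi identity on random integer matrices. It is a sanity check, not a proof, and the helper names are hypothetical.

```python
import random


def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    size = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(size)) for j in range(size)] for i in range(size)]


def lin(c1, A, c2, B):
    """Linear combination c1*A + c2*B."""
    return [[c1 * a + c2 * b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


def bracket(A, B):
    """Commutator [A, B] = AB - BA, the natural Lie bracket on gl(V_alpha)."""
    return lin(1, matmul(A, B), -1, matmul(B, A))


def check_lie_axioms(alpha, rng, trials=20):
    """Spot-check the Lie-bracket axioms on random integer (1+alpha) x (1+alpha) matrices."""
    size = 1 + alpha
    zero = [[0] * size for _ in range(size)]

    def rand():
        return [[rng.randint(-5, 5) for _ in range(size)] for _ in range(size)]

    for _ in range(trials):
        A, B, C = rand(), rand(), rand()
        c1, c2 = rng.randint(-3, 3), rng.randint(-3, 3)
        # Bilinearity in the first argument (the second argument then follows by skew-symmetry).
        if bracket(lin(c1, A, c2, B), C) != lin(c1, bracket(A, C), c2, bracket(B, C)):
            return False
        # Skew-symmetry: [A, B] = -[B, A].
        if bracket(A, B) != lin(-1, bracket(B, A), 0, zero):
            return False
        # Jacobi identity: [A, [B, C]] + [B, [C, A]] + [C, [A, B]] = 0.
        jac = lin(1, bracket(A, bracket(B, C)), 1, bracket(B, bracket(C, A)))
        if lin(1, jac, 1, bracket(C, bracket(A, B))) != zero:
            return False
    return True


rng = random.Random(0)
print(all(check_lie_axioms(alpha, rng) for alpha in range(1, 5)))  # True
```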

Below we introduce the notion of duality between two operators and prove a lemma that will be crucial in the proof of duality of both the single-colony and the multi-colony model. The relevance to our context of the above discussion on \(\mathfrak {su}(2)\) and its dual algebra will become clear as we go along.

Definition 4.1

(Operator duality) Let A and B be two operators acting on functions \(f:\,\varOmega \rightarrow {\mathbb {R}}\) and \(g:\,{\hat{\varOmega }}\rightarrow {\mathbb {R}}\), respectively. We say that A is dual to B with respect to the duality function \(D:\,\varOmega \times {\hat{\varOmega }}\rightarrow {\mathbb {R}}\), denoted by \(A\overset{D}{\longrightarrow }B\), if \((AD(\cdot ,y))(x)=(BD(x,\cdot ))(y)\) for all \((x,y)\in \varOmega \times {\hat{\varOmega }}\). \(\Box \)

The following lemma intertwines the \(\mathfrak {su}(2)\)-algebra and its dual algebra via a duality function.

Lemma 4.2

(Single-colony intertwiner) For \(\alpha \in {\mathbb {N}}\), let \(d_\alpha :\,[\alpha ]\times [\alpha ]\rightarrow [0,1]\) be the function defined by

Then, the following duality relations hold:

Proof

By straightforward calculations, it can be shown that \(d_\alpha (x,n)\) satisfies the relations

from which the above dualities in (4.6) follow immediately. \(\square \)

Remark 4.3

(Seed-bank and \(\mathfrak {su}(2)\)-algebra) The basic idea behind the algebraic approach to duality is to write the generator of a given process in terms of simple operators that form a representation of some known Lie algebra, and then to make an ansatz to obtain an intertwiner of the chosen representation. The intertwiner \(d_\alpha \) in the above lemma was first identified in [12, Lemma 1] as a duality function in disguise for the classical duality between the Moran model and the block-counting process of Kingman’s coalescent. Recently, in [4] this duality was put in the algebraic framework by deriving it from an intertwining via \(d_\alpha \) of two representations of the Heisenberg algebra \({\mathscr {H}}(2)\). The connection of \(d_\alpha \) to the \(\mathfrak {su}(2)\)-algebra was also made in [11, Section 3.2], where the authors obtained a self-duality function of the 2j-SEP factorized in terms of \(d_\alpha \) by considering symmetries related to the \(\mathfrak {su}(2)\)-algebra. The relation of our seed-bank model to the \(\mathfrak {su}(2)\)-algebra becomes clear once we realize that the seed-bank component in our single-colony model is an inhomogeneous version of the 2j-SEP on two sites. Thus, it is natural to expect that the classical duality of the Moran model can be retrieved from representations of the \(\mathfrak {su}(2)\)-algebra as well. The above lemma indeed provides the ingredients to establish the duality of our single-colony model from representations of the \(\mathfrak {su}(2)\)-algebra. Although it is possible to guess the dual process of the single-colony model without going into the Lie-algebraic framework, the true usefulness of this approach lies in identifying the dual of the spatial model, where such guesswork is no longer feasible. \(\Box \)

Proof of Theorem 3.2

Recall that both \(Z=(X(t),Y(t))_{t\ge 0}\) and \(Z^*=(n_t,m_t)_{t\ge 0}\) live on the state space \(\varOmega =[N]\times [M]\). Let \(D:\,\varOmega \times \varOmega \rightarrow [0,1]\) be the function defined by

Let \(G=G_{\text {Mor}}+G_{\text {Exc}}\) be the generator of the process Z, where \(G_{\text {Mor}},G_{\text {Exc}}\) are as in (3.2)–(3.3). Also note from (3.7) that the generator \({\widehat{G}}\) of the dual process is given by \({\widehat{G}}=G_{\text {King}}+G_{\text {Exc}}\) where \(G_{\text {King}}:\,C(\varOmega )\rightarrow C(\varOmega )\) is defined as

Since \(\varOmega \) is countable, it is enough to show the generator criterion for duality, i.e.

In our notation, (4.10) translates into \(G\overset{D}{\longrightarrow }{\widehat{G}}\). It is somewhat tedious to verify (4.10) by direct computation. Rather, we will write down a proof with the help of the elementary operators defined in (4.2). This approach will also reveal the underlying change of representation of the two operators \(G,{\widehat{G}}\) that is embedded in the duality.

Note that

where the subscripts indicate which variable of the associated function the operators act on. For example, \(J^{N,+}_1\) and \(J^{M,+}_2\) act on the first and second variable, respectively. So, for a function \(f:\,[N]\times [M]\rightarrow {\mathbb {R}}\), we have \((J^{N,+}_1f)(n,m)=(J^{N,+}f(\,\cdot \,;\,m))(n)\) and \((J^{M,+}_2f)(n,m)=(J^{M,+}f(n\,;\,\cdot \,))(m)\). The equivalent version of Lemma 4.2 holds for these operators with subscript as well, except that the duality function is D. In other words, \(J^{N,+}_1\overset{D}{\longrightarrow }A^{N,+}_1\), \(J^{M,+}_2\overset{D}{\longrightarrow }A^{M,+}_2\), and so on. Using these duality relations and the representations in (4.11), we have \(G_{\text {Mor}}\overset{D}{\longrightarrow }G_{\text {King}}\) and \(G_{\text {Exc}}\overset{D}{\longrightarrow }G_{\text {Exc}}\), where we use:

-

Two operators acting on different sites commute with each other.

-

For some duality function d and operators \(A,B,{\hat{A}},{\hat{B}}\), if \(A\overset{d}{\longrightarrow }{\hat{A}},B\overset{d}{\longrightarrow }{\hat{B}}\), then, for any constants \(c_1,c_2\), \( AB\overset{d}{\longrightarrow }{\hat{B}}{\hat{A}}\) and \(c_1A+c_2B\overset{d}{\longrightarrow }c_1{\hat{A}}+c_2{\hat{B}}\).

Since \(G=G_{\text {Mor}}+G_{\text {Exc}}\) and \({\widehat{G}}=G_{\text {King}}+G_{\text {Exc}}\), we have \(G\overset{D}{\longrightarrow } {\widehat{G}}\), which proves the claim. \(\square \)
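On finite state spaces, Definition 4.1 becomes a matrix identity. If operators act by \((Af)(x)=\sum _{x'}A(x,x')f(x')\), then \(A\overset{D}{\longrightarrow }B\) is equivalent to \(AD=DB^{\mathsf T}\), where D is the matrix \((D(x,y))_{x,y}\). This makes the reversal of order in the product rule used in the proof transparent: \(ABD=AD{\hat{B}}^{\mathsf T}=D{\hat{A}}^{\mathsf T}{\hat{B}}^{\mathsf T}=D({\hat{B}}{\hat{A}})^{\mathsf T}\).

The Python sketch below checks the product rule and the linearity rule against the pointwise definition, in exact arithmetic. It uses a randomly chosen invertible D and constructs duals as \({\hat{A}}=(D^{-1}AD)^{\mathsf T}\). This construction and all names are illustrative assumptions.

```python
import random
from fractions import Fraction


def matmul(A, B):
    """Matrix product for matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def lin(c1, A, c2, B):
    """Linear combination c1*A + c2*B."""
    return [[c1 * a + c2 * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inverse(A):
    """Exact Gauss-Jordan inverse over the rationals; returns None if A is singular."""
    size = len(A)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(size)]
           for i, row in enumerate(A)]
    for col in range(size):
        pivot = next((r for r in range(col, size) if aug[r][col] != 0), None)
        if pivot is None:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(size):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[size:] for row in aug]


def is_dual(A, B, D):
    """Definition 4.1, pointwise: (A D(., y))(x) == (B D(x, .))(y) for all x, y."""
    nx, ny = len(A), len(B)
    return all(sum(A[x][u] * D[u][y] for u in range(nx)) == sum(B[y][v] * D[x][v] for v in range(ny))
               for x in range(nx) for y in range(ny))


def dual_of(A, D, D_inv):
    """An operator dual to A with respect to an invertible D: (D^{-1} A D)^T."""
    return transpose(matmul(matmul(D_inv, A), D))


rng = random.Random(3)
size = 4
D_inv = None
while D_inv is None:  # draw integer matrices until an invertible D is found
    D = [[rng.randint(-3, 3) for _ in range(size)] for _ in range(size)]
    D_inv = inverse(D)
A = [[rng.randint(-3, 3) for _ in range(size)] for _ in range(size)]
B = [[rng.randint(-3, 3) for _ in range(size)] for _ in range(size)]
A_hat, B_hat = dual_of(A, D, D_inv), dual_of(B, D, D_inv)

print(is_dual(A, A_hat, D), is_dual(B, B_hat, D))              # True True
print(is_dual(matmul(A, B), matmul(B_hat, A_hat), D))           # True: the order is reversed
print(is_dual(lin(2, A, -5, B), lin(2, A_hat, -5, B_hat), D))   # True
```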

4.2 Equilibrium

Proof of Proposition 3.3

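For \(x\in {\mathbb {R}}\) and \(r\in {\mathbb {N}}\), let \((x)_r\) be the falling factorial defined as

$$\begin{aligned} (x)_r := x(x-1)\cdots (x-r+1), \end{aligned}$$(4.12)

where we put \((x)_r=1\) when \(r=0\). For any \(n\in {\mathbb {N}}_0\), we can write \(x^n\) as

$$\begin{aligned} x^n = \sum _{j=0}^{n} c_{n,j}\,(x)_j, \end{aligned}$$(4.13)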

where the constants \(c_{n,j}\) (known as the Stirling numbers of the second kind) are unique and depend only on n and \(j\in [n]\). Let \((n,m)\in \varOmega =[N]\times [M]\) be such that \((n,m)\ne (0,0)\), and let \((n_t,m_t)_{t\ge 0}\) be the dual process of Theorem 3.2. It follows from (4.13) and Theorem 3.2 that

where \(D:\,\varOmega \times \varOmega \rightarrow [0,1]\) is the duality function in Theorem 3.2, defined by

and the expectation in the last line of (4.14) is with respect to the dual process. Let T be the first time at which there is only one particle left in the dual, i.e. \( T=\inf \{t>0:\,n_t+m_t=1\}\). Note that, for any initial state \((i,j)\in \varOmega \backslash \{(0,0)\}\), \(T<\infty \) with probability 1, and the distribution of \((n_t,m_t)\) converges as \(t\rightarrow \infty \) to the invariant distribution \(\tfrac{N}{N+M}\delta _{(1,0)}+\tfrac{M}{N+M}\delta _{(0,1)}\). So, for any \((i,j)\in \varOmega \backslash \{(0,0)\}\),

where we use that the second term after the first equality converges to 0 because \(T<\infty \) with probability 1. Combining (4.16) with (4.14), we get

where the last equality follows from (4.13) and the fact that \(c_{n,0}c_{m,0}=0\) when \((n,m)\ne (0,0)\). \(\square \)
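Two ingredients of the proof above can be checked directly.

- The expansion (4.13). The Python sketch below computes \(c_{n,j}\) through the standard recurrence \(c_{n,j}=j\,c_{n-1,j}+c_{n-1,j-1}\) for Stirling numbers of the second kind, and verifies (4.13) on integers together with \(c_{n,0}=0\) for \(n\ge 1\).
- The invariant law of the single remaining dual particle. We assume, as in the multi-colony dual described in Sect. 3.2.2, that a lone particle becomes dormant at rate \(\lambda \) and active at rate \(\lambda K\) with \(K=N/M\). Balancing the two flows then gives the weights \(N/(N+M)\) and \(M/(N+M)\).

Function names are hypothetical.

```python
from fractions import Fraction


def falling(x, r):
    """Falling factorial (x)_r = x (x-1) ... (x-r+1), with (x)_0 = 1."""
    out = 1
    for k in range(r):
        out *= x - k
    return out


def stirling2_table(n_max):
    """c[n][j]: Stirling numbers of the second kind for 0 <= j <= n <= n_max."""
    c = [[0] * (n_max + 1) for _ in range(n_max + 1)]
    c[0][0] = 1
    for n in range(1, n_max + 1):
        for j in range(1, n + 1):
            c[n][j] = j * c[n - 1][j] + c[n - 1][j - 1]
    return c


def check_expansion(n_max, xs):
    """Verify x^n = sum_j c[n][j] (x)_j for all n <= n_max and all integers x in xs."""
    c = stirling2_table(n_max)
    return all(x**n == sum(c[n][j] * falling(x, j) for j in range(n + 1))
               for n in range(n_max + 1) for x in xs)


def stationary_single_particle(N, M, lam):
    """Invariant law (P(active), P(dormant)) of one particle switching A -> D at rate lam and
    D -> A at rate lam * N / M (assumed rates); balance: pi_A * lam = pi_D * lam * N / M."""
    rate_to_dormant = Fraction(lam)
    rate_to_active = Fraction(lam) * Fraction(N, M)
    pi_active = rate_to_active / (rate_to_active + rate_to_dormant)
    return pi_active, 1 - pi_active


print(check_expansion(6, range(-3, 8)), stationary_single_particle(5, 3, 2))
```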

Proof of Corollary 3.4

Note that the distribution of a two-dimensional random vector \((Z_1,Z_2)\) taking values in \([N]\times [M]\) is determined by the mixed moments \({\mathbb {E}}[Z_1^iZ_2^j]\), \((i,j)\in [N]\times [M]\). Indeed, let \(I:=\{1,\ldots ,(N+1)(M+1)\}\) and let \(f:\,[N]\times [M]\rightarrow I\) be a bijection. For \(i\in I\), let \(p_i={\mathbb {P}}((Z_1,Z_2)=f^{-1}(i))\) and \(c_i={\mathbb {E}}[Z_1^xZ_2^y]\), where \((x,y)=f^{-1}(i)\). We can write \(\varvec{c}=A\varvec{p}\), where \(\varvec{p}=(p_i)_{i\in I}\), \(\varvec{c}=(c_i)_{i\in I}\) and A is the \((N+1)(M+1)\times (N+1)(M+1)\) matrix with entries \(A_{ik}=u^xv^y\), \((x,y)=f^{-1}(i)\), \((u,v)=f^{-1}(k)\) (with \(0^0:=1\)). Up to a simultaneous reordering of rows and columns, A is the Kronecker product of the Vandermonde matrices with the distinct nodes \(0,\ldots ,N\) and \(0,\ldots ,M\), and hence A is invertible. Therefore \(\varvec{p}=A^{-1}\varvec{c}\) is uniquely determined by the mixed moments. It follows that the convergence of the mixed moments of (X(t), Y(t)) shown in Proposition 3.3 is enough to conclude that (X(t), Y(t)) converges in distribution as \(t\rightarrow \infty \) to a random vector \((X_\infty ,Y_\infty )\) taking values in \([N]\times [M]\). The distribution of \((X_\infty ,Y_\infty )\) is also uniquely determined and is given by \(\tfrac{X+Y}{N+M}\delta _{(N,M)}+(1-\tfrac{X+Y}{N+M})\delta _{(0,0)}\). \(\square \)
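The argument above can be made concrete for small N and M. The moment matrix has rows indexed by exponent pairs \((a,b)\), columns indexed by values \((x,y)\), and entries \(x^ay^b\). The Python sketch below builds this matrix and recovers a probability vector on \([N]\times [M]\) from its mixed moments by exact Gaussian elimination. The chosen distribution and all names are illustrative.

```python
from fractions import Fraction
from itertools import product


def moment_matrix(N, M):
    """States (x, y) in [N] x [M] and the matrix with rows (a, b), columns (x, y), entry x**a * y**b."""
    states = list(product(range(N + 1), range(M + 1)))
    A = [[Fraction(x**a * y**b) for (x, y) in states] for (a, b) in states]  # Python: 0**0 == 1
    return states, A


def solve(A, c):
    """Solve A p = c exactly by Gauss-Jordan elimination (A is assumed invertible)."""
    size = len(A)
    aug = [list(row) + [c_i] for row, c_i in zip(A, c)]
    for col in range(size):
        pivot = next(r for r in range(col, size) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(size):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


N, M = 2, 3
states, A = moment_matrix(N, M)
weights = [Fraction(k + 1) for k in range(len(states))]
p = [w / sum(weights) for w in weights]  # an arbitrary law on [N] x [M]
moments = [sum(A[r][s] * p[s] for s in range(len(states))) for r in range(len(states))]
print(solve(A, moments) == p)  # True: the law is recovered from its mixed moments
```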

5 Proofs: Duality and Well-Posedness for the Multi-colony Model

In Sect. 5.1, we give the proof of Lemma 3.8. In Sect. 5.2, we introduce equivalent versions for the multi-colony setting of the operators defined in (4.2) for the single-colony setting, and use these to prove Theorem 3.10 and Corollary 3.11. In Sect. 5.3, we prove Propositions 3.6, 3.12 and Theorem 3.13.

5.1 Proof of Lemma 3.8

Proof

Note that the rate-matrix is nothing but the dual generator \(L_\text {dual}\) obtained from the rates specified in (3.23). The action of \(L_\text {dual}\) on a function \(f:\,{\mathcal {X}}^*\rightarrow {\mathbb {R}}\) is given by

where \(\xi =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\) and the configurations \(\varvec{\delta }_{i,A},\varvec{\delta }_{i,D}\in {\mathcal {X}}^* \subset {\mathcal {X}}\) are as in (3.13). It is enough to show that \(L_\text {dual}\) satisfies the well-known Foster–Lyapunov criterion for stability (see, for example, [27, Theorem 2.1] or [6, Theorem (1.11)] for Markov processes on countable state spaces), i.e.

for some \(p>0\) with \(V:\,{\mathcal {X}}^*\rightarrow (0,\infty )\) a function such that there exist \((E_k)_{k\in {\mathbb {N}}}\) with \(E_k\uparrow {\mathcal {X}}^*\) and \(\inf _{x\not \in E_k}V(x)\rightarrow \infty \) as \(k\rightarrow \infty \).

Let us define the function \(V\,:\,{\mathcal {X}}^*\rightarrow (0,\infty )\) as

and, for \(k\in {\mathbb {N}},\) set

Since \({\mathcal {X}}^*\) contains configurations with finitely many particles, V is well defined. It is straightforward to see that

Let \(\xi =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\) be arbitrary. Note that, for any \(i,j\in {\mathbb {Z}}^d\) with \(i\ne j\),

and so by using (5.1) we obtain

where \(c=\sum _{i\in {\mathbb {Z}}^d}a(0,i)<\infty \). Hence, setting \(p:=\max \{1,c\}>0\), we have that

which proves the claim. \(\square \)

5.2 Duality

5.2.1 Generators and Intertwiners

Let \(f\in C({\mathcal {X}})\) and \(\eta =(X_i,Y_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}\), and let \(\varvec{\delta }_{i,A},\varvec{\delta }_{i,D}\) be as in (3.13). Define the action of the multi-colony operators as in Table 3.

The same duality relations as in Lemma 4.2 hold for these operators as well. The only difference is that the duality function becomes the site-wise product of the duality functions appearing in the single-colony model.

Lemma 5.1

(Multi-colony intertwiner) Let \(D:\,{\mathcal {X}}\times {\mathcal {X}}^*\rightarrow [0,1]\) be the function defined by

where \((X_k,Y_k)_{k\in {\mathbb {Z}}^d}\in {\mathcal {X}}\) and \((n_k,m_k)_{k\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\). Then, for every \(i\in {\mathbb {Z}}^d\) and \(s\in \{0,+,-\}\),

Proof

The proof is exactly the same as the proof of Lemma 4.2.\(\square \)

Proposition 5.2

(Generator criterion) Let L be the generator defined in (3.16), and \({\hat{L}}\) the generator of the dual process defined in Definition 3.7. Furthermore, let \(D:\,{\mathcal {X}}\times {\mathcal {X}}^*\rightarrow [0,1]\) be the function defined in Lemma 5.1. Then, \(L\overset{D}{\longrightarrow }{\hat{L}}\).

Proof

Recall that \(L=L_{\text {Mig}}+L_{\text {Res}}+L_{\text {Exc}}\), where \(L_{\text {Mig}},L_{\text {Res}},L_{\text {Exc}}\) are defined in (3.17)–(3.19). In terms of the operators defined earlier, these have the following representations:

Similarly, the generator \({\hat{L}}\) of the dual process defined in Definition 3.7 acting on \(f\in C({\mathcal {X}}^*)\) is given by \({\hat{L}}={\hat{L}}_{\text {Mig}}+L_{\text {Exc}}+L_\text {King}\), where

for \(\xi =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\). The representations of these operators are

From Lemma 5.1 and the representations in (5.11)–(5.13), we see that \(L_\text {Mig}\overset{D}{\longrightarrow }{\hat{L}}_\text {Mig}, \,L_\text {Res}\overset{D}{\longrightarrow }L_\text {King}\) and \(L_\text {Exc}\overset{D}{\longrightarrow }L_\text {Exc}\), which yields \(L\overset{D}{\longrightarrow }{\hat{L}}\). \(\square \)

As shown in [22, Proposition 1.2], the generator criterion is enough to get the required duality relation of Theorem 3.10 when both L and \({\hat{L}}\) are Markov generators of Feller processes. Since it is not a priori clear whether L (or its extension) is a Markov generator, we need to use [9, Theorem 4.11, Corollary 4.13].

5.2.2 Proof of Duality Relation

Proof of Theorem 3.10

We combine [9, Theorem 4.11 and Corollary 4.13] and reinterpret these in our context:

-

Let \((\eta _t)_{t\ge 0}\) and \((\xi _t)_{t\ge 0}\) be two independent processes on \(E_1\) and \(E_2\) that are solutions to the martingale problem for \((L_1,{\mathcal {D}}_1)\) and \((L_2,{\mathcal {D}}_2)\) with initial states \(x\in E_1\) and \(y\in E_2\). Assume that \(D:\,E_1\times E_2\rightarrow {\mathbb {R}}\) is such that \(D(\,\cdot \,;\,\xi )\in {\mathcal {D}}_1\) for any \(\xi \in E_2\) and \(D(\eta \,;\,\cdot )\in {\mathcal {D}}_2\) for any \(\eta \in E_1\). Also assume that for each \(T>0\), there exists an integrable random variable \(U_T\) such that

$$\begin{aligned}&\sup _{0\le s,t\le T} | D(\eta _t;\xi _s)| \le U_T, \,\, \sup _{0\le s,t\le T} |(L_1D(\,\cdot \,;\xi _s))(\eta _t)| \le U_T,\nonumber \\&\qquad \sup _{0\le s,t\le T} |(L_2D(\eta _t;\,\cdot \,))(\xi _s)| \le U_T. \end{aligned}$$(5.14)

If \((L_1D(\,\cdot \,;y))(x)=(L_2D(x\,;\,\cdot \,))(y)\), then \({\mathbb {E}}_x[D(\eta _t;y)]={\mathbb {E}}^y[D(x;\xi _t)]\) for all \(t\ge 0\).

To apply the above, pick \(E_1={\mathcal {X}}\), \(E_2={\mathcal {X}}^*\), \(L_1=L\), \(L_2=L_\text {dual}\), \({\mathcal {D}}_1={\mathcal {D}}\) and \({\mathcal {D}}_2=C({\mathcal {X}}^*)\), where \(L_\text {dual}\) is the generator of the dual process \(Z^*\). Let D be the function defined in Lemma 5.1. For any \(\xi \in {\mathcal {X}}^*\), the function \(D(\,\cdot \,;\xi )\) depends on only finitely many coordinates, because \(\xi \) has only finitely many particles; it is therefore a local function and belongs to \({\mathcal {D}}\). Moreover, since \({\mathcal {X}}^*\) is countable, \(D(\eta \,;\,\cdot \,)\in C({\mathcal {X}}^*)\) for any \(\eta \in {\mathcal {X}}\). Fix \(x=(X_i,Y_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}\) and \(y=(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\). By Proposition 5.2, \((L_1D(\,\cdot \,;y))(x)=(L_2D(x\,;\,\cdot \,))(y)\). Let \((\xi _t)_{t\ge 0}\) be the process \(Z^*\) with initial state y, which is the unique solution to the martingale problem for \((L_\text {dual},C({\mathcal {X}}^*))\) with initial state y. Let \((\eta _t)_{t\ge 0}\) denote any solution Z to the martingale problem for \((L,{\mathcal {D}})\) with initial state x. Fix \(T>0\) and note that for \(0\le s,t\le T\),

and

The random variable \(\varGamma (t)\) defined in Theorem 3.10 is non-decreasing in time t. Moreover, if we change the configuration \(\eta _t\) outside the set \(\{i\in {\mathbb {Z}}^d:\,\Vert i\Vert \le \varGamma (s)\}\), then the value of \(D(\eta _t;\xi _s)\) does not change. Consequently, all the summands in (5.15) with \(i\in {\mathbb {Z}}^d\) and \(\Vert i\Vert >\varGamma (s)\) are 0, and since \(\varGamma (s)\le \varGamma (T)\), we have the estimate

where \(c=\sum _{i\in {\mathbb {Z}}^d}a(0,i)\). Now, by Definition 3.7, the process \((\xi _t)_{t\ge 0}\) is an interacting particle system with coalescence in which the total number of particles is non-increasing in time, and so \(\sum _{i\in {\mathbb {Z}}^d}(n_i(s)+m_i(s))\le N\), where \(N=\sum _{i\in {\mathbb {Z}}^d} (n_i+m_i)\). Also, since \(s\le T\), for \(i \in {\mathbb {Z}}^d\) with \(\Vert i\Vert >\varGamma (T)\) we have \(n_i(s)=m_i(s)=0\). Hence, from (5.16) we get

Define the random variable \(U_T\) by

Then, combining (5.17)–(5.18) with the fact that the function D takes values in [0, 1], we see that \(U_T\) satisfies all the conditions in (5.14), while assumption (3.25) in Theorem 3.10 ensures the integrability of \(U_T\). \(\square \)

5.2.3 Proof of Duality Criterion

Proof of Corollary 3.11

Let \(\xi =(n_i,m_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}^*\) and \(T>0\) be fixed. By Theorem 3.10, it suffices to show that for any \((N_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {N}}\),

where \({\mathbb {P}}^\xi \) is the law of the dual process \(Z^*\) started from initial state \(\xi \). Let \(n=\sum _{i\in {\mathbb {Z}}^d}(n_i+m_i)\) be the initial number of particles, and let N(t) be the total number of migration events within the time interval [0, t]. We will construct a Poisson process \(N^*\) via coupling such that \(N(t)\le N^*(t)\) for all \(t\ge 0\) with probability 1. For this purpose, consider n independent particles, each performing a random walk on \({\mathbb {Z}}^d\) according to the migration kernel \(a(\cdot ,\cdot )\). For each \(k=1,\ldots ,n\), let \(\xi _k(t)\) and \(\xi ^*_k(t)\) denote the positions at time t of the k-th dependent particle and of the k-th independent particle, respectively. We take \(\xi _k(0)=\xi _k^*(0)\) and couple the k-th dependent particle with the k-th independent particle as follows:

-

If the independent particle makes a jump from site \(\xi _k^*(t)\) to \(j^*\in {\mathbb {Z}}^d\), then the dependent particle jumps from \(\xi _k(t)\) to \(j=\xi _k(t)+(j^*-\xi _k^*(t))\) with probability \(p_k(t)\) given by

$$\begin{aligned} p_k(t)= {\left\{ \begin{array}{ll} 1-\tfrac{n_j(t)}{N_j} & \text {if the dependent particle is in an } \textit{active} \text { and } \textit{non-coalesced} \text { state,}\\ 0 & \text {otherwise,} \end{array}\right. } \end{aligned}$$(5.21)

where \(n_j(t)\) is the number of active particles at site j.

-

The dependent particle performs its other transitions (becoming dormant, waking up and coalescing) independently of the migration events of the independent particles, at the rates prescribed in Definition 3.7.

Note that since the migration kernel is translation invariant, under the above coupling the effective rate at which one of the dependent particles at site i migrates to site j is \(n_i a(i,j) (1-\tfrac{n_j}{N_j})\) when there are \(n_i\) and \(n_j\) active particles at sites i and j, respectively. Also, if \(N_k(t)\) and \(N_k^*(t)\) are the numbers of migration steps made within the time interval [0, t] by the k-th dependent and the k-th independent particle, respectively, then under this coupling \(N_k(t)\le N^*_k(t)\) with probability 1. Set \(N^*(\cdot )=\sum _{k=1}^{n}N_k^*(\cdot )\). Then, clearly,

$$N(t)=\sum _{k=1}^{n}N_k(t)\le \sum _{k=1}^{n}N_k^*(t)=N^*(t)\qquad \text {for all } t\ge 0, \text { with probability 1.}$$

Also, \(N^*\) is a Poisson process with intensity cn, since each independent particle migrates at a total rate c.
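The coupling can be illustrated by a short simulation. The Python sketch below uses a deliberately simplified, hypothetical setting rather than the full dual of Definition 3.7: it works in \(d=1\), keeps every dependent particle active and ignores coalescence, so that every proposed jump is accepted with probability \(p_k(t)=1-n_j(t)/N_j\) as in (5.21). The n independent particles are driven by the superposition of n exponential clocks of rate c, that is, by a single clock of rate cn that picks the jumping particle uniformly at random, which is why \(N^*\) is Poisson with intensity cn. The function name and its arguments are our own.

```python
import random


def simulate_coupling(positions, kernel, capacity, T, seed=0):
    """Simplified sketch of the thinning coupling on Z (d = 1).

    Hypothetical simplification: all dependent particles stay active and no
    coalescence occurs, so p_k(t) = 1 - n_j(t) / N_j for every proposed jump.
    positions: common initial sites of the n particles in both systems
    kernel:    dict {step: a(0, step)} with non-zero steps; c = sum of rates
    capacity:  function j -> N_j
    Returns (N(T), N*(T)): numbers of dependent / independent migrations.
    """
    rng = random.Random(seed)
    steps, rates = list(kernel.keys()), list(kernel.values())
    c, n = sum(rates), len(positions)
    dep, ind = list(positions), list(positions)
    t, n_dep, n_ind = 0.0, 0, 0
    while True:
        # n independent rate-c clocks superpose to one rate-(c*n) clock;
        # the particle whose clock rings is uniform among the n particles.
        t += rng.expovariate(c * n)
        if t > T:
            return n_dep, n_ind
        k = rng.randrange(n)
        step = rng.choices(steps, weights=rates)[0]
        ind[k] += step  # the independent particle always jumps
        n_ind += 1
        j = dep[k] + step  # dependent particle proposes the same displacement
        n_j = sum(1 for x in dep if x == j)  # active particles already at j
        if rng.random() < 1 - n_j / capacity(j):  # accepted with prob. p_k(t)
            dep[k] = j
            n_dep += 1


# Usage: nearest-neighbour kernel (c = 1), three particles, N_j = 2 everywhere.
print(simulate_coupling([0, 0, 1], {-1: 0.5, 1: 0.5}, lambda j: 2, T=5.0))
```

Whatever the parameters, the first returned count never exceeds the second, because a dependent particle can only jump at a time when its independent partner jumps.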

Let \(Y_l,X_l\in {\mathbb {Z}}^d\) denote the steps at the l-th migration event in the dependent and the independent particle system, respectively. Note that \((X_l)_{l\in {\mathbb {N}}}\) are i.i.d. with distribution \((a(0,i)/c)_{i\in {\mathbb {Z}}^d}\) and independent of \(N^*\). Since, under the above coupling, a dependent particle copies the step of an independent particle with a certain probability (possibly 0), and \(\varGamma (0)\) is the minimum length of the box within which all n dependent particles at time 0 are located, we have, for any \(t\ge 0\),

Therefore,

where \( S_{N^*(T)}=\sum _{l=1}^{N^*(T)}|X_l|\).
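The variable \(S_{N^*(T)}\) is compound Poisson: \(N^*(T)\) is Poisson with mean \(cnT\), and the steps \(X_l\) are i.i.d. and independent of \(N^*\) (each independent particle draws its displacement from \(a(0,\cdot )/c\) regardless of when its clock rings). Its moment generating function is therefore \({\mathbb {E}}[\mathrm{e}^{\delta S_{N^*(T)}}]=\exp (cnT(\varphi (\delta )-1))\) with \(\varphi (\delta )=c^{-1}\sum _{i}\mathrm{e}^{\delta |i|}a(0,i)\). The Python sketch below, for a hypothetical one-dimensional kernel with finitely many steps (so that \(\varphi (\delta )<\infty \)), compares this closed form with a Monte Carlo estimate and checks the exponential Markov bound \({\mathbb {P}}(S_{N^*(T)}\ge x)\le \mathrm{e}^{-\delta x}{\mathbb {E}}[\mathrm{e}^{\delta S_{N^*(T)}}]\). The function names and parameter values are illustrative only.

```python
import numpy as np


def mgf_exact(delta, steps, rates, n, T):
    """E[exp(delta * S)] for S = sum_{l <= N*(T)} |X_l|, N*(T) ~ Poisson(c n T)."""
    c = rates.sum()
    phi = np.sum(np.exp(delta * np.abs(steps)) * rates) / c  # E[exp(delta |X_1|)]
    return np.exp(c * n * T * (phi - 1.0))


def sample_S(steps, rates, n, T, samples, seed=0):
    """Monte Carlo samples of the compound Poisson variable S_{N*(T)}."""
    rng = np.random.default_rng(seed)
    c = rates.sum()
    counts = rng.poisson(c * n * T, size=samples)  # N*(T) in each sample
    jumps = np.abs(rng.choice(steps, size=counts.sum(), p=rates / c))
    owner = np.repeat(np.arange(samples), counts)  # sample index of each jump
    return np.bincount(owner, weights=jumps, minlength=samples)


steps = np.array([-2, -1, 1, 2])
rates = np.array([0.1, 0.4, 0.4, 0.1])  # hypothetical a(0, i), so c = 1
delta, n, T = 0.1, 2, 1.0
S = sample_S(steps, rates, n, T, samples=200_000)
print(mgf_exact(delta, steps, rates, n, T), np.exp(delta * S).mean())

x = 8.0  # tail probability versus the exponential Markov bound
print((S >= x).mean(), np.exp(-delta * x) * mgf_exact(delta, steps, rates, n, T))
```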

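To prove part (a), note that \({\mathbb {E}}[\mathrm{e}^{\delta S_{N^*(T)}}] < \infty \) and so, by Chebyshev’s inequality in its exponential form (that is, Markov’s inequality applied to \(\mathrm{e}^{\delta S_{N^*(T)}}\)),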

Thus, the inequality in (5.24) reduces to

where

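For \(k\in {\mathbb {N}}\), let \(\alpha _k = \# \{i\in {\mathbb {Z}}^d:\,\Vert i\Vert _\infty =k\}\). Then, \(\alpha _k= (2k+1)^d-(2k-1)^d \le 4^d k^{d-1}\), where the inequality follows from the mean value theorem: \((2k+1)^d-(2k-1)^d\le 2d(2k+1)^{d-1}\le 2d\,3^{d-1}k^{d-1}\le 4^dk^{d-1}\), since \(\tfrac{d}{2}(\tfrac{3}{4})^{d-1}\le 1\) for all \(d\ge 1\). Hence,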

where \(c_k=\sup \{N_i:\, \Vert i\Vert _\infty =k,i\in {\mathbb {Z}}^d\}\). Since, under the assumption of part (a), \(\lim _{k \rightarrow \infty }\tfrac{1}{k}\log c_k = 0\), there exists a \(K\in {\mathbb {N}}\) such that \(c_k\le \mathrm{e}^{\delta k/2}\) for all \(k\ge K\). Hence, using (5.26), we find that

which settles part (a).
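The counting identity and the polynomial bound for \(\alpha _k\) are easy to confirm by brute force in small dimensions. The Python sketch below (the function names are our own) enumerates the cube \(\{-k,\ldots ,k\}^d\), counts the points with \(\Vert i\Vert _\infty =k\), and checks both \(\alpha _k=(2k+1)^d-(2k-1)^d\) and \(\alpha _k\le 4^dk^{d-1}\) for \(d\le 4\) and \(k\le 6\). It is a sanity check on a few cases, not a proof.

```python
from itertools import product


def shell_size_bruteforce(k, d):
    """Number of i in Z^d with ||i||_inf == k, by direct enumeration."""
    return sum(1 for i in product(range(-k, k + 1), repeat=d)
               if max(abs(x) for x in i) == k)


def shell_size_formula(k, d):
    """alpha_k = (2k+1)^d - (2k-1)^d: cube of side 2k+1 minus cube of side 2k-1."""
    return (2 * k + 1) ** d - (2 * k - 1) ** d


for d in range(1, 5):
    for k in range(1, 7):
        alpha = shell_size_formula(k, d)
        assert alpha == shell_size_bruteforce(k, d)
        assert alpha <= 4 ** d * k ** (d - 1)  # the bound used in part (a)
```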

To prove part (b), note that under the assumption \(\sum _{i\in {\mathbb {Z}}^d}\Vert i\Vert ^\gamma a(0,i) <\infty \) for some \(\gamma >d+\delta \), we have \({\mathbb {E}}[S_{N^*(T)}^\gamma ]<\infty \), and since \(S_{N^*(T)}\) is a nonnegative random variable, we get

From (5.24) we get

where \(V={\mathbb {E}}[S_{N^*(T)}^\gamma ]\). By the assumption of part (b), there exists a \(C >0\) such that

and so using (5.28), we obtain

which settles part (b). \(\square \)

5.3 Well-Posedness

In this section, we prove Propositions 3.6, 3.12 and Theorem 3.13.

5.3.1 Existence

Since the state space \({\mathcal {X}}\) is compact, the theory described in [25, Chapter I, Section 3] is applicable in our setting without any significant changes. The interacting particle systems in [25] have state space \(W^S\), where W is a compact phase space and S is a countable site space. In our setting, the site space is \(S={\mathbb {Z}}^d\), but the phase space differs at each site, i.e. \([N_i]\times [M_i]\) at site \(i \in {\mathbb {Z}}^d\). The general form of the generator of an interacting particle system in [25] is

$$\begin{aligned} \varOmega f(\eta )=\sum _{T}\int _{W^T}c_T(\eta ,d\xi )\,\big [f(\eta ^\xi )-f(\eta )\big ], \end{aligned}$$(5.34)

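where the sum is taken over all finite subsets T of S, and \(\eta ^\xi \) is the configuration

$$\begin{aligned} \eta ^\xi (x)={\left\{ \begin{array}{ll} \xi (x) & \text {if } x\in T,\\ \eta (x) & \text {if } x\in S\setminus T. \end{array}\right. } \end{aligned}$$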

For finite \(T\Subset S\), \(c_T(\eta ,d\xi )\) is a finite positive measure on \(W_T=W^T\). To make the latter compatible with our setting, we define \(W_T=\prod _{i\in T}[N_i]\times [M_i]\). The interpretation is that \(\eta \) is the current configuration of the system, \(c_T(\eta ,W_T)\) is the total rate at which a transition occurs involving all the coordinates in T, and \(c_T(\eta ,d\xi )/c_T(\eta ,W_T)\) is the distribution of the restriction to T of the new configuration after that transition has taken place. Fix \(\eta =(X_i,Y_i)_{i\in {\mathbb {Z}}^d}\in {\mathcal {X}}\). Comparing (5.34) with the formal generator L defined in (3.16), we see that the form of \(c_T(\cdot ,\cdot )\) is as follows:

-

\(c_T(\eta ,d\xi )=0\) if \(|T|\ge 2\).

-

For \(|T|=1\), let \(T=\{i\}\) for some \(i\in {\mathbb {Z}}^d\). Then, \(c_T(\eta ,\cdot )\) is the measure on \([N_i]\times [M_i]\) given by

$$\begin{aligned} c_T(\eta ,\cdot )&= X_i\left[ \,\sum _{j\in {\mathbb {Z}}^d}a(i,j)\tfrac{N_j-X_j}{N_j}\right] \delta _{(X_i-1,Y_i)}(\cdot )+(N_i-X_i)\left[ \,\sum _{j\in {\mathbb {Z}}^d}a(i,j) \tfrac{X_j}{N_j}\right] \delta _{(X_i+1,Y_i)}(\cdot )\\&\quad +\lambda X_i\tfrac{M_i-Y_i}{M_i}\,\delta _{(X_i-1,Y_i+1)}(\cdot )+\lambda (N_i-X_i) \tfrac{Y_i}{M_i}\,\delta _{(X_i+1,Y_i-1)}(\cdot ). \end{aligned}$$(5.36)

Note that the total mass is

$$\begin{aligned} c_T(\eta ,W_T)&= X_i\left[ \,\sum _{j\in {\mathbb {Z}}^d}a(i,j)\tfrac{N_j-X_j}{N_j}\right] +(N_i-X_i) \left[ \,\sum _{j\in {\mathbb {Z}}^d}a(i,j)\tfrac{X_j}{N_j}\right] \\&\quad +\lambda X_i\tfrac{M_i-Y_i}{M_i}+\lambda (N_i-X_i)\tfrac{Y_i}{M_i}. \end{aligned}$$(5.37)

Lemma 5.3

(Bound on rates) Let \(c=\sum _{i\in {\mathbb {Z}}^d} a(0,i) <\infty \). For a finite set \(T\Subset {\mathbb {Z}}^d\), let \(c_T=\sup _{\eta \in {\mathcal {X}}}c_T(\eta ,W_T)\). Then, \(c_T\le (c+\lambda ) {\mathbf {1}}_{\{|T|=1\}}\sup _{i\in T} N_i\).

Proof

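Clearly, \(c_T=0\) if \(|T|\ge 2\). So let \(T=\{i\}\) for some \(i\in {\mathbb {Z}}^d\), and let \(\eta =(X_k,Y_k)_{k\in {\mathbb {Z}}^d}\in {\mathcal {X}}\). By translation invariance of the migration kernel, \(\sum _{j\in {\mathbb {Z}}^d}a(i,j)=c\), and each of the ratios \(\tfrac{N_j-X_j}{N_j}\), \(\tfrac{X_j}{N_j}\), \(\tfrac{M_i-Y_i}{M_i}\), \(\tfrac{Y_i}{M_i}\) appearing in (5.37) lies in [0, 1]. Hence, \(c_T(\eta ,W_T)\le c X_i + c(N_i-X_i) + \lambda X_i +\lambda (N_i-X_i)=(c+\lambda )N_i\). Taking the supremum over \(\eta \in {\mathcal {X}}\), we obtain \(c_T\le (c+\lambda )N_i=(c+\lambda )\sup _{k\in T} N_k\). \(\square \)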
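To make (5.36), (5.37) and Lemma 5.3 concrete, the Python sketch below evaluates the measure \(c_{\{i\}}(\eta ,\cdot )\) on a small torus that stands in for \({\mathbb {Z}}^d\). The torus, the nearest-neighbour kernel (for which \(c=1\)), the value of \(\lambda \) and all function names are hypothetical choices made for illustration. Summing the four rates gives the total mass (5.37), and the loop compares it with \((c+\lambda )N_i\) over random configurations.

```python
import random

L_SITES = 5  # hypothetical finite torus {0, ..., 4} standing in for Z^d


def a(i, j):
    """Nearest-neighbour kernel on the torus: a(i, i +/- 1) = 1/2, so c = 1."""
    return 0.5 * ((j - i) % L_SITES == 1) + 0.5 * ((i - j) % L_SITES == 1)


def rate_measure(i, X, Y, N, M, lam):
    """The measure c_{{i}}(eta, .) of (5.36) as a dict {new (X_i, Y_i): rate}.

    Targets outside [N_i] x [M_i] always receive rate 0 (e.g. X_i - 1 when X_i = 0).
    """
    to_zero = sum(a(i, j) * (N[j] - X[j]) / N[j] for j in range(L_SITES))
    to_one = sum(a(i, j) * X[j] / N[j] for j in range(L_SITES))
    return {
        (X[i] - 1, Y[i]): X[i] * to_zero,
        (X[i] + 1, Y[i]): (N[i] - X[i]) * to_one,
        (X[i] - 1, Y[i] + 1): lam * X[i] * (M[i] - Y[i]) / M[i],
        (X[i] + 1, Y[i] - 1): lam * (N[i] - X[i]) * Y[i] / M[i],
    }


# Compare the total mass (5.37) with (c + lam) * N_i on random configurations.
rng = random.Random(1)
c, lam = 1.0, 0.5
N = [rng.randint(1, 6) for _ in range(L_SITES)]
M = [rng.randint(1, 6) for _ in range(L_SITES)]
worst = 0.0
for _ in range(1000):
    X = [rng.randint(0, Nj) for Nj in N]
    Y = [rng.randint(0, Mj) for Mj in M]
    for i in range(L_SITES):
        total = sum(rate_measure(i, X, Y, N, M, lam).values())
        worst = max(worst, total / ((c + lam) * N[i]))
print(worst)  # Lemma 5.3 predicts a value <= 1
```

For this kernel the bound is attained, for example, when \(X_i=N_i\), \(Y_i=0\) and \(X_j=0\) at both neighbours of i, since then every ratio multiplying a nonzero term in (5.37) equals 1.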

Proof of Proposition 3.6

By [25, Proposition 6.1 of Chapter I], it suffices to show that

where the sum is taken over all finite subsets \(T\Subset S\) containing \(i\in S\). Since in our case \(S={\mathbb {Z}}^d\), we let \(i\in {\mathbb {Z}}^d\) be fixed. By Lemma 5.3, the sum reduces to \(c_{\{i\}}\), and clearly \(c_{\{i\}}\le (c+\lambda ) N_i < \infty \). \(\square \)

Proof of Proposition 3.12

By [25, Proposition 6.1 and Theorem 6.7 of Chapter I], to show existence of solutions to the martingale problem for \((L,{\mathcal {D}})\), it is enough to prove that (5.38) is satisfied. But we already showed this in the proof of Proposition 3.6. \(\square \)

5.3.2 Uniqueness

Before we turn to the proof of Theorem 3.13, we state and prove the following proposition, which, along with the duality established in Corollary 3.11, will play a key role in the proof of the uniqueness of solutions to the martingale problem.

Proposition 5.4

(Separation) Let \(D:\,{\mathcal {X}}\times {\mathcal {X}}^*\rightarrow [0,1]\) be the duality function defined in Lemma 5.1. Define the set of functions \({\mathcal {M}}=\{D(\,\cdot \,;\,\xi ):\,\xi \in {\mathcal {X}}^*\}\). Then, \({\mathcal {M}}\) is separating on the set of probability measures on \({\mathcal {X}}\).

Proof

Let \({\mathbb {P}}\) be a probability measure on \({\mathcal {X}}=\prod _{i\in {\mathbb {Z}}^d}[N_i]\times [M_i]\). It suffices to show that the finite-dimensional distributions of \({\mathbb {P}}\) are determined by \(\{\int _{{\mathcal {X}}} f\,d\,{\mathbb {P}}:\,f\in {\mathcal {M}}\}\). Note that it is enough to show the following:

-

Let \(X=(X_1,X_2,\ldots ,X_n)\in \prod _{i=1}^{n}[N_i]\) be an n-dimensional random vector with some distribution \({\mathbb {P}}_X\) on \(\prod _{i=1}^{n}[N_i]\). Then, \({\mathbb {P}}_X\) is determined by the family

$$\begin{aligned} {\mathcal {F}}=\left\{ {\mathbb {E}}\left[ \,\prod _{i=1}^n \tfrac{\left( {\begin{array}{c}X_i\\ \alpha _i\end{array}}\right) }{\left( {\begin{array}{c}N_i\\ \alpha _i\end{array}}\right) }\right] :\, (\alpha _i)_{1\le i\le n}\in \prod _{i=1}^n[N_i]\right\} . \end{aligned}$$(5.39)

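By (4.13), the family \({\mathcal {F}}\) is equivalent to the family

$${\mathcal {F}}^*=\left\{ {\mathbb {E}}\left[ \,\prod _{i=1}^n X_i^{\alpha _i}\right] :\, (\alpha _i)_{1\le i\le n}\in \prod _{i=1}^n[N_i]\right\} $$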

containing the mixed moments of \((X_1,\ldots ,X_n)\). Since X takes values in a set of \(N=\prod _{i=1}^n (N_i+1)\) elements, we can write the distribution \({\mathbb {P}}_X\) as the N-dimensional vector \(\varvec{p}=(p_1,\ldots ,p_N)\), where \(p_i={\mathbb {P}}_X(X=f^{-1}(i))\) and \(f:\,\prod _{i=1}^{n}[N_i]\rightarrow \{1,\dots ,N\}\) is the bijection defined by

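Note that \({\mathcal {F}}^*\) also contains N elements, and so we can write \({\mathcal {F}}^*\) as the N-dimensional vector \(\varvec{e}=(e_1,\ldots ,e_N)\), where \(e_i={\mathbb {E}}[\prod _{k=1}^{n}X_k^{\alpha _k}]\) with \((\alpha _1,\ldots ,\alpha _n)=f^{-1}(i)\). We show that there exists an invertible linear operator that maps \(\varvec{p}\) to \(\varvec{e}\). Indeed, for \(i=1,\ldots ,n\), define the \((N_i+1)\times (N_i+1)\) Vandermonde matrix \(A_i\),

$$A_i=\begin{pmatrix} 0^0 & 1^0 & 2^0 & \cdots & N_i^0\\ 0^1 & 1^1 & 2^1 & \cdots & N_i^1\\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0^{N_i} & 1^{N_i} & 2^{N_i} & \cdots & N_i^{N_i} \end{pmatrix},\qquad \text {i.e. } (A_i)_{\alpha x}=x^{\alpha },\ \alpha ,x\in [N_i],\ \text {with } 0^0=1.$$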

Being Vandermonde matrices with the distinct nodes \(0,1,\ldots ,N_i\), all \(A_i\) are invertible. Finally, define the \(N\times N\) matrix A by \(A= A_1 \otimes A_2 \otimes \cdots \otimes A_n\), where \(\otimes \) denotes the Kronecker product for matrices. Then, A is invertible because all \(A_i\) are (the determinant of a Kronecker product is a product of powers of the determinants of its factors). Moreover, a direct computation shows that \(A\varvec{p}=\varvec{e}\), and hence the distribution of X, given by \(\varvec{p}=A^{-1}\varvec{e}\), is uniquely determined by \(\varvec{e}\), i.e. by the family \({\mathcal {F}}^*\). \(\square \)
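The linear-algebra step can be checked numerically. In the Python sketch below we assume, as our own choice for this illustration, that f lists \(\prod _{i=1}^n[N_i]\) in lexicographic order with the first coordinate most significant, which is the ordering under which the Kronecker product \(A_1\otimes \cdots \otimes A_n\) acts. We also take \(A_i\) to have entry \(x^\alpha \) in row \(\alpha \) and column \(x\) (\(\alpha ,x\in [N_i]\), \(0^0=1\)), which is the orientation required for \(A\varvec{p}=\varvec{e}\). For the hypothetical choice \((N_1,N_2)=(2,3)\) and a random law \(\varvec{p}\), the code compares \(A\varvec{p}\) with the mixed moments computed directly and recovers \(\varvec{p}\) by solving \(A\varvec{p}=\varvec{e}\).

```python
import itertools

import numpy as np


def vandermonde(Ni):
    """(N_i+1) x (N_i+1) matrix with entry x**alpha in row alpha, column x."""
    nodes = np.arange(Ni + 1, dtype=float)
    return np.vander(nodes, increasing=True).T  # first row is all ones (x**0)


def moment_operator(Ns):
    """A = A_1 (x) ... (x) A_n (Kronecker product)."""
    A = np.ones((1, 1))
    for Ni in Ns:
        A = np.kron(A, vandermonde(Ni))
    return A


def mixed_moments(p, Ns):
    """e_alpha = E[prod_k X_k**alpha_k], computed directly from p (lexicographic order)."""
    grid = list(itertools.product(*(range(Ni + 1) for Ni in Ns)))
    return np.array([
        sum(p[m] * np.prod([x ** a for x, a in zip(xs, alpha)])
            for m, xs in enumerate(grid))
        for alpha in grid
    ])


Ns = (2, 3)                      # hypothetical N_1, N_2; N = 3 * 4 = 12
rng = np.random.default_rng(0)
p = rng.random(12)
p /= p.sum()                     # an arbitrary law P_X on [N_1] x [N_2]
A = moment_operator(Ns)
e = mixed_moments(p, Ns)
print(np.allclose(A @ p, e), np.allclose(np.linalg.solve(A, e), p))
```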

Proof of Theorem 3.13

We use [9, Proposition 4.7], which states the following (reinterpreted in our setting):

-

Let \({\mathcal {S}}_1\) be compact and \({\mathcal {S}}_2\) be separable. Let \(x\in {\mathcal {S}}_1,y\in {\mathcal {S}}_2\) be arbitrary and \(D:\,{\mathcal {S}}_1\times {\mathcal {S}}_2\rightarrow {\mathbb {R}}\) be such that the set \(\{D(\,\cdot \,;z):\,z\in {\mathcal {S}}_2\}\) is separating on the set of probability measures on \({\mathcal {S}}_1\). Assume that for any two solutions \((\eta _t)_{t\ge 0}\) and \((\xi _t)_{t\ge 0}\) of the martingale problem for \((L_1,{\mathcal {D}}_1)\) and \((L_2,{\mathcal {D}}_2)\) with initial states x and y, the duality relation holds: \({\mathbb {E}}_x[D(\eta _t,y)]={\mathbb {E}}^y[D(x,\xi _t)]\) for all \(t\ge 0\). If for every \(z\in {\mathcal {S}}_2\) there exists a solution to the martingale problem for \((L_2,{\mathcal {D}}_2)\) with initial state z, then for every \(\eta \in {\mathcal {S}}_1\) uniqueness holds for the martingale problem for \((L_1,{\mathcal {D}}_1)\) with initial state \(\eta \).

Pick \({\mathcal {S}}_1={\mathcal {X}}\), \({\mathcal {S}}_2={\mathcal {X}}^*\), \((L_1,{\mathcal {D}}_1)=(L,{\mathcal {D}})\) and \((L_2,{\mathcal {D}}_2)=(L_\text {dual},C({\mathcal {X}}^*))\), where \(L_\text {dual}\) is the generator of the dual process \(Z^*\). Note that in our setting the martingale problem for \((L_\text {dual},C({\mathcal {X}}^*))\) is already well-posed (the unique solution is the dual process \(Z^*\) in Lemma 3.8). Hence, combining the above observations with Proposition 5.4 and Corollary 3.11, we get uniqueness of the solutions to the martingale problem for \((L,{\mathcal {D}})\) for every initial state \(\eta \in {\mathcal {X}}\).

The second claim follows from [25, Theorem 6.8 of Chapter I]. \(\square \)

6 Proofs: Equilibrium and Clustering Criterion