Abstract

In this paper, we study the moment generating function and the moments of occupation time functionals of one-dimensional diffusions. Assuming, specifically, that the process lives on \({{\,\mathrm{{\mathbb {R}}}\,}}\) and starts at 0, we apply Kac’s moment formula and the strong Markov property to derive an expression for the moment generating function in terms of the Green kernel of the underlying diffusion. Moreover, the approach allows us to derive a recursive equation for the Laplace transforms of the moments of the occupation time on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\). If the diffusion has a scaling property, the recursive equation simplifies to an equation for the moments of the occupation time up to time 1. As examples of diffusions with scaling property, we study in detail skew two-sided Bessel processes and, as a special case, skew Brownian motion. It is seen that for these processes our approach leads to simple explicit formulas. The recursive equation for a sticky Brownian motion is also discussed.



1 Introduction

Determining the distribution of the time that a stochastic process spends in a set (up to a given time) is a classical, much-studied and well-understood problem. For diffusions, the Feynman–Kac formula is the basic tool for attacking it. If the generator of the diffusion has smooth coefficients, this approach calls for solving a parabolic differential equation with a boundary condition. See, e.g., Durrett [7, Sect. 4] for Brownian motion and Karatzas and Shreve [15, Sect. 5.7] for diffusions. We also refer to Borodin and Salminen [6] for explicit examples for various one-dimensional diffusions.

Undoubtedly, the most famous occupation time distribution is the arcsine law, which dates back to Lévy [21]. According to this law, for a standard Brownian motion W, letting \(A^W_1\) denote the time W spends positive up to time 1, it holds that

\[{{\,\mathrm{{\mathbf {P}}}\,}}_0\big (A^W_1\le t\big ) = \frac{2}{\pi }\arcsin \sqrt{t},\qquad 0\le t\le 1, \tag{1}\]

where \({{\,\mathrm{{\mathbf {P}}}\,}}_0\) is the probability measure associated with W (when initiated at 0). For proofs of (1) based on the Feynman–Kac formula, see [15, p. 273] and Mörters and Peres [23, p. 214]. In the latter, instead of inverting the double Laplace transform, the moments of the distribution are calculated, and that approach is, in a sense, closer to the one discussed in this paper.

We are interested in the occupation times for a regular one-dimensional diffusion. More precisely, let \((X_t)_{t\ge 0}\) be such a diffusion and introduce, for \(t\ge 0\),

where \({{\,\mathrm{Leb}\,}}\) denotes the Lebesgue measure. Recall that in [28] Truman and Williams, applying the Feynman–Kac method, calculated a formula for the moment generating function of the occupation time up to an independent exponential time for a fairly general (positive recurrent) diffusion. Watanabe [31] extended the result to general regular (gap) diffusions by exploiting random time change techniques. Earlier, however, Barlow, Pitman and Yor [4] had derived the formula for skew two-sided Bessel processes using excursion theory. This result was connected in [31] with a distribution found by Lamperti in [18]. In [24] Pitman and Yor proved, and also extended, the general formula presented in [31] using excursion theory (they also discuss an approach via the Feynman–Kac method). We refer also to Watanabe, Yano and Yano [32], where the inversion of the Laplace transform is discussed, and to Kasahara and Yano [16] for results on the asymptotic behavior of the density at 0.

Our main contributions are, firstly, a new expression for the moment generating function of the occupation time up to an exponential time, together with a recursive equation for the Laplace transforms of the moments of the occupation time. A novel feature of our analysis is, perhaps, that it is based explicitly on Kac’s moment formula and not on the Feynman–Kac formula. Although both formulas have been known for decades, we have not been able to find precisely these results in the literature. The general formula in [31] can be obtained from ours via some straightforward calculations. Secondly, the recursive formula for the moments is solved for skew two-sided Bessel processes. Somewhat surprisingly, the result says that the moments are polynomials in the dimension and skewness parameters. Although the density of the occupation time is known in this case, it does not seem possible to obtain the general formula for the moments by integrating it, but numerical integration can, of course, be used to check the formula for given values of the parameters. Skew Brownian motion is the special case of a skew two-sided Bessel process obtained when the dimension parameter is \(-1/2\), and in this case the formula for the moments is simpler.

The paper is organized as follows. In the next section, some basic ingredients from the theory of diffusions are recalled, and we introduce the diffusions whose occupation times are studied later in the paper. In Sect. 3, we discuss Kac’s moment formula for integral functionals; although this formula is well known, a short proof is included for completeness. Section 4 contains the formula for the moment generating function, see (19), and it is also proved that this coincides with the formula in [31]. In Sect. 5, we derive the recursive equation for the Laplace transforms of the moments of the occupation time, see Theorem 2. The proof uses a technical result, Lemma 1, whose proof is given in the Appendix. In Sect. 6, we apply the results to a number of diffusions and present formulas for the skew two-sided Bessel process, skew Brownian motion, oscillating Brownian motion, Brownian spider and sticky Brownian motion.

2 Preliminaries on Diffusions

Let \(X=(X_t)_{t\ge 0}\) be a regular diffusion taking values in an interval \(I\subseteq {{\,\mathrm{{\mathbb {R}}}\,}}\). For simplicity, it is assumed that X is conservative, i.e., \({{\,\mathrm{{\mathbf {P}}}\,}}_x(X_t\in I)=1\) for all \(x\in I\) and \(t\ge 0,\) where \({{\,\mathrm{{\mathbf {P}}}\,}}_x\) stands for the probability measure associated with X when initiated at x. To fix ideas, we suppose that \(0\in I\). In this section, we briefly describe the setup for the diffusion X.

The notations m and S are used for the speed measure and the scale function, respectively. It is assumed that S is normalized to satisfy \(S(0)=0\). Recall that X has a transition density p with respect to m, that is, for any Borel subset B of I it holds that

Moreover, for \(\lambda > 0\) let \(\varphi _\lambda \) and \(\psi _\lambda \) denote the decreasing and increasing, respectively, positive and continuous solutions of the generalized ODE (see [6, p. 18])

The solutions \(\psi _\lambda \) and \(\varphi _\lambda \) are unique up to a multiplicative constant when appropriate boundary conditions are imposed. The Wronskian constant \(w_{\lambda }\) is defined as

where the superscripts \(^+\) and \(^-\) denote the right and left derivatives with respect to the scale function S. We remark that in case x is not a sticky point it holds that \(\psi ^{+}_{\lambda }(x) = \psi ^{-}_{\lambda }(x)\) and \(\varphi ^{+}_{\lambda }(x) = \varphi ^{-}_{\lambda }(x)\) [13, p. 129] (cf. [25, Thm. 3.12, p. 308]). The Green kernel (also called the resolvent kernel) with respect to the speed measure m [13, p. 150] is given by

and satisfies

It is well known that the first hitting time \(H_y:=\inf \{t\ge 0 : X_t=y\}\) has a density with respect to the Lebesgue measure. Especially for \(H_0\), we use the notation

for the \({{\,\mathrm{{\mathbf {P}}}\,}}_x\)-density of \(H_0\) and

for its Laplace transform. Moreover, let

Using the continuity of \(\varphi _\lambda \), it follows from (4) that \(\lim _{x\downarrow 0}{\widehat{f}}(x;\lambda )=1\). We also need the following result on the kth derivative of \({\widehat{f}}(x;\lambda )\), which may well be known, but for which we have not found a reference.

Lemma 1

For any \(\lambda >0\) and \(k\ge 1\),

Proof

A proof based on spectral representations is given in the Appendix. \(\square \)

Let \(t>0\) and consider the occupation time on \({{\,\mathrm{{\mathbb {R}}}\,}}_+:=[0,+\infty )\) up to time t:

If X is a self-similar process (see [27, p. 70]), that is, for any \(a\ge 0\) there exists \(b\ge 0\) such that

then, as is easily seen, for any fixed \(t\ge 0\),

Example 1

Skew two-sided Bessel processes are introduced in [4]; see also [1, 5, 31]. A skew two-sided Bessel process \((X^{(\nu ,\beta )}_t)_{t\ge 0}\) with parameter \(\nu \in (-1,0)\) and skewness parameter \(\beta \in (0,1)\) is a diffusion on \({{\,\mathrm{{\mathbb {R}}}\,}}\) with the speed measure

and the scale function

The generator is given by

with the domain

Recall that for a “one-sided” Bessel diffusion on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\) reflected at zero [6, p. 137] we have the fundamental solutions

for \(x>0\). The limits

follow from the fact that, for \(\nu \in (-1,0)\) and when \(x\rightarrow 0\),

Let

and

Since \({\widehat{\psi }}_\lambda \) and \({\widehat{\varphi }}_\lambda \) are solutions of \({{\,\mathrm{{\mathcal {G}}\!}\,}}f(x) = \lambda f(x)\) on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\), we immediately see that \(\psi _\lambda \) is also a solution when \(x>0\), while for \(x<0\)

and, hence,

Thus, \(\psi _\lambda \) is a solution of the equation

Furthermore, \(\psi _\lambda \) is continuous since

using Euler’s reflection formula. Notice that from

and (9) it follows that

and, therefore,

so the scale derivative of \(\psi _\lambda \) is also continuous. Finally, from the continuity of \(\psi _\lambda \) and the fact that \({\widehat{\psi }}_\lambda \) is an increasing and \({\widehat{\varphi }}_\lambda \) a positive and decreasing function on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\) (also note that \(\sin (-\pi \nu )>0\)), it follows that \(\psi _\lambda \) is positive and increasing on \({{\,\mathrm{{\mathbb {R}}}\,}}\). After using a similar procedure for \(\varphi _\lambda \), we conclude that the functions \(\psi _\lambda \) and \(\varphi _\lambda \) are increasing and decreasing, respectively, positive and continuous solutions of (10). These functions are also given in the recent paper [1], albeit there with a different normalization.

Using the functions \(\psi _\lambda \) and \(\varphi _\lambda \) as defined above, the corresponding Wronskian is given by

and the Green kernel can be obtained using (3). In particular, we get, for \(y>0\), that

Note that \(G_\lambda (0,y) = \frac{1}{2} {\widehat{G}}_\lambda (0,y)\), where \({\widehat{G}}_\lambda \) is the Green kernel for the one-sided Bessel process reflected at zero [6, p. 137] and also that the skewness parameter \(\beta \) is not present in the expression for \(G_\lambda (0,y)\).

A skew two-sided Bessel process has the scaling property; more precisely, (7) holds with \(b=\sqrt{a}\). This can be verified through a straightforward calculation using the Green kernel.
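To spell out how the scaling property yields (8) here, the following is a short sketch, assuming that (7) is the equality in law \((X_{at})_{t\ge 0}\overset{d}{=}(bX_t)_{t\ge 0}\) under \({{\,\mathrm{{\mathbf {P}}}\,}}_0\) and using (6), i.e., \(A_t\) is the Lebesgue measure of \(\{s\in [0,t]: X_s\ge 0\}\). Substituting \(s=tu\) and taking \(a=t\), \(b=\sqrt{t}>0\),

\[A_t=\int _0^t \mathbb {1}_{\{X_s\ge 0\}}\,ds = t\int _0^1 \mathbb {1}_{\{X_{tu}\ge 0\}}\,du \overset{d}{=} t\int _0^1 \mathbb {1}_{\{\sqrt{t}\,X_u\ge 0\}}\,du = t\,A_1,\]

since multiplication by a positive constant does not change the sign of \(X_u\). In particular, \({{\,\mathrm{{\mathbf {E}}}\,}}_0(A_t^n)=t^n\,{{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1^n)\) for all \(n\ge 1\).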

For a skew two-sided Bessel process, the skewness parameter \(\beta \) has the same interpretation as for a skew Brownian motion, namely, it is the probability that the process, when started at 0, is positive at any given time. This follows from

where in the last step an integral formula for modified Bessel functions of the second kind [11, Eq. (6.561.16)] has been applied. Inverting the Laplace transform gives that, for any \(t>0\),

Example 2

Skew Brownian motion with skewness parameter \(\beta \in (0,1)\) is a diffusion which behaves like a standard Brownian motion when away from a certain skew point, here taken to be 0, while the sign of every excursion from the skew point is chosen by an independent Bernoulli trial with parameter \(\beta \), see [2, 4, 19, 30, 31]. This corresponds to a skew two-sided Bessel process with \(\nu =-1/2\), but for the convenience of the reader we write down explicit expressions. In this case, we have the speed measure

and the scale function

The fundamental solutions to (10) are obtained by inserting \(\nu =-1/2\) into the expressions for \(\psi _\lambda \) and \(\varphi _\lambda \) in Example 1, and after a suitable scaling we get

and

The Wronskian is in this case \(w_\lambda = 2\sqrt{2\lambda }\). We also get that

Example 3

Oscillating Brownian motion (see, e.g., [20]) is a diffusion \(({\widetilde{X}}_t)_{t\ge 0}\) which is characterized by the speed measure

with \(\sigma _{-}>0, \sigma _{+}>0\), and the scale function \({\widetilde{S}}(x) = x\). The fundamental solutions associated with oscillating Brownian motion are (cf. [22])

and

and the corresponding Wronskian is given by \({\widetilde{w}}_\lambda = \sqrt{2\lambda }\big ( \frac{1}{\sigma _+} + \frac{1}{\sigma _-} \big )\). This gives that

It can be checked that the oscillating Brownian motion has the scaling property. In fact, it holds that

where \((X_t)_{t\ge 0}\) denotes the skew Brownian motion with \(\beta =\sigma _-/(\sigma _++\sigma _-)\) and S is its scale function.

Example 4

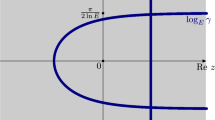

Sticky Brownian motion is a diffusion which behaves like a standard Brownian motion when on excursions away from a certain given point, which we take to be 0. The crucial property which distinguishes sticky Brownian motion from standard Brownian motion is that the occupation time at 0 for sticky Brownian motion is positive up to any given time \(t>0\) a.s. if the process starts from 0, i.e.,

where \((X_t)_{t\ge 0}\) denotes the sticky Brownian motion. However, a sticky Brownian motion does not remain at 0 during any time interval (such behavior would violate the strong Markov property). The scale function and the speed measure are

respectively, where \(\gamma >0\) and \(\varepsilon _{\{0\}}\) denotes the Dirac measure at 0. The fundamental solutions are (see [6, p. 127] and references therein)

and

with Wronskian \(w_\lambda = 2\sqrt{2\lambda } + 2\lambda \gamma \). In particular, this gives that

3 Kac’s Moment Formula

In this section, we recall the classical moment formula for integral functionals due to Kac [14]. See [9] for formulas for additive functionals in the framework of a general strong Markov process, and for further references. Our aim here is to present the formula in a form directly applicable to the case at hand.

Let X be a regular diffusion taking values in an interval \(I\subseteq {{\,\mathrm{{\mathbb {R}}}\,}}\), as defined above. For a measurable and bounded function V, define for \(t>0\)

Proposition 1

(Kac’s moment formula) For \(t>0\), \(x\in I\) and \(n=1,2,\dotsc \),

Proof

The formula clearly holds for \(n=1\). For \(n\ge 2\) consider

where the last step holds due to the symmetry of the function

Consequently, using the Markov property and the induction assumption,

which proves the claim. \(\square \)
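The following Python sketch is a finite-state sanity check of the structure behind Proposition 1, not part of the argument above. It replaces the diffusion by a continuous-time Markov chain with generator Q (our own illustrative choice: a symmetric nearest-neighbour chain on \(\{-K,\dotsc ,K\}\), reflected at the end points) and takes V to be the indicator of \([0,\infty )\). For \(T\sim {\text {Exp}}(\lambda )\) independent of the chain, averaging Kac’s formula over t with the density \(\lambda {{\,\mathrm{\mathrm {e}}\,}}^{-\lambda t}\) gives the discrete analogue \({{\,\mathrm{{\mathbf {E}}}\,}}_x(A_T^n)=n!\,\big ((\lambda -Q)^{-1}M_V\big )^n\mathbb {1}(x)\), where \(M_V\) is multiplication by V. Summing the resulting series should reproduce the Feynman–Kac type expression \({{\,\mathrm{{\mathbf {E}}}\,}}_x({{\,\mathrm{\mathrm {e}}\,}}^{-rA_T})=\lambda (\lambda -Q+rM_V)^{-1}\mathbb {1}(x)\). All function names are hypothetical.

```python
import math

import numpy as np


def reflected_walk_generator(K, rate=1.0):
    """Generator Q of a symmetric nearest-neighbour chain on {-K, ..., K}.

    Jumps to each neighbour occur at `rate`; the end points only jump inwards.
    """
    states = np.arange(-K, K + 1)
    size = len(states)
    Q = np.zeros((size, size))
    for i in range(size):
        if i > 0:
            Q[i, i - 1] = rate
        if i < size - 1:
            Q[i, i + 1] = rate
        Q[i, i] = -Q[i].sum()  # rows of a generator sum to zero
    return states, Q


def kac_moments(Q, V, lam, n_max):
    """Return [E_x(A_T^n) for n = 0..n_max] as vectors indexed by the state x.

    Discrete analogue of Kac's formula with T ~ Exp(lam):
    E_x(A_T^n) = n! * ((lam - Q)^{-1} M_V)^n 1.
    """
    size = len(Q)
    G = np.linalg.inv(lam * np.eye(size) - Q)  # resolvent (lam - Q)^{-1}
    GM = G * V[np.newaxis, :]                  # equals G @ diag(V)
    vec = np.ones(size)
    moments = [vec.copy()]
    for n in range(1, n_max + 1):
        vec = GM @ vec
        moments.append(float(math.factorial(n)) * vec)
    return moments


def laplace_occupation(Q, V, lam, r):
    """E_x(exp(-r A_T)) = lam * (lam - Q + r M_V)^{-1} 1 for T ~ Exp(lam)."""
    size = len(Q)
    return lam * np.linalg.solve(lam * np.eye(size) - Q + r * np.diag(V),
                                 np.ones(size))


states, Q = reflected_walk_generator(K=10)
V = (states >= 0).astype(float)  # indicator of [0, infinity)
lam, r, n_max = 1.0, 0.5, 40
moments = kac_moments(Q, V, lam, n_max)
series = sum((-r) ** n / math.factorial(n) * moments[n] for n in range(n_max + 1))
direct = laplace_occupation(Q, V, lam, r)
print(np.max(np.abs(series - direct)))  # truncation plus rounding error only
```

Truncating at `n_max = 40` is harmless here: \(\lambda (\lambda -Q)^{-1}\) is a stochastic matrix and \(0\le V\le 1\), so the nth term of the series is bounded by \((r/\lambda )^n\).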

4 Moment Generating Function

In this section, as in the previous one, it is assumed that X is a regular diffusion taking values in the interval \(I\subseteq {{\,\mathrm{{\mathbb {R}}}\,}}\), as introduced in Sect. 2. Recall that \(A_t\) is the occupation time on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\) up to time t, as defined in (6). Let \(T\sim {\text {Exp}}(\lambda )\) be an exponentially distributed random variable independent of X. We here derive an expression for the moment generating function \(r\mapsto {{\,\mathrm{{\mathbf {E}}}\,}}_x({{\,\mathrm{\mathrm {e}}\,}}^{-rA_T})\), \(r\ge 0\), of \(A_T\), which always exists, since \(0\le A_T\le T\).

Theorem 1

Let \(I^+:=I \cap [0,\infty )\). For \(x>0\),

and

Proof

Equation (18) follows from

together with

From Kac’s moment formula (17) with \(V(x)={{\,\mathrm{\mathbb {1}}\,}}_{[0,\infty )}(x)\), it follows that

From this, we obtain the formula

using the convolution formula for the Laplace transform \((\mathcal {L})\). Inserting (18) into the right-hand side of (20) and putting \(x=0\), we can solve the resulting expression for \({{\,\mathrm{{\mathbf {E}}}\,}}_0({{\,\mathrm{\mathrm {e}}\,}}^{-rA_T})\), which gives

proving the result. \(\square \)

In the next corollary, we connect formula (19) with the result in [31, Cor. 2], which is a special case of [24, Eq. (68)] and also corresponds to [28, Eq. (110)]. Here we consider the occupation times on both \({{\,\mathrm{{\mathbb {R}}}\,}}_+\) and \({{\,\mathrm{{\mathbb {R}}}\,}}_-:=(-\infty ,0)\):

respectively. Formula (21) coincides, when multiplied by \(\lambda ^{-1}\), with [24, Eq. (68)] (without the local time term).

Corollary 1

For \(r,q\ge 0\),

where the superscript \(^-\) denotes the left derivative with respect to the scale function S.

Remark 1

If the point 0 is included in \(A_t^-\) rather than in \(A_t^+\), then the left derivatives in (21) should be replaced by right derivatives. Note, however, that this makes a difference only if 0 is a sticky point, since otherwise the left and right scale derivatives are equal.

Proof of Corollary 1

We first prove (21) when \(q=0\). Since for \(y>0\) we have that

we can rewrite (19) as

For the integral in the numerator, it holds that

Recall the following integration by parts formula for a Lebesgue–Stieltjes integral, with functions U and V being of finite variation and at least one of them continuous on (a, b):

Since \(\varphi _\lambda \) is continuous and of finite variation, we get, applying (23) and (24), that

and likewise

Subtracting (25) from (26) yields

Furthermore, if b is an upper boundary point of the diffusion which is either non-exit or exit-and-entrance with reflection, then it holds that \(\varphi _{\lambda }^- (b) = 0\) (see [13, p. 130, Table 1]). Since the lower boundary point of \(I^+\) is 0, we thereby get that

Inserting this into (22) yields

where \(w_\lambda = \psi ^{-}_{\lambda }(0)\varphi _\lambda (0)-\psi _\lambda (0)\varphi ^{-}_{\lambda }(0)\) has been inserted, see (2). We now extend this result. Since \(A_T^{+}+A_T^{-}=T\),

where \(r=p+q\). On the other hand,

where \({\widehat{T}}\sim {\text {Exp}}(\lambda +q)\). Applying (27) gives

which proves (21). \(\square \)

Remark 2

If we instead consider the occupation times on the intervals \([\alpha ,+\infty )\) and \((-\infty ,\alpha )\) for some \(\alpha \in {{\,\mathrm{{\mathbb {R}}}\,}}\), then (21) holds when 0 is replaced with \(\alpha \) everywhere.

5 Recursive Formula for the Moments

In this section, we use Kac’s moment formula to derive our main result, namely a recursive equation for the Laplace transforms of the moments of \(A_t\) for fixed \(t>0\). When the diffusion is self-similar, the expression becomes a recursion for the moments of \(A_1\) instead (which is easily transformed into a recursion for the moments of \(A_t\), since in this case \(A_t\) has the same law as \(tA_1\), see (8)). It is assumed that X is a regular diffusion taking values in the interval \(I\subseteq {{\,\mathrm{{\mathbb {R}}}\,}}\) as defined in Sect. 2. We introduce the Laplace transforms of the moments of \(A_t\) via

If there is no ambiguity, the variables t and \(\lambda \) in the notation of the Laplace transforms are omitted; for instance, we shall write \({{\,\mathrm{{\mathcal {L}}}\,}}\{{{\,\mathrm{{\mathbf {E}}}\,}}_x(A_t^n)\}\) instead of \({{\,\mathrm{{\mathcal {L}}}\,}}_t\{{{\,\mathrm{{\mathbf {E}}}\,}}_x(A_t^n)\}(\lambda )\).

Theorem 2

Let \(I^+:=I \cap [0,\infty )\). The Laplace transforms of the moments of \(A_t\) for X starting from 0 are given for \(n=1\) by

and for \(n=2,3,\dotsc \) by

where

Moreover, under the scaling property (7), for all \(\lambda >0\),

and

Remark 3

Equation (29) can be rewritten as

where \(U_n(\lambda ):=\frac{\lambda ^{n+1}}{n!} {\widehat{A}}_0(\lambda ;n)\).

Proof of Theorem 2

Taking the Laplace transform on both sides of Kac’s moment formula (17) with \(V(x)={{\,\mathrm{\mathbb {1}}\,}}_{[0,\infty )}(x)\) yields

Substituting \(n=1\) and \(x=0\) in (33) gives (28). To derive (29), we first find an expression for \({\widehat{A}}_x(\lambda ;n)\) in terms of \({\widehat{A}}_0(\lambda ;k)\) for different k. For any starting point \(x>0\),

where \(\theta _t\) is the usual shift operator. By the strong Markov property,

We have the following Laplace transforms with respect to t:

and

Hence, taking Laplace transforms on both sides of (34) gives

Note that in the summation the term with \(k=n\) disappears, since \({\widehat{A}}_0(\lambda ;0) = 1/\lambda \). Inserting the expression in (35) into both sides of (33) and solving for \({\widehat{A}}_0(\lambda ;n)\) gives

where

Note, however, that x is not present on the left-hand side of (36), so the right-hand side cannot depend on x either. Thus, we may choose any \(x>0\). We now show that the limit of the right-hand side of (36) exists when \(x\downarrow 0\). Since

it is seen by induction that \(\lim _{x\downarrow 0} D_{k}(x;\lambda )\) exists for all values of k. Consequently, recalling that \(\lim _{x\downarrow 0}{\widehat{f}}(x;\lambda )=1\), we may write

We calculate the limit of \(D_{k}(x;\lambda )\) from the explicit expression (37). Using the result in Lemma 1,

which is the right-hand side of (30). The proof of the recursive Eq. (29) is now complete.

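Assume finally that the process X is self-similar so that (8) holds. Then \({{\,\mathrm{{\mathbf {E}}}\,}}_0(A_t^n) = t^n {{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1^n)\) and thus, since \(\int _0^\infty t^n{{\,\mathrm{\mathrm {e}}\,}}^{-\lambda t}\,dt = n!/\lambda ^{n+1}\),

\[{\widehat{A}}_0(\lambda ;n) = \frac{n!}{\lambda ^{n+1}}\,{{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1^n). \tag{38}\]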

It is clear that in this case (31) follows immediately from (28). After inserting (38) into (29), it is seen that \(\lambda \) appears only in \(D_k(\lambda ),\, k=1,2,\dotsc ,n-1\). Putting \(n=2\), we find that \(D_1(\lambda )\) does not depend on \(\lambda \), and, by induction, we conclude that \(D_k(\lambda )\) does not depend on \(\lambda \) for any k. \(\square \)

6 Examples

6.1 Skew Two-Sided Bessel Processes

We now apply the results in Theorems 1 and 2 to the skew two-sided Bessel process described in Example 1. In this case, the function \({\widehat{f}}\) is

and the Green kernel is given in (11). Note that here \(I^+ = [0,\infty )\). We derive the next result from (19) in Theorem 1. Alternatively, formula (21) in Corollary 1 could have been used.

Proposition 2

Let \(T\sim \text {Exp}(\lambda )\) independent of X. For any \(r\ge 0\),

Proof

The identity is trivial when \(r=0\). For \(r>0\), it follows from (19) that

where we need to calculate the integrals \(\varDelta _1\) and \(\varDelta _2\). From (12), we already have that

Recalling (11) and (39), the second integral becomes

after applying an integral formula for modified Bessel functions of the second kind [11, Eq. (6.576.4)]. The hypergeometric function can in this case be rewritten using an incomplete beta function [11, Eq. (8.391)] as

Inserting this into (42) yields

When inserting \(\varDelta _1\) and \(\varDelta _2\) into (41), we obtain

which proves the claim. \(\square \)

Note that the expression in (40) can be rewritten as

which is equivalent to (4.a) in [4]; see also [18, 31].

Next we apply Theorem 2 to find a recursive formula for the moments of \(A_1\).

Theorem 3

(Skew two-sided Bessel process) For \(n\ge 1\),

Proof

Equations (30)–(32) hold, since the skew two-sided Bessel process is self-similar. From (31) and (12), we obtain the first moment,

Next we calculate the coefficients \(D_k(\lambda )\) as given in (30). Setting \(k=1\) gives

where the integral is given by (42) with \(r=0\) (note that the hypergeometric function is equal to 1 in this case). In order to find \(D_{k}(\lambda )\) for \(k>1\), we need to differentiate \({\widehat{f}}(x;\lambda )\) with respect to \(\lambda \). Let

for which it can be shown by induction that

Writing

and differentiating (see, e.g., [6, Appx. 5] for general formulas) gives

Combining this with (11) yields, for \(k=1,2,\dotsc \),

where

by an integration formula for modified Bessel functions [11, Eq. (6.576.4)]. Inserting this and changing the order of summation,

In the case \(j=k\), the inner sum has only one term, namely

When \(j<k\), we show that the inner sum is zero. Note that for any \(k<i<2k\) the summand is zero, since \(0 \le 2k-1-i < k-1\). Thus, when \(j<k\) we can always choose 2j as the upper limit for the summation index, and the inner sum becomes

using, in the third step, the identity [10, Eq. (3.49)]

valid for \(n,m\in {{\,\mathrm{{\mathbb {N}}}\,}}\) and, in case \(m < n\), for \(x\notin \{m, \dotsc , n-1\}\). From this, we conclude that only the term corresponding to \(j=k\) remains in the expression for \(D_{k+1}(\lambda )\), which thus simplifies to

Reducing the index k by 1 and recalling that \(D_1(\lambda )=\beta \nu \), we conclude that

for all \(k\ge 1\). Inserting this and (44) into (32) results in the recursion

where the last step follows by the identity [10, Eq. (1.49)]

This proves the theorem. \(\square \)

Corollary 2

The mapping \(\beta \mapsto {{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1^n)\) is continuous and increasing.

Proof

Since \(\nu \in (-1,0)\), it follows from the properties of the gamma function that

for all \(n\in {{\,\mathrm{{\mathbb {Z}}}\,}}_+\). The result then immediately follows from (43) by induction. \(\square \)

In the following, we use \(\left[ \begin{array}{c}{n}\\ {k}\end{array}\right] \) for unsigned Stirling numbers of the first kind and \(\left\{ \begin{array}{c}{n}\\ {k}\end{array}\right\} \) for Stirling numbers of the second kind. They are defined recursively through

for \(n,k\in {{\,\mathrm{{\mathbb {Z}}}\,}}\), with initial conditions

The combinatorial interpretation of these numbers is that \(\left[ \begin{array}{c}{n}\\ {k}\end{array}\right] \) counts the number of permutations of n elements with k disjoint cycles, whereas \(\left\{ \begin{array}{c}{n}\\ {k}\end{array}\right\} \) is the number of ways to partition a set of n elements into k nonempty subsets. The notation for Stirling numbers varies between different authors; we use the notation recommended in [17].
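The Stirling numbers used below can be tabulated directly. The Python sketch fills the tables for \(0\le k\le n\) using the standard recursions \(\left[ {n\atop k}\right] =(n-1)\left[ {n-1\atop k}\right] +\left[ {n-1\atop k-1}\right] \) and \(\left\{ {n\atop k}\right\} =k\left\{ {n-1\atop k}\right\} +\left\{ {n-1\atop k-1}\right\} \), with \(\left[ {0\atop 0}\right] =\left\{ {0\atop 0}\right\} =1\) and all other boundary values zero; we assume these agree with (47) restricted to nonnegative indices. As a check of the combinatorial interpretation, it verifies the classical identities \(x(x+1)\cdots (x+n-1)=\sum _k\left[ {n\atop k}\right] x^k\), \(x^n=\sum _k\left\{ {n\atop k}\right\} x(x-1)\cdots (x-k+1)\) and \(\sum _k\left[ {n\atop k}\right] =n!\). The function names are hypothetical.

```python
from math import factorial, prod


def stirling_first_unsigned(n_max):
    """c[n][k] = unsigned Stirling numbers of the first kind, 0 <= k <= n <= n_max."""
    c = [[0] * (n_max + 1) for _ in range(n_max + 1)]
    c[0][0] = 1
    for n in range(1, n_max + 1):
        for k in range(1, n + 1):
            c[n][k] = (n - 1) * c[n - 1][k] + c[n - 1][k - 1]
    return c


def stirling_second(n_max):
    """s[n][k] = Stirling numbers of the second kind, 0 <= k <= n <= n_max."""
    s = [[0] * (n_max + 1) for _ in range(n_max + 1)]
    s[0][0] = 1
    for n in range(1, n_max + 1):
        for k in range(1, n + 1):
            s[n][k] = k * s[n - 1][k] + s[n - 1][k - 1]
    return s


N = 8
c, s = stirling_first_unsigned(N), stirling_second(N)
for n in range(N + 1):
    assert sum(c[n]) == factorial(n)  # every permutation has some number of cycles
    for x in range(6):
        rising = prod(range(x, x + n))  # x (x+1) ... (x+n-1)
        assert rising == sum(c[n][k] * x**k for k in range(n + 1))
        falling = [prod(range(x - k + 1, x + 1)) for k in range(n + 1)]  # x (x-1) ... (x-k+1)
        assert x**n == sum(s[n][k] * falling[k] for k in range(n + 1))
print(c[4], s[4])  # [0, 6, 11, 6, 1, 0, 0, 0, 0] [0, 1, 7, 6, 1, 0, 0, 0, 0]
```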

From the recursion in (43), we derive the following explicit expression for the moments of \(A_1\).

Theorem 4

(Skew two-sided Bessel process) For any \(n\ge 1\),

In the proof of Theorem 4, we need the following result.

Lemma 2

For any \(n, m, l \in {{\,\mathrm{{\mathbb {N}}}\,}}\),

Proof

Using the well-known and closely related identity [12, Eq. (6.29)]

we prove (49) by induction. It is easy to verify that (49) holds for any \(m,l\in {{\,\mathrm{{\mathbb {N}}}\,}}\) when \(n=0\) (both sides are zero except when \(m=l=0\), in which case both sides are equal to 1). Assume that (49) holds for \(n=N\) and all \(m,l\in {{\,\mathrm{{\mathbb {N}}}\,}}\). Now let \(n=N+1\) and let m and l be arbitrary numbers in \({{\,\mathrm{{\mathbb {N}}}\,}}\). Using the recursion in (47), we get that

where in the second step we have used both the induction assumption and (50). Thus, (49) holds also for \(n=N+1\) and all \(m,l\in {{\,\mathrm{{\mathbb {N}}}\,}}\). The result follows by induction. \(\square \)

Proof of Theorem 4

The result is proved by induction using Theorem 3. The identity (48) holds for \(n=1\), since \({{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1)=\beta \). Assume that (48) holds for all \(n\in \{1,2,\dotsc ,N\}\). We wish to prove that it then also holds for \(n=N+1\), that is,

Here the last step follows from the identity [12, Eq. (6.11)]

which gives that

On the other hand, by the recursive formula (43) we have

Applying (52), the binomial coefficient in the summand can be rewritten as

and using the induction assumption it follows that

When this is inserted into (53), the result should coincide with (51). After subtracting the identical first terms, we are left with polynomials in \(\nu \) and \(\beta \) on both sides, so it suffices to show that their coefficients are equal. Indeed,

where the second step follows from Lemma 2. The last step holds since

using both (47) and the identity [12, Eq. (6.15)]

This completes the proof for \(n=N+1\). By induction, (48) holds for all \(n\ge 1\). \(\square \)

Remark 4

The distribution of \(A_1\) for a skew two-sided Bessel process starting from 0 has been characterized in [4]. The moments of \(A_1\) can also be calculated numerically for particular values of \(\beta \) and \(\nu \) by integrating the known density [31], for \(x\in (0,1)\),

We call the distribution induced by this density a Lamperti distribution, as it was first found in [18]. The result in (48) does not, however, seem to be easily obtainable through analytic integration. For the special case of skew Brownian motion, see Remark 6.
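As Remark 4 suggests, (48) can be checked numerically. The Python sketch below computes moments with SciPy’s `quad` under our own assumption about the density: we take the standard form of Lamperti’s law with index \(\alpha =-\nu \) and parameter \(p=\beta \),

\[\frac{\sin (\pi \alpha )}{\pi }\,\frac{pq\,x^{\alpha -1}(1-x)^{\alpha -1}}{p^2(1-x)^{2\alpha }+q^2x^{2\alpha }+2pq\,x^{\alpha }(1-x)^{\alpha }\cos (\pi \alpha )},\qquad 0<x<1,\ q=1-p,\]

and assume that it agrees with (54). The factor \(x^{\alpha -1}(1-x)^{\alpha -1}\) is passed to `quad` as an algebraic weight (`weight="alg"`), so the endpoint singularities are handled by QUADPACK’s QAWS routine. As sanity checks, the total mass should be 1, the mean should be \(\beta \) (since \({{\,\mathrm{{\mathbf {E}}}\,}}_0(A_1)=\beta \)), and for \(\nu =-1/2\), \(\beta =1/2\) the moments should be the arcsine moments \(\binom{2n}{n}4^{-n}\). The function name is hypothetical.

```python
import math

from scipy.integrate import quad


def lamperti_moment(n, nu, beta):
    """n-th moment of the (assumed) Lamperti density with alpha = -nu, p = beta."""
    a, p = -nu, beta
    q = 1.0 - p
    const = math.sin(math.pi * a) / math.pi * p * q

    def smooth_part(x):
        # Density divided by the weight x^(a-1) (1-x)^(a-1), times x^n.
        denom = (p**2 * (1.0 - x) ** (2 * a) + q**2 * x ** (2 * a)
                 + 2 * p * q * x**a * (1.0 - x) ** a * math.cos(math.pi * a))
        return const * x**n / denom

    value, _abserr = quad(smooth_part, 0.0, 1.0, weight="alg",
                          wvar=(a - 1.0, a - 1.0), limit=200)
    return value


nu = -0.3
for beta in (0.2, 0.5, 0.8):
    mass = lamperti_moment(0, nu, beta)
    mean = lamperti_moment(1, nu, beta)
    second = lamperti_moment(2, nu, beta)
    print(f"beta={beta}: mass={mass:.6f}, mean={mean:.6f}, E(A^2)={second:.6f}")
```

The printed second moments should also increase with \(\beta \), in line with Corollary 2.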

6.2 Skew Brownian Motion

Let now \((X_t)_{t\ge 0}\) be a skew Brownian motion, see Example 2. This corresponds to a skew two-sided Bessel process with \(\nu =-1/2\), and thus it follows from (45) that

and the recursion in (43) becomes

Since the recursion has already been solved for skew two-sided Bessel processes, the moments of \(A_1\) for skew Brownian motion can be obtained from Theorem 4.

Theorem 5

(Skew Brownian motion) For any \(n\ge 1\),

Proof

Substituting \(\nu =-1/2\) in (48) yields

where in the last step the identity [33, Eq. (18)]

has been applied. A change of order of summation now gives the result. \(\square \)

Remark 5

The result in Theorem 5 was first proved by solving the recursive formula (56) in a different way than in the proof of Theorem 4. However, the authors later became aware that the identity (58) can already be found in [33], and thus the result follows from (48), as shown above. The earlier, alternative method will be presented with additional comments in a future publication.

Corollary 3

(Standard Brownian motion) For \(\beta =1/2\) and \(n\ge 1\), it holds that

and, hence, \(A_1\) has the arcsine law.

Proof

The result follows from (57) when inserting \(\beta =1/2\) and applying (46). Since \(A_1\) is bounded, its moments determine the distribution uniquely, and we recover Lévy’s arcsine law for \(A_1\). \(\square \)
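For reference, the moments of the arcsine law (1) can be computed independently of (57) from the Beta integral: with density \(1/(\pi \sqrt{x(1-x)})\) on (0, 1), \({{\,\mathrm{{\mathbf {E}}}\,}}(A^n)=B(n+\tfrac{1}{2},\tfrac{1}{2})/\pi =\binom{2n}{n}4^{-n}\). The short Python sketch below (function names hypothetical) compares the exact fractions with the Gamma-function form; since (57) itself is not reproduced here, it only checks the classical side of Corollary 3.

```python
from fractions import Fraction
from math import comb, gamma, pi, sqrt


def arcsine_moment_exact(n):
    """E(A^n) for the arcsine law on [0, 1], as the exact fraction binom(2n, n) / 4^n."""
    return Fraction(comb(2 * n, n), 4**n)


def arcsine_moment_beta(n):
    """Same moment from B(n + 1/2, 1/2) / pi = Gamma(n + 1/2) / (sqrt(pi) * n!)."""
    return gamma(n + 0.5) / (sqrt(pi) * gamma(n + 1))


for n in range(1, 6):
    print(n, arcsine_moment_exact(n), arcsine_moment_beta(n))
# the fractions are 1/2, 3/8, 5/16, 35/128, 63/256
```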

Remark 6

The distribution function of \(A_1\) for skew Brownian motion is given in [31, Eq. (2.5), p. 158] as

This yields the density (see [32, p. 782] and [2, p. 196])

which corresponds to (54) with \(\nu =-1/2\). From this density, it is possible to calculate the moments of \(A_1\) by integration. However, using this approach we have not been able to obtain the expression in (57).

6.3 Oscillating Brownian Motion

Let \(({{\widetilde{X}}}_t)_{t\ge 0}\) be an oscillating Brownian motion, see Example 3. From (15), it is easily seen that the law of \(A_1\) is the same as the corresponding law for a skew Brownian motion. However, here we wish to demonstrate the use of Theorem 2 and therefore give a proof of the following result based on formula (32) therein.

Theorem 6

(Oscillating Brownian motion) For any \(n\ge 1\),

Proof

For oscillating Brownian motion,

Recalling (14), Eq. (30) then becomes

where \(D_k(\lambda )\) is as for skew Brownian motion. In other words, by (55),

Since

we thus arrive at the same recursion as in (56), except with \(\sigma _{-}/(\sigma _{+}+\sigma _{-})\) instead of \(\beta \). Thus, the result follows from Theorem 5. \(\square \)

6.4 Brownian Spider

For a positive integer n, let \(I_1,\dotsc ,I_n\) be half-lines in \({{\,\mathrm{{\mathbb {R}}}\,}}^2\) meeting at the origin. Such a configuration can be seen as a graph G, say, with one vertex and n infinite edges. A Brownian spider, also called Walsh’s Brownian motion on a finite number of rays, see [3, 4, 19, 29, 30], is a diffusion on G such that when away from the origin on a ray and before hitting the origin, it behaves on that ray like an ordinary one-dimensional Brownian motion, and when the process reaches the origin, one of the half-lines is chosen at random for its “next” excursion. A rigorous construction of the probability measure governing a Brownian spider can be given using excursion theory, see [4].

Let \((X_t)_{t\ge 0}\) denote a Brownian spider living on G, and for every \(i\in \{1,2,\dotsc ,n\}\) let \(p_i\) be the probability of choosing half-line \(I_i\) when at the origin. For any subset \({\mathcal {I}}\subseteq \{1,2,\dotsc ,n\}\), consider the occupation time of X on \(\{I_i:\, i\in {\mathcal {I}}\},\) i.e.,

From the excursion-theoretic construction of the Brownian spider, it can be deduced that, for every \(t>0\), \(A_t^{\mathcal {I}}\) has the same law as the occupation time on \({{\,\mathrm{{\mathbb {R}}}\,}}_+\) up to time t of a skew Brownian motion with skewness parameter \(\beta := \sum _{i\in {\mathcal {I}}} p_i\). Thus, we deduce the following result from (57).

Theorem 7

(Brownian spider) For any \(n\ge 1\),

We refer also to the recent paper [34] for results concerning distributions of occupation times for diffusions on a multiray.
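The reduction from the spider to skew Brownian motion rests on two facts. First, the distance to the origin evolves in the same way whatever the ray probabilities are. Second, each excursion is assigned to the selected rays independently with probability \(\beta =\sum _{i\in {\mathcal {I}}}p_i\). A discrete analogue makes this visible. The sketch below uses a hypothetical "Walsh random walk" and a skew random walk:

* In the Walsh random walk, steps are nearest-neighbour steps along a ray, and at the origin ray \(i\) is chosen with probability \(p_i\).
* In the skew random walk, the walk steps up from 0 with probability \(\beta \).

For each walk, it computes by dynamic programming the exact law of the number of steps spent on the selected rays (respectively, on the positive side). Function names are illustrative.

```python
from collections import defaultdict


def walsh_walk_occupation_law(p, subset, n_steps):
    """Exact law of the number of steps a Walsh random walk spends on rays in `subset`.

    From the origin the walk moves to distance 1 on ray i with probability p[i];
    elsewhere it moves one unit away from or towards the origin with probability 1/2.
    A step is counted when the edge it traverses lies on a ray in `subset`.
    """
    origin = -1  # pseudo ray index used for the origin
    state = {(origin, 0, 0): 1.0}  # (ray, distance, count) -> probability
    for _ in range(n_steps):
        nxt = defaultdict(float)
        for (ray, dist, count), prob in state.items():
            if dist == 0:
                for i, p_i in enumerate(p):
                    nxt[(i, 1, count + int(i in subset))] += prob * p_i
            else:
                inc = int(ray in subset)
                nxt[(ray, dist + 1, count + inc)] += 0.5 * prob
                back = (origin, 0) if dist == 1 else (ray, dist - 1)
                nxt[(*back, count + inc)] += 0.5 * prob
        state = nxt
    law = [0.0] * (n_steps + 1)
    for (_, _, count), prob in state.items():
        law[count] += prob
    return law


def skew_walk_occupation_law(beta, n_steps):
    """Exact law of the number of steps a skew random walk spends on the positive side."""
    state = {(0, 0): 1.0}  # (position, count) -> probability
    for _ in range(n_steps):
        nxt = defaultdict(float)
        for (pos, count), prob in state.items():
            if pos == 0:
                nxt[(1, count + 1)] += beta * prob  # edge (0, 1) is on the positive side
                nxt[(-1, count)] += (1.0 - beta) * prob
            else:
                inc = int(pos > 0)
                nxt[(pos + 1, count + inc)] += 0.5 * prob
                nxt[(pos - 1, count + inc)] += 0.5 * prob
        state = nxt
    law = [0.0] * (n_steps + 1)
    for (_, count), prob in state.items():
        law[count] += prob
    return law


if __name__ == "__main__":
    p, subset = [0.2, 0.45, 0.35], {0, 2}
    beta = sum(p[i] for i in subset)
    law_walsh = walsh_walk_occupation_law(p, subset, 12)
    law_skew = skew_walk_occupation_law(beta, 12)
    print(max(abs(a - b) for a, b in zip(law_walsh, law_skew)))
```

The two laws agree up to rounding error. The expected count after \(N\) steps equals \(\beta N\), mirroring \({{\,\mathrm{{\mathbf {E}}}\,}}_0(A_t)=\beta t\) for skew Brownian motion.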

6.5 Sticky Brownian Motion

In this section, we highlight the use of the moment formula for a process that does not have the scaling property. Moreover, we wish to understand how the presence of a sticky point affects the formula. To this end, let \(X=(X_t)_{t\ge 0}\) be a Brownian motion sticky at 0 with stickiness parameter \(\gamma >0\), see Example 4. Since X behaves like a standard Brownian motion on excursions from 0, the Laplace transform of the first hitting time \(H_0\) of 0 when \(X_0=x>0\) is the same as for standard Brownian motion, i.e.,

\[ {{\,\mathrm{{\mathbf {E}}}\,}}_x\big (e^{-\lambda H_0}\big ) = e^{-x\sqrt{2\lambda }}, \qquad \lambda >0. \]
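For standard Brownian motion started at \(x>0\), the first hitting time \(H_0\) of 0 has density \(f(t)=x(2\pi t^3)^{-1/2}e^{-x^2/(2t)}\), \(t>0\). Its Laplace transform is \({{\,\mathrm{{\mathbf {E}}}\,}}_x(e^{-\lambda H_0})=e^{-x\sqrt{2\lambda }}\). The short check below integrates the density against \(e^{-\lambda t}\) numerically. The substitution \(u=x/\sqrt{t}\) turns the integral into \(\sqrt{2/\pi }\int _0^\infty e^{-u^2/2-\lambda x^2/u^2}\,du\), whose integrand is smooth and decays quickly. The function name and the truncation point are illustrative choices.

```python
import math


def hitting_time_laplace(x, lam, upper=12.0, num_points=100_000):
    """Numerically approximate E_x[exp(-lam * H_0)] for standard BM started at x > 0.

    After u = x / sqrt(t), the Laplace transform of the first-passage density equals
    sqrt(2/pi) * int_0^inf exp(-u^2/2 - lam*x^2/u^2) du.  The integral is truncated
    at `upper` (the neglected tail is below exp(-72) for upper = 12) and evaluated
    with the midpoint rule.
    """
    if x <= 0 or lam <= 0:
        raise ValueError("need x > 0 and lam > 0")
    b = lam * x * x
    h = upper / num_points
    total = 0.0
    for k in range(num_points):
        u = (k + 0.5) * h  # midpoints avoid evaluating at u = 0
        total += math.exp(-0.5 * u * u - b / (u * u))
    return math.sqrt(2.0 / math.pi) * total * h


if __name__ == "__main__":
    for x, lam in [(1.0, 0.5), (0.5, 2.0), (2.0, 1.0)]:
        print(x, lam, hitting_time_laplace(x, lam), math.exp(-x * math.sqrt(2.0 * lam)))
```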

In this section, besides \(A^X_t\) defined in (6), we also consider

Clearly,

where \(A_t\) and \(B_t\) are used as notation for \(A^X_t\) and \(B^X_t,\) respectively. Since 0 is a sticky point, the second term on the right-hand side of (59) is strictly positive for all \(t>0\) a.s. (if X starts at 0). We use here the notation \(D^A_n(\lambda )\) instead of \(D_n(\lambda )\) as defined in (30):

Using the explicit form (16) for the Green kernel yields for \(n=1\)

where

Comparing the expressions for the Green kernels in (13) and (16) and recalling (55), as well as Lemma 1, it is seen that for \(n\ge 2\)

where

The values of \(D^B_n(\lambda )\) are obtained in the same way, and we summarize the discussion above in the following result.

Proposition 3

It holds that

and, for \(n\ge 2\),

with \(H(\lambda )\) and \(T_n\) given in (60) and (61), respectively.

Recall the recursive Eq. (29) for the Laplace transforms of the moments of \(B_t\) (an analogous formula holds for \(A_t\)):

Next we show that this recursive equation can be solved in the same way as for skew Brownian motion. The corresponding equation for the Laplace transforms of the moments of \(A_t\) does not seem to admit such a simple solution.

Proposition 4

For \(n=1,2,\dotsc \),

Proof

Introducing \(U_k(\lambda ) := \lambda ^{k+1}{\widehat{B}}_k(\lambda )/k!\) as in Remark 3, Eq. (62) can be rewritten as

since

Clearly, (64) is of the same form as (56) and, consequently, by Theorem 5,

which is the same as

and the claimed formula (63) follows. \(\square \)
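The normalization \(U_k(\lambda )=\lambda ^{k+1}{\widehat{B}}_k(\lambda )/k!\) is natural because of the elementary identity \(\int _0^\infty e^{-\lambda t}t^k\,dt=k!/\lambda ^{k+1}\). Assume, as the notation suggests, that \({\widehat{B}}_k\) is the Laplace transform of \(t\mapsto {{\,\mathrm{{\mathbf {E}}}\,}}_0(B_t^k)\). Then a moment growing exactly like \(c\,t^k\) is mapped to the constant c:

```latex
% Assumption: \widehat B_k(\lambda) = \int_0^\infty e^{-\lambda t}\,\mathbf E_0(B_t^k)\,dt.
\mathbf E_0(B_t^k) = c\,t^k \ \ (t>0)
\quad\Longrightarrow\quad
U_k(\lambda) = \frac{\lambda^{k+1}}{k!}\int_0^\infty e^{-\lambda t}\,c\,t^k\,dt
             = \frac{\lambda^{k+1}}{k!}\cdot\frac{c\,k!}{\lambda^{k+1}} = c .
```

For a process with the scaling property, the constant is the moment at time 1. This is why, under scaling, the recursion can be read as one for the moments of the occupation time up to time 1. For sticky Brownian motion, \(U_k\) genuinely depends on \(\lambda \), and its behaviour as \(\lambda \downarrow 0\) is what Proposition 5 exploits.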

Consider now a regular diffusion \(X=(X_t)_{t\ge 0}\) as introduced in Sect. 2. Necessary and sufficient conditions, in terms of the speed measure of X, are given in [31, p. 161]. These conditions ensure that, as \(t\rightarrow \infty \), \(A^X_t/t\) converges in distribution to a Lamperti-distributed random variable \(\xi \), i.e., one whose density is given by (54). It is a fairly simple matter to check these conditions for sticky Brownian motion, in which case the limiting random variable is arcsine-distributed. We conclude the paper by showing that this result is also easily obtained, for both \(A_t/t\) and \(B_t/t\), from the recursive equation. Notice that here the convergence of the moments is equivalent to the convergence in distribution. The reason is that \(A_t/t\) and \(B_t/t\) take values in \([0,1]\), and a distribution on \([0,1]\) is determined by its moments.
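For reference, the limiting moments of \(A_t/t\) and \(B_t/t\) in this statement are those of the arcsine law, \(\int _0^1 x^n\,\frac{dx}{\pi \sqrt{x(1-x)}}=\binom{2n}{n}4^{-n}\). The sketch below computes them exactly and cross-checks them against the Beta-function expression \(\Gamma (n+1/2)/(\Gamma (n+1)\Gamma (1/2))\). Function names are illustrative.

```python
import math
from fractions import Fraction


def arcsine_moment(n):
    """Exact n-th moment of the arcsine law with density 1/(pi*sqrt(x(1-x))) on (0, 1)."""
    # E(xi^n) = B(n + 1/2, 1/2) / B(1/2, 1/2) = binom(2n, n) / 4^n
    return Fraction(math.comb(2 * n, n), 4 ** n)


def arcsine_moment_gamma(n):
    """Same moment in floating point: Gamma(n + 1/2) / (Gamma(n + 1) * Gamma(1/2))."""
    return math.exp(math.lgamma(n + 0.5) - math.lgamma(n + 1) - math.lgamma(0.5))


if __name__ == "__main__":
    for n in range(1, 6):
        m = arcsine_moment(n)
        print(n, m, float(m), arcsine_moment_gamma(n))
```

The exact values also satisfy the one-step recursion \(m_n=m_{n-1}(2n-1)/(2n)\), which is a convenient check on any computed limit.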

Proposition 5

For \(n=1,2,\dotsc \)

and

Proof

We prove (66) for \(B_t\) by induction, using (62). An analogous argument applies to \(A_t\), and the details are omitted. The claim for \(B_t\) holds for \(n=1\), as is easily seen from (65). Multiplying (64) by n! yields

By the induction hypothesis, the limit of the right-hand side exists as \(\lambda \rightarrow 0\), which implies

From Eq. (56) and Corollary 3, it is seen that the expression in the outer parentheses of (68) is as claimed in (66). The statement (67) follows by invoking the Tauberian theorem presented in [8, p. 423], which is applicable since \(t\mapsto {{\,\mathrm{{\mathbf {E}}}\,}}_0(B_t^n)\) is increasing. \(\square \)
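The Tauberian step can be spelled out as follows. The sketch makes three assumptions. First, \({\widehat{B}}_n(\lambda )=\int _0^\infty e^{-\lambda t}\,{{\,\mathrm{{\mathbf {E}}}\,}}_0(B_t^n)\,dt\). Second, \(c_n:=\lim _{\lambda \downarrow 0}\lambda ^{n+1}{\widehat{B}}_n(\lambda )/n!\) is positive. Third, \(V_n(t):=\int _0^t{{\,\mathrm{{\mathbf {E}}}\,}}_0(B_s^n)\,ds\), a notation introduced only here. The first implication is the Tauberian theorem for Laplace–Stieltjes transforms (cf. [8]). The second is the monotone density argument, and this is where the monotonicity of \(t\mapsto {{\,\mathrm{{\mathbf {E}}}\,}}_0(B_t^n)\) enters.

```latex
% \widehat B_n(\lambda) = \int_0^\infty e^{-\lambda t}\,V_n(dt), with V_n increasing.
\widehat B_n(\lambda) \sim c_n\,n!\,\lambda^{-(n+1)} \quad (\lambda\downarrow 0)
\;\Longrightarrow\;
V_n(t) \sim \frac{c_n\,n!}{\Gamma(n+2)}\,t^{n+1} = \frac{c_n}{n+1}\,t^{n+1} \quad (t\to\infty)
\;\Longrightarrow\;
\mathbf E_0(B_t^n) \sim (n+1)\,\frac{V_n(t)}{t} = c_n\,t^n \quad (t\to\infty).
```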

References

Alili, L., Aylwin, A.: On the semi-group of a scaled skew Bessel process. Stat. Probab. Lett. 145, 96–102 (2019)

Appuhamillage, T., Bokil, V., Thomann, E., Waymire, E., Wood, B.: Occupation and local times for skew Brownian motion with applications to dispersion across an interface. Ann. Appl. Probab. 21(1), 183–214 (2011)

Barlow, M., Pitman, J., Yor, M.: On Walsh’s Brownian Motions. In: Séminaire de Probabilités, XXIII, Lecture Notes in Math., vol. 1372, pp. 275–293. Springer, Berlin (1989)

Barlow, M., Pitman, J., Yor, M.: Une extension multidimensionnelle de la loi de l’arc sinus. In: Séminaire de Probabilités, XXIII, Lecture Notes in Math., vol. 1372, pp. 294–314. Springer, Berlin (1989)

Blei, S.: On symmetric and skew Bessel processes. Stoch. Process. Appl. 122(9), 3262–3287 (2012)

Borodin, A.N., Salminen, P.: Handbook of Brownian Motion—Facts and Formulae, 2nd corr. edn. Birkhäuser, Basel (2015)

Durrett, R.: Stochastic Calculus: A Practical Introduction. CRC Press, Boca Raton (1996)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. II. Wiley, New York (1966)

Fitzsimmons, P.J., Pitman, J.: Kac’s moment formula and the Feynman–Kac formula for additive functionals of a Markov process. Stoch. Process. Appl. 79(1), 117–134 (1999)

Gould, H.W.: Combinatorial Identities. Henry W. Gould, Morgantown, WV (1972)

Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products, 7th edn. Elsevier/Academic Press, Amsterdam (2007)

Graham, R.L., Knuth, D.E., Patashnik, O.: Concrete Mathematics, 2nd edn. Addison-Wesley, Reading (1994)

Itô, K., McKean Jr., H.P.: Diffusion Processes and Their Sample Paths. Springer, Berlin (1974)

Kac, M.: On some connections between probability theory and differential and integral equations. In: Neyman, J. (ed.) Proceedings of the Second Berkeley Symposium on Mathematical Statistics and Probability, 1950, pp. 189–215. Univ. of California Press, Berkeley (1951)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus, 2nd edn. Springer, Berlin (1991)

Kasahara, Y., Yano, Y.: On a generalized arc-sine law for one-dimensional diffusion processes. Osaka J. Math. 42(1), 1–10 (2005)

Knuth, D.E.: Two notes on notation. Am. Math. Mon. 99(5), 403–422 (1992)

Lamperti, J.: An occupation time theorem for a class of stochastic processes. Trans. Am. Math. Soc. 88, 380–387 (1958)

Lejay, A.: On the constructions of the skew Brownian motion. Probab. Surv. 3, 413–466 (2006)

Lejay, A., Pigato, P.: Statistical estimation of the oscillating Brownian motion. Bernoulli 24(4B), 3568–3602 (2018)

Lévy, P.: Sur certains processus stochastiques homogènes. Compos. Math. 7, 283–339 (1939)

Mordecki, E., Salminen, P.: Optimal stopping of oscillating Brownian motion. Electron. Commun. Probab. 24(50), 1–12 (2019)

Mörters, P., Peres, Y.: Brownian Motion. Cambridge University Press, Cambridge (2010)

Pitman, J., Yor, M.: Hitting, occupation and inverse local times of one-dimensional diffusions: martingale and excursion approaches. Bernoulli 9(1), 1–24 (2003)

Revuz, D., Yor, M.: Continuous Martingales and Brownian Motion, 3rd edn. Springer, Berlin (1999)

Salminen, P., Vallois, P.: On subexponentiality of the Lévy measure of the diffusion inverse local time; with applications to penalizations. Electron. J. Probab. 14, 1963–1991 (2009)

Sato, K.: Lévy Processes and Infinitely Divisible Distributions. Cambridge University Press, Cambridge (1999)

Truman, A., Williams, D.: A generalised arc-sine law and Nelson’s stochastic mechanics of one-dimensional time-homogeneous diffusions. In: Pinsky, M.A. (ed.) Diffusion Processes and Related Problems in Analysis, Vol. I (Evanston, IL, 1989), pp. 117–135. Birkhäuser, Boston (1990)

Vakeroudis, S., Yor, M.: A scaling proof for Walsh’s Brownian motion extended arc-sine law. Electron. Commun. Probab. 17(63), 1–9 (2012)

Walsh, J.B.: A diffusion with a discontinuous local time. In: Temps locaux, Astérisque, vol. 52–53, pp. 37–45. Société mathématique de France, Paris (1978)

Watanabe, S.: Generalized arc-sine laws for one-dimensional diffusion processes and random walks. In: Cranston, M.C., Pinsky, M.A. (eds.) Stochastic Analysis (Ithaca, NY, 1993), Proceedings of Symposia in Pure Mathematics, Vol. 57, pp. 157–172. Amer. Math. Soc., Providence (1995)

Watanabe, S., Yano, K., Yano, Y.: A density formula for the law of time spent on the positive side of one-dimensional diffusion processes. J. Math. Kyoto Univ. 45(4), 781–806 (2005)

Yang, S.L., Qiao, Z.K.: The Bessel numbers and Bessel matrices. J. Math. Res. Expos. 31(4), 627–636 (2011)

Yano, Y.: On the joint law of the occupation times for a diffusion process on multiray. J. Theor. Probab. 30(2), 490–509 (2017)

Acknowledgements

Open access funding provided by Abo Akademi University (ABO). We thank Ernesto Mordecki for a discussion which triggered the research presented in this paper. The research of D. Stenlund was supported in part by a grant from the Magnus Ehrnrooth foundation.


Appendix: Proof of Lemma 1

Our proof is based on the spectral representation of the \({{\,\mathrm{{\mathbf {P}}}\,}}_x\)-density f of \(H_0\). To fix ideas, let \(x>0\). The main source for the proof is [26], which also contains further references and results. The key fact, presented in [26, Prop. 3.3], is that f has the representation

where

with

Recall that m is the speed measure and S is the scale function such that \(S(0)=0\). The notation \({\widehat{\varDelta }}\) stands for a \(\sigma \)-finite measure on \((0,\infty )\) such that

From (69) it is seen that the functions \(x\mapsto c_n(x)\), \(n=1,2,\dotsc \), are positive, continuous and increasing, with \(\lim _{x\downarrow 0} c_n(x) = 0\). Hence,

where in the second step the use of dominated convergence is justified by the estimate in [26, Eq. (3.12)], i.e., for \(x<1\)

Consider now for \(k=1,2,\dots \)

Letting here \(x\downarrow 0\) and invoking (71) yields the claim in Lemma 1, i.e., (5), once we have checked that the limit can be taken inside the integrals. For this, recall the following estimate from [26, p. 1972], valid for \(x<1\):

Consequently, by Fubini’s theorem

By (70), the right-hand side of (72) is finite. Hence, by dominated convergence,

as claimed in (5).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Salminen, P., Stenlund, D. On Occupation Times of One-Dimensional Diffusions. J Theor Probab 34, 975–1011 (2021). https://doi.org/10.1007/s10959-020-00993-3


Keywords

- Additive functional

- Arcsine law

- Green kernel

- Oscillating Brownian motion

- Brownian spider

- Lamperti distribution