Abstract

We introduce a new type of random walk where the definition of edge repellence/reinforcement is very different from the one in the “traditional” reinforced random walk models and investigate its basic properties, such as null versus positive recurrence, transience, as well as the speed. The two basic cases will be dubbed “impatient” and “ageing” random walks.



1 Introduction

1.1 Model

Consider an infinite connected graph G. The set of edges will be denoted by E(G). With some slight abuse of notation, \(v\in G\) will mean that v is a vertex of G. Consider a random walk \(X=\{X_n\}_{n\ge 0}\) on the vertex set of G, with the jumps restricted to E(G).

Assumption 1

(Non-degeneracy) The jumps have strictly positive probabilities for each edge in both directions. In particular, X is an irreducible Markov chain on G.

Fix a vertex \(v_0\in G\) which we call “origin”, and assume that the walk starts at this point, \(X_0=v_0\); for \(G={\mathbb {Z}}^d\), the default will be \(v_0:={\mathbf {0}}\).

Definition 1

(Passage times) A sequence \(s_0,s_1,s_2,\ldots \) of non-negative real numbers will be called a sequence of passage times if \(s_0=1\).

Definition 2

(Walk modified by passage times) We will modify the walk in such a way that if it has crossed an edge e exactly k times before, then it takes \(s_k\) units of time (as opposed to 1) to cross this edge again, in either direction; in particular, it takes one unit of time to cross the edge for the first time.

The two basic cases are as follows:

Definition 3

(Impatient and ageing walks) Let \(s_0,s_1,s_2,\ldots \) be a given sequence of passage times. We will call the corresponding (modified) walk

(i) impatient when \(s_k\downarrow 0\);

(ii) ageing when \(s_k\uparrow \infty \).

Remark 1

If \(s_k\downarrow s_\infty >0\) or \(s_k\uparrow s_\infty <\infty \), then the questions (about recurrence, speed, etc.) we investigate in this paper will be equivalent to the corresponding ones related to the original random walk; hence, these cases are not interesting and are not considered in our paper.

To have a more formal definition, note the main feature of the process we are studying: the system’s “actual” time depends on the local time of the walk.

Definition 4

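(Actual time) Let

$$\begin{aligned} Z(e,m):=\sum _{i=1}^{m} \mathbf {1}\{(X_{i-1},X_i)=e\},\qquad m\ge 0, \end{aligned}$$

be the number of occasions edge \(e\in E(G)\) has been crossed (in either direction) by time m. Then, the actual time after \(m\ge 1\) steps is

$$\begin{aligned} T(m):=\sum _{i=1}^{m} s_{Z((X_{i-1},X_i),\,i-1)}. \end{aligned}$$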

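In fact, it is more convenient to work in continuous time by extending the random function T (time) as the nondecreasing function

$$\begin{aligned} T(t):=\sum _{i=1}^{\lfloor t\rfloor } s_{Z((X_{i-1},X_i),\,i-1)}+(t-\lfloor t\rfloor )\, s_{Z((X_{\lfloor t\rfloor },X_{\lfloor t\rfloor +1}),\,\lfloor t\rfloor )},\qquad t\ge 0, \end{aligned}$$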

with the convention that for \(t<1\), the value of the first sum is zero and then \(T(t)=t s_{Z((X_{0},X_{1}),0)}=t s_0=t.\)
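As an illustration of Definition 4, here is a minimal Python sketch that computes the actual times \(T(0),\ldots ,T(m)\) along a given path and evaluates the continuous extension. It assumes that edges are unoriented (crossings in either direction are counted together) and that T is linear between consecutive steps, as the convention for \(t<1\) indicates; the function names are illustrative only.

```python
from typing import Callable, Hashable, List, Sequence


def actual_times(path: Sequence[Hashable], s: Callable[[int], float]) -> List[float]:
    """Return [T(0), T(1), ..., T(m)] for a path X_0, ..., X_m.

    Step i crosses the unoriented edge {X_{i-1}, X_i}; if that edge has already
    been crossed k = Z(e, i-1) times, the step takes s(k) units of actual time.
    """
    crossings = {}   # edge -> number of crossings so far, i.e. Z(e, i-1)
    times = [0.0]    # T(0) = 0
    for prev, cur in zip(path, path[1:]):
        e = frozenset((prev, cur))          # same key for both directions
        k = crossings.get(e, 0)
        times.append(times[-1] + s(k))
        crossings[e] = k + 1
    return times


def T_continuous(t: float, times: List[float]) -> float:
    """Linear interpolation of T between integer steps (requires 0 <= t < m)."""
    n = int(t)                              # floor(t) for t >= 0
    if t < 0 or n >= len(times) - 1:
        raise ValueError("t must lie in [0, m)")
    return times[n] + (t - n) * (times[n + 1] - times[n])
```

With the impatient choice \(s_k=2^{-k}\), the path 0, 1, 0, 1, 2 gives the actual times 0, 1, 1.5, 1.75, 2.75: the third crossing of the edge between 0 and 1 costs only 1/4, while the first crossing of the edge between 1 and 2 costs a full unit.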

Let the random function \(U:[0,\infty )\rightarrow [0,\infty )\) denote the right continuous generalized inverse of T, that is, \(U(t):=\sup \{s: T(s)\le t\}.\) With the exception of one case, we will work with \(s_k>0\) for all \(k\ge 0\), and then T is strictly increasing in t and \(U=T^{-1}\).
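The generalized inverse U can be evaluated in the same spirit. The sketch below (illustrative names, standard library only) takes the values \(T(0),\ldots ,T(m)\) at integer steps, assumes linear interpolation in between, and returns \(\sup \{s: T(s)\le t\}\). Because it uses the supremum, it also covers zero passage times, where T has flat pieces and U jumps.

```python
from bisect import bisect_right
from typing import List


def U(t: float, times: List[float]) -> float:
    """Right-continuous generalized inverse U(t) = sup{s : T(s) <= t}.

    `times` holds T(0), ..., T(m) at integer steps; T is taken to be linear in
    between. Only values t < T(m), i.e. inside the simulated horizon, are allowed.
    """
    if t < times[0]:
        raise ValueError("t must be non-negative")
    n = bisect_right(times, t) - 1       # largest n with T(n) <= t
    if n == len(times) - 1:
        raise ValueError("t lies beyond the simulated horizon")
    step = times[n + 1] - times[n]       # > 0 because T(n+1) > t >= T(n)
    return n + (t - times[n]) / step
```

For instance, if \(s_k=0\) for \(k\ge 1\), the path 0, 1, 0, 1, 2 has actual times 0, 1, 1, 1, 2, and U(1) = 3: the two repeated crossings take no time, so U skips over them.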

Definition 5

(Definition of \(X^{{\mathrm {imp}}}\) and \(X^{\mathrm {age}}\) via time change) The impatient random walk \(X^{{\mathrm {imp}}}\) (ageing random walk \(X^{\mathrm {age}}\)) is defined via

or, equivalently, by

where U is as above. Thus, \(X^{{\mathrm {imp}}}\) and \(X^{\mathrm {age}}\) move discontinuously according to the actual time.

1.2 Motivation

Imagine that at every edge, one has to perform a certain task. For example, the edge represents a piece of road which, for some reason, is not trivial to drive through. Or the connection between the two vertices is itself a small maze that one has to learn to solve. Then, the more often one has solved it in the past, the quicker it goes, and so the impatient walk models a learning process.

Similarly, one can think of a model where roads or paths that are used often deteriorate with time, so that passing them becomes harder and harder and takes more and more time. The ageing random walk seems to provide a good model for this situation.

While the model we introduce is somewhat reminiscent of the famous edge-reinforced random walk (see [7] for a survey) as well as the “cookie” walk (introduced in [8]), the behaviour in our model differs significantly from these latter ones, since in our case the transition probabilities remain intact, while “reinforcement” affects only passage times.

1.3 Notation

As usual, \({\mathbb {Z}}_+\) will denote the set of non-negative integers and \({\mathbb {Z}}^d\) will denote the d-dimensional integer lattice. We will write \(a_n\asymp b_n\) if \(\lim _{n\rightarrow \infty } a_n/b_n=1\) and \(a_n\sim b_n\) if \(a_n/b_n=O(1)\) and \(b_n/a_n=O(1)\). The letters \({\mathbb {P}}\) and \({{\mathbb {E}}}\) will denote the probability and expectation corresponding to the impatient/ageing random walk, respectively; when the starting point is emphasized, we will write \({\mathbb {P}}^{v_{0}}\) and \({{\mathbb {E}}}^{v_{0}}\).

1.4 Questions We Investigate

One object we would like to study is \({{\widetilde{\tau }}}\), the return time to the origin for the impatient walk, which is defined precisely as follows.

Definition 6

(Actual return time \({{\widetilde{\tau }}}\)) Define \(\tau _n\) and \({{\widetilde{\tau }}}_n\), \(n=0,1,2,\ldots \), by

(with the implicit assumption \(\tau _{-1}(v_0)=0\)), the latter being the total actual time spent by \(X^{{\mathrm {imp}}}\) during the excursion from the origin to the origin starting at \(X^{\mathrm {imp}}(T(\tau _{n-1}(v_0)))=v_0\).

An interesting phenomenon which arises in our model is that, depending on the passage times, \(X^{{\mathrm {imp}}}\) can be positive recurrent even if the original walk was null recurrent.

Remark 2

The distribution of \({{\widetilde{\tau }}}_n\) will in general depend on the history of the process \({\mathcal {F}}_n:=\sigma \{X_0,X_1,\ldots ,X_{\tau _{n-1}}\}\).

Another aspect of interest is the spatial speed (spread) of the process. We now need a definition.

Definition 7

(Infinitely impatient walk) Consider the walk on \(G:={\mathbb {Z}}_+\), and an extreme case, when \(s_k=0\) for all \(k\ge 1\). That is, old edges are passed instantaneously. We call this walk the “infinitely impatient walk” and denote it by \(X^{\mathrm {inf.imp}}\).

Clearly, \(X^{\mathrm {inf.imp}}\) just steps to the right every time unit, because excursions to the left happen in “infinitesimally small” times. Hence, it spreads with constant speed. On the other hand, when \(s_k=1\), for \(k=1,2,\ldots ,\) we get the classical random walk for which the range up to n scales with \(\sqrt{n}\). So, this indicates that the scaling is always between \(\sqrt{n}\) and n, and it depends on the passage times in some way. See also Remark 3 and Theorem 8. This latter theorem will also shed some light on how the classical ArcSine Law is modified in our setting.
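The constant-speed claim for \(X^{\mathrm {inf.imp}}\) can be checked on simulated paths. In the minimal sketch below (a simple random walk on \({\mathbb {Z}}_+\) that is forced to step from 0 to 1; the setup and names are illustrative assumptions), only first crossings cost time, so the actual time elapsed equals the number of distinct edges crossed, which in turn equals the running maximum.

```python
import random
from typing import Tuple


def infinitely_impatient_run(steps: int, seed: int = 0) -> Tuple[float, int, int]:
    """Simple random walk on Z_+ (from 0 it must step to 1) with s_0 = 1, s_k = 0 for k >= 1.

    Returns (actual time, running maximum, number of distinct edges) after `steps` steps.
    """
    rng = random.Random(seed)
    x, maximum, actual_time = 0, 0, 0.0
    crossed = set()                    # edges {y, y+1}, stored by their left endpoint y
    for _ in range(steps):
        y = x + 1 if x == 0 or rng.random() < 0.5 else x - 1
        left = min(x, y)
        if left not in crossed:        # first crossing: costs s_0 = 1
            crossed.add(left)
            actual_time += 1.0
        # repeated crossings cost s_k = 0, so nothing is added
        x = y
        maximum = max(maximum, x)
    return actual_time, maximum, len(crossed)
```

In every run the three returned numbers coincide, so in actual time the walk advances one unit of distance per unit of time, even though the number of steps needed to reach a given maximum grows much faster.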

1.5 Basic Notions and a Useful Lemma

When the passage times are summable, \(X^{{\mathrm {imp}}}\) can only spend a finite amount of time (uniformly bounded by S) on any given edge. Accordingly, we make the following definition.

Definition 8

(Strongly and weakly impatient random walks) The random walk will be called strongly impatient if

(A1) \(1\le S:=\sum _{k=0}^{\infty } s_k <\infty ,\)

and weakly impatient if

(A2) \(S:=\sum _{k=0}^{\infty } s_k =\infty .\)

Remark 3

(Strong/weak impatience and speed) Clearly, when the walk is strongly impatient, it spends at most S time on each edge, and so by time t (we are talking about “actual time” here), it has visited at least \(\lfloor t/S\rfloor \) different edges. This means that the spread (measured by the number of distinct edges crossed by the process up to time \(t>0\)) is linear, with the constant being between 1 / S and 1 (because it has spent at least one unit of time on each edge visited). It would be desirable to figure out how the constant depends on the passage times. A reasonable conjecture is that it is 1 / S. See Sect. 5.
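The two bounds in Remark 3 are easy to test numerically. The sketch below assumes a simple random walk on \({\mathbb {Z}}\) with geometric passage times \(s_k=r^k\), \(0<r<1\), so that \(S=1/(1-r)\); after every step it checks that the number D of distinct edges crossed satisfies \(t/S\le D\le t\), where t is the actual time. The function name and the choice of passage times are illustrative assumptions.

```python
import random


def check_remark3_bounds(steps: int, seed: int = 0, r: float = 0.5) -> bool:
    """Simple random walk on Z with passage times s_k = r**k (strongly impatient, S = 1/(1-r)).

    After every step, check t/S <= D <= t, where t is the actual time so far and
    D the number of distinct edges crossed so far (a small float tolerance is allowed).
    """
    S = 1.0 / (1.0 - r)
    rng = random.Random(seed)
    x, t = 0, 0.0
    counts = {}                          # left endpoint of edge -> crossings so far
    for _ in range(steps):
        y = x + (1 if rng.random() < 0.5 else -1)
        left = min(x, y)
        k = counts.get(left, 0)
        t += r ** k                      # this crossing takes s_k units of time
        counts[left] = k + 1
        x = y
        D = len(counts)
        if not (t / S <= D + 1e-9 and D <= t + 1e-9):
            return False
    return True
```

The upper bound \(D\le t\) uses only \(s_0=1\); the lower bound uses that each edge absorbs less than S units of time in total.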

To break linearity and get closer to order \(\sqrt{t}\), the walk has to be weakly impatient. The question in this case is how the passage times determine the order between linear and square root.

Next, regarding recurrence, we make the following definitions.

Definition 9

(Recurrence and positive recurrence) We will call the impatient random walk

(B1) recurrent if \({{\widetilde{\tau }}}_n(v_0)<\infty \), \({\mathbb {P}}^{v_{0}}\)-a.s. for all \(n\ge 0\) and all \(v_0\in G\);

(B2) transient if it is not recurrent;

(C1) positive recurrent if \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_n(v_0)<\infty \) for all \(n\ge 0\) and all \(v_0\in G\);

(C2) null recurrent if it is recurrent and \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_n(v_0)=\infty \) for all \(n\ge 0\) and all \(v_0\in G\).

Clearly, if \({{\widetilde{\tau }}}_n(v_0)<\infty \) a.s. for some \(n\ge 0,v_0\in G\), then X must be recurrent, in which case \({{\widetilde{\tau }}}_n(v_0)<\infty \) a.s. for all \(n\ge 0\) and \(v_0\in G\).

A similar statement holds for positive recurrence.

Theorem 1

(Process property) Recall Assumption 1 and assume also that X on G is recurrent. Then, \(X^{\mathrm {imp}}\) is either positive recurrent or null recurrent. In other words, the properties in (C1–C2) do not depend on the choice of n or \(v_0\).

Proof

We prove this statement by verifying that:

(i) for given \(v_0\in G\), the property does not depend on \(n\ge 0\);

(ii) for \(n=0\), the property does not depend on choice of \(v_0\in G\).

(i) Assume first that \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_0(v_0)<\infty \). Then, because of the monotonicity of s, \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_n(v_0)<\infty \) for \(n>0\), as well.

Now assume that \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_0(v_0)=\infty \). Let \(e=(v_0,v_1)\) be an outgoing edge from \(v_0\). If \(n\ge 1\), then (because of the non-degeneracy of X on G) the event

$$\begin{aligned} A_n:=\{X_0=v_0,\quad X_1=v_1,\quad X_2=v_0,\quad X_3=v_1,\ldots , X_{2n-1}=v_1,\quad X_{2n}=v_0\} \end{aligned}$$(i.e. the first n excursions consist only of traversing e back and forth, so that this edge has been crossed 2n times) has a positive probability.

On \(A_n\), the next passage time on e has been set to \(s_{2n}\), while no other edge has been crossed. It is enough to show that

$$\begin{aligned} {{\mathbb {E}}}({{\widetilde{\tau }}}_n(v_0)\mid A_n)=\infty . \end{aligned}\tag{1}$$

Now, (1) would certainly be true without the impatience mechanism of the model, since we assumed that \({{\mathbb {E}}}^{v_{0}} {{\widetilde{\tau }}}_0=\infty \); our task is therefore to prove that this mechanism does not change the validity of (1).

To this end, let \(\eta \) denote the number of crossings of e in the \((n+1)\)st excursion starting at \(v_0\). Since every crossing of e involves \(v_0\), which is visited only at the start and at the end of the excursion, either \(\eta =0\) (when both the initial and the last edges are different from e), or \(\eta =1\) (when the final edge is e and the initial edge is not, or vice versa), or \(\eta =2\) (when both the initial and the last edges are e). Recall the notion of “actual time” from Definition 4. If \(p_j:={\mathbb {P}}(\eta =j)\) for \(j=0,1,2\), then the expected actual time spent on e during the excursion is \(p_1 +p_2 (1+s_1)\) when the initial passage time of e is \(s_0=1\), whereas it is \(p_1 s_{2n}+p_2(s_{2n}+s_{2n+1})\) when the initial passage time is \(s_{2n}\). Both quantities are finite; therefore, resetting the initial passage time of e from \(s_0\) to \(s_{2n}\) does not change the finiteness of the expected actual return time.

(ii) Assume now that \(a:={{\mathbb {E}}}^{v_0} {{\widetilde{\tau }}}_0(v_0)<\infty \). We will show that for any \(v_1\ne v_0\) we also have \({{\mathbb {E}}}^{v_1} {{\widetilde{\tau }}}_0(v_1)<\infty \). Since G is connected, we may (and will) assume without loss of generality that \(v_1\) and \(v_0\) are neighbours in G.

Let \(p_{0\rightarrow 1}:={\mathbb {P}}^{v_{0}}(X_1=v_1)>0\). Note that \({{\mathbb {E}}}(\kappa \mid X_0=v_0,X_1=v_1)<\infty ,\) where

$$\begin{aligned} \kappa :=T(\min \{k\ge 1:\ X_k=v_0\}), \end{aligned}$$

since

$$\begin{aligned} a\ge p_{0\rightarrow 1}\cdot {{\mathbb {E}}}(\kappa \mid X_0=v_0,X_1=v_1). \end{aligned}$$

Once we have this, using an argument very similar to the one in part (i), one can see that \({{\mathbb {E}}}^{v_1} (\kappa )<\infty \) also holds.

Let \(X_0=v_1\). The argument below even shows that the expected time it takes to return to \(v_1\) after visiting \(v_0\) en route is finite. Note that, because of the monotonicity of s, the expected actual time for \(X^{{\mathrm {imp}}}\) to return to \(v_0\) is at most a; the same is true for the consecutive excursions from \(v_0\) to \(v_0\). Moreover, during each of these excursions the walk X visits \(v_1\) with probability at least \(p_{0\rightarrow 1}\). Therefore, if \(\xi \) is an auxiliary geometric random variable with range \(\{0,1,2,\ldots \}\) and parameter \(p_{0\rightarrow 1}\) under the law \({\mathbf {P}}\), then, using that \({{\mathbb {E}}}^{v_{1}} \kappa <\infty \), we have

completing the proof. \(\square \)

Given that the original walk is recurrent, so are the impatient and ageing random walks, since \(T(n)<\infty \), whenever \(n<\infty \). The question of positive versus null recurrence is not so trivial though. The statement below follows from the obvious inequality \({{\widetilde{\tau }}}\le \tau \) for \(X^{{\mathrm {imp}}}\).

Remark 4

If the original walk X is positive recurrent, then \(X^{{\mathrm {imp}}}\) is also positive recurrent.

Finally, define \(M:=\mathsf{card}\{(X_{i-1},X_i),\ i=1,\ldots ,\tau _1(v_0)\}\), that is, M is the number of distinct edges crossed by X between consecutive visits to the origin. The following statement involving M will be useful later.
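For concreteness, here is a small helper (a hypothetical name, not from the paper) that computes M from a finite path: it counts the distinct unoriented edges crossed between leaving the starting vertex and the first return to it. It works for any hashable vertex labels, e.g. integers on \({\mathbb {Z}}\) or tuples on \({\mathbb {Z}}^2\).

```python
from typing import Hashable, Sequence


def excursion_edge_count(path: Sequence[Hashable]) -> int:
    """Number M of distinct unoriented edges crossed before the first return to path[0].

    Raises ValueError if the path never returns to its starting vertex.
    """
    origin = path[0]
    edges = set()
    for i in range(1, len(path)):
        edges.add(frozenset((path[i - 1], path[i])))   # same edge in both directions
        if path[i] == origin:
            return len(edges)
    raise ValueError("path does not return to its starting vertex")
```

For example, the excursion 0, 1, 2, 1, 0 on \({\mathbb {Z}}\) has four steps but only M = 2 distinct edges.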

Lemma 1

(Size of excursion area) The impatient walk is

(i) positive recurrent, provided that the walk is strongly impatient and \({{\mathbb {E}}}M<\infty \);

(ii) null recurrent, provided that \({{\mathbb {E}}}M=\infty \).

Proof

Let \(v_0\in G\). In the strongly impatient case,

and so \({{\mathbb {E}}}^{v_{0}} M\le {{\mathbb {E}}}^{v_{0}}\, T(\tau _1(v_0))\le S\,{{\mathbb {E}}}^{v_{0}} M.\) The lower estimate \(M\le T(\tau _1(v_0))\) is, however, always true, whether the impatience is strong or not, because each of the M distinct edges takes \(s_0=1\) unit of time to be crossed for the first time. Hence, the result follows from these observations and Theorem 1. \(\square \)
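The two-sided estimate in the proof can be observed on simulated excursions. The sketch below is an illustrative assumption rather than part of the paper: it runs excursions of the simple random walk on \({\mathbb {Z}}\) with \(s_k=r^k\) (so \(S=1/(1-r)\)) and returns M together with the actual return time; excursions that are not finished within a step cap are discarded, since their length has infinite mean.

```python
import random
from typing import Optional, Tuple


def first_excursion(rng: random.Random, r: float, max_steps: int) -> Optional[Tuple[int, float]]:
    """One excursion from 0 of simple random walk on Z with passage times s_k = r**k.

    Returns (M, actual return time), where M is the number of distinct edges crossed,
    or None if the walk has not returned to 0 within max_steps steps.
    """
    x, t = 0, 0.0
    counts = {}                        # left endpoint of edge -> crossings so far
    for _ in range(max_steps):
        y = x + (1 if rng.random() < 0.5 else -1)
        left = min(x, y)
        k = counts.get(left, 0)
        t += r ** k                    # crossing number k+1 of this edge costs s_k
        counts[left] = k + 1
        x = y
        if x == 0:
            return len(counts), t
    return None
```

Every completed excursion satisfies \(M\le t\le SM\) (here \(S=2\) for \(r=1/2\)), which is the pathwise form of the inequality used in the proof.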

Corollary 1

(Switching from null to positive recurrence) If the original walk X is null recurrent but \({{\mathbb {E}}}M<\infty \), then strong impatience turns null recurrence into positive recurrence (for \(X^{{\mathrm {imp}}}\)).

This is the case, for example, for the random walks in the second part of Theorem 4 below.

2 Impatient Simple Random Walk on \({\mathbb {Z}}^d\), \(d=1,2\), and Generalization

It turns out that for \(d=1,2\), impatience cannot change the null-recurrent character of the simple random walk.

Theorem 2

The impatient simple random walks on \({\mathbb {Z}}^1\) and on \({\mathbb {Z}}^2\) are null recurrent for any sequence of passage times.

Proof

Let M be as before.

- \(\underline{d=1:}\) We may assume that \(v_0={\mathbf {0}}\) and that the first step of the walk is to the right, \(X_1=1\). The probability to reach vertex \(m\ge 1\) before returning to the origin is 1 / m, hence \({\mathbb {P}}(M\ge m)=1/m\), \(m=1,2,\ldots \). (Note that the number of distinct edges visited by the one-dimensional walk coincides with its maximum during the excursion.) Consequently, \({{\mathbb {E}}}M=\infty \) and by Lemma 1 the impatient random walk is null recurrent.

- \(\underline{d=2:}\) One can easily show (e.g. by coupling) that the quantity M for \(d=2\) is stochastically larger than for \(d=1\), so \({{\mathbb {E}}}M=\infty \) here and we can again use Lemma 1. \(\square \)
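A quick Monte Carlo check of \({\mathbb {P}}(M\ge m)=1/m\) in the case \(d=1\) (a sketch with illustrative parameters): since M is the maximum of the excursion, it suffices to run the walk from \(X_1=1\) until it hits 0 or a cap \(m_{\max }\). Summing the tail probabilities gives the partial sums \(\sum _{m\le m_{\max }}1/m\) of the harmonic series, which diverge, in line with \({{\mathbb {E}}}M=\infty \).

```python
import random
from typing import List


def tail_estimates(m_max: int, trials: int, seed: int = 0) -> List[float]:
    """Monte Carlo estimates of P(M >= m), m = 1, ..., m_max, for SRW excursions on Z with X_1 = 1.

    M is the maximum of the excursion, so the walk is run from 1 until it hits
    0 or m_max; values of M above m_max are not needed.
    """
    rng = random.Random(seed)
    hits = [0] * (m_max + 1)            # hits[m] = number of runs with M >= m
    for _ in range(trials):
        x = top = 1
        while 0 < x < m_max:
            x += 1 if rng.random() < 0.5 else -1
            top = max(top, x)
        for m in range(1, top + 1):
            hits[m] += 1
    return [hits[m] / trials for m in range(1, m_max + 1)]
```

With 20000 runs the estimates agree with \(1/m\) to within about 0.01 for \(m\le 10\).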

Remark 5

As the following example shows, one can easily construct a null recurrent random walk on \({\mathbb {Z}}^2\) (and similarly on \({\mathbb {Z}}^d\), \(d\ge 3\)) such that the strongly impatient random walk with the same transition probabilities has \({{\mathbb {E}}}M<\infty \) and hence is positive recurrent.

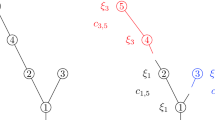

Indeed, consider the following random walk on \({\mathbb {Z}}^2\). Let \(|(x,y)|:= \max (|x|,|y|)\) be the “norm” of a vertex, and let the orbits be \(O_k=\{(x,y): |(x,y)|=k\}\), \(k=1,2,3,\ldots \); that is, \(O_k\) is the boundary of the square with sides of length 2k parallel to the axes and centre at the origin (see the picture, where each orbit has a distinctive colour).

Assume that the transition probabilities are the following: once the walker is on \(O_k\), it goes to the previous orbit \(O_{k-1}\) with probability \(\frac{2}{3} \cdot 2^{-k}\), or to the next orbit \(O_{k+1}\) with probability \(\frac{1}{3}\cdot 2^{-k}\), and with the remaining probability \(1-2^{-k}\), it goes clockwise staying on \(O_k\). (This needs to be adjusted somewhat at the four corners of \(O_k\).)

If we consider the embedded process, defined as the norm of the point recorded each time it changes, then it is a positive recurrent random walk that moves towards the origin with probability 2 / 3 and away from it with probability 1 / 3. Also, once the walker reaches orbit \(O_k\), it stays there for a geometric number of steps, \(2^k\) steps on average.

The probability to reach orbit \(O_k\) before returning to the origin is of order \(2^{-k}\). Therefore, the average number of steps the walk makes is of order \( \sum _{k=1}^{\infty } 2^{-k} \cdot 2^k=\infty . \) At the same time, the average number of distinct vertices the walker visits before returning to the origin is bounded by \( \sum _{k=1}^{\infty } 2^{-k} \cdot 8k < \infty \) since each orbit has 8k distinct vertices. Consequently, the expected number of distinct vertices (and edges) visited by the walk is finite.
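The counting in this example can be checked directly: each orbit \(O_k\) has \((2k+1)^2-(2k-1)^2=8k\) vertices, and the partial sums of \(\sum _k 2^{-k}\cdot 8k\) equal \(16-8(K+2)2^{-K}\), so they converge to 16, whereas \(\sum _{k\le K}2^{-k}\cdot 2^k=K\) diverges. A short sketch in exact arithmetic (function names are illustrative):

```python
from fractions import Fraction
from typing import List, Tuple


def orbit(k: int) -> List[Tuple[int, int]]:
    """Vertices of O_k = {(x, y) in Z^2 : max(|x|, |y|) = k}."""
    return [(x, y) for x in range(-k, k + 1) for y in range(-k, k + 1)
            if max(abs(x), abs(y)) == k]


def vertex_bound_partial_sum(K: int) -> Fraction:
    """Sum over k = 1..K of 2^{-k} |O_k| (bound on the expected number of distinct vertices)."""
    return sum((Fraction(len(orbit(k)), 2 ** k) for k in range(1, K + 1)), Fraction(0))


def step_count_partial_sum(K: int) -> Fraction:
    """Sum over k = 1..K of 2^{-k} 2^k, the order of the expected number of steps."""
    return sum((Fraction(2 ** k, 2 ** k) for k in range(1, K + 1)), Fraction(0))
```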

A generalization of the one-dimensional case is as follows. Let X be a nearest-neighbour walk with the outward drift \(b(x)\in (-1,1)\) at vertex \(x\in {\mathbb {Z}}^1\), that is,

and for \(m\ge 1\), define

so that \(I^r(b),I^l(b)\in [0,\infty ].\)

Theorem 3

(Size of drift) Assume that X on \({\mathbb {Z}}^1\) is null recurrent.

(a) If either \(I^r(b)=\infty \) or \(I^l(b)=\infty \), then \(X^{\mathrm {imp}}\) on \({\mathbb {Z}}^1\) is null recurrent as well, for any sequence of passage times.

(b) If \(I^r(b),I^l(b)<\infty \), then \(X^{\mathrm {imp}}\) on \({\mathbb {Z}}^1\) is positive recurrent whenever the walk is strongly impatient.

Proof

Clearly, positive recurrence holds exactly when the expected return time is finite both when conditioned on \(X_1=1\) and when conditioned on \(X_1=-\,1\). Assuming, for example, that \(X_1=1\), we now link the finiteness of \(I^r(b)\) with the finiteness of the expected return time; an analogous argument, concerning the finiteness of \(I^l(b)\), holds for the case \(X_1=-\,1\), so we omit it. Thus, suppose \(X_1=1\) from now on. By Lemma 1, it is enough to show that

in the sense that both sides are either finite and equal, or they are both infinite.

To compute \({{\mathbb {E}}}M\) for the nearest-neighbour one-dimensional random walk on \({\mathbb {Z}}\), note that now M is the rightmost lattice point reached in an excursion, and use the electrical network representation (see e.g. [3]) with resistors \(R_x\) located between x and \(x+1\) satisfying

Without loss of generality, we may set \(R_0:=1\). Therefore,

Consequently,

yielding (2). \(\square \)
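The electrical-network computation can be reproduced in exact arithmetic. The sketch below parameterises the walk on \({\mathbb {Z}}_+\) directly by its right-step probabilities \(p(x)\) (an illustrative choice; the relation to \(b(x)\) is not used), builds resistances with \(R_x/R_{x-1}=q(x)/p(x)\) and \(R_0=1\), and computes \({\mathbb {P}}(M\ge m\mid X_1=1)=R_0/\sum _{i=0}^{m-1}R_i\). As a cross-check, the same probability is also obtained by solving the harmonic equations directly. Summing the tails over m gives partial sums of \({{\mathbb {E}}}(M\mid X_1=1)\).

```python
from fractions import Fraction
from typing import Callable, List

Prob = Callable[[int], Fraction]


def resistances(p: Prob, m: int) -> List[Fraction]:
    """R_0, ..., R_{m-1} for the edges (x, x+1) on Z_+, normalised by R_0 = 1,
    using R_x / R_{x-1} = q(x) / p(x) with p(x) = P(x -> x+1) and q(x) = 1 - p(x)."""
    R = [Fraction(1)]
    for x in range(1, m):
        R.append(R[-1] * (1 - p(x)) / p(x))
    return R


def tail_via_network(p: Prob, m: int) -> Fraction:
    """P(M >= m | X_1 = 1) = P_1(reach m before 0) = R_0 / (R_0 + ... + R_{m-1})."""
    R = resistances(p, m)
    return R[0] / sum(R)


def tail_via_shooting(p: Prob, m: int) -> Fraction:
    """The same probability from h(x) = p(x) h(x+1) + q(x) h(x-1), h(0) = 0, h(m) = 1.

    Start from the provisional values h(0) = 0, h(1) = 1, propagate the recursion
    to x = m and rescale: the true solution is h / h(m), so h(1) = 1 / h(m).
    """
    h = [Fraction(0), Fraction(1)]
    for x in range(1, m):
        h.append((h[x] - (1 - p(x)) * h[x - 1]) / p(x))
    return h[1] / h[m]
```

For the symmetric walk all resistances equal 1 and the tail is \(1/m\), recovering the case \(d=1\) of Theorem 2.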

3 Lamperti and Lamperti-Type Walks

In Sect. 2, we have seen that for the symmetric random walk in dimensions one and two, impatience cannot turn null recurrence into positive recurrence. We are, therefore, going to consider certain random walks which are “just barely null recurrent”, and show that in those cases impatience can actually make them positive recurrent. Our models will be related to the “Lamperti walk” (see [6]).

We first need a general result, presented in the next subsection.

3.1 A General Formula for Nearest-Neighbour Walk on \({\mathbb {Z}}_+\)

Let \(G:={\mathbb {Z}}_+\) and consider a nearest-neighbour ageing or impatient random walk \(X^*\) (i.e. \(X^*=X^{{\mathrm {imp}}}\) or \(X^*=X^{{\mathrm {age}}}\)), with the passage times \(\{s_i\}\), where the underlying random walk X is positive recurrent. For \(m\ge 2\), let (using \({\mathbb {P}}\) for X as well)

Lemma 2

For \(X^*\), the expected actual length (in time) of the first excursion from 0, denoted by \({{\widetilde{\tau }}}=\widetilde{\tau }_1(0)\) is

Proof

Since every rightward crossing of the edge \((m,m+1)\) must be followed by a leftward crossing of the same edge before the walk reaches m again, it follows that

as claimed. \(\square \)

In fact, using the method of electric networks as in the proof of Theorem 3 (see again [3]), we obtain the following useful formulae:

where \(R_i, i\ge 1\) are the resistors of the electrical network corresponding to our random walk.

3.2 Lamperti Walk: \(b(x)\asymp c/x\) on \({\mathbb {Z}}_+\)

As an application of Lemma 2, we obtain a theorem describing a case in which short enough passage times can turn null recurrence into positive recurrence. First, however, we need the following statement (see e.g. Proposition 7.1 (i)–(iii) in [2]).

Proposition 1

Let X be a random walk on \({\mathbb {Z}}_+\), with drift \(b(x)\asymp c/x\). Then, X is positive recurrent if \(c<-1/2\), null recurrent for \(c\in [-1/2,1/2]\), and transient for \(c>1/2\).

Theorem 4

Let X be a recurrent random walk on \({\mathbb {Z}}_+\), with drift \(b(x)\asymp c/x\) (recurrence means \(c\le 1/2\)). Furthermore, let the passage times satisfy \(s_j\asymp j^{-\alpha }\), \(\alpha >0\). Then, \(X^{{\mathrm {imp}}}\) is positive recurrent if and only if \(c<\min \left\{ 0,\frac{\alpha -1}{2}\right\} \).

In particular, when \(c\in [-1/2,0)\), X is null recurrent but \(X^{{\mathrm {imp}}}\) is positive recurrent whenever \(\alpha >1+2c\) (for example, when \(\alpha >1\), i.e. the impatience is strong).

Remark 6

In fact, \(X^{{\mathrm {imp}}}\) is positive recurrent for any strongly impatient walk when \(c<0\) (the proof is similar).

The diagram below summarizes the results in Theorem 4.

Proof of Theorem 4

As before, the resistors satisfy

Using the Taylor approximation of \(\log (1+z)\) for small |z| along with the integral approximation of monotone series, it is an easy exercise to show that, defining \(R_0:=1\), as \(m\rightarrow \infty \),

provided \(c\ne \frac{1}{2}\) (when \(c=\frac{1}{2}\), one has \(\sum _{i=0}^m R_i\sim \log m\); this case will be treated at the end of the proof). Now, (4) implies that, as \(m\rightarrow \infty \),

Similarly, using (5),

By (3) and the monotonicity of \(s_j\), in order to establish whether \(X^{{\mathrm {imp}}}\) is positive recurrent or not, we thus have to analyse the finiteness of

Clearly, if \(\mathrm {const}\) denotes a constant in (0, m), then

and since \((1-\mathrm {const}/m)^{m+1}\uparrow \hbox {e}^{-\mathrm {const}}\) as \(m\rightarrow \infty \), one has

Since \(c_1/m\le a_m\le c_2/m\) for some \(0<c_1<c_2\), it follows that

with some \(c_3>0\).

We now show that on the other hand

holds with some other constant \(c_4>0\) (possibly depending on \(\alpha \)). It will then follow that

proving the statement of the theorem. To verify (6), denote \(\gamma :=\hbox {e}^{-c_1}\in (0,1)\); one has then

- if \(\alpha >1\), then \(\mathsf {SUM}(m)\le \sum _{j=1}^{\infty } j^{-\alpha }<\infty \);

- if \(\alpha =1\), then, using \((1-c_1/m)^{j+1}\le (1-c_1/m)^{km}\le \hbox {e}^{-c_1 k}=\gamma ^k\) for \(j>km\) and a Riemann-sum approximation for the function \(f(x)=1/x\),

$$\begin{aligned} \mathsf {SUM}(m)&\le \sum _{j=1}^{m} \frac{1}{j} + \sum _{j=m+1}^{\infty } \left( 1-\frac{c_1}{m}\right) ^{j+1} \frac{1}{j} \le \sum _{j=1}^{m} \frac{1}{j} + \sum _{k=1}^{\infty } \gamma ^{k} \sum _{j=km+1}^{(k+1)m} \frac{1}{j}\\&\le 1+\log m+\sum _{k=1}^{\infty } \gamma ^{k} \int _{km}^{(k+1)m} \frac{\mathrm {d}x}{x}= 1+\log m + \sum _{k=1}^{\infty } \log (1+1/k)\gamma ^{k} \sim \log m = h_1(m); \end{aligned}$$

- if \(\alpha <1\), then, splitting off the first m terms and using \(\sum _{j=1}^{m} j^{-\alpha }\le \int _0^m x^{-\alpha }\,\mathrm {d}x=\frac{m^{1-\alpha }}{1-\alpha }\),

$$\begin{aligned} \mathsf {SUM}(m)&\le \sum _{j=1}^{\infty } \left( 1-\frac{c_1}{m}\right) ^{j+1} \frac{1}{j^{\alpha }} \le \sum _{j=1}^{m} \frac{1}{j^{\alpha }}+\sum _{k=1}^{\infty } \gamma ^{k} \sum _{j=km+1}^{(k+1)m} \frac{1}{j^{\alpha }} \le \frac{m^{1-\alpha }}{1-\alpha }+\sum _{k=1}^{\infty } \gamma ^k \frac{m}{(km)^{\alpha }}\\&= m^{1-\alpha } \left( \frac{1}{1-\alpha }+\sum _{k=1}^{\infty } \frac{\gamma ^k}{k^\alpha }\right) \sim m^{1-\alpha }=h_{\alpha }(m), \end{aligned}$$

and thus (6) has been established.
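The three regimes in (6) can also be seen numerically. The sketch below assumes that \(\mathsf {SUM}(m)\) is the series \(\sum _{j\ge 1}(1-c_1/m)^{j+1}j^{-\alpha }\) estimated above, takes \(c_1=1\) for illustration, and truncates the series once the geometric factor drops below \(\hbox {e}^{-40}\). The ratio \(\mathsf {SUM}(m)/h_\alpha (m)\) then stays in a bounded range as m grows (with \(h_\alpha \equiv 1\) for \(\alpha >1\)).

```python
import math


def series_sum(m: int, alpha: float, c1: float = 1.0, cutoff: float = 40.0) -> float:
    """Evaluate sum_{j>=1} (1 - c1/m)^(j+1) * j^(-alpha) numerically (requires m > c1).

    The geometric factor is below exp(-cutoff) once j exceeds cutoff*m/c1, so the
    series is truncated there.
    """
    x = 1.0 - c1 / m
    J = int(cutoff * m / c1) + 1
    total, factor = 0.0, x             # factor becomes x^(j+1) after the update in the loop
    for j in range(1, J + 1):
        factor *= x
        total += factor * j ** (-alpha)
    return total


def h(m: int, alpha: float) -> float:
    """Comparison function: 1 for alpha > 1, log m for alpha = 1, m^(1 - alpha) for alpha < 1."""
    if alpha > 1:
        return 1.0
    if alpha == 1:
        return math.log(m)
    return m ** (1 - alpha)
```

For \(\alpha =1\) the series is exactly \((1-c_1/m)\log (m/c_1)\), so the ratio to \(\log m\) tends to 1; for \(\alpha =1/2\) the ratio settles near \(\sqrt{\pi }\).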

It remains to consider the case \(c=\frac{1}{2}\). Now, we have \(p_m\sim \frac{1}{\log m}\), while \(\mathsf {SUM}(m)\) is bounded away from zero, so \({{\mathbb {E}}}{{\tilde{\tau }}} \sim \sum _{m} p_m \mathsf {SUM}(m)\ge \mathrm {const}\cdot \sum _m \frac{1}{\log m}=\infty \) for any value of \(\alpha \ge 0\), ruling out positive recurrence for \(X^{{\mathrm {imp}}}\). Since X is recurrent, so is \(X^{{\mathrm {imp}}}\), and thus \(X^{{\mathrm {imp}}}\) is in fact null recurrent in this case. \(\square \)

3.3 Lamperti-Type Walk on \({\mathbb {Z}}_{+}\) with Very Small Inward Drift

Consider a random walk on the non-negative integers and assume now that the drift is much weaker than for the original Lamperti case, that is, that for large x,

where \(D<0\). While the corresponding walk X is obviously null recurrent for any \(D\in {\mathbb {R}}\) (compare it with the classical Lamperti case), the following result shows that there is a phase transition for the behaviour of the impatient walk \(X^{{\mathrm {imp}}}\).

Theorem 5

\(X^{{\mathrm {imp}}}\) is

(i) null recurrent for any \(D\ge -1/2\) and any sequence of passage times;

(ii) positive recurrent for any \(D<-1/2\), provided it is strongly impatient.

Proof

We use Theorem 3. We have

hence \(I^r(b)<\infty \) if and only if \(D<-1/2\). \(\square \)

4 Expectation Calculations for Hitting Times and Positive Recurrence in One-Dimension: The Passage Generating Function

In this section, we perform some calculations concerning one-dimensional hitting times and analyse one-sided positive/null recurrence for the walk.

We start with notation. We assume \(d=1\), and \(\sigma _n, n\in {\mathbb {Z}}\) will denote the hitting time by \(X^{\mathrm {imp}}\) of n. Then, \(T_n=\sigma _{-n}\wedge \sigma _n\) is the exit time from \((-\,n,n)\) (i.e. the hitting time of \(\{-n,n\}\)), when starting at \(-n\le x\le n\). Introduce the shorthand \(s_j^*:=s_{2j-2}+s_{2j-1}\) for \(j\ge 1,\) and note that \(\sum s_j^*=\sum s_j\in [1,\infty ]\). Let \(u\in {\mathbb {Z}}^1\) and \(v=u+h,\ h\ge 1\). With n, u and h (and thus v too) fixed, define the following quantities:

1. For two-sided hitting times, define

$$\begin{aligned} \rho _m^{(x)}:={\mathbb {P}}_{x}(X\ \text {reaches}\ m\ \text {before}\ \pm n)={\mathbb {P}}_{x}(T_n>\tau _{m}), \end{aligned}$$

for \(-n\le x,m\le n\).

2. For one-sided hitting times, define

$$\begin{aligned} r_m^{(x)}:={\mathbb {P}}_{u+x}(X\ \text {reaches}\ u+m\ \text {before}\ v)={\mathbb {P}}_{u+x}(\tau _v>\tau _{u+m}), \end{aligned}$$

for all \(x,m\in {\mathbb {Z}}^1\), and note that \(r_m^{(x)}=0\) for \(m\ge h\) and \(r_m^{(m)}=1\).

Remark 7

In a concrete situation, the quantities \(\rho _m^{(x)}\) and \(r_m^{(x)}\) are, of course, computable as ratios involving resistors.

4.1 Passage Generating Function and Passage Radius

The following notion will be useful for impatient as well as ageing walks.

Definition 10

(Passage generating function and passage radius) The power series

will be called the passage generating function. In particular, \(\phi (1)=\sum s_j^*=\sum s_j\in [1,\infty ]\). The corresponding radius of convergence will be called the passage radius for \(\phi \):

Of course, for the original motion X (\(s_j\equiv 1\)), one has

Remark 8

(Strong impatience, super-ageing and \(R^{\mathrm {pass}}\)) Note the following.

(i) It is clear that strong impatience implies that \(R^{\mathrm {pass}}\ge 1\), while weak impatience implies that \(R^{\mathrm {pass}}= 1.\)

(ii) In the strongly impatient case, we can normalize the passage times so that \(\sum s_j^*=\sum s_j=1\) (at the expense of speeding up time by a constant factor), and then \(\phi \) is actually a probability generating function. This has the practical advantage that we can use well-known formulae for \(\phi \) in the strongly impatient case.

(iii) In the ageing case, it is possible that \(R^{\mathrm {pass}}=0\) (“super-ageing”).
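A rough numerical companion to Definition 10 and Remark 8: by the Cauchy-Hadamard formula, \(R^{\mathrm {pass}}=1/\limsup _j (s_j^*)^{1/j}\), which is unaffected by any fixed shift of the powers of t in \(\phi \). The sketch below (illustrative names; a single large index j stands in for the limsup, so it is a heuristic, not a proof) works with logarithms of the passage times so that fast-ageing sequences do not overflow.

```python
import math
from typing import Callable


def log_s_star(log_s: Callable[[int], float], j: int) -> float:
    """log of s*_j = s_{2j-2} + s_{2j-1}, computed stably from log s_k (log-sum-exp)."""
    a, b = log_s(2 * j - 2), log_s(2 * j - 1)
    top = max(a, b)
    return top + math.log(math.exp(a - top) + math.exp(b - top))


def passage_radius_estimate(log_s: Callable[[int], float], j: int = 1000) -> float:
    """Heuristic for R^pass = 1 / limsup_j (s*_j)^(1/j), evaluated at a single large j."""
    return math.exp(-log_s_star(log_s, j) / j)
```

With \(s_k\equiv 1\) (the original walk) and with slow ageing \(s_k=k+1\) the estimate is close to 1; the strongly impatient choice \(s_k=2^{-k}\) gives about 4, consistent with Remark 8 (i); the super-ageing choice \(s_k=\hbox {e}^{k^2}\) gives essentially 0.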

4.2 “Positive Recurrence to the Right” (PRR) for Impatient Walk

Let X be a recurrent walk on \({\mathbb {Z}}^1\).

Definition 11

(One-sided positive recurrence) We say that \(X^{\mathrm {imp}}\) is positive recurrent to the right if \({{\mathbb {E}}}_u \sigma _v<\infty \) for \(u<v\) and null recurrent to the right if \({{\mathbb {E}}}_u \sigma _v=\infty \) for \(u<v\). We will show below (see Remark 9) that this definition does not actually depend on choice of u or v.

The definition for the positive/null recurrence to the left (when \(u>v\)) is analogous.

Our fundamental result about one-sided positive recurrence is as follows.

Theorem 6

(Criterion for PRR) \(X^{\mathrm {imp}}\) is positive recurrent to the right if and only if

Proof

Let \(u<v\in {\mathbb {Z}}^1\) and define

- \((I):={{\mathbb {E}}}_u\)(actual time spent between u and v until hitting v);

- \(({ II}):={{\mathbb {E}}}_u\)(actual time spent between \(-\infty \) and u before hitting v).

If \(v-u=h\), then we can calculate (I) by considering the expected local actual times on the edges between u and v as follows.

In the impatient regime, \(s_j\le C\) for \(j\ge 1\) with some \(C>0\), and as \(r^{(m)}_{m-1}<1\) for all m, the last term is bounded by

Consequently, \((I)<\infty \).

Similarly, we can calculate \(({ II})\) by considering the expected local actual times on the edges which are “below” u.

Hence,

Clearly, \({{\mathbb {E}}}_u \sigma _v =(I)+({ II}),\) and, since \((I)<\infty \), we are done. \(\square \)

Remark 9

(Consistency of the definition) It is easy to see that the condition (7) does not depend on the choice of u or v (for non-degenerate X). For example, fix u and increase h: as the proof above shows, (I) is always finite, so only \(({ II})\) can affect the finiteness of \({{\mathbb {E}}}_u \sigma _v\).

A similar calculation shows that the condition is invariant under fixing v and changing u.

We can refine Theorem 6 as follows.

Corollary 2

Consider the following, simpler condition, involving the original walk only:

(a) Then (8) is a necessary condition for the PRR property for \(X^{\mathrm {imp}}\), no matter what the passage times are, as long as we rule out that \(\lim _{m\rightarrow -\infty }r_{m-1}^{(m)}=0\).

(b) If either the impatience is strong or \(r:=\sup _{m\in {\mathbb {Z}}}r_{m-1}^{(m)}<1\), then (8) is a sufficient condition for PRR for \(X^{\mathrm {imp}}\).

Proof

(a) This follows from Theorem 6 and the fact that \(\phi (t)\) is bounded away from zero if \(t>\epsilon >0\).

(b) For strong impatience, \(\phi \left( r_{m-1}^{(m)}\right) \le \phi (1)<\infty \) for all m, giving the assertion. If \(r<1\), then \(\phi \left( r_{m-1}^{(m)}\right) \le \phi (r)<\infty \) for all m, and we are done again.

\(\square \)

Remark 10

(Original process) Taking \(s_k=1, k\ge 1\), we obtain

as the criterion for the positive recurrence of X, in which case the positive recurrence of \(X^{\mathrm {imp}}\) follows immediately. So, to avoid this trivial situation, we can always assume that

Example 1

(SRW) For simple random walk, no passage time sequence can lead to positive recurrence to the right. Indeed, for \(m<0\) we have \(r_m^{(0)}=\frac{h}{h-m}\) and \(r_{m-1}^{(m)}=\frac{h-m}{h-m+1}\). Thus, for every \(j\ge 1\),

and so the quantity in (7) after change in the order of summation equals

More generally, we have

Corollary 3

If there exists a \(j\ge 1\) for which

then \(X^{\mathrm {imp}}\) is null recurrent to the right.
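The gambler's-ruin values used in Example 1 can be double-checked in exact arithmetic. The sketch below (a hypothetical helper, standard library only) solves the harmonic equations for a general nearest-neighbour walk on an interval with the Thomas algorithm for tridiagonal systems, and the simple random walk case can then be compared with \(r_m^{(0)}=h/(h-m)\) and \(r_{m-1}^{(m)}=(h-m)/(h-m+1)\), taking \(u=0\) for concreteness.

```python
from fractions import Fraction
from typing import Callable, List


def hit_low_first(p: Callable[[int], Fraction], low: int, high: int) -> List[Fraction]:
    """Return [g(low), ..., g(high)] with g(y) = P_y(hit `low` before `high`) for a
    nearest-neighbour walk stepping y -> y+1 w.p. p(y) and y -> y-1 w.p. 1 - p(y).

    The interior equations -q(y) g(y-1) + g(y) - p(y) g(y+1) = 0, with g(low) = 1 and
    g(high) = 0, form a tridiagonal system solved by the Thomas algorithm.
    """
    ys = list(range(low + 1, high))           # interior points
    if not ys:
        return [Fraction(1), Fraction(0)]
    a = [-(1 - p(y)) for y in ys]             # coefficients of g(y-1)
    c = [-p(y) for y in ys]                   # coefficients of g(y+1)
    d = [Fraction(0)] * len(ys)
    d[0] = -a[0]                              # boundary value g(low) = 1 moved to the right
    # the boundary value g(high) = 0 contributes nothing
    cp, dp = [c[0]], [d[0]]                   # forward sweep (diagonal entries are all 1)
    for i in range(1, len(ys)):
        denom = 1 - a[i] * cp[i - 1]
        cp.append(c[i] / denom)
        dp.append((d[i] - a[i] * dp[i - 1]) / denom)
    g = dp[:]                                 # back substitution
    for i in range(len(ys) - 2, -1, -1):
        g[i] = dp[i] - cp[i] * g[i + 1]
    return [Fraction(1)] + g + [Fraction(0)]
```

In the returned list, the entry for vertex y sits at index \(y-\mathrm {low}\); for the simple random walk the solution is the linear function \(g(y)=(\mathrm {high}-y)/(\mathrm {high}-\mathrm {low})\).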

4.3 Expected Exit Times: Two-Sided

In this section, we consider ageing random walks. The first piece of information about the speed we are aiming to obtain is the expected actual time to reach \(\pm \, n\) starting from the origin, that is, \({{\mathbb {E}}}_0 T_n\). Let \(n\ge 2\) and \(0\le m\le n-2\). Each edge \((m,m+1)\) can be crossed \(0,1,2,\ldots \) times before the walk reaches \(\pm \, n\), unlike in the case of recurrence to the right, where the parity of the number of crossings is fixed. Note also that the actual time spent on the edge \((n-1,n)\) is always either 0 or \(s_0\), and hence finite, as the walk can traverse it at most once before exiting \((-\,n,n)\). A similar statement holds for the edge \((-\,n, -n+1)\).

Once the walk started at 0 reaches m, which happens with probability \(\rho _m^{(0)}\), one of two things occurs. Either it exits \((-\,n,n)\) without ever crossing \((m,m+1)\), in which case it must exit at \(-n\), or it crosses this edge at least once; the latter happens with probability \(\rho _{m+1}^{(m)}\). Once the walk has reached \(m+1\), a similar argument shows that the probability of crossing \((m,m+1)\) once again, now leftwards, before exiting \((-\,n,n)\) equals \(\rho _{m}^{(m+1)}\). Consequently, the expected actual time spent on the edge \((m,m+1)\) before exiting the interval equals

where we defined

and used that, by the Markov property, \(\rho _m^{(0)} \rho _{m+1}^{(m)}=\rho _{m+1}^{(0)}\). Hence, if \({\widetilde{T}}_n^{+}\) denotes the total actual time spent on the edges \((0,1),(1,2),\ldots ,(n-2,n-1)\) before exiting the interval, then

By the irreducibility of the walk, \(0<\rho ^{(0)}_m<1\) and \(0<\gamma _m<1\) for all relevant m, and so we conclude that \({{\mathbb {E}}}_0 {\widetilde{T}}_n^{+}\) is finite if and only if

Similarly, let \({\widetilde{T}}_n^{-}\) denote the total actual time spent on the edges \((-\,n+1,-n+2),\ldots ,(-1,0)\) before exiting the interval. Conducting an analogous argument, one can compute \({{\mathbb {E}}}_0 {\widetilde{T}}_n^{-}\), which, taking into account that

leads to the following criterion.

Recall Assumption 1.

Theorem 7

Consider a one-dimensional walk and let \(n\ge 2\). Then \({{\mathbb {E}}}_0 {\widetilde{T}}_n<\infty \) if and only if

where \(\gamma _m\) is defined by (9).

As a consequence, we can see that if ageing is either very slow or very fast, then the behaviour of the original walk becomes irrelevant.

Corollary 4

(Slow ageing and super-ageing) Consider a one-dimensional walk, and let \(n\ge 2\).

-

1.

If \(R^{\mathrm {pass}}= 1\) (“slow ageing”), then \({{\mathbb {E}}}_0 {\widetilde{T}}_n<\infty \) holds whatever the original walk is.

-

2.

If \(R^{\mathrm {pass}}=0\) (“super-ageing”), then \({{\mathbb {E}}}_0 {\widetilde{T}}_n=\infty \) holds whatever the original walk is.
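The crossing argument of Sect. 4.3 is easy to evaluate numerically for simple random walk, since all the hitting probabilities involved are gambler's-ruin ratios. The sketch below computes the expected actual time spent on the edge \((m,m+1)\) (function names are hypothetical). It sums \(s_k\) times the probability of at least \(k+1\) crossings and truncates after K crossings.

The sketch assumes, as the argument above suggests, that successive crossings alternately require probabilities \(\rho _m^{(m+1)}\) and \(\rho _{m+1}^{(m)}\). Under that assumption their product plays the role of \(\gamma _m\); the exact form of (9) is not reproduced here.

As examples we take \(s_k=k+1\) and \(s_k=k!\). We treat these as slow ageing and super-ageing, respectively, under the assumption that \(R^{\mathrm {pass}}\) is the radius of convergence of \(\sum _k s_k z^k\).

```python
import math


def hit_first(x, target, other):
    """Gambler's ruin: P_x(simple random walk hits `target` before `other`)."""
    return abs(other - x) / abs(other - target)


def expected_edge_time(m, n, s, K):
    """Expected actual time spent on edge (m, m+1), 0 <= m <= n-2, before a
    simple random walk started at 0 exits (-n, n), counting only the first
    K crossings of that edge. s(k) is the duration of a crossing preceded
    by exactly k earlier crossings of the same edge (s(0) = 1)."""
    first = hit_first(0, m + 1, -n)   # rho_{m+1}^{(0)}: edge crossed at all
    right = hit_first(m, m + 1, -n)   # rho_{m+1}^{(m)}: from m, cross rightwards
    left = hit_first(m + 1, m, n)     # rho_m^{(m+1)}: from m+1, cross leftwards
    total, p = 0.0, first             # p = P(at least k+1 crossings)
    for k in range(K):
        total += s(k) * p             # large ints (e.g. factorials) overflow past k ~ 170
        # the (k+1)-th crossing ends at m+1 when k is even, so the next goes left
        p *= left if k % 2 == 0 else right
    return total
```

With \(s_k\equiv 1\) the sum is the expected number of crossings. A short computation with the same three probabilities gives exactly \(n-m-\frac12\). For \(s_k=k+1\) the truncated sums stabilize as K grows. For \(s_k=k!\) they explode as K grows, in line with Corollary 4.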

5 The Spatial Spread of the Process

Let \(R_t\) denote the number of distinct edges crossed by \(X^{\mathrm {imp}}\) up to actual time \(t>0\). If \(G={\mathbb {Z}}\), then \(R_t=\max _{0\le s\le t}X^{\mathrm {imp}}_s-\min _{0\le s\le t}X^{\mathrm {imp}}_s\).
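To experiment with the problems below, the range can be simulated directly from Definition 2. The sketch below is a hypothetical helper built on Python's `random` module. It runs simple random walk on \({\mathbb {Z}}\), charging \(s(k)\) units of actual time for a crossing preceded by k crossings of the same edge. It then returns the maximum minus the minimum of the positions reached by time t. Ignoring a crossing that is still in progress at time t is our own convention.

```python
import random
from collections import defaultdict


def range_at_time(s, t, rng, max_steps=10**7):
    """Range max - min at actual time t of a simple random walk on Z whose
    crossings are timed by the passage times s(k) (Definition 2).

    s(k) is the time needed to cross an edge already crossed k times.
    Convention (assumption): a crossing that starts at actual time tau and
    lasts d counts only if tau + d <= t.
    """
    crossings = defaultdict(int)   # left endpoint i of edge (i, i+1) -> count
    pos, clock = 0, 0.0
    lo = hi = 0
    for _ in range(max_steps):     # safety cap on the number of jumps
        nxt = pos + rng.choice((-1, 1))
        edge = min(pos, nxt)
        duration = s(crossings[edge])
        if clock + duration > t:
            break                  # this crossing is not finished by time t
        clock += duration
        crossings[edge] += 1
        pos = nxt
        lo, hi = min(lo, pos), max(hi, pos)
    return hi - lo
```

Consider the infinitely impatient choice \(s_0=1\), \(s_k=0\) for \(k\ge 1\) (as in Theorem 8). There the range grows by exactly one per unit of actual time, so for integer n the call returns n. Replacing `s` by, e.g., `lambda k: 2.0 ** -k` (a summable choice with \(S=2\)) lets one probe the growth of \(R_t\) empirically.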

Assuming that the walk is strongly impatient with \(\sum _k s_k=S\), clearly

Problem 1

(Strongly impatient recurrent walk) Assume that \(X^{\mathrm {imp}}\) is strongly impatient and the classical random walk X on G is recurrent. Is it true for \(X^{\mathrm {imp}}\) that

Problem 2

(Strongly impatient transient walk) Assume that \(X^{\mathrm {imp}}\) is strongly impatient and the classical random walk X on G is transient. Is it true for \(X^{\mathrm {imp}}\) that

If so, what is the limit?

Problem 3

(Weakly impatient walk) Assume that \(X^{\mathrm {imp}}\) is weakly impatient. What is the asymptotic behaviour of \(R_t\) as \(t\rightarrow \infty \)?

6 Comparison with Classical ArcSine Law

Can one prove a generalized ArcSine Law? Recall that one way of formulating the classical ArcSine Law is that the proportion of time spent on the right (left) by the walker has a limiting distribution. More precisely, for \(0<x<1\),

as \(n \rightarrow \infty \). Another formulation is that if k(n) denotes the time of the last return to the origin up to time 2n, then k(n)/(2n) has the same limiting (arcsine) distribution.

Now, in our case, the left-hand side depends on the passage times. Intuitively, if the random walk with the given passage times is impatient, then the limiting distribution of the proportions is more balanced than in the classical case.

In fact, if the excursion time (between returns to the origin) has finite expectation (e.g. when M has finite expectation and passage times are strongly impatient, or the cases computed in Sect. 3.1), then by the Renewal Theorem, the limit is completely balanced (\(=1/2\))!
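For comparison with the impatient case, the classical benchmark can be computed exactly. The sketch below uses the discrete form of the arcsine law for simple random walk, as in Feller's treatment; function names are hypothetical. Among 2n steps, the number of steps spent on the positive side equals 2k with probability \(u_{2k}u_{2n-2k}\), where \(u_{2j}=\binom{2j}{j}4^{-j}\). The distribution function of the fraction of time spent on the positive side tends to \(\frac{2}{\pi }\arcsin \sqrt{x}\).

```python
import math


def arcsine_pmf(n):
    """P(2k of the 2n steps of simple random walk lie on the positive side),
    k = 0..n, equal to u_{2k} u_{2n-2k}, where u_{2j} = C(2j, j) / 4**j
    is the probability of being at the origin at time 2j."""
    u = [1.0]
    for j in range(1, n + 1):
        u.append(u[-1] * (2 * j - 1) / (2 * j))   # u_{2j} = u_{2j-2}(2j-1)/(2j)
    return [u[k] * u[n - k] for k in range(n + 1)]


def arcsine_cdf_limit(x):
    """Limit of P(fraction of time on the positive side <= x), 0 < x < 1."""
    return 2 / math.pi * math.asin(math.sqrt(x))
```

For moderately large n (e.g. n = 2000), the discrete distribution function is already close to the limit. The mass piles up near 0 and 1; this is the imbalance that impatience is expected to reduce.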

Problem 4

(Modified ArcSine Law) What happens when X is simple random walk and the excursion time has infinite expectation? How will the passage times modify the ArcSine Law, making the limit more balanced?

The following theorem may be considered as an initial step in this direction; it indicates that for strong enough impatience, a behaviour much more balanced than for the classical ArcSine Law is exhibited.

Theorem 8

(Infinitely impatient RW) On \({\mathbb {Z}}\), consider the “infinitely impatient” simple random walk, \(X^{\mathrm {inf.imp}}\), that is, let \(s_j=0, j\ge 1\). Let \(R_n\) denote the time spent on the right axis up to time \(n\ge 0\), and \(L_n\) the time spent on the left axis up to time n. Then

Proof

Let \({\mathcal {R}}^n_{l,r}\) denote the event that the range of the walk up to actual time \(n\ge 2\) is [l, r] with \(l\le 0, r\ge 0\). Just like in Sect. 3.1, it is easy to see that

Of course, for \(X^{\mathrm {inf.imp}}\), the size of the range increases by one at each unit (actual) time.

Therefore, identifying right and left with “heads” and “tails”, \(R_n\) can be identified with the number of heads in the following experiment. We first toss a fair coin. Then we turn it over with probability 1/3, and with probability 2/3 we do nothing. Next we turn it over with probability 1/4, and so on. Finally, in the n-th step we turn it over with probability \(1/(n+1)\). This follows from the gambler's ruin formula: from an endpoint of a range consisting of k edges, the walk extends the range at the opposite end with probability \(1/(k+2)\).

Using this equivalent formulation, the claim follows from Theorem 1 in [4], where a more general “coin turning” model is investigated. \(\square \)
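The coin-turning description can be evaluated exactly for small n. The sketch below (hypothetical function name) propagates the joint law of the current face and the number of heads so far. The turning probability at step \(k\ge 2\) is \(1/(k+1)\), following the pattern 1/3, 1/4, … described above. This is because from an endpoint of a range of \(k-1\) edges, the walk next extends at the far end with probability \(1/(k+1)\).

```python
from fractions import Fraction


def coin_turning_heads_distribution(n):
    """Exact law of the number of heads among the first n coin states.

    Step 1: a fair coin is tossed. Step k >= 2: the coin is turned over
    with probability 1/(k+1) and otherwise left alone. Returns a list whose
    j-th entry is P(#heads = j), j = 0..n.
    """
    # dist[(heads_so_far, current_face_is_heads)] = probability
    dist = {(1, True): Fraction(1, 2), (0, False): Fraction(1, 2)}
    for k in range(2, n + 1):
        p_turn = Fraction(1, k + 1)
        new = {}
        for (heads, is_heads), prob in dist.items():
            for face, q in ((not is_heads, p_turn), (is_heads, 1 - p_turn)):
                key = (heads + int(face), face)
                new[key] = new.get(key, Fraction(0)) + prob * q
        dist = new
    law = [Fraction(0)] * (n + 1)
    for (heads, _), prob in dist.items():
        law[heads] += prob
    return law
```

The output is exactly uniform on \(\{0,1,\ldots ,n\}\), and a short induction on n confirms this in general. This is in sharp contrast with the U-shaped classical arcsine law.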

Remark 11

Breiman [1] proved a generalization of the ArcSine Law in the 1960s, and this was picked up recently by Kevei and Mason [5]. The point is that one can have a nice limit even if the law of the excursion is different from the classical one for simple random walk (in [5], take \(X_i\) to be a random sign and \(Y_i\) the excursion time for the i-th excursion).

7 Space-Dependent Impatience

Here, we modify the definition of impatience given in Sect. 1. Suppose now that the passage times for an edge e do not depend on the number of times the edge has been crossed, but rather on the location of this edge in the graph G. Thus, in the notation of Definition 2, \(s_0(e)=s_1(e)=\cdots =:s(e)\); in particular, the normalization \(s_0=1\) of Definition 1 is no longer imposed. As before, fix some specific vertex \(v_0\) of the graph and call it the origin. For a vertex v in G, let the distance from \(v_0\) to v, denoted by \(\Vert v\Vert \in \{0,1,2,\ldots \}\), be the number of edges on the shortest path connecting \(v_0\) and v. For an edge \(e=(v_1,v_2)\), let \(\Vert e \Vert =\min \{\Vert v_1\Vert ,\Vert v_2\Vert \}\).

Let X be a unit-time random walk on G, i.e. a Markov chain whose transitions are restricted to the edges of G. It is then conceivable that, while X is null recurrent, \(X^{{\mathrm {imp}}}\) may still be positive recurrent, provided \(s(e)\rightarrow 0\) sufficiently quickly as \(\Vert e\Vert \rightarrow \infty \).

For any two vertices v and u, let us define

where \(\sigma _u:=\min \{k\ge 1\mid X_k=u\}\) denotes the first hitting time of u after time 0 (\(\sigma _v\) is defined analogously).

Assumption 2

We assume the following about the random walk X.

-

(Uniform ellipticity) There is a universal constant \(\varepsilon >0\) such that for any edge \(e=(v_1,v_2)\) in the graph, \({\mathbb {P}}(X_{n+1}=v_2\mid X_n=v_1)\ge \varepsilon \) and \({\mathbb {P}}(X_{n+1}=v_1\mid X_n=v_2)\ge \varepsilon \).

-

(Return symmetry) There is a universal constant \(\rho \ge 1\) such that for any v in the graph

$$\begin{aligned} \rho ^{-1} \, p(v,v_0)\le p(v_0,v)\le \rho \, p(v,v_0). \end{aligned}$$

Remark 12

Observe that:

-

(a)

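uniform ellipticity implies that the graph is of uniformly bounded degree, i.e. there is \(D\ge 1\) such that each vertex of the graph has at most D edges coming out of it. One can take \(D=\lfloor 1/\varepsilon \rfloor \), since each neighbour receives probability at least \(\varepsilon \). It also implies that there are no oriented edges in G;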

-

(b)

return symmetry implies that the underlying random walk cannot be positive recurrent.

Note that part (b) of the above remark follows from the following observation. For each \(v\ne v_0\), the walk starting at \(v_0\) hits v before returning to the origin \(v_0\) with probability \(p(v_0,v)\). After reaching v, the walk makes a geometric number of returns to v before coming back to \(v_0\). The expected number of visits to v, including the very first one, is \(\frac{1}{p(v,v_0)}\). Thus, the expected total number of visits to vertices \(v\ne v_0\) before returning to \(v_0\) equals \(\sum _{v\ne v_0} \frac{p(v_0,v)}{p(v,v_0)}\). This sum is infinite, since G has infinitely many vertices and each term is at least \(\rho ^{-1}\). Hence, the expected number of steps the walk makes before returning to \(v_0\) is also infinite.

We will show below that SRW on both \({\mathbb {Z}}\) and \({\mathbb {Z}}^2\) satisfies the above assumptions.

Theorem 9

Let X be a null-recurrent random walk on G, satisfying Assumption 2, with \(X_0=v_0\). Then, \(X^{{\mathrm {imp}}}\) is positive recurrent if and only if

$$\begin{aligned} \sum _{e\in E(G)} s(e)<\infty . \end{aligned}$$

Proof

First of all, it is clear that

where \(\xi (e)\) is the number of times edge e is crossed (in either direction) prior to \(\sigma _{v_0}\); formally, for \(e=(u_1,u_2)\),

Consequently, to establish the statement of the theorem, it suffices to show that the expectations \({{\mathbb {E}}}\xi (e)\) are bounded above and below by positive constants not depending on e.

To this end, observe first that \(p(v_0,v)\rightarrow 0\) as \(\Vert v\Vert \rightarrow \infty \), since X is recurrent. Indeed, for \(n\ge 1\), one has

where \({\mathbb {P}}^0(\cdot ):={\mathbb {P}}(\cdot \mid X_0=v_0)\). Now \({\mathbb {P}}^0(\sigma _{v_{0}}\ge n)\) tends to zero as \(n\rightarrow \infty \) and \({\mathbb {P}}^0(\sigma _v\le n)=0\) whenever \(\Vert v\Vert >n.\)

Next, note that the number of vertices at distance at most k from \(v_0\) is bounded above by \(1+\sum _{i=1}^k D(D-1)^{i-1}<\infty \), with D as in Remark 12(a). Hence we can safely assume from now on that \(p(v_0,v)\) is small.

Let \(u\in G\) and, with a slight abuse of notation, let \(\xi (u)=\sum _{n=1}^{\sigma _{v_0}} 1_{X_n=u}\) be the number of times u is visited before \(\sigma _{v_0}\). Let \(e=(u_1,u_2)\in E(G)\). After each visit to \(u_i\), \(i=1,2\), the walk crosses e with probability at least \(\varepsilon \). Moreover, to cross e it has to visit one of the endpoints. Therefore we have

(In fact, the right inequality holds even without expectation signs!) Therefore, if we show that the \({{\mathbb {E}}}\xi (u)\) are bounded above and below, uniformly over \(u\in G\), then we are done.

Now, once vertex u is reached (which happens with probability \(p(v_0,u)\)), consider the number of visits to it before hitting \(v_0\), including the first one. This number is geometric with success probability \(p(u,v_0)\) and mean \(\frac{1}{p(u,v_0)}\). Consequently,

which belongs to the interval \([\rho ^{-1},\rho ]\) for all u, because of the second part of Assumption 2 (see also the argument after Remark 12). The theorem is thus proven. \(\square \)

Recall that the graph G is called transitive if, viewed from any vertex v in G, it is isomorphic to G viewed from \(v_0\).

Proposition 2

Let X be a recurrent simple (Footnote 6) random walk on a transitive graph G. Then, Assumption 2 is satisfied.

Proof

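The uniform ellipticity assumption is trivially satisfied, with \(\varepsilon \) equal to the reciprocal of the common degree. This is because X is a simple random walk and, by transitivity of G, each vertex is incident to the same number of edges. Moreover, since G is regular, X is reversible with respect to the counting measure. Hence \(p(v_0,v)=p(v,v_0)\) for every v, as both equal the effective conductance between \(v_0\) and v divided by the common degree. Thus one can set \(\rho =1\). \(\square \)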

Corollary 5

The symmetric random walks on \({\mathbb {Z}}^1\) and on \({\mathbb {Z}}^2\) satisfy Assumption 2. Assuming \(v_0=\mathbf{0}\), if \(s(e)\sim \Vert e\Vert ^{-\alpha }\) for some \(\alpha >0\), then \(X^{{\mathrm {imp}}}\) is positive recurrent if and only if

-

\(\alpha >1\), in case of \({\mathbb {Z}}^1\);

-

\(\alpha >2\), in case of \({\mathbb {Z}}^2\).

Proof

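Both \(G={\mathbb {Z}}^1\) and \(G={\mathbb {Z}}^2\) are transitive, and the symmetric random walks on them are null recurrent. Hence, by Proposition 2, Assumption 2 is fulfilled. By Theorem 9, the positive recurrence of \(X^{{\mathrm {imp}}}\) is then equivalent to the finiteness of \(\sum _{e\in E(G)} s(e)\). The number of edges e with \(\Vert e\Vert =k\) equals 2 for \({\mathbb {Z}}^1\) and \(8k+4\) for \({\mathbb {Z}}^2\). Therefore this sum is finite if and only if \(\sum _{k\ge 1} k^{-\alpha }<\infty \) or \(\sum _{k\ge 1} k^{1-\alpha }<\infty \), respectively,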

yielding the required result. \(\square \)
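The counting step behind Corollary 5 can be checked by brute force. The sketch below (hypothetical names) computes \(\Vert v\Vert \) on \({\mathbb {Z}}^2\) by breadth-first search and counts the edges e with \(\Vert e\Vert =k\), finding \(8k+4\) of them; on \({\mathbb {Z}}^1\) there are always 2. It then evaluates partial sums of \(\sum _e s(e)\) for the illustrative choice \(s(e)=\Vert e\Vert ^{-\alpha }\) when \(\Vert e\Vert \ge 1\). The finitely many edges at the origin are dropped, since they do not affect convergence.

We read the criterion of Theorem 9 as finiteness of \(\sum _e s(e)\), as its proof indicates. On that reading, the partial sums should stabilize for \(\alpha >2\) and diverge for \(\alpha \le 2\).

```python
from collections import deque


def edges_by_norm_z2(kmax):
    """Return [N_0, ..., N_kmax], where N_k is the number of edges e of Z^2
    with ||e|| = k; ||v|| is graph distance to the origin and
    ||e|| = min(||v1||, ||v2||)."""
    dist = {(0, 0): 0}
    queue = deque([(0, 0)])
    while queue:                              # BFS up to distance kmax + 1
        v = queue.popleft()
        if dist[v] == kmax + 1:
            continue
        x, y = v
        for w in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    counts = [0] * (kmax + 1)
    for (x, y), d in dist.items():
        for w in ((x + 1, y), (x, y + 1)):    # each undirected edge counted once
            if w in dist and min(d, dist[w]) <= kmax:
                counts[min(d, dist[w])] += 1
    return counts


def z2_series(alpha, K):
    """Partial sum over edges with 1 <= ||e|| <= K of ||e||**(-alpha) on Z^2,
    using the edge count 8k + 4 checked by edges_by_norm_z2."""
    return sum((8 * k + 4) * k ** (-alpha) for k in range(1, K + 1))
```

For \(\alpha =3\), going from \(K=10^4\) to \(K=10^5\) changes the partial sum by less than 0.01. For \(\alpha =2\), the same increase in K adds roughly \(8\ln 10\).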

Notes

1. The intuitive meaning is clear: the more the walker crosses the same edge, the faster it happens.

2. But one can also imagine that the walker is actually crossing the edge continuously with a speed depending on the passage time sequence.

3. Our original model was more mundane: a person window shopping who gets bored quickly by the stores of any street she has already visited.

4. We have already verified this with another method: see Theorem 2.

5. It is easy to see that in the impatient case, the expectation we study is always finite for any irreducible walk.

6. I.e. X jumps to each neighbour with the same probability.

References

[1] Breiman, L.: On some limit theorems similar to the arc-sin law. Teor. Verojatnost. Primenen. 10, 351–360 (1965)

[2] Crane, E., Georgiou, N., Volkov, S., Wade, A.R., Waters, R.J.: The simple harmonic urn. Ann. Probab. 39(6), 2119–2177 (2011)

[3] Doyle, P.G., Snell, L.: Random Walks and Electric Networks. Carus Mathematical Monographs, vol. 22. Mathematical Association of America, Washington, DC (1984)

[4] Engländer, J., Volkov, S.: Turning a coin over instead of tossing it. J. Theor. Probab. (2016). https://doi.org/10.1007/s10959-016-0725-1

[5] Kevei, P., Mason, D.M.: The asymptotic distribution of randomly weighted sums and self-normalized sums. Electron. J. Probab. 17(46), 1–21 (2012)

[6] Lamperti, J.: Criteria for the recurrence or transience of stochastic process. I. J. Math. Anal. Appl. 1, 314–330 (1960)

[7] Pemantle, R.: A survey of random processes with reinforcement. Probab. Surv. 4, 1–79 (2007)

[8] Zerner, M.P.W.: Multi-excited random walks on integers. Probab. Theory Relat. Fields 133(1), 98–122 (2005)


Additional information

Dedicated to Bálint Tóth on the occasion of his 60th birthday.


The hospitality of Microsoft Research, Redmond, and of the University of Washington, Seattle, is gratefully acknowledged by the first author. Research of the second author was supported in part by the Swedish Research Council Grant VR2014–5157.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Engländer, J., Volkov, S. Impatient Random Walk. J Theor Probab 32, 2020–2043 (2019). https://doi.org/10.1007/s10959-019-00901-4


DOI: https://doi.org/10.1007/s10959-019-00901-4