Abstract

In this paper, we suggest a new framework for analyzing primal subgradient methods for nonsmooth convex optimization problems. We show that the classical step-size rules, based on normalization of the subgradient or on knowledge of the optimal value of the objective function, need corrections when they are applied to optimization problems with constraints. Their proper modifications allow a significant acceleration of these schemes when the objective function has favorable properties (smoothness, strong convexity). We show how the new methods can be used for solving optimization problems with functional constraints, with the possibility of approximating the optimal Lagrange multipliers. One of our primal-dual methods also works for unbounded feasible sets.

1 Introduction

Motivation. The first method for unconstrained minimization of a nonsmooth convex function was proposed in [8]. This was a primal subgradient method

\[ x_{k+1} = x_k - h_k f'(x_k), \quad k \ge 0, \tag{1.1} \]

with constant step sizes \(h_k \equiv h > 0\), where \(f'(x_k)\) is a subgradient of the objective function at the point \(x_k\). In the following years, several strategies for choosing the steps were developed (see [7] for historical remarks and references). Among them, the most important one is the rule of the first-order divergent series:

with the optimal choice \(h_k = O(k^{-1/2})\). As a variant, it is possible to use in (1.1) the normalized directions

Another alternative for the step sizes is based on the known optimal value \(f^*\) [7]:

In both cases, the corresponding schemes, as applied to functions with bounded subgradients, have the optimal rate of convergence \(O(k^{-1/2})\), established for the best value of the objective function observed during the minimization process [2]. The presence of simple set constraints was treated just by applying to the minimization sequence a Euclidean projection onto the feasible set.

The next important advancement in this area is related to the development of the mirror descent method (MDM) ([2], see also [1]). In this scheme, the main information is accumulated in the dual space in the form of aggregated subgradients. For defining the next test point, this object is mapped (mirrored) to the primal space by a special prox-function, related to a general norm. Thus, we get an important possibility of describing the topology of convex sets by the appropriate norms.

After this discovery, for several decades, the research activity in this field was concentrated on the development of dual schemes. One of the drawbacks of the classical MDM is that the new subgradients are accumulated with the vanishing weights \(h_k\). This was corrected in the framework of dual averaging [4], where the aggregation coefficients can even be increasing, and the convergence in the primal space is achieved by applying some vanishing scaling coefficients. Another drawback is related to the fact that the convergence guarantees are traditionally established only for the best values of the objective function. This inconvenience was eliminated by the development of quasi-monotone dual methods [6], where the rate of convergence is proved for all points of the minimization sequence.

Thus, at some moment, primal methods were almost forgotten. However, in this paper we are going to show that in some situations the primal schemes are very useful. Moreover, there is still space for improvement of the classical methods. Our optimism is supported by the following observations.

Firstly, from the recent developments in Optimization Theory, it becomes clear that the size of subgradients of objective functions for the problems with simple set constraints must be defined differently. Hence, the usual norms in the rules (1.3) and (1.4) can be replaced by more appropriate objects.

Secondly, for an important class of quasi-convex functions, linear approximations do not work properly. Hence, for the corresponding optimization problems, only the primal schemes can be used. Finally, as we will see, the proper primal schemes provide us with a very simple and natural possibility for approximating the optimal Lagrange multipliers for problems with functional constraints, avoiding the heavy machinery that is typical for the methods based on the Augmented Lagrangian.

Contents. In Sect. 2, we present a new subgradient method for minimizing a quasi-convex function on a simple set. Its justification is based on a new concept of directional proximity measure, which is a generalization of the old technique initially presented in [3]. In this method, we apply an indirect strategy for choosing the step size, which needs a solution of a simple univariate equation. In the unconstrained case, this strategy is reduced to the normalization step (1.3). The main advantage of the new method is the possibility of automatic acceleration for functions with Hölder-continuous gradients.

In Sect. 3, we present a method for solving the composite minimization problem with a max-type objective function. For choosing the step size, we use a proper generalization of the rule (1.4), based on the optimal value of the objective. This method admits a linear rate of convergence for smooth strongly convex functions (see Sect. 4). Note that a simple example demonstrates that the classical rule does not benefit from strong convexity. The method of Sect. 3 also accelerates automatically on functions with Hölder-continuous gradients.

In Sect. 5, we consider a minimization problem with a single max-type constraint containing an additive composite term. For this problem, we present a switching strategy, where the steps for the objective function are based on the rule of Sect. 2, and for improving the feasibility we use the step-size strategy of Sect. 3. For controlling the step sizes, we suggest a new rule of the second-order divergent series:

For bounded feasible sets, this rule eliminates an unpleasant logarithmic factor in the convergence rate. The method automatically accelerates on problems with smooth functional components. Interestingly, the rates of convergence for the objective function and the constraints can be different.

The remaining sections of the paper are devoted to methods that can approximate optimal Lagrange multipliers for convex problems with functional inequality constraints. In Sect. 6, we consider the simplest switching strategy of this type, where for the steps with the objective function we use the rule of Sect. 2, and for the steps with violated constraints we use the rule of Sect. 3. In the method of Sect. 7, both steps are based on the rule of Sect. 2. In both cases, we obtain the rates of convergence for infeasibility of the generated points, and the upper bound for the duality gap, computed for the simple estimates of the optimal dual multipliers. Such an estimate is formed as a sum of steps at active iterations for each violated constraint divided by the sum of steps at iterations when the objective function was active.

In Sect. 8, we provide the theoretical guarantees for our estimates of the optimal Lagrange multipliers in terms of the value of the dual function. They depend on the depth of the Slater condition of our problem. Finally, in Sect. 9, we present a switching method, which can generate the approximate dual multipliers for problems with an unbounded feasible set.

Notation. Denote by \({\mathbb {E}}\) a finite-dimensional real vector space, and by \({\mathbb {E}}^*\) its dual space composed of linear functions on \({\mathbb {E}}\). For such a function \(s \in {\mathbb {E}}^*\), denote by \(\langle s, x \rangle \) its value at \(x \in {\mathbb {E}}\). For measuring distances in \({\mathbb {E}}\), we use an arbitrary norm \(\Vert \cdot \Vert \). The corresponding dual norm is defined in a standard way:

\[ \Vert s \Vert ^* = \max \limits _{x \in {\mathbb {E}}} \{ \langle s, x \rangle : \; \Vert x \Vert \le 1 \}, \quad s \in {\mathbb {E}}^*. \]

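Sometimes it is convenient to measure distances in \({\mathbb {E}}\) by a Euclidean norm \(\Vert \cdot \Vert _B\). It is defined by a self-adjoint positive-definite linear operator \(B: {\mathbb {E}}\rightarrow {\mathbb {E}}^*\) in the following way:

\[ \Vert x \Vert _B = \langle B x, x \rangle ^{1/2}, \; x \in {\mathbb {E}}, \qquad \Vert g \Vert ^*_B = \langle g, B^{-1} g \rangle ^{1/2}, \; g \in {\mathbb {E}}^*. \tag{1.5} \]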

In case of \({\mathbb {E}}= {\mathbb {R}}^n\), for \(x \in {\mathbb {R}}^n\), we use the notation \(\Vert x \Vert ^2_2 = \sum \limits _{i=1}^n (x^{(i)})^2\).

For a differentiable function \(f(\cdot )\) with convex and open domain \(\mathrm{dom \,}f \subseteq {\mathbb {E}}\), denote by \(\nabla f(x) \in {\mathbb {E}}^*\) its gradient at point \(x \in \mathrm{dom \,}f\). If f is convex, it can be used for defining the Bregman distance between two points \(x, y \in \mathrm{dom \,}f\):

\[ \beta _f(x,y) = f(y) - f(x) - \langle \nabla f(x), y - x \rangle . \tag{1.6} \]

In this paper, we develop new proximal-gradient methods based on a predefined prox-function \(d(\cdot )\), which can be restricted to a convex open domain \(\mathrm{dom \,}d \subseteq {\mathbb {E}}\). This domain always contains the basic feasible set of the corresponding optimization problem. We assume that \(d(\cdot )\) is continuously differentiable and strongly convex on \(\mathrm{dom \,}d\) with parameter one:

\[ d(y) \ge d(x) + \langle \nabla d(x), y - x \rangle + {1 \over 2} \Vert y - x \Vert ^2, \quad x, y \in \mathrm{dom \,}d. \tag{1.7} \]

Thus, combining the definition (1.6) with inequality (1.7), we get
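
The consequence of strong convexity used throughout is the lower bound \(\beta _d(x,y) \ge {1 \over 2} \Vert x - y \Vert ^2\) on the Bregman distance. The following minimal sketch checks it numerically for two standard prox-functions. It assumes the usual form \(\beta _d(x,y) = d(y) - d(x) - \langle \nabla d(x), y - x \rangle \); the helper names (`bregman`, `d_euclid`, `d_entropy`) and the test points are illustrative choices, not taken from the paper. For the entropy prox-function on the simplex, the relevant norm is the \(\ell _1\)-norm.

```python
import numpy as np

def bregman(d, grad_d, x, y):
    """Bregman distance beta_d(x, y) = d(y) - d(x) - <grad d(x), y - x>."""
    return d(y) - d(x) - grad_d(x) @ (y - x)

# Euclidean prox-function d(x) = 0.5 * ||x||_2^2 (B = identity).
def d_euclid(x):
    return 0.5 * (x @ x)

def grad_euclid(x):
    return x

# Negative entropy, used on the interior of the standard simplex.
def d_entropy(x):
    return float(np.sum(x * np.log(x)))

def grad_entropy(x):
    return np.log(x) + 1.0

# Two illustrative points in the interior of the simplex.
x = np.array([0.2, 0.3, 0.5])
y = np.array([0.6, 0.1, 0.3])

beta_euclid = bregman(d_euclid, grad_euclid, x, y)
beta_entropy = bregman(d_entropy, grad_entropy, x, y)

# Lower bounds 0.5 * ||x - y||^2 in the norm matching each prox-function.
bound_l2 = 0.5 * np.sum((x - y) ** 2)
bound_l1 = 0.5 * np.sum(np.abs(x - y)) ** 2
```

In the Euclidean case the bound holds with equality, while for the entropy it is strict at these points; in both cases the prox-function is strongly convex with parameter one in its own norm.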

2 Subgradient method for quasi-convex problems

Consider the following constrained optimization problem:

where the function \(f_0(\cdot )\) is closed and quasi-convex on \(\mathrm{dom \,}f_0\) and the set \(Q \subseteq \mathrm{dom \,}f_0\) is closed and convex. Denote by \(x^*\) one of the optimal solutions of (2.1) and let \(f_0^* = f_0(x^*)\). We assume that

Let us assume that at any point \(x \in Q\) it is possible to compute a vector \(f_0'(x) \in {\mathbb {E}}^* \setminus \{0\}\), satisfying the following condition: for any \(y \in {\mathbb {E}}\), we have:

(If \(y \not \in \mathrm{dom \,}f_0\), then \(f_0(y) {\mathop {=}\limits ^{\textrm{def}}}+ \infty \).) If \(f_0(\cdot )\) is differentiable at x, then \(f'_0(x) = \nabla f_0(x)\).

In order to justify the rate of convergence of our scheme, we need the following characteristic of problem (2.1):

If \(y \not \in \mathrm{dom \,}f_0\) and \(r = \Vert y - x^* \Vert \), then \(\mu (r) = + \infty \). In view of assumption (2.2), this function is finite at least in some neighborhood of the origin.

We say that vector \(s \in {\mathbb {E}}\) defines a direction in \({\mathbb {E}}\) if \(\Vert s \Vert = 1\). If \(\langle f_0'(x), s \rangle > 0\), we call it a recession direction of function \(f_0(\cdot )\) at point \(x \in Q\). Using such a direction, we can define the directional proximity measure of point \(x \in Q\) as follows:

Lemma 1

Let s be a recession direction at point \(x \in Q\). Then

Proof

Indeed, let us define \(y = x^* + \delta _s(x) s\). Then

Since \(f_0(\cdot )\) is quasi-convex, this means that \(f_0(y) {\mathop {\ge }\limits ^{(2.3)}} f_0(x)\). Therefore,

\(\square \)

In our analysis, we use the following univariate functions:

where \({{\bar{x}}} \in Q\). The optimal solution of this optimization problem is denoted by \(T_{{{\bar{x}}}}(\lambda )\). Note that function \(\varphi _{{{\bar{x}}}}(\cdot )\) is convex and continuously differentiable with the following derivative:

Thus, \(\varphi _{{{\bar{x}}}}(0) = 0\), \(T_{{{\bar{x}}}}(0) = {{\bar{x}}}\), and \(\varphi '_{{{\bar{x}}}}(0) = 0\). Consequently, \(\varphi _{{{\bar{x}}}}(\lambda ) \ge 0\) for all \(\lambda \ge 0\).

The basic subgradient method for solving the problem (2.1) looks as follows.

Lemma 2

At each iteration \(k \ge 0\) of method (2.9), for any \(x \in Q\), we have

Proof

Note that the first-order optimality condition for the problem at Step b) can be written as follows:

Therefore, we have

\(\square \)

In our method, we will use an indirect control of the dual step sizes \(\{ \lambda _k \}_{k\ge 0}\), which is based on a predefined sequence of primal step-size parameters \(\{ h_k \}_{k\ge 0}\). As we will prove by Lemma 3, the convergence of the process can be derived, for example, from the following standard conditions:

Then, inequality (2.10) justifies the choice of the dual step-size parameter \(\lambda _k\) as a solution to the following equation:

Example 1

If \(Q = {\mathbb {E}}\) and \(d(x) = \hbox { }\ {1 \over 2}\Vert x \Vert ^2_B\), then \(\varphi _{{{\bar{x}}}}(\lambda ) {\mathop {=}\limits ^{(2.7)}} {\lambda ^2 \over 2} (\Vert f'_0({{\bar{x}}}) \Vert ^*_B)^2\), and equation (2.13) gives us \(\lambda _k = h_k /\Vert f'_0(x_k) \Vert ^*_B\). In this case, method (2.9) coincides with the classical variant of subgradient method \(x_{k+1} = x_k - h_k {f'_0(x_k) \over \Vert f'_0(x_k) \Vert ^*_B}\). However, for \(Q \ne {\mathbb {E}}\), the rule (2.13) allows a proper scaling of the step size by the boundary of feasible set. \(\square \)
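
To illustrate how the boundary of the feasible set rescales the step, here is a minimal sketch for the Euclidean prox-function with \(B = I\) and a box-shaped set Q. It assumes, consistently with Example 1, that the step-size equation has the form \(\varphi _{x_k}(\lambda ) = {1 \over 2} h_k^2\) and that \(\varphi _{{{\bar{x}}}}(\lambda ) = \max _{x \in Q} \{ \lambda \langle f'_0({{\bar{x}}}), {{\bar{x}}} - x \rangle - \beta _d({{\bar{x}}},x) \}\). The function names, the bisection solver, and the numerical data are hypothetical illustrations, not part of the paper.

```python
import numpy as np

def prox_step(x_bar, g, lam, lo, hi):
    """Maximizer in the definition of phi: Euclidean projection of
    x_bar - lam * g onto the box [lo, hi]^n."""
    return np.clip(x_bar - lam * g, lo, hi)

def phi(x_bar, g, lam, lo, hi):
    """phi(lam) = lam * <g, x_bar - T> - 0.5 * ||T - x_bar||^2 with T = prox_step."""
    d = prox_step(x_bar, g, lam, lo, hi) - x_bar
    return -lam * (g @ d) - 0.5 * (d @ d)

def solve_step(x_bar, g, h, lo, hi, iters=100):
    """Solve phi(lam) = 0.5 * h^2 by bisection (phi is nondecreasing on lam >= 0)."""
    target = 0.5 * h * h
    lam_hi = 1.0
    for _ in range(60):                      # find an upper bracket by doubling
        if phi(x_bar, g, lam_hi, lo, hi) >= target:
            break
        lam_hi *= 2.0
    else:
        raise ValueError("phi never reaches h^2 / 2: no admissible step")
    lam_lo = 0.0
    for _ in range(iters):
        mid = 0.5 * (lam_lo + lam_hi)
        if phi(x_bar, g, mid, lo, hi) < target:
            lam_lo = mid
        else:
            lam_hi = mid
    return 0.5 * (lam_lo + lam_hi)

x_bar = np.zeros(2)
g = np.array([3.0, 4.0])                     # subgradient with Euclidean norm 5
h = 0.5

# Box so large that it is inactive: the rule reduces to lam = h / ||g||.
lam_free = solve_step(x_bar, g, h, -10.0, 10.0)
# Box with a nearby lower boundary: the same h yields a larger dual step.
lam_box = solve_step(x_bar, g, h, -0.1, 10.0)
```

With the inactive box the solver returns \(\lambda = h/\Vert g \Vert = 0.1\), as in Example 1. With the lower bound \(-0.1\) both coordinates of the step are clipped, \(\varphi (\lambda ) = 0.7 \lambda - 0.01\) in the relevant range, and the solution is \(\lambda = 0.135/0.7\). Bisection is used here only for simplicity; a Newton-type scheme would serve the same purpose.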

At each iteration of method (2.9), the corresponding value of \(\lambda _k\) can be found by an efficient one-dimensional search procedure, based, for example, on a Newton-type scheme. Since the latter scheme has local quadratic convergence, we make a plausible assumption that it is possible to compute an exact solution of the equation (2.13). This solution has the following important interpretation (in view of (2.8)):

At the same time, we have \(\langle \lambda _k f_0'(x_k) + \nabla d(x_{k+1}) - \nabla d(x_k), x_k - x_{k+1} \rangle {\mathop {\ge }\limits ^{(2.11)}} 0\). Thus

Hence

Our complexity bounds follow from the rate of convergence of the following values:

Lemma 3

Let condition (2.13) be satisfied. Then for any \(N \ge 0\), we have

Proof

Indeed, in view of inequality (2.10), for \(r_k = \beta _d(x_k,x^*)\), we have

On the other hand,

Hence,

Summing up these inequalities for \(k = 0, \dots , N\), we obtain inequality (2.16). \(\square \)

Let us look now at one example of the rate of convergence of method (2.9) for an objective function from a nonstandard problem class. For simplicity, let us measure distances by Euclidean norm \(\Vert x \Vert _B\) (see (1.5)). In this case, we can take \(d(x) = \hbox { }\ {1 \over 2}\Vert x \Vert ^2_B\) and get \(\beta _d(x,y) = \hbox { }\ {1 \over 2}\Vert x - y \Vert ^2_B\). Let us assume that the function \(f_0(\cdot )\) in problem (2.1) is p-times continuously differentiable on \({\mathbb {E}}\) and its pth derivative is Lipschitz-continuous with constant \(L_p\). Then we can bound the function \(\mu (\cdot )\) in (2.4) as follows:

where all norms for derivatives are induced by \(\Vert \cdot \Vert _B\).

Let us fix the total number of steps \(N \ge 1\) and assume that a bound \(R_0 \ge \Vert x_0 - x^* \Vert _B\) is available. Then, defining the step sizes

we get \(\delta ^*_N = \min \limits _{0 \le k \le N} \delta _k \; {\mathop {\le }\limits ^{(2.16)}}{R_0 \over \sqrt{N+1}}\). Hence, in view of inequality (2.18), we have

Note that the first p coefficients in estimate (2.20) depend on the local properties of the objective function at the solution, and only the last term employs the global Lipschitz constant for the pth derivative. Clearly, we do not need to know the bounds for all these derivatives in order to define the step-size strategy (2.19).
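
The bound \(\delta ^*_N \le R_0/\sqrt{N+1}\) can be checked numerically in the simplest setting. The sketch below is an illustration under stated assumptions: \(Q = {\mathbb {E}}= {\mathbb {R}}^3\), \(B = I\), so that by Example 1 the method is the classical normalized subgradient scheme; the constant step \(h_k = R_0/\sqrt{N+1}\) is inferred from the bound above; and the proximity measure is taken in its classical form \(\delta _k = \langle f'_0(x_k), x_k - x^* \rangle / \Vert f'_0(x_k) \Vert \). The test function (the \(\ell _1\)-norm) and the starting point are arbitrary choices.

```python
import numpy as np

def f(x):
    """Illustrative nonsmooth objective: the l1-norm, minimized at the origin."""
    return float(np.sum(np.abs(x)))

def subgrad(x):
    """A subgradient of the l1-norm (components equal to 0 where x is 0)."""
    return np.sign(x)

x_star = np.zeros(3)
x = np.array([1.0, -2.0, 0.5])
R0 = np.linalg.norm(x - x_star)      # here the bound R0 is exact
N = 199
h = R0 / np.sqrt(N + 1)              # assumed constant step size

deltas, values = [], []
for k in range(N + 1):
    g = subgrad(x)
    g_norm = np.linalg.norm(g)
    values.append(f(x))
    if g_norm == 0.0:                # zero subgradient: x is optimal
        deltas.append(0.0)
        break
    deltas.append(g @ (x - x_star) / g_norm)
    x = x - h * g / g_norm           # normalized subgradient step

delta_best = min(deltas)
bound = R0 / np.sqrt(N + 1)
```

The inequality `delta_best <= bound` follows from the identity \(\Vert x_{k+1} - x^* \Vert ^2 = \Vert x_k - x^* \Vert ^2 - 2 h \delta _k + h^2\), summed over \(k = 0, \dots , N\). Note that the step-size rule itself uses no information about the smoothness of the objective.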

3 Step-size control for max-type convex problems

Let us consider now the following problem of composite optimization

where \(\psi (\cdot )\) is a simple closed convex function, and

with all \(f_i(\cdot )\), \(1 \le i \le m\), being closed and convex on \(\mathrm{dom \,}\psi \). Denote by

the linearization of function \(f(\cdot )\), and by \(x_* \in \mathrm{dom \,}\psi \) an optimal solution of this problem.

Similarly to (2.7), let us define the following univariate functions:

where \({{\bar{x}}} \in \mathrm{dom \,}\psi \). The unique optimal solution of this optimization problem is denoted by \({{\hat{T}}}_{{{\bar{x}}}}(\lambda )\). Note that function \({{\hat{\varphi }}}_{{{\bar{x}}}}(\cdot )\) is convex and continuously differentiable with the following derivative:

where \({{\hat{T}}} = {{\hat{T}}}_{{{\bar{x}}}}(\lambda )\). Thus, \({{\hat{\varphi }}}_{\bar{x}}(0) = 0\), \({{\hat{T}}}_{{{\bar{x}}}}(0) = {{\bar{x}}}\), and \({{\hat{\varphi }}}'_{\bar{x}}(0) = 0\). Hence, \({{\hat{\varphi }}}_{{{\bar{x}}}}(\lambda ) \ge 0\) for all \(\lambda \ge 0\). Let us prove the following variant of Lemma 2.

Lemma 4

Let \({{\bar{x}}} \in \mathrm{dom \,}\psi \) and \({{\hat{T}}} = {{\hat{T}}}_{{{\bar{x}}}}(\lambda )\) for some \(\lambda \ge 0\). Then

Proof

In view of the first-order optimality condition for the minimization problem in (3.3), for all \(x \in \mathrm{dom \,}\psi \), we have

Therefore, we get

\(\square \)

In this section, we analyze the following optimization scheme.

Suppose that the optimal value \(F_*\) is known. In view of convexity of the function \(f(\cdot )\), we have

Hence, for points \(\{ x_k \}_{k \ge 0}\), generated by method (3.7), we have

This observation explains the following step-size strategy:

This strategy has a natural optimization interpretation.

Lemma 5

Let \(\lambda _k\) be defined by (3.9). Then

Proof

Indeed,

\(\square \)

Lemma 5 has two important consequences allowing us to estimate the rate of convergence of method (3.7) with the step-size rule (3.9). Namely, for any \(k \ge 0\) we have

Note that method (3.7) is not monotone. However, inequality (3.11) gives us a global rate of convergence for the following characteristic:

where \(r_0 = \beta _d(x_0,x_*)\).

Theorem 1

Let all functions \(f_i(\cdot )\) have Hölder-continuous gradients:

where \(\nu \in [0,1]\) and \(L_{\nu } \ge 0\). Then for the step-size rule (3.9) in method (3.7) we have

Proof

Indeed, let \(\rho _k = \Vert x_{i_k} - x_{i_k+1} \Vert \) for some \(i_k\), \(0 \le i_k \le k\). Then for any \(k \ge 0\) we have

It remains to use inequality (3.13). \(\square \)

Note that the step-size strategy (3.9) does not depend on the Hölder parameter \(\nu \) in the condition (3.14). Hence, the number of iterations of method (3.7), (3.9), ensuring an \(\epsilon \)-accuracy in function value, is bounded from above by the following quantity:

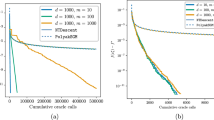

To conclude the section, let us show that the step-size rule (3.9) can behave much better than the classical one.

Example 2

Let \({\mathbb {E}}= {\mathbb {R}}^2\), \(\Vert x \Vert ^2 = x^T x\), and \(f(x) = \hbox { }\ {1 \over 2}(x^{(1)})^2 + \hbox { }\ {1 \over 2}(x^{(2)}-1)^2\) with \(\nabla f(x) = (x^{(1)}, x^{(2)}-1)^T\). Define \(\psi (x) = \textrm{Ind} \, Q\), the indicator function of \(Q = \Big \{ x \in {\mathbb {R}}^2:\; x^{(2)} \le 0 \Big \}\). Then \(f_*=0.5\). Consider the point \(x_k= (x^{(1)}_k, 0)^T\). By the classical step-size rule, we have

Thus, \(x^{(2)}_{k+1} = 0\) and \(x^{(1)}_{k+1} = x^{(1)}_k - {\left( x^{(1)}_k\right) ^3 \over 2\left( 1+ \left( x^{(1)}_k\right) ^2 \right) }\). This means that \(x_k^{(1)} = O(k^{-1/2})\).

On the other hand, the rule (3.10) as applied to the same point \(x_k\) defines \(x = x_{k+1}\) as an intersection of two lines

This means that \(x^{(1)}_k \left( x^{(1)}_k - x^{(1)}_{k+1}\right) = \hbox { }\ {1 \over 2}\left( x^{(1)}_k\right) ^2\), and we get the linear rate of convergence to the optimal point \(x_* = 0\). \(\square \)
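
The two behaviors in Example 2 can be reproduced with a short numerical experiment. The sketch below assumes that the classical rule is the projected step \(x_{k+1} = \pi _Q \big (x_k - {f(x_k) - f_* \over \Vert \nabla f(x_k) \Vert ^2} \nabla f(x_k)\big )\), and that the corrected rule computes the Euclidean projection of \(x_k\) onto the intersection of Q with the half-plane where the linearization of f at \(x_k\) does not exceed \(f_*\), as suggested by the description above. All function names are hypothetical.

```python
import numpy as np

F_STAR = 0.5

def f(x):
    return 0.5 * x[0] ** 2 + 0.5 * (x[1] - 1.0) ** 2

def grad(x):
    return np.array([x[0], x[1] - 1.0])

def classical_step(x):
    """Step based on the optimal value, followed by projection onto Q = {x2 <= 0}."""
    g = grad(x)
    y = x - (f(x) - F_STAR) / (g @ g) * g
    y[1] = min(y[1], 0.0)
    return y

def project_two_halfspaces(x, a1, b1, a2, b2, tol=1e-13):
    """Euclidean projection of x onto {y: <a1,y> <= b1, <a2,y> <= b2} in R^2."""
    def proj(y, a, b):
        return y - max(a @ y - b, 0.0) / (a @ a) * a
    p1 = proj(x, a1, b1)
    if a2 @ p1 <= b2 + tol:          # projection onto the first set is feasible
        return p1
    p2 = proj(x, a2, b2)
    if a1 @ p2 <= b1 + tol:          # projection onto the second set is feasible
        return p2
    # Otherwise both constraints are active: intersect the two boundary lines.
    return np.linalg.solve(np.array([a1, a2]), np.array([b1, b2]))

def corrected_step(x):
    """Project x onto Q intersected with {y: f(x) + <grad f(x), y - x> <= f_*}."""
    g = grad(x)
    b1 = g @ x - (f(x) - F_STAR)
    return project_two_halfspaces(x, g, b1, np.array([0.0, 1.0]), 0.0)

x_cls = np.array([1.0, 0.0])
x_new = np.array([1.0, 0.0])
for _ in range(15):
    x_cls = classical_step(x_cls)
    x_new = corrected_step(x_new)
```

After 15 iterations the first coordinate under the corrected rule has been halved at every step, while under the classical rule it is still above 0.2, in agreement with the \(O(k^{-1/2})\) estimate. The iteration count is kept small because evaluating \(f(x_k) - f_*\) in floating point loses accuracy once \(x_k^{(1)}\) approaches the square root of machine precision.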

4 Step-size control for problems with smooth strongly convex components

A linear rate of convergence, demonstrated by method (3.7) in Example 2, provides us with motivation to look at the behavior of this method on smooth and strongly convex problems. Let us introduce the Euclidean metric by (1.5) and define \(d(x) = \hbox { }\ {1 \over 2}\Vert x \Vert ^2_B\), \(x \in {\mathbb {E}}\). In this case,

Suppose that all functions \(f_i(\cdot )\), \(i = 1, \dots , m\) in (3.2) are continuously differentiable and have Lipschitz-continuous gradients with the same constant \(L_f \ge 0\):

In the notation of Sect. 3, these inequalities imply the following upper bound for the objective function of problem (3.1):

Hence, for the sequence of points \(\{ x_k \}_{k \ge 0}\), generated by method (3.7), we have

Thus, we can prove the following statement.

Theorem 2

Under condition (4.1), the rate of convergence of method (3.7) can be estimated as follows:

If in addition functions \(f_i(\cdot )\), \(i = 1, \dots , m\), are strongly convex:

with \(\mu _f > 0\), then the rate of convergence is linear:

Proof

Indeed, substituting inequality (4.3) into relation (3.11), we have

Summing up these inequalities for \(k = 0, \dots , T-1\), we get inequality (4.4).

If in addition, the conditions (4.5) are satisfied, then

Note that the first-order optimality conditions for problem (3.1) can be written in the following form:

Therefore, inequality (4.8) implies that

Thus, \(\Vert x_{k+1} - x_* \Vert ^2_B {\mathop {\le }\limits ^{(4.7)}} {L_f \over \mu _f + L_f} \Vert x_k - x_* \Vert ^2_B\), and we get inequality (4.6). \(\square \)
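
As a sanity check of the contraction factor \({L_f \over \mu _f + L_f}\), consider the simplest special case, taken here as an assumption: a single smooth component (\(m = 1\)), \(\psi \equiv 0\), and \(B = I\), where the step-size rule based on the optimal value is assumed to reduce to the classical step \(x_{k+1} = x_k - {f(x_k) - f_* \over \Vert \nabla f(x_k) \Vert ^2} \nabla f(x_k)\). The quadratic test function and the starting point are illustrative.

```python
import numpy as np

# Quadratic f(x) = 0.5 * <A x, x> with f_* = 0 attained at x_* = 0.
A = np.diag([1.0, 10.0])
mu, L = 1.0, 10.0                    # strong convexity and Lipschitz constants

def f(x):
    return 0.5 * (x @ A @ x)

def grad(x):
    return A @ x

x = np.array([1.0, 1.0])
ratios = []
for _ in range(25):
    g = grad(x)
    x_next = x - f(x) / (g @ g) * g  # step based on the known optimal value
    ratios.append((x_next @ x_next) / (x @ x))
    x = x_next

worst_ratio = max(ratios)
factor = L / (mu + L)
```

For quadratics, each step satisfies \(\Vert x_{k+1} \Vert ^2 = \Vert x_k \Vert ^2 - {3 \over 4} {\langle A x_k, x_k \rangle ^2 \over \Vert A x_k \Vert ^2}\), so the squared distance to the solution decreases at every iteration, and the observed ratios stay below the factor above.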

5 Convex minimization with max-type composite constraint

Let us show that both step-size strategies described in Sects. 2 and 3 can be unified in one scheme for solving constrained optimization problems. In this section, we deal with the problem in the following semi-composite form:

where \(\psi (\cdot )\) is a simple closed convex function, \(Q \subseteq \mathrm{dom \,}\psi \) is a closed convex set, and

with all functions \(f_i(\cdot )\), \(i = 0, \dots , m\), being closed and convex on \(\mathrm{dom \,}\psi \). We assume Q to be bounded:

In order to solve problem (5.1), we propose a method, which combines two different types of iterations. One of them improves the feasibility of the current point, and the second one improves its optimality.

Iteration of the first type is based on the machinery developed in Sect. 3 with the particular value \(F_* = 0\). It is applied to some point \(x_k \in Q\).

Note that for \(F(x_k) \le 0\), we have \(\lambda _k = 0\) and \({{\hat{T}}}_{x_k}(\lambda _k) = x_k\).

For iteration k of the second type, we need to choose a primal step-size bound \(h_k > 0\). Then, at the test point \(y_k \in Q\), we define the function \(\varphi _{y_k}(\cdot )\) by (2.7) and apply the following rule:

Since in both rules parameters \(\lambda _k\) are functions of the test points, we will use shorter notations \(T(y_k)\) and \({{\hat{T}}}(x_k)\). Consider the following optimization scheme.

Thus, method (5.5) is defined by a sequence of primal step bounds \({{{\mathcal {H}}}} = \{ h_k \}_{k \ge 0}\). However, since in (5.5) we apply a switching strategy, it is impossible to say in advance what will be the type of a particular kth iteration. Therefore, as compared with the classical conditions (2.12), we need additional regularity assumptions on \({{{\mathcal {H}}}}\).

It will be convenient to relate this sequence with another sequence of scaling coefficients \({{{\mathcal {T}}}} = \{ \tau _k \}_{k\ge 0}\), satisfying the second-order divergence condition (compare with (2.12)).

Note that condition (5.6) ensures \(\sum \limits _{k=0}^{\infty } \tau _k = + \infty \). Thus, it is stronger than (2.12). In order to transform the sequence \(\mathcal{T}\) into convergence rate of some optimization process, we need to introduce the following characteristic.

Definition 1

For a sequence \({{{\mathcal {T}}}}\), the integer-valued function a(k), \(k \ge 0\), is called the divergence delay (of degree two) if \(a(k) \ge 0\) is the minimal integer value such that

Clearly, condition (5.6)\(_b\) ensures that all values a(k), \(k \ge 0\), are well defined. At the same time, from (5.6)\(_a\), we have \(a(k+1) \ge a(k)\) for any \(k \ge 0\).

Let us give two important examples of such sequences.

Example 3

(a) Let us fix an integer \(N \ge 0\) and define

Then condition (5.6)\(_a\) is valid and \(\sum \limits _{i=k}^{k+a(k)} \tau _i^2 = {a(k)+1 \over N+1}\). Thus, \(a(k)= N\).

(b) Consider the following sequence:

For \(k \ge 1\), denote by \(S_k = \sum \limits _{i=0}^{k-1} \tau _i^2\). Then \(S_1 = 2\), \(S_2 = 3\), and the difference

is monotonically increasing in \(k \ge 1\). Thus, \(a(k) \le k-1\) for all \(k \ge 1\). \(\square \)
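
The divergence delay is easy to compute directly from Definition 1. The sketch below assumes that the defining condition is \(\sum _{i=k}^{k+a(k)} \tau _i^2 \ge 1\), which is the threshold implied by part (a) of Example 3, and that the sequence in part (b) is \(\tau _k^2 = {2 \over k+1}\), which matches \(S_1 = 2\) and \(S_2 = 3\). Exact rational arithmetic avoids rounding issues at the threshold; the function name is hypothetical.

```python
from fractions import Fraction

def divergence_delay(tau_sq, k, max_terms=10**6):
    """Smallest integer a >= 0 with sum_{i=k}^{k+a} tau_i^2 >= 1.
    tau_sq(i) must return tau_i^2 as an exact Fraction."""
    total = Fraction(0)
    for a in range(max_terms):
        total += tau_sq(k + a)
        if total >= 1:
            return a
    raise ValueError("threshold not reached within max_terms terms")

# (a) Constant sequence tau_k^2 = 1 / (N + 1) for a fixed horizon N.
N = 9
delays_const = [divergence_delay(lambda i: Fraction(1, N + 1), k) for k in range(5)]

# (b) Assumed decaying sequence tau_k^2 = 2 / (k + 1).
ks = list(range(1, 40))
delays_decay = [divergence_delay(lambda i: Fraction(2, i + 1), k) for k in ks]
```

For the constant sequence every delay equals N, and for the decaying sequence the computed delays are nondecreasing and satisfy \(a(k) \le k-1\), as stated in the example.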

Let us analyze the performance of the method (5.5) with an appropriately chosen sequence \({{{\mathcal {H}}}}\). Namely, let us choose

where the sequence \({{{\mathcal {T}}}}\) satisfies condition (5.6) and D is taken from (5.2).

We are interested only in the values of objective function \(f_0(\cdot )\) computed at the points with small values of the functional constraint \(F(\cdot )\). As we will see, these points are involved in Step 2b). For the total number of steps \(N \ge 1 + a(0)\), denote

Let us define the following directional proximity measures:

We are interested in the rate of convergence to zero of the following characteristic:

Theorem 3

For any \(N \ge 1 + a(0)\), the number \(k(N)\ge 0\) is well defined and \(\delta _N^* < h_{k(N)}\).

Moreover, if all functions \(f_i(\cdot )\), \(i = 1, \dots , m\), have Hölder-continuous gradients on Q with parameter \(\nu \in [0,1]\) and constant \(L_{\nu }>0\), then, for any \(k \in \mathcal{F}_N\), we have

Proof

Let us bound the distances \(r_k = \beta _d(x_k,x_*)\) for \(k \ge k(N)\). If \(k \not \in {{{\mathcal {F}}}}_N\), then

If \(k \in {{{\mathcal {F}}}}_N\), then

Summing up these inequalities for \(k = k(N), \dots , N-1\), we obtain

Thus, \(\delta ^*_N < h_{k(N)}\). Finally, inclusion \(k \in {{{\mathcal {F}}}}_N\) implies

Since \(F_* = 0\), we get

and this is inequality (5.12). \(\square \)

As a straightforward consequence of Theorem 3, we have the following rate of convergence in function value:

where the function \(\mu (\cdot )\) is defined by (2.4).

Thus, the actual rate of convergence of the method (5.5) depends on the rate of convergence of sequence \({{{\mathcal {T}}}}\) and the magnitude of divergence delay. For example, for the choice (5.9), in view of inequality \(a(k) \le k-1\), we have

Hence, for \(N = 2M\), the choice (5.9) ensures \(k(N) \ge M = N/2\), and we have

It is interesting that the rate of convergence (5.12) for the constraints can be higher than the rate (5.13) for the objective function.

6 Approximating Lagrange Multipliers, I

Despite the good convergence rate (5.13), when the number of functional components m in problem (5.1) is big, the implementation of one iteration (5.3) can be very expensive. In this section, we consider a simpler switching strategy for solving convex optimization problems with potentially many functional constraints. Our method is also able to approximate the corresponding optimal Lagrange multipliers.

Consider the following constrained optimization problem:

where all functions \(f_i(\cdot )\) are closed and convex, \(i = 0, \dots , m\), and set Q is closed, convex, and bounded. Denote by \(x^*\) one of its optimal solutions. Sometimes we use notation \(\bar{f}(x) = (f_1(x), \dots , f_m(x))^T \in {\mathbb {R}}^m\). Denote \({{{\mathcal {F}}}} = \{ x \in Q: \; {{\bar{f}}}(x) \le 0 \}\).

We assume that functions \(f_i(\cdot )\) are subdifferentiable on Q and it is possible to compute their subgradients with uniformly bounded norms:

Denote \({{\bar{M}}} = (M_1, \dots , M_m)^T \in {\mathbb {R}}^m\).

For the set Q, we assume existence of a prox-function \(d(\cdot )\) defining the corresponding Bregman distance \(\beta _d(\cdot ,\cdot )\). In our methods, we need to know a constant D such that

Let us introduce the Lagrangian

and the dual function \(\phi ({{\bar{\lambda }}}) = \min \limits _{x \in Q} {{{\mathcal {L}}}}(x,{{\bar{\lambda }}})\). By Sion’s theorem, we know that

Our first method is based on two operations presented in Sects. 2 and 3. For defining iterations of the first type, we need functions \(\varphi _{i,x}(\lambda )\) with \(\lambda \ge 0\), parameterized by \(x \in Q\), and defined as follows:

An appropriate value of \(\lambda \) can be found from the equation

and used for setting \(x_{k+1} = T_{i,x_k}(\lambda )\), the optimal solutions of problem (6.5). In this section, we perform this iteration only for the objective function (\(i=0\)). The possibility of using the steps \(T_{i,x_k}(\lambda )\) for inequality constraints is analyzed in Sects. 7 and 9.

The iteration of the second type is trying to improve feasibility of the current point \(x_k \in Q\) (compare with (5.3)). It needs computation of all Bregman projections

for indexes \(i = 1, \dots , m\). The first-order optimality condition for problem (6.7) is as follows:

where \(T = {{\hat{T}}}_i(x_k)\) and \(\lambda = \lambda _i(x_k) \ge 0\) is the optimal Lagrange multiplier for the linear inequality constraint in (6.7). We assume that this multiplier can be also computed. Note that for \(x = x_k\) we have

Hence, if \(T \ne x_k\), then \(\lambda _i(x_k) > 0\).

Consider the following optimization scheme.

Let us prove that method (6.9) can find an approximate solution of the primal-dual problem (6.1), (6.4). Let us choose a sequence \({{{\mathcal {T}}}}\) satisfying condition (5.6) and define

where D satisfies inequality (6.3). Then, for the number of steps \(N \ge 1 + a(0)\), let us define function k(N) by the first equation in (5.11). Now we can define the following objects:

Clearly, the vectors of dual multipliers \({{\bar{\lambda }}}_*(N) = (\lambda ^*_1(N), \dots , \lambda ^*_m(N))\) are defined only if \(\mathcal{A}_0(N) \ne \emptyset \). As usual, the sum over an empty set of iterations is assumed to be zero.

Theorem 4

Let the sequence of step bounds \({{{\mathcal {H}}}}\) in method (6.9) be defined by (6.10). Then for any \(N \ge 1 + a(0)\), we have \({{{\mathcal {A}}}}_0(N) \ne \emptyset \). Moreover, if all functions \(f_i(\cdot )\), \(i = 0, \dots , m\), satisfy (6.2), then

Proof

Indeed, we have

Let us fix some \(x \in Q\) and denote \(r_k(x) = \beta _d(x_k,x)\). Then, for \(k \in {{{\mathcal {A}}}}_0(N)\), we have

At the same time, from the first-order optimality condition for problem (6.5), we have

Hence,

On the other hand,

Therefore,

Let us assume now that \({{{\mathcal {A}}}}_0(N) = \emptyset \). Then \(B_N < 0\), and this is impossible since the point \(x_*\) is feasible. Thus, we have proved that \({{{\mathcal {A}}}}_0(N) \ne \emptyset \).

Further, for any \(k \in {{{\mathcal {A}}}}_0(N)\) we have

Hence, \(r_k^0 = \Vert x_{k+1} - x_k \Vert > 0\) and we conclude that

Thus, we have proved that \(B_N \le M_0 \sigma _0(N) h_{k(N)}\). Dividing this inequality by \(\sigma _0(N) > 0\), we get the bound (6.13).

Finally, note that for any \(k \in {{{\mathcal {A}}}}_0(N)\) we have \(\Vert {{\hat{T}}}_i(x_k) - x_k \Vert \le h_k\) for all i, \(1 \le i \le m\). Note that either \(f_i(x_k) \le 0\), or \(f_i(x_k) > 0\) and \(f_i(x_k) + \langle f'_i(x_k), {{\hat{T}}}_i(x_k) - x_k \rangle = 0\). In the latter case, we have

Thus, inequality (6.12) is proved. \(\square \)

Note that for the choice of scaling sequence (5.9), method (6.9) is globally convergent. In this case, the computation of Lagrange multipliers by (6.11) for all values of N requires storage of all coefficients \(\{ \lambda _k \}_{k \ge 0}\). This inconvenience can be avoided if we decide to accumulate the sums for Lagrange multipliers only starting from the moments \(k(N_q)\) with \(N_q = 2^q\), \(q \ge 1\). Then the method (6.9) will be allowed to stop only at the moments \(2N_q\).
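
The way the multiplier estimates arise from the switching strategy can be seen on a toy problem. The following sketch is a simplified illustration, not the method of this section: it assumes the Euclidean prox-function with \(B = I\), takes Q to be the whole space so that every projection has a closed form, uses a constant step bound h, and accumulates the sums over all iterations instead of starting from \(k(N)\). A feasibility step is taken when the projection onto the linearized constraint moves the point by more than h; otherwise a normalized step is taken on the objective. The test problem, \(\min \{ 2 x^{(1)}: \; 1 - x^{(1)} \le 0, \; x^{(2)} - 5 \le 0 \}\), has optimal multipliers (2, 0) by the KKT conditions. All names are hypothetical.

```python
import numpy as np

def switching_method(x0, grad0, constraints, h, n_iters):
    """constraints: list of pairs (f_i, grad_i). Returns the last point and
    the multiplier estimates (sum of constraint steps / sum of objective steps)."""
    x = np.array(x0, dtype=float)
    obj_sum = 0.0
    con_sums = np.zeros(len(constraints))
    for _ in range(n_iters):
        # Project x onto each linearized constraint; keep the largest move.
        best_i, best_lam, best_move = -1, 0.0, 0.0
        for i, (fi, gi) in enumerate(constraints):
            g = gi(x)
            lam = max(fi(x), 0.0) / (g @ g)   # multiplier of the linear constraint
            move = lam * np.linalg.norm(g)    # distance to the projection
            if move > best_move:
                best_i, best_lam, best_move = i, lam, move
        if best_move > h:
            # Feasibility step: move to the projection, record its multiplier.
            x = x - best_lam * constraints[best_i][1](x)
            con_sums[best_i] += best_lam
        else:
            # Optimality step: normalized step of length h on the objective.
            g0 = grad0(x)
            lam = h / np.linalg.norm(g0)
            x = x - lam * g0
            obj_sum += lam
    if obj_sum == 0.0:
        raise ValueError("no objective steps were made")
    return x, con_sums / obj_sum

grad0 = lambda x: np.array([2.0, 0.0])                       # f_0(x) = 2 * x1
constraints = [
    (lambda x: 1.0 - x[0], lambda x: np.array([-1.0, 0.0])),  # 1 - x1 <= 0
    (lambda x: x[1] - 5.0, lambda x: np.array([0.0, 1.0])),   # x2 - 5 <= 0
]
x_last, multipliers = switching_method([1.0, 0.0], grad0, constraints, 0.25, 300)
```

On this problem the iterates cycle between objective steps, which leave the feasible region, and one feasibility step back to the boundary. The ratio of the accumulated dual steps recovers the multiplier 2 for the active constraint and 0 for the inactive one.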

7 Approximating Lagrange Multipliers, II

Method (6.9) has one hidden drawback. If \(i_k \ge 1\), then

Thus, this scheme most probably generates infeasible approximations of the optimal point, which violate some of the functional constraints. In order to avoid this tendency, we propose a scheme which uses for both types of iterations (improving either feasibility or optimality) the same step-size rule (6.6).

Thus, for both types of iterations, we use the same step-size strategy (6.6). Note that for any \(i = 0, \dots , m\) and \(T_i = T_{i,x_k}(\lambda _{i,k})\), we have

Hence, since \(T_i \ne x_k\), we have

In method (7.1), we choose \({{{\mathcal {H}}}}\) in accordance with (6.10). Then, for \(N \ge 1 + a(0)\), we define function k(N) by the first equation in (5.11) and introduce by (6.11) the approximations of the optimal Lagrange multipliers.

Theorem 5

Let the sequence of points \(\{ x_k \}_{k \ge 0}\) be generated by method (7.1). Then for any \(N \ge 1 + a(0)\) the set \({{{\mathcal {A}}}}_0(N)\) is not empty. Moreover, if all functions \(f_i(\cdot )\), \(i = 1, \dots , m\), satisfy (6.2), then for all \(k \in {{{\mathcal {A}}}}_0(N)\) we have

Proof

As in the proof of Theorem 4, for \(x \in Q\), we denote \(r_k(x) = \beta _d(x_k,x)\), and

Then, for \(k \in {{{\mathcal {A}}}}_0(N)\), we have proved that

On the other hand,

Note that the first-order optimality condition for problem (2.7) is as follows:

where \(T_i\) is its optimal solution. Therefore, for all \(x \in Q\) and \(k \not \in {{{\mathcal {A}}}}_0(N)\), we have

Thus, as in the proof of Theorem 4, we conclude that

Since \({{{\mathcal {A}}}}_0(N) \ne \emptyset \) (see the proof of Theorem 4), dividing this inequality by \(\sigma _0(N) > 0\), we get inequality (7.4).

Finally, note that for all \(k \in {{{\mathcal {A}}}}_0(N)\) and \(i = 1, \dots , m\), we have

Thus, inequality (7.3) is proved. \(\square \)

8 Accuracy guarantees for the dual problem

In Sects. 6 and 7, we developed two convergent methods (6.9) and (7.1), which are able to approach the optimal solution of the primal problem (6.1), generating in parallel an approximate solution of the dual problem (6.4). Indeed, for \(N \ge 1 + a(0)\), denote

Then, in view of Theorems 4 and 5, we have

Since \(\phi ({{\bar{\lambda }}}^*(N)) \le f^*_0\), this inequality justifies that the point \(x^*_N\) is a good approximate solution to primal problem (6.1).

However, note that in our reasoning, we have not yet assumed the existence of an optimal solution to the dual problem (6.4). It turns out that, under our assumptions, such a solution may fail to exist. In this case, since \(f_0^*(N)\) can be significantly smaller than \(f_0^*\), inequality (8.1) cannot justify that vector \({{\bar{\lambda }}}^*(N)\) delivers a good value of the dual objective function.

Let us look at the following example.

Example 4

Consider the following problem

In this case, \({{{\mathcal {L}}}}(x,\lambda ) = x^{(2)} + \lambda (1 - x^{(1)})\). Hence,

Thus, there is no duality gap: \(\phi _* {\mathop {=}\limits ^{\textrm{def}}}\sup \limits _{\lambda \ge 0} \phi (\lambda ) = 0\). However, the optimal dual solution \(\lambda ^*\) does not exist.

Let us look now at the perturbed feasible set

where \(\epsilon > 0\) is sufficiently small. Note that it contains a point with the second coordinate equal to \(\phi _* - \sqrt{\epsilon (2-\epsilon )}\). This means that the condition (8.1) can guarantee only that

Hence, for dual problems with nonexisting optimal solutions, we can expect a significant drop in the quality of approximation in terms of the function value. \(\square \)
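The behaviour described in Example 4 can be checked numerically. The sketch below assumes that the problem is to minimize \(x^{(2)}\) subject to \(1 - x^{(1)} \le 0\) over the unit Euclidean disc \(Q = \{ x \in {\mathbb {R}}^2: \; \Vert x \Vert _2 \le 1 \}\). This choice of Q is an inference from the Lagrangian and from the value \(\phi _* - \sqrt{\epsilon (2-\epsilon )}\) quoted in the example, not something stated explicitly here. Under that assumption, \(\phi (\lambda ) = \lambda - \sqrt{1 + \lambda ^2}\), which is negative for every finite \(\lambda \) and tends to zero only as \(\lambda \rightarrow \infty \). The function names are illustrative.

```python
import math

def phi(lam):
    """Dual function min_{||x|| <= 1} [x2 + lam * (1 - x1)] for the ASSUMED unit-disc Q."""
    # The linear form x2 - lam * x1 has minimum -sqrt(1 + lam^2) on the unit disc,
    # so phi(lam) = lam - sqrt(1 + lam^2). The equivalent form below avoids cancellation.
    return -1.0 / (lam + math.sqrt(1.0 + lam * lam))

def perturbed_optimum(eps):
    """Smallest x2 over {||x|| <= 1, 1 - x1 <= eps} (assumed perturbed feasible set)."""
    # At x1 = 1 - eps the disc allows x2 >= -sqrt(1 - (1 - eps)^2) = -sqrt(eps * (2 - eps)).
    return -math.sqrt(eps * (2.0 - eps))

for lam in (0.0, 1.0, 10.0, 100.0, 1000.0):
    print(f"lambda = {lam:7.1f}   phi(lambda) = {phi(lam):.3e}")

for eps in (1e-2, 1e-4, 1e-6):
    print(f"eps = {eps:.0e}   perturbed optimum = {perturbed_optimum(eps):.3e}")
```

In this sketch, a constraint violation of size \(\epsilon \) lowers the attainable objective value by about \(\sqrt{2 \epsilon }\), which is much larger than \(\epsilon \) itself when \(\epsilon \) is small.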

Thus, in our complexity bounds, we need to take into account the size of the optimal dual solution \({{\bar{\lambda }}}^* \in {\mathbb {R}}^m_+\). Let the sequence \(\{ x_k \}_{k \ge 0}\) be generated by one of the methods (6.9) or (7.1). Then, for any \(k \ge 0\), we have

Thus, we have proved the following theorem.

Theorem 6

Under conditions of Theorem 4 or 5, for all \(N \ge 1 + a(0)\) and all \(k \in {{{\mathcal {A}}}}_0(N)\), we have

Proof

Indeed, in view of inequalities (6.12) and (7.3), we have \(\max \limits _{1 \le i \le m} {1 \over M_i} f_i(x_k) \le h_{k(N)}\). Thus, we get the bound (8.3) from (8.1) and (8.2). \(\square \)

Recall that the size of optimal dual multipliers can be bounded by the standard Slater condition. Namely, let us assume existence of a point \({{\hat{x}}} \in Q\) such that

Then, in accordance with Lemma 3.1.21 in [5], we have

Therefore,

Thus, the Slater condition provides us with the following bound:

which is valid for all \(N \ge 1 + a(0)\). Note that we are able to compute vector \({{\bar{\lambda }}}^*(N)\) without computing values of the dual function \(\phi (\cdot )\), which can be very complex. In fact, computational complexity of a single value \(\phi (\lambda )\) can be of the same order as the complexity of solving the initial problem (6.1), or even more.

9 Subgradient method for unbounded feasible set

In the previous sections, we looked at optimization methods applicable to the bounded sets (see condition (6.3)). If this is not true, the second-order divergence condition (5.6) cannot help, and we need to find another way of justifying efficiency of the subgradient schemes. This is the goal of the current section.

We are still working with the problem (6.1), satisfying condition (6.2). However, the set Q is not bounded anymore. Hence, we cannot count on Sion’s theorem (6.4).

In our method, we have a sequence of scaling coefficients \(\Gamma = \{ \gamma _k \}_{k \ge 0}\) satisfying condition

a tolerance parameter \(\epsilon > 0\), and a rough estimate for the distance to the optimum \(D_0 > 0\).

For functions \( \varphi _{i,y}(\cdot )\) defined by (6.5) with \(y \in Q\), denote by \(a = a_{i,k}(y)\) the unique solution of the equation

Let us look at the following optimization scheme.

It seems that now, the selection of violated constraint by Step 2 looks more natural than in the methods (6.9) and (7.1).

For \(x \in Q\), denote \(\Delta _k(x) = \gamma _k\Big (\beta _d(x_k,x) - \beta _d(x_0,x)\Big )\). This is a linear function of x. In what follows, we assume the Bregman distance is convex with respect to its first argument:

This property is not very common. However, it is valid for the following two important examples.

Example 5

1. Let \(d(x) = {1 \over 2}\Vert x \Vert ^2_B\) (see (1.5)). Then \(\beta _d(x,y) = {1 \over 2}\Vert x - y \Vert ^2_B\) and (9.4) holds.

2. Let \(Q = \{ x \in {\mathbb {R}}^n_+: \; \sum \limits _{i=1}^n x^{(i)} = 1 \}\). Define \(d(x) = \sum \limits _{i=1}^n x^{(i)} \ln x^{(i)}\). Then, for \(x, y \in Q\), we have \(\beta _d(x,y) = \sum \limits _{i=1}^n y^{(i)} \ln {y^{(i)} \over x^{(i)}}\), and (9.4) holds also. \(\square \)
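The two closed forms in Example 5 can be verified with a short numerical sketch. It assumes the standard definition \(\beta _d(x,y) = d(y) - d(x) - \langle \nabla d(x), y - x \rangle \), which agrees with both formulas above, and takes \(B = I\) for simplicity. The midpoint test at the end is only a spot check of convexity in the first argument at one triple of points, not a proof. All function names and test points are illustrative.

```python
import math

def bregman(d, grad_d, x, y):
    """beta_d(x, y) = d(y) - d(x) - <grad d(x), y - x> (standard definition, assumed here)."""
    g = grad_d(x)
    return d(y) - d(x) - sum(gi * (yi - xi) for gi, xi, yi in zip(g, x, y))

# Case 1: d(x) = 0.5 * ||x||^2 with B = I (illustrative choice of B).
def sq(x):
    return 0.5 * sum(xi * xi for xi in x)

def sq_grad(x):
    return list(x)

# Case 2: negative entropy on the standard simplex.
def ent(x):
    return sum(xi * math.log(xi) for xi in x)

def ent_grad(x):
    return [1.0 + math.log(xi) for xi in x]

def kl(x, y):
    """Closed form from Example 5: sum_i y_i * ln(y_i / x_i)."""
    return sum(yi * math.log(yi / xi) for xi, yi in zip(x, y))

def beta_sq(x, y):
    return bregman(sq, sq_grad, x, y)

def beta_ent(x, y):
    return bregman(ent, ent_grad, x, y)

def midpoint_gap(beta, x1, x2, y):
    """0.5*beta(x1,y) + 0.5*beta(x2,y) - beta((x1+x2)/2, y); nonnegative if convex in x."""
    mid = [0.5 * (a + b) for a, b in zip(x1, x2)]
    return 0.5 * beta(x1, y) + 0.5 * beta(x2, y) - beta(mid, y)

# Three points of the simplex in R^3 with strictly positive coordinates.
x1, x2, y = [0.7, 0.2, 0.1], [0.1, 0.3, 0.6], [0.2, 0.5, 0.3]

print(beta_sq(x1, y), 0.5 * sum((a - b) ** 2 for a, b in zip(x1, y)))
print(beta_ent(x1, y), kl(x1, y))
print(midpoint_gap(beta_sq, x1, x2, y), midpoint_gap(beta_ent, x1, x2, y))
```

The entropy case matches the closed form only when both points have the same coordinate sum, which holds on the simplex.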

Now we can prove the following statement.

Lemma 6

Let the Bregman distance \(\beta _d(\cdot ,\cdot )\) satisfy (9.4). Then, for the sequence \(\{ x_k \}_{k \ge 0}\) generated by method (9.3), and any \(x \in Q\), we have

Proof

Note that

Since the first-order optimality conditions for point \(x_{k+1}\) tell us that

we have

and this is inequality (9.5). \(\square \)

Since \(\Delta _0(x) = 0\), we can sum up the inequalities (9.5) for \(k = 0, \dots , N-1\) with \(N \ge 1\), and get the following consequence:

which is valid for all \(x \in Q\).

In order to approximate the optimal Lagrange multipliers of problem (6.4), we need to introduce the following objects:

Denote \({{\hat{\lambda }}}(N) = ({{\hat{\lambda }}}_1(N), \dots , \hat{\lambda }_m(N))^T \in {\mathbb {R}}^m_+\) and \(f_0^*(N) = \min \limits _{k \in \mathcal{S}_0(N)} f_0(x_k)\). Note that for all \(k \in {{{\mathcal {S}}}}_0(N)\) we have

For our convergence result, we need to assume existence of the optimal solution \(x^*\) to problem (6.1). Let us introduce some bound

which is not used in the method (9.3). Denote \(Q_D = \{ x \in Q: \; \beta _d(x_0,x) \le D \}\). Clearly, if we replace in problem (6.1) the set Q by \(Q_D\), then its optimal solution will not be changed. However, now we can correctly define a restricted dual function \(\phi _D(\lambda ) = \min \limits _{x \in Q_D} {{{\mathcal {L}}}}(x,\lambda )\). Let us prove our main result.

Theorem 7

Let functional components of problem (6.1) satisfy the following condition:

Then, as far as

where \(\rho _0 = \min \limits _{y \in {{{\mathcal {F}}}}}\beta _d(x_0,y)\), we have \({{{\mathcal {S}}}}_0(N) \ne \emptyset \) and \({{\hat{\sigma }}}_0(N) > 0\). Moreover, if

then

Proof

Indeed, for any \(x \in Q\), we have

Hence, in view of inequality (9.6), we get the following bound

Note that \({\hat{\sigma }}_0(N) + \sum \limits _{i=1}^m {{\hat{\sigma }}}_i(N) = \sum \limits _{k=0}^{N-1} a_k\). Therefore, this inequality can be rewritten as follows:

In view of condition (9.2), for \(T_i = T_{i,y_k}\left( {a_{i,k}(y_k) \over \gamma _{k+1}} \right) \), we have

Thus, \(M a_k(y_k) {\mathop {\ge }\limits ^{(9.9)}} \Big [ 2 \gamma _{k+1} (\gamma _{k+1} - \gamma _k) D_0 \Big ]^{1/2}\), and we conclude that

Let us assume that \({{{\mathcal {S}}}}_0(N) = \emptyset \). Then \(\hat{\sigma }_0(N) = 0\) and for \(x = \arg \min \limits _{y \in {{{\mathcal {F}}}}} \beta _d(x_0,y)\) inequality (9.13) leads to the following relation:

However, this cannot happen in view of condition (9.10). Hence, inequality (9.10) implies \({{{\mathcal {S}}}}_0(N) \ne \emptyset \) and \({{\hat{\sigma }}}_0(N) > 0\). In this case, inequality (9.13) can be rewritten as follows:

Maximizing the right-hand side of this inequality in \(x \in Q_D\), we get

Hence, inequality (9.11) implies (9.12). \(\square \)

Thus, for the fast rate of convergence of method (9.3), we need to ensure a fast growth of the values \(\Sigma _N\). Let us choose

Then \(\gamma _{k+1}(\gamma _{k+1} - \gamma _k) = {\sqrt{k+1} \over \sqrt{k+1} + \sqrt{k}} \ge {1 \over 2}\). Hence,

In this case, inequality (9.12) can be ensured in \(O(\epsilon ^{-2})\) iterations.
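The identity for \(\gamma _{k+1}(\gamma _{k+1} - \gamma _k)\) stated above is consistent with the scaling sequence \(\gamma _k = \sqrt{k}\). The sketch below assumes this choice (it is inferred from the identity, not quoted from the text) and checks numerically that the product equals the stated ratio and stays above \({1 \over 2}\). The function names are illustrative.

```python
import math

def gamma(k):
    # ASSUMED scaling sequence gamma_k = sqrt(k), inferred from the identity in the text.
    return math.sqrt(k)

def product(k):
    """gamma_{k+1} * (gamma_{k+1} - gamma_k), computed directly from the sequence."""
    return gamma(k + 1) * (gamma(k + 1) - gamma(k))

def closed_form(k):
    """sqrt(k+1) / (sqrt(k+1) + sqrt(k)), the right-hand side of the identity."""
    return math.sqrt(k + 1) / (math.sqrt(k + 1) + math.sqrt(k))

for k in (0, 1, 10, 100, 10_000):
    print(f"k = {k:6d}   product = {product(k):.8f}   closed form = {closed_form(k):.8f}")
```

Under this assumption the product equals 1 at \(k = 0\) and decreases towards \({1 \over 2}\) as k grows, so the lower bound is asymptotically tight.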

Note that method (9.3) is quite different from the existing optimization schemes. Let us write down what it looks like in the case \(Q = {\mathbb {E}}\), \(d(x) = {1 \over 2}\Vert x \Vert ^2_B\), and the parameter choice (9.15). In this case, Eq. (9.2) can be written as follows:

Thus, \(a_k = \sqrt{2 \gamma _{k+1}(\gamma _{k+1} - \gamma _k) D_0 } \cdot {1 \over \Vert f'_{i_k}(y_k) \Vert ^*_B} \approx {\sqrt{D_0} \over \Vert f'_{i_k}(y_k) \Vert ^*_B}\), and the method looks as follows:

As compared with other known switching primal-dual schemes (e.g. Section 3.2.5 in [5]), in method (9.17), we apply variable step sizes, which allow faster movements in the beginning of the process.
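The step length \(a_k\) given before method (9.17) can be evaluated with a few lines of code. This is a minimal sketch under two assumptions that are not stated explicitly here: \(B = I\), so that the dual norm is the Euclidean one, and \(\gamma _k = \sqrt{k}\), as suggested by the identity for \(\gamma _{k+1}(\gamma _{k+1} - \gamma _k)\). The helper `step_size` and the sample data are hypothetical. The sketch covers only the step length, not the full method.

```python
import math

def step_size(k, g, D0):
    """a_k = sqrt(2 * gamma_{k+1} * (gamma_{k+1} - gamma_k) * D0) / ||g||.

    Assumptions: B = I (Euclidean dual norm) and gamma_k = sqrt(k).
    """
    g_norm = math.sqrt(sum(gi * gi for gi in g))
    if g_norm == 0.0:
        raise ValueError("zero subgradient: the step size is undefined")
    # gamma_{k+1} * (gamma_{k+1} - gamma_k) written in its cancellation-free form.
    ratio = math.sqrt(k + 1) / (math.sqrt(k + 1) + math.sqrt(k))
    return math.sqrt(2.0 * ratio * D0) / g_norm

g, D0 = [3.0, 4.0], 4.0            # hypothetical subgradient and distance estimate
baseline = math.sqrt(D0) / 5.0     # sqrt(D0) / ||g||, the approximate value from the text

for k in (0, 1, 10, 1000):
    a_k = step_size(k, g, D0)
    print(f"k = {k:5d}   a_k = {a_k:.6f}   a_k / baseline = {a_k / baseline:.6f}")
```

With these assumptions the ratio of \(a_k\) to \(\sqrt{D_0} / \Vert f'_{i_k}(y_k) \Vert ^*_B\) starts at \(\sqrt{2}\) for \(k = 0\) and approaches 1 as k grows.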

10 Conclusions

In this paper, we presented several new primal subgradient methods with a better control of step sizes. Our main observation is that for constrained minimization problems, a proper definition of the actual size of subgradients of a convex function must take into account the position of the test point with respect to the boundary of the feasible set. In the simplest variant, this can be done by ensuring the size of the proximal step to be equal to a prescribed control parameter.

In this way, we develop new methods for solving quasi-convex problems (Sect. 2) and show that their convergence rate automatically adjusts to the favorable local structure of the optimal solution. After that, in Sects. 3 and 4, we analyze new schemes for minimizing max-type convex functions and functions with smooth strongly convex components. Our main theoretical improvement there is a scheme with a linear rate of convergence for a problem where the classical step-size strategy ensures only a sublinear rate.

The remaining part of the paper is devoted to problems with non-trivial functional constraints. In Sect. 5, we present a method for solving problems with a single constraint in the composite form (it seems that this formulation is new). For treating problems of this kind, we introduce a new condition of diverging series of squares of the control step-size parameters and define the divergence delay, which shows how quickly the method can eliminate the past. Our new method can automatically adjust to the best Hölder class containing the functional components.

In the last three sections of the paper, we present methods that can efficiently approximate optimal Lagrange multipliers by simple switching strategies. All these schemes ensure the optimal rate of convergence. They differ from one another in how the Slater condition enters the final efficiency bound and in the way they treat unbounded feasible sets.

Our new technique is based on the ability to solve some auxiliary univariate problems related to the prox-type operations. However, for simple feasible sets, these problems are easy. As a benefit, we get new methods which can adjust to the problem structure and move with much longer steps, especially in the beginning of the process.

References

1. Juditsky, A., Nemirovski, A.: First order methods for nonsmooth convex large-scale optimization. Optim. Mach. Learn. 121–148 (2011)

2. Nemirovsky, A., Yudin, D.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983)

3. Nesterov, Y.: Minimization methods for nonsmooth convex and quasi-convex functions. Ekon. Mat. Metody 20(3), 519–531 (1984). (in Russian; translated as Matekon)

4. Nesterov, Y.: Primal-dual subgradient methods for convex problems. Math. Program. 120(1), 261–283 (2009)

5. Nesterov, Y.: Lectures on Convex Optimization. Springer, Berlin (2018)

6. Nesterov, Y., Shikhman, V.: Quasi-monotone subgradient methods for nonsmooth convex minimization. JOTA 165, 917–940 (2015)

7. Polyak, B.: Introduction to Optimization. Optimization Software, New York (1987)

8. Shor, N.: On the structure of algorithms for the numerical solution of optimal planning and design problems. Ph.D. Dissertation, Cybernetics Institute, Academy of Sciences of the Ukrainian SSR, Kiev (1964). (in Russian)

Additional information

Communicated by Boris S. Mordukhovich.

This paper has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (Grant agreement No 788368). It was also supported by Multidisciplinary Institute in Artificial intelligence MIAI@Grenoble Alpes (ANR-19-P3IA-0003).

Cite this article

Nesterov, Y. Primal Subgradient Methods with Predefined Step Sizes. J Optim Theory Appl (2024). https://doi.org/10.1007/s10957-024-02456-9